文章目录

第1题:线性回归梯度下降

对线性模型 h θ ( x ) = θ ⊤ x h_\theta(x)=\theta^\top x hθ(x)=θ⊤x,给定训练集 { ( x ( i ) , y ( i ) ) } \{(x^{(i)}, y^{(i)})\} {(x(i),y(i))},推导其向量形式的最小二乘损失梯度下降更新公式为

θ : = θ + α ∑ i = 1 n ( y ( i ) − h θ ( x ( i ) ) ) x ( i ) \theta := \theta + \alpha \sum_{i=1}^n \big( y^{(i)} - h_{\theta}(x^{(i)}) \big) x^{(i)} θ:=θ+αi=1∑n(y(i)−hθ(x(i)))x(i)

解:

最小二乘目标函数 J ( θ ) J(\theta) J(θ) 定义为:

(线性模型预测结果与实际结果之间的差值)

J ( θ ) = 1 2 ∑ i = 1 n ( h θ ( x ( i ) ) − y ( i ) ) 2 J(\theta) = \frac{1}{2} \sum_{i=1}^n \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2 J(θ)=21i=1∑n(hθ(x(i))−y(i))2

对 θ \theta θ 求梯度:

(使用链式法则)

∇ θ J ( θ ) = ∑ i = 1 n ( h θ ( x ( i ) ) − y ( i ) ) x ( i ) \nabla_\theta J(\theta) = \sum_{i=1}^n ( h_\theta(x^{(i)}) - y^{(i)} ) x^{(i)} ∇θJ(θ)=i=1∑n(hθ(x(i))−y(i))x(i)

梯度下降的核心思想是沿梯度的负方向更新参数:

θ : = θ − α ∇ θ J ( θ ) \theta := \theta - \alpha \nabla_\theta J(\theta) θ:=θ−α∇θJ(θ)

其中 α \alpha α 为学习率。将计算出的总梯度代入并交换符号:

θ : = θ + α ∑ i = 1 n ( y ( i ) − h θ ( x ( i ) ) ) x ( i ) \theta := \theta + \alpha \sum_{i=1}^n ( y^{(i)} - h_{\theta}(x^{(i)}) ) x^{(i)} θ:=θ+αi=1∑n(y(i)−hθ(x(i)))x(i)

加 1 2 \frac{1}{2} 21是为了简化梯度表达式,避免出现多余的系数 2,使更新公式更简洁、直观

第2题:交叉熵损失梯度

Cross Entropy Loss 定义如下: l c e ( ( t 1 , ... , t k ) , y ) = − log ( exp ( t y ) ∑ j exp ( t j ) ) l_{ce}((t_1, \dots, t_k), y) = -\log \Big( \frac{\exp(t_y)}{\sum_j \exp(t_j)} \Big) lce((t1,...,tk),y)=−log(∑jexp(tj)exp(ty))。令向量 t = ( t 1 , t 2 , ... , t k ) t=(t_1, t_2, \dots, t_k) t=(t1,t2,...,tk),推导 CEL 对任意 t i t_i ti求导是:

∂ l c e ( t , y ) ∂ t i = exp ( t i ) ∑ j exp ( t j ) − 1 { y = i } \frac{\partial l_{ce}(t, y)}{\partial t_i} = \frac{\exp(t_i)}{\sum_j \exp(t_j)} - 1\{y=i\} ∂ti∂lce(t,y)=∑jexp(tj)exp(ti)−1{y=i}

解:

化简损失函数

利用对数性质: log a b = log a − log b \log \frac{a}{b} = \log a - \log b logba=loga−logb 可得

l c e ( t , y ) = − t y + log ( ∑ j = 1 k e x p ( t j ) ) l_{ce}(t, y)= -t_y + \log \left( \sum_{j=1}^k exp(t_j) \right) lce(t,y)=−ty+log(j=1∑kexp(tj))

对 t i t_i ti 求偏导

第一项 − t y -t_y −ty:

∂ ( − t y ) ∂ t i = { − 1 , i = y 0 , i ≠ y \frac{\partial (-t_y)}{\partial t_i} =\begin{cases}-1, & i = y \\ 0, & i \neq y\end{cases} ∂ti∂(−ty)={−1,0,i=yi=y

第二项 log ( ∑ j = 1 k e x p ( t j ) ) \log \left( \sum_{j=1}^k exp(t_j) \right) log(∑j=1kexp(tj))

先对内部求导: ∂ ∂ t i ∑ j e x p ( t j ) = e x p ( t j ) \frac{\partial}{\partial t_i} \sum_j exp(t_j) = exp(t_j) ∂ti∂∑jexp(tj)=exp(tj)(其余项相对 t i t_i ti 是常数)

再使用链式法则: ∂ ∂ t i log ( ∑ j e x p ( t j ) ) = 1 ∑ j e x p ( t j ) ⋅ e x p ( t i ) = e x p ( t i ) ∑ j e x p ( t j ) \frac{\partial}{\partial t_i} \log \left( \sum_j exp(t_j) \right) = \frac{1}{\sum_j exp(t_j)} \cdot exp(t_i) = \frac{exp(t_i)}{\sum_j exp(t_j)} ∂ti∂log(j∑exp(tj))=∑jexp(tj)1⋅exp(ti)=∑jexp(tj)exp(ti)

最终结果为:

∂ l c e ( t , y ) ∂ t i = e x p ( t i ) ∑ j e x p ( t j ) − 1 { y = i } \frac{\partial l_{ce}(t, y)}{\partial t_i} = \frac{exp(t_i)}{\sum_j exp(t_j)} - \mathbf{1} {\{y=i\}} ∂ti∂lce(t,y)=∑jexp(tj)exp(ti)−1{y=i}

第3题:高斯假设下的最大似然估计

证明在高斯误差假定下,对线性模型 h θ ( x ) = θ ⊤ x h_\theta(x)=\theta^\top x hθ(x)=θ⊤x ,最大化参数似然 L ( θ ) L(\theta) L(θ)等价于最小化二乘损失 ∑ i = 1 n ( y ( i ) − θ T x ( i ) ) 2 \sum_{i=1}^{n}(y^{(i)}-\theta^Tx^{(i)})^2 ∑i=1n(y(i)−θTx(i))2。

解:

假设误差 ϵ ( i ) = y ( i ) − θ ⊤ x ( i ) \epsilon^{(i)} = y^{(i)} - \theta^\top x^{(i)} ϵ(i)=y(i)−θ⊤x(i) 服从独立同分布的高斯分布 N ( 0 , σ 2 ) \mathcal{N}(0, \sigma^2) N(0,σ2),即:

p ( ϵ ( i ) ) = 1 2 π σ exp ( − ( ϵ ( i ) ) 2 2 σ 2 ) p(\epsilon^{(i)}) = \frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(\epsilon^{(i)})^2}{2\sigma^2}\right) p(ϵ(i))=2π σ1exp(−2σ2(ϵ(i))2)

因此:

p ( y ( i ) ∣ x ( i ) ; θ ) p(y^{(i)}|x^{(i)}; \theta) p(y(i)∣x(i);θ)代表了在模型参数为 θ \theta θ的情况下,给定第 i i i 个输入样本 x ( i ) x^{(i)} x(i),模型预测出结果 y ( i ) y^{(i)} y(i) 的概率(或概率密度)

p ( y ( i ) ∣ x ( i ) ; θ ) = 1 2 π σ exp ( − ( y ( i ) − θ ⊤ x ( i ) ) 2 2 σ 2 ) p(y^{(i)}|x^{(i)}; \theta) = \frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(y^{(i)} - \theta^\top x^{(i)})^2}{2\sigma^2}\right) p(y(i)∣x(i);θ)=2π σ1exp(−2σ2(y(i)−θ⊤x(i))2)

似然函数为:

似然函数 L ( θ ) L(\theta) L(θ)就是在 θ \theta θ取不同值时,计算出当前数据出现的概率。会选择让这个值最大的那个 θ \theta θ,这就是最大似然估计 (MLE)。

L ( θ ) = ∏ i = 1 n p ( y ( i ) ∣ x ( i ) ; θ ) = ∏ i = 1 n 1 2 π σ exp ( − ( y ( i ) − θ ⊤ x ( i ) ) 2 2 σ 2 ) \begin{aligned} L(\theta) &= \prod_{i=1}^{n} p(y^{(i)}|x^{(i)}; \theta) \\ &= \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(y^{(i)} - \theta^\top x^{(i)})^2}{2\sigma^2}\right) \end{aligned} L(θ)=i=1∏np(y(i)∣x(i);θ)=i=1∏n2π σ1exp(−2σ2(y(i)−θ⊤x(i))2)

对数似然为:

由于样本通常是独立的,计算总的似然值需要将所有样本的概率相乘,计算比较困难,所以取对数,把乘法变成加法

log L ( θ ) = ∑ i = 1 n [ log 1 2 π σ − ( y ( i ) − θ ⊤ x ( i ) ) 2 2 σ 2 ] = n log 1 2 π σ − 1 2 σ 2 ∑ i = 1 n ( y ( i ) − θ ⊤ x ( i ) ) 2 \begin{aligned} \log L(\theta) &= \sum_{i=1}^{n} \left[ \log \frac{1}{\sqrt{2\pi}\sigma} - \frac{(y^{(i)} - \theta^\top x^{(i)})^2}{2\sigma^2} \right] \\ &= n \log \frac{1}{\sqrt{2\pi}\sigma} - \frac{1}{2\sigma^2} \sum_{i=1}^{n} (y^{(i)} - \theta^\top x^{(i)})^2 \end{aligned} logL(θ)=i=1∑n[log2π σ1−2σ2(y(i)−θ⊤x(i))2]=nlog2π σ1−2σ21i=1∑n(y(i)−θ⊤x(i))2

最大化 log L ( θ ) \log L(\theta) logL(θ) 等价于最小化 ∑ i = 1 n ( y ( i ) − θ ⊤ x ( i ) ) 2 \sum_{i=1}^{n}(y^{(i)} - \theta^\top x^{(i)})^2 ∑i=1n(y(i)−θ⊤x(i))2。

第4题:Logistic回归的NLL损失

对 Logistic 回归模型 h θ ( x ) = g ( θ ⊤ x ) = 1 1 + e − θ ⊤ x h_{\theta}(x)=g(\theta ^\top x) = \frac{1}{1+e^{-\theta^\top x}} hθ(x)=g(θ⊤x)=1+e−θ⊤x1,推导其在单样本 ( x , y ) (x, y) (x,y)下的 NLL(negative log likehiloog)损失,以及损失对特定参数 θ j \theta_j θj的导数为 ( h θ ( x ) − y ) x j (h_\theta(x)-y)x_j (hθ(x)−y)xj。

提示: Logistic 回归预测概率的统一形式为 P ( y ∣ x ; θ ) = ( h θ ( x ) ) y ( 1 − h θ ( x ) ) 1 − y P(y \mid x; \theta) = \big(h_{\theta}(x) \big)^y \big(1-h_{\theta}(x) \big)^{1-y} P(y∣x;θ)=(hθ(x))y(1−hθ(x))1−y

解:

根据提示,Logistic回归的概率模型为:

P ( y ∣ x ; θ ) = ( h θ ( x ) ) y ( 1 − h θ ( x ) ) 1 − y P(y|x; \theta) = (h_\theta(x))^y(1 - h_\theta(x))^{1-y} P(y∣x;θ)=(hθ(x))y(1−hθ(x))1−y

其中 y ∈ { 0 , 1 } y \in \{0, 1\} y∈{0,1}, h θ ( x ) = g ( θ ⊤ x ) = 1 1 + e − θ ⊤ x h_\theta(x) = g(\theta^\top x) = \frac{1}{1+e^{-\theta^\top x}} hθ(x)=g(θ⊤x)=1+e−θ⊤x1。

对数似然函数为:

log P ( y ∣ x ; θ ) = y log ( h θ ( x ) ) + ( 1 − y ) log ( 1 − h θ ( x ) ) \log P(y|x; \theta) = y \log(h_\theta(x)) + (1 - y) \log(1 - h_\theta(x)) logP(y∣x;θ)=ylog(hθ(x))+(1−y)log(1−hθ(x))

NLL 损失函数为:

由于概率 P ( y ∣ x ; θ ) P(y|x; \theta) P(y∣x;θ)取值范围 0 ≤ P ≤ 1 0 \le P \le 1 0≤P≤1,对数函数的性质,当 x x x在 ( 0 , 1 ] (0, 1] (0,1]之间时, log ( x ) \log(x) log(x)的值是负数,所以NLL损失为 − log P ( y ∣ x ; θ ) -\log P(y|x; \theta) −logP(y∣x;θ)

N L L ( x , y ; θ ) = − log P ( y ∣ x ; θ ) = − y log ( h θ ( x ) ) − ( 1 − y ) log ( 1 − h θ ( x ) ) NLL(x, y; \theta) = -\log P(y|x; \theta) = -y \log(h_\theta(x)) - (1 - y) \log(1 - h_\theta(x)) NLL(x,y;θ)=−logP(y∣x;θ)=−ylog(hθ(x))−(1−y)log(1−hθ(x))

对 θ j \theta_j θj 求导

首先注意到 Sigmoid 函数 g ( z ) g(z) g(z) 的导数特性: g ′ ( z ) = g ( z ) ( 1 − g ( z ) ) g'(z) = g(z)(1 - g(z)) g′(z)=g(z)(1−g(z)),所以:

∂ h θ ( x ) ∂ θ j = h θ ( x ) ( 1 − h θ ( x ) ) ⋅ x j \frac{\partial h_\theta(x)}{\partial \theta_j} = h_\theta(x)(1 - h_\theta(x)) \cdot x_j ∂θj∂hθ(x)=hθ(x)(1−hθ(x))⋅xj

因此,对 N L L NLL NLL 求导:

∂ N L L ∂ θ j = − y 1 h θ ( x ) ∂ h θ ( x ) ∂ θ j − ( 1 − y ) 1 1 − h θ ( x ) ( − ∂ h θ ( x ) ∂ θ j ) = − y 1 h θ ( x ) ⋅ h θ ( x ) ( 1 − h θ ( x ) ) x j + ( 1 − y ) 1 1 − h θ ( x ) ⋅ h θ ( x ) ( 1 − h θ ( x ) ) x j = − y ( 1 − h θ ( x ) ) x j + ( 1 − y ) h θ ( x ) x j = ( − y + y h θ ( x ) + h θ ( x ) − y h θ ( x ) ) x j = ( h θ ( x ) − y ) x j \begin{aligned} \frac{\partial NLL}{\partial \theta_j} &= -y \frac{1}{h_\theta(x)} \frac{\partial h_\theta(x)}{\partial \theta_j} - (1 - y) \frac{1}{1 - h_\theta(x)} \left( -\frac{\partial h_\theta(x)}{\partial \theta_j} \right) \\ &= -y \frac{1}{h_\theta(x)} \cdot h_\theta(x)(1 - h_\theta(x))x_j + (1 - y) \frac{1}{1 - h_\theta(x)} \cdot h_\theta(x)(1 - h_\theta(x))x_j \\ &= -y(1 - h_\theta(x))x_j + (1 - y)h_\theta(x)x_j \\ &= (-y + y h_\theta(x) + h_\theta(x) - y h_\theta(x))x_j \\ &= (h_\theta(x) - y)x_j \end{aligned} ∂θj∂NLL=−yhθ(x)1∂θj∂hθ(x)−(1−y)1−hθ(x)1(−∂θj∂hθ(x))=−yhθ(x)1⋅hθ(x)(1−hθ(x))xj+(1−y)1−hθ(x)1⋅hθ(x)(1−hθ(x))xj=−y(1−hθ(x))xj+(1−y)hθ(x)xj=(−y+yhθ(x)+hθ(x)−yhθ(x))xj=(hθ(x)−y)xj

第5题:Poisson分布的指数族形式

已知指数分布族定义如下: p ( y ; η ) = b ( y ) exp ( η ⊤ y − a ( η ) ) p(y;\eta) = b(y) \exp(\eta^\top y - a(\eta)) p(y;η)=b(y)exp(η⊤y−a(η))。推导Poisson 分布的指数分布族形式,并构建 Poisson 分布对应的广义线性模型。其中,Poisson 分布 Pois( λ \lambda λ) 的概率密度函数如下: P ( X = k ) = λ k e − λ k ! P(X=k)=\frac{\lambda^k e^{-\lambda}}{k!} P(X=k)=k!λke−λ

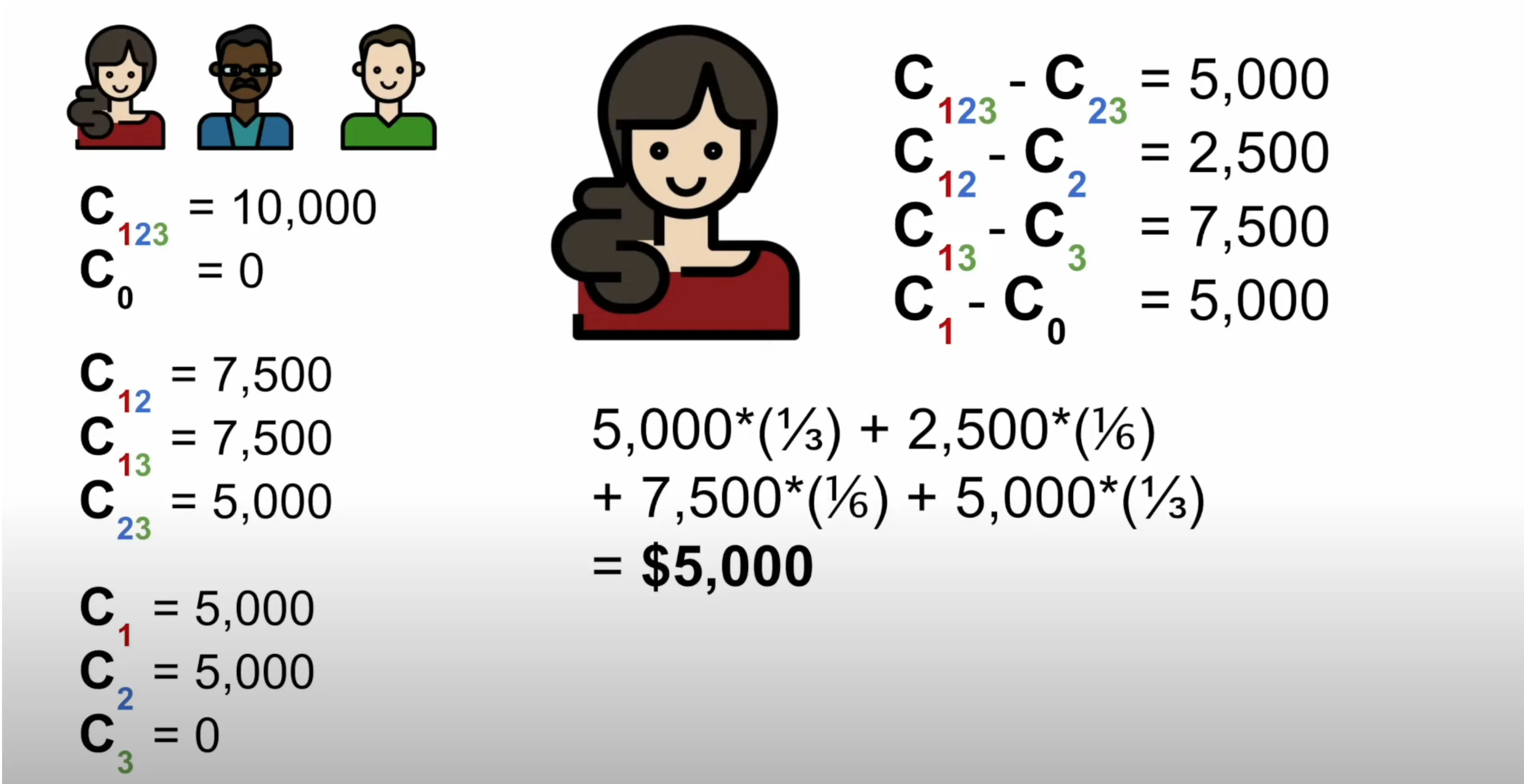

第6题:Shapley值计算

计算以下 3 人团队的 shapley values ϕ 1 \phi_1 ϕ1、 ϕ 2 \phi_2 ϕ2、 ϕ 3 \phi_3 ϕ3。

Shapley 值来源于 合作博弈论(cooperative game theory),它衡量 每个参与者(特征、玩家)在整体贡献中的"公平分配价值"。

- 设有一个玩家集合 N = 1 , 2 , ... , n N = {1, 2, \dots, n} N=1,2,...,n

- C ( S ) C(S) C(S) 是子集 S ⊆ N S \subseteq N S⊆N 的收益(或价值函数)

- 目标:给每个玩家 i ∈ N i \in N i∈N分配一个数 ϕ i ( v ) \phi_i(v) ϕi(v),表示其在整体收益 C ( N ) C(N) C(N) 中的 公平贡献。

解:

对每个玩家 i i i: ϕ i = ∑ S ⊆ N ∖ i ∣ S ∣ ! , ( n − ∣ S ∣ − 1 ) ! n ! [ C ( S ∪ i ) − C ( S ) ] \phi_i = \sum_{S \subseteq N \setminus {i}} \frac{|S|!, (n-|S|-1)!}{n!} \big[ C(S \cup {i}) - C(S) \big] ϕi=∑S⊆N∖in!∣S∣!,(n−∣S∣−1)![C(S∪i)−C(S)]

0 ! = 1 0!=1 0!=1那么 0 个元素有多少种排列?只有 1 种:什么都不排(空排列)

玩家1的shapley值:

ϕ 1 = 0 ! ⋅ 2 ! 3 ! [ C 1 − C 0 ] + 1 ! ⋅ 1 ! 3 ! [ C 12 − C 2 ] + 1 ! ⋅ 1 ! 3 ! [ C 13 − C 3 ] + 2 ! ⋅ 0 ! 3 ! [ C 123 − C 23 ] = 1 3 [ 5000 − 0 ] + 1 6 [ 7500 − 5000 ] + 1 6 [ 7500 − 0 ] + 1 3 [ 10000 − 5000 ] = 5000 3 + 2500 6 + 7500 6 + 5000 3 = 10000 3 + 10000 6 = 20000 + 10000 6 = 30000 6 = 5000 \begin{aligned} \phi_1 &= \frac{0! \cdot 2!}{3!}[C_1 - C_0] + \frac{1! \cdot 1!}{3!}[C_{12} - C_2] + \frac{1! \cdot 1!}{3!}[C_{13} - C_3] + \frac{2! \cdot 0!}{3!}[C_{123} - C_{23}] \\ &= \frac{1}{3}[5000 - 0] + \frac{1}{6}[7500 - 5000] + \frac{1}{6}[7500 - 0] + \frac{1}{3}[10000 - 5000]\\ &=\frac{5000}{3} + \frac{2500}{6} + \frac{7500}{6} + \frac{5000}{3} = \frac{10000}{3} + \frac{10000}{6} = \frac{20000 + 10000}{6} = \frac{30000}{6} \\ &= 5000 \end{aligned} ϕ1=3!0!⋅2![C1−C0]+3!1!⋅1![C12−C2]+3!1!⋅1![C13−C3]+3!2!⋅0![C123−C23]=31[5000−0]+61[7500−5000]+61[7500−0]+31[10000−5000]=35000+62500+67500+35000=310000+610000=620000+10000=630000=5000

玩家2的shapley值:

ϕ 2 = 0 ! ⋅ 2 ! 3 ! [ C 2 − C 0 ] + 1 ! ⋅ 1 ! 3 ! [ C 12 − C 1 ] + 1 ! ⋅ 1 ! 3 ! [ C 23 − C 3 ] + 2 ! ⋅ 0 ! 3 ! [ C 123 − C 13 ] = 1 3 [ 5000 − 0 ] + 1 6 [ 7500 − 5000 ] + 1 6 [ 5000 − 0 ] + 1 3 [ 10000 − 7500 ] = 5000 3 + 2500 6 + 5000 6 + 2500 3 = 3750 \begin{aligned} \phi_2 &= \frac{0! \cdot 2!}{3!}[C_2 - C_0] + \frac{1! \cdot 1!}{3!}[C_{12} - C_1] + \frac{1! \cdot 1!}{3!}[C_{23} - C_3] + \frac{2! \cdot 0!}{3!}[C_{123} - C_{13}] \\ &= \frac{1}{3}[5000 - 0] + \frac{1}{6}[7500 - 5000] + \frac{1}{6}[5000 - 0] + \frac{1}{3}[10000 - 7500]\\ &=\frac{5000}{3} + \frac{2500}{6} + \frac{5000}{6} + \frac{2500}{3} \\ &= 3750 \end{aligned} ϕ2=3!0!⋅2![C2−C0]+3!1!⋅1![C12−C1]+3!1!⋅1![C23−C3]+3!2!⋅0![C123−C13]=31[5000−0]+61[7500−5000]+61[5000−0]+31[10000−7500]=35000+62500+65000+32500=3750

玩家3的shapley值:

ϕ 3 = 0 ! ⋅ 2 ! 3 ! [ C 3 − C 0 ] + 1 ! ⋅ 1 ! 3 ! [ C 13 − C 1 ] + 1 ! ⋅ 1 ! 3 ! [ C 23 − C 2 ] + 2 ! ⋅ 0 ! 3 ! [ C 123 − C 12 ] = 1 3 [ 0 − 0 ] + 1 6 [ 7500 − 5000 ] + 1 6 [ 5000 − 5000 ] + 1 3 [ 10000 − 7500 ] = 0 3 + 2500 6 + 0 6 + 2500 3 = 1250 \begin{aligned} \phi_3 &= \frac{0! \cdot 2!}{3!}[C_3 - C_0] + \frac{1! \cdot 1!}{3!}[C_{13} - C_1] + \frac{1! \cdot 1!}{3!}[C_{23} - C_2] + \frac{2! \cdot 0!}{3!}[C_{123} - C_{12}] \\ &= \frac{1}{3}[0 - 0] + \frac{1}{6}[7500 - 5000] + \frac{1}{6}[5000 - 5000] + \frac{1}{3}[10000 - 7500]\\ &=\frac{0}{3} + \frac{2500}{6} + \frac{0}{6} + \frac{2500}{3} \\ &= 1250 \end{aligned} ϕ3=3!0!⋅2![C3−C0]+3!1!⋅1![C13−C1]+3!1!⋅1![C23−C2]+3!2!⋅0![C123−C12]=31[0−0]+61[7500−5000]+61[5000−5000]+31[10000−7500]=30+62500+60+32500=1250

计算前面两个后,可以根据对称性直接得到

ϕ 3 = 10000 − ϕ 1 − ϕ 2 = 10000 − 5000 − 3750 = 1250 \phi_3 = 10000-\phi_1-\phi_2=10000-5000-3750=1250 ϕ3=10000−ϕ1−ϕ2=10000−5000−3750=1250

第7题:协方差矩阵性质

基于协方差矩阵定义 Σ = Cov ( X ) \Sigma = \text{Cov}(X) Σ=Cov(X)证明:

- Σ \Sigma Σ为对称矩阵;

- Σ \Sigma Σ半正定,记 Σ ≥ 0 \Sigma \ge 0 Σ≥0,即对任意向量 z ∈ R d z \in \mathbb{R}^d z∈Rd 有 z ⊤ Σ z ≥ 0 z^\top \Sigma z \ge 0 z⊤Σz≥0。

协方差矩阵的定义

设随机向量 X = ( X 1 , X 2 , ... , X d ) ⊤ ∈ R d X = (X_1, X_2, \dots, X_d)^\top \in \mathbb{R}^d X=(X1,X2,...,Xd)⊤∈Rd,其期望为 μ = E [ X ] \mu = \mathbb{E}[X] μ=E[X]

协方差矩阵定义为

Σ = C o v ( X ) = E [ ( X − μ ) ( X − μ ) ⊤ ] \Sigma = \mathrm{Cov}(X) = \mathbb{E}[(X-\mu)(X-\mu)^\top] Σ=Cov(X)=E[(X−μ)(X−μ)⊤]等价地,其第 ( i , j ) (i,j) (i,j) 个元素为

Σ i j = C o v ( X i , X j ) = E [ ( X i − E [ X i ] ) ( X j − E [ X j ] ) ] \Sigma_{ij} = \mathrm{Cov}(X_i, X_j) = \mathbb{E}[(X_i-\mathbb{E}[X_i])(X_j-\mathbb{E}[X_j])] Σij=Cov(Xi,Xj)=E[(Xi−E[Xi])(Xj−E[Xj])]

解:

Σ ⊤ = ( E [ ( X − μ ) ( X − μ ) ⊤ ] ) ⊤ = E [ ( ( X − μ ) ( X − μ ) ⊤ ) ⊤ ] ( 期望与转置算子可交换 ) = E [ ( ( X − μ ) ⊤ ) ⊤ ( X − μ ) ⊤ ] ( 使用 ( A B ) ⊤ = B ⊤ A ⊤ ) = E [ ( X − μ ) ( X − μ ) ⊤ ] ( 因为 ( A ⊤ ) ⊤ = A ) = Σ \begin{aligned} \Sigma^\top &= \left( E[(X - \mu)(X - \mu)^\top] \right)^\top \\ &= E\left[ \left( (X - \mu)(X - \mu)^\top \right)^\top \right] \quad (\text{期望与转置算子可交换}) \\ &= E\left[ \left( (X - \mu)^\top \right)^\top (X - \mu)^\top \right] \quad (\text{使用 } (AB)^\top = B^\top A^\top) \\ &= E\left[ (X - \mu)(X - \mu)^\top \right] \quad (\text{因为 } (A^\top)^\top = A) \\ &= \Sigma \end{aligned} Σ⊤=(E[(X−μ)(X−μ)⊤])⊤=E[((X−μ)(X−μ)⊤)⊤](期望与转置算子可交换)=E[((X−μ)⊤)⊤(X−μ)⊤](使用 (AB)⊤=B⊤A⊤)=E[(X−μ)(X−μ)⊤](因为 (A⊤)⊤=A)=Σ

所以 Σ \Sigma Σ为对称矩阵

利用期望的线性性质,我们将 Σ \Sigma Σ的定义代入二次型中:

z ⊤ Σ z = z ⊤ E [ ( X − μ ) ( X − μ ) ⊤ ] z = E [ z ⊤ ( X − μ ) ( X − μ ) ⊤ z ] ( 因为 z 是常数向量 ) = E [ ( z ⊤ ( X − μ ) ) 2 ] ≥ 0 \begin{align*} z^\top \Sigma z &= z^\top E[(X - \mu)(X - \mu)^\top] z \\ &= E[z^\top (X - \mu)(X - \mu)^\top z] \quad (\text{因为 } z \text{ 是常数向量})\\ &= E[(z^\top (X - \mu))^2] \quad \ge 0 \end{align*} z⊤Σz=z⊤E[(X−μ)(X−μ)⊤]z=E[z⊤(X−μ)(X−μ)⊤z](因为 z 是常数向量)=E[(z⊤(X−μ))2]≥0

因此,协方差矩阵 Σ \Sigma Σ 是半正定的,记作 Σ ≥ 0 \Sigma \ge 0 Σ≥0。

第8题:高斯判别分析的MLE

对高斯判别分析,已知各变量概率分布为:

p ( y ) = ϕ y ( 1 − ϕ ) 1 − y p(y)=\phi^y(1-\phi)^{1-y} p(y)=ϕy(1−ϕ)1−y

p ( x ∣ y = 0 ) = 1 ( 2 π ) d / 2 ∣ Σ ∣ 1 / 2 exp ( − 1 2 ( x − μ 0 ) ⊤ Σ − 1 ( x − μ 0 ) ) p(x|y=0) = \frac{1}{(2\pi)^{d/2}|\Sigma|^{1/2}} \exp \Big( -\frac{1}{2}(x - \mu_0)^\top \Sigma^{-1} (x-\mu_0) \Big) p(x∣y=0)=(2π)d/2∣Σ∣1/21exp(−21(x−μ0)⊤Σ−1(x−μ0))

p ( x ∣ y = 1 ) = 1 ( 2 π ) d / 2 ∣ Σ ∣ 1 / 2 exp ( − 1 2 ( x − μ 1 ) ⊤ Σ − 1 ( x − μ 1 ) ) p(x|y=1) = \frac{1}{(2\pi)^{d/2}|\Sigma|^{1/2}} \exp \Big( -\frac{1}{2}(x - \mu_1)^\top \Sigma^{-1} (x-\mu_1) \Big) p(x∣y=1)=(2π)d/2∣Σ∣1/21exp(−21(x−μ1)⊤Σ−1(x−μ1))

证明在极大似然估计下,参数 ϕ \phi ϕ、 μ 0 \mu_0 μ0、 μ 1 \mu_1 μ1的形式为:

ϕ = 1 n ∑ i = 1 n 1 { y ( i ) = 1 } \phi = \frac{1}{n} \sum_{i=1}^n 1\{y^{(i)}=1\} ϕ=n1∑i=1n1{y(i)=1}

μ 0 = ∑ i = 1 n 1 { y ( i ) = 0 } x ( i ) 1 { y ( i ) = 0 } \mu_0=\frac{\sum_{i=1}^n 1\{y^{(i)}=0\}x^{(i)}}{1\{y^{(i)}=0\}} μ0=1{y(i)=0}∑i=1n1{y(i)=0}x(i)

μ 1 = ∑ i = 1 n 1 { y ( i ) = 1 } x ( i ) 1 { y ( i ) = 1 } \mu_1=\frac{\sum_{i=1}^n 1\{y^{(i)}=1\}x^{(i)}}{1\{y^{(i)}=1\}} μ1=1{y(i)=1}∑i=1n1{y(i)=1}x(i)

解:

对数似然函数为:

log L = ∑ i = 1 n [ log p ( y ( i ) ) + log p ( x ( i ) ∣ y ( i ) ) ] \log L = \sum_{i=1}^{n} [\log p(y^{(i)}) + \log p(x^{(i)}|y^{(i)})] logL=i=1∑n[logp(y(i))+logp(x(i)∣y(i))]

对数似然可以分解为两个独立的部分:

log L = ∑ i = 1 n log p ( y ( i ) ) ⏟ 第一部分 + ∑ i = 1 n log p ( x ( i ) ∣ y ( i ) ) ⏟ 第二部分 \log L = \underbrace{\sum_{i=1}^{n} \log p(y^{(i)})}{\text{第一部分}} + \underbrace{\sum{i=1}^{n} \log p(x^{(i)}|y^{(i)})}_{\text{第二部分}} logL=第一部分 i=1∑nlogp(y(i))+第二部分 i=1∑nlogp(x(i)∣y(i))

- 第一部分 ∑ i = 1 n log p ( y ( i ) ) \sum_{i=1}^{n} \log p(y^{(i)}) ∑i=1nlogp(y(i)):只包含参数 ϕ \phi ϕ,用于估计类先验概率 ϕ \phi ϕ

- 第二部分 ∑ i = 1 n log p ( x ( i ) ∣ y ( i ) ) \sum_{i=1}^{n} \log p(x^{(i)}|y^{(i)}) ∑i=1nlogp(x(i)∣y(i)):包含参数 μ 0 , μ 1 , Σ \mu_0, \mu_1, \Sigma μ0,μ1,Σ,用于估计类条件均值 μ 0 , μ 1 \mu_0, \mu_1 μ0,μ1 和 协方差矩阵 Σ \Sigma Σ

由于这两部分不包含共同参数,可以分别独立优化

估计 ϕ \phi ϕ:

log L ϕ = ∑ i = 1 n log p ( y ( i ) ) = ∑ i = 1 n [ y ( i ) log ϕ + ( 1 − y ( i ) ) log ( 1 − ϕ ) ] \log L_\phi = \sum_{i=1}^{n} \log p(y^{(i)}) = \sum_{i=1}^{n} [y^{(i)} \log \phi + (1 - y^{(i)}) \log(1 - \phi)] logLϕ=i=1∑nlogp(y(i))=i=1∑n[y(i)logϕ+(1−y(i))log(1−ϕ)]

令 ∂ log L ϕ ∂ ϕ = 0 \frac{\partial \log L_\phi}{\partial \phi} = 0 ∂ϕ∂logLϕ=0,则 ∑ i = 1 n [ y ( i ) ϕ − 1 − y ( i ) 1 − ϕ ] = 0 \sum_{i=1}^{n} \left[ \frac{y^{(i)}}{\phi} - \frac{1 - y^{(i)}}{1 - \phi} \right] = 0 ∑i=1n[ϕy(i)−1−ϕ1−y(i)]=0

解得:

ϕ = 1 n ∑ i = 1 n y ( i ) = 1 n ∑ i = 1 n 1 { y ( i ) = 1 } \phi = \frac{1}{n} \sum_{i=1}^{n} y^{(i)} = \frac{1}{n} \sum_{i=1}^{n} 1\{y^{(i)} = 1\} ϕ=n1i=1∑ny(i)=n1i=1∑n1{y(i)=1}

估计 μ 0 \mu_0 μ0:

只考虑 y = 0 y = 0 y=0 的样本:

log L μ 0 = ∑ i : y ( i ) = 0 [ − 1 2 ( x ( i ) − μ 0 ) ⊤ Σ − 1 ( x ( i ) − μ 0 ) + const ] \log L_{\mu_0} = \sum_{i:y^{(i)}=0} \left[ -\frac{1}{2}(x^{(i)} - \mu_0)^\top \Sigma^{-1}(x^{(i)} - \mu_0) + \text{const} \right] logLμ0=i:y(i)=0∑[−21(x(i)−μ0)⊤Σ−1(x(i)−μ0)+const]

令 ∂ log L μ 0 ∂ μ 0 = 0 \frac{\partial \log L_{\mu_0}}{\partial \mu_0} = 0 ∂μ0∂logLμ0=0,则 ∑ i : y ( i ) = 0 Σ − 1 ( x ( i ) − μ 0 ) = 0 \sum_{i:y^{(i)}=0} \Sigma^{-1}(x^{(i)} - \mu_0) = 0 ∑i:y(i)=0Σ−1(x(i)−μ0)=0

解得:

μ 0 = ∑ i : y ( i ) = 0 x ( i ) ∑ i : y ( i ) = 0 1 = ∑ i = 1 n 1 { y ( i ) = 0 } x ( i ) ∑ i = 1 n 1 { y ( i ) = 0 } \mu_0 = \frac{\sum_{i:y^{(i)}=0} x^{(i)}}{\sum_{i:y^{(i)}=0} 1} = \frac{\sum_{i=1}^{n} 1\{y^{(i)}=0\}x^{(i)}}{\sum_{i=1}^{n} 1\{y^{(i)}=0\}} μ0=∑i:y(i)=01∑i:y(i)=0x(i)=∑i=1n1{y(i)=0}∑i=1n1{y(i)=0}x(i)

同理可得 μ 1 \mu_1 μ1 的估计。

第9题:GDA可转化为Logistic回归

证明高斯判别分析(GDA)可转化为 Logistic 回归。提示:

- p ( y = 1 ∣ x ) = p ( x ∣ y = 1 ) p ( y = 1 ) p ( x ∣ y = 1 ) p ( y = 1 ) + p ( x ∣ y = 0 ) p ( y = 0 ) p(y=1|x) = \frac{p(x|y=1)p(y=1)}{p(x|y=1)p(y=1) + p(x|y=0)p(y=0)} p(y=1∣x)=p(x∣y=1)p(y=1)+p(x∣y=0)p(y=0)p(x∣y=1)p(y=1)。

- 可记 r ( x ) = p ( x ∣ y = 1 ) p ( y = 1 ) p ( x ∣ y = 0 ) p ( y = 0 ) r(x) = \frac{p(x|y=1)p(y=1)}{p(x|y=0)p(y=0)} r(x)=p(x∣y=0)p(y=0)p(x∣y=1)p(y=1)

- p ( x ∣ y = 0 ) = 1 ( 2 π ) d / 2 ∣ Σ ∣ 1 / 2 exp ( − 1 2 ( x − μ 0 ) ⊤ Σ − 1 ( x − μ 0 ) ) p(x|y=0) = \frac{1}{(2\pi)^{d/2}|\Sigma|^{1/2}} \exp \Big( -\frac{1}{2}(x - \mu_0)^\top \Sigma^{-1} (x-\mu_0) \Big) p(x∣y=0)=(2π)d/2∣Σ∣1/21exp(−21(x−μ0)⊤Σ−1(x−μ0))

- p ( x ∣ y = 1 ) = 1 ( 2 π ) d / 2 ∣ Σ ∣ 1 / 2 exp ( − 1 2 ( x − μ 1 ) ⊤ Σ − 1 ( x − μ 1 ) ) p(x|y=1) = \frac{1}{(2\pi)^{d/2}|\Sigma|^{1/2}} \exp \Big( -\frac{1}{2}(x - \mu_1)^\top \Sigma^{-1} (x-\mu_1) \Big) p(x∣y=1)=(2π)d/2∣Σ∣1/21exp(−21(x−μ1)⊤Σ−1(x−μ1))

- p ( y = 1 ) = ϕ p(y=1) = \phi p(y=1)=ϕ

Logistic 回归(Logistic Regression)的核心思想是利用 Sigmoid 函数(也称逻辑函数)将线性回归的预测值映射到 [ 0 , 1 ] [0, 1] [0,1] 之间,从而表示某个类别发生的概率。

逻辑回归预测的是样本属于特定类别的后验概率 P ( y = 1 ∣ x ) P(y=1|x) P(y=1∣x)。其形式为:

P ( y = 1 ∣ x ; θ ) = h θ ( x ) = σ ( θ ⊤ x ) = 1 1 + e − θ ⊤ x P(y=1|x; \theta) = h_\theta(x) = \sigma(\theta^\top x) = \frac{1}{1 + e^{-\theta^\top x}} P(y=1∣x;θ)=hθ(x)=σ(θ⊤x)=1+e−θ⊤x1

解:

根据贝叶斯定理:由提示1,2得

p ( y = 1 ∣ x ) = p ( x ∣ y = 1 ) p ( y = 1 ) p ( x ∣ y = 1 ) p ( y = 1 ) + p ( x ∣ y = 0 ) p ( y = 0 ) = 1 1 + p ( x ∣ y = 0 ) p ( y = 0 ) p ( x ∣ y = 1 ) p ( y = 1 ) = 1 1 + 1 r ( x ) p(y=1|x) = \frac{p(x|y=1)p(y=1)}{p(x|y=1)p(y=1)+p(x|y=0)p(y=0)} = \frac{1}{1+\frac{p(x|y=0)p(y=0)}{p(x|y=1)p(y=1)}} = \frac{1}{1+\frac{1}{r(x)}} p(y=1∣x)=p(x∣y=1)p(y=1)+p(x∣y=0)p(y=0)p(x∣y=1)p(y=1)=1+p(x∣y=1)p(y=1)p(x∣y=0)p(y=0)1=1+r(x)11

为了符合 Sigmoid 函数 1 / ( 1 + e − z ) 1/(1+e^{-z}) 1/(1+e−z)的形式,具体表现为 e − z = 1 r ( x ) e^{-z}=\frac{1}{r(x)} e−z=r(x)1,需要证明 z = log ( r ( x ) ) z=\log(r(x)) z=log(r(x)) 是关于 x x x的线性函数。

对数似然比 r ( x ) r(x) r(x) 的展开与简化

提示2: r ( x ) = p ( x ∣ y = 1 ) p ( y = 1 ) p ( x ∣ y = 0 ) p ( y = 0 ) r(x) = \frac{p(x|y=1)p(y=1)}{p(x|y=0)p(y=0)} r(x)=p(x∣y=0)p(y=0)p(x∣y=1)p(y=1)

计算 log r ( x ) \log r(x) logr(x):在二分类问题中, 若 p ( y = 1 ) = ϕ p(y=1) = \phi p(y=1)=ϕ,则 p ( y = 0 ) = 1 − ϕ p(y=0) = 1-\phi p(y=0)=1−ϕ

log r ( x ) = log p ( x ∣ y = 1 ) + log p ( y = 1 ) − log p ( x ∣ y = 0 ) − log p ( y = 0 ) = − 1 2 ( x − μ 1 ) ⊤ Σ − 1 ( x − μ 1 ) + 1 2 ( x − μ 0 ) ⊤ Σ − 1 ( x − μ 0 ) + log ϕ 1 − ϕ \begin{aligned} \log r(x) &= \log p(x|y=1) + \log p(y=1) - \log p(x|y=0) - \log p(y=0) \\ &= -\frac{1}{2}(x - \mu_1)^\top \Sigma^{-1}(x - \mu_1) + \frac{1}{2}(x - \mu_0)^\top \Sigma^{-1}(x - \mu_0) + \log \frac{\phi}{1 - \phi} \end{aligned} logr(x)=logp(x∣y=1)+logp(y=1)−logp(x∣y=0)−logp(y=0)=−21(x−μ1)⊤Σ−1(x−μ1)+21(x−μ0)⊤Σ−1(x−μ0)+log1−ϕϕ

log 1 ( 2 π ) d / 2 ∣ Σ ∣ 1 / 2 \frac{1}{(2\pi)^{d/2}|\Sigma|^{1/2}} (2π)d/2∣Σ∣1/21在 log p ( x ∣ y = 1 ) − log p ( x ∣ y = 0 ) \log p(x|y=1) - \log p(x|y=0) logp(x∣y=1)−logp(x∣y=0)相减消去了

展开:

log r ( x ) = − 1 2 x ⊤ Σ − 1 x + x ⊤ Σ − 1 μ 1 − 1 2 μ 1 ⊤ Σ − 1 μ 1 + 1 2 x ⊤ Σ − 1 x − x ⊤ Σ − 1 μ 0 + 1 2 μ 0 ⊤ Σ − 1 μ 0 + log ϕ 1 − ϕ = ( μ 1 − μ 0 ) ⊤ Σ − 1 x + 1 2 μ 0 ⊤ Σ − 1 μ 0 − 1 2 μ 1 ⊤ Σ − 1 μ 1 + log ( ϕ 1 − ϕ ) = θ ⊤ x + θ 0 \begin{aligned} \log r(x) &= -\frac{1}{2}x^\top \Sigma^{-1}x + x^\top \Sigma^{-1}\mu_1 - \frac{1}{2}\mu_1^\top \Sigma^{-1}\mu_1 \\ &\quad + \frac{1}{2}x^\top \Sigma^{-1}x - x^\top \Sigma^{-1}\mu_0 + \frac{1}{2}\mu_0^\top \Sigma^{-1}\mu_0 + \log \frac{\phi}{1 - \phi} \\ &= (\mu_1 - \mu_0)^\top \Sigma^{-1} x + \frac{1}{2}\mu_0^\top \Sigma^{-1} \mu_0 - \frac{1}{2}\mu_1^\top \Sigma^{-1} \mu_1 + \log\left(\frac{\phi}{1-\phi}\right) \\ &= \theta^\top x + \theta_0 \end{aligned} logr(x)=−21x⊤Σ−1x+x⊤Σ−1μ1−21μ1⊤Σ−1μ1+21x⊤Σ−1x−x⊤Σ−1μ0+21μ0⊤Σ−1μ0+log1−ϕϕ=(μ1−μ0)⊤Σ−1x+21μ0⊤Σ−1μ0−21μ1⊤Σ−1μ1+log(1−ϕϕ)=θ⊤x+θ0

其中, θ = Σ − 1 ( μ 1 − μ 0 ) , θ 0 = 1 2 ( μ 0 ⊤ Σ − 1 μ 0 − μ 1 ⊤ Σ − 1 μ 1 ) + log ϕ 1 − ϕ \theta = \Sigma^{-1}(\mu_1 - \mu_0), \quad \theta_0 = \frac{1}{2}(\mu_0^\top \Sigma^{-1}\mu_0 - \mu_1^\top\Sigma^{-1}\mu_1) + \log \frac{\phi}{1 - \phi} θ=Σ−1(μ1−μ0),θ0=21(μ0⊤Σ−1μ0−μ1⊤Σ−1μ1)+log1−ϕϕ

将上述带入到 p ( y = 1 ∣ x ) p(y=1|x) p(y=1∣x)中,

p ( y = 1 ∣ x ) = 1 1 + e − θ ⊤ x − θ 0 = 1 1 + e − θ ~ ⊤ x ~ p(y=1|x) = \frac{1}{1 + e^{-\theta^\top x - \theta_0}} = \frac{1}{1 + e^{-\tilde{\theta}^\top \tilde{x}}} p(y=1∣x)=1+e−θ⊤x−θ01=1+e−θ~⊤x~1

( x ~ \tilde{x} x~ 包含截距项)。

所以高斯判别分析(GDA)可转化为 Logistic 回归