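Earlier in this series, in "Network security tools on CentOS (20): containerized ClickHouse cluster deployment with swarm", we deployed ClickHouse on a swarm cluster. This time we deploy it through Portainer, which is essentially the same as a swarm deployment. The one real difference is that recent ClickHouse versions ship ClickHouse Keeper, a Raft-based coordination service, so ZooKeeper is no longer needed.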

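Below, six virtual machine nodes (node1~node6) are used to simulate a ClickHouse cluster with 3 shards, each with one primary and one standby replica. node1~node3 double as the Keeper nodes.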

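I. Keeper deployment

Current ClickHouse versions use Keeper, which is built on the Raft consensus algorithm, for coordination. When deploying through Portainer we can pull the official clickhouse-keeper image directly, but just as on bare metal, the configuration file has to be adjusted node by node.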

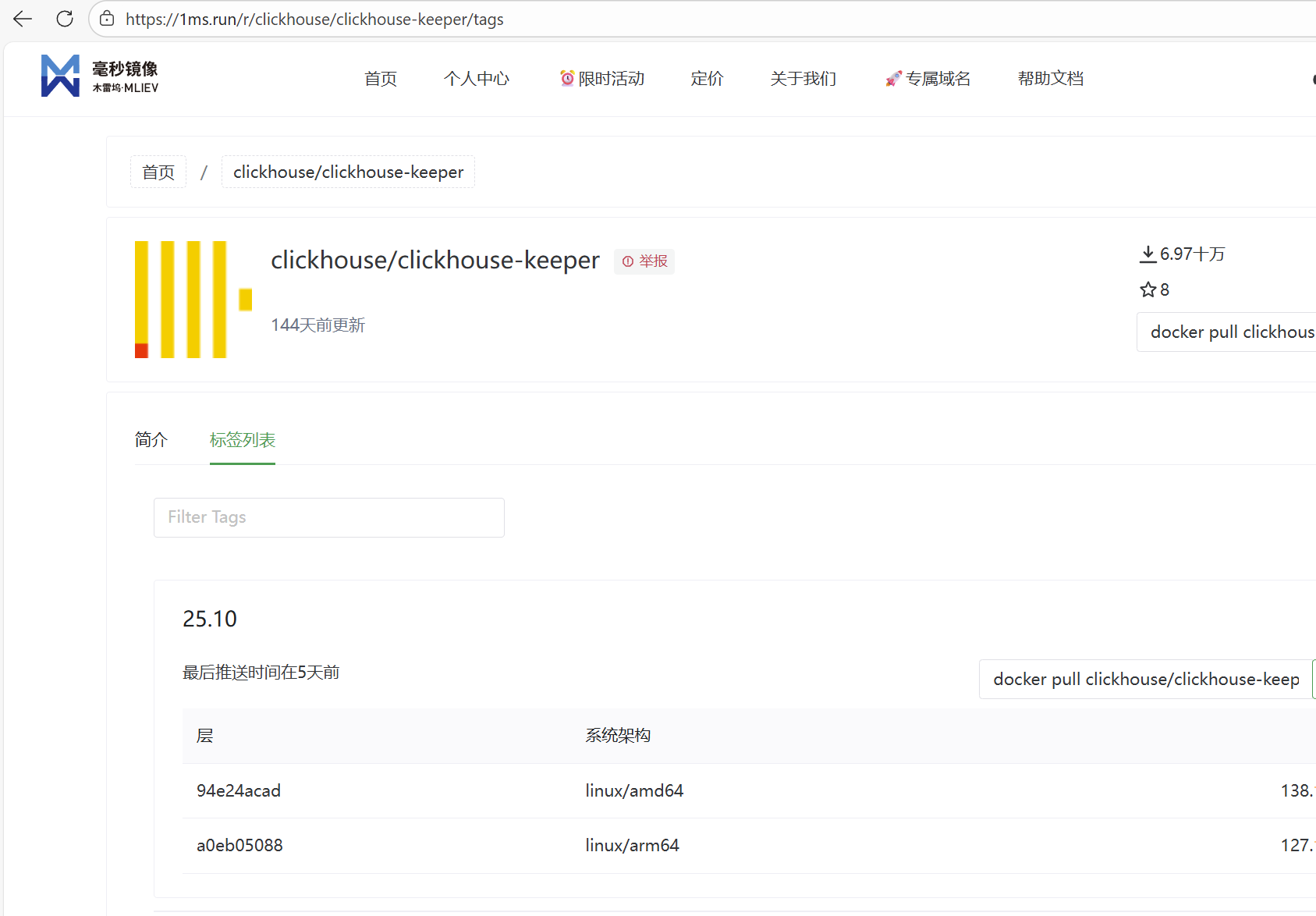

1. Download the Keeper image

As before, the simplest approach is to pull the image from a domestic registry mirror and then re-tag it.
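The pull-and-retag step can be sketched as a small shell function. The mirror host is not named in this article, so `mirror.example.com` in the usage line is purely hypothetical, and the `DOCKER` override is an illustrative convenience for dry runs.

```shell
# DOCKER can be overridden (for example DOCKER=echo) to print the commands
# instead of running them.
DOCKER="${DOCKER:-docker}"

# Pull an image through a registry mirror and re-tag it under its usual name.
# $1 = mirror host (hypothetical, e.g. mirror.example.com)
# $2 = image reference, e.g. clickhouse/clickhouse-keeper:head-alpine
pull_and_retag() {
    mirror="$1"
    image="$2"
    $DOCKER pull "$mirror/$image" &&
    $DOCKER tag "$mirror/$image" "$image" &&
    $DOCKER rmi "$mirror/$image"   # drops only the mirror-prefixed tag
}

# Hypothetical usage:
# pull_and_retag mirror.example.com clickhouse/clickhouse-keeper:head-alpine
```

Because swarm schedules the services onto specific nodes, the image has to be available on every node that will run it.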

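2. Keeper configuration file

On the host of every node that will run a server or a keeper, create a directory to hold that node's configuration files:

[root@node1 share]# cd /root/share

[root@node1 share]# mkdir ckconfig

(1) Export the configuration file

First start a throwaway container from the image and copy the configuration file out of it. The file lives in /etc/clickhouse-keeper: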

[root@node1 share]# docker run -it --name tmp clickhouse/clickhouse-keeper:head-alpine bash

55d75f7fa95f:/#

55d75f7fa95f:/# cd /etc

55d75f7fa95f:/etc# cd clickhouse-keeper/

55d75f7fa95f:/etc/clickhouse-keeper# ls

keeper_config.xml

55d75f7fa95f:/etc/clickhouse-keeper# cat keeper_config.xml

<clickhouse>

<logger>

<!-- Possible levels [1]:

- none (turns off logging)

- fatal

- critical

- error

- warning

- notice

- information

- debug

- trace

[1]: https://github.com/pocoproject/poco/blob/poco-1.9.4-release/Foundation/include/Poco/Logger.h#L105-L114

-->

<level>trace</level>

<log>/var/log/clickhouse-keeper/clickhouse-keeper.log</log>

<errorlog>/var/log/clickhouse-keeper/clickhouse-keeper.err.log</errorlog>

<!-- Rotation policy

See https://github.com/pocoproject/poco/blob/poco-1.9.4-release/Foundation/include/Poco/FileChannel.h#L54-L85

-->

<size>1000M</size>

<count>10</count>

<!-- <console>1</console> --> <!-- Default behavior is autodetection (log to console if not daemon mode and is tty) -->

</logger>

<max_connections>4096</max_connections>

<keeper_server>

<tcp_port>9181</tcp_port>

<!-- Must be unique among all keeper serves -->

<server_id>1</server_id>

<log_storage_path>/var/lib/clickhouse/coordination/logs</log_storage_path>

<snapshot_storage_path>/var/lib/clickhouse/coordination/snapshots</snapshot_storage_path>

<coordination_settings>

<operation_timeout_ms>10000</operation_timeout_ms>

<min_session_timeout_ms>10000</min_session_timeout_ms>

<session_timeout_ms>100000</session_timeout_ms>

<raft_logs_level>information</raft_logs_level>

<compress_logs>false</compress_logs>

<!-- All settings listed in https://github.com/ClickHouse/ClickHouse/blob/master/src/Coordination/CoordinationSettings.h -->

</coordination_settings>

<!-- enable sanity hostname checks for cluster configuration (e.g. if localhost is used with remote endpoints) -->

<hostname_checks_enabled>true</hostname_checks_enabled>

<raft_configuration>

<server>

<id>1</id>

<!-- Internal port and hostname -->

<hostname>localhost</hostname>

<port>9234</port>

</server>

<!-- Add more servers here -->

</raft_configuration>

</keeper_server>

<openSSL>

<server>

<!-- Used for secure tcp port -->

<!-- openssl req -subj "/CN=localhost" -new -newkey rsa:2048 -days 365 -nodes -x509 -keyout /etc/clickhouse-server/server.key -out /etc/clickhouse-server/server.crt -->

<!-- <certificateFile>/etc/clickhouse-keeper/server.crt</certificateFile> -->

<!-- <privateKeyFile>/etc/clickhouse-keeper/server.key</privateKeyFile> -->

<!-- dhparams are optional. You can delete the <dhParamsFile> element.

To generate dhparams, use the following command:

openssl dhparam -out /etc/clickhouse-keeper/dhparam.pem 4096

Only file format with BEGIN DH PARAMETERS is supported.

-->

<!-- <dhParamsFile>/etc/clickhouse-keeper/dhparam.pem</dhParamsFile> -->

<verificationMode>none</verificationMode>

<loadDefaultCAFile>true</loadDefaultCAFile>

<cacheSessions>true</cacheSessions>

<disableProtocols>sslv2,sslv3</disableProtocols>

<preferServerCiphers>true</preferServerCiphers>

</server>

</openSSL>

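</clickhouse>

(2) Modify the configuration

Parameter notes:

For this test only four parameters need to be changed. listen_host sits at the top level of the file; the other three are under keeper_server:

① Widen the listen address

<listen_host>0.0.0.0</listen_host>

② Set the listen port

<tcp_port>9181</tcp_port>

③ Set server_id for each node

server_id is the unique identifier of a keeper and must be unique within the cluster. We plan to build a 3-node keeper cluster on node1, node2 and node3, so they get server_id 1, 2 and 3 respectively.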

<!-- Must be unique among all keeper serves -->

<server_id>1</server_id>
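Since server_id is the only one of the four settings that differs between nodes, the per-node files can be derived from one edited template instead of being edited by hand three times. The following is a minimal sketch; the function name and the output file names are illustrative, not part of the original setup.

```shell
# Print a copy of a keeper config with the <server_id> value replaced.
# $1 = template file, $2 = numeric server id for the target node.
render_keeper_config() {
    sed "s|<server_id>[0-9]*</server_id>|<server_id>$2</server_id>|" "$1"
}

# Hypothetical usage: one output file per node, each to be copied to that
# node's /root/share/ckconfig/clickhouse-keeper.xml afterwards.
# for id in 1 2 3; do
#     render_keeper_config keeper_config.xml > "clickhouse-keeper.node$id.xml"
# done
```

The pattern only touches the `<server_id>` element, so the `<id>` entries inside raft_configuration are left alone.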

④ Raft cluster membership

raft_configuration: every keeper node uses this section to find the other nodes, so it lists the server_id (as id), the hostname and the Raft port of all keeper nodes.

<raft_configuration>

<server>

<id>1</id>

<!-- Internal port and hostname -->

<hostname>keeper1</hostname>

<port>9234</port>

</server>

<server>

<id>2</id>

<!-- Internal port and hostname -->

<hostname>keeper2</hostname>

<port>9234</port>

</server>

<server>

<id>3</id>

<!-- Internal port and hostname -->

<hostname>keeper3</hostname>

<port>9234</port>

</server>

<!-- Add more servers here -->

</raft_configuration>

(3) Sample XML configuration file

The final clickhouse-keeper.xml file (shown here with server_id 1, i.e. for node1) is as follows:

<clickhouse>

<logger>

<!-- Possible levels [1]:

- none (turns off logging)

- fatal

- critical

- error

- warning

- notice

- information

- debug

- trace

[1]: https://github.com/pocoproject/poco/blob/poco-1.9.4-release/Foundation/include/Poco/Logger.h#L105-L114

-->

<level>trace</level>

<log>/var/log/clickhouse-keeper/clickhouse-keeper.log</log>

<errorlog>/var/log/clickhouse-keeper/clickhouse-keeper.err.log</errorlog>

<!-- Rotation policy

See https://github.com/pocoproject/poco/blob/poco-1.9.4-release/Foundation/include/Poco/FileChannel.h#L54-L85

-->

<size>1000M</size>

<count>10</count>

<!-- <console>1</console> --> <!-- Default behavior is autodetection (log to console if not daemon mode and is tty) -->

</logger>

<listen_host>0.0.0.0</listen_host>

<max_connections>4096</max_connections>

<keeper_server>

<tcp_port>9181</tcp_port>

<!-- Must be unique among all keeper serves -->

<server_id>1</server_id>

<log_storage_path>/var/lib/clickhouse/coordination/logs</log_storage_path>

<snapshot_storage_path>/var/lib/clickhouse/coordination/snapshots</snapshot_storage_path>

<coordination_settings>

<operation_timeout_ms>10000</operation_timeout_ms>

<min_session_timeout_ms>10000</min_session_timeout_ms>

<session_timeout_ms>100000</session_timeout_ms>

<raft_logs_level>information</raft_logs_level>

<compress_logs>false</compress_logs>

<!-- All settings listed in https://github.com/ClickHouse/ClickHouse/blob/master/src/Coordination/CoordinationSettings.h -->

</coordination_settings>

<!-- enable sanity hostname checks for cluster configuration (e.g. if localhost is used with remote endpoints) -->

<hostname_checks_enabled>true</hostname_checks_enabled>

<raft_configuration>

<server>

<id>1</id>

<!-- Internal port and hostname -->

<hostname>keeper1</hostname>

<port>9234</port>

</server>

<server>

<id>2</id>

<!-- Internal port and hostname -->

<hostname>keeper2</hostname>

<port>9234</port>

</server>

<server>

<id>3</id>

<!-- Internal port and hostname -->

<hostname>keeper3</hostname>

<port>9234</port>

</server>

<!-- Add more servers here -->

</raft_configuration>

</keeper_server>

<openSSL>

<server>

<!-- Used for secure tcp port -->

<!-- openssl req -subj "/CN=localhost" -new -newkey rsa:2048 -days 365 -nodes -x509 -keyout /etc/clickhouse-server/server.key -out /etc/clickhouse-server/server.crt -->

<!-- <certificateFile>/etc/clickhouse-keeper/server.crt</certificateFile> -->

<!-- <privateKeyFile>/etc/clickhouse-keeper/server.key</privateKeyFile> -->

<!-- dhparams are optional. You can delete the <dhParamsFile> element.

To generate dhparams, use the following command:

openssl dhparam -out /etc/clickhouse-keeper/dhparam.pem 4096

Only file format with BEGIN DH PARAMETERS is supported.

-->

<!-- <dhParamsFile>/etc/clickhouse-keeper/dhparam.pem</dhParamsFile> -->

<verificationMode>none</verificationMode>

<loadDefaultCAFile>true</loadDefaultCAFile>

<cacheSessions>true</cacheSessions>

<disableProtocols>sslv2,sslv3</disableProtocols>

<preferServerCiphers>true</preferServerCiphers>

</server>

</openSSL>

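</clickhouse>

3. Deploying with Portainer

(1) Sample yml file

Once the keeper configuration file has been adjusted on each of node1~node3, the keeper services on these three nodes can be started directly from Portainer with the following yml file: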

version: '3.8'

services:
  keeper1:
    image: clickhouse/clickhouse-keeper:head-alpine
    ports:
      - "9181:9181"
    volumes:
      - keeper_data1:/var/lib/clickhouse-keeper
      - /root/share/ckconfig/clickhouse-keeper.xml:/etc/clickhouse-keeper/keeper_config.xml:ro
      - /root/share/cklog:/var/log:rw
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 1
    networks:
      - kafka_kafka

  keeper2:
    image: clickhouse/clickhouse-keeper:head-alpine
    ports:
      - "9182:9181"
    volumes:
      - keeper_data2:/var/lib/clickhouse-keeper
      - /root/share/ckconfig/clickhouse-keeper.xml:/etc/clickhouse-keeper/keeper_config.xml:ro
      - /root/share/cklog:/var/log:rw
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 2
    networks:
      - kafka_kafka

  keeper3:
    image: clickhouse/clickhouse-keeper:head-alpine
    ports:
      - "9183:9181"
    volumes:
      - keeper_data3:/var/lib/clickhouse-keeper
      - /root/share/ckconfig/clickhouse-keeper.xml:/etc/clickhouse-keeper/keeper_config.xml:ro
      - /root/share/cklog:/var/log:rw
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 3
    networks:
      - kafka_kafka

volumes:
  keeper_data1:
    driver: local
  keeper_data2:
    driver: local
  keeper_data3:
    driver: local

networks:
  kafka_kafka:
    external: true

(2) Parameter notes

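The parameters in the yml file have little to do with Keeper itself; they mainly pin each service to a node and map the configuration file into the container:

① volumes

Maps the clickhouse-keeper.xml file prepared on the host into the container, replacing the default /etc/clickhouse-keeper/keeper_config.xml. Pay close attention to the difference between "-" and "_" in these names (clickhouse-keeper versus keeper_config); this one cost us a long time.

② deploy

Uses constraints on node.Labels.sn to place each keeper on its intended node.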
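The placement constraints only work if each swarm node carries a matching `sn` label. In case the labels are not already in place from the earlier swarm setup, the sketch below shows one way to set them; it assumes the swarm node names are node1..nodeN as in this article and that it runs on a manager node. The `DOCKER` override is an illustrative convenience for dry runs.

```shell
# DOCKER can be overridden (for example DOCKER=echo) for a dry run.
DOCKER="${DOCKER:-docker}"

# Attach the label sn=<n> to swarm node node<n> for n = 1..$1.
label_nodes() {
    n=1
    while [ "$n" -le "$1" ]; do
        $DOCKER node update --label-add "sn=$n" "node$n" || return 1
        n=$((n + 1))
    done
}

# Hypothetical usage for the six nodes of this article:
# label_nodes 6
# docker node inspect --format '{{ .Spec.Labels }}' node1
```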

4. 检查Keeper集群部署情况

Keeper成功启动的情况下,前面配置文件中所设置的监听端口9181应该由进程dockerd监听:

[root@node1 ckconfig]# netstat -lntup|grep 9181

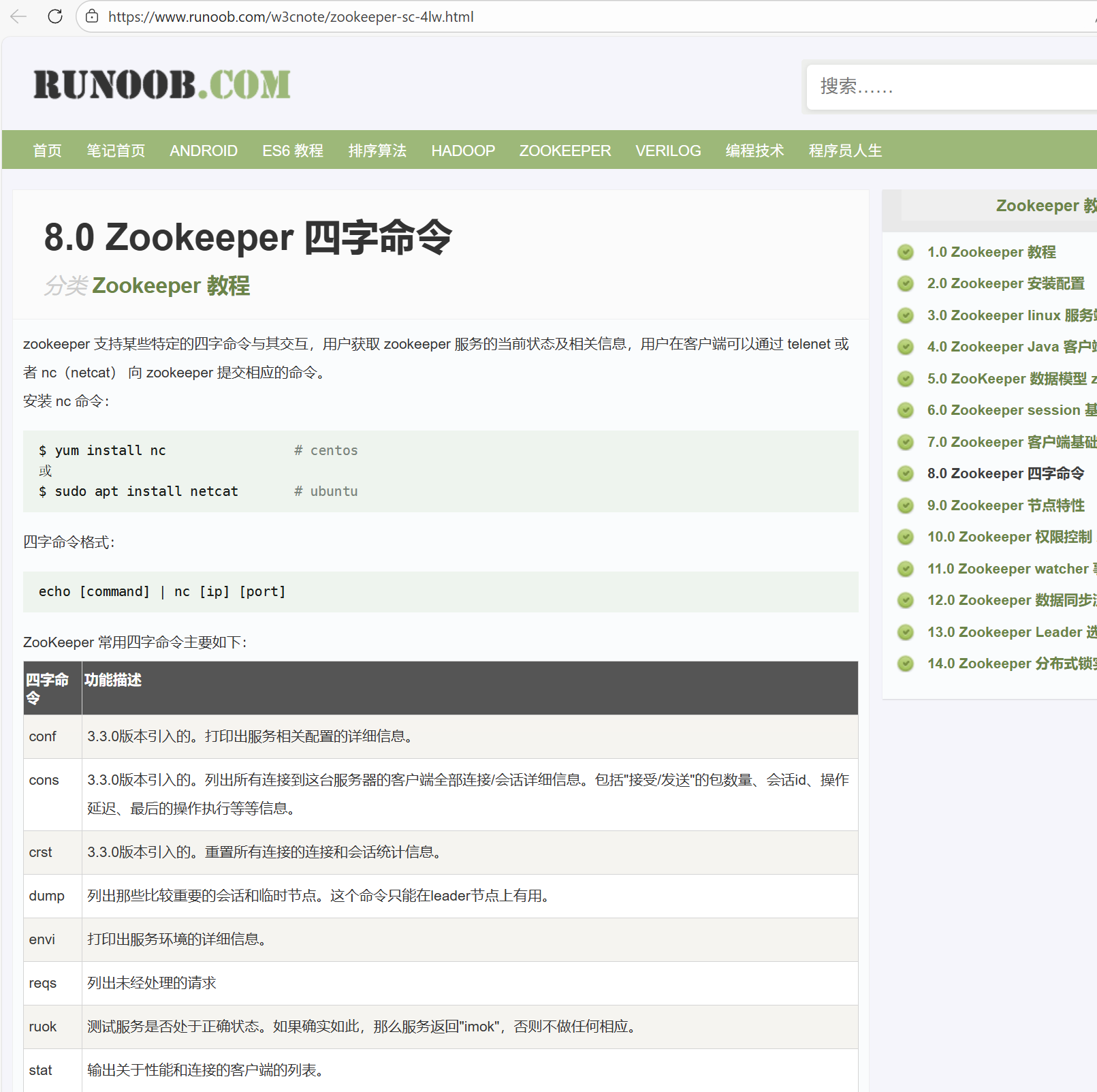

tcp6 0 0 :::9181 :::* LISTEN 68710/dockerd 但仅仅是9181监听端口打开不但表keeper集群启动及选举成功。不过好在9181这个端口也兼容Zookeeper的4字命令,可以用来查看集群状态

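(1) Keeper four-letter commands

Sending the letters of a four-letter command to the listen port of a keeper node returns status information about that node. There are quite a few of these commands; a commonly used one is stat, and there is also the rather amusing "ruok", which tends to remind people of a certain CEO.

The Runoob (菜鸟教程) tutorial gives a list of ZooKeeper's four-letter commands.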
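As a small sketch of how these commands can be scripted, the two helpers below send a four-letter command with nc and pull the role out of a `stat` reply. The function names are illustrative, and `nc -w 2` (a two-second timeout) is an assumption about the nc variant installed on the host.

```shell
# Send a four-letter command to a keeper and print the reply.
# $1 = host, $2 = port, $3 = command (e.g. ruok, stat, mntr)
four_letter() {
    printf '%s' "$3" | nc -w 2 "$1" "$2"
}

# Read `stat` output on stdin and print the value of its "Mode:" line.
keeper_mode() {
    awk -F': ' '$1 == "Mode" { print $2 }'
}

# Hypothetical usage against the published ports of this article:
# for port in 9181 9182 9183; do
#     four_letter 192.168.76.11 "$port" stat | keeper_mode
# done
```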

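(2) Check keeper node status

Sending a four-letter command to the listen ports of the different keeper nodes shows whether each one is the leader or a follower. Note, however, that docker stack publishes ports through the swarm routing mesh: a published port is reachable on every node's IP and always leads to the service that published it. Querying any node IP on port 9181 therefore always reports the state of the one service that exposes 9181: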

[root@node1 ckconfig]# echo stat|nc 192.168.76.12 9181

ClickHouse Keeper version: v25.11.1.2618-testing-0e8b02fb9a8a99a7e88641ff1254673a44a78f72

Clients:

10.0.0.3:46746(recved=0,sent=0)

Latency min/avg/max: 0/0/0

Received: 0

Sent: 0

Connections: 0

Outstanding: 0

Zxid: 0x

Mode: follower

Node count: 4

[root@node1 ckconfig]# echo stat|nc 192.168.76.11 9181

ClickHouse Keeper version: v25.11.1.2618-testing-0e8b02fb9a8a99a7e88641ff1254673a44a78f72

Clients:

10.0.0.2:52132(recved=0,sent=0)

Latency min/avg/max: 0/0/0

Received: 0

Sent: 0

Connections: 0

Outstanding: 0

Zxid: 0x

Mode: follower

Node count: 4

[root@node1 ckconfig]# echo stat|nc 192.168.76.13 9181

ClickHouse Keeper version: v25.11.1.2618-testing-0e8b02fb9a8a99a7e88641ff1254673a44a78f72

Clients:

10.0.0.4:36430(recved=0,sent=0)

Latency min/avg/max: 0/0/0

Received: 0

Sent: 0

Connections: 0

Outstanding: 0

Zxid: 0x

Mode: follower

Node count: 4

So to see the state of each keeper, query the distinct published port of each service (9181, 9182, 9183) instead:

[root@node1 ckconfig]# echo stat|nc 192.168.76.11 9181

ClickHouse Keeper version: v25.11.1.2618-testing-0e8b02fb9a8a99a7e88641ff1254673a44a78f72

Clients:

10.0.0.2:53598(recved=0,sent=0)

Latency min/avg/max: 0/0/0

Received: 0

Sent: 0

Connections: 0

Outstanding: 0

Zxid: 0x

Mode: follower

Node count: 4

[root@node1 ckconfig]# echo stat|nc 192.168.76.11 9182

ClickHouse Keeper version: v25.11.1.2618-testing-0e8b02fb9a8a99a7e88641ff1254673a44a78f72

Clients:

10.0.0.2:33330(recved=0,sent=0)

Latency min/avg/max: 0/0/0

Received: 0

Sent: 0

Connections: 0

Outstanding: 0

Zxid: 0x

Mode: leader

Node count: 4

[root@node1 ckconfig]# echo stat|nc 192.168.76.11 9183

ClickHouse Keeper version: v25.11.1.2618-testing-0e8b02fb9a8a99a7e88641ff1254673a44a78f72

Clients:

10.0.0.2:37114(recved=0,sent=0)

Latency min/avg/max: 0/0/0

Received: 0

Sent: 0

Connections: 0

Outstanding: 0

Zxid: 0x

Mode: follower

Node count: 4二、Clickhouse集群部署

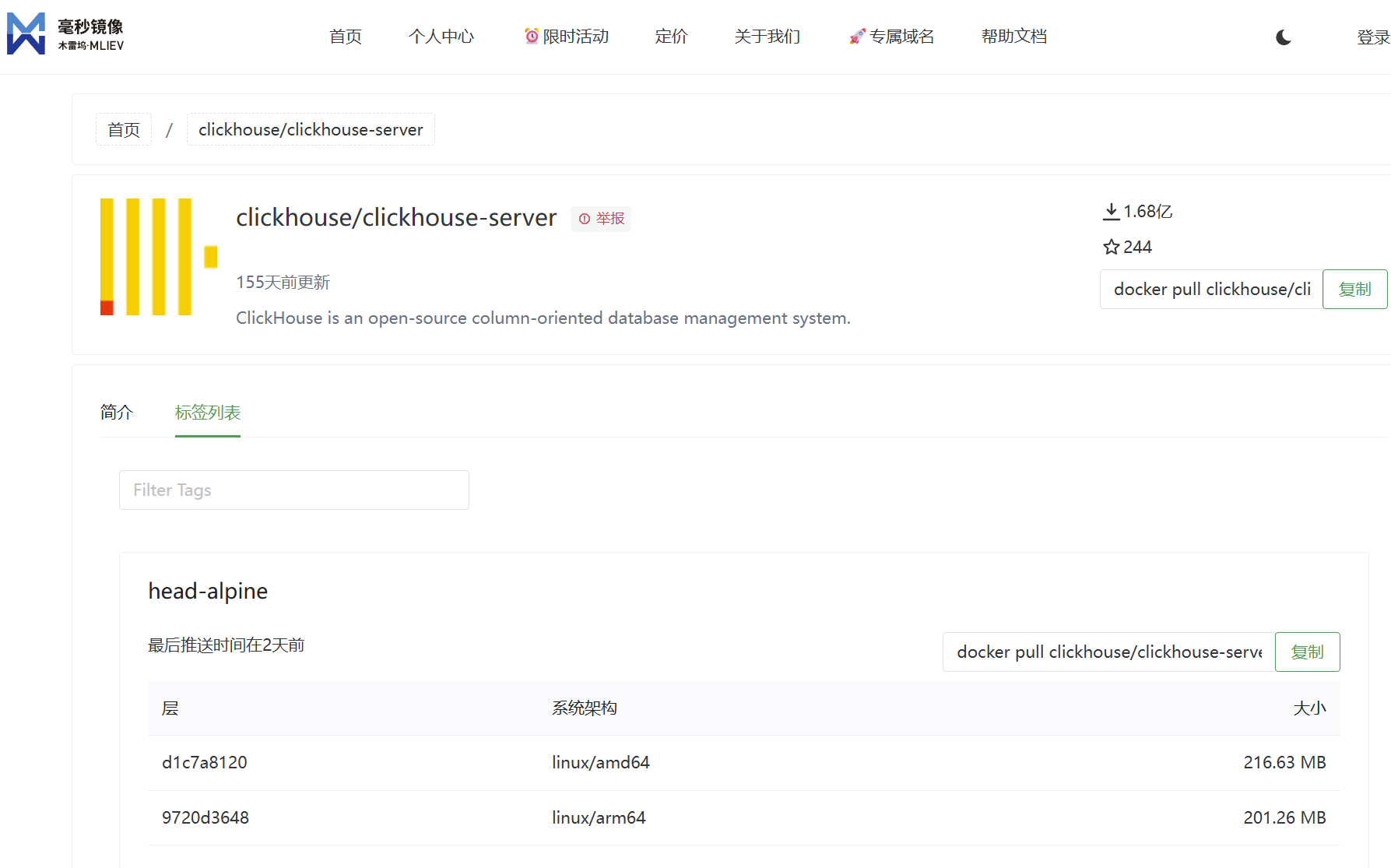

1. 下载Clickhouse Server镜像

2. Server配置文件

(1)拷贝配置文件

[root@node1 ckconfig]# docker run -it --name ckserver -v /root/share/ckconfig:/opt:rw clickhouse/clickhouse-server:head-alpine bash

0d2fbbd44e03:/# cd /etc/clickhouse-server/

0d2fbbd44e03:/etc/clickhouse-server# cp config.xml /opt/server_config.xml

0d2fbbd44e03:/etc/clickhouse-server# cp users.xml /opt/server_users.xml

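0d2fbbd44e03:/etc/clickhouse-server# exit

(2) Modify the ClickHouse server configuration file config.xml

① Listen ports

The HTTP interface is what clients outside the cluster use to query the database. Access from the browser-based web UI or from DBeaver goes through this port:

<http_port>8123</http_port>

The native TCP port is used for communication between the nodes:

<tcp_port>9000</tcp_port>

Several other interfaces are enabled by default. There is no need to change them; just make sure that the ports configured and published through docker later do not collide with them.

② Listen address

<listen_host>0.0.0.0</listen_host>

This enables listening for external connections on 0.0.0.0 (IPv4). It is better not to enable the :: (IPv6) entry, so that a connection arriving over IPv6 does not confuse later debugging.

③ Keeper addresses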

<zookeeper>

<node>

<host>keeper1</host>

<port>9181</port>

</node>

<node>

<host>keeper2</host>

<port>9181</port>

</node>

<node>

<host>keeper3</host>

<port>9181</port>

</node>

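</zookeeper>

These are the service names and the listen port of the keepers configured earlier. Note that both stacks, keeper and ckserver, are attached to the same overlay network that Kafka used before, so they effectively sit in one internal network. Connections must therefore use the container port 9181, not the externally published ports.

Also, do not place a keeper and a Kafka controller on the same node, otherwise their Raft services conflict. One might hope that the ClickHouse servers could simply share the KRaft quorum of the Kafka controllers and skip the Keeper deployment altogether. We did not try this to save ourselves the trouble, and it is unlikely to work: ClickHouse expects a ZooKeeper-compatible endpoint, which Keeper provides and the Kafka controllers do not.

④ Remote server list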

<remote_servers>

<pighome>

<shard>

<replica>

<host>ck1</host>

<port>9000</port>

<user>default</user>

<password>123456</password>

</replica>

<replica>

<host>ck2</host>

<port>9000</port>

<user>default</user>

<password>123456</password>

</replica>

</shard>

<shard>

<replica>

<host>ck3</host>

<port>9000</port>

<user>default</user>

<password>123456</password>

</replica>

<replica>

<host>ck4</host>

<port>9000</port>

<user>default</user>

<password>123456</password>

</replica>

</shard>

<shard>

<replica>

<host>ck5</host>

<port>9000</port>

<user>default</user>

<password>123456</password>

</replica>

<replica>

<host>ck6</host>

<port>9000</port>

<user>default</user>

<password>123456</password>

</replica>

</shard>

</pighome>

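</remote_servers>

We use 6 nodes to deploy 3 shards, each with 2 replicas (one primary, one standby), and all of them have to be listed in the remote_servers section.

The settings involved are:

- **Cluster name:** the element directly under remote_servers, here <pighome>, is the cluster name. It is used later by the distributed table.

- **internal_replication:** with false, the distributed table itself writes each insert to every replica of the shard; with true, it writes to a single replica and leaves replication to the replicated table engine.

- **weight:** the relative share of inserted data that the shard receives.

- **Host name:** the service name from the swarm yml file.

- **Port:** must match the ClickHouse server's native TCP port, here 9000.

- **User name:** the user for logging in to the database (we use the simple user name and password setup here, which also avoids having to configure and map users.xml).

- **Password:** the password of that user.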

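⑤ Shard and replica macros

<macros>

<shard>ck1ck4</shard>

<replica>ck1</replica>

</macros>

The macros decide the role of each node in the ClickHouse cluster: the shard and replica names, and the mapping between them, define how the cluster is split into shards and which nodes are replicas of each other.

Among the parameters above, the macros are the only setting whose value differs from node to node. With 6 nodes split into 3 shards of one primary and one standby each, the values on the individual nodes are:

| No. | Service | shard  | replica |
|-----|---------|--------|---------|
| 1   | ck1     | ck1ck4 | ck1     |
| 2   | ck2     | ck2ck5 | ck2     |
| 3   | ck3     | ck3ck6 | ck3     |
| 4   | ck4     | ck1ck4 | ck4     |
| 5   | ck5     | ck2ck5 | ck5     |
| 6   | ck6     | ck3ck6 | ck6     |

The shard and replica names can be chosen freely, as long as they are unique and the pairing is consistent. Note that the remote_servers example above groups ck1/ck2, ck3/ck4 and ck5/ck6 into shards, while this table pairs ck1/ck4, ck2/ck5 and ck3/ck6. The two should describe the same grouping, so adjust one of them to match the other.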
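The per-node macros can also be generated rather than typed. The sketch below follows the pairing of the table (ck1/ck4, ck2/ck5, ck3/ck6); both function names are illustrative.

```shell
# Print the <macros> block for one ClickHouse node.
# $1 = shard name shared by both replicas of the shard, $2 = replica name.
render_macros() {
    printf '<macros>\n    <shard>%s</shard>\n    <replica>%s</replica>\n</macros>\n' "$1" "$2"
}

# Shard name for service ck<n> under the table's pairing:
# ck1 and ck4 -> ck1ck4, ck2 and ck5 -> ck2ck5, ck3 and ck6 -> ck3ck6.
shard_of() {
    a=$(( ($1 - 1) % 3 + 1 ))
    printf 'ck%dck%d\n' "$a" $((a + 3))
}

# Hypothetical usage: print the macros of all six nodes.
# for n in 1 2 3 4 5 6; do
#     render_macros "$(shard_of "$n")" "ck$n"
# done
```

The resulting block still has to be placed into each node's own copy of config.xml.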

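⑥ Internal replication

<internal_replication>false</internal_replication>

With <internal_replication>false</internal_replication>, a distributed table in the ClickHouse cluster writes inserted data to all replicas itself, without guaranteeing that the replicas stay consistent.

Specifically:

- **Write behavior:** with false, the distributed table writes the data to every replica of the shard.

- **Data consistency:** although the data is sent to every replica, the writes are not coordinated, so the replicas may drift apart over time.

- **Typical use:** this setting is meant for non-replicated tables, i.e. when the underlying table is not a replicated table.

- **Difference for replicated tables:** if the underlying table is a replicated table, the value should be true. The distributed table then writes to only one replica, and the replicated table itself synchronizes the data between replicas.

- **Synchronization:** with false there is no synchronization between replicas beyond the duplicate writes. Coordinating replicas through ZooKeeper or Keeper is the job of the replicated table engines, which is the case true is designed for.

Since the local table created later in this article uses ReplicatedReplacingMergeTree, true is the more appropriate value for this cluster.

⑦ Shard weight

<weight>1</weight>

Setting it to 1 is generally enough.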
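The remote_servers listing above does not show where internal_replication and weight go: both are children of each `<shard>` element. The generator below is a minimal sketch of that layout; the function name is illustrative, and the `<user>` and `<password>` elements of each replica are left out for brevity.

```shell
# Print one <shard> element for the remote_servers section.
# $1 = weight, $2 = internal_replication (true or false),
# remaining arguments = replica host names.
render_shard() {
    weight="$1"
    repl="$2"
    shift 2
    printf '<shard>\n'
    printf '    <weight>%s</weight>\n' "$weight"
    printf '    <internal_replication>%s</internal_replication>\n' "$repl"
    for host in "$@"; do
        printf '    <replica>\n        <host>%s</host>\n        <port>9000</port>\n    </replica>\n' "$host"
    done
    printf '</shard>\n'
}

# Hypothetical usage:
# render_shard 1 true ck1 ck2
```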

3. Deploying with Portainer

(1) Sample yml file

version: '3.8'

services:
  keeper1:
    hostname: keeper1
    image: clickhouse/clickhouse-keeper:head-alpine
    ports:
      - "9181:9181"
    volumes:
      - keeper_data1:/var/lib/clickhouse-keeper
      - /root/share/ckconfig/clickhouse-keeper.xml:/etc/clickhouse-keeper/keeper_config.xml:ro
      - /root/share/cklog:/var/log:rw
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 1
    networks:
      - cknet

  keeper2:
    hostname: keeper2
    image: clickhouse/clickhouse-keeper:head-alpine
    ports:
      - "9182:9181"
    volumes:
      - keeper_data2:/var/lib/clickhouse-keeper
      - /root/share/ckconfig/clickhouse-keeper.xml:/etc/clickhouse-keeper/keeper_config.xml:ro
      - /root/share/cklog:/var/log:rw
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 2
    networks:
      - cknet

  keeper3:
    hostname: keeper3
    image: clickhouse/clickhouse-keeper:head-alpine
    ports:
      - "9183:9181"
    volumes:
      - keeper_data3:/var/lib/clickhouse-keeper
      - /root/share/ckconfig/clickhouse-keeper.xml:/etc/clickhouse-keeper/keeper_config.xml:ro
      - /root/share/cklog:/var/log:rw
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 3
    networks:
      - cknet

  ck1:
    hostname: ck1
    image: clickhouse/clickhouse-server:head-alpine
    ports:
      - "8123:8123"
      - "9000:9000"
    environment:
      - CLICKHOUSE_USER=default
      - CLICKHOUSE_PASSWORD=123456
    volumes:
      - ck_data1:/var/lib/clickhouse
      - /root/share/ckconfig/server_config.xml:/etc/clickhouse-server/config.xml:ro
      - /root/share/ckconfig/logs:/var/log/clickhouse-server:rw
    depends_on:
      - keeper1
      - keeper2
      - keeper3
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 1
    networks:
      - cknet

  ck2:
    hostname: ck2
    image: clickhouse/clickhouse-server:head-alpine
    ports:
      - "8223:8123"
      - "9100:9000"
    environment:
      - CLICKHOUSE_USER=default
      - CLICKHOUSE_PASSWORD=123456
    volumes:
      - ck_data2:/var/lib/clickhouse
      - /root/share/ckconfig/server_config.xml:/etc/clickhouse-server/config.xml:ro
      - /root/share/ckconfig/logs:/var/log/clickhouse-server:rw
    depends_on:
      - keeper1
      - keeper2
      - keeper3
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 2
    networks:
      - cknet

  ck3:
    hostname: ck3
    image: clickhouse/clickhouse-server:head-alpine
    ports:
      - "8323:8123"
      - "9200:9000"
    environment:
      - CLICKHOUSE_USER=default
      - CLICKHOUSE_PASSWORD=123456
    volumes:
      - ck_data3:/var/lib/clickhouse
      - /root/share/ckconfig/server_config.xml:/etc/clickhouse-server/config.xml:ro
      - /root/share/ckconfig/logs:/var/log/clickhouse-server:rw
    depends_on:
      - keeper1
      - keeper2
      - keeper3
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 3
    networks:
      - cknet

  ck4:
    hostname: ck4
    image: clickhouse/clickhouse-server:head-alpine
    ports:
      - "8423:8123"
      - "9300:9000"
    environment:
      - CLICKHOUSE_USER=default
      - CLICKHOUSE_PASSWORD=123456
    volumes:
      - ck_data4:/var/lib/clickhouse
      - /root/share/ckconfig/server_config.xml:/etc/clickhouse-server/config.xml:ro
      - /root/share/ckconfig/logs:/var/log/clickhouse-server:rw
    depends_on:
      - keeper1
      - keeper2
      - keeper3
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 4
    networks:
      - cknet

  ck5:
    hostname: ck5
    image: clickhouse/clickhouse-server:head-alpine
    ports:
      - "8523:8123"
      - "9400:9000"
    environment:
      - CLICKHOUSE_USER=default
      - CLICKHOUSE_PASSWORD=123456
    volumes:
      - ck_data5:/var/lib/clickhouse
      - /root/share/ckconfig/server_config.xml:/etc/clickhouse-server/config.xml:ro
      - /root/share/ckconfig/logs:/var/log/clickhouse-server:rw
    depends_on:
      - keeper1
      - keeper2
      - keeper3
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 5
    networks:
      - cknet

  ck6:
    hostname: ck6
    image: clickhouse/clickhouse-server:head-alpine
    ports:
      - "8623:8123"
      - "9500:9000"
    environment:
      - CLICKHOUSE_USER=default
      - CLICKHOUSE_PASSWORD=123456
    volumes:
      - ck_data6:/var/lib/clickhouse
      - /root/share/ckconfig/server_config.xml:/etc/clickhouse-server/config.xml:ro
      - /root/share/ckconfig/logs:/var/log/clickhouse-server:rw
    depends_on:
      - keeper1
      - keeper2
      - keeper3
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == 6
    networks:
      - cknet

volumes:
  ck_data1:
    driver: local
  ck_data2:
    driver: local
  ck_data3:
    driver: local
  ck_data4:
    driver: local
  ck_data5:
    driver: local
  ck_data6:
    driver: local
  keeper_data1:
    driver: local
  keeper_data2:
    driver: local
  keeper_data3:
    driver: local

networks:
  cknet:
    driver: overlay
    attachable: true

(2) Parameter notes

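The environment section sets the user name and default password used at startup, i.e. the ones configured in the remote_servers section of config.xml.

Port 9000 probably does not need to be published; we simply left the mapping in.

The important part is mapping each node's modified config.xml to /etc/clickhouse-server/config.xml inside the container.

In addition, the data has to stay on its node to avoid unnecessary migration, so placement is again constrained with node.Labels.sn.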
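The six server definitions differ only in the node number and the published ports, so they can be generated from one template. The sketch below reproduces the port pattern of the yml above (8123/9000 for ck1, then +100 per node); the function name is illustrative, and depends_on is left out on purpose because `docker stack deploy` does not honor it.

```shell
# Print the compose service definition for ck<n> (n = 1..6).
render_ck_service() {
    n="$1"
    http=$((8023 + 100 * n))   # 8123 for ck1, 8223 for ck2, ...
    tcp=$((8900 + 100 * n))    # 9000 for ck1, 9100 for ck2, ...
    cat <<EOF
  ck$n:
    hostname: ck$n
    image: clickhouse/clickhouse-server:head-alpine
    ports:
      - "$http:8123"
      - "$tcp:9000"
    environment:
      - CLICKHOUSE_USER=default
      - CLICKHOUSE_PASSWORD=123456
    volumes:
      - ck_data$n:/var/lib/clickhouse
      - /root/share/ckconfig/server_config.xml:/etc/clickhouse-server/config.xml:ro
      - /root/share/ckconfig/logs:/var/log/clickhouse-server:rw
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.Labels.sn == $n
    networks:
      - cknet
EOF
}

# Hypothetical usage: emit all six service blocks for pasting under "services:".
# for n in 1 2 3 4 5 6; do render_ck_service "$n"; done
```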

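(3) A few remarks

Configuring ClickHouse on swarm feels like black magic every time. Each node needs its own edited configuration file, the slightest mistake can break things, and the log files are not easy to interpret. All that is left is to recheck the configuration files over and over and change this and that until it suddenly works. Below are the settings that changed between the non-working and the working state; which of them actually made the difference, we do not know.

① hostname

In a stack the service name is normally used in place of the hostname, and pinging from inside a container confirms that the service name resolves like a hostname, so hostname should not be strictly required. We are not sure whether leaving it out could affect swarm's load balancing; since it does no harm, we set it anyway.

② depends_on

The server depends on keeper at startup. Note, however, that docker stack deploy ignores depends_on in swarm mode, so this entry does not enforce any start order. That fits our observation: the cluster also started successfully without it, possibly because keeper simply happened to be up before the servers.

③ CLICKHOUSE_USER

CLICKHOUSE_USER is set to default. Another user such as "root" should work too, as long as it matches the settings under remote_servers in config.xml, but we do not know whether users.xml would then have to be configured to match as well.

None of these would be hard to test, but after all the back and forth we were burnt out and did not try. We will revisit them the next time the black magic strikes.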
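Because `docker stack deploy` does not enforce a start order, one way to take the guesswork out of the keeper-before-server question is to poll keeper with the `ruok` command before deploying or restarting the servers. This is only a sketch: the function names are illustrative, and `nc -w 2` is an assumption about the nc variant installed.

```shell
# Return 0 if the keeper at $1:$2 answers "imok" to the ruok command.
keeper_ruok() {
    [ "$(printf 'ruok' | nc -w 2 "$1" "$2" 2>/dev/null)" = "imok" ]
}

# Poll until the keeper answers or the attempts run out.
# $1 = host, $2 = port, $3 = max attempts, $4 = seconds between attempts.
wait_for_keeper() {
    i=0
    while [ "$i" -lt "$3" ]; do
        keeper_ruok "$1" "$2" && return 0
        i=$((i + 1))
        sleep "$4"
    done
    return 1
}

# Hypothetical usage from a host, using the published keeper ports:
# for port in 9181 9182 9183; do
#     wait_for_keeper 192.168.76.11 "$port" 30 2 || echo "keeper on $port not ready"
# done
```

A positive `ruok` reply only shows that the process is up; whether a leader has been elected still has to be checked with `stat`.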

4. 检查clickhouse部署情况

(1) 从WEB访问

从集群外部登录集群中节点的8123端口:

点击Web SQL UI:

点击Web SQL UI:

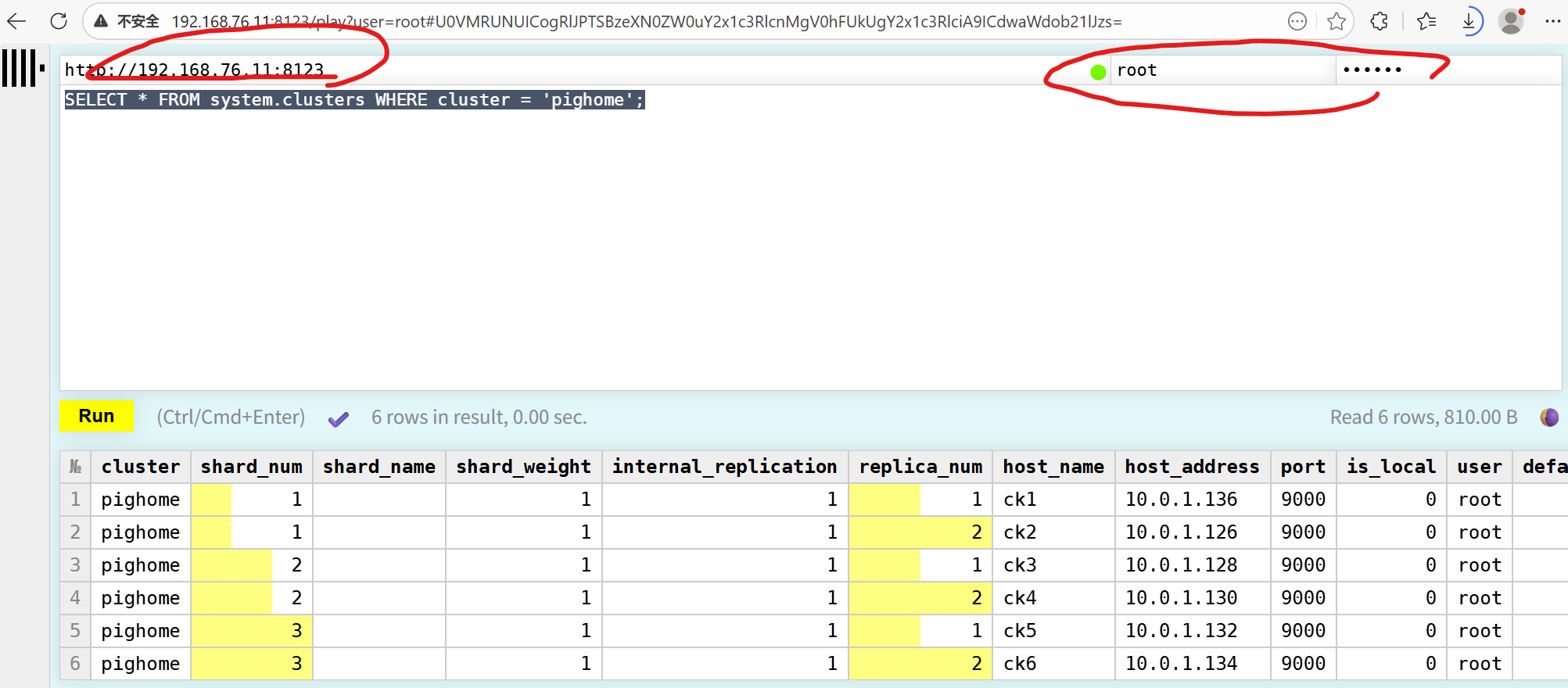

使用所配置的用户名密码,执行select指令

sql

SELECT * FROM system.clusters WHERE cluster = 'pighome';查看分片情况,和我们在配置文件中配置的一致,说明集群启动成功了。

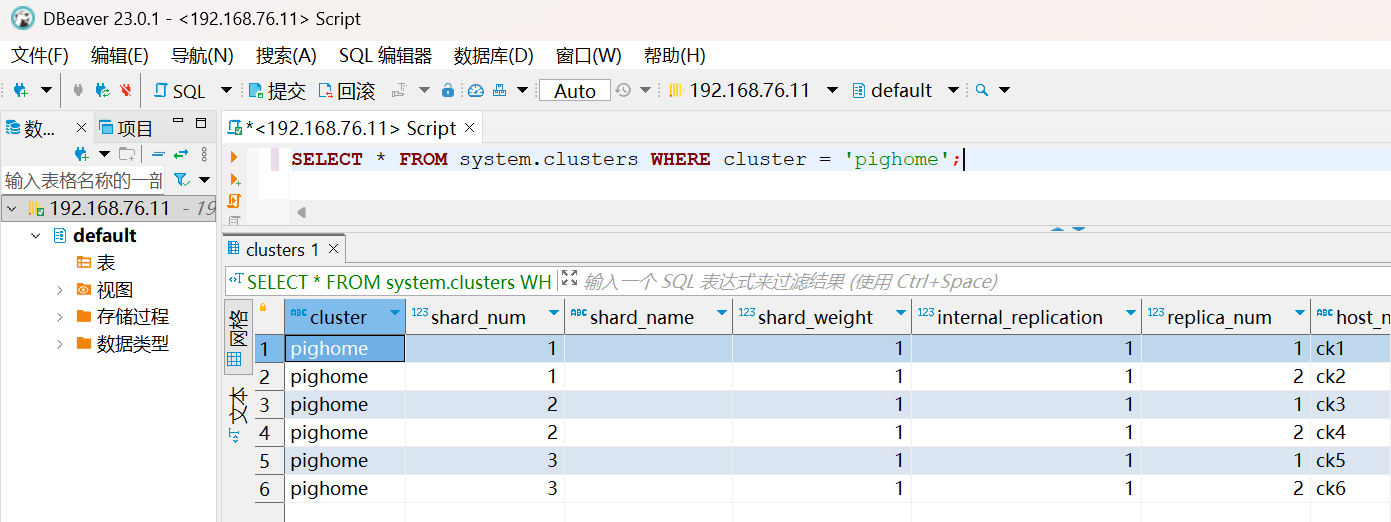

(2)从DBeaver访问

效果是一样的:
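The same check can be scripted against the HTTP interface on port 8123, which accepts a query in the request body. The sketch below assumes the default/123456 credentials used in this article; the function name and the `CURL` override are illustrative.

```shell
# CURL can be overridden, for example to add options or to dry-run.
CURL="${CURL:-curl}"

# Run one SQL statement through the ClickHouse HTTP interface.
# $1 = host, $2 = published HTTP port, $3 = SQL text.
ck_http_query() {
    $CURL -sS --user "default:123456" --data-binary "$3" "http://$1:$2/"
}

# Hypothetical usage against ck1's published port:
# ck_http_query 192.168.76.11 8123 \
#     "SELECT cluster, shard_num, replica_num, host_name FROM system.clusters WHERE cluster = 'pighome'"
```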

三、 配置从kafka到clickhouse的连接

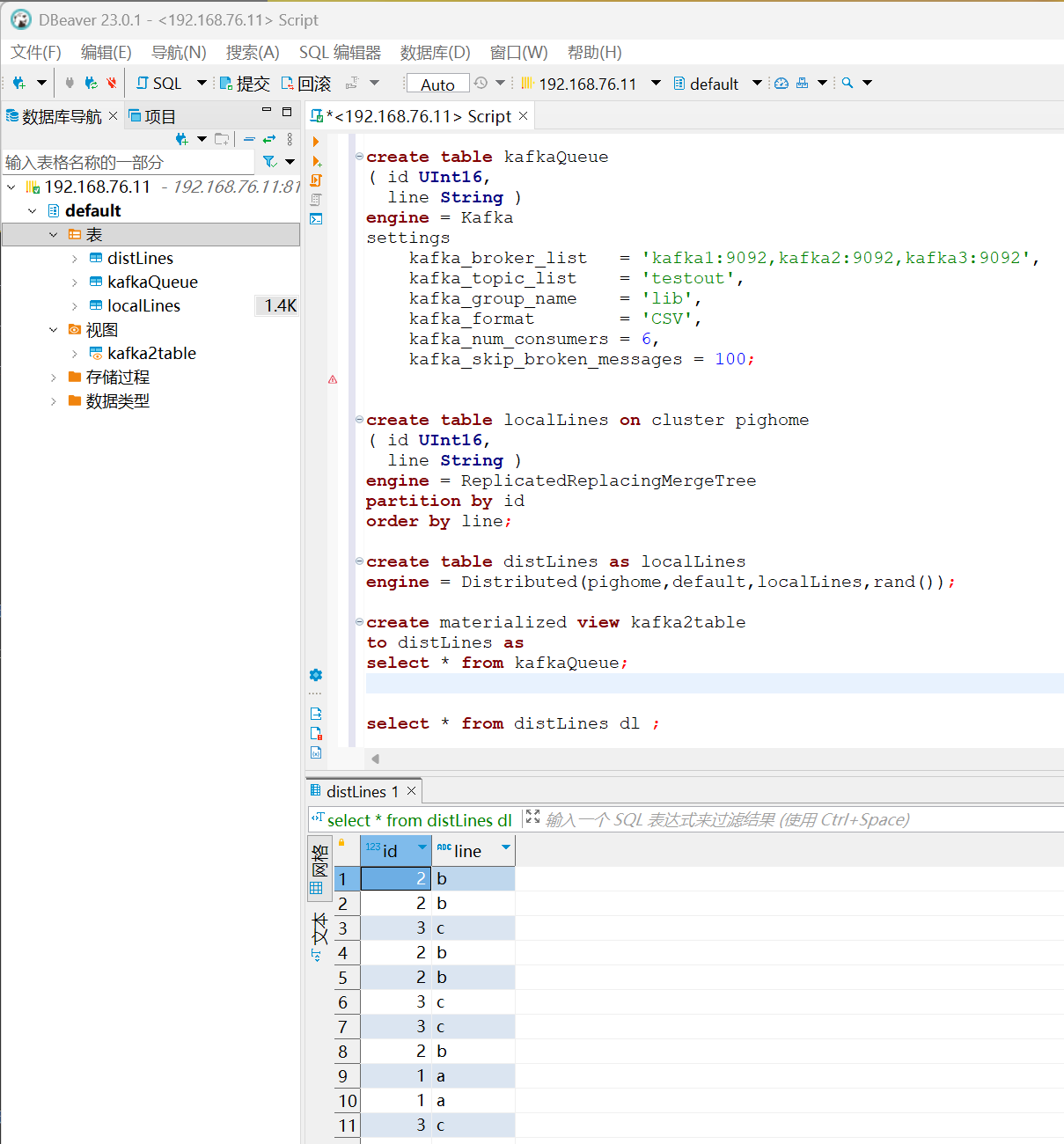

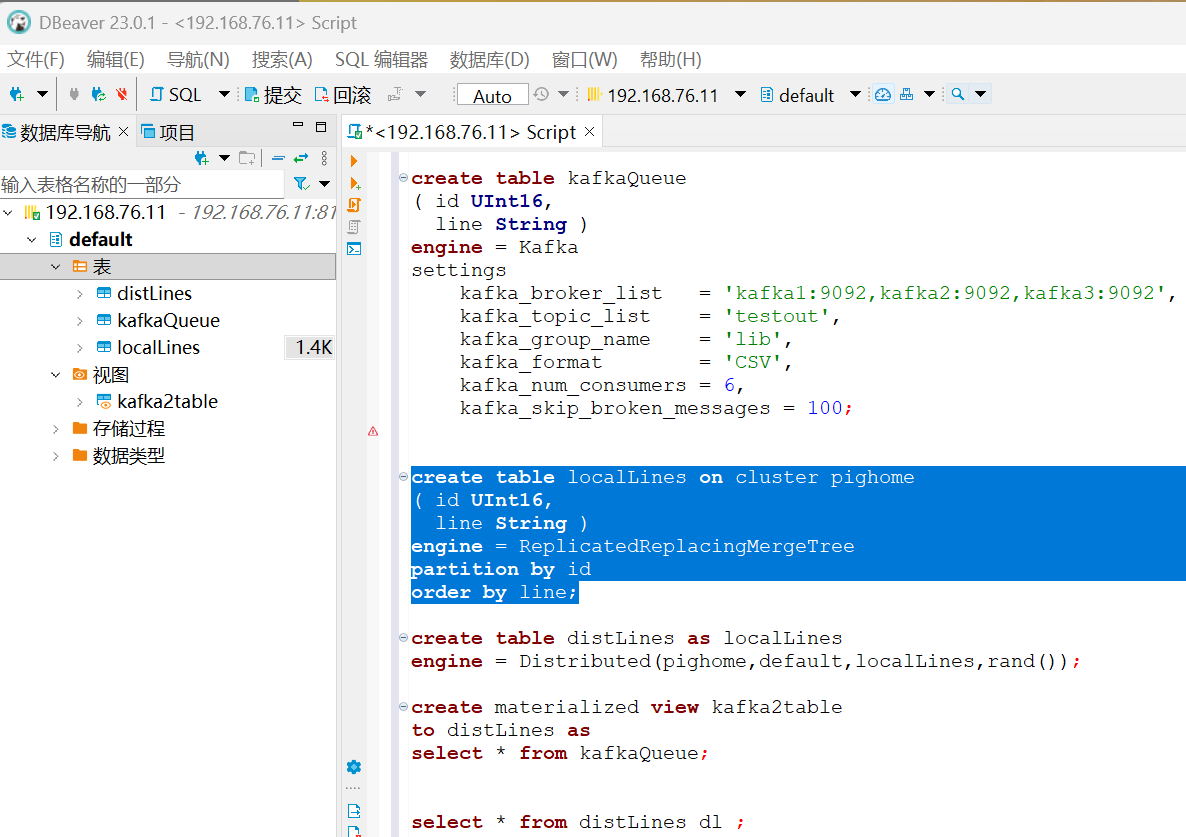

Clickhouse本身支持创建到Kafka的连接,提供了相应的表引擎。通过创建一个Kafka表引擎,然后利用一个物化视图和对应本地表连接,再通过对应本地表的分布式表,即可将kafka的数据输入到Clickhouse集群,并进行检索查询了。

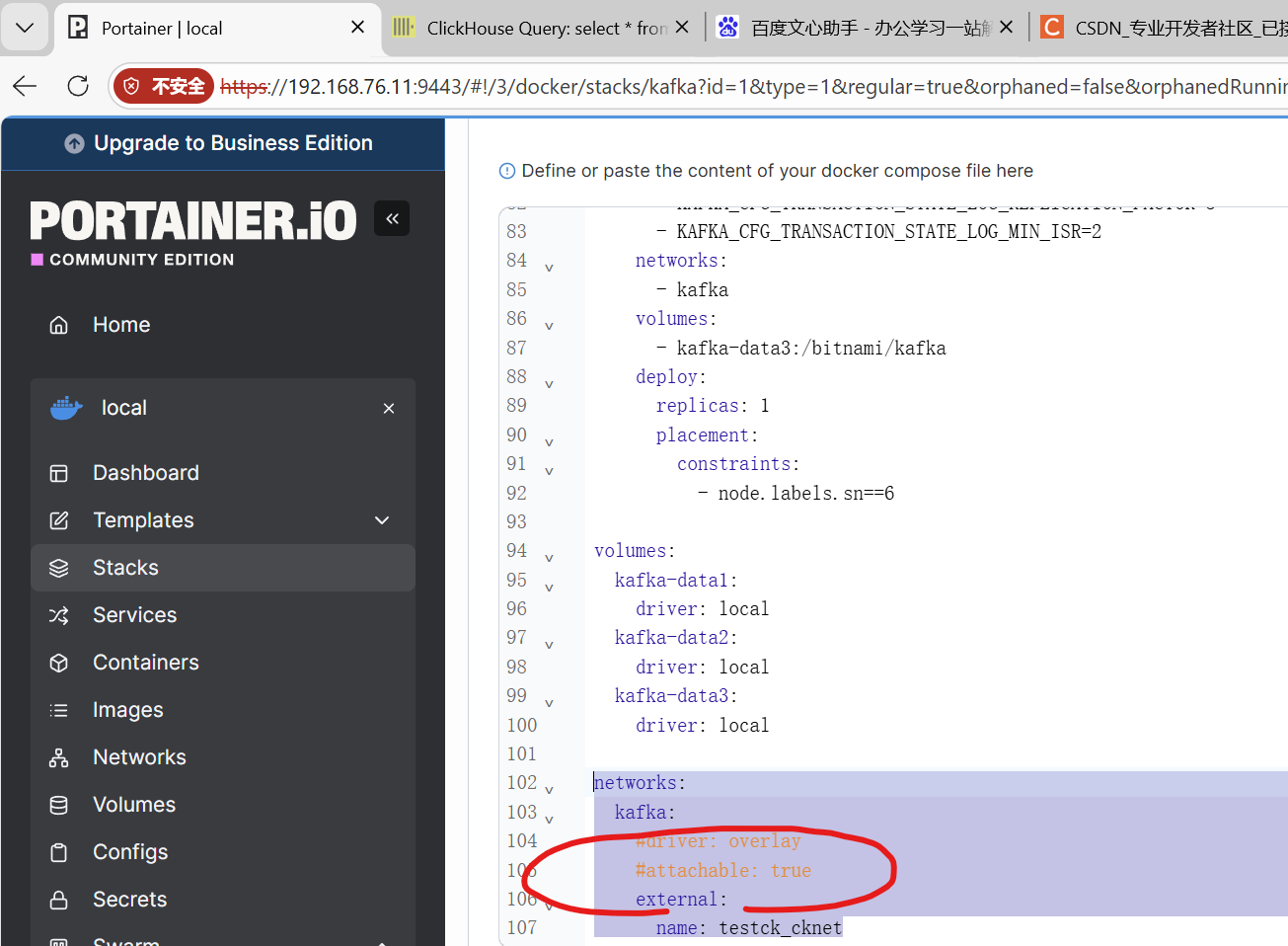

1. 将Kafka加入到Clickhouse所在网络

在前面我们已经试验过的Kafka的stack中,更改一下网络,将3个kafka节点加入到ck的网络里面来,注意网络名称及其前缀。另外需要再次提起的是,不要把kafka和keeper装在一个宿主机上,kraft会冲突。

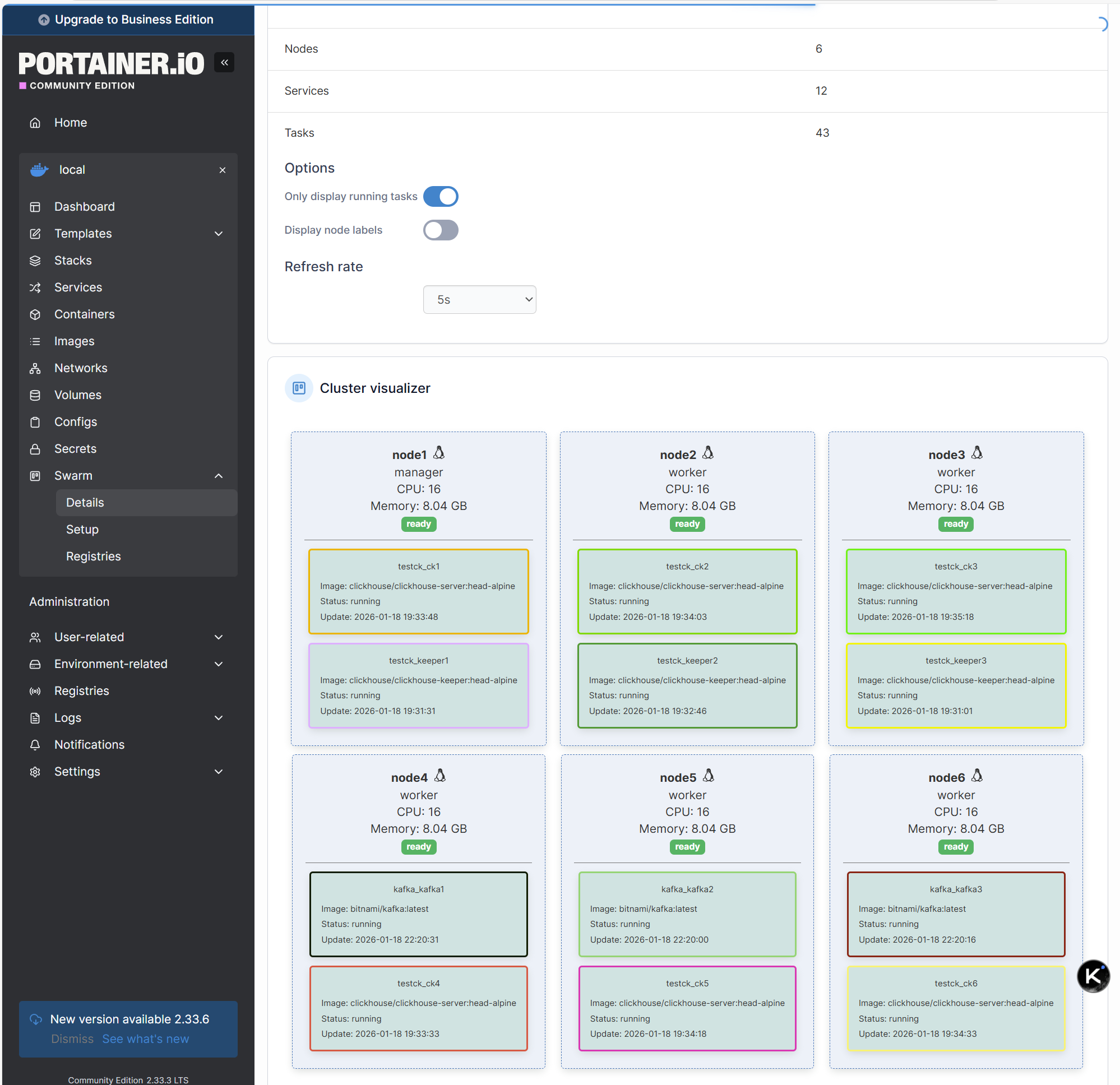

启动后,整个swarm集群看起来如下所示:

2. Connect Kafka to ClickHouse

(1) Create the Kafka engine table

create table kafkaQueue
(   id UInt16,
    line String )
engine = Kafka
settings
    kafka_broker_list = 'kafka1:9092,kafka2:9092,kafka3:9092',
    kafka_topic_list = 'testout',
    kafka_group_name = 'lib',
    kafka_format = 'CSV',
    kafka_num_consumers = 6,
    kafka_skip_broken_messages = 100;

(2) Create the local table

create table localLines on cluster pighome
(   id UInt16,
    line String )
engine = ReplicatedReplacingMergeTree
partition by id
order by line;

(3) Create the distributed table

create table distLines as localLines
engine = Distributed(pighome,default,localLines,rand());

(4) Create the materialized view

create materialized view kafka2table
to distLines as
select * from kafkaQueue;

After that, data arriving from Kafka can be retrieved from the distributed table with select. Of course there is none yet.
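To watch rows arrive without opening a SQL client, the row count of a table can be polled from the shell. This is a sketch under assumptions: it uses the default/123456 credentials of this article, the function name is illustrative, and `CLICKHOUSE_CLIENT` is an illustrative override for setups where clickhouse-client is only available inside a container.

```shell
# Command used to reach clickhouse-client; override it when the client only
# exists inside a container, e.g.
#   CLICKHOUSE_CLIENT="docker exec <container> clickhouse-client"
CLICKHOUSE_CLIENT="${CLICKHOUSE_CLIENT:-clickhouse-client}"

# Print the number of rows in a table.
# $1 = ClickHouse host to connect to, $2 = table name.
ck_count() {
    $CLICKHOUSE_CLIENT --host "$1" --user default --password 123456 \
        --query "SELECT count() FROM $2"
}

# Hypothetical usage from inside the cknet network:
# ck_count ck1 distLines
```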

3. Test

On node4, enter the Kafka container, start a producer and connect it to the testout topic:

[root@node4 ckconfig]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

511800aaaa1c bitnami/kafka:latest "/opt/bitnami/script..." 37 seconds ago Up 34 seconds 9092/tcp kafka_kafka1.1.gr5dc19ocrflakv35lu0xukzd

c85c8ed53fcb clickhouse/clickhouse-server:head-alpine "/entrypoint.sh" 3 hours ago Up 3 hours 8123/tcp, 9000/tcp, 9009/tcp testck_ck4.1.ghx7hjzwi0mpcm9mpc33ypcwz

[root@node4 ckconfig]# docker exec -it 5118 bash

I have no name!@511800aaaa1c:/$ kafka-topics.sh --list --bootstrap-server 'kafka1:9092,kafka2:9092,kafka3:9092'

__consumer_offsets

I have no name!@511800aaaa1c:/$ kafka-topics.sh --create --topic testout --bootstrap-server 'kafka1:9092,kafka2:9092,kafka3:9092'

Created topic testout.

I have no name!@511800aaaa1c:/$ kafka-console-producer.sh --topic testout --bootstrap-server 'kafka1:9092,kafka2:9092,kafka3:9092'

>1,a

>2,b

>3,c

>1,a

>2,b

>3,c

>1,a

>2,b

>3,c

>

On node5, enter the Kafka container and start a consumer to confirm that Kafka is working:

[root@node5 ckconfig]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

814de9f38cca bitnami/kafka:latest "/opt/bitnami/script..." 3 minutes ago Up 3 minutes 9092/tcp kafka_kafka2.1.0wbapmx2bvyyts6qh4k1pj95l

6e37c6c92293 clickhouse/clickhouse-server:head-alpine "/entrypoint.sh" 3 hours ago Up 3 hours 8123/tcp, 9000/tcp, 9009/tcp testck_ck5.1.y8ypybs3uuyotangvn65hgi28

[root@node5 ckconfig]# docker exec -it 814d bash

I have no name!@814de9f38cca:/$ kafka-console-consumer.sh --topic testout --from-beginning --bootstrap-server 'kafka1:9092,kafka2:9092,kafka3:9092'

1,a

2,b

3,c

1,a

2,b

3,c

1,a

2,b

3,c

In DBeaver, query the distributed table: select * from distLines

The result is as shown in the figure.
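For larger tests, the CSV input typed into the producer by hand can be generated instead. The helper below is a small sketch with an illustrative name; it cycles through the same three records used in the test so that each line matches the (id, line) columns of the Kafka table.

```shell
# Print $1 CSV lines cycling through 1,a / 2,b / 3,c.
gen_test_lines() {
    awk -v n="$1" 'BEGIN {
        split("a b c", letters, " ")
        for (i = 0; i < n; i++) {
            k = i % 3 + 1
            printf "%d,%s\n", k, letters[k]
        }
    }'
}

# Hypothetical usage from the host, piping into the producer inside the
# Kafka container (the container ID is the one listed by docker container ls):
# gen_test_lines 9 | docker exec -i <container> kafka-console-producer.sh \
#     --topic testout --bootstrap-server 'kafka1:9092,kafka2:9092,kafka3:9092'
```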