Contents

[1 Installation](#1 安装)

[2 Use cases](#2 使用案例)

[2.1 Document search, e.g. RAG](#2.1 文档搜索比如rag)

[2.2 Image retrieval: search by image](#2.2 图片检索以图搜图功能)

[3 Integration](#3 集成)

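Milvus provides powerful data modeling capabilities that let you organize unstructured or multimodal data into structured Collections. It supports many data types for different attribute models, including common numeric and character types, various vector types, arrays, sets, and JSON.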

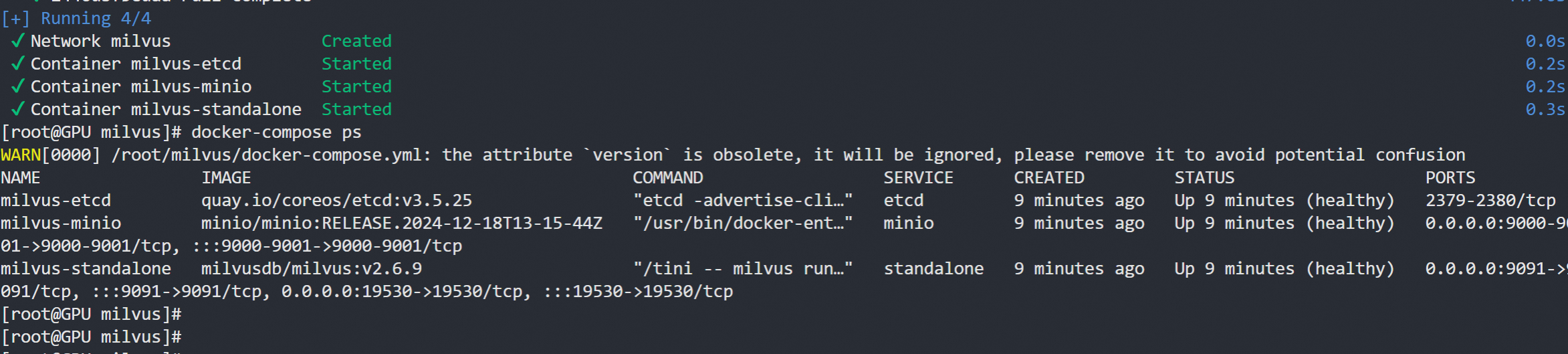

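1 Installation

Official guide (standalone Milvus with Docker Compose): https://milvus.io/docs/zh/install_standalone-docker-compose.md

```shell
# Download the standalone Docker Compose binary (writing to /usr/local/bin needs root)
sudo curl -SL https://github.com/docker/compose/releases/download/v2.30.3/docker-compose-linux-x86_64 -o /usr/local/bin/docker-compose

# Make the standalone binary in the install path executable
sudo chmod +x /usr/local/bin/docker-compose
sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

# Download the Milvus standalone compose file
wget https://github.com/milvus-io/milvus/releases/download/v2.6.9/milvus-standalone-docker-compose.yml -O docker-compose.yml

# Start Milvus with the standalone binary installed above
# (use `sudo docker compose up -d` instead if the Docker Compose plugin is installed)
sudo docker-compose up -d
```

Output:

```
Creating milvus-etcd ... done
Creating milvus-minio ... done
Creating milvus-standalone ... done
```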
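To confirm the standalone instance is actually up before connecting, you can poll its HTTP health endpoint. The following is a minimal standard-library sketch, assuming the compose file publishes port 9091 with a `/healthz` endpoint (the one its own container healthcheck calls); the function name `is_milvus_healthy` is hypothetical, and you should adjust the URL for your deployment.

```python
import urllib.request


def is_milvus_healthy(url="http://localhost:9091/healthz", timeout=3.0):
    """Return True if the health endpoint answers with HTTP 200, else False.

    Assumption: port 9091 and the /healthz path match the standalone compose
    file; pass a different `url` if your deployment differs.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError/HTTPError (refused connection, DNS failure, non-2xx status)
        # and socket timeouts are all OSError subclasses
        return False
```

Call `is_milvus_healthy()` after starting the containers; it keeps returning `False` until Milvus has finished starting.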

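How to enable password authentication

Reference: https://milvus.io/docs/zh/authenticate.md?tab=docker

Enable authorization in the Milvus configuration file (milvus.yaml), then restart Milvus:

```yaml
...
common:
...
  security:
    authorizationEnabled: true
...
```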
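Once authorization is on, every client authenticates with a token of the form `user:password` (the default account is `root:Milvus`). Hardcoding it in scripts is fine for a demo but easy to leak. A minimal sketch that reads the credentials from environment variables instead; `MILVUS_USER`, `MILVUS_PASSWORD` and the helper `milvus_token` are hypothetical names chosen here, not something Milvus reads by itself.

```python
import os


def milvus_token(env=None):
    """Build a Milvus "user:password" token from environment variables.

    MILVUS_USER / MILVUS_PASSWORD are hypothetical variable names for this
    sketch; the user defaults to "root" when MILVUS_USER is unset.
    """
    env = os.environ if env is None else env
    user = env.get("MILVUS_USER", "root")
    password = env.get("MILVUS_PASSWORD")
    if not password:
        raise RuntimeError("Set MILVUS_PASSWORD before connecting to Milvus")
    return f"{user}:{password}"
```

Usage: `client = MilvusClient(uri="http://localhost:19530", token=milvus_token())`.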

The default user and password are root:Milvus. Connect with them like this:

```python
# pip install -U pymilvus
from pymilvus import MilvusClient

client = MilvusClient(
    uri='http://localhost:19530',  # replace with your own Milvus server address
    token="root:Milvus",  # "user:password"
)
```

2 Use cases

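2.1 Document search, e.g. RAG

This example uses a cloud-hosted embedding model; if you want to host your own embedding model, see my earlier article.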

python

import dashscope

from dashscope import TextEmbedding

from pymilvus import MilvusClient

dashscope.api_key=''

client = MilvusClient("http://127.0.0.1:19530")

def create_collection(collection_name):

if client.has_collection(collection_name="demo_collection"):

client.drop_collection(collection_name="demo_collection")

client.create_collection(

collection_name=collection_name,

dimension=1024, # The vectors we will use in this demo has 768 dimensions

metric_type="IP", # Inner product distance

consistency_level="Bounded", # Supported values are (`"Strong"`, `"Session"`, `"Bounded"`, `"Eventually"`). See https://milvus.io/docs/consistency.md#Consistency-Level for more details.

)

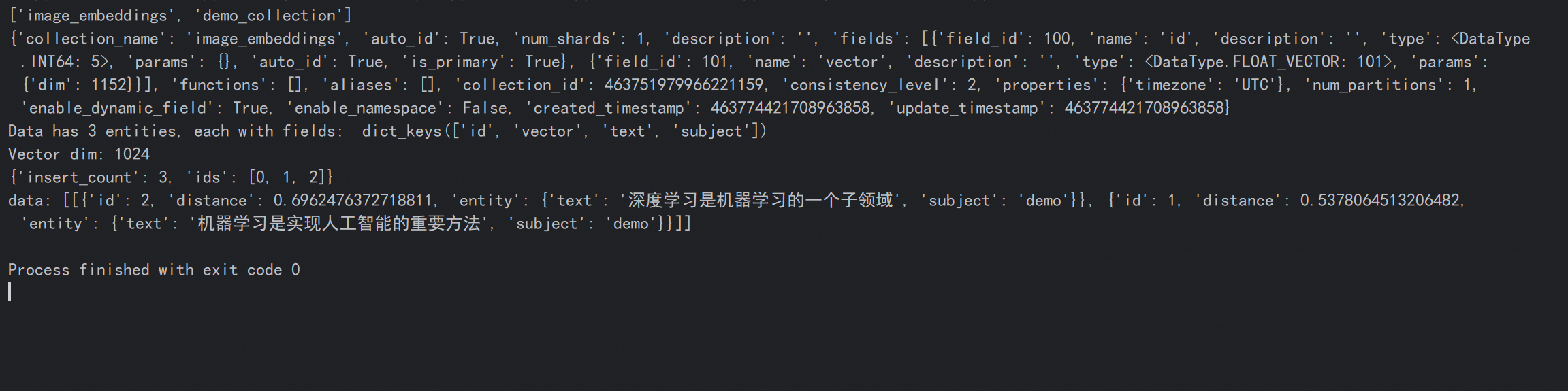

print(client.list_collections())

print(client.describe_collection(collection_name=client.list_collections()[0]))

def emb_text(text):

return (

TextEmbedding.call(

model="text-embedding-v4",

input=text,

dimension=1024

)

).output['embeddings']

def insert_data(collection_name, data):

res = client.insert(collection_name=collection_name, data=data)

return res

def search_data(collection_name):

query_vectors = emb_text(["深度学习"])[0]['embedding']

res = client.search(

collection_name=collection_name, # target collection

data=[query_vectors], # query vectors

limit=2, # number of returned entities

output_fields=["text", "subject"], # specifies fields to be returned

)

print(res)

if __name__ == '__main__':

# 创建集合

create_collection("demo_collection")

documents = [

"人工智能是计算机科学的一个分支",

"机器学习是实现人工智能的重要方法",

"深度学习是机器学习的一个子领域"

]

# test_embedding = emb_text("This is a test")

test_embedding = emb_text(documents)

embedding_dim = len(test_embedding)

# print(embedding_dim) 索引长度也就是定义的维度1024

# print(test_embedding) 索引内容

data = [

{"id": i, "vector": test_embedding[i]['embedding'], "text": documents[i], "subject": "demo"}

for i in range(len(documents))

]

print("Data has", len(data), "entities, each with fields: ", data[0].keys())

print("Vector dim:", len(data[0]["vector"]))

# 插入数据

res = insert_data("demo_collection", data)

print(res)

# 查询数据

search_data("demo_collection")可以通过相似值检索出相关内容

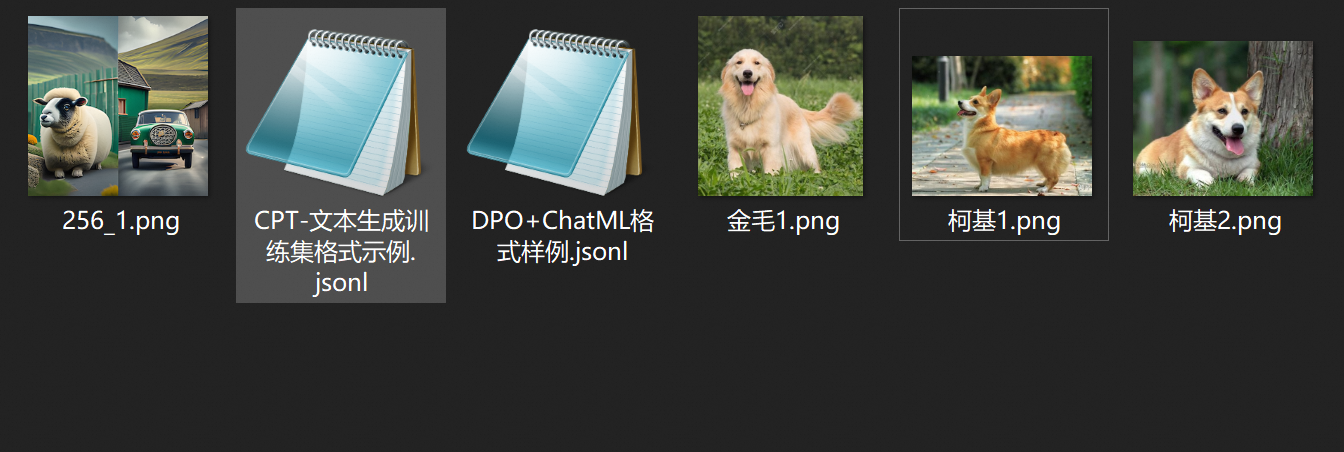

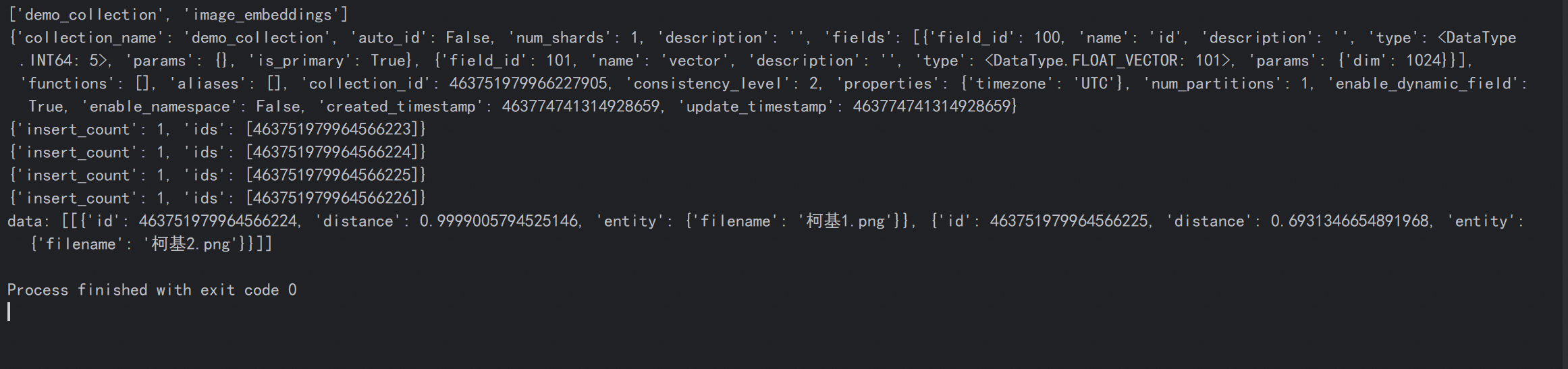
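What `client.search(..., limit=2)` does with `metric_type="IP"` can be illustrated without a server: score every stored vector by its inner product with the query vector, keep the `limit` highest scores, and, for RAG, paste the returned texts into the prompt sent to an LLM. The sketch below is a brute-force version with toy 3-dimensional vectors (made up for illustration; the real text-embedding-v4 vectors in this demo have 1024 dimensions). Milvus typically answers the same question with an index rather than this linear scan, and the prompt template in `build_rag_prompt` is a hypothetical example.

```python
import heapq


def inner_product(a, b):
    # IP metric: a larger value means more similar
    return sum(x * y for x, y in zip(a, b))


def search_ip(query, rows, limit=2):
    """Brute-force top-`limit` search by inner product.

    `rows` are dicts shaped like the inserted entities: {"id", "vector", "text"}.
    Returns hits {"id", "distance", "text"} sorted by descending score.
    """
    scored = (
        {"id": r["id"], "distance": inner_product(query, r["vector"]), "text": r["text"]}
        for r in rows
    )
    return heapq.nlargest(limit, scored, key=lambda h: h["distance"])


def build_rag_prompt(question, hits):
    # Hypothetical template: the retrieved texts become the LLM's context
    context = "\n".join(f"- {h['text']}" for h in hits)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\nQuestion: {question}"
    )


rows = [
    {"id": 0, "vector": [0.9, 0.1, 0.0], "text": "AI is a branch of computer science"},
    {"id": 1, "vector": [0.6, 0.7, 0.1], "text": "Machine learning is an important way to achieve AI"},
    {"id": 2, "vector": [0.2, 0.8, 0.5], "text": "Deep learning is a subfield of machine learning"},
]
query = [0.1, 0.9, 0.4]  # toy stand-in for the embedding of "deep learning"
hits = search_ip(query, rows, limit=2)
print([h["id"] for h in hits])  # [2, 1]
print(build_rag_prompt("What is deep learning?", hits))
```

The document that is least related to the query (id 0) is left out of the prompt, which is the point of retrieval: the LLM only sees the top matches.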

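2.2 Image retrieval: search by image

This example uses two corgi images and one golden retriever image, stored as PNG files in ../data.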

python

import base64

import os

import dashscope

from milvus.demo import client

dashscope.api_key=''

image = "https://dashscope.oss-cn-beijing.aliyuncs.com/images/256_1.png"

def create_collection(collection_name):

if client.has_collection(collection_name=collection_name):

client.drop_collection(collection_name=collection_name)

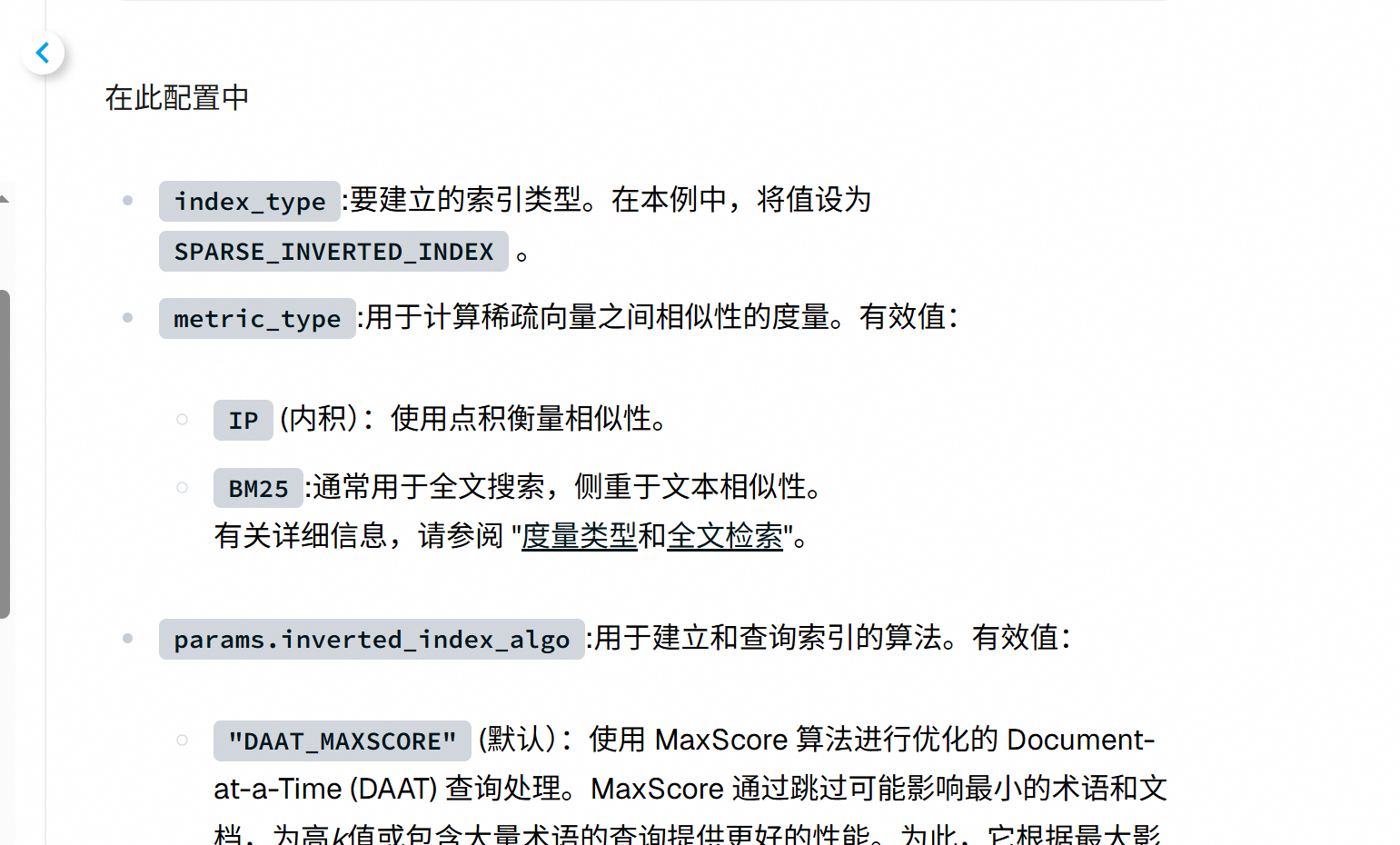

client.create_collection(

collection_name=collection_name,

auto_id=True,

vector_field_name="vector",

dimension=1152, # The vectors we will use in this demo has 768 dimensions

metric_type="IP", # Inner product distance

consistency_level="Bounded", # Supported values are (`"Strong"`, `"Session"`, `"Bounded"`, `"Eventually"`). See https://milvus.io/docs/consistency.md#Consistency-Level for more details.

)

print(client.list_collections())

print(client.describe_collection(collection_name=client.list_collections()[0]))

# def insert_data(collection_name, data):

# res = client.insert(collection_name=collection_name, data=data)

# return res

def image_to_base64(image_path):

with open(image_path, "rb") as image_file:

# 读取文件并转换为Base64

base64_image = base64.b64encode(image_file.read()).decode('utf-8')

# 设置图像格式

image_format = "png" # 根据实际情况修改,比如jpg、bmp 等

image_data = f"data:image/{image_format};base64,{base64_image}"

# 输入数据

input = [{'image': image_data}]

return input

#input = [{'image': image}]

def emb_text(input):

# 调用模型接口

resp = dashscope.MultiModalEmbedding.call(

model="tongyi-embedding-vision-plus",

input=input

).output['embeddings'][0]['embedding']

# print(resp)

# print(len(resp))

return resp

def search_data(input,collection_name):

query_vectors = emb_text(input)

res = client.search(

collection_name=collection_name, # target collection

data=[query_vectors], # query vectors

limit=2, # number of returned entities

output_fields=["filename"], # specifies fields to be returned

)

print(res)

if __name__ == '__main__':

create_collection("image_embeddings")

for file in os.listdir("../data"):

if file.endswith(".png"):

input = image_to_base64("../data/" + file)

image_embedding = emb_text(input)

res = client.insert(

"image_embeddings",

{"vector": image_embedding, "filename": file},

)

print(res)

search_data(image_to_base64("../data/柯基1.png"),"image_embeddings")

#emb_text(input)这里通过filename做演示,发现搜索柯基图片的时候返回的也是柯基,实际业务可以将图片地址返回前端使用以图搜相似图片
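Because `柯基1.png` is itself stored in the collection, the top hit of that search is typically the query image itself. To show the user *other* similar images, one option is to search with one extra result (e.g. `limit=3`), drop the hit whose filename equals the query, and map the remaining filenames to URLs. The sketch below assumes each hit looks like one entry of a pymilvus search result (`{"id", "distance", "entity": {...}}`); `similar_images`, the base URL, and the filenames other than `柯基1.png` are hypothetical toy values.

```python
from urllib.parse import quote


def similar_images(hits, query_filename, base_url, limit=2):
    """Turn search hits into front-end results, skipping the query image.

    `hits` is assumed to look like one entry of a pymilvus search result:
    [{"id": ..., "distance": ..., "entity": {"filename": ...}}, ...].
    `base_url` is a hypothetical location where the images are served.
    """
    results = []
    for hit in hits:
        filename = hit["entity"]["filename"]
        if filename == query_filename:
            continue  # the query image matches itself; not useful to show
        # Percent-encode the name so non-ASCII filenames form a valid URL
        results.append({"url": base_url + quote(filename), "score": hit["distance"]})
        if len(results) == limit:
            break
    return results


# Toy hits with made-up scores, in descending-similarity order as search returns them
hits = [
    {"id": 1, "distance": 1.00, "entity": {"filename": "柯基1.png"}},
    {"id": 2, "distance": 0.83, "entity": {"filename": "柯基2.png"}},
    {"id": 3, "distance": 0.41, "entity": {"filename": "金毛.png"}},
]
print(similar_images(hits, "柯基1.png", "https://example.com/images/", limit=1))
```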

3 Integration

To be continued in a later update.

LlamaIndex Milvus vector store example: https://docs.llamaindex.org.cn/en/stable/examples/vector_stores/MilvusIndexDemo/