Table of Contents

- I. Overview of State in Flink
- II. State Categories
- III. Keyed State
  - 1. ValueState: alert when two consecutive water levels differ by more than 10
  - 2. ListState: top 3 water levels per sensor
  - 3. MapState: count occurrences of each water level
  - 4. ReducingState: sum of water levels
  - 5. AggregatingState: average water level
  - 6. TTL: state time-to-live (keeping state from growing without bound)
- IV. Operator State
- V. State Backends and How to Choose
- VI. Summary

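In real-time computing, Flink's real strength is stateful computation: window aggregation, deduplication, alerting, risk-control rules, and user profiling all depend on State.

This article walks through Flink state management from the ground up:

✅ What state is and why it is needed

✅ Managed vs Raw state

✅ The five Keyed State types (Value/List/Map/Reducing/Aggregating)

✅ How to configure TTL

✅ Where Operator State applies (List/UnionList/Broadcast)

✅ How to choose between the HashMap and RocksDB state backends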

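I. Overview of State in Flink

**State** is the historical information an operator has to remember while it processes data.

For example:

- Counting UV: remember whether this user has been seen before

- Alerting on the difference between two consecutive readings: remember the previous water level

- Window aggregation: remember the running total inside the window

Without state, stream processing is limited to purely functional map/filter operations, which rarely covers real business logic.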
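To make the UV example concrete, here is a minimal plain-Java sketch (no Flink APIs involved). The class name `UvCounter` and its methods are hypothetical and exist only for illustration; the point is that the answer to "is this a new visitor?" depends on what was seen before, which is exactly what state holds.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Plain-Java illustration only; no Flink APIs are used here.
class UvCounter {
    // The "state": every user id seen so far.
    private final Set<String> seenUsers = new HashSet<>();

    // Returns true only the first time a user id is seen.
    boolean isNewVisitor(String userId) {
        return seenUsers.add(userId);
    }

    int uniqueVisitors() {
        return seenUsers.size();
    }

    public static void main(String[] args) {
        UvCounter counter = new UvCounter();
        for (String user : List.of("u1", "u2", "u1", "u3", "u2")) {
            counter.isNewVisitor(user);
        }
        System.out.println(counter.uniqueVisitors()); // prints 3
    }
}
```

A stateless map/filter has no equivalent of `seenUsers`, so it cannot tell the second "u1" from the first.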

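II. State Categories

1) Managed State vs Raw State

- Managed State: Flink handles storage, access, checkpointing, failure recovery, and redistribution on rescaling

  ✅ Your code only calls the API (recommended, the default choice in production)

- Raw State: you manage the memory, serialization, and recovery yourself

  ❌ High maintenance cost, rarely used

Conclusion: production jobs almost always use Managed State.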

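2) Keyed State vs Operator State

Keyed State

- Prerequisite: the stream must be a `KeyedStream`, i.e. the result of `keyBy`

- Property: state is isolated per key; different keys never see each other's state

- Typical uses: aggregation, windows, per-user or per-device statistics and alerts

Note: on a stream without `keyBy`, even a RichMapFunction cannot access Keyed State.

Operator State

- Not partitioned by key

- One copy of the state per parallel subtask

- Commonly found in Source/Sink operators that need to store offsets or buffer batches (for example the Kafka connector)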
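The difference in scope can be modelled in a few lines of plain Java. This is only a sketch under simplifying assumptions: the class `StateScopes` is hypothetical, and the routing by `hashCode` is a stand-in for Flink's real key-group assignment.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java model of state scoping; this is not how Flink stores state internally.
class StateScopes {
    final Map<String, Long> keyedCounts = new HashMap<>(); // one entry per key
    final long[] subtaskCounts;                            // one entry per parallel subtask

    StateScopes(int parallelism) {
        subtaskCounts = new long[parallelism];
    }

    void onRecord(String key) {
        // Keyed scope: the counter belongs to the key.
        keyedCounts.merge(key, 1L, Long::sum);
        // Operator scope: the counter belongs to whichever subtask handles the record.
        // Simplified routing; Flink actually maps keys to key groups first.
        int subtask = Math.floorMod(key.hashCode(), subtaskCounts.length);
        subtaskCounts[subtask]++;
    }
}
```

Keyed counters follow the key wherever it is processed, while subtask counters only say how much work each parallel instance has seen.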

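III. Keyed State

The key point to remember about Keyed State:

"State is isolated per key, and every key has its own copy."

In addition, any RichFunction (RichMap/RichFilter/ProcessFunction...) applied to a keyed stream can obtain a state handle through getRuntimeContext(), which turns it into a stateful operator.

The examples in this section share the following helper, which parses each input line into a WaterSensor: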

java

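import com.donglin.flink.domain.WaterSensor;
import org.apache.flink.api.common.functions.MapFunction;

// Parses one "id,ts,vc" line into a WaterSensor.
public class WaterSensorMapFunction implements MapFunction<String, WaterSensor> {
    @Override
    public WaterSensor map(String value) throws Exception {
        String[] datas = value.split(",");
        return new WaterSensor(datas[0], Long.valueOf(datas[1]), Integer.valueOf(datas[2]));
    }
}

1. ValueState: alert when two consecutive water levels differ by more than 10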

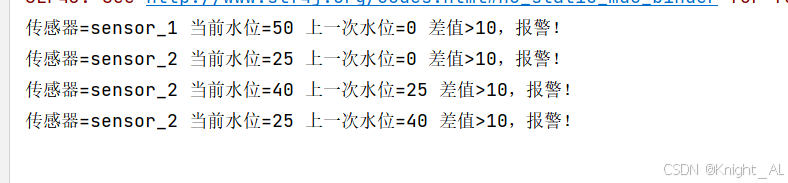

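Requirement: for each sensor (id), check the water level vc and emit an alert when two consecutive readings differ by more than 10.

Approach:

- `keyBy(id)` so that every sensor is evaluated independently

- Use `ValueState<Integer>` to store the previous vc

- Compare the current vc with the previous one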
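Before looking at the Flink job, the decision logic itself can be sketched in plain Java. The class `WaterLevelAlert` is hypothetical, and the `HashMap` is only a stand-in for what `keyBy` plus `ValueState<Integer>` provide; it also assumes that the first reading of a sensor should not raise an alert, since there is nothing to compare it with.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java stand-in for keyBy(id) + ValueState<Integer>; illustration only.
class WaterLevelAlert {
    // sensor id -> last reading (what ValueState holds for each key)
    private final Map<String, Integer> lastVcByKey = new HashMap<>();

    // Returns an alert message, or null when no alert is raised.
    String onReading(String sensorId, int vc) {
        Integer prev = lastVcByKey.put(sensorId, vc); // read old value, store new one
        if (prev != null && Math.abs(vc - prev) > 10) {
            return "sensor=" + sensorId + " current=" + vc + " previous=" + prev;
        }
        return null;
    }
}
```

Readings from different sensors never interact, because each lookup goes through the sensor id.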

java

import com.donglin.flink.domain.WaterSensor;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class KeyedValueStateDemo {

public static void main(String[] args) throws Exception {

// 1. Environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. Input

SingleOutputStreamOperator<WaterSensor> sensorDS = env

.socketTextStream("192.168.121.140", 7777)

.map(new WaterSensorMapFunction())

.assignTimestampsAndWatermarks(

WatermarkStrategy.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

.withTimestampAssigner((element, ts) -> element.getTs() * 1000L)

);

// 3. Stateful processing

sensorDS.keyBy(WaterSensor::getId)

.process(new KeyedProcessFunction<String, WaterSensor, String>() {

// State handle

private ValueState<Integer> lastVcState;

@Override

public void open(OpenContext openContext) throws Exception {

lastVcState = getRuntimeContext().getState(

new ValueStateDescriptor<>("lastVcState", Types.INT)

);

}

@Override

public void processElement(

WaterSensor value,

Context ctx,

Collector<String> out) throws Exception {

Integer prev = lastVcState.value();
int cur = value.getVc();
// The first reading of a key has no previous value, so it is not compared.
if (prev != null && Math.abs(cur - prev) > 10) {
out.collect("sensor=" + value.getId()
+ " current vc=" + cur
+ " previous vc=" + prev
+ " difference > 10, alert!");
}

lastVcState.update(cur);

}

})

.print();

// 4. Run

env.execute("Flink 2.0 Stateful Demo");

}

}

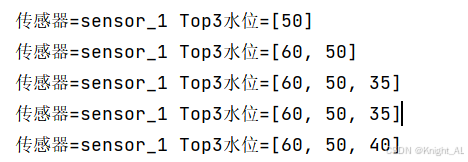

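2. ListState: top 3 water levels per sensor

Requirement: each sensor keeps a list of its historical water levels and outputs the top 3.

Approach:

- `ListState<Integer>` stores the list of water levels for each key

- Call add for every incoming record

- Read the Iterable → copy → sort → keep 3 → update the state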
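The copy, sort, and truncate steps can be sketched as a small pure function. `Top3` is a hypothetical helper used for illustration; in the Flink job the input list would come from `ListState.get()` and the result would go back through `update`.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Plain-Java sketch of the "copy -> sort -> keep 3" step; illustration only.
class Top3 {
    // Returns a new list holding at most the three largest values, in descending order.
    static List<Integer> add(List<Integer> currentTop, int vc) {
        List<Integer> copy = new ArrayList<>(currentTop);
        copy.add(vc);
        copy.sort(Comparator.reverseOrder());
        return new ArrayList<>(copy.subList(0, Math.min(3, copy.size())));
    }
}
```

Because the list is cut back to three elements on every record, the state for a key never holds more than three values.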

java

import com.donglin.flink.domain.WaterSensor;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.ListState;

import org.apache.flink.api.common.state.ListStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

import java.util.ArrayList;

import java.util.List;

public class KeyedListStateDemo {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<WaterSensor> sensorDS = env

.socketTextStream("192.168.121.140", 7777)

.map(new WaterSensorMapFunction())

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

.withTimestampAssigner((element, ts) -> element.getTs() * 1000L)

);

sensorDS.keyBy(WaterSensor::getId)

.process(new KeyedProcessFunction<String, WaterSensor, String>() {

private ListState<Integer> vcListState;

@Override

public void open(OpenContext openContext) throws Exception {

vcListState = getRuntimeContext().getListState(

new ListStateDescriptor<>("vcListState", Types.INT)

);

}

@Override

public void processElement(WaterSensor value, Context ctx, Collector<String> out) throws Exception {

vcListState.add(value.getVc());

List<Integer> list = new ArrayList<>();

for (Integer vc : vcListState.get()) list.add(vc);

list.sort((a, b) -> Integer.compare(b, a)); // descending, without the overflow risk of b - a

if (list.size() > 3) list = list.subList(0, 3);

vcListState.update(list);

out.collect("sensor=" + value.getId() + " top 3 vc=" + list);

}

})

.print();

env.execute();

}

}

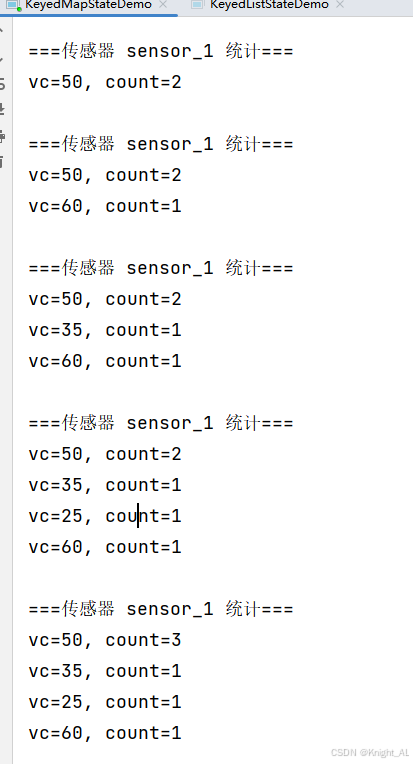

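3. MapState: count occurrences of each water level

Requirement: for each sensor, count how many times each vc value has occurred.

Approach:

- `MapState<vc, count>`

- For every incoming vc: if contains, store count + 1; otherwise put(vc, 1)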
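The counting rule is the familiar "insert 1 or increment" pattern. A plain-Java sketch with a hypothetical `VcCounter` class, where a `HashMap` stands in for the `MapState` of a single key:

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java stand-in for the MapState<Integer, Integer> of one key; illustration only.
class VcCounter {
    // vc -> number of occurrences
    private final Map<Integer, Integer> counts = new HashMap<>();

    // Records one occurrence and returns the updated count.
    int record(int vc) {
        return counts.merge(vc, 1, Integer::sum);
    }
}
```

`MapState` has no `merge` method, which is why the Flink version spells the same rule out with `contains`, `get`, and `put`.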

java

import com.donglin.flink.domain.WaterSensor;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.MapState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

import java.util.Map;

public class KeyedMapStateDemo {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<WaterSensor> sensorDS = env

.socketTextStream("192.168.121.140", 7777)

.map(new WaterSensorMapFunction())

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

.withTimestampAssigner((element, ts) -> element.getTs() * 1000L)

);

sensorDS.keyBy(WaterSensor::getId)

.process(new KeyedProcessFunction<String, WaterSensor, String>() {

private MapState<Integer, Integer> vcCountMapState;

@Override

public void open(OpenContext openContext) throws Exception {

vcCountMapState = getRuntimeContext().getMapState(

new MapStateDescriptor<>("vcCountMapState", Types.INT, Types.INT)

);

}

@Override

public void processElement(WaterSensor value, Context ctx, Collector<String> out) throws Exception {

Integer vc = value.getVc();

Integer cnt = vcCountMapState.contains(vc) ? vcCountMapState.get(vc) : 0;

vcCountMapState.put(vc, cnt + 1);

StringBuilder sb = new StringBuilder();

sb.append("=== sensor ").append(value.getId()).append(" counts ===\n");

for (Map.Entry<Integer, Integer> e : vcCountMapState.entries()) {

sb.append("vc=").append(e.getKey()).append(", count=").append(e.getValue()).append("\n");

}

out.collect(sb.toString());

}

})

.print();

env.execute();

}

}

4. ReducingState: sum of water levels

Characteristic: each value passed to .add(x) is folded into a single value by the ReduceFunction.
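The add-then-fold behaviour can be modelled in plain Java. `ReducingCell` is a hypothetical class written for this sketch; it mimics the contract described above and is not Flink's implementation.

```java
import java.util.function.BinaryOperator;

// Plain-Java model of ReducingState semantics for a single key; illustration only.
class ReducingCell<T> {
    private final BinaryOperator<T> reducer;
    private T current; // null until the first add()

    ReducingCell(BinaryOperator<T> reducer) {
        this.reducer = reducer;
    }

    // The first value is stored as-is; later values are folded into the stored one.
    void add(T value) {
        current = (current == null) ? value : reducer.apply(current, value);
    }

    T get() {
        return current;
    }
}
```

With `Integer::sum` as the reducer, adding 3, 4, and 5 leaves a single stored value of 12. Input and output share one type, which is the main restriction compared with AggregatingState.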

java

import com.donglin.flink.domain.WaterSensor;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.common.state.ReducingState;

import org.apache.flink.api.common.state.ReducingStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class KeyedReducingStateDemo {

public static void main(String[] args) throws Exception {

// 1. Create the Flink execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. Read from the socket and parse each line into a WaterSensor

SingleOutputStreamOperator<WaterSensor> sensorDS = env

.socketTextStream("192.168.121.140", 7777)

.map(value -> {

String[] parts = value.split(",");

return new WaterSensor(parts[0], Long.parseLong(parts[1]), Integer.parseInt(parts[2]));

})

// Assign event-time timestamps and watermarks

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

.withTimestampAssigner((element, ts) -> element.getTs() * 1000L)

);

// 3. Key by id and use ReducingState to sum vc per key

sensorDS

.keyBy(WaterSensor::getId)

.process(new KeyedProcessFunction<String, WaterSensor, Integer>() {

private ReducingState<Integer> sumVcState;

@Override

public void open(OpenContext openContext) throws Exception {

// Create the ReducingStateDescriptor; the reduce logic adds the two values (equivalent to Integer::sum)

ReducingStateDescriptor<Integer> reducingStateDescriptor =

new ReducingStateDescriptor<>(

"sumVcState",

new ReduceFunction<Integer>() {

@Override

public Integer reduce(Integer value1, Integer value2) throws Exception {

return value1 + value2;

}

},

Types.INT

);

sumVcState = getRuntimeContext().getReducingState(reducingStateDescriptor);

}

@Override

public void processElement(WaterSensor value, Context ctx, Collector<Integer> out) throws Exception {

// Add the current vc to the ReducingState
sumVcState.add(value.getVc());
// Emit the running total

out.collect(sumVcState.get());

}

})

.print();

// 4. Launch the job

env.execute("Flink ReducingState Demo");

}

}

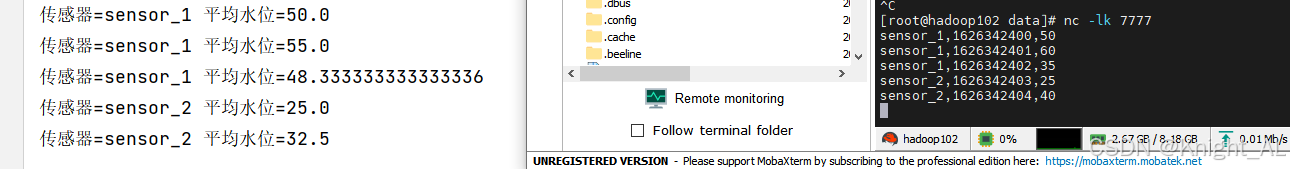

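5. AggregatingState: average water level

Characteristic: more flexible than ReducingState, because the input and output types may differ; an accumulator holds the intermediate state.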
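The role of the accumulator is easiest to see without any framework code. In this plain-Java sketch (the class `AverageAggregator` is hypothetical), the input is an `int`, the intermediate state is a sum and a count, and the output is a `double`.

```java
// Plain-Java model of an averaging aggregate; illustration only.
class AverageAggregator {
    private long sum;   // accumulator, part 1: running total
    private long count; // accumulator, part 2: number of inputs

    // Input type: int
    void add(int vc) {
        sum += vc;
        count++;
    }

    // Output type: double, derived from the accumulator on demand
    double getResult() {
        return count == 0 ? 0.0 : (double) sum / count;
    }
}
```

A ReducingState could not express this directly, because the average of averages is not the overall average; keeping sum and count separately is what makes the result correct.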

java

package com.donglin.flink.wc;

import com.donglin.flink.domain.WaterSensor;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.AggregatingState;

import org.apache.flink.api.common.state.AggregatingStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import org.apache.flink.api.java.tuple.Tuple2;

import java.time.Duration;

public class KeyedAggregatingStateDemo {

public static void main(String[] args) throws Exception {

// 1. Create the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. Read the socket input and parse each line into a WaterSensor

SingleOutputStreamOperator<WaterSensor> sensorDS = env

.socketTextStream("192.168.121.140", 7777)

.map(value -> {

String[] parts = value.split(",");

return new WaterSensor(

parts[0],

Long.parseLong(parts[1]),

Integer.parseInt(parts[2])

);

})

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

.withTimestampAssigner((element, ts) -> element.getTs() * 1000L)

);

// 3. Key by id and use AggregatingState to compute the average

sensorDS

.keyBy(WaterSensor::getId)

.process(new KeyedProcessFunction<String, WaterSensor, String>() {

// Declare the aggregating state

private AggregatingState<Integer, Double> avgState;

@Override

public void open(OpenContext openContext) throws Exception {

// Create the AggregatingStateDescriptor

AggregatingStateDescriptor<

Integer, // input type
Tuple2<Integer, Integer>, // accumulator type
Double // output type

> avgDesc = new AggregatingStateDescriptor<>(

"avgState",

new AggregateFunction<Integer, Tuple2<Integer, Integer>, Double>() {

@Override

public Tuple2<Integer, Integer> createAccumulator() {

// Initial accumulator: (sum, count)

return Tuple2.of(0, 0);

}

@Override

public Tuple2<Integer, Integer> add(Integer value, Tuple2<Integer, Integer> acc) {

// For every input, add to the sum and increment the count

return Tuple2.of(acc.f0 + value, acc.f1 + 1);

}

@Override

public Double getResult(Tuple2<Integer, Integer> acc) {

// Compute the average

return acc.f1 == 0 ? 0.0 : (acc.f0 * 1.0 / acc.f1);

}

@Override

public Tuple2<Integer, Integer> merge(Tuple2<Integer, Integer> a, Tuple2<Integer, Integer> b) {

// Merge two accumulators (if needed)

return Tuple2.of(a.f0 + b.f0, a.f1 + b.f1);

}

},

Types.TUPLE(Types.INT, Types.INT) // accumulator type

);

avgState = getRuntimeContext().getAggregatingState(avgDesc);

}

@Override

public void processElement(WaterSensor value, Context ctx, Collector<String> out) throws Exception {

// Add the current vc to the aggregating state

avgState.add(value.getVc());

// Emit the current average
Double avgVc = avgState.get();
out.collect("sensor=" + value.getId() + " average vc=" + avgVc);

}

})

.print();

// 4. Execute the job

env.execute("Flink AggregatingState Demo");

}

}

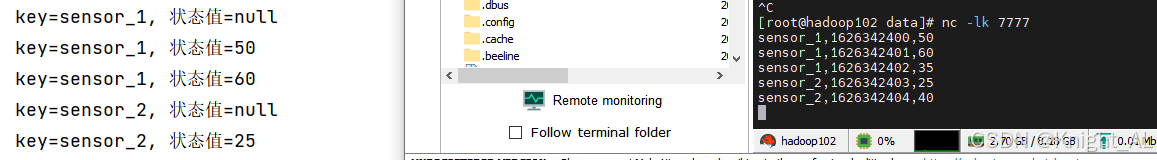

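6. TTL: state time-to-live (keeping state from growing without bound)

In production, state keeps accumulating, so a cleanup strategy is required:

- Manual clear (when the business logic knows when state is no longer needed)

- Automatic expiry via TTL (recommended)

Key TTL settings:

- The time-to-live, e.g. `Duration.ofSeconds(x)`

- The refresh policy: whether reads, writes, or both reset the timer

- Whether an expired value that has not been cleaned up yet may still be returned

Note: TTL currently supports processing time only.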
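How the three settings interact can be sketched with a tiny plain-Java model. `TtlValue` is a hypothetical class; it imitates the combination "refresh on read and write" plus "never return expired values", and takes the current processing time as a parameter so that the behaviour is easy to follow.

```java
// Plain-Java model of a TTL-guarded value; illustration only, not Flink's implementation.
class TtlValue<T> {
    private final long ttlMillis;
    private T value;
    private long lastAccess; // processing-time timestamp of the last read or write

    TtlValue(long ttlMillis) {
        this.ttlMillis = ttlMillis;
    }

    // A write stores the value and restarts the timer.
    void update(T newValue, long now) {
        value = newValue;
        lastAccess = now;
    }

    // A read returns null once the TTL has elapsed; otherwise it restarts the timer.
    T value(long now) {
        if (value == null || now - lastAccess >= ttlMillis) {
            value = null; // expired entries are never returned
            return null;
        }
        lastAccess = now; // a successful read refreshes the TTL
        return value;
    }
}
```

With a 5-second TTL, a value written at t=0 and read at t=3s stays alive until t=8s, because the read pushed the deadline forward. Under a write-only refresh policy the same value would expire at t=5s.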

java

import com.donglin.flink.domain.WaterSensor;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.OpenContext;

import org.apache.flink.api.common.state.StateTtlConfig;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class StateTTLDemo {

public static void main(String[] args) throws Exception {

// 1. Flink environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 2. Input stream + parsing

SingleOutputStreamOperator<WaterSensor> sensorDS = env

.socketTextStream("192.168.121.140", 7777)

.map(new WaterSensorMapFunction())

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

.withTimestampAssigner((element, ts) -> element.getTs() * 1000L)

);

// 3. KeyedState + TTL

sensorDS.keyBy(WaterSensor::getId)

.process(new KeyedProcessFunction<String, WaterSensor, String>() {

private ValueState<Integer> lastVcState;

@Override

public void open(OpenContext openContext) throws Exception {

// 3.1 TTL config: 5-second time-to-live

StateTtlConfig ttlConfig = StateTtlConfig

.newBuilder(Duration.ofSeconds(5))

// Refresh the TTL on both reads and writes (OnCreateAndWrite is the alternative)

.setUpdateType(StateTtlConfig.UpdateType.OnReadAndWrite)

// Never return expired state (it is treated as null once expired)

.setStateVisibility(StateTtlConfig.StateVisibility.NeverReturnExpired)

.build();

// 3.2 State descriptor

ValueStateDescriptor<Integer> desc =

new ValueStateDescriptor<>("lastVcState", Types.INT);

// 3.3 Enable TTL

desc.enableTimeToLive(ttlConfig);

lastVcState = getRuntimeContext().getState(desc);

}

@Override

public void processElement(

WaterSensor value,

Context ctx,

Collector<String> out) throws Exception {

Integer last = lastVcState.value(); // read the state
out.collect("key=" + value.getId() + ", state value=" + last);
// Update the state only when vc > 10

if (value.getVc() > 10) {

lastVcState.update(value.getVc());

}

}

})

.print();

env.execute("Flink TTL State Demo");

}

}

IV. Operator State

Operator State: one copy per parallel subtask, not partitioned by key, commonly used in Source/Sink operators.

1. Counting per parallel subtask (CheckpointedFunction)

java

package com.donglin.flink.wc;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.state.ListState;

import org.apache.flink.api.common.state.ListStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.runtime.state.FunctionInitializationContext;

import org.apache.flink.runtime.state.FunctionSnapshotContext;

import org.apache.flink.streaming.api.checkpoint.CheckpointedFunction;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class OperatorListStateDemo {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

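// Parallelism 2 for the failure-recovery demo
// Note: snapshotState() is only invoked when checkpointing is enabled, e.g. env.enableCheckpointing(...)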

env.setParallelism(2);

env.socketTextStream("192.168.121.140", 7777)

.map(new MyCountMapFunction())

.print();

env.execute("Operator State ListState Demo");

}

public static class MyCountMapFunction

implements MapFunction<String, Long>, CheckpointedFunction {

// Local counter (a plain field, not managed state)

private long count = 0L;

// Managed operator ListState that stores each subtask's count

private transient ListState<Long> state;

@Override

public Long map(String value) throws Exception {

// Increment the local counter for every record

return ++count;

}

@Override

public void snapshotState(FunctionSnapshotContext context) throws Exception {

// Called on every checkpoint: store the current count in the ListState

state.clear();

state.add(count);

}

@Override

public void initializeState(FunctionInitializationContext context) throws Exception {

// Obtain the operator state when the operator is initialized

ListStateDescriptor<Long> descriptor =

new ListStateDescriptor<>("state", Types.LONG);

state = context.getOperatorStateStore().getListState(descriptor);

// When restoring from a checkpoint / savepoint, add the saved values to the local count

if (context.isRestored()) {

for (Long c : state.get()) {

count += c;

}

}

}

}

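}

2. UnionListState: every parallel subtask receives the full list on restore or rescaling

Usage is the same as ListState; the difference is in the initialization:

java

state = context.getOperatorStateStore()

.getUnionListState(new ListStateDescriptor<>("union-state", Types.LONG));

Suitable when the data (for example a rule table) is small and every parallel instance needs all of it; not recommended for large state.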
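The two redistribution modes can be compared with a small plain-Java model. The class `Redistribution` and the round-robin assignment are assumptions made for this sketch; Flink's actual assignment order may differ, but the shape of the result is the same: an even split hands each element to exactly one subtask, while union hands every element to every subtask.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of operator-state redistribution on restore; illustration only.
class Redistribution {
    // ListState (even split): each element ends up in exactly one new subtask.
    static List<List<Long>> evenSplit(List<Long> all, int newParallelism) {
        List<List<Long>> out = new ArrayList<>();
        for (int i = 0; i < newParallelism; i++) {
            out.add(new ArrayList<>());
        }
        for (int i = 0; i < all.size(); i++) {
            out.get(i % newParallelism).add(all.get(i));
        }
        return out;
    }

    // UnionListState: every new subtask receives the complete list.
    static List<List<Long>> union(List<Long> all, int newParallelism) {
        List<List<Long>> out = new ArrayList<>();
        for (int i = 0; i < newParallelism; i++) {
            out.add(new ArrayList<>(all));
        }
        return out;
    }
}
```

One consequence: restore logic that sums the restored elements, like `count += c` in the counter example above, would count every value once per subtask if it were switched to union state, so the restore code has to pick out the part it actually owns.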

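3. BroadcastState: dynamic alert threshold (configuration updated at runtime)

Typical scenario:

- Data stream: sensor water levels

- Config stream: the threshold (can change at any time)

- After the config stream is broadcast, every parallel subtask holds the same threshold state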
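The delivery pattern behind these three points can be modelled in plain Java. `BroadcastThreshold` is a hypothetical class for this sketch: a config record is applied to every subtask's copy, while a data record is checked by a single subtask against its local copy. The default threshold of 0 is an assumption of the sketch.

```java
import java.util.Arrays;

// Plain-Java model of the broadcast pattern; illustration only.
class BroadcastThreshold {
    // One copy of the broadcast state per parallel subtask (0 until configured).
    private final int[] thresholdPerSubtask;

    BroadcastThreshold(int parallelism) {
        thresholdPerSubtask = new int[parallelism];
    }

    // A config record is delivered to every subtask.
    void onConfig(int threshold) {
        Arrays.fill(thresholdPerSubtask, threshold);
    }

    // A data record is handled by exactly one subtask, which reads its local copy.
    boolean isAlert(int subtask, int vc) {
        return vc > thresholdPerSubtask[subtask];
    }
}
```

Because every subtask applies the same updates in the same order, all copies converge to the same threshold without any coordination between subtasks.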

java

import com.donglin.flink.domain.WaterSensor;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.state.BroadcastState;

import org.apache.flink.api.common.state.MapStateDescriptor;

import org.apache.flink.api.common.state.ReadOnlyBroadcastState;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.BroadcastStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.BroadcastProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class OperatorBroadcastStateDemo {

// Broadcast state descriptor: key="threshold" -> value=threshold

private static final MapStateDescriptor<String, Integer> BROADCAST_DESC =

new MapStateDescriptor<>("broadcast-state", Types.STRING, Types.INT);

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(2);

// 1) Main data stream: sensor records (id,ts,vc)

SingleOutputStreamOperator<WaterSensor> sensorDS = env

.socketTextStream("192.168.121.140", 7777)

.map(new WaterSensorMapFunction())

// Watermarks are not required for broadcast state; kept for consistency with the earlier examples

.assignTimestampsAndWatermarks(

WatermarkStrategy.<WaterSensor>forBoundedOutOfOrderness(Duration.ofSeconds(3))

.withTimestampAssigner((e, ts) -> e.getTs() * 1000L)

);

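// 2) Config stream: the threshold (send a plain integer, e.g. 10 or 20)
DataStreamSource<String> configDS = env.socketTextStream("192.168.121.140", 8888);
// 3) Broadcast the config stream
BroadcastStream<String> configBS = configDS.broadcast(BROADCAST_DESC);
// 4) Connect the two streams: the main stream reads the broadcast state, the config stream updates it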

sensorDS.connect(configBS)

.process(new BroadcastProcessFunction<WaterSensor, String, String>() {

@Override

public void processElement(

WaterSensor value,

ReadOnlyContext ctx,

Collector<String> out) throws Exception {

ReadOnlyBroadcastState<String, Integer> bs = ctx.getBroadcastState(BROADCAST_DESC);

Integer threshold = bs.get("threshold");

int t = (threshold == null) ? 0 : threshold;

if (value.getVc() > t) {

out.collect("ALERT: " + value + " water level exceeds threshold=" + t + " !!!");
} else {
out.collect("OK: " + value + " current threshold=" + t);

}

}

@Override

public void processBroadcastElement(

String value,

Context ctx,

Collector<String> out) throws Exception {

String s = value.trim();

int threshold;

try {

threshold = Integer.parseInt(s);

} catch (Exception e) {

out.collect("Invalid config: the threshold must be an integer, e.g. 10 or 20. Received=" + value);

return;

}

BroadcastState<String, Integer> bs = ctx.getBroadcastState(BROADCAST_DESC);

bs.put("threshold", threshold);

out.collect("Threshold updated to: " + threshold);

}

})

.print();

env.execute("Operator Broadcast State Demo");

}

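}

V. State Backends and How to Choose

The state backend determines where state is stored, how it is read and written, and how checkpoints are taken.

Two backends

1) HashMapStateBackend

- State lives in JVM heap memory

- Fast, low latency

- Drawback: bounded by memory; large state can easily cause OOM

Suitable for: jobs with small state where low latency matters most

2) EmbeddedRocksDBStateBackend

- State lives in a local RocksDB instance (on disk)

- State can grow very large

- Drawback: every access pays for serialization/deserialization plus disk I/O, so it is noticeably slower than the heap backend (roughly an order of magnitude)

- Supports incremental checkpoints, which suits large state better

Suitable for: jobs with very large state that must stay stable

Configuration

(1) Cluster-wide default (flink-conf.yaml; recent releases use config.yaml, where the backend key is `state.backend.type`)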

yaml

state.backend: hashmap

state.checkpoints.dir: hdfs://hadoop102:8020/flink/checkpoints

(2) Per-job override in code

java

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setStateBackend(new HashMapStateBackend());

Or RocksDB:

java

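env.setStateBackend(new EmbeddedRocksDBStateBackend());

Note: `setStateBackend` was deprecated in the later 1.x releases; if your Flink version no longer offers it, select the backend through the `state.backend.type` configuration option instead.

To run RocksDB locally in the IDE, add this dependency: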

xml

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-statebackend-rocksdb</artifactId>

<version>${flink.version}</version>

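</dependency>

VI. Summary

- Keyed State: isolated per key, the most common choice for business logic (statistics, alerts, windows)

- Operator State: scoped to a parallel subtask, typical for sources/sinks and broadcast configuration

- TTL: essential for keeping state from growing without bound

- HashMap vs RocksDB: choose HashMap for small state and low latency; choose RocksDB for large state and stability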