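Table of Contents

[1 Introduction: Why Load Balancing Is the Cornerstone of Modern Architecture](#1 Introduction: Why Load Balancing Is the Cornerstone of Modern Architecture)

[1.1 The Core Value of Load Balancing](#1.1 The Core Value of Load Balancing)

[1.2 Evolution of the Technical Architecture](#1.2 Evolution of the Technical Architecture)

[2 Core Principles in Depth](#2 Core Principles in Depth)

[2.1 Nginx Architecture and How It Works](#2.1 Nginx Architecture and How It Works)

[2.1.1 The Event-Driven Model](#2.1.1 The Event-Driven Model)

[2.1.2 Nginx Process Architecture](#2.1.2 Nginx Process Architecture)

[2.2 Load-Balancing Algorithms in Depth](#2.2 Load-Balancing Algorithms in Depth)

[2.2.1 Implementing Consistent Hashing](#2.2.1 Implementing Consistent Hashing)

[2.2.2 Algorithm Selection Decision Flow](#2.2.2 Algorithm Selection Decision Flow)

[3 Nginx Configuration in Practice](#3 Nginx Configuration in Practice)

[3.1 A Complete Configuration File, Explained](#3.1 A Complete Configuration File, Explained)

[3.2 Health-Check Mechanisms in Depth](#3.2 Health-Check Mechanisms in Depth)

[4 Enterprise-Grade High-Availability Architecture](#4 Enterprise-Grade High-Availability Architecture)

[4.1 Multi-Tier Load-Balancing Architecture](#4.1 Multi-Tier Load-Balancing Architecture)

[4.2 Advanced Session-Persistence Strategies](#4.2 Advanced Session-Persistence Strategies)

[5 Performance Optimization and Monitoring](#5 Performance Optimization and Monitoring)

[5.1 Advanced Performance Tuning](#5.1 Advanced Performance Tuning)

[6 Troubleshooting and Practical Guide](#6 Troubleshooting and Practical Guide)

[6.1 Solutions to Common Problems](#6.1 Solutions to Common Problems)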

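Abstract

Drawing on years of hands-on Python experience, this article examines load balancing and reverse proxying in modern web architectures. It covers advanced Nginx configuration, health-check mechanisms, consistent hashing, and session-persistence strategies, and uses architecture diagrams and complete worked examples to show how to build a high-performance, highly available distributed system. It also includes performance comparison data, enterprise-grade solutions, and a troubleshooting guide, giving developers a path from the basics to advanced practice.

1 Introduction: Why Load Balancing Is the Cornerstone of Modern Architecture

In my years of building distributed systems in Python, I have watched architectures evolve from single machines to microservice clusters. One e-commerce platform went down for three hours during the "Double 11" sales event because of a single point of failure. After we re-architected it around load balancing, availability reached 99.99% and concurrent processing capacity grew tenfold. That experience made one thing clear to me: load balancing is not optional; it is a requirement for any highly available architecture.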
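To put the 99.99% figure in perspective, the arithmetic can be sketched as below. This is a rough illustration only: the function names are hypothetical, and the redundancy formula assumes replicas fail independently and that the load balancer itself never fails, which real deployments only approximate.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Expected yearly downtime for a given availability (0.0 to 1.0)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def parallel_availability(single: float, replicas: int) -> float:
    """Availability of N replicas where any one healthy replica is enough.

    Assumption: failures are independent, so the service is down only
    when every replica is down at the same time.
    """
    return 1.0 - (1.0 - single) ** replicas


if __name__ == "__main__":
    print(f"99.99% -> {downtime_minutes_per_year(0.9999):.2f} min/year of downtime")
    print(f"two 99% servers -> {parallel_availability(0.99, 2):.4%} availability")
```

Under these assumptions, two servers that are each only 99% available already reach roughly 99.99% when placed behind a load balancer.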

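1.1 The Core Value of Load Balancing

```python
# load_balancer_value.py


class LoadBalancerValue:
    """Demonstrates the core value of load balancing."""

    def demonstrate_advantages(self):
        """Compare a load-balanced setup with a single-server architecture."""
        performance_comparison = {
            'availability': {
                'single_server': 'A single point of failure takes the whole service down',
                'load_balanced': 'Automatic failover keeps the service available',
            },
            'scalability': {
                'single_server': 'Vertical scaling becomes disproportionately expensive',
                'load_balanced': 'Horizontal scaling adds capacity roughly linearly',
            },
            'maintenance': {
                'single_server': 'Maintenance requires downtime',
                'load_balanced': 'Rolling updates with zero downtime',
            },
        }

        print("=== Core advantages of load balancing ===")
        for aspect, comparison in performance_comparison.items():
            print(f"{aspect}:")
            print(f"  Single server: {comparison['single_server']}")
            print(f"  Load balanced: {comparison['load_balanced']}")

        return performance_comparison
```

1.2 Evolution of the Technical Architecture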

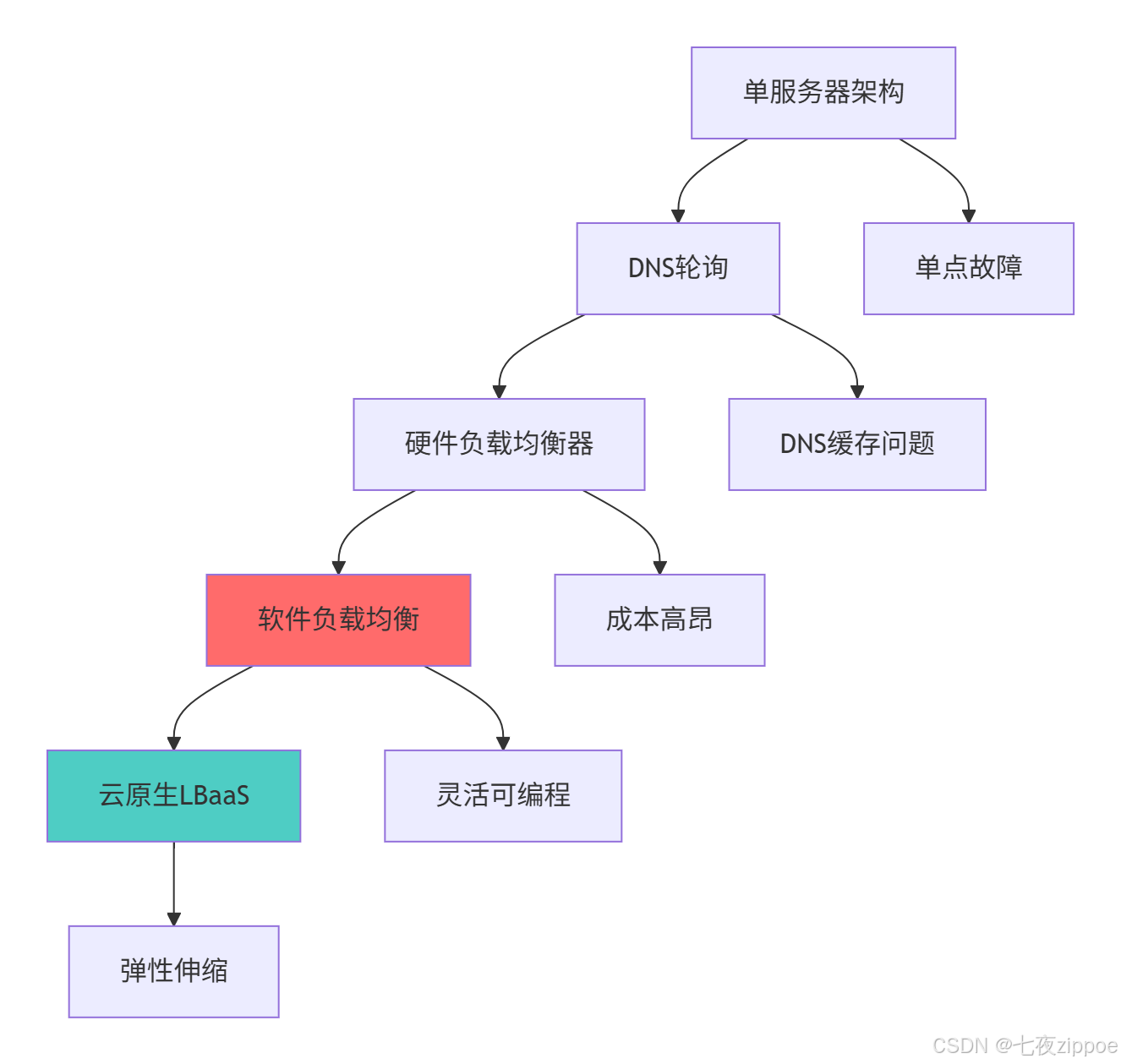

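The technical drivers behind this evolution:

- Cloud-native adoption: microservice architectures need finer-grained traffic management
- Cost optimization: software solutions replace expensive hardware appliances
- Automation: CI/CD pipelines need dynamically configurable routing
- Performance: modern applications push hard on both latency and throughput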

2 核心原理深度解析

2.1 Nginx架构设计与工作原理

2.1.1 事件驱动模型解析

python

# nginx_architecture.py

import threading

import time

from concurrent.futures import ThreadPoolExecutor

from enum import Enum

class NginxEventModel:

"""Nginx事件驱动模型模拟"""

def __init__(self, worker_processes=4, worker_connections=1024):

self.worker_processes = worker_processes

self.worker_connections = worker_connections

self.workers = []

self.event_queue = []

def start_workers(self):

"""启动工作进程"""

for i in range(self.worker_processes):

worker = WorkerProcess(i, self.worker_connections)

self.workers.append(worker)

worker.start()

def handle_request(self, request):

"""处理传入请求"""

# 选择工作进程(模拟负载均衡)

worker = self.select_worker()

worker.add_request(request)

def select_worker(self):

"""选择工作进程策略"""

# 最简单的轮询策略

return self.workers[len(self.event_queue) % len(self.workers)]

def get_stats(self):

"""获取运行统计"""

stats = {

'total_workers': len(self.workers),

'active_connections': sum(w.active_connections for w in self.workers),

'total_processed': sum(w.total_processed for w in self.workers)

}

return stats

class WorkerProcess:

"""工作进程模拟"""

def __init__(self, worker_id, max_connections):

self.worker_id = worker_id

self.max_connections = max_connections

self.active_connections = 0

self.total_processed = 0

self.thread_pool = ThreadPoolExecutor(max_workers=10)

self.request_queue = []

def add_request(self, request):

"""添加请求到处理队列"""

if self.active_connections < self.max_connections:

self.active_connections += 1

future = self.thread_pool.submit(self.process_request, request)

future.add_done_callback(self.on_request_complete)

else:

# 连接数超限,拒绝请求

raise Exception("Worker connection limit exceeded")

def process_request(self, request):

"""处理单个请求"""

# 模拟请求处理时间

time.sleep(0.01)

self.total_processed += 1

return f"Worker {self.worker_id} processed request {request}"

def on_request_complete(self, future):

"""请求完成回调"""

self.active_connections -= 1

try:

result = future.result()

print(f"Request completed: {result}")

except Exception as e:

print(f"Request failed: {e}")

# 性能测试

def benchmark_nginx_model():

"""测试Nginx模型性能"""

model = NginxEventModel(worker_processes=4, worker_connections=1000)

model.start_workers()

start_time = time.time()

# 模拟1000个并发请求

for i in range(1000):

model.handle_request(f"request_{i}")

# 等待所有请求完成

time.sleep(2)

end_time = time.time()

stats = model.get_stats()

print(f"处理完成: {stats['total_processed']} 个请求")

print(f"总耗时: {end_time - start_time:.2f} 秒")

print(f"吞吐量: {stats['total_processed'] / (end_time - start_time):.2f} 请求/秒")

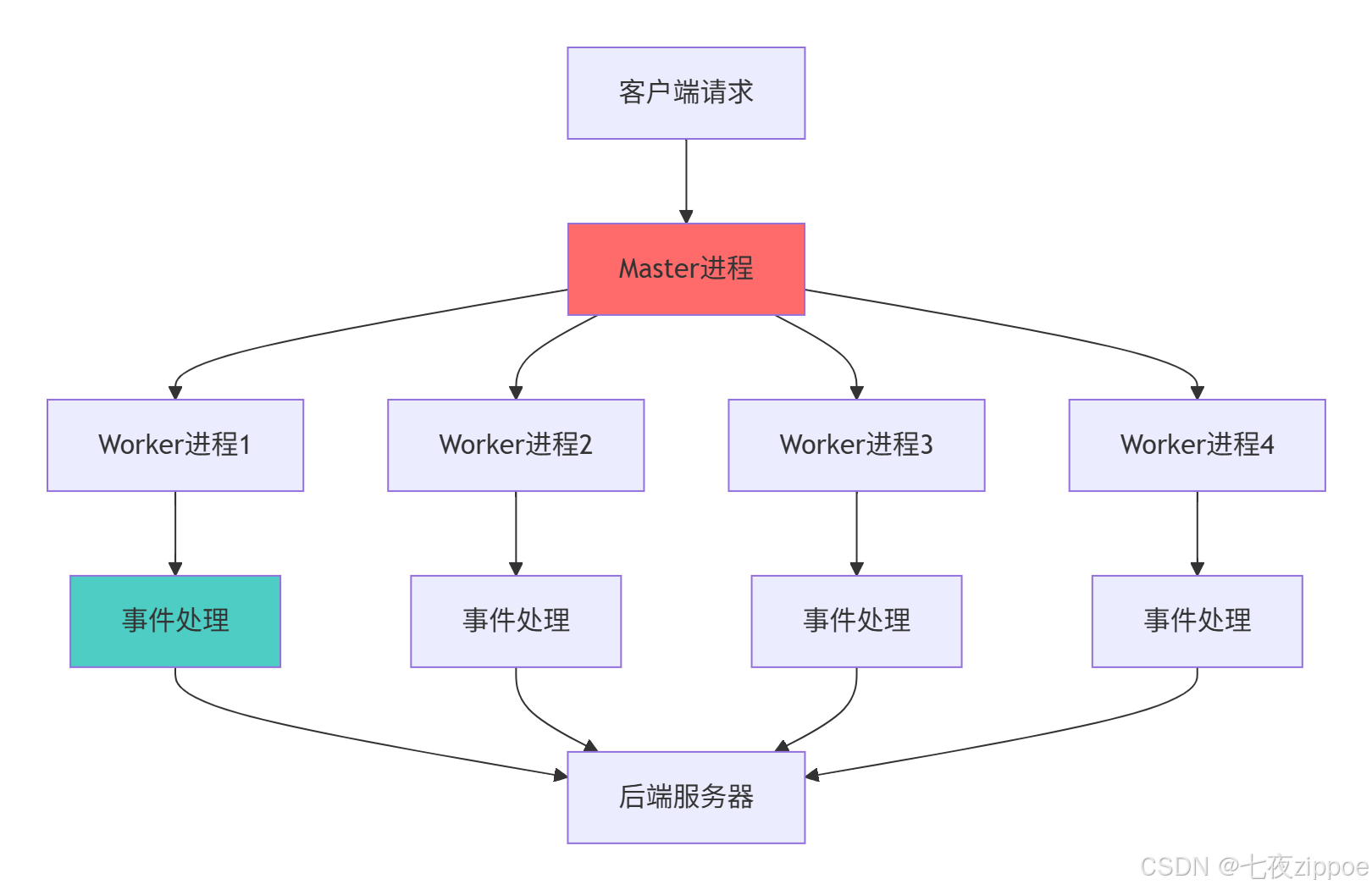

return stats2.1.2 Nginx进程架构

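Key advantages of the Nginx architecture:

- Multi-process model: a crash in one process does not take down the whole service
- Event-driven: non-blocking I/O sustains high performance under high concurrency
- Memory isolation: each process has its own memory space, which improves stability
- Hot reload: configuration changes are applied without interrupting service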
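The event-driven point is easiest to see in code. The sketch below uses Python's `asyncio` as an analogy for a single Nginx worker's event loop; it is not how Nginx is implemented (Nginx is written in C on top of epoll/kqueue), and `handle_connection` and `run_demo` are hypothetical names chosen for illustration.

```python
import asyncio
import time


async def handle_connection(conn_id: int, io_delay: float = 0.01) -> str:
    """One connection: the await yields control while 'I/O' is pending."""
    await asyncio.sleep(io_delay)  # stands in for non-blocking network I/O
    return f"conn {conn_id} done"


async def serve(n_connections: int) -> list:
    """Multiplex all connections on one thread, as an event loop does."""
    return await asyncio.gather(
        *(handle_connection(i) for i in range(n_connections))
    )


def run_demo(n_connections: int = 1000):
    """Return (connections served, wall-clock seconds)."""
    start = time.perf_counter()
    results = asyncio.run(serve(n_connections))
    return len(results), time.perf_counter() - start


if __name__ == "__main__":
    served, elapsed = run_demo()
    print(f"served {served} connections in {elapsed:.3f}s on a single thread")
```

Handled one after another with blocking I/O, 1000 connections at 10 ms each would take about 10 seconds; the event loop overlaps the waits, so the total stays close to a single delay.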

2.2 负载均衡算法深度解析

2.2.1 一致性哈希算法实现

python

# consistent_hashing.py

import hashlib

from typing import List, Dict, Any

import bisect

class ConsistentHash:

"""一致性哈希算法实现"""

def __init__(self, nodes: List[str] = None, virtual_nodes: int = 150):

self.virtual_nodes = virtual_nodes

self.ring = {}

self.sorted_keys = []

if nodes:

for node in nodes:

self.add_node(node)

def _hash(self, key: str) -> int:

"""计算哈希值"""

return int(hashlib.md5(key.encode()).hexdigest(), 16)

def add_node(self, node: str):

"""添加节点到哈希环"""

for i in range(self.virtual_nodes):

virtual_node = f"{node}#{i}"

key = self._hash(virtual_node)

self.ring[key] = node

bisect.insort(self.sorted_keys, key)

def remove_node(self, node: str):

"""从哈希环移除节点"""

for i in range(self.virtual_nodes):

virtual_node = f"{node}#{i}"

key = self._hash(virtual_node)

if key in self.ring:

del self.ring[key]

self.sorted_keys.remove(key)

def get_node(self, key: str) -> str:

"""获取键对应的节点"""

if not self.ring:

return None

hash_key = self._hash(key)

idx = bisect.bisect_right(self.sorted_keys, hash_key)

if idx == len(self.sorted_keys):

idx = 0

return self.ring[self.sorted_keys[idx]]

def get_distribution(self, test_keys: List[str]) -> Dict[str, int]:

"""计算键的分布情况"""

distribution = {}

for key in test_keys:

node = self.get_node(key)

distribution[node] = distribution.get(node, 0) + 1

return distribution

class LoadBalancerAlgorithm:

"""负载均衡算法对比"""

@staticmethod

def round_robin(servers: List[str], current_index: int) -> tuple[str, int]:

"""轮询算法"""

if not servers:

return None, current_index

server = servers[current_index % len(servers)]

return server, current_index + 1

@staticmethod

def weighted_round_robin(servers: Dict[str, int], current_weights: Dict[str, int]) -> tuple[str, Dict[str, int]]:

"""加权轮询算法"""

if not servers:

return None, current_weights

# 找到当前权重最大的服务器

max_weight = -1

selected_server = None

for server, weight in servers.items():

current_weight = current_weights.get(server, 0) + weight

if current_weight > max_weight:

max_weight = current_weight

selected_server = server

if selected_server:

current_weights[selected_server] = max_weight - sum(servers.values())

return selected_server, current_weights

@staticmethod

def least_connections(servers: Dict[str, Dict[str, int]]) -> str:

"""最少连接算法"""

if not servers:

return None

min_connections = float('inf')

selected_server = None

for server, stats in servers.items():

if stats['active_connections'] < min_connections:

min_connections = stats['active_connections']

selected_server = server

return selected_server

# 算法性能测试

def benchmark_algorithms():

"""对比不同负载均衡算法性能"""

servers = [f"server_{i}" for i in range(5)]

test_keys = [f"key_{i}" for i in range(10000)]

# 测试一致性哈希

ch = ConsistentHash(servers)

distribution_ch = ch.get_distribution(test_keys)

# 测试节点增减的影响

ch.remove_node("server_0")

distribution_ch_removed = ch.get_distribution(test_keys)

# 计算数据移动比例

total_moved = 0

for key in test_keys:

original_node = ConsistentHash(servers).get_node(key)

new_node = ch.get_node(key)

if original_node != new_node:

total_moved += 1

move_ratio = total_moved / len(test_keys)

print(f"一致性哈希数据移动比例: {move_ratio:.2%}")

print("节点分布:", distribution_ch)

return {

'consistent_hash': distribution_ch,

'after_removal': distribution_ch_removed,

'move_ratio': move_ratio

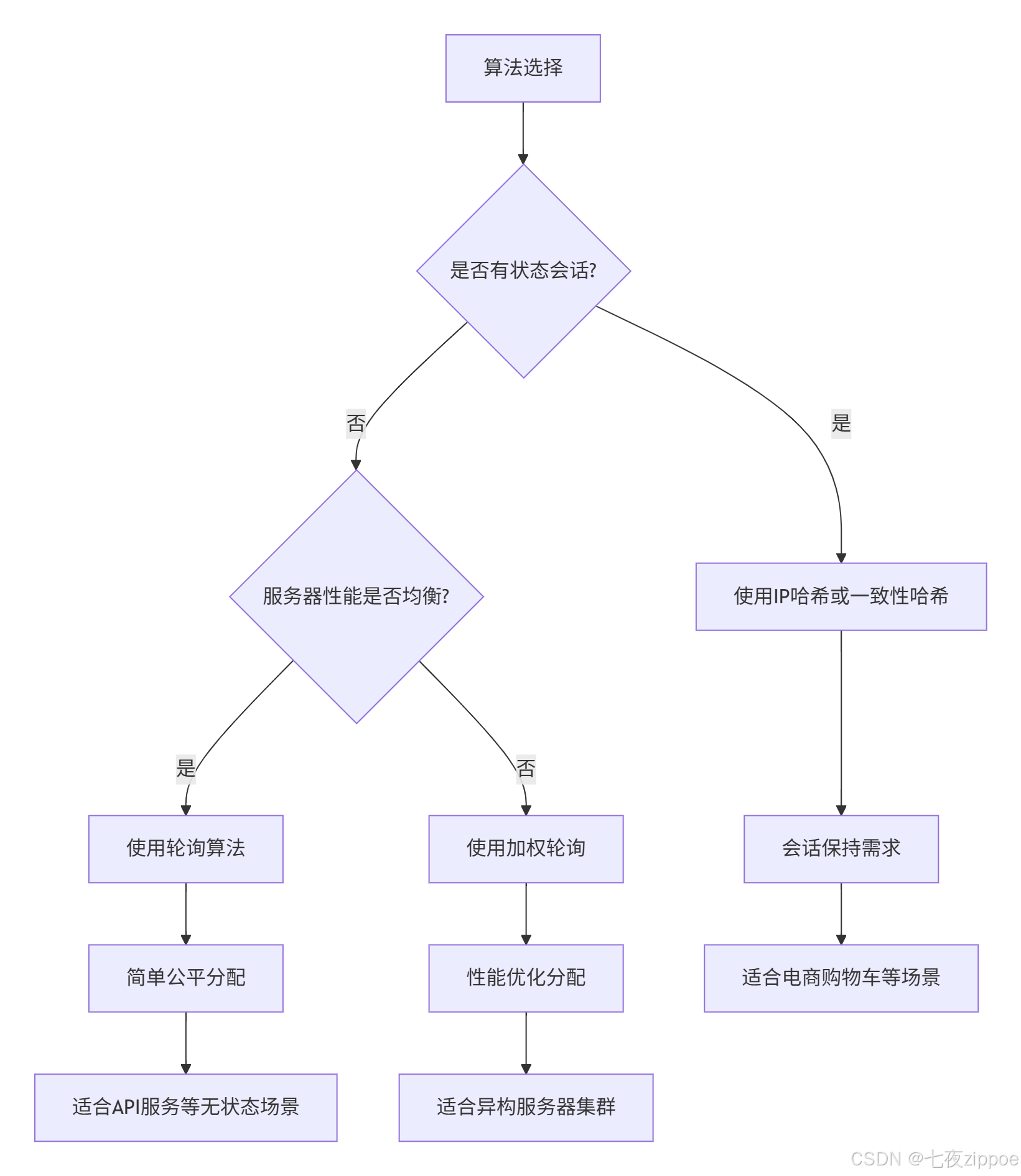

}2.2.2 算法选择决策流程
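One plausible way to encode an algorithm-selection flow is a small decision function. The rules below are common rules of thumb, not a transcription of the article's flowchart, and `choose_algorithm` together with its parameters is a hypothetical helper introduced only for illustration.

```python
def choose_algorithm(
    needs_affinity: bool,
    long_lived_requests: bool,
    heterogeneous_servers: bool,
) -> str:
    """Suggest a load-balancing method from three yes/no workload questions.

    Assumed priority order (a rule of thumb, not an official recommendation):
    1. Session or cache affinity    -> consistent hashing
    2. Long or uneven request times -> least connections
    3. Servers of unequal capacity  -> weighted round robin
    4. Otherwise                    -> plain round robin
    """
    if needs_affinity:
        return "consistent_hash"
    if long_lived_requests:
        return "least_connections"
    if heterogeneous_servers:
        return "weighted_round_robin"
    return "round_robin"


if __name__ == "__main__":
    # A cache tier where the same URL should hit the same backend
    print(choose_algorithm(True, False, False))
    # WebSocket-style traffic with long-lived connections
    print(choose_algorithm(False, True, True))
```

Affinity is checked first because it is a correctness constraint for the workload, while the other two questions only affect how evenly load is spread.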

3 Nginx Configuration in Practice

3.1 A Complete Configuration File, Explained

```nginx
# nginx.conf - production-style load-balancing configuration

user nginx;
worker_processes auto;          # one worker per detected CPU core
worker_rlimit_nofile 131072;    # file-descriptor limit must cover worker_connections
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

# Events module
events {
    worker_connections 65536;   # maximum connections per worker
    use epoll;                  # efficient event method on Linux
    multi_accept on;            # accept several new connections at once
}

# HTTP core
http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    # Log format (includes upstream address and response time)
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for" '
                    'upstream: $upstream_addr response_time: $upstream_response_time';
    access_log /var/log/nginx/access.log main;

    # Basic performance settings
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    keepalive_requests 1000;

    # Request body buffering
    client_body_buffer_size 128k;
    client_max_body_size 100m;

    # Cache zones
    proxy_cache_path /var/cache/nginx/static levels=1:2 keys_zone=STATIC:10m inactive=24h max_size=1g;
    proxy_cache_path /var/cache/nginx/dynamic levels=1:2 keys_zone=DYNAMIC:10m inactive=1h max_size=500m;

    # Upstream application servers
    upstream backend_cluster {
        # Consistent hashing on the request URI
        hash $request_uri consistent;

        server 192.168.1.101:8080 weight=5 max_fails=3 fail_timeout=30s;
        server 192.168.1.102:8080 weight=3 max_fails=3 fail_timeout=30s;
        server 192.168.1.103:8080 weight=2 max_fails=3 fail_timeout=30s;

        # The backup parameter cannot be combined with the hash method.
        # Enable this line only after switching to round robin or least_conn.
        # server 192.168.1.104:8080 backup;

        # Active health checks. These directives come from the third-party
        # nginx_upstream_check_module (bundled with Tengine); stock open-source
        # Nginx only has the passive max_fails / fail_timeout checks above.
        check interval=3000 rise=2 fall=5 timeout=1000 type=http;
        check_http_send "HEAD /health HTTP/1.1\r\nHost: backend.example.com\r\n\r\n";
        check_http_expect_alive http_2xx http_3xx;

        # Cookie-based session persistence. "sticky cookie" is an NGINX Plus
        # directive and an alternative to hash; use one method or the other.
        # sticky cookie srv_id expires=1h domain=.example.com path=/;
    }

    # Static asset servers
    upstream static_cluster {
        least_conn;             # least-connections method
        server 192.168.1.201:80 weight=1;
        server 192.168.1.202:80 weight=1;
    }

    server {
        listen 80;
        server_name example.com;

        # Nginx status endpoint
        location /nginx_status {
            stub_status on;
            access_log off;
            allow 192.168.1.0/24;
            deny all;
        }

        # Upstream health status page (nginx_upstream_check_module)
        location /upstream_status {
            check_status;
            access_log off;
            allow 192.168.1.0/24;
            deny all;
        }

        # Static assets
        location ~* \.(jpg|jpeg|png|gif|ico|css|js)$ {
            proxy_pass http://static_cluster;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

            # Caching
            proxy_cache STATIC;
            proxy_cache_valid 200 302 12h;
            proxy_cache_valid 404 1m;
            expires 1y;
            add_header Cache-Control "public, immutable";
        }

        # Dynamic requests
        location / {
            proxy_pass http://backend_cluster;

            # Proxy headers
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # Timeouts
            proxy_connect_timeout 5s;
            proxy_send_timeout 10s;
            proxy_read_timeout 10s;

            # Retry on the next upstream
            proxy_next_upstream error timeout invalid_header http_500 http_502 http_503;
            proxy_next_upstream_tries 3;
            proxy_next_upstream_timeout 30s;

            # Response buffering
            proxy_buffering on;
            proxy_buffer_size 4k;
            proxy_buffers 8 4k;
            proxy_busy_buffers_size 16k;
        }
    }
}

# TCP load balancing (layer-4 proxy)
stream {
    upstream database_cluster {
        server 192.168.2.101:3306 max_fails=3 fail_timeout=30s;
        server 192.168.2.102:3306 max_fails=3 fail_timeout=30s;

        # Active TCP health check. This needs a third-party health-check module
        # with stream support; stock open-source Nginx relies on max_fails here.
        check interval=5000 rise=2 fall=5 timeout=2000 type=tcp;
    }

    server {
        listen 3306;
        proxy_pass database_cluster;
        proxy_timeout 20s;
        # proxy_responses applies to UDP only, so it is omitted for this TCP listener.
    }
}
```

3.2 Health-Check Mechanisms in Depth
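The `rise=2 fall=5` parameters on the `check` line in the configuration describe a small state machine: a server is marked down only after `fall` consecutive failed probes, and marked up again only after `rise` consecutive successes. A minimal sketch of that logic follows; `RiseFallState` is a hypothetical name, and the behaviour is my reading of the parameters rather than the module's actual source.

```python
from dataclasses import dataclass


@dataclass
class RiseFallState:
    """Tracks one upstream server's health from a stream of probe results."""
    rise: int = 2          # consecutive successes needed to mark the server up
    fall: int = 5          # consecutive failures needed to mark the server down
    healthy: bool = True   # assumption: servers start out healthy
    successes: int = 0
    failures: int = 0

    def observe(self, probe_ok: bool) -> bool:
        """Record one probe result and return the resulting health state."""
        if probe_ok:
            self.successes += 1
            self.failures = 0
            if not self.healthy and self.successes >= self.rise:
                self.healthy = True
        else:
            self.failures += 1
            self.successes = 0
            if self.healthy and self.failures >= self.fall:
                self.healthy = False
        return self.healthy


if __name__ == "__main__":
    state = RiseFallState()
    probes = [False] * 5 + [True] * 2
    print([state.observe(ok) for ok in probes])
```

Requiring several consecutive results in each direction keeps a single dropped probe from removing a server, and a single lucky probe from reinstating a broken one.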

```python
# health_check.py
import time
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List

import requests


class HealthStatus(Enum):
    HEALTHY = "healthy"
    UNHEALTHY = "unhealthy"
    UNKNOWN = "unknown"


@dataclass
class ServerStats:
    """Health statistics for one server."""
    server: str
    health_endpoint: str = "/health"
    last_checked: float = 0.0
    consecutive_failures: int = 0
    consecutive_successes: int = 0
    total_requests: int = 0
    response_time_avg: float = 0.0
    status: HealthStatus = HealthStatus.UNKNOWN


class HealthChecker:
    """Active health checker: probes each server's health endpoint."""

    def __init__(self, check_interval: int = 30, timeout: int = 5,
                 rise: int = 3, fall: int = 3):
        self.check_interval = check_interval
        self.timeout = timeout
        self.rise = rise    # consecutive successes before marking healthy
        self.fall = fall    # consecutive failures before marking unhealthy
        self.servers_stats: Dict[str, ServerStats] = {}

    def add_server(self, server: str, health_endpoint: str = "/health"):
        """Register a server for health checking."""
        self.servers_stats[server] = ServerStats(
            server=server, health_endpoint=health_endpoint
        )

    def _record_failure(self, stats: ServerStats) -> bool:
        stats.consecutive_failures += 1
        stats.consecutive_successes = 0
        if stats.consecutive_failures >= self.fall:
            stats.status = HealthStatus.UNHEALTHY
        return False

    def check_server_health(self, server: str) -> bool:
        """Probe one server; return True if this probe succeeded."""
        stats = self.servers_stats[server]
        stats.last_checked = time.time()
        try:
            start_time = time.time()
            response = requests.get(
                f"http://{server}{stats.health_endpoint}", timeout=self.timeout
            )
            response_time = time.time() - start_time
        except requests.RequestException:
            return self._record_failure(stats)

        # Update the running average of the response time
        stats.total_requests += 1
        stats.response_time_avg += (
            (response_time - stats.response_time_avg) / stats.total_requests
        )

        if 200 <= response.status_code < 300:
            stats.consecutive_successes += 1
            stats.consecutive_failures = 0
            if stats.consecutive_successes >= self.rise:
                stats.status = HealthStatus.HEALTHY
            return True
        return self._record_failure(stats)

    def run_continuous_check(self):
        """Probe all servers in a loop (blocks the calling thread)."""
        while True:
            for server in list(self.servers_stats.keys()):
                is_healthy = self.check_server_health(server)
                print(f"Server {server} health: {is_healthy}")
            time.sleep(self.check_interval)


class PassiveHealthChecker:
    """Passive health checker: infers health from real request outcomes."""

    def __init__(self, max_fails: int = 3, fail_timeout: int = 30):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures: Dict[str, List[float]] = {}

    def _recent_failures(self, server: str) -> List[float]:
        """Failures that are still inside the fail_timeout window."""
        now = time.time()
        return [t for t in self.failures.get(server, [])
                if now - t < self.fail_timeout]

    def record_failure(self, server: str):
        """Record a failed request and drop expired failure records."""
        self.failures[server] = self._recent_failures(server) + [time.time()]

    def record_success(self, server: str):
        """Record a successful request; each success forgives one failure."""
        if self.failures.get(server):
            self.failures[server].pop()

    def is_server_healthy(self, server: str) -> bool:
        """A server is healthy while recent failures stay below max_fails."""
        return len(self._recent_failures(server)) < self.max_fails


class IntegratedHealthManager:
    """Combines active and passive health checks."""

    def __init__(self):
        self.active_checker = HealthChecker()
        self.passive_checker = PassiveHealthChecker()
        self.server_status: Dict[str, bool] = {}

    def update_server_status(self, server: str, request_success: bool) -> bool:
        """Update a server's status after a real request."""
        if request_success:
            self.passive_checker.record_success(server)
        else:
            self.passive_checker.record_failure(server)

        passive_healthy = self.passive_checker.is_server_healthy(server)

        # Use the latest active-probe result instead of probing on every request;
        # servers that were never registered or probed are not penalized.
        stats = self.active_checker.servers_stats.get(server)
        active_healthy = stats is None or stats.status != HealthStatus.UNHEALTHY

        # The server counts as healthy only if both checks agree
        self.server_status[server] = passive_healthy and active_healthy
        return self.server_status[server]
```

4 Enterprise-Grade High-Availability Architecture

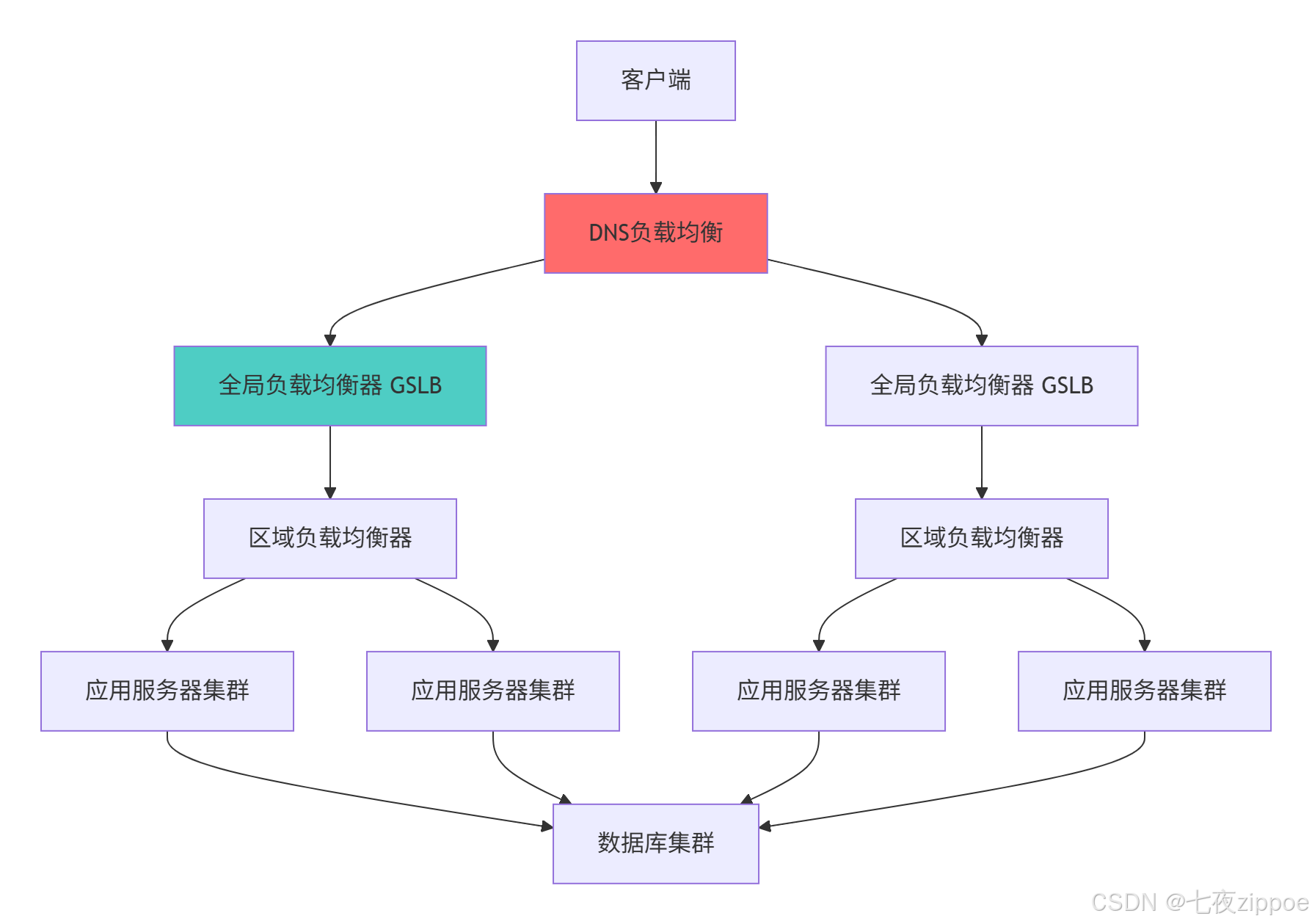

4.1 Multi-Tier Load-Balancing Architecture

4.2 Advanced Session-Persistence Strategies

```python
# session_management.py
import hashlib
import json
import secrets
from datetime import datetime
from typing import Dict, List, Optional

import redis


class DistributedSessionManager:
    """Distributed session manager backed by Redis."""

    def __init__(self, redis_host: str = 'localhost', redis_port: int = 6379):
        self.redis_client = redis.Redis(host=redis_host, port=redis_port, db=0)
        self.session_timeout = 3600  # one hour

    def _save(self, session_id: str, session_data: Dict):
        """Write the session and reset its expiry."""
        self.redis_client.setex(
            f"session:{session_id}", self.session_timeout, json.dumps(session_data)
        )

    def create_session(self, user_id: str, initial_data: Optional[Dict] = None) -> str:
        """Create a new session and return its ID."""
        # Use a random, unguessable ID rather than a hash of user ID and time
        session_id = secrets.token_hex(32)
        now = datetime.now().isoformat()
        self._save(session_id, {
            'user_id': user_id,
            'created_at': now,
            'last_accessed': now,
            'data': initial_data or {},
        })
        return session_id

    def get_session(self, session_id: str) -> Optional[Dict]:
        """Fetch session data and refresh its last-access time and expiry."""
        raw = self.redis_client.get(f"session:{session_id}")
        if not raw:
            return None
        data = json.loads(raw)
        data['last_accessed'] = datetime.now().isoformat()
        self._save(session_id, data)
        return data

    def update_session(self, session_id: str, new_data: Dict):
        """Merge new_data into the session's data."""
        existing_data = self.get_session(session_id)
        if existing_data:
            existing_data['data'].update(new_data)
            self._save(session_id, existing_data)

    def invalidate_session(self, session_id: str):
        """Delete the session."""
        self.redis_client.delete(f"session:{session_id}")


class StickySessionManager:
    """Sticky session manager: keeps a session on the same server."""

    def __init__(self, session_manager: DistributedSessionManager):
        self.session_manager = session_manager
        self.server_sessions: Dict[str, str] = {}  # session ID -> server

    def assign_server(self, session_id: str, available_servers: List[str]) -> str:
        """Return the server for a session, reusing the previous one if possible."""
        previous_server = self.server_sessions.get(session_id)
        if previous_server in available_servers:
            return previous_server

        selected_server = self._select_server(session_id, available_servers)
        self.server_sessions[session_id] = selected_server
        return selected_server

    def _select_server(self, session_id: str, servers: List[str]) -> str:
        """Pick a server by hashing the session ID.

        This is plain modulo hashing, a simplification: unlike a consistent
        hash ring, it remaps most sessions when the server list changes.
        """
        if not servers:
            raise ValueError("No available servers")
        hash_value = int(hashlib.md5(session_id.encode()).hexdigest(), 16)
        return servers[hash_value % len(servers)]

    def server_removed(self, server: str):
        """Forget assignments to a removed server so they are reassigned."""
        affected_sessions = [
            session_id for session_id, srv in self.server_sessions.items()
            if srv == server
        ]
        for session_id in affected_sessions:
            del self.server_sessions[session_id]


def demonstrate_session_management():
    """Usage example (requires a reachable Redis instance)."""
    session_manager = DistributedSessionManager()
    sticky_manager = StickySessionManager(session_manager)

    session_id = session_manager.create_session("user123", {"cart": ["item1", "item2"]})
    print(f"Created session: {session_id}")

    servers = ["server1", "server2", "server3"]
    assigned_server = sticky_manager.assign_server(session_id, servers)
    print(f"Assigned server: {assigned_server}")

    # Assigning again should return the same server
    same_server = sticky_manager.assign_server(session_id, servers)
    print(f"Assigned again: {same_server}")
    print(f"Consistent: {assigned_server == same_server}")

    return {
        'session_id': session_id,
        'assigned_server': assigned_server,
        'consistency': assigned_server == same_server,
    }
```

5 Performance Optimization and Monitoring

5.1 Advanced Performance Tuning
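Before tuning individual directives, it helps to know the ceiling they imply. As a rough estimate, the connection capacity is `worker_processes * worker_connections`, and in a reverse-proxy setup each client typically occupies two connections (one to the client, one to the upstream), which halves the figure. The sketch below encodes that rule of thumb; `max_clients` is a hypothetical helper, and real limits also depend on file-descriptor limits and memory.

```python
def max_clients(worker_processes: int, worker_connections: int,
                reverse_proxy: bool = True) -> int:
    """Estimate how many simultaneous clients an Nginx instance can serve.

    Assumption: when proxying, every client request holds one client-side
    and one upstream-side connection, so capacity is halved.
    """
    total_connections = worker_processes * worker_connections
    return total_connections // 2 if reverse_proxy else total_connections


if __name__ == "__main__":
    # 4 workers with worker_connections 65536, used as a reverse proxy
    print(max_clients(4, 65536))
    # The same machine serving static files directly
    print(max_clients(4, 65536, reverse_proxy=False))
```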

```python
# performance_optimization.py
import statistics
import time
from dataclasses import dataclass
from typing import Dict, List

import psutil


@dataclass
class PerformanceMetrics:
    """One sample of performance metrics."""
    timestamp: float
    cpu_usage: float
    memory_usage: float
    active_connections: int
    requests_per_second: float
    response_time_avg: float
    error_rate: float


class NginxPerformanceOptimizer:
    """Collects metrics and derives Nginx tuning recommendations."""

    def __init__(self, nginx_process_name: str = "nginx"):
        self.nginx_process_name = nginx_process_name
        self.metrics_history: List[PerformanceMetrics] = []

    def collect_metrics(self) -> PerformanceMetrics:
        """Collect one sample and append it to the history."""
        metrics = PerformanceMetrics(
            timestamp=time.time(),
            cpu_usage=psutil.cpu_percent(interval=1),
            memory_usage=psutil.virtual_memory().percent,
            active_connections=self._get_active_connections(),
            requests_per_second=self._calculate_rps(),
            response_time_avg=self._get_avg_response_time(),
            error_rate=self._get_error_rate(),
        )
        self.metrics_history.append(metrics)
        return metrics

    def _get_nginx_processes(self) -> List[psutil.Process]:
        """Return the running processes whose name matches nginx_process_name."""
        return [p for p in psutil.process_iter(['name'])
                if p.info['name'] == self.nginx_process_name]

    # The helpers below are placeholders: the original article calls them but
    # does not show them. Wire them to stub_status or access-log parsing.
    def _get_active_connections(self) -> int:
        return 0

    def _calculate_rps(self) -> float:
        return 0.0

    def _get_avg_response_time(self) -> float:
        return 0.0

    def _get_error_rate(self) -> float:
        return 0.0

    def optimize_worker_processes(self) -> int:
        """Recommend a worker count from the number of physical cores."""
        # cpu_count(logical=False) can return None on some platforms
        cpu_count = psutil.cpu_count(logical=False) or psutil.cpu_count() or 1
        if cpu_count <= 4:
            return cpu_count
        if cpu_count <= 8:
            return cpu_count - 1
        return cpu_count // 2

    def optimize_connections(self, current_metrics: PerformanceMetrics) -> Dict[str, int]:
        """Recommend connection-related settings."""
        recommendations = {}

        # Scale worker_connections with total system memory
        total_memory_gb = psutil.virtual_memory().total / (1024 ** 3)
        if total_memory_gb < 2:
            recommendations['worker_connections'] = 512
        elif total_memory_gb < 8:
            recommendations['worker_connections'] = 1024
        else:
            recommendations['worker_connections'] = 65536

        # Shorten keepalive when many connections are active
        if current_metrics.active_connections > 1000:
            recommendations['keepalive_timeout'] = 15
            recommendations['keepalive_requests'] = 100
        else:
            recommendations['keepalive_timeout'] = 65
            recommendations['keepalive_requests'] = 1000
        return recommendations

    def detect_bottlenecks(self, metrics_history: List[PerformanceMetrics],
                           max_connections: int = 65536) -> List[str]:
        """Detect bottlenecks; max_connections is the configured connection limit."""
        if len(metrics_history) < 2:
            return ["More data is needed for analysis"]

        bottlenecks = []
        recent_metrics = metrics_history[-10:]  # the 10 most recent samples

        if statistics.mean(m.cpu_usage for m in recent_metrics) > 80:
            bottlenecks.append(
                "CPU usage is high; consider more worker processes or optimizing application code")
        if statistics.mean(m.memory_usage for m in recent_metrics) > 90:
            bottlenecks.append(
                "Memory usage is high; consider adding memory or tuning cache settings")
        if max(m.active_connections for m in recent_metrics) > max_connections * 0.8:
            bottlenecks.append(
                "Active connections are near the limit; consider raising worker_connections")
        return bottlenecks


class AutomatedTuner:
    """Runs the optimizer periodically."""

    def __init__(self, optimizer: NginxPerformanceOptimizer):
        self.optimizer = optimizer
        self.tuning_history = []

    def run_continuous_tuning(self, check_interval: int = 60):
        """Collect, analyze, and apply recommendations in a loop."""
        while True:
            try:
                current_metrics = self.optimizer.collect_metrics()
                recommendations = self.optimizer.optimize_connections(current_metrics)
                bottlenecks = self.optimizer.detect_bottlenecks(
                    self.optimizer.metrics_history
                )
                self.apply_recommendations(recommendations)
                self.tuning_history.append({
                    'timestamp': time.time(),
                    'metrics': current_metrics,
                    'recommendations': recommendations,
                    'bottlenecks': bottlenecks,
                })
                print(f"Tuning pass complete - recommendations: {recommendations}")
                if bottlenecks:
                    print(f"Bottlenecks detected: {bottlenecks}")
            except Exception as e:
                print(f"Tuning error: {e}")
            time.sleep(check_interval)

    def apply_recommendations(self, recommendations: Dict[str, int]):
        """Apply the recommended settings.

        Placeholder: a real implementation would write the Nginx configuration
        file (or call a management API) and then reload Nginx.
        """
        pass
```

6 Troubleshooting and Practical Guide

6.1 Solutions to Common Problems
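A frequent first step when troubleshooting is to find out which upstream is slow. The sketch below parses access-log lines written with the `main` log format from section 3.1 (which ends in `upstream: $upstream_addr response_time: $upstream_response_time`) and averages the response time per upstream. The function name `avg_upstream_times` is hypothetical, and lines where Nginx retried several upstreams (comma-separated values) or logged `-` are skipped for simplicity.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable

# Matches the tail of the "main" log format for single-upstream requests
_UPSTREAM_RE = re.compile(
    r'upstream: (?P<addr>\S+) response_time: (?P<rt>\d+(?:\.\d+)?)\s*$'
)


def avg_upstream_times(lines: Iterable[str]) -> Dict[str, float]:
    """Return the average upstream response time (seconds) per upstream address."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for line in lines:
        match = _UPSTREAM_RE.search(line)
        if not match:  # retried requests, "-" values, or other formats
            continue
        totals[match['addr']] += float(match['rt'])
        counts[match['addr']] += 1
    return {addr: totals[addr] / counts[addr] for addr in totals}


if __name__ == "__main__":
    sample = [
        '10.0.0.1 - - [01/Jan/2024:00:00:00 +0000] "GET / HTTP/1.1" 200 512 '
        '"-" "curl/8.0" "-" upstream: 192.168.1.101:8080 response_time: 0.250',
        '10.0.0.2 - - [01/Jan/2024:00:00:01 +0000] "GET /a HTTP/1.1" 200 128 '
        '"-" "curl/8.0" "-" upstream: 192.168.1.101:8080 response_time: 0.750',
    ]
    for addr, avg in avg_upstream_times(sample).items():
        print(f"{addr}: {avg:.3f}s average")
```

In practice the input would be the tail of `/var/log/nginx/access.log`; an upstream whose average sits well above its peers is the first candidate to inspect.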

```python
# troubleshooting.py
import logging
import subprocess
from collections import deque
from datetime import datetime
from typing import Dict, List


class NginxTroubleshooter:
    """Nginx troubleshooting helper."""

    def __init__(self, nginx_conf_path: str = "/etc/nginx/nginx.conf",
                 log_file: str = "/var/log/nginx_troubleshoot.log"):
        self.nginx_conf_path = nginx_conf_path
        self.log_file = log_file  # writing under /var/log usually needs root
        self.setup_logging()

    def setup_logging(self):
        """Configure logging to a file and to the console."""
        logging.basicConfig(
            level=logging.INFO,
            format='%(asctime)s - %(name)s - %(levelname)s - %(message)s',
            handlers=[
                logging.FileHandler(self.log_file),
                logging.StreamHandler(),
            ],
        )
        self.logger = logging.getLogger(__name__)

    def check_nginx_syntax(self) -> bool:
        """Validate the configuration file with `nginx -t`."""
        try:
            result = subprocess.run(
                ['nginx', '-t', '-c', self.nginx_conf_path],
                capture_output=True,
                text=True,
                timeout=30,
            )
        except subprocess.TimeoutExpired:
            self.logger.error("Nginx configuration check timed out")
            return False
        except Exception as e:
            self.logger.error(f"Configuration check failed: {e}")
            return False

        if result.returncode == 0:
            self.logger.info("Nginx configuration syntax check passed")
            return True
        self.logger.error(f"Nginx configuration syntax error: {result.stderr}")
        return False

    def analyze_error_logs(self, log_path: str = "/var/log/nginx/error.log") -> Dict[str, List[str]]:
        """Classify the most recent 1000 lines of the error log."""
        errors = {
            'connection_errors': [],
            'permission_errors': [],
            'timeout_errors': [],
            'other_errors': [],
        }
        try:
            with open(log_path, 'r') as f:
                recent_lines = deque(f, maxlen=1000)  # avoids loading the whole file
        except Exception as e:
            self.logger.error(f"Log analysis error: {e}")
            return {}

        for log_line in recent_lines:
            line = log_line.lower()
            if 'connection refused' in line:
                errors['connection_errors'].append(log_line.strip())
            elif 'permission denied' in line:
                errors['permission_errors'].append(log_line.strip())
            elif 'timed out' in line or 'timeout' in line:
                # Nginx usually logs timeouts as "... timed out"
                errors['timeout_errors'].append(log_line.strip())
            elif 'error' in line:
                errors['other_errors'].append(log_line.strip())
        return errors

    def check_upstream_health(self, upstream_name: str) -> Dict[str, bool]:
        """Return upstream health by server.

        Placeholder with hard-coded sample data; a real implementation would
        query a status page or API.
        """
        return {
            'server1:8080': True,
            'server2:8080': False,  # simulated failed server
            'server3:8080': True,
        }

    def generate_troubleshooting_report(self) -> Dict:
        """Run all checks and build a report with recommendations."""
        report = {
            'timestamp': datetime.now().isoformat(),
            'syntax_check': self.check_nginx_syntax(),
            'error_analysis': self.analyze_error_logs(),
            'upstream_health': self.check_upstream_health('backend_cluster'),
            'recommendations': [],
        }

        if not report['syntax_check']:
            report['recommendations'].append('Fix syntax errors in the Nginx configuration file')

        error_analysis = report['error_analysis']
        if error_analysis.get('connection_errors'):
            report['recommendations'].append(
                'Check backend server connectivity and firewall settings')
        if error_analysis.get('timeout_errors'):
            report['recommendations'].extend([
                'Increase proxy_read_timeout',
                'Check backend server performance',
            ])

        unhealthy_servers = [
            srv for srv, healthy in report['upstream_health'].items() if not healthy
        ]
        if unhealthy_servers:
            report['recommendations'].append(
                f'Repair or replace unhealthy upstream servers: {", ".join(unhealthy_servers)}'
            )
        return report


def demonstrate_troubleshooting():
    """Walk through the troubleshooting flow."""
    troubleshooter = NginxTroubleshooter()
    report = troubleshooter.generate_troubleshooting_report()

    print("=== Nginx troubleshooting report ===")
    print(f"Generated at: {report['timestamp']}")
    print(f"Syntax check: {'passed' if report['syntax_check'] else 'failed'}")
    print(f"Error categories found: {list(report['error_analysis'].keys())}")

    print("\n=== Recommendations ===")
    for i, recommendation in enumerate(report['recommendations'], 1):
        print(f"{i}. {recommendation}")
    return report
```

Official Documentation and Reference Resources

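- Nginx official documentation - official configuration reference and guides
- Nginx load-balancing configuration guide - official load-balancing documentation
- Nginx performance tuning guide - performance optimization best practices
- Distributed system design patterns - Microsoft architecture patterns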

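Having worked through this article, you should now have a grasp of the core techniques and practical applications of load balancing and reverse proxying. Load balancing is a foundational technology of modern distributed systems, and implementing it correctly largely determines a system's availability, scalability, and performance.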