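Deep Residual Networks (ResNet) have had a far-reaching impact on deep learning since they were first proposed in 2015. Through a "residual learning" mechanism, ResNet made it possible to train neural networks much deeper than earlier models, which significantly improved performance on many tasks. By combining residual learning with shortcut-based building blocks, ResNet largely alleviates the vanishing-gradient problem of traditional deep networks and yields efficient, scalable deep models.

In my view, ResNet is a foundational architecture that every deep learning beginner should study. This post records my notes on ResNet18.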
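The residual idea can be written compactly. The following is a sketch in notation that is not part of the original post: $x$ is the input of a block, $\mathcal{F}$ is the mapping learned by the stacked convolution layers, $y$ is the block output, and $\mathcal{L}$ is the loss. The ReLU applied after the addition is left out for simplicity.

$$
y = \mathcal{F}(x) + x
$$

$$
\frac{\partial \mathcal{L}}{\partial x} = \frac{\partial \mathcal{L}}{\partial y}\left(I + \frac{\partial \mathcal{F}}{\partial x}\right)
$$

The identity term $I$ gives the gradient a direct path through the shortcut, so it does not have to pass through the convolution weights alone. This is one common way to explain why such networks remain trainable at large depth.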

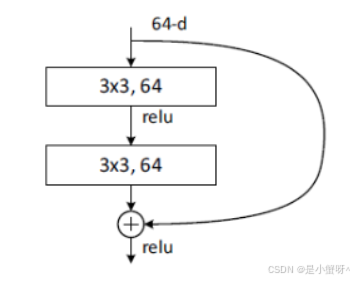

Note: ResNet18 uses the BasicBlock module, shown in the figure below.

BasicBlock implementation:

```python
from torch import nn


class BasicBlock(nn.Module):
    expansion = 1

    # A convolution followed by a BN layer does not need a bias:
    # BN's mean subtraction cancels it out.
    def __init__(self, in_channels, out_channels, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # downsample reshapes the shortcut when the input and output differ
        # (in channels or spatial size) so that the two can be added
        self.downsample = downsample

    def forward(self, x):
        identity = x  # keep the input for the later addition (the right-hand shortcut branch)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)
        return out
```

ResNet18 architecture design:
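Before reading the class, it may help to check the feature-map sizes quoted in the comments of its `forward` method. This is a minimal sketch, assuming 32×32 inputs as those comments suggest. The helper names `conv_out_size` and `stage_shapes` are illustrative and not part of the original code.

```python
def conv_out_size(size, kernel_size, stride, padding):
    """Spatial output size of a convolution without dilation."""
    return (size + 2 * padding - kernel_size) // stride + 1


def stage_shapes(input_size=32):
    """Trace (channels, height, width) through the stem and the four stages."""
    # Stem: a 3x3 conv with stride 1 and padding 1 keeps the spatial size.
    size = conv_out_size(input_size, kernel_size=3, stride=1, padding=1)
    shapes = [(64, size, size)]
    # Only the first conv of each stage uses the stage stride; the other
    # 3x3, stride-1, padding-1 convs leave the spatial size unchanged.
    for channels, stride in [(64, 1), (128, 2), (256, 2), (512, 2)]:
        size = conv_out_size(size, kernel_size=3, stride=stride, padding=1)
        shapes.append((channels, size, size))
    return shapes


shapes = stage_shapes(32)
for shape in shapes:
    print(shape)
```

The same formula applies to the 1×1 shortcut convolution: with stride 2 and no padding, `conv_out_size(32, 1, 2, 0)` also gives 16, which is why the shortcut and the main branch can be added element-wise.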

```python
from torch import nn
from torch.nn import functional as F


# resnet18 (uses the BasicBlock class defined in the previous snippet)
class ResNet18(nn.Module):
    def __init__(self, Residualblock=BasicBlock, num_classes=10):
        super(ResNet18, self).__init__()
        self.in_channels = 64

        self.conv1 = nn.Sequential(  # small 3x3 convolution as the stem
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
        )

        self.layer1 = self.make_layer(Residualblock, 64, num_blocks=2, stride=1)
        self.layer2 = self.make_layer(Residualblock, 128, num_blocks=2, stride=2)
        self.layer3 = self.make_layer(Residualblock, 256, num_blocks=2, stride=2)
        self.layer4 = self.make_layer(Residualblock, 512, num_blocks=2, stride=2)
        self.fc = nn.Linear(512 * Residualblock.expansion, num_classes)

    def make_layer(self, block, channels, num_blocks, stride):
        # Only the first block of a stage uses the given stride; the rest use 1.
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            downsample = None
            if stride != 1 or self.in_channels != channels:
                downsample = nn.Sequential(
                    nn.Conv2d(self.in_channels, channels, kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(channels),
                )
            layers.append(block(self.in_channels, channels, stride=stride, downsample=downsample))
            self.in_channels = channels
        # Pack the residual blocks collected in `layers` into a single
        # callable stage and return it.
        return nn.Sequential(*layers)

    def forward(self, x):               # 3*32*32
        out = self.conv1(x)             # 64*32*32
        out = self.layer1(out)          # 64*32*32
        out = self.layer2(out)          # 128*16*16
        out = self.layer3(out)          # 256*8*8
        out = self.layer4(out)          # 512*4*4
        out = F.avg_pool2d(out, 4)      # 512*1*1
        # 512: out.size(0) is the batch size N; -1 infers the remaining
        # dimensions automatically and flattens them
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out
```

Complete code:

```python
from torch import nn
from torch.nn import functional as F


class BasicBlock(nn.Module):
    expansion = 1

    # A convolution followed by a BN layer does not need a bias:
    # BN's mean subtraction cancels it out.
    def __init__(self, in_channels, out_channels, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # downsample reshapes the shortcut when the input and output differ
        # (in channels or spatial size) so that the two can be added
        self.downsample = downsample

    def forward(self, x):
        identity = x  # keep the input for the later addition (the right-hand shortcut branch)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)
        return out


# resnet18
class ResNet18(nn.Module):
    def __init__(self, Residualblock=BasicBlock, num_classes=10):
        super(ResNet18, self).__init__()
        self.in_channels = 64

        self.conv1 = nn.Sequential(  # small 3x3 convolution as the stem
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
        )

        self.layer1 = self.make_layer(Residualblock, 64, num_blocks=2, stride=1)
        self.layer2 = self.make_layer(Residualblock, 128, num_blocks=2, stride=2)
        self.layer3 = self.make_layer(Residualblock, 256, num_blocks=2, stride=2)
        self.layer4 = self.make_layer(Residualblock, 512, num_blocks=2, stride=2)
        self.fc = nn.Linear(512 * Residualblock.expansion, num_classes)

    def make_layer(self, block, channels, num_blocks, stride):
        # Only the first block of a stage uses the given stride; the rest use 1.
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            downsample = None
            if stride != 1 or self.in_channels != channels:
                downsample = nn.Sequential(
                    nn.Conv2d(self.in_channels, channels, kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(channels),
                )
            layers.append(block(self.in_channels, channels, stride=stride, downsample=downsample))
            self.in_channels = channels
        return nn.Sequential(*layers)

    def forward(self, x):               # 3*32*32
        out = self.conv1(x)             # 64*32*32
        out = self.layer1(out)          # 64*32*32
        out = self.layer2(out)          # 128*16*16
        out = self.layer3(out)          # 256*8*8
        out = self.layer4(out)          # 512*4*4
        out = F.avg_pool2d(out, 4)      # 512*1*1
        # 512: out.size(0) is the batch size N; -1 infers the remaining
        # dimensions automatically and flattens them
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out


model = ResNet18()
print(model)
```

For ResNet34, the implementation differs little from ResNet18; the specific differences are shown in the figure below:
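In code terms, the change mostly comes down to the number of BasicBlocks per stage. Here is a small sketch, assuming the standard ResNet34 layout of 3, 4, 6 and 3 blocks. The names `STAGE_BLOCKS` and `weighted_layer_count` are made up for this illustration and do not appear in the code above.

```python
# Blocks per stage. [2, 2, 2, 2] matches the num_blocks values used in
# ResNet18 above; [3, 4, 6, 3] is assumed here as the usual ResNet34 layout.
STAGE_BLOCKS = {
    "resnet18": [2, 2, 2, 2],
    "resnet34": [3, 4, 6, 3],
}


def weighted_layer_count(blocks_per_stage, convs_per_block=2):
    """Count the stem conv, the 3x3 convs inside the blocks, and the final fc layer.

    The 1x1 shortcut convolutions are not counted, following the usual naming.
    """
    return 1 + convs_per_block * sum(blocks_per_stage) + 1


for name, blocks in STAGE_BLOCKS.items():
    print(name, weighted_layer_count(blocks))
```

With the class above, this would amount to passing `num_blocks=3`, `4`, `6` and `3` to the four `make_layer` calls; the channel widths and strides stay the same.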