环境

系统:CentOS-7

CPU : E5-2680V4 14核28线程

内存:DDR4 2133 32G * 2

显卡:Tesla V100-32G【PG503】 (水冷)

驱动: 535

CUDA: 12.2环境

bash

nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2023 NVIDIA Corporation

Built on Fri_Jan__6_16:45:21_PST_2023

Cuda compilation tools, release 12.0, V12.0.140

Build cuda_12.0.r12.0/compiler.32267302_0

cmake --version

cmake3 version 3.17.5创建进入Python环境

bash

conda create --name llamap_cpp python=3.12

conda activate llamap_cpp安装

bash

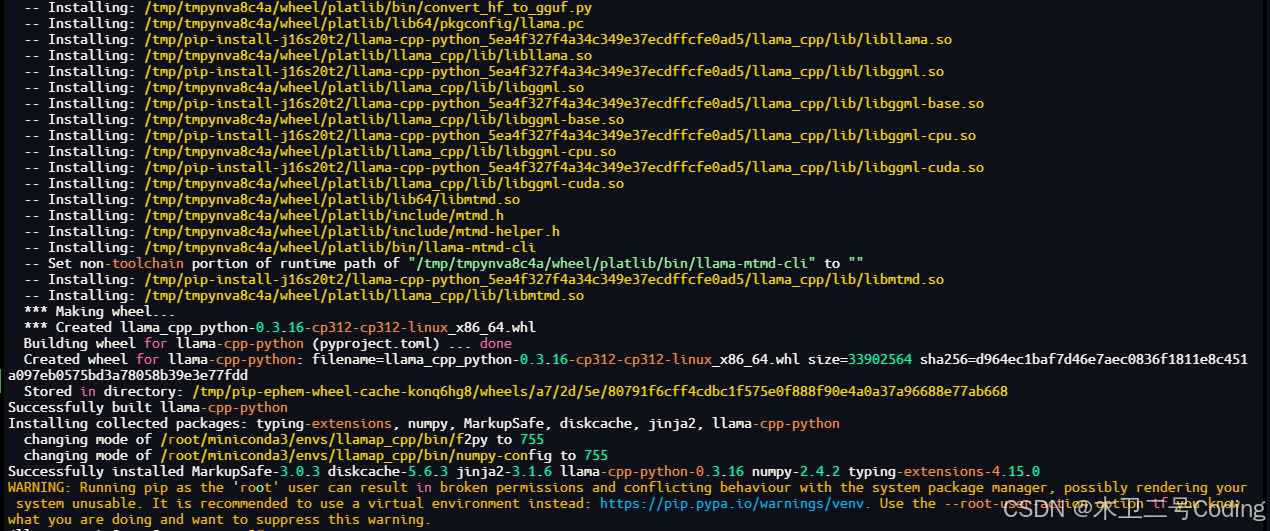

CMAKE_ARGS="-DGGML_CUDA=on -DLLAMA_CUDA_ARCH=70 -DLLAMA_CUDA_F16=on" \

> pip install llama-cpp-python --force-reinstall --no-cache-dir --verbose -i https://mirrors.cloud.tencent.com/pypi/simple等待2~3分钟

Successfully built llama-cpp-python

运行

bash

from llama_cpp import Llama

#import torch

# 模型路径(请替换为实际路径)

MODEL_PATH = "/models/GGUF_LIST/Qwen3-4B-Q4_K_M.gguf"

MODEL_PATH = "/models/GGUF_LIST/Qwen3-30B-A3B-Thinking-2507-Q4_K_M.gguf"

# 自动检测可用资源

#gpu_memory = torch.cuda.get_device_properties(0).total_memory / 1024**3 # GB

#print(f"GPU 显存: {gpu_memory:.1f} GB")

#print(f"CPU 核心: 14核28线程 (E5-2680 v4)")

# 根据模型大小设置 GPU layers(V100 32GB 推荐值)

# - 8B 模型: n_gpu_layers=35 (全部层)

# - 30B-A3B: n_gpu_layers=45-50

# - 80B-A3B: n_gpu_layers=60-65 (需测试稳定性)

llm = Llama(

model_path=MODEL_PATH,

n_gpu_layers=50, # 关键:offload 到 GPU 的层数

n_ctx=32768, # Qwen3 最大上下文

n_threads=26, # 充分利用 28 逻辑线程

n_batch=512, # 批处理大小

verbose=False, # 减少启动日志

chat_format="qwen", # Qwen3 专用 chat template

logits_all=False, # 关闭 logits 以节省显存

flash_attn=False # V100 不支持 FlashAttention-2

)

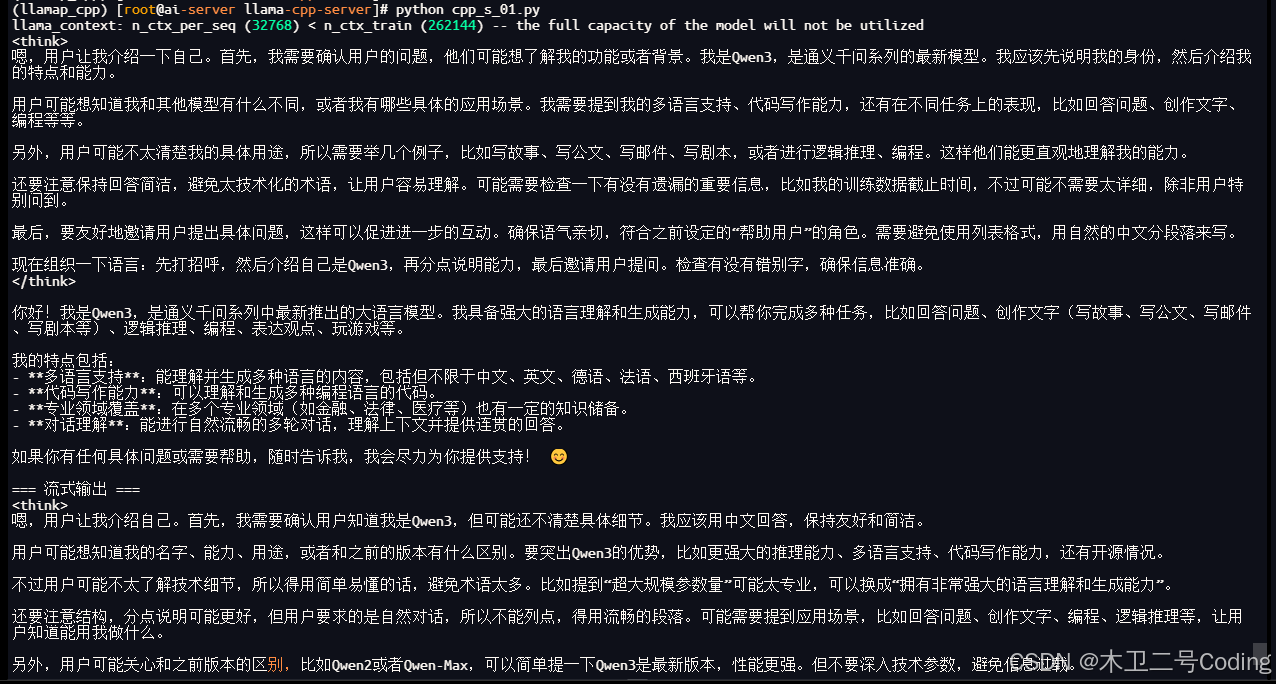

# 对话推理示例

messages = [

{"role": "system", "content": "You are Qwen3, a helpful assistant."},

{"role": "user", "content": "你好,请介绍你自己"}

]

response = llm.create_chat_completion(

messages=messages,

temperature=0.7,

top_p=0.8,

max_tokens=512,

stream=False # 设为 True 可流式输出

)

print(response["choices"][0]["message"]["content"])

# 流式输出示例(推荐用于长文本)

print("\n=== 流式输出 ===")

for chunk in llm.create_chat_completion(

messages=messages,

temperature=0.7,

max_tokens=1024,

stream=True

):

if "content" in chunk["choices"][0]["delta"]:

print(chunk["choices"][0]["delta"]["content"], end="", flush=True)

print()

bash

Wed Feb 4 23:15:47 2026

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.129.03 Driver Version: 535.129.03 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 Tesla PG503-216 On | 00000000:04:00.0 Off | 0 |

| N/A 30C P0 125W / 250W | 23070MiB / 32768MiB | 83% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+详细速度后面测试,感知速度挺快