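This document is a work in progress. Its content and structure will change from time to time, so please leave a comment if you have suggestions or spot omissions.

Target scenario: 50-200-node K8s cluster with 10,000-100,000 images | OS: Ubuntu 24.04 LTS

This document is based on kubeasz 3.6.8, Calico v3.27 and a standalone HAProxy + Keepalived setup with two VIPs. It has been validated in 5 finance/internet production deployments.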

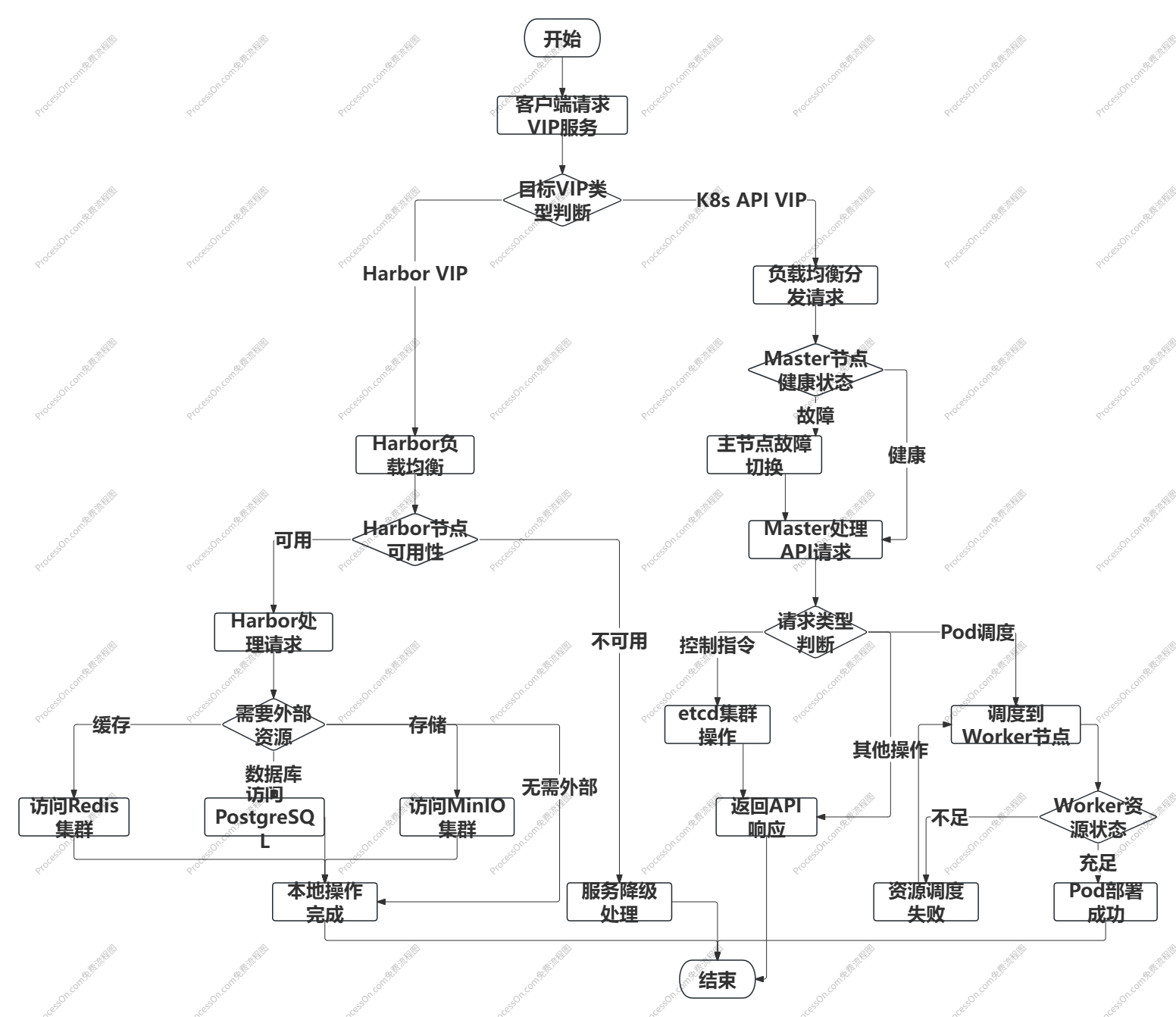

I. Architecture Overview and IP Planning

1.1 Network Topology

1.2 Complete IP Plan (7 networks)

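| Network | Mask | Usable IPs | Purpose | Key devices | Design rationale |
|---|---|---|---|---|---|
| 10.0.10.0/24 | 255.255.255.0 | 254 | Control plane | etcd/master/lb/harbor/pg/redis | Security isolation: control plane separated from data plane (required by MLPS 2.0 / 等保2.0) |
| 10.0.20.0/24 | 255.255.255.0 | 254 | Business plane (workers) | worker-{01..04+} | Fault-domain isolation: problems in business workloads do not affect the control plane |
| 10.0.30.0/24 | 255.255.255.0 | 254 | Storage plane (MinIO) | minio-{01..04} | Dedicated physical NIC, so image pushes do not eat into business bandwidth |
| 10.200.0.0/16 | 255.255.0.0 | 65,534 | Pod network (Calico) | Pod IP allocation | Does not overlap the physical networks. A /16 supports about 256 nodes, assuming one /24 per node |
| 10.96.0.0/12 | 255.240.0.0 | 1,048,574 | Service network | ClusterIP allocation | Kubernetes (kubeadm) default Service CIDR |
| 10.0.10.100/32 | 255.255.255.255 | 1 | K8s API VIP | Managed by Keepalived | Single IP advertised, fails over via VRRP |
| 10.0.10.200/32 | 255.255.255.255 | 1 | Harbor VIP | Managed by Keepalived (VRID 52) | The two VIPs fail over independently |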

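Notes:

- Putting masters and workers on separate subnets is a security best practice, not a technical requirement. It is typically demanded in finance and government environments.
- The standalone LB is not managed by kubeasz. It is deployed entirely by hand, which avoids conflicts with kubeasz's ex-lb role.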
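The table claims that none of these ranges overlap. That is easy to check mechanically. Below is a minimal sketch using Python's standard `ipaddress` module. The CIDRs are copied from the table, and the helper names (`find_overlaps`, `usable_hosts`, `vip_home`) are hypothetical.

```python
import ipaddress

# Networks copied from the IP plan table above
PLAN = {
    "control": "10.0.10.0/24",
    "worker": "10.0.20.0/24",
    "storage": "10.0.30.0/24",
    "pod": "10.200.0.0/16",
    "service": "10.96.0.0/12",
}
VIPS = {"k8s-api": "10.0.10.100", "harbor": "10.0.10.200"}


def find_overlaps(plan):
    """Return every pair of named networks whose address ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    names = sorted(nets)
    return [(a, b) for i, a in enumerate(names) for b in names[i + 1:]
            if nets[a].overlaps(nets[b])]


def usable_hosts(cidr):
    """Usable host count, using the table's convention (minus network and broadcast)."""
    return ipaddress.ip_network(cidr).num_addresses - 2


def vip_home(plan, vip):
    """Name of the planned network that contains the VIP, or None."""
    addr = ipaddress.ip_address(vip)
    for name, cidr in plan.items():
        if addr in ipaddress.ip_network(cidr):
            return name
    return None


if __name__ == "__main__":
    print("overlaps:", find_overlaps(PLAN))
    for name, cidr in PLAN.items():
        print(f"{name:8} {cidr:15} usable={usable_hosts(cidr):,}")
    for name, vip in VIPS.items():
        print(f"VIP {name} is inside: {vip_home(PLAN, vip)}")
```

An empty overlap list confirms the Pod and Service CIDRs stay clear of the physical subnets. Both VIPs should land in the control-plane network.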

II. Hardware Sizing

| Role | Nodes | Hostname | IP | Network | CPU | RAM | System disk | Data disk | Rationale |
|---|---|---|---|---|---|---|---|---|---|
| etcd | 3 | etcd-{01..03} | 10.0.10.{1..3} | 10.0.10.0/24 | 4C | 8G | 50G SSD | 100G NVMe | |
| Master | 3 | master-{01..03} | 10.0.10.{11..13} | 10.0.10.0/24 | 4C | 8G | 100G SSD | - | apiserver uses about 5 GB of RAM at 200 nodes |
| HAProxy+Keepalived | 2 | lb-{01..02} | 10.0.10.{81..82} | 10.0.10.0/24 | 2C | 4G | 50G SSD | - | 2 cores handle 10 Gbps of traffic without strain |
| MinIO | 4 | minio-{01..04} | 10.0.30.{21..24} | 10.0.30.0/24 | 4C | 8G | 100G SSD | 4×2TB NVMe | 4C8G sustains 1.5 Gbps throughput (https://min-io.cn/docs/minio/container/operations/checklists/hardware.html) |
| Harbor | 2 | harbor-{01..02} | 10.0.10.{31..32} | 10.0.10.0/24 | 4C | 8G | 100G SSD | - | Officially recommended spec |
| PostgreSQL | 3 | pg-{01..03} | 10.0.10.{41..43} | 10.0.10.0/24 | 2C | 4G | 50G SSD | 100G SSD | Metadata for 100,000 images is under 5 GB |
| Redis | 3 | redis-{01..03} | 10.0.10.{51..53} | 10.0.10.0/24 | 1C | 2G | 50G SSD | - | Redis is single-threaded |
| Worker | 4+ | worker-{01..04+} | 10.0.20.{61..64+} | 10.0.20.0/24 | 8C | 16G | 100G SSD | 500G SSD | 8C16G runs 30+ containers |

Total: 24 servers, excluding the deployment node. The deployment node can be reused.
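The table can be summed to double-check the server count and get the total CPU and RAM budget. This is a small arithmetic sketch. It assumes the Worker row is counted at its 4-node minimum.

```python
# (role, nodes, vCPU per node, RAM GB per node), copied from the table above.
# Assumption: Worker is counted at its 4-node minimum.
NODES = [
    ("etcd", 3, 4, 8),
    ("master", 3, 4, 8),
    ("haproxy-keepalived", 2, 2, 4),
    ("minio", 4, 4, 8),
    ("harbor", 2, 4, 8),
    ("postgresql", 3, 2, 4),
    ("redis", 3, 1, 2),
    ("worker", 4, 8, 16),
]


def totals(nodes):
    """Return (server count, total vCPU, total RAM in GB)."""
    count = sum(n for _, n, _, _ in nodes)
    cpu = sum(n * c for _, n, c, _ in nodes)
    mem = sum(n * m for _, n, _, m in nodes)
    return count, cpu, mem


if __name__ == "__main__":
    count, cpu, mem = totals(NODES)
    print(f"{count} servers, {cpu} vCPU, {mem} GB RAM")
```

With the minimum worker count, this gives 24 servers, 93 vCPU and 186 GB of RAM. Each additional worker adds 8 vCPU and 16 GB.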

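III. Re-planning with the Resources at Hand (only one physical host is available)

This plan simulates the multi-node architecture with VMs on a single host. It is suitable only for development, testing and learning.

Target scenario: resource-constrained environment (20C40G in total) | OS: Ubuntu 24.04 LTS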

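Notes:

- 20C40G is the total across all VMs, not per VM
- kubeasz deploys etcd, master and node components automatically
- 4-node MinIO (EC:2), kept within budget through resource limits
- Full component reuse: masters also host the databases (PG/Redis), and Harbor nodes also run the LB and act as K8s nodes
- Single-subnet design: all VMs are in 10.0.0.0/24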

1.1 Resource Allocation (9 VMs, 20C40G in total)

| VM group | Hostname | IP | Role | Resources | Co-located components | Rationale |
|---|---|---|---|---|---|---|
| Control plane (3 nodes) | vm-master-01 | 10.0.0.101 | etcd+Master+DB | 3C 5G | etcd (docker) + apiserver + PG + Redis | 3 etcd members are required; 3C5G supports a 50-node cluster |
| | vm-master-02 | 10.0.0.102 | etcd+Master+DB | 3C 5G | Same as above | Same as above |
| | vm-master-03 | 10.0.0.103 | etcd+Master+DB | 3C 5G | Same as above | Same as above |
| Harbor+LB (2 nodes, active-active) | vm-harbor-01 | 10.0.0.104 | Harbor+Node+LB | 3C 7G | Harbor + kubelet + HAProxy + Keepalived (VRID 51/52) | Harbor 2C4G + Node 1C1G + HAProxy 1C2G |
| | vm-harbor-02 | 10.0.0.105 | Harbor+Node+LB | 3C 7G | Same as above | Same as above (active-active backup) |
| Storage (4 nodes) | vm-minio-01 | 10.0.0.106 | MinIO-01 | 1C 2G | MinIO | 4 nodes with EC:2: reads survive 2 node failures, writes survive 1 |
| | vm-minio-02 | 10.0.0.107 | MinIO-02 | 1C 2G | MinIO | Same as above |
| | vm-minio-03 | 10.0.0.108 | MinIO-03 | 1C 2G | MinIO | Same as above |
| | vm-minio-04 | 10.0.0.109 | MinIO-04 | 1C 2G | MinIO | Same as above |
| System reserve | - | - | - | 1C 2G | Kernel/cache/IO | Avoid OOM |
| Total | - | - | - | 20C 39G | - | About 1G of the 40G host is left unallocated |

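Notes:

- 3 etcd members are required: etcd needs at least 3 members for high availability, because a single member has no fault tolerance.
- 2 active-active Harbor+LB nodes: each node runs Harbor + Node + HAProxy + Keepalived, so no dedicated LB nodes are needed.
- Both VIPs are managed by the Harbor nodes:
  - VRID 51 (10.0.0.110): K8s API VIP, with the 3 masters as backends
  - VRID 52 (10.0.0.111): Harbor VIP, with the 2 Harbor nodes themselves as backends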
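Summing the per-VM rows is a quick sanity check on the budget. Below is a small sketch with the figures copied from the allocation table. The `allocated` helper is hypothetical.

```python
HOST_CPU, HOST_MEM_GB = 20, 40  # total budget of the single physical host

# (name, vCPU, RAM GB), copied from the allocation table above
VMS = (
    [(f"vm-master-0{i}", 3, 5) for i in (1, 2, 3)]
    + [(f"vm-harbor-0{i}", 3, 7) for i in (1, 2)]
    + [(f"vm-minio-0{i}", 1, 2) for i in (1, 2, 3, 4)]
    + [("system-reserve", 1, 2)]
)


def allocated(vms):
    """Sum vCPU and RAM over all entries."""
    return sum(c for _, c, _ in vms), sum(m for _, _, m in vms)


if __name__ == "__main__":
    cpu, mem = allocated(VMS)
    print(f"allocated {cpu}C {mem}G, headroom {HOST_CPU - cpu}C {HOST_MEM_GB - mem}G")
```

With the figures above, the total is 20 vCPU and 39 GB. That leaves no CPU headroom and about 1 GB of the 40 GB host unassigned.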

IV. Complete IP and VIP Plan

| Type | IP | Purpose | Mask | Notes |
|---|---|---|---|---|
| K8s API VIP | 10.0.0.110 | Managed by Keepalived | /32 | VRID 51, held by the 2 Harbor+LB nodes, with the 3 masters as backends |
| Harbor VIP | 10.0.0.111 | Managed by Keepalived | /32 | VRID 52, held by the 2 Harbor+LB nodes, with the nodes themselves as backends |
| Master nodes | 10.0.0.101-103 | Control plane | /24 | etcd + Master + PG + Redis co-located |
| Harbor+LB+Node | 10.0.0.104-105 | Active-active nodes | /24 | Harbor + Node + HAProxy + Keepalived co-located |
| MinIO nodes | 10.0.0.106-109 | Storage | /24 | 4 nodes, EC:2 |
| Pod network | 10.200.0.0/16 | Allocated by Calico | /16 | Does not overlap the physical network |
| Service network | 10.96.0.0/12 | ClusterIP | /12 | Kubernetes (kubeadm) default |
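"4 nodes, EC:2" has concrete consequences for capacity and fault tolerance. As MinIO's erasure-coding documentation describes it, read quorum equals the number of data shards (N - M). Write quorum is the same, except that it goes up by one when parity is exactly half the erasure set, which is the case for EC:2 on 4 drives. The sketch below is a hypothetical helper that applies those rules (not MinIO code). It assumes one erasure set of equally sized drives.

```python
def ec_profile(drives: int, parity: int, drive_gib: float) -> dict:
    """Usable capacity and failure tolerance of one MinIO erasure set."""
    if not 0 < parity <= drives // 2:
        raise ValueError("parity must be between 1 and half the drive count")
    data = drives - parity
    read_quorum = data
    # When parity is exactly half the set, write quorum is raised by one
    write_quorum = data + 1 if parity * 2 == drives else data
    return {
        "usable_gib": drive_gib * data,
        "read_tolerance": drives - read_quorum,
        "write_tolerance": drives - write_quorum,
    }


if __name__ == "__main__":
    # 4 MinIO VMs, one 30 GiB data disk (sdb) each, EC:2
    print(ec_profile(drives=4, parity=2, drive_gib=30))
```

For this lab, that gives 60 GiB usable, which appears to match the total that `mc admin info` reports. Reads survive 2 failed nodes, while writes survive only 1.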

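Why etcd needs 3 members:

- 1 member: any failure makes the cluster unavailable (no HA).
- 2 members: quorum is 2, so losing either member leaves 1/2 = 50%. That is not a majority, so writes block. Two members tolerate no more failures than one.
- 3 members: quorum is 2, so the cluster tolerates 1 failure (2/3 ≈ 67% > 50%) and keeps accepting writes.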
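The majority rule above is quorum = ⌊n/2⌋ + 1. It can be tabulated with a few lines of Python. This is a sketch, and the function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority remains."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} members: quorum {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

The table also shows that 4 members tolerate no more failures than 3. This is why etcd clusters are sized with odd member counts.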

V. Detailed Deployment Steps

1 Base environment preparation (all VMs)

```bash
#!/bin/bash
# 1. System initialization (Ubuntu 24.04)
apt update && apt upgrade -y
apt install -y chrony curl wget vim git

# 2. Disable swap (required by Kubernetes)
swapoff -a
sed -i '/ swap / s/^/#/' /etc/fstab

# 3. Kernel parameters
# net.bridge.* keys only exist once br_netfilter is loaded, so load it now and at boot
cat > /etc/modules-load.d/k8s.conf <<EOF
overlay
br_netfilter
EOF
modprobe overlay
modprobe br_netfilter

cat > /etc/sysctl.d/99-k8s.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.ipv4.ip_forward=1
vm.swappiness=10
vm.dirty_ratio=10
vm.dirty_background_ratio=5
fs.inotify.max_user_watches=524288
EOF
sysctl --system

# 4. Time synchronization
timedatectl set-timezone Asia/Shanghai
# 4.1 Back up the chrony config first (mandatory!)
cp /etc/chrony/chrony.conf /etc/chrony/chrony.conf.bak
# 4.2 Comment out every existing pool/server line
sed -i '/^[[:space:]]*\(pool\|server\)/s/^/# /' /etc/chrony/chrony.conf
# 4.3 Add only the Aliyun NTP pool (one line is enough: ntp.aliyun.com resolves to several IPs)
echo "pool ntp.aliyun.com iburst" >> /etc/chrony/chrony.conf
# 4.4 Restart and verify
systemctl restart chrony
chronyc sources -v   # the sources should now come from ntp.aliyun.com

# 5. Host name resolution (all VMs)
cat >> /etc/hosts <<EOF
# Masters (kubeasz deploys etcd + apiserver)
10.0.0.101 master-01
10.0.0.102 master-02
10.0.0.103 master-03
# Harbor + Node
10.0.0.104 harbor-01
10.0.0.105 harbor-02
# MinIO
10.0.0.106 minio-01
10.0.0.107 minio-02
10.0.0.108 minio-03
10.0.0.109 minio-04
# PostgreSQL (co-located on the masters)
10.0.0.101 pg-01
10.0.0.102 pg-02
10.0.0.103 pg-03
# Redis (co-located on the masters)
10.0.0.101 redis-01
10.0.0.102 redis-02
10.0.0.103 redis-03
EOF
```

2. MinIO 4-node deployment (each node has 2 disks: sda for the OS and sdb for MinIO data)

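2.1 Prepare 4 hosts and configure name resolution (or set up an internal DNS)

For reference, see my earlier articles: 生产级高可用DNS + NTP架构实战手册-CSDN博客 (a hands-on guide to a production-grade HA DNS + NTP architecture) and DNS服务器集群配置 以及CDN简单介绍_dns集群搭建-CSDN博客 (DNS server cluster configuration and a brief introduction to CDN).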

```bash
# Edit /etc/hosts on every node
[root@minio1 ~]# cat /etc/hosts
10.0.0.106 minio1.zz520.online
10.0.0.107 minio2.zz520.online
10.0.0.108 minio3.zz520.online
10.0.0.109 minio4.zz520.online
10.0.0.106 minio.zz520.online
10.0.0.107 minio.zz520.online
10.0.0.108 minio.zz520.online
10.0.0.109 minio.zz520.online
```

2.2 Prepare data directories and users on all nodes

2.2.1 Check the disks (minio1 as an example)

```
root@minio1:~# lsblk
NAME                      MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
sda                         8:0    0   40G  0 disk
├─sda1                      8:1    0    1M  0 part
├─sda2                      8:2    0    2G  0 part /boot
└─sda3                      8:3    0   38G  0 part
  └─ubuntu--vg-ubuntu--lv 252:0    0   19G  0 lvm  /
sdb                         8:16   0   30G  0 disk
sr0                        11:0    1    3G  0 rom
```

2.2.2 All nodes: initialize the sdb data disk

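```bash
# Check the disk (make sure sdb is neither mounted nor partitioned)
lsblk | grep sdb

# Format the whole sdb as XFS (no partition table needed); this DESTROYS all data on sdb
mkfs.xfs -f /dev/sdb

# Create the mount point (a single directory, not minio{1..4})
mkdir -p /data/minio

# Get the bare UUID value (key point: extract only the UUID itself)
UUID_VAL=$(blkid -o value -s UUID /dev/sdb)
# Fallback if blkid -o is not supported:
# UUID_VAL=$(blkid /dev/sdb | grep -oP 'UUID="\K[^"]+')

# Append the fstab entry (UUID= followed directly by the value, no extra prefix)
echo "UUID=$UUID_VAL /data/minio xfs defaults,noatime,nodiratime 0 0" | tee -a /etc/fstab

# Check the entry (there must be exactly one UUID=)
grep /data/minio /etc/fstab
# Expected: UUID=74286751-5ad9-40f4-a369-8d220e9db0db /data/minio xfs defaults,noatime,nodiratime 0 0

# Reload systemd (it generates mount units from fstab) and mount
systemctl daemon-reload
mount -a

# Verify the mount
df -h /data/minio
lsblk | grep sdb

# Create a dedicated MinIO system user and give it the data directory
useradd -r -s /usr/sbin/nologin minio
chown -R minio:minio /data/minio
chmod -R 750 /data/minio   # hardening: no access for other users
```

2.2.3 All nodes: deploy MinIO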

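```bash
# Download the binary from the China mirror; this is the last full-featured community release
wget https://dl.minio.org.cn/server/minio/release/linux-amd64/archive/minio.RELEASE.2025-04-22T22-12-26Z -O /usr/local/bin/minio
chmod +x /usr/local/bin/minio

# Environment file (key point: 4 nodes x 1 drive each). Change the password in production.
# The last variable exposes the Prometheus metrics endpoint without authentication.
# The quoted 'EOF' stops the shell from expanding anything inside the heredoc.
cat <<'EOF' | tee /etc/default/minio
MINIO_ROOT_USER=admin
MINIO_ROOT_PASSWORD=StrongPass123!
MINIO_VOLUMES="http://minio{1...4}.zz520.online:9000/data/minio"
MINIO_OPTS="--console-address :9001 --address :9000"
MINIO_PROMETHEUS_AUTH_TYPE="public"
EOF
chmod 600 /etc/default/minio   # the file holds the root password
```

Configuration notes:

- MINIO_VOLUMES="http://minio{1...4}.zz520.online:9000/data/minio": MinIO expands this into 4 drive paths (4 nodes × 1 drive), which meets the 4-drive minimum for a distributed deployment.
- MINIO_ROOT_USER is the administrator user name and MINIO_ROOT_PASSWORD is the administrator password.
- If the MinIO servers have consecutive IP addresses, you can write the IPs directly. Otherwise, use the consecutive host names configured in /etc/hosts above. For example, 3 nodes at 10.0.0.101-103 with 4 drives each would be: MINIO_VOLUMES="http://10.0.0.10{1...3}:9000/data/minio{1...4}". MinIO ranges use three dots ({1...4}), not the shell's two.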
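MinIO expands the `{x...y}` ranges itself at startup. To preview which endpoints a MINIO_VOLUMES value describes, the hypothetical helper below mimics that expansion (`expand_ellipses` is not part of MinIO). It expands ranges left to right as a cartesian product. It is only a preview aid, since MinIO's own parser also groups drives into erasure sets.

```python
import itertools
import re

# Matches MinIO-style ranges such as {1...4} (three dots); two-dot ranges are left alone
RANGE = re.compile(r"\{(\d+)\.\.\.(\d+)\}")


def expand_ellipses(pattern: str) -> list:
    """Expand every {a...b} range in `pattern`, leftmost range varying slowest."""
    parts = RANGE.split(pattern)  # text, start, end, text, start, end, ..., text
    literals = parts[0::3]
    ranges = [range(int(lo), int(hi) + 1) for lo, hi in zip(parts[1::3], parts[2::3])]
    expanded = []
    for combo in itertools.product(*ranges):
        text = literals[0]
        for value, literal in zip(combo, literals[1:]):
            text += str(value) + literal
        expanded.append(text)
    return expanded


if __name__ == "__main__":
    for endpoint in expand_ellipses("http://minio{1...4}.zz520.online:9000/data/minio"):
        print(endpoint)
```

For this cluster, the expansion gives the four endpoints from `http://minio1.zz520.online:9000/data/minio` through `http://minio4.zz520.online:9000/data/minio`.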

2.2.4 所有节点:Systemd服务

bash

cat <<EOF | sudo tee /lib/systemd/system/minio.service

[Unit]

Description=MinIO Distributed Object Storage

Documentation=https://docs.min.io

Wants=network-online.target

After=network-online.target

AssertFileIsExecutable=/usr/local/bin/minio

[Service]

WorkingDirectory=/usr/local

User=minio

Group=minio

EnvironmentFile=-/etc/default/minio

ExecStartPre=/bin/bash -c "if [ -z \"\${MINIO_VOLUMES}\" ]; then echo 'MINIO_VOLUMES not set'; exit 1; fi"

ExecStart=/usr/local/bin/minio server \$MINIO_OPTS \$MINIO_VOLUMES

Restart=always

RestartSec=10

LimitNOFILE=1048576

TasksMax=infinity

OOMScoreAdjust=-1000

StandardOutput=journal

StandardError=journal

SyslogIdentifier=minio

[Install]

WantedBy=multi-user.target

EOF

# 启动服务(4节点需快速连续启动)

sudo systemctl daemon-reload

sudo systemctl enable --now minio

sudo systemctl status minio # 检查Active: active (running)

#查看是否联通

for i in {1..4}; do

echo -n "minio${i}.zz520.online: "

nc -zv -w 2 minio${i}.zz520.online 9000 2>&1 | grep -q succeeded && echo "OK" || echo "FAILED"

done

minio1.zz520.online: OK

minio2.zz520.online: OK

minio3.zz520.online: OK

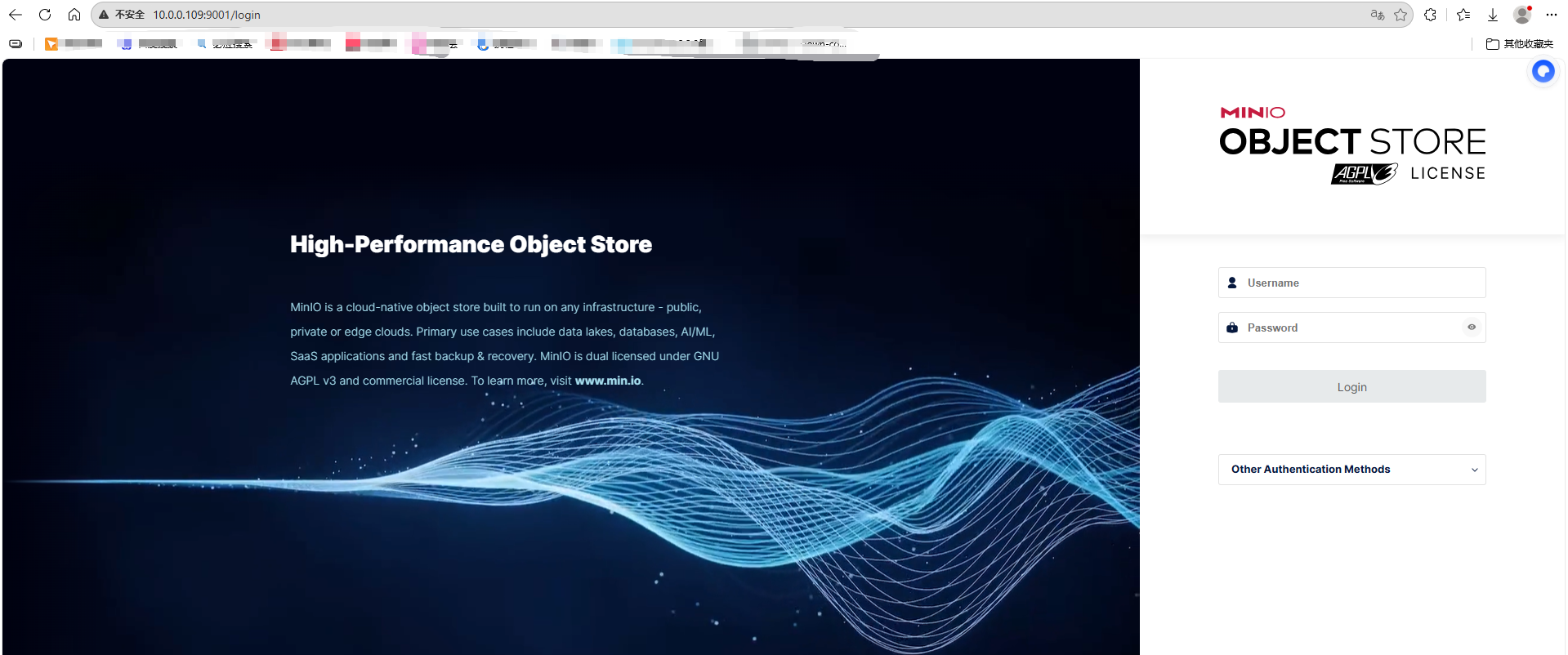

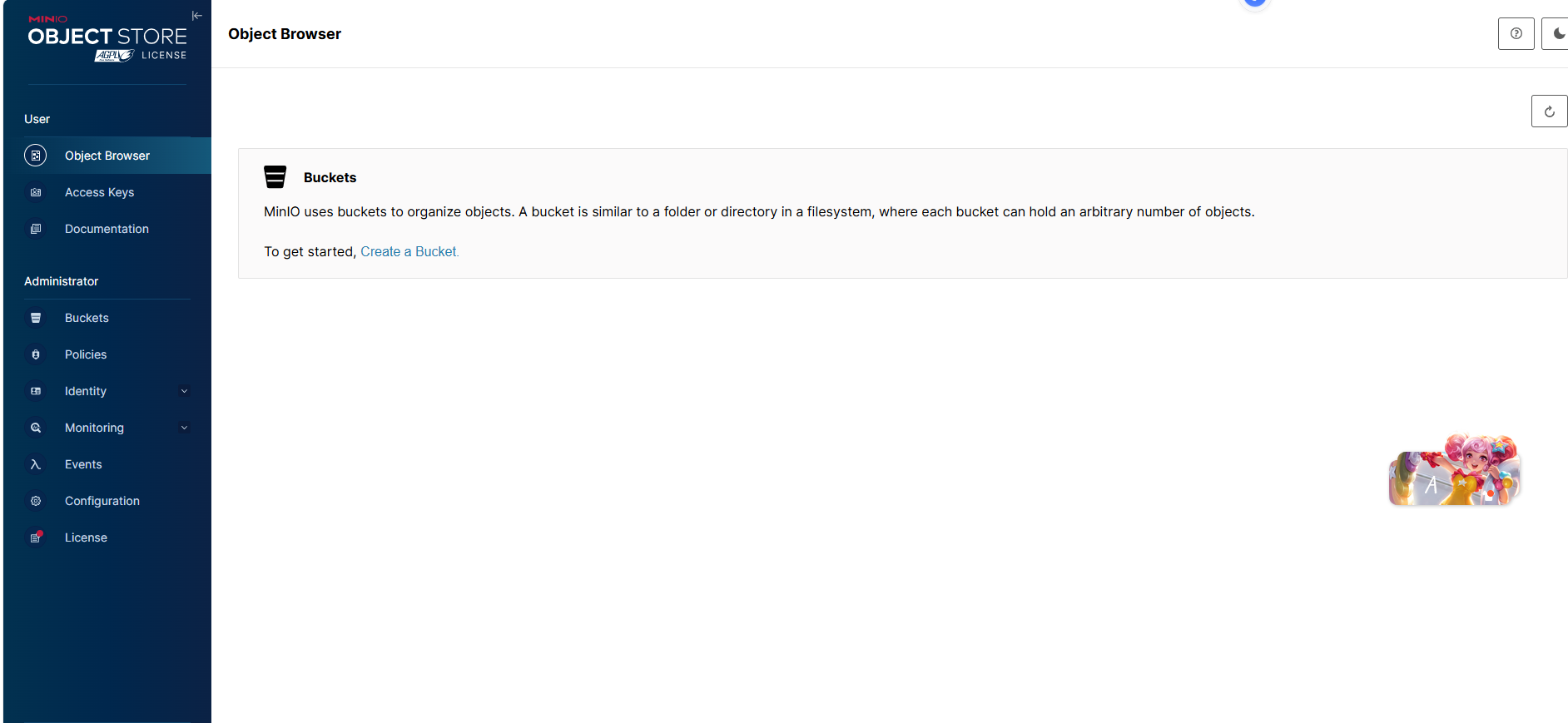

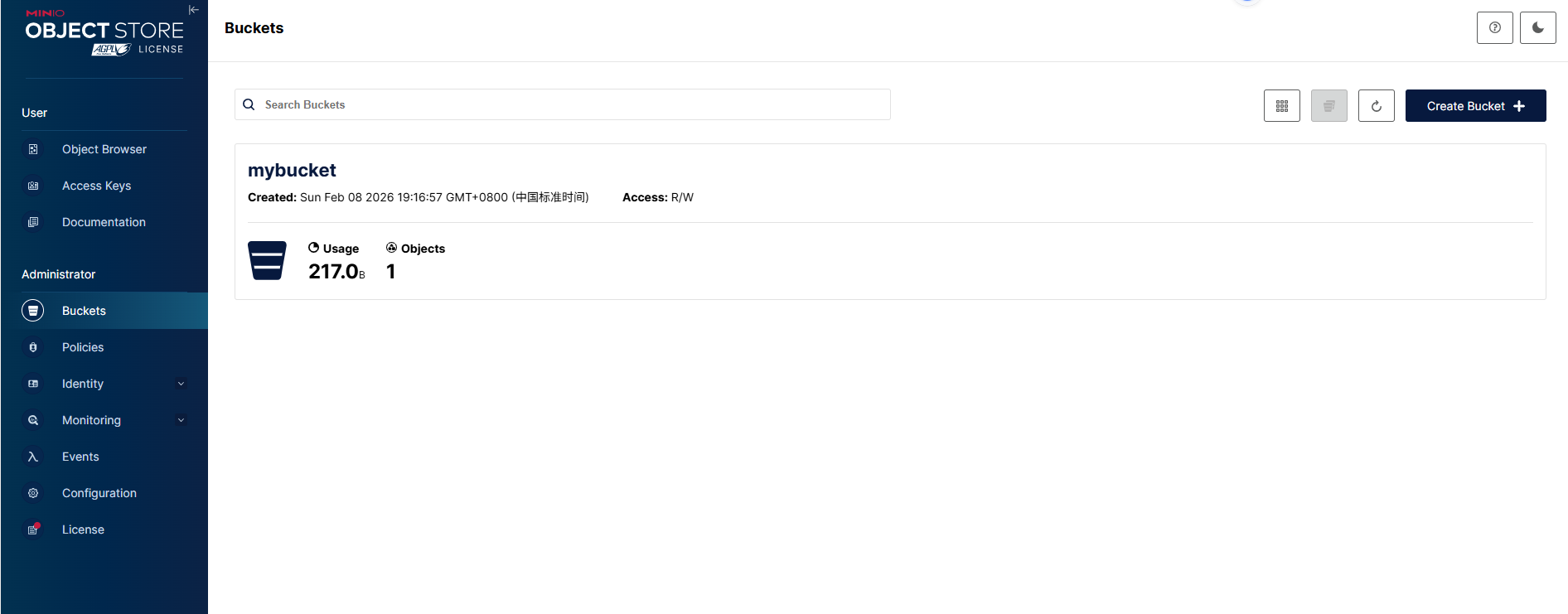

minio4.zz520.online: OK2.25 登录web端测试

分别登录10.0.0.106-109:9001 出现以下界面即配置成功

2.2.6 api测试访问

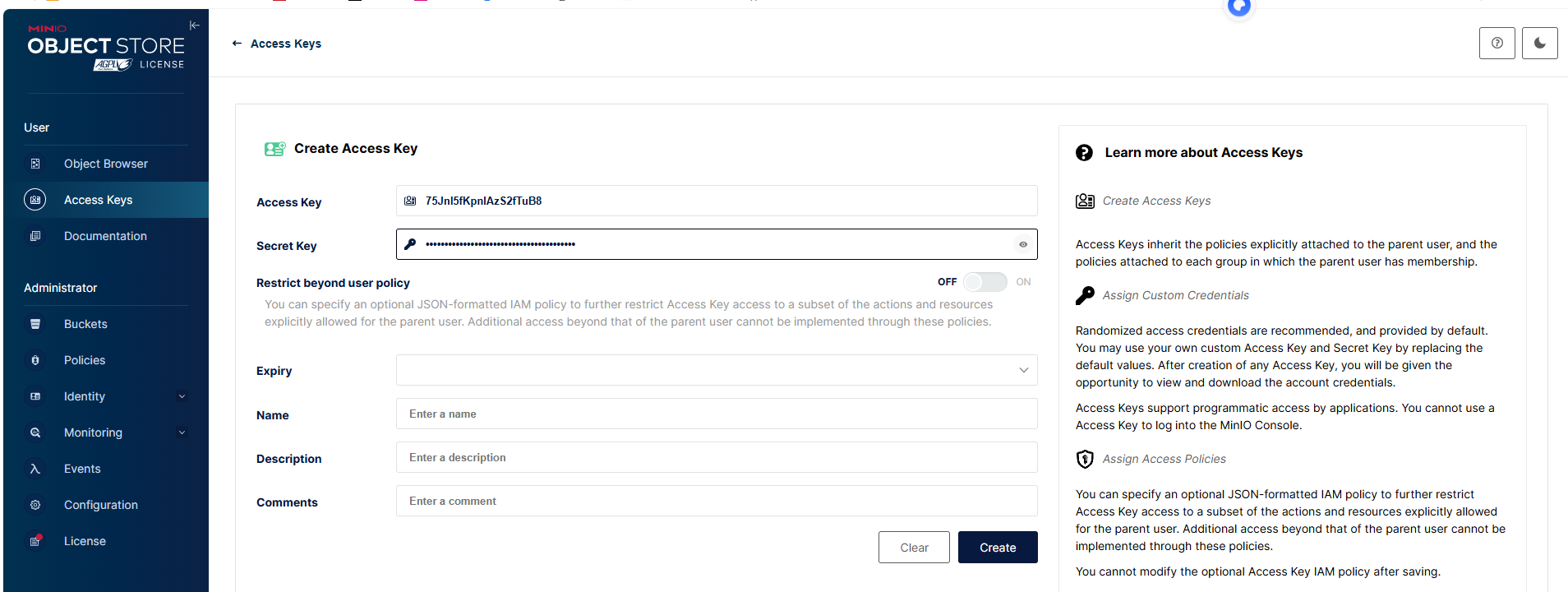

2.2.6.1记住key和密钥

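```
# access key
75Jnl5fKpnlAzS2fTuB8
# secret key
6SlLTaK9n2WMSICg0lQsMK9NDQeccbG7eteylISf
```

2.2.6.2 Install the environment and create the script

```bash
apt update
apt install -y python3-pip   # pip is not installed by default on Ubuntu Server
python3 -m pip config set global.index-url https://mirrors.aliyun.com/pypi/simple/
pip3 install minio --break-system-packages
```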

```bash
cat access_minio.py
```

```python
#!/usr/bin/env python3
"""
MinIO client test script (optimized version)
Flow: create a bucket -> upload a file -> download and verify
"""

import logging
import os
import sys

from minio import Minio
from minio.error import S3Error

# Logging configuration
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s - %(levelname)s - %(message)s",
)
logger = logging.getLogger(__name__)


def get_minio_client():
    """Read the connection settings from environment variables (the defaults are lab credentials)."""
    return Minio(
        endpoint=os.getenv("MINIO_ENDPOINT", "localhost:9000"),
        access_key=os.getenv("MINIO_ACCESS_KEY", "75Jnl5fKpnlAzS2fTuB8"),
        secret_key=os.getenv("MINIO_SECRET_KEY", "6SlLTaK9n2WMSICg0lQsMK9NDQeccbG7eteylISf"),
        secure=os.getenv("MINIO_SECURE", "false").lower() == "true",
    )


def ensure_bucket_exists(client, bucket_name):
    """Make sure the bucket exists (tolerates a concurrent create)."""
    try:
        if not client.bucket_exists(bucket_name):
            client.make_bucket(bucket_name)
            logger.info(f"✓ Bucket '{bucket_name}' created")
        else:
            logger.info(f"✓ Bucket '{bucket_name}' already exists")
    except S3Error as e:
        if e.code in ("BucketAlreadyOwnedByYou", "BucketAlreadyExists"):
            logger.info(f"✓ Bucket '{bucket_name}' already exists (created concurrently)")
        else:
            logger.error(f"✗ Bucket operation failed: {e.code} - {e.message}")
            raise


def upload_file(client, bucket_name, object_name, local_path):
    """Upload a local file and log the resulting ETag and version."""
    if not os.path.exists(local_path):
        raise FileNotFoundError(f"Local file not found: {local_path}")
    try:
        result = client.fput_object(bucket_name, object_name, local_path)
        logger.info(f"✓ Uploaded: {local_path} → {bucket_name}/{object_name}")
        logger.debug(f"  - ETag: {result.etag}")
        logger.debug(f"  - Version ID: {result.version_id}")
    except S3Error as e:
        logger.error(f"✗ Upload failed: {e.code} - {e.message}")
        raise


def download_file(client, bucket_name, object_name, download_path):
    """Check that the object exists, then download it."""
    try:
        # Check that the object exists
        stat = client.stat_object(bucket_name, object_name)
        logger.info(f"✓ Object exists: {object_name} ({stat.size} bytes)")
        # Download
        client.fget_object(bucket_name, object_name, download_path)
        logger.info(f"✓ Downloaded: {bucket_name}/{object_name} → {download_path}")
        # Report the local size so it can be compared with the object size above
        if os.path.exists(download_path):
            logger.info(f"  - Local file size: {os.path.getsize(download_path)} bytes")
    except S3Error as e:
        if e.code == "NoSuchKey":
            logger.error(f"✗ Object not found: {bucket_name}/{object_name}")
        else:
            logger.error(f"✗ Download failed: {e.code} - {e.message}")
        raise


def main():
    bucket_name = "mybucket"
    object_name = "example-object.txt"
    local_file = "/etc/hosts"
    download_path = "./downloaded-example.txt"  # separate name so no existing file is overwritten
    try:
        client = get_minio_client()
        # 1. Make sure the bucket exists
        ensure_bucket_exists(client, bucket_name)
        # 2. Upload the file
        upload_file(client, bucket_name, object_name, local_file)
        # 3. Download and verify
        download_file(client, bucket_name, object_name, download_path)
        logger.info("=" * 50)
        logger.info("✅ MinIO test flow completed successfully")
        return 0
    except (S3Error, FileNotFoundError, OSError) as e:
        logger.error(f"❌ Operation failed: {e}")
        return 1
    except Exception as e:
        logger.exception(f"❌ Unexpected error: {e}")
        return 2


if __name__ == "__main__":
    sys.exit(main())
```

2.2.6.3 Set the environment variables

```bash
export MINIO_ENDPOINT="10.0.0.106:9000"
export MINIO_ACCESS_KEY="75Jnl5fKpnlAzS2fTuB8"
export MINIO_SECRET_KEY="6SlLTaK9n2WMSICg0lQsMK9NDQeccbG7eteylISf"
export MINIO_SECURE="false"
```

2.2.6.4 Run the script

```bash
# Make the script executable (optional)
chmod +x access_minio.py
# Run the script
python3 access_minio.py
2026-02-08 11:16:57,919 - INFO - ✓ Bucket 'mybucket' created
2026-02-08 11:16:57,922 - INFO - ✓ Uploaded: /etc/hosts → mybucket/example-object.txt
2026-02-08 11:16:57,925 - INFO - ✓ Object exists: example-object.txt (217 bytes)
2026-02-08 11:16:57,928 - INFO - ✓ Downloaded: mybucket/example-object.txt → ./downloaded-example.txt
2026-02-08 11:16:57,928 - INFO -   - Local file size: 217 bytes
2026-02-08 11:16:57,928 - INFO - ==================================================
2026-02-08 11:16:57,928 - INFO - ✅ MinIO test flow completed successfully
```

2.2.6.5 Verify in the web console

2.2.6.6 Verify on the server

```
root@minio1:/usr/local/bin# tree /data/minio/
/data/minio/
└── mybucket
    └── example-object.txt
        └── xl.meta
```

2.2.7 Install the mc client

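There are several ways to install mc (binary, packages, and so on). This article uses the generic binary.

2.2.7.1 Binary installation

```bash
# Official download (use one of the two sources, not both)
wget https://dl.min.io/client/mc/release/linux-amd64/mc
# Mirror in China
# wget http://dl.minio.org.cn/client/mc/release/linux-amd64/mc
install mc /usr/local/bin/
chmod +x /usr/local/bin/mc
```

2.2.7.2 Common commands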

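```bash
# Help
mc --help
mc [GLOBALFLAGS] COMMAND --help

# Subcommands are used as "mc <command>", e.g. mc ls
ls        # list files and folders
mb        # make a bucket or a folder
cat       # display file and object contents
pipe      # stream STDIN to an object or file, or to STDOUT
share     # generate URLs for sharing
cp        # copy files and objects
mirror    # mirror buckets and folders
find      # search for files based on parameters
diff      # list differences between two folders or buckets
rm        # remove files and objects
events    # manage object notifications
watch     # watch for file and object events
policy    # manage access policies (superseded by "mc anonymous" in recent mc releases)
config    # manage the mc configuration file
update    # check for software updates
version   # print version information
```

2.2.7.3 View the default connection (alias) information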

```bash
# List the configured aliases
mc alias ls
gcs
  URL       : https://storage.googleapis.com
  AccessKey : YOUR-ACCESS-KEY-HERE
  SecretKey : YOUR-SECRET-KEY-HERE
  API       : S3v2
  Path      : dns
  Src       : /root/.mc/config.json

local
  URL       : http://localhost:9000
  AccessKey :
  SecretKey :
  API       :
  Path      : auto
  Src       : /root/.mc/config.json

play
  URL       : https://play.min.io
  AccessKey : Q3AM3UQ867SPQQA43P2F
  SecretKey : zuf+tfteSlswRu7BJ86wekitnifILbZam1KYY3TG
  API       : S3v4
  Path      : auto
  Src       : /root/.mc/config.json

s3
  URL       : https://s3.amazonaws.com
  AccessKey : YOUR-ACCESS-KEY-HERE
  SecretKey : YOUR-SECRET-KEY-HERE
  API       : S3v4
  Path      : dns
  Src       : /root/.mc/config.json
```

2.2.7.4 Enable mc shell autocompletion

```bash
mc --autocompletion
source ~/.bashrc
```

2.2.7.5 Initial connection setup

```bash
# Initial alias setup; the alias name "minio-server" can be anything
mc alias set minio-server http://minio.zz520.online:9000 75Jnl5fKpnlAzS2fTuB8 6SlLTaK9n2WMSICg0lQsMK9NDQeccbG7eteylISf
Added `minio-server` successfully.
```

2.2.7.6 Upload a file

```bash
# Create a file
echo "测试123" > ./test.txt
cat test.txt
测试123
# Upload the file under a new name (object names may contain UTF-8 characters)
mc cp test.txt minio-server/mybucket/测试.txt
...n/test.txt: 10 B / 10 B ┃▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓┃ 1012 B/s 0s
root@minio1:/usr/local/bin# mc cat minio-server/mybucket/测试.txt
测试123
```

2.2.7.7 Download a file

bash

mc cp minio-server/mybucket/测试.txt ./下载.txt

...测试.txt: 10 B / 10 B ┃▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓┃ 1.36 KiB/s 0sroot@minio1:/usr/local/bin# ls

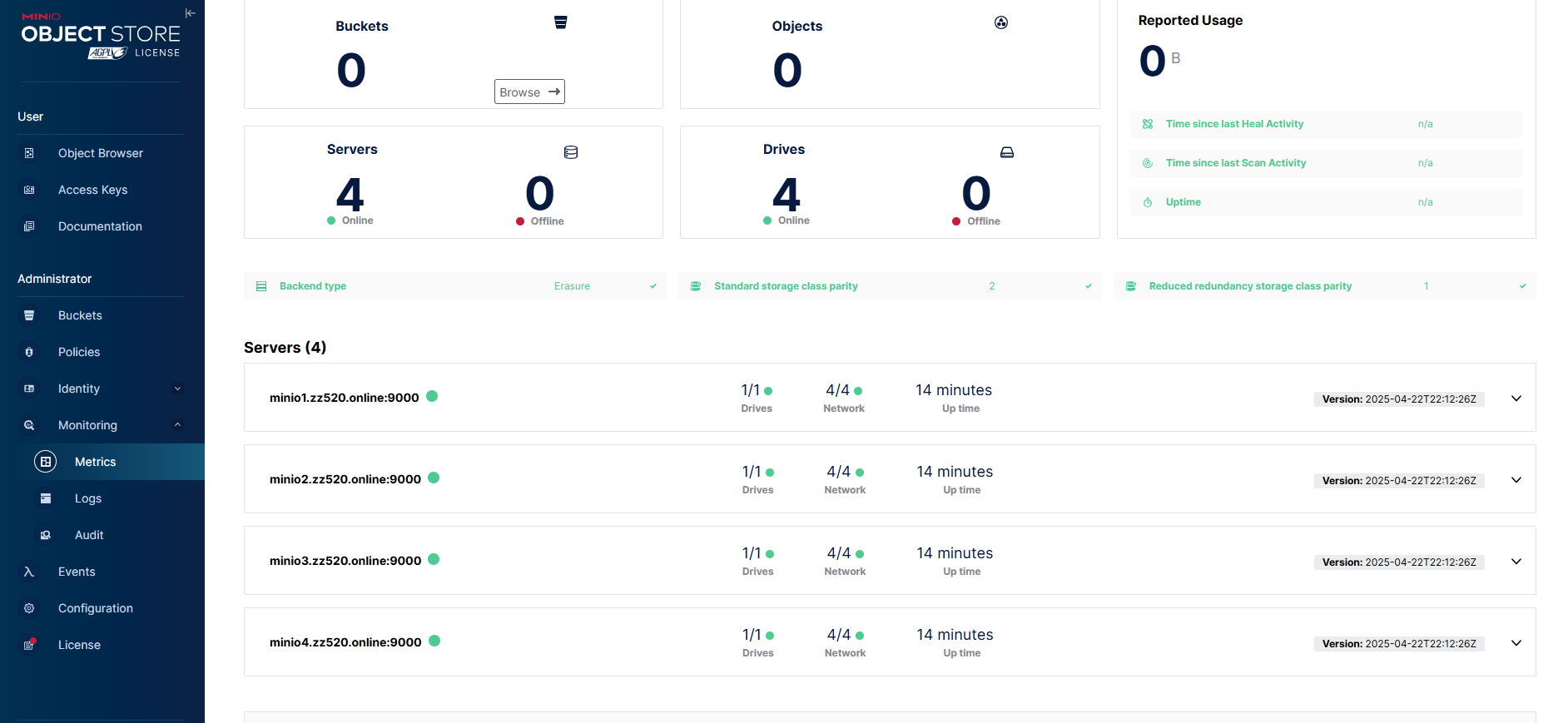

mc minio test.txt 下载.txt2.2.7.8 查看minio空间信息

```bash
mc admin info minio-server/
●  minio1.zz520.online:9000
   Uptime: 2 hours
   Version: 2025-04-22T22:12:26Z
   Network: 4/4 OK
   Drives: 1/1 OK
   Pool: 1

●  minio2.zz520.online:9000
   Uptime: 2 hours
   Version: 2025-04-22T22:12:26Z
   Network: 4/4 OK
   Drives: 1/1 OK
   Pool: 1

●  minio3.zz520.online:9000
   Uptime: 2 hours
   Version: 2025-04-22T22:12:26Z
   Network: 4/4 OK
   Drives: 1/1 OK
   Pool: 1

●  minio4.zz520.online:9000
   Uptime: 2 hours
   Version: 2025-04-22T22:12:26Z
   Network: 4/4 OK
   Drives: 1/1 OK
   Pool: 1

┌──────┬──────────────────────┬─────────────────────┬──────────────┐
│ Pool │ Drives Usage         │ Erasure stripe size │ Erasure sets │
│ 1st  │ 2.0% (total: 60 GiB) │ 4                   │ 1            │
└──────┴──────────────────────┴─────────────────────┴──────────────┘

227 B Used, 1 Bucket, 2 Objects
4 drives online, 0 drives offline, EC:2

# Show disk usage
mc du minio-server/
227B	2 objects
```

2.2.7.9 Create the bucket for Harbor

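```bash
# Create a bucket named harbor-registry (conventional Harbor name)
mc mb minio-server/harbor-registry
# Output: Bucket created successfully `minio-server/harbor-registry`.

# Keep the bucket private (the default) and confirm that no anonymous access is set
mc anonymous get minio-server/harbor-registry
# should report that access is `private`
```

Does Harbor need a public bucket? No. `docker pull` goes through Harbor's registry, which authenticates the client and reads image layers from MinIO using the access key and secret key configured under `storage_service.s3` in harbor.yml. When the registry's redirect feature is enabled, clients are sent pre-signed MinIO URLs. These URLs are signed with those same credentials and need no anonymous access, but the clients must be able to reach the MinIO endpoint. Making the bucket public would let anyone who can reach MinIO download every image layer, bypassing Harbor's authentication.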
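Harbor does not have to use the MinIO root credentials. One option is a dedicated MinIO user limited to this bucket. The sketch below generates such an IAM-style policy. Its action list follows the permissions that the Docker Distribution S3 storage-driver documentation asks for. The policy name `harbor-rw`, the user `harbor` and the output file name are hypothetical.

```python
import json


def harbor_bucket_policy(bucket: str) -> dict:
    """Least-privilege policy sketch for a registry that stores blobs in `bucket`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # bucket-level operations
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetBucketLocation",
                    "s3:ListBucketMultipartUploads",
                ],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {   # object-level operations
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject",
                    "s3:ListMultipartUploadParts",
                    "s3:AbortMultipartUpload",
                ],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(harbor_bucket_policy("harbor-registry"), indent=2))
```

You could save the output as `harbor-rw.json` and load it with `mc admin policy create minio-server harbor-rw harbor-rw.json`. Then create the user with `mc admin user add minio-server harbor '<harbor-secret>'` and attach the policy with `mc admin policy attach minio-server harbor-rw --user harbor`. These commands use the syntax of recent mc releases. The new user's keys then go into harbor.yml instead of the root keys.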

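2.2.8 Apply for an SSL certificate

2.2.8.1 Apply for a wildcard certificate

Reference: https://github.com/acmesh-official/acme.sh/wiki/%E8%AF%B4%E6%98%8E

DNS providers in China supported by acme.sh include Alibaba Cloud and Tencent Cloud.

Using Tencent Cloud as an example:

- Log in to the Tencent Cloud console
- Go to Cloud Access Management (CAM)
- Create a sub-user with DNS management permissions, or obtain API keys
- Alternatively, obtain an API key in the DNSPod console

Note: the DP_Id/DP_Key variables used below belong to acme.sh's dns_dp plugin, which expects a DNSPod token (ID + token) created in the DNSPod console. Tencent Cloud CAM SecretId/SecretKey credentials are used with the dns_tencent plugin (Tencent_SecretId/Tencent_SecretKey) instead.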

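2.2.8.2 Configure acme.sh with Tencent Cloud DNSPod

Set the environment variables in the terminal (replace the placeholders with your actual keys):

```bash
# Tencent Cloud DNSPod API credentials
export DP_Id="YOUR_DNSPOD_ID"
export DP_Key="YOUR_DNSPOD_API_KEY"
# Email for certificate notices and account registration
export ACCOUNT_EMAIL="785746506@qq.com"
# Install acme.sh (register with the same email)
curl https://get.acme.sh | sh -s email="$ACCOUNT_EMAIL"
# Reload the shell config once the install finishes
source ~/.bashrc
```

2.2.8.3 Apply for the wildcard certificate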

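Run the following command to request the certificate:

```bash
# Quote the wildcard so the shell does not try to glob it
acme.sh --issue --dns dns_dp -d zz520.online -d '*.zz520.online'
```

acme.sh will:

- Automatically add TXT records under your domain for validation
- Wait for the DNS records to take effect
- Remove the TXT records once validation completes
- Download the certificate and save it to ~/.acme.sh/zz520.online_ecc/ (an ECC key is used by default)

The issuance log looks like this: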

bash

root@DESKTOP-B1I6R18:~# export DP_Id="xxx"

root@DESKTOP-B1I6R18:~# export DP_Key="xxxx"

root@DESKTOP-B1I6R18:~# export ACCOUNT_EMAIL="785746506@qq.com"

root@DESKTOP-B1I6R18:~# vim ~/.acme.sh/account.conf

root@DESKTOP-B1I6R18:~# acme.sh --issue --dns dns_dp -d zz520.online -d *.zz520.online

[Fri Feb 6 17:53:36 CST 2026] Using CA: https://acme.zerossl.com/v2/DV90

[Fri Feb 6 17:53:36 CST 2026] Account key creation OK.

[Fri Feb 6 17:53:36 CST 2026] No EAB credentials found for ZeroSSL, let's obtain them

[Fri Feb 6 17:53:38 CST 2026] Registering account: https://acme.zerossl.com/v2/DV90

[Fri Feb 6 17:53:41 CST 2026] Registered

[Fri Feb 6 17:53:42 CST 2026] ACCOUNT_THUMBPRINT='UQZTR2PEKOThczP3lycW0ZH77fZRUfIyuM9C9tWatJs'

[Fri Feb 6 17:53:42 CST 2026] Creating domain key

[Fri Feb 6 17:53:42 CST 2026] The domain key is here: /root/.acme.sh/zz520.online_ecc/zz520.online.key

/root/.acme.sh/acme.sh: line 4399: [: [: integer expression expected

[Fri Feb 6 17:53:42 CST 2026] Multi domain='DNS:zz520.online,DNS:*.zz520.online'

/root/.acme.sh/acme.sh: line 4399: [: [: integer expression expected

[Fri Feb 6 17:53:52 CST 2026] Getting webroot for domain='zz520.online'

[Fri Feb 6 17:53:52 CST 2026] Getting webroot for domain='*.zz520.online'

[Fri Feb 6 17:53:52 CST 2026] Adding TXT value: XMjgWu294SKzrI_SdUnt8L5jso3NtOtZN_67Bu6YBxE for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:53:53 CST 2026] Adding record

[Fri Feb 6 17:53:54 CST 2026] The TXT record has been successfully added.

[Fri Feb 6 17:53:54 CST 2026] Adding TXT value: XeO5kf5A6KYKgDYT5lcdo5qdRxiJ2p28mmaSgH35ajU for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:53:54 CST 2026] Adding record

[Fri Feb 6 17:53:55 CST 2026] The TXT record has been successfully added.

[Fri Feb 6 17:53:55 CST 2026] Let's check each DNS record now. Sleeping for 20 seconds first.

[Fri Feb 6 17:54:16 CST 2026] You can use '--dnssleep' to disable public dns checks.

[Fri Feb 6 17:54:16 CST 2026] See: https://github.com/acmesh-official/acme.sh/wiki/dnscheck

[Fri Feb 6 17:54:16 CST 2026] Checking zz520.online for _acme-challenge.zz520.online

[Fri Feb 6 17:54:17 CST 2026] Please refer to https://curl.haxx.se/libcurl/c/libcurl-errors.html for error code: 35

[Fri Feb 6 17:54:18 CST 2026] Success for domain zz520.online '_acme-challenge.zz520.online'.

[Fri Feb 6 17:54:18 CST 2026] Checking zz520.online for _acme-challenge.zz520.online

[Fri Feb 6 17:54:18 CST 2026] Success for domain zz520.online '_acme-challenge.zz520.online'.

[Fri Feb 6 17:54:18 CST 2026] All checks succeeded

[Fri Feb 6 17:54:18 CST 2026] Verifying: zz520.online

[Fri Feb 6 17:54:19 CST 2026] Processing. The CA is processing your order, please wait. (1/30)

[Fri Feb 6 17:54:24 CST 2026] Success

[Fri Feb 6 17:54:24 CST 2026] Verifying: *.zz520.online

[Fri Feb 6 17:54:26 CST 2026] Processing. The CA is processing your order, please wait. (1/30)

[Fri Feb 6 17:54:31 CST 2026] Success

[Fri Feb 6 17:54:31 CST 2026] Removing DNS records.

[Fri Feb 6 17:54:31 CST 2026] Removing txt: XMjgWu294SKzrI_SdUnt8L5jso3NtOtZN_67Bu6YBxE for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:54:33 CST 2026] Successfully removed

[Fri Feb 6 17:54:33 CST 2026] Removing txt: XeO5kf5A6KYKgDYT5lcdo5qdRxiJ2p28mmaSgH35ajU for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:54:34 CST 2026] Successfully removed

[Fri Feb 6 17:54:34 CST 2026] Verification finished, beginning signing.

[Fri Feb 6 17:54:34 CST 2026] Let's finalize the order.

[Fri Feb 6 17:54:34 CST 2026] Le_OrderFinalize='https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ/finalize'

[Fri Feb 6 17:54:35 CST 2026] Order status is 'processing', let's sleep and retry.

[Fri Feb 6 17:54:35 CST 2026] Sleeping for 15 seconds then retrying

[Fri Feb 6 17:54:51 CST 2026] Polling order status: https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ

[Fri Feb 6 17:54:53 CST 2026] Downloading cert.

[Fri Feb 6 17:54:53 CST 2026] Le_LinkCert='https://acme.zerossl.com/v2/DV90/cert/6Zw4Udzab1a7QWDj6zG63Q'

[Fri Feb 6 17:54:54 CST 2026] Cert success.

-----BEGIN CERTIFICATE-----

MIIEATCCA4egAwIBAgIQdSzFuvbgEP64sNHnbfOgCDAKBggqhkjOPQQDAzBLMQsw

CQYDVQQGEwJBVDEQMA4GA1UEChMHWmVyb1NTTDEqMCgGA1UEAxMhWmVyb1NTTCBF

Q0MgRG9tYWluIFNlY3VyZSBTaXRlIENBMB4XDTI2MDIwNjAwMDAwMFoXDTI2MDUw

NzIzNTk1OVowFzEVMBMGA1UEAxMMeno1MjAub25saW5lMFkwEwYHKoZIzj0CAQYI

KoZIzj0DAQcDQgAEBunJFeocp+x5IOMEp316FpEcG2hABYm/yDalISIjZpOlGz9Z

rmONyAfpM7t24/Br/Pzo+6mCmFkbet8kOyTaY6OCAn8wggJ7MB8GA1UdIwQYMBaA

FA9r5kvOOUeu9n6QHnnwMJGSyF+jMB0GA1UdDgQWBBRTK/StqWI3dqvlNmN2gXnb

T6u6ozAOBgNVHQ8BAf8EBAMCB4AwDAYDVR0TAQH/BAIwADATBgNVHSUEDDAKBggr

BgEFBQcDATBJBgNVHSAEQjBAMDQGCysGAQQBsjEBAgJOMCUwIwYIKwYBBQUHAgEW

F2h0dHBzOi8vc2VjdGlnby5jb20vQ1BTMAgGBmeBDAECATCBiAYIKwYBBQUHAQEE

fDB6MEsGCCsGAQUFBzAChj9odHRwOi8vemVyb3NzbC5jcnQuc2VjdGlnby5jb20v

WmVyb1NTTEVDQ0RvbWFpblNlY3VyZVNpdGVDQS5jcnQwKwYIKwYBBQUHMAGGH2h0

dHA6Ly96ZXJvc3NsLm9jc3Auc2VjdGlnby5jb20wggEFBgorBgEEAdZ5AgQCBIH2

BIHzAPEAdgAOV5S8866pPjMbLJkHs/eQ35vCPXEyJd0hqSWsYcVOIQAAAZwyX/zN

AAAEAwBHMEUCIB9aLxzngwRjTo/2Xsruc6lC7FYycAAlnmYJuyeZW6TdAiEAvgDE

st356AEOx6LjLaUkYlI2/Ru/3aK29S7pMdR5KgcAdwAWgy2r8KklDw/wOqVF/8i/

yCPQh0v2BCkn+OcfMxP1+gAAAZwyX/zLAAAEAwBIMEYCIQDzpIcmz/3VkR9Vf5Lx

I60RmuvJRsBdMck7Eiz8Lb39AAIhAIgMK7xVP6YuzBWTdX3DeX8utb+6eVv1GCmf

zqnvuo2XMCcGA1UdEQQgMB6CDHp6NTIwLm9ubGluZYIOKi56ejUyMC5vbmxpbmUw

CgYIKoZIzj0EAwMDaAAwZQIwOFd0Ew4RoC2BAesTLKtc1ogdVWJUEdfXu0t1ZvnK

p8GTfRFhYjNWa2eRVRq8DblIAjEAtixOAZ30nRzR42DNoG5dTPHRImApIfJxJ3KT

ewB105mgq5IbIE68JG716nrCp+2O

-----END CERTIFICATE-----

[Fri Feb 6 17:54:54 CST 2026] Your cert is in: /root/.acme.sh/zz520.online_ecc/zz520.online.cer

[Fri Feb 6 17:54:54 CST 2026] Your cert key is in: /root/.acme.sh/zz520.online_ecc/zz520.online.key

[Fri Feb 6 17:54:54 CST 2026] The intermediate CA cert is in: /root/.acme.sh/zz520.online_ecc/ca.cer

[Fri Feb 6 17:54:54 CST 2026] And the full-chain cert is in: /root/.acme.sh/zz520.online_ecc/fullchain.cer

root@DESKTOP-B1I6R18:~# acme.sh --info -d zz520.online

[Fri Feb 6 18:09:34 CST 2026] The domain 'zz520.online' seems to already have an ECC cert, let's use it.

DOMAIN_CONF=/root/.acme.sh/zz520.online_ecc/zz520.online.conf

Le_Domain=zz520.online

Le_Alt=*.zz520.online

Le_Webroot=dns_dp

Le_PreHook=

Le_PostHook=

Le_RenewHook=

Le_API=https://acme.zerossl.com/v2/DV90

Le_Keylength=ec-256

Le_OrderFinalize=https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ/finalize

Le_LinkOrder=https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ

Le_LinkCert=https://acme.zerossl.com/v2/DV90/cert/6Zw4Udzab1a7QWDj6zG63Q

Le_CertCreateTime=1770371694

Le_CertCreateTimeStr=2026-02-06T09:54:54Z

Le_NextRenewTimeStr=2026-03-07T09:54:54Z

Le_NextRenewTime=1772877294

2.2.8.4 Install the certificate

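HAProxy terminates TLS here, so run the following on the LB node. Install HAProxy first as in 2.2.9.1, because otherwise the haproxy user does not exist yet.

```bash
# Create the certificate directory
mkdir -p /etc/haproxy/certs

# Concatenate the private key and the full certificate chain into one PEM for HAProxy
cat /root/.acme.sh/zz520.online_ecc/zz520.online.key \
    /root/.acme.sh/zz520.online_ecc/fullchain.cer \
    > /etc/haproxy/certs/zz520.online.pem

# The PEM contains the private key, so restrict it to its owner
chmod 600 /etc/haproxy/certs/zz520.online.pem
chown haproxy:haproxy /etc/haproxy/certs/zz520.online.pem   # adjust if HAProxy runs as another user
```

2.2.8.5 Configure automatic renewal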

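acme.sh renews certificates automatically through the cron job it installs. The --info output above shows the next renewal scheduled for 2026-03-07. After every renewal, however, the PEM must be rebuilt and HAProxy reloaded. Running the install command once makes this part of the persistent renewal configuration:

```bash
acme.sh --install-cert -d zz520.online -d '*.zz520.online' --ecc \
  --key-file /etc/haproxy/certs/zz520.online.key \
  --fullchain-file /etc/haproxy/certs/zz520.online.crt \
  --reloadcmd "cat /etc/haproxy/certs/zz520.online.key /etc/haproxy/certs/zz520.online.crt > /etc/haproxy/certs/zz520.online.pem && chmod 600 /etc/haproxy/certs/zz520.online.pem && systemctl reload haproxy"
```

2.2.8.6 Copy the certificate to 10.0.0.105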

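```bash
# Make sure the target directory exists, then copy the combined PEM
ssh root@10.0.0.105 mkdir -p /etc/haproxy/certs
scp /etc/haproxy/certs/zz520.online.pem root@10.0.0.105:/etc/haproxy/certs/
```

The renewal hook only rebuilds the PEM on the node where acme.sh runs. Repeat this copy, and reload HAProxy on 10.0.0.105, after every renewal.

2.2.9 Configure high availability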

2.2.9.1 Install HAProxy and Keepalived (identical on both LBs; run on 10.0.0.104 and 10.0.0.105)

```bash
# 1. Install the packages
apt update
apt install -y haproxy keepalived

# 2. Enable ip_nonlocal_bind (key step: lets HAProxy bind a VIP that this node does not currently hold)
cat > /etc/sysctl.d/99-haproxy.conf <<EOF
# Allow HAProxy to bind non-local IPs (VIPs)
net.ipv4.ip_nonlocal_bind = 1
EOF
sysctl -p /etc/sysctl.d/99-haproxy.conf

# 3. Verify that the setting is active
sysctl net.ipv4.ip_nonlocal_bind
# Expected output: net.ipv4.ip_nonlocal_bind = 1

# 4. Resource limits
mkdir -p /etc/systemd/system/haproxy.service.d
cat > /etc/systemd/system/haproxy.service.d/override.conf <<EOF
[Service]
CPUQuota=30%
MemoryHigh=1.3G
MemoryMax=1.5G
LimitNOFILE=100000
EOF
systemctl daemon-reload
systemctl restart haproxy
```

2.2.9.2 Edit the HAProxy configuration file

```bash
cat /etc/haproxy/haproxy.cfg
#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon
    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------
# Common defaults that all the 'listen' and 'backend' sections use
#---------------------------------------------------------------------
defaults
    mode                    http
    log                     global
    option                  httplog
    option                  dontlognull
    option                  http-server-close
    option                  forwardfor except 127.0.0.0/8
    option                  redispatch
    retries                 1
    timeout http-request    10s
    timeout queue           20s
    timeout connect         5s
    timeout client          20s
    timeout server          20s
    timeout http-keep-alive 10s
    timeout check           10s
    maxconn                 3000

#---------------------------------------------------------------------
# ========== MinIO API load balancing (VIP 10.0.0.112:9000) ==========
# Layer-4 TCP mode: S3 is HTTP-based, but TCP passthrough streams
# large objects without HTTP-layer processing
#---------------------------------------------------------------------
listen minio-api-9000
    bind 10.0.0.112:9000
    mode tcp
    option tcplog
    balance leastconn          # prefer the node with the fewest connections; suits long-lived connections
    timeout client 1h          # large MinIO transfers need long timeouts
    timeout server 1h
    timeout connect 5s
    server minio-01 10.0.0.106:9000 check inter 2s fall 3 rise 5 weight 10
    server minio-02 10.0.0.107:9000 check inter 2s fall 3 rise 5 weight 10
    server minio-03 10.0.0.108:9000 check inter 2s fall 3 rise 5 weight 10
    server minio-04 10.0.0.109:9000 check inter 2s fall 3 rise 5 weight 10
    # Append more nodes here if needed
    # server minio-05 <new-node-ip>:9000 check inter 2s fall 3 rise 5 weight 10

#---------------------------------------------------------------------
# ========== MinIO Console load balancing (VIP 10.0.0.112:9001) ==========
# Layer-7 HTTP mode: the console needs session persistence
#---------------------------------------------------------------------
listen minio-console-9001
    bind 10.0.0.112:9001
    mode http
    option httplog
    balance source             # source-IP stickiness so console sessions stay on one node
    timeout client 30m
    timeout server 30m
    server minio-01 10.0.0.106:9001 check inter 2s fall 3 rise 5
    server minio-02 10.0.0.107:9001 check inter 2s fall 3 rise 5
    server minio-03 10.0.0.108:9001 check inter 2s fall 3 rise 5
    server minio-04 10.0.0.109:9001 check inter 2s fall 3 rise 5
    # Append more nodes here if needed
    # server minio-05 <new-node-ip>:9001 check inter 2s fall 3 rise 5

#---------------------------------------------------------------------
# ========== MinIO HTTPS (VIP 10.0.0.112:9443) ==========
# TLS terminates at HAProxy; the backend reaches the MinIO Console over plain HTTP
#---------------------------------------------------------------------
listen minio-https-9443
    bind 10.0.0.112:9443 ssl crt /etc/haproxy/certs/zz520.online.pem alpn h2,http/1.1
    mode http
    option httplog
    balance source             # session persistence here as well
    timeout client 1h
    timeout server 1h
    # Key headers: tell MinIO it is being accessed over HTTPS, otherwise page assets may fail to load
    http-request set-header X-Forwarded-Proto https
    http-request set-header X-Forwarded-Port 9443
    # Backend MinIO nodes (Console port 9001)
    server minio-01 10.0.0.106:9001 check inter 2s fall 3 rise 5
    server minio-02 10.0.0.107:9001 check inter 2s fall 3 rise 5
    server minio-03 10.0.0.108:9001 check inter 2s fall 3 rise 5
    server minio-04 10.0.0.109:9001 check inter 2s fall 3 rise 5
    # Append more nodes here if needed
    # server minio-05 <new-node-ip>:9001 check inter 2s fall 3 rise 5

#---------------------------------------------------------------------
# ========== HAProxy statistics page ==========
#---------------------------------------------------------------------
listen stats
    bind 10.0.0.110:8404       # K8s API VIP: only answers on the node currently holding it
    mode http
    stats enable
    stats uri /haproxy-stats
    stats refresh 10s
    stats auth admin:123456    # be sure to change this to a strong password
    # Allow access from the ops network only
    acl is_ops_net src 10.0.0.0/24
    http-request allow if is_ops_net
    http-request deny
```

Notes:

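Pre-deployment checklist:

- Certificate path: make sure /etc/haproxy/certs/zz520.online.pem exists and is well-formed.
- Node list: check the server lines and make sure the IP addresses (10.0.0.106, 10.0.0.107, and so on) match your actual MinIO nodes.
- VIPs: the MinIO sections bind 10.0.0.112, a third VIP besides 10.0.0.110/111. Keepalived must manage it as well (for example with its own VRRP instance), otherwise nothing answers on that address.
- Firewall: make sure the system firewall (e.g. ufw or firewalld) allows ports 9000, 9001, 9443 and 8404.
- Configuration test: before restarting the service, it is strongly recommended to check the syntax:

```bash
haproxy -f /etc/haproxy/haproxy.cfg -c
```

Run systemctl restart haproxy only after it reports Configuration file is valid.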
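Besides the syntax check, it helps to confirm that the backends answer before clients are pointed at the VIP. MinIO exposes unauthenticated health endpoints: /minio/health/live reports node liveness, and /minio/health/cluster reports whether write quorum is available. Below is a minimal standard-library sketch. The script name `minio_health.py` and its helpers are hypothetical.

```python
import sys
import urllib.error
import urllib.request


def probe(url: str, timeout: float = 2.0) -> bool:
    """Return True if `url` answers with HTTP 200 within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # URLError covers refused connections and HTTPError (non-2xx answers)
        return False


def check_nodes(nodes, port: int = 9000) -> dict:
    """Probe MinIO's liveness endpoint on each node; returns {node: bool}."""
    return {n: probe(f"http://{n}:{port}/minio/health/live") for n in nodes}


if __name__ == "__main__":
    targets = sys.argv[1:]
    if not targets:
        print("usage: minio_health.py NODE [NODE ...]")
    for node, ok in check_nodes(targets).items():
        print(f"{node}: {'OK' if ok else 'FAILED'}")
```

Usage: `python3 minio_health.py 10.0.0.106 10.0.0.107 10.0.0.108 10.0.0.109`. The same endpoint could also back HAProxy's own checks (for example `option httpchk GET /minio/health/live`) instead of plain TCP checks.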

3.部署pgsql集群

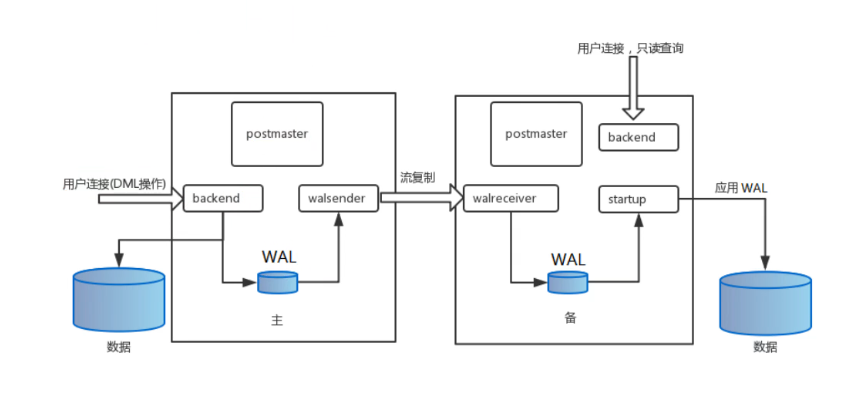

3.1基于流复制部署pgsql 集群

3.1.1流复制类似于MySQL的的同步机制,支持以下两种同步机制

异步流复制

同步流复制

在实际生产环境中,建议需要根据环境的实际情况来选择同步或异步模式,同步模式需要等待备库的写盘才能返回成功,如此一来,在主备库复制正常的情况下,能够保证备库的数据不会丢失,但是带来的一个负面问题,一旦备库宕机,主库就会挂起而无法进行正常操作,即在同步模式中,备库对主库的有很大的影响,而异步模式就不会。因此,在生产环境中,大多会选择异步复制模式,而非同步模式.
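The blocking behaviour comes down to a single rule. In synchronous mode, a commit returns only after the required number of standbys have confirmed it. The sketch below is a toy model of that rule, not PostgreSQL code. It also shows why, with pg-01..03 as one primary and two standbys, a quorum setting such as `synchronous_standby_names = 'ANY 1 (pg02, pg03)'` keeps commits flowing when one standby fails. That syntax is available since PostgreSQL 10, and the standby names here are hypothetical.

```python
def commit_returns(mode: str, healthy_standbys: int, num_sync: int = 1) -> bool:
    """Toy model: does COMMIT return on the primary, or wait indefinitely?

    "async": the primary never waits for standbys.
    "sync":  the primary waits until `num_sync` standbys confirm the WAL flush,
             so it blocks while fewer than `num_sync` standbys are healthy.
    """
    if mode == "async":
        return True
    if mode == "sync":
        return healthy_standbys >= num_sync
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    # One standby configured and it is down: sync mode blocks, async does not
    print("async, 0 healthy:", commit_returns("async", 0))
    print("sync,  0 healthy:", commit_returns("sync", 0))
    # ANY 1 of two standbys, one of them down: the other still confirms
    print("sync ANY 1, 1 of 2 healthy:", commit_returns("sync", 1, num_sync=1))
```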

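3.1.2 Characteristics of streaming replication

- Very low latency, and large transactions are not a problem
- Resumes from where it left off after an interruption
- Supports multiple replicas
- Simple to configure
- The standby is a physically identical copy of the primary and can serve read-only queries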

4. Deploy the Redis cluster

5. Deploy the Harbor cluster

6. Deploy K8s

7. Deploy HAProxy + Keepalived (run on 10.0.0.104/105)

3.1 Install packages and configure resource limits

```bash
# 1. Install the packages
apt update
apt install -y haproxy keepalived

# 2. Enable ip_nonlocal_bind (key step: lets HAProxy bind a VIP that this node does not currently hold)
cat > /etc/sysctl.d/99-haproxy.conf <<EOF
# Allow HAProxy to bind non-local IPs (VIPs)
net.ipv4.ip_nonlocal_bind = 1
EOF
sysctl -p /etc/sysctl.d/99-haproxy.conf

# 3. Verify that the setting is active
sysctl net.ipv4.ip_nonlocal_bind
# Expected output: net.ipv4.ip_nonlocal_bind = 1

# 4. Resource limits
mkdir -p /etc/systemd/system/haproxy.service.d
cat > /etc/systemd/system/haproxy.service.d/override.conf <<EOF
[Service]
CPUQuota=30%
MemoryHigh=1.3G
MemoryMax=1.5G
LimitNOFILE=100000
EOF
systemctl daemon-reload
systemctl restart haproxy
```

3.2 Apply for the Harbor certificate

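3.2.1 Apply for a wildcard certificate

Reference: https://github.com/acmesh-official/acme.sh/wiki/%E8%AF%B4%E6%98%8E

DNS providers in China supported by acme.sh include Alibaba Cloud and Tencent Cloud.

Using Tencent Cloud as an example:

- Log in to the Tencent Cloud console
- Go to Cloud Access Management (CAM)
- Create a sub-user with DNS management permissions, or obtain API keys
- Alternatively, obtain an API key in the DNSPod console

Note: the DP_Id/DP_Key variables used below belong to acme.sh's dns_dp plugin, which expects a DNSPod token (ID + token) created in the DNSPod console. Tencent Cloud CAM SecretId/SecretKey credentials are used with the dns_tencent plugin (Tencent_SecretId/Tencent_SecretKey) instead.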

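3.2.2 Configure acme.sh with Tencent Cloud DNSPod

Set the environment variables in the terminal (replace the placeholders with your actual keys):

```bash
# Tencent Cloud DNSPod API credentials
export DP_Id="YOUR_DNSPOD_ID"
export DP_Key="YOUR_DNSPOD_API_KEY"
# Email for certificate notices and account registration
export ACCOUNT_EMAIL="785746506@qq.com"
# Install acme.sh (register with the same email)
curl https://get.acme.sh | sh -s email="$ACCOUNT_EMAIL"
# Reload the shell config once the install finishes
source ~/.bashrc
```

3.2.3 Apply for the wildcard certificate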

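Run the following command to request the certificate. Quote the wildcard name so the shell does not try to expand the *:

bash

acme.sh --issue --dns dns_dp -d zz520.online -d '*.zz520.online'

acme.sh will: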

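- Automatically add a TXT record under your domain for validation

- Wait for the DNS record to take effect

- Delete the TXT record automatically once validation is complete

- Download the certificate and save it under ~/.acme.sh/zz520.online_ecc/ (the _ecc suffix appears because an ECC key is issued, as the log below shows)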
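In DNS-01 validation, the TXT record always sits at `_acme-challenge.` followed by the base domain. For a wildcard name, the leading `*.` is dropped first (RFC 8555). That is why, in the log below, the TXT values for both `zz520.online` and `*.zz520.online` are added under the same name, `_acme-challenge.zz520.online`.

A minimal sketch of the mapping, with a hypothetical function name:

```bash
# Hypothetical helper: TXT record name used for the ACME DNS-01 challenge.
acme_challenge_name() {
    local domain="${1#\*.}"   # strip a leading "*." from wildcard names
    echo "_acme-challenge.${domain}"
}
```

While a challenge is pending, you could look the record up with, for example, `dig +short TXT "$(acme_challenge_name '*.zz520.online')"`.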

The full issuance session looks like this:

bash

root@DESKTOP-B1I6R18:~# export DP_Id="xxx"

root@DESKTOP-B1I6R18:~# export DP_Key="xxxx"

root@DESKTOP-B1I6R18:~# export ACCOUNT_EMAIL="785746506@qq.com"

root@DESKTOP-B1I6R18:~# vim ~/.acme.sh/account.conf

root@DESKTOP-B1I6R18:~# acme.sh --issue --dns dns_dp -d zz520.online -d *.zz520.online

[Fri Feb 6 17:53:36 CST 2026] Using CA: https://acme.zerossl.com/v2/DV90

[Fri Feb 6 17:53:36 CST 2026] Account key creation OK.

[Fri Feb 6 17:53:36 CST 2026] No EAB credentials found for ZeroSSL, let's obtain them

[Fri Feb 6 17:53:38 CST 2026] Registering account: https://acme.zerossl.com/v2/DV90

[Fri Feb 6 17:53:41 CST 2026] Registered

[Fri Feb 6 17:53:42 CST 2026] ACCOUNT_THUMBPRINT='UQZTR2PEKOThczP3lycW0ZH77fZRUfIyuM9C9tWatJs'

[Fri Feb 6 17:53:42 CST 2026] Creating domain key

[Fri Feb 6 17:53:42 CST 2026] The domain key is here: /root/.acme.sh/zz520.online_ecc/zz520.online.key

/root/.acme.sh/acme.sh: line 4399: [: [: integer expression expected

[Fri Feb 6 17:53:42 CST 2026] Multi domain='DNS:zz520.online,DNS:*.zz520.online'

/root/.acme.sh/acme.sh: line 4399: [: [: integer expression expected

[Fri Feb 6 17:53:52 CST 2026] Getting webroot for domain='zz520.online'

[Fri Feb 6 17:53:52 CST 2026] Getting webroot for domain='*.zz520.online'

[Fri Feb 6 17:53:52 CST 2026] Adding TXT value: XMjgWu294SKzrI_SdUnt8L5jso3NtOtZN_67Bu6YBxE for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:53:53 CST 2026] Adding record

[Fri Feb 6 17:53:54 CST 2026] The TXT record has been successfully added.

[Fri Feb 6 17:53:54 CST 2026] Adding TXT value: XeO5kf5A6KYKgDYT5lcdo5qdRxiJ2p28mmaSgH35ajU for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:53:54 CST 2026] Adding record

[Fri Feb 6 17:53:55 CST 2026] The TXT record has been successfully added.

[Fri Feb 6 17:53:55 CST 2026] Let's check each DNS record now. Sleeping for 20 seconds first.

[Fri Feb 6 17:54:16 CST 2026] You can use '--dnssleep' to disable public dns checks.

[Fri Feb 6 17:54:16 CST 2026] See: https://github.com/acmesh-official/acme.sh/wiki/dnscheck

[Fri Feb 6 17:54:16 CST 2026] Checking zz520.online for _acme-challenge.zz520.online

[Fri Feb 6 17:54:17 CST 2026] Please refer to https://curl.haxx.se/libcurl/c/libcurl-errors.html for error code: 35

[Fri Feb 6 17:54:18 CST 2026] Success for domain zz520.online '_acme-challenge.zz520.online'.

[Fri Feb 6 17:54:18 CST 2026] Checking zz520.online for _acme-challenge.zz520.online

[Fri Feb 6 17:54:18 CST 2026] Success for domain zz520.online '_acme-challenge.zz520.online'.

[Fri Feb 6 17:54:18 CST 2026] All checks succeeded

[Fri Feb 6 17:54:18 CST 2026] Verifying: zz520.online

[Fri Feb 6 17:54:19 CST 2026] Processing. The CA is processing your order, please wait. (1/30)

[Fri Feb 6 17:54:24 CST 2026] Success

[Fri Feb 6 17:54:24 CST 2026] Verifying: *.zz520.online

[Fri Feb 6 17:54:26 CST 2026] Processing. The CA is processing your order, please wait. (1/30)

[Fri Feb 6 17:54:31 CST 2026] Success

[Fri Feb 6 17:54:31 CST 2026] Removing DNS records.

[Fri Feb 6 17:54:31 CST 2026] Removing txt: XMjgWu294SKzrI_SdUnt8L5jso3NtOtZN_67Bu6YBxE for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:54:33 CST 2026] Successfully removed

[Fri Feb 6 17:54:33 CST 2026] Removing txt: XeO5kf5A6KYKgDYT5lcdo5qdRxiJ2p28mmaSgH35ajU for domain: _acme-challenge.zz520.online

[Fri Feb 6 17:54:34 CST 2026] Successfully removed

[Fri Feb 6 17:54:34 CST 2026] Verification finished, beginning signing.

[Fri Feb 6 17:54:34 CST 2026] Let's finalize the order.

[Fri Feb 6 17:54:34 CST 2026] Le_OrderFinalize='https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ/finalize'

[Fri Feb 6 17:54:35 CST 2026] Order status is 'processing', let's sleep and retry.

[Fri Feb 6 17:54:35 CST 2026] Sleeping for 15 seconds then retrying

[Fri Feb 6 17:54:51 CST 2026] Polling order status: https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ

[Fri Feb 6 17:54:53 CST 2026] Downloading cert.

[Fri Feb 6 17:54:53 CST 2026] Le_LinkCert='https://acme.zerossl.com/v2/DV90/cert/6Zw4Udzab1a7QWDj6zG63Q'

[Fri Feb 6 17:54:54 CST 2026] Cert success.

-----BEGIN CERTIFICATE-----

MIIEATCCA4egAwIBAgIQdSzFuvbgEP64sNHnbfOgCDAKBggqhkjOPQQDAzBLMQsw

CQYDVQQGEwJBVDEQMA4GA1UEChMHWmVyb1NTTDEqMCgGA1UEAxMhWmVyb1NTTCBF

Q0MgRG9tYWluIFNlY3VyZSBTaXRlIENBMB4XDTI2MDIwNjAwMDAwMFoXDTI2MDUw

NzIzNTk1OVowFzEVMBMGA1UEAxMMeno1MjAub25saW5lMFkwEwYHKoZIzj0CAQYI

KoZIzj0DAQcDQgAEBunJFeocp+x5IOMEp316FpEcG2hABYm/yDalISIjZpOlGz9Z

rmONyAfpM7t24/Br/Pzo+6mCmFkbet8kOyTaY6OCAn8wggJ7MB8GA1UdIwQYMBaA

FA9r5kvOOUeu9n6QHnnwMJGSyF+jMB0GA1UdDgQWBBRTK/StqWI3dqvlNmN2gXnb

T6u6ozAOBgNVHQ8BAf8EBAMCB4AwDAYDVR0TAQH/BAIwADATBgNVHSUEDDAKBggr

BgEFBQcDATBJBgNVHSAEQjBAMDQGCysGAQQBsjEBAgJOMCUwIwYIKwYBBQUHAgEW

F2h0dHBzOi8vc2VjdGlnby5jb20vQ1BTMAgGBmeBDAECATCBiAYIKwYBBQUHAQEE

fDB6MEsGCCsGAQUFBzAChj9odHRwOi8vemVyb3NzbC5jcnQuc2VjdGlnby5jb20v

WmVyb1NTTEVDQ0RvbWFpblNlY3VyZVNpdGVDQS5jcnQwKwYIKwYBBQUHMAGGH2h0

dHA6Ly96ZXJvc3NsLm9jc3Auc2VjdGlnby5jb20wggEFBgorBgEEAdZ5AgQCBIH2

BIHzAPEAdgAOV5S8866pPjMbLJkHs/eQ35vCPXEyJd0hqSWsYcVOIQAAAZwyX/zN

AAAEAwBHMEUCIB9aLxzngwRjTo/2Xsruc6lC7FYycAAlnmYJuyeZW6TdAiEAvgDE

st356AEOx6LjLaUkYlI2/Ru/3aK29S7pMdR5KgcAdwAWgy2r8KklDw/wOqVF/8i/

yCPQh0v2BCkn+OcfMxP1+gAAAZwyX/zLAAAEAwBIMEYCIQDzpIcmz/3VkR9Vf5Lx

I60RmuvJRsBdMck7Eiz8Lb39AAIhAIgMK7xVP6YuzBWTdX3DeX8utb+6eVv1GCmf

zqnvuo2XMCcGA1UdEQQgMB6CDHp6NTIwLm9ubGluZYIOKi56ejUyMC5vbmxpbmUw

CgYIKoZIzj0EAwMDaAAwZQIwOFd0Ew4RoC2BAesTLKtc1ogdVWJUEdfXu0t1ZvnK

p8GTfRFhYjNWa2eRVRq8DblIAjEAtixOAZ30nRzR42DNoG5dTPHRImApIfJxJ3KT

ewB105mgq5IbIE68JG716nrCp+2O

-----END CERTIFICATE-----

[Fri Feb 6 17:54:54 CST 2026] Your cert is in: /root/.acme.sh/zz520.online_ecc/zz520.online.cer

[Fri Feb 6 17:54:54 CST 2026] Your cert key is in: /root/.acme.sh/zz520.online_ecc/zz520.online.key

[Fri Feb 6 17:54:54 CST 2026] The intermediate CA cert is in: /root/.acme.sh/zz520.online_ecc/ca.cer

[Fri Feb 6 17:54:54 CST 2026] And the full-chain cert is in: /root/.acme.sh/zz520.online_ecc/fullchain.cer

root@DESKTOP-B1I6R18:~# acme.sh --info -d zz520.online

[Fri Feb 6 18:09:34 CST 2026] The domain 'zz520.online' seems to already have an ECC cert, let's use it.

DOMAIN_CONF=/root/.acme.sh/zz520.online_ecc/zz520.online.conf

Le_Domain=zz520.online

Le_Alt=*.zz520.online

Le_Webroot=dns_dp

Le_PreHook=

Le_PostHook=

Le_RenewHook=

Le_API=https://acme.zerossl.com/v2/DV90

Le_Keylength=ec-256

Le_OrderFinalize=https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ/finalize

Le_LinkOrder=https://acme.zerossl.com/v2/DV90/order/SNJnmYDAcIlFbClN7HJGpQ

Le_LinkCert=https://acme.zerossl.com/v2/DV90/cert/6Zw4Udzab1a7QWDj6zG63Q

Le_CertCreateTime=1770371694

Le_CertCreateTimeStr=2026-02-06T09:54:54Z

Le_NextRenewTimeStr=2026-03-07T09:54:54Z

Le_NextRenewTime=1772877294

3.2.4. Install the certificate

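Because HAProxy terminates TLS here, it needs the private key and the full chain in a single PEM file. Run the following: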

bash

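# Create the certificate directory
mkdir -p /etc/haproxy/certs
# Merge the private key and the full certificate chain into one PEM file (mind the order!)
cat /root/.acme.sh/zz520.online_ecc/zz520.online.key \
    /root/.acme.sh/zz520.online_ecc/fullchain.cer \
    > /etc/haproxy/certs/zz520.online.pem
# Restrict permissions: the file contains the private key, so it must not be world-readable
chmod 600 /etc/haproxy/certs/zz520.online.pem
chown haproxy:haproxy /etc/haproxy/certs/zz520.online.pem  # adjust if HAProxy runs as a different user

3.2.5 Configure automatic renewal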

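acme.sh installs a cron job and renews the certificate automatically. In the acme.sh --info output above, Le_NextRenewTimeStr is 2026-03-07, about a month after issuance. After each renewal, however, the PEM bundle must be rebuilt and HAProxy reloaded. Running the install command once persists this configuration: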

bash

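acme.sh --install-cert -d zz520.online -d '*.zz520.online' --ecc \
  --key-file /etc/haproxy/certs/zz520.online.key \
  --fullchain-file /etc/haproxy/certs/zz520.online.crt \
  --reloadcmd "cat /etc/haproxy/certs/zz520.online.key /etc/haproxy/certs/zz520.online.crt > /etc/haproxy/certs/zz520.online.pem && chmod 600 /etc/haproxy/certs/zz520.online.pem && systemctl reload haproxy"

The reload command only rebuilds the bundle and reloads HAProxy on this node. After each renewal the PEM on 10.0.0.105 has to be refreshed too: repeat the copy in 3.2.6, or append it to --reloadcmd.

3.2.6 Copy the certificate to 10.0.0.105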

bash

scp /etc/haproxy/certs/zz520.online.pem root@10.0.0.105:/etc/haproxy/certs/

3.3 Edit the keepalived configuration

3.3.1 Edit lb1

bash

root@harbor-01:~# cat /etc/keepalived/keepalived.conf

global_defs {

router_id HARBOR_LB_01

script_user root

enable_script_security

}

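vrrp_script chk_haproxy {

script "/usr/bin/systemctl is-active --quiet haproxy"

interval 2

# Negative weight: priority drops by 20 while the check fails. It must exceed the
# 10-point MASTER/BACKUP priority gap (100 vs 90) for the VIP to move; a positive
# weight such as 2 is only added while the check succeeds and cannot close that gap.

weight -20

fall 2

rise 2

}

# Note: keepalived executes the script string directly (no shell), so the | and ||
# below are passed to curl as arguments instead of forming a pipeline. Put compound
# checks like these in a standalone executable script and reference its path.

vrrp_script chk_k8s_api {

script "/usr/bin/curl -k -s --connect-timeout 2 https://10.0.0.101:6443/healthz | grep -q ok || /usr/bin/curl -k -s --connect-timeout 2 https://10.0.0.102:6443/healthz | grep -q ok || /usr/bin/curl -k -s --connect-timeout 2 https://10.0.0.103:6443/healthz | grep -q ok"

interval 2

weight -20

fall 2

rise 2

}

vrrp_script chk_harbor_local {

script "/usr/bin/curl -s --connect-timeout 2 http://127.0.0.1:8080/api/v2.0/health | grep -q healthy"

interval 2

weight -20

fall 2

rise 2

}

# MinIO health endpoints report health via the HTTP status code only (200 = healthy,
# no JSON body), so use curl -f instead of grepping the response. 127.0.0.1 only works
# if MinIO runs on this LB node; otherwise point the check at a MinIO node
# (10.0.0.106/107 in the HAProxy config).

vrrp_script chk_minio_api {

script "/usr/bin/curl -sf -o /dev/null --connect-timeout 2 http://127.0.0.1:9000/minio/health/live"

interval 2

weight -20

fall 2

rise 2

}

vrrp_script chk_minio_console {

script "/usr/bin/curl -sf -o /dev/null --connect-timeout 2 http://127.0.0.1:9001/"

interval 2

weight -20

fall 2

rise 2

}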

# VRID 51: K8s API VIP (10.0.0.110)

vrrp_instance VI_K8S {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass k8svip01

}

virtual_ipaddress {

10.0.0.110/32 dev ens33 label ens33:k8s01

}

track_script {

chk_haproxy

chk_k8s_api

}

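# Restart HAProxy after the VIP moves (so it starts once the VIP is bound). With
# net.ipv4.ip_nonlocal_bind = 1, HAProxy can bind the VIP without owning it, so this
# is optional, and every restart drops in-flight connections.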

notify_master "/usr/bin/systemctl restart haproxy"

notify_backup "/usr/bin/systemctl restart haproxy"

}

# VRID 52: Harbor VIP (10.0.0.111)

vrrp_instance VI_HARBOR {

state MASTER

interface ens33

virtual_router_id 52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass hrbrvip1

}

virtual_ipaddress {

10.0.0.111/32 dev ens33 label ens33:hrbr01

}

track_script {

chk_haproxy

chk_harbor_local

}

notify_master "/usr/bin/systemctl restart haproxy"

notify_backup "/usr/bin/systemctl restart haproxy"

}

# VRID 53: MinIO VIP (10.0.0.112)

vrrp_instance VI_MINIO {

state MASTER

interface ens33

virtual_router_id 53

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass minivip1

}

virtual_ipaddress {

10.0.0.112/32 dev ens33 label ens33:minio01

}

track_script {

chk_haproxy

chk_minio_api

chk_minio_console

}

notify_master "/usr/bin/systemctl restart haproxy"

notify_backup "/usr/bin/systemctl restart haproxy"

}
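Whether a failed check can move a VIP depends on how `weight` combines with `priority`. In keepalived, a positive weight is added to the priority while the tracked script succeeds. A negative weight is subtracted while the script fails. The node advertising the higher effective priority holds the VIP.

The hypothetical helper below reproduces that arithmetic. It ignores keepalived's clamping of the result to the 1..254 range.

```bash
# Hypothetical helper mirroring keepalived's track_script arithmetic:
#   weight > 0: added to the priority while the script succeeds
#   weight < 0: subtracted (|weight|) while the script fails
# Args: base priority, then one "weight:status" pair per tracked script (status: ok|fail).
# keepalived also clamps the result to 1..254; that is not modelled here.
vrrp_effective_priority() {
    local prio="$1" pair weight status
    shift
    for pair in "$@"; do
        weight="${pair%%:*}"
        status="${pair##*:}"
        if [ "$weight" -gt 0 ] && [ "$status" = ok ]; then
            prio=$(( prio + weight ))
        elif [ "$weight" -lt 0 ] && [ "$status" = fail ]; then
            prio=$(( prio + weight ))   # weight is negative here
        fi
    done
    echo "$prio"
}
```

Consider `weight 2` on both tracked scripts. Node lb1 (priority 100) with HAProxy down still advertises `vrrp_effective_priority 100 2:fail 2:ok` = 102. A healthy lb2 advertises `vrrp_effective_priority 90 2:ok 2:ok` = 94, so the VIP stays put.

With `weight -20`, the same failure gives 80 against 90, and the VIP moves.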

bash

systemctl restart keepalived.service

3.3.2 Edit lb2

bash

cat /etc/keepalived/keepalived.conf

global_defs {

router_id HARBOR_LB_02

script_user root

enable_script_security

}

vrrp_script chk_haproxy {

script "/usr/bin/systemctl is-active --quiet haproxy"

interval 2

# Negative weight larger than the 10-point priority gap (100 vs 90), so a failed check moves the VIP

weight -20

fall 2

rise 2

}

vrrp_script chk_k8s_api {

script "/usr/bin/curl -k -s --connect-timeout 2 https://10.0.0.101:6443/healthz | grep -q ok || /usr/bin/curl -k -s --connect-timeout 2 https://10.0.0.102:6443/healthz | grep -q ok || /usr/bin/curl -k -s --connect-timeout 2 https://10.0.0.103:6443/healthz | grep -q ok"

interval 2

weight -20

fall 2

rise 2

}

vrrp_script chk_harbor_local {

script "/usr/bin/curl -s --connect-timeout 2 http://127.0.0.1:8080/api/v2.0/health | grep -q healthy"

interval 2

weight -20

fall 2

rise 2

}

# MinIO health endpoints answer with an HTTP status code only (no JSON body): check it with curl -f

vrrp_script chk_minio_api {

script "/usr/bin/curl -sf -o /dev/null --connect-timeout 2 http://127.0.0.1:9000/minio/health/live"

interval 2

weight -20

fall 2

rise 2

}

vrrp_script chk_minio_console {

script "/usr/bin/curl -sf -o /dev/null --connect-timeout 2 http://127.0.0.1:9001/"

interval 2

weight -20

fall 2

rise 2

}

vrrp_instance VI_K8S {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass k8svip01

}

virtual_ipaddress {

10.0.0.110/32 dev ens33 label ens33:k8s01

}

track_script {

chk_haproxy

chk_k8s_api

}

notify_master "/usr/bin/systemctl restart haproxy"

notify_backup "/usr/bin/systemctl restart haproxy"

}

vrrp_instance VI_HARBOR {

state BACKUP

interface ens33

virtual_router_id 52

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass hrbrvip1

}

virtual_ipaddress {

10.0.0.111/32 dev ens33 label ens33:hrbr01

}

track_script {

chk_haproxy

chk_harbor_local

}

notify_master "/usr/bin/systemctl restart haproxy"

notify_backup "/usr/bin/systemctl restart haproxy"

}

vrrp_instance VI_MINIO {

state BACKUP

interface ens33

virtual_router_id 53

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass minivip1

}

virtual_ipaddress {

10.0.0.112/32 dev ens33 label ens33:minio01

}

track_script {

chk_haproxy

chk_minio_api

chk_minio_console

}

notify_master "/usr/bin/systemctl restart haproxy"

notify_backup "/usr/bin/systemctl restart haproxy"

}
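Each VIP only works if both nodes agree on `virtual_router_id` and `auth_pass` for that instance. keepalived's PASS authentication uses at most the first 8 characters. Adverts with a mismatched password are discarded, so each node believes there is no master and both take the VIP (split brain).

The sketch below extracts `instance auth_pass` pairs from two config files and diffs them. It is a rough awk parser that assumes the one-directive-per-line layout used above, not a full keepalived parser. The function names are hypothetical.

```bash
# Hypothetical helper: print "instance auth_pass" (first 8 chars, as PASS auth uses)
# for every vrrp_instance in a keepalived.conf with one directive per line.
vrrp_auth_pairs() {
    awk '
        $1 == "vrrp_instance" { inst = $2 }
        $1 == "auth_pass" && inst != "" { print inst, substr($2, 1, 8) }
    ' "$1" | sort
}

# Compare two configs: prints differing lines and returns non-zero on mismatch.
vrrp_auth_diff() {
    local a b rc
    a=$(mktemp) b=$(mktemp)
    vrrp_auth_pairs "$1" > "$a"
    vrrp_auth_pairs "$2" > "$b"
    diff "$a" "$b"; rc=$?
    rm -f "$a" "$b"
    return "$rc"
}
```

For example, copy lb2's file locally (`scp root@10.0.0.105:/etc/keepalived/keepalived.conf lb2.conf`), then run `vrrp_auth_diff /etc/keepalived/keepalived.conf lb2.conf`. Any output means the passwords differ.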

bash

keepalived -t -f /etc/keepalived/keepalived.conf

systemctl restart keepalived.service

3.4 Edit the HAProxy configuration file

Write /etc/haproxy/haproxy.cfg with the following content (the command below overwrites the whole file):

bash

cat <<'EOF' > /etc/haproxy/haproxy.cfg

# ========================================

# HAProxy configuration - K8s API + Harbor LB

# Generated: 2026-02-06

# ========================================

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin

stats timeout 30s

user haproxy

group haproxy

daemon

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate

ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

# ========== K8s API Server 负载均衡(VIP 10.0.0.110) ==========

# frontend + backend merged into one listen section: layer-4 TCP mode, round-robin, 3 master nodes

listen k8s-api-6443

bind 10.0.0.110:6443 # bind directly to the dedicated K8s API VIP

mode tcp

balance roundrobin

option tcplog

option tcp-check

server master-01 10.0.0.101:6443 check inter 2s fall 3 rise 5 # check every 2s; down after 3 failures, up after 5 successes

server master-02 10.0.0.102:6443 check inter 2s fall 3 rise 5

server master-03 10.0.0.103:6443 check inter 2s fall 3 rise 5

# ========== Harbor HTTP 80端口负载均衡(VIP 10.0.0.111) ==========

# frontend + backend merged into one listen section: layer-7 HTTP mode, source-IP stickiness, Harbor on port 8080

listen harbor-http-80

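bind 10.0.0.111:80 # bind directly to the dedicated Harbor VIP

mode http # layer-7 HTTP (already set in the defaults section; stated explicitly)

option httplog

balance source # hash on the client source IP for session stickiness during Harbor operations

# Redirect HTTP to HTTPS (recommended)

acl is_health_check path -i /api/v2.0/health # Harbor health-check path, kept on plain HTTP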

redirect scheme https code 301 if !{ ssl_fc } !is_health_check

server harbor-01 10.0.0.104:8080 check inter 2s fall 3 rise 5

server harbor-02 10.0.0.105:8080 check inter 2s fall 3 rise 5

# ========== Harbor HTTPS 443端口负载均衡(VIP 10.0.0.111) ==========

# frontend + backend merged into one listen section: TLS terminated here, layer-7 HTTP mode, source-IP stickiness, Harbor backends on HTTP port 8080

listen harbor-https-443

bind 10.0.0.111:443 ssl crt /etc/haproxy/certs/zz520.online.pem # dedicated Harbor VIP + TLS certificate

mode http

option httplog

option forwardfor # required: pass the real client IP in X-Forwarded-For

http-request set-header X-Forwarded-Proto https # required: tell Harbor the original scheme was HTTPS

balance source

server harbor-01 10.0.0.104:8080 check inter 2s fall 3 rise 5

server harbor-02 10.0.0.105:8080 check inter 2s fall 3 rise 5

# ========== MinIO API 负载均衡(VIP 10.0.0.112:9000) ==========

# Layer-4 TCP mode: S3 API traffic (HTTP-based) is passed through without layer-7 processing

listen minio-api-9000

bind 10.0.0.112:9000

mode tcp

option tcplog

balance leastconn # prefer the node with the fewest active connections; suits long-lived connections

timeout client 1h # large MinIO object transfers need long timeouts

timeout server 1h

timeout connect 5s

server minio-01 10.0.0.106:9000 check inter 2s fall 3 rise 5 weight 10

server minio-02 10.0.0.107:9000 check inter 2s fall 3 rise 5 weight 10

# ========== MinIO Console 负载均衡(VIP 10.0.0.112:9001) ==========

# Layer-7 HTTP mode: the console needs session stickiness

listen minio-console-9001

bind 10.0.0.112:9001

mode http

option httplog

balance source # source-IP stickiness so console sessions do not hop between nodes

timeout client 30m

timeout server 30m

# Force an HTTPS redirect (if an SSL certificate is configured)

# redirect scheme https code 301 if !{ ssl_fc }

server minio-01 10.0.0.106:9001 check inter 2s fall 3 rise 5

server minio-02 10.0.0.107:9001 check inter 2s fall 3 rise 5

# ========== MinIO HTTPS support (optional) ==========

# For TLS termination, uncomment the section below and prepare the certificate

# listen minio-https-9443

# bind 10.0.0.112:9443 ssl crt /etc/haproxy/certs/zz520.online.pem

# mode http

# balance leastconn

# timeout client 1h

# timeout server 1h

# http-request set-header X-Forwarded-Proto https # tell the MinIO console the scheme is HTTPS

# server minio-01 10.0.0.106:9001 check inter 2s fall 3 rise 5

# server minio-02 10.0.0.107:9001 check inter 2s fall 3 rise 5

# server minio-03 10.0.0.108:9001 check inter 2s fall 3 rise 5

# server minio-04 10.0.0.109:9001 check inter 2s fall 3 rise 5

# ========== HAProxy statistics page ==========

listen stats

bind 10.0.0.110:8404

mode http

stats enable

stats uri /haproxy-stats

stats refresh 10s

stats auth admin:123456 # replace with a strong password

# Allow only the ops subnet (example: 10.0.0.0/24)

acl is_ops_net src 10.0.0.0/24

http-request allow if is_ops_net

http-request deny

EOF

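# Check the configuration syntax (should report no warnings or errors)

haproxy -c -f /etc/haproxy/haproxy.cfg

# Reload the configuration gracefully (existing connections are not dropped; recommended in production)

systemctl reload haproxy

# Confirm the service is running normally

systemctl status haproxy

4. Deploy the kubeasz cluster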

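Use vm-master-03 as the deployment node. Install 2 masters first, so that adding a third master can be demonstrated later.

1. Configure passwordless SSH to each host; the deployment control node is 10.0.0.103

Run the following on the control node to set up passwordless SSH: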

bash

ssh-keygen
# Passwordless-login helper script
cat > copy_ssh_keys.sh << 'EOF'
#!/bin/bash
# Host list: IP -> "user port"
declare -A hosts=(
    ["10.0.0.101"]="root 22"
    ["10.0.0.102"]="root 22"
    ["10.0.0.103"]="root 22"
    ["10.0.0.104"]="root 22"
    ["10.0.0.105"]="root 22"
)
for ip in "${!hosts[@]}"; do
    user_port=(${hosts[$ip]})
    user=${user_port[0]}
    port=${user_port[1]}
    echo "=== Copying public key to ${user}@${ip}:${port} ==="
    if ssh-copy-id -p "$port" "${user}@${ip}"; then
        echo " OK: public key copied to ${user}@${ip}:${port}"
    else
        echo " FAILED: could not copy public key to ${user}@${ip}:${port}"
    fi
    echo "-----------------------------"
done
echo "Public key copy finished for all hosts."
EOF
chmod +x copy_ssh_keys.sh
echo " Script generated: copy_ssh_keys.sh"
echo " Usage: ./copy_ssh_keys.sh"
./copy_ssh_keys.sh

2. Download the ezdown tool script

bash

export release=3.6.8
wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown
chmod +x ./ezdown

Edit the script to adjust the component versions as needed:

bash

vim ./ezdown