Web Cluster Project Based on keepalived and LVS

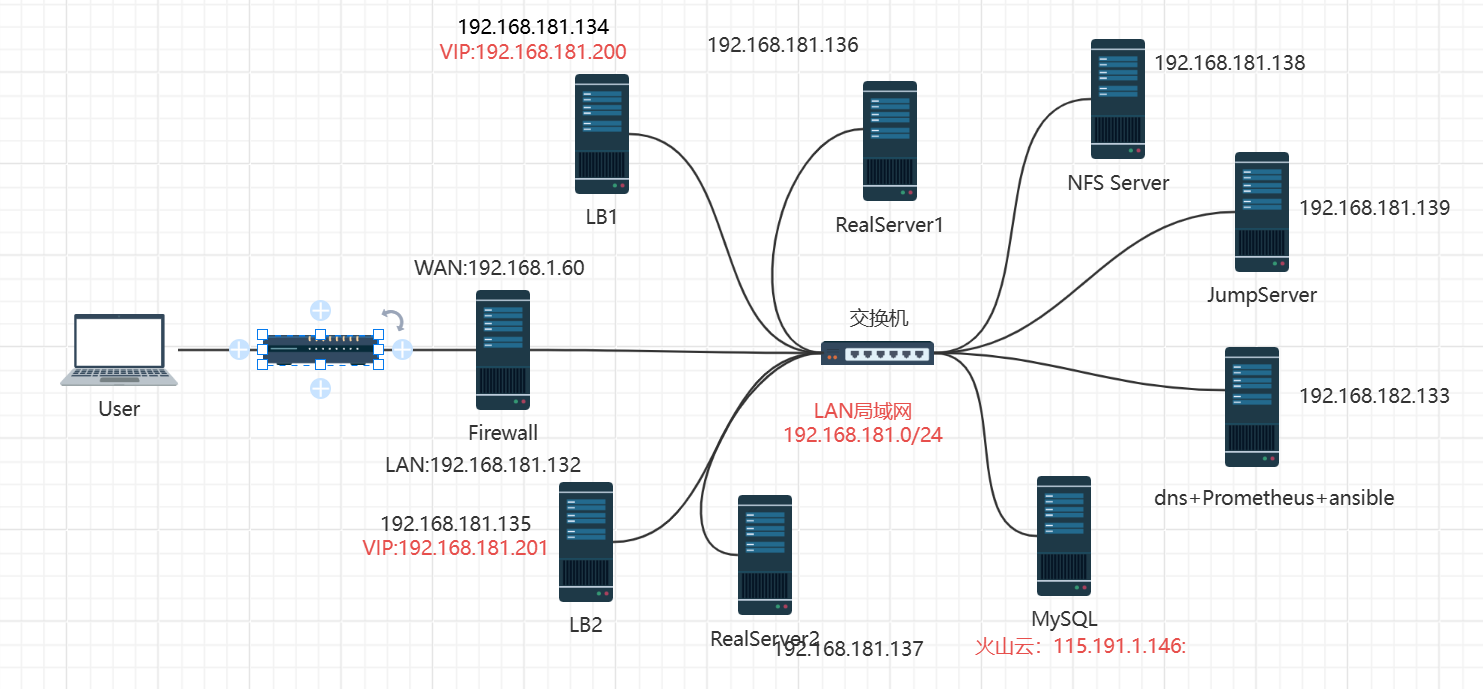

01. Project Design

Address allocation

| NIC type | Hostname | IP address | Role / purpose |
|---|---|---|---|
| Bridged | Firewall | 192.168.1.60 | Firewall WAN port |
| Host-only | Firewall | 192.168.181.132 | Firewall LAN port |
| Host-only | DNS+Ansible+Prometheus | 192.168.181.133 | Shared services |
| Host-only | LB-Server1 | 192.168.181.134 | Load balancer node 1 |
| Host-only | LB-Server2 | 192.168.181.135 | Load balancer node 2 |
| Host-only | RealServer1 | 192.168.181.136 | Application server 1 |
| Host-only | RealServer2 | 192.168.181.137 | Application server 2 |
| Host-only | NFS-Server | 192.168.181.138 | Shared storage server |
| Host-only | JumpServer | 192.168.181.139 | Bastion host |
| ECS server | Database | 115.191.1.146 | Database server |

Network topology

Server configuration

| Server | OS version | Specification |
|---|---|---|
| Database | Rocky Linux 9.5 | 2 cores, 2 GB RAM |
| JumpServer | Rocky Linux 10.0 | 1 core, 2 GB RAM |
| Other servers | Rocky Linux 10.0 | 1 core, 0.5 GB RAM |

Requirements and goals

Build a cluster that meets the high-availability requirements of a web service.

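02. Environment Preparation

Initialization

Write the init.sh initialization script:

#!/bin/bash

# Stop and disable firewalld

systemctl stop firewalld

systemctl disable firewalld

# Switch SELinux to permissive now and disable it from the next boot

setenforce 0

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

# Install some essential tools

yum install wget vim net-tools -y

Hostname: set it to the hostname assigned in the address allocation table.

hostnamectl set-hostname XXX

Configure static IPs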

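Firewall IP configuration

- WAN port

vim /etc/NetworkManager/system-connections/ens160.nmconnection # WAN port, bridged mode

Configure a static IP. The gateway can be found by running ipconfig /all in a Windows cmd prompt.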

[ipv4]

method=manual

addresses1=192.168.1.60/24,192.168.1.1

dns=114.114.114.114

After changing the configuration file, reload the connection:

chmod 600 /etc/NetworkManager/system-connections/ens160.nmconnection

nmcli connection reload

nmcli connection up ens160

- LAN port

Check the connection names (the second NIC sometimes has no profile under /etc/NetworkManager/system-connections/):

nmcli connection show

Load the second NIC (ens224):

nmcli connection modify "Wired connection 1" connection.id ens224

nmcli connection reload

vim /etc/NetworkManager/system-connections/ens224.nmconnection

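# The LAN port is the gateway for the internal servers, so it needs no gateway of its own

[ipv4]

method=manual

addresses1=192.168.181.132/24

After changing the configuration file, reload the connection:

chmod 600 /etc/NetworkManager/system-connections/ens224.nmconnection

nmcli connection reload

nmcli connection up ens224

Configuration for the other servers

Edit the NIC configuration file: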

vim /etc/NetworkManager/system-connections/ens160.nmconnection

# Set dns to the DNS server's address and the gateway to the firewall's LAN port address

[ipv4]

method=manual

addresses1=192.168.181.137/24,192.168.181.132

dns=192.168.181.133

After changing the configuration file, reload the connection:

chmod 600 /etc/NetworkManager/system-connections/ens160.nmconnection

nmcli connection reload

nmcli connection up ens160

Firewall setup

Enable IP forwarding

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

sysctl -p

Then write the iptables rule script (/root/firewall_rule.sh):

# 1. Enable IP forwarding

echo 1 > /proc/sys/net/ipv4/ip_forward

# 2. Flush existing rules

iptables -F

iptables -t nat -F

# 3. Configure SNAT (masquerade internal traffic behind the WAN IP for outbound access)

iptables -t nat -A POSTROUTING -s 192.168.181.0/24 -o ens160 -j MASQUERADE

# 4. Configure DNAT (the dual-VIP mechanism on the LBs is not used here; it would need a second firewall to forward traffic, which is not implemented because of limited resources)

iptables -t nat -A PREROUTING -d 192.168.1.60 -i ens160 -p tcp --dport 80 -j DNAT --to-destination 192.168.181.200

# 5. Forward the Prometheus port

iptables -t nat -A PREROUTING -d 192.168.1.60 -i ens160 -p tcp --dport 9090 -j DNAT --to-destination 192.168.181.133:9090

# 6. Forward the Grafana port

iptables -t nat -A PREROUTING -d 192.168.1.60 -i ens160 -p tcp --dport 3000 -j DNAT --to-destination 192.168.181.133:3000

# 7. Forward the JumpServer port

iptables -t nat -A PREROUTING -d 192.168.1.60 -i ens160 -p tcp --dport 8080 -j DNAT --to-destination 192.168.181.139:80

Persist the iptables rules

# Run the script

bash /root/firewall_rule.sh

# Save the iptables rules

iptables-save >/etc/sysconfig/iptables_rules

# Restore them at boot

echo "iptables-restore < /etc/sysconfig/iptables_rules" >> /etc/rc.local

# Make rc.local executable (this step is required, otherwise it does not run at boot)

chmod +x /etc/rc.d/rc.local

View the iptables rules

iptables -L -t nat -n

03. Basic Services

DNS server setup

1. Install the bind packages

yum install openssl bind bind-utils -y

2. Start and enable the named service

systemctl start named && systemctl enable named

3. Check which ports the service listens on

[root@AnsibleServer named]# ss -anplut |grep named

udp UNCONN 0 0 192.168.181.141:53 0.0.0.0:* users:(("named",pid=1888,fd=21))

udp UNCONN 0 0 127.0.0.1:53 0.0.0.0:* users:(("named",pid=1888,fd=17))

udp UNCONN 0 0 [::1]:53 [::]:* users:(("named",pid=1888,fd=22))

udp UNCONN 0 0 [fe80::20c:29ff:fe29:9dff]%ens160:53 [::]:* users:(("named",pid=1888,fd=6))

tcp LISTEN 0 5 127.0.0.1:953 0.0.0.0:* users:(("named",pid=1888,fd=26))

tcp LISTEN 0 10 192.168.181.141:53 0.0.0.0:* users:(("named",pid=1888,fd=24))

tcp LISTEN 0 10 127.0.0.1:53 0.0.0.0:* users:(("named",pid=1888,fd=18))

tcp LISTEN 0 10 [::1]:53 [::]:* users:(("named",pid=1888,fd=23))

tcp LISTEN 0 10 [fe80::20c:29ff:fe29:9dff]%ens160:53 [::]:* users:(("named",pid=1888,fd=20))

tcp LISTEN 0 5 [::1]:953 [::]:* users:(("named",pid=1888,fd=27))

4. Edit the configuration file

vim /etc/named.conf

Change three settings to any:

listen-on port 53 { any; }; // <== changed

listen-on-v6 port 53 { any; }; // <== changed

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

secroots-file "/var/named/data/named.secroots";

recursing-file "/var/named/data/named.recursing";

allow-query { any; }; // <== changed

5. Configure the tom.com zone

Add the following block to /etc/named.rfc1912.zones:

zone "tom.com" IN {

type primary;

file "tom.com.zone";

allow-update {none; };

};

Create the tom.com.zone file:

vim /var/named/tom.com.zone

with the following content:

$TTL 1D

@ IN SOA @ rname.invalid. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

lb1 IN A 192.168.181.134

lb2 IN A 192.168.181.135

web1 IN A 192.168.181.136

web2 IN A 192.168.181.137

nfs IN A 192.168.181.138

jump IN A 192.168.181.139

@ IN A 192.168.181.133

@ IN NS ns1.tom.com.

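ns1 IN A 192.168.181.133

6. Update the local DNS configuration

On the DNS server, the connection profiles live in /etc/NetworkManager/system-connections/.

[ipv4]

method=manual

addresses1=192.168.181.133/24,192.168.181.132

# List the server's own IP address first

dns=192.168.181.133;114.114.114.114

7. Restart the named service

systemctl restart named

8. Check /etc/resolv.conf

Confirm that it lists the local DNS server's address: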

[root@AllServer ~]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 192.168.181.133

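nameserver 114.114.114.114

NFS server setup

Install the NFS package on both the clients and the server:

yum install nfs-utils -y

Server-side configuration

1. Start nfs-server and enable it at boot

systemctl start nfs-server && systemctl enable nfs-server

2. Edit the exports file

vim /etc/exports

/web/html 192.168.181.0/24(rw,sync,all_squash)

3. Export the share

exportfs -rv

Client-side configuration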

# Install nginx before mounting

mount nfs.tom.com:/web/html /usr/local/nginx1/html/

# Mount at boot

echo "mount nfs.tom.com:/web/html /usr/local/nginx1/html/" >> /etc/rc.local

# Make rc.local executable

chmod +x /etc/rc.d/rc.local

04. Application Services

Ansible server setup

1. Install Ansible

yum install epel-release ansible-core sshpass -y

2. Configure the host inventory

Inventory file path: /etc/ansible/hosts

[LB]

lb1.tom.com

lb2.tom.com

[WEB]

web1.tom.com

web2.tom.com

[NFS]

nfs.tom.com

[DNS]

tom.com

[JUMP]

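jump.tom.com

3. Set up passwordless SSH

- Generate a key pair

ssh-keygen # generate the key pair; press Enter at every prompt

- Distribute the key

ssh-copy-id -i /root/.ssh/id_ed25519.pub root@$1

- Write the initialization script

vim /shell/init.sh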

#!/bin/bash

# Stop and disable firewalld

systemctl stop firewalld

systemctl disable firewalld

# Switch SELinux to permissive now and disable it from the next boot

setenforce 0

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

# Install packages

yum install net-tools wget vim -y

- Initialize the servers with Ansible

ansible LB -m script -a "/shell/init.sh"

ansible LB -m yum -a "name=keepalived"
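The same initialization can be repeated for every inventory group. The following is a minimal sketch, assuming the group names from the inventory above (LB, WEB, NFS, DNS, JUMP); the helper names `run` and `bootstrap_groups` and the `DRY_RUN` variable are hypothetical and not part of the original setup. By default it only prints the commands it would run.

```shell
#!/bin/bash
# Hypothetical helper: print the command when DRY_RUN=1 (the default),
# execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# bootstrap_groups SCRIPT GROUP...
# Push the init script to each inventory group with the Ansible script module.
bootstrap_groups() {
    local script="$1"
    shift
    local group
    for group in "$@"; do
        run ansible "$group" -m script -a "$script"
    done
}

# Group names taken from /etc/ansible/hosts as shown earlier.
bootstrap_groups /shell/init.sh LB WEB NFS DNS JUMP
```

Running it with `DRY_RUN=0` on the Ansible server would execute the commands instead of printing them.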

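Troubleshooting

If the other machines were cloned from the same VM, they all share the same SSH host keys, so their host fingerprints collide. Fix it as follows:

# Delete the old host keys

sudo rm -f /etc/ssh/ssh_host_*

# Generate new, unique host keys

sudo ssh-keygen -A

# Restart the SSH service

sudo systemctl restart sshd

Then delete all files under **/root/.ssh/** and set up passwordless SSH again.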
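As a narrower alternative to wiping /root/.ssh/, the stale entries can be removed per host with `ssh-keygen -R` before the key is copied again. This is only a sketch under assumptions: the helper names `run` and `redistribute_key` and the `DRY_RUN` and `PUBKEY` variables are hypothetical, and the host names are the ones from the inventory above. It prints the commands by default rather than running them.

```shell
#!/bin/bash
# Public key to distribute; the path matches the ssh-copy-id command used earlier.
PUBKEY="${PUBKEY:-/root/.ssh/id_ed25519.pub}"

# Hypothetical helper: print the command when DRY_RUN=1 (the default),
# execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# redistribute_key HOST...
# For each host: drop its stale known_hosts entry, then copy the key again.
redistribute_key() {
    local host
    for host in "$@"; do
        run ssh-keygen -R "$host"
        run ssh-copy-id -i "$PUBKEY" "root@$host"
    done
}

redistribute_key lb1.tom.com lb2.tom.com web1.tom.com web2.tom.com
```

With `DRY_RUN=0`, `ssh-copy-id` still prompts for each host's root password, as in the manual procedure.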

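LB server setup

1. Install keepalived

yum install keepalived -y

# Alternatively, run this on the Ansible server: ansible LB -m yum -a "name=keepalived"

2. Edit the configuration file

vim /etc/keepalived/keepalived.conf

The load balancers are configured below: keepalived provides high availability **(HA)**, and LVS in DR (direct routing) mode does the load balancing.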
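In the configurations that follow, each load balancer is MASTER for one VIP and BACKUP for the other, so the two `vrrp_instance` blocks differ only in state and priority. The sketch below prints such a block from its parameters to make that mirroring explicit. The function name `render_vrrp_instance` is hypothetical; the interface, password, router IDs, priorities and VIPs are the values used in this document.

```shell
#!/bin/bash
# render_vrrp_instance NAME STATE VRID PRIORITY VIP
# Print one vrrp_instance block in keepalived.conf syntax.
render_vrrp_instance() {
    local name="$1" state="$2" vrid="$3" priority="$4" vip="$5"
    cat <<EOF
vrrp_instance ${name} {
    state ${state}
    interface ens160
    virtual_router_id ${vrid}
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}

# LB1: MASTER for 192.168.181.200, BACKUP for 192.168.181.201.
render_vrrp_instance VI_1 MASTER 50 100 192.168.181.200
render_vrrp_instance VI_2 BACKUP 51 50 192.168.181.201
```

For LB2 the same two calls would swap the states and priorities (VI_1 as BACKUP with priority 50, VI_2 as MASTER with priority 100), while the router IDs and VIPs stay the same.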

LB1 configuration

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict   <--- strict mode turned off

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface ens160

virtual_router_id 50

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.181.200

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens160

virtual_router_id 51

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.181.201

}

}

virtual_server 192.168.181.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.181.136 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.181.137 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

virtual_server 192.168.181.201 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.181.136 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.181.137 80 {

weight 1

HTTP_GET {

url {

path /

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

LB2 configuration

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

virtual_router_id 50

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.181.200

}

}

vrrp_instance VI_2 {

state MASTER

interface ens160

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.181.201

}

}

virtual_server 192.168.181.201 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

persistence_granularity 255.255.255.255

protocol TCP

real_server 192.168.181.136 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.181.137 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

virtual_server 192.168.181.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

persistence_granularity 255.255.255.255

protocol TCP

real_server 192.168.181.136 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 192.168.181.137 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

After configuring, start the service and check the IP addresses:

systemctl start keepalived && systemctl enable keepalived

# On LB1

[root@LB1 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:43:d0:fc brd ff:ff:ff:ff:ff:ff

altname enp3s0

altname enx000c2943d0fc

inet 192.168.181.134/24 brd 192.168.181.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.181.200/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe43:d0fc/64 scope link noprefixroute

valid_lft forever preferred_lft forever

# On LB2

[root@LB2 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a0:82:3a brd ff:ff:ff:ff:ff:ff

altname enp3s0

altname enx000c29a0823a

inet 192.168.181.135/24 brd 192.168.181.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.181.201/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea0:823a/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Enable IP forwarding on the LB servers

echo 1 > /proc/sys/net/ipv4/ip_forward

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

sysctl -p

Bind the VIPs and host routes on the web servers

vim /root/vip_set.sh

# Bind only the VIPs used by LVS

ip addr add 192.168.181.200/32 dev lo

ip addr add 192.168.181.201/32 dev lo

# Add host routes

ip route add 192.168.181.200/32 dev lo

ip route add 192.168.181.201/32 dev lo

Also run it at boot

bash /root/vip_set.sh

echo "bash /root/vip_set.sh" >> /etc/rc.local

# Make rc.local executable (required)

chmod +x /etc/rc.d/rc.local

RealServer servers
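In LVS-DR setups, real servers that hold the VIPs on lo are usually also told not to answer or announce ARP for those addresses, so that only the active load balancer claims the VIP on the LAN. The original steps do not show this, so the following is a sketch of the commonly used kernel settings rather than part of the author's procedure. The function name `render_arp_sysctl` is hypothetical; it only prints the settings.

```shell
#!/bin/bash
# Print the ARP-related sysctl settings commonly used on LVS-DR real servers.
# arp_ignore=1: reply to ARP only if the target IP is configured on the receiving interface.
# arp_announce=2: always use the best local source address in ARP requests.
render_arp_sysctl() {
    local iface
    for iface in all lo; do
        echo "net.ipv4.conf.${iface}.arp_ignore = 1"
        echo "net.ipv4.conf.${iface}.arp_announce = 2"
    done
}

render_arp_sysctl
```

One way to apply it, as root on each web server, would be to redirect the output into a file under /etc/sysctl.d/ (for example a hypothetical 90-lvs-dr.conf) and then run `sysctl --system`.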

Create a one-click install script

vim /root/one_key_install_nginx.sh

#!/bin/bash

# Set the hostname

hostnamectl set-hostname web-nginx1

# Stop and disable firewalld

systemctl stop firewalld

systemctl disable firewalld

# Switch SELinux to permissive now and disable it from the next boot

setenforce 0

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

# Create the nginx run user if it does not exist yet

id Tom || useradd Tom

# Install build dependencies

yum install gcc make vim openssl-devel pcre2-devel zlib-devel git -y

cd /usr/local/src/

git clone https://github.com/vozlt/nginx-module-vts.git

# Create the working directory

mkdir -p /nginx

cd /nginx

# Download the source tarball

yum install wget -y

wget https://nginx.org/download/nginx-1.29.4.tar.gz

# Extract it

tar -xf nginx-1.29.4.tar.gz

# Enter the source directory

cd nginx-1.29.4/

# Generate the Makefile

./configure --prefix=/usr/local/nginx1 --user=Tom --group=Tom --with-http_ssl_module --with-http_v2_module --with-http_v3_module --with-http_sub_module --with-stream --with-stream_ssl_module --with-threads --with-http_stub_status_module --add-module=/usr/local/src/nginx-module-vts

# Compile

make -j 2

# Install

make install

# Enter the nginx sbin directory

cd /usr/local/nginx1/sbin

# Add nginx to PATH

PATH=/usr/local/nginx1/sbin:$PATH

echo 'PATH=/usr/local/nginx1/sbin:$PATH'>> /etc/bashrc

# Start nginx at boot

echo '/usr/local/nginx1/sbin/nginx' >>/etc/rc.local

chmod +x /etc/rc.d/rc.local

# Start nginx

nginx

echo "nginx installed and started"

Run the script

bash /root/one_key_install_nginx.sh

MySQL configuration

Create a one-click install script

**Note:** this script targets Rocky Linux 9.

vim /root/one_key-install_mysql.sh

#!/bin/bash

set -e

yum install wget -y

wget https://dev.mysql.com/get/mysql80-community-release-el9-4.noarch.rpm

dnf -y install mysql80-community-release-el9-4.noarch.rpm

dnf -y install mysql-community-server

systemctl start mysqld

systemctl enable mysqld

origin=$(cat /var/log/mysqld.log |grep password|sed 's/.*host: //')

mysql -uroot -p"$origin" #<<EOF

#alter user 'root'@'localhost' identified by "@Deng111";

#exit

#EOF

Run the script

bash /root/one_key-install_mysql.sh

Change the password

alter user user() identified by '@Aa111';

05. Operations and Monitoring

Prometheus configuration

1. Create a one-click install script

vim /root/one_key_install_prometheus.sh

mkdir /prometheus

cd /prometheus

wget https://github.com/prometheus/prometheus/releases/download/v3.9.1/prometheus-3.9.1.linux-amd64.tar.gz

tar xf prometheus-3.9.1.linux-amd64.tar.gz

mv prometheus-3.9.1.linux-amd64 prometheus

cd prometheus

PATH=/prometheus/prometheus:$PATH

echo 'PATH=/prometheus/prometheus:$PATH' >> /etc/bashrc

cat > /usr/lib/systemd/system/prometheus.service << 'EOF'

[Unit]

# Service description

Description=Prometheus Monitoring System

# Start after the network is up

After=network.target remote-fs.target nss-lookup.target

[Service]

# Run as a dedicated user rather than root

User=prometheus

Group=prometheus

# Process type: simple, because Prometheus stays in the foreground and does not fork

Type=simple

# Core dump size limit (infinity means unlimited)

LimitCORE=infinity

# Open-file limit (Prometheus needs many file descriptors)

LimitNOFILE=65535

# Process count limit

LimitNPROC=65535

# Working directory (the Prometheus install directory; adjust to your actual path)

WorkingDirectory=/prometheus/prometheus

# Start command (points at the configuration file; adjust the paths to your actual layout)

ExecStart=/prometheus/prometheus/prometheus \

--config.file=/prometheus/prometheus/prometheus.yml \

--storage.tsdb.path=/data/prometheus \

--web.listen-address=0.0.0.0:9090 \

--web.read-timeout=5m \

--web.max-connections=10 \

--query.max-concurrency=20 \

--query.timeout=2m

# Restart policy: restart automatically on abnormal exit

Restart=on-failure

# Delay between restarts

RestartSec=5s

# Send logs to the systemd journal

StandardOutput=journal

StandardError=journal

SyslogIdentifier=prometheus

[Install]

# Target that pulls the service in at boot

WantedBy=multi-user.target

EOF

useradd prometheus

mkdir /data/prometheus -p

cd /data/prometheus/

chown -R prometheus:prometheus /data/prometheus

systemctl daemon-reload2.执行脚本

bash /root/one_key_install_prometheus.sh3.启动prometheus

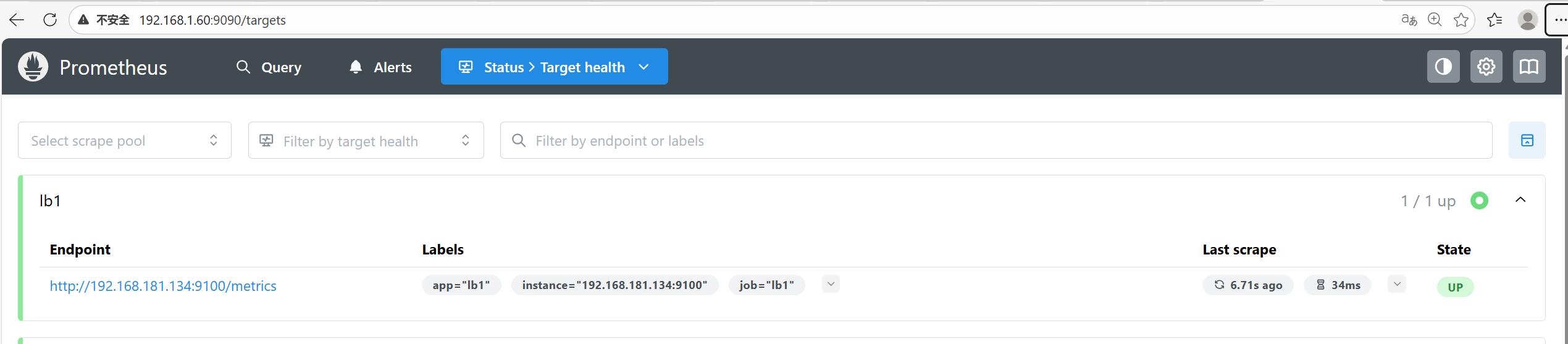

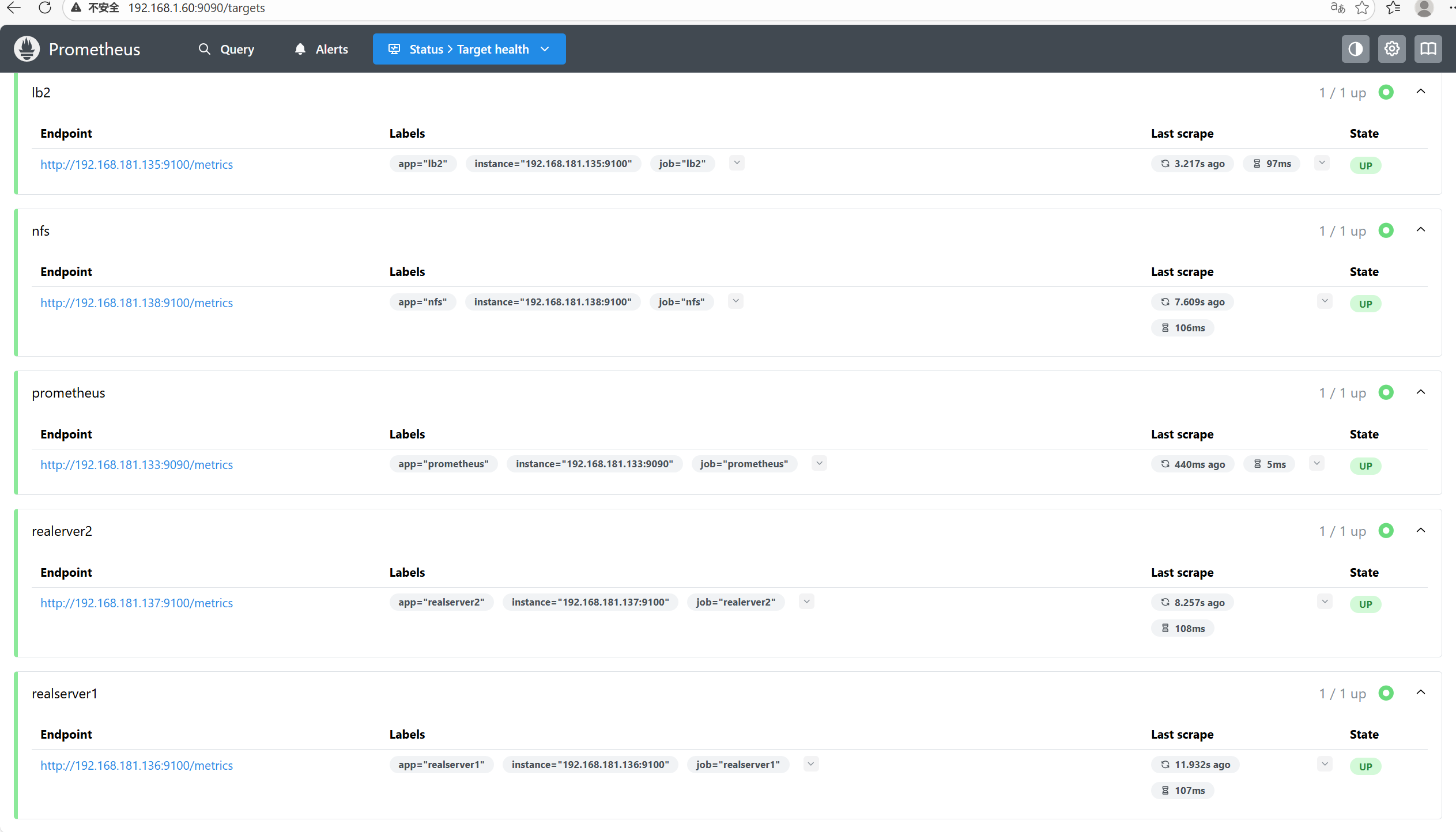

systemctl start prometheus && systemctl enable prometheus4.效果图

node_exporter configuration

1. Run on the Ansible server

wget https://github.com/prometheus/node_exporter/releases/download/v1.10.2/node_exporter-1.10.2.linux-amd64.tar.gz

ansible all -m copy -a 'src=/root/node_exporter-1.10.2.linux-amd64.tar.gz dest=/root'

2. Write a one-click install script

vim /root/node_exporter.sh

mkdir /node_exporter

cd /node_exporter

cp /root/node_exporter-1.10.2.linux-amd64.tar.gz .

tar xf node_exporter-1.10.2.linux-amd64.tar.gz

mv node_exporter-1.10.2.linux-amd64 node_exporter

cat > /usr/lib/systemd/system/node_exporter.service << 'EOF'

[Unit]

# Service description

Description=Node Exporter - Prometheus Host Metrics Collector

# Start after the network is up

After=network.target remote-fs.target nss-lookup.target

# Ordering: start before Prometheus (optional, only matters if Prometheus runs on this host)

Before=prometheus.service

[Service]

# Run as a dedicated user rather than root

User=node_exporter

Group=node_exporter

# Process type: simple (Node Exporter does not fork and runs in the foreground)

Type=simple

# Resource limits: raise the core dump, open-file and process limits

LimitCORE=infinity

LimitNOFILE=65535

LimitNPROC=65535

# Working directory (the Node Exporter install directory; adjust to your actual path)

WorkingDirectory=/node_exporter

# Start command (binary path plus custom flags; adjust the path as needed)

ExecStart=/node_exporter/node_exporter/node_exporter \

--web.listen-address=0.0.0.0:9100 \

--web.telemetry-path=/metrics \

--collector.disable-defaults \

--collector.cpu \

--collector.meminfo \

--collector.diskstats \

--collector.netdev \

--collector.loadavg \

--collector.filesystem \

--collector.processes \

--collector.systemd

# Restart policy: restart automatically on abnormal exit or crash

Restart=on-failure

RestartSec=5s

# Send logs to the systemd journal

StandardOutput=journal

StandardError=journal

SyslogIdentifier=node_exporter

[Install]

# Target that pulls the service in at boot

WantedBy=multi-user.target

EOF

useradd node_exporter

PATH=/node_exporter/node_exporter:$PATH

chown -R node_exporter:node_exporter /node_exporter/node_exporter

chmod 755 /node_exporter/node_exporter

systemctl daemon-reload

systemctl start node_exporter

#systemctl status node_exporter

systemctl enable node_exporter

3. Run the script with Ansible

ansible all -m script -a "/root/node_exporter.sh"

4. Edit the Prometheus YAML file

vim /prometheus/prometheus/prometheus.yml

# Edit the scrape jobs at the end of the file

  - job_name: "prometheus"

    static_configs:

      - targets: ["192.168.181.133:9090"]

        labels:

          app: "prometheus"

  - job_name: "nfs"

    static_configs:

      - targets: ["192.168.181.138:9100"]

        labels:

          app: "nfs"

  - job_name: "realserver1"

    static_configs:

      - targets: ["192.168.181.136:9100"]

        labels:

          app: "realserver1"

  - job_name: "realserver2"

    static_configs:

      - targets: ["192.168.181.137:9100"]

        labels:

          app: "realserver2"

  - job_name: "lb1"

    static_configs:

      - targets: ["192.168.181.134:9100"]

        labels:

          app: "lb1"

  - job_name: "lb2"

    static_configs:

      - targets: ["192.168.181.135:9100"]

        labels:

          app: "lb2"

  - job_name: "jumpserver"

    static_configs:

      - targets: ["192.168.181.139:9100"]

        labels:

          app: "jumpserver"

5. Restart the Prometheus service

systemctl restart prometheus

6. Result

Open port 9090 on the firewall's WAN port.

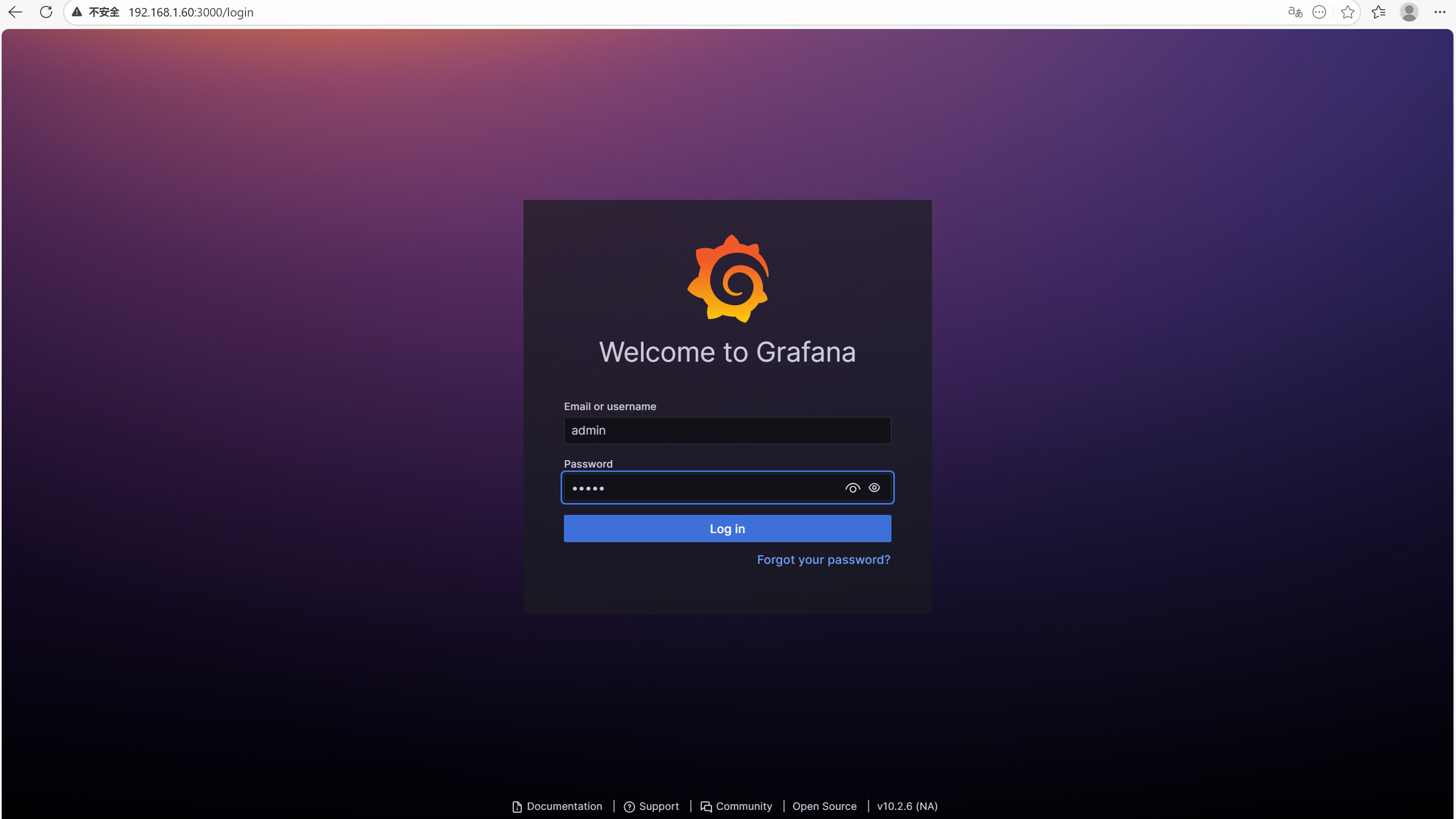

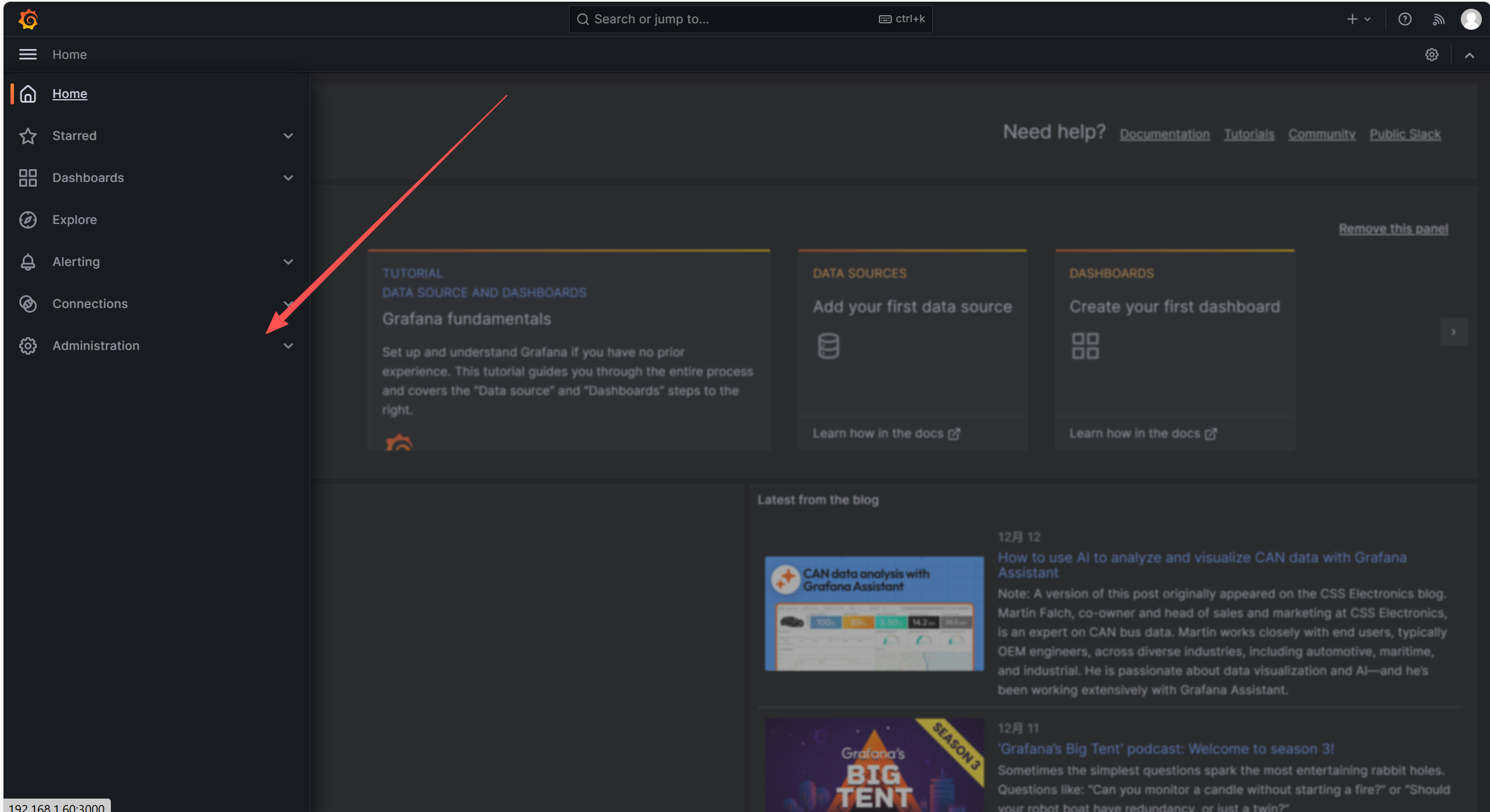

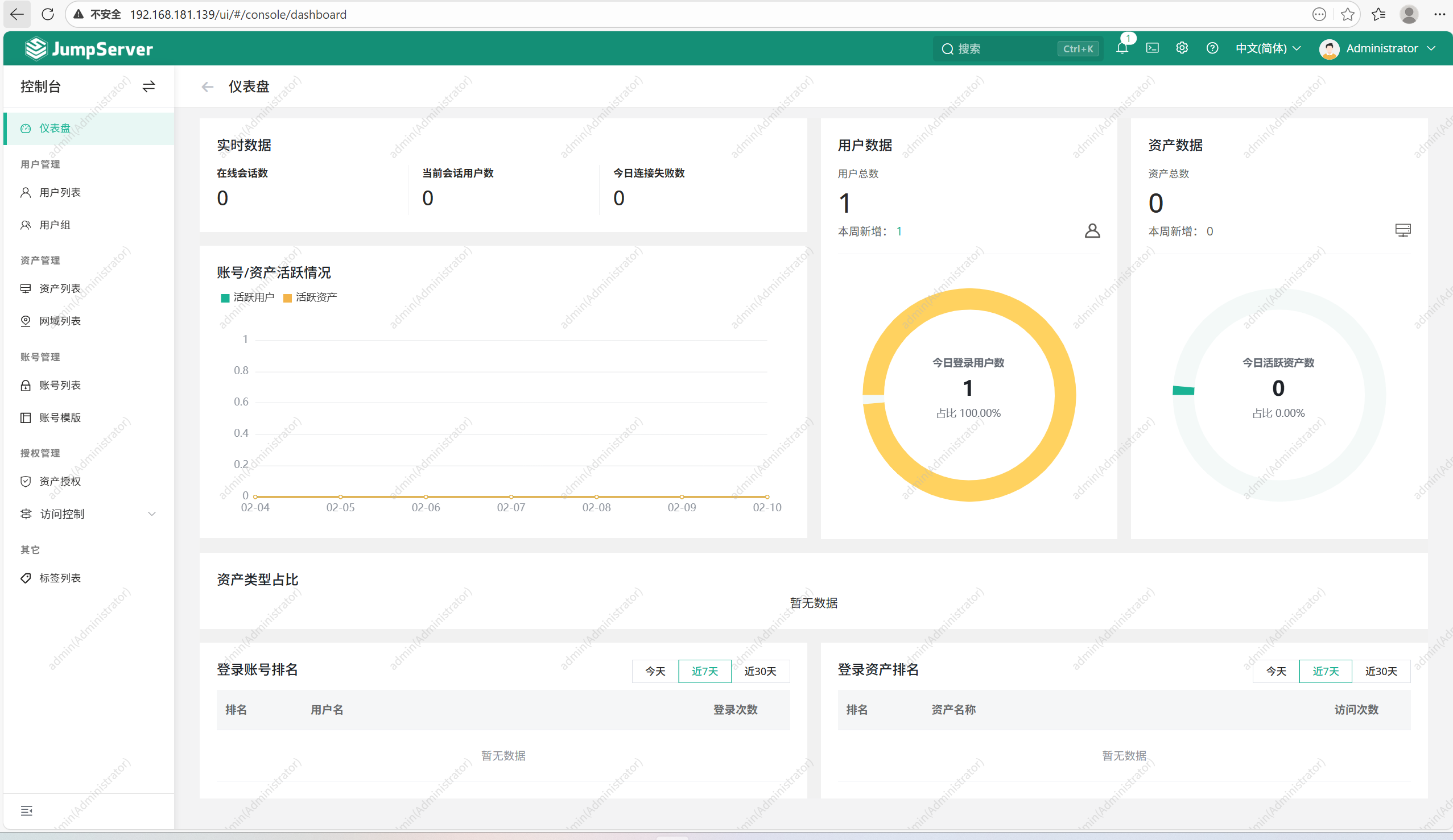
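The node_exporter scrape jobs added in step 4 all have the same shape: a job name, one target, and an `app` label equal to the job name. A small generator can keep them consistent as hosts are added. This is a sketch only; the function name `render_scrape_job` is hypothetical, and the host list is the one from this document.

```shell
#!/bin/bash
# render_scrape_job NAME TARGET
# Print one scrape job, indented to sit under "scrape_configs:" in prometheus.yml.
render_scrape_job() {
    local name="$1" target="$2"
    printf '  - job_name: "%s"\n' "$name"
    printf '    static_configs:\n'
    printf '      - targets: ["%s"]\n' "$target"
    printf '        labels:\n'
    printf '          app: "%s"\n' "$name"
}

# One "name target" pair per line.
while read -r name target; do
    render_scrape_job "$name" "$target"
done <<'EOF'
nfs 192.168.181.138:9100
realserver1 192.168.181.136:9100
realserver2 192.168.181.137:9100
lb1 192.168.181.134:9100
lb2 192.168.181.135:9100
jumpserver 192.168.181.139:9100
EOF
```

After pasting the output into prometheus.yml, the file can be validated with `promtool check config /prometheus/prometheus/prometheus.yml` before restarting the service; promtool ships in the same release tarball as the prometheus binary.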

Grafana configuration

1. Install the package

yum install grafana

2. Start the service

systemctl start grafana-server && systemctl enable grafana-server

3. Open port 3000 on the firewall's WAN port

Default credentials for the first login:

admin/admin

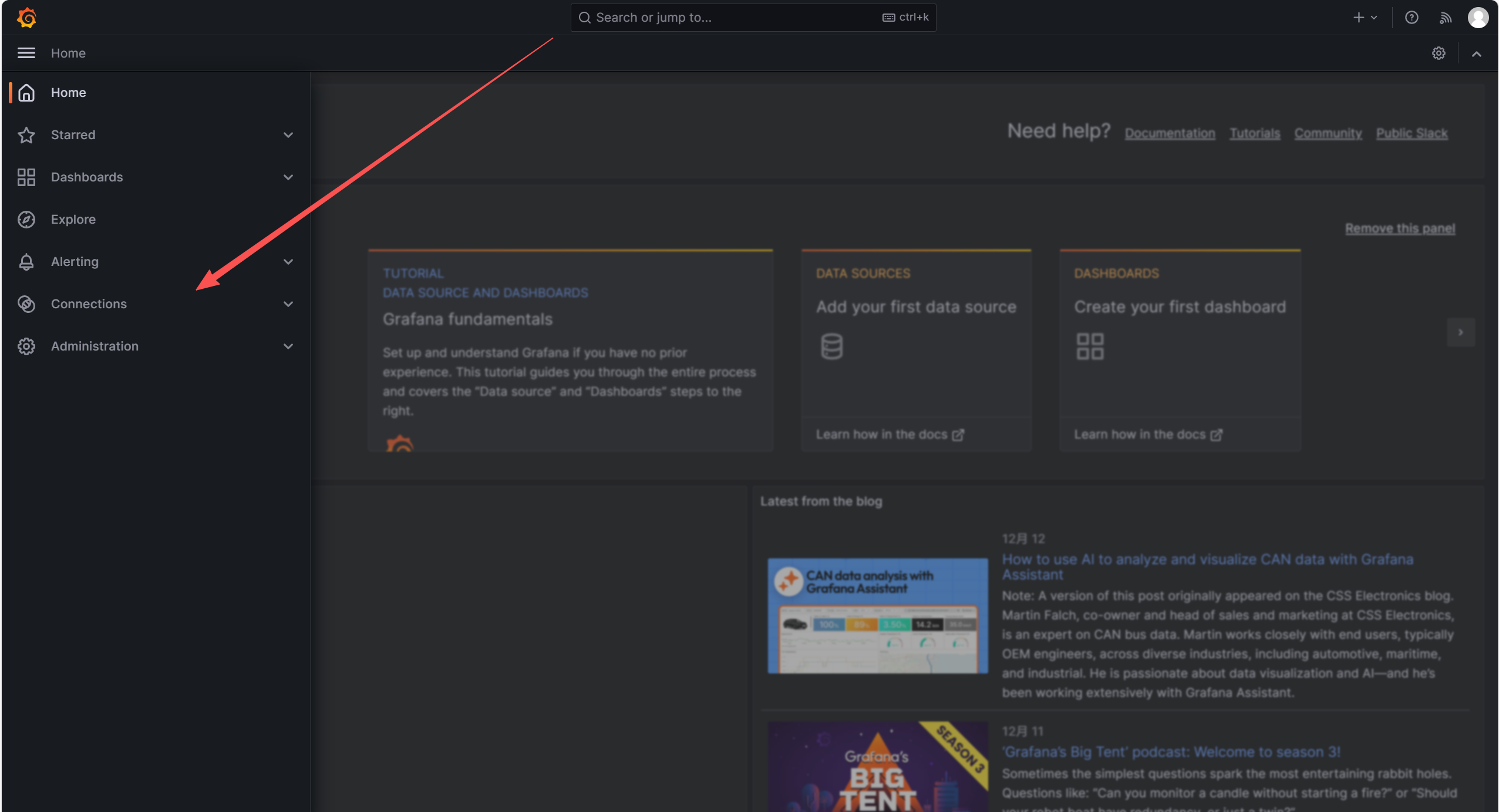

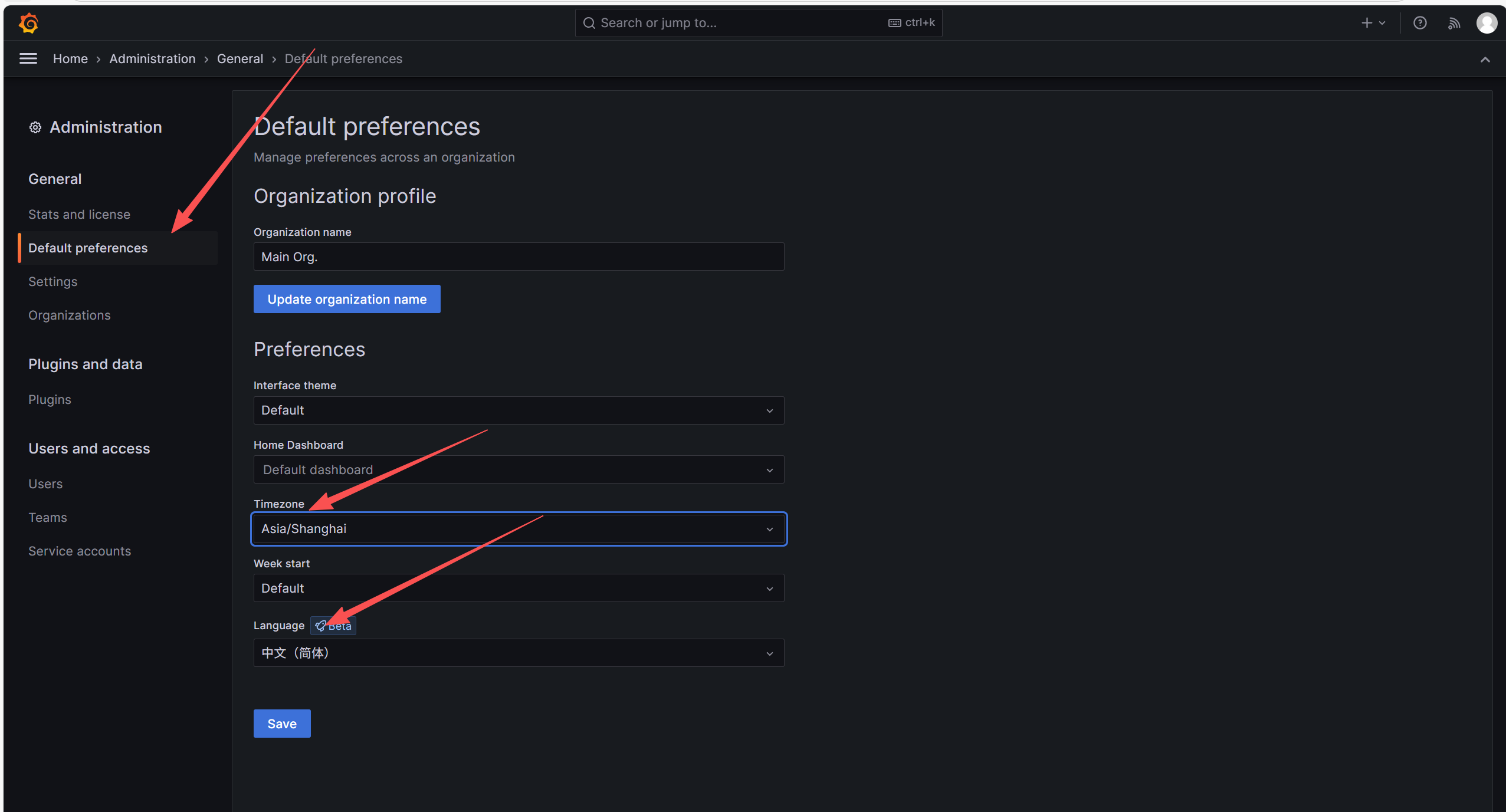

4. Change the time zone and language

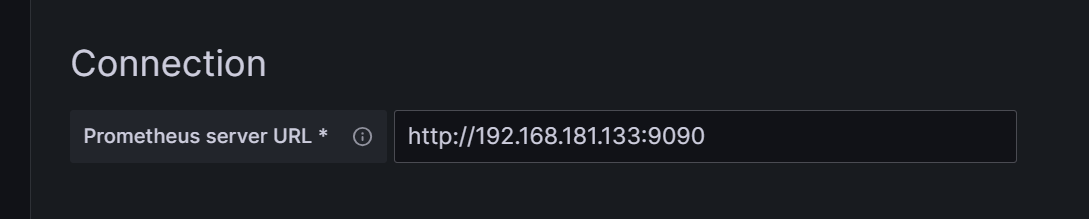

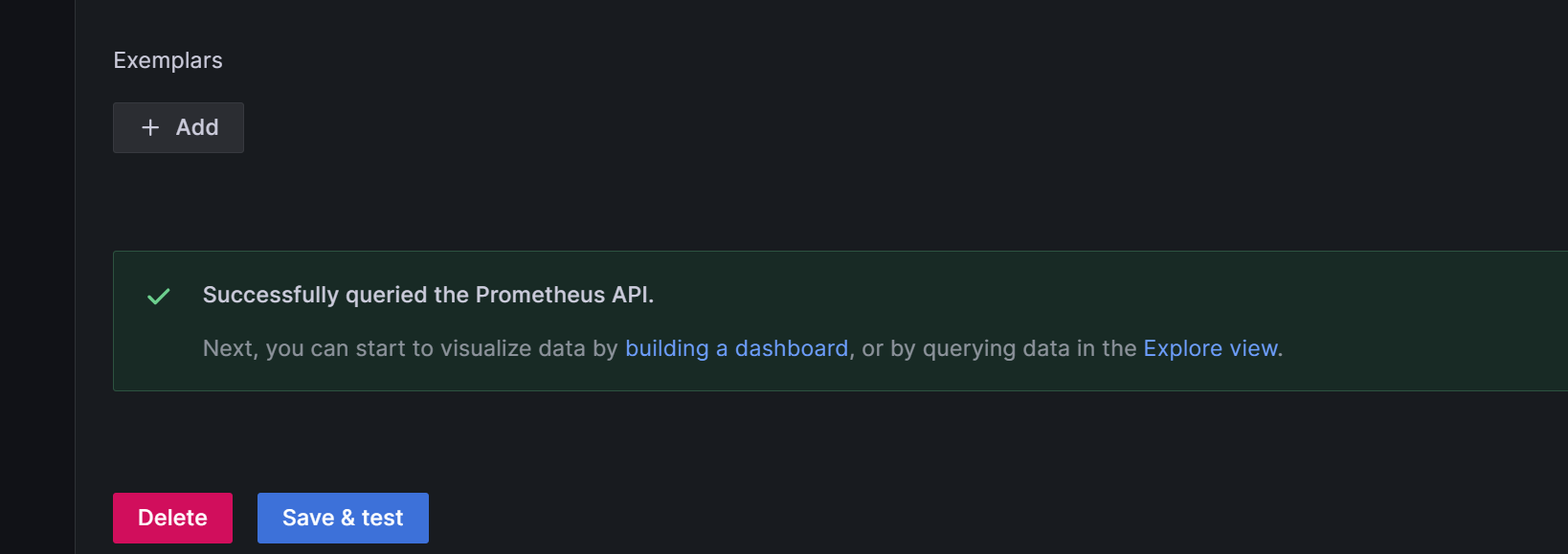

5. Create a data source

Enter the IP address of the Prometheus server.

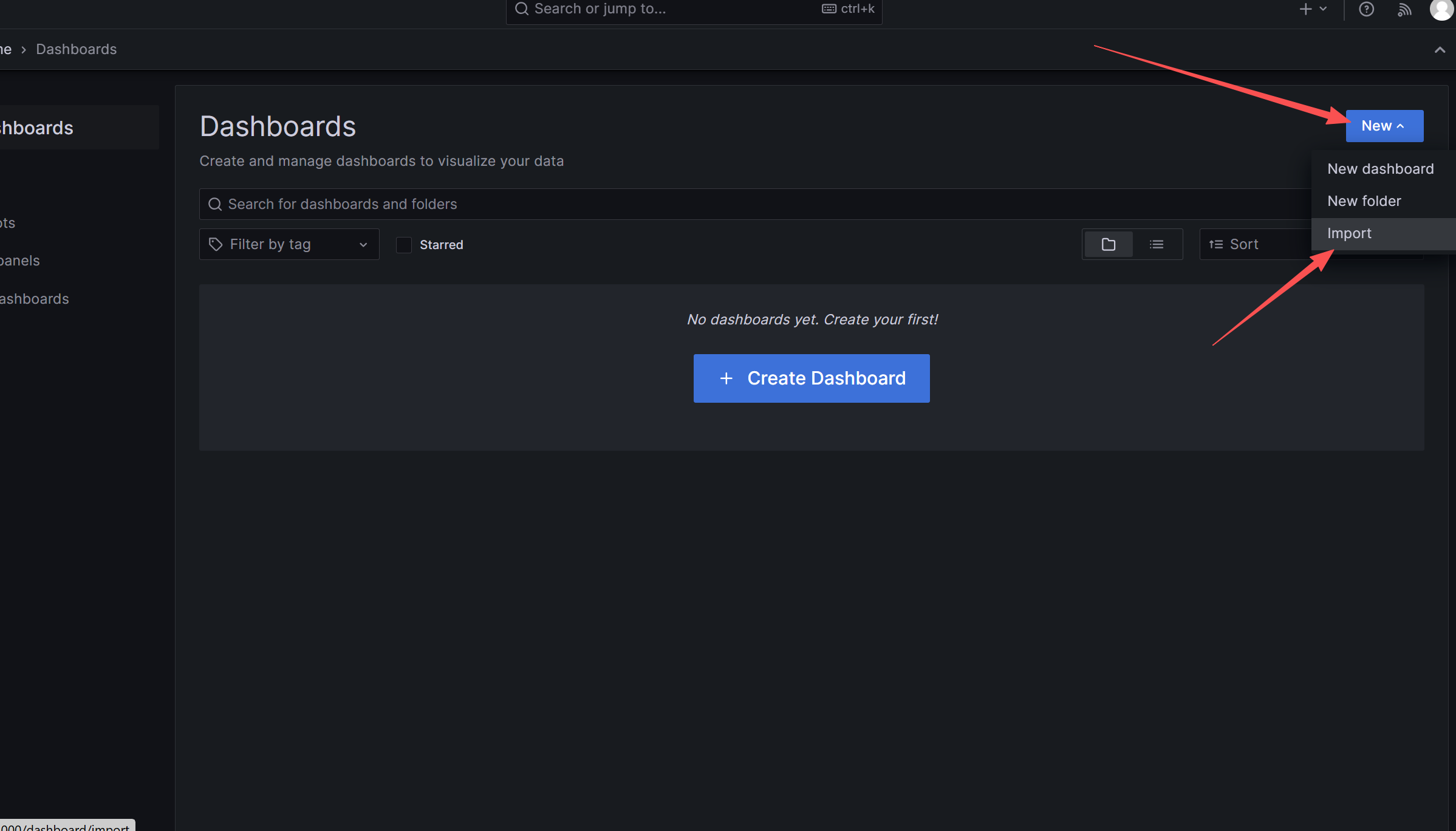

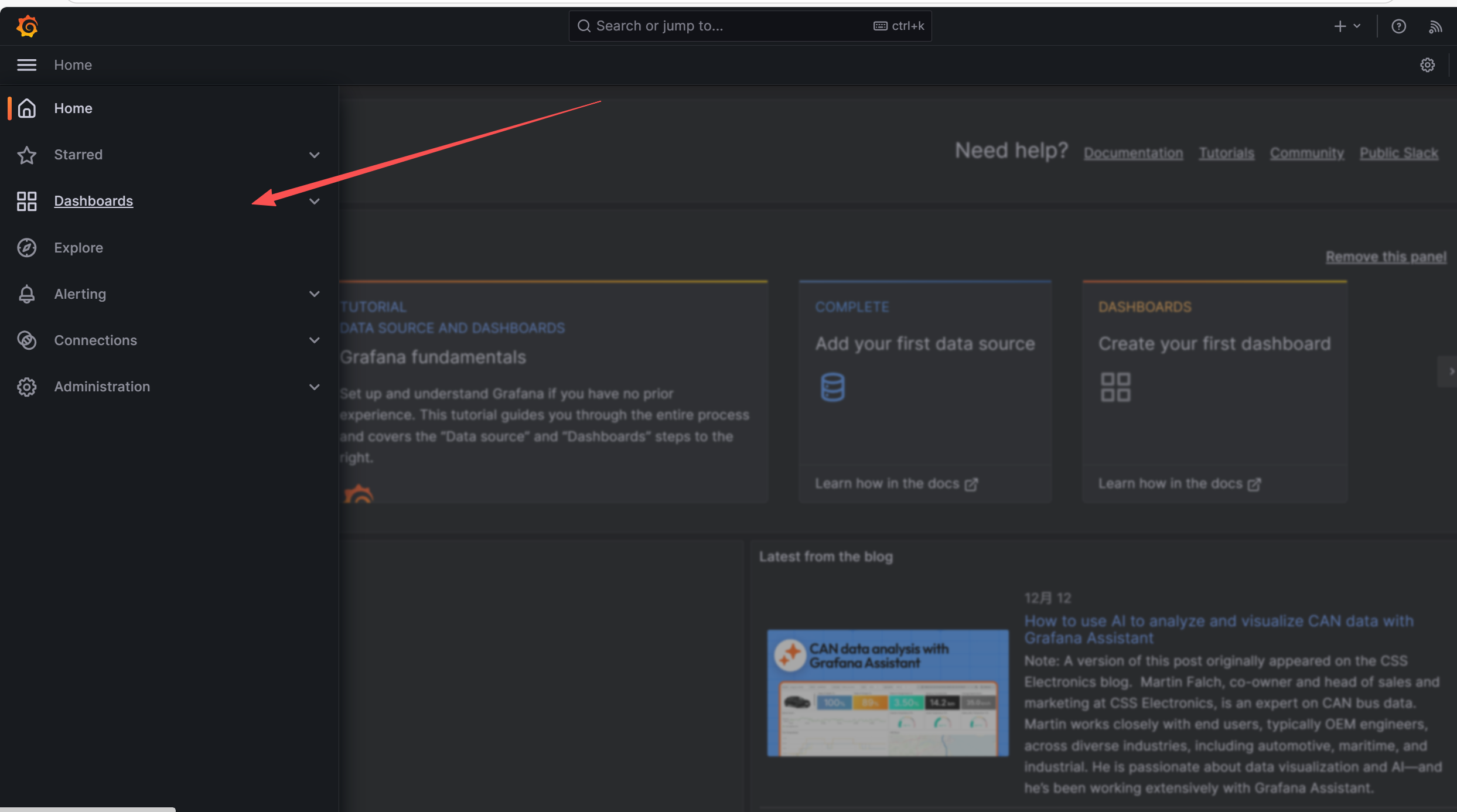

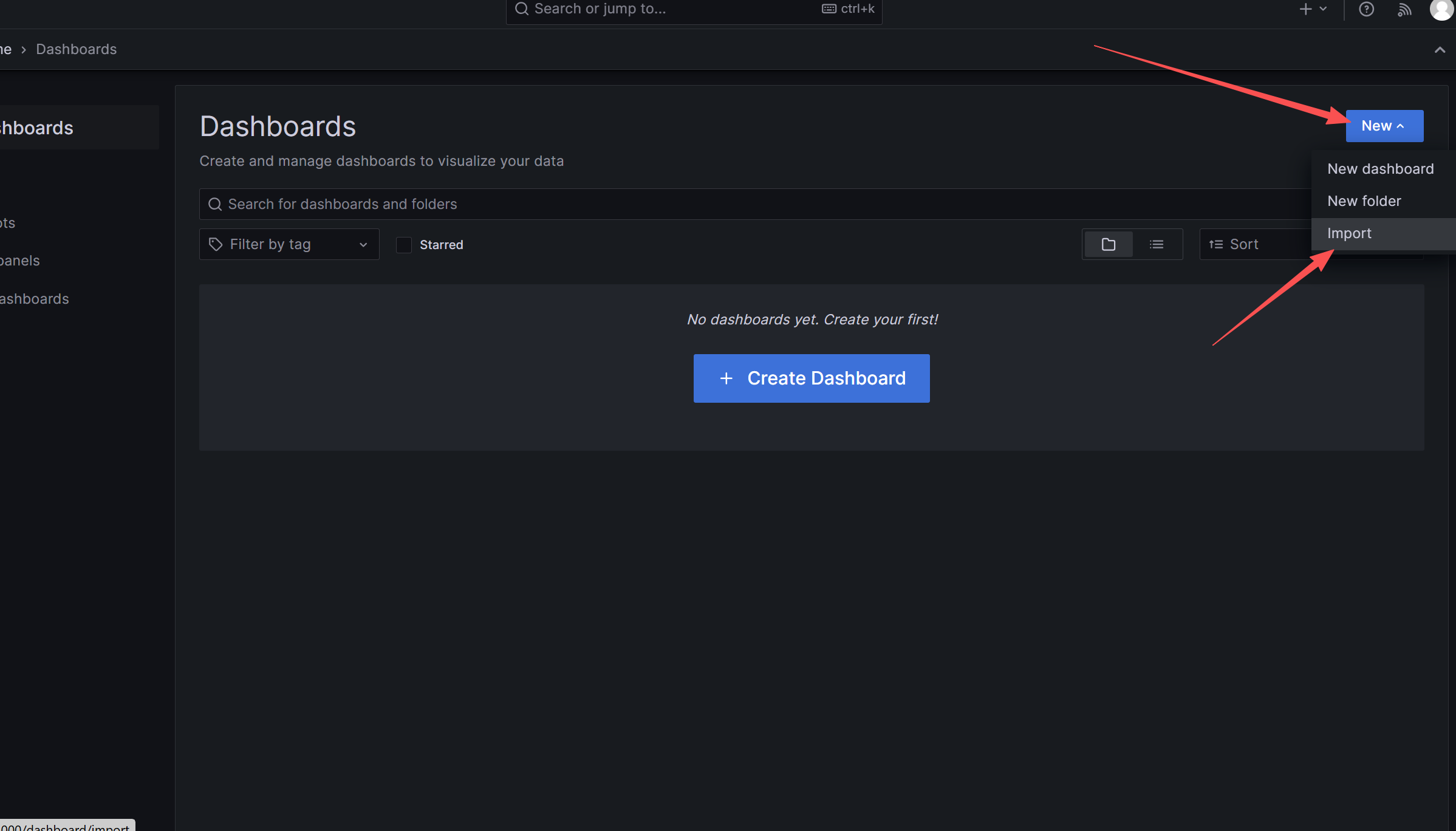

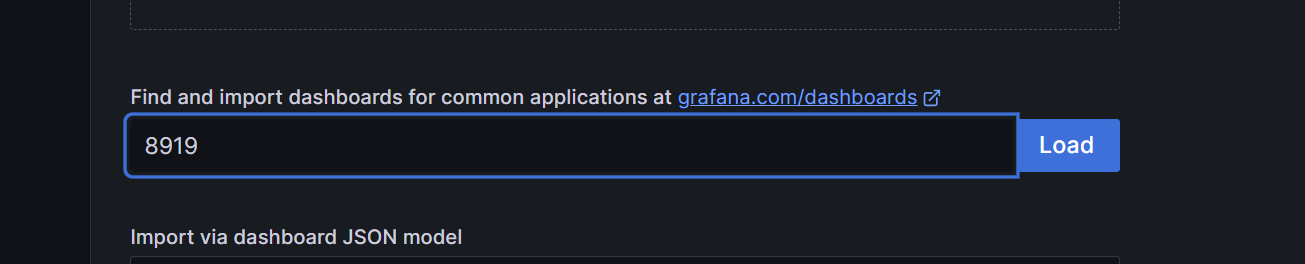

6. Configure the dashboard

Enter 8919 and click Load (dashboard 8919 is in Chinese and works well).

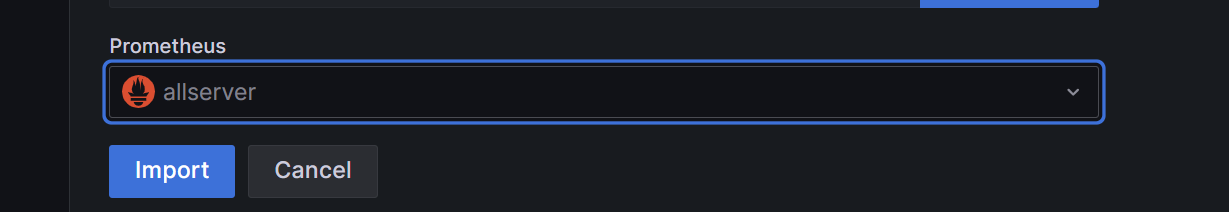

7. Result

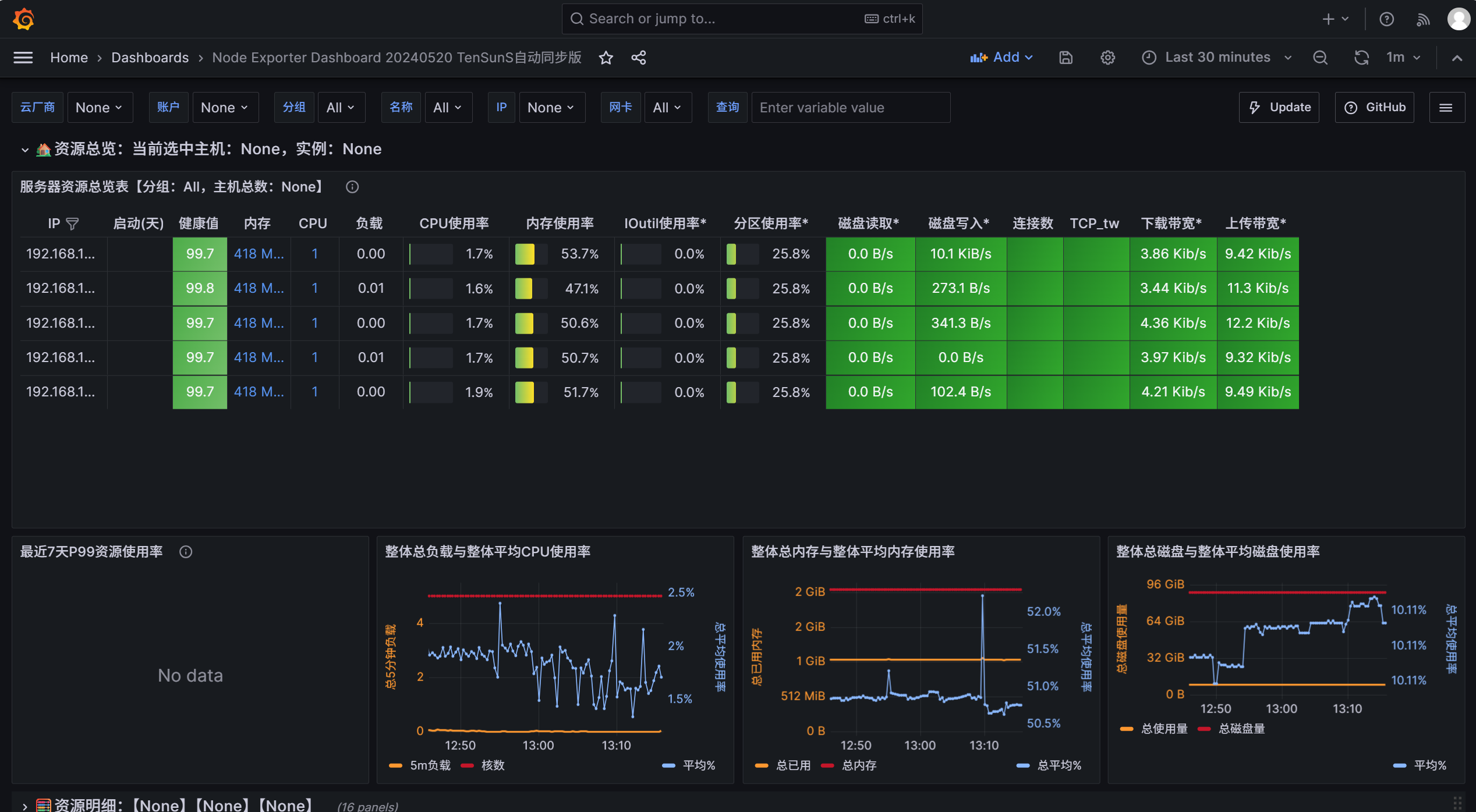

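Install and deploy JumpServer

See the JumpServer documentation for configuration details.

1. Update the repositories and install the required packages

yum update

yum install -y wget curl tar gettext iptables

2. Install MariaDB

yum install mariadb-server -y

systemctl start mariadb && systemctl enable mariadb

3. Change the root password

ALTER USER 'root'@'localhost' IDENTIFIED BY '@Aa111';

4. Download the JumpServer package

Download the latest JumpServer offline package from the official website and place it in /opt.

cd /opt

tar -xf jumpserver-ce-v4.10.15-x86_64.tar.gz

cd jumpserver-ce-v4.10.15-x86_64

# Install

./jmsctl.sh install

# Start

./jmsctl.sh start

5. Open port 8080 on the firewall's WAN port

Username: admin

Password: ChangeMe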

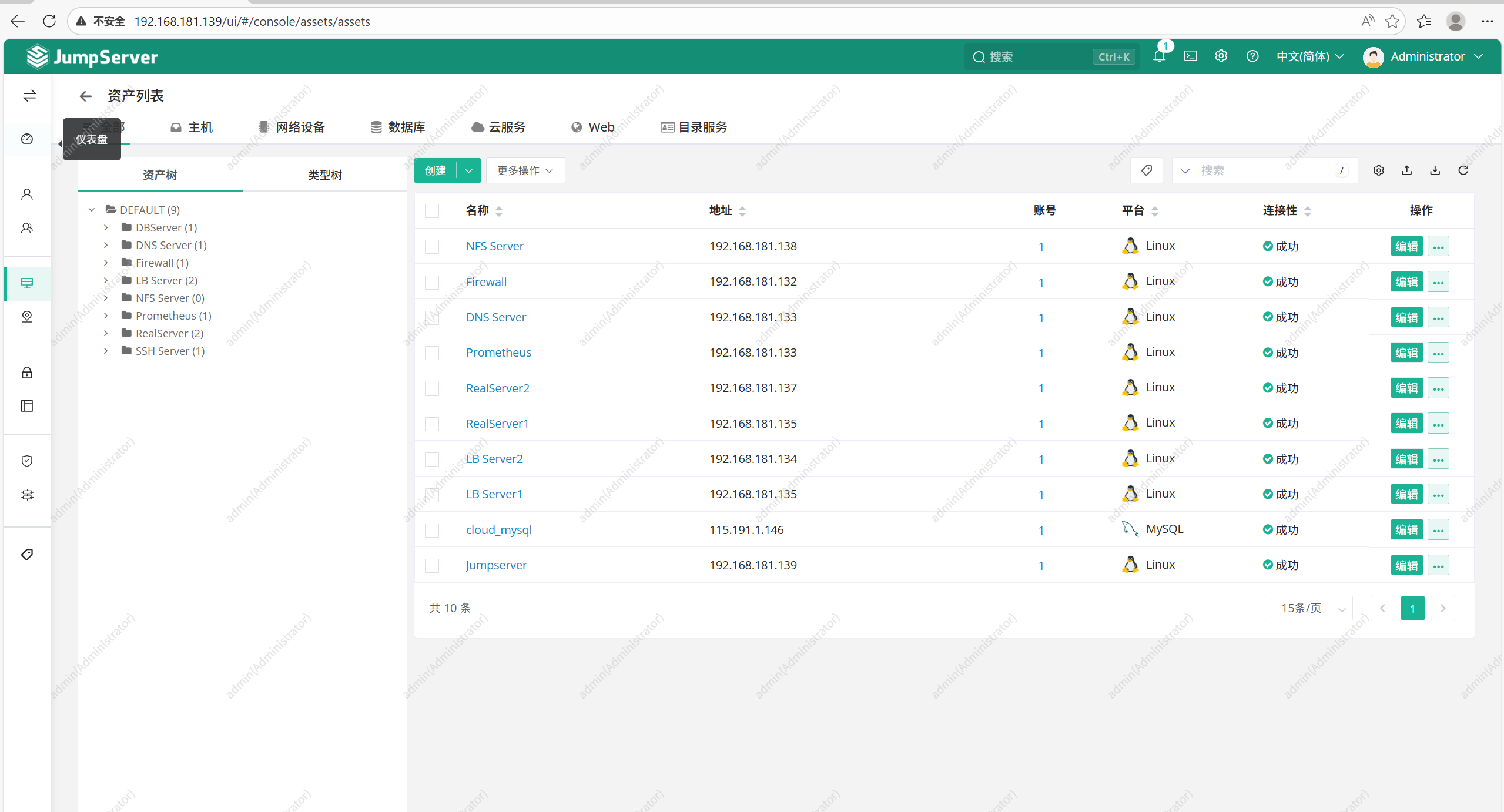

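6. Result

7. Add assets

Assets can then be authorized in JumpServer and used to manage the cluster.

Summary

This project targets three goals: high availability, scalability, and ease of operations. It builds an enterprise-style web cluster on Keepalived + LVS and integrates DNS resolution, NFS shared storage, Ansible automation, Prometheus + Grafana monitoring, and JumpServer bastion-host access control. Together these form an end-to-end solution, from the underlying network up to the application layer, that can support stable operation of small to medium-sized web services.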