19. Ingress and Ingress Controller

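19.1 Load-Balancing Concepts

19.1.1 Why is load balancing needed?

Pods drift between nodes, so a Pod IP should be treated as something that changes.

Kubernetes has strong replica control: when any replica (Pod) dies, it automatically starts a new one, possibly on another machine, and it can also scale the workload dynamically. Put simply, a Pod may appear on any node at any moment and may die on any node at any moment, so Pod IPs change as Pods are created and destroyed. How, then, can these dynamic Pod IPs be exposed? This is what the Kubernetes Service mechanism is for: a Service uses a label selector to pick the group of Pods carrying the specified labels, tracks their Pod IPs, and load-balances across them, so only the Service needs to be exposed. For access from outside the cluster this can be done with NodePort mode, which opens a port on every node and forwards traffic to the Pod IPs behind the Service. A Service, however, is a layer-4 proxy: it can forward only by IP and port.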

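19.1.2 Why cover the Ingress Controller?

Services have a limitation when it comes to external access.

A Service can be one of several types: ClusterIP, NodePort, LoadBalancer, and ExternalName. To reach a Service from outside the Kubernetes cluster without a cloud load balancer, the NodePort type is typically used, but a NodePort Service has the following problems:

A NodePort maps a port on every node, so **every service needs its own port, and a large number of ports is hard to maintain.** In addition, a Service is implemented with iptables or IPVS, which **supports only layer-4 proxying** and cannot route on HTTP/HTTPS attributes such as host name or URL path. This section therefore covers the Ingress Controller, which works as a layer-7 proxy.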

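19.1.3 What is the difference between layer-4 and layer-7 load balancing?

Layer-4 load balancing is based on IP address plus port. Building on layer-3 load balancing, it publishes a layer-3 IP address (VIP) together with a layer-4 port number to decide which traffic to balance. It applies NAT to that traffic, forwards it to a backend server, and records which server handles each TCP or UDP flow, so all later traffic on the same connection goes to the same server.

Layer-7 load balancing is based on application-layer information such as the URL or host name. Building on layer-4 load balancing, it also considers application-layer characteristics. For example, when balancing a group of web servers, in addition to identifying the traffic by VIP and port 80, it can use the URL, browser type, or language to decide how to route a request.

Layer-4 load balancing works at the transport layer; layer-7 load balancing works at the application layer.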
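The difference can be illustrated with a small sketch. It is not how a real load balancer is implemented; it only shows which inputs each layer can use for its decision. The host name `shop.example.com` and the backend names are hypothetical, and the VIP is the one used later in this chapter.

```shell
#!/usr/bin/env bash
# Layer 4: the decision can only use the destination IP and port.
route_l4() {
  local ip_port="$1"
  case "$ip_port" in
    172.25.254.200:80) echo "web-pool" ;;
    *)                 echo "drop" ;;
  esac
}

# Layer 7: the decision can also use the HTTP Host header and URL path.
# Backend names and shop.example.com are illustrative assumptions.
route_l7() {
  local host="$1" path="$2"
  case "$host$path" in
    shop.example.com/api/*) echo "api-service" ;;
    shop.example.com/*)     echo "web-service" ;;
    *)                      echo "default-backend" ;;
  esac
}

route_l4 172.25.254.200:80              # prints web-pool
route_l7 shop.example.com /api/orders   # prints api-service
route_l7 shop.example.com /index.html   # prints web-service
```

Both requests in the layer-7 case arrive at the same IP and port; only an application-layer proxy can send them to different backends.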

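19.2 Overview of Ingress and Ingress Controller

19.2.1 Ingress (the resource)

Ingress is a native Kubernetes API resource that defines HTTP/HTTPS routing rules for external access to Services in the cluster. It does not forward traffic itself; it exists only as a configuration declaration.

An Ingress describes how requests entering the cluster are forwarded to Services inside it, which exposes those Services externally. It can give a Service an externally reachable URL, load-balance traffic, and provide name-based virtual hosting.

In other words, Ingress is a Kubernetes resource whose main job is to describe the proxy configuration that the Ingress Controller should apply.

It is comparable to an Nginx configuration file, except that with plain Nginx a configuration change usually requires a manual reload before it takes effect.

With Ingress, the Ingress Controller watches for rule changes and applies them automatically, so no manual reload is needed.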

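19.2.2 Ingress Controller

The Ingress Controller is the runtime component that actually enforces Ingress rules. It watches Ingress resources for changes, dynamically generates reverse-proxy configuration (such as an Nginx configuration file), and forwards external traffic to the Pods behind the matching Service.

The Ingress Controller interacts with the Kubernetes API to detect changes to Ingress rules in the cluster, generates the corresponding configuration, writes it into the controller Pod, and reloads automatically.

19.2.3 Steps for proxying in-cluster Pods through an Ingress Controller

- Deploy the Ingress Controller; this chapter uses the Nginx-based one

- Create the application Pods, for example through a controller

- Create a Service to group the Pods

- Create an HTTP Ingress and test access to the application over HTTP

- Create an HTTPS Ingress and test access to the application over HTTPS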

19.3 Ingress Fields

19.3.1 The ingress field

bash

]# kubectl explain ingress

GROUP: networking.k8s.io

KIND: Ingress

VERSION: v1

apiVersion <string>

kind <string>

metadata <ObjectMeta>

spec <IngressSpec>

status <IngressStatus>

19.3.2 The ingress.spec field

bash

]# kubectl explain ingress.spec

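defaultBackend <IngressBackend>

# Default backend

# When an external request matches none of the rules, traffic is forwarded to this default backend

ingressClassName <string>

# Ingress class name

# Selects which Ingress Controller handles this Ingress resource

rules <[]IngressRule>

# List of routing rules

# Defines forwarding rules based on Host (domain name) and Path (URL path)

tls <[]IngressTLS>

# TLS configuration

# Configures the HTTPS certificate and enables TLS-encrypted communication

19.3.3 The ingress.spec.defaultBackend field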

If an Ingress defines no rules, defaultBackend must be set.

**If defaultBackend is not set, requests that match no rule are answered by the Ingress Controller itself with a 404.**
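As a minimal sketch of the rule above, the following function prints an Ingress manifest that relies only on defaultBackend. The function name, the Ingress name `ingress-default-only`, and the Service `tomcat` on port 8080 are assumptions chosen for illustration; the Service would have to exist in the same namespace.

```shell
#!/usr/bin/env bash
# Print an Ingress manifest that has a default backend and nothing else.
# All resource names here are illustrative assumptions.
default_only_manifest() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-default-only
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: tomcat
      port:
        number: 8080
EOF
}

default_only_manifest
```

On a cluster, the output could be piped to `kubectl apply -f -`; every request reaching this Ingress would then go to the default backend.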

bash

[root@k8s-node1 ~]# kubectl explain ingress.spec.defaultBackend

service <IngressServiceBackend>

# Service backend

resource <TypedLocalObjectReference>

# Resource backend

# The two fields are mutually exclusive; setting both fails resource validation.

19.3.3.1 The ingress.spec.defaultBackend.service field

bash

]# kubectl explain ingress.spec.defaultBackend.service

name <string> -required-

# Name of the target Service; it must match an existing Service in the cluster

port <ServiceBackendPort>

- number # port number of the Service, e.g. 8080

- name # name of the Service port, e.g. http

19.3.3.2 The ingress.spec.defaultBackend.resource field

bash

]# kubectl explain ingress.spec.defaultBackend.resource

apiGroup <string>

kind <string> -required-

# Resource kind

name <string> -required-

# Name of the target resource

19.3.4 The ingress.spec.rules field

bash

]# kubectl explain ingress.spec.rules

host <string>

# Host name

# Virtual-host matching based on the Host header of the HTTP/HTTPS request

http <HTTPIngressRuleValue>

# HTTP routing rules

# Defines forwarding rules based on the URL path

19.3.4.1 The ingress.spec.rules.http.paths field

bash

]# kubectl explain ingress.spec.rules.http

paths <[]HTTPIngressPath> -required-

# Array of path rules

bash

]# kubectl explain ingress.spec.rules.http.paths

path <string>

# The URL path to match

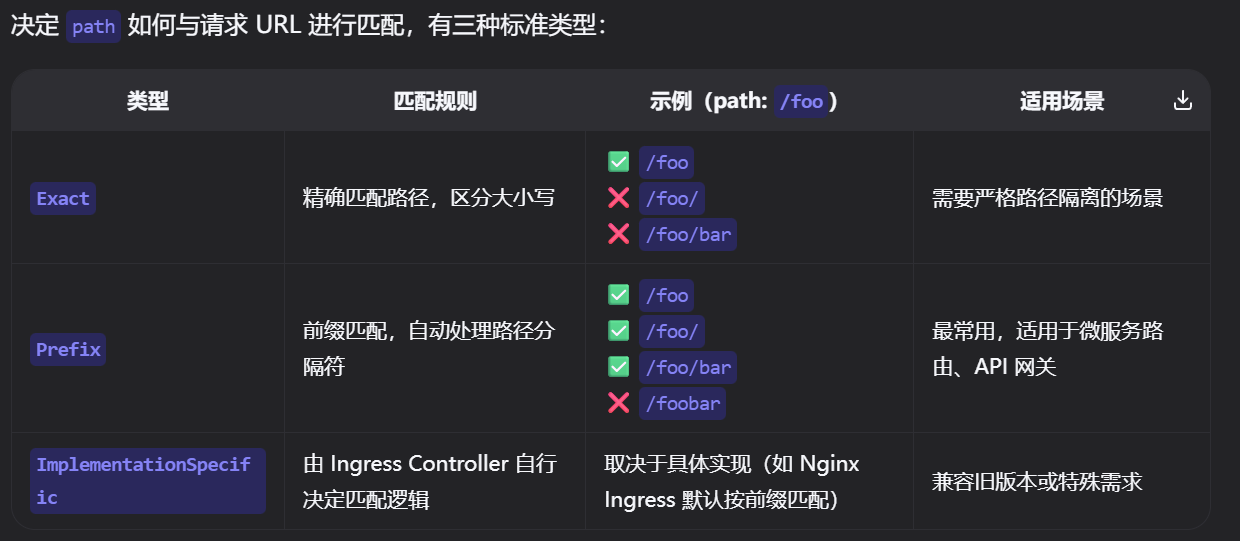

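pathType <string> -required-

# Path matching type

- `"Exact"`

# Matches the URL path exactly (case-sensitive)

- `"Prefix"` (most common)

# Matches by path prefix, compared element by element after splitting on /

- `"ImplementationSpecific"`

# Matching logic is decided by the Ingress Controller

backend <IngressBackend> -required-

# Backend target

Key rule:

In Prefix mode, /foo and /foo/ are equivalent: matching is done per path element, and a trailing slash is ignored.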
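The Prefix rule can be sketched as a small shell function. This is only an illustration of the element-wise semantics described in the Kubernetes documentation, not the controller's actual code; the function name `prefix_match` is made up for this example.

```shell
#!/usr/bin/env bash
# prefix_match RULE_PATH REQUEST_PATH
# Prints "match" or "no match" using element-wise Prefix semantics.
prefix_match() {
  local rule="${1%/}" req="${2%/}"   # ignore one trailing slash on both sides
  if [ -z "$rule" ]; then            # the rule "/" matches every request path
    echo "match"
    return
  fi
  case "$req/" in
    "$rule"/*) echo "match" ;;       # rule is a prefix made of whole path elements
    *)         echo "no match" ;;
  esac
}

prefix_match /foo  /foo/      # prints match
prefix_match /foo/ /foo/bar   # prints match
prefix_match /foo  /foobar    # prints no match
```

The last call shows why Prefix is not a plain string prefix: /foobar starts with the characters /foo, but its first path element is foobar, so it does not match.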

19.3.4.2 The ingress.spec.rules.http.paths.backend field

bash

]# kubectl explain ingress.spec.rules.http.paths.backend

service <IngressServiceBackend>

resource <TypedLocalObjectReference>

# The two are mutually exclusive; service is the one most commonly used

19.3.5 The ingress.spec.tls field

bash

]# kubectl explain ingress.spec.tls

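hosts <[]string>

# List of host names

# Declares the host names this TLS certificate applies to.

# The certificate must cover every host in the list (directly or through a wildcard)

# These should usually match the hosts in rules[].host; otherwise the certificate may not match

secretName <string>

# Secret name

# References an existing Secret in the cluster that contains the TLS certificate and private key

# The Secret type should be kubernetes.io/tls

# It must contain two data items: tls.crt (certificate) and tls.key (private key)

19.4 Making the Ingress Controller highly available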

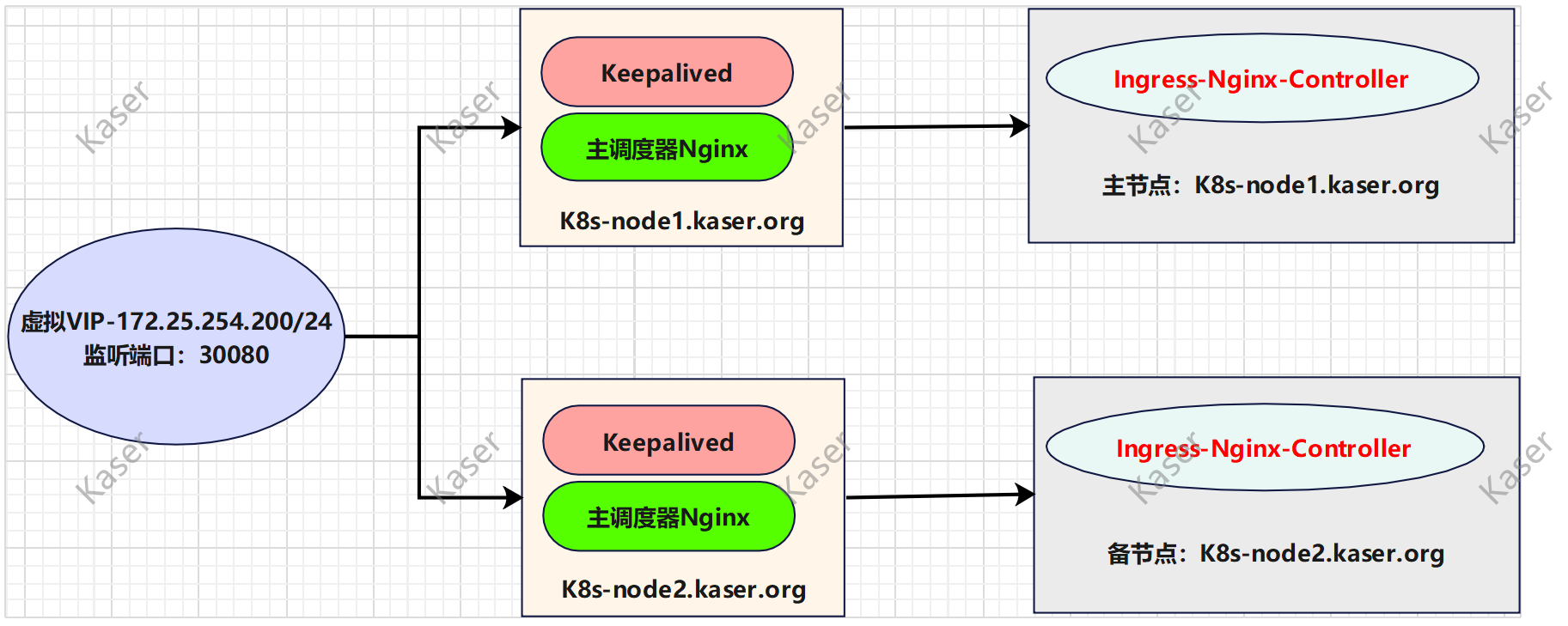

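The Ingress Controller is the entry layer for cluster traffic, so making it highly available matters. A highly available nginx-ingress-controller can be built with keepalived, as follows:

The Ingress Controller is deployed as a Deployment with a nodeSelector and Pod anti-affinity so that its replicas land on two designated Kubernetes worker nodes. The nginx-ingress-controller Pods share the host's IP (hostNetwork), and keepalived plus nginx in front of them provide the high availability.

19.4.1 HA implementation steps and configuration files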

bash

# Upload the required images and import them manually on the worker nodes (n1, n2)

ctr -n k8s.io images import ingress-nginx-controllerv1.1.0.tar.gz

ctr -n k8s.io images import kube-webhook-certgen-v1.1.0.tar.gz

bash

[root@k8s-master1 ~]# mkdir ingress-ha

[root@k8s-master1 ~]# cd ingress-ha/

[root@k8s-master1 ingress-ha]# vim ingress-deploy.yaml

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

automountServiceAccountToken: true

---

# Source: ingress-nginx/templates/controller-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

data:

allow-snippet-annotations: 'true'

---

# Source: ingress-nginx/templates/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- nodes

verbs:

- get

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- namespaces

verbs:

- get

- apiGroups:

- ''

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

resourceNames:

- ingress-controller-leader

verbs:

- get

- update

- apiGroups:

- ''

resources:

- configmaps

verbs:

- create

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml

apiVersion: v1

kind: Service

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

type: ClusterIP

ports:

- name: https-webhook

port: 443

targetPort: webhook

appProtocol: https

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-service.yaml

apiVersion: v1

kind: Service

metadata:

annotations:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

type: NodePort

ipFamilyPolicy: SingleStack

ipFamilies:

- IPv4

ports:

- name: http

port: 80

protocol: TCP

targetPort: http

appProtocol: http

- name: https

port: 443

protocol: TCP

targetPort: https

appProtocol: https

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

replicas: 2

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

revisionHistoryLimit: 10

minReadySeconds: 0

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

spec:

hostNetwork: true

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

topologyKey: kubernetes.io/hostname

dnsPolicy: ClusterFirstWithHostNet

containers:

- name: controller

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.0

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

args:

- /nginx-ingress-controller

- --election-id=ingress-controller-leader

- --controller-class=k8s.io/ingress-nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

runAsUser: 101

allowPrivilegeEscalation: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

ports:

- name: http

containerPort: 80

protocol: TCP

- name: https

containerPort: 443

protocol: TCP

- name: webhook

containerPort: 8443

protocol: TCP

volumeMounts:

- name: webhook-cert

mountPath: /usr/local/certificates/

readOnly: true

resources:

requests:

cpu: 100m

memory: 90Mi

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/controller-ingressclass.yaml

# We don't support namespaced ingressClass yet

# So a ClusterRole and a ClusterRoleBinding is required

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: nginx

namespace: ingress-nginx

spec:

controller: k8s.io/ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml

# before changing this value, check the required kubernetes version

# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

name: ingress-nginx-admission

webhooks:

- name: validate.nginx.ingress.kubernetes.io

matchPolicy: Equivalent

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

failurePolicy: Fail

sideEffects: None

admissionReviewVersions:

- v1

clientConfig:

service:

namespace: ingress-nginx

name: ingress-nginx-controller-admission

path: /networking/v1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: ingress-nginx-admission

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: ingress-nginx-admission

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

rules:

- apiGroups:

- ''

resources:

- secrets

verbs:

- get

- create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: ingress-nginx-admission

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-create

namespace: ingress-nginx

annotations:

helm.sh/hook: pre-install,pre-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

template:

metadata:

name: ingress-nginx-admission-create

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: create

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

imagePullPolicy: IfNotPresent

args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

securityContext:

allowPrivilegeEscalation: false

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

nodeSelector:

kubernetes.io/os: linux

securityContext:

runAsNonRoot: true

runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-patch

namespace: ingress-nginx

annotations:

helm.sh/hook: post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

template:

metadata:

name: ingress-nginx-admission-patch

labels:

helm.sh/chart: ingress-nginx-4.0.10

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 1.1.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: patch

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

imagePullPolicy: IfNotPresent

args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

securityContext:

allowPrivilegeEscalation: false

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

nodeSelector:

kubernetes.io/os: linux

securityContext:

runAsNonRoot: true

runAsUser: 2000

bash

[root@k8s-master1 ingress-ha]# kubectl apply -f ingress-deploy.yaml

[root@k8s-master1 ingress-ha]# kubectl get pods -n ingress-nginx -owide

ingress-nginx-admission-create-wjlts 0/1 Completed 10.244.90.139 k8s-node2.kaser.org

ingress-nginx-admission-patch-8xr9f 0/1 Completed 10.244.90.138 k8s-node2.kaser.org

ingress-nginx-controller-569ffc5cc9-28gs6 1/1 Running 172.25.254.60 k8s-node2.kaser.org

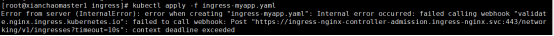

ingress-nginx-controller-569ffc5cc9-tlltg 1/1 Running 172.25.254.50 k8s-node1.kaser.org

If the following error appears, how can it be resolved?

bash

]# kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission

]# kubectl apply -f ingress-myapp.yaml

19.4.2 Analysis of the configuration file

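19.4.2.1 Core functions and main uses of the file

This file is a Kubernetes manifest that deploys a highly available Nginx Ingress Controller, which routes and load-balances traffic from outside the cluster to Services inside it.

Core functions:

- Creates a complete Ingress Nginx controller environment in the Kubernetes cluster

- Provides a single entry point and routing management for HTTP/HTTPS traffic

- Implements request forwarding rules based on domain name and path

- Supports SSL/TLS termination and certificate management

- Ensures high availability through multiple replicas

Main uses:

- Acts as the cluster's traffic gateway, managing external access to in-cluster Services

- Exposes and routes services in a microservice architecture

- Provides load balancing and traffic control

- Supports service isolation and access control in multi-tenant environments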

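19.4.2.2 File structure and key components

The file is written in YAML and contains multiple Kubernetes resource definitions separated by ---. The main components are:

1. Base environment

- Namespace: creates the ingress-nginx namespace to isolate the Ingress controller's resources

- ServiceAccount:

  - ingress-nginx: the controller's main service account

  - ingress-nginx-admission: the admission webhook's service account

2. Configuration and permissions

- ConfigMap: ingress-nginx-controller, which stores the controller's configuration parameters

- RBAC:

  - ClusterRole/ClusterRoleBinding: cluster-level permissions and their binding

  - Role/RoleBinding: namespace-level permissions and their binding

19.4.2.3 Network services

- Service:

  - ingress-nginx-controller: the main Service, NodePort type, exposing ports 80/443

  - ingress-nginx-controller-admission: the admission webhook Service

19.4.2.4 Controller deployment

- Deployment: ingress-nginx-controller, the core component, which includes:

  - Replicas: 2, for high availability

  - Container image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.0

  - Resources: requests 100m CPU and 90Mi memory

  - Network mode: hostNetwork: true, so the Pod uses the host's network

  - Affinity: Pod anti-affinity (a preferred rule), so the replicas are spread across different nodes where possible

  - Health checks: readiness and liveness probes that monitor the controller

19.4.2.5 Extensions

- IngressClass: defines the nginx Ingress class

- Admission webhook:

  - ValidatingWebhookConfiguration: validates the creation and update of Ingress resources

  - Job: creates and manages the webhook certificate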

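19.4.3 What the configuration file provides and where it applies

19.4.3.1 Functions provided

- High availability

  - Multiple replicas (2)

  - Pod anti-affinity to spread replicas across nodes

  - Health checks that monitor the controller in real time

  - Graceful termination (300-second grace period)

- Traffic management

  - HTTP/HTTPS routing and forwarding

  - Routing rules based on domain name and path

  - Load balancing to backend Services

  - Support for session affinity and traffic distribution policies

- Security

  - SSL/TLS termination and certificate management

  - Admission webhook validation of Ingress resources

  - RBAC configured on the least-privilege principle

  - Container security context (runs as non-root user 101)

- Performance

  - Host networking, which reduces network overhead

  - Resource requests (100m CPU, 90Mi memory); the manifest sets no limits

  - The mimalloc memory allocator, loaded through LD_PRELOAD

19.4.3.2 Typical scenarios

- Exposing services in production

  - A single entry point for enterprise applications

  - Managing external access to multiple microservices

  - Load balancing and failover

- Multi-tenant clusters

  - Separate domain names for different teams or projects

  - Namespace-based service isolation

  - Routing for different environments (development, test, production)

- HTTPS service deployment

  - Centralized SSL/TLS certificate management

  - Simpler security configuration on backend services

  - A single HTTPS endpoint

- API gateway

  - Entry point for API services with request routing

  - Path rewriting and request modification

  - Integration with an authentication service for API access control

- CI/CD environments

  - Temporary access addresses for different branches or versions

  - Routing rules that are created and removed dynamically

  - Integration with automated pipelines for environment setup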

19.4.4 Deploy Keepalived + Nginx for high availability

19.4.4.1 Install the packages and configure Nginx

bash

n1 && n2]# yum install -y nginx nginx-mod-stream keepalived

n1 && n2]# cd /etc/nginx/

n1 && n2 nginx]# cp nginx.conf nginx.conf.bak

n1 && n2 nginx]# rm -rf nginx.conf

# Upload the nginx configuration file from the resource package and adjust it to your own hosts

n1 && n2 nginx]# vim nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

# Layer-4 load balancing in front of the two ingress-controller nodes

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-ingress-controller {

server 172.25.254.50:80 weight=5 max_fails=3 fail_timeout=30s; # 修改

server 172.25.254.60:80 weight=5 max_fails=3 fail_timeout=30s; # 修改

}

server {

listen 30080;

proxy_pass k8s-ingress-controller;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

}

]# systemctl daemon-reload

n1 && n2 nginx]# systemctl restart nginx.service

n1 && n2 nginx]# systemctl enable --now nginx.service

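# Note: the ingress controller runs with hostNetwork and already occupies ports 80/443 on these nodes, so this nginx must listen on a different, unused port. This setup uses 30080, so the site is reached at <domain>:30080

19.4.4.2 Configure Keepalived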

bash

n1 && n2]# cd /etc/keepalived/

n1 && n2 keepalived]# cp keepalived.conf keepalived.conf.bak

n1 && n2 keepalived]# rm -rf keepalived.conf

# Upload your own keepalived configuration file from the resource package

n1 keepalived]# vim keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

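interface ens160 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; unique per VRRP instance, identical on master and backup

priority 100 # priority; set 90 on the backup server

advert_int 1 # VRRP advertisement (heartbeat) interval, default 1 second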

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP (VIP)

virtual_ipaddress {

172.25.254.200/24

}

track_script {

check_nginx

}

}

# track_script: the script that checks nginx's state (failover is decided from nginx's state)

# virtual_ipaddress: the virtual IP (VIP)

]# systemctl daemon-reload

n1 keepalived]# systemctl enable --now keepalived.service

n1 keepalived]# scp /etc/keepalived/keepalived.conf 172.25.254.60:/etc/keepalived/keepalived.conf

n2 keepalived]# vim keepalived.conf

...omitted...

vrrp_instance VI_1 {

state BACKUP # Node2 is the backup node

interface ens160

virtual_router_id 51

priority 90 # priority; lower than the master's 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

...omitted...

]# systemctl daemon-reload

n2 keepalived]# systemctl enable --now keepalived.service

bash

# Check which host currently holds the VIP

n1 keepalived]# ip a s ens160

2: ens160:

inet 172.25.254.50/24

inet 172.25.254.200/24 # n1 priority 100 > n2 priority 90

n2 keepalived]# ip a s ens160

2: ens160:

inet 172.25.254.60/24

# Test

n1 keepalived]# systemctl stop keepalived.service

n1 keepalived]# ip a s ens160

2: ens160:

inet 172.25.254.50/24

# With keepalived stopped on n1, n2 no longer receives VRRP advertisements and takes over the VIP, as shown below

[root@k8s-node2 keepalived]# ip a s ens160

2: ens160:

inet 172.25.254.60/24

inet 172.25.254.200/24

# Start the service on n1 again; because preemption is on and n1's priority is higher than n2's, the VIP moves back

[root@k8s-node1 keepalived]# systemctl start keepalived.service

[root@k8s-node1 keepalived]# ip a s ens160

2: ens160:

inet 172.25.254.50/24

inet 172.25.254.200/24

19.4.4.3 Configure the service health-check script

bash

n1 && n2 keepalived]# vim check_nginx.sh

#!/bin/bash

# 1. Check whether Nginx is alive

counter=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$" )

if [ $counter -eq 0 ]; then

    # 2. If it is not alive, try to start Nginx

    service nginx start

    sleep 2

    # 3. After waiting 2 seconds, check the Nginx state again

    counter=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$" )

    # 4. If Nginx is still not alive, stop Keepalived so that the VIP moves to the other node

    if [ $counter -eq 0 ]; then

        service keepalived stop

    fi

fi

n1 && n2 keepalived]# chmod +x check_nginx.sh

n1 && n2 keepalived]# systemctl daemon-reload

n1 && n2 keepalived]# systemctl restart keepalived.service

19.4.5 Example: proxying in-cluster Pods over HTTP

19.4.5.1 Deploy the backend Tomcat service

bash

n1 && n2 ]# ctr -n k8s.io images import xianchao-tomcat.tar.gz

docker.io/xianchao/tomcat-8.5-jre8:v1

[root@k8s-master1 ~]# vim svc-deploy-ingress.yaml

apiVersion: v1

kind: Service

metadata:

name: tomcat

spec:

selector:

app: tomcat

release: canary

ports:

- name: http

targetPort: 8080

port: 8080

- name: ajp

targetPort: 8009

port: 8009

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: tomcat-deploy

spec:

replicas: 2

selector:

matchLabels:

app: tomcat

release: canary

template:

metadata:

labels:

app: tomcat

release: canary

spec:

containers:

- name: tomcat

image: docker.io/library/tomcat:latest

imagePullPolicy: IfNotPresent

ports:

- name: http

containerPort: 8080

- name: ajp

containerPort: 8009

[root@k8s-master1 ~]# kubectl apply -f svc-deploy-ingress.yaml

[root@k8s-master1 ~]# kubectl get pods -l app=tomcat

tomcat-deploy-54f646c45c-7ckq7 1/1 Running 0 37s

tomcat-deploy-54f646c45c-zmwf8 1/1 Running 0 37s

19.4.5.2 Write the Ingress rule

bash

[root@k8s-master1 ~]# vim ingress-myapp.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-myapp

spec:

ingressClassName: nginx

rules:

- host: tomcat.kaser.org

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: tomcat

port:

number: 8080

[root@k8s-master1 ~]# kubectl apply -f ingress-myapp.yaml

# The ADDRESS column may take a little while to show `172.25.254.50,172.25.254.60`

[root@k8s-master1 ~]# kubectl get ingress

ingress-myapp nginx tomcat.kaser.org 172.25.254.50,172.25.254.60 80

[root@k8s-master1 ~]# kubectl describe ingress ingress-myapp

Address: 172.25.254.50,172.25.254.60

tomcat.kaser.org

/ tomcat:8080 (10.244.147.193:8080,10.244.90.130:8080)

[root@k8s-master1 ~]# kubectl get svc tomcat

tomcat ClusterIP 10.109.239.122 <none> 8080/TCP,8009/TCP 17m

[root@k8s-master1 ~]# kubectl describe svc tomcat

Port: http 8080/TCP

TargetPort: 8080/TCP

Endpoints: 10.244.147.193:8080,10.244.90.130:8080

Port: ajp 8009/TCP

TargetPort: 8009/TCP

Endpoints: 10.244.147.193:8009,10.244.90.130:8009

[root@k8s-master1 ~]# kubectl get pods -l app=tomcat -owide

tomcat-deploy-54f646c45c-7ckq7 Running 10.244.147.193 k8s-node1.kaser.org

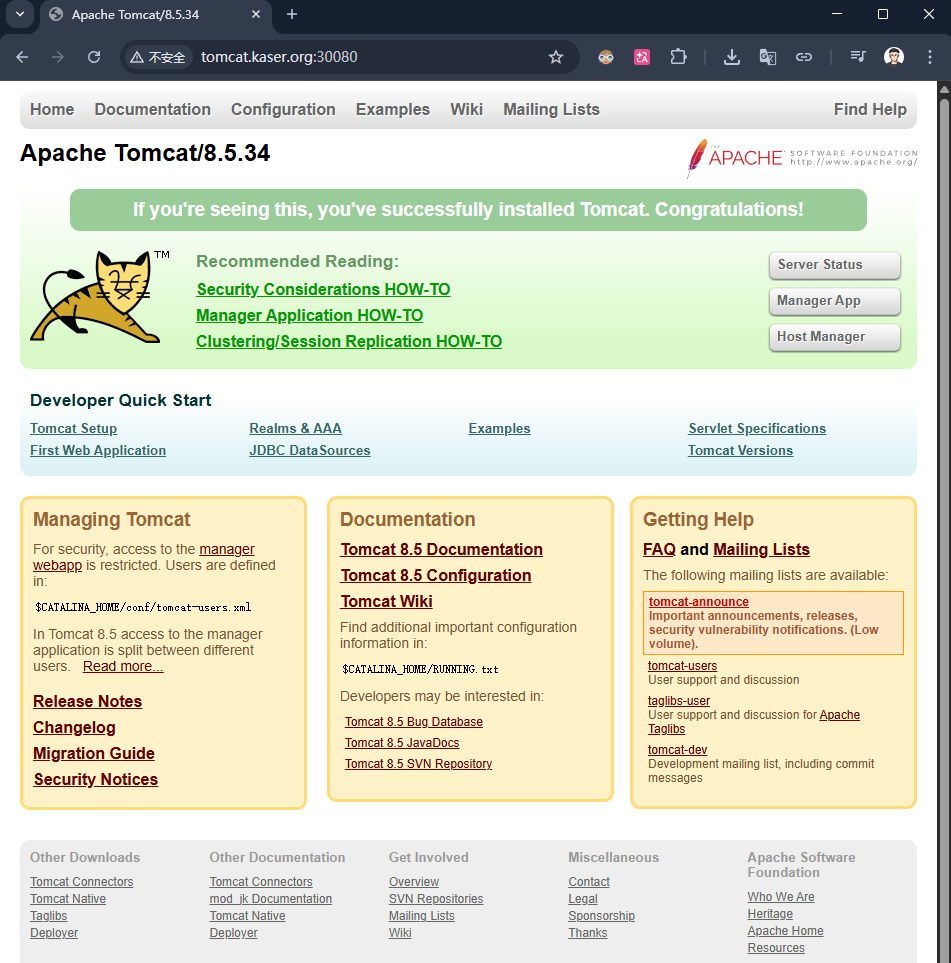

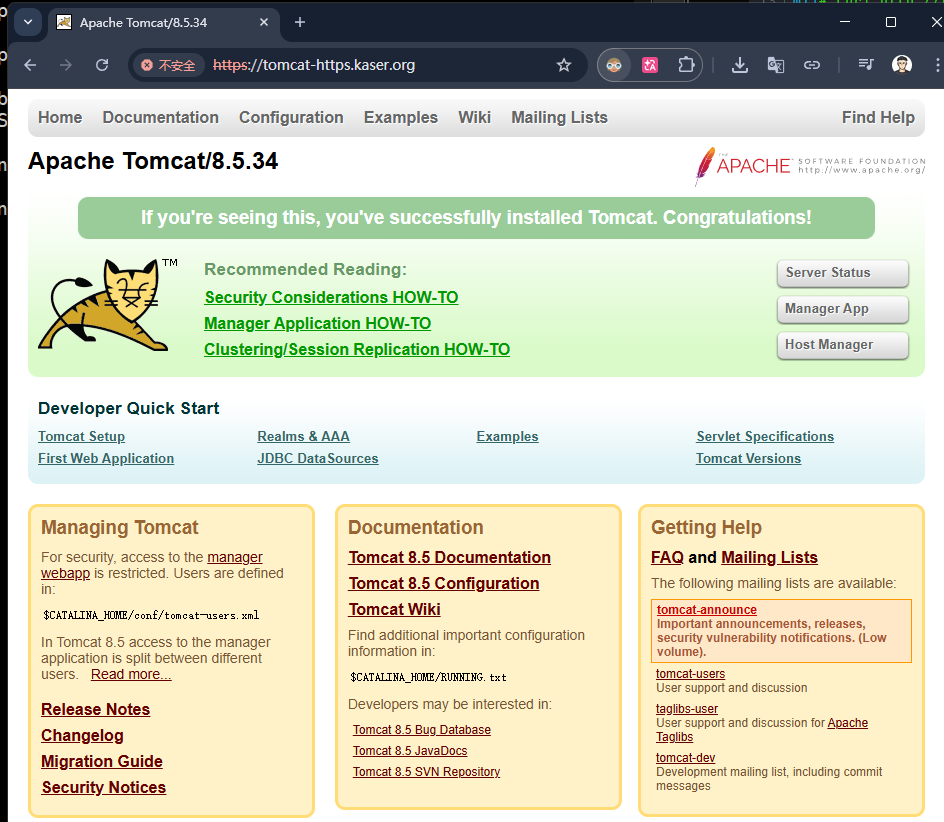

tomcat-deploy-54f646c45c-zmwf8 Running 10.244.90.130 k8s-node2.kaser.org

19.4.5.3 Access test

bash

# Method 1

"C:\Windows\System32\drivers\etc\hosts" # Open with Notepad as administrator, add the line below, then save and exit

172.25.254.200 tomcat.kaser.org

Open http://tomcat.kaser.org in a browser, as shown in the figure below

# Method 2

m1]# vim /etc/hosts

...omitted...

172.25.254.200 tomcat.kaser.org

m1]# scp /etc/hosts 172.25.254.50:/etc/hosts

m1]# scp /etc/hosts 172.25.254.60:/etc/hosts

m1]# curl http://tomcat.kaser.org:30080

The proxy flow:

bash

tomcat.kaser.org:30080

|

172.25.254.200:30080

|

172.25.254.50:80 / 172.25.254.60:80

|

svc:tomcat:8080

|

tomcat-deploy

19.4.6 Example: proxying in-cluster Pods over HTTPS

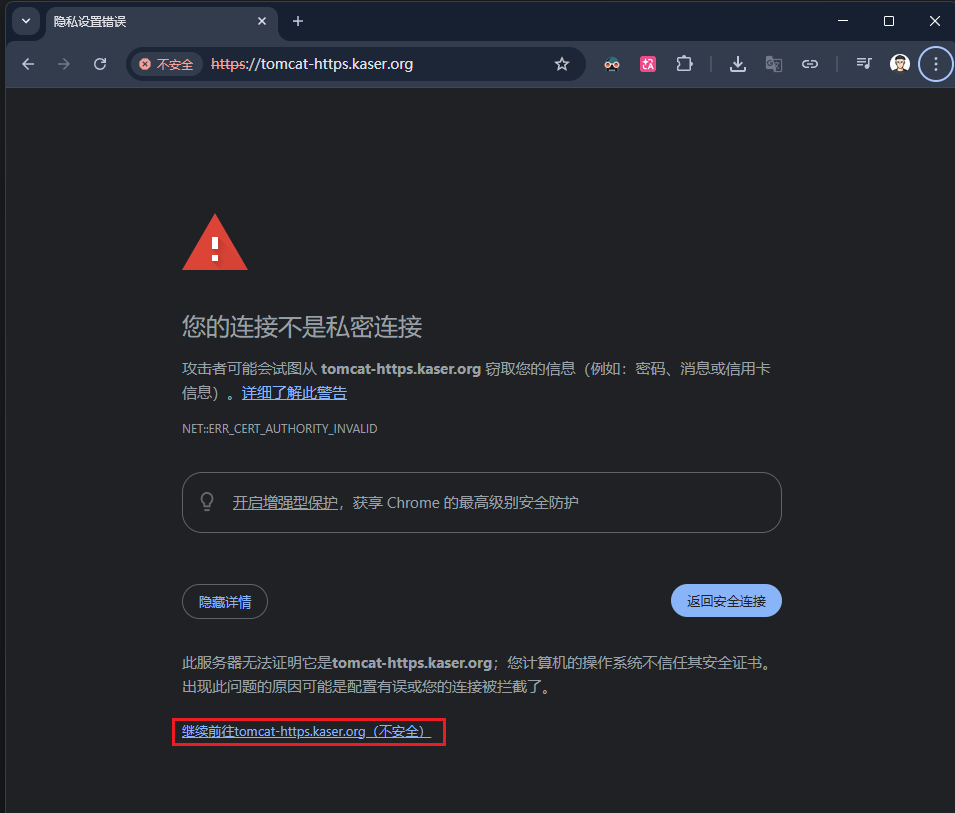

19.4.6.1 Build the TLS site

bash

[root@k8s-master1 ~]# openssl genrsa -out tls.key 2048

[root@k8s-master1 ~]# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=Beijing/L=Beijing/O=DevOps/CN=tomcat-https.kaser.org

[root@k8s-master1 ~]# kubectl create secret tls tomcat-ingress-secret --cert=tls.crt --key=tls.key

[root@k8s-master1 ~]# kubectl get secret

tomcat-ingress-secret kubernetes.io/tls 2 6s

[root@k8s-master1 ~]# kubectl describe secret tomcat-ingress-secret

tls.crt: 1326 bytes

tls.key: 1675 bytes

19.4.6.2 Creating the Ingress rule

bash

[root@k8s-master1 ~]# vim ingress-tomcat-tls.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tomcat-tls
spec:
  ingressClassName: nginx
  rules:
  - host: tomcat-https.kaser.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat
            port:
              number: 8080
  tls:
  - hosts:
    - tomcat-https.kaser.org
    secretName: tomcat-ingress-secret

[root@k8s-master1 ~]# kubectl apply -f ingress-tomcat-tls.yaml

[root@k8s-master1 ~]# kubectl get ingress

ingress-tomcat-tls nginx tomcat-https.kaser.org 172.25.254.50,172.25.254.60 80, 443

19.4.6.3 Access test

bash

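# Method 1: test from a Windows browser

"C:\Windows\System32\drivers\etc\hosts" # open in Notepad as Administrator, add the line below, then save and close

172.25.254.200 tomcat.kaser.org tomcat-https.kaser.org

Open https://tomcat-https.kaser.org in a browser (accept the self-signed certificate warning); the result is shown in the figure.

# Method 2: test from the Linux hosts

m1]# vim /etc/hosts

... (omitted) ...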

172.25.254.200 tomcat.kaser.org tomcat-https.kaser.org

m1]# scp /etc/hosts 172.25.254.50:/etc/hosts

m1]# scp /etc/hosts 172.25.254.60:/etc/hosts

m1]# curl -k https://tomcat-https.kaser.org
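The `-k` flag is needed because the certificate is self-signed. The command in 19.4.6.1 also sets only a CN and no explicit lifetime (`openssl req -x509` defaults to 30 days), while many current TLS clients check the host name against subjectAltName rather than CN. A hedged variant follows, assuming OpenSSL 1.1.1 or newer for `-addext`. The scratch directory, the 365-day lifetime and the SAN entry are illustrative choices, not something the original setup used.

```bash
# Sketch: self-signed certificate with an explicit lifetime and a SAN entry.
host=tomcat-https.kaser.org
workdir=$(mktemp -d)   # scratch directory, so existing tls.key/tls.crt are not overwritten

openssl req -x509 -newkey rsa:2048 -nodes \
  -keyout "$workdir/tls.key" -out "$workdir/tls.crt" \
  -days 365 \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=DevOps/CN=$host" \
  -addext "subjectAltName=DNS:$host" 2>/dev/null

# Inspect what a client would see: subject, validity window and SAN entries.
openssl x509 -in "$workdir/tls.crt" -noout -subject -dates
openssl x509 -in "$workdir/tls.crt" -noout -text | grep -A1 'Subject Alternative Name'
```

The resulting pair can be loaded with the same `kubectl create secret tls ... --cert=... --key=...` command as before.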

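19.5 Gray releases with the Nginx Ingress Controller's canary mechanism

**The mechanism relies on specific Ingress annotations to route traffic between the old and new versions of a service as needed.** The typical scenarios and the supported canary rules are described below.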

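19.5.1 Typical gray-release scenarios

19.5.1.1 Scenario 1: targeted release by user identity

Use this when the new version should be opened only to a specific group of users (for example internal testers or VIP users). The business side marks the user type with a specific Header or Cookie on the request. Nginx Ingress routes requests that carry the marker to the new (Canary) version, and all other users keep reaching the old version. This approach suits gray-release validation and A/B testing.

19.5.1.2 Scenario 2: gradual release by traffic share

Use this when the stability of the new version has to be verified step by step. A traffic weight sends a fixed share of requests (for example 10%) to the new version while the old version handles the rest. Once the new version looks stable, raise the weight in steps until all traffic has moved over. This approach suits risk-controlled version iteration; moving the weight straight to 100 amounts to a blue-green style cutover.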

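19.5.2 Canary annotations supported by Nginx Ingress

A gray release needs two Ingress resources:

- Regular Ingress: points to the stable version (for example Service A)

- Canary Ingress: points to the gray version (for example Service A') and carries the annotations below to enable the canary rules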

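19.5.2.1 nginx.ingress.kubernetes.io/canary-by-header

Routes based on a request Header.

- When the Header value is `always`, the request is always routed to the Canary version

- When the Header value is `never`, the request is always routed to the regular version

- For any other value the Header is ignored and the remaining canary rules are evaluated in order of precedence

- Suited to targeted releases and A/B testing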

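19.5.2.2 nginx.ingress.kubernetes.io/canary-by-header-value

Used together with canary-by-header; specifies the exact Header value to match.

- A request is routed to the Canary version only when the value of that Header exactly matches the configured value

- Gives finer-grained control over user groups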

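19.5.2.3 nginx.ingress.kubernetes.io/canary-weight

Splits traffic by weight; the value ranges from 0 to 100.

- For example, a value of 10 means roughly 10% of requests are routed to the Canary version

- Suited to gradual, percentage-based releases; switching the value between 0 and 100 can also drive a blue-green style cutover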

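19.5.2.4 nginx.ingress.kubernetes.io/canary-by-cookie

Routes based on a Cookie value.

- When the Cookie value is `always`, the request is routed to the Canary version

- When the Cookie value is `never`, the request is routed to the regular version

- Suited to gray-release scenarios that need a user's session to stay on one version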

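19.5.3 Rule precedence

When several canary rules are configured at the same time, Nginx Ingress applies them in the following order, from highest to lowest precedence:

- canary-by-header (including canary-by-header-value)

- canary-by-cookie

- canary-weight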
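The precedence can be sketched as a small decision function. This is a simplified model for illustration, not the controller's actual code: the function name `canary_route` is hypothetical, it ignores canary-by-header-value, and the random draw is passed in as an argument so that the result is reproducible.

```bash
# canary_route HEADER_VALUE COOKIE_VALUE WEIGHT DRAW
#   HEADER_VALUE / COOKIE_VALUE: value seen on the request ("" when absent)
#   WEIGHT: canary weight, 0-100; DRAW: a random number in 1..100
canary_route() {
  case "$1" in                      # 1. header rule is checked first
    always) echo canary; return ;;
    never)  echo stable; return ;;
  esac
  case "$2" in                      # 2. then the cookie rule
    always) echo canary; return ;;
    never)  echo stable; return ;;
  esac
  # 3. finally the weight rule
  if [ "$4" -le "$3" ]; then echo canary; else echo stable; fi
}

canary_route always never 0 50    # header wins over cookie
canary_route ""     always 0 50   # cookie wins over weight
canary_route ""     ""     30 25  # draw is within the weight
canary_route ""     ""     30 80  # draw is above the weight
```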

bash

header --> cookie --> weight

19.5.4 Example: deploying production and test versions of a web service

19.5.4.1 Deployment steps

Write the v1 service

bash

# Upload the image archive from the resource bundle and import it manually

n1 && n2]# ctr -n k8s.io images import openresty.tar.gz

[root@k8s-master1 ~]# vim v1.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: cm-nginx-v1
  labels:
    app: nginx
    version: v1
data:
  nginx.conf: |-
    worker_processes 1;
    events {
      accept_mutex on;
      multi_accept on;
      use epoll;
      worker_connections 1024;
    }
    http {
      ignore_invalid_headers off;
      server {
        listen 80;
        location / {
          access_by_lua '
            local header_str = ngx.say("nginx-v1")
          ';
        }
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v1
  template:
    metadata:
      labels:
        app: nginx
        version: v1
    spec:
      containers:
      - name: nginx
        image: docker.io/openresty/openresty:centos
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - name: config-v1
          mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          subPath: nginx.conf
      volumes:
      - name: config-v1
        configMap:
          name: cm-nginx-v1
---
apiVersion: v1
kind: Service
metadata:
  name: svc-nginx-v1
spec:
  type: ClusterIP
  ports:
  - name: http
    protocol: TCP
    port: 80
  selector:
    app: nginx
    version: v1

bash

[root@k8s-master1 ~]# kubectl apply -f v1.yaml

configmap/cm-nginx-v1 created

deployment.apps/nginx-v1 created

service/svc-nginx-v1 created

[root@k8s-master1 ~]# kubectl get cm

cm-nginx-v1 1 13s

[root@k8s-master1 ~]# kubectl get deploy

nginx-v1 1/1 1 1

[root@k8s-master1 ~]# kubectl get pods -l app=nginx -owide

nginx-v1-6d68c4577b-4cwhm 1/1 Running 10.244.90.133 k8s-node2.kaser.org

[root@k8s-master1 ~]# kubectl get svc | grep nginx

svc-nginx-v1 ClusterIP 10.109.85.15 <none> 80/TCP 65s

[root@k8s-master1 ~]# kubectl describe svc svc-nginx-v1

Endpoints: 10.244.90.133:80

Write the v2 service

bash

# Copy the v1 YAML file and adjust it (replace the v1-specific names and labels with v2)

[root@k8s-master1 ~]# cp v1.yaml v2.yaml

[root@k8s-master1 ~]# vim v2.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: cm-nginx-v2
  labels:
    app: nginx
    version: v2
data:
  nginx.conf: |-
    worker_processes 1;
    events {
      accept_mutex on;
      multi_accept on;
      use epoll;
      worker_connections 1024;
    }
    http {
      ignore_invalid_headers off;
      server {
        listen 80;
        location / {
          access_by_lua '
            local header_str = ngx.say("nginx-v2")
          ';
        }
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
      version: v2
  template:
    metadata:
      labels:
        app: nginx
        version: v2
    spec:
      containers:
      - name: nginx
        image: docker.io/openresty/openresty:centos
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        volumeMounts:
        - name: config-v2
          mountPath: /usr/local/openresty/nginx/conf/nginx.conf
          subPath: nginx.conf
      volumes:
      - name: config-v2
        configMap:
          name: cm-nginx-v2
---
apiVersion: v1
kind: Service
metadata:
  name: svc-nginx-v2
spec:
  type: ClusterIP
  ports:
  - name: http
    protocol: TCP
    port: 80
  selector:
    app: nginx
    version: v2

[root@k8s-master1 ~]# kubectl apply -f v2.yaml

[root@k8s-master1 ~]# kubectl get cm

cm-nginx-v1 1

cm-nginx-v2 1

[root@k8s-master1 ~]# kubectl get deploy

nginx-v1 1/1 1 1

nginx-v2 1/1 1 1

[root@k8s-master1 ~]# kubectl get pods -l version=v2 -owide

nginx-v2-6fb8b8bdcb-t9l49 1/1 Running 10.244.90.138

[root@k8s-master1 ~]# kubectl get svc | grep nginx

svc-nginx-v1 ClusterIP 10.109.85.15 80/TCP

svc-nginx-v2 ClusterIP 10.97.63.142 80/TCP

[root@k8s-master1 ~]# kubectl describe svc svc-nginx-v2

Endpoints: 10.244.90.138:80
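Copying v1.yaml and editing it by hand works, but a blanket replacement of `v1` with `v2` would also rewrite `apiVersion: v1` and `apps/v1`. Below is a small sketch of a more targeted rewrite. The function name `to_v2` is hypothetical, and the patterns assume the naming used in this example (`nginx-v1`, `config-v1`, and a `version: v1` label).

```bash
# Rewrite only the v1 markers that belong to this example,
# leaving apiVersion lines such as "apiVersion: v1" and "apps/v1" alone.
to_v2() {
  sed -E \
    -e 's/(nginx-|config-)v1/\1v2/g' \
    -e 's/^([[:space:]]*version: )v1$/\1v2/'
}

# Small sample instead of the real file, so the sketch runs anywhere.
to_v2 <<'EOF'
apiVersion: apps/v1
  name: nginx-v1
    version: v1
          local header_str = ngx.say("nginx-v1")
  name: svc-nginx-v1
EOF
```

With the real file this would be `to_v2 < v1.yaml > v2.yaml`; it is worth diffing the result against v1.yaml before applying it.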

bash

[root@k8s-master1 ~]# kubectl get pods -l app=nginx -owide

nginx-v1-6d68c4577b-4cwhm 1/1 Running 10.244.90.133

nginx-v2-6fb8b8bdcb-t9l49 1/1 Running 10.244.90.138

[root@k8s-master1 ~]# curl 10.244.90.133:80

nginx-v1

[root@k8s-master1 ~]# curl 10.244.90.138:80

nginx-v2

Create an Ingress that exposes the service externally, point it at the v1 service, and test it

bash

[root@k8s-master1 ~]# kubectl get svc

svc-nginx-v1 ClusterIP 10.109.85.15 <none> 80/TCP

svc-nginx-v2 ClusterIP 10.97.63.142 <none> 80/TCP

[root@k8s-master1 ~]# vim v1-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
spec:
  ingressClassName: nginx
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-nginx-v1
            port:
              number: 80

[root@k8s-master1 ~]# kubectl apply -f v1-ingress.yaml

[root@k8s-master1 ~]# kubectl get ingress nginx

nginx nginx canary.example.com 172.25.254.50,172.25.254.60 80

[root@k8s-master1 ~]# kubectl describe ingress nginx

Address: 172.25.254.50,172.25.254.60

Ingress Class: nginx

canary.example.com

/ svc-nginx-v1:80 (10.244.90.133:80)

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" http://172.25.254.200

nginx-v1

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" http://172.25.254.50

nginx-v1

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" http://172.25.254.60

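nginx-v1

19.5.4.2 Test: traffic splitting by Header

List of valid annotations: https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/annotations.md

Create a Canary Ingress that points to the v2 backend service and carries annotations so that only requests with a header named Region whose value matches xa or sh are forwarded to this Canary Ingress. This simulates releasing the new version to users in Xi'an (xa) and Shanghai (sh):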
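The value of canary-by-header-pattern is a regular expression (the annotation list linked above describes it as PCRE matching). These notes do not say whether a bare `xa|sh` is anchored, so that is worth verifying on a real cluster. The sketch below uses grep's extended regex as a stand-in for the controller's engine to show what the difference would be. The helper name `header_matches` and the sample value `xa-test` are made up for illustration.

```bash
# header_matches PATTERN VALUE  -> prints yes or no
header_matches() {
  if printf '%s\n' "$2" | grep -Eq -- "$1"; then echo yes; else echo no; fi
}

for region in xa sh bj xa-test; do
  printf '%-8s unanchored=%s anchored=%s\n' "$region" \
    "$(header_matches 'xa|sh' "$region")" \
    "$(header_matches '^(xa|sh)$' "$region")"
done
```

If the controller turns out to match unanchored, writing the annotation as `^(xa|sh)$` would restrict the canary to exactly those two values.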

bash

[root@k8s-master1 ~]# vim v2-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "Region"
    nginx.ingress.kubernetes.io/canary-by-header-pattern: "xa|sh"
spec:
  ingressClassName: nginx
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-nginx-v2
            port:
              number: 80

bash

[root@k8s-master1 ~]# kubectl apply -f v2-ingress.yaml

[root@k8s-master1 ~]# kubectl get ingress nginx-canary

nginx-canary nginx canary.example.com 172.25.254.50,172.25.254.60 80

[root@k8s-master1 ~]# kubectl describe ingress nginx-canary

Name: nginx-canary

Address: 172.25.254.50,172.25.254.60

Rules:

Host Path Backends

---- ---- --------

canary.example.com

/ svc-nginx-v2:80 (10.244.90.138:80)

Annotations: nginx.ingress.kubernetes.io/canary: true

nginx.ingress.kubernetes.io/canary-by-header: Region

nginx.ingress.kubernetes.io/canary-by-header-pattern: xa|sh

# Plain request without the special header --> v1

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" http://172.25.254.200

nginx-v1

# Header Region: xa --> v2

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" -H "Region: xa" http://172.25.254.200

nginx-v2

# Header Region: sh --> v2

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" -H "Region: sh" http://172.25.254.200

nginx-v2

# Header Region: bj --> not covered by the pattern, so the request goes to v1

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" -H "Region: bj" http://172.25.254.200

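nginx-v1

19.5.4.3 Test: traffic splitting by Cookie

This works like the Header case, except that a Cookie rule cannot use a custom value: only `always` and `never` are recognised. The example again simulates a release to users in the Xi'an region, forwarding only requests that carry a cookie named xa (set to `always`) to this Canary Ingress.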
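To make the cookie rule concrete, here is a simplified model that pulls one cookie out of a raw Cookie header and classifies its value. It is an illustration only: the function name `cookie_decision` is hypothetical, and "fallthrough" stands for "this rule does not apply, so the next rule (or the regular version) decides".

```bash
# cookie_decision COOKIE_HEADER NAME  -> canary | stable | fallthrough
cookie_decision() {
  value=$(printf '%s\n' "$1" | tr ';' '\n' | sed 's/^ *//' |
    awk -F= -v n="$2" '$1 == n { print substr($0, length(n) + 2); exit }')
  case "$value" in
    always) echo canary ;;
    never)  echo stable ;;
    *)      echo fallthrough ;;
  esac
}

cookie_decision "xa=always" xa            # cookie present with value always
cookie_decision "lang=zh; xa=never" xa    # several cookies, xa is never
cookie_decision "xa=1" xa                 # any other value does not trigger the rule
cookie_decision "" xa                     # no cookie at all
```

One detail when testing with curl: according to the curl manual, a `--cookie` argument without an `=` sign is treated as the name of a file to read cookies from, so `--cookie "xa"` most likely sends no cookie at all. Use the `name=value` form, such as `--cookie "xa=always"`.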

bash

[root@k8s-master1 ~]# cp v2-ingress.yaml v2-ingress-cookie.yaml

[root@k8s-master1 ~]# vim v2-ingress-cookie.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-canary-cookie # changed
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-cookie: "xa" # changed
spec:
  ingressClassName: nginx
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-nginx-v2
            port:
              number: 80

bash

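# Note: the ingress-nginx docs state that at most one canary Ingress applies per Ingress rule; if a canary Ingress from an earlier test still exists, delete it first (for example: kubectl delete ingress nginx-canary)

[root@k8s-master1 ~]# kubectl apply -f v2-ingress-cookie.yaml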

[root@k8s-master1 ~]# kubectl get ingress | grep cookie

nginx-canary-cookie nginx canary.example.com 172.25.254.50,172.25.254.60 80

[root@k8s-master1 ~]# kubectl describe ingress nginx-canary-cookie

Name: nginx-canary-cookie

Namespace: default

Address: 172.25.254.50,172.25.254.60

Ingress Class: nginx

Rules:

Host Path Backends

---- ---- --------

canary.example.com

/ svc-nginx-v2:80 (10.244.90.138:80)

Annotations: nginx.ingress.kubernetes.io/canary: true

nginx.ingress.kubernetes.io/canary-by-cookie: xa

# Plain request --> v1

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" http://172.25.254.200

nginx-v1

# --cookie "xa" --> v1: the rule only reacts to the values always and never (and curl treats a --cookie argument without "=" as a cookie file name, so no xa cookie is sent here)

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" --cookie "xa" http://172.25.254.200

nginx-v1

# Cookie xa=always --> v2

[root@k8s-master1 ~]# curl -H "Host: canary.example.com" --cookie "xa=always" http://172.25.254.200

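nginx-v2

19.5.4.4 Test: traffic splitting by service weight

A weight-based Canary Ingress is the simplest variant: it only declares the share of traffic to send to the canary. This example sends 30% of the traffic to the v2 version.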
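Before running the test it helps to see why a 30% weight does not mean exactly 3 out of every 10 requests. The toy model below treats each request as an independent random draw in 1..100 that goes to the canary when the draw is at most the weight. This is an assumption made for illustration (the function name `simulate_weight` is hypothetical, and the controller's own random source differs).

```bash
# simulate_weight REQUESTS WEIGHT  -> number of requests that hit the canary
simulate_weight() {
  awk -v n="$1" -v w="$2" 'BEGIN {
    srand()                                   # seed from the current time
    for (i = 0; i < n; i++)
      if (int(rand() * 100) + 1 <= w) hits++  # draw in 1..100
    print hits + 0
  }'
}

echo "canary hits out of 20:   $(simulate_weight 20 30)"
echo "canary hits out of 2000: $(simulate_weight 2000 30)"
```

With only 20 requests the count can land noticeably above or below 6; the share settles near 30% only over a larger number of requests.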

bash

[root@k8s-master1 ~]# vim v2-ingress-weight.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-canary-weight
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "30" # the key is canary-weight, not canary-by-weight
spec:
  ingressClassName: nginx
  rules:
  - host: canary.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-nginx-v2
            port:
              number: 80

[root@k8s-master1 ~]# kubectl apply -f v2-ingress-weight.yaml

[root@k8s-master1 ~]# kubectl get ingress | grep weight

nginx-canary-weight nginx canary.example.com 172.25.254.50,172.25.254.60 80

[root@k8s-master1 ~]# kubectl describe ingress nginx-canary-weight

Name: nginx-canary-weight

Address: 172.25.254.50,172.25.254.60

Ingress Class: nginx

Rules:

canary.example.com

/ svc-nginx-v2:80 (10.244.90.138:80)

Annotations: nginx.ingress.kubernetes.io/canary: true

nginx.ingress.kubernetes.io/canary-weight: 30

[root@k8s-master1 ~]# for i in {1..20};do curl -H "Host: canary.example.com" http://172.25.254.200;done

nginx-v2

nginx-v2

nginx-v2

nginx-v1

nginx-v1

nginx-v2

nginx-v2

nginx-v1

nginx-v1

nginx-v2

nginx-v1

nginx-v1

nginx-v1

nginx-v1

nginx-v1

nginx-v2

nginx-v1

nginx-v1

nginx-v1

nginx-v2
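Counting the 20 responses above by eye is error-prone, so a small tally helps. The sketch below also prints the expected number of canary hits and its standard deviation, treating each request as an independent draw (a modelling assumption). The helper names `tally` and `expected_hits` are hypothetical.

```bash
# Count responses per version; reads one response body per line from stdin.
tally() { sort | uniq -c | awk '{ print $2 "=" $1 }'; }

# Expected canary hits and standard deviation for N requests at WEIGHT percent.
expected_hits() {
  awk -v n="$1" -v w="$2" 'BEGIN {
    p = w / 100
    printf "mean=%.1f sd=%.1f\n", n * p, sqrt(n * p * (1 - p))
  }'
}

# The 20 responses shown above: 12 x nginx-v1 and 8 x nginx-v2.
awk 'BEGIN { for (i = 0; i < 12; i++) print "nginx-v1"
             for (i = 0; i < 8;  i++) print "nginx-v2" }' | tally
expected_hits 20 30
```

The run above returned 8 canary responses against an expected 6, about one standard deviation away, which is ordinary variation for such a small sample. On the cluster the same tally can be fed directly from the loop, for example `for i in $(seq 1 200); do curl -s -H "Host: canary.example.com" http://172.25.254.200; done | tally`.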