Deployment

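A very important feature of Deployment is rolling updates of Pods. For example, when our application changes, we only need to update the container image and change the image in the Deployment's Pod template. The Deployment then upgrades the existing Pods with a rolling update (Rolling Update). This capability matters because online services must keep running without interruption, which makes rolling updates an essential feature. Deployment implements it on top of the ReplicaSet resource covered earlier. Put simply, you can think of each Deployment as corresponding to one deployment of an application in the cluster.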
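To make the Deployment/ReplicaSet split concrete: the Deployment controller keeps one ReplicaSet per distinct Pod template and tags it with a `pod-template-hash` label, which is where suffixes such as `86c57bc6b8` in the Pod and RS names below come from. The following is a simplified sketch under stated assumptions: it hashes the template with SHA-256 over sorted JSON, whereas the real controller computes the hash differently (FNV-based), so the values will not match kubectl's output. `make_template` and `replicaset_name` are hypothetical helpers for illustration only.

```python
import hashlib
import json
from typing import Any, Dict


def template_hash(pod_template: Dict[str, Any]) -> str:
    """Short, stable hash of a Pod template.

    ASSUMPTION: SHA-256 over canonical JSON, for illustration only; the
    real controller uses a different (FNV-based) hash.
    """
    canonical = json.dumps(pod_template, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:10]


def replicaset_name(deployment: str, pod_template: Dict[str, Any]) -> str:
    """Hypothetical helper: <deployment>-<pod-template-hash>."""
    return f"{deployment}-{template_hash(pod_template)}"


def make_template(image: str) -> Dict[str, Any]:
    """Hypothetical helper building the Pod template used in nginx-deploy.yaml."""
    return {
        "metadata": {"labels": {"app": "nginx"}},
        "spec": {
            "containers": [
                {"name": "nginx", "image": image, "ports": [{"containerPort": 80}]}
            ]
        },
    }


if __name__ == "__main__":
    original = replicaset_name("nginx-deploy", make_template("nginx"))
    # Scaling only changes spec.replicas, which is not part of the template.
    print(original == replicaset_name("nginx-deploy", make_template("nginx")))  # True
    # A new image changes the template, so a different ReplicaSet name results.
    print(original == replicaset_name("nginx-deploy", make_template("nginx:1.27.0")))  # False
```

Because `spec.replicas` lives outside the template, scaling keeps using the same ReplicaSet, while changing the image leads to a new one; this is the behaviour seen later in the scaling and rolling-update sections.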

The format of a Deployment resource object is almost identical to that of a ReplicaSet. The following is a typical Deployment resource:

# nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: default
spec:
  replicas: 3 # desired number of Pod replicas; defaults to 1
  selector: # label selector; must match the labels in the Pod template
    matchLabels:
      app: nginx
  template: # Pod template
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

Here we have only changed the kind to Deployment. Let's create this resource object first:

root@master01:~/kubernetes# vim nginx-deploy.yaml

root@master01:~/kubernetes# kubectl apply -f nginx-deploy.yaml

deployment.apps/nginx-deploy created

root@master01:~/kubernetes# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 3/3 3 3 7m14s

root@master01:~/kubernetes# kubectl get pod -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-deploy-86c57bc6b8-2qfnn 1/1 Running 0 7m3s

nginx-deploy-86c57bc6b8-rjbr4 1/1 Running 0 7m3s

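nginx-deploy-86c57bc6b8-wxtpj 1/1 Running 0 7m3s

So far this looks no different from the earlier RS object: the number of replicas is maintained according to spec.replicas. Let's pick one of the Pods and look at its description: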

root@master01:~/kubernetes# kubectl describe pod nginx-deploy-86c57bc6b8-2qfnn

Name: nginx-deploy-86c57bc6b8-2qfnn

Namespace: default

Priority: 0

Service Account: default

Node: node02/192.168.48.102

Start Time: Mon, 16 Feb 2026 14:17:06 +0800

Labels: app=nginx

pod-template-hash=86c57bc6b8

Annotations: cni.projectcalico.org/containerID: 8aaebb25ebfe3af7d3c66482fcd636f7e419ff94841a13bb31b8132c89f032a5

cni.projectcalico.org/podIP: 172.16.140.66/32

cni.projectcalico.org/podIPs: 172.16.140.66/32

Status: Running

IP: 172.16.140.66

IPs:

IP: 172.16.140.66

Controlled By: ReplicaSet/nginx-deploy-86c57bc6b8

Containers:

nginx:

Container ID: docker://800ad7055e4e1816511fdaeb4baffaec60d30b0bac3c2509e788dd419cd3238f

Image: nginx

Image ID: docker-pullable://nginx@sha256:341bf0f3ce6c5277d6002cf6e1fb0319fa4252add24ab6a0e262e0056d313208

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 16 Feb 2026 14:17:46 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-lnxwg (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-lnxwg:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 10m default-scheduler Successfully assigned default/nginx-deploy-86c57bc6b8-2qfnn to node02

Normal Pulling 10m kubelet Pulling image "nginx"

Normal Pulled 10m kubelet Successfully pulled image "nginx" in 1.343s (40.104s including waiting). Image size: 160850673 bytes.

Normal Created 10m kubelet Created container: nginx

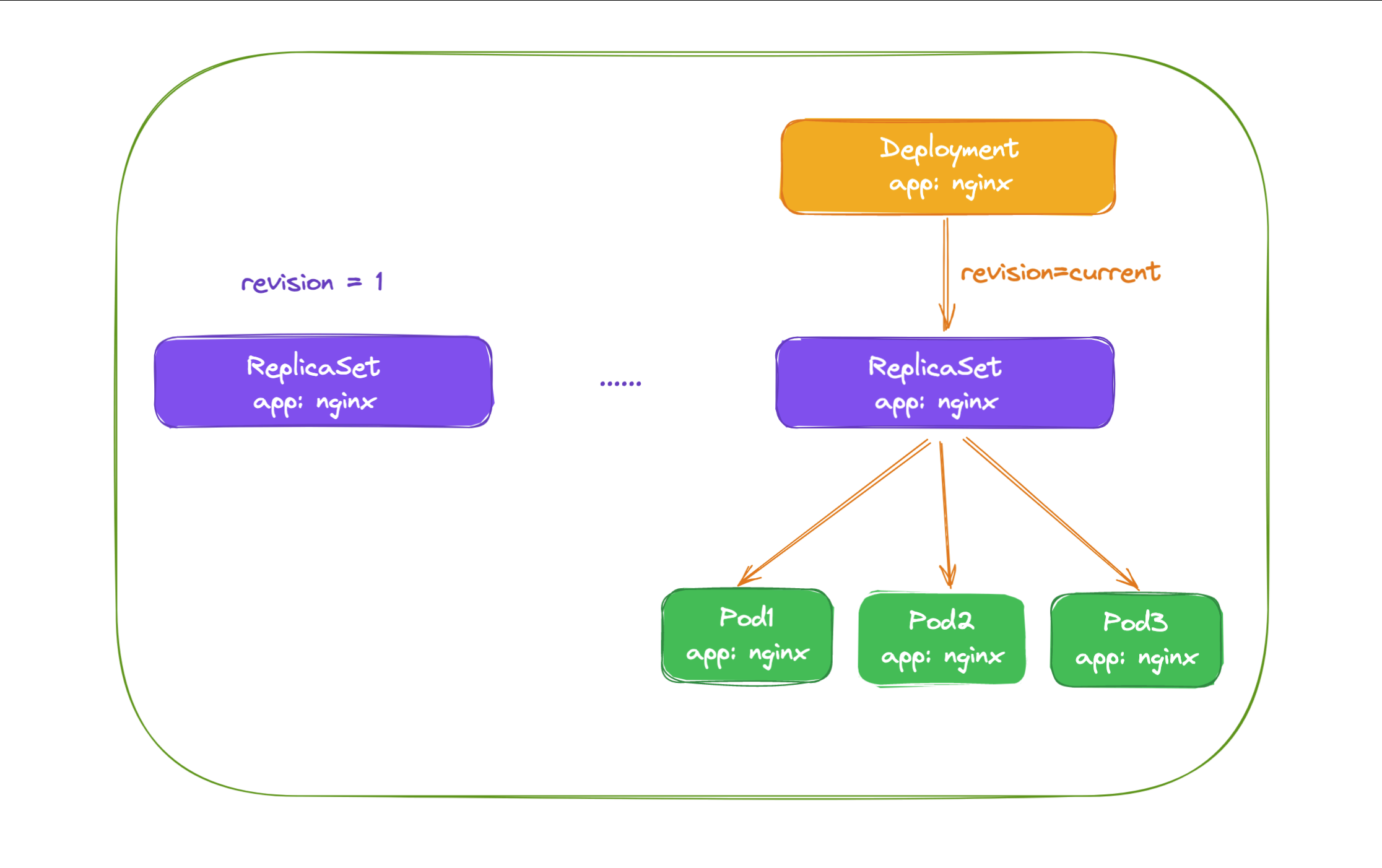

Normal Started 10m kubelet Started container nginx其中有这样的一个信息: Controlled By: Deployment/nginx-deploy,明白了吧?意思就是我们的 Pod 依赖的控 制器 RS 实际上被我们的 Deployment 控制着呢,我们可以用下图来说明 Pod、ReplicaSet、Deployment 三者之间 的关系:

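The figure above makes it clear that, for a Deployment with 3 replicas, the Deployment, the ReplicaSet, and the Pods form a layered chain of control. The ReplicaSet plays the same role as before: it keeps the number of Pods at the specified count. This is also why restartPolicy=Always is the only value allowed for the Pod template in a Deployment: containers must always try to stay in the Running state so that the ReplicaSet can reliably adjust the number of Pods. The Deployment, in turn, implements both horizontal scaling and rolling updates by managing the number and properties of its ReplicaSets.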
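The "Controlled By" chain can also be followed programmatically through each object's `metadata.ownerReferences` (the old ReplicaSet's YAML exported later in this section shows one pointing at the Deployment). A minimal sketch, assuming a hypothetical in-memory `store` of objects shaped like `kubectl get -o yaml` output and assuming the Pod carries an ownerReference to its ReplicaSet, which is what `Controlled By` reflects; a real client would fetch these objects from the API server instead:

```python
from typing import Any, Dict, List, Optional, Tuple

Key = Tuple[str, str]  # (kind, name)

# Hypothetical in-memory stand-in for the API server, with objects shaped
# like `kubectl get -o yaml` output (only the fields used here).
store: Dict[Key, Dict[str, Any]] = {
    ("Pod", "nginx-deploy-86c57bc6b8-2qfnn"): {
        "metadata": {"ownerReferences": [
            {"kind": "ReplicaSet", "name": "nginx-deploy-86c57bc6b8", "controller": True},
        ]},
    },
    ("ReplicaSet", "nginx-deploy-86c57bc6b8"): {
        "metadata": {"ownerReferences": [
            {"kind": "Deployment", "name": "nginx-deploy", "controller": True},
        ]},
    },
    ("Deployment", "nginx-deploy"): {"metadata": {}},
}


def controller_of(obj: Dict[str, Any]) -> Optional[Key]:
    """Return (kind, name) of the ownerReference marked controller: true."""
    for ref in obj.get("metadata", {}).get("ownerReferences", []):
        if ref.get("controller"):
            return (ref["kind"], ref["name"])
    return None


def owner_chain(objects: Dict[Key, Dict[str, Any]], kind: str, name: str) -> List[str]:
    """Walk controller references upward, e.g. Pod -> ReplicaSet -> Deployment."""
    chain = [f"{kind}/{name}"]
    current = objects[(kind, name)]
    seen = {(kind, name)}  # guards against reference cycles
    while (parent := controller_of(current)) is not None and parent not in seen:
        seen.add(parent)
        chain.append(f"{parent[0]}/{parent[1]}")
        current = objects.get(parent, {})
    return chain


if __name__ == "__main__":
    print(" -> ".join(owner_chain(store, "Pod", "nginx-deploy-86c57bc6b8-2qfnn")))
```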

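Horizontal scaling

Horizontal scaling out and in is simple, because a ReplicaSet can already do it: the Deployment controller only needs to change the Pod replica count of the ReplicaSet it controls. For example, if we raise the number of Pod replicas to 4, the ReplicaSet belonging to the Deployment automatically creates one new Pod, scaling the application out. We can do this with a new command, kubectl scale: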

root@master01:~/kubernetes# kubectl scale deployment nginx-deploy --replicas=4

deployment.apps/nginx-deploy scaled

root@master01:~/kubernetes# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 4/4 4 4 33m

root@master01:~/kubernetes# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-86c57bc6b8 4 4 4 33m

The desired number of Pods has changed to 4. Let's also look at the RS details:

root@master01:~/kubernetes# kubectl describe rs nginx-deploy-86c57bc6b8

Name: nginx-deploy-86c57bc6b8

Namespace: default

Selector: app=nginx,pod-template-hash=86c57bc6b8

Labels: app=nginx

pod-template-hash=86c57bc6b8

Annotations: deployment.kubernetes.io/desired-replicas: 4

deployment.kubernetes.io/max-replicas: 5

deployment.kubernetes.io/revision: 1

Controlled By: Deployment/nginx-deploy

Replicas: 4 current / 4 desired

Pods Status: 4 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=nginx

pod-template-hash=86c57bc6b8

Containers:

nginx:

Image: nginx

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Node-Selectors: <none>

Tolerations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 36m replicaset-controller Created pod: nginx-deploy-86c57bc6b8-2qfnn

Normal SuccessfulCreate 36m replicaset-controller Created pod: nginx-deploy-86c57bc6b8-wxtpj

Normal SuccessfulCreate 36m replicaset-controller Created pod: nginx-deploy-86c57bc6b8-rjbr4

Normal SuccessfulCreate 3m23s replicaset-controller Created pod: nginx-deploy-86c57bc6b8-8sgzn

The ReplicaSet controller has added one new Pod. Likewise, the events of the Deployment object show that the scale-out has completed:

root@master01:~/kubernetes# kubectl describe deployment nginx-deploy

Name: nginx-deploy

Namespace: default

CreationTimestamp: Mon, 16 Feb 2026 14:17:06 +0800

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=nginx

Replicas: 4 desired | 4 updated | 4 total | 4 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Node-Selectors: <none>

Tolerations: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx-deploy-86c57bc6b8 (4/4 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 38m deployment-controller Scaled up replica set nginx-deploy-86c57bc6b8 from 0 to 3

Normal ScalingReplicaSet 5m21s deployment-controller Scaled up replica set nginx-deploy-86c57bc6b8 from 3 to 4

Rolling update

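If horizontal scaling were all it offered, there would be no need for a separate Deployment resource. Deployment's most prominent feature is support for rolling updates. For example, suppose we now need to change the application container to nginx:1.27.0. The modified manifest looks like this: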

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  minReadySeconds: 5
  strategy:
    type: RollingUpdate # update strategy: RollingUpdate or Recreate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.27.0
        ports:
        - containerPort: 80

Compared with the previous version, besides changing the image we also added minReadySeconds and specified an update strategy:

strategy:

type: RollingUpdate # 指定更新策略:RollingUpdate和Recreate

rollingUpdate:

maxSurge: 1

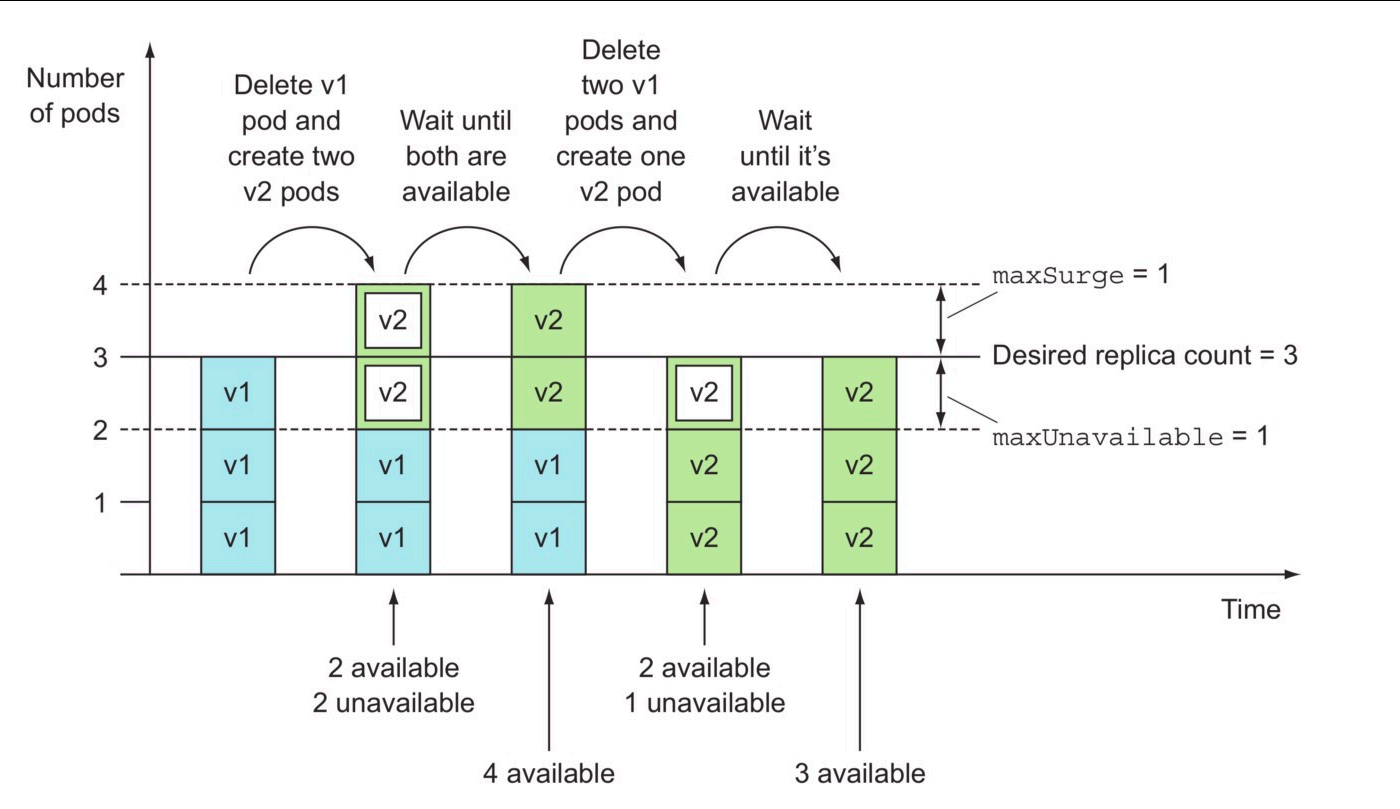

maxUnavailable: 1- minReadySeconds:表示 Kubernetes 在等待设置的时间后才进行升级,如果没有设置该值,Kubernetes 会假 设该容器启动起来后就提供服务了,如果没有设置该值,在某些极端情况下可能会造成服务不正常运行,默认值就是 0。

- type=RollingUpdate:表示设置更新策略为滚动更新,可以设置为 Recreate 和 RollingUpdate 两个 值,Recreate 表示全部重新创建,默认值就是 RollingUpdate。

- maxSurge :表示升级过程中最多可以比原先设置多出的 Pod 数量,例如:maxSurage=1,replicas=5,就表示 Kubernetes 会先启动一个新的 Pod,然后才删掉一个旧的 Pod,整个升级过程中最多会有5+1 个 Pod。

- maxUnavaible :表示升级过程中最多有多少个 Pod 处于无法提供服务的状态,当 maxSurge 不为 0 时,该值也 不能为 0,例如: maxUnavaible=1,则表示 Kubernetes 整个升级过程中最多会有 1 个 Pod 处于无法服务的状 态。

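maxSurge and maxUnavailable cannot both be 0. Otherwise, once the number of Pod replicas matches the desired count, the controller could neither add an extra Pod nor take one away, so the rolling update could never proceed.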
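To see what these settings mean in actual Pod counts, here is a small sketch that resolves an integer-or-percentage value against `replicas`. Percentages for maxSurge round up and those for maxUnavailable round down; if both still resolve to 0, the sketch allows 1 unavailable Pod. These rounding rules and the fallback follow our reading of the deployment controller, and `rolling_bounds` is a hypothetical helper, not a Kubernetes API. With the defaults (25%) and 4 replicas it gives at most 5 Pods, matching the `deployment.kubernetes.io/max-replicas: 5` annotation seen on the ReplicaSet earlier.

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]


def _raw(value: IntOrPercent) -> int:
    """Numeric part of an int-or-percent value: 1 -> 1, "25%" -> 25."""
    if isinstance(value, int):
        return value
    if not value.endswith("%"):
        raise ValueError(f"expected an int or a percentage, got {value!r}")
    return int(value[:-1])


def _scaled(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Absolute Pod count for an int-or-percent value."""
    if isinstance(value, int):
        return value
    numerator = _raw(value) * replicas
    # Integer arithmetic avoids floating-point rounding surprises.
    return -(-numerator // 100) if round_up else numerator // 100


def rolling_bounds(replicas: int, max_surge: IntOrPercent = "25%",
                   max_unavailable: IntOrPercent = "25%") -> Tuple[int, int]:
    """Return (max total Pods, min available Pods) during a rolling update."""
    if _raw(max_surge) == 0 and _raw(max_unavailable) == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    surge = _scaled(max_surge, replicas, round_up=True)
    unavailable = _scaled(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Rounding down can still give 0/0 (e.g. 1 replica, maxSurge 0,
        # maxUnavailable 25%); allow one unavailable Pod so the rollout can progress.
        unavailable = 1
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_bounds(4))        # (5, 3): defaults after scaling to 4
    print(rolling_bounds(3, 1, 1))  # (4, 2): the manifest above
```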

Now let's apply the updated Deployment manifest directly:

root@master01:~/kubernetes# kubectl apply -f nginx-deploy.yaml

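deployment.apps/nginx-deploy configured

You can append the extra --record flag to the command to record the command executed for each operation, which makes it easier to review later. Note that --record is deprecated in recent kubectl versions; the kubernetes.io/change-cause annotation described later in this section is the recommended alternative.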
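`kubectl rollout status`, used next, decides what to print by comparing a few Deployment status fields. The sketch below approximates that decision order with a hypothetical `DeploymentStatus` record; it reproduces the message formats seen in the output below, but leaves out details of the real command such as reporting an exceeded progress deadline.

```python
from dataclasses import dataclass


@dataclass
class DeploymentStatus:
    """Hypothetical snapshot of the Deployment fields the check looks at."""
    generation: int            # metadata.generation
    observed_generation: int   # status.observedGeneration
    spec_replicas: int         # spec.replicas
    replicas: int              # status.replicas (old + new Pods)
    updated_replicas: int      # status.updatedReplicas (Pods from the new template)
    available_replicas: int    # status.availableReplicas


def rollout_message(name: str, s: DeploymentStatus) -> str:
    """Approximate the progress message printed by `kubectl rollout status`."""
    prefix = f'Waiting for deployment "{name}" rollout to finish: '
    if s.generation > s.observed_generation:
        return "Waiting for deployment spec update to be observed..."
    if s.updated_replicas < s.spec_replicas:
        return prefix + f"{s.updated_replicas} out of {s.spec_replicas} new replicas have been updated..."
    if s.replicas > s.updated_replicas:
        return prefix + f"{s.replicas - s.updated_replicas} old replicas are pending termination..."
    if s.available_replicas < s.updated_replicas:
        return prefix + f"{s.available_replicas} of {s.updated_replicas} updated replicas are available..."
    return f'deployment "{name}" successfully rolled out'


if __name__ == "__main__":
    # Mid-rollout: 2 new Pods plus 2 old ones (maxSurge=1 allows 4 in total).
    print(rollout_message("nginx-deploy", DeploymentStatus(2, 2, 3, 4, 2, 2)))
```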

After the update, run the following kubectl rollout status command to check the status of this rolling update:

root@master01:~/kubernetes# kubectl rollout status deployment/nginx-deploy

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 3 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 3 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 3 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 3 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 3 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 3 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 3 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-deploy" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-deploy" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-deploy" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-deploy" rollout to finish: 2 of 3 updated replicas are available...

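deployment "nginx-deploy" successfully rolled out

The messages above trace the rollout: first 2 of the 3 new replicas were updated, then the last old replica was terminated, and finally all 3 updated replicas became available. Now let's look at the Deployment details: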

root@master01:~/kubernetes# kubectl describe deploy nginx-deploy

Name: nginx-deploy

Namespace: default

CreationTimestamp: Mon, 16 Feb 2026 14:17:06 +0800

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 2

Selector: app=nginx

Replicas: 3 desired | 3 updated | 3 total | 3 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 5

RollingUpdateStrategy: 1 max unavailable, 1 max surge

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx:1.27.0

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Node-Selectors: <none>

Tolerations: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing Unknown DeploymentPaused

OldReplicaSets: nginx-deploy-86c57bc6b8 (0/0 replicas created)

NewReplicaSet: nginx-deploy-6bbd65dc44 (3/3 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 6m21s deployment-controller Scaled down replica set nginx-deploy-86c57bc6b8 from 4 to 3

Normal ScalingReplicaSet 6m21s deployment-controller Scaled up replica set nginx-deploy-6bbd65dc44 from 0 to 1

Normal ScalingReplicaSet 6m21s deployment-controller Scaled down replica set nginx-deploy-86c57bc6b8 from 3 to 2

Normal ScalingReplicaSet 6m21s deployment-controller Scaled up replica set nginx-deploy-6bbd65dc44 from 1 to 2

Normal ScalingReplicaSet 5m18s deployment-controller Scaled down replica set nginx-deploy-86c57bc6b8 from 2 to 1

Normal ScalingReplicaSet 5m18s deployment-controller Scaled up replica set nginx-deploy-6bbd65dc44 from 2 to 3

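Normal ScalingReplicaSet 5m13s deployment-controller Scaled down replica set nginx-deploy-86c57bc6b8 from 1 to 0

Look closely at the Events section. Earlier we used kubectl scale to raise the replica count to 4, but the manifest we just applied declares 3 again. So the Deployment controller first scales the old RS, nginx-deploy-86c57bc6b8, down from 4 to 3. Then the rolling update begins: the Deployment creates one Pod in a new RS, nginx-deploy-6bbd65dc44, scales the old RS down to 2, and then scales the new RS up to 2. This is the rolling update process: start a new Pod, kill an old Pod, start another new Pod, and keep rolling like this until every Pod has been replaced.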
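The scale events above can be reproduced with a simplified model of the RollingUpdate strategy. This is an illustration under stated assumptions, not the controller's actual code: old Pods are always available, new Pods become available one at a time and only when no scaling step is possible (standing in for start-up time plus `minReadySeconds: 5`), and the simulation starts after the initial 4 to 3 scale-down, which is a plain scaling step rather than part of the rollout. `simulate_rollout` is a hypothetical helper.

```python
from typing import List, Tuple

Event = Tuple[str, int, int]  # (which ReplicaSet, from, to)


def simulate_rollout(desired: int, max_surge: int, max_unavailable: int,
                     old: int) -> List[Event]:
    """Simplified RollingUpdate model returning the scale events in order.

    Assumptions (this is not the real controller code):
    - old Pods are always available;
    - new Pods become available one at a time, and only in a round where
      no scaling was possible (a stand-in for start-up + minReadySeconds).
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    max_total = desired + max_surge          # upper bound on old + new Pods
    min_available = desired - max_unavailable
    new = new_available = 0
    events: List[Event] = []
    while old > 0 or new_available < desired:
        changed = False
        # 1. Scale up the new ReplicaSet as far as maxSurge allows.
        target = min(desired, new + max(0, max_total - (old + new)))
        if target > new:
            events.append(("new", new, target))
            new, changed = target, True
        # 2. Scale down the old ReplicaSet as far as maxUnavailable allows.
        new_unavailable = new - new_available
        down = min(old,
                   (old + new) - min_available - new_unavailable,
                   (old + new_available) - min_available)
        if down > 0:
            events.append(("old", old, old - down))
            old, changed = old - down, True
        # 3. Nothing could change: wait for one more new Pod to become ready.
        if not changed:
            if new_available >= new:
                raise RuntimeError("rollout cannot make progress")
            new_available += 1
    return events


if __name__ == "__main__":
    for rs, before, after in simulate_rollout(desired=3, max_surge=1, max_unavailable=1, old=3):
        print(f"Scaled {rs} replica set from {before} to {after}")
```

With `desired=3, max_surge=1, max_unavailable=1` the model yields the same six steps as the Events section: new 0→1, old 3→2, new 1→2, old 2→1, new 2→3, old 1→0.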

root@master01:~/kubernetes# kubectl get pods -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-deploy-6bbd65dc44-5nlnn 1/1 Running 0 14m

nginx-deploy-6bbd65dc44-nklp8 1/1 Running 0 15m

nginx-deploy-6bbd65dc44-s6wpp 1/1 Running 0 15m

Checking the Deployment status also shows the current state of the Pods:

root@master01:~/kubernetes# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 3/3 3 3 131m

If we now look at the ReplicaSet objects, we find that there are two of them:

root@master01:~/kubernetes# kubectl get rs -l app=nginx

NAME DESIRED CURRENT READY AGE

nginx-deploy-6bbd65dc44 3 3 3 21m

nginx-deploy-86c57bc6b8 0 0 0 133m

As shown above, the RS used before the rolling update now has 0 Pod replicas, while the RS created by the rolling update has 3. Let's export the old RS object to take a look:

root@master01:~/kubernetes# kubectl get rs nginx-deploy-86c57bc6b8 -o yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  annotations:
    deployment.kubernetes.io/desired-replicas: "3"
    deployment.kubernetes.io/max-replicas: "4"
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: "2026-02-16T06:17:06Z"
  generation: 6
  labels:
    app: nginx
    pod-template-hash: 86c57bc6b8
  name: nginx-deploy-86c57bc6b8
  namespace: default
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: Deployment
    name: nginx-deploy
    uid: 70b5fca6-51a7-40d4-824e-8c3de50cdd81
  resourceVersion: "37942"
  uid: 4db30266-a3a3-4094-9c2f-17a1d728fb1b
spec:
  replicas: 0
  selector:
    matchLabels:
      app: nginx
      pod-template-hash: 86c57bc6b8
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
        pod-template-hash: 86c57bc6b8
    spec:
      containers:
      - image: nginx
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  observedGeneration: 6
  replicas: 0

Looking closely, apart from the replica count having changed to replicas=0, this object's description is no different from before the update. What does that suggest? Because this RS record is kept, we can roll back, and not only to the previous version but to any earlier one. How are these versions defined? We can list them with the rollout history command:

root@master01:~/kubernetes# kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none>

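2 <none>

The revisions recorded by rollout history correspond one-to-one to ReplicaSets. If we delete a ReplicaSet manually, its entry disappears from rollout history, which means we can no longer roll back to that revision. We can also view the details of a single revision: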

root@master01:~/kubernetes# kubectl rollout history deployment nginx-deploy --revision=1

deployment.apps/nginx-deploy with revision #1

Pod Template:

Labels: app=nginx

pod-template-hash=86c57bc6b8

Containers:

nginx:

Image: nginx

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Node-Selectors: <none>

Tolerations: <none>

To roll back directly to the version before the current one, use the following command:

kubectl rollout undo deployment nginx-deploy

We can also roll back to a specific revision:

root@master01:~/kubernetes# kubectl rollout undo deployment nginx-deploy --to-revision=1

deployment.apps/nginx-deploy rolled back

Looking at the corresponding RS objects now, we can see that the Pod replicas have moved back to the earlier RS:

root@master01:~/kubernetes# kubectl get rs -l app=nginx

NAME DESIRED CURRENT READY AGE

nginx-deploy-6bbd65dc44 0 0 0 32m

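nginx-deploy-86c57bc6b8 3 3 3 144m

Note, however, that revision numbers only ever increase, even for a rollback: the restored template (formerly revision 1) is now listed as revision 3: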

root@master01:~/kubernetes# kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

2 <none>

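3 <none>

In very early Kubernetes versions, the complete rollout history, that is, every old ReplicaSet object, was kept by default. Usually there is no need to keep every version, since each one is stored in etcd. The spec.revisionHistoryLimit field controls how many old revisions are kept; in current versions it defaults to 10. Set this field if you want to keep more versions.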
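A small model of the revision bookkeeping described above: a rollback moves the restored template to a new, higher revision number (its ReplicaSet is reused), so its old number disappears, and old revisions beyond `revisionHistoryLimit` are pruned, oldest first. `rollback` is a hypothetical helper that only models `kubectl rollout undo` on a `{revision: template}` map; ordinary template updates are not modelled, and templates are reduced to image strings for brevity.

```python
from typing import Dict, Optional


def rollback(history: Dict[int, str], to_revision: Optional[int] = None,
             limit: int = 10) -> Dict[int, str]:
    """Model `kubectl rollout undo` on a {revision: pod template} map.

    to_revision=None means "the previous revision", like undo without
    --to-revision. `limit` plays the role of spec.revisionHistoryLimit.
    """
    current = max(history)
    if to_revision is None:
        older = [r for r in history if r < current]
        if not older:
            raise ValueError("no previous revision to roll back to")
        to_revision = max(older)
    if to_revision not in history:
        raise KeyError(f"revision {to_revision} not found")
    if to_revision == current:
        return dict(history)  # already running that template: nothing to do
    result = dict(history)
    # The restored template's ReplicaSet is reused under a new, higher number,
    # so its old revision number disappears from the history.
    result[current + 1] = result.pop(to_revision)
    # Keep the current revision plus at most `limit` older ones, oldest pruned first.
    old = sorted(r for r in result if r != current + 1)
    for r in old[:max(0, len(old) - limit)]:
        del result[r]
    return result


if __name__ == "__main__":
    h = {1: "nginx", 2: "nginx:1.27.0"}
    h = rollback(h, to_revision=1)
    print(h)  # {2: 'nginx:1.27.0', 3: 'nginx'}
    h = rollback(h, to_revision=2)
    print(h)  # {3: 'nginx', 4: 'nginx:1.27.0'}
```

The two calls mirror the rollbacks in this section: revisions 2 and 3 after undoing to revision 1, then 3 and 4 after undoing to revision 2.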

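Rollback reason

The CHANGE-CAUSE column comes from an annotation on the Kubernetes Deployment (kubernetes.io/change-cause) and tracks the reason for, or a description of, each revision.

What CHANGE-CAUSE is for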

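- Change notes: describes what was changed (and, if you include it in the annotation, who changed it and when)

- Version tracking: helps the team understand the purpose of each revision

- Rollback reference: helps you pick the right revision when you need to roll back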

Why it shows <none>

The output shows <none> because no change-cause annotation was added when the Deployment was created or updated.
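`kubectl rollout history` assembles its table from the Deployment's ReplicaSets: the revision comes from each ReplicaSet's `deployment.kubernetes.io/revision` annotation (a string, as in the exported RS YAML above) and CHANGE-CAUSE from its `kubernetes.io/change-cause` annotation, which the controller copies from the Deployment to the current ReplicaSet. The sketch below imitates that with plain dicts; the input shape and the `history_rows` helper are assumptions for illustration.

```python
from typing import Any, Dict, List, Tuple

REVISION = "deployment.kubernetes.io/revision"
CHANGE_CAUSE = "kubernetes.io/change-cause"


def history_rows(replica_sets: List[Dict[str, Any]]) -> List[Tuple[int, str]]:
    """Build (REVISION, CHANGE-CAUSE) rows from ReplicaSet annotations."""
    rows = []
    for rs in replica_sets:
        annotations = rs.get("metadata", {}).get("annotations", {})
        if REVISION not in annotations:
            continue  # not a Deployment revision
        cause = annotations.get(CHANGE_CAUSE, "<none>")
        rows.append((int(annotations[REVISION]), cause))
    return sorted(rows)


if __name__ == "__main__":
    # The two ReplicaSets right after the rollback to revision 1.
    replica_sets = [
        {"metadata": {"name": "nginx-deploy-6bbd65dc44",
                      "annotations": {REVISION: "2"}}},
        {"metadata": {"name": "nginx-deploy-86c57bc6b8",
                      "annotations": {REVISION: "3"}}},
    ]
    print("REVISION  CHANGE-CAUSE")
    for revision, cause in history_rows(replica_sets):
        print(f"{revision:<9} {cause}")
```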

Adding a description for a rollback:

# 1. View the revision history
kubectl rollout history deployment nginx-deploy

# 2. Roll back to revision 2
kubectl rollout undo deployment nginx-deploy --to-revision=2

# 3. Wait for the rollback to complete
kubectl rollout status deployment nginx-deploy

# 4. Annotate the Deployment with the reason for the rollback
kubectl annotate deployment nginx-deploy kubernetes.io/change-cause="Rolled back to revision 2, image: nginx:1.27.0" --overwrite

# 5. Verify the new revision
kubectl rollout history deployment nginx-deploy

Check the revision history again, along with the image used by the current Pods:

root@master01:~/kubernetes# kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

3 <none>

4 Rolled back to revision 2, image: nginx:1.27.0

root@master01:~/kubernetes# kubectl describe pod nginx-deploy | grep -w image

Normal Pulling 6m1s kubelet Pulling image "nginx:1.27.0"

Normal Pulled 6m kubelet Successfully pulled image "nginx:1.27.0" in 1.353s (1.353s including waiting). Image size: 187603368 bytes.

Normal Pulling 6m9s kubelet Pulling image "nginx:1.27.0"

Normal Pulled 6m7s kubelet Successfully pulled image "nginx:1.27.0" in 1.954s (1.955s including waiting). Image size: 187603368 bytes.

Normal Pulling 6m9s kubelet Pulling image "nginx:1.27.0"

Normal Pulled 6m7s kubelet Successfully pulled image "nginx:1.27.0" in 1.967s (1.967s including waiting). Image size: 187603368 bytes.