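This is a reusable Spring AI demo. A single API serves both OpenAI and Ollama: requests are routed to a model by provider, and the model can be overridden at runtime. Conversation context is kept in a Redis-backed ChatMemory for multi-turn dialogue, the API offers both synchronous and SSE streaming endpoints, and an AOP aspect logs latency, token usage, and exceptions in one place.

The sections below follow the project's existing code layout and walk through the key pieces in order; the snippets are meant to be copied and run.

Dependencies and versions: Spring Boot 3.5.x + Spring AI 1.1.2 + JDK 17

The project pins the Spring AI version with the BOM to avoid dependency drift: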

```xml
<!-- pom.xml -->
<parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>3.5.8</version>
    <relativePath/>
</parent>

<properties>
    <java.version>17</java.version>
    <spring-ai.version>1.1.2</spring-ai.version>
</properties>

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>${spring-ai.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>
```

Then add Web, the two model starters, Redis, AOP, Validation, and Actuator:

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-starter-model-openai</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-starter-model-ollama</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-redis</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-aop</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-validation</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <!-- Not in the original list: the snippets below use Lombok's @Data, @Slf4j
         and @RequiredArgsConstructor, so the project needs it to compile. -->
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>
</dependencies>
```

Configuration: split model settings by profile, and turn on advisor logging to inspect prompt and memory behavior

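Base configuration. Note that spring.profiles.active only chooses which provider file below is loaded; both starters are on the classpath, so both chat models are still auto-configured: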

```yaml
# application.yaml
server:
  port: 8080

spring:
  application:
    name: spring-ai-demo
  profiles:
    active: openai
  data:
    redis:
      host: xxx
      port: 6379
      password: your-password
      database: 10

logging:
  level:
    root: INFO
    org.springframework.ai: DEBUG
    org.springframework.ai.chat.client.advisor: DEBUG
```

Ollama:

```yaml
# application-ollama.yaml
spring:
  ai:
    ollama:
      base-url: http://localhost:11434
      chat:
        options:
          model: deepseek-r1:32b
          temperature: 0.2
    memory:
      ttl-minutes: 30
      max-messages: 20
```

OpenAI (through an OpenAI-compatible gateway):

```yaml
# application-openai.yaml
spring:
  ai:
    openai:
      api-key: ${OPENAI_API_KEY}
      base-url: https://api.chatanywhere.tech
      chat:
        options:
          model: gpt-4o-mini
          temperature: 0.2
    memory:
      ttl-minutes: 30
      max-messages: 20
```

Configuration binding: strongly typed session-memory parameters

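```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;

// Binds spring.ai.memory.ttl-minutes and spring.ai.memory.max-messages.
@ConfigurationProperties(prefix = "spring.ai.memory")
@Data
public class AiMemoryProperties {

    // Defaults apply when the active profile file does not set a value.
    private long ttlMinutes = 30;
    private int maxMessages = 20;
}
```

Enable property scanning in the application class: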

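```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.context.properties.ConfigurationPropertiesScan;

@SpringBootApplication
@ConfigurationPropertiesScan(basePackages = "pers.seekersferry.config")
public class SpringAiDemoApplication {

    public static void main(String[] args) {
        SpringApplication.run(SpringAiDemoApplication.class, args);
    }
}
```

Multiple models: build one ChatClient each for OpenAI and Ollama, with the same advisors attached (logger + memory)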

This is the foundation of the multi-model support: each model gets its own ChatClient bean, and each bean comes with SimpleLoggerAdvisor and MessageChatMemoryAdvisor by default.
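Conceptually, each advisor wraps the model call like an around-interceptor: it can change the request on the way in and observe the response on the way out. The plain-Java sketch below models that idea only. `Advisor`, `AdvisorChainSketch`, and the list-position ordering are hypothetical and are not Spring AI's actual advisor API (in Spring AI the execution order comes from each advisor's order value).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Conceptual model only: NOT Spring AI's advisor interfaces.
interface Advisor {
    String around(String prompt, UnaryOperator<String> next);
}

final class AdvisorChainSketch {
    final List<String> log = new ArrayList<>();      // what a logging advisor would print
    final List<String> history = new ArrayList<>();  // what a memory advisor would store

    // Records the outgoing prompt and the incoming answer.
    final Advisor logger = (prompt, next) -> {
        log.add("request: " + prompt);
        String answer = next.apply(prompt);
        log.add("response: " + answer);
        return answer;
    };

    // Prepends earlier turns to the prompt, then records the new turn.
    final Advisor memory = (prompt, next) -> {
        String prefix = history.isEmpty() ? "" : String.join("\n", history) + "\n";
        String answer = next.apply(prefix + prompt);
        history.add("user: " + prompt);
        history.add("assistant: " + answer);
        return answer;
    };

    // Wraps the model with the advisors; the first advisor in the list is outermost.
    String call(String prompt, UnaryOperator<String> model, List<Advisor> advisors) {
        UnaryOperator<String> chain = model;
        for (int i = advisors.size() - 1; i >= 0; i--) {
            Advisor advisor = advisors.get(i);
            UnaryOperator<String> next = chain;
            chain = p -> advisor.around(p, next);
        }
        return chain.apply(prompt);
    }
}
```

With a fake model such as `p -> "echo[" + p + "]"`, a second call through the same `AdvisorChainSketch` instance receives the first turn as part of its prompt, which is the behavior the memory advisor provides per conversation id.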

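```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.client.advisor.MessageChatMemoryAdvisor;
import org.springframework.ai.chat.client.advisor.SimpleLoggerAdvisor;
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.ollama.OllamaChatModel;
import org.springframework.ai.openai.OpenAiChatModel;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class AiMultiModelConfig {

    // Both clients share one ChatMemory, so a sessionId keeps its history
    // even when the caller switches provider between turns.
    @Bean("openaiChatClient")
    public ChatClient openaiChatClient(OpenAiChatModel openAiChatModel, ChatMemory chatMemory) {
        return ChatClient.builder(openAiChatModel)
                .defaultAdvisors(
                        new SimpleLoggerAdvisor(),
                        MessageChatMemoryAdvisor.builder(chatMemory).build()
                )
                .build();
    }

    @Bean("ollamaChatClient")
    public ChatClient ollamaChatClient(OllamaChatModel ollamaChatModel, ChatMemory chatMemory) {
        return ChatClient.builder(ollamaChatModel)
                .defaultAdvisors(
                        new SimpleLoggerAdvisor(),
                        MessageChatMemoryAdvisor.builder(chatMemory).build()
                )
                .build();
    }
}
```

Model routing: switch the underlying model with a single provider value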

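```java
import java.util.Locale;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.stereotype.Component;

@Component
public class ModelRouter {

    private final ChatClient openaiChatClient;
    private final ChatClient ollamaChatClient;

    public ModelRouter(@Qualifier("openaiChatClient") ChatClient openaiChatClient,
                       @Qualifier("ollamaChatClient") ChatClient ollamaChatClient) {
        this.openaiChatClient = openaiChatClient;
        this.ollamaChatClient = ollamaChatClient;
    }

    /** Returns the client for the provider; null or unknown values fall back to OpenAI. */
    public ChatClient pickClient(String provider) {
        String p = (provider == null ? "openai" : provider).toLowerCase(Locale.ROOT);
        return switch (p) {
            case "ollama" -> ollamaChatClient;
            default -> openaiChatClient; // "openai" and anything unrecognized
        };
    }

    public boolean isOllama(String provider) {
        // equalsIgnoreCase already returns false for null
        return "ollama".equalsIgnoreCase(provider);
    }
}
```

Service layer: session memory, multi-model routing, and the runtime model override all live here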

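The interface returns answer + usage in one shape, so the controller never touches ChatResponse. The only Spring AI type that crosses the boundary is Usage, which the DTO layer converts to its own TokenUsage: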

```java
import java.util.Map;

import org.springframework.ai.chat.metadata.Usage;
import reactor.core.publisher.Flux;

public interface AiChatService {

    ChatResult chat(String q);

    ChatResult chat(String q, Map<String, Object> meta);

    ChatResult chatWithSession(String sessionId, String q, Map<String, Object> meta,
                               String provider, String model);

    Flux<String> stream(String sessionId, String q, String provider, String model);

    void clearSession(String sessionId);

    record ChatResult(String answer, Usage usage) {}
}
```

Key points of the implementation:

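- `.advisors(a -> a.param(ChatMemory.CONVERSATION_ID, sessionId))` binds the call to a session
- when model is non-blank, `OpenAiChatOptions` / `OllamaChatOptions` override the default model
- the synchronous path returns `chatResponse()`; the streaming path returns `.stream().content()`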

```java
import java.util.Map;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.chat.metadata.Usage;
import org.springframework.ai.chat.model.ChatResponse;
import org.springframework.ai.ollama.api.OllamaChatOptions;
import org.springframework.ai.openai.OpenAiChatOptions;
import org.springframework.stereotype.Service;
import reactor.core.publisher.Flux;

@Service
public class AiChatServiceImpl implements AiChatService {

    private final ModelRouter modelRouter;
    private final ChatMemory chatMemory;

    // The original constructor also accepted a default ChatClient that was never
    // stored or used; every call goes through modelRouter.pickClient, so it is dropped.
    public AiChatServiceImpl(ModelRouter modelRouter, ChatMemory chatMemory) {
        this.modelRouter = modelRouter;
        this.chatMemory = chatMemory;
    }

    private static ChatResult toResult(ChatResponse resp) {
        if (resp == null || resp.getResult() == null || resp.getResult().getOutput() == null) {
            return new ChatResult(null, null);
        }
        String answer = resp.getResult().getOutput().getText();
        Usage usage = resp.getMetadata() == null ? null : resp.getMetadata().getUsage();
        return new ChatResult(answer, usage);
    }

    // Shared by the sync and streaming paths: pick the client, bind the session,
    // and override the model only when the caller supplied one.
    private ChatClient.ChatClientRequestSpec prepare(String sessionId, String provider, String model) {
        var spec = modelRouter.pickClient(provider).prompt()
                .advisors(a -> a.param(ChatMemory.CONVERSATION_ID, sessionId));
        if (model == null || model.isBlank()) {
            return spec;
        }
        return modelRouter.isOllama(provider)
                ? spec.options(OllamaChatOptions.builder().model(model).build())
                : spec.options(OpenAiChatOptions.builder().model(model).build());
    }

    @Override
    public ChatResult chatWithSession(String sessionId, String q, Map<String, Object> meta,
                                      String provider, String model) {
        ChatResponse resp = prepare(sessionId, provider, model)
                .user(u -> u.text(q).metadata(meta == null ? Map.of() : meta))
                .call()
                .chatResponse();
        return toResult(resp);
    }

    @Override
    public Flux<String> stream(String sessionId, String q, String provider, String model) {
        return prepare(sessionId, provider, model).user(q).stream().content();
    }

    @Override
    public void clearSession(String sessionId) {
        chatMemory.clear(sessionId);
    }

    // chat(q) and chat(q, meta) are omitted here; they are the same as in your existing code
}
```

Controller: GET, POST, DELETE, and stream endpoints are all covered

DTO:

```java
import java.util.Map;

import jakarta.validation.constraints.NotBlank;
import org.springframework.ai.chat.metadata.Usage;

public final class AiChatDtos {

    private AiChatDtos() {}

    public record AiChatRequest(
            String sessionId,
            String provider,
            String model,
            @NotBlank String q,
            Map<String, Object> meta
    ) {}

    public record TokenUsage(Integer totalTokens, Integer promptTokens, Integer completionTokens) {

        public static TokenUsage from(Usage usage) {
            if (usage == null) return null;
            return new TokenUsage(usage.getTotalTokens(), usage.getPromptTokens(), usage.getCompletionTokens());
        }
    }

    public record AiChatResponse(String requestId, String sessionId, String answer, TokenUsage usage) {

        public static AiChatResponse of(String requestId, String sessionId, String answer, Usage usage) {
            return new AiChatResponse(requestId, sessionId, answer, TokenUsage.from(usage));
        }
    }
}
```

Controller (synchronous + SSE):

```java
import jakarta.servlet.http.HttpServletRequest;
import jakarta.validation.Valid;
import jakarta.validation.constraints.NotBlank;
import lombok.extern.slf4j.Slf4j;
import org.springframework.http.MediaType;
import org.springframework.validation.annotation.Validated;
import org.springframework.web.bind.annotation.*;
import reactor.core.publisher.Flux;
// Project imports (AiChatService, AiChatDtos.*, AiLog, RequestSupport) omitted.

@Slf4j
@Validated
@RestController
@RequestMapping("/ai")
public class AiController {

    private final AiChatService aiChatService;

    public AiController(AiChatService aiChatService) {
        this.aiChatService = aiChatService;
    }

    // A missing or blank sessionId starts a new session with a generated id.
    private static String resolveSessionId(String sessionId) {
        return (sessionId == null || sessionId.isBlank()) ? RequestSupport.newReqId() : sessionId;
    }

    @GetMapping(value = "/chat", produces = MediaType.APPLICATION_JSON_VALUE)
    @AiLog
    public AiChatResponse chatGet(@RequestParam("q") @NotBlank String q,
                                  @RequestParam(value = "sessionId", required = false) String sessionId,
                                  @RequestParam(value = "provider", required = false) String provider,
                                  @RequestParam(value = "model", required = false) String model,
                                  HttpServletRequest request) {
        String reqId = RequestSupport.newReqId();
        String sid = resolveSessionId(sessionId);
        log.info("[AI][{}] /ai/chat GET fromIp={} sessionId={} provider={} model={} qLen={} qPreview={}",
                reqId, RequestSupport.clientIp(request), sid, provider, model, q.length(), RequestSupport.preview(q));
        var r = aiChatService.chatWithSession(sid, q, null, provider, model);
        return AiChatResponse.of(reqId, sid, r.answer(), r.usage());
    }

    @PostMapping(value = "/chat", consumes = MediaType.APPLICATION_JSON_VALUE, produces = MediaType.APPLICATION_JSON_VALUE)
    @AiLog
    public AiChatResponse chatPost(@Valid @RequestBody AiChatRequest body, HttpServletRequest request) {
        String reqId = RequestSupport.newReqId();
        String sid = resolveSessionId(body.sessionId());
        log.info("[AI][{}] /ai/chat POST fromIp={} sessionId={} provider={} model={} qLen={} qPreview={} meta={}",
                reqId, RequestSupport.clientIp(request), sid, body.provider(), body.model(),
                body.q().length(), RequestSupport.preview(body.q()), body.meta());
        var r = aiChatService.chatWithSession(sid, body.q(), body.meta(), body.provider(), body.model());
        return AiChatResponse.of(reqId, sid, r.answer(), r.usage());
    }

    @DeleteMapping("/session/{sessionId}")
    @AiLog
    public void clearSession(@PathVariable("sessionId") @NotBlank String sessionId) {
        aiChatService.clearSession(sessionId);
    }

    // Note: a generated session id is not returned on this endpoint, so callers that
    // want multi-turn streaming should pass their own sessionId.
    @GetMapping(value = "/chat/stream", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    @AiLog
    public Flux<String> chatStream(@RequestParam("q") @NotBlank String q,
                                   @RequestParam(value = "sessionId", required = false) String sessionId,
                                   @RequestParam(value = "provider", required = false) String provider,
                                   @RequestParam(value = "model", required = false) String model) {
        return aiChatService.stream(resolveSessionId(sessionId), q, provider, model);
    }
}
```

Redis session memory: persisting ChatMemory to a Redis list

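Implement ChatMemory by serializing each Message to JSON and appending it to a Redis list, with two safeguards:

- LTRIM keeps only the most recent N entries, so a conversation cannot grow without bound
- EXPIRE is refreshed on every write, so an idle session is dropped automatically once the TTL has passed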
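The effect of running `LTRIM key -N -1` after each append can be reproduced in plain Java, which is a convenient way to check the window arithmetic without a Redis instance. This is only a sketch: `TrimWindowSketch` and its methods are hypothetical names, and an in-memory list stands in for the Redis list.

```java
import java.util.ArrayList;
import java.util.List;

// In-memory stand-in for a Redis list that is appended to and then trimmed.
final class TrimWindowSketch {
    private final List<String> items = new ArrayList<>();
    private final int maxMessages;

    TrimWindowSketch(int maxMessages) {
        if (maxMessages <= 0) {
            throw new IllegalArgumentException("maxMessages must be positive");
        }
        this.maxMessages = maxMessages;
    }

    // Equivalent of RPUSH for each message, followed by LTRIM key -maxMessages -1.
    void add(List<String> messages) {
        items.addAll(messages);
        int overflow = items.size() - maxMessages;
        if (overflow > 0) {
            items.subList(0, overflow).clear(); // drop the oldest entries
        }
    }

    List<String> get() {
        return List.copyOf(items);
    }
}
```

With a window of 3, adding the pairs (u1, a1) and then (u2, a2) leaves a1, u2, a2: an odd window can cut a user/assistant pair in half, so an even max-messages value keeps turns aligned as long as messages arrive in pairs.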

Core code:

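```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

import com.fasterxml.jackson.databind.ObjectMapper;
import lombok.RequiredArgsConstructor;
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.chat.messages.Message;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.stereotype.Component;

@Component
@RequiredArgsConstructor
public class RedisChatMemory implements ChatMemory {

    private static final String PREFIX = "ai:mem:";

    private final StringRedisTemplate redis;
    private final ObjectMapper objectMapper;
    // Limits come from spring.ai.memory.* rather than being hardcoded to 20 and 30.
    private final AiMemoryProperties props;

    @Override
    public List<Message> get(String conversationId) {
        List<String> raw = redis.opsForList().range(key(conversationId), 0, -1);
        if (raw == null || raw.isEmpty()) return List.of();
        List<Message> out = new ArrayList<>(raw.size());
        for (String s : raw) {
            StoredTurn t = readTurnQuietly(s); // unreadable entries are skipped
            if (t == null) continue;
            Message m = toMessage(t);
            if (m != null) out.add(m);
        }
        return out;
    }

    @Override
    public void add(String conversationId, List<Message> messages) {
        String key = key(conversationId);
        for (Message m : messages) {
            StoredTurn t = fromMessage(m);
            if (t == null) continue;
            redis.opsForList().rightPush(key, writeTurn(t));
        }
        // Keep the newest maxMessages entries, then refresh the TTL.
        redis.opsForList().trim(key, -props.getMaxMessages(), -1);
        redis.expire(key, Duration.ofMinutes(props.getTtlMinutes()));
    }

    @Override
    public void clear(String conversationId) {
        redis.delete(key(conversationId));
    }

    private String key(String id) {
        return PREFIX + id;
    }

    // toMessage/fromMessage and the JSON read/write helpers are the same as in your existing code

    public record StoredTurn(String role, String content, long atEpochMs) {}
}
```

AOP logging: @AiLog records latency in one place (and tries to log token usage)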

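Annotation:

```java
import java.lang.annotation.Documented;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

@Target(ElementType.METHOD)
@Retention(RetentionPolicy.RUNTIME)
@Documented
public @interface AiLog {}
```

Aspect: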

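```java
import java.time.Duration;

import lombok.extern.slf4j.Slf4j;
import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.annotation.Around;
import org.aspectj.lang.annotation.Aspect;
import org.springframework.ai.chat.metadata.Usage;
import org.springframework.stereotype.Component;
// Project imports (AiChatDtos.TokenUsage, RequestSupport) omitted.

@Slf4j
@Aspect
@Component
public class AiLogAspect {

    @Around("@annotation(pers.seekersferry.support.AiLog)")
    public Object around(ProceedingJoinPoint pjp) throws Throwable {
        // Generated independently of the controller's reqId, so the two log lines carry different ids.
        String reqId = RequestSupport.newReqId();
        long startNs = System.nanoTime();
        try {
            Object result = pjp.proceed();
            // For a Flux return value this measures assembly only, not the duration of the stream.
            long costMs = Duration.ofNanos(System.nanoTime() - startNs).toMillis();
            TokenUsage usage = extractUsage(result);
            if (usage != null) {
                log.info("[AI][{}] ok costMs={} tokens(total/prompt/completion)={}/{}/{}",
                        reqId, costMs,
                        usage.totalTokens(), usage.promptTokens(), usage.completionTokens());
            } else {
                log.info("[AI][{}] ok costMs={}", reqId, costMs);
            }
            return result;
        } catch (Exception e) {
            long costMs = Duration.ofNanos(System.nanoTime() - startNs).toMillis();
            log.error("[AI][{}] fail costMs={} err={}", reqId, costMs, e.toString(), e);
            throw e;
        }
    }

    // Looks for a record-style usage() accessor. The annotated controller methods return
    // AiChatResponse, whose usage() is a TokenUsage rather than Spring AI's Usage; the
    // original instanceof Usage check therefore never matched. Both types are accepted here.
    private TokenUsage extractUsage(Object result) {
        if (result == null) return null;
        try {
            Object usageObj = result.getClass().getMethod("usage").invoke(result);
            if (usageObj instanceof TokenUsage t) return t;
            if (usageObj instanceof Usage u) return TokenUsage.from(u);
        } catch (Exception ignored) {
            // no usage() accessor (void, Flux, ...): fall through
        }
        return null;
    }
}
```

Request helpers: reqId, client IP, log preview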

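```java
import java.util.UUID;

import jakarta.servlet.http.HttpServletRequest;

public final class RequestSupport {

    private RequestSupport() {}

    public static String newReqId() {
        return UUID.randomUUID().toString().replace("-", "");
    }

    /** Collapses whitespace and truncates to 120 characters for log output. */
    public static String preview(String s) {
        if (s == null) return "";
        // "\\s+" is the regex for any whitespace run. A single backslash ("\s") compiles on
        // JDK 15+ but is the space escape, so tabs and newlines would not be collapsed.
        String t = s.replaceAll("\\s+", " ").trim();
        return t.length() <= 120 ? t : t.substring(0, 120) + "...";
    }

    /** Best-effort client IP; the forwarding headers are only trustworthy behind your own proxy. */
    public static String clientIp(HttpServletRequest request) {
        String xff = request.getHeader("X-Forwarded-For");
        if (xff != null && !xff.isBlank()) return xff.split(",")[0].trim();
        String xrip = request.getHeader("X-Real-IP");
        if (xrip != null && !xrip.isBlank()) return xrip.trim();
        return request.getRemoteAddr();
    }
}
```

API call examples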
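When these endpoints are called from code rather than from a browser, non-ASCII query values have to be percent-encoded first. A minimal sketch using only the JDK follows; `ChatUrls`, `chatUri`, and the base URL passed in are assumptions for illustration, while the `/ai/chat` path and the parameter names come from the controller above.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class ChatUrls {
    private ChatUrls() {}

    // Builds GET /ai/chat?q=...&provider=...&sessionId=..., skipping null or blank optional values.
    static URI chatUri(String baseUrl, String q, String provider, String sessionId) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("q", q);
        if (provider != null && !provider.isBlank()) params.put("provider", provider);
        if (sessionId != null && !sessionId.isBlank()) params.put("sessionId", sessionId);
        String query = params.entrySet().stream()
                .map(e -> e.getKey() + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
        return URI.create(baseUrl + "/ai/chat?" + query);
    }
}
```

The resulting URI can then be sent with `java.net.http.HttpClient`, for example `HttpClient.newHttpClient().send(HttpRequest.newBuilder(uri).GET().build(), HttpResponse.BodyHandlers.ofString())`.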

Synchronous (the prompt 你好 means "hello"):

- OpenAI: `GET /ai/chat?q=你好&provider=openai`
- Ollama: `GET /ai/chat?q=你好&provider=ollama`

多轮对话(用 sessionId 串起来):

ini

GET /ai/chat?q=你记住我叫小明&sessionId=abc&provider=ollama

GET /ai/chat?q=我叫什么?&sessionId=abc&provider=ollama运行时切模型:

ini

GET /ai/chat?q=写个SQL优化建议&provider=openai&model=gpt-4o-mini

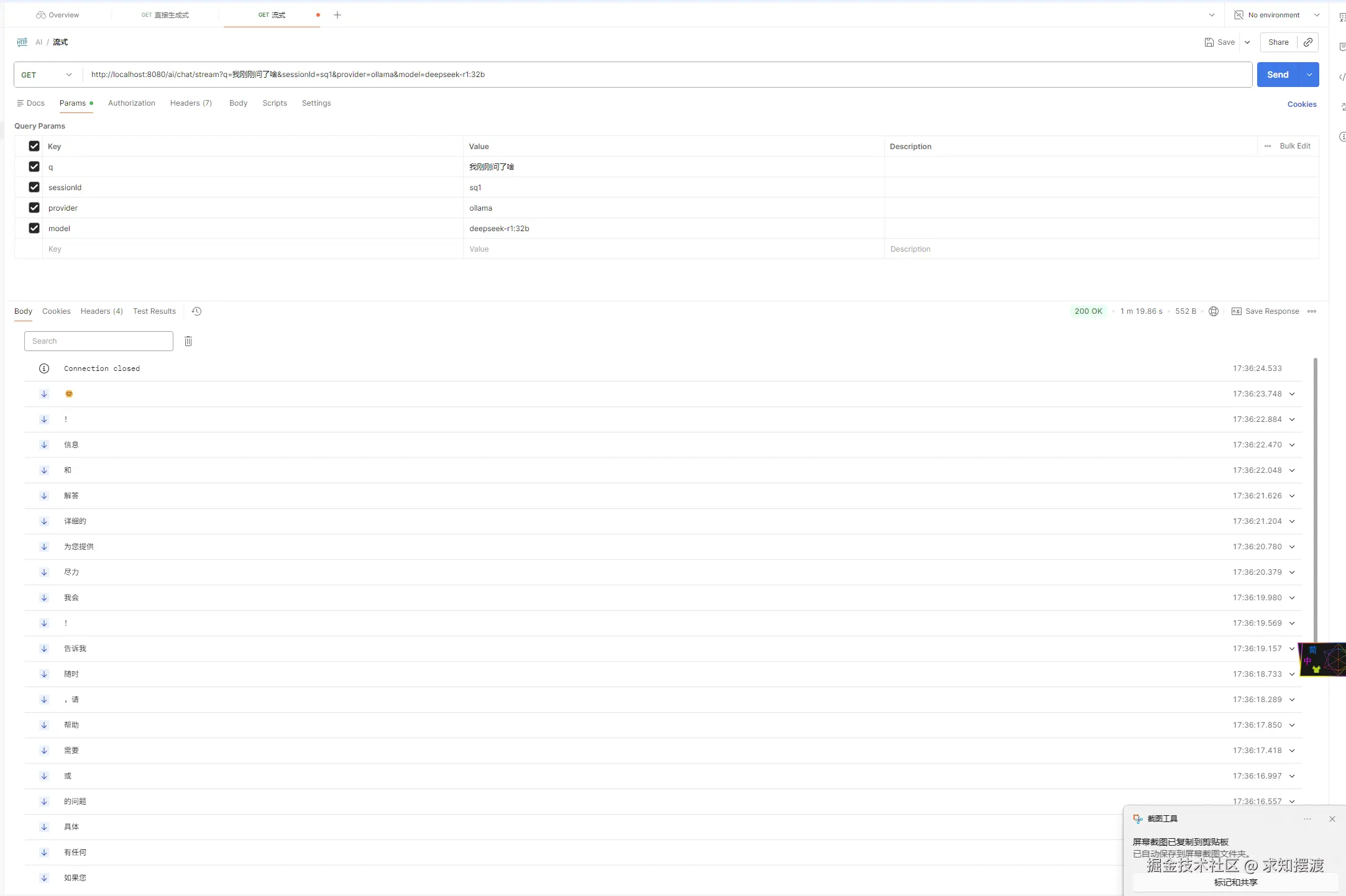

GET /ai/chat?q=写个SQL优化建议&provider=ollama&model=deepseek-r1:32bSSE 流式:

ini

GET /ai/chat/stream?q=写一篇短文&provider=openai这套结构为什么顺手:模型、记忆、日志都可插拔

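- One ChatClient bean per model: each provider's default parameters, gateway, and rate-limiting policy can evolve independently

- ModelRouter: the controller stays stable; only the routing is extended

- Redis ChatMemory: moves straight from single-node in-memory context to context shared across instances

- DTOs isolate Spring AI's internal structures: the response format exposed to clients stays stable

- AOP: logging, billing, auditing, and rate limiting can all hook in at this layer later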
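
As one illustration of the routing point above: if more providers are added, the `switch` in ModelRouter could be replaced by a lookup table, so that a new provider becomes a registration rather than a change to the routing logic. The sketch below is generic plain Java; `ProviderRegistry` and its methods are hypothetical and not part of the project, and `T` would be `ChatClient` in the real application.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Generic provider lookup with a default entry.
final class ProviderRegistry<T> {
    private final Map<String, T> clients = new HashMap<>();
    private final String defaultProvider;

    ProviderRegistry(String defaultProvider, T defaultClient) {
        this.defaultProvider = normalize(defaultProvider);
        clients.put(this.defaultProvider, defaultClient);
    }

    ProviderRegistry<T> register(String provider, T client) {
        clients.put(normalize(provider), client);
        return this;
    }

    // Null, blank, or unknown providers fall back to the default, as pickClient does.
    T pick(String provider) {
        if (provider == null || provider.isBlank()) {
            return clients.get(defaultProvider);
        }
        return clients.getOrDefault(normalize(provider), clients.get(defaultProvider));
    }

    private static String normalize(String provider) {
        return provider.trim().toLowerCase(Locale.ROOT);
    }
}
```

A third provider would then be wired with one more `register(...)` call where the beans are assembled, and the controller and service signatures stay as they are.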