1. kubeadm vs. binary installation of k8s: when to use which

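- kubeadm is an open-source tool provided by the Kubernetes project for quickly building a Kubernetes cluster, and it is currently the most convenient and recommended option. The two commands kubeadm init and kubeadm join are enough to create a cluster. In a kubeadm-initialized cluster the control-plane components (kube-apiserver, kube-controller-manager, kube-scheduler and etcd) run as static Pods managed by the kubelet, and kube-proxy runs as a DaemonSet, so they are restarted automatically after a failure.

- kubeadm automates the deployment: in effect it is a program that installs the cluster for you. Automation simplifies the procedure but hides many details, so you see little of how the individual components work. If your understanding of the k8s architecture is shallow, problems can be hard to troubleshoot.

- kubeadm suits scenarios where k8s is deployed often or where a high degree of automation is required.

- Binary installation: download each component's binary package from the official release site and install it by hand. Doing it manually gives a more complete understanding of Kubernetes.

- Both kubeadm and binary installations are suitable for production and run stably there. Choose between them based on the needs of the actual project.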

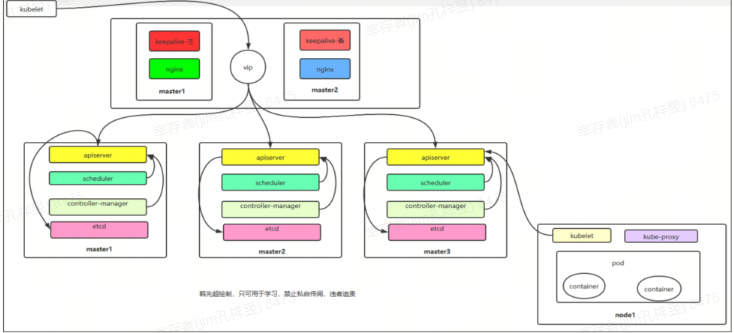

2. Machine plan

- Deployment version

Kubernetes(k8s)v1.30.1

| Host | IP address | Operating system | Specs |
|---|---|---|---|
| k8s-master-01 | 192.168.110.21 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-master-02 | 192.168.110.22 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-master-03 | 192.168.110.23 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-node-01 | 192.168.110.24 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-node-02 | 192.168.110.25 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-node-03 | 192.168.110.26 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |

- VIP:

192.168.110.20/24

3. Base environment setup (run on all nodes)

3.1 Run the initialization script

[root@k8s-all ~]# vim /root/init.sh

#!/bin/bash
echo_red() { echo -e "\e[31m$1\e[0m"; }
echo_green() { echo -e "\e[32m$1\e[0m"; }
echo_yellow() { echo -e "\e[33m$1\e[0m"; }

# Detect the default-route interface, its IPv4 address, the distribution and the hostname
interface=$(ip route | grep default | sed -e "s/^.*dev.//" -e "s/.proto.*//")
LocalIP=$(ip addr show $interface | awk '/inet / {print $2}' | cut -d/ -f1 | tail -1)
linux=$(awk -F "(" '{print $1}' /etc/redhat-release)
hostname=$(hostname)

echo_yellow "Current distribution: $linux"
echo_yellow "Current network interface: $interface"
echo_yellow "Local IP address: $LocalIP"
echo_yellow "Local hostname: $hostname"
sleep 2
echo_yellow 'Initializing, please wait...'

# Make sure the interface connects automatically at boot
autoconnect=$(nmcli dev show $interface | grep 'GENERAL.AUTOCONNECT:' | awk '{print $2}')
if [[ "$autoconnect" != "yes" ]]; then
    echo_yellow "Checking whether the interface connects automatically at boot..."
    nmcli con mod $interface connection.autoconnect yes &>/dev/null
    nmcli con up $interface &>/dev/null
    sed -i '/^ONBOOT/ c ONBOOT=yes' /etc/sysconfig/network-scripts/"ifcfg-${interface}" &>/dev/null
    systemctl restart network &>/dev/null
    echo_green "Interface set to connect automatically at boot"
else
    echo_green "Interface already connects automatically at boot"
fi

# Switch the interface to a static IP if it is not one already.
# Assumes a /24 network whose gateway and DNS server are x.x.x.2 (typical of a VMware NAT network).
# grep -F: "[1]" must match literally; as a plain regex it would never match nmcli's "IP4.ADDRESS[1]:" line.
ip_mode=$(nmcli dev show $interface | grep -F 'IP4.ADDRESS[1]' | awk '{print $2}')
gateway_dns=$(ip addr show $interface | awk '/inet / {split($2,a,"."); print a[1]"."a[2]"."a[3]}')
ip_mode2=$(grep BOOTPROTO /etc/sysconfig/network-scripts/"ifcfg-$interface" | awk -F "=" '{print $2}')
if [[ -z "$ip_mode" ]] || [ "$ip_mode2" != static ] ; then
    echo_green "Checking whether the interface uses a static IP address..."
    nmcli con mod $interface ipv4.addresses "${LocalIP}/24" &>/dev/null
    nmcli con mod $interface ipv4.gateway "${gateway_dns}.2" &>/dev/null
    nmcli con mod $interface ipv4.dns "${gateway_dns}.2" &>/dev/null
    nmcli con mod $interface ipv4.method manual &>/dev/null
    nmcli con up $interface &>/dev/null
    sed -i '/^BOOTPROTO/ c BOOTPROTO=static' /etc/sysconfig/network-scripts/"ifcfg-${interface}" &>/dev/null
    systemctl restart network &>/dev/null
    echo_green "Interface set to a static IP"
else
    echo_green "Interface already uses a static IP"
fi

# Check connectivity (223.5.5.5 is AliDNS); restart networking up to 3 times
for i in {1..3}; do
    if ping -c 4 -i 0.2 223.5.5.5 &>/dev/null; then
        echo_green "Network connectivity OK"
        break
    else
        echo_red "Network error, please check the network configuration"
        echo_yellow "Restarting the network (attempt $i)"
        systemctl restart network &>/dev/null
        nmcli con up $interface &>/dev/null
        sleep 5
    fi
done

# Disable SELinux: permanently in /etc/selinux/config, and for the current boot via setenforce
manage_selinux_firewall() {
    SELINUXSTATUS=$(getenforce)
    if [[ "$SELINUXSTATUS" == "Disabled" ]] || [[ "$SELINUXSTATUS" == "Permissive" ]]; then
        echo_green "SELinux is already disabled or in permissive mode"
    else
        echo_red "SELinux is enforcing, trying to disable it..."
        sed -i '/^SELINUX=/ c\SELINUX=disabled' /etc/selinux/config &>/dev/null
        setenforce 0
        if [[ "$(getenforce)" == "Disabled" ]] || [[ "$(getenforce)" == "Permissive" ]]; then
            echo_green "SELinux is now permissive (fully disabled after the next reboot)"
        else
            echo_red "Failed to disable SELinux, please fix it manually"
        fi
    fi
}
manage_selinux_firewall

# Stop and disable firewalld
FIREWALLSTATUS=$(systemctl is-active firewalld)
if [[ "$FIREWALLSTATUS" == "active" ]]; then
    echo_yellow "firewalld is running, stopping it..."
    systemctl disable --now firewalld &>/dev/null
    echo_green "firewalld stopped and disabled"
else
    echo_green "firewalld needs no changes"
fi

# Install common tools
PACKAGES="lrzsz ntpdate sysstat net-tools wget vim bash-completion dos2unix tree psmisc chrony rsync lsof gcc"
echo_yellow "Installing common packages..."
yum -y install $PACKAGES &>/dev/null
if [ $? -eq 0 ]; then
    echo_green "Installation succeeded"
else
    echo_red "Installation failed, please check the yum repositories"
fi
echo_yellow "Get ready to enjoy your $linux system!"

# Prompt for shutdown, reboot, or exit
echo_yellow "Choose an action:"
echo_yellow "  Shut down : 1"
echo_yellow "  Reboot    : 2"
echo_yellow "  Exit      : 3"
read -p "Enter your choice: " choice

# The script deletes itself on every exit path
delete_script() {
    echo_yellow "Script finished, deleting itself..."
    rm -f "$0"
    echo_green "Script deleted."
}

case $choice in
    1)
        echo_yellow "Shutting down..."
        delete_script
        init 0
        ;;
    2)
        echo_green "Rebooting..."
        delete_script
        reboot
        ;;
    3)
        echo_yellow "Exiting; continue with the next steps."
        delete_script
        exit 0
        ;;
    *)
        echo_red "Invalid input"
        delete_script
        exit 1
        ;;
esac

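[root@k8s-all ~]# bash /root/init.sh

Note:

If the virtual machines were cloned, their network connection UUIDs are duplicated.

A duplicated UUID must be replaced with a newly generated one.

With a duplicated UUID the machine cannot obtain an IPv6 address.

On cloned CentOS VMs the DUID must also be removed, by regenerating the machine ID:

rm -rf /etc/machine-id
systemd-machine-id-setup
reboot

List the current connections and their UUIDs:

nmcli con show

Delete the connection whose UUID needs to change:

nmcli con delete uuid <original-UUID>

Re-create the connection, which generates a new UUID:

nmcli con add type ethernet ifname <interface-name> con-name <new-name>

Bring the connection up again:

nmcli con up <new-name>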
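Cloned VMs share /etc/machine-id as well as connection UUIDs. The following is a minimal sketch for spotting this across the cluster. It collects "hostname machine-id" pairs and flags any ID that appears more than once. The function name find_duplicate_ids is hypothetical. The collection loop assumes the passwordless SSH that is set up in section 3.3.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads "host id" pairs on stdin and prints every id
# shared by more than one host, followed by the hosts that share it.
find_duplicate_ids() {
  awk 'NF >= 2 {
         count[$2]++
         hosts[$2] = hosts[$2] " " $1
       }
       END {
         for (id in count)
           if (count[id] > 1) print id ":" hosts[id]
       }'
}

# Example collection on k8s-master-01 (node names from the machine plan):
# { echo "$(hostname) $(cat /etc/machine-id)"
#   for NODE in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02 k8s-node-03; do
#     echo "$NODE $(ssh "$NODE" cat /etc/machine-id)"
#   done; } | find_duplicate_ids
```

Empty output means every node has its own machine ID.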

3.2 Configure hosts resolution on all nodes
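The cat >> /etc/hosts command below appends the entries again every time it is rerun. As a sketch, here is an idempotent alternative that adds an "IP hostname" line only if that exact line is missing. The function name add_hosts_entries is hypothetical. The file path is a parameter so the function can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Sketch: append each "IP hostname" entry to a hosts file only if that exact
# line is not present yet, so repeated runs create no duplicates.
add_hosts_entries() {
  local hosts_file=$1 entry
  shift
  for entry in "$@"; do
    grep -qxF -- "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done
}

# Usage on every node (machine plan from section 2):
# add_hosts_entries /etc/hosts \
#   "192.168.110.21 k8s-master-01" "192.168.110.22 k8s-master-02" \
#   "192.168.110.23 k8s-master-03" "192.168.110.24 k8s-node-01" \
#   "192.168.110.25 k8s-node-02" "192.168.110.26 k8s-node-03"
```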

[root@K8s-all ~]# cat >> /etc/hosts << EOF

192.168.110.21 k8s-master-01

192.168.110.22 k8s-master-02

192.168.110.23 k8s-master-03

192.168.110.24 k8s-node-01

192.168.110.25 k8s-node-02

192.168.110.26 k8s-node-03

EOF

3.3 Generate an SSH key on k8s-master-01 so it can log in to the other nodes without a password

[root@k8s-master-01 ~]# ssh-keygen -f ~/.ssh/id_rsa -N '' -q

[root@k8s-master-01 ~]# ssh-copy-id k8s-master-02

[root@k8s-master-01 ~]# ssh-copy-id k8s-master-03

[root@k8s-master-01 ~]# ssh-copy-id k8s-node-01

[root@k8s-master-01 ~]# ssh-copy-id k8s-node-02

[root@k8s-master-01 ~]# ssh-copy-id k8s-node-03

3.4 Configure NTP time synchronization
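The sed commands below assume the default server lines sit on lines 3 to 6 of /etc/chrony.conf. A pattern-based sketch avoids that assumption: it comments out every active server or pool directive except the wanted one, then appends the wanted server if it is missing. The function name set_chrony_server is hypothetical. ntp.aliyun.com is the server used in this section.

```shell
#!/usr/bin/env bash
# Sketch: comment out every active "server"/"pool" line except the wanted
# server, then append the wanted server if it is not present yet. Dots in the
# server name act as regex wildcards in the address, which is harmless here.
set_chrony_server() {
  local conf=${1:-/etc/chrony.conf} server=${2:-ntp.aliyun.com}
  sed -i -E '/^server '"$server"'[[:space:]]/!s/^(server|pool)[[:space:]]/# &/' "$conf"
  grep -qxF "server $server iburst" "$conf" || echo "server $server iburst" >> "$conf"
}

# Usage on every node, then restart chronyd and check with chronyc sources:
# set_chrony_server /etc/chrony.conf ntp.aliyun.com
```

Running it twice leaves exactly one active server line.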

sed -i '3,6 s/^/# /' /etc/chrony.conf

sed -i '6 a server ntp.aliyun.com iburst' /etc/chrony.conf

systemctl restart chronyd.service

chronyc sources

3.5 Disable the swap partition
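Here are two small checks for this section, as a sketch. active_swap_count reads /proc/swaps to confirm that swapoff -a took effect: the file has one header line, then one line per active swap area. disable_swap_in_fstab comments out only uncommented fstab entries whose filesystem type is swap. By contrast, 's/.*swap.*/# &/' also touches already-commented lines and any line that merely contains the word swap. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: number of active swap areas. /proc/swaps always starts with one
# header line, so every further line is an active swap device or file.
active_swap_count() {
  local swaps=${1:-/proc/swaps}
  awk 'NR > 1' "$swaps" | wc -l
}

# Sketch: comment out only fstab entries that are not comments already and
# whose third field (filesystem type) is "swap". The result is written back
# with cat so the file keeps its owner and permissions.
disable_swap_in_fstab() {
  local fstab=${1:-/etc/fstab} tmp rc
  tmp=$(mktemp) || return 1
  awk '$1 !~ /^#/ && $3 == "swap" { print "# " $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage (as root):
# swapoff -a && disable_swap_in_fstab && [ "$(active_swap_count)" -eq 0 ] && echo "swap is off"
```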

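[root@K8s-all ~]# swapoff -a # disable for the current boot

[root@K8s-all ~]# sed -i 's/.*swap.*/# &/' /etc/fstab # disable permanently by commenting out the swap entry in fstab

3.6 Upgrade the operating system kernel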
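grub2-set-default 0 assumes that the newly installed kernel-ml is the first GRUB menu entry. The sketch below checks that assumption by listing the menu entries of a GRUB2 config with their index. list_grub_entries is a hypothetical name. The awk one-liner relies on the top-level menuentry '...' lines that CentOS 7's grub.cfg uses.

```shell
#!/usr/bin/env bash
# Sketch: list top-level GRUB2 menu entries with the index that
# grub2-set-default expects. The default path is the BIOS location used in
# this section; UEFI systems keep grub.cfg under /boot/efi/EFI/centos/.
list_grub_entries() {
  local cfg=${1:-/boot/grub2/grub.cfg}
  awk -F"'" '$1 == "menuentry " { print i++ " : " $2 }' "$cfg"
}

# Usage after installing kernel-ml: entry 0 should name the new kernel.
# list_grub_entries
# grub2-editenv list    # shows saved_entry=0 after grub2-set-default 0
```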

[root@K8s-all ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

[root@K8s-all ~]# yum install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm -y

[root@K8s-all ~]# yum --enablerepo="elrepo-kernel" install kernel-ml.x86_64 -y

[root@K8s-all ~]# grub2-set-default 0

[root@K8s-all ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

[root@K8s-all ~]# reboot

[root@K8s-all ~]# uname -r

3.7 Configure kernel forwarding and bridge filtering
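The net.bridge.bridge-nf-call-iptables key in the file below only exists once the br_netfilter kernel module is loaded, and the steps in this section do not load it. The following is a minimal sketch under two assumptions: the node uses the standard systemd /etc/modules-load.d mechanism, and the module list matches the Kubernetes container-runtime prerequisites (overlay and br_netfilter). The sketch persists those modules. It also adds a checker to run after sysctl --system, which compares each key in a sysctl file with the live value under /proc/sys. Keys the running kernel does not expose are listed explicitly; legacy names such as net.ipv4.ip_conntrack_max may be among them on a current kernel-ml. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch 1: persist the modules Kubernetes expects. The directory is a
# parameter only so the function can be tried outside /etc.
write_k8s_modules_load() {
  local dir=${1:-/etc/modules-load.d}
  mkdir -p "$dir"
  printf '%s\n' overlay br_netfilter > "$dir/k8s.conf"
}

# Sketch 2: compare every "key = value" line of a sysctl file with the live
# value under /proc/sys. Keys the kernel does not expose are printed as
# MISSING, mismatches as DIFF; returns non-zero if anything was printed.
# PROC_SYS is overridable so the function can be tested on sample data.
check_sysctl_file() {
  local file=$1 root=${PROC_SYS:-/proc/sys} key want have path rc=0
  while IFS='=' read -r key want || [ -n "$key" ]; do
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    case $key in ''|\#*|\;*) continue ;; esac
    want=$(printf '%s' "$want" | xargs)
    path="$root/${key//.//}"
    if [ ! -r "$path" ]; then
      echo "MISSING $key"; rc=1; continue
    fi
    have=$(xargs < "$path")
    if [ "$have" != "$want" ]; then
      echo "DIFF $key: want '$want' have '$have'"; rc=1
    fi
  done < "$file"
  return "$rc"
}

# Typical use on a node (as root):
# write_k8s_modules_load && modprobe overlay && modprobe br_netfilter
# sysctl --system && check_sysctl_file /etc/sysctl.d/k8s.conf
```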

[root@K8s-all ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0

net.ipv6.conf.all.forwarding = 1

EOF

sysctl --system

3.8 Enable IPVS
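After the steps in this section, lsmod shows what is actually loaded (lsmod reads /proc/modules). The sketch below reports which modules from a given list are not loaded. missing_modules and MODULES_FILE are hypothetical names. Modules compiled into the kernel do not appear in /proc/modules, so they would be reported as missing too.

```shell
#!/usr/bin/env bash
# Sketch: print each requested module that is absent from the first column
# of /proc/modules; returns non-zero if any module is missing.
# MODULES_FILE is overridable only to exercise the function on sample data.
missing_modules() {
  local modules_file=${MODULES_FILE:-/proc/modules} mod rc=0
  for mod in "$@"; do
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "$mod"; rc=1
    fi
  done
  return "$rc"
}

# On a node, after running /etc/sysconfig/modules/ipvs.modules:
# missing_modules ip_vs ip_vs_rr ip_vs_wrr nf_conntrack || echo "some modules are not loaded"
```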

[root@K8s-all ~]# yum install ipset ipvsadm -y

[root@K8s-all ~]# cat > /etc/sysconfig/modules/ipvs.modules <<'EOF'
#!/bin/bash
# Load every IPVS-related module that exists for the running kernel
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_vip ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in $ipvs_modules; do
    /sbin/modinfo -F filename $kernel_module >/dev/null 2>&1
    if [ $? -eq 0 ]; then
        /sbin/modprobe $kernel_module
    fi
done
EOF

[root@K8s-all ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules

[root@K8s-all ~]# bash /etc/sysconfig/modules/ipvs.modules

4. Install Docker as the container runtime

4.1 Download the binary package

Source: the linux/static/stable/x86_64/ directory of the Docker CE static-binary mirror (the USTC mirror is used below)

[root@K8s-all ~]# wget -c https://mirrors.ustc.edu.cn/docker-ce/linux/static/stable/x86_64/docker-25.0.3.tgz

# Extract

[root@K8s-all ~]# tar xf docker-*.tgz

# Copy the binaries

[root@K8s-all ~]# cp docker/* /usr/bin/

4.2 Create the containerd service file

[root@K8s-all ~]# cat >/etc/systemd/system/containerd.service <<EOF

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=1048576

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

[root@k8s-all ~]# systemctl enable --now containerd.service

[root@k8s-all ~]# systemctl is-active containerd.service

active

4.3 Prepare the docker service file
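The unit files in this guide are written with cat and a heredoc. When the heredoc delimiter is unquoted (<<EOF), the shell expands $ variables while writing the file. A line such as ExecReload=/bin/kill -s HUP $MAINPID is therefore written with $MAINPID replaced by whatever the shell holds, normally nothing. The same applies to $remote_addr in the nginx configuration and to the variables inside ipvs.modules. Quoting the delimiter (<<'EOF') writes the text literally, so systemd can substitute $MAINPID itself. The small demonstration below can be run in a scratch directory; demo_heredoc_quoting is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: the same line written through an unquoted and a quoted heredoc.
demo_heredoc_quoting() {
  local out_dir=$1
  local MAINPID=   # stands in for the (normally unset) shell variable
  cat > "$out_dir/unquoted.conf" <<EOF
ExecReload=/bin/kill -s HUP $MAINPID
EOF
  cat > "$out_dir/quoted.conf" <<'EOF'
ExecReload=/bin/kill -s HUP $MAINPID
EOF
}

# Usage:
# d=$(mktemp -d); demo_heredoc_quoting "$d"; cat "$d/unquoted.conf" "$d/quoted.conf"
```

The first file ends in "HUP " with nothing after it, while the second keeps the literal $MAINPID.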

[root@K8s-all ~]# cat > /etc/systemd/system/docker.service <<'EOF'

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service cri-docker.service docker.socket containerd.service

Wants=network-online.target

Requires=docker.socket containerd.service

[Service]

Type=notify

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

OOMScoreAdjust=-500

[Install]

WantedBy=multi-user.target

EOF

4.4 Prepare the docker socket file

[root@K8s-all ~]# cat > /etc/systemd/system/docker.socket <<EOF

[Unit]

Description=Docker Socket for the API

[Socket]

ListenStream=/var/run/docker.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

4.5 Configure registry mirrors

[root@K8s-all ~]# mkdir -p /etc/docker

[root@K8s-all ~]# tee /etc/docker/daemon.json <<-'EOF'

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": [
    "https://dockerproxy.com",
    "https://hub-mirror.c.163.com",
    "https://mirror.baidubce.com",
    "https://ccr.ccs.tencentyun.com"
  ]
}

EOF

4.6 Start Docker

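[root@K8s-all ~]# groupadd docker

# Create the docker group (docker.socket uses SocketGroup=docker)

[root@K8s-all ~]# systemctl daemon-reload

# Reload the systemd unit files. Run this whenever a unit file (.service, .socket, etc.) is added or modified so that systemd picks up the new configuration.

[root@K8s-all ~]# systemctl enable --now docker.socket

# Enable and immediately start docker.socket, the systemd socket unit that listens on the local Unix socket /var/run/docker.sock for Docker API requests.

[root@K8s-all ~]# systemctl enable --now docker.service

# Enable and immediately start docker.service, the systemd service unit of the Docker daemon.

[root@K8s-all ~]# docker info

# Verify

4.7 Install and deploy cri-docker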

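Note: since Kubernetes 1.24 the built-in dockershim has been removed, so the kubelet can no longer talk to Docker Engine directly. cri-dockerd is used here as the CRI adapter between the kubelet and Docker.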

[root@K8s-all ~]# wget -c https://mirrors.chenby.cn/https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.10/cri-dockerd-0.3.10.amd64.tgz

# Extract cri-dockerd

[root@K8s-all ~]# tar xf cri-dockerd-*.amd64.tgz

[root@K8s-all ~]# cp -r cri-dockerd/ /usr/bin/

[root@K8s-all ~]# chmod +x /usr/bin/cri-dockerd/cri-dockerd

- Write the cri-docker service unit file

[root@K8s-all ~]# cat > /usr/lib/systemd/system/cri-docker.service <<'EOF'

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

- Write the cri-docker socket unit file

[root@K8s-all ~]# cat > /usr/lib/systemd/system/cri-docker.socket <<EOF

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

- Start cri-docker

[root@K8s-all ~]# systemctl daemon-reload

# Reload the systemd unit files so that the new cri-docker units are picked up.

[root@K8s-all ~]# systemctl enable --now cri-docker.service

# Enable and immediately start cri-docker.service, the systemd service unit of the cri-dockerd daemon.

[root@K8s-all ~]# systemctl restart cri-docker.service

# Restart cri-docker.service, i.e. restart the cri-dockerd daemon.

[root@K8s-all ~]# systemctl status docker.service

# Show the current state of docker.service, including whether it is running and enabled.

5. Download and install k8s and etcd
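Before extracting the etcd and Kubernetes archives downloaded below, it is worth checking them against published checksums. The Kubernetes CHANGELOG lists SHA512 digests for the release tarballs, and etcd GitHub releases typically include a SHA256SUMS file. The following is a minimal sketch built on coreutils' sha256sum/sha512sum; the function name verify_checksum is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check a downloaded file against an expected digest before using it.
# algo is sha256 or sha512; the expected value should come from the official
# release notes or checksum file rather than from the download mirror.
verify_checksum() {
  local algo=$1 file=$2 expected=$3
  case $algo in
    sha256|sha512) ;;
    *) echo "unsupported algorithm: $algo" >&2; return 2 ;;
  esac
  echo "$expected  $file" | "${algo}sum" --check --status
}

# Usage (digests are placeholders, copy them from the release pages):
# verify_checksum sha512 kubernetes-server-linux-amd64.tar.gz "<sha512-from-CHANGELOG>"
# verify_checksum sha256 etcd-v3.5.12-linux-amd64.tar.gz "<sha256-from-SHA256SUMS>"
```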

Run on k8s-master-01 only

- Download and extract the k8s and etcd packages

[root@k8s-master-01 ~]# wget -c https://mirrors.chenby.cn/https://github.com/etcd-io/etcd/releases/download/v3.5.12/etcd-v3.5.12-linux-amd64.tar.gz

[root@k8s-master-01 ~]# wget -c https://dl.k8s.io/v1.30.1/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master-01 ~]# tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

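# Explanation of the command:

# - tar: the command for working with tar archives.

# - -xf: extract from the archive file named next.

# - kubernetes-server-linux-amd64.tar.gz: the archive to extract.

# - --strip-components=3: drop the first 3 path components (kubernetes/server/bin/) so the files land directly in the target directory.

# - -C /usr/local/bin: extract into /usr/local/bin.

# - kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}: the archive members to extract. The shell's brace expansion turns this into the six paths for kubelet, kubectl, kube-apiserver, kube-controller-manager, kube-scheduler and kube-proxy.

# Extract etcd and move etcd and etcdctl into /usr/local/bin

[root@k8s-master-01 ~]# tar -xf etcd*.tar.gz && mv etcd-*/etcd /usr/local/bin/ && mv etcd-*/etcdctl /usr/local/bin/

- Check the versions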

[root@k8s-master-01 ~]# kubelet --version

Kubernetes v1.30.1

[root@k8s-master-01 ~]# etcdctl version

etcdctl version: 3.5.12

API version: 3.5

- Copy the components to the other k8s nodes

# Define the node lists

[root@k8s-master-01 ~]# Master='k8s-master-02 k8s-master-03'

[root@k8s-master-01 ~]# Work='k8s-node-01 k8s-node-02 k8s-node-03'

# Copy the master components and etcd to the other masters

[root@k8s-master-01 ~]# for NODE in $Master; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

# Copy kubelet and kube-proxy to the worker nodes

[root@k8s-master-01 ~]# for NODE in $Work; do echo $NODE; scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

# Create the CNI binary directory on all nodes

[root@k8s-all ~]# mkdir -p /opt/cni/bin

5.1 Generate the certificates

- Download the certificate generation tools on k8s-master-01

[root@k8s-master-01 ~]# wget -c "https://mirrors.chenby.cn/https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssl_1.6.4_linux_amd64" -O /usr/local/bin/cfssl

[root@k8s-master-01 ~]# wget -c "https://mirrors.chenby.cn/https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssljson_1.6.4_linux_amd64" -O /usr/local/bin/cfssljson

# Make them executable

[root@k8s-master-01 ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

5.1.1 Generate the etcd certificates

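- Create the certificate directory on all master nodes

[root@k8s-master-all ~]# mkdir /etc/etcd/ssl -p

- Generate the etcd certificates on master01

[root@k8s-master-01 ~]# cd /etc/etcd/ssl/

# Write the signing configuration needed to generate the certificates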

[root@k8s-master-01 ssl]# cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

[root@k8s-master-01 ssl]# cat > etcd-ca-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

[root@k8s-master-01 ssl]# cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

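# cfssl is a tool for generating TLS/SSL certificates; it supports PKI operations, JSON configuration files and integration with many other tools.

# gencert generates certificates, and -initca initializes a CA (certificate authority), the root certificate used to sign other certificates. etcd-ca-csr.json is the JSON request describing the CA: its common name, key algorithm and size, subject names and validity period. cfssl generates the key pair itself.

# In short: cfssl creates the etcd CA from etcd-ca-csr.json, and cfssljson -bare writes etcd-ca.pem, etcd-ca-key.pem and etcd-ca.csr.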
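The helper below can be used to check what cfssl produced, for example that etcd.pem carries every master hostname and IP passed via -hostname and the 876000h expiry. It is a minimal openssl-based sketch; cert_summary is a hypothetical name. It parses the -text output rather than using x509 -ext, because older OpenSSL releases such as the 1.0.2 shipped with CentOS 7 lack -ext.

```shell
#!/usr/bin/env bash
# Sketch: print subject, expiry date and Subject Alternative Names of a PEM
# certificate; the SAN line is taken from the human-readable -text output.
cert_summary() {
  local pem=$1
  openssl x509 -in "$pem" -noout -subject -enddate || return 1
  openssl x509 -in "$pem" -noout -text |
    awk '/X509v3 Subject Alternative Name/ { getline; sub(/^[[:space:]]+/, ""); print "SAN: " $0 }'
}

# Usage on k8s-master-01 once the files exist:
# cert_summary /etc/etcd/ssl/etcd-ca.pem
# cert_summary /etc/etcd/ssl/etcd.pem
```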

[root@k8s-master-01 ssl]# cat > etcd-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

# Use cfssl to sign the etcd certificate with the etcd CA

[root@k8s-master-01 ssl]# cfssl gencert \

-ca=/etc/etcd/ssl/etcd-ca.pem \

-ca-key=/etc/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,k8s-master-01,k8s-master-02,k8s-master-03,192.168.110.21,192.168.110.22,192.168.110.23 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd

- Copy the certificates to the other nodes

[root@k8s-master-01 ssl]# Master='k8s-master-02 k8s-master-03'

[root@k8s-master-01 ssl]# for NODE in $Master; do ssh $NODE "mkdir -p /etc/etcd/ssl"; for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}; done; done

5.1.2 Generate the k8s certificates

- Create the certificate directory on all k8s nodes

[root@k8s-all ~]# mkdir -p /etc/kubernetes/pki

- Generate the k8s certificates on master01

[root@k8s-master-01 ~]# cd /etc/kubernetes/pki/

[root@k8s-master-01 pki]# cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

[root@k8s-master-01 pki]# cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

[root@k8s-master-01 pki]# cat > apiserver-csr.json << EOF

{

"CN": "kube-apiserver",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

EOF

[root@k8s-master-01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=/etc/etcd/ssl/ca-config.json \

-hostname=10.96.0.1,192.168.110.20,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,x.oiox.cn,k.oiox.cn,l.oiox.cn,o.oiox.cn,192.168.110.21,192.168.110.22,192.168.110.23,192.168.110.24,192.168.110.25,192.168.110.26,192.168.110.20 \

-profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

- Generate the API server aggregation layer (front-proxy) certificates

[root@k8s-master-01 pki]# cat > front-proxy-ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"ca": {

"expiry": "876000h"

}

}

EOF

[root@k8s-master-01 pki]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca # generate the front-proxy CA certificate

[root@k8s-master-01 pki]# cat > front-proxy-client-csr.json << EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

[root@k8s-master-01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/front-proxy-ca.pem \

-ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem \

-config=/etc/etcd/ssl/ca-config.json \

-profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

5.1.3 Generate the controller-manager certificate

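Choose a high-availability scheme first, because it determines the --server address used in the kubeconfig files below:

- haproxy + keepalived: --server=https://192.168.110.20:9443

- nginx: --server=https://127.0.0.1:8443 (the scheme used in this guide, see section 6.1)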
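The sketch below makes that choice once, so that every kubectl config set-cluster call in 5.1.3 to 5.1.6 uses the same address. apiserver_url and HA_MODE are hypothetical names, and the two URLs are the ones given above.

```shell
#!/usr/bin/env bash
# Sketch: map the HA scheme to the --server URL used in the kubeconfigs.
apiserver_url() {
  case ${1:-nginx} in
    haproxy) echo "https://192.168.110.20:9443" ;;  # haproxy + keepalived VIP
    nginx)   echo "https://127.0.0.1:8443" ;;       # local nginx on every node
    *) echo "unknown HA mode: $1" >&2; return 1 ;;
  esac
}

# Usage:
# APISERVER=$(apiserver_url "${HA_MODE:-nginx}")
# kubectl config set-cluster kubernetes ... --server="$APISERVER" ...
```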

[root@k8s-master-01 pki]# cat > manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

EOF

[root@k8s-master-01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=/etc/etcd/ssl/ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager
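The four kubectl config calls that follow (set-cluster, set-context, set-credentials, use-context) are repeated almost verbatim for the scheduler, admin and kube-proxy kubeconfigs. As a sketch, they can be folded into one function. make_kubeconfig and KUBECTL are hypothetical names; setting KUBECTL=echo prints the commands instead of running them. The paths follow the /etc/kubernetes/pki layout used in this section.

```shell
#!/usr/bin/env bash
# Sketch: build a kubeconfig for one component.
# Args: user, context, certificate prefix under /etc/kubernetes/pki,
#       kubeconfig path, API server URL.
make_kubeconfig() {
  local user=$1 context=$2 cert=$3 kubeconfig=$4 server=$5
  local pki=/etc/kubernetes/pki kubectl=${KUBECTL:-kubectl}
  $kubectl config set-cluster kubernetes \
    --certificate-authority="$pki/ca.pem" --embed-certs=true \
    --server="$server" --kubeconfig="$kubeconfig" &&
  $kubectl config set-credentials "$user" \
    --client-certificate="$pki/$cert.pem" --client-key="$pki/$cert-key.pem" \
    --embed-certs=true --kubeconfig="$kubeconfig" &&
  $kubectl config set-context "$context" \
    --cluster=kubernetes --user="$user" --kubeconfig="$kubeconfig" &&
  $kubectl config use-context "$context" --kubeconfig="$kubeconfig"
}

# Equivalent to the controller-manager steps below:
# make_kubeconfig system:kube-controller-manager \
#   system:kube-controller-manager@kubernetes controller-manager \
#   /etc/kubernetes/controller-manager.kubeconfig https://127.0.0.1:8443
```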

# Set a cluster entry

# With haproxy + keepalived use `--server=https://192.168.110.20:9443`

# With the nginx scheme use `--server=https://127.0.0.1:8443`

[root@k8s-master-01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:8443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# Set a context entry

[root@k8s-master-01 pki]# kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# Set a user (credentials) entry

[root@k8s-master-01 pki]# kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# Make this context the default

[root@k8s-master-01 pki]# kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

5.1.4 Generate the kube-scheduler certificate

[root@k8s-master-01 pki]# cat > scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

EOF

[root@k8s-master-01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=/etc/etcd/ssl/ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

# Configure a cluster named "kubernetes"

[root@k8s-master-01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:8443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

# Set the authentication credentials for kube-scheduler

[root@k8s-master-01 pki]# kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

# Set a context named "system:kube-scheduler@kubernetes"

[root@k8s-master-01 pki]# kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

# Make this context the default in scheduler.kubeconfig

[root@k8s-master-01 pki]# kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

5.1.5 Generate the admin certificate and kubeconfig

[root@k8s-master-01 pki]# cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

EOF

# Generate the Kubernetes admin certificate

[root@k8s-master-01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=/etc/etcd/ssl/ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

# Configure a cluster named "kubernetes"

[root@k8s-master-01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:8443 \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

# Set the authentication credentials for the kubernetes-admin user

[root@k8s-master-01 pki]# kubectl config set-credentials kubernetes-admin \

--client-certificate=/etc/kubernetes/pki/admin.pem \

--client-key=/etc/kubernetes/pki/admin-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

# Set a context named "kubernetes-admin@kubernetes"

[root@k8s-master-01 pki]# kubectl config set-context kubernetes-admin@kubernetes \

--cluster=kubernetes \

--user=kubernetes-admin \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

# Make this context the default in admin.kubeconfig

[root@k8s-master-01 pki]# kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig

5.1.6 Create the kube-proxy certificate

[root@k8s-master-01 pki]# cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-proxy",

"OU": "Kubernetes-manual"

}

]

}

EOF

# Generate the kube-proxy certificate

[root@k8s-master-01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=/etc/etcd/ssl/ca-config.json \

-profile=kubernetes \

kube-proxy-csr.json | cfssljson -bare /etc/kubernetes/pki/kube-proxy

# Configure a cluster named "kubernetes"

[root@k8s-master-01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:8443 \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# Set the authentication credentials for kube-proxy

[root@k8s-master-01 pki]# kubectl config set-credentials kube-proxy \

--client-certificate=/etc/kubernetes/pki/kube-proxy.pem \

--client-key=/etc/kubernetes/pki/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# Set a context named "kube-proxy@kubernetes"

[root@k8s-master-01 pki]# kubectl config set-context kube-proxy@kubernetes \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# Make this context the default in kube-proxy.kubeconfig

[root@k8s-master-01 pki]# kubectl config use-context kube-proxy@kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

5.1.7 Create the ServiceAccount signing key pair (sa.key / sa.pub)

[root@k8s-master-01 pki]# openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

[root@k8s-master-01 pki]# openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

5.1.8 Send the certificates to the other master nodes

[root@k8s-master-01 pki]# for NODE in k8s-master-02 k8s-master-03; do for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE}; done; for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE}; done; done

5.2 K8s system component configuration

5.2.1 etcd configuration
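The three etcd.config.yml files below differ only in the member name and the IP address. The sketch below renders one from a template: every other value is copied from the k8s-master-01 file, and the two TLS sections are nested as etcd's YAML config expects. render_etcd_config is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: render etcd.config.yml for one member. The heredoc delimiter is
# deliberately unquoted so that ${name}, ${ip} and ${cluster} expand.
render_etcd_config() {
  local name=$1 ip=$2
  local cluster='k8s-master-01=https://192.168.110.21:2380,k8s-master-02=https://192.168.110.22:2380,k8s-master-03=https://192.168.110.23:2380'
  cat <<EOF
name: '${name}'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: '${cluster}'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF
}

# Usage, e.g. on k8s-master-02:
# render_etcd_config k8s-master-02 192.168.110.22 > /etc/etcd/etcd.config.yml
```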

- k8s-master-01 configuration

[root@k8s-master-01 ~]# cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master-01'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.110.21:2380'

listen-client-urls: 'https://192.168.110.21:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.110.21:2380'

advertise-client-urls: 'https://192.168.110.21:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master-01=https://192.168.110.21:2380,k8s-master-02=https://192.168.110.22:2380,k8s-master-03=https://192.168.110.23:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

- k8s-master-02 configuration

[root@k8s-master-02 ~]# cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master-02'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.110.22:2380'

listen-client-urls: 'https://192.168.110.22:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.110.22:2380'

advertise-client-urls: 'https://192.168.110.22:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master-01=https://192.168.110.21:2380,k8s-master-02=https://192.168.110.22:2380,k8s-master-03=https://192.168.110.23:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

- k8s-master-03 configuration

[root@k8s-master-03 ~]# cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master-03'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.110.23:2380'

listen-client-urls: 'https://192.168.110.23:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.110.23:2380'

advertise-client-urls: 'https://192.168.110.23:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master-01=https://192.168.110.21:2380,k8s-master-02=https://192.168.110.22:2380,k8s-master-03=https://192.168.110.23:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

5.2.2 Create the service (on all master nodes)

- Create etcd.service and start it

[root@k8s-master-all ~]# cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

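EOF

- Create /etc/kubernetes/pki/etcd (the certificate path used in etcd.config.yml), link the certificates from /etc/etcd/ssl into it, and start etcd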

[root@k8s-master-all ~]# mkdir /etc/kubernetes/pki/etcd

[root@k8s-master-all ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master-all ~]# systemctl daemon-reload

[root@k8s-master-all ~]# systemctl enable --now etcd.service

- Check the etcd status
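The etcdctl command below passes bare host:port endpoints. The sketch that follows builds the endpoint list from the master IPs with an explicit https:// scheme, then runs a health check using the same certificate paths. etcd_endpoints is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: join IPs into a comma-separated etcd client endpoint list.
etcd_endpoints() {
  local ip list=""
  for ip in "$@"; do
    list+="${list:+,}https://${ip}:2379"
  done
  echo "$list"
}

# On any master:
# etcdctl --endpoints="$(etcd_endpoints 192.168.110.21 192.168.110.22 192.168.110.23)" \
#   --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem \
#   --cert=/etc/kubernetes/pki/etcd/etcd.pem \
#   --key=/etc/kubernetes/pki/etcd/etcd-key.pem \
#   endpoint health
```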

[root@k8s-master-all ~]# etcdctl --endpoints="192.168.110.21:2379,192.168.110.22:2379,192.168.110.23:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 192.168.110.21:2379 | 968dab376a943298 | 3.5.12 | 20 kB | true | false | 2 | 8 | 8 | |

| 192.168.110.22:2379 | 86c98fa15965d9ab | 3.5.12 | 20 kB | false | false | 2 | 8 | 8 | |

| 192.168.110.23:2379 | 4a6158ec2cc2b579 | 3.5.12 | 20 kB | false | false | 2 | 8 | 8 | |

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

6. High-availability configuration

- Run on the master servers

6.1 NGINX high-availability solution

# Install the build toolchain

[root@k8s-master-01 ~]# yum install gcc -y

# Download and extract the nginx source tarball

[root@k8s-master-01 ~]# wget -c http://nginx.org/download/nginx-1.25.3.tar.gz

[root@k8s-master-01 ~]# tar xf nginx-*.tar.gz

[root@k8s-master-01 ~]# cd nginx-*

# Build nginx

[root@k8s-master-01 ~]# ./configure --with-stream --without-http --without-http_uwsgi_module --without-http_scgi_module --without-http_fastcgi_module

[root@k8s-master-01 ~]# make && make install

# Copy the compiled nginx to the other nodes

[root@k8s-master-01 ~]# node='k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02 k8s-node-03'

[root@k8s-master-01 ~]# for NODE in $node; do scp -r /usr/local/nginx/ $NODE:/usr/local/nginx/; done

- Write the startup configuration (all nodes)

[root@k8s-all ~]# cat > /usr/local/nginx/conf/kube-nginx.conf <<'EOF'

worker_processes 1;

events {

worker_connections 1024;

}

stream {

upstream backend {

hash $remote_addr consistent;

server 192.168.110.21:6443 max_fails=3 fail_timeout=30s;

server 192.168.110.22:6443 max_fails=3 fail_timeout=30s;

server 192.168.110.23:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 127.0.0.1:8443;

proxy_connect_timeout 1s;

proxy_pass backend;

}

}

EOF
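The nginx configuration contains the nginx variable `$remote_addr`. With an unquoted heredoc delimiter (`<<EOF`) the shell expands that variable while writing the file, which normally leaves an empty string; quoting the delimiter (`<<'EOF'`) writes the text literally. A small, self-contained demonstration:

```shell
#!/usr/bin/env bash
# Demonstrates why nginx variables need a quoted heredoc delimiter.
unset remote_addr   # make sure no shell variable of that name exists

# Unquoted delimiter: the shell expands $remote_addr (to an empty string here).
unquoted=$(cat <<EOF
hash $remote_addr consistent;
EOF
)

# Quoted delimiter: the text is kept exactly as written.
quoted=$(cat <<'EOF'
hash $remote_addr consistent;
EOF
)

printf 'unquoted: %s\n' "$unquoted"   # unquoted: hash  consistent;
printf 'quoted:   %s\n' "$quoted"     # quoted:   hash $remote_addr consistent;
```

The same rule applies to any heredoc whose body contains `$`, backticks or `$(...)` that should reach the target file unchanged.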

# Write the systemd unit file

[root@k8s-all ~]# cat > /etc/systemd/system/kube-nginx.service <<EOF

[Unit]

Description=kube-apiserver nginx proxy

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=forking

ExecStartPre=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx -t

ExecStart=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx

ExecReload=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx -s reload

PrivateTmp=true

Restart=always

RestartSec=5

StartLimitInterval=0

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF
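Once `kube-nginx.service` is started, a quick check is whether nginx is actually bound to `127.0.0.1:8443`. A minimal sketch, assuming a hypothetical helper `is_listening` that reads the output of `ss -lnt` (where column 4 is the local `address:port`):

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if "address:port" ($1) appears as a local
# listening address in `ss -lnt` output read from stdin (header line skipped).
is_listening() {
  awk -v want="$1" 'NR > 1 && $4 == want { found = 1 } END { exit !found }'
}

# Usage once kube-nginx.service is running:
#   ss -lnt | is_listening 127.0.0.1:8443 && echo "kube-nginx is listening"
```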

# Start the service

[root@k8s-all ~]# systemctl daemon-reload

# Reloads the unit files managed by systemd. Run it whenever you add or modify a unit file (a .service file, .socket file, etc.) so that systemd picks up the change.

[root@k8s-all ~]# systemctl enable --now kube-nginx.service

# Enables and immediately starts the kube-nginx.service unit, the systemd service unit for the kube-nginx daemon.

6.2 Configure Keepalived

[root@k8s-master-01 ~]# yum install keepalived -y

[root@k8s-master-01 ~]# cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id master1

script_user root

enable_script_security

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 3

fall 3

rise 2

}

vrrp_instance Nginx {

state MASTER

interface ens33

virtual_router_id 51

priority 200

advert_int 1

authentication {

auth_type PASS

auth_pass XCZKXY

}

track_script {

check_nginx

}

virtual_ipaddress {

192.168.110.20/24

}

}

EOF

# Create the health-check script

[root@k8s-master-01 ~]# cat > /etc/keepalived/check_nginx.sh <<'EOF'

#!/bin/sh

# nginx down

pid=`ps -C nginx --no-header | wc -l`

if [ $pid -eq 0 ]

then

systemctl start kube-nginx.service

sleep 5

if [ `ps -C nginx --no-header | wc -l` -eq 0 ]

then

systemctl stop kube-nginx.service

# report failure so keepalived releases the VIP

exit 1

else

exit 0

fi

fi

EOF

[root@k8s-master-01 ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@k8s-master-01 ~]# rsync -avz /etc/keepalived/{keepalived.conf,check_nginx.sh} k8s-master-02:/etc/keepalived/

[root@k8s-master-01 ~]# rsync -avz /etc/keepalived/{keepalived.conf,check_nginx.sh} k8s-master-03:/etc/keepalived/

- On the other two nodes, modify the Keepalived configuration

[root@k8s-master-02 ~]# sed -i 's/MASTER/BACKUP/' /etc/keepalived/keepalived.conf

[root@k8s-master-02 ~]# sed -i 's/200/150/' /etc/keepalived/keepalived.conf

[root@k8s-master-03 ~]# sed -i 's/MASTER/BACKUP/' /etc/keepalived/keepalived.conf

[root@k8s-master-03 ~]# sed -i 's/200/100/' /etc/keepalived/keepalived.conf
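After these edits the priorities are 200 (master-01), 150 (master-02) and 100 (master-03). With keepalived's default preemption, the VIP should sit on the highest-priority node whose health check passes, which is exactly the failover order exercised in the high-availability test. A hypothetical model of that election, simplified on purpose (it ignores VRRP timers and the IP-based tie-break):

```shell
#!/usr/bin/env bash
# Hypothetical helper: model which node should hold the VIP.
# Each argument is "name:priority:state", where state is "up" or "down".
# Assumption: highest-priority "up" node wins (preemption enabled).
vip_holder() {
  local best_name="" best_prio=-1 entry name prio state
  for entry in "$@"; do
    IFS=: read -r name prio state <<< "$entry"
    if [ "$state" = "up" ] && [ "$prio" -gt "$best_prio" ]; then
      best_name=$name
      best_prio=$prio
    fi
  done
  echo "${best_name:-none}"
}

vip_holder k8s-master-01:200:up   k8s-master-02:150:up   k8s-master-03:100:up   # k8s-master-01
vip_holder k8s-master-01:200:down k8s-master-02:150:up   k8s-master-03:100:up   # k8s-master-02
vip_holder k8s-master-01:200:down k8s-master-02:150:down k8s-master-03:100:up   # k8s-master-03
```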

# Start the service

[root@k8s-master-all ~]# systemctl daemon-reload

[root@k8s-master-all ~]# systemctl enable --now keepalived.service

- High-availability test

[root@k8s-master-01 ~]# ip a | grep 192.168.110.20/24

inet 192.168.110.20/24 scope global secondary ens33

# Simulate a Keepalived failure

[root@k8s-master-01 ~]# systemctl stop keepalived

[root@k8s-master-02 ~]# ip a | grep 192.168.110.20/24 # the VIP has moved to master-02

inet 192.168.110.20/24 scope global secondary ens33

[root@k8s-master-03 ~]# ip a | grep 192.168.110.20/24 # after master-02 also goes down

inet 192.168.110.20/24 scope global secondary ens33

[root@k8s-master-03 ~]# ip a | grep 192.168.110.20/24

inet 192.168.110.20/24 scope global secondary ens33 # the VIP has moved to master-03

[root@k8s-master-01 ~]# systemctl start keepalived.service # after recovery, the VIP returns to master-01

[root@k8s-master-01 ~]# ip a | grep 192.168.110.20/24

inet 192.168.110.20/24 scope global secondary ens33

[root@k8s-all ~]# ping -c 2 192.168.110.20 # make sure every node in the cluster can reach the VIP

PING 192.168.110.20 (192.168.110.20) 56(84) bytes of data.

64 bytes from 192.168.110.20: icmp_seq=1 ttl=64 time=1.03 ms

64 bytes from 192.168.110.20: icmp_seq=2 ttl=64 time=2.22 ms

--- 192.168.110.20 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1018ms

rtt min/avg/max/mdev = 1.034/1.627/2.220/0.593 ms

7. Kubernetes component configuration

- Create the following directories on all k8s nodes

[root@k8s-all ~]# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

7.1 Create the apiserver
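The three kube-apiserver unit files in this section are identical except for `--advertise-address`, which must be the IP of the master the file is written on. A minimal sketch, assuming a hypothetical helper `advertise_flag` and the host plan from section 2, that derives that one per-node flag from the hostname:

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a master hostname to its IP (from the host plan)
# and print the only kube-apiserver flag that differs between masters.
declare -A MASTER_IP=(
  [k8s-master-01]=192.168.110.21
  [k8s-master-02]=192.168.110.22
  [k8s-master-03]=192.168.110.23
)

advertise_flag() {
  local host=$1
  local ip=${MASTER_IP[$host]:-}
  if [ -z "$ip" ]; then
    echo "unknown master: $host" >&2
    return 1
  fi
  echo "--advertise-address=$ip"
}

# On each master, e.g.:  advertise_flag "$(hostname)"
advertise_flag k8s-master-02   # --advertise-address=192.168.110.22
```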

- master-01 configuration

[root@k8s-master-01 ~]# cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--v=2 \\

--allow-privileged=true \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--advertise-address=192.168.110.21 \\

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \\

--service-node-port-range=30000-32767 \\

--etcd-servers=https://192.168.110.21:2379,https://192.168.110.22:2379,https://192.168.110.23:2379 \\

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \\

--etcd-certfile=/etc/etcd/ssl/etcd.pem \\

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\

--client-ca-file=/etc/kubernetes/pki/ca.pem \\

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \\

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \\

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \\

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \\

--service-account-key-file=/etc/kubernetes/pki/sa.pub \\

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \\

--service-account-issuer=https://kubernetes.default.svc.cluster.local \\

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\

--authorization-mode=Node,RBAC \\

--enable-bootstrap-token-auth=true \\

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \\

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \\

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \\

--requestheader-allowed-names=aggregator \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-username-headers=X-Remote-User \\

--enable-aggregator-routing=true

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

EOF

- master-02 configuration

[root@k8s-master-02 ~]# cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--v=2 \\

--allow-privileged=true \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--advertise-address=192.168.110.22 \\

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \\

--service-node-port-range=30000-32767 \\

--etcd-servers=https://192.168.110.21:2379,https://192.168.110.22:2379,https://192.168.110.23:2379 \\

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \\

--etcd-certfile=/etc/etcd/ssl/etcd.pem \\

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\

--client-ca-file=/etc/kubernetes/pki/ca.pem \\

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \\

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \\

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \\

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \\

--service-account-key-file=/etc/kubernetes/pki/sa.pub \\

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \\

--service-account-issuer=https://kubernetes.default.svc.cluster.local \\

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\

--authorization-mode=Node,RBAC \\

--enable-bootstrap-token-auth=true \\

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \\

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \\

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \\

--requestheader-allowed-names=aggregator \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-username-headers=X-Remote-User \\

--enable-aggregator-routing=true

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

EOF

- master-03 configuration

[root@k8s-master-03 ~]# cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--v=2 \\

--allow-privileged=true \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--advertise-address=192.168.110.23 \\

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \\

--service-node-port-range=30000-32767 \\

--etcd-servers=https://192.168.110.21:2379,https://192.168.110.22:2379,https://192.168.110.23:2379 \\

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \\

--etcd-certfile=/etc/etcd/ssl/etcd.pem \\

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\

--client-ca-file=/etc/kubernetes/pki/ca.pem \\

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \\

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \\

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \\

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \\

--service-account-key-file=/etc/kubernetes/pki/sa.pub \\

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \\

--service-account-issuer=https://kubernetes.default.svc.cluster.local \\

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\

--authorization-mode=Node,RBAC \\

--enable-bootstrap-token-auth=true \\

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \\

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \\

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \\

--requestheader-allowed-names=aggregator \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-username-headers=X-Remote-User \\

--enable-aggregator-routing=true

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

EOF

7.2 Start the apiserver

[root@k8s-master-all ~]# systemctl daemon-reload

# Reloads the unit files managed by systemd. Run it whenever you add or modify a unit file (a .service file, .socket file, etc.) so that systemd picks up the change.

[root@k8s-master-all ~]# systemctl enable --now kube-apiserver.service

# Enables and immediately starts the kube-apiserver.service unit, the systemd service unit for the kube-apiserver daemon.

[root@k8s-master-all ~]# systemctl restart kube-apiserver.service

# Restarts the kube-apiserver.service unit, i.e. restarts the kube-apiserver daemon.

7.3 Configure the kube-controller-manager service

# Configure on all master nodes; the configuration is identical on each

# 172.16.0.0/12 is the Pod network CIDR
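With `--allocate-node-cidrs=true`, the controller-manager carves `--cluster-cidr` into one subnet per node using `--node-cidr-mask-size-ipv4` and `--node-cidr-mask-size-ipv6`. The worked arithmetic below (a sketch; the helper names are hypothetical) shows the resulting capacity for the values used in this unit file:

```shell
#!/usr/bin/env bash
# Worked arithmetic for per-node Pod subnets: 2^(node mask - cluster prefix)
# subnets, each holding 2^(address width - node mask) addresses.
node_subnets() {      # $1 = cluster prefix length, $2 = per-node mask size
  echo $(( 1 << ($2 - $1) ))
}
addrs_per_node() {    # $1 = per-node mask size, $2 = address width (32 or 128)
  echo $(( 1 << ($2 - $1) ))
}

echo "IPv4 node subnets:       $(node_subnets 12 24)"      # 4096
echo "IPv4 addresses per node: $(addrs_per_node 24 32)"    # 256
echo "IPv6 node subnets:       $(node_subnets 112 120)"    # 256
echo "IPv6 addresses per node: $(addrs_per_node 120 128)"  # 256
```

Since each node receives one subnet from each family in this dual-stack setup, the IPv6 range (256 node subnets) is likely the tighter limit on cluster size; either family leaves room for the kubelet's `maxPods: 110`.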

[root@k8s-master-all ~]# cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \\

--v=2 \\

--bind-address=0.0.0.0 \\

--root-ca-file=/etc/kubernetes/pki/ca.pem \\

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \\

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \\

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \\

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \\

--leader-elect=true \\

--use-service-account-credentials=true \\

--node-monitor-grace-period=40s \\

--node-monitor-period=5s \\

--controllers=*,bootstrapsigner,tokencleaner \\

--allocate-node-cidrs=true \\

--service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \\

--cluster-cidr=172.16.0.0/12,fc00:2222::/112 \\

--node-cidr-mask-size-ipv4=24 \\

--node-cidr-mask-size-ipv6=120 \\

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

- Start kube-controller-manager

[root@k8s-master-all ~]# systemctl daemon-reload

# Reloads the unit files managed by systemd. Run it whenever you add or modify a unit file (a .service file, .socket file, etc.) so that systemd picks up the change.

[root@k8s-master-all ~]# systemctl enable --now kube-controller-manager.service

# Enables and immediately starts the kube-controller-manager.service unit, the systemd service unit for the kube-controller-manager daemon.

[root@k8s-master-all ~]# systemctl restart kube-controller-manager.service

# Restarts the kube-controller-manager.service unit, i.e. restarts the kube-controller-manager daemon.

7.4 Configure the kube-scheduler service

[root@k8s-master-all ~]# cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-scheduler \\

--v=2 \\

--bind-address=0.0.0.0 \\

--leader-elect=true \\

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

- Start the service and check its status

[root@k8s-master-all ~]# systemctl daemon-reload

# Reloads the unit files managed by systemd. Run it whenever you add or modify a unit file (a .service file, .socket file, etc.) so that systemd picks up the change.

[root@k8s-master-all ~]# systemctl enable --now kube-scheduler.service

# Enables and immediately starts the kube-scheduler.service unit, the systemd service unit for the kube-scheduler daemon.

[root@k8s-master-all ~]# systemctl restart kube-scheduler.service

# Restarts the kube-scheduler.service unit, i.e. restarts the kube-scheduler daemon.

7.5 TLS Bootstrapping configuration

7.5.1 Configure on master-01

# Set the Kubernetes cluster entry

[root@k8s-master-01 ~]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true --server=https://127.0.0.1:8443 \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# Set the credentials

[root@k8s-master-01 ~]# kubectl config set-credentials tls-bootstrap-token-user \

--token=c8ad9c.2e4d610cf3e7426e \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# Set the context

[root@k8s-master-01 ~]# kubectl config set-context tls-bootstrap-token-user@kubernetes \

--cluster=kubernetes \

--user=tls-bootstrap-token-user \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# Switch to that context

[root@k8s-master-01 ~]# kubectl config use-context tls-bootstrap-token-user@kubernetes \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig
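This guide uses the fixed bootstrap token `c8ad9c.2e4d610cf3e7426e`. Bootstrap tokens have the form `<token-id>.<token-secret>`, matching `[a-z0-9]{6}\.[a-z0-9]{16}`, and the same values must appear in `--token` above, in the Secret name `bootstrap-token-<token-id>`, and in its `token-id`/`token-secret` fields. A minimal sketch for generating a fresh token of that shape instead of reusing the published one:

```shell
#!/usr/bin/env bash
# Sketch: generate a random bootstrap token "<6 chars>.<16 chars>",
# lowercase letters and digits only.
rand_lower_alnum() {   # $1 = length
  LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c "$1"
}

token_id=$(rand_lower_alnum 6)
token_secret=$(rand_lower_alnum 16)
token="${token_id}.${token_secret}"

echo "kubeconfig --token: $token"
echo "Secret name:        bootstrap-token-${token_id}"
```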

[root@k8s-master-01 ~]# mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

7.5.2 Check the cluster status

[root@k8s-master-01 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy ok
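As the warning notes, `ComponentStatus` is deprecated. The API server's own readiness endpoint, queried with `kubectl get --raw='/readyz?verbose'`, prints one line per check in the form `[+]name ok` or `[-]name failed: ...`. A minimal sketch, assuming a hypothetical helper `failed_checks`, that extracts only the failing check names from that output:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of failing checks from verbose
# /readyz (or /livez) output read from stdin; prints nothing if all pass.
failed_checks() {
  grep '^\[-\]' | sed 's/^\[-\]//; s/ .*//'
}

# Usage on master-01 (needs a working kubeconfig):
#   kubectl get --raw='/readyz?verbose' | failed_checks
```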

# Write the bootstrap token Secret and the related RBAC objects

[root@k8s-master-01 ~]# cat > bootstrap.secret.yaml << EOF

apiVersion: v1

kind: Secret

metadata:

name: bootstrap-token-c8ad9c

namespace: kube-system

type: bootstrap.kubernetes.io/token

stringData:

description: "The default bootstrap token generated by 'kubelet '."

token-id: c8ad9c

token-secret: 2e4d610cf3e7426e

usage-bootstrap-authentication: "true"

usage-bootstrap-signing: "true"

auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubelet-bootstrap

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:node-bootstrapper

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:bootstrappers:default-node-token

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: node-autoapprove-bootstrap

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:nodeclient

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:bootstrappers:default-node-token

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: node-autoapprove-certificate-rotation

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:nodes

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:kube-apiserver-to-kubelet

rules:

- apiGroups:

- ""

resources:

- nodes/proxy

- nodes/stats

- nodes/log

- nodes/spec

- nodes/metrics

verbs:

- "*"

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:kube-apiserver

namespace: ""

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:kube-apiserver-to-kubelet

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: kube-apiserver

EOF

[root@k8s-master-01 ~]# kubectl apply -f bootstrap.secret.yaml

8. Node configuration

- On master-01, copy the certificates to the other nodes

[root@k8s-master-01 ~]# cd /etc/kubernetes/

[root@k8s-master-01 ~]# for NODE in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02 k8s-node-03; do ssh $NODE mkdir -p /etc/kubernetes/pki; for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig kube-proxy.kubeconfig; do scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}; done; done

8.1 Using Docker as the runtime

# Run on all nodes

[root@k8s-all ~]# cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=network-online.target firewalld.service cri-docker.service docker.socket containerd.service

Wants=network-online.target

Requires=docker.socket containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \\

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig \\

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\

--config=/etc/kubernetes/kubelet-conf.yml \\

--container-runtime-endpoint=unix:///run/cri-dockerd.sock \\

--node-labels=node.kubernetes.io/node=

[Install]

WantedBy=multi-user.target

EOF

8.1.1 Create the kubelet configuration file on all k8s nodes

[root@k8s-all ~]# cat > /etc/kubernetes/kubelet-conf.yml <<EOF

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 2m0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.pem

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 5m0s

cacheUnauthorizedTTL: 30s

cgroupDriver: systemd

cgroupsPerQOS: true

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

contentType: application/vnd.kubernetes.protobuf

cpuCFSQuota: true

cpuManagerPolicy: none

cpuManagerReconcilePeriod: 10s

enableControllerAttachDetach: true

enableDebuggingHandlers: true

enforceNodeAllocatable:

- pods

eventBurst: 10

eventRecordQPS: 5

evictionHard:

imagefs.available: 15%

memory.available: 100Mi

nodefs.available: 10%

nodefs.inodesFree: 5%

evictionPressureTransitionPeriod: 5m0s

failSwapOn: true

fileCheckFrequency: 20s

hairpinMode: promiscuous-bridge

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 20s

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

imageMinimumGCAge: 2m0s

iptablesDropBit: 15

iptablesMasqueradeBit: 14

kubeAPIBurst: 10

kubeAPIQPS: 5

makeIPTablesUtilChains: true

maxOpenFiles: 1000000

maxPods: 110

nodeStatusUpdateFrequency: 10s

oomScoreAdj: -999

podPidsLimit: -1

registryBurst: 10

registryPullQPS: 5

resolvConf: /etc/resolv.conf

rotateCertificates: true

runtimeRequestTimeout: 2m0s

serializeImagePulls: true

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 4h0m0s

syncFrequency: 1m0s

volumeStatsAggPeriod: 1m0s

EOF

8.1.2 Start kubelet

[root@k8s-all ~]# systemctl daemon-reload

# Reloads the unit files managed by systemd. Run it whenever you add or modify a unit file (a .service file, .socket file, etc.) so that systemd picks up the change.

[root@k8s-all ~]# systemctl enable --now kubelet.service

# Enables and immediately starts the kubelet.service unit, the systemd service unit for the kubelet daemon.

[root@k8s-all ~]# systemctl restart kubelet.service

# Restarts the kubelet.service unit, i.e. restarts the kubelet daemon.

8.1.3 Check the cluster

[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01 NotReady <none> 13m v1.30.1

k8s-master-02 NotReady <none> 8m53s v1.30.1

k8s-master-03 NotReady <none> 8m52s v1.30.1

k8s-node-01 NotReady <none> 5m37s v1.30.1

k8s-node-02 NotReady <none> 5m38s v1.30.1

k8s-node-03 NotReady <none> 5m39s v1.30.1
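All nodes report `NotReady` at this point because no CNI network plugin is installed yet; they are expected to turn `Ready` after Calico is deployed in section 8.3. A minimal sketch, assuming a hypothetical helper `count_not_ready`, for checking that from a script instead of by eye:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count nodes whose STATUS column is not exactly "Ready",
# reading `kubectl get nodes --no-headers` output from stdin.
# Note: cordoned nodes ("Ready,SchedulingDisabled") are counted as well.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage on master-01:
#   kubectl get nodes --no-headers | count_not_ready
```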

# Check the container runtime

[root@k8s-master-01 ~]# kubectl describe node | grep Runtime

Container Runtime Version: docker://25.0.3

Container Runtime Version: docker://25.0.3

Container Runtime Version: docker://25.0.3

Container Runtime Version: docker://25.0.3

Container Runtime Version: docker://25.0.3

Container Runtime Version: docker://25.0.3

8.2 kube-proxy configuration

8.2.1 Send the kubeconfig to the other nodes

# Run on master-01

[root@k8s-master-01 ~]# for NODE in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02 k8s-node-03; do scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig; done

8.2.2 Add the kube-proxy service file on all k8s nodes

[root@k8s-all ~]# cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-proxy \\

--config=/etc/kubernetes/kube-proxy.yaml \\

--cluster-cidr=172.16.0.0/12,fc00:2222::/112 \\

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

8.2.3 Add the kube-proxy configuration on all k8s nodes

[root@k8s-all ~]# cat > /etc/kubernetes/kube-proxy.yaml << EOF

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

clientConnection:

acceptContentTypes: ""

burst: 10

contentType: application/vnd.kubernetes.protobuf

kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig

qps: 5

clusterCIDR: 172.16.0.0/12,fc00:2222::/112

configSyncPeriod: 15m0s

conntrack:

max: null

maxPerCore: 32768

min: 131072

tcpCloseWaitTimeout: 1h0m0s

tcpEstablishedTimeout: 24h0m0s

enableProfiling: false

healthzBindAddress: 0.0.0.0:10256

hostnameOverride: ""

iptables:

masqueradeAll: false

masqueradeBit: 14

minSyncPeriod: 0s

syncPeriod: 30s

ipvs:

masqueradeAll: true

minSyncPeriod: 5s

scheduler: "rr"

syncPeriod: 30s

kind: KubeProxyConfiguration

metricsBindAddress: 127.0.0.1:10249

mode: "ipvs"

nodePortAddresses: null

oomScoreAdj: -999

portRange: ""

udpIdleTimeout: 250ms

EOF

8.2.4 Start kube-proxy

[root@k8s-all ~]# systemctl daemon-reload

# Reloads the unit files managed by systemd. Run it whenever you add or modify a unit file (a .service file, .socket file, etc.) so that systemd picks up the change.

[root@k8s-all ~]# systemctl enable --now kube-proxy.service

# Enables and immediately starts the kube-proxy.service unit, the systemd service unit for the kube-proxy daemon.

[root@k8s-all ~]# systemctl restart kube-proxy.service

# Restarts the kube-proxy.service unit, i.e. restarts the kube-proxy daemon.

8.3 Install the network plugin

- On CentOS 7, libseccomp must be upgraded; otherwise the network plugin cannot be installed

# Upgrade runc

[root@k8s-master-01 ~]# wget -c https://mirrors.chenby.cn/https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64

[root@k8s-master-01 ~]# install -m 755 runc.amd64 /usr/local/sbin/runc

[root@k8s-master-01 ~]# cp -p /usr/local/sbin/runc /usr/local/bin/runc

[root@k8s-master-01 ~]# cp -p /usr/local/sbin/runc /usr/bin/runc
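The next command prints the installed libseccomp package. To compare its version against a minimum in a script, a minimal sketch using GNU `sort -V` (the helper name `version_ge` is hypothetical, and the minimum version you need is an assumption to verify against the runc/Calico documentation):

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2, using the
# natural version ordering of GNU `sort -V`.
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage (minimum version is an assumption):
#   have=$(rpm -q --qf '%{VERSION}\n' libseccomp)
#   version_ge "$have" 2.4.0 || echo "libseccomp $have is too old"
version_ge 2.3.1 2.4.0 || echo "2.3.1 is older than 2.4.0"
```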

# Check the current version

[root@k8s-master-01 ~]# rpm -qa | grep libseccomp

libseccomp-2.3.1-4.el7.x86_64

- Install Calico

[root@k8s-master-01 ~]# wget -c https://calico-v3-25.netlify.app/archive/v3.25/manifests/calico.yaml

[root@k8s-master-01 ~]# vim calico.yaml

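# The two lines below (around line 4601 of the manifest) are commented out by default. Uncomment them and set the value to the Pod network CIDR, i.e. the --cluster-cidr used by kube-controller-manager and kube-proxy (172.16.0.0/12).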

            # no effect. This should fall within `--cluster-cidr`.

            - name: CALICO_IPV4POOL_CIDR

              value: "172.16.0.0/12"

            # Disable file logging so `kubectl logs` works.
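The same edit can be made without an interactive editor. A minimal sketch, assuming the manifest ships the default commented lines `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"` (check your copy first); the helper name `set_calico_cidr` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in a Calico manifest
# and set its value, keeping the original YAML indentation (GNU sed -i).
# Assumes the default commented value is "192.168.0.0/16".
set_calico_cidr() {   # $1 = manifest path, $2 = Pod CIDR
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"$2\"|" \
    "$1"
}

# Usage on master-01:
#   set_calico_cidr calico.yaml 172.16.0.0/12
#   grep -A1 'name: CALICO_IPV4POOL_CIDR' calico.yaml
```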

# Manually pull the images on all nodes

docker pull docker.io/calico/cni:v3.25.0

docker pull docker.io/calico/node:v3.25.0

docker pull docker.io/calico/kube-controllers:v3.25.0

[root@k8s-master-01 ~]# kubectl apply -f calico.yaml

[root@k8s-master-01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5b9b456c66-n9lz6 1/1 Running 0 2m52s

calico-node-49v62 1/1 Running 0 2m52s

calico-node-64blt 1/1 Running 0 2m52s

calico-node-668qt 1/1 Running 0 2m52s

calico-node-9ktxk 1/1 Running 0 2m52s

calico-node-njgvp 1/1 Running 0 2m52s

[root@k8s-master-01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master-01 Ready <none> 52m v1.30.1

k8s-master-02 Ready <none> 47m v1.30.1

k8s-master-03 Ready <none> 47m v1.30.1

k8s-node-01 Ready <none> 44m v1.30.1

k8s-node-02 Ready <none> 44m v1.30.1

k8s-node-03 Ready <none> 44m v1.30.1

9. Install command-line auto-completion

yum install bash-completion -y

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc