介绍

获取前 100+ 个精选的 LLM 面试问题,了解如何准备生成式 AI 或 LLM 面试准备和大型语言模型 (LLM) 面试准备的学习路径。

This article explains learning path for large language models (LLMs) interview preparation. You will find below details in this article:

本文介绍了大型语言模型 (LLM) 面试准备的学习路径。您将在本文中找到以下详细信息:

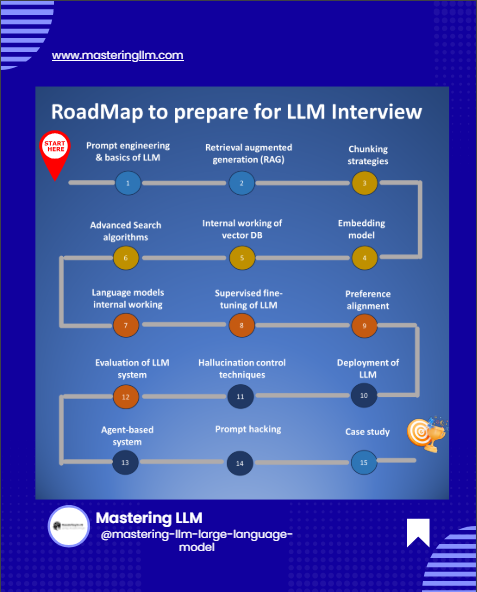

- Road map路线图

- Prompt engineering & basics of LLM提示工程和LLM基础知识

- Retrieval augmented generation (RAG)检索增强生成 (RAG)

- Chunking strategies分块策略

- Embedding Models嵌入模型

- Internal working of vector DB矢量数据库的内部工作

- Advanced search algorithms高级搜索算法

- Language models internal working语言模型内部工作

- Supervised fine-tuning of LLMLLM 的监督微调

- Preference Alignment (RLHF/DPO)

首选项对齐 (RLHF/DPO) - Evaluation of LLM systemLLM 系统的评估

- Hallucination control techniques幻觉控制技术

- Deployment of LLM部署 LLM

- Agent-based system基于代理的系统

- Prompt Hacking提示黑客攻击

- Case Study & Scenario-based question案例研究和基于情景的问题

Roadmap路线图

Prompt engineering & basics of LLM提示工程和LLM基础知识

- Question 1 : What is the difference between Predictive/ Discriminative AI and generative AI?

问题 1:预测性/判别性 AI 和生成式 AI 有什么区别? - Question 2: What is LLM & how LLMs are trained?

问题2:什么是LLM&LLM是如何被训练的? - Question 3: What is a token in the language model?

问题 3:语言模型中的令牌是什么? - Question 4: How to estimate the cost of running a SaaS-based & Open source LLM model?

问题4:如何估算运行基于SaaS和开源的LLM模型的成本? - Question 5: Explain the Temperature parameter and how to set it.

问题 5:解释 Temperature 参数以及如何设置它。 - Question 6: What are different decoding strategies for picking output tokens?

问题 6:选择输出 Token 有哪些不同的解码策略? - Question 7: What are the different ways you can define stopping criteria in a large language model?

问题 7:在大型语言模型中定义停止条件的方法有哪些? - Question 8: How to use stop sequence in LLMs?

问题 8:如何在 LLM 中使用停止序列? - Question 9: Explain the basic structure of prompt engineering.

问题 9:解释提示工程的基本结构。 - Question 10: Explain the type of prompt engineering

问题 10:解释提示工程的类型 - **Question 11: **Explain In-Context Learning问题 11:解释情境学习

- Question 12: What are some of the aspects to keep in mind while using few-shots prompting?

问题 12:使用小镜头提示时需要记住哪些方面? - Question 13: What are certain strategies to write good prompts?

问题 13:写好提示的策略是什么? - Question 14: What is hallucination & how can it be controlled using prompt engineering?

问题14:什么是幻觉,如何通过提示工程来控制它? - Question 15: How do I improve the reasoning ability of my LLM through prompt engineering?

问题 15:如何通过提示工程提高我的 LLM 的推理能力? - Question 16: How to improve LLM reasoning if your COT prompt fails?

问题 16:如果您的 COT 提示失败,如何改进 LLM 推理?

Want to find out correct and accurate answers? Look for our LLM Interview Course

想找出正确和准确的答案吗?寻找我们的 LLM 面试课程

- 100+ Questions spanning 14 categories

100+ 问题,跨越 14 个类别 - Curated 100+ assessments for each category

为每个类别策划 100+ 评估 - Well-researched real-world interview questions based on FAANG & Fortune 500 companies

基于FAANG和财富500强公司的真实面试问题 - Focus on Visual learning专注于视觉学习

- Real Case Studies & Certification真实案例研究和认证

50% off Coupon Code --- LLM50

优惠券代码 50% 折扣 --- LLM50

Coupon is valid till 30th May 2024

优惠券有效期至 2024 年 5 月 30 日

Link for the course ---课程链接 ---

大型语言模型(LLM)面试问答课程

通过### 这个全面的大型语言模型(LLM)面试问答课程,深入探索AI的世界...

Retrieval augmented generation (RAG)检索增强生成 (RAG)

- Question 1: How to increase accuracy, and reliability & make answers verifiable in LLM?

问题1:如何在LLM中提高准确性和可靠性并使答案可验证? - **Question 2: **How does Retrieval augmented generation (RAG) work?

问题 2:检索增强生成 (RAG) 的工作原理是什么? - Question 3: What are some of the benefits of using the RAG system?

问题 3:使用 RAG 系统有哪些好处? - Question 4: What are the architecture patterns you see when you want to customize your LLM with proprietary data?

问题 4:当您想使用专有数据自定义 LLM 时,您会看到哪些架构模式? - Question 5: When should I use Fine-tuning instead of RAG?

问题 5:我什么时候应该使用 Fine-tuning 而不是 RAG?

Chunking strategies分块策略

- Question 1: What is chunking and why do we chunk our data?

问题 1:什么是分块,为什么我们要对数据进行分块? - Question 2: What are factors influences chunk size?

问题 2:影响块大小的因素有哪些? - Question 3: What are the different types of chunking methods available?

问题 3:有哪些不同类型的分块方法可用? - Question 4: How to find the ideal chunk size?

问题 4:如何找到理想的块大小?

Embedding Models嵌入模型

- Question 1: What are vector embeddings? And what is an embedding model?

问题 1:什么是向量嵌入?什么是嵌入模型? - Question 2: How embedding model is used in the context of LLM application?

问题 2:在 LLM 应用程序的上下文中如何使用嵌入模型? - Question 3: What is the difference between embedding short and long content?

问题 3:嵌入短内容和长内容有什么区别? - Question 4: How to benchmark embedding models on your data?

问题 4:如何对数据进行嵌入模型基准测试? - Question 5: Walk me through the steps of improving the sentence transformer model used for embedding

问题 5:请向我介绍改进用于嵌入的句子转换模型的步骤

Internal working of vector DB矢量数据库的内部工作

- Question 1: What is vector DB?

问题 1:什么是 vector DB? - Question 2: How vector DB is different from traditional databases?

问题 2:矢量数据库与传统数据库有何不同? - Question 3: How does a vector database work?

问题 3:矢量数据库如何工作? - Question 4: Explain the difference between vector index, vector DB & vector plugins.

问题4:解释向量索引、向量DB和向量插件之间的区别。 - Question 5: What are different vector search strategies?

问题 5:有哪些不同的向量搜索策略? - Question 6: How does clustering reduce search space? When does it fail and how can we mitigate these failures?

问题 6:聚类如何减少搜索空间?它何时会失败,我们如何减轻这些失败? - Question 7: Explain the Random projection index.

问题 7:解释随机投影指数。 - Question 8: Explain the Localitysensitive hashing (LHS) indexing method?

问题 8:解释 Localitysensitive 哈希 (LHS) 索引方法? - Question 9: Explain the product quantization (PQ) indexing method

问题 9:解释乘积量化 (PQ) 标定方法 - Question 10: Compare different Vector indexes and given a scenario, which vector index you would use for a project?

问题 10:比较不同的 Vector 索引,并在给定一个场景中,您将为项目使用哪个 Vector 索引? - Question 11: How would you decide on ideal search similarity metrics for the use case?

问题 11:您将如何为用例确定理想的搜索相似度指标? - Question 12: Explain the different types and challenges associated with filtering in vector DB.

问题 12:解释与矢量 DB 中的过滤相关的不同类型和挑战。 - Question 13: How do you determine the best vector database for your needs?

问题 13:您如何确定最适合您需求的向量数据库?

Advanced search algorithms高级搜索算法

- Question 1: Why it's important to have very good search

问题 1:为什么拥有非常好的搜索很重要 - Question 2: What are the architecture patterns for information retrieval & semantic search, and their use cases?

问题2:信息检索和语义搜索的架构模式是什么,以及它们的用例是什么? - Question 3: How can you achieve efficient and accurate search results in large scale datasets?

问题 3:如何在大规模数据集中获得高效准确的搜索结果? - Question 4: Explain the keyword-based retrieval method

问题 4:解释基于关键字的检索方法 - Question 5: How to fine-tune re-ranking models?

问题 5:如何微调重新排名模型? - Question 6: Explain most common metric used in information retrieval and when it fails?

问题 6:解释信息检索中最常用的指标以及何时失败? - Question 7: I have a recommendation system, which metric should I use to evaluate the system?

问题 7:我有一个推荐系统,我应该使用哪个指标来评估该系统? - Question 8: Compare different information retrieval metrics and which one to use when?

问题 8:比较不同的信息检索指标以及何时使用哪一个?

Language models internal working语言模型内部工作

- Question 1: Detailed understanding of the concept of selfattention

问题 1:详细了解自我注意的概念 - Question 2: Overcoming the disadvantages of the self-attention mechanism

问题 2:克服自我注意机制的缺点 - Question 3: Understanding positional encoding问题 3:了解位置编码

- Question 4: Detailed explanation of Transformer architecture

问题 4:Transformer 架构详解 - Question 5: Advantages of using a transformer instead of LSTM.

问题 5:使用变压器代替 LSTM 的优势。 - Question 6: Difference between local attention and global attention

问题 6:本地关注度与全球关注度的差异 - Question 7: Understanding the computational and memory demands of transformers

问题 7:了解 transformer 的计算和内存需求 - Question 8: Increasing the context length of an LLM.

问题 8:增加 LLM 的上下文长度。 - Question 9: How to Optimizing transformer architecture for large vocabularies

问题 9:如何优化大词汇表的 transformer 架构 - Question 10: What is a mixture of expert models?

问题 10:什么是专家模型的混合?

Supervised finetuning of LLMLLM 的监督微调

- **Question 1: **What is finetuning and why it' s needed in LLM?

问题 1:什么是微调,为什么在 LLM 中需要它? - **Question 2: **Which scenario do we need to finetune LLM?

问题 2:我们需要在哪种情况下微调 LLM? - **Question 3: **How to make the decision of finetuning?

问题 3:如何做出微调的决定? - Question 4: How do you create a fine-tuning dataset for Q&A?

问题 4:如何为 Q&A 创建微调数据集? - Question 5: How do you improve the model to answer only if there is sufficient context for doing so?

问题 5:你如何改进模型,只有在有足够的上下文的情况下才能回答? - Question 6: How to set hyperparameter for fine-tuning

问题 6:如何设置超参数进行微调 - Question 7: How to estimate infra requirements for fine-tuning LLM?

问题 7:如何估计微调 LLM 的基础设施需求? - Question 8: How do you finetune LLM on consumer hardware?

问题 8:如何在消费类硬件上微调 LLM? - Question 9: What are the different categories of the PEFT method?

问题 9:PEFT 方法有哪些不同的类别? - Question 10: Explain different reparameterized methods for finetuning LLM?

问题 10:解释微调 LLM 的不同重新参数化方法? - Question 11: What is catastrophic forgetting in the context of LLMs?

问题 11:在 LLM 的背景下,什么是灾难性遗忘?

Preference Alignment (RLHF/DPO)

首选项对齐 (RLHF/DPO)

- **Question 1: **At which stage you will decide to go for the Preference alignment type of method rather than SFT?

问题 1:在哪个阶段,您将决定使用 Preference alignment 类型的方法而不是 SFT? - Question 2: Explain Different Preference Alignment Methods?

问题 2:解释不同的首选项对齐方法? - Question 3: What is RLHF, and how is it used?

问题 3:什么是 RLHF,如何使用它? - Question 4: Explain the reward hacking issue in RLHF.

问题 4:解释 RLHF 中的奖励黑客问题。

Evaluation of LLM systemLLM 系统的评估

- Question 1: How do you evaluate the best LLM model for your use case?

问题 1:您如何评估最适合您的用例的 LLM 模型? - Question 2: How to evaluate the RAG-based system?

问题 2:如何评估基于 RAG 的系统? - Question 3: What are the different metrics that can be used to evaluate LLM

问题 3:有哪些不同的指标可用于评估 LLM - Question 4: Explain the Chain of verification问题 4:解释验证链

Hallucination control techniques幻觉控制技术

- Question 1: What are the different forms of hallucinations?

问题 1:幻觉有哪些不同形式? - Question 2: How do you control hallucinations at different levels?

问题 2:你如何控制不同层次的幻觉?

Deployment of LLM部署 LLM

- Question 1: Why does quantization not decrease the accuracy of LLM?

问题 1:为什么量化不会降低 LLM 的准确性?

Agent-based system基于代理的系统

- Question 1: Explain the basic concepts of an agent and the types of strategies available to implement agents.

问题 1:解释代理的基本概念以及可用于实施代理的策略类型。 - Question 2: Why do we need agents and what are some common strategies to implement agents?

问题 2:为什么我们需要代理,实施代理的常见策略有哪些? - Question 3: Explain ReAct prompting with a code example and its advantages

问题 3:通过代码示例解释 ReAct 提示及其优势 - Question 4: Explain Plan and Execute prompting strategy

问题 4:解释 Plan 和 Execute 提示策略 - Question 5: Explain OpenAI functions with code examples

问题 5:通过代码示例解释 OpenAI 函数 - Question 6: Explain the difference between OpenAI functions vs LangChain Agents.

问题 6:解释 OpenAI 函数与 LangChain 代理之间的区别。

Prompt Hacking提示黑客攻击

- Question 1: What is prompt hacking and why should we bother about it?

问题 1:什么是即时黑客攻击,我们为什么要为此烦恼? - Question 2: What are the different types of prompt hacking?

问题 2:提示黑客攻击有哪些不同类型? - Question 3: What are the different defense tactics from prompt hacking?

问题 3:与快速黑客攻击有哪些不同的防御策略?

Case study & scenario-based Question案例研究和基于情景的问题

- **Question 1: **How to optimize the cost of the overall LLM System?

问题 1:如何优化整个 LLM 系统的成本?

参考

https://github.com/NirDiamant/RAG_Techniques

https://medium.com/towards-artificial-intelligence/the-best-rag-stack-to-date-8dc035075e13

https://medium.com/@AMGAS14/list/natural-language-processing-0a856388a93a