Deep Learning from Zero (1): PyTorch - Python Deep Learning Neural Network API

文章目录

- [从〇开始深度学习(1)------PyTorch - Python Deep Learning Neural Network API](#从〇开始深度学习(1)——PyTorch - Python Deep Learning Neural Network API)

-

- <零>写在前面

- [<壹>Part 1: Tensors and Operations](#<壹>Part 1: Tensors and Operations)

-

- [1.Section 1: Introducing PyTorch](#1.Section 1: Introducing PyTorch)

-

- [1.1.PyTorch Prerequisites - Neural Network Programming Series](#1.1.PyTorch Prerequisites - Neural Network Programming Series)

- [1.2.PyTorch Explained - Python Deep Learning Neural Network API](#1.2.PyTorch Explained - Python Deep Learning Neural Network API)

- [1.3.PyTorch Install - Quick and Easy](#1.3.PyTorch Install - Quick and Easy)

- [1.4.Cuda Explained - Why Deep Learning Uses GPUs](#1.4.Cuda Explained - Why Deep Learning Uses GPUs)

- [2.Section 2: Introducing Tensors](#2.Section 2: Introducing Tensors)

-

- [2.1.Tensors Explained - Data Structures of Deep Learning](#2.1.Tensors Explained - Data Structures of Deep Learning)

- [2.2.Rank, Axes, and Shape Explained - Tensors for Deep Learning](#2.2.Rank, Axes, and Shape Explained - Tensors for Deep Learning)

- [2.3.CNN Tensor Shape Explained - CNNs and Feature Maps](#2.3.CNN Tensor Shape Explained - CNNs and Feature Maps)

- [2.4.PyTorch Tensors Explained - Neural Network Programming](#2.4.PyTorch Tensors Explained - Neural Network Programming)

-

- [(1) Tensor Attributes](#(1) Tensor Attributes)

- [(2) `torch.dtype`](#(2)

torch.dtype) - [(3) `torch.device`](#(3)

torch.device) - [(4) `torch.layout`](#(4)

torch.layout) - [(5) Creating tensors using data](#(5) Creating tensors using data)

- [(6) Creation options without data](#(6) Creation options without data)

- [2.5.Creating PyTorch Tensors - Best Options](#2.5.Creating PyTorch Tensors - Best Options)

-

- [(1) The difference between `torch.Tensor` and `torch.tensor`](#(1) The difference between

torch.Tensorandtorch.tensor) - [(2) The difference between `torch.as_tensor` and `torch.from_numpy`](#(2) The difference between

torch.as_tensorandtorch.from_numpy) - [(3) The difference between the first two and the last two](#(3) The difference between the first two and the last two)

- [(1) The difference between `torch.Tensor` and `torch.tensor`](#(1) The difference between

- [3.Section 3: Tensor Operations](#3.Section 3: Tensor Operations)

-

- [3.1.Flatten, Reshape, and Squeeze Explained - Tensors for Deep Learning](#3.1.Flatten, Reshape, and Squeeze Explained - Tensors for Deep Learning)

-

- [(1) Reshape](#(1) Reshape)

- [(2) Flatten](#(2) Flatten)

- [3.2.CNN Flatten Operation Visualized - Tensor Batch Processing](#3.2.CNN Flatten Operation Visualized - Tensor Batch Processing)

- [3.3.Tensors for Deep Learning - Broadcasting and Element-wise Operations](#3.3.Tensors for Deep Learning - Broadcasting and Element-wise Operations)

-

- [(1) Arithmetic operations](#(1) Arithmetic operations)

- [(*) Broadcasting Tensors](#(*) Broadcasting Tensors)

- [(2) Comparison Operations](#(2) Comparison Operations)

- [(3) Some Functions](#(3) Some Functions)

- [3.4.ArgMax and Reduction Ops - Tensors for Deep Learning](#3.4.ArgMax and Reduction Ops - Tensors for Deep Learning)

-

- [(1) Reduction Options](#(1) Reduction Options)

- [(2) Argmax](#(2) Argmax)

- [(3) Accessing elements inside tensors](#(3) Accessing elements inside tensors)

- [<贰>Part 2: Neural Network Training](#<贰>Part 2: Neural Network Training)

-

- [1.Section 1: Data and Data Processing](#1.Section 1: Data and Data Processing)

-

- [1.1.Importance of Data in Deep Learning - Fashion MNIST for AI](#1.1.Importance of Data in Deep Learning - Fashion MNIST for AI)

- [1.2.Extract, Transform, Load (ETL) - Deep Learning Data Preparation](#1.2.Extract, Transform, Load (ETL) - Deep Learning Data Preparation)

-

- [(1) What is "ETL"](#(1) What is “ETL”)

- [(2) How to ETL with PyTorch](#(2) How to ETL with PyTorch)

- [1.3.PyTorch Datasets and DataLoaders - Training Set Exploration](#1.3.PyTorch Datasets and DataLoaders - Training Set Exploration)

-

- [(1) PyTorch Dataset: Working with the training set](#(1) PyTorch Dataset: Working with the training set)

- [(2) PyTorch DataLoader: Working with batches of data](#(2) PyTorch DataLoader: Working with batches of data)

- [(3) How to Plot Images Using PyTorch DataLoader](#(3) How to Plot Images Using PyTorch DataLoader)

- [2.Section 2: Neural Networks and PyTorch Design](#2.Section 2: Neural Networks and PyTorch Design)

-

- [2.1.Build PyTorch CNN - Object Oriented Neural Networks](#2.1.Build PyTorch CNN - Object Oriented Neural Networks)

-

- [(1) Quick object oriented programming review](#(1) Quick object oriented programming review)

- [(2) Building a neural network in PyTorch](#(2) Building a neural network in PyTorch)

- [2.2.CNN Layers - Deep Neural Network Architecture](#2.2.CNN Layers - Deep Neural Network Architecture)

-

- [(1) Parameter vs Argument](#(1) Parameter vs Argument)

- [(2) Two types of parameters](#(2) Two types of parameters)

- [(3) Descriptions of parameters](#(3) Descriptions of parameters)

- [(4) Kernel vs Filter](#(4) Kernel vs Filter)

- [2.3.CNN Weights - Learnable Parameters in Neural Networks](#2.3.CNN Weights - Learnable Parameters in Neural Networks)

-

- [(1) Another type of parameters](#(1) Another type of parameters)

- [(2) Getting an Instance the Network](#(2) Getting an Instance the Network)

- [(3) Accessing the Network's Layers](#(3) Accessing the Network's Layers)

- [(4) Accessing the Layer Weights](#(4) Accessing the Layer Weights)

- [2.4.Callable Neural Networks - Linear Layers in Depth](#2.4.Callable Neural Networks - Linear Layers in Depth)

- [2.5.How to Debug PyTorch Source Code - Debugging Setup](#2.5.How to Debug PyTorch Source Code - Debugging Setup)

- [2.6.CNN Forward Method - Deep Learning Implementation](#2.6.CNN Forward Method - Deep Learning Implementation)

-

- [(1) convolutional layers](#(1) convolutional layers)

- [(2) linear layers](#(2) linear layers)

- [2.7.Forward Propagation Explained - Pass Image to PyTorch Neural Network](#2.7.Forward Propagation Explained - Pass Image to PyTorch Neural Network)

- [2.8.Neural Network Batch Processing - Pass Image Batch to PyTorch CNN](#2.8.Neural Network Batch Processing - Pass Image Batch to PyTorch CNN)

- [2.9.CNN Output Size Formula - Bonus Neural Network Debugging Session](#2.9.CNN Output Size Formula - Bonus Neural Network Debugging Session)

- [3.Section 3: Training Neural Networks](#3.Section 3: Training Neural Networks)

-

- [3.1.CNN Training - Using a Single Batch](#3.1.CNN Training - Using a Single Batch)

- [3.2.CNN Training Loop - Using Multiple Epochs](#3.2.CNN Training Loop - Using Multiple Epochs)

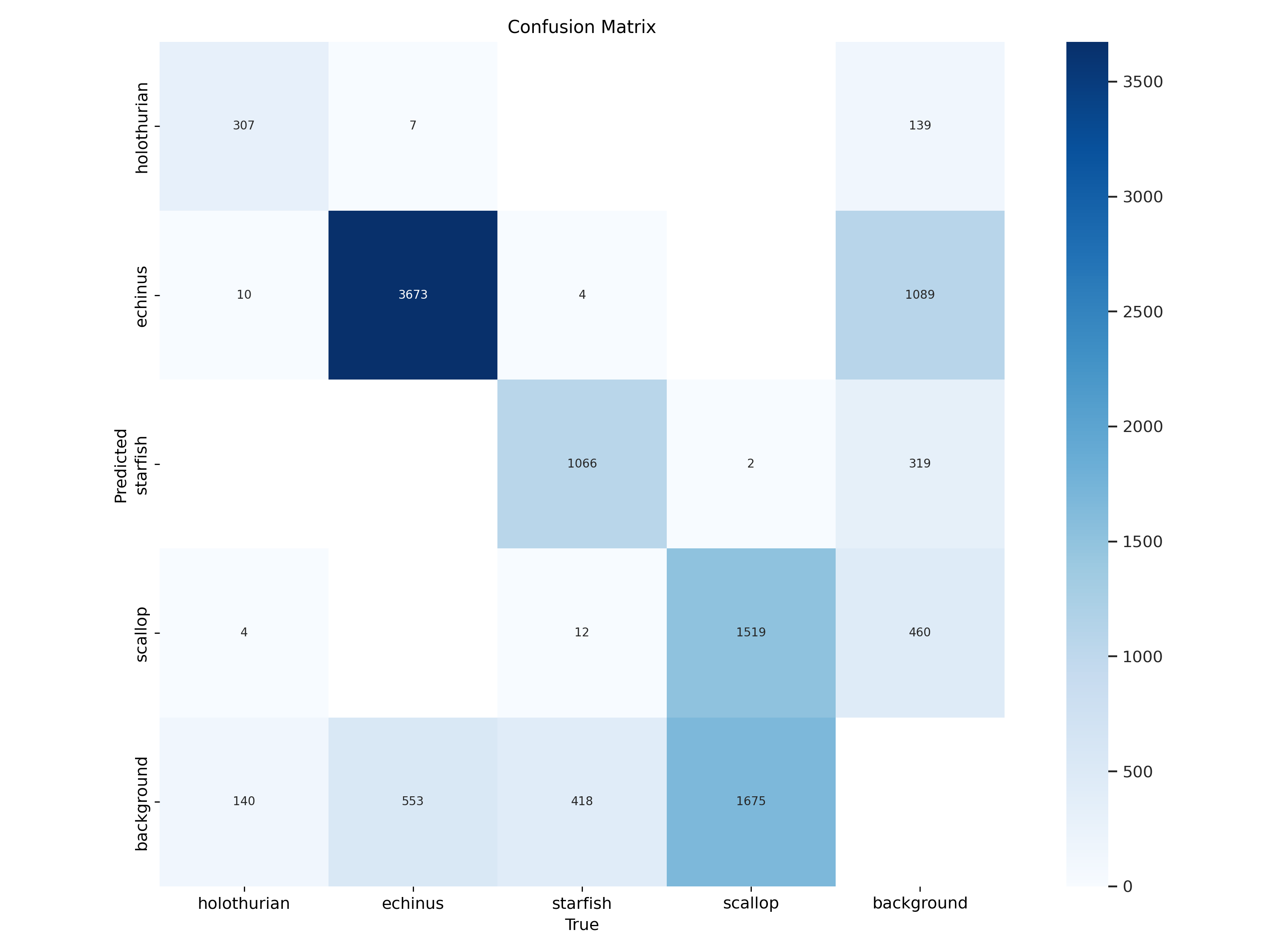

- [3.3.Building a Confusion Matrix - Analyzing Results Part 1](#3.3.Building a Confusion Matrix - Analyzing Results Part 1)

- [3.4.Stack vs Concat - Deep Learning Tensor Ops](#3.4.Stack vs Concat - Deep Learning Tensor Ops)

- [3.5.Using TensorBoard with PyTorch - Analyzing Results Part 2](#3.5.Using TensorBoard with PyTorch - Analyzing Results Part 2)

- [3.6.Hyperparameter Experimenting - Training Neural Networks](#3.6.Hyperparameter Experimenting - Training Neural Networks)

- [4.Section 4: Neural Network Experimentation](#4.Section 4: Neural Network Experimentation)

-

- [4.1.Custom Code - Neural Network Experimentation Code](#4.1.Custom Code - Neural Network Experimentation Code)

- [4.2.Custom Code - Simultaneous Hyperparameter Testing](#4.2.Custom Code - Simultaneous Hyperparameter Testing)

- [4.3.Data Loading - Deep Learning Speed Limit Increase](#4.3.Data Loading - Deep Learning Speed Limit Increase)

- [4.4.On the GPU - Training Neural Networks with CUDA](#4.4.On the GPU - Training Neural Networks with CUDA)

- [4.5.Data Normalization - Normalize a Dataset](#4.5.Data Normalization - Normalize a Dataset)

- [4.6.PyTorch DataLoader Source Code - Debugging Session](#4.6.PyTorch DataLoader Source Code - Debugging Session)

- [4.7.PyTorch Sequential Models - Neural Networks Made Easy](#4.7.PyTorch Sequential Models - Neural Networks Made Easy)

- [4.8.Batch Norm In PyTorch - Add Normalization To Conv Net Layers](#4.8.Batch Norm In PyTorch - Add Normalization To Conv Net Layers)

- <叁>后记

<零> Foreword

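Many people start learning deep learning with Python and then watch Andrew Ng's or Hung-yi Lee's courses. But I'm impatient: Python courses make me restless, and my mind keeps wandering during Ng's and Lee's lectures, so I decided to jump straight into PyTorch. I'll learn Python syntax on the spot whenever I run into a problem, and once I've studied for a while and have some understanding of deep learning, I'll go back and catch up on Ng's and Lee's foundational courses.

It's hard to judge whether this way of learning is good or bad; after all, I can't predict the future (if I could, I wouldn't be here writing study notes). Let me share a past experience. A while ago, when I was learning FPGAs, I had to learn Verilog on the spot. To get hands-on with FPGAs as quickly as possible, I speed-ran the basic Verilog syntax on a website and went straight to work (see: what, no link? Surely it can't be that I still haven't organized those notes after all this time?). While actually working with FPGAs, I did run into strange problems caused by my unfamiliarity with Verilog syntax; the most typical one was that a state machine's state cannot be changed in two `always` blocks. Admittedly, if I had studied Verilog thoroughly from the start, such a silly mistake would certainly not (probably not? hard to say) have happened. But from another angle: if I first put a tool to use, and come back to study it after using it for a while and forming my own understanding, might the result be different?

Of course, this approach presupposes that you already know a programming language.

Back to these notes. I plan to make this a complete set of course notes, covering the whole series from the first lesson to the last. The course I chose is DeepLizard's PyTorch tutorial (reference links below). It has 43 lessons in total, and I personally think it's quite good. Honestly, though, these 43 lessons contain a lot of material, and some chapters are substantial enough for notes of their own. So these notes will also have spin-off posts: if you want the complete course notes, this post is all you need; if you want particular topics, see the corresponding spin-offs. The whole series is divided into two major parts. The Part 1 code is very basic and simple, so feel free to practice it yourself; all of the Part 2 code has been uploaded to GitHub and Gitee, and you can download it if needed:

These notes are written mainly in English. My English is quite limited, so I wanted to use the chance of taking an all-English course to improve it. I've added Chinese glosses to some words in the notes; apologies if they get in the way. Some parts are still written in Chinese, especially content that requires real understanding, which I can't express fluently in English. If anyone actually reads these notes, it will probably be uncomfortable, and even rereading them myself will certainly be uncomfortable enough.

Note added 2024.12.05: Writing in English is just too slow and too painful. Forget it, no more practicing; I'll stick to writing in Chinese. Relevant technical terms will still be given in English, since there's really no way around them.

I'm a beginner too, and I hope to discuss questions with everyone. Please point out any errors (including English expression, grammar, and so on). Because these notes are very long, I haven't had time to proofread them one by one, so there may be problems with both the content and the formatting. Please bear with me, and feel free to point out formatting problems that affect readability.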

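Course links:

PyTorch - Python Deep Learning Neural Network API - YouTube (may require a VPN in mainland China)

1-PyTorch Prerequisites - Syllabus for Neural Network Programming Course_哔哩哔哩_bilibili (a Bilibili re-upload with Chinese subtitles; very friendly)

References for these notes:

PyTorch Prerequisites - Syllabus for Neural Network Programming Course - deeplizard (may require a VPN in mainland China)

从零开始的机器学习实践笔记 (Machine Learning Practice Notes from Scratch) - 知乎 (zhihu.com) (a very good series; unless otherwise stated, "the Zhihu notes" in this article refers to this one)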

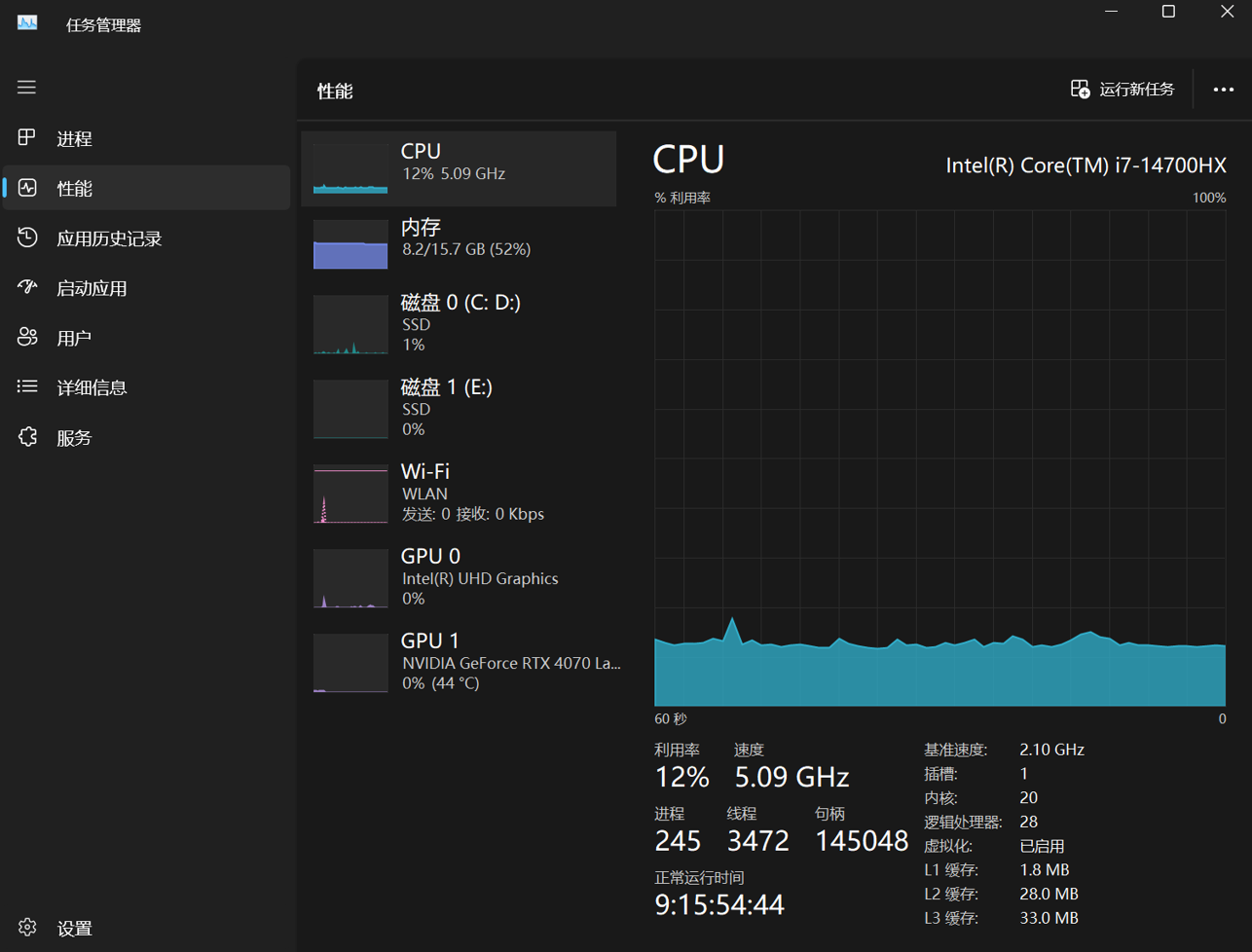

Environment used in this article:

| Environment | Version |
|---|---|
| Windows | Windows 11 |
| PyCharm | 2024.2.4+ |
| Anaconda | 24.11.0 |
| CUDA | 12.4.131 |
| Python | 3.10.15 |
| PyTorch | 2.5.1 |
| torchvision | 0.20.1 |

<壹>Part 1: Tensors and Operations

1.Section 1: Introducing PyTorch

1.1.PyTorch Prerequisites - Neural Network Programming Series

You only need to understand programming; you don't need to know much Python.

1.2.PyTorch Explained - Python Deep Learning Neural Network API

Torch is a deep learning framework based on the Lua programming language. PyTorch is based on Torch, but in Python.

Typical packages of PyTorch:

| Package | Description |
|---|---|
| torch | The top-level PyTorch package and tensor library. |
| torch.nn | "nn" means neural networks. A subpackage that contains classes and modules, like layers, weights and forward functions. |
| torch.autograd | A subpackage that handles the derivative(导数) calculations needed to optimize(优化) the neural network weights. |
| torch.nn.functional | A functional interface that gives us access to functions like loss functions, activation functions, and convolution(卷积) operations. |
| torch.optim | A subpackage that gives us access to typical optimization algorithms(算法) like SGD and Adam. |
| torch.utils | A subpackage that contains utility(多功能,实用) classes like datasets(数据集) and data loaders(数据加载器) that make data preprocessing much easier. |
| torchvision | A separate package that provides access to popular datasets, model architectures, and image transformations for computer vision. |

- Every deep learning framework has two core components: a tensor library and a package for computing derivatives. In PyTorch, these are "torch" and "torch.autograd".

- "torch.nn", "torch.autograd", "torch.nn.functional", "torch.optim", "torch.utils" are subpackages of "torch", while "torchvision" is a separate package.

Using PyTorch lets us focus more on neural networks and less on the framework itself.

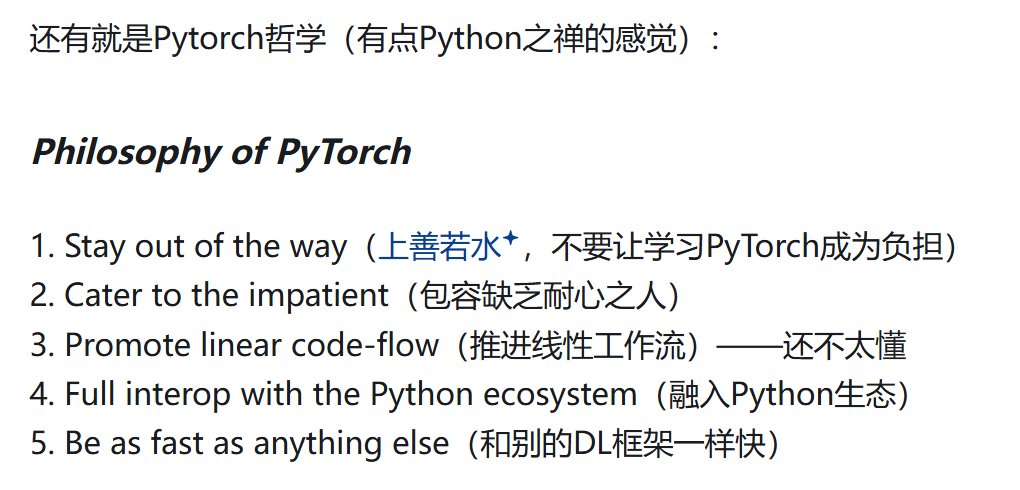

The Philosophy of PyTorch:

- Stay out of the way;

- Cater to the impatient;

- Promote linear code-flow;

- Full inter-operation with the Python ecosystem;

- Be as fast as anything else.

From the Zhihu notes:

Another reason why we need to study PyTorch:

To optimize neural networks, we need to calculate derivatives. To do this computationally, deep learning frameworks use what are called computational graphs. Computational graphs are used to graph the function operations that occur on tensors inside neural networks. These graphs are then used to compute the derivatives needed to optimize the network's weights.

PyTorch uses what is called a Dynamic(动态的) Computational Graph. This means that the graph is generated on the fly(运行中) as the operations occur. This is in contrast to static graphs, which are fully determined before the actual operations occur. It just so happens that many cutting-edge research topics in deep learning require, or benefit greatly from, dynamic graphs.
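To see the "on the fly" part in action, here is a minimal sketch (the function `f` is a made-up example, not from the course). Ordinary Python `if` statements decide which operations run, autograd records only the operations that actually ran, and `backward()` computes the derivatives from that record.

```python
import torch

def f(x):
    # Hypothetical function: which branch runs depends on the data itself,
    # so the graph is only known once the code actually executes
    if x.sum() > 0:
        return (x ** 2).sum()
    return (x ** 3).sum()

x = torch.tensor([1.0, 2.0], requires_grad=True)
y = f(x)          # the x ** 2 branch runs and is recorded
y.backward()      # autograd walks the recorded graph backwards
print(x.grad)     # derivative of sum(x^2) is 2x -> [2., 4.]

z = torch.tensor([-1.0, -2.0], requires_grad=True)
f(z).backward()   # this time the x ** 3 branch is recorded instead
print(z.grad)     # derivative of sum(x^3) is 3x^2 -> [3., 12.]
```

Each call to `f` builds a fresh graph, which is exactly what a static graph framework would not do.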

1.3.PyTorch Install - Quick and Easy

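For details, see my earlier post 从〇开始深度学习(0)------背景知识与环境配置 (Deep Learning from Zero (0): Background Knowledge and Environment Setup) on CSDN.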

1.4.Cuda Explained - Why Deep Learning Uses GPUs

Much of PyTorch is written in Python; however, at performance bottlenecks, it drops down to C, C++, and CUDA to speed up processing and get a performance boost.

With PyTorch, calculations can be selectively carried out either on the CPU or on the GPU. PyTorch also supports multiple GPUs, so you can choose which GPU a program runs on.
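A quick way to check what your own machine offers. This is a sketch using standard `torch.cuda` calls; on a CPU-only install `is_available()` simply returns `False` and the loop is skipped.

```python
import torch

print(torch.__version__)
print(torch.cuda.is_available())   # True if a CUDA-capable GPU and driver are usable
print(torch.cuda.device_count())   # number of visible GPUs (0 on a CPU-only machine)

# List each GPU by index; these indexes are what 'cuda:0', 'cuda:1', ... refer to
if torch.cuda.is_available():
    for i in range(torch.cuda.device_count()):
        print(i, torch.cuda.get_device_name(i))
```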

2.Section 2: Introducing Tensors

2.1.Tensors Explained - Data Structures of Deep Learning

In Computer Science, there are: number, array, 2d-array;

In Mathematics, there are: scalar, vector, matrix;

A number is the same as a scalar, an array is the same as a vector, and a 2d-array is the same as a matrix.

| Indexes required | Computer science | Mathematics |
|---|---|---|
| 0 | number | scalar |
| 1 | array | vector |
| 2 | 2d-array | matrix |

When more than 2 indexes are required to access a specific element, we stop giving specific names to the data structures and begin using more general language.

| Indexes required | Computer science | Mathematics |
|---|---|---|
| n | nd-array | nd-tensor |
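The "indexes required" column can be checked directly in code. A small sketch (the tensors are arbitrary examples): each additional level of nesting needs one more index to reach a single element.

```python
import torch

scalar = torch.tensor(7)                        # number / scalar: 0 indexes
vector = torch.tensor([1, 2, 3])                # array / vector: 1 index
matrix = torch.tensor([[1, 2, 3], [4, 5, 6]])   # 2d-array / matrix: 2 indexes
cube = torch.arange(24).reshape(2, 3, 4)        # nd-tensor with n = 3: 3 indexes

print(scalar.item())           # 7, no index needed
print(vector[2].item())        # 3
print(matrix[1][0].item())     # 4
print(cube[1][2][3].item())    # 23, the last element
```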

2.2.Rank, Axes, and Shape Explained - Tensors for Deep Learning

- Rank:秩
  - The rank of a tensor refers to the number of dimensions(维度) present within the tensor.
  - A tensor's rank tells us how many indexes(索引) are needed to refer to a specific element within the tensor.
  - e.g. `t = [[1,2,3], [4,5,6], [7,8,9]]`: we need two indexes to refer to a specific element, so the rank of this tensor is 2.
- Axes:轴
  - An axis (singular) of a tensor is a specific dimension of a tensor.
  - The rank of a tensor tells us how many axes (plural) a tensor has.
  - The length of each axis tells us how many indexes are available along that axis.
  - e.g. For `t = [[1,2,3], [4,5,6], [7,8,9]]`, the length of each axis is 3.
- Shape:形状
  - The shape of a tensor is determined by the length of each axis.
- Reshape:重塑
  - Reshaping changes the shape, i.e. the grouping of the terms, but does not change the underlying(根本的) data elements themselves.
  - One thing to notice about reshaping is that the product(乘积) of the component(组成部分) values in the shape must equal the total number of elements in the tensor.
  - e.g. A 3 × 3 tensor can be reshaped to a 1 × 9 tensor, since 3 × 3 = 1 × 9 = 9.
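These terms map directly onto tensor methods. A minimal sketch using the same 3 × 3 example: `dim()` gives the rank, `shape` gives the length of each axis, and `numel()` gives the total number of elements that any reshape must preserve.

```python
import torch

t = torch.tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(t.dim())       # rank: 2
print(t.shape)       # torch.Size([3, 3]): each axis has length 3
print(t.numel())     # 9 elements = 3 * 3

r = t.reshape(1, 9)  # 1 * 9 == 9, so this reshape is allowed
print(r)

# 2 * 5 != 9, so this reshape is rejected
try:
    t.reshape(2, 5)
except RuntimeError as err:
    print("RuntimeError:", err)
```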

2.3.CNN Tensor Shape Explained - CNNs and Feature Maps

The shape of a CNN input typically has a length of four. This means that we have a rank-4 tensor with four axes.

Shape: [Batch, Channels, Height, Width]

For the input tensor:

- Batch: The length of this axis tells us how many samples(样本) are in our batch.
- Channels: This axis represents the color channels. Typical values here are 3 for RGB images or 1 if we are working with grayscale(灰度) images.
- Height and Width: The image height and width. Possible values here are 28 × 28, or the 224 × 224 image size used by the VGG16 neural network, or any other image dimensions we can imagine.

For the output tensor:

- Batch: The same as for the input tensor.
- Channels: Can be seen as modified color channels; the output channels of a convolutional layer are called feature maps.

N.B. The last axis, which is where we'll start when reading a shape, is where the actual numbers or data values are located.
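To make the input/output distinction concrete, here is a minimal sketch that pushes a made-up batch through a single convolutional layer. The batch size (3), the 28 × 28 grayscale images, and the layer settings (6 output channels, 5 × 5 kernel) are illustrative assumptions, not values from the course.

```python
import torch
import torch.nn as nn

# Hypothetical input batch: [Batch, Channels, Height, Width]
images = torch.rand(3, 1, 28, 28)

# One conv layer turning 1 input channel into 6 output channels (feature maps)
conv = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
out = conv(images)

print(images.shape)  # torch.Size([3, 1, 28, 28])
print(out.shape)     # torch.Size([3, 6, 24, 24]): same batch, new channels, 28 - 5 + 1 = 24
```

The batch axis is untouched, while the channel axis now counts feature maps rather than colors.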

2.4.PyTorch Tensors Explained - Neural Network Programming

When programming neural networks, data preprocessing is often one of the first steps in the overall process, and one goal of data preprocessing is to transform the raw(原始的) input data into tensor form.

(1) Tensor Attributes

Rank, axes, and shape are attributes of tensors in general; now we want to talk about tensors in PyTorch specifically.

Every `torch.Tensor` has these attributes: `torch.dtype`, `torch.device`, and `torch.layout`:

- `torch.dtype`: The dtype specifies the type of the data that is contained within the tensor.
- `torch.device`: This determines where tensor computations for the given tensor will be performed.
- `torch.layout`: The layout specifies how the tensor is stored in memory.

e.g.

Code:

```python
import torch

t = torch.Tensor()

print(t.dtype)
print(t.device)
print(t.layout)
```

Result:

```
torch.float32
cpu
torch.strided
```

(2) torch.dtype

Tensors contain uniform (of the same type) numerical data with one of these types:

| Data type | dtype | CPU tensor | GPU tensor |
|---|---|---|---|
| 32-bit floating point | torch.float32 | torch.FloatTensor | torch.cuda.FloatTensor |
| 64-bit floating point | torch.float64 | torch.DoubleTensor | torch.cuda.DoubleTensor |
| 16-bit floating point | torch.float16 | torch.HalfTensor | torch.cuda.HalfTensor |
| 8-bit integer (unsigned) | torch.uint8 | torch.ByteTensor | torch.cuda.ByteTensor |
| 8-bit integer (signed) | torch.int8 | torch.CharTensor | torch.cuda.CharTensor |
| 16-bit integer (signed) | torch.int16 | torch.ShortTensor | torch.cuda.ShortTensor |
| 32-bit integer (signed) | torch.int32 | torch.IntTensor | torch.cuda.IntTensor |
| 64-bit integer (signed) | torch.int64 | torch.LongTensor | torch.cuda.LongTensor |

(The table comes from PyTorch Tensors Explained - Neural Network Programming - deeplizard)

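N.B. Tensor operations should happen between tensors with the same type of data. Older PyTorch versions required this strictly; current versions apply type promotion for element-wise arithmetic (e.g. `int64` + `float32` gives `float32`), but operations such as matrix multiplication still expect matching dtypes, so it's good practice to keep them consistent.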
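A quick check of how mixed dtypes behave, assuming a recent PyTorch (such as the 2.5.1 used here). The tensors are arbitrary examples; `torch.result_type` reports the dtype that promotion would pick.

```python
import torch

a = torch.tensor([1, 2, 3])          # int64 (PyTorch's default integer type)
b = torch.tensor([0.5, 0.5, 0.5])    # float32 (the default floating-point type)

c = a + b                            # element-wise add promotes to a common dtype
print(a.dtype, b.dtype, c.dtype)     # torch.int64 torch.float32 torch.float32
print(torch.result_type(a, b))       # torch.float32

# Not every operation promotes: a dot product still expects matching dtypes
try:
    torch.matmul(a, b)
except RuntimeError as err:
    print("RuntimeError:", err)
```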

(3) torch.device

PyTorch supports the use of multiple devices, and they are specified using an index like so:

```python
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(device)
```

or:

```python
device = torch.device('cuda:0')
print(device)
```

The complete code is:

```python
import torch

t = torch.Tensor()
print(t.dtype)
print(t.device)
print(t.layout)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(device)

device = torch.device('cuda:0')
print(device)
```

And the result (on a machine with a CUDA-capable GPU) is:

```
torch.float32
cpu
torch.strided
cuda
cuda:0
```

So, if you have multiple GPUs, you can specify which GPU to use with a different index.

N.B. Tensor operations must happen between tensors that exist on the same device.
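Moving a tensor between devices is done with `.to()`. A minimal sketch with arbitrary values; on a CPU-only machine `device` is just `cpu` and the move does nothing.

```python
import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

cpu_t = torch.tensor([1., 2., 3.])                 # created on the CPU
dev_t = torch.tensor([4., 5., 6.], device=device)  # created on the chosen device

# cpu_t + dev_t would raise a RuntimeError when device is a GPU,
# so move cpu_t to the same device first
result = cpu_t.to(device) + dev_t
print(result, result.device)
```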

(4) torch.layout

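Just don't worry about this one for now: tensors use the default `torch.strided` layout unless you explicitly create something like a sparse tensor.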

Reference: Stride of an array - Wikipedia

(5) Creating tensors using data

```python
import torch
import numpy as np

data = np.array([1,2,3])

o1 = torch.Tensor(data)
o2 = torch.tensor(data)
o3 = torch.as_tensor(data)
o4 = torch.from_numpy(data)

print(o1)
print(o2)
print(o3)
print(o4)
```

The result is:

```
tensor([1., 2., 3.])
tensor([1, 2, 3])
tensor([1, 2, 3])
tensor([1, 2, 3])
```

The first option (o1) has dots after the numbers, indicating that they are floats, while the other three options keep the integer dtype of the NumPy array (typically `int64`, PyTorch's default integer type, which is why no dtype is printed).

(6) Creation options without data

```python
import torch

o1 = torch.eye(2)        # 2 x 2 identity matrix
o2 = torch.zeros([2,2])
o3 = torch.ones([2,2])
o4 = torch.rand([2,2])   # uniform random values in [0, 1)

print(o1)
print(o2)
print(o3)
print(o4)
```

The result is (the values from `torch.rand` differ on every run):

```
tensor([[1., 0.],
        [0., 1.]])
tensor([[0., 0.],
        [0., 0.]])
tensor([[1., 1.],
        [1., 1.]])
tensor([[0.4056, 0.8623],
        [0.5164, 0.9960]])
```

2.5.Creating PyTorch Tensors - Best Options

(1) The difference between torch.Tensor and torch.tensor

A quick recap:

```python
import torch
import numpy as np

data = np.array([1,2,3])

o1 = torch.Tensor(data)
o2 = torch.tensor(data)

print(o1)
print(o2)
```

The result is:

```
tensor([1., 2., 3.])
tensor([1, 2, 3])
```

The first option with the uppercase T is the constructor of the `torch.Tensor` class, and the second option is what we call a factory function that constructs `torch.Tensor` objects and returns them to the caller. (Factory functions are a software design pattern for creating objects.)

It's fine to use either one. However, the factory function torch.tensor() has better documentation and more configuration options, so it gets the winning spot at the moment.
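One practical difference worth knowing: the `torch.Tensor` constructor always uses the global default dtype, while `torch.tensor()` infers the dtype from the data and accepts a `dtype` argument. A small sketch with the same NumPy array:

```python
import torch
import numpy as np

data = np.array([1, 2, 3])

print(torch.get_default_dtype())                      # torch.float32
print(torch.Tensor(data).dtype)                       # torch.float32: constructor uses the default
print(torch.tensor(data).dtype)                       # inferred from the NumPy array
print(torch.tensor(data, dtype=torch.float64).dtype)  # torch.float64, set explicitly
```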

(2) The difference between torch.as_tensor and torch.from_numpy

```python
o3 = torch.as_tensor(data)
o4 = torch.from_numpy(data)

print(o3)
print(o4)
```

The result is:

```
tensor([1, 2, 3])
tensor([1, 2, 3])
```

The third and fourth options are factory functions, too.

The torch.from_numpy() function only accepts numpy.ndarrays, while the torch.as_tensor() function accepts a wide variety of array-like objects including other PyTorch tensors. For this reason, torch.as_tensor() is the winning choice.
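A quick sketch of the input-type difference (the list and tensor inputs are arbitrary examples):

```python
import torch

print(torch.as_tensor([1, 2, 3]))            # a Python list works
print(torch.as_tensor(torch.tensor([4, 5]))) # so does an existing tensor

try:
    torch.from_numpy([1, 2, 3])              # only numpy.ndarray is accepted
    from_numpy_accepts_list = True
except TypeError as err:
    from_numpy_accepts_list = False
    print("TypeError:", err)
```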

(3) The difference between the first two and the last two

If we change the data like this:

```python
import torch
import numpy as np

data = np.array([1,2,3])

o1 = torch.Tensor(data)
o2 = torch.tensor(data)
o3 = torch.as_tensor(data)
o4 = torch.from_numpy(data)

print(o1)
print(o2)
print(o3)
print(o4)

data[0] = 0
data[1] = 0
data[2] = 0

print(o1)
print(o2)
print(o3)
print(o4)
```

The result is:

```
tensor([1., 2., 3.])
tensor([1, 2, 3])
tensor([1, 2, 3])
tensor([1, 2, 3])
tensor([1., 2., 3.])
tensor([1, 2, 3])
tensor([0, 0, 0])
tensor([0, 0, 0])
```

This happens because `torch.Tensor()` and `torch.tensor()` copy their input data, while `torch.as_tensor()` and `torch.from_numpy()` share their input data in memory with the original input object.

Given all of these details, these two are the best options:

- `torch.tensor()`
- `torch.as_tensor()`

The torch.tensor() call is the sort of go-to call, while torch.as_tensor() should be employed when tuning our code for performance.

N.B.

- Since `numpy.ndarray` objects are allocated on the CPU, the `as_tensor()` function must copy the data from the CPU to the GPU when a GPU is being used.
- The memory sharing of `as_tensor()` doesn't work with built-in Python data structures like lists.
- The `as_tensor()` call requires developer knowledge of the sharing feature. This is necessary so we don't inadvertently make an unwanted change in the underlying data without realizing the change impacts multiple objects.
- The `as_tensor()` performance improvement will be greater if there are a lot of back-and-forth operations between `numpy.ndarray` objects and tensor objects. However, if there is just a single load operation, there shouldn't be much impact from a performance perspective.
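The list caveat and the sharing behavior can both be observed directly. A minimal sketch, assuming everything stays on the CPU (no `device` argument); `np.shares_memory` reports whether two arrays use the same buffer.

```python
import torch
import numpy as np

lst = [1, 2, 3]
t = torch.as_tensor(lst)
lst[0] = 100
print(t)  # still tensor([1, 2, 3]): a list can't be shared, so its data was copied

arr = np.array([1, 2, 3])
t2 = torch.as_tensor(arr)
print(np.shares_memory(arr, t2.numpy()))  # True: the tensor reuses the array's buffer
```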

3.Section 3: Tensor Operations

We have the following high-level categories of operations:

- Reshaping operations

- Element-wise operations

- Reduction operations

- Access operations

3.1.Flatten, Reshape, and Squeeze Explained - Tensors for Deep Learning

(1) Reshape

Code:

```python
import torch

t = torch.tensor([
    [1,1,1,1],
    [2,2,2,2],
    [3,3,3,3]
], dtype=torch.float32)

print(t)
print(t.shape)
print(t.reshape([1,12]))
print(t.reshape([2,6]))
print(t.reshape([3,4]))
print(t.reshape([4,3]))
```

Result:

```
tensor([[1., 1., 1., 1.],
        [2., 2., 2., 2.],
        [3., 3., 3., 3.]])
torch.Size([3, 4])
tensor([[1., 1., 1., 1., 2., 2., 2., 2., 3., 3., 3., 3.]])
tensor([[1., 1., 1., 1., 2., 2.],
        [2., 2., 3., 3., 3., 3.]])
tensor([[1., 1., 1., 1.],
        [2., 2., 2., 2.],
        [3., 3., 3., 3.]])
tensor([[1., 1., 1.],
        [1., 2., 2.],
        [2., 2., 3.],
        [3., 3., 3.]])
```

N.B. `t.reshape()` doesn't change `t` itself; it returns the reshaped result.

Code:

```python
print(t)
```

Result:

```
tensor([[1., 1., 1., 1.],
        [2., 2., 2., 2.],
        [3., 3., 3., 3.]])
```

We can also increase or decrease the rank. For example:

Code:

```python
print(t.reshape([2,2,3]))
```

Result:

```
tensor([[[1., 1., 1.],
         [1., 2., 2.]],

        [[2., 2., 3.],
         [3., 3., 3.]]])
```

(2) Flatten

A flatten operation on a tensor reshapes the tensor to have a shape that is equal to the number of elements contained in the tensor. This is the same thing as a 1d-array of elements.

Flattening a tensor means to remove all of the dimensions except for one.

We'll see that flatten operations are required when passing an output tensor from a convolutional layer to a linear layer.

Create a Python function:

```python
def flatten(t):
    t = t.reshape(1, -1)  # one row holding every element
    t = t.squeeze()       # drop the length-1 axis, leaving a rank-1 tensor
    return t
```

- In PyTorch, the `-1` tells the `reshape()` function to figure out what the value should be based on the number of elements contained within the tensor.
- Squeezing a tensor removes the dimensions or axes that have a length of one.
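PyTorch also has built-in methods for these steps. A small sketch (the tensors are just `torch.ones`, chosen for illustration) showing `squeeze()`, its inverse `unsqueeze()`, and the built-in `flatten()`, which gives the same result as the helper function above:

```python
import torch

t = torch.ones(1, 12)
print(t.shape)                           # torch.Size([1, 12])
s = t.squeeze()                          # removes the length-1 axis
print(s.shape)                           # torch.Size([12])
u = s.unsqueeze(dim=0)                   # adds a length-1 axis back at position 0
print(u.shape)                           # torch.Size([1, 12])

m = torch.ones(3, 4)
print(m.flatten().shape)                 # torch.Size([12]), built-in flatten
print(m.reshape(1, -1).squeeze().shape)  # torch.Size([12]), same as the helper
```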

Code:

```python
print(flatten(t))
```

Result:

```
tensor([1., 1., 1., 1., 2., 2., 2., 2., 3., 3., 3., 3.])
```

3.2.CNN Flatten Operation Visualized - Tensor Batch Processing

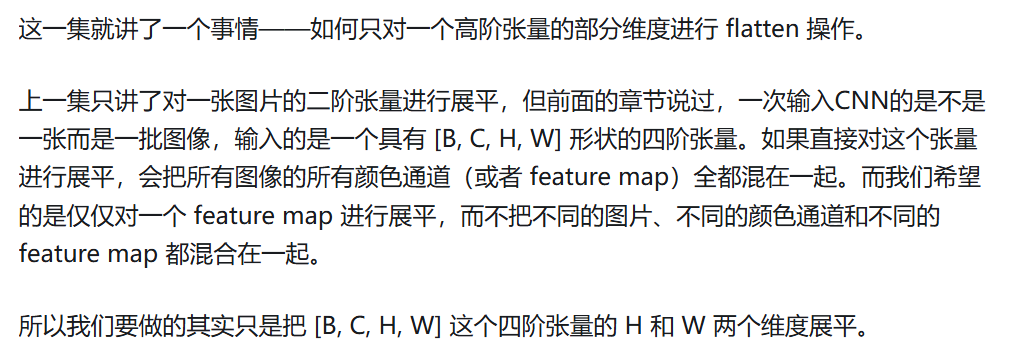

In past posts, we learned about flattening an entire tensor image. But when working with CNNs, we want to only flatten specific axes within the tensor.

From the Zhihu notes:

e.g.

```python
import torch

t1 = torch.tensor([
    [1,1,1,1],
    [1,1,1,1],
    [1,1,1,1],
    [1,1,1,1]
])

t2 = torch.tensor([
    [2,2,2,2],
    [2,2,2,2],
    [2,2,2,2],
    [2,2,2,2]
])

t3 = torch.tensor([
    [3,3,3,3],
    [3,3,3,3],
    [3,3,3,3],
    [3,3,3,3]
])

t = torch.stack((t1, t2, t3))  # batch of 3 images: shape [3, 4, 4]
t = t.reshape(3,1,4,4)         # add a color channel axis: [Batch, Channels, Height, Width]

print(t)
print(t.flatten(start_dim=1))
```

Result:

```
tensor([[[[1, 1, 1, 1],
          [1, 1, 1, 1],
          [1, 1, 1, 1],
          [1, 1, 1, 1]]],


        [[[2, 2, 2, 2],
          [2, 2, 2, 2],
          [2, 2, 2, 2],
          [2, 2, 2, 2]]],


        [[[3, 3, 3, 3],
          [3, 3, 3, 3],
          [3, 3, 3, 3],
          [3, 3, 3, 3]]]])
tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
        [2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2],
        [3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3]])
```

Notice in the call how we specified the `start_dim` parameter. This tells the `flatten()` method which axis it should start the flatten operation at. The `start_dim=1` here is an index, so it's the second axis, which is the color channel axis. We skip over the batch axis, so to speak, leaving it intact(完好无损的).

At the end of the video there is an exercise: for RGB images, how would you flatten the tensor while keeping the color channels? Just change `start_dim=1` to `start_dim=2`.
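A minimal sketch of that answer, using a made-up batch of two 3-channel 4 × 4 images so the shapes are easy to read:

```python
import torch

# Hypothetical batch: [Batch, Channels, Height, Width] = [2, 3, 4, 4]
t = torch.rand(2, 3, 4, 4)

a = t.flatten(start_dim=1)  # channels merged: one row of 3 * 16 values per image
b = t.flatten(start_dim=2)  # channels kept: each color channel flattened on its own
print(a.shape)              # torch.Size([2, 48])
print(b.shape)              # torch.Size([2, 3, 16])
```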

3.3.Tensors for Deep Learning - Broadcasting and Element-wise Operations

An element-wise operation operates on corresponding(相应的) elements between tensors.

Two elements are said to be corresponding if the two elements occupy the same position within the tensor. The position is determined by the indexes used to locate each element.

N.B. Two tensors must have the same shape (or shapes that can be broadcast to the same shape, as explained below) in order to perform element-wise operations on them.

(1) Arithmetic operations

Arithmetic operations are element-wise operations.

Code:

```python
import torch

t1 = torch.tensor([
    [1,2],
    [3,4]
], dtype=torch.float32)

t2 = torch.tensor([
    [5,6],
    [7,8]
], dtype=torch.float32)

print(t1+t2)
print(t1-t2)
print(t1*t2)
print(t1/t2)

print(t1+3)
print(t1-3)
print(t1*3)
print(t1/3)

print(t1.add(3))
print(t1.sub(3))
print(t1.mul(3))
print(t1.div(3))
```

Result:

```
tensor([[ 6.,  8.],
        [10., 12.]])
tensor([[-4., -4.],
        [-4., -4.]])
tensor([[ 5., 12.],
        [21., 32.]])
tensor([[0.2000, 0.3333],
        [0.4286, 0.5000]])
tensor([[4., 5.],
        [6., 7.]])
tensor([[-2., -1.],
        [ 0.,  1.]])
tensor([[ 3.,  6.],
        [ 9., 12.]])
tensor([[0.3333, 0.6667],
        [1.0000, 1.3333]])
tensor([[4., 5.],
        [6., 7.]])
tensor([[-2., -1.],
        [ 0.,  1.]])
tensor([[ 3.,  6.],
        [ 9., 12.]])
tensor([[0.3333, 0.6667],
        [1.0000, 1.3333]])
```

(*) Broadcasting Tensors

Broadcasting is not an operation we call directly, but we need to understand it.

Broadcasting describes how tensors with different shapes are treated during element-wise operations. It is the concept whose implementation allows us to add scalars to higher dimensional tensors.
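Broadcasting is not limited to scalars: a lower-rank tensor can also be stretched to match a higher-rank one when the trailing axes are compatible. A small sketch with arbitrary values (`torch.broadcast_shapes` reports the resulting shape without doing any arithmetic):

```python
import torch

t1 = torch.tensor([[1, 2], [3, 4]], dtype=torch.float32)  # shape (2, 2)
t2 = torch.tensor([2, 4], dtype=torch.float32)            # shape (2,)

# t2 is treated as if it were [[2, 4], [2, 4]] before the element-wise add
print(t1 + t2)                                     # [[3., 6.], [5., 8.]]
print(torch.broadcast_shapes(t1.shape, t2.shape))  # torch.Size([2, 2])
```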

Code:

```python
import torch
import numpy as np

t1 = torch.tensor([
    [1,2],
    [3,4]
], dtype=torch.float32)

print(np.broadcast_to(3, t1.shape))
```

Result:

```
[[3 3]
 [3 3]]
```

This is all under the hood.

(hood: a covering, such as the hood of a coat or the protective hood over a car's engine)

(under the hood: beneath the surface, i.e. in the internal workings of something)

So, t1 + 3 is really this:

```python
t1 + torch.tensor(
    np.broadcast_to(3, t1.shape),
    dtype=torch.float32
)
```

(2) Comparison Operations

Testing code:

```python
import torch

print(torch.tensor([1, 2, 3]) < torch.tensor([3, 1, 2]))
```

Result:

```
tensor([ True, False, False])
```

Comparison operations are element-wise operations.

Code:

```python
import torch

t = torch.tensor([
    [0,-5,0],
    [6,0,7],
    [0,8,0]
], dtype=torch.float32)

print(t.eq(0))  # equal to
print(t.ge(0))  # greater than or equal to
print(t.gt(0))  # greater than
print(t.lt(0))  # less than
print(t.le(7))  # less than or equal to
```

Result:

```
tensor([[ True, False,  True],
        [False,  True, False],
        [ True, False,  True]])
tensor([[ True, False,  True],
        [ True,  True,  True],
        [ True,  True,  True]])
tensor([[False, False, False],
        [ True, False,  True],
        [False,  True, False]])
tensor([[False,  True, False],
        [False, False, False],
        [False, False, False]])
tensor([[ True,  True,  True],
        [ True,  True,  True],
        [ True, False,  True]])
```

(3) Some Functions

Code:

```python
import torch

t = torch.tensor([
    [0,-5,0],
    [6,0,7],
    [0,8,0]
], dtype=torch.float32)

print(t.abs())
print(t.sqrt())  # the square root of -5 is not a real number, so it becomes nan
print(t.neg())
print(t.neg().abs())
```

Result:

```
tensor([[0., 5., 0.],
        [6., 0., 7.],
        [0., 8., 0.]])
tensor([[0.0000,    nan, 0.0000],
        [2.4495, 0.0000, 2.6458],
        [0.0000, 2.8284, 0.0000]])
tensor([[-0.,  5., -0.],
        [-6., -0., -7.],
        [-0., -8., -0.]])
tensor([[0., 5., 0.],
        [6., 0., 7.],
        [0., 8., 0.]])
```

3.4.ArgMax and Reduction Ops - Tensors for Deep Learning

(1) Reduction Operations

e.g.

Code:

```python
import torch

t = torch.tensor([
    [0,1,0],
    [2,0,2],
    [0,3,0]
], dtype=torch.float32)

print(t.sum())
print(t.prod())  # product
print(t.mean())  # average
print(t.std())   # standard deviation(标准差)
```

Result:

```
tensor(8.)
tensor(0.)
tensor(0.8889)
tensor(1.1667)
```

Here is a question, though: do reduction operations always reduce to a tensor with a single element?

The answer is no!

In fact, we often reduce specific axes at a time. This process is important. It's just like we saw with reshaping when we aimed to flatten the image tensors within a batch while still maintaining the batch axis.

e.g.

Code:

```python
import torch

t = torch.tensor([
    [1,1,1,1],
    [2,2,2,2],
    [3,3,3,3]
], dtype=torch.float32)

print(t.sum(dim=0))
print(t.sum(dim=1))
```

Result:

```
tensor([6., 6., 6., 6.])
tensor([ 4.,  8., 12.])
```

Recall this phrase: reduce specific axes at a time.

How do we refer to a specific element of tensor t? With `t[dim0][dim1]`.

| dim | dim1=0 | dim1=1 | dim1=2 | dim1=3 |
|---|---|---|---|---|
| dim0=0 | 1 | 1 | 1 | 1 |
| dim0=1 | 2 | 2 | 2 | 2 |
| dim0=2 | 3 | 3 | 3 | 3 |

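Reducing dim0 makes the sum add vertically (down each column); reducing dim1 makes the sum add horizontally (across each row). The second result is actually easier to understand if written like this: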

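```
tensor([ 4.,
         8.,
        12.])
```

Thinking in terms of vectors, the second result would be a column vector; but the tensor data structure has no notion of row vectors or column vectors, so it is simply a 1-dimensional tensor.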
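If you do want the result to keep a column-like shape, the reduction methods accept a `keepdim` argument that leaves the reduced axis in place with length 1. A small sketch on the same tensor:

```python
import torch

t = torch.tensor([
    [1, 1, 1, 1],
    [2, 2, 2, 2],
    [3, 3, 3, 3]
], dtype=torch.float32)

flat = t.sum(dim=1)                 # rank-1 result, shape [3]
col = t.sum(dim=1, keepdim=True)    # reduced axis kept with length 1, shape [3, 1]
print(flat.shape)                   # torch.Size([3])
print(col.shape)                    # torch.Size([3, 1])
print(col)                          # values 4, 8, 12 arranged as a column
```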

(2) Argmax

Argmax is a mathematical function that tells us which argument, when supplied to a function as input, results in the function's max output value. Argmax returns the index location of the maximum value inside a tensor.

Code:

```python
import torch

t = torch.tensor([
    [1,0,0,2],
    [0,3,3,0],
    [4,0,0,5]
], dtype=torch.float32)

print(t.max())
print(t.argmax())
print(t.flatten())
```

Result:

```
tensor(5.)
tensor(11)
tensor([1., 0., 0., 2., 0., 3., 3., 0., 4., 0., 0., 5.])
```

If we don't specify an axis to the `argmax()` method, it returns the index location of the max value from the flattened tensor, which in this case is indeed 11.

Let's see how we can work with specific axes now.

Code:

```python
import torch

t = torch.tensor([
    [1,0,0,2],
    [0,3,3,0],
    [4,0,0,5]
], dtype=torch.float32)

print(t.max(dim=0))
print(t.argmax(dim=0))
print(t.max(dim=1))
print(t.argmax(dim=1))
```

Result:

```
torch.return_types.max(
values=tensor([4., 3., 3., 5.]),
indices=tensor([2, 1, 1, 2]))
tensor([2, 1, 1, 2])
torch.return_types.max(
values=tensor([2., 3., 5.]),
indices=tensor([3, 1, 3]))
tensor([3, 1, 3])
```

Back to the table from before:

| dim | dim1=0 | dim1=1 | dim1=2 | dim1=3 |
|---|---|---|---|---|
| dim0=0 | 1 | 0 | 0 | 2 |
| dim0=1 | 0 | 3 | 3 | 0 |
| dim0=2 | 4 | 0 | 0 | 5 |

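Reducing dim0 searches vertically for the maximum value and its position (index): the maximum of the first column is 4, at index 2 (dim0=2), and so on. Reducing dim1 searches horizontally for the maximum value and its index; think of the output as a column vector and it all becomes clear.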

In practice, we often use the argmax() function on a network's output prediction tensor, to determine which category has the highest prediction value.
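A minimal sketch of that use case. The prediction values and labels below are made up for illustration (a batch of 3 samples over 4 classes); `argmax(dim=1)` picks the highest-scoring class for each sample, and comparing with the labels counts the correct predictions.

```python
import torch

# Hypothetical network output: one row of class scores per sample
preds = torch.tensor([
    [0.1, 0.7, 0.1, 0.1],
    [0.3, 0.2, 0.4, 0.1],
    [0.6, 0.1, 0.2, 0.1],
])
labels = torch.tensor([1, 2, 1])  # hypothetical ground-truth classes

predicted = preds.argmax(dim=1)   # best class per sample: tensor([1, 2, 0])
num_correct = predicted.eq(labels).sum().item()
print(predicted, num_correct)     # 2 of the 3 predictions match
```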

(3) Accessing elements inside tensors

For a tensor that holds a single value (a scalar or a one-element tensor), we use t.item():

Code:

```python
import torch

t1 = torch.tensor([5], dtype=torch.float32)

print(t1)
print(t1.item())
```

Result:

tensor([5.])

5.0

For multiple values, we use t.tolist() or t.numpy():

```python
import torch

t2 = torch.tensor([5,6], dtype=torch.float32)

print(t2)
print(t2.tolist())
print(t2.numpy())
```

Result:

tensor([5., 6.])

[5.0, 6.0]

[5. 6.]

We can access the numeric values by converting the tensor into a Python list or a NumPy array.
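The three accessors behave differently in ways that are easy to trip over. tolist() builds an independent Python list. For a CPU tensor, numpy() returns an array that shares memory with the tensor. item() refuses tensors with more than one element. The sketch below shows all three. As far as I know the error type for item() is RuntimeError, so treat that detail as an assumption:

```python
import torch

t2 = torch.tensor([5, 6], dtype=torch.float32)

as_list = t2.tolist()    # a new Python list (a copy)
as_array = t2.numpy()    # a NumPy array sharing memory with t2 (CPU tensors only)

t2[0] = 100.0            # modify the tensor in place
print(as_list)           # [5.0, 6.0]: the list copy is unaffected
print(as_array[0])       # the array sees the change: its first element is now 100

try:
    t2.item()            # item() only works for tensors with exactly one element
    item_failed = False
except RuntimeError as err:
    item_failed = True
    print('item() failed:', err)
```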

<贰>Part 2: Neural Network Training

Starting from this part, I have put all of the code on GitHub and Gitee, so you can download it directly.

The project (Bird's-eye view)

There are four general steps that we'll be following as we move through this project:

- Prepare the data (Section 1)
- Build the model (Section 2)
- Train the model (Section 3)
- Analyze the model's results (Section 4)

Personal suggestion: in this part, you need to write a lot and read a lot. I will write out all the code. You can of course copy it directly into your project, but remember to read it carefully, think it through, and run the program yourself.

1.Section 1: Data and Data Processing

Bird's eye view of the process

From a high-level perspective, or bird's eye view, of our deep learning project, our first step is to prepare the data.

- Prepare the data

- Build the model

- Train the model

- Analyze the model's results

1.1.Importance of Data in Deep Learning - Fashion MNIST for AI

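This lesson introduces a dataset: Fashion-MNIST.

I'm not sure whether it's an advertisement. The gist is that the traditional MNIST, the handwritten-digit dataset, is too easy and overused, so Fashion-MNIST was created.

MNIST classifies 10 digits; Fashion-MNIST classifies 10 different kinds of clothing.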

| Index | Label |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |

Dataset link: zalandoresearch/fashion-mnist: A MNIST-like fashion product database. Benchmark (github.com)

1.2.Extract, Transform, Load (ETL) - Deep Learning Data Preparation

(1) What is "ETL"

To prepare our data, we'll be following what is loosely known as an ETL process.

- Extract data from a data source.

- Transform data into a desirable format.

- Load data into a suitable structure.

Our ultimate goal when preparing our data is to do the following (ETL):

- Extract -- Get the Fashion-MNIST image data from the source.

- Transform -- Put our data into tensor form.

- Load -- Put our data into an object to make it easily accessible.

(2) How to ETL with PyTorch

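I didn't fully follow this part in the order the video explains it, so here I'll try a different approach: working backward from what we observe to the underlying principle.

First, let's look at E and T.

The code is as follows: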

```python
import torchvision
import torchvision.transforms as transforms

train_set = torchvision.datasets.FashionMNIST(
    root='./data'
    ,train=True
    ,download=True
    ,transform=transforms.Compose([
        transforms.ToTensor()
    ])
)
```

Click Run and wait patiently:

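The download completes.

This process corresponds to the E (extract) and T (transform) of ETL. The dataset is first downloaded from the link shown in the download output and is then converted into tensor format. Strictly speaking, the ToTensor conversion is applied lazily, each time a sample is accessed (see __getitem__ in the MNIST source below), rather than once at download time.

First, let's go through these parameters: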

| Parameter | Description |
|---|---|
| root | The location on disk where the data is located. |
| train | Whether the dataset is the training set (True) or the test set (False). |
| download | Whether the data should be downloaded. |
| transform | A composition of transformations that should be performed on the dataset elements. |

With that, these three lines are easy to understand:

root='./data'

,train=True

,download=True

The tricky part is these two lines:

train_set = torchvision.datasets.FashionMNIST(

,transform=transforms.Compose([

transforms.ToTensor()

])

)

If, like me, you have never studied Python, I suggest pausing here to learn what a "class" is. My personal notes: 囫囵吞枣学Python(1)------类

Let's start with the first line: train_set = torchvision.datasets.FashionMNIST()

In PyCharm, hold Ctrl and click torchvision to jump to its definition, where we find this line:

```python
from torchvision import _meta_registrations, datasets, io, models, ops, transforms, utils  # usort:skip
```

In other words, datasets is a sub-package of torchvision. Next, jump to the definition of FashionMNIST:

```python
class FashionMNIST(MNIST):
    """`Fashion-MNIST <https://github.com/zalandoresearch/fashion-mnist>`_ Dataset.

    Args:
        root (str or ``pathlib.Path``): Root directory of dataset where ``FashionMNIST/raw/train-images-idx3-ubyte``
            and ``FashionMNIST/raw/t10k-images-idx3-ubyte`` exist.
        train (bool, optional): If True, creates dataset from ``train-images-idx3-ubyte``,
            otherwise from ``t10k-images-idx3-ubyte``.
        download (bool, optional): If True, downloads the dataset from the internet and
            puts it in root directory. If dataset is already downloaded, it is not
            downloaded again.
        transform (callable, optional): A function/transform that takes in a PIL image
            and returns a transformed version. E.g, ``transforms.RandomCrop``
        target_transform (callable, optional): A function/transform that takes in the
            target and transforms it.
    """

    mirrors = ["http://fashion-mnist.s3-website.eu-central-1.amazonaws.com/"]

    resources = [
        ("train-images-idx3-ubyte.gz", "8d4fb7e6c68d591d4c3dfef9ec88bf0d"),
        ("train-labels-idx1-ubyte.gz", "25c81989df183df01b3e8a0aad5dffbe"),
        ("t10k-images-idx3-ubyte.gz", "bef4ecab320f06d8554ea6380940ec79"),
        ("t10k-labels-idx1-ubyte.gz", "bb300cfdad3c16e7a12a480ee83cd310"),
    ]
    classes = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat", "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]
```

This time it is not a package but a class. It is also a subclass that inherits from the parent class MNIST.

Scroll up and you'll find the definition of MNIST:

```python
class MNIST(VisionDataset):
    """`MNIST <http://yann.lecun.com/exdb/mnist/>`_ Dataset.

    Args:
        root (str or ``pathlib.Path``): Root directory of dataset where ``MNIST/raw/train-images-idx3-ubyte``
            and ``MNIST/raw/t10k-images-idx3-ubyte`` exist.
        train (bool, optional): If True, creates dataset from ``train-images-idx3-ubyte``,
            otherwise from ``t10k-images-idx3-ubyte``.
        download (bool, optional): If True, downloads the dataset from the internet and
            puts it in root directory. If dataset is already downloaded, it is not
            downloaded again.
        transform (callable, optional): A function/transform that takes in a PIL image
            and returns a transformed version. E.g, ``transforms.RandomCrop``
        target_transform (callable, optional): A function/transform that takes in the
            target and transforms it.
    """

    mirrors = [
        "http://yann.lecun.com/exdb/mnist/",
        "https://ossci-datasets.s3.amazonaws.com/mnist/",
    ]

    resources = [
        ("train-images-idx3-ubyte.gz", "f68b3c2dcbeaaa9fbdd348bbdeb94873"),
        ("train-labels-idx1-ubyte.gz", "d53e105ee54ea40749a09fcbcd1e9432"),
        ("t10k-images-idx3-ubyte.gz", "9fb629c4189551a2d022fa330f9573f3"),
        ("t10k-labels-idx1-ubyte.gz", "ec29112dd5afa0611ce80d1b7f02629c"),
    ]

    training_file = "training.pt"
    test_file = "test.pt"
    classes = [
        "0 - zero",
        "1 - one",
        "2 - two",
        "3 - three",
        "4 - four",
        "5 - five",
        "6 - six",
        "7 - seven",
        "8 - eight",
        "9 - nine",
    ]

    # .... (too much code to paste here; omitted to save space)

    def __init__(
        self,
        root: Union[str, Path],
        train: bool = True,
        transform: Optional[Callable] = None,
        target_transform: Optional[Callable] = None,
        download: bool = False,
    ) -> None:
        super().__init__(root, transform=transform, target_transform=target_transform)
        self.train = train  # training set or test set

        if self._check_legacy_exist():
            self.data, self.targets = self._load_legacy_data()
            return

        if download:
            self.download()

        if not self._check_exists():
            raise RuntimeError("Dataset not found. You can use download=True to download it")

        self.data, self.targets = self._load_data()

    def _check_legacy_exist(self):
        processed_folder_exists = os.path.exists(self.processed_folder)
        if not processed_folder_exists:
            return False

        return all(
            check_integrity(os.path.join(self.processed_folder, file)) for file in (self.training_file, self.test_file)
        )

    def _load_legacy_data(self):
        # This is for BC only. We no longer cache the data in a custom binary, but simply read from the raw data
        # directly.
        data_file = self.training_file if self.train else self.test_file
        return torch.load(os.path.join(self.processed_folder, data_file), weights_only=True)

    def _load_data(self):
        image_file = f"{'train' if self.train else 't10k'}-images-idx3-ubyte"
        data = read_image_file(os.path.join(self.raw_folder, image_file))

        label_file = f"{'train' if self.train else 't10k'}-labels-idx1-ubyte"
        targets = read_label_file(os.path.join(self.raw_folder, label_file))

        return data, targets

    def __getitem__(self, index: int) -> Tuple[Any, Any]:
        """
        Args:
            index (int): Index

        Returns:
            tuple: (image, target) where target is index of the target class.
        """
        img, target = self.data[index], int(self.targets[index])

        # doing this so that it is consistent with all other datasets
        # to return a PIL Image
        img = Image.fromarray(img.numpy(), mode="L")

        if self.transform is not None:
            img = self.transform(img)

        if self.target_transform is not None:
            target = self.target_transform(target)

        return img, target

    def __len__(self) -> int:
        return len(self.data)
```

In other words, the so-called

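```python
train_set = torchvision.datasets.FashionMNIST(
    root='./data'
    ,train=True
    ,download=True
    ,transform=transforms.Compose([
        transforms.ToTensor()
    ])
)
```

and, in the note 囫囵吞枣学Python(1)------类, the line

```python
student1 = Students('Jim', 18)
```

are not fundamentally different. Both call a class with arguments to create an object, that is, an instance of that class.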

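Back to the two tricky lines:

```python
train_set = torchvision.datasets.FashionMNIST(
    # root, train and download as above
    transform=transforms.Compose([
        transforms.ToTensor()
    ])
)
```

The first line is now explained. Now look at the second one: transform=transforms.Compose([transforms.ToTensor()]). Recall the explanation of transform in the table above:

transform: A composition of transformations that should be performed on the dataset elements.

Read literally, the code converts the data into tensors, and that is exactly what happens. Compose and ToTensor are both classes in transforms. ToTensor converts a PIL image (or NumPy array) with values in [0, 255] into a float tensor of shape (C, H, W) with values in [0.0, 1.0]. Compose chains several transforms together.

The only remaining puzzle is the square brackets "[]". Look closely at the description of transform. It says a composition of transformations, with a plural s, meaning there can be several transformations; here there happens to be only ToTensor. Compose expects a list of transforms, so the brackets are required whenever Compose is used. With a single transform, Compose itself is optional: you could pass transform=transforms.ToTensor() directly. Here it mainly keeps the code in a uniform style and makes it easy to add more steps later.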

That covers E and T; now let's look at L.

```python
import torch
import torchvision
import torchvision.transforms as transforms

train_set = torchvision.datasets.FashionMNIST(
    root='./data'
    ,train=True
    ,download=True
    ,transform=transforms.Compose([
        transforms.ToTensor()
    ])
)

train_loader = torch.utils.data.DataLoader(train_set
    ,batch_size=1000
    ,shuffle=True
)

print(train_loader)
```

The only addition is the train_loader line. It's simple:

| Parameter | Description |
|---|---|
| batch_size | How many samples per batch to load. |
| shuffle | Set to True to have the data reshuffled at every epoch. |
| num_workers | How many subprocesses to use for data loading. 0 means that the data will be loaded in the main process. (default: 0) |
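To see what batch_size means in practice without downloading anything, here is a sketch that uses a hypothetical stand-in dataset: a TensorDataset of 2,500 random 1x28x28 "images" with random labels. With 2,500 samples and batch_size=1000, we get two full batches and one smaller final batch. The drop_last=True option discards that incomplete batch. By the same arithmetic, the real 60,000-image train_set with batch_size=1000 gives 60 batches per epoch:

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

# Hypothetical stand-in for train_set: 2,500 random "images" with labels 0-9
images = torch.randn(2500, 1, 28, 28)
labels = torch.randint(0, 10, (2500,))
dataset = TensorDataset(images, labels)

loader = DataLoader(dataset, batch_size=1000, shuffle=True)
batch_sizes = [batch_images.shape[0] for batch_images, batch_labels in loader]
print(len(loader), batch_sizes)   # 3 [1000, 1000, 500]

# drop_last=True throws away the final incomplete batch
loader_full = DataLoader(dataset, batch_size=1000, shuffle=True, drop_last=True)
print(len(loader_full))           # 2
```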

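That completes the ETL process.

After reading through these notes and then rewatching the video, it no longer feels so strange. The biggest obstacle in this part comes from Python syntax itself, not from any difficulty in the content.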

1.3.PyTorch Datasets and DataLoaders - Training Set Exploration

(1) PyTorch Dataset: Working with the training set

- Typical functions:

```python
import torch
import torchvision
import torchvision.transforms as transforms

train_set = torchvision.datasets.FashionMNIST(
    root='./data'
    ,train=True
    ,download=True
    ,transform=transforms.Compose([
        transforms.ToTensor()
    ])
)

train_loader = torch.utils.data.DataLoader(train_set
    ,batch_size=10
    ,shuffle=True
)

print(len(train_set))
print(train_set.targets)
print(train_set.targets.bincount())
```

Result:

60000

tensor([9, 0, 0, ..., 3, 0, 5])

tensor([6000, 6000, 6000, 6000, 6000, 6000, 6000, 6000, 6000, 6000])

- To see how many images are in our training set, we can check the length of the dataset using Python's len() function;
- To see the label of each image, we can use the train_set.targets attribute;
- To see how many of each label exist in the dataset, we can use PyTorch's bincount() function.

- Class imbalance

Class imbalance is a common problem. In our case, however, we have just seen that the Fashion-MNIST dataset is balanced (each of the 10 classes has exactly 6,000 images), so we need not worry about it for our project.

- Accessing data in the training set

To access a single sample in the training set:

```python
sample = next(iter(train_set))
print('len:', len(sample))

image, label = sample
print('types:', type(image), type(label))
print('shape:', image.shape)
```

Result:

len: 2

types: <class 'torch.Tensor'> <class 'int'>

shape: torch.Size([1, 28, 28])

The code image, label = sample is equivalent to image = sample[0] followed by label = sample[1] (sequence unpacking). We don't have to worry too much about how next and iter work.

To show the image on the screen:

```python
import torch
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt

train_set = torchvision.datasets.FashionMNIST(
    root='./data'
    , train=True
    , download=True
    , transform=transforms.Compose([
        transforms.ToTensor()
    ])
)

train_loader = torch.utils.data.DataLoader(
    train_set, batch_size=10
)

sample = next(iter(train_set))
print('len:', len(sample))

image, label = sample
print('types:', type(image), type(label))
print('shape:', image.shape)
print('label:', label)

# squeeze() drops the size-1 channel axis: [1, 28, 28] -> [28, 28]
plt.imshow(image.squeeze(), cmap="gray")
plt.show()
```

We need to import matplotlib.pyplot as plt. Note that image.squeeze() removes the channel axis of size 1, so imshow() receives a 28 x 28 array it can display as a grayscale image.

Result:

len: 2

types: <class 'torch.Tensor'> <class 'int'>

shape: torch.Size([1, 28, 28])

label: 9
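About next(iter(train_set)): a dataset class like FashionMNIST defines __getitem__ and __len__ (see the MNIST source above) but, as far as I can tell, no __iter__ of its own. Python therefore falls back to calling __getitem__(0), __getitem__(1), and so on, so next(iter(train_set)) returns the same pair as train_set[0]. The sketch below shows the mechanism with a hypothetical ToyDataset whose items are placeholder strings instead of image tensors:

```python
class ToyDataset:
    """Hypothetical minimal map-style dataset: only __getitem__ and __len__."""

    def __init__(self, items):
        self.items = items

    def __getitem__(self, index):
        return self.items[index]     # raises IndexError past the end, which stops iteration

    def __len__(self):
        return len(self.items)


ds = ToyDataset([('img0', 9), ('img1', 0), ('img2', 0)])

it = iter(ds)      # no __iter__, so Python iterates by calling __getitem__(0), (1), ...
print(next(it))    # ('img0', 9), same as ds[0]
print(next(it))    # ('img1', 0), same as ds[1]
print(list(ds))    # all three items; iteration stops at the IndexError
```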

(2) PyTorch DataLoader: Working with batches of data

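Compare the code we just wrote with the following, which takes a whole batch from a DataLoader instead of a single sample from the dataset: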

```python
import torch
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import numpy as np

train_set = torchvision.datasets.FashionMNIST(
    root='./data'
    , train=True
    , download=True
    , transform=transforms.Compose([
        transforms.ToTensor()
    ])
)

display_loader = torch.utils.data.DataLoader(
    train_set, batch_size=10
)

batch = next(iter(display_loader))
print('len:', len(batch))

images, labels = batch
print('types:', type(images), type(labels))
print('shapes:', images.shape, labels.shape)
print('labels:', labels)

grid = torchvision.utils.make_grid(images, nrow=5)
# make_grid returns (C, H, W); plt.imshow expects (H, W, C)
plt.imshow(np.transpose(grid, (1, 2, 0)))
plt.show()
```

Result:

len: 2

types: <class 'torch.Tensor'> <class 'torch.Tensor'>

shapes: torch.Size([10, 1, 28, 28]) torch.Size([10])

labels: tensor([9, 0, 0, 3, 0, 2, 7, 2, 5, 5])

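The two pieces of code look almost identical, with only subtle differences; you can open them side by side in PyCharm or VS Code to compare them. The key difference is that the second one goes through a DataLoader with batch_size=10. As a result, images has shape [10, 1, 28, 28] and labels is a tensor of 10 labels, rather than a single image and a single int.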

(3) How to Plot Images Using PyTorch DataLoader

This part is simply a slightly more involved application, with nothing in particular to watch out for, so here is the code directly:

```python
import torch
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt

train_set = torchvision.datasets.FashionMNIST(
    root='./data'
    , train=True
    , download=True
    , transform=transforms.Compose([
        transforms.ToTensor()
    ])
)

how_many_to_plot = 20

train_loader = torch.utils.data.DataLoader(
    train_set, batch_size=1, shuffle=True
)

plt.figure(figsize=(40,25))
for i, batch in enumerate(train_loader, start=1):
    image, label = batch
    plt.subplot(5,5,i)                               # a 5 x 5 grid has room for up to 25 images
    plt.imshow(image.reshape(28,28), cmap='gray')    # [1, 1, 28, 28] -> [28, 28]
    plt.axis('off')
    plt.title(train_set.classes[label.item()], fontsize=28)
    if i >= how_many_to_plot:
        break
plt.show()
```

2.Section 2: Neural Networks and PyTorch Design

Bird's eye view of the process

From a high-level perspective or bird's eye view of our deep learning project, we prepared our data, and now, we are ready to build our model.

- Prepare the data

- Build the model

- Train the model

- Analyze the model's results

When we say model, we mean our network; the words model and network mean the same thing. What we ultimately want our network to do is model, or approximate, a function that maps image inputs to the correct output class.

The original author recommends the deep learning fundamentals series as an introduction. Even if you don't watch the whole series, you should at least watch these 5 lessons:

If you just want a crash course on CNNs, these are the specific posts to see:

- Convolutional Neural Networks (CNNs) explained

- Visualizing Convolutional Filters from a CNN

- Zero Padding in Convolutional Neural Networks explained

- Max Pooling in Convolutional Neural Networks explained

- Learnable Parameters in a Convolutional Neural Network (CNN) explained

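I visited the blog for his course series; these are the 5 lessons he recommends:

Here is the link to the full video series: Deep Learning playlist overview & Machine Learning intro (youtube.com)

Actually, you don't need to watch these videos right now. My approach is to keep learning and then watch specific videos when I run into problems. Of course, if you want to watch them now, that's fine too.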

2.1.Build PyTorch CNN - Object Oriented Neural Networks

(1) Quick object oriented programming review

I recommend watching the explanation in the video: 17-Build PyTorch CNN - Object Oriented Neural Networks_哔哩哔哩_bilibili(from 01:44 to 09:30)

And the note: 囫囵吞枣学Python(1)------类-CSDN博客

(2) Building a neural network in PyTorch

Today we need to understand two words: layer and forward method.

First: what is a layer?

A layer has two parts:

- a transformation (expressed in code)
- a collection of weights (expressed as data)

Layers in PyTorch are defined by classes, so in code, our layers will be objects. (In the note 囫囵吞枣学Python(1)------类-CSDN博客, Students is defined as a class, so student1 is an object. If this is unclear, I suggest reviewing the first part: Quick object oriented programming review.)

Second: what is the forward method?

When we pass a tensor to our network as input, the tensor flows forward through each layer's transformation until it reaches the output layer. This process of a tensor flowing forward through the network is known as a forward pass, and the forward method is where we define it.

The torch.nn package includes a large number of classes and functions that we can use directly. In particular, nn.Module is the base class that our network class will extend.

First, let's create a simple class to represent a neural network:

```python
class Network:
    def __init__(self):
        self.layer = None

    def forward(self, t):
        t = self.layer(t)
        return t
```

Second, make our Network class extend nn.Module:

```python
import torch.nn as nn

class Network(nn.Module):  # line 1
    def __init__(self):
        super().__init__()  # line 3
        self.layer = None

    def forward(self, t):
        t = self.layer(t)
        return t
```

Both versions have one thing in common: the layer is empty (None). Now let's replace the None with some real layers that we will use often:

```python
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=12, kernel_size=5)

        self.fc1 = nn.Linear(in_features=12 * 4 * 4, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=60)
        self.out = nn.Linear(in_features=60, out_features=10)

    def forward(self, t):
        # implement the forward pass
        return t
```

- Conv2d: convolutional layers;
- Linear: linear layers. Linear layers are also called fully connected layers, and they have a third name that we may sometimes hear: dense. So linear, dense, and fully connected all refer to the same type of layer;
- We used the name out for the last linear layer because the last layer in the network is the output layer.
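Returning to the lines marked # line 1 and # line 3 earlier, here is a minimal sketch of why super().__init__() matters. BrokenNetwork, WorkingNetwork and their small Linear layer are hypothetical names for illustration. As far as I know, nn.Module's own __init__ sets up the internal bookkeeping that registers layers, so assigning a layer before that call fails with an AttributeError:

```python
import torch.nn as nn

class BrokenNetwork(nn.Module):       # hypothetical: forgets "line 3"
    def __init__(self):
        # super().__init__() is missing here
        self.fc = nn.Linear(in_features=4, out_features=2)

class WorkingNetwork(nn.Module):      # hypothetical: same layer, correct setup
    def __init__(self):
        super().__init__()            # let nn.Module initialize itself first
        self.fc = nn.Linear(in_features=4, out_features=2)

try:
    BrokenNetwork()
    broken_failed = False
except AttributeError as err:
    broken_failed = True
    print('AttributeError:', err)

net = WorkingNetwork()
print(net)   # the fc layer is registered, so it appears in the printout
```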

That is the end of this post. It's perfectly normal if you don't fully understand this code yet, so don't worry about it. Just keep learning with this question in mind, and it will gradually become clear.

2.2.CNN Layers - Deep Neural Network Architecture

Our goal in this post is to better understand the layers we have defined. To do this, we're going to learn about the parameters and the values that we passed for these parameters in the layer constructors.

(1) Parameter vs Argument

Parameters are used in function definitions. For this reason, we can think of parameters as place-holders.

Arguments are the actual values that are passed to the function when the function is called.

In our Network's case, the names like in_channels and out_channels are the parameters, and the values that we have specified like 1 and 6 are the arguments.

```python
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=12, kernel_size=5)

        self.fc1 = nn.Linear(in_features=12 * 4 * 4, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=60)
        self.out = nn.Linear(in_features=60, out_features=10)

    def forward(self, t):
        # implement the forward pass
        return t
```

(2) Two types of parameters

- Hyperparameters
- Data dependent hyperparameters

In fact, a lot of terms in deep learning are used loosely, and the word parameter is one of them. Try not to let it throw you off (confuse or distract you).

In other words, these terms are not as important as you imagine.

- Hyperparameters

Hyperparameters are parameters whose values are chosen manually and arbitrarily. As neural network programmers, we choose hyperparameter values mainly by trial and error, and increasingly by using values that have proven to work well in the past.

Put simply, we usually test and tune these parameters to find the values that work best.

In our Network's case, the parameters kernel_size, out_channels and out_features are hyperparameters. The one exception is the out_features of the last layer, which isn't a hyperparameter.

| Parameter | Description |
|---|---|
| kernel_size | Sets the height and width of the filter. |
| out_channels | Sets the number of filters. One filter produces one output channel. |
| out_features | Sets the size of the output tensor. |

One pattern shows up quite often. We increase our out_channels as we add more convolutional layers. After we switch to linear layers, we shrink our out_features as we filter down to our number of output classes. We'll dive deeper into this in the next post.

- Data dependent hyperparameters

Data dependent hyperparameters are parameters whose values depend on the data.

Two typical examples are the in_channels of the first convolutional layer and the out_features of the output layer. The in_channels of the first convolutional layer depends on the number of color channels in the images that make up the training set. Since we are dealing with grayscale images, we know that this value should be 1. The out_features of the output layer depends on the number of classes in our training set. Since the Fashion-MNIST dataset contains 10 classes of clothing, we know that we need 10 output features.

In general, the input to one layer is the output from the previous layer. So all of the in_channels in the convolutional layers and the in_features in the linear layers depend on the data coming from the previous layer.

Why do we have 12 * 4 * 4? The 12 comes from the number of output channels in the previous layer, but why do we have the two 4s? We cover how we get these values in a future post.

(3) Descriptions of parameters

| Layer | Param name | Param value | The param value is |
|---|---|---|---|
| conv1 | in_channels | 1 | the number of color channels in the input image. |
| conv1 | kernel_size | 5 | a hyperparameter. |
| conv1 | out_channels | 6 | a hyperparameter. |
| conv2 | in_channels | 6 | the number of out_channels in the previous layer. |
| conv2 | kernel_size | 5 | a hyperparameter. |
| conv2 | out_channels | 12 | a hyperparameter (higher than the previous conv layer). |
| fc1 | in_features | 12 * 4 * 4 | the length of the flattened output from the previous layer. |
| fc1 | out_features | 120 | a hyperparameter. |
| fc2 | in_features | 120 | the number of out_features of the previous layer. |
| fc2 | out_features | 60 | a hyperparameter (lower than the previous linear layer). |
| out | in_features | 60 | the number of out_features of the previous layer. |
| out | out_features | 10 | the number of prediction classes. |

(4) Kernel vs Filter

We often use the words filter and kernel interchangeably in deep learning. However, there is a technical distinction between these two concepts.

A kernel is a 2D tensor, and a filter is a 3D tensor that contains a collection of kernels. We apply a kernel to a single channel, and we apply a filter to multiple channels.
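The distinction is visible in the shape of a conv layer's weight tensor: (out_channels, in_channels, kernel height, kernel width). Read it as (number of filters, kernels per filter, kernel height, kernel width). A short sketch using the two conv layers from our Network:

```python
import torch.nn as nn

conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
conv2 = nn.Conv2d(in_channels=6, out_channels=12, kernel_size=5)

# weight shape = (filters, kernels per filter, kernel height, kernel width)
print(conv1.weight.shape)   # torch.Size([6, 1, 5, 5]): 6 filters, each holding 1 kernel
print(conv2.weight.shape)   # torch.Size([12, 6, 5, 5]): 12 filters, each holding 6 kernels

one_filter = conv2.weight[0]      # a 3D tensor: one filter
one_kernel = conv2.weight[0, 0]   # a 2D tensor: one kernel inside that filter
print(one_filter.shape, one_kernel.shape)   # torch.Size([6, 5, 5]) torch.Size([5, 5])
```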

2.3.CNN Weights - Learnable Parameters in Neural Networks

(1) Another type of parameters

- Learnable parameters

Learnable parameters are parameters whose values are learned during the training process. With learnable parameters, we typically start out with a set of arbitrary values, and these values are then updated iteratively as the network learns.

In fact, when we say that a network is learning, we specifically mean that the network is learning the appropriate values for the learnable parameters.
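In PyTorch, the learnable parameters of a module can be listed with named_parameters() and counted with parameters(). The sketch below defines the same Network class used in this post, with the forward pass still empty. Each conv layer contributes out_channels x in_channels x 5 x 5 weights plus one bias per filter. Each linear layer contributes in_features x out_features weights plus one bias per output feature:

```python
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=12, kernel_size=5)
        self.fc1 = nn.Linear(in_features=12 * 4 * 4, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=60)
        self.out = nn.Linear(in_features=60, out_features=10)

    def forward(self, t):
        return t   # forward pass not implemented yet

network = Network()

# Every learnable tensor, its shape, and whether gradients will be tracked for it
for name, param in network.named_parameters():
    print(name, tuple(param.shape), param.requires_grad)

total = sum(p.numel() for p in network.parameters())
print('total learnable parameters:', total)   # 32998
```

The total breaks down as 156 (conv1) + 1,812 (conv2) + 23,160 (fc1) + 7,260 (fc2) + 610 (out) = 32,998.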

(2) Getting an Instance of the Network

```python
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=12, kernel_size=5)

        self.fc1 = nn.Linear(in_features=12 * 4 * 4, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=60)
        self.out = nn.Linear(in_features=60, out_features=10)

    def forward(self, t):
        # implement the forward pass
        return t

network = Network()
print(network)
```

Result:

Network(

(conv1): Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))

(conv2): Conv2d(6, 12, kernel_size=(5, 5), stride=(1, 1))

(fc1): Linear(in_features=192, out_features=120, bias=True)

(fc2): Linear(in_features=120, out_features=60, bias=True)

(out): Linear(in_features=60, out_features=10, bias=True)

)

For the convolutional layers, the kernel_size argument is a Python tuple (5, 5) even though we only passed the number 5 in the constructor. This is because our filters actually have a height and a width. When we pass a single number, the code inside the layer's constructor assumes that we want a square filter.

The stride is an additional parameter that we could have set, but we left it out. When the stride is not specified in the layer constructor, the layer sets it automatically, here to the default (1, 1). The stride tells the conv layer how far the filter should slide after each operation in the overall convolution. The tuple (1, 1) says to slide by one unit when moving to the right and also by one unit when moving down.

For the linear layers, we have an additional parameter called bias, which has a default value of True. It is possible to turn this off by setting it to False.
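These defaults can be checked directly on the layer objects. The rect layer below, with its rectangular (3, 5) kernel and (2, 1) stride, is a hypothetical extra added only for contrast:

```python
import torch.nn as nn

conv = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
print(conv.kernel_size, conv.stride)   # (5, 5) (1, 1): the int 5 becomes a square kernel

# Hypothetical layer with an explicitly rectangular kernel and custom stride
rect = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=(3, 5), stride=(2, 1))
print(rect.kernel_size, rect.stride)   # (3, 5) (2, 1)

with_bias = nn.Linear(in_features=60, out_features=10)            # bias=True by default
no_bias = nn.Linear(in_features=60, out_features=10, bias=False)
print(with_bias.bias.shape)            # torch.Size([10]): one bias per output feature
print(no_bias.bias)                    # None
```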

In the video, the author also mentioned the word 'override'. We call it '重写' in Chinese. It's not important to focus on it here, so we don't have to worry about it.

(3) Accessing the Network's Layers

```python
print(network.conv1)
print(network.conv2)
print(network.fc1)
print(network.fc2)
print(network.out)
```

Result:

Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))

Conv2d(6, 12, kernel_size=(5, 5), stride=(1, 1))

Linear(in_features=192, out_features=120, bias=True)

Linear(in_features=120, out_features=60, bias=True)

Linear(in_features=60, out_features=10, bias=True)

(4) Accessing the Layer Weights

Let's first look at some examples.

First, convolutional layers:

```python
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=12, kernel_size=5)

        self.fc1 = nn.Linear(in_features=12 * 4 * 4, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=60)
        self.out = nn.Linear(in_features=60, out_features=10)

    def forward(self, t):
        # implement the forward pass
        return t

network = Network()

print(network.conv1)
print(network.conv1.weight)
print(network.conv1.weight.shape)
```

Result:

Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))

Parameter containing:

tensor([[[[ 0.1232, 0.1745, -0.0915, 0.0615, 0.1538],

[-0.0747, -0.0346, 0.0290, -0.0959, 0.0164],

[ 0.0145, -0.0813, -0.1848, -0.1106, -0.1396],

[-0.1269, -0.0738, -0.0959, -0.1527, 0.0644],

[ 0.1800, -0.0883, -0.0080, 0.1344, 0.0920]]],

[[[-0.0629, 0.1750, -0.1389, 0.1275, -0.1797],

[-0.1755, 0.1946, -0.1925, 0.0654, -0.1339],

[-0.1237, -0.1942, -0.1812, -0.1883, 0.1600],

[ 0.1417, 0.1051, 0.1502, -0.1608, -0.1157],

[ 0.0644, 0.1915, -0.1855, 0.1809, -0.0025]]],

[[[ 0.1701, -0.0435, -0.1149, -0.0337, 0.0830],

[ 0.0006, 0.0686, 0.1429, -0.1244, -0.0048],

[ 0.0632, -0.1001, 0.1045, -0.1651, 0.1013],

[ 0.1934, 0.1950, -0.0350, 0.0422, -0.0931],

[-0.1226, -0.1583, 0.1330, 0.1100, -0.1544]]],

[[[-0.0572, -0.0689, 0.1695, 0.0712, 0.0893],

[ 0.1183, -0.0032, -0.0855, 0.0300, 0.0392],

[-0.1271, -0.0850, -0.1440, -0.0717, 0.1915],

[-0.0673, -0.1499, 0.0396, 0.1853, -0.1650],

[ 0.1341, -0.1745, -0.1512, 0.1500, -0.1642]]],

[[[-0.0190, 0.0146, -0.1059, -0.0617, -0.0630],

[ 0.0148, -0.1553, 0.0026, 0.1763, 0.0672],

[-0.1689, 0.1345, 0.1268, 0.1737, 0.1519],

[ 0.1675, -0.0937, -0.0181, 0.0267, -0.0231],

[-0.1085, 0.0345, -0.0552, 0.0690, -0.0950]]],

[[[ 0.0343, -0.1318, 0.0569, 0.1160, -0.1973],

[-0.0326, 0.1682, 0.1729, -0.0455, -0.0761],

[-0.0124, 0.1356, 0.1893, -0.0778, 0.0509],

[-0.1544, -0.0527, 0.1602, 0.1525, 0.0864],

[ 0.0832, 0.1645, 0.1838, 0.1726, -0.1858]]]], requires_grad=True)

torch.Size([6, 1, 5, 5])

If we change the code to inspect conv2 instead:

```python
import torch.nn as nn

class Network(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=12, kernel_size=5)

        self.fc1 = nn.Linear(in_features=12 * 4 * 4, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=60)
        self.out = nn.Linear(in_features=60, out_features=10)

    def forward(self, t):
        # implement the forward pass
        return t

network = Network()

print(network.conv2)
print(network.conv2.weight)
print(network.conv2.weight.shape)
```

Result:

Conv2d(6, 12, kernel_size=(5, 5), stride=(1, 1))

Parameter containing:

tensor([[[[-4.2884e-02, -1.8646e-02, -3.6468e-02, -2.1878e-02, -6.1812e-02],

[ 2.9104e-02, 4.3656e-02, 2.1134e-02, 6.8243e-02, 3.9659e-02],

[-4.8924e-02, 5.9818e-02, -3.0731e-02, 3.9902e-02, -9.6543e-03],

[ 4.2226e-03, 7.7117e-02, -5.0710e-02, 7.5835e-02, -7.0011e-02],

[ 2.4738e-02, -7.1612e-03, -6.5956e-02, -7.1910e-02, -4.0692e-03]],

[[ 5.9244e-02, -5.4084e-02, -4.1429e-02, -5.3655e-02, -2.9016e-02],

[ 5.7895e-02, 1.6712e-02, -5.7220e-02, 2.0745e-02, 7.3740e-02],

[ 6.4129e-02, 4.3146e-02, 2.0793e-02, 4.8607e-02, -1.8870e-02],

[-1.8324e-02, -6.2051e-02, -4.5263e-02, 3.0059e-02, 2.4538e-02],

[ 2.5017e-02, -6.1615e-02, -1.4608e-02, -2.3294e-02, -1.0028e-02]],

[[ 3.9857e-02, -6.9648e-02, -4.9927e-02, 7.9932e-03, -6.4465e-02],

[-3.1335e-02, 4.7432e-02, 1.8392e-02, -9.7926e-03, 7.6205e-02],

[ 5.1769e-02, -3.8508e-02, 2.1279e-02, 5.8801e-02, -7.6870e-02],

[ 6.5906e-02, -6.5944e-02, 6.4801e-02, -5.0759e-02, -2.9017e-02],

[ 5.1388e-02, 3.3068e-02, 5.1049e-02, 8.1391e-02, 5.6871e-02]],

[[ 7.6068e-03, 5.7764e-02, 1.3304e-02, 2.3320e-02, 7.1435e-02],

[ 6.1237e-02, 2.0400e-02, 2.8379e-05, 7.6489e-02, 7.2457e-02],

[ 9.6467e-03, -1.4250e-02, -7.3180e-02, -2.4022e-02, -2.0675e-02],

[-5.6530e-02, -4.8809e-03, 2.8938e-02, 7.1006e-02, -4.4209e-02],

[-2.6500e-02, -3.5677e-03, 6.7954e-02, -3.1715e-02, 5.1770e-02]],

[[ 1.3207e-02, 3.0945e-02, -7.3218e-02, 5.3696e-02, -5.5415e-02],

[ 6.4929e-02, -3.0792e-02, -2.1799e-02, 4.3814e-02, 6.4807e-02],

[-1.4082e-02, -1.2352e-02, -4.1357e-02, -5.0738e-02, -1.2696e-02],

[ 2.3784e-02, 4.4909e-02, -5.8380e-02, 6.7909e-02, -8.2366e-03],

[-7.9928e-02, 3.4381e-02, -5.9752e-02, -7.8087e-02, 2.9481e-02]],

[[ 2.8638e-02, -6.7411e-02, 4.7579e-02, 1.0333e-02, 6.7232e-02],

[ 4.3504e-02, 5.4487e-02, 5.1175e-02, -6.6485e-03, 6.6359e-02],

[ 3.0006e-02, 6.1103e-02, -2.9882e-02, -6.9170e-02, -3.3795e-02],

[ 5.4645e-02, 5.9930e-02, 7.2578e-02, -3.9443e-02, 5.6268e-02],

[-6.5664e-04, -3.5357e-02, 6.3044e-02, 2.8497e-02, -4.8495e-02]]],

[[[-1.5204e-02, -2.0982e-04, 5.2414e-02, 6.6475e-02, -3.9259e-02],
[ 1.8214e-02, 4.8985e-02, -1.5981e-02, -3.1356e-02, -7.6915e-03],
[-1.8750e-02, -2.3607e-02, -2.1833e-02, 7.7038e-02, -4.7328e-02],
[-4.3814e-02, -4.0106e-02, 3.3002e-02, 7.4004e-02, 7.5722e-02],
[ 3.9917e-02, -3.7348e-02, -6.0048e-02, 2.1473e-02, -5.3794e-02]],

[[-6.9337e-02, -6.3384e-02, -7.8037e-02, 2.7754e-02, 4.9844e-02],
[-1.8384e-02, 8.1333e-02, 8.1422e-02, -2.4105e-03, -6.8615e-02],
[-4.6947e-02, -5.5351e-02, 4.5957e-02, 2.9277e-02, -1.3860e-02],
[-4.6693e-02, 2.8683e-02, 6.1394e-02, 8.0850e-02, -5.0913e-02],
[ 5.7079e-02, 6.9726e-02, -7.1289e-03, 6.4266e-04, 5.3523e-02]],

[[-2.6457e-03, -2.0547e-02, -7.2038e-02, -2.7709e-02, -6.1851e-02],
[-4.1947e-02, 3.4758e-02, 2.2606e-02, -5.2566e-02, 8.0384e-02],
[-3.1233e-02, -4.4558e-02, 8.4542e-03, 3.6306e-02, -8.1493e-02],
[ 1.7083e-02, 8.3195e-04, -7.9616e-02, 2.9549e-03, -5.3943e-02],
[ 3.2066e-02, 7.3952e-03, 1.1623e-02, -5.5744e-02, 1.7965e-02]],

[[ 5.3836e-02, -6.7208e-02, -6.1651e-02, -1.8709e-02, 5.6753e-02],
[ 8.2612e-03, -3.2186e-02, -2.6628e-02, -4.6597e-02, -7.2020e-02],
[-4.3285e-03, 1.1460e-02, -3.0413e-02, 5.2102e-02, -7.5177e-02],
[-7.1347e-02, 5.5588e-02, 6.9111e-02, 1.4323e-02, -5.7546e-02],
[-1.8687e-02, -7.7605e-02, -8.0353e-02, -3.2596e-02, -3.1418e-02]],

[[-6.9735e-02, 5.3523e-02, -5.3416e-02, 4.5771e-02, 5.6954e-02],
[ 6.3120e-02, 5.5763e-02, 1.6067e-02, 8.6567e-03, -4.2644e-02],
[-5.9344e-02, -4.6653e-03, 7.6593e-02, -7.3292e-02, -5.3917e-02],
[ 6.6566e-02, 3.1131e-02, 7.7349e-02, -2.7129e-02, 8.4518e-03],
[ 1.6985e-02, -7.0555e-02, 3.8170e-02, -1.1612e-02, -7.6542e-02]],

[[-6.0196e-02, -6.4580e-02, -3.1164e-03, -4.1933e-02, 7.1420e-03],
[ 7.9487e-02, -1.4821e-02, 2.2844e-03, -1.5251e-03, 3.7557e-02],
[-6.8896e-02, -6.6881e-03, 5.7520e-02, 1.8301e-02, -3.7004e-02],
[-2.5592e-02, 3.0609e-02, 6.9578e-02, 5.5549e-02, -5.3245e-02],
[ 4.6727e-02, 8.0116e-02, -7.5505e-02, -2.8765e-02, -3.5874e-02]]],

[[[ 5.3670e-02, 7.4484e-02, -6.3226e-02, -2.9761e-02, 6.0873e-02],
[-6.1811e-02, 1.6729e-02, 4.5729e-02, 2.8226e-04, -1.3171e-02],
[-1.2364e-02, 7.2936e-02, 1.0765e-02, 3.1374e-02, 1.7582e-02],
[ 1.3305e-02, -6.6938e-02, -6.6351e-02, 4.8234e-02, 2.5997e-02],
[-4.1954e-03, -6.4869e-02, 1.7950e-02, 3.3482e-02, -1.2225e-02]],

[[-1.3839e-02, 3.9010e-02, -6.4779e-02, -7.0044e-02, -2.7837e-02],
[ 5.8636e-02, 7.5278e-02, 7.1607e-02, 5.5469e-02, 5.4468e-02],
[-7.4318e-02, 7.4283e-03, -2.3738e-02, 6.4434e-02, 1.9524e-02],
[ 7.8238e-02, 5.3939e-02, -4.3555e-02, 6.3559e-02, 8.1849e-04],
[-5.5254e-02, -6.8373e-02, 5.0078e-02, 2.6748e-02, 4.5676e-02]],

[[ 7.1268e-02, 3.6513e-03, 2.4753e-02, 3.5536e-02, 2.0245e-02],
[ 5.9411e-02, 6.5015e-02, 6.7302e-02, 5.5706e-02, -7.3357e-02],
[ 6.7356e-02, 2.8092e-02, 8.0472e-02, 6.9567e-02, -3.6824e-02],
[-4.3014e-02, -7.9176e-02, 5.1021e-02, -3.2842e-02, 4.3498e-02],
[-3.7790e-03, 3.6721e-03, 3.3520e-02, 3.8218e-02, -6.1545e-02]],

[[-4.7160e-02, -2.7351e-02, -6.6609e-02, -6.4513e-03, 3.4438e-02],
[-4.4675e-03, -6.5095e-02, 6.1610e-02, 8.0325e-02, 5.7229e-02],
[-1.3750e-02, -1.0938e-02, -4.5011e-02, 6.9686e-02, 5.1559e-02],
[ 5.8902e-02, -1.1045e-02, -4.3365e-02, -2.8516e-04, 7.0693e-02],
[ 5.4149e-02, -3.4944e-02, -4.5348e-02, -4.7880e-02, -6.0826e-02]],

[[ 8.4448e-03, 9.3816e-03, 3.4866e-02, 3.8719e-04, -4.4713e-02],
[-3.7519e-02, 1.4705e-02, 3.1401e-02, 6.1778e-02, -2.9698e-02],
[ 2.0491e-02, -1.3609e-02, 6.7055e-02, 4.1654e-02, -3.3637e-02],
[-6.8364e-02, -5.5866e-02, -7.7622e-02, 1.5276e-02, -3.5520e-02],
[ 3.1254e-02, -7.0029e-02, 4.4888e-02, 5.9723e-02, 3.6382e-02]],

[[-2.3066e-02, 6.7966e-02, 5.1811e-02, -5.9159e-02, 3.1069e-02],
[ 5.1321e-02, 6.7464e-02, 7.5866e-02, -5.5414e-02, 4.0420e-02],
[-4.4354e-02, 7.9306e-02, -1.4644e-02, -9.3875e-03, 2.6070e-02],
[ 7.4850e-02, 6.0425e-02, -2.0909e-02, 5.9285e-02, 4.6566e-02],
[ 1.3756e-02, 1.1649e-02, 2.8588e-02, -1.3022e-03, 2.3256e-02]]],

...,

[[[ 7.6311e-02, 7.5261e-02, 5.7561e-02, -4.3356e-02, -7.2909e-02],
[-4.6708e-02, 5.1551e-02, -4.8101e-02, -5.1413e-02, -3.6152e-02],
[ 6.0626e-02, 5.6325e-04, -2.1743e-02, 3.3400e-02, 7.9141e-02],
[ 3.5604e-02, 6.9508e-02, -2.1984e-02, 5.9585e-02, -9.4945e-03],
[-4.0188e-02, -5.4732e-03, 6.8583e-02, 3.0551e-02, -1.1802e-02]],

[[ 5.2653e-02, 6.1670e-02, 2.6309e-03, 5.3165e-02, -3.8166e-02],
[ 8.1076e-02, 5.7386e-02, -6.1753e-02, 4.9131e-02, 3.6430e-02],
[-4.7202e-02, -4.2705e-02, -4.6333e-02, 6.7525e-02, 4.8547e-02],
[ 7.9853e-02, -8.0172e-02, 4.9944e-03, -5.7631e-02, -6.1132e-03],
[-5.5667e-03, -2.1569e-02, -2.8139e-02, -8.9662e-03, 3.5014e-02]],

[[-3.0472e-02, 3.0703e-02, 5.1314e-02, -6.2341e-02, -6.3894e-02],
[-7.6258e-02, 1.6020e-02, 3.3108e-02, -6.8395e-02, -5.7936e-02],
[ 6.3067e-02, 6.1881e-04, -8.0695e-02, -1.7197e-02, 2.2778e-02],
[ 4.4634e-02, -7.0455e-02, -2.1533e-02, 3.6857e-02, 5.7196e-02],
[ 3.5345e-02, 6.9631e-02, 5.5229e-02, 4.2128e-02, -3.4088e-02]],

[[-7.2163e-03, 3.5563e-02, -3.1936e-02, 1.2877e-02, -2.3022e-02],
[-5.9097e-02, 1.5192e-02, 6.8320e-02, -4.6643e-02, -1.9811e-03],
[-5.9560e-03, 7.7662e-02, 7.9657e-02, -4.4968e-02, -7.6457e-02],
[-1.1028e-02, -3.5175e-02, -1.3390e-02, 5.1161e-02, -5.4926e-02],
[-4.1221e-02, 6.2617e-02, -5.9798e-02, -8.9769e-04, 6.3048e-02]],

[[ 4.4751e-02, -5.1591e-02, -3.6866e-02, -7.4997e-02, 4.9472e-02],
[-6.2221e-02, 6.6295e-02, -5.0621e-02, 3.4758e-02, -2.1337e-02],
[ 5.0706e-02, -5.2147e-02, 6.8346e-02, 3.2746e-02, 7.1333e-02],
[-1.4602e-02, -6.2453e-02, -1.9406e-02, 6.9041e-02, -2.8379e-02],
[ 3.4721e-03, 7.7462e-02, 5.1289e-02, 3.3926e-02, -2.8289e-02]],

[[-5.0928e-02, -2.8340e-02, 2.2817e-02, 7.0458e-02, 4.2438e-02],
[-3.3680e-02, 4.5647e-02, -4.9270e-02, -2.8433e-02, 2.9541e-02],
[ 2.3391e-02, -7.4426e-02, 6.4900e-02, -7.1001e-02, 4.5884e-02],
[-5.7277e-02, -4.6285e-03, 5.3839e-02, -5.6219e-02, 7.9665e-02],
[-8.1319e-02, -8.1240e-02, 1.2502e-02, 5.5328e-02, 2.0030e-02]]],

[[[ 4.8441e-02, 4.8989e-02, -2.4322e-02, -5.9358e-02, -6.8357e-02],
[-2.1089e-02, 4.5388e-02, -5.1134e-03, 4.3126e-02, -1.6892e-03],
[-8.1167e-02, -5.7231e-02, 3.9827e-02, -6.3646e-03, -3.9094e-02],
[-4.2316e-02, 5.3464e-02, -8.8957e-03, -4.7075e-02, -1.7774e-02],
[-6.5931e-02, 1.1694e-02, -3.9309e-02, 4.1809e-02, 1.2976e-03]],

[[-6.8713e-02, -1.7812e-02, -1.4486e-02, 8.3056e-03, -6.8082e-02],
[-1.8569e-02, 2.6123e-02, 2.4870e-05, 5.6066e-02, 4.5435e-02],
[ 5.3947e-02, 1.4763e-02, 1.4906e-02, -5.5155e-02, 4.7769e-02],
[ 5.3297e-02, -3.9101e-02, 4.6465e-02, 1.1151e-02, 5.5033e-02],
[ 3.6748e-03, 3.9723e-02, -4.1154e-02, 4.6245e-02, -3.3245e-02]],

[[-1.8014e-02, -8.0139e-02, -6.3273e-03, -3.8566e-02, 4.0923e-03],
[ 5.8483e-02, -6.5439e-02, 2.0173e-02, 7.2705e-02, -3.5129e-02],
[-4.8423e-02, -5.2663e-02, -1.3957e-02, 2.9158e-02, 7.8463e-02],
[ 7.1398e-04, 6.5734e-02, 3.6854e-02, -2.1278e-02, 3.3324e-02],
[ 3.6285e-02, 4.2179e-02, -2.9803e-02, -4.1720e-03, -2.0233e-02]],

[[-2.7885e-02, 5.2520e-02, 5.1337e-02, -2.6349e-02, 1.5047e-02],
[ 8.1576e-02, -3.3374e-02, 2.2938e-02, -4.8218e-02, 3.5318e-02],
[-6.8747e-02, -4.1312e-02, 7.9037e-03, 6.8197e-02, -5.2138e-02],
[ 2.2267e-03, 2.0724e-02, -5.1848e-02, 4.9394e-02, 7.5763e-02],
[ 3.7441e-02, 2.7114e-02, 2.8150e-02, -5.4438e-02, -5.9701e-02]],

[[ 7.5385e-02, 2.2393e-02, -6.8777e-02, -2.0514e-02, 6.8338e-02],
[-6.6630e-02, 7.4462e-02, 4.0799e-02, -1.2080e-02, 7.0668e-02],
[-7.2318e-03, -4.5113e-02, -8.0733e-03, 6.0332e-02, 7.7593e-02],
[ 1.5821e-02, -2.2018e-02, 7.7987e-02, 4.0169e-02, -6.3680e-02],
[-8.1590e-02, 6.3925e-02, 6.4027e-02, 3.3750e-02, -6.6571e-02]],

[[-4.0221e-02, 2.1132e-02, -7.6372e-02, 3.1901e-02, -2.6639e-03],
[ 6.2732e-02, 2.5971e-02, -3.8870e-02, -5.9896e-02, 3.6378e-02],
[ 1.7306e-02, 4.8518e-02, -3.4341e-02, -5.0257e-03, -7.7493e-02],
[-5.3312e-02, 4.5801e-02, 6.3797e-02, -7.6749e-02, -1.9681e-02],
[ 4.9668e-02, 2.5340e-02, -4.5465e-02, 5.5866e-02, -1.7633e-03]]],

[[[ 3.3887e-02, 4.1100e-02, -6.0800e-02, 3.0658e-02, -2.4294e-02],
[ 2.2048e-02, 3.4223e-02, -2.1182e-02, -1.4165e-02, 2.0991e-02],
[-3.8944e-03, 2.8239e-02, -3.5322e-02, -2.2963e-02, 2.2183e-02],
[-4.6182e-02, 7.5100e-02, -5.7425e-02, -7.0277e-02, 7.4489e-02],
[-6.5370e-02, 6.8510e-02, 2.4596e-02, 7.9349e-03, 4.1632e-02]],

[[-8.0785e-02, -5.5982e-02, -5.2925e-02, 4.4351e-02, -5.4612e-02],
[-2.7587e-02, -4.9968e-02, 4.6770e-02, -4.7240e-02, 5.7632e-02],
[ 2.1552e-02, 1.9329e-03, -3.6635e-02, -4.1714e-04, 7.6460e-02],
[ 8.0785e-02, 5.0883e-02, 7.0737e-02, 4.5160e-02, 1.2882e-03],
[ 7.1417e-02, -2.8139e-02, 6.3305e-02, -2.5239e-02, 7.1895e-02]],

[[-7.3631e-02, -3.3411e-02, 3.2707e-02, -6.8281e-02, 2.5994e-02],
[ 5.2490e-02, -5.4156e-02, -8.1550e-02, -2.4794e-02, -6.3099e-02],
[ 4.9664e-02, 1.6858e-02, -4.8651e-02, 1.4407e-02, -7.8078e-02],
[ 8.1470e-03, 2.8146e-03, 5.2201e-02, -2.3638e-02, -3.0703e-02],
[ 7.4847e-02, -2.3422e-02, 2.0211e-02, -3.9981e-02, 4.2893e-02]],

[[-3.6386e-02, 6.5790e-02, 4.4377e-02, -3.0673e-02, 6.3339e-02],
[-3.0514e-03, 6.0295e-02, 2.9729e-02, -4.2983e-02, 3.9292e-02],
[ 3.1405e-02, -7.3552e-02, 3.4892e-03, -2.5254e-02, 8.5877e-03],