目录

[1.1 saveDataAcount接口慢](#1.1 saveDataAcount接口慢)

[2.1接入 RabbitMQ](#2.1接入 RabbitMQ)

[2.2 接入redis](#2.2 接入redis)

[2.3 监听exampleServiceAPI过来的数据](#2.3 监听exampleServiceAPI过来的数据)

[2.5 生产者](#2.5 生产者)

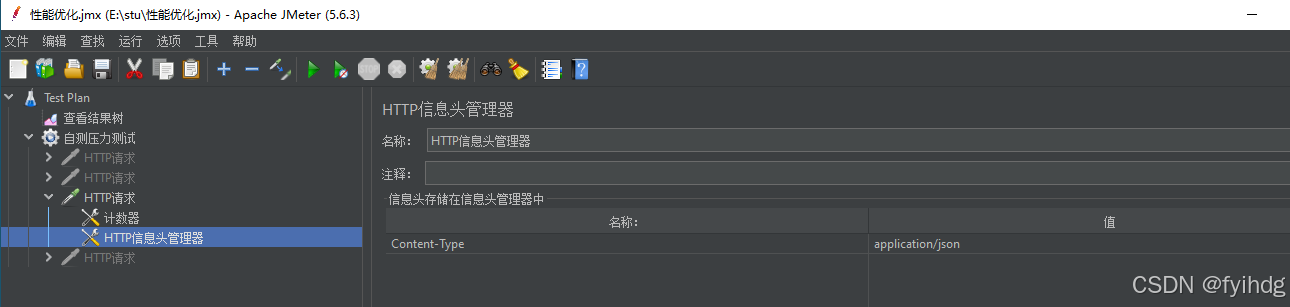

[2.6 配置 application.yml 的pom.xml](#2.6 配置 application.yml 的pom.xml)

[3.1 接入kafka](#3.1 接入kafka)

[3.2 接入RabbitMQ](#3.2 接入RabbitMQ)

[3.3 接入redis](#3.3 接入redis)

[3.4 监听serviceA发过来的消息](#3.4 监听serviceA发过来的消息)

[3.5 模拟业务方收到的kafka消息](#3.5 模拟业务方收到的kafka消息)

[3.6 对外业务接口](#3.6 对外业务接口)

[3.7 生产者](#3.7 生产者)

[3.8 application.yml和pom.xml](#3.8 application.yml和pom.xml)

[4.1 旧接口测试](#4.1 旧接口测试)

[4.2 改造后的接口测试](#4.2 改造后的接口测试)

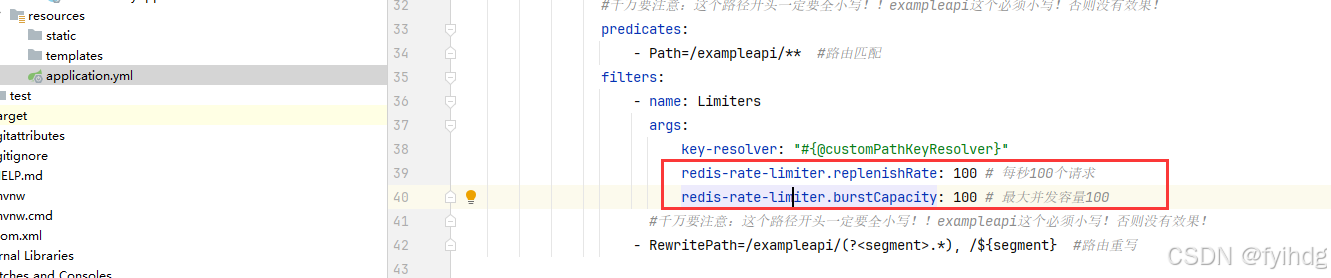

[5.1 限流配置](#5.1 限流配置)

[5.2 实现请求的限流功能](#5.2 实现请求的限流功能)

[5.3 定义限流策略中的键](#5.3 定义限流策略中的键)

[5.4 限流配置](#5.4 限流配置)

[5.5 application.yml和pom.xml](#5.5 application.yml和pom.xml)

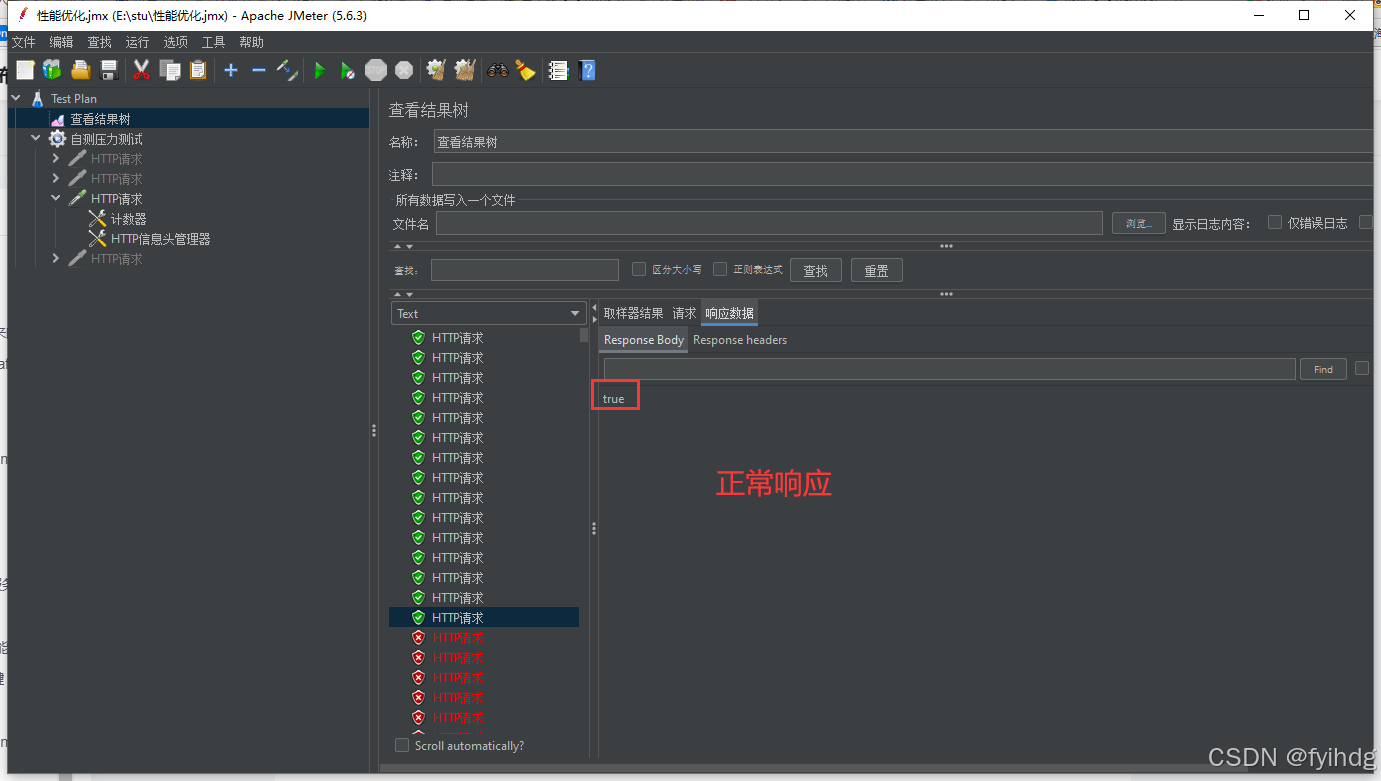

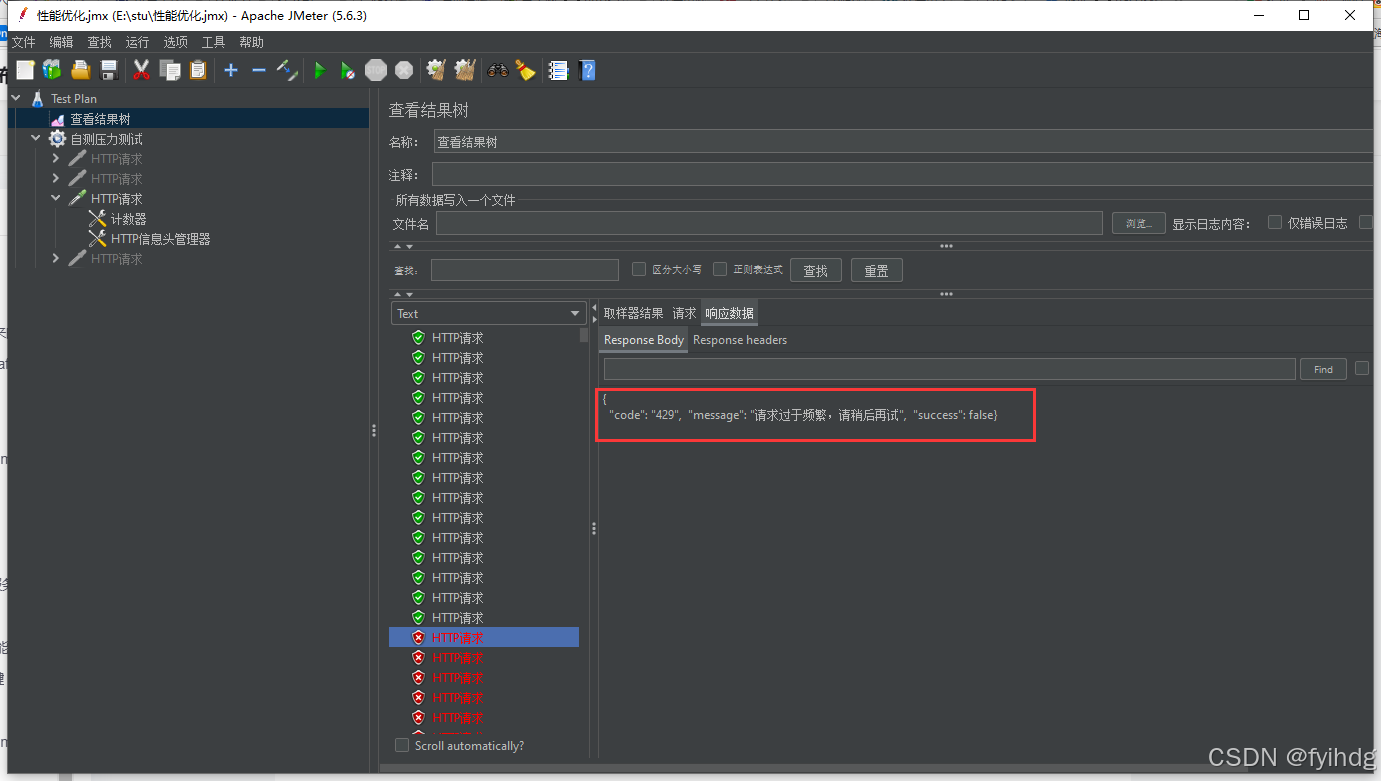

[6. JMeter 限流测试](#6. JMeter 限流测试)

[6.1 JMeter 配置](#6.1 JMeter 配置)

[6.2 压测结果](#6.2 压测结果)

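1. Scenario

1.1 The saveDataAcount endpoint is slow

The requirement is to optimize an existing endpoint and raise the concurrency it can handle. To make this easier to follow, I set up fresh Spring Boot services to demonstrate the process. serviceA exposes a saveDataAcount endpoint that performs poorly and needs optimizing. How would you approach it? One major cause of the slowness is that every request writes to the database; a database can only sustain limited concurrency and cannot hold up in a high-concurrency scenario.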

java

/**
 * Simulated business endpoint
 */
@PostMapping("/saveDataAcount")
public String saveDataAcount(@RequestBody AccountDto accountDto) {
    String accountData = dbService.useDb("select * from account");
    log.info("accountData:{}", accountData);
    // accountService.saveAccount(accountDto);
    return "处理成功!";
}

Table used in the demo:

sql

CREATE TABLE `account` (

`oprid` varchar(11) DEFAULT NULL,

`age` int DEFAULT NULL,

`name` varchar(11) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci;

1.2 Optimization design

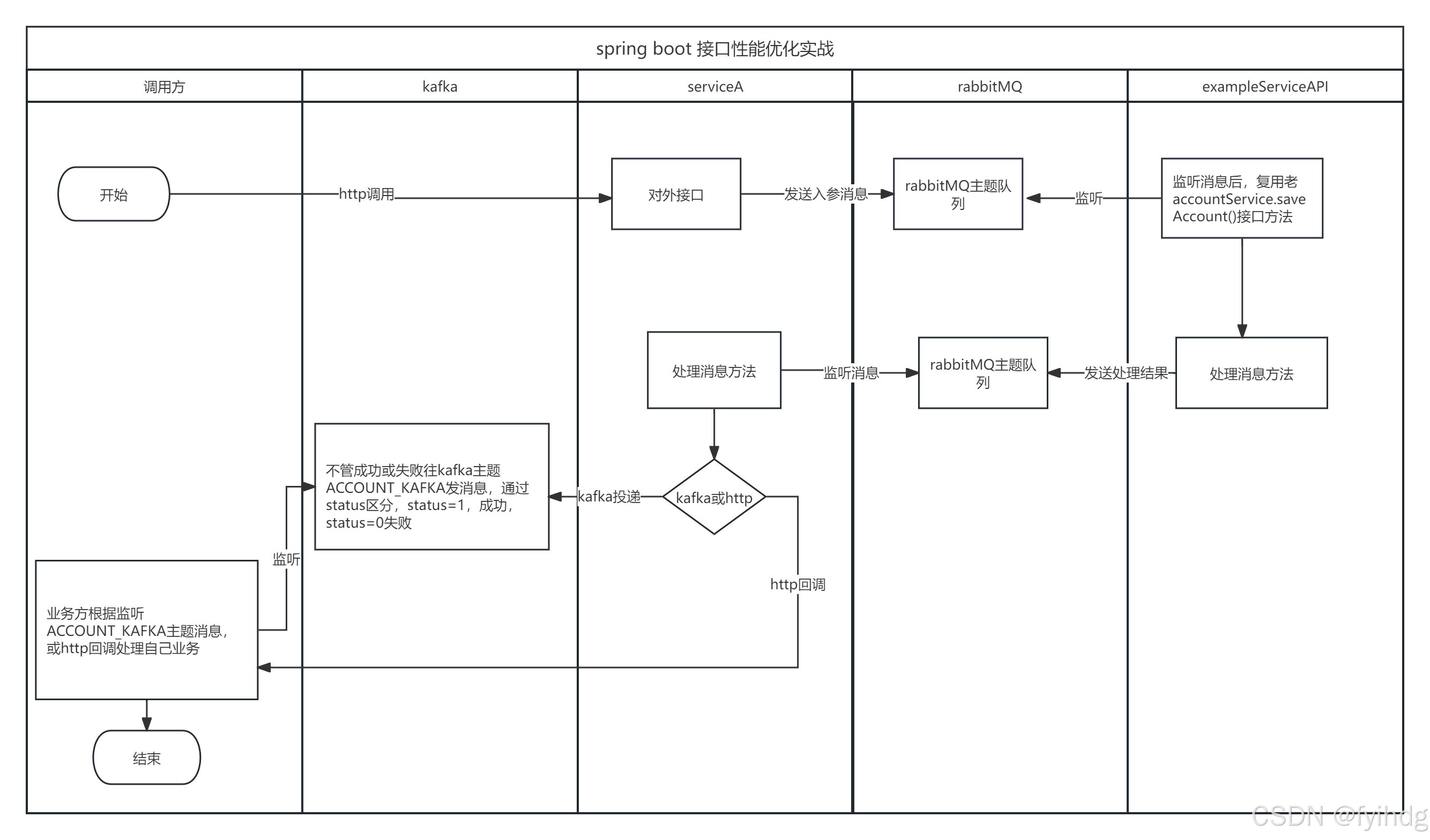

Design notes:

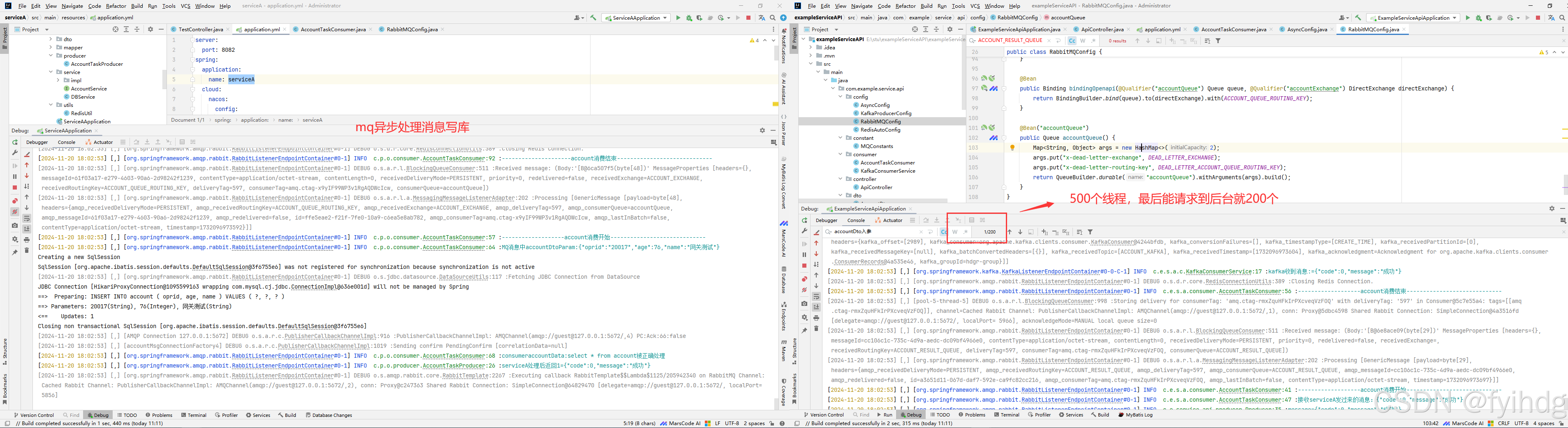

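Stand up a new microservice, exampleServiceAPI, connected to both Kafka and RabbitMQ, and let it expose the external endpoint. When a caller invokes the endpoint, exampleServiceAPI responds immediately and then forwards the request parameters to serviceA as a RabbitMQ message. On receiving the message, serviceA reuses the existing accountService.saveAccount() method to run the business logic and sends the outcome back to exampleServiceAPI over RabbitMQ. exampleServiceAPI consumes that result and, whether it succeeded or failed, publishes it to the Kafka topic ACCOUNT_KAFKA. The status field distinguishes the two cases: status=1 means success and status=0 means failure. Callers consume this topic and continue with their own business logic. The rest of the article builds the projects and walks through the optimization.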
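The hand-off described above can be sketched without any broker. This is only an illustration under stated assumptions: in-memory queues stand in for the two RabbitMQ queues and for the ACCOUNT_KAFKA topic, and the class and method names (`AsyncFlowSketch`, `acceptRequest`, and so on) are hypothetical, not part of the article's projects.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Sketch only: in-memory queues replace RabbitMQ and Kafka.
class AsyncFlowSketch {
    // exampleServiceAPI -> serviceA (RabbitMQ in the real design)
    static final BlockingQueue<String> requestQueue = new LinkedBlockingQueue<>();
    // serviceA -> exampleServiceAPI (RabbitMQ in the real design)
    static final BlockingQueue<Boolean> resultQueue = new LinkedBlockingQueue<>();
    // Stand-in for the ACCOUNT_KAFKA topic
    static final BlockingQueue<String> kafkaTopic = new LinkedBlockingQueue<>();

    // exampleServiceAPI: enqueue the request and answer immediately
    static String acceptRequest(String payload) {
        requestQueue.offer(payload);
        return "accepted";
    }

    // serviceA: take one request, run the slow logic, report the outcome
    static boolean serviceAConsumeOne() {
        String payload = requestQueue.poll();
        if (payload == null) {
            return false; // nothing to consume
        }
        boolean saved = !payload.isEmpty(); // placeholder for accountService.saveAccount(...)
        resultQueue.offer(saved);
        return true;
    }

    // exampleServiceAPI: forward one result to the topic, status=1 success, status=0 failure
    static boolean publishResultOne() {
        Boolean saved = resultQueue.poll();
        if (saved == null) {
            return false; // no result available yet
        }
        kafkaTopic.offer("{\"status\":" + (saved ? 1 : 0) + "}");
        return true;
    }

    public static void main(String[] args) {
        System.out.println(acceptRequest("{\"name\":\"demo\"}")); // caller is not blocked by the database
        serviceAConsumeOne();
        publishResultOne();
        System.out.println(kafkaTopic.poll());
    }
}
```

The caller only waits for `acceptRequest`; the slow database work happens later, at whatever pace the consumer can sustain.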

2. Building the serviceA service

2.1 Integrating RabbitMQ

java

package com.performance.optimization.config;

import lombok.extern.slf4j.Slf4j;

import org.springframework.amqp.core.*;

import org.springframework.amqp.rabbit.config.SimpleRabbitListenerContainerFactory;

import org.springframework.amqp.rabbit.connection.CachingConnectionFactory;

import org.springframework.amqp.rabbit.connection.ConnectionFactory;

import org.springframework.amqp.rabbit.core.RabbitTemplate;

import org.springframework.amqp.support.converter.MessageConversionException;

import org.springframework.amqp.support.converter.MessageConverter;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.autoconfigure.amqp.SimpleRabbitListenerContainerFactoryConfigurer;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import java.util.HashMap;

import java.util.Map;

import java.util.UUID;

import static com.performance.optimization.constant.MQConstants.*;

@Slf4j

@Configuration

public class RabbitMQConfig {

/* *

* Create the connection factory

*/

@Bean(name = "accountMsgConnectionFactory")

public ConnectionFactory accountMsgConnectionFactory(@Value("${hdg.rabbitmq.my-rabbit.address}") String address,

@Value("${hdg.rabbitmq.my-rabbit.username}") String username,

@Value("${hdg.rabbitmq.my-rabbit.password}") String password,

@Value("${hdg.rabbitmq.my-rabbit.virtual-host}") String vHost) {

CachingConnectionFactory connectionFactory = new CachingConnectionFactory();

connectionFactory.setAddresses(address);

connectionFactory.setUsername(username);

connectionFactory.setPassword(password);

connectionFactory.setVirtualHost(vHost);

// Enable correlated publisher confirms

connectionFactory.setPublisherConfirmType(CachingConnectionFactory.ConfirmType.CORRELATED);

return connectionFactory;

}

/**

* Producer

*/

@Bean(name = "accountMsgRabbitTemplate")

public RabbitTemplate accountMsgRabbitTemplate(@Qualifier("accountMsgConnectionFactory") ConnectionFactory connectionFactory) {

RabbitTemplate template = new RabbitTemplate();

template.setConnectionFactory(connectionFactory);

// Set the messageId on every outgoing message

template.setMessageConverter(new MessageConverter() {

@Override

public Message toMessage(Object o, MessageProperties messageProperties) throws MessageConversionException {

// Use a UUID as the unique message identifier

String uuid = UUID.randomUUID().toString();

messageProperties.setMessageId(uuid);

return new Message(o.toString().getBytes(java.nio.charset.StandardCharsets.UTF_8), messageProperties);

}

@Override

public Object fromMessage(Message message) throws MessageConversionException {

return message;

}

});

template.setConfirmCallback((correlationData, ack, cause) -> {

if (!ack) {

log.error("[confirm][发送消息到exchange失败 correlationData: {} cause: {}]", correlationData, cause);

}

}

);

return template;

}

@Bean("accountQueue")

public Queue accountQueue() {

Map<String, Object> args = new HashMap<>(2);

args.put("x-dead-letter-exchange", DEAD_LETTER_EXCHANGE);

args.put("x-dead-letter-routing-key", DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY);

return QueueBuilder.durable(ACCOUNT_RESULT_QUEUE).withArguments(args).build();

}

@Bean("accountResultExchange")

public DirectExchange accountExchange() {

return new DirectExchange(ACCOUNT_RESULT_EXCHANGE);

}

@Bean

public Binding bindingaccount(@Qualifier("accountQueue") Queue queue, @Qualifier("accountResultExchange") DirectExchange directExchange) {

return BindingBuilder.bind(queue).to(directExchange).with(ACCOUNT_QUEUE_ROUTING_RESULT_KEY);

}

@Bean(name = "accountMsgListenerFactory")

public SimpleRabbitListenerContainerFactory firstListenerFactory(

SimpleRabbitListenerContainerFactoryConfigurer configurer,

@Qualifier("accountMsgConnectionFactory") ConnectionFactory connectionFactory) {

SimpleRabbitListenerContainerFactory listenerContainerFactory = new SimpleRabbitListenerContainerFactory();

// Use manual acknowledgements

listenerContainerFactory.setAcknowledgeMode(AcknowledgeMode.MANUAL);

configurer.configure(listenerContainerFactory, connectionFactory);

return listenerContainerFactory;

}

}

2.2 Integrating Redis

java

package com.performance.optimization.config;

import com.fasterxml.jackson.annotation.PropertyAccessor;

import com.fasterxml.jackson.annotation.JsonAutoDetect.Visibility;

import com.fasterxml.jackson.annotation.JsonTypeInfo.As;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.ObjectMapper.DefaultTyping;

import com.fasterxml.jackson.databind.jsontype.impl.LaissezFaireSubTypeValidator;

import com.performance.optimization.utils.RedisUtil;

import io.lettuce.core.cluster.ClusterClientOptions;

import io.lettuce.core.cluster.ClusterTopologyRefreshOptions;

import java.util.HashSet;

import java.util.Set;

import org.apache.commons.pool2.impl.GenericObjectPoolConfig;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;

import org.springframework.boot.autoconfigure.data.redis.RedisProperties;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.data.redis.connection.RedisClusterConfiguration;

import org.springframework.data.redis.connection.RedisConnectionFactory;

import org.springframework.data.redis.connection.RedisSentinelConfiguration;

import org.springframework.data.redis.connection.RedisStandaloneConfiguration;

import org.springframework.data.redis.connection.lettuce.LettuceClientConfiguration;

import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;

import org.springframework.data.redis.connection.lettuce.LettucePoolingClientConfiguration;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;

import org.springframework.data.redis.serializer.StringRedisSerializer;

@Configuration

@EnableConfigurationProperties({RedisProperties.class})

public class RedisAutoConfig {

@Autowired

private RedisProperties redisProperties;

public RedisAutoConfig() {

}

public GenericObjectPoolConfig genericObjectPoolConfig() {

GenericObjectPoolConfig config = new GenericObjectPoolConfig();

config.setMinIdle(this.redisProperties.getLettuce().getPool().getMinIdle());

config.setMaxIdle(this.redisProperties.getLettuce().getPool().getMaxIdle());

config.setMaxTotal(this.redisProperties.getLettuce().getPool().getMaxActive());

return config;

}

public LettuceClientConfiguration clusterClientConfiguration() {

ClusterTopologyRefreshOptions clusterTopologyRefreshOptions = ClusterTopologyRefreshOptions.builder().enableAllAdaptiveRefreshTriggers().enablePeriodicRefresh().build();

ClusterClientOptions clusterClientOptions = ClusterClientOptions.builder().topologyRefreshOptions(clusterTopologyRefreshOptions).build();

LettuceClientConfiguration lettuceClientConfiguration = LettucePoolingClientConfiguration.builder().poolConfig(this.genericObjectPoolConfig()).clientOptions(clusterClientOptions).build();

return lettuceClientConfiguration;

}

public RedisClusterConfiguration clusterConfiguration() {

RedisClusterConfiguration clusterConfiguration = new RedisClusterConfiguration(this.redisProperties.getCluster().getNodes());

clusterConfiguration.setMaxRedirects(this.redisProperties.getCluster().getMaxRedirects());

clusterConfiguration.setUsername(this.redisProperties.getUsername());

clusterConfiguration.setPassword(this.redisProperties.getPassword());

return clusterConfiguration;

}

public LettuceConnectionFactory clusterConnectionFactory() {

LettuceConnectionFactory lettuceConnectionFactory = new LettuceConnectionFactory(this.clusterConfiguration(), this.clusterClientConfiguration());

lettuceConnectionFactory.afterPropertiesSet();

return lettuceConnectionFactory;

}

public LettuceClientConfiguration sentinelClientConfiguration() {

LettucePoolingClientConfiguration.LettucePoolingClientConfigurationBuilder builder = LettucePoolingClientConfiguration.builder();

builder.poolConfig(this.genericObjectPoolConfig());

ClusterClientOptions clusterClientOptions = ClusterClientOptions.builder().build();

builder.clientOptions(clusterClientOptions);

LettuceClientConfiguration lettuceClientConfiguration = builder.build();

return lettuceClientConfiguration;

}

public RedisSentinelConfiguration redisSentinelConfiguration() {

Set<String> nodes = new HashSet<>(this.redisProperties.getSentinel().getNodes());

RedisSentinelConfiguration sentinelConfig = new RedisSentinelConfiguration(this.redisProperties.getSentinel().getMaster(), nodes);

sentinelConfig.setSentinelPassword(this.redisProperties.getSentinel().getPassword());

sentinelConfig.setUsername(this.redisProperties.getUsername());

sentinelConfig.setPassword(this.redisProperties.getPassword());

sentinelConfig.setDatabase(this.redisProperties.getDatabase());

return sentinelConfig;

}

public LettuceConnectionFactory sentinelConnectionFactory() {

LettuceConnectionFactory factory = new LettuceConnectionFactory(this.redisSentinelConfiguration(), this.sentinelClientConfiguration());

factory.afterPropertiesSet();

return factory;

}

public RedisStandaloneConfiguration redisStandaloneConfiguration() {

RedisStandaloneConfiguration standaloneConfiguration = new RedisStandaloneConfiguration(this.redisProperties.getHost(), this.redisProperties.getPort());

standaloneConfiguration.setUsername(this.redisProperties.getUsername());

standaloneConfiguration.setPassword(this.redisProperties.getPassword());

if (this.redisProperties.getDatabase() >= 0) {

standaloneConfiguration.setDatabase(this.redisProperties.getDatabase());

}

return standaloneConfiguration;

}

public LettuceConnectionFactory standaloneConnectionFactory() {

LettuceConnectionFactory factory = new LettuceConnectionFactory(this.redisStandaloneConfiguration(), this.sentinelClientConfiguration());

factory.afterPropertiesSet();

return factory;

}

protected RedisTemplate<String, Object> getRedisTemplate(RedisConnectionFactory factory) {

RedisTemplate<String, Object> template = new RedisTemplate<>();

template.setConnectionFactory(factory);

Jackson2JsonRedisSerializer<Object> jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);

ObjectMapper om = new ObjectMapper();

om.setVisibility(PropertyAccessor.ALL, Visibility.ANY);

om.activateDefaultTyping(LaissezFaireSubTypeValidator.instance, DefaultTyping.NON_FINAL, As.PROPERTY);

jackson2JsonRedisSerializer.setObjectMapper(om);

StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();

template.setKeySerializer(stringRedisSerializer);

template.setValueSerializer(jackson2JsonRedisSerializer);

template.setHashKeySerializer(stringRedisSerializer);

template.setHashValueSerializer(jackson2JsonRedisSerializer);

template.afterPropertiesSet();

return template;

}

@Bean(

name = {"clusterRedisUtil"}

)

@ConditionalOnProperty(

prefix = "spring.redis.cluster",

name = {"active"},

havingValue = "true"

)

public RedisUtil redisUtil() {

return new RedisUtil(this.getRedisTemplate(this.clusterConnectionFactory()));

}

@Bean(

name = {"sentinelRedisUtil"}

)

@ConditionalOnProperty(

prefix = "spring.redis.sentinel",

name = {"master"}

)

public RedisUtil sentinelRedisUtil() {

return new RedisUtil(this.getRedisTemplate(this.sentinelConnectionFactory()));

}

@Bean(

name = {"standaloneRedisUtil"}

)

@ConditionalOnProperty(

prefix = "spring.redis",

name = {"host"}

)

public RedisUtil standaloneRedisUtil() {

return new RedisUtil(this.getRedisTemplate(this.standaloneConnectionFactory()));

}

}

java

package com.performance.optimization.constant;

public class MQConstants {

public static final String DEAD_LETTER_EXCHANGE = "DEAD_LETTER_EXCHANGE";

public static final String DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY = "DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY";

public static final String ACCOUNT_RESULT_EXCHANGE = "ACCOUNT_RESULT_EXCHANGE";

public static final String ACCOUNT_QUEUE_ROUTING_RESULT_KEY = "ACCOUNT_QUEUE_ROUTING_RESULT_KEY";

public static final String ACCOUNT_RESULT_QUEUE = "ACCOUNT_RESULT_QUEUE";

}

2.3 Listening for messages from exampleServiceAPI

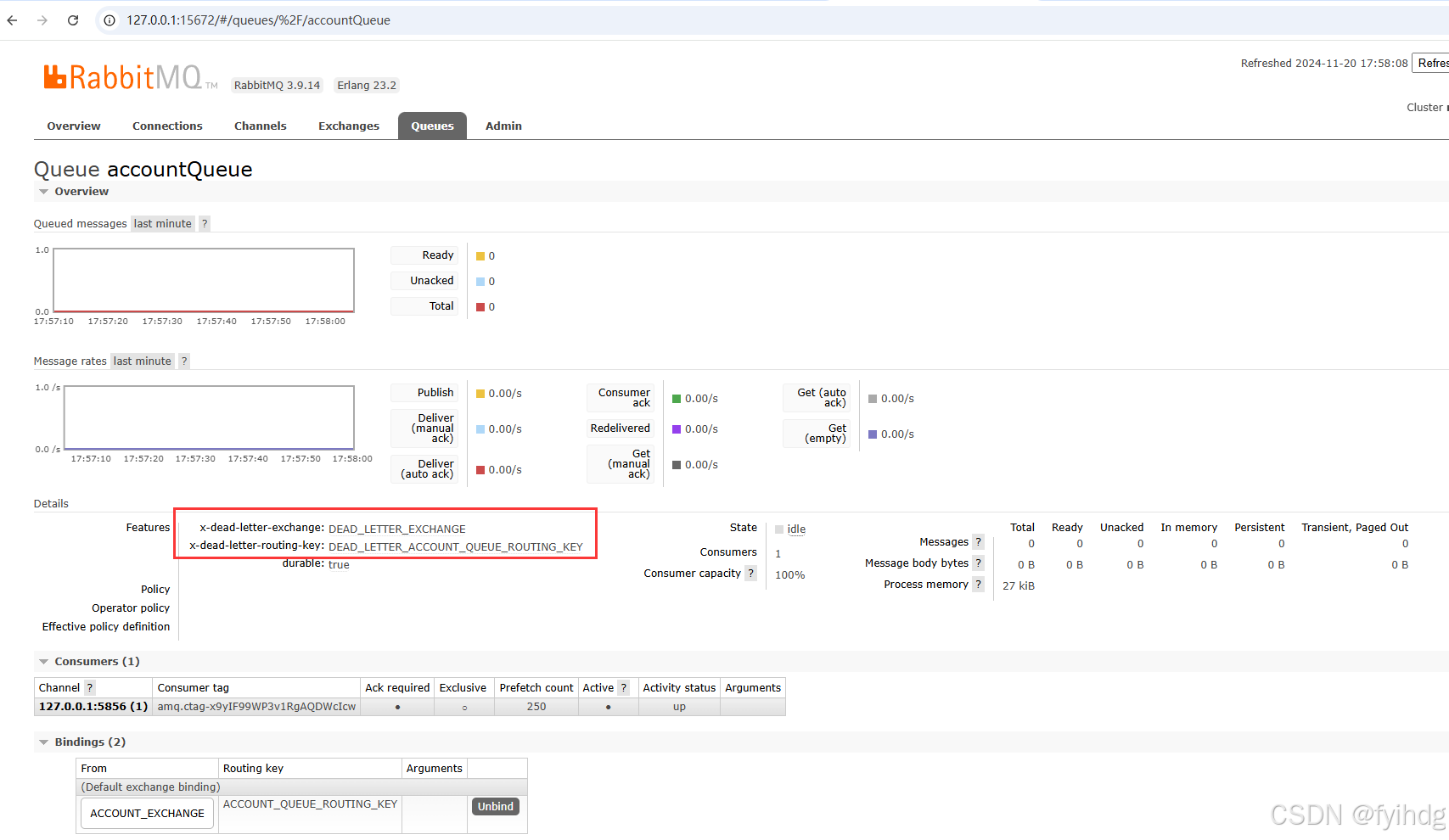

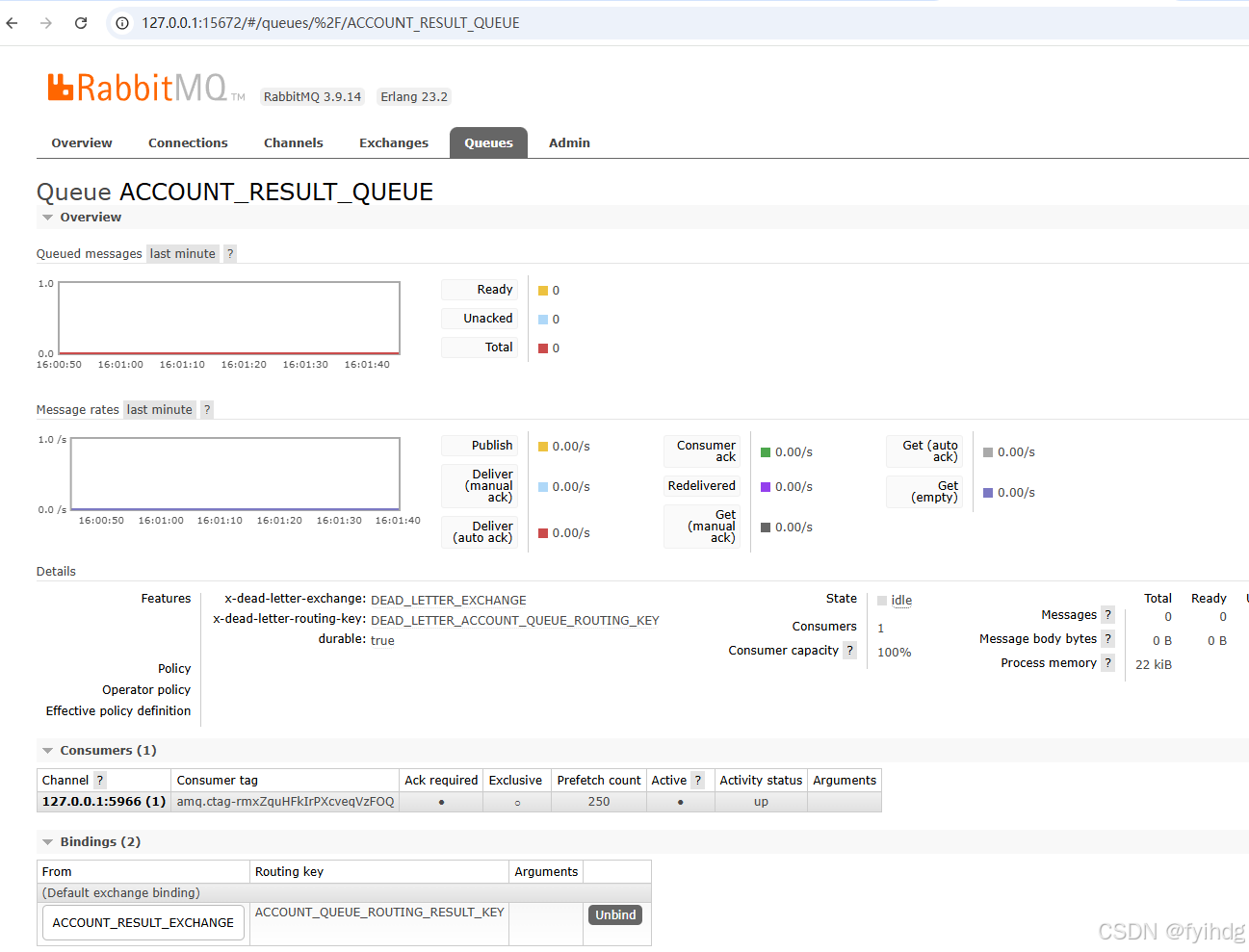

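A note on the queuesToDeclare attribute of @RabbitListener: if the queue does not exist, it is created automatically when the service starts, and even if you delete it by hand it is recreated right away, so there is no need to create the queue manually. The application creates queues in two situations. The first is @RabbitListener: at startup the annotation declares the queue if it is missing and does nothing if it already exists. The second is a Queue bean built with QueueBuilder.durable(), which is likewise declared automatically and left alone if the queue already exists.

One thing to watch out for: serviceA listens on the accountQueue queue, and exampleServiceAPI also declares that queue with QueueBuilder.durable("accountQueue").withArguments(args).build(). Both declarations must use exactly the same queue properties; otherwise an error is reported when the service starts.

For example, suppose serviceA declares the queue like this: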

java

@Bean("accountQueue")

public Queue accountQueue() {

Map<String, Object> args = new HashMap<>(2);

return QueueBuilder.durable("accountQueue").withArguments(args).build();

}

and exampleServiceAPI declares it like this:

java

@Bean("accountQueue")

public Queue accountQueue() {

Map<String, Object> args = new HashMap<>(2);

args.put("x-dead-letter-exchange", DEAD_LETTER_EXCHANGE);

args.put("x-dead-letter-routing-key", DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY);

return QueueBuilder.durable("accountQueue").withArguments(args).build();

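}

serviceA starts first, finds that accountQueue does not exist, and creates it. When exampleServiceAPI then starts, it finds that the queue already exists but with different properties, and an error is reported: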

java

channel error; protocol method: #method<channel.close>(reply-code=406, reply-text=PRECONDITION_FAILED - inequivalent arg 'x-dead-letter-exchange' for queue 'accountQueue' in vhost '/': received the value 'DEAD_LETTER_EXCHANGE' of type 'longstr' but current is none, class-id=50, method-id=10)
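One way to avoid this mismatch is to build the queue arguments in a single shared place and have every declaration reuse it. The sketch below is an assumption, not the article's code: `AccountQueueArgs` and `deadLetterArgs` are hypothetical names, and the helper only covers bean-style declarations, since the @RabbitListener annotation has to repeat the same constants in its @Argument entries.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical shared helper: both services would pass its result to
// QueueBuilder.durable("accountQueue").withArguments(...).
class AccountQueueArgs {

    // Builds the dead-letter arguments that every declaration of the queue must agree on
    static Map<String, Object> deadLetterArgs(String deadLetterExchange, String deadLetterRoutingKey) {
        Map<String, Object> args = new HashMap<>(2);
        args.put("x-dead-letter-exchange", deadLetterExchange);
        args.put("x-dead-letter-routing-key", deadLetterRoutingKey);
        return args;
    }

    public static void main(String[] args) {
        Map<String, Object> fromServiceA = deadLetterArgs("DEAD_LETTER_EXCHANGE", "DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY");
        Map<String, Object> fromApi = deadLetterArgs("DEAD_LETTER_EXCHANGE", "DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY");
        // Identical arguments mean the broker treats the second declaration as equivalent
        System.out.println(fromServiceA.equals(fromApi));
    }
}
```

If the arguments of an existing queue really need to change, the queue has to be deleted and declared again, because the broker rejects a redeclaration with different arguments.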

java

package com.performance.optimization.consumer;

import com.alibaba.nacos.shaded.com.google.gson.Gson;

import com.performance.optimization.dto.AccountDto;

import com.performance.optimization.dto.AccountResponseDto;

import com.performance.optimization.producer.AccountTaskProducer;

import com.performance.optimization.service.AccountService;

import com.performance.optimization.service.DBService;

import com.performance.optimization.utils.RedisUtil;

import com.rabbitmq.client.Channel;

import lombok.extern.slf4j.Slf4j;

import org.springframework.amqp.core.Message;

import org.springframework.amqp.rabbit.annotation.Argument;

import org.springframework.amqp.rabbit.annotation.Queue;

import org.springframework.amqp.rabbit.annotation.RabbitListener;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Component;

import javax.annotation.Resource;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import static com.performance.optimization.constant.MQConstants.DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY;

import static com.performance.optimization.constant.MQConstants.DEAD_LETTER_EXCHANGE;

@Component

@Slf4j

public class AccountTaskConsumer {

@Resource

private RedisUtil redisUtil;

/**

* Simulates a database that runs out of connections under high concurrency

*/

@Autowired

private DBService dbService;

@Autowired

private AccountService accountService;

@Autowired

AccountTaskProducer accountTaskProducer;

/**

* Consumes messages sent by exampleServiceAPI

* @param message

* @param channel

* @throws IOException

*/

@RabbitListener(queuesToDeclare =@Queue(value ="accountQueue",arguments = {

@Argument(name = "x-dead-letter-exchange", value = DEAD_LETTER_EXCHANGE),

@Argument(name = "x-dead-letter-routing-key", value = DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY)

}) ,containerFactory = "accountMsgListenerFactory")

public void handleAccountTaskCreateOrUpdate(Channel channel, Message message) throws IOException {

log.info("-------------------account消费开始-----------------------------");

boolean ack = true;

Exception exception = null;

Gson gson = new Gson();

try {

// Read the message body

String accountDtoParam = new String(message.getBody(), StandardCharsets.UTF_8);

log.info("MQ消息中accountDtoParam:{}", accountDtoParam);

AccountDto accountDto = gson.fromJson(accountDtoParam, AccountDto.class);

boolean flag = accountService.saveAccount(accountDto);

String accountData = dbService.useDb("select * from account");

log.info("consumeraccountData:{}",accountData);

if(flag){

AccountResponseDto accountResponseDto = new AccountResponseDto();

accountResponseDto.setCode(0);

accountResponseDto.setMessage("成功");

// Send the result back to exampleServiceAPI

accountTaskProducer.workbenchResultSend(gson.toJson(accountResponseDto));

}else{

AccountResponseDto accountResponseDto = new AccountResponseDto();

accountResponseDto.setCode(1);

accountResponseDto.setMessage("失败");

// Send the result back to exampleServiceAPI

accountTaskProducer.workbenchResultSend(gson.toJson(accountResponseDto));

}

} catch (Exception e) {

ack = false;

exception = e;

AccountResponseDto accountResponseDto = new AccountResponseDto();

accountResponseDto.setCode(2);

accountResponseDto.setMessage("失败");

// Send the result back to exampleServiceAPI

accountTaskProducer.workbenchResultSend(gson.toJson(accountResponseDto));

}

checkAck(ack, message, channel, exception);

log.info("---------------------account消费结束-----------------------------");

}

public void checkAck(Boolean ack, Message message, Channel channel, Exception exception) throws IOException {
    long deliveryTag = message.getMessageProperties().getDeliveryTag();
    String msgId = message.getMessageProperties().getMessageId();
    if (!ack) {
        log.error("消费消息发生异常, error msg:{}", exception.getMessage(), exception);
        int retryCount;
        if (msgId == null) {
            // No messageId: retries cannot be tracked, so go straight to the dead-letter queue
            retryCount = 2;
        } else if (redisUtil.exists(msgId)) {
            retryCount = (Integer) redisUtil.get(msgId);
        } else {
            // First failure for this message
            retryCount = 0;
        }
        if (retryCount < 2) {
            // Below the retry limit: requeue for another attempt
            channel.basicNack(deliveryTag, false, true);
            redisUtil.set(msgId, retryCount + 1);
        } else {
            // Retry limit reached: reject without requeue so the message is dead-lettered
            channel.basicNack(deliveryTag, false, false);
        }
    } else {
        // Processing succeeded: acknowledge and clear any retry counter
        channel.basicAck(deliveryTag, false);
        if (msgId != null) {
            redisUtil.del(msgId);
        }
    }
}

}
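The retry rule that checkAck aims for (requeue a failed message at most two times, then dead-letter it, and dead-letter immediately when there is no messageId) can be isolated as a small function, which makes it easy to check without a broker. This is a sketch under assumptions: an in-memory map stands in for Redis, and `RetryDecisionSketch` and `shouldRequeue` are hypothetical names.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class RetryDecisionSketch {
    static final int MAX_RETRIES = 2;

    // Stand-in for the Redis counters keyed by messageId
    static final Map<String, Integer> retryCounts = new ConcurrentHashMap<>();

    /** Returns true if a failed message should be requeued, false if it should be dead-lettered. */
    static boolean shouldRequeue(String messageId) {
        if (messageId == null) {
            return false; // retries cannot be tracked without an id
        }
        int attempts = retryCounts.getOrDefault(messageId, 0);
        if (attempts < MAX_RETRIES) {
            retryCounts.put(messageId, attempts + 1);
            return true;
        }
        retryCounts.remove(messageId); // limit reached, the counter is no longer needed
        return false;
    }

    public static void main(String[] args) {
        System.out.println(shouldRequeue("m1")); // first failure
        System.out.println(shouldRequeue("m1")); // second failure
        System.out.println(shouldRequeue("m1")); // third failure
    }
}
```

In the real consumer the two outcomes map to basicNack with requeue set to true and to false respectively.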

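2.4 Reworking the database connection

To simulate a database running out of connections under high concurrency, we use a hand-written connection pool and reduce the number of connections, which reproduces the connection shortage seen in production.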

java

package com.performance.optimization.db;

import org.springframework.stereotype.Component;

import java.sql.Connection;

import java.util.LinkedList;

/**
 * A simple thread-safe database connection pool, capped at 2 connections
 */
@Component
public class DBPool {

    // Container holding the pooled connections
    private static LinkedList<Connection> pool = new LinkedList<>();

    // The pool size is fixed at 2 connections
    private final static int CONNECT_COUNT = 2;

    static {
        for (int i = 0; i < CONNECT_COUNT; i++) {
            pool.addLast(SqlConnectImpl.fetchConnection());
        }
    }

// Returns null if no connection becomes available within mills milliseconds; a negative value waits indefinitely

public Connection fetchConn(long mills) throws InterruptedException {

synchronized (pool) {

if (mills<0) {

while(pool.isEmpty()) {

pool.wait();

}

return pool.removeFirst();

}else {

long overtime = System.currentTimeMillis()+mills;

long remain = mills;

while(pool.isEmpty()&&remain>0) {

pool.wait(remain);

remain = overtime - System.currentTimeMillis();

}

Connection result = null;

if(!pool.isEmpty()) {

result = pool.removeFirst();

}

return result;

}

}

}

// Return a connection to the pool

public void releaseConn(Connection conn) {

if(conn!=null) {

synchronized (pool) {

pool.addLast(conn);

pool.notifyAll();

}

}

}

}
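The article does not show DBService.useDb, so how it borrows from the pool is unknown. The sketch below only illustrates the timed wait/notify pattern that DBPool uses and what a caller sees when the pool is exhausted. Everything in it is an assumption: plain strings stand in for connections, and `BoundedPoolSketch`, `fetch`, and `release` are hypothetical names.

```java
import java.util.LinkedList;

class BoundedPoolSketch {
    private final LinkedList<String> pool = new LinkedList<>();

    BoundedPoolSketch(int size) {
        for (int i = 0; i < size; i++) {
            pool.addLast("conn-" + i); // strings stand in for java.sql.Connection objects
        }
    }

    // Waits up to millis for a free entry; returns null on timeout or interruption
    String fetch(long millis) {
        synchronized (pool) {
            long deadline = System.currentTimeMillis() + millis;
            long remain = millis;
            try {
                while (pool.isEmpty() && remain > 0) {
                    pool.wait(remain);
                    remain = deadline - System.currentTimeMillis();
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt visible to the caller
                return null;
            }
            return pool.isEmpty() ? null : pool.removeFirst();
        }
    }

    // Puts an entry back and wakes up any waiting threads
    void release(String conn) {
        if (conn != null) {
            synchronized (pool) {
                pool.addLast(conn);
                pool.notifyAll();
            }
        }
    }

    public static void main(String[] args) {
        BoundedPoolSketch sketch = new BoundedPoolSketch(2);
        String first = sketch.fetch(10);
        String second = sketch.fetch(10);
        System.out.println(sketch.fetch(10) == null); // pool exhausted, the third caller times out
        sketch.release(first);
        System.out.println(sketch.fetch(10) != null); // a released connection can be borrowed again
        sketch.release(second);
    }
}
```

With only two connections, any request beyond the second either waits or fails, which is the bottleneck the old endpoint runs into under load.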

java

package com.performance.optimization.db;

import java.util.concurrent.TimeUnit;

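/**
 * Sleep helpers used to simulate slow database calls
 */
public class SleepTools {

    /**
     * Sleep for the given number of seconds
     * @param seconds number of seconds
     */
    public static void second(int seconds) {
        try {
            TimeUnit.SECONDS.sleep(seconds);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so callers can react to it
            Thread.currentThread().interrupt();
        }
    }

    /**
     * Sleep for the given number of milliseconds
     * @param millis number of milliseconds
     */
    public static void ms(int millis) {
        try {
            TimeUnit.MILLISECONDS.sleep(millis);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so callers can react to it
            Thread.currentThread().interrupt();
        }
    }

}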

java

package com.performance.optimization.db;

import java.sql.*;

import java.util.Map;

import java.util.Properties;

import java.util.concurrent.Executor;

/**

* A stub implementation of a database connection

*/

public class SqlConnectImpl implements Connection{

/* Obtain a database connection */

public static final Connection fetchConnection(){

return new SqlConnectImpl();

}

@Override

public void commit() throws SQLException {

SleepTools.ms(70);

}

// The remaining Connection methods are omitted; generate the stubs with your IDE (for example IntelliJ IDEA)

}

2.5 Producer

java

package com.performance.optimization.producer;

import lombok.extern.slf4j.Slf4j;

import org.springframework.amqp.rabbit.core.RabbitTemplate;

import org.springframework.stereotype.Component;

import javax.annotation.Resource;

import static com.performance.optimization.constant.MQConstants.ACCOUNT_RESULT_QUEUE;

/**

* Producer

*/

@Component

@Slf4j

public class AccountTaskProducer {

@Resource

private RabbitTemplate rabbitTemplate;

/**

* Send the processing result back to exampleServiceAPI

*/

public void workbenchResultSend(String accountDtoParam) {

log.info("serviceA处理后返回1={}" ,accountDtoParam);

rabbitTemplate.convertAndSend(ACCOUNT_RESULT_QUEUE, accountDtoParam);

}

/**

* Send the processing result back to exampleServiceAPI

*/

public void workbenchResultSend(boolean flag) {

log.info("serviceA处理后返回2={}" ,flag);

rabbitTemplate.convertAndSend(ACCOUNT_RESULT_QUEUE, flag);

}

}

2.6 application.yml and pom.xml

yaml

server:
  port: 8082

spring:
  application:
    name: serviceA
  cloud:
    nacos:
      config:
        server-addr: ${NACOS_SERVER:127.0.0.1:8848}
      # Service discovery
      discovery:
        server-addr: ${NACOS_SERVER:127.0.0.1:8848}
        username: nacos
        password: nacos
  redis:
    host: localhost
    port: 6379
    password:
    database: 0
    timeout: 5000ms
    lettuce:
      pool:
        max-active: 8
        max-wait: 1000ms
        max-idle: 8
        min-idle: 0
  datasource:
    url: jdbc:mysql://localhost:3306/hdg?useUnicode=true&characterEncoding=utf8&serverTimezone=UTC
    username: root
    password: root
    driver-class-name: com.mysql.cj.jdbc.Driver
    hikari:
      maximum-pool-size: 1
      minimum-idle: 1

mybatis-plus:
  configuration:
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl
  mapper-locations: classpath:mapper/*.xml
  type-aliases-package: com.performance.optimization.domain

# RabbitMQ connection settings
hdg.rabbitmq:
  my-rabbit:
    address: 127.0.0.1:5672
    username: guest
    password: guest
    virtual-host: /

XML

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.7.14</version>

<relativePath/>

</parent>

<groupId>com.performance.optimization</groupId>

<artifactId>serviceA</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>serviceA</name>

<description>serviceA</description>

<properties>

<java.version>1.8</java.version>

<spring.cloud.version>2021.0.5</spring.cloud.version>

<spring.cloud.alibaba.version>2021.0.5.0</spring.cloud.alibaba.version>

<spring.boot.version>2.7.14</spring.boot.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>com.mysql</groupId>

<artifactId>mysql-connector-j</artifactId>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!--fastjson -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.83</version>

</dependency>

<!--mybatis -->

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>3.4.3.2</version>

</dependency>

<!-- hutool-->

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

<version>5.8.1</version>

</dependency>

<!--rabbitmq-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-amqp</artifactId>

</dependency>

<!-- Redis -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

<!-- Connection pool dependency for Redis -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-pool2</artifactId>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-bootstrap</artifactId>

</dependency>

<!-- Nacos service registry -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<!-- Nacos configuration center -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-config</artifactId>

</dependency>

<!-- Logging -->

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

</dependency>

<!-- Required to write logs in JSON format -->

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>6.6</version>

</dependency>

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring.cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>${spring.cloud.alibaba.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring.boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<excludes>

<exclude>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</exclude>

</excludes>

</configuration>

</plugin>

</plugins>

</build>

</project>

That completes the serviceA setup.

3. Building the exampleServiceAPI service

3.1 Integrating Kafka

java

package com.example.service.api.config;

import cn.hutool.core.collection.CollUtil;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.common.serialization.StringSerializer;

import org.springframework.boot.autoconfigure.kafka.KafkaProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.kafka.core.DefaultKafkaProducerFactory;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.kafka.core.ProducerFactory;

import java.util.HashMap;

import java.util.Map;

@Configuration

public class KafkaProducerConfig {

@Bean

public ProducerFactory<String, String> producerFactory(KafkaProperties kafkaProperties) {

Map<String, Object> configProps = new HashMap<>();

configProps.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,CollUtil.getFirst(kafkaProperties.getBootstrapServers()));

configProps.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

configProps.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

return new DefaultKafkaProducerFactory<>(configProps);

}

@Bean

public KafkaTemplate<String, String> kafkaTemplate(ProducerFactory<String,String> producerFactory) {

return new KafkaTemplate<>(producerFactory);

}

}

3.2 Integrating RabbitMQ

java

package com.example.service.api.config;

import lombok.extern.slf4j.Slf4j;

import org.springframework.amqp.core.*;

import org.springframework.amqp.rabbit.config.SimpleRabbitListenerContainerFactory;

import org.springframework.amqp.rabbit.connection.CachingConnectionFactory;

import org.springframework.amqp.rabbit.connection.ConnectionFactory;

import org.springframework.amqp.rabbit.core.RabbitTemplate;

import org.springframework.amqp.support.converter.MessageConversionException;

import org.springframework.amqp.support.converter.MessageConverter;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.autoconfigure.amqp.SimpleRabbitListenerContainerFactoryConfigurer;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import java.util.HashMap;

import java.util.Map;

import java.util.UUID;

import static com.example.service.api.constant.MQConstants.*;

@Slf4j

@Configuration

public class RabbitMQConfig {

/* *

* Create the connection factory

*/

@Bean(name = "accountMsgConnectionFactory")

public ConnectionFactory accountMsgConnectionFactory(@Value("${hdg.rabbitmq.my-rabbit.address}") String address,

@Value("${hdg.rabbitmq.my-rabbit.username}") String username,

@Value("${hdg.rabbitmq.my-rabbit.password}") String password,

@Value("${hdg.rabbitmq.my-rabbit.virtual-host}") String vHost) {

CachingConnectionFactory connectionFactory = new CachingConnectionFactory();

connectionFactory.setAddresses(address);

connectionFactory.setUsername(username);

connectionFactory.setPassword(password);

connectionFactory.setVirtualHost(vHost);

// Enable correlated publisher confirms

connectionFactory.setPublisherConfirmType(CachingConnectionFactory.ConfirmType.CORRELATED);

return connectionFactory;

}

/**

* Producer

*/

@Bean(name = "accountMsgRabbitTemplate")

public RabbitTemplate accountMsgRabbitTemplate(@Qualifier("accountMsgConnectionFactory") ConnectionFactory connectionFactory) {

RabbitTemplate template = new RabbitTemplate();

template.setConnectionFactory(connectionFactory);

// Set the messageId on every outgoing message

template.setMessageConverter(new MessageConverter() {

@Override

public Message toMessage(Object o, MessageProperties messageProperties) throws MessageConversionException {

// Use a UUID as the unique message identifier

String uuid = UUID.randomUUID().toString();

messageProperties.setMessageId(uuid);

return new Message(o.toString().getBytes(java.nio.charset.StandardCharsets.UTF_8), messageProperties);

}

@Override

public Object fromMessage(Message message) throws MessageConversionException {

return message;

}

});

template.setConfirmCallback((correlationData, ack, cause) -> {

if (!ack) {

log.error("[confirm][发送消息到exchange失败 correlationData: {} cause: {}]", correlationData, cause);

}

}

);

return template;

}

/**

* Consumer

*/

@Bean(name = "accountMsgListenerFactory")

public SimpleRabbitListenerContainerFactory firstListenerFactory(

SimpleRabbitListenerContainerFactoryConfigurer configurer,

@Qualifier("accountMsgConnectionFactory") ConnectionFactory connectionFactory) {

SimpleRabbitListenerContainerFactory listenerContainerFactory = new SimpleRabbitListenerContainerFactory();

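//Apply the Spring Boot settings first so they cannot override the acknowledge mode set below

configurer.configure(listenerContainerFactory, connectionFactory);

//Use manual acknowledgement

listenerContainerFactory.setAcknowledgeMode(AcknowledgeMode.MANUAL);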

return listenerContainerFactory;

}

@Bean("accountExchange")

public DirectExchange accountExchange() {

return new DirectExchange(ACCOUNT_EXCHANGE);

}

@Bean

public Binding bindingOpenapi(@Qualifier("accountQueue") Queue queue, @Qualifier("accountExchange") DirectExchange directExchange) {

return BindingBuilder.bind(queue).to(directExchange).with(ACCOUNT_QUEUE_ROUTING_KEY);

}

@Bean("accountQueue")

public Queue accountQueue() {

Map<String, Object> args = new HashMap<>(2);

args.put("x-dead-letter-exchange", DEAD_LETTER_EXCHANGE);

args.put("x-dead-letter-routing-key", DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY);

return QueueBuilder.durable("accountQueue").withArguments(args).build();

}

}

java

package com.example.service.api.constant;

public class MQConstants {

public static final String DEAD_LETTER_EXCHANGE = "DEAD_LETTER_EXCHANGE";

public static final String DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY = "DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY";

public static final String ACCOUNT_EXCHANGE = "ACCOUNT_EXCHANGE";

public static final String ACCOUNT_QUEUE_ROUTING_KEY = "ACCOUNT_QUEUE_ROUTING_KEY";

public static final String ACCOUNT_RESULT_QUEUE = "ACCOUNT_RESULT_QUEUE";

}

3.3 Connecting Redis

java

package com.example.service.api.config;

import com.example.service.api.utils.RedisUtil;

import com.fasterxml.jackson.annotation.JsonAutoDetect.Visibility;

import com.fasterxml.jackson.annotation.JsonTypeInfo.As;

import com.fasterxml.jackson.annotation.PropertyAccessor;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.ObjectMapper.DefaultTyping;

import com.fasterxml.jackson.databind.jsontype.impl.LaissezFaireSubTypeValidator;

import io.lettuce.core.cluster.ClusterClientOptions;

import io.lettuce.core.cluster.ClusterTopologyRefreshOptions;

import org.apache.commons.pool2.impl.GenericObjectPoolConfig;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;

import org.springframework.boot.autoconfigure.data.redis.RedisProperties;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.data.redis.connection.RedisClusterConfiguration;

import org.springframework.data.redis.connection.RedisConnectionFactory;

import org.springframework.data.redis.connection.RedisSentinelConfiguration;

import org.springframework.data.redis.connection.RedisStandaloneConfiguration;

import org.springframework.data.redis.connection.lettuce.LettuceClientConfiguration;

import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;

import org.springframework.data.redis.connection.lettuce.LettucePoolingClientConfiguration;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;

import org.springframework.data.redis.serializer.StringRedisSerializer;

import java.util.HashSet;

import java.util.Set;

@Configuration

@EnableConfigurationProperties({RedisProperties.class})

public class RedisAutoConfig {

@Autowired

private RedisProperties redisProperties;

public RedisAutoConfig() {

}

public GenericObjectPoolConfig genericObjectPoolConfig() {

GenericObjectPoolConfig config = new GenericObjectPoolConfig();

config.setMinIdle(this.redisProperties.getLettuce().getPool().getMinIdle());

config.setMaxIdle(this.redisProperties.getLettuce().getPool().getMaxIdle());

config.setMaxTotal(this.redisProperties.getLettuce().getPool().getMaxActive());

return config;

}

public LettuceClientConfiguration clusterClientConfiguration() {

ClusterTopologyRefreshOptions clusterTopologyRefreshOptions = ClusterTopologyRefreshOptions.builder().enableAllAdaptiveRefreshTriggers().enablePeriodicRefresh().build();

ClusterClientOptions clusterClientOptions = ClusterClientOptions.builder().topologyRefreshOptions(clusterTopologyRefreshOptions).build();

LettuceClientConfiguration lettuceClientConfiguration = LettucePoolingClientConfiguration.builder().poolConfig(this.genericObjectPoolConfig()).clientOptions(clusterClientOptions).build();

return lettuceClientConfiguration;

}

public RedisClusterConfiguration clusterConfiguration() {

RedisClusterConfiguration clusterConfiguration = new RedisClusterConfiguration(this.redisProperties.getCluster().getNodes());

clusterConfiguration.setMaxRedirects(this.redisProperties.getCluster().getMaxRedirects());

clusterConfiguration.setUsername(this.redisProperties.getUsername());

clusterConfiguration.setPassword(this.redisProperties.getPassword());

return clusterConfiguration;

}

public LettuceConnectionFactory clusterConnectionFactory() {

LettuceConnectionFactory lettuceConnectionFactory = new LettuceConnectionFactory(this.clusterConfiguration(), this.clusterClientConfiguration());

lettuceConnectionFactory.afterPropertiesSet();

return lettuceConnectionFactory;

}

public LettuceClientConfiguration sentinelClientConfiguration() {

LettucePoolingClientConfiguration.LettucePoolingClientConfigurationBuilder builder = LettucePoolingClientConfiguration.builder();

builder.poolConfig(this.genericObjectPoolConfig());

ClusterClientOptions clusterClientOptions = ClusterClientOptions.builder().build();

builder.clientOptions(clusterClientOptions);

LettuceClientConfiguration lettuceClientConfiguration = builder.build();

return lettuceClientConfiguration;

}

public RedisSentinelConfiguration redisSentinelConfiguration() {

Set<String> nodes = new HashSet<>(this.redisProperties.getSentinel().getNodes());

RedisSentinelConfiguration sentinelConfig = new RedisSentinelConfiguration(this.redisProperties.getSentinel().getMaster(), nodes);

sentinelConfig.setSentinelPassword(this.redisProperties.getSentinel().getPassword());

sentinelConfig.setUsername(this.redisProperties.getUsername());

sentinelConfig.setPassword(this.redisProperties.getPassword());

sentinelConfig.setDatabase(this.redisProperties.getDatabase());

return sentinelConfig;

}

public LettuceConnectionFactory sentinelConnectionFactory() {

LettuceConnectionFactory factory = new LettuceConnectionFactory(this.redisSentinelConfiguration(), this.sentinelClientConfiguration());

factory.afterPropertiesSet();

return factory;

}

public RedisStandaloneConfiguration redisStandaloneConfiguration() {

RedisStandaloneConfiguration standaloneConfiguration = new RedisStandaloneConfiguration(this.redisProperties.getHost(), this.redisProperties.getPort());

standaloneConfiguration.setUsername(this.redisProperties.getUsername());

standaloneConfiguration.setPassword(this.redisProperties.getPassword());

if (this.redisProperties.getDatabase() >= 0) {

standaloneConfiguration.setDatabase(this.redisProperties.getDatabase());

}

return standaloneConfiguration;

}

public LettuceConnectionFactory standaloneConnectionFactory() {

LettuceConnectionFactory factory = new LettuceConnectionFactory(this.redisStandaloneConfiguration(), this.sentinelClientConfiguration());

factory.afterPropertiesSet();

return factory;

}

protected RedisTemplate<String, Object> getRedisTemplate(RedisConnectionFactory factory) {

RedisTemplate<String, Object> template = new RedisTemplate();

template.setConnectionFactory(factory);

Jackson2JsonRedisSerializer<Object> jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);

ObjectMapper om = new ObjectMapper();

om.setVisibility(PropertyAccessor.ALL, Visibility.ANY);

om.activateDefaultTyping(LaissezFaireSubTypeValidator.instance, DefaultTyping.NON_FINAL, As.PROPERTY);

jackson2JsonRedisSerializer.setObjectMapper(om);

StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();

template.setKeySerializer(stringRedisSerializer);

template.setValueSerializer(jackson2JsonRedisSerializer);

template.setHashKeySerializer(stringRedisSerializer);

template.setHashValueSerializer(jackson2JsonRedisSerializer);

template.afterPropertiesSet();

return template;

}

@Bean(

name = {"clusterRedisUtil"}

)

@ConditionalOnProperty(

prefix = "spring.redis.cluster",

name = {"active"},

havingValue = "true"

)

public RedisUtil redisUtil() {

return new RedisUtil(this.getRedisTemplate(this.clusterConnectionFactory()));

}

@Bean(

name = {"sentinelRedisUtil"}

)

@ConditionalOnProperty(

prefix = "spring.redis.sentinel",

name = {"master"}

)

public RedisUtil sentinelRedisUtil() {

return new RedisUtil(this.getRedisTemplate(this.sentinelConnectionFactory()));

}

@Bean(

name = {"standaloneRedisUtil"}

)

@ConditionalOnProperty(

prefix = "spring.redis",

name = {"host"}

)

public RedisUtil standaloneRedisUtil() {

return new RedisUtil(this.getRedisTemplate(this.standaloneConnectionFactory()));

}

}

3.4 Listening for messages from serviceA

java

package com.example.service.api.consumer;

import com.example.service.api.producer.Producer;

import com.example.service.api.utils.RedisUtil;

import com.rabbitmq.client.Channel;

import lombok.extern.slf4j.Slf4j;

import org.springframework.amqp.core.Message;

import org.springframework.amqp.rabbit.annotation.Argument;

import org.springframework.amqp.rabbit.annotation.Queue;

import org.springframework.amqp.rabbit.annotation.RabbitListener;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Component;

import javax.annotation.Resource;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import static com.example.service.api.constant.MQConstants.*;

@Component

@Slf4j

public class AccountTaskConsumer {

@Resource

private RedisUtil redisUtil;

@Resource

private Producer producer;

/**

* Listens for messages from serviceA and forwards them to Kafka

*/

@RabbitListener(queuesToDeclare =@Queue(value =ACCOUNT_RESULT_QUEUE, arguments = {

@Argument(name = "x-dead-letter-exchange", value = DEAD_LETTER_EXCHANGE),

@Argument(name = "x-dead-letter-routing-key", value = DEAD_LETTER_ACCOUNT_QUEUE_ROUTING_KEY)

}) ,containerFactory = "accountMsgListenerFactory")

public void handleWorkbenchOpenapiMessage(Channel channel, Message message) throws Exception {

log.info("-------------------account消费开始-----------------------------");

boolean ack = true;

Exception exception = null;

try {

//Read the message body

String msgStr = new String(message.getBody(), StandardCharsets.UTF_8);

log.info("接收serviceA发过来的消息:{}", msgStr);

//Forward to Kafka

producer.sendKafkaInfo("ACCOUNT_KAFKA",msgStr);

log.info("kafka消息发送成功");

} catch (Exception e) {

ack = false;

exception = e;

}

checkAck(ack, message, channel, exception);

log.info("-------------------account消费结束-----------------------------");

}

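/**

* Acknowledges the message on success; on failure requeues it up to two times and then routes it to the dead-letter queue.

* @param ack whether consumption succeeded

* @param message the message being processed

* @param channel the channel used to ack or nack

* @param exception the failure cause, or null on success

*/

public void checkAck(Boolean ack, Message message, Channel channel, Exception exception) throws IOException {

long deliveryTag = message.getMessageProperties().getDeliveryTag();

//Read the messageId up front so that both branches use the same Redis key

String msgId = message.getMessageProperties().getMessageId();

if (ack) { //Consumed successfully: ack and clear any retry counter

channel.basicAck(deliveryTag, false);

if (msgId != null) {

redisUtil.del(msgId);

}

return;

}

log.error("消费消息发生异常, error msg:{}", exception.getMessage(), exception);

//Without a messageId the retries cannot be counted, so dead-letter immediately

if (msgId == null) {

channel.basicNack(deliveryTag, false, false);

return;

}

//A missing counter means this is the first failure

Object stored = redisUtil.exists(msgId) ? redisUtil.get(msgId) : null;

int retryCount = (stored instanceof Integer) ? (Integer) stored : 0;

if (retryCount < 2) { //Below the retry limit: record the attempt and requeue

redisUtil.set(msgId, retryCount + 1);

channel.basicNack(deliveryTag, false, true);

} else { //Retry limit reached: route to the dead-letter queue

channel.basicNack(deliveryTag, false, false);

redisUtil.del(msgId);

}

}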

}
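The retry handling in checkAck comes down to one small decision: acknowledge on success, requeue a failed message a limited number of times, then hand it to the dead-letter queue. Below is a minimal, dependency-free sketch of that decision, assuming an in-memory map in place of Redis. The names RetryPolicy, Action and decide are hypothetical and are not part of the project.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Decides what a consumer should do with a message, based on a per-message failure counter. */
final class RetryPolicy {

    enum Action { ACK, REQUEUE, DEAD_LETTER }

    private final int maxRetries;
    // Stand-in for Redis (assumption): messageId -> number of failed attempts so far.
    private final Map<String, Integer> failures = new ConcurrentHashMap<>();

    RetryPolicy(int maxRetries) {
        this.maxRetries = maxRetries;
    }

    Action decide(String messageId, boolean success) {
        if (success) {
            // Success: forget any counter kept for this message.
            if (messageId != null) {
                failures.remove(messageId);
            }
            return Action.ACK;
        }
        // A message without an id cannot be counted, so it is dead-lettered at once.
        if (messageId == null) {
            return Action.DEAD_LETTER;
        }
        int attempts = failures.merge(messageId, 1, Integer::sum);
        if (attempts <= maxRetries) {
            return Action.REQUEUE;
        }
        // Retry budget used up: clean the counter and dead-letter the message.
        failures.remove(messageId);
        return Action.DEAD_LETTER;
    }

    public static void main(String[] args) {
        RetryPolicy policy = new RetryPolicy(2);
        System.out.println(policy.decide("m1", false)); // REQUEUE
        System.out.println(policy.decide("m1", false)); // REQUEUE
        System.out.println(policy.decide("m1", false)); // DEAD_LETTER
    }
}
```

With a limit of 2, a message that keeps failing is delivered three times in total before it reaches the dead-letter queue. Keeping the counter outside the consumer (Redis in the article) is what lets the count survive redelivery to another consumer instance.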

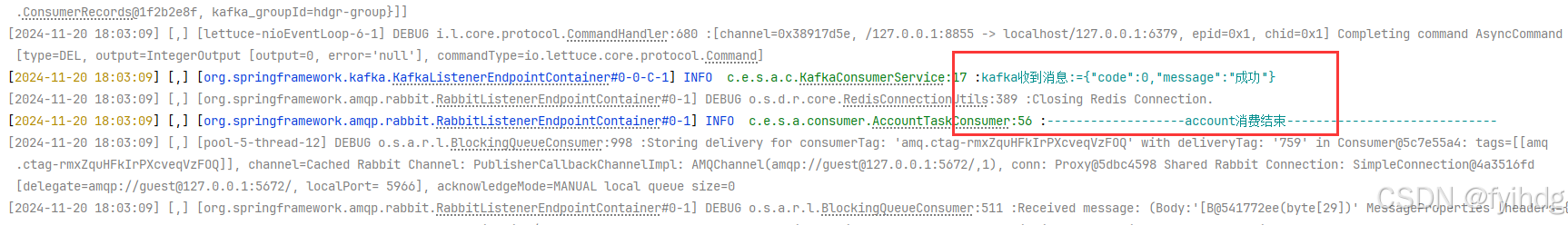

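3.5 Simulating the Kafka message received by the business caller

Where does the result of the call end up, and did the call succeed? Both answers are carried in the Kafka message.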

java

package com.example.service.api.consumer;

import lombok.extern.slf4j.Slf4j;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.stereotype.Service;

@Service

@Slf4j

public class KafkaConsumerService {

/**

* Simulates the business caller receiving the message and running its own business logic

*/

@KafkaListener(topics ="ACCOUNT_KAFKA", groupId = "hdgr-group", concurrency = "4")

public void listen(String message) {

log.info("kafka收到消息:={}" , message);

}

}

3.6 Public business endpoint

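The exampleServiceAPI service exists solely to expose the endpoint to external callers and is deployed as a single instance. The endpoint defined here has the same name and request parameters as the one being optimized; the only difference is that it always returns true.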

java

package com.example.service.api.controller;

import com.alibaba.nacos.shaded.com.google.gson.Gson;

import com.example.service.api.dto.AccountDto;

import com.example.service.api.producer.Producer;

import lombok.extern.slf4j.Slf4j;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestBody;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import javax.annotation.Resource;

@RestController

@RequestMapping("/serviceapi")

@Slf4j

public class ApiController {

@Resource

private Producer producer;

/**

* Simulated business endpoint

* @author

*/

@PostMapping("/saveDataAcount")

public boolean saveDataAcount(@RequestBody AccountDto accountDto){

Gson gson = new Gson();

String accountDtoSt = gson.toJson(accountDto);

producer.sendRabbatimqMessage(accountDtoSt);

return true;

}

}

3.7 Producer

java

package com.example.service.api.producer;

import lombok.extern.slf4j.Slf4j;

import org.springframework.amqp.rabbit.core.RabbitTemplate;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.stereotype.Component;

import javax.annotation.Resource;

import static com.example.service.api.constant.MQConstants.ACCOUNT_EXCHANGE;

import static com.example.service.api.constant.MQConstants.ACCOUNT_QUEUE_ROUTING_KEY;

/***

* @Description Producer

*/

@Component

@Slf4j

public class Producer {

@Resource

private RabbitTemplate rabbitTemplate;

@Resource

private KafkaTemplate<String, String> kafkaTemplate;

public void sendRabbatimqMessage(String accountDtoSt) {

log.info("外调直接入参={}" ,accountDtoSt);

rabbitTemplate.convertAndSend(ACCOUNT_EXCHANGE, ACCOUNT_QUEUE_ROUTING_KEY, accountDtoSt);

}

/**

* Sends a Kafka message

*/

public void sendKafkaInfo(String topic,String message) {

log.info("message={}",message);

kafkaTemplate.send(topic,message);

}

}

3.8 application.yml and pom.xml

yaml

spring:
  application:
    name: exampleServiceAPI
  cloud:
    nacos:
      config:
        server-addr: ${NACOS_SERVER:127.0.0.1:8848}
      # Service discovery
      discovery:
        server-addr: ${NACOS_SERVER:127.0.0.1:8848}
        username: nacos
        password: nacos
  kafka:
    bootstrap-servers: localhost:9092
    producer:
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      retries: 1
      acks: 1
      batch-size: 65535
      properties:
        linger:
          ms: 0
      buffer-memory: 33554432
    consumer:
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      enable-auto-commit: false
      auto-commit-interval: 1000ms
      auto-offset-reset: latest
      properties:
        session:
          timeout:
            ms: 120000
        request:
          timeout:
            ms: 180000
      max-poll-records: 50
    listener:
      missing-topics-fatal: false
      # Manual acknowledgement
      ack-mode: manual_immediate
      # Listener type: single (default, one record at a time) or batch
      type: batch
      # Auto-commit offsets, default true
      enable-auto-commit: false
      # Maximum number of records per batch
      max-poll-records: 50
  redis:
    host: localhost
    port: 6379
    password:
    database: 0
    timeout: 5000ms
    lettuce:
      pool:
        max-active: 8
        max-wait: 1000ms
        max-idle: 8
        min-idle: 0
server:
  port: 8081
# RabbitMQ connection settings
hdg.rabbitmq:
  my-rabbit:
    address: 127.0.0.1:5672
    username: guest
    password: guest
    virtual-host: /
logging:
  level:
    org.apache.kafka: ERROR

XML

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.7.14</version>

<relativePath/>

</parent>

<groupId>com.example.service.api</groupId>

<artifactId>exampleServiceAPI</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>exampleServiceAPI</name>

<description>exampleServiceAPI</description>

<properties>

<java.version>1.8</java.version>

<spring.cloud.version>2021.0.5</spring.cloud.version>

<spring.cloud.alibaba.version>2021.0.5.0</spring.cloud.alibaba.version>

<spring.boot.version>2.7.14</spring.boot.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!--fastjson -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.83</version>

</dependency>

<!-- hutool-->

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

<version>5.8.1</version>

</dependency>

<!--rabbitmq-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-amqp</artifactId>

</dependency>

<!-- Logging -->

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

</dependency>

<!-- Required for JSON-formatted log output -->

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>6.6</version>

</dependency>

<!-- kafka -->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>3.4.0</version>

</dependency>

<!-- Redis -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

<!-- Connection pool dependency for Redis -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-pool2</artifactId>

<scope>provided</scope>

</dependency>

<!--spring-cloud-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-bootstrap</artifactId>

</dependency>

<!-- Nacos service registry dependency -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<!-- Nacos config center dependency -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-config</artifactId>

</dependency>

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring.cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>${spring.cloud.alibaba.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring.boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<excludes>

<exclude>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</exclude>

</excludes>

</configuration>

</plugin>

</plugins>

</build>

</project>

At this point the exampleServiceAPI service is set up as well.

4. Concurrency testing

4.1 Testing the old endpoint

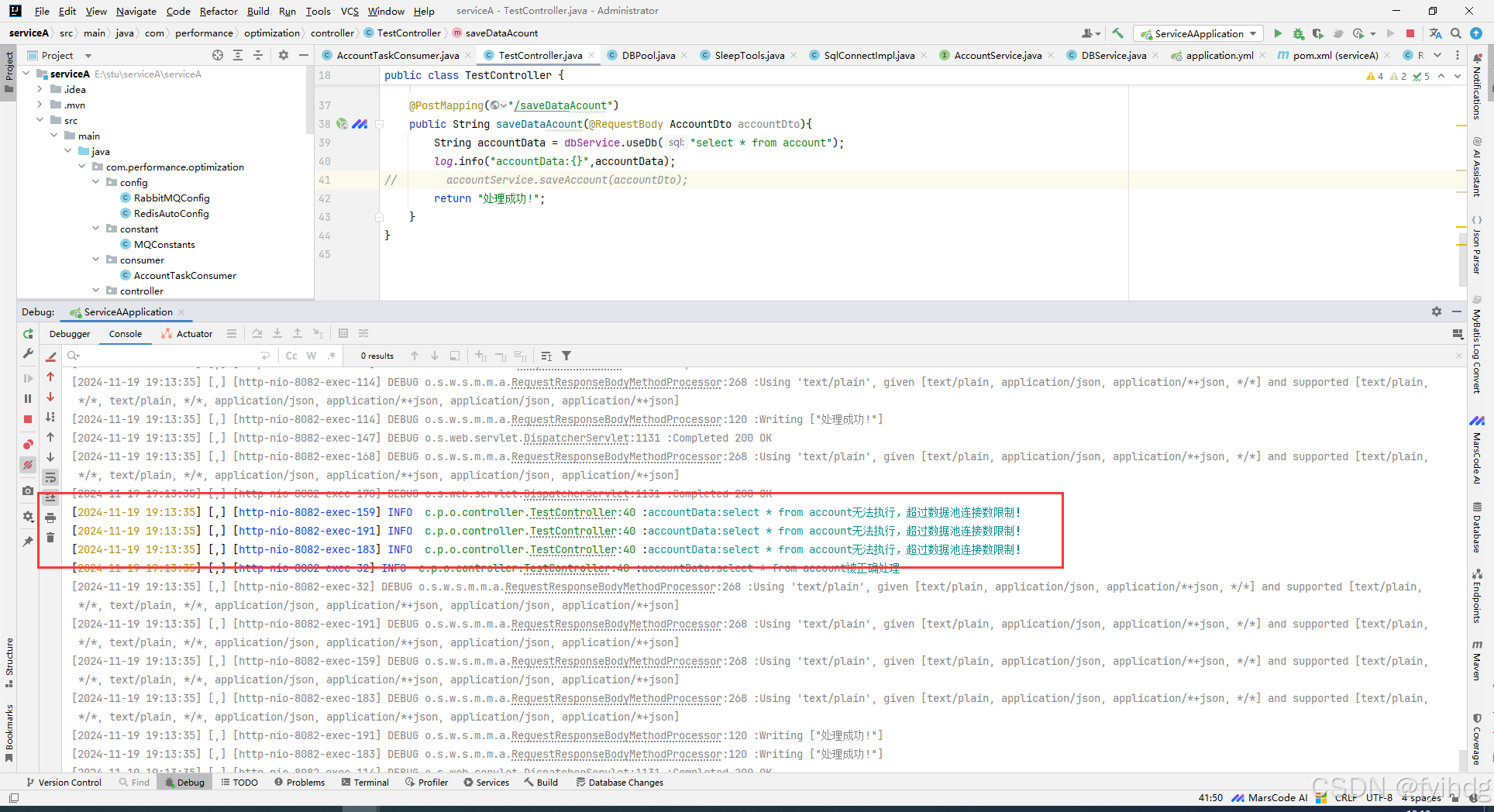

Write a small piece of test code that simulates a high-concurrency scenario and calls the old endpoint:

java

private final String url = "http://localhost:8082/optimization/saveDataAcount";

java

package com.example.service.api;

import com.example.service.api.dto.AccountDto;

import org.junit.jupiter.api.Test;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.http.HttpEntity;

import org.springframework.http.HttpHeaders;

import org.springframework.http.HttpMethod;

import org.springframework.http.ResponseEntity;

import org.springframework.web.client.RestTemplate;

import java.util.concurrent.CountDownLatch;

@SpringBootTest

class ExampleServiceApiApplicationTests {

private final String url = "http://localhost:8082/optimization/saveDataAcount";

// private final String exampleUrl = "http://localhost:8081/serviceapi/saveDataAcount";

RestTemplate restTemplate = new RestTemplate();

private static final int num = 1000;

//Starting gun: lets all requests hit the endpoint at the same time

private static CountDownLatch cdl = new CountDownLatch(num);

//Inner class implementing Runnable, used to simulate concurrent requests

public class highConcurrency implements Runnable{

@Override

public void run() {

try {

cdl.await(); //Wait at the starting line

} catch (InterruptedException e) {

e.printStackTrace();

}

AccountDto accountDto=new AccountDto();

accountDto.setOprid("53");

accountDto.setAge(0);

accountDto.setName("张三");

// Build the HTTP headers

HttpHeaders headers = new HttpHeaders();

headers.set("Content-Type", "application/json");

// Build the HTTP request entity

HttpEntity<AccountDto> entity = new HttpEntity<>(accountDto, headers);

//The original endpoint

ResponseEntity<String> responseEntity = restTemplate.exchange(url, HttpMethod.POST, entity, String.class);

//The optimized endpoint

// ResponseEntity<String> responseEntity = restTemplate.exchange(exampleUrl, HttpMethod.POST, entity, String.class);

System.out.println(responseEntity.getStatusCode());

}

}

@Test

public void testHighConcurrency() throws InterruptedException {

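//Simulate high concurrency

for(int i = 0; i <num; i++){

new Thread(new highConcurrency()).start();

cdl.countDown(); //Each iteration counts down once; when the count reaches 0 all waiting threads are released together

}

//Give the requests time to finish before the test method returns

Thread.sleep(3000);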

}

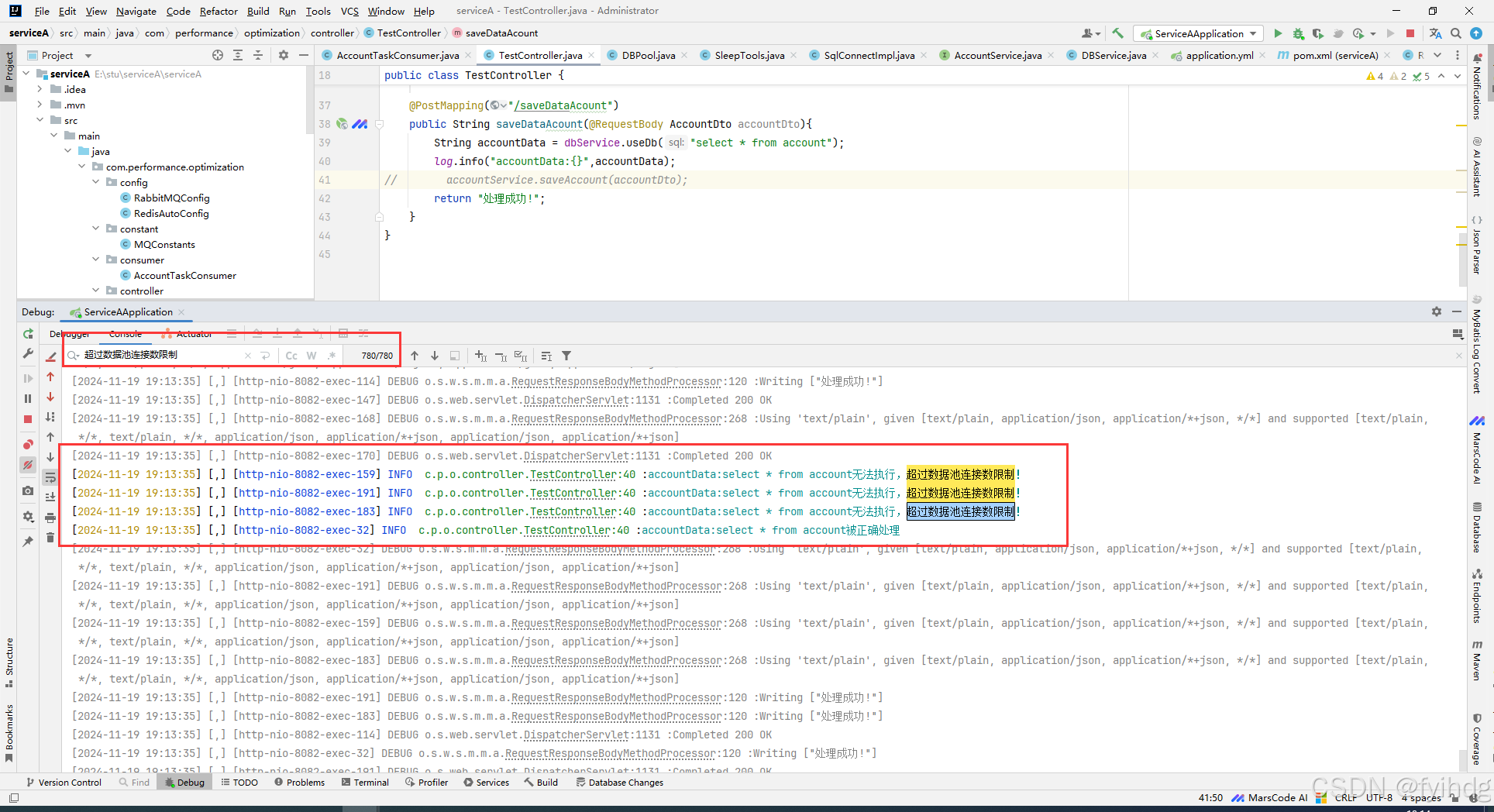

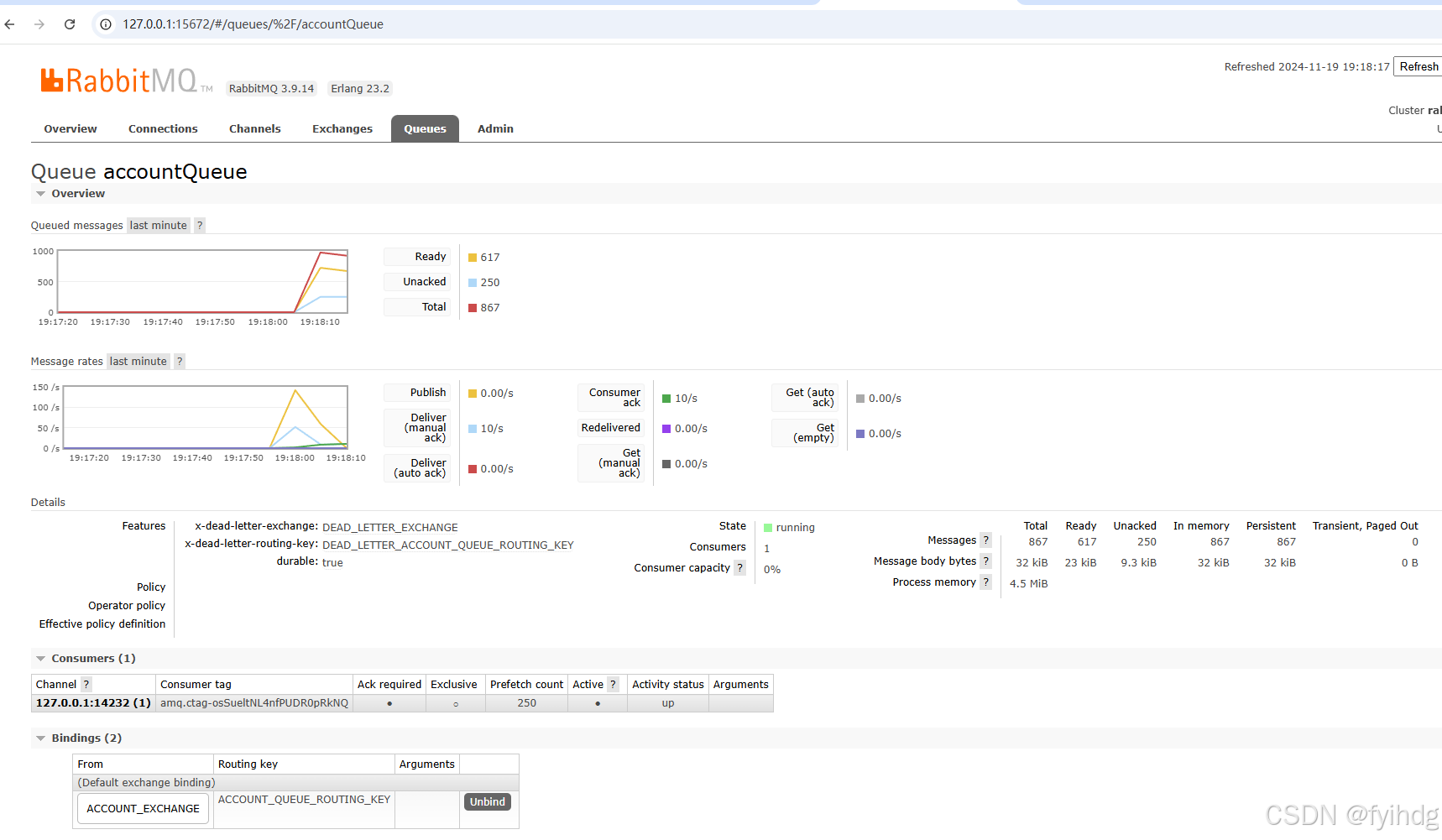

}1000个并发,数据库连接就已经不够使用了,报错了

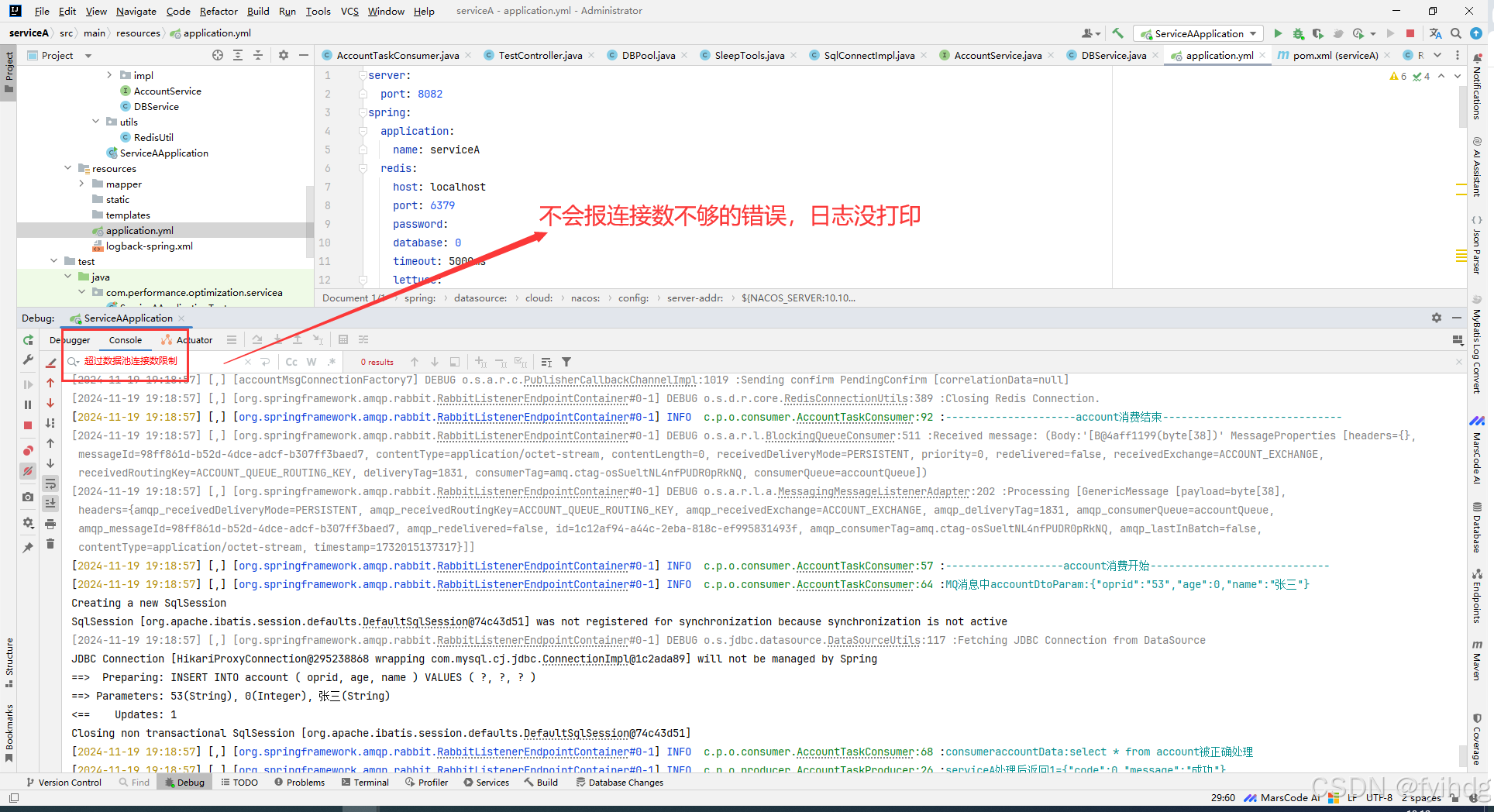

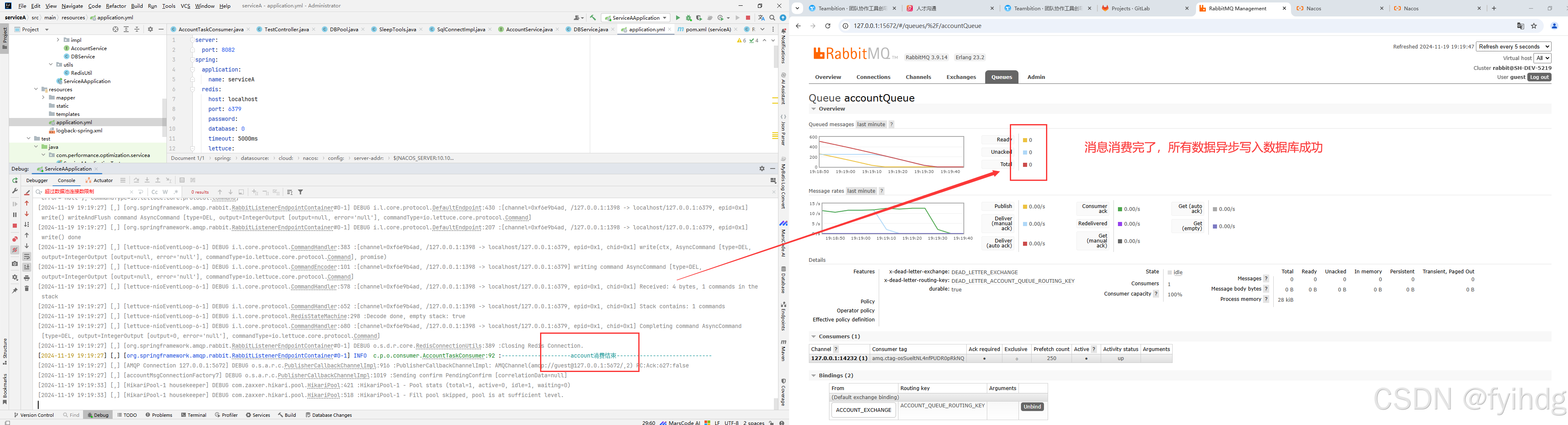
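The test above waits for the requests with a fixed sleep, which can end too early or waste time. As an alternative sketch (not the author's code), the burst can be coordinated with three latches: one that confirms every thread is ready, one that releases them together, and one that waits for completion. ConcurrentBurst and run are hypothetical names; the task can be any Runnable, for example the RestTemplate call used in the test.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class ConcurrentBurst {

    /** Runs the task on `threads` threads released together; returns how many calls finished without throwing. */
    static int run(int threads, Runnable task) {
        CountDownLatch ready = new CountDownLatch(threads); // every worker has started
        CountDownLatch start = new CountDownLatch(1);       // the starting gun
        CountDownLatch done = new CountDownLatch(threads);  // every worker has finished
        AtomicInteger succeeded = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            for (int i = 0; i < threads; i++) {
                pool.execute(() -> {
                    ready.countDown();
                    try {
                        start.await();
                        task.run();
                        succeeded.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    } catch (RuntimeException e) {
                        // A failed call (for example an HTTP error) is simply not counted as a success.
                    } finally {
                        done.countDown();
                    }
                });
            }
            ready.await();                       // wait until all workers are parked at the gate
            start.countDown();                   // fire the starting gun
            done.await(30, TimeUnit.SECONDS);    // wait for completion instead of sleeping
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdownNow();
        }
        return succeeded.get();
    }
}
```

Returning the success count makes it easy to compare the old and the reworked endpoint under the same burst size.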

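4.2 Testing the reworked endpoint

Point the test at the reworked endpoint. The endpoint name and request parameters are unchanged; only the path differs:

java

private final String exampleUrl = "http://localhost:8081/serviceapi/saveDataAcount";

The endpoint's concurrency capacity improves markedly, and the "not enough database connections" error no longer appears.

This is because the requests are routed into RabbitMQ, which smooths the peak like a reservoir: the burst is stored in the queue and consumed at a steady pace, so the database is never hit by all requests at once.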
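The reservoir effect can be illustrated without RabbitMQ. The sketch below is an assumption-laden stand-in: an in-memory BlockingQueue plays the role of the broker, and a small fixed pool of workers plays the role of the consumers that write to the database. PeakShavingDemo, Result and run are hypothetical names.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class PeakShavingDemo {

    /** Outcome of one run: messages processed and the highest number of simultaneous "database" calls. */
    static final class Result {
        final int processed;
        final int maxConcurrentDbCalls;

        Result(int processed, int maxConcurrentDbCalls) {
            this.processed = processed;
            this.maxConcurrentDbCalls = maxConcurrentDbCalls;
        }
    }

    static Result run(int burstSize, int workers) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        // The whole burst is accepted immediately: enqueueing never touches the database.
        for (int i = 0; i < burstSize; i++) {
            queue.add("request-" + i);
        }

        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger maxInFlight = new AtomicInteger();
        AtomicInteger processed = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        for (int w = 0; w < workers; w++) {
            pool.execute(() -> {
                // Each worker drains the queue at its own pace until it is empty.
                while (queue.poll() != null) {
                    int now = inFlight.incrementAndGet();
                    maxInFlight.accumulateAndGet(now, Math::max);
                    // A real consumer would write to the database here.
                    inFlight.decrementAndGet();
                    processed.incrementAndGet();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new Result(processed.get(), maxInFlight.get());
    }
}
```

However large the burst, the number of simultaneous database calls is bounded by the number of consumers rather than by the number of incoming requests, which is why the connection pool is no longer exhausted.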

Kafka also receives the message, which simulates the caller receiving the processed result.

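5. Setting up the test-gateway gateway service

Because the endpoint is exposed to external callers, it needs rate-limiting protection: if traffic grows too large it can overwhelm the system and make it unavailable. Rate limiting is usually done at the gateway, so I set up a Spring Cloud Gateway service for it.

5.1 Rate-limiting configuration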

java

package com.test.gateway.testgateway.limiters;

import com.test.gateway.testgateway.properties.RequestLimiterUrlProperties;

import org.springframework.cloud.gateway.filter.ratelimit.KeyResolver;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import reactor.core.publisher.Mono;

import java.util.List;

/***

* @Description Rate-limiting configuration

* @Author degui.huang

* @Version 1.0

*/

@Configuration

public class LimiterConfig {

@Bean

@Primary

public KeyResolver customPathKeyResolver(RequestLimiterUrlProperties requestLimiterUrlProperties) {

return exchange -> {

String path = exchange.getRequest().getURI().getPath();

List<String> limitedPaths = requestLimiterUrlProperties.getList();

if (requestLimiterUrlProperties.getEnabled() && limitedPaths.contains(path)) {

return Mono.just("rate_limit_key");

}

return Mono.just(path);

};

}

}

5.2 Implementing request rate limiting

java

package com.test.gateway.testgateway.limiters;

import com.test.gateway.testgateway.properties.RequestLimiterUrlProperties;

import org.springframework.cloud.gateway.filter.GatewayFilter;

import org.springframework.cloud.gateway.filter.factory.RequestRateLimiterGatewayFilterFactory;

import org.springframework.cloud.gateway.filter.ratelimit.KeyResolver;

import org.springframework.cloud.gateway.filter.ratelimit.RateLimiter;

import org.springframework.cloud.gateway.route.Route;

import org.springframework.cloud.gateway.support.ServerWebExchangeUtils;

import org.springframework.core.io.buffer.DataBuffer;

import org.springframework.http.HttpStatus;

import org.springframework.http.server.reactive.ServerHttpResponse;

import org.springframework.stereotype.Component;

import reactor.core.publisher.Mono;

import java.nio.charset.StandardCharsets;

import java.util.Map;

/***

* @Description Rate-limiting implementation

* @Author degui.huang

* @Version 1.0

*/

@Component

public class LimitersGatewayFilterFactory extends RequestRateLimiterGatewayFilterFactory {

private final RateLimiter defaultRateLimiter;

private final KeyResolver defaultKeyResolver;

private final RequestLimiterUrlProperties requestLimiterUrlProperties;

public LimitersGatewayFilterFactory(RateLimiter defaultRateLimiter, KeyResolver defaultKeyResolver, RequestLimiterUrlProperties requestLimiterUrlProperties) {

super(defaultRateLimiter, defaultKeyResolver);

this.defaultRateLimiter = defaultRateLimiter;

this.defaultKeyResolver = defaultKeyResolver;

this.requestLimiterUrlProperties = requestLimiterUrlProperties;

}

@Override

public GatewayFilter apply(Config config) {

KeyResolver resolver = getOrDefault(config.getKeyResolver(), defaultKeyResolver);

RateLimiter<Object> limiter = getOrDefault(config.getRateLimiter(), defaultRateLimiter);

return (exchange, chain) -> resolver.resolve(exchange).flatMap(key -> {

String routeId = config.getRouteId();

if (routeId == null) {

Route route = exchange.getAttribute(ServerWebExchangeUtils.GATEWAY_ROUTE_ATTR);

routeId = route.getId();

}

String path = exchange.getRequest().getURI().getPath();

return limiter.isAllowed(routeId, key).flatMap(response -> {

for (Map.Entry<String, String> header : response.getHeaders().entrySet()) {

exchange.getResponse().getHeaders().add(header.getKey(), header.getValue());

}

if (response.isAllowed() || !requestLimiterUrlProperties.getEnabled()) {

return chain.filter(exchange);

}

if (!requestLimiterUrlProperties.getList().contains(path)) {

return chain.filter(exchange);

}

ServerHttpResponse httpResponse = exchange.getResponse();

//Set the status code to 429 (Too Many Requests)

httpResponse.setStatusCode(HttpStatus.TOO_MANY_REQUESTS);

if (!httpResponse.getHeaders().containsKey("Content-Type")) {

httpResponse.getHeaders().add("Content-Type", "application/json");

}

//The global exception handler is not triggered here, so the response body is written manually

DataBuffer buffer = httpResponse.bufferFactory().wrap(("{\n"

+ " \"code\": \"429\","

+ " \"message\": \"请求过于频繁,请稍后再试\","

+ " \"success\": false"

+ "}").getBytes(StandardCharsets.UTF_8));

return httpResponse.writeWith(Mono.just(buffer));

});

});

}

private <T> T getOrDefault(T configValue, T defaultValue) {

return (configValue != null) ? configValue : defaultValue;

}

}

5.3 Defining the key used by the rate-limiting policy

java

package com.test.gateway.testgateway.limiters;

import org.springframework.cloud.gateway.filter.ratelimit.KeyResolver;

import org.springframework.web.server.ServerWebExchange;

import reactor.core.publisher.Mono;

/***

* Defines the key used by the rate-limiting policy

* @Author degui.huang

* @Version 1.0

*/

public class LimitersKeyResolver implements KeyResolver {

@Override

public Mono<String> resolve(ServerWebExchange exchange) {

return Mono.just(exchange.getRequest().getPath().toString());

}

}

5.4 Rate-limiting configuration

java

package com.test.gateway.testgateway.properties;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.stereotype.Component;

import java.util.ArrayList;

import java.util.List;

/***

* @Description Rate-limiting configuration

* @Author degui.huang

* @Version 1.0

*/

@Component

@ConfigurationProperties(prefix = "limiterurl")

public class RequestLimiterUrlProperties {

public List<String> list = new ArrayList<>();

public boolean enabled;

public List<String> getList() {

return list;

}

public void setList(List<String> list) {

this.list = list;

}

public boolean getEnabled() {

return enabled;

}

public void setEnabled(boolean enabled) {

this.enabled = enabled;

}

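}

5.5 application.yml and pom.xml

Note that in both - Path and - RewritePath the leading path segment (exampleapi) must be written entirely in lowercase; otherwise the route has no effect.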

yaml

server:
  port: 8088
spring:
  application:
    name: test-gateway
  redis:
    host: localhost
    port: 6379
    password:
    database: 0
    timeout: 5000ms
    lettuce:
      pool:
        max-active: 8
        max-wait: 1000ms
        max-idle: 8
        min-idle: 0
  cloud:
    nacos:
      config:
        server-addr: ${NACOS_SERVER:127.0.0.1:8848}
      # Service discovery
      discovery:
        server-addr: ${NACOS_SERVER:127.0.0.1:8848}
        username: nacos
        password: nacos
    gateway:
      routes:
        # exampleServiceAPI exposes the endpoint to external callers
        - id: exampleServiceAPIId
          uri: lb://exampleServiceAPI
          # Important: the leading segment of this path (exampleapi) must be all lowercase, otherwise the route has no effect
          predicates:
            - Path=/exampleapi/** # route matching
          filters:
            - name: Limiters
              args:
                key-resolver: "#{@customPathKeyResolver}"
                redis-rate-limiter.replenishRate: 100 # 100 requests per second
                redis-rate-limiter.burstCapacity: 100 # maximum burst capacity of 100
            # Important: the leading segment of this path (exampleapi) must be all lowercase, otherwise the route has no effect
            - RewritePath=/exampleapi/(?<segment>.*), /${segment} # path rewriting
# URLs to rate limit
# Add every endpoint that should be rate limited to the list below
limiterurl:
  # enabled: true turns rate limiting on, false turns it off; this switch can also live in the Nacos configuration
  enabled: true
  list:
    - /exampleapi/serviceapi/saveDataAcount
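To see what the RewritePath filter in this configuration does to an incoming path, the same regular expression and replacement can be applied with plain Java string replacement, which, as far as I know, is essentially what the filter does internally. RewritePathDemo and rewrite are hypothetical names used only for this illustration.

```java
final class RewritePathDemo {

    // Regex and replacement copied from the RewritePath filter in the route definition.
    private static final String REGEX = "/exampleapi/(?<segment>.*)";
    private static final String REPLACEMENT = "/${segment}";

    /** Strips the gateway prefix; paths that do not match the regex are returned unchanged. */
    static String rewrite(String path) {
        return path.replaceAll(REGEX, REPLACEMENT);
    }

    public static void main(String[] args) {
        // Matches: the prefix is removed before the request is forwarded.
        System.out.println(rewrite("/exampleapi/serviceapi/saveDataAcount")); // /serviceapi/saveDataAcount
        // Different case: the regex does not match, so nothing is rewritten.
        System.out.println(rewrite("/ExampleApi/serviceapi/saveDataAcount")); // /ExampleApi/serviceapi/saveDataAcount
    }
}
```

The second call shows that the match is case-sensitive, so a prefix written with different casing is left untouched.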

XML

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.7.14</version>

<relativePath/>

</parent>

<groupId>com.test.gateway</groupId>

<artifactId>test-gateway</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>test-gateway</name>

<description>test-gateway</description>

<properties>

<java.version>1.8</java.version>

<spring.cloud.version>2021.0.5</spring.cloud.version>

<spring.cloud.alibaba.version>2021.0.5.0</spring.cloud.alibaba.version>

<spring.boot.version>2.7.14</spring.boot.version>

</properties>

<dependencies>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!-- Gateway dependency -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-gateway</artifactId>

</dependency>

<!--fastjson -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.83</version>

</dependency>

<!-- Redis -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis-reactive</artifactId>

</dependency>

<!-- hutool-->

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

<version>5.8.1</version>

</dependency>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-bootstrap</artifactId>

</dependency>

<!-- Nacos service registry dependency -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<!-- Nacos config center dependency -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-config</artifactId>

</dependency>

<!-- 实现负载均衡-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-loadbalancer</artifactId>

</dependency>

<!-- 日志 -->

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

</dependency>

<!--日志以json格式输出所需-->

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>6.6</version>

</dependency>

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring.cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>${spring.cloud.alibaba.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring.boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<excludes>

<exclude>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</exclude>

</excludes>

</configuration>

</plugin>

</plugins>

</build>

</project>6. JMeter 限流测试

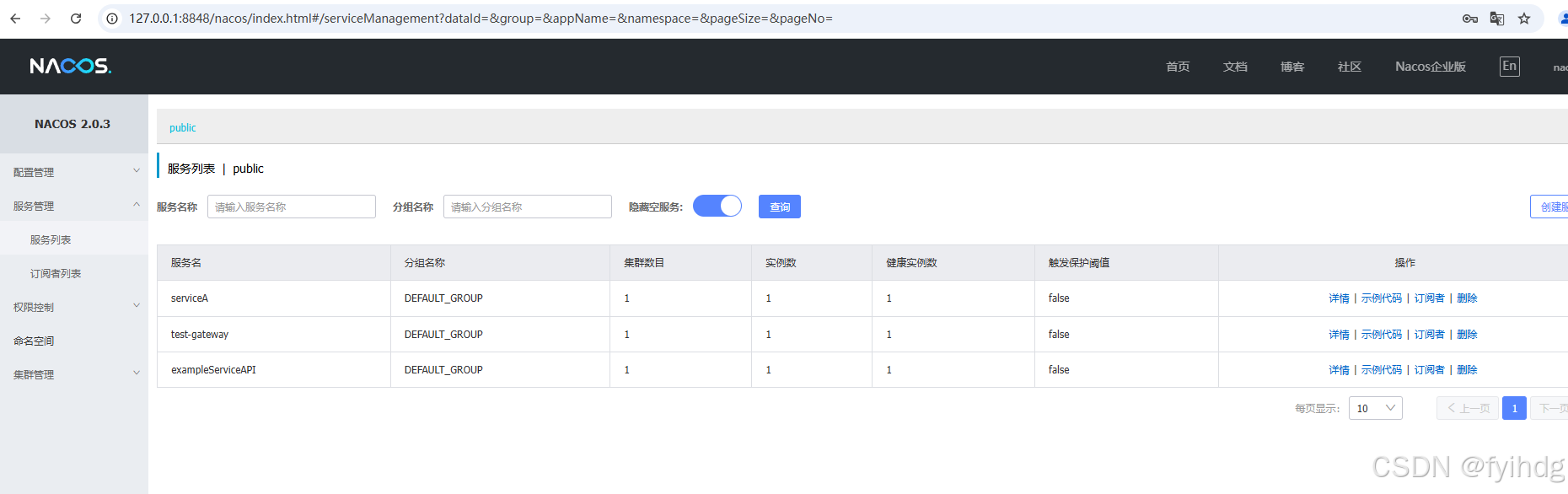

I have now set up all three services, and each of them has registered successfully with Nacos. Next comes the rate-limiting test.

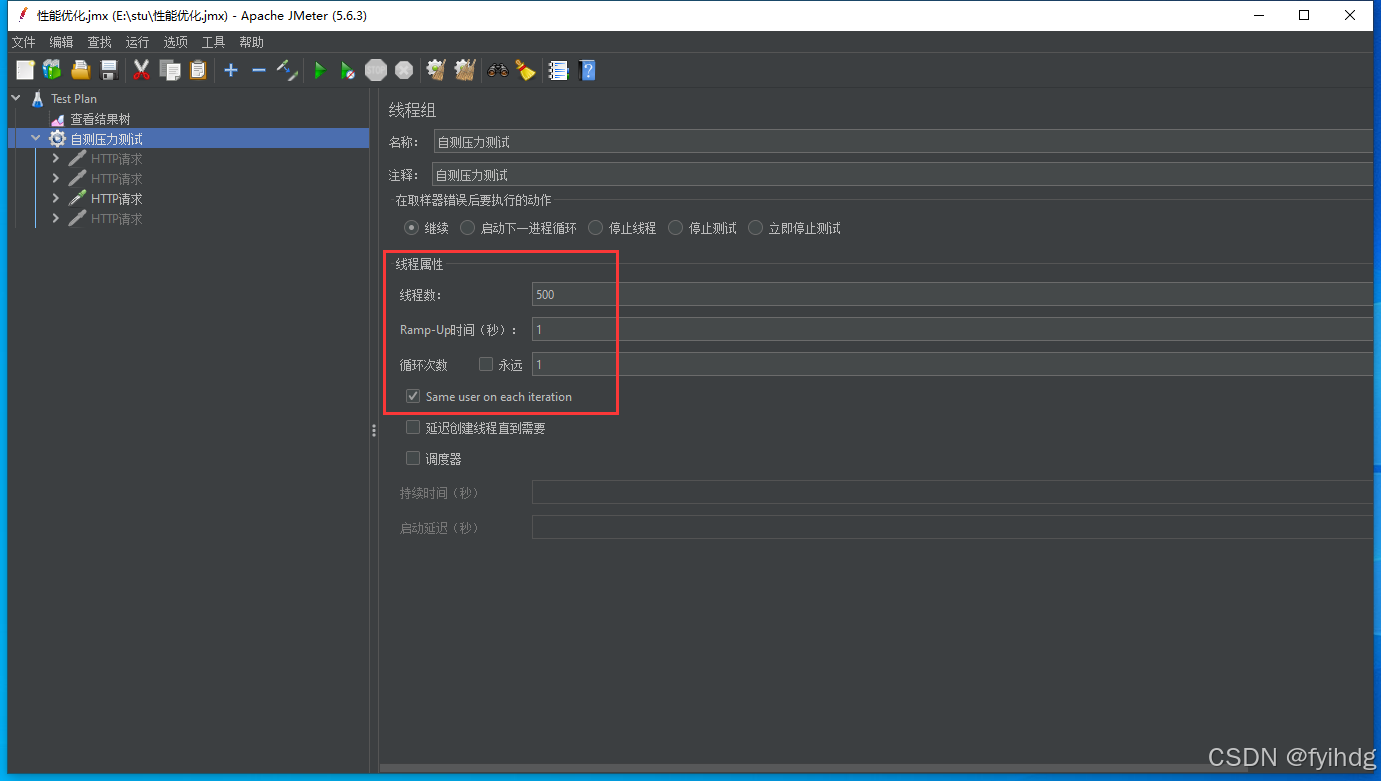

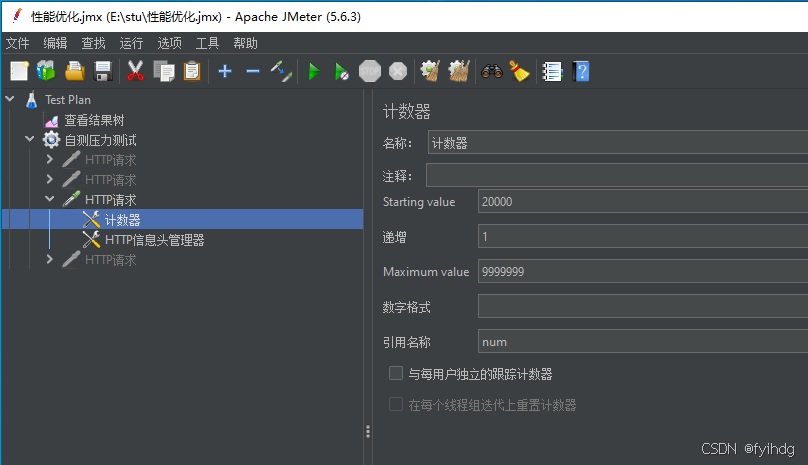

6.1 JMeter configuration

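The gateway is configured to allow 100 requests per second with a maximum burst capacity of 100. In JMeter, I set the thread count to 500 and had all of the threads start within 1 second.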
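As a rough way to reason about how many of those 500 requests this configuration can let through, here is a minimal token-bucket sketch. It assumes the limiter behaves like a bucket that starts full and refills in whole-second steps; that is my understanding of how Spring Cloud Gateway's Redis-based limiter works, not something checked against this project's code. The class name `TokenBucketSketch`, the `simulate` helper, and the evenly spaced arrival times are all made up for illustration.

```java
/** Simplified token bucket with whole-second refill (an assumed model, not the gateway's real code). */
class TokenBucketSketch {
    private final long replenishRate;   // tokens added per elapsed second
    private final long burstCapacity;   // maximum number of tokens the bucket can hold
    private long tokens;                // tokens currently available
    private long lastRefillSecond;      // clock second at which the bucket was last refilled

    TokenBucketSketch(long replenishRate, long burstCapacity) {
        this.replenishRate = replenishRate;
        this.burstCapacity = burstCapacity;
        this.tokens = burstCapacity;    // assumption: the bucket starts full
        this.lastRefillSecond = 0;
    }

    /** Refills for every whole second elapsed, then tries to take one token. */
    boolean tryAcquire(long nowMillis) {
        long nowSecond = nowMillis / 1000;
        long elapsed = Math.max(0, nowSecond - lastRefillSecond);
        tokens = Math.min(burstCapacity, tokens + elapsed * replenishRate);
        lastRefillSecond = nowSecond;
        if (tokens >= 1) {
            tokens--;
            return true;
        }
        return false;
    }

    /** Spreads the requests evenly over the ramp-up period and counts how many are admitted. */
    static int simulate(int requests, long rampUpMillis, long startOffsetMillis, long rate, long burst) {
        TokenBucketSketch bucket = new TokenBucketSketch(rate, burst);
        int admitted = 0;
        for (int i = 0; i < requests; i++) {
            long arrival = startOffsetMillis + (i * rampUpMillis) / requests;
            if (bucket.tryAcquire(arrival)) {
                admitted++;
            }
        }
        return admitted;
    }

    public static void main(String[] args) {
        // Run falls entirely inside one clock second: only the initial burst is available.
        System.out.println(simulate(500, 1000, 0, 100, 100));   // prints 100
        // Run straddles a second boundary: the initial burst plus one refill.
        System.out.println(simulate(500, 1000, 500, 100, 100)); // prints 200
    }
}
```

Under this model the number of admitted requests depends on how the one-second run lines up with the clock: between 100 and 200, never more.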

Request path:

http://localhost:8088/exampleapi/serviceapi/saveDataAcount

Request body:

json

{
  "oprid": ${num},
  "age": 76,
  "name": "网关测试"
}

6.2 Load test results

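The request targets the saveDataAcount endpoint in exampleServiceAPI, which logs the keyword "accountDto入参" on every call, so counting how often that keyword appears in the log tells us how many requests actually reached exampleServiceAPI. We send 500 threads, and in theory at most 200 of those requests should get through: the limiter starts with its full burst capacity of 100 and can refill another 100 during the one-second run.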
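Counting the keyword by hand gets tedious, so a small helper along these lines could do it. This is only a sketch: the class name `LogKeywordCounter` is made up, and the log file location is passed as an argument because the article does not say where exampleServiceAPI writes its log.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

/** Counts log lines containing a keyword (hypothetical helper, not part of the project). */
class LogKeywordCounter {

    /** Returns the number of lines that contain the keyword at least once. */
    static long countLines(List<String> lines, String keyword) {
        return lines.stream().filter(line -> line.contains(keyword)).count();
    }

    /** Reads a UTF-8 log file and counts the lines that contain the keyword. */
    static long countInFile(Path logFile, String keyword) throws IOException {
        return countLines(Files.readAllLines(logFile, StandardCharsets.UTF_8), keyword);
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.err.println("usage: java LogKeywordCounter <path-to-log-file>");
            return;
        }
        Path logFile = Paths.get(args[0]);
        // "accountDto入参" is the keyword the saveDataAcount endpoint logs for each request.
        System.out.println(countInFile(logFile, "accountDto入参"));
    }
}
```

This counts matching lines rather than raw occurrences, which gives the same number as long as each request logs the keyword on its own line.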

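Within 1 second, only 200 requests arrived:

The simulated business side also received the Kafka messages:

Requests beyond the limit are rejected: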
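To check the rejections outside JMeter, a small probe like the following could send a request and tally the status codes. It is a sketch under assumptions: it treats HTTP 429 (Too Many Requests) as the rejection status, which is the usual default for Spring Cloud Gateway's request rate limiter but may differ if the limiter in this project is customized. `RateLimitProbe` and its methods are hypothetical names; the URL and request body are the ones from section 6.1, with `${num}` replaced by 1.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.List;

/** Sends a test request and counts rate-limited responses (hypothetical helper). */
class RateLimitProbe {
    // Assumption: the gateway answers rejected requests with 429 Too Many Requests.
    static final int TOO_MANY_REQUESTS = 429;

    /** Posts a JSON body to the given URL and returns the HTTP status code. */
    static int post(String url, String json) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setRequestMethod("POST");
        conn.setRequestProperty("Content-Type", "application/json");
        conn.setDoOutput(true);
        try (OutputStream out = conn.getOutputStream()) {
            out.write(json.getBytes(StandardCharsets.UTF_8));
        }
        int status = conn.getResponseCode();
        conn.disconnect();
        return status;
    }

    /** Counts how many of the collected status codes mark a rate-limited request. */
    static long countRejected(List<Integer> statuses) {
        return statuses.stream().filter(s -> s == TOO_MANY_REQUESTS).count();
    }

    public static void main(String[] args) throws IOException {
        String url = "http://localhost:8088/exampleapi/serviceapi/saveDataAcount";
        String body = "{\"oprid\": 1, \"age\": 76, \"name\": \"网关测试\"}";
        // Requires the gateway and the services behind it to be running locally.
        System.out.println("status = " + post(url, body));
    }
}
```

Collecting the status codes from many concurrent calls and passing them to `countRejected` should give roughly the number of requests that never reached exampleServiceAPI.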

I have packaged all of the code and related software for this article and uploaded it here; it can be downloaded without spending points:

https://download.csdn.net/download/fyihdg/90017490

For installing RabbitMQ on Windows, see this article:

For installing Kafka on Windows, see this article:
