1. Preparation

1.1 Server inventory

| Role | IP | Operating system | Installed services |
|---|---|---|---|
| Monitoring host | 10.45.19.20 | Linux CentOS 7.9 | CMAK 3.0.0.6, ZooKeeper 3.9.0, JDK 11, JDK 1.8 |
| Monitored host, Kafka broker.id 0 | 50.50.50.101 | Linux CentOS 7.9 | Kafka, ZooKeeper (any version) |
| Monitored host, Kafka broker.id 1 | 50.50.50.101 | Linux CentOS 7.9 | Kafka, ZooKeeper (any version) |
| Monitored host, Kafka broker.id 2 | 50.50.50.101 | Linux CentOS 7.9 | Kafka, ZooKeeper (any version) |

1.2 Prepare the installation packages

Download sources:

- https://www.oracle.com/java/technologies/downloads/archive
- https://archive.apache.org/dist/zookeeper
- https://github.com/yahoo/CMAK

Packages:

- jdk-11.0.26_linux-x64_bin.tar.gz
- jdk-8u161-linux-x64.tar.gz
- apache-zookeeper-3.9.0-bin.tar.gz
- cmak-3.0.0.6.zip

1.3 Create the installation directory

```bash
mkdir -p /usr/local/cmak
```

2. Deploy the JDK 11 environment

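Extract jdk-11.0.26_linux-x64_bin.tar.gz into the installation directory. The CMAK start script in 4.3 points at this JDK with -java-home.

```bash
tar zxf jdk-11.0.26_linux-x64_bin.tar.gz -C /usr/local/cmak
```

3. Deploy ZooKeeper for metadata storage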

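3.1 Deploy the JDK 1.8 environment

```bash
tar zxf jdk-8u161-linux-x64.tar.gz -C /usr/local/cmak
```

Edit /etc/profile:

```bash
vim /etc/profile
```

Append the following line, then reload the profile (for example with source /etc/profile) so that the current shell picks it up:

```bash
export PATH=$PATH:/usr/local/cmak/jdk1.8.0_161/bin
```

3.2 Deploy ZooKeeper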

3.2.1 Extract to the installation directory

```bash
wget https://archive.apache.org/dist/zookeeper/zookeeper-3.9.0/apache-zookeeper-3.9.0-bin.tar.gz
tar zxf apache-zookeeper-3.9.0-bin.tar.gz -C /usr/local/cmak
mv /usr/local/cmak/apache-zookeeper-3.9.0-bin /usr/local/cmak/zookeeper-3.9.0
```

3.2.2 Create the storage directories

```bash
mkdir /usr/local/cmak/zookeeper-3.9.0/{data,logs}
```

3.2.3 Edit the configuration file

```bash
cp /usr/local/cmak/zookeeper-3.9.0/conf/zoo_sample.cfg /usr/local/cmak/zookeeper-3.9.0/conf/zoo.cfg
vim /usr/local/cmak/zookeeper-3.9.0/conf/zoo.cfg
```

Set the following values:

```properties
dataDir=/usr/local/cmak/zookeeper-3.9.0/data
dataLogDir=/usr/local/cmak/zookeeper-3.9.0/logs
clientPort=3281
admin.serverPort=3282
tickTime=2000
initLimit=10
syncLimit=5
```

3.2.4 Start

```bash
sh /usr/local/cmak/zookeeper-3.9.0/bin/zkServer.sh start
```

4. Deploy CMAK
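CMAK keeps its metadata in the ZooKeeper instance set up in section 3, so before unpacking CMAK it can help to confirm that the instance answers on client port 3281. The sketch below is one way to do that. It assumes bash (for its /dev/tcp redirection), the coreutils timeout command, and that the srvr four-letter command is allowed by the ZooKeeper whitelist; zk_srvr and zk_mode are hypothetical helper names, not part of ZooKeeper or CMAK.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a quick ZooKeeper liveness check.

# Send the "srvr" four-letter command to HOST PORT and print the reply.
# Uses bash's /dev/tcp pseudo-device, so it needs bash rather than plain sh.
zk_srvr() {
    (
        exec 3<>"/dev/tcp/$1/$2" || exit 1
        printf 'srvr' >&3
        # ZooKeeper closes the connection after replying; timeout is a safety net.
        timeout 5 cat <&3
    )
}

# Read srvr output on stdin and print the value of its "Mode:" line.
zk_mode() {
    awk -F': ' '/^Mode:/ { print $2 }'
}

# Usage on the monitoring host (not executed here):
#   zk_srvr 10.45.19.20 3281 | zk_mode
```

A single-node instance configured as in 3.2.3 would normally report its mode as standalone; no output at all suggests the port is not reachable or the command is not whitelisted.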

4.1 Extract to the installation directory

Download: https://github.com/yahoo/CMAK/releases/download/3.0.0.6/cmak-3.0.0.6.zip

```bash
unzip cmak-3.0.0.6.zip -d /usr/local/cmak
```

4.2 Write the configuration files

```bash
vim /usr/local/cmak/cmak-3.0.0.6/conf/application.conf
```

```conf
# -------------------------------------------------------------------
# Basic application settings
# -------------------------------------------------------------------
# Secret key for sessions and cookies. A hard-coded key is not secure;
# in production, override it through an environment variable.
play.crypto.secret="^<csmm5Fx4d=r2HEX8pelM3iBkFVv?k[mc;IZE<_Qoq8EkX_/7@Zt6dP05Pzea3U"
# Allow the APPLICATION_SECRET environment variable to override the key
play.crypto.secret=${?APPLICATION_SECRET}

# Session lifetime (1 hour)
play.http.session.maxAge="1h"

# Languages supported for i18n (English only at present)
play.i18n.langs=["en"]

# HTTP request handler and context path
play.http.requestHandler = "play.http.DefaultHttpRequestHandler"
play.http.context = "/"

# Application loader class (custom Kafka Manager loading logic)
play.application.loader=loader.KafkaManagerLoader

# -------------------------------------------------------------------
# ZooKeeper
# -------------------------------------------------------------------
# ZooKeeper connection string (default value)
cmak.zkhosts="10.45.19.20:3281"
# Allow the ZK_HOSTS environment variable to override the ZooKeeper address
cmak.zkhosts=${?ZK_HOSTS}

# -------------------------------------------------------------------
# Thread dispatching
# -------------------------------------------------------------------
# Pinned dispatcher (gives each actor its own dedicated thread pool)
pinned-dispatcher.type="PinnedDispatcher"
pinned-dispatcher.executor="thread-pool-executor"

# -------------------------------------------------------------------
# Feature switches
# -------------------------------------------------------------------
# Available Kafka management features:
# - cluster management
# - topic management
# - preferred replica election
# - partition reassignment
# - scheduled leader election
# Only cluster management is enabled here; the others are commented out.
application.features=[
  "KMClusterManagerFeature",
  # "KMTopicManagerFeature",
  # "KMPreferredReplicaElectionFeature",
  # "KMReassignPartitionsFeature",
  # "KMScheduleLeaderElectionFeature"
]

# -------------------------------------------------------------------
# Akka
# -------------------------------------------------------------------
akka {
  # Use SLF4J as the logger
  loggers = ["akka.event.slf4j.Slf4jLogger"]
  # Log level (INFO | DEBUG | WARN | ERROR)
  # loglevel = "INFO"
  loglevel = "WARN"
}

# Startup timeout for the Akka logging system
akka.logger-startup-timeout = 60s

# -------------------------------------------------------------------
# Basic authentication
# -------------------------------------------------------------------
# Whether basic authentication is enabled (disabled by default upstream, enabled here)
basicAuthentication.enabled=true
# Allow the KAFKA_MANAGER_AUTH_ENABLED environment variable to override
basicAuthentication.enabled=${?KAFKA_MANAGER_AUTH_ENABLED}

# LDAP authentication ------------------------------------------------
# Whether LDAP authentication is enabled (disabled by default)
basicAuthentication.ldap.enabled=false
basicAuthentication.ldap.enabled=${?KAFKA_MANAGER_LDAP_ENABLED}

# LDAP server address (example: ldap://hostname)
basicAuthentication.ldap.server=""
basicAuthentication.ldap.server=${?KAFKA_MANAGER_LDAP_SERVER}

# LDAP port (389 by default, usually 636 for SSL)
basicAuthentication.ldap.port=389
basicAuthentication.ldap.port=${?KAFKA_MANAGER_LDAP_PORT}

# User name and password used to connect to LDAP
basicAuthentication.ldap.username=""
basicAuthentication.ldap.username=${?KAFKA_MANAGER_LDAP_USERNAME}
basicAuthentication.ldap.password=""
basicAuthentication.ldap.password=${?KAFKA_MANAGER_LDAP_PASSWORD}

# LDAP search settings
basicAuthentication.ldap.search-base-dn=""
basicAuthentication.ldap.search-base-dn=${?KAFKA_MANAGER_LDAP_SEARCH_BASE_DN}
basicAuthentication.ldap.search-filter="(uid=$capturedLogin$)"
basicAuthentication.ldap.search-filter=${?KAFKA_MANAGER_LDAP_SEARCH_FILTER}

# LDAP group filter
basicAuthentication.ldap.group-filter=""
basicAuthentication.ldap.group-filter=${?KAFKA_MANAGER_LDAP_GROUP_FILTER}

# Connection pool
basicAuthentication.ldap.connection-pool-size=10
basicAuthentication.ldap.connection-pool-size=${?KAFKA_MANAGER_LDAP_CONNECTION_POOL_SIZE}

# SSL
basicAuthentication.ldap.ssl=false
basicAuthentication.ldap.ssl=${?KAFKA_MANAGER_LDAP_SSL}
basicAuthentication.ldap.ssl-trust-all=false
basicAuthentication.ldap.ssl-trust-all=${?KAFKA_MANAGER_LDAP_SSL_TRUST_ALL}

# Basic authentication credentials (used only when basic authentication is enabled and LDAP is not)
basicAuthentication.username="admin"
basicAuthentication.username=${?KAFKA_MANAGER_USERNAME}
basicAuthentication.password="Quk@EL!4xMyj3hnD"
basicAuthentication.password=${?KAFKA_MANAGER_PASSWORD}

# Authentication realm and paths exempt from authentication (/api/health is used for health checks)
basicAuthentication.realm="Kafka-Manager"
basicAuthentication.excluded=["/api/health"]

# -------------------------------------------------------------------
# Kafka consumer
# -------------------------------------------------------------------
# Path to an external consumer properties file (optional)
kafka-manager.consumer.properties.file=${?CONSUMER_PROPERTIES_FILE}
```
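The configuration above contains a hard-coded secret and password, but it also lets APPLICATION_SECRET, KAFKA_MANAGER_USERNAME and KAFKA_MANAGER_PASSWORD override them. A minimal sketch of supplying those values from the environment instead, assuming /dev/urandom and the usual coreutils are available; gen_secret is a hypothetical helper name and the password shown is a placeholder:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print LEN random alphanumeric characters (default 64).
gen_secret() {
    local len="${1:-64}"
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$len"
    echo
}

# Example: export the overrides in the shell that later runs start.sh
# (not executed here).
#   export APPLICATION_SECRET="$(gen_secret 64)"
#   export KAFKA_MANAGER_USERNAME="admin"
#   export KAFKA_MANAGER_PASSWORD="change-me"   # placeholder value
```

Generating the secret once and keeping it in a file readable only by the service account is probably preferable to regenerating it on every start, since sessions issued under the old secret stop being valid when it changes.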

```bash
vim /usr/local/cmak/cmak-3.0.0.6/conf/logback.xml
```

```xml
<!-- Modified configuration (note the regex filtering in the encoder sections) -->
<configuration>

    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${application.home}/logs/application.log</file>
        <encoder>
            <!-- Mask sensitive information -->
            <pattern>%date - [%level] - from %logger in %thread %n%replace(%message){'SASL JAAS config=.*', 'SASL JAAS config=******'}%n%xException%n</pattern>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${application.home}/logs/application.%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>5</maxHistory>
            <totalSizeCap>5GB</totalSizeCap>
        </rollingPolicy>
    </appender>

    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <!-- Mask sensitive information -->
            <pattern>%date - [%level] %logger{15} - %replace(%message){'SASL JAAS config=.*', 'SASL JAAS config=******'}%n%xException{10}</pattern>
        </encoder>
    </appender>

    <!-- Everything below is unchanged -->
    <appender name="ASYNCFILE" class="ch.qos.logback.classic.AsyncAppender">
        <appender-ref ref="FILE" />
    </appender>

    <appender name="ASYNCSTDOUT" class="ch.qos.logback.classic.AsyncAppender">
        <appender-ref ref="STDOUT" />
    </appender>

    <logger name="play" level="INFO" />
    <logger name="application" level="INFO" />
    <logger name="kafka.manager" level="INFO" />

    <!-- Off these ones as they are annoying, and anyway we manage configuration ourself -->
    <logger name="com.avaje.ebean.config.PropertyMapLoader" level="OFF" />
    <logger name="com.avaje.ebeaninternal.server.core.XmlConfigLoader" level="OFF" />
    <logger name="com.avaje.ebeaninternal.server.lib.BackgroundThread" level="OFF" />
    <logger name="com.gargoylesoftware.htmlunit.javascript" level="OFF" />
    <logger name="org.apache.zookeeper" level="INFO"/>

    <root level="WARN">
        <appender-ref ref="ASYNCFILE" />
        <appender-ref ref="ASYNCSTDOUT" />
    </root>

</configuration>
```

4.3 Write the start and stop scripts

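```bash
vim /usr/local/cmak/cmak-3.0.0.6/start.sh
```

```bash
#!/bin/bash
# Start CMAK in the background on JDK 11.

JDK11_PATH="/usr/local/cmak/jdk-11.0.26"
CMAK_PATH="/usr/local/cmak/cmak-3.0.0.6"
CMAK_PORT="3283"

# Console output is discarded; logback.xml writes the application log under logs/
"$CMAK_PATH/bin/cmak" \
    -Dconfig.file="$CMAK_PATH/conf/application.conf" \
    -java-home "$JDK11_PATH" \
    -Dhttp.port="$CMAK_PORT" > /dev/null 2>&1 &
```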

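```bash
vim /usr/local/cmak/cmak-3.0.0.6/stop.sh
```

```bash
#!/bin/bash
# Stop CMAK and remove the leftover PID file.

CMAK_PATH="/usr/local/cmak/cmak-3.0.0.6"

# Force-kill every java process whose command line mentions cmak-3.0.0.6
ps aux | grep 'java' | grep 'cmak-3.0.0.6' | awk '{print $2}' | xargs -r kill -9

# A leftover RUNNING_PID file prevents the next start, so remove it
[[ -f "$CMAK_PATH/RUNNING_PID" ]] && rm -f "$CMAK_PATH/RUNNING_PID"
```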
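The stop script above goes straight to SIGKILL. A gentler variant is sketched below, under the assumption that the RUNNING_PID file holds the process ID of the CMAK JVM: it sends SIGTERM first and escalates to SIGKILL only if the process is still alive after a grace period. stop_by_pidfile is a hypothetical helper name, not something CMAK ships.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop the process whose PID is stored in PIDFILE.
# Sends SIGTERM, waits up to TIMEOUT seconds, then falls back to SIGKILL.
stop_by_pidfile() {
    local pidfile="$1" timeout="${2:-10}" pid i
    [ -f "$pidfile" ] || return 0              # no PID file, nothing to stop
    pid="$(cat "$pidfile")"
    if [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null; then
        kill -TERM "$pid" 2>/dev/null
        i=0
        while kill -0 "$pid" 2>/dev/null && [ "$i" -lt "$timeout" ]; do
            sleep 1
            i=$((i + 1))
        done
        # Still alive after the grace period: force it.
        kill -0 "$pid" 2>/dev/null && kill -KILL "$pid" 2>/dev/null
    fi
    rm -f "$pidfile"
    return 0
}

# Usage (not executed here):
#   stop_by_pidfile /usr/local/cmak/cmak-3.0.0.6/RUNNING_PID 15
```

Going through the PID file also avoids matching unrelated java processes that happen to mention cmak-3.0.0.6 on their command line.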

bash

chmod +x /usr/local/cmak/cmak-3.0.0.6/{start.sh,stop.sh}4.4、启动

bash

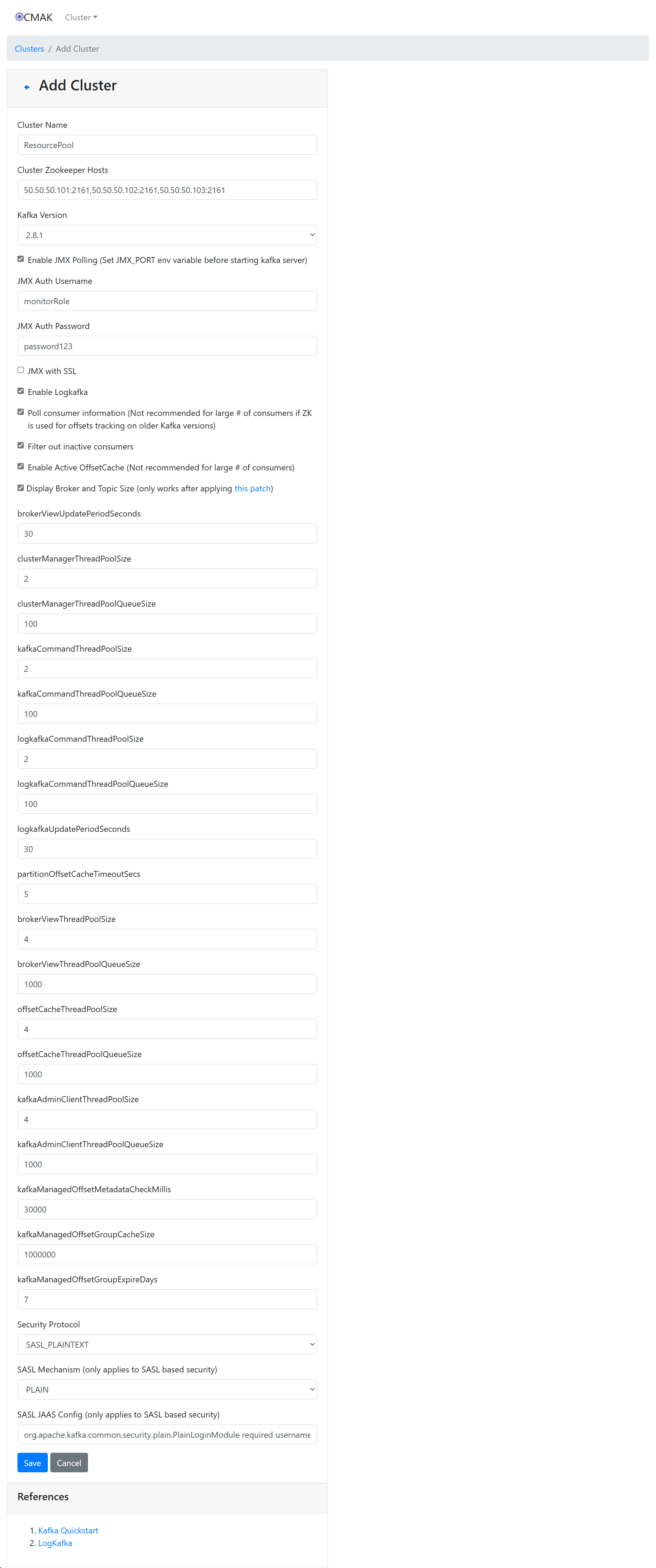

sh /usr/local/cmak/cmak-3.0.0.6/start.sh五、添加集群监控
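Before adding a cluster it may be worth confirming that CMAK is actually answering. The configuration in 4.2 exempts /api/health from basic authentication, so a plain HTTP GET should be enough. The sketch below assumes curl is installed and uses port 3283 from start.sh; cmak_health_url and wait_for_cmak are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for polling the CMAK health endpoint.

# Build the health-check URL for HOST and PORT.
cmak_health_url() {
    printf 'http://%s:%s/api/health\n' "$1" "$2"
}

# Poll HOST PORT until the endpoint answers successfully or ATTEMPTS
# (default 30, one per second) are used up.
wait_for_cmak() {
    local url attempts="${3:-30}" i=0
    url="$(cmak_health_url "$1" "$2")"
    while [ "$i" -lt "$attempts" ]; do
        # -f: treat HTTP errors as failure, -s: silent, --max-time: per-request limit
        if curl -fs --max-time 2 -o /dev/null "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}

# Usage (not executed here):
#   wait_for_cmak 10.45.19.20 3283 60 && echo "CMAK is up"
```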

- Note: the address to enter is that of the ZooKeeper ensemble:

  10.73.251.66:2261,10.73.251.67:2261,10.73.251.68:2261

- Format of the authentication configuration:

  org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="2332***JA";

6. Enable JMX on Kafka

6.1 Add the authentication files

```bash
vim /usr/local/kafka_2.13-2.8.2/config/jmxremote.access
```

```text
monitorRole readonly
controlRole readwrite
```

```bash
vim /usr/local/kafka_2.13-2.8.2/config/jmxremote.password
```

```text
monitorRole password123
controlRole password456
```

```bash
chmod 600 /usr/local/kafka_2.13-2.8.2/config/jmxremote.password
```

6.2 Configuration files explained

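6.2.1 The jmxremote.access file

The jmxremote.access file defines which roles may connect to JMX and the access level each role has.

Format:

    <role> <permission>

- role: the role name (for example monitorRole or controlRole).
- permission: the permission granted to the role, one of:
  - readonly: read-only access; monitoring data can be viewed, but no operations can be performed.
  - readwrite: read-write access; monitoring data can be viewed and management operations can be performed.

Example:

    monitorRole readonly
    controlRole readwrite

In this example:

- monitorRole has read-only access: a user can connect to JMX for monitoring, but cannot modify or control anything.
- controlRole has read-write access: a user can monitor and can also modify or control the application's behaviour (for example, restart a service).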

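6.2.2 The jmxremote.password file

The jmxremote.password file stores the password of each role. It works together with jmxremote.access so that only users with the correct password can connect to the JMX service.

Format:

    <role> <password>

- role: must match a role in jmxremote.access.
- password: the password for that role.

Example:

    monitorRole password123
    controlRole password456

In this example:

- The password of monitorRole is password123.
- The password of controlRole is password456.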

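6.2.3 How the two files relate

Together, the two files give JMX role-based access control and authentication:

- jmxremote.access defines authorization: what each role is allowed to do.
- jmxremote.password defines authentication: which roles may connect, and the password used to verify each one.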
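Because a role needs an entry in both files to be usable, a quick consistency check can catch mismatches before the broker is restarted. The sketch below assumes the whitespace-separated two-column format described in 6.2.1 and 6.2.2 and GNU stat (as found on CentOS). It lists roles that have a password but no access entry, and checks that the password file is restricted to its owner, which the JVM is expected to insist on. jmx_roles_missing_access and jmx_password_mode_ok are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for sanity-checking the two JMX files.

# Print roles that appear in PASSWORD_FILE but not in ACCESS_FILE.
# Blank lines and lines starting with '#' are ignored.
jmx_roles_missing_access() {
    local access_file="$1" password_file="$2"
    awk '
        /^[[:space:]]*(#|$)/   { next }                   # skip comments and blanks
        FILENAME == ARGV[1]    { access[$1] = 1; next }   # first file: remember roles
        !($1 in access)        { print $1 }               # second file: report unknown roles
    ' "$access_file" "$password_file"
}

# Succeed only if FILE has mode 600 or 400 (owner-only access).
jmx_password_mode_ok() {
    local mode
    mode="$(stat -c '%a' "$1")" || return 1   # GNU stat syntax
    [ "$mode" = "600" ] || [ "$mode" = "400" ]
}

# Usage (not executed here):
#   cfg=/usr/local/kafka_2.13-2.8.2/config
#   jmx_roles_missing_access "$cfg/jmxremote.access" "$cfg/jmxremote.password"
#   jmx_password_mode_ok "$cfg/jmxremote.password" || echo "fix permissions"
```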

6.3 Modify the startup script

```bash
vim /usr/local/kafka_2.13-2.8.2/kafka_start.sh
```

Original script:

```bash
#!/bin/bash
kafka_path="/usr/local/kafka_2.13-2.8.2"
export KAFKA_OPTS=" -Djava.security.auth.login.config=$kafka_path/config/kafka_server_jaas.conf "
$kafka_path/bin/kafka-server-start.sh $kafka_path/config/server.properties > $kafka_path/kafka_nohup.out &
```

Change it to:

- Note: the spaces before and after the options inside the environment variables are required.

```bash
#!/bin/bash
kafka_path="/usr/local/kafka_2.13-2.8.2"

# JMX port and authentication settings.
# java.rmi.server.hostname is the address handed back to JMX clients; a remote
# client such as CMAK generally needs an address it can reach, not 127.0.0.1.
export JMX_PORT=9999
export KAFKA_JMX_OPTS=" -Dcom.sun.management.jmxremote \
-Dcom.sun.management.jmxremote.port=$JMX_PORT \
-Dcom.sun.management.jmxremote.rmi.port=$JMX_PORT \
-Dcom.sun.management.jmxremote.authenticate=true \
-Dcom.sun.management.jmxremote.ssl=false \
-Djava.rmi.server.hostname=127.0.0.1 \
-Dcom.sun.management.jmxremote.access.file=$kafka_path/config/jmxremote.access \
-Dcom.sun.management.jmxremote.password.file=$kafka_path/config/jmxremote.password "
export KAFKA_OPTS=" $KAFKA_JMX_OPTS -Djava.security.auth.login.config=$kafka_path/config/kafka_server_jaas.conf "

# Start Kafka and append its output to the log file
$kafka_path/bin/kafka-server-start.sh $kafka_path/config/server.properties >> $kafka_path/kafka_nohup.out &
```

6.4 Restart Kafka

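Stop the running broker by killing the java process that listens on port 9002:

```bash
netstat -lntpu | grep java | grep 9002 | awk -F '[ /]+' '{print $7}' | xargs -r kill -9
```

Start the broker again with the kafka_start.sh script from 6.3, then confirm that the JMX port is listening:

```bash
netstat -lntpu | grep java | grep 9999
```

7. Enable JMX in cluster monitoring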
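Before switching on JMX for the cluster in CMAK, it can save time to confirm from the monitoring host that the broker's JMX port is reachable at all. The sketch below only tests whether a TCP connection can be opened. It assumes bash (for /dev/tcp) and the coreutils timeout command, and port_open is a hypothetical helper name. A successful connect does not prove that the RMI handshake or the role and password check will succeed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to HOST PORT can be opened
# within TIMEOUT seconds (default 3).
port_open() {
    # Inside the inner bash, $0 is HOST and $1 is PORT.
    timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Usage on the monitoring host (not executed here):
#   port_open 50.50.50.101 9999 && echo "JMX port reachable"
```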