引言

Intel RealSense系列相机因其出色的深度感知能力和灵活的配置选项,在机器视觉与应用中得到广泛应用。大家在后期的slam学习中,无论是对算法本身的性能要求还是实验的泛化性都有一定的要求,那么公开的数据集如kitti、tum、Eourc不能满足实验的需求,并且理论验证都需要场景建图。本文将详细介绍如何通过修改ROS启动文件参数,将RealSense相机从单目配置调整为双目配置,并分析关键参数的设置原理。

前置条件

提前安装Ros与RealSense相关ROS的SDK包。这里有一个个人觉得比较完整的博客推荐,可以完成D435i驱动和ROS包安装。后续的相机标定都会用到:D435i标定摄像头和IMU笔记三(IMU标定篇)_d435i标定摄像头和imu笔记三(imu标定篇)-CSDN博客

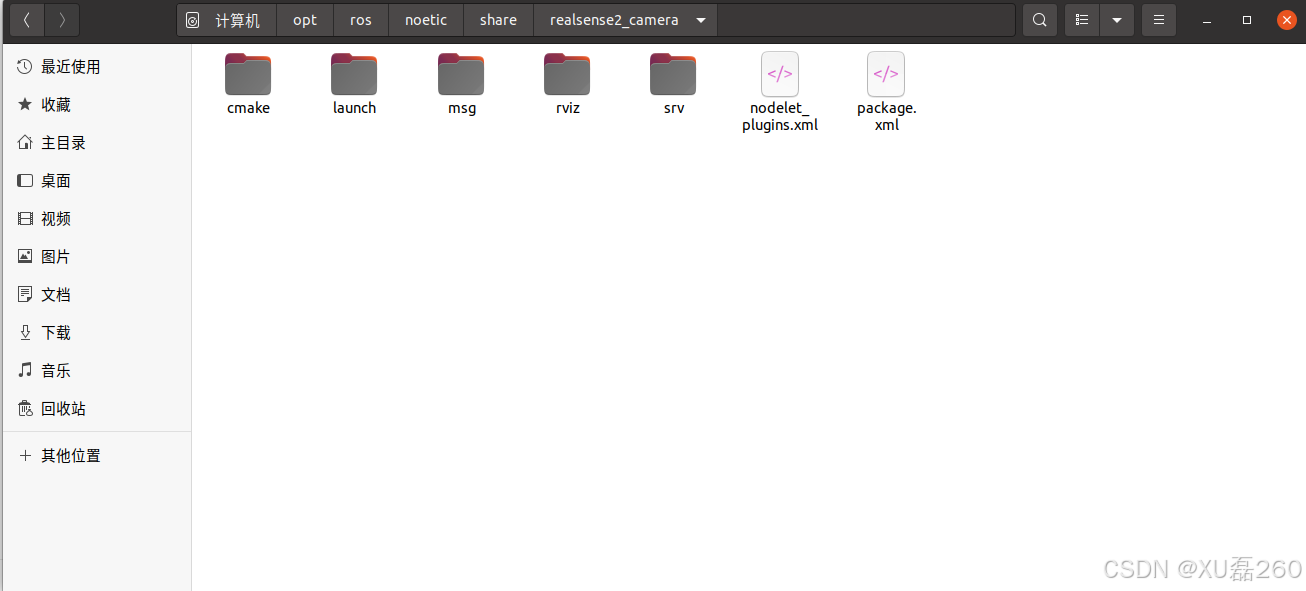

定位相机驱动的ros包

这里我装的是noetic版本,即ros1。如果ros2版本后面会出现标定工具kablri安装问题,很难解决。

定位到launch文件夹,找到相机启动的rs_camera.launch文件。

这里可以新建一个副本,命名为rs_stereo_mode.launch

解读launch文件

RealSense相机(如D435i)本质上是一个双目立体相机系统,但在默认单目配置下,系统可能只启用一个红外摄像头或彩色摄像头。要实现双目视觉,我们需要同时启用两个红外摄像头(infra1和infra2)。

关键参数对比

| 参数 | 单目典型配置 | 双目典型配置 |

|---|---|---|

enable_infra1 |

false | true |

enable_infra2 |

false | true |

enable_infra |

false | true |

infra_width |

640 | 1280 |

infra_height |

480 | 720 |

infra_fps |

30 | 15 |

enable_color |

true | true/false |

infra_rgb |

true | false |

启用双目红外摄像头

bash

<arg name="enable_infra" default="true"/>

<arg name="enable_infra1" default="true"/>

<arg name="enable_infra2" default="true"/>这三个参数确保了两个红外摄像头都被启用,这是双目视觉的基础。

同步设置

bash

<arg name="enable_sync" default="true"/>启用同步确保两个摄像头的帧时间对齐,这对立体视觉至关重要。

bash

<launch>

<arg name="serial_no" default=""/>

<arg name="usb_port_id" default=""/>

<arg name="device_type" default=""/>

<arg name="json_file_path" default=""/>

<arg name="camera" default="camera"/>

<arg name="tf_prefix" default="$(arg camera)"/>

<arg name="external_manager" default="false"/>

<arg name="manager" default="realsense2_camera_manager"/>

<arg name="output" default="screen"/>

<arg name="fisheye_width" default="1280"/>

<arg name="fisheye_height" default="720"/>

<arg name="enable_fisheye" default="false"/>

<arg name="depth_width" default="1280"/>

<arg name="depth_height" default="720"/>

<arg name="enable_depth" default="true"/>

<arg name="confidence_width" default="1280"/>

<arg name="confidence_height" default="720"/>

<arg name="enable_confidence" default="true"/>

<arg name="confidence_fps" default="15"/>

<arg name="infra_width" default="1280"/>

<arg name="infra_height" default="720"/>

<arg name="enable_infra" default="true"/>

<arg name="enable_infra1" default="true"/>

<arg name="enable_infra2" default="true"/>

<arg name="infra_rgb" default="false"/>

<arg name="color_width" default="1280"/>

<arg name="color_height" default="720"/>

<arg name="enable_color" default="true"/>

<arg name="fisheye_fps" default="15"/>

<arg name="depth_fps" default="15"/>

<arg name="infra_fps" default="15"/>

<arg name="color_fps" default="15"/>

<arg name="gyro_fps" default="200"/>

<arg name="accel_fps" default="250"/>

<arg name="enable_gyro" default="true"/>

<arg name="enable_accel" default="true"/>

<arg name="enable_pointcloud" default="false"/>

<arg name="pointcloud_texture_stream" default="RS2_STREAM_COLOR"/>

<arg name="pointcloud_texture_index" default="0"/>

<arg name="enable_sync" default="true"/>

<arg name="align_depth" default="false"/>

<arg name="publish_tf" default="true"/>

<arg name="tf_publish_rate" default="0"/>

<arg name="filters" default=""/>

<arg name="clip_distance" default="-2"/>

<arg name="linear_accel_cov" default="0.01"/>

<arg name="initial_reset" default="false"/>

<arg name="unite_imu_method" default="copy"/>

<arg name="topic_odom_in" default="odom_in"/>

<arg name="calib_odom_file" default=""/>

<arg name="publish_odom_tf" default="true"/>

<arg name="allow_no_texture_points" default="false"/>

<arg name="emitter_enable" default="false"/>

<!-- rosparam set /camera/stereo_module/emitter_enabled false -->

<rosparam>

/camera/stereo_module/emitter_enabled: 0

</rosparam>

<rosparam if="$(arg emitter_enable)">

/camera/stereo_module/emitter_enabled: 1

</rosparam>

<group ns="$(arg camera)">

<include file="$(find realsense2_camera)/launch/includes/nodelet.launch.xml">

<arg name="tf_prefix" value="$(arg tf_prefix)"/>

<arg name="external_manager" value="$(arg external_manager)"/>

<arg name="manager" value="$(arg manager)"/>

<arg name="output" value="$(arg output)"/>

<arg name="serial_no" value="$(arg serial_no)"/>

<arg name="usb_port_id" value="$(arg usb_port_id)"/>

<arg name="device_type" value="$(arg device_type)"/>

<arg name="json_file_path" value="$(arg json_file_path)"/>

<arg name="enable_pointcloud" value="$(arg enable_pointcloud)"/>

<arg name="pointcloud_texture_stream" value="$(arg pointcloud_texture_stream)"/>

<arg name="pointcloud_texture_index" value="$(arg pointcloud_texture_index)"/>

<arg name="enable_sync" value="$(arg enable_sync)"/>

<arg name="align_depth" value="$(arg align_depth)"/>

<arg name="fisheye_width" value="$(arg fisheye_width)"/>

<arg name="fisheye_height" value="$(arg fisheye_height)"/>

<arg name="enable_fisheye" value="$(arg enable_fisheye)"/>

<arg name="depth_width" value="$(arg depth_width)"/>

<arg name="depth_height" value="$(arg depth_height)"/>

<arg name="enable_depth" value="$(arg enable_depth)"/>

<arg name="confidence_width" value="$(arg confidence_width)"/>

<arg name="confidence_height" value="$(arg confidence_height)"/>

<arg name="enable_confidence" value="$(arg enable_confidence)"/>

<arg name="confidence_fps" value="$(arg confidence_fps)"/>

<arg name="color_width" value="$(arg color_width)"/>

<arg name="color_height" value="$(arg color_height)"/>

<arg name="enable_color" value="$(arg enable_color)"/>

<arg name="infra_width" value="$(arg infra_width)"/>

<arg name="infra_height" value="$(arg infra_height)"/>

<arg name="enable_infra" value="$(arg enable_infra)"/>

<arg name="enable_infra1" value="$(arg enable_infra1)"/>

<arg name="enable_infra2" value="$(arg enable_infra2)"/>

<arg name="infra_rgb" value="$(arg infra_rgb)"/>

<arg name="fisheye_fps" value="$(arg fisheye_fps)"/>

<arg name="depth_fps" value="$(arg depth_fps)"/>

<arg name="infra_fps" value="$(arg infra_fps)"/>

<arg name="color_fps" value="$(arg color_fps)"/>

<arg name="gyro_fps" value="$(arg gyro_fps)"/>

<arg name="accel_fps" value="$(arg accel_fps)"/>

<arg name="enable_gyro" value="$(arg enable_gyro)"/>

<arg name="enable_accel" value="$(arg enable_accel)"/>

<arg name="publish_tf" value="$(arg publish_tf)"/>

<arg name="tf_publish_rate" value="$(arg tf_publish_rate)"/>

<arg name="filters" value="$(arg filters)"/>

<arg name="clip_distance" value="$(arg clip_distance)"/>

<arg name="linear_accel_cov" value="$(arg linear_accel_cov)"/>

<arg name="initial_reset" value="$(arg initial_reset)"/>

<arg name="unite_imu_method" value="$(arg unite_imu_method)"/>

<arg name="topic_odom_in" value="$(arg topic_odom_in)"/>

<arg name="calib_odom_file" value="$(arg calib_odom_file)"/>

<arg name="publish_odom_tf" value="$(arg publish_odom_tf)"/>

<arg name="allow_no_texture_points" value="$(arg allow_no_texture_points)"/>

</include>

</group>

</launch>启动相机测试

bash

roslaunch realsense2_camera rs_stereo_mode.launch(注意这里是我们创建副本的那个launch文件)

rostopic list 查看话题是否存在,即infra1和infra2:

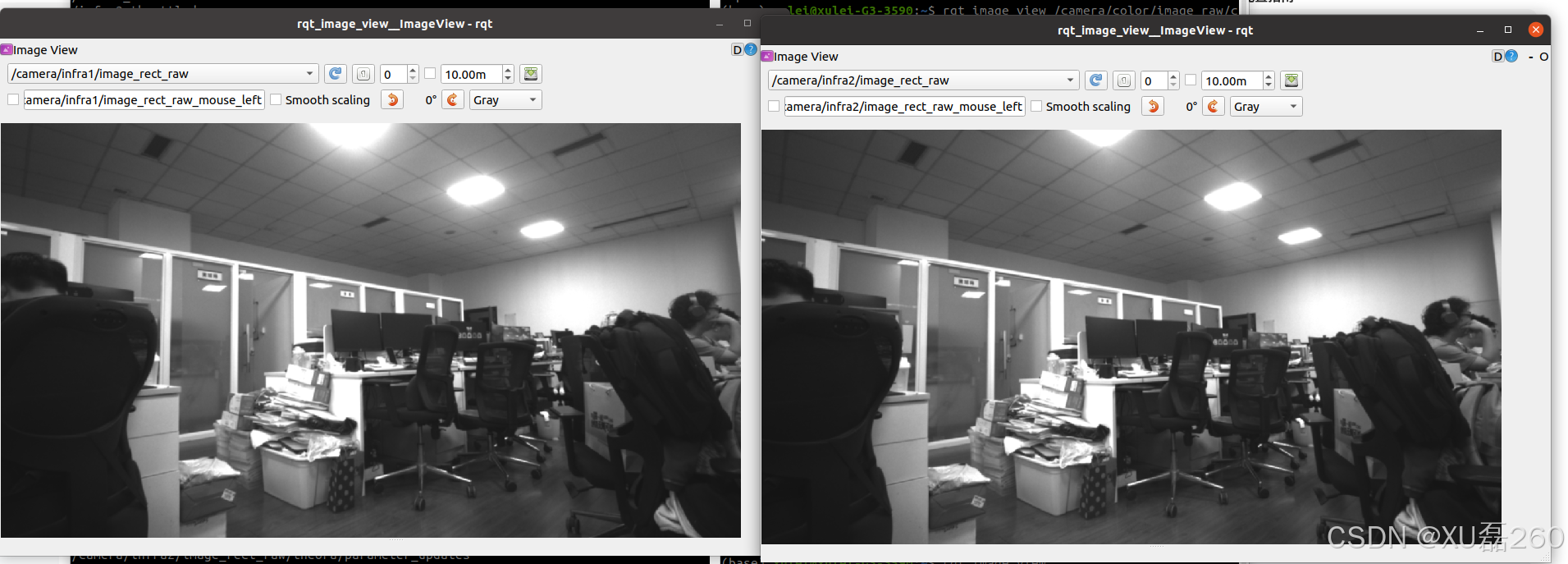

rqt_image_view查看左右图像:

确认话题并录制rosbag:

话题重新确定发布频率,

bash

rosrun topic_tools throttle messages /camera/infra1/image_rect_raw 30 /infra1_throttled

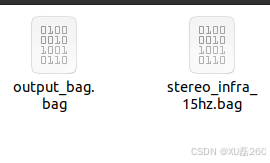

rosrun topic_tools throttle messages /camera/infra2/image_rect_raw 15 infra2_throttled录制bag:

bash

rosbag record /infra1_throttled /infra2_throttled -O stereo_infra_15hz

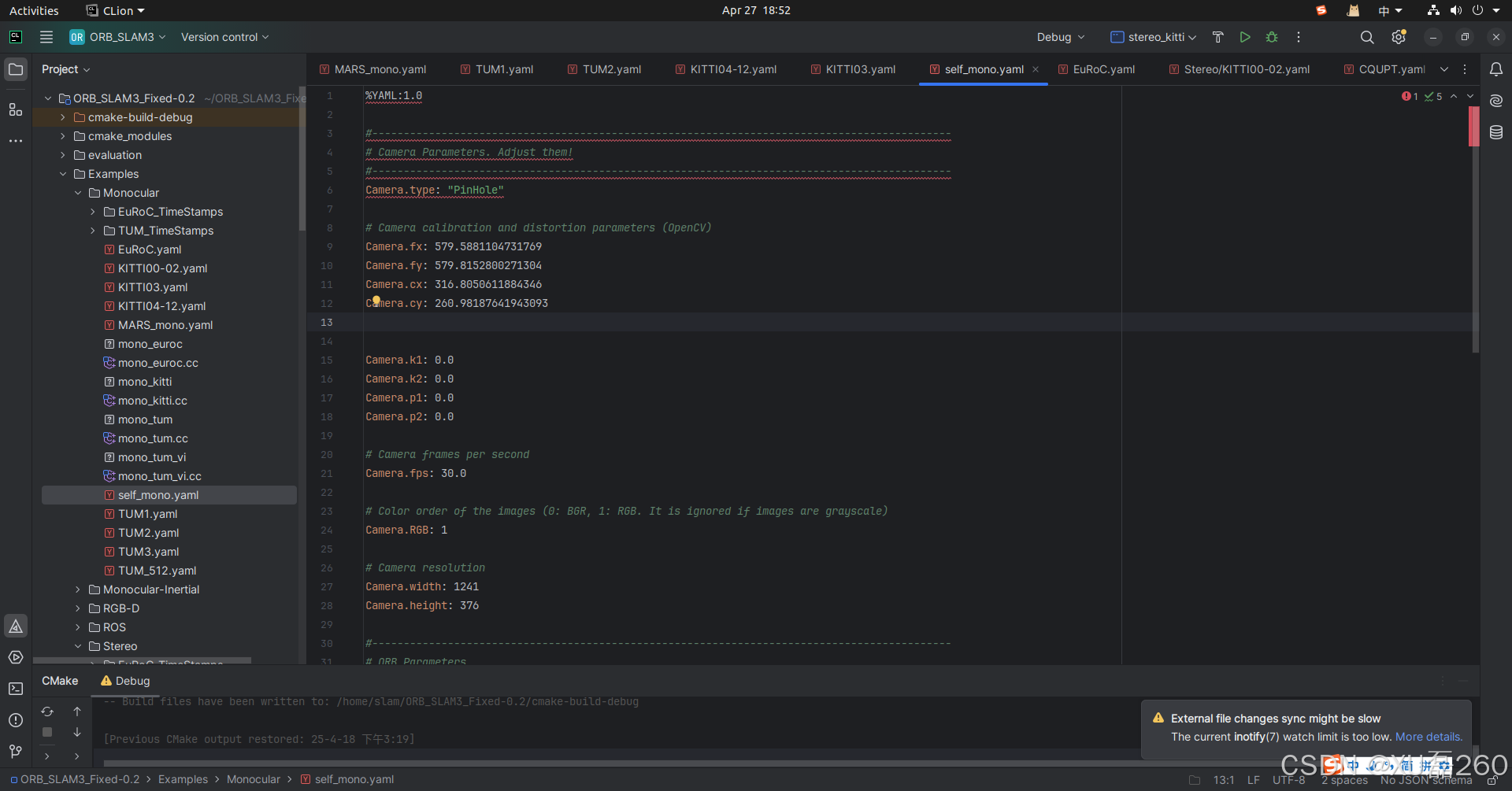

确定ORB-SLAM3的系统及相机配置文件.yaml:

这里我把配置文件按照原来系统的分类放在Example/stereo文件下

这里只改相机内参部分与左右相机基线baseline长度,便于后续的三角化(这里我的参数我没有事先标定,可能不准确)。

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

Camera.type: "PinHole"

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 579.5881104731769

Camera.fy: 579.8152800271304

Camera.cx: 316.8050611884346

Camera.cy: 260.98187641943093

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

# Camera frames per second

Camera.fps: 30.0

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Camera resolution

Camera.width: 1241

Camera.height: 376

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 2000

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.1

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 1

Viewer.PointSize:2

Viewer.CameraSize: 0.15

Viewer.CameraLineWidth: 2

Viewer.ViewpointX: 0

Viewer.ViewpointY: -10

Viewer.ViewpointZ: -0.1

Viewer.ViewpointF: 2000查看系统需要订阅的话题,在Example/ROS/orb-slam3/src的源码文件下:

一般都是:

cpp

message_filters::Subscriber<sensor_msgs::Image> left_sub(nh, "/camera/left/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> right_sub(nh, "/camera/right/image_raw", 1);运行命令行

bash

纯双目:

运行ORB-SLAM3系统,启动后会等待后续话题发布,注意话题名需要与源码文件一致。

rosrun ORB_SLAM3 Stereo Vocabulary/ORBvoc.txt Examples/Stereo/EuRoC.yaml false

发布话题

rosbag:rosbag play stereo_infra_15hz.bag /infra1_throttled:=/camera/left/image_raw /infra2_throttled:=/camera/right/image_raw参数行

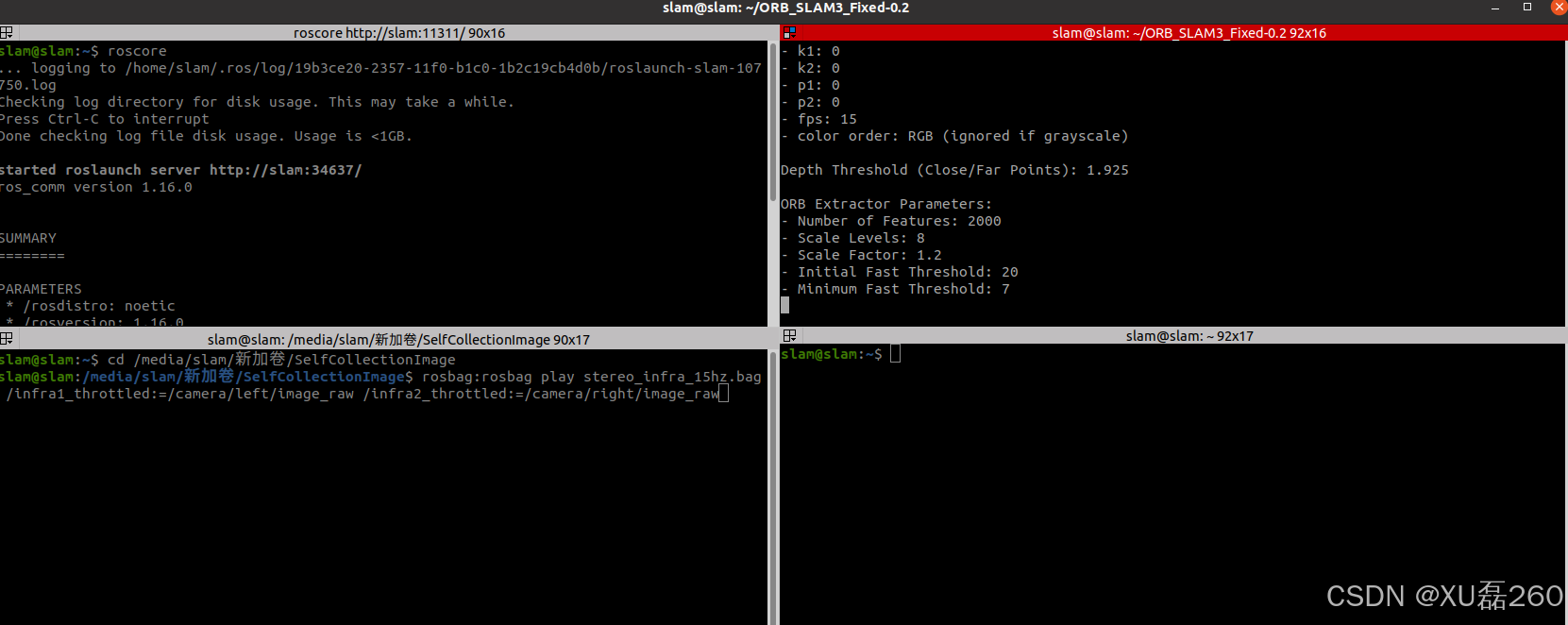

等待话题发布,先运行运行ORB-SLAM3系统,

运行实时效果:

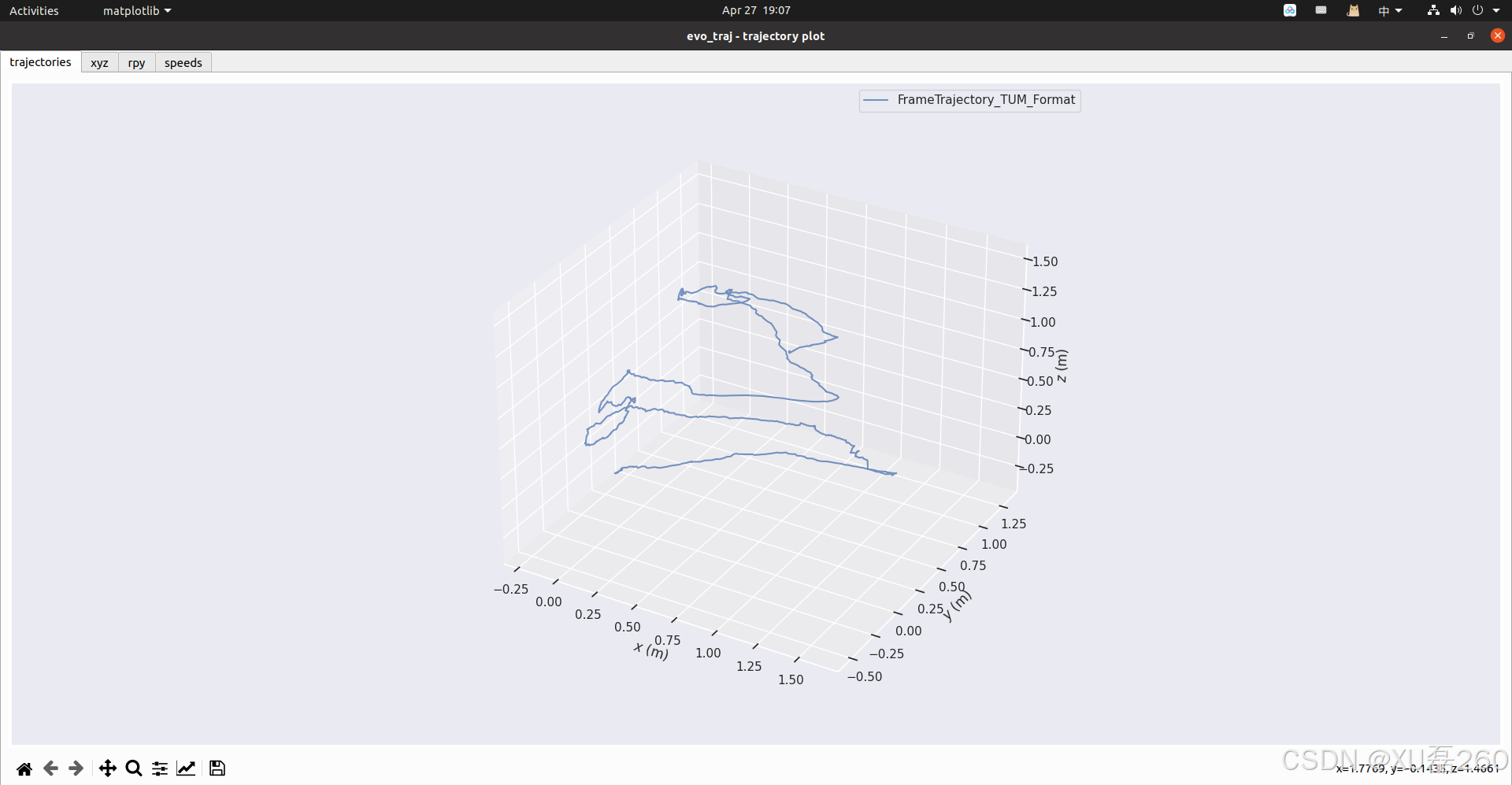

评估结果

vo_traj tum FrameTrajectory_TUM_Format.txt -p

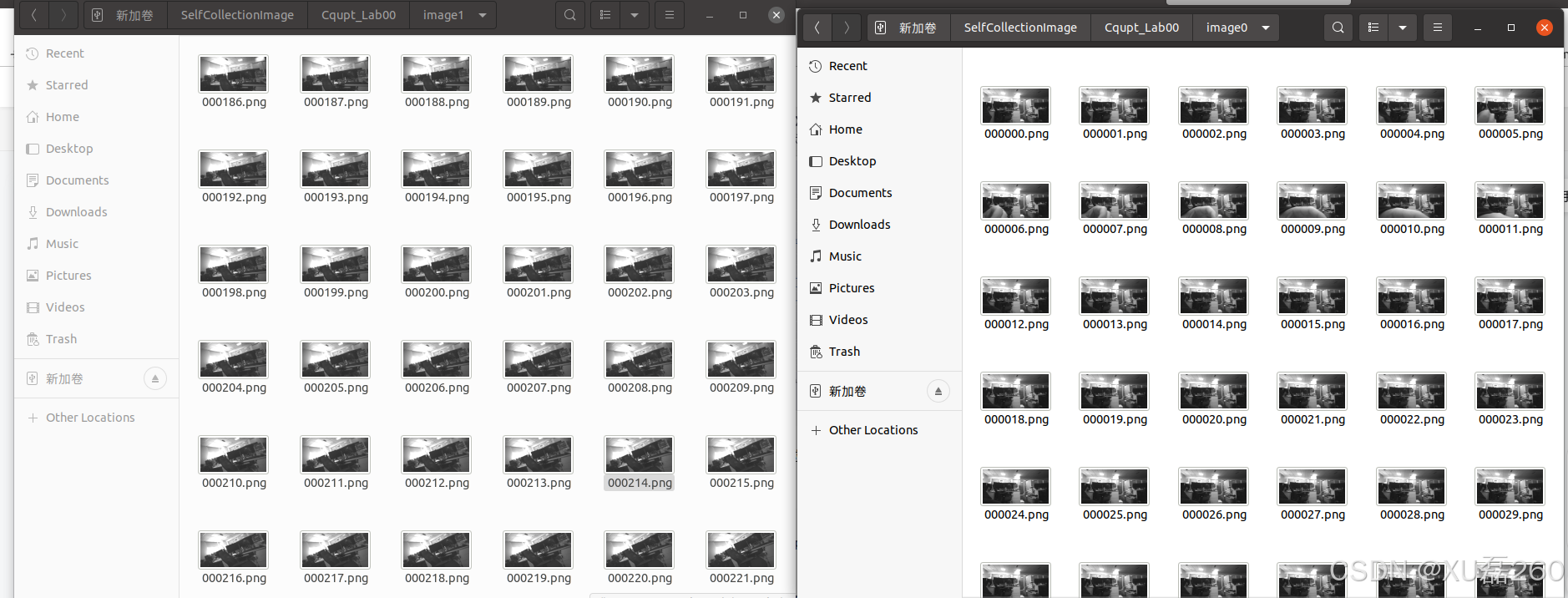

这里也有可以将rosbag转为图片格式,ros有相应的工具进行转化:

bash

rosbag play 包名.bag

左边:

rosrun image_view extract_images _sec_per_frame:=0.0 image:=/infra1_throttled _filename_format:="/media/slam/新加卷/SelfCollectionImage/Cqupt_Lab00/image0/%06d.png"

右目:

rosrun image_view extract_images _sec_per_frame:=0.0 image:=/infra2_throttled _filename_format:="/media/slam/新加卷/SelfCollectionImage/Cqupt_Lab00/image1/%06d.png"

总结

在数据集的录制与系统调用时,需要确定好话题与相机的内参,前者决定数据能否正确的接收,后者是建图精度的保证,这些都需要前期准确的相机标定。