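This project uses the Kolla-Ansible deployment tool to deploy an OpenStack cluster on two nodes. The detailed steps show how a highly available private cloud platform is built and offer a practical technical reference for enterprise private cloud construction.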

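OpenStack Platform Deployment

Prerequisites

The OpenStack cloud platform is deployed on two nodes. The hostname and IP address plan for each node is shown in the table below.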

| Node type | Hostname | Internal management IP | Instance traffic IP |
|------|------------|----------------|----------------|
| Control node | controller | 192.168.200.10 | 192.168.100.10 |
| Compute node | compute01 | 192.168.200.20 | 192.168.100.20 |

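On the physical host, create two virtual machines running openEuler 22.09 to serve as the OpenStack control node and compute node. In the processor settings, tick "Virtualize Intel VT-x/EPT or AMD-V/RVI". The control node has 4 vCPUs, 10 GB of memory, and a 100 GB system disk; the compute node has 2 vCPUs, 4 GB of memory, a 100 GB system disk, and four additional 20 GB disks. Each virtual machine needs two network interfaces. Interface 1 is the internal network: its adapter uses host-only mode and carries node communication and management traffic. Interface 2 is the external network: its adapter uses NAT mode and mainly serves as the data network. Instances created after the cluster is deployed use the interface 2 adapter.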
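Whether the virtualization option actually reached the guest can be checked from inside the VM. The following is a minimal sketch, not part of the original procedure: the helper name `has_virt_flags` is hypothetical, and it only looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flags in a cpuinfo-style file.

```shell
#!/bin/bash
# Succeed if the given cpuinfo file advertises hardware virtualization
# (vmx = Intel VT-x, svm = AMD-V). Defaults to /proc/cpuinfo.
# has_virt_flags is a hypothetical helper name used for illustration.
has_virt_flags() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    grep -Eqw 'vmx|svm' "$cpuinfo"
}

if has_virt_flags; then
    echo "hardware virtualization is exposed to this VM"
else
    echo "vmx/svm flag not found: check the processor settings of the VM"
fi
```

If the flag is missing on the compute node, KVM acceleration would not be available to Nova there.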

1. System configuration

Set the hostnames

bash

[root@localhost ~]# hostnamectl set-hostname controller && bash

[root@localhost ~]# hostnamectl set-hostname compute01 && bash

Configure the yum repositories

bash

[root@controller ~]# rm -rf /etc/yum.repos.d/openEuler.repo

[root@controller ~]# vi /etc/yum.repos.d/euler.repo

# Generic-repos is licensed under the Mulan PSL v2.

# For details: http://license.coscl.org.cn/MulanPSL2

[OS]

name=OS

baseurl=https://archives.openeuler.openatom.cn/openEuler-22.09/OS/$basearch/

enabled=1

gpgcheck=1

gpgkey=https://archives.openeuler.openatom.cn/openEuler-22.09/OS/$basearch/RPM-GPG-KEY-openEuler

[everything]

name=everything

baseurl=https://archives.openeuler.openatom.cn/openEuler-22.09/everything/$basearch/

enabled=1

gpgcheck=1

gpgkey=https://archives.openeuler.openatom.cn/openEuler-22.09/everything/$basearch/RPM-GPG-KEY-openEuler

[EPOL]

name=EPOL

baseurl=https://archives.openeuler.openatom.cn/openEuler-22.09/EPOL/main/$basearch/

enabled=1

gpgcheck=1

gpgkey=https://archives.openeuler.openatom.cn/openEuler-22.09/OS/$basearch/RPM-GPG-KEY-openEuler

[debuginfo]

name=debuginfo

baseurl=https://archives.openeuler.openatom.cn/openEuler-22.09/debuginfo/$basearch/

enabled=0

gpgcheck=1

gpgkey=https://archives.openeuler.openatom.cn/openEuler-22.09/OS/$basearch/RPM-GPG-KEY-openEuler

[source]

name=source

baseurl=https://archives.openeuler.openatom.cn/openEuler-22.09/source/

enabled=0

gpgcheck=1

gpgkey=https://archives.openeuler.openatom.cn/openEuler-22.09/OS/$basearch/RPM-GPG-KEY-openEuler

[update]

name=update

baseurl=https://archives.openeuler.openatom.cn/openEuler-22.09/update/$basearch/

enabled=1

gpgcheck=1

gpgkey=https://archives.openeuler.openatom.cn/openEuler-22.09/OS/$basearch/RPM-GPG-KEY-openEuler

Update the system packages on all nodes to pick up the latest features and bug fixes.

bash

[root@controller ~]# dnf -y update && dnf -y upgrade

[root@compute01 ~]# dnf -y update && dnf -y upgrade

Network interface configuration (compute01 is configured the same way):

bash

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

------

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.200.10

PREFIX=24

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens34

------

DEVICE=ens34

ONBOOT=yes

IPADDR=192.168.100.10

PREFIX=24

GATEWAY=192.168.100.2

DNS1=114.114.114.114

DNS2=8.8.8.8

DNS3=202.96.64.68

2. Install Ansible and Kolla-Ansible

Install pip and configure a mirror to speed up downloads

bash

[root@controller ~]# dnf -y install python3-pip

[root@controller ~]# mkdir .pip

[root@controller ~]# cat << EOF > .pip/pip.conf

[global]

index-url = https://pypi.tuna.tsinghua.edu.cn/simple

[install]

trusted-host=pypi.tuna.tsinghua.edu.cn

EOF

Upgrade the Python tooling

bash

[root@controller ~]# pip3 install --ignore-installed --upgrade pip 安装ansible

bash

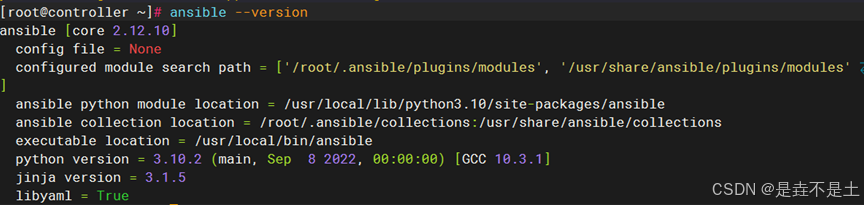

[root@controller ~]# pip3 install -U 'ansible>=4,<6'

[root@controller ~]# ansible --version
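Note that `ansible --version` reports the ansible-core version rather than the version of the `ansible` package that the `>=4,<6` constraint applies to. A small sketch for checking the package version instead is shown here; the function name `ansible_version_ok` is hypothetical, and the script assumes `pip3 show` is available on the control node.

```shell
#!/bin/bash
# Succeed if a version string such as "5.10.0" has a major version >= 4 and < 6.
# ansible_version_ok is a hypothetical helper name used for illustration.
ansible_version_ok() {
    local major="${1%%.*}"
    [[ "$major" =~ ^[0-9]+$ ]] && (( major >= 4 && major < 6 ))
}

# Read the installed version of the "ansible" package from pip metadata.
installed=$(pip3 show ansible 2>/dev/null | awk '/^Version:/ {print $2}')
if ansible_version_ok "$installed"; then
    echo "ansible ${installed} satisfies >=4,<6"
else
    echo "ansible '${installed}' is outside >=4,<6 (or not installed)"
fi
```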

Install Kolla-Ansible and the dependencies its environment requires

bash

[root@controller ~]# dnf -y install \
    git python3-devel libffi-devel gcc openssl-devel python3-libselinux

[root@controller ~]# dnf -y install openstack-kolla-ansible

[root@controller ~]# kolla-ansible --version

14.2.0

Create the Kolla-Ansible configuration directories

bash

[root@controller ~]# mkdir -p /etc/kolla/{globals.d,config}

[root@controller ~]# chown $USER:$USER /etc/kolla

Copy the inventory files to the /etc/ansible directory

bash

[root@controller ~]# mkdir /etc/ansible

[root@controller ~]# cp \
    /usr/share/kolla-ansible/ansible/inventory/* /etc/ansible

Starting with the Yoga release, Kolla-Ansible requires the Ansible Galaxy dependencies to be installed. Run the following commands to install them

bash

[root@controller ~]# pip3 install cryptography==38.0.4

# Change the branch

[root@controller ~]# vi /usr/share/kolla-ansible/requirements.yml

---
collections:
  - name: https://opendev.org/openstack/ansible-collection-kolla
    type: git
    version: master

[root@controller ~]# kolla-ansible install-deps

3. Tune the Ansible runtime environment

bash

[root@controller ~]# cat << MXD > /etc/ansible/ansible.cfg
[defaults]
# Disable SSH host key checking
host_key_checking=False
# Recommended when sudo is not used
pipelining=True
# Number of parallel forks used to run tasks
forks=100
timeout=800
# Disable warnings
devel_warning = False
deprecation_warnings=False
# Show the time spent on each task
callback_whitelist = profile_tasks
# Log Ansible output (relative path)
log_path= hzy_cloud.log
# Inventory file (relative path)
inventory = openstack_cluster
# Shell used to run commands; /bin/bash also works
executable = /bin/sh
remote_port = 22
remote_user = root
# Default output verbosity
# Valid values are 0, 1, 2, 3, 4
# Higher values produce more detailed output
verbosity = 0
show_custom_stats = True
interpreter_python = auto_legacy_silent
[colors]
# Successful tasks are shown in green
ok = green
# Skipped tasks are shown in bright gray
skip = bright gray
# Warnings are shown in bright purple
warn = bright purple
[privilege_escalation]
become_user = root
[galaxy]
display_progress = True
MXD

4. Initial Kolla-Ansible environment configuration

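Go to /etc/ansible and edit the openstack_cluster inventory file to specify the cluster hosts and the groups they belong to. The inventory can also set the user name, password, and other parameters the control node uses to connect to each node. (Note: ansible_password is the root password; the root password on any node must not consist of digits only.)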

bash

[root@controller ~]# cd /etc/ansible/

[root@controller ansible]# awk '!/^#/ && !/^$/' multinode > openstack_cluster

[root@controller ansible]# vi openstack_cluster

[all:vars]

ansible_password=Hzy20050702

ansible_become=false

[control]

192.168.200.10

[network]

192.168.200.10

[compute]

192.168.200.20

[monitoring]

192.168.200.10

[storage]

192.168.200.20

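------

The inventory above defines five groups (control, network, compute, monitoring, and storage) that assign each node its roles. The [all:vars] section defines global variables, which are mainly used to connect to the servers: ansible_password sets the login password, and ansible_become controls whether commands run through sudo. The contents of the other groups keep their defaults and need no changes.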
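To double-check which hosts ended up in each of the five groups, the inventory can be read back with a few lines of awk. This is only a sketch: `hosts_in_group` is a hypothetical helper, and it assumes the plain INI layout shown above (a `[group]` header followed by one host per line).

```shell
#!/bin/bash
# Print the hosts listed under one group of an INI-style Ansible inventory.
# Usage: hosts_in_group <inventory-file> <group-name>
# hosts_in_group is a hypothetical helper name used for illustration.
hosts_in_group() {
    local inventory="$1" group="$2"
    awk -v want="[$group]" '
        /^\[/ { in_group = ($0 == want); next }   # any section header switches state
        in_group && NF && !/^#/ { print $1 }      # host entries of the wanted group
    ' "$inventory"
}

inventory="${1:-/etc/ansible/openstack_cluster}"
if [ -r "$inventory" ]; then
    for g in control network compute monitoring storage; do
        echo "$g: $(hosts_in_group "$inventory" "$g" | tr '\n' ' ')"
    done
fi
```

Sections such as `[all:vars]` or `[nova:children]` are skipped because their headers never equal the requested `[group]` string exactly.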

Use the following commands to test connectivity to every host

bash

[root@controller ansible]# dnf -y install sshpass

[root@controller ansible]# ansible -m ping all

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

localhost | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3.10"

},

"changed": false,

"ping": "pong"

}

192.168.200.10 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3.10"

},

"changed": false,

"ping": "pong"

}

192.168.200.20 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3.10"

},

"changed": false,

"ping": "pong"

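}

When deploying OpenStack Yoga with Kolla-Ansible, the passwords of the service components are stored in /etc/kolla/passwords.yml. All passwords in this file are blank by default and must be filled in manually or by running the random password generator. Using the generator is recommended at deployment time, as follows.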

bash

[root@controller ansible]# kolla-genpwd

[root@controller ansible]# vi /etc/kolla/passwords.yml

keystone_admin_password: Hzy@2025

[root@controller ansible]# grep keystone_admin /etc/kolla/passwords.yml

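keystone_admin_password: Hzy@2025

Edit the globals.yml file. It is the most important file to modify when deploying OpenStack Yoga with Kolla-Ansible; consult the service guides in the official OpenStack documentation and enable the components you need. This deployment installs a fairly large set of components, listed in the modified globals.yml below. Pay particular attention to kolla_internal_vip_address: it must be an unused IP address in the 192.168.200.0/24 network (this deployment uses 192.168.200.100). After deployment, use this address to log in to Horizon.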

bash

[root@controller kolla]# vi /etc/kolla/globals.yml

---

# ========================

# Kolla basic configuration

# ========================

kolla_base_distro: "ubuntu"

kolla_install_type: "source"

openstack_release: "yoga"

docker_registry: "quay.nju.edu.cn"

openstack_region_name: "RegionOne"

# ========================

# Core network configuration

# ========================

network_interface: "ens33"

neutron_external_interface: "ens34"

kolla_internal_vip_address: "192.168.200.100"

neutron_external_network_address: "192.168.100.0/24"

neutron_external_network_gateway: "192.168.100.2"

neutron_external_network_dns: "114.114.114.114"

# ========================

# Neutron network configuration

# ========================

neutron_plugin_agent: "openvswitch"

neutron_ml2_mechanism_drivers: "openvswitch,l2population"

neutron_ml2_type_drivers: "flat,vxlan"

neutron_ml2_tenant_network_types: "vxlan"

neutron_ml2_flat_networks: "provider:*"

enable_neutron_provider_networks: "yes"

# ========================

# Service toggles

# ========================

enable_aodh: "yes"

enable_barbican: "yes"

enable_ceilometer: "yes"

enable_cinder: "yes"

enable_cinder_backup: "yes"

enable_cinder_backend_lvm: "yes"

enable_cloudkitty: "yes"

enable_gnocchi: "yes"

enable_heat: "yes"

heat_tag: "yoga"

enable_manila: "yes"

# Additional Manila settings

enable_manila_api: "yes"

enable_manila_scheduler: "yes"

enable_manila_share: "yes"

enable_manila_data: "yes"

manila_backend_name: "lvm"

manila_enabled_share_protocols: "NFS"

manila_driver_handles_share_servers: "false"

manila_lvm_share_volume_group: "manila-volumes"

manila_lvm_share_export_ip: "192.168.200.20"

enable_neutron_vpnaas: "yes"

enable_neutron_qos: "yes"

enable_redis: "yes"

enable_swift: "yes"

# ========================

# Storage configuration

# ========================

glance_backend_file: "yes"

glance_file_datadir_volume: "/var/lib/glance/volumes/"

cinder_volume_group: "cinder-volumes"

# ========================

# Virtualization configuration

# ========================

nova_compute_virt_type: "kvm"

nova_console_type: "novnc"

# ========================

# Message queue & database

# ========================

rabbitmq_port: 5672

mariadb_port: 3306

memcached_port: 11211

# ========================

# Security hardening configuration

# ========================

barbican_crypto_plugin: "simple_crypto"

barbican_library_path: "/usr/lib/libCryptoki2_64.so"

# ========================

# Swift object storage

# ========================

swift_devices_name: "KOLLA_SWIFT_DATA"

swift_devices:

- "d0"

- "d1"

- "d2"

# ========================

# Container privileges (works around OVS permission issues)

# ========================

docker_security_opt: "label=disable"

docker_cap_add:

- "NET_ADMIN"

- "SYS_ADMIN"

Under /etc/kolla/config/, customize some Neutron and Manila settings. During deployment these custom settings override the defaults:

bash

[root@controller kolla]# cd /etc/kolla/config/

[root@controller config]# mkdir neutron

[root@controller config]# cat << MXD > neutron/dhcp_agent.ini

[DEFAULT]

dnsmasq_dns_servers = 8.8.8.8,8.8.4.4,223.6.6.6,119.29.29.29

MXD

[root@controller config]# cat << MXD > neutron/ml2_conf.ini

[ml2]

tenant_network_types = flat,vxlan,vlan

[ml2_type_vlan]

network_vlan_ranges = provider:10:1000

[ml2_type_flat]

flat_networks = provider

MXD

[root@controller config]# cat << MXD > neutron/openvswitch_agent.ini

[securitygroup]

firewall_driver = openvswitch

[ovs]

bridge_mappings = provider:br-ex

MXD

[root@controller config]# cat << MXD > manila-share.conf

[generic]

service_instance_flavor_id = 100

MXD

5. Initialize the storage node disks

On compute01, use one 20 GB disk to create the cinder-volumes volume group. The volume group name must match the value of "cinder_volume_group" in globals.yml.

bash

[root@compute01 ~]# pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

[root@compute01 ~]# vgcreate cinder-volumes /dev/sdb

Volume group "cinder-volumes" successfully created

[root@compute01 ~]# vgs cinder-volumes

VG #PV #LV #SN Attr VSize VFree

cinder-volumes 1 0 0 wz--n- <20.00g <20.00g

## Disk used by Manila ##

[root@compute01 ~]# pvcreate /dev/sdf

Physical volume "/dev/sdf" successfully created.

[root@compute01 ~]# vgcreate manila-volumes /dev/sdf

Volume group "manila-volumes" successfully created

[root@compute01 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

cinder-volumes 1 0 0 wz--n- <20.00g <20.00g

manila-volumes 1 0 0 wz--n- <10.00g <10.00g

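openeuler 1 3 0 wz--n- <99.00g 0

Initialize the Swift disks. On compute01, three 20 GB disks serve as Swift storage devices. Give them the special partition names and filesystem labels by writing the disk initialization script swift.sh. The device name KOLLA_SWIFT_DATA matches the value of "swift_devices_name" in globals.yml.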

bash

[root@compute01 ~]# vi swift.sh

#!/bin/bash
index=0
for d in sdc sdd sde; do
    # Force-create a new partition table
    parted -s /dev/${d} mklabel gpt
    parted -s /dev/${d} mkpart primary xfs 1MiB 100%
    # Format and set the label d0/d1/d2
    mkfs.xfs -f -L d${index} /dev/${d}1
    # Create the mount point and mount
    mkdir -p /srv/node/d${index}
    mount -L d${index} /srv/node/d${index}
    (( index++ ))
done
# Persistent mount configuration
echo "LABEL=d0 /srv/node/d0 xfs defaults 0 0" >> /etc/fstab
echo "LABEL=d1 /srv/node/d1 xfs defaults 0 0" >> /etc/fstab
echo "LABEL=d2 /srv/node/d2 xfs defaults 0 0" >> /etc/fstab
mount -a
# Verify the result
lsblk -f
df -h | grep '/srv/node/d'

6. Deploy the cluster environment

Install the OpenStack CLI client on the control node.

bash

[root@controller kolla]# dnf -y install python3-openstackclient

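## Additional clients used in later steps

[root@controller kolla]# dnf -y install python3-manilaclient python3-heatclient

For network routing on the deployed nodes to work properly, IP forwarding must be enabled in Linux. Modify /etc/sysctl.conf on both controller and compute01, and configure the br_netfilter module to load automatically at boot, as follows.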

bash

## Control node

[root@controller kolla]# cat << MXD >> /etc/sysctl.conf

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

MXD

[root@controller kolla]# modprobe br_netfilter

[root@controller kolla]# sysctl -p /etc/sysctl.conf

[root@controller kolla]# cat << MXD > /usr/lib/systemd/system/yoga.service

[Unit]

Description=Load br_netfilter and sysctl settings for OpenStack

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/sbin/modprobe br_netfilter

ExecStart=/usr/sbin/sysctl -p

[Install]

WantedBy=multi-user.target

MXD

[root@controller kolla]# systemctl enable --now yoga.service

## Compute node

[root@compute01 ~]# cat << MXD >> /etc/sysctl.conf

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

MXD

[root@compute01 ~]# modprobe br_netfilter

[root@compute01 ~]# sysctl -p /etc/sysctl.conf

[root@compute01 ~]# cat << MXD > /usr/lib/systemd/system/yoga.service

[Unit]

Description=Load br_netfilter and sysctl settings for OpenStack

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/sbin/modprobe br_netfilter

ExecStart=/usr/sbin/sysctl -p

[Install]

WantedBy=multi-user.target

MXD

[root@compute01 ~]# systemctl enable --now yoga.service

Modify the Docker yum repository and GPG public key:

bash

[root@controller config]# vi $HOME/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/tasks/repo-RedHat.yml

- name: Enable docker yum repository
  yum_repository:
    name: docker
    description: Docker main Repository
    baseurl: "https://download.docker.com/linux/centos/7/x86_64/stable"
    gpgcheck: "true"
    gpgkey: "https://download.docker.com/linux/centos/gpg"
    ## If these URLs are unreachable, the Alibaba Cloud mirror can be used instead:
    # https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/
    # https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
    # NOTE(yoctozepto): required to install containerd.io due to runc being a
    # modular package in CentOS 8 see:
    # https://bugzilla.redhat.com/show_bug.cgi?id=1734081
    module_hotfixes: true
  become: true

[root@controller kolla]# vi $HOME/.ansible/collections/ansible_collections/openstack/kolla/roles/docker/defaults/main.yml

# Docker Yum repository configuration.

docker_yum_url: "https://mirrors.aliyun.com/docker-ce/linux/centos/"

docker_yum_baseurl: "https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/"

docker_yum_gpgkey: "https://mirrors.aliyun.com/docker-ce/linux/centos/gpg"

docker_yum_gpgcheck: true

docker_yum_package: "docker-ce{{ '-' + docker_yum_package_pin if (docker_yum_package_pin | length > 0) else '' }}"

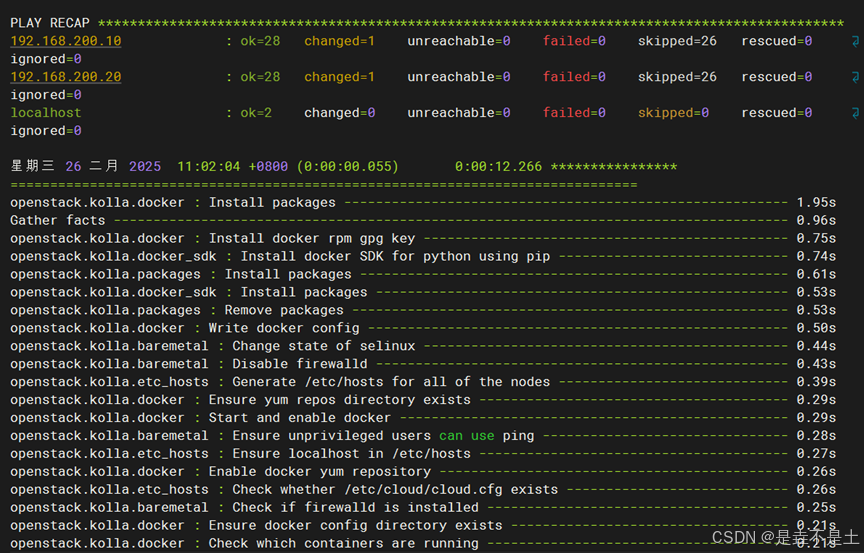

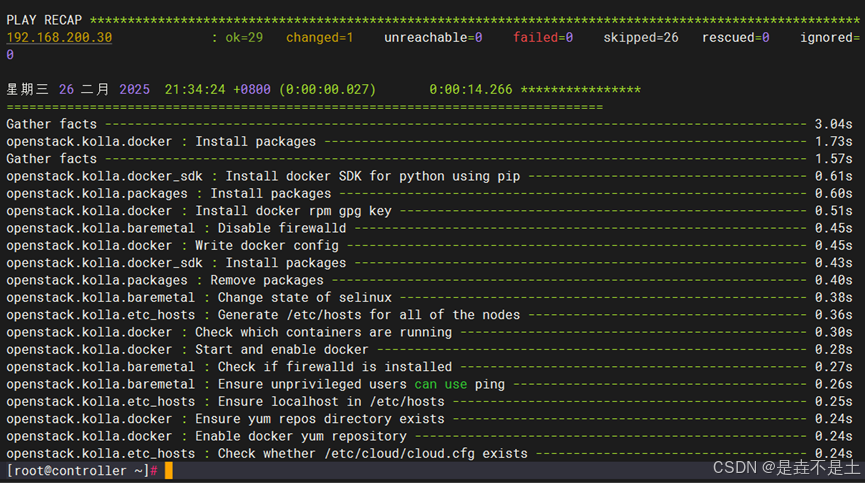

docker_yum_package_pin: ""在控制节点使用命令安装OpenStack集群所需要的基础依赖项和修改一些配置文件(如安装Docker和修改Hosts文件等),执行结果和用时如图所示:

bash

[root@controller ~]# kolla-ansible bootstrap-servers

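By default Docker pulls images from registries hosted overseas, which is slow from within China. Configuring domestic registry mirrors speeds up image pulls: edit /etc/docker/daemon.json on every node and add the registry-mirrors section. An example follows.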

bash

[root@controller kolla]# vi /etc/docker/daemon.json

{

"bridge": "none",

"default-ulimits": {

"nofile": {

"hard": 1048576,

"name": "nofile",

"soft": 1048576

}

},

"ip-forward": false,

"iptables": false,

"registry-mirrors": [

"https://docker.1ms.run",

"https://docker.1panel.live",

"https://hub.rat.dev",

"https://docker.m.daocloud.io",

"https://do.nark.eu.org",

"https://dockerpull.com",

"https://dockerproxy.cn",

"https://docker.awsl9527.cn"

]

}

[root@controller kolla]# systemctl daemon-reload

[root@controller kolla]# systemctl restart docker

Generate the rings required by the Swift service on the control node:

bash

[root@controller kolla]# docker pull kolla/swift-base:master-ubuntu-jammy

[root@controller kolla]# vi swift-ring.sh

#!/bin/bash
STORAGE_NODES=(192.168.200.20) # Replace with the IPs of your storage nodes
KOLLA_SWIFT_BASE_IMAGE="kolla/swift-base:master-ubuntu-jammy"
CONFIG_DIR="/etc/kolla/config/swift"
# Create the configuration directory
mkdir -p ${CONFIG_DIR}
# Function: create a ring and add devices to it
build_ring() {
    local ring_type=$1
    local port=$2
    echo "===== Creating the ${ring_type} ring ====="
    docker run --rm \
        -v ${CONFIG_DIR}:/etc/kolla/config/swift \
        ${KOLLA_SWIFT_BASE_IMAGE} \
        swift-ring-builder /etc/kolla/config/swift/${ring_type}.builder create 10 3 1
    # Add the devices to the ring
    for node in "${STORAGE_NODES[@]}"; do
        for i in {0..2}; do
            echo "Adding device d${i} to the ${ring_type} ring (node ${node}, port ${port})"
            docker run --rm \
                -v ${CONFIG_DIR}:/etc/kolla/config/swift \
                ${KOLLA_SWIFT_BASE_IMAGE} \
                swift-ring-builder /etc/kolla/config/swift/${ring_type}.builder \
                add "r1z1-${node}:${port}/d${i}" 1
        done
    done
    # Rebalance the ring
    docker run --rm \
        -v ${CONFIG_DIR}:/etc/kolla/config/swift \
        ${KOLLA_SWIFT_BASE_IMAGE} \
        swift-ring-builder /etc/kolla/config/swift/${ring_type}.builder rebalance
}
# Build each type of ring
build_ring object 6000
build_ring account 6001
build_ring container 6002
# Verify the ring files
echo "===== Generated ring files ====="
ls -lh ${CONFIG_DIR}/*.ring.gz
echo "===== Object ring device status ====="
docker run --rm \
    -v ${CONFIG_DIR}:/etc/kolla/config/swift \
    ${KOLLA_SWIFT_BASE_IMAGE} \
    swift-ring-builder /etc/kolla/config/swift/object.builder

[root@controller kolla]# ansible storage -m copy -a "src=/etc/kolla/config/swift/ dest=/etc/kolla/config/swift/"

Add the operating system version to the list accepted by the prechecks:

bash

[root@controller kolla]# vi /usr/share/kolla-ansible/ansible/roles/prechecks/vars/main.yml

openEuler:

- "20.03"

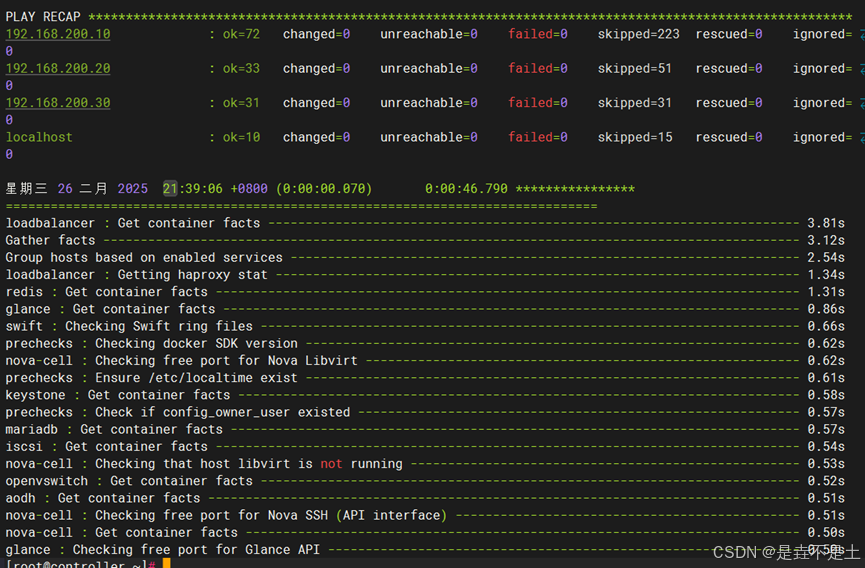

- "22.09"在节点进行部署前检查:

bash

[root@controller kolla]# kolla-ansible prechecks

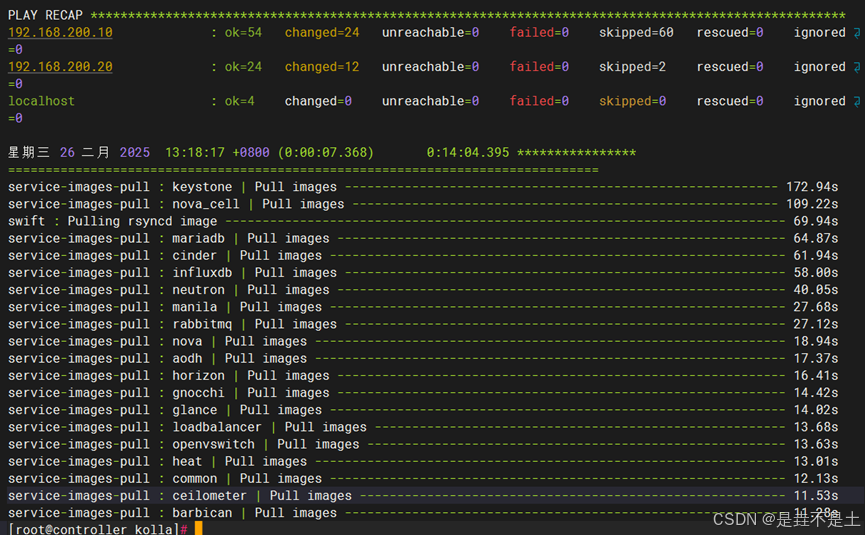

Pull all images (the time required depends on network speed):

bash

[root@controller kolla]# kolla-ansible pull

[root@controller kolla]# docker pull 99cloud/skyline:latest

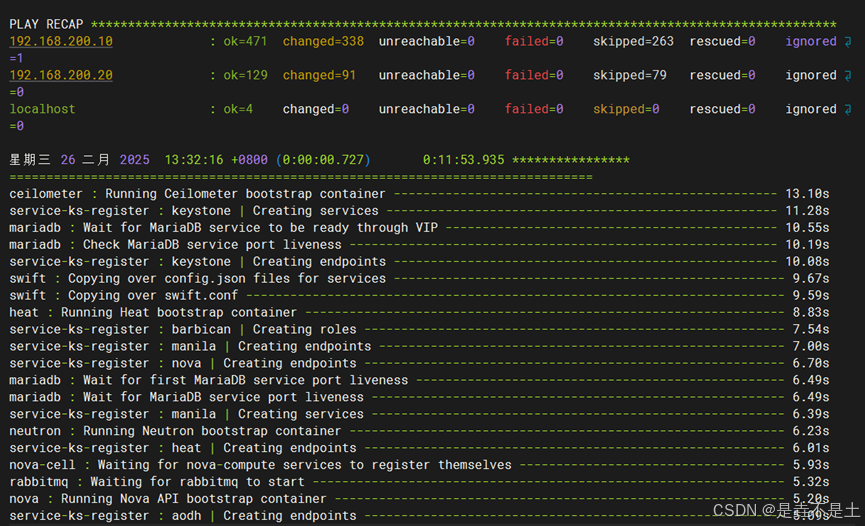

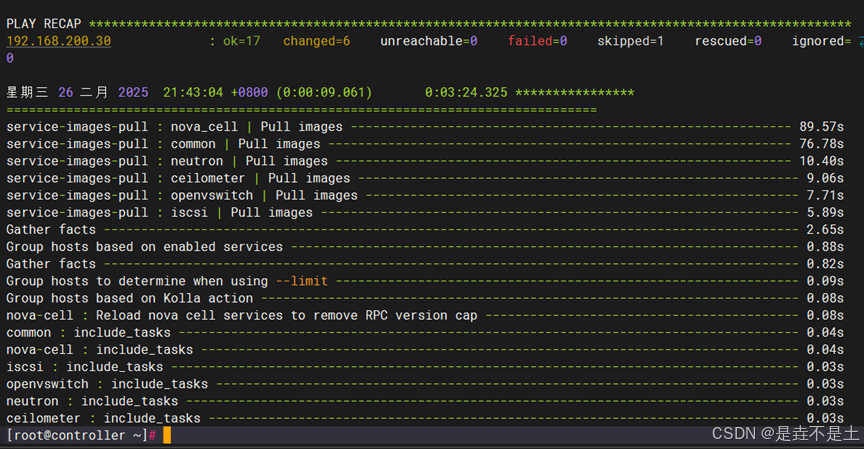

部署服务

bash

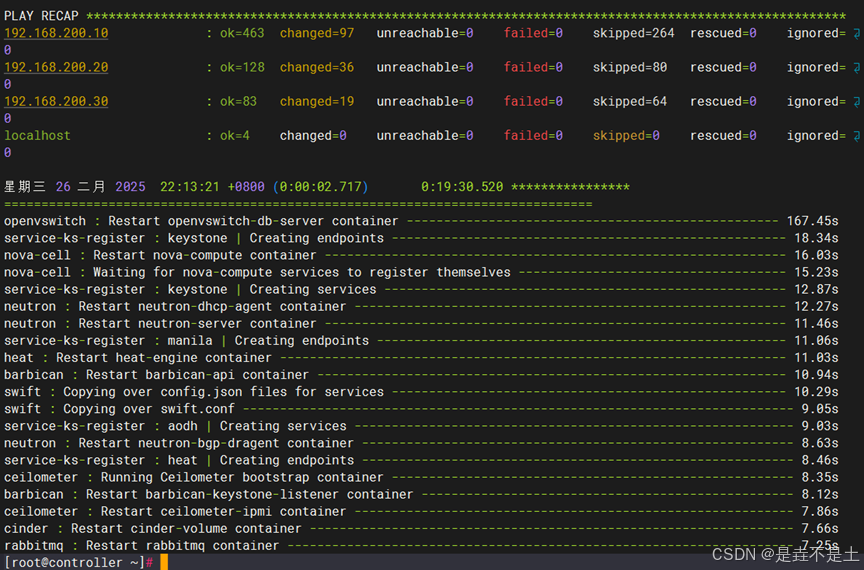

[root@controller ~]# kolla-ansible deploy

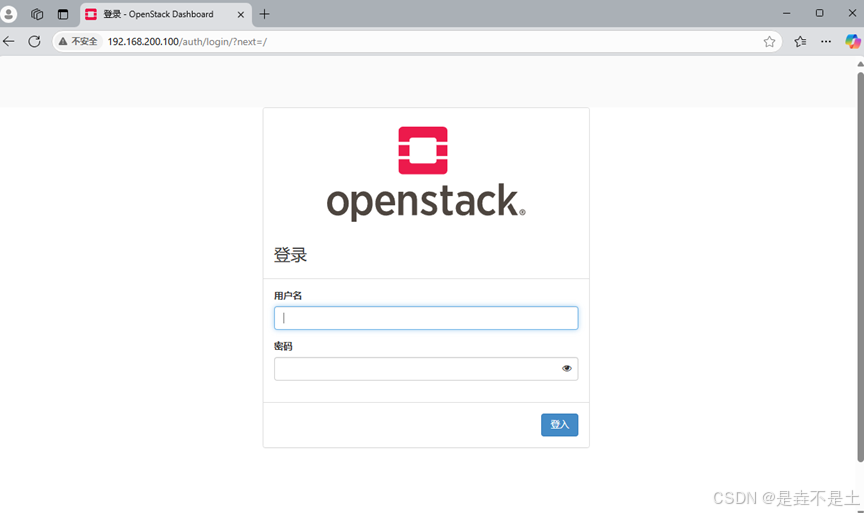

After the deployment completes, log in to verify:

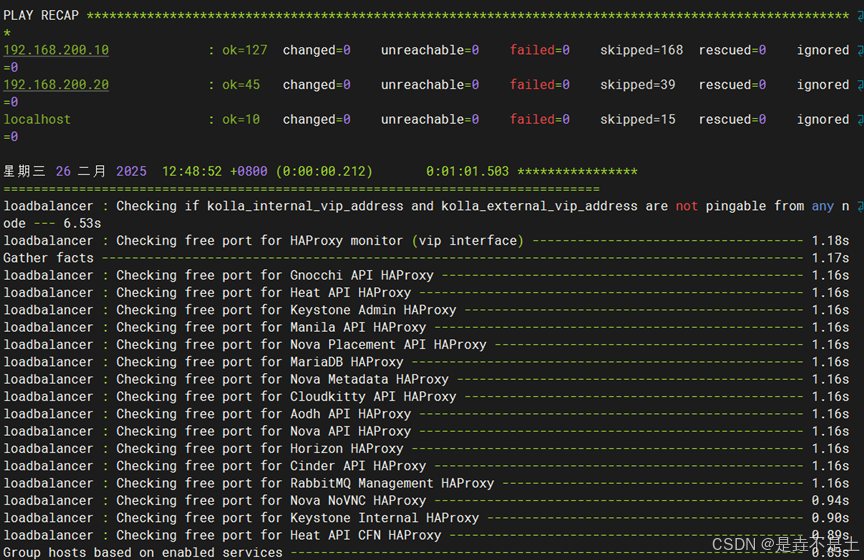

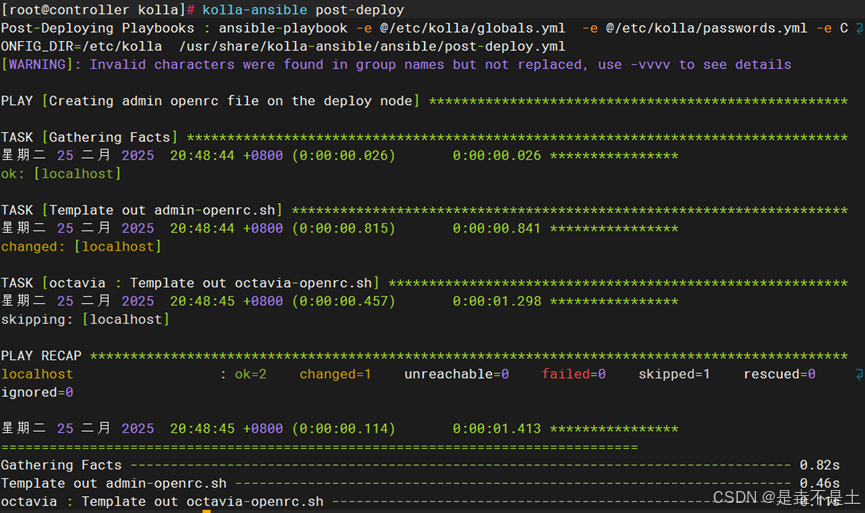

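Once the OpenStack cluster is deployed, running client commands requires the clouds.yaml and admin-openrc.sh files, which hold the credentials of the administrator (admin) user. Run the following command to generate them; the result and elapsed time are shown in the figure: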

bash

[root@controller kolla]# kolla-ansible post-deploy
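Before sourcing the generated credentials file, it can be sanity-checked for the variables the CLI needs. The sketch below is an illustration only: `check_openrc` is a hypothetical helper, and the list of variable names is an assumption about what a typical admin-openrc.sh exports, not something taken from this deployment's output.

```shell
#!/bin/bash
# Succeed if the given openrc file sets every variable in the list below.
# The variable list is an assumption about a typical admin-openrc.sh;
# check_openrc is a hypothetical helper name used for illustration.
check_openrc() {
    local file="$1" var missing=0
    for var in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME; do
        if ! grep -Eq "^(export[[:space:]]+)?${var}=" "$file"; then
            echo "missing ${var} in ${file}" >&2
            missing=1
        fi
    done
    return "$missing"
}

openrc="${1:-/etc/kolla/admin-openrc.sh}"
if [ -r "$openrc" ] && check_openrc "$openrc"; then
    echo "${openrc} looks complete; load it with: source ${openrc}"
fi
```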

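OpenStack Platform Scale-Out

This task adds a compute node. The procedure shows how new hardware resources are brought into the OpenStack environment to increase overall compute capacity and scheduling flexibility, so that the platform can absorb growing application load while staying efficient and stable.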

| Node type | Hostname | Internal management IP | Instance traffic IP |
|-------|------------|----------------|----------------|
| Control node | controller | 192.168.200.10 | 192.168.100.10 |
| Compute node 1 | compute01 | 192.168.200.20 | 192.168.100.20 |
| Compute node 2 | compute02 | 192.168.200.30 | 192.168.100.30 |

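Building on the existing deployment, add one compute node. In the processor settings, tick the "Virtualize Intel VT-x/EPT or AMD-V/RVI" option. As before, the virtual machine needs two network interfaces. Interface 1 is the internal network: its adapter uses host-only mode and carries node communication and management traffic. Interface 2 is the external network: its adapter uses NAT mode and mainly serves as the data network. Instances created after the cluster is deployed use the interface 2 adapter. Create the new compute node 2.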

1. Initial system setup

Set the hostname

bash

[root@localhost ~]# hostnamectl set-hostname compute02 && bash

Configure the yum repositories as described above.

Network interface configuration:

bash

[root@compute02 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.200.30

PREFIX=24

[root@compute02 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens34

IPADDR=192.168.100.30

PREFIX=24

GATEWAY=192.168.100.2

DNS1=114.114.114.114

DNS2=8.8.8.8

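DNS3=202.96.64.68

2. Add compute node 2 to the cluster

On the control node, edit the openstack_cluster inventory file and add the IP address of compute node 2 to the [compute] group; the root password set in [all:vars] is used for this node as well. The modified group is shown below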

bash

[root@controller ~]# vi /etc/ansible/openstack_cluster

[compute]

192.168.200.20

192.168.200.30

[root@controller ~]# ansible -m ping all

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

192.168.200.20 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3.10"

},

"changed": false,

"ping": "pong"

}

192.168.200.30 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3.10"

},

"changed": false,

"ping": "pong"

}

localhost | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3.10"

},

"changed": false,

"ping": "pong"

}

192.168.200.10 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3.10"

},

"changed": false,

"ping": "pong"

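}

Add the compute02 node to the existing cluster. For network routing on compute node 2 to work properly, IP forwarding must be enabled in Linux. Modify /etc/sysctl.conf on compute node 2 and configure the br_netfilter module to load automatically at boot, as follows.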

bash

[root@compute02 ~]# cat << MXD >> /etc/sysctl.conf

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

MXD

[root@compute02 ~]# modprobe br_netfilter

[root@compute02 ~]# sysctl -p /etc/sysctl.conf

[root@compute02 ~]# vi /usr/lib/systemd/system/yoga.service

[Unit]

Description=Load br_netfilter and sysctl settings for OpenStack

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/sbin/modprobe br_netfilter

ExecStart=/usr/sbin/sysctl -p

[Install]

WantedBy=multi-user.target

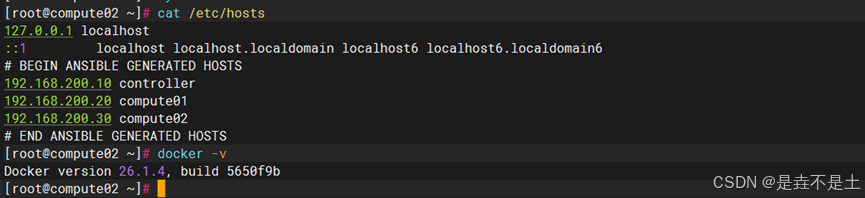

[root@compute02 ~]# systemctl enable --now yoga.service 在控制节点使用命令安装计算节点2所需要的基础依赖项并修改一些配置文件,如在计算节点2安装Docker和修改Hosts文件等,执行结果和用时如图3-23所示。

bash

[root@controller ~]# kolla-ansible --limit 192.168.200.30 bootstrap-servers

## If an SELinux error reports a missing package, run: yum install -y libselinux-python3

Verify on compute node 2 that Docker has been installed and that the hosts file has been updated.
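One way to script that verification on compute node 2 is sketched below. The helper `hosts_has_entry` is hypothetical, and the IP-to-hostname pairs are assumptions taken from the address plan of this deployment rather than from the actual contents of the generated hosts file.

```shell
#!/bin/bash
# Succeed if a hosts file maps the given IP address to the given hostname.
# hosts_has_entry is a hypothetical helper name used for illustration.
hosts_has_entry() {
    local file="$1" ip="$2" name="$3"
    awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }
    ' "$file"
}

# Report the Docker version if the CLI is installed on this node.
command -v docker >/dev/null && docker --version

# Assumed entries, based on the IP plan of this deployment.
for entry in "192.168.200.10 controller" "192.168.200.20 compute01" "192.168.200.30 compute02"; do
    read -r ip name <<< "$entry"
    if hosts_has_entry /etc/hosts "$ip" "$name"; then
        echo "ok: $name"
    else
        echo "missing: $name"
    fi
done
```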

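By default Docker pulls images from registries hosted overseas, which is slow from within China. Configuring domestic registry mirrors speeds up image pulls: edit /etc/docker/daemon.json on every node and add the registry-mirrors section. An example follows.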

bash

[root@compute02 ~]# vi /etc/docker/daemon.json

{

"bridge": "none",

"default-ulimits": {

"nofile": {

"hard": 1048576,

"name": "nofile",

"soft": 1048576

}

},

"ip-forward": false,

"iptables": false,

"registry-mirrors": [

"https://docker.1ms.run",

"https://docker.1panel.live",

"https://hub.rat.dev",

"https://docker.m.daocloud.io",

"https://do.nark.eu.org",

"https://dockerpull.com",

"https://dockerproxy.cn",

"https://docker.awsl9527.cn"

]

}

[root@compute02 ~]# systemctl daemon-reload

[root@compute02 ~]# systemctl restart docker

Run the pre-deployment checks on the control node:

bash

[root@controller ~]# kolla-ansible prechecks

On the control node, use the following command to pull all images required by compute node 2:

bash

[root@controller ~]# kolla-ansible --limit 192.168.200.30 pull

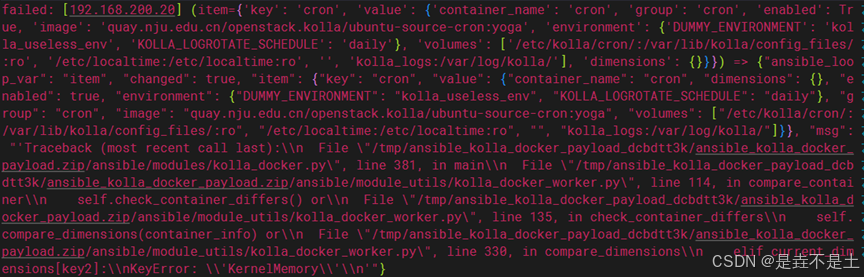

To avoid a KeyError, patch the following file:

bash

[root@controller ~]# vi /usr/share/kolla-ansible/ansible/module_utils/kolla_docker_worker.py

# Change this line:

elif current_dimensions[key2]:

# to:

elif current_dimensions.get(key2, None):

On the control node, run the following command to add compute node 2 to the existing OpenStack cluster:

bash

[root@controller ~]# kolla-ansible deploy

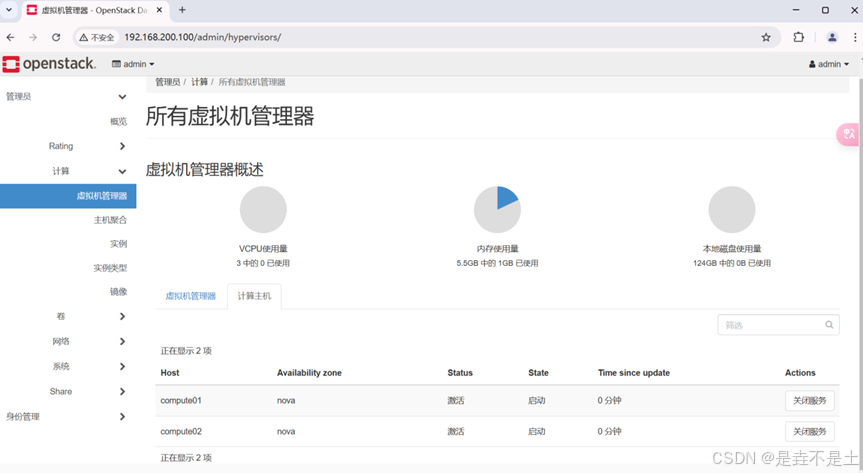

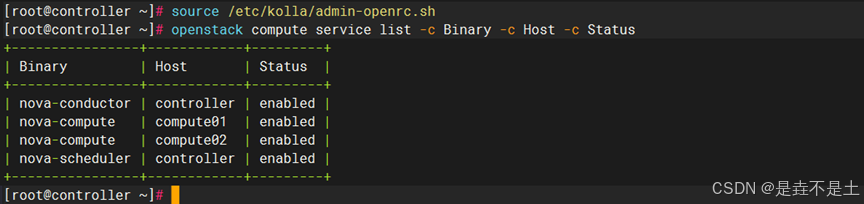

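3. Verify that compute node 2 has joined the cluster

Check the state of the compute service (Nova) in the OpenStack environment. The list of running compute services is shown in the figure, where compute node 2 appears as running. Two ways to check are given below.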

bash

[root@controller ~]# source /etc/kolla/admin-openrc.sh

[root@controller ~]# openstack compute service list -c Binary -c Host -c Status

+----------------+------------+---------+

| Binary | Host | Status |

+----------------+------------+---------+

| nova-conductor | controller | enabled |

| nova-compute | compute01 | enabled |

| nova-compute | compute02 | enabled |

| nova-scheduler | controller | enabled |

+----------------+------------+---------+
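The same check can be scripted instead of read off the table. The sketch below assumes the rows are produced with the `-f value` formatter, which prints the selected columns separated by spaces; `compute_enabled` is a hypothetical helper, and the sample rows simply mirror the table above.

```shell
#!/bin/bash
# Read "binary host status" rows on stdin and succeed if nova-compute on the
# given host is reported as enabled.
# compute_enabled is a hypothetical helper name used for illustration.
compute_enabled() {
    local host="$1"
    awk -v host="$host" '
        $1 == "nova-compute" && $2 == host && $3 == "enabled" { found = 1 }
        END { exit !found }
    '
}

# Live usage (requires the admin credentials to be loaded first):
#   openstack compute service list -f value -c Binary -c Host -c Status | compute_enabled compute02
printf '%s\n' \
    'nova-conductor controller enabled' \
    'nova-compute compute01 enabled' \
    'nova-compute compute02 enabled' \
    'nova-scheduler controller enabled' | compute_enabled compute02 && echo "compute02 is enabled"
```

The Status column only reflects the administrative state; whether the service is actually reachable would be a separate check.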

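In the left navigation bar of Horizon, choose "Admin → Compute → Hypervisors". The right pane shows the state of the compute hosts, as in the figure. Compute node 2 is now active, so the OpenStack scale-out succeeded.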