Gradio 是用最快的方式为你的机器学习模型制作一个友好的网页界面,让任何人都能在任何地方使用它!最近看了一两个例子。Gradio 的实现非常简单粗暴,但是界面还是非常不错。我们可以使用它快速地构建我们想要的测试界面。

在进行下面的代码之前,建议大家先阅读我之前的文章 "Elasticsearch:在 Elastic 中玩转 DeepSeek R1 来实现 RAG 应用"。在那篇文章中,我们详细地描述了如何使用 DeepSeek R1 来帮我们实现 RAG 应用。在今天的展示中,我使用 Elastic Stack 9.1.2 来展示。

alice_gradio.py

ini

`

1. ## Install the required packages

2. ## pip install -qU elasticsearch openai

3. import os

4. from dotenv import load_dotenv

5. from elasticsearch import Elasticsearch

6. from openai import OpenAI

7. import gradio as gr

8. import subprocess

10. load_dotenv()

12. ELASTICSEARCH_URL = os.getenv('ELASTICSEARCH_URL')

13. OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

14. ES_API_KEY = os.getenv("ES_API_KEY")

15. DEEPSEEK_URL = os.getenv("DEEPSEEK_URL")

17. es_client = Elasticsearch(

18. ELASTICSEARCH_URL,

19. ca_certs="./http_ca.crt",

20. api_key=ES_API_KEY,

21. verify_certs = True

22. )

24. # resp = es_client.info()

25. # print(resp)

27. try:

28. openai_client = OpenAI(

29. api_key=OPENAI_API_KEY,

30. base_url=DEEPSEEK_URL

31. )

32. except:

33. print("Something is wrong")

35. index_source_fields = {

36. "book_alice": [

37. "content"

38. ]

39. }

41. def get_elasticsearch_results(query):

42. es_query = {

43. "retriever": {

44. "standard": {

45. "query": {

46. "semantic": {

47. "field": "content",

48. "query": query

49. }

50. }

51. }

52. },

53. "highlight": {

54. "fields": {

55. "content": {

56. "type": "semantic",

57. "number_of_fragments": 2,

58. "order": "score"

59. }

60. }

61. },

62. "size": 3

63. }

64. result = es_client.search(index="book_alice", body=es_query)

65. return result["hits"]["hits"]

67. def create_openai_prompt(results):

68. context = ""

69. for hit in results:

70. ## For semantic_text matches, we need to extract the text from the highlighted field

71. if "highlight" in hit:

72. highlighted_texts = []

73. for values in hit["highlight"].values():

74. highlighted_texts.extend(values)

75. context += "\n --- \n".join(highlighted_texts)

76. else:

77. context_fields = index_source_fields.get(hit["_index"])

78. for source_field in context_fields:

79. hit_context = hit["_source"][source_field]

80. if hit_context:

81. context += f"{source_field}: {hit_context}\n"

82. prompt = f"""

83. Instructions:

85. - You are an assistant for question-answering tasks using relevant text passages from the book Alice in wonderland

86. - Answer questions truthfully and factually using only the context presented.

87. - If you don't know the answer, just say that you don't know, don't make up an answer.

88. - You must always cite the document where the answer was extracted using inline academic citation style [], using the position.

89. - Use markdown format for code examples.

90. - You are correct, factual, precise, and reliable.

92. Context:

93. {context}

95. """

96. return prompt

98. def generate_openai_completion(user_prompt, question, official):

99. response = openai_client.chat.completions.create(

100. model='deepseek-chat',

102. messages=[

103. {"role": "system", "content": user_prompt},

104. {"role": "user", "content": question},

105. ],

106. stream=False

107. )

108. return response.choices[0].message.content

110. def rag_interface(query):

111. elasticsearch_results = get_elasticsearch_results(query)

112. context_prompt = create_openai_prompt(elasticsearch_results)

113. answer = generate_openai_completion(context_prompt, query, official=True)

114. return answer

116. demo = gr.Interface(

117. fn=rag_interface,

118. inputs=gr.Textbox(label="输入你的问题"),

119. # outputs=gr.Markdown(label="RAG Answer"),

120. outputs=gr.Textbox(label="RAG Answer"),

121. title="Alice in Wonderland RAG QA",

122. description="Ask a question about Alice in Wonderland and get an answer based on retrieved passages."

123. )

125. demo.launch()

127. # if __name__ == "__main__":

128. # # question = "Who was at the tea party?"

129. # question = "哪些人在茶会?"

130. # print("Question is: ", question, "\n")

132. # elasticsearch_results = get_elasticsearch_results(question)

133. # context_prompt = create_openai_prompt(elasticsearch_results)

135. # openai_completion = generate_openai_completion(context_prompt, question, official=True)

136. # print(openai_completion)

`AI写代码这里的代码是从 Playground 里下载而来。我做了一下改动。为了能够使得我们每次都能输入我们想要的查询而不用重新运行代码,我添加了如下的代码:

ini

`

1. def rag_interface(query):

2. elasticsearch_results = get_elasticsearch_results(query)

3. context_prompt = create_openai_prompt(elasticsearch_results)

4. answer = generate_openai_completion(context_prompt, query, official=True)

5. return answer

7. demo = gr.Interface(

8. fn=rag_interface,

9. inputs=gr.Textbox(label="输入你的问题"),

10. # outputs=gr.Markdown(label="RAG Answer"),

11. outputs=gr.Textbox(label="RAG Answer"),

12. title="Alice in Wonderland RAG QA",

13. description="Ask a question about Alice in Wonderland and get an answer based on retrieved passages."

14. )

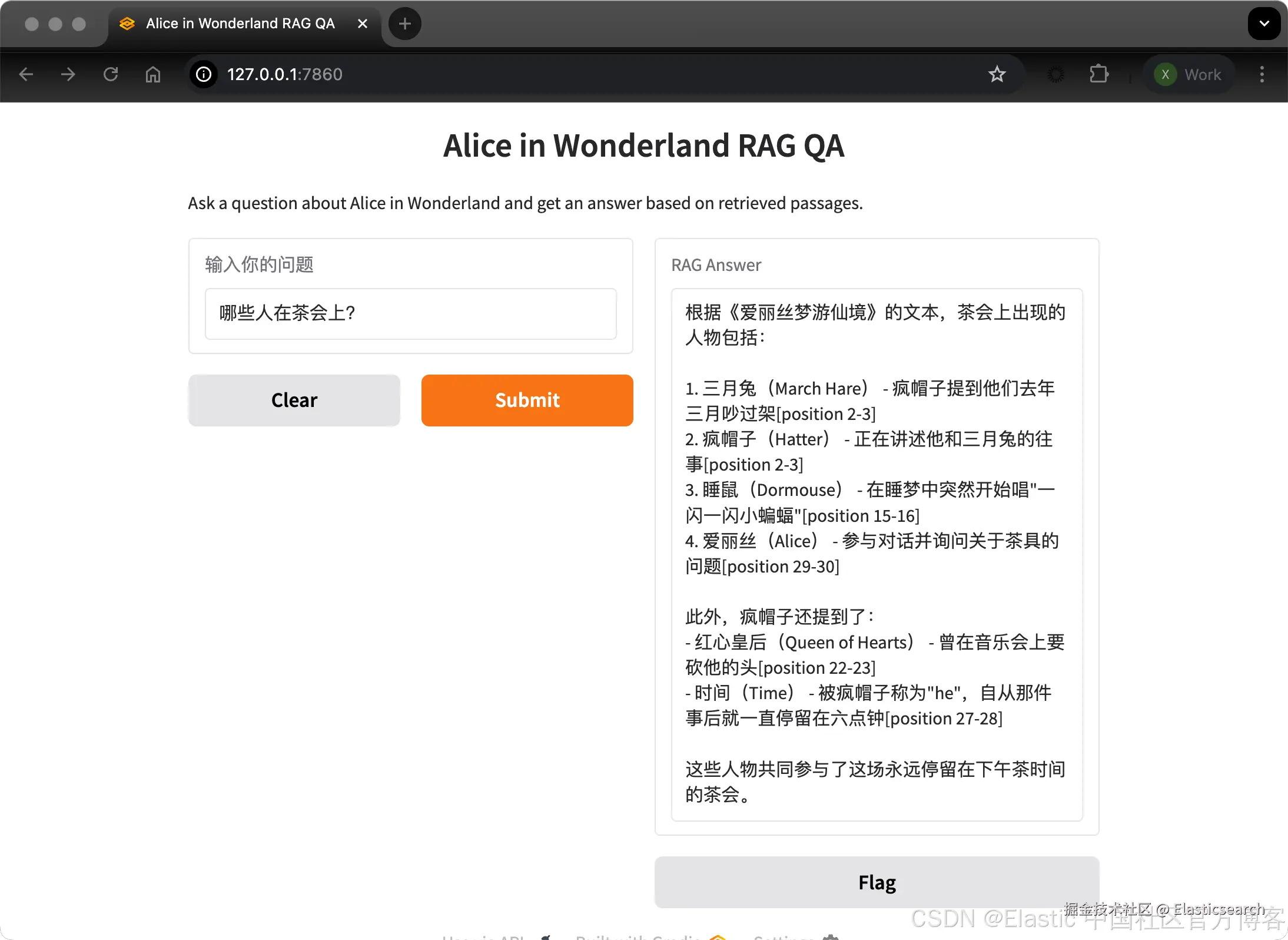

`AI写代码就是这几行代码。它能帮我构建我们想要的界面。我们运行上面的代码:

go

`python alice_gradio.py` AI写代码

perl

``

1. $ python alice_gradio.py

2. * Running on local URL: http://127.0.0.1:7860

3. * To create a public link, set `share=True` in `launch()`.

``AI写代码如上所示,我们打开页面 http://127.0.0.1:7860

go

`哪些人在茶会上?`AI写代码

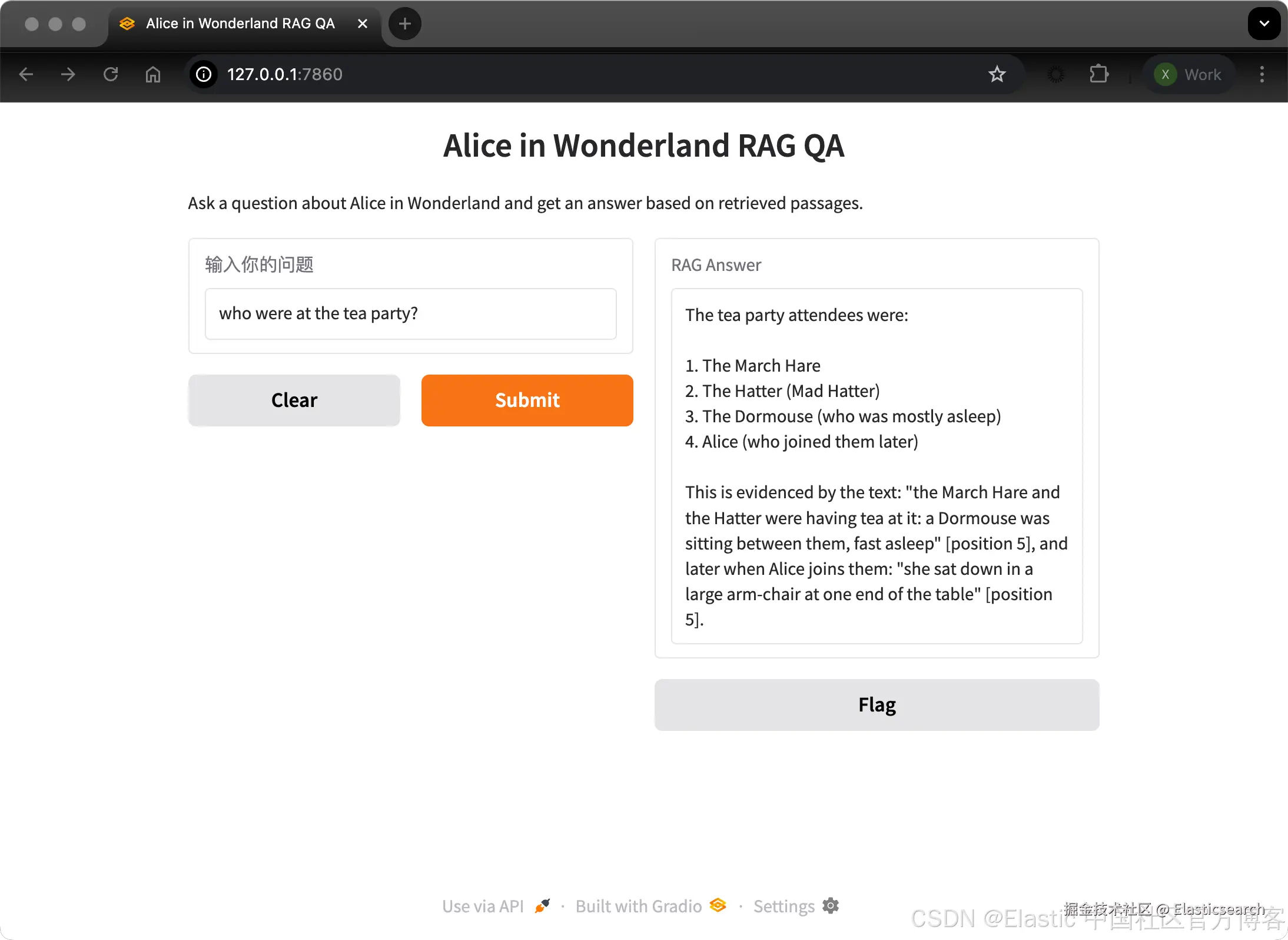

我们也可以用英文进行提问:

bash

`who were at the tea party?`AI写代码