Getting a 400 error from a relay proxy

This is the first version of the code, which I wrote following the LlamaIndex documentation:

python

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader
from llama_index.core.agent.workflow import FunctionAgent
from llama_index.llms.openai import OpenAI
import asyncio
import openai
from dotenv import load_dotenv
import os

# Load the environment variables stored in the .env file in the current directory
load_dotenv()

# Assign the variables used to route requests through the relay to OpenAI
openai.api_key = os.environ[""]
openai.base_url = os.environ[""]

# Create a RAG tool using LlamaIndex
documents = SimpleDirectoryReader("data").load_data()
index = VectorStoreIndex.from_documents(documents)
query_engine = index.as_query_engine()


def multiply(a: float, b: float) -> float:
    """Useful for multiplying two numbers."""
    return a * b


async def search_documents(query: str) -> str:
    """Useful for answering natural language questions about a personal essay written by Paul Graham."""
    response = await query_engine.aquery(query)
    return str(response)


# Create an enhanced workflow with both tools
agent = FunctionAgent(
    tools=[multiply, search_documents],
    llm=OpenAI(model="gpt-4o-mini"),
    system_prompt="""You are a helpful assistant that can perform calculations
    and search through documents to answer questions.""",
)


# Now we can ask questions about the documents or do calculations
async def main():
    response = await agent.run(
        "What did the author do in college? Also, what's 7 * 8?"
    )
    print(response)


# Run the agent
if __name__ == "__main__":
    asyncio.run(main())

Problem description

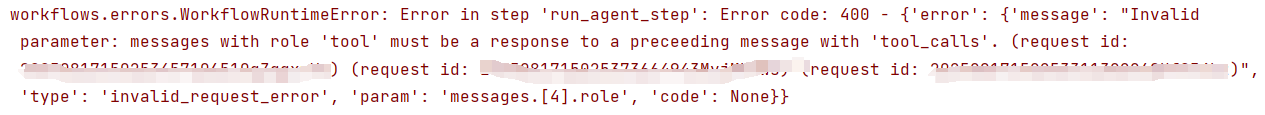

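The error indicates that the proxy does not support tool calling.

Problem analysis

When I kept multiply and removed search_documents, the code ran fine. That showed the proxy itself was usable, so the problem was probably in how the code called it. After repeatedly grilling GPT, I finally got the real cause.

The error *messages with role 'tool' must be a response...* essentially happens because the relay proxy you are using (an OpenAI-compatible API) does not fully implement OpenAI's Tool Calling protocol.

LlamaIndex's FunctionAgent uses OpenAI's official tool calling protocol by default, which is why it sends {"role": "tool"} messages. If the relay proxy does not support them, it returns a 400 right away.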
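To make the rule behind that error message concrete, here is a minimal sketch of the message ordering OpenAI's chat completions protocol expects: a message with role "tool" has to answer a tool_calls entry in the preceding assistant message, matched by tool_call_id. The function name find_orphan_tool_messages, the call ID and the sample histories are made up for illustration; this is not code from LlamaIndex or the OpenAI SDK, and the "broken" history is only a hypothetical failure mode.

```python
from typing import Any, Dict, List, Set


def find_orphan_tool_messages(messages: List[Dict[str, Any]]) -> List[int]:
    """Return indexes of 'tool' messages that do not answer a pending tool call."""
    pending: Set[str] = set()
    orphans: List[int] = []
    for i, msg in enumerate(messages):
        role = msg.get("role")
        if role == "assistant":
            # An assistant turn opens zero or more tool calls that must be answered.
            pending = {call["id"] for call in msg.get("tool_calls") or []}
        elif role == "tool":
            call_id = msg.get("tool_call_id")
            if call_id in pending:
                pending.discard(call_id)
            else:
                orphans.append(i)
        else:
            # Any user/system turn closes the window for tool responses.
            pending = set()
    return orphans


# A well-formed exchange: the assistant asks for multiply, the tool answers it.
valid = [
    {"role": "user", "content": "What's 7 * 8?"},
    {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {
                "id": "call_1",  # hypothetical ID
                "type": "function",
                "function": {"name": "multiply", "arguments": '{"a": 7, "b": 8}'},
            }
        ],
    },
    {"role": "tool", "tool_call_id": "call_1", "content": "56"},
]

# Hypothetical failure mode: the tool_calls field is lost somewhere along the way,
# so the 'tool' message no longer answers anything.
broken = [
    valid[0],
    {"role": "assistant", "content": None},
    valid[2],
]

print(find_orphan_tool_messages(valid))
print(find_orphan_tool_messages(broken))
```

A history like `broken` is the kind of request an OpenAI-style backend rejects with a 400.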

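Solutions

Option 1 (recommended, simplest): switch to the official API

If you insist on using FunctionAgent, the proxy must support tool calling.

Drop the relay proxy and use the official endpoint https://api.openai.com/v1 directly.

Make sure OPENAI_API_KEY is available.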
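Before switching endpoints, it can help to check whether a given endpoint accepts a request that declares tools at all. The sketch below builds such a request with the standard library only. The helper names build_tool_probe and response_has_tool_calls are my own, and the request shape assumes the usual OpenAI-compatible /chat/completions route. It only probes the first half of the protocol (declaring a tool), not the follow-up message with role "tool", and a model may answer without calling the tool even when tool calling is supported, so treat a negative result as a hint rather than proof.

```python
import json
import urllib.request


def build_tool_probe(base_url: str, api_key: str, model: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request that declares one tool."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": "What is 7 * 8? Use the tool."}],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "multiply",
                    "description": "Multiply two numbers.",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "a": {"type": "number"},
                            "b": {"type": "number"},
                        },
                        "required": ["a", "b"],
                    },
                },
            }
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def response_has_tool_calls(body: dict) -> bool:
    """True if any choice in a chat completion response contains tool_calls."""
    choices = body.get("choices") or []
    return any((c.get("message") or {}).get("tool_calls") for c in choices)


# Usage (sends a real request, so it is left commented out):
# req = build_tool_probe("https://api.openai.com/v1", "<your key>", "gpt-4o-mini")
# with urllib.request.urlopen(req) as resp:
#     print(response_has_tool_calls(json.load(resp)))
```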

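Option 2: switch to ReActAgent

ReActAgent does not depend on the Tool Calling protocol. It calls tools through plain-text ReAct reasoning only, which makes it more compatible with all kinds of proxies.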
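The idea can be sketched in a few lines: the model writes its intended tool call as ordinary text, and the client parses that text and runs the tool itself, so the proxy only ever sees plain chat messages. The Thought / Action / Action Input / Observation labels follow the general ReAct pattern. The regex, the TOOLS table and run_react_step are simplified stand-ins of my own and not LlamaIndex's actual prompt or parser.

```python
import json
import re


def multiply(a: float, b: float) -> float:
    return a * b


# Tools are looked up by the name the model writes after "Action:".
TOOLS = {"multiply": multiply}

ACTION_RE = re.compile(
    r"Action:\s*(?P<name>\w+)\s*\nAction Input:\s*(?P<args>\{.*\})",
    re.DOTALL,
)


def run_react_step(llm_text: str) -> str:
    """Parse one plain-text ReAct step and run the requested tool locally."""
    match = ACTION_RE.search(llm_text)
    if match is None:
        raise ValueError("no Action / Action Input found in model output")
    tool = TOOLS[match.group("name")]
    kwargs = json.loads(match.group("args"))
    # The result is sent back to the model as ordinary text, not as a 'tool' message.
    return f"Observation: {tool(**kwargs)}"


# What a model's reply might look like in this style (hand-written sample).
sample_output = (
    "Thought: I need to multiply the two numbers.\n"
    "Action: multiply\n"
    'Action Input: {"a": 7, "b": 8}'
)

observation = run_react_step(sample_output)
print(observation)
```

The trade-off is that plain-text parsing depends on the model following the format, whereas native tool calling returns structured arguments.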

Code that runs successfully:

python

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader
from llama_index.core.agent import ReActAgent
from llama_index.core.tools import FunctionTool
from llama_index.llms.openai import OpenAI as LIOpenAI
import asyncio, os
from dotenv import load_dotenv

load_dotenv()

documents = SimpleDirectoryReader("data").load_data()
index = VectorStoreIndex.from_documents(documents)
query_engine = index.as_query_engine()


def multiply(a: float, b: float) -> float:
    return a * b


async def search_documents(query: str) -> str:
    resp = await query_engine.aquery(query)
    return str(resp)


# Key point: pass the proxy address and key explicitly to LlamaIndex's OpenAI wrapper
llm = LIOpenAI(
    model="gpt-4o-mini",  # replace with a model name your proxy supports
    api_key=os.environ[""],
    api_base=os.environ[""],
)

tools = [
    FunctionTool.from_defaults(fn=multiply),
    FunctionTool.from_defaults(fn=search_documents),
]

# Correct usage in 0.13.2: instantiate directly
agent = ReActAgent(
    tools=tools,
    llm=llm,
    verbose=True,
    system_prompt="You can calculate and search documents.",
)


async def main():
    ans = await agent.run("What did the author do in college? Also, what's 7 * 8?")
    print(ans)


if __name__ == "__main__":
    asyncio.run(main())

Note

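llama-index<=0.12 → the legacy ReActAgent is normally built with ReActAgent.from_tools(...)

llama-index>=0.13 → the legacy class is gone; instantiate the workflow-based ReActAgent(...) directly and call await agent.run(...), as in the code above

As far as I can tell, 0.13.0 is the release that removed the legacy agent classes, so from_tools(...) examples found online apply only to older versions.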
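Because the constructor differs between versions, one option is to check what the installed class actually offers instead of relying on the version number. The sketch below is a hedged illustration. The helpers installed_version and build_react_agent are my own names, and "llama-index-core" is assumed to be the distribution name to look up. It only picks a constructor: an agent built through from_tools may also expose a different call interface than await agent.run(...), so the calling code may still need adjusting.

```python
from importlib import metadata
from typing import Any, Optional


def installed_version(package: str = "llama-index-core") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def build_react_agent(agent_cls: Any, tools: list, llm: Any, **kwargs: Any) -> Any:
    """Use from_tools(...) when the class provides it, else instantiate directly."""
    if hasattr(agent_cls, "from_tools"):
        return agent_cls.from_tools(tools, llm=llm, **kwargs)
    return agent_cls(tools=tools, llm=llm, **kwargs)


# Usage with the real class (requires llama-index to be installed):
# from llama_index.core.agent import ReActAgent
# print(installed_version())
# agent = build_react_agent(ReActAgent, tools, llm)
```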