## 1 What Is a Service

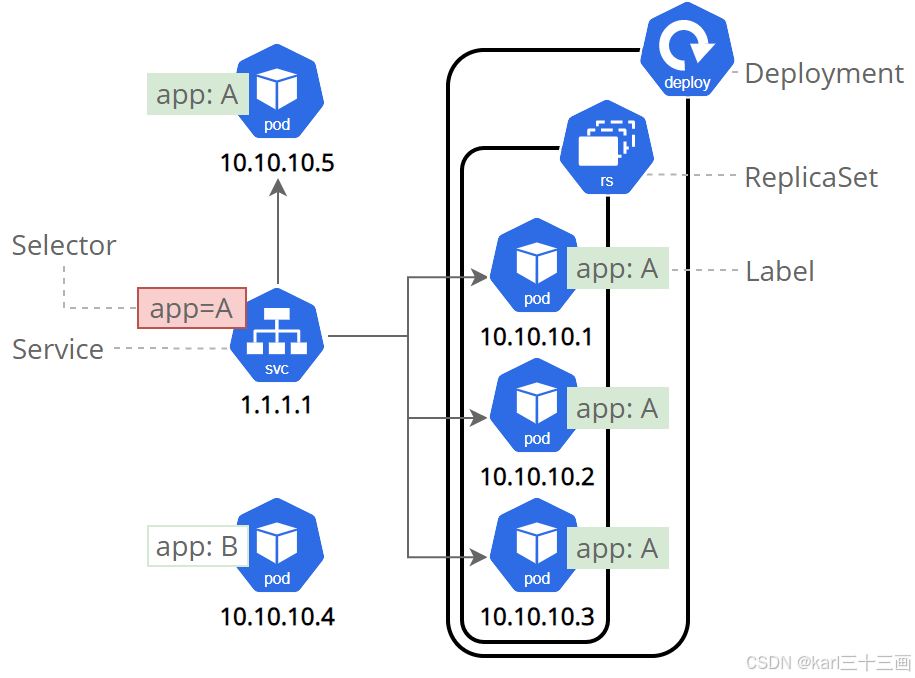

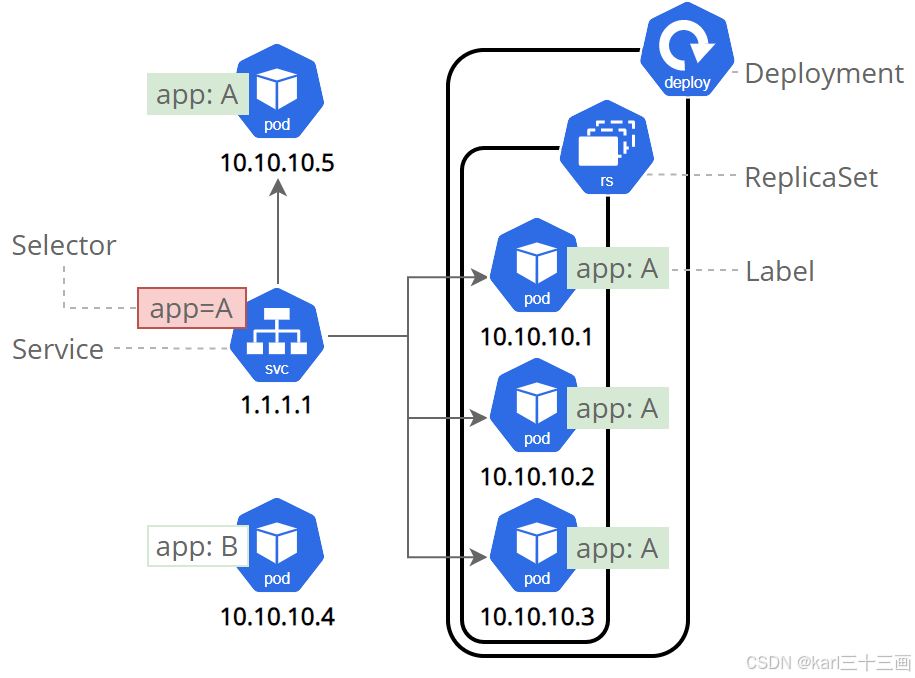

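Controllers run the cluster's workloads, but how is an application exposed? It has to be exposed through a Service before it can be accessed.

A Service is the externally exposed interface of a group of Pods that provide the same service.

With a Service, an application gets service discovery and load balancing.

By default a Service only provides layer-4 load balancing and has no layer-7 features (layer 7 can be added with Ingress).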

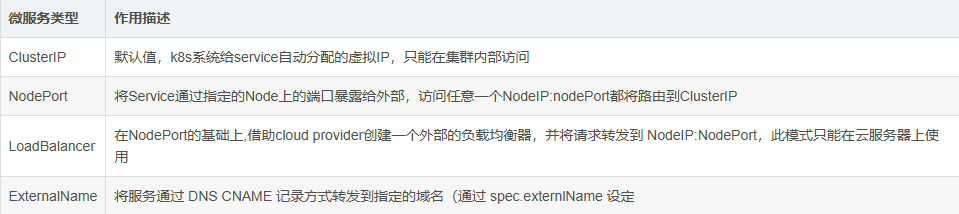

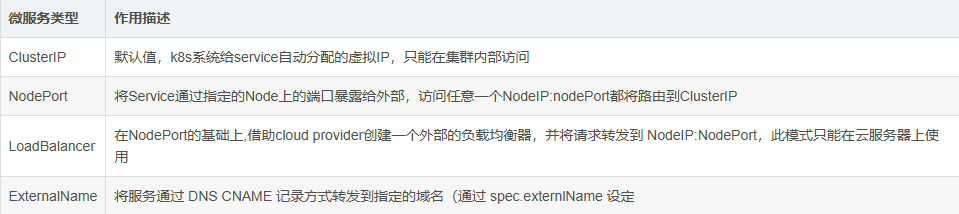
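The selection rule described above can be sketched in bash: a Pod belongs to a Service when its labels include every key=value pair of the Service's selector. This is an illustrative model only. The function name `selector_matches` and the comma-separated label strings are assumptions for the example, and the real matching is done by the Kubernetes control plane.

```bash
# Illustrative model (hypothetical helper): does a Pod with the given labels
# match a Service selector? Both arguments are comma-separated key=value lists.
selector_matches() {
  local selector=$1 labels=$2 pair
  local IFS=,                      # split the selector on commas
  for pair in $selector; do
    # every selector pair must appear as a whole entry in the Pod's labels
    [[ ",$labels," == *",$pair,"* ]] || return 1
  done
  return 0
}
```

For example, `selector_matches app=timinglee app=timinglee,pod-template-hash=c56f584cf` succeeds, while a Pod labelled `app=myapp-v1` is not selected. On a live cluster the same set can be listed with `kubectl get pods -l app=timinglee`.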

## 2 Service Types

Example:

Generate the controller (Deployment) YAML file:


kubectl create deployment timinglee --image myapp:v1 --replicas 2 --dry-run=client -o yaml > timinglee.yaml

Generate the Service YAML and append it to the existing file:


kubectl expose deployment timinglee --port 80 --target-port 80 --dry-run=client -o yaml >> timinglee.yaml

[root@k8s-master ~]# vim timinglee.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  replicas: 2
  selector:
    matchLabels:
      app: timinglee
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: timinglee
    spec:
      containers:
      - image: myapp:v1
        name: myapp
--- #separate different resources with ---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee

[root@k8s-master ~]# kubectl apply -f timinglee.yaml

deployment.apps/timinglee created

service/timinglee created

[root@k8s-master ~]# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19h

timinglee ClusterIP 10.99.127.134 <none> 80/TCP 16s

By default, Services dispatch traffic using iptables.

[root@k8s-master ~]# kubectl get services -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19h <none>

timinglee ClusterIP 10.99.127.134 <none> 80/TCP 119s app=timinglee #cluster-internal IP 10.99.127.134

#The corresponding rules can be seen in the firewall (iptables NAT table)

[root@k8s-master ~]# iptables -t nat -nL

KUBE-SVC-I7WXYK76FWYNTTGM 6 -- 0.0.0.0/0 10.99.127.134 /* default/timinglee cluster IP */ tcp dpt:80

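## 3 IPVS Mode

A Service is implemented jointly by the kube-proxy component and iptables.

When kube-proxy handles Services through iptables, it has to maintain a large number of iptables rules on each host. With many Pods on a host, these rules are refreshed constantly, which consumes a lot of CPU.

A Service in IPVS mode lets a Kubernetes cluster support Pods at a much larger scale.

3.1 Configuring IPVS mode

1 Install ipvsadm on all nodes

[root@(all nodes) ~]# yum install ipvsadm -y

2 Edit the kube-proxy configuration from the master node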

[root@k8s-master ~]# kubectl -n kube-system edit cm kube-proxy

metricsBindAddress: ""

mode: "ipvs" #set kube-proxy to use IPVS mode

nftables:

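3 Restart the kube-proxy pods. A running pod keeps the configuration it loaded at startup, so changing the ConfigMap does not affect pods that are already running; they have to be recreated: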

[root@k8s-master ~]# kubectl -n kube-system get pods | awk '/kube-proxy/{system("kubectl -n kube-system delete pods "$1)}'

[root@k8s-master ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.25.254.100:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.0.2:53 Masq 1 0 0

-> 10.244.0.3:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.2:9153 Masq 1 0 0

-> 10.244.0.3:9153 Masq 1 0 0

TCP 10.97.59.25:80 rr

-> 10.244.1.17:80 Masq 1 0 0

-> 10.244.2.13:80 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.0.2:53 Masq 1 0 0

-> 10.244.0.3:53 Masq 1 0 0
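To see at a glance how many Pods sit behind each virtual service after switching to IPVS, the `ipvsadm -Ln` listing above can be summarized with a small awk filter. This is a sketch that assumes the output layout shown above; the function name `summarize_ipvs` is hypothetical.

```bash
# Summarize `ipvsadm -Ln` output read from stdin: one line per virtual
# service (protocol, address:port, scheduler) plus its real-server count.
summarize_ipvs() {
  awk '
    # Virtual-service lines start with the protocol, e.g. TCP 10.96.0.10:53 rr
    $1 == "TCP" || $1 == "UDP" || $1 == "SCTP" {
      key = $1 " " $2 " " $3
      order[++n] = key
      count[key] = 0
      next
    }
    # Real-server lines start with "->"; skip the column header line
    $1 == "->" && $2 != "RemoteAddress:Port" && n > 0 {
      count[order[n]]++
    }
    END {
      for (i = 1; i <= n; i++) print order[i], count[order[i]]
    }
  '
}
```

Run it as `ipvsadm -Ln | summarize_ipvs`; for the listing above, `TCP 10.97.59.25:80 rr` would report 2 real servers, one per timinglee replica.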

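## 4 Service Types in Detail

4.1 ClusterIP

Characteristics:

A ClusterIP Service is reachable only from inside the cluster; it provides health checking and automatic discovery for the Pods in the cluster.

Example: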

[root@k8s2 service]# vim myapp.yml

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee
  type: ClusterIP

Once the Service is created, the cluster DNS resolves its name:

[root@k8s-master ~]# dig timinglee.default.svc.cluster.local @10.96.0.10

; <<>> DiG 9.16.23-RH <<>> timinglee.default.svc.cluster.local @10.96.0.10

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 27827

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 057d9ff344fe9a3a (echoed)

;; QUESTION SECTION:

;timinglee.default.svc.cluster.local. IN A

;; ANSWER SECTION:

timinglee.default.svc.cluster.local. 30 IN A 10.97.59.25

;; Query time: 8 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Wed Sep 04 13:44:30 CST 2024

;; MSG SIZE rcvd: 127
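The name queried above follows the fixed pattern `<service>.<namespace>.svc.<cluster-domain>`. A minimal helper, assuming the kubeadm default cluster domain `cluster.local`; the function name `svc_fqdn` is hypothetical.

```bash
# Build the in-cluster DNS name for a Service:
# <service>.<namespace>.svc.<cluster-domain>
# The namespace defaults to "default" and the domain to "cluster.local";
# pass a third argument if the cluster uses a different domain.
svc_fqdn() {
  local svc=$1 ns=${2:-default} domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}
```

`dig "$(svc_fqdn timinglee)" @10.96.0.10` repeats the query above.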

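4.2 headless: a special ClusterIP mode

headless (headless Service)

A headless Service is not assigned a Cluster IP. kube-proxy does not handle it, and the platform does not load-balance or route for it. Inside the cluster, DNS resolves the Service name directly to the IPs of the backend Pods, so all distribution is done by DNS alone.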

[root@k8s-master ~]# vim timinglee.yaml

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee
  type: ClusterIP
  clusterIP: None

[root@k8s-master ~]# kubectl delete -f timinglee.yaml

[root@k8s-master ~]# kubectl apply -f timinglee.yaml

deployment.apps/timinglee created

#Test

[root@k8s-master ~]# kubectl get services timinglee

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

timinglee ClusterIP None 80/TCP 6s

[root@k8s-master ~]# dig timinglee.default.svc.cluster.local @10.96.0.10

; <<>> DiG 9.16.23-RH <<>> timinglee.default.svc.cluster.local @10.96.0.10

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 51527

;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 81f9c97b3f28b3b9 (echoed)

;; QUESTION SECTION:

;timinglee.default.svc.cluster.local. IN A

;; ANSWER SECTION:

timinglee.default.svc.cluster.local. 20 IN A 10.244.2.14 #resolves directly to a Pod

timinglee.default.svc.cluster.local. 20 IN A 10.244.1.18

;; Query time: 0 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Wed Sep 04 13:58:23 CST 2024

;; MSG SIZE rcvd: 178

#Start a busyboxplus pod for testing

[root@k8s-master ~]# kubectl run test --image busyboxplus -it

If you don't see a command prompt, try pressing enter.

/ # nslookup timinglee-service

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: timinglee-service

Address 1: 10.244.2.16 10-244-2-16.timinglee-service.default.svc.cluster.local

Address 2: 10.244.2.17 10-244-2-17.timinglee-service.default.svc.cluster.local

Address 3: 10.244.1.22 10-244-1-22.timinglee-service.default.svc.cluster.local

Address 4: 10.244.1.21 10-244-1-21.timinglee-service.default.svc.cluster.local

/ # curl timinglee-service

Hello MyApp | Version: v1 | Pod Name

/ # curl timinglee-service/hostname.html

timinglee-c56f584cf-b8t6m
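In the `nslookup` output above, each Pod behind the headless Service appears under a name built from its IP with the dots replaced by dashes, followed by the Service's FQDN. A small sketch of that naming, assuming the default `cluster.local` domain and Pods that do not set their own `hostname`/`subdomain`; `headless_pod_name` is a hypothetical helper.

```bash
# Derive the per-Pod record name seen for headless Service endpoints:
# dots in the Pod IP become dashes, then the Service FQDN is appended.
headless_pod_name() {
  local ip=$1 svc=$2 ns=${3:-default}
  printf '%s.%s.%s.svc.cluster.local\n' "${ip//./-}" "$svc" "$ns"
}
```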

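4.3 NodePort

A port is opened on every node through IPVS, so hosts outside the cluster can reach the Pods at a node's external IP (for example the master's) and the NodePort: <node IP>:<nodePort>.

The access path is as follows:

Example: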

[root@k8s-master ~]# vim timinglee.yaml

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee-service
  name: timinglee-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee
  type: NodePort

[root@k8s-master ~]# kubectl apply -f timinglee.yaml

deployment.apps/timinglee created

service/timinglee-service created

[root@k8s-master ~]# kubectl get services timinglee-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

timinglee-service NodePort 10.98.60.22 80:31771/TCP 8

A NodePort binds a port on the cluster nodes, and each port maps to exactly one Service.

[root@k8s-master ~]# for i in {1..5}

> do

> curl 172.25.254.100:31771/hostname.html

> done

timinglee-c56f584cf-fjxdk

timinglee-c56f584cf-5m2z5

timinglee-c56f584cf-z2w4d

timinglee-c56f584cf-tt5g6

timinglee-c56f584cf-fjxdk

[root@k8s-master ~]# vim timinglee.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee-service
  name: timinglee-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 33333
  selector:
    app: timinglee
  type: NodePort

[root@k8s-master ~]# kubectl apply -f timinglee.yaml

deployment.apps/timinglee created

The Service "timinglee-service" is invalid: spec.ports[0].nodePort: Invalid value: 33333: provided port is not in the valid range. The range of valid ports is 30000-32767

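To use a port outside this range, add a custom NodePort range to the kube-apiserver command list in its static Pod manifest; kubelet restarts kube-apiserver automatically after the file is saved:

[root@k8s-master ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

- --service-node-port-range=30000-40000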

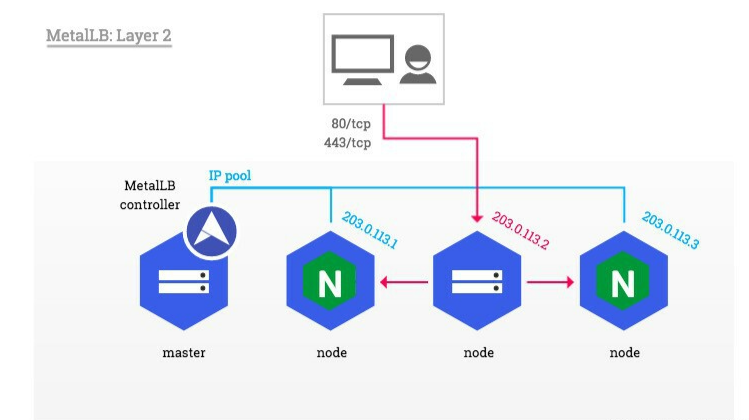
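The range check the API server applied above can be mimicked with a short function. This sketch defaults to the standard 30000-32767 range from the error message; pass a custom minimum and maximum to model a changed `--service-node-port-range`. The function name `nodeport_ok` is hypothetical.

```bash
# Return success if a nodePort value lies inside the allowed range.
# Defaults mirror the Kubernetes default range 30000-32767.
nodeport_ok() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  [[ $port =~ ^[0-9]+$ ]] || return 1   # reject non-numeric input
  (( port >= min && port <= max ))
}
```

`nodeport_ok 33333` fails with the default range and succeeds as `nodeport_ok 33333 30000 40000`.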

4.4 LoadBalancer

On a cloud platform, the provider allocates a VIP and makes it reachable; on bare-metal hosts, MetalLB is needed to allocate the IPs.

[root@k8s-master ~]# vim timinglee.yaml

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee-service
  name: timinglee-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee
  type: LoadBalancer

[root@k8s2 service]# kubectl apply -f myapp.yml

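By default no external IP can be allocated, so EXTERNAL-IP stays pending:

[root@k8s2 service]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4d1h

myapp LoadBalancer 10.107.23.134 <pending> 80:32537/TCP 4s

LoadBalancer mode is intended for cloud platforms; bare-metal environments need MetalLB installed to support it.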

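4.5 MetalLB

Official site: [Installation :: MetalLB, bare metal load-balancer for Kubernetes](https://metallb.universe.tf/installation/ "Installation :: MetalLB, bare metal load-balancer for Kubernetes")

What MetalLB does

Allocates VIPs for LoadBalancer Services

Deployment steps

1. Set IPVS mode (with kube-proxy in IPVS mode, MetalLB also requires strictARP: true)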

[root@k8s-master ~]# kubectl edit cm -n kube-system kube-proxy

apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  strictARP: true

[root@k8s-master ~]# kubectl -n kube-system get pods | awk '/kube-proxy/{system("kubectl -n kube-system delete pods "$1)}'

2. Download the deployment manifest

[root@k8s2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.13.12/config/manifests/metallb-native.yaml

3. Change the image addresses in the file to match the Harbor registry path

[root@k8s-master ~]# vim metallb-native.yaml

...

image: metallb/controller:v0.14.8

image: metallb/speaker:v0.14.8

4. Push the images to Harbor

[root@k8s-master ~]# docker pull quay.io/metallb/controller:v0.14.8

[root@k8s-master ~]# docker pull quay.io/metallb/speaker:v0.14.8

[root@k8s-master ~]# docker tag quay.io/metallb/speaker:v0.14.8 reg.timinglee.org/metallb/speaker:v0.14.8

[root@k8s-master ~]# docker tag quay.io/metallb/controller:v0.14.8 reg.timinglee.org/metallb/controller:v0.14.8

[root@k8s-master ~]# docker push reg.timinglee.org/metallb/speaker:v0.14.8

[root@k8s-master ~]# docker push reg.timinglee.org/metallb/controller:v0.14.8
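The retagging above follows a mechanical rule: drop the upstream registry host, keep the repository path and tag, and prefix the private registry. A sketch of that rule, assuming Docker's usual heuristic that the first path component is a registry host when it contains a dot or a colon (the special name `localhost` is not handled here). `harbor_name` is a hypothetical helper; it also strips an `@sha256:` digest, as the digest-pinned ingress-nginx images require.

```bash
# Compute the private-registry name for an upstream image reference.
harbor_name() {
  local src=$1 registry=$2
  local ref=${src%%@*}          # strip "@sha256:..." if present
  local first=${ref%%/*}        # first path component
  # Treat the first component as a registry host if it contains "." or ":"
  if [[ $ref == */* && ( $first == *.* || $first == *:* ) ]]; then
    ref=${ref#*/}
  fi
  printf '%s/%s\n' "$registry" "$ref"
}
```

For example, `docker tag "$img" "$(harbor_name "$img" reg.timinglee.org)"` yields the same target names as the commands above.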

Deploy MetalLB

[root@k8s2 metallb]# kubectl apply -f metallb-native.yaml

[root@k8s-master ~]# kubectl -n metallb-system get pods

NAME READY STATUS RESTARTS AGE

controller-65957f77c8-25nrw 1/1 Running 0 30s

speaker-p94xq 1/1 Running 0 29s

speaker-qmpct 1/1 Running 0 29s

speaker-xh4zh 1/1 Running 0 30s

Configure the address range to allocate

[root@k8s-master ~]# vim configmap.yml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool                 #address pool name
  namespace: metallb-system
spec:
  addresses:
  - 172.25.254.50-172.25.254.99    #change to your own local address range
---                                #two different kinds must be separated
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example
  namespace: metallb-system
spec:
  ipAddressPools:
  - first-pool                     #use the address pool

[root@k8s-master ~]# kubectl apply -f configmap.yml

ipaddresspool.metallb.io/first-pool created

l2advertisement.metallb.io/example created
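The pool above, `172.25.254.50-172.25.254.99`, gives MetalLB a fixed number of VIPs to hand out. A sketch that counts them by converting IPv4 addresses to integers; it only handles the `start-end` form (MetalLB also accepts CIDR notation), and `ip2int`/`pool_size` are hypothetical helpers.

```bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Number of addresses in an inclusive "start-end" range.
pool_size() {
  local start=${1%-*} end=${1#*-}
  echo $(( $(ip2int "$end") - $(ip2int "$start") + 1 ))
}
```

`pool_size 172.25.254.50-172.25.254.99` prints 50, so up to 50 LoadBalancer Services can each get their own address from `first-pool`.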

[root@k8s-master ~]# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 21h

timinglee-service LoadBalancer 10.109.36.123 172.25.254.50 80:31595/TCP 9m9s

#Access the service from outside the cluster through the allocated address

[root@reg ~]# curl 172.25.254.50

Hello MyApp | Version: v1 | Pod Name

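4.6 ExternalName

A Service of this type is not assigned an IP; instead, DNS returns a CNAME to a fixed domain name, which sidesteps the problem of changing IPs.

It is generally used when external services communicate with Pods, or when external services are migrated into Pods.

While an application is being migrated into the cluster, ExternalName is useful during the transition phase.

When resources outside the cluster are migrated into it, their IPs may change during the migration, but a domain name plus DNS resolution solves this problem neatly.

Example: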

[root@k8s-master ~]# vim timinglee.yaml

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee-service
  name: timinglee-service
spec:
  selector:
    app: timinglee
  type: ExternalName
  externalName: www.timinglee.org

[root@k8s-master ~]# kubectl apply -f timinglee.yaml

[root@k8s-master ~]# kubectl get services timinglee-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

timinglee-service ExternalName www.timinglee.org 2m58s

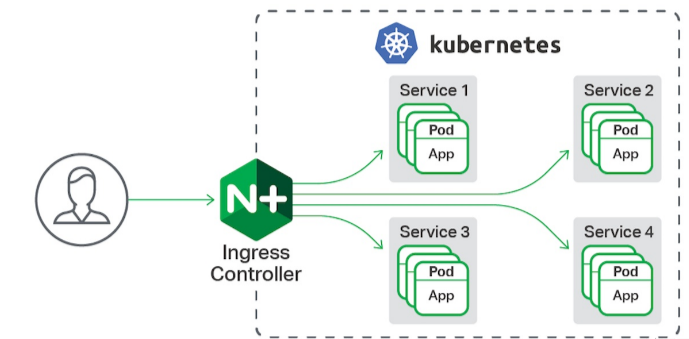

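## 5 Ingress-nginx

Official site:

[Installation Guide - Ingress-Nginx Controller](https://kubernetes.github.io/ingress-nginx/deploy/#bare-metal-clusters "Installation Guide - Ingress-Nginx Controller")

5.1 What ingress-nginx does

A global load-balancing service set up to proxy different backend Services; it supports layer 7.

An Ingress setup consists of two parts: the Ingress controller and the Ingress resources.

The Ingress Controller provides the proxying described by the Ingress objects you define.

Common reverse-proxy projects such as Nginx, HAProxy, Envoy and Traefik all maintain dedicated Ingress Controllers for Kubernetes.

5.2 Deploying ingress

5.2.1 Download the deployment manifest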

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.11.2/deploy/static/provider/baremetal/deploy.yaml

Push the images required by ingress to Harbor

[root@k8s-master ~]# docker tag registry.k8s.io/ingress-nginx/controller:v1.11.2@sha256:d5f8217feeac4887cb1ed21f27c2674e58be06bd8f5184cacea2a69abaf78dce reg.timinglee.org/ingress-nginx/controller:v1.11.2

[root@k8s-master ~]# docker tag registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.4.3@sha256:a320a50cc91bd15fd2d6fa6de58bd98c1bd64b9a6f926ce23a600d87043455a3 reg.timinglee.org/ingress-nginx/kube-webhook-certgen:v1.4.3

[root@k8s-master ~]# docker push reg.timinglee.org/ingress-nginx/controller:v1.11.2

[root@k8s-master ~]# docker push reg.timinglee.org/ingress-nginx/kube-webhook-certgen:v1.4.3

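5.2.2 Install ingress

Edit the image addresses in deploy.yaml (the numbers are line numbers in the file), then apply it:

[root@k8s-master ~]# vim deploy.yaml

445 image: ingress-nginx/controller:v1.11.2

546 image: ingress-nginx/kube-webhook-certgen:v1.4.3

599 image: ingress-nginx/kube-webhook-certgen:v1.4.3

[root@k8s-master ~]# kubectl apply -f deploy.yaml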

[root@k8s-master ~]# kubectl -n ingress-nginx get pods

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-ggqm6 0/1 Completed 0 82s

ingress-nginx-admission-patch-q4wp2 0/1 Completed 0 82s

ingress-nginx-controller-bb7d8f97c-g2h4p 1/1 Running 0 82s

[root@k8s-master ~]# kubectl -n ingress-nginx get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.103.33.148 80:34512/TCP,443:34727/TCP 108s

ingress-nginx-controller-admission ClusterIP 10.103.183.64 443/TCP 108s

#Change the controller Service type to LoadBalancer

[root@k8s-master ~]# kubectl -n ingress-nginx edit svc ingress-nginx-controller

49 type: LoadBalancer

[root@k8s-master ~]# kubectl -n ingress-nginx get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.103.33.148 172.25.254.50 80:34512/TCP,443:34727/TCP 4m43s

ingress-nginx-controller-admission ClusterIP 10.103.183.64 443/TCP 4m43s

5.2.3 Test ingress

#Generate the YAML file

[root@k8s-master ~]# kubectl create ingress webcluster --rule '*/=timinglee-svc:80' --dry-run=client -o yaml > timinglee-ingress.yml

[root@k8s-master ~]# vim timinglee-ingress.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - backend:
          service:
            name: timinglee-svc
            port:
              number: 80
        path: /
        pathType: Prefix

#pathType values: Exact (exact match), Prefix (prefix match), ImplementationSpecific (matching left to the controller). Regular-expression matching is not a pathType of its own; ingress-nginx enables it with the use-regex annotation (see 5.3.5)

#Create the Ingress

[root@k8s-master ~]# kubectl apply -f timinglee-ingress.yml

ingress.networking.k8s.io/webserver created

[root@k8s-master ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

test-ingress nginx * 172.25.254.10 80 8m30s

[root@reg ~]# for n in {1..5}; do curl 172.25.254.50/hostname.html; done

timinglee-c56f584cf-8jhn6

timinglee-c56f584cf-8cwfm

timinglee-c56f584cf-8jhn6

timinglee-c56f584cf-8cwfm

timinglee-c56f584cf-8jhn6
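Whether a request reaches a backend depends on the rule's `pathType`. The sketch below models the two portable types as the Kubernetes documentation describes them: `Exact` compares the whole path, and `Prefix` compares `/`-separated elements, so `/v1` matches `/v1/index.html` but not `/v1abc`. `ImplementationSpecific` is left to the controller and is not modelled; `path_matches` is a hypothetical helper.

```bash
# Does request path $3 match rule path $2 under pathType $1?
path_matches() {
  local type=$1 rule=$2 req=$3
  case $type in
    Exact)
      [[ $req == "$rule" ]] ;;
    Prefix)
      rule=${rule%/}        # a trailing slash on the rule is ignored
      # "/" (empty after trimming) matches everything; otherwise the match
      # must end on a path-element boundary
      [[ -z $rule || $req == "$rule" || $req == "$rule"/* ]] ;;
    *)
      return 2 ;;           # ImplementationSpecific: controller-defined
  esac
}
```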

5.3 Advanced ingress usage

5.3.1 Path-based access

1. Create the test controllers myapp-v1 and myapp-v2

[root@k8s-master app]# kubectl create deployment myapp-v1 --image myapp:v1 --dry-run=client -o yaml > myapp-v1.yaml

[root@k8s-master app]# kubectl create deployment myapp-v2 --image myapp:v2 --dry-run=client -o yaml > myapp-v2.yaml

[root@k8s-master app]# vim myapp-v1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myapp-v1
  name: myapp-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp-v1
  strategy: {}
  template:
    metadata:
      labels:
        app: myapp-v1
    spec:
      containers:
      - image: myapp:v1
        name: myapp
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myapp-v1
  name: myapp-v1
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: myapp-v1

[root@k8s-master app]# vim myapp-v2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myapp-v2
  name: myapp-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp-v2
  template:
    metadata:
      labels:
        app: myapp-v2
    spec:
      containers:
      - image: myapp:v2
        name: myapp
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myapp-v2
  name: myapp-v2
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: myapp-v2

[root@k8s-master app]# kubectl expose deployment myapp-v1 --port 80 --target-port 80 --dry-run=client -o yaml >> myapp-v1.yaml

[root@k8s-master app]# kubectl expose deployment myapp-v2 --port 80 --target-port 80 --dry-run=client -o yaml >> myapp-v2.yaml

[root@k8s-master app]# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 29h

myapp-v1 ClusterIP 10.104.84.65 80/TCP 13s

myapp-v2 ClusterIP 10.105.246.219 80/TCP 7s

2. Create the ingress YAML

[root@k8s-master app]# vim ingress1.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /   #whatever follows the matched path, the request is rewritten to /
  name: ingress1
spec:
  ingressClassName: nginx
  rules:
  - host: www.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /v1
        pathType: Prefix
      - backend:
          service:
            name: myapp-v2
            port:
              number: 80
        path: /v2
        pathType: Prefix

#Test:

[root@reg ~]# echo 172.25.254.50 www.timinglee.org >> /etc/hosts

[root@reg ~]# curl www.timinglee.org/v1

Hello MyApp | Version: v1 | Pod Name

[root@reg ~]# curl www.timinglee.org/v2

Hello MyApp | Version: v2 | Pod Name

#What nginx.ingress.kubernetes.io/rewrite-target: / does

[root@reg ~]# curl www.timinglee.org/v2/aaaa

Hello MyApp | Version: v2 | Pod Name
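The behaviour seen in these tests can be summarized in a small sketch of ingress1's rules: the `/v1` or `/v2` prefix selects the Service, and because `rewrite-target: /` has no capture group, nginx replaces the entire path with `/` before proxying. That is why `/v2/aaaa` still returns the v2 index page. `route_ingress1` is a hypothetical helper that mirrors only this Ingress and uses Kubernetes Prefix semantics; since `rewrite-target` makes ingress-nginx use regex locations, unusual paths such as `/v1abc` may be treated differently by the real controller.

```bash
# Map a request path on www.timinglee.org to "<service> <path sent upstream>".
route_ingress1() {
  local path=$1
  case $path in
    /v1|/v1/*) echo "myapp-v1 /" ;;                 # rewrite-target: / drops the rest
    /v2|/v2/*) echo "myapp-v2 /" ;;
    *)         echo "default-backend $path" ;;      # no rule matched (404)
  esac
}
```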

5.3.2 Host-based access

#Set up name resolution on the test host

[root@reg ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.254.250 reg.timinglee.org

172.25.254.50 www.timinglee.org myappv1.timinglee.org myappv2.timinglee.org

#Create the host-based YAML file

[root@k8s-master app]# vim ingress2.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
  name: ingress2
spec:
  ingressClassName: nginx
  rules:
  - host: myappv1.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: myappv2.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v2
            port:
              number: 80
        path: /
        pathType: Prefix

------------------------------------------------

Copyright notice: This is an original article by the blogger, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.

Original link: https://blog.csdn.net/K1487994142/article/details/142927077

#Create the ingress from the file

[root@k8s-master app]# kubectl apply -f ingress2.yml

ingress.networking.k8s.io/ingress2 created

[root@k8s-master app]# kubectl describe ingress ingress2

Name: ingress2

Labels:

Namespace: default

Address:

Ingress Class: nginx

Default backend:

Rules:

Host Path Backends

---- ---- --------

myappv1.timinglee.org

/ myapp-v1:80 (10.244.2.31:80)

myappv2.timinglee.org

/ myapp-v2:80 (10.244.2.32:80)

Annotations: nginx.ingress.kubernetes.io/rewrite-target: /

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 21s nginx-ingress-controller Scheduled for sync

#Test from the test host

[root@reg ~]# curl www.timinglee.org/v1

Hello MyApp | Version: v1 | Pod Name

[root@reg ~]# curl www.timinglee.org/v2

Hello MyApp | Version: v2 | Pod Name
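With ingress2, the backend is chosen by the `Host` header rather than the path, so exercising these rules requires requests for `myappv1.timinglee.org` or `myappv2.timinglee.org` (for example `curl myappv1.timinglee.org`). A sketch of that dispatch for this one Ingress; `route_ingress2` is a hypothetical helper, and `no-match` stands for a host that ingress2 does not define.

```bash
# Map a Host header value to the backend Service defined by ingress2.
route_ingress2() {
  local host=${1%%:*}          # ignore an optional :port suffix
  case ${host,,} in            # host names are case-insensitive
    myappv1.timinglee.org) echo myapp-v1 ;;
    myappv2.timinglee.org) echo myapp-v2 ;;
    *) echo no-match ;;
  esac
}
```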

5.3.3 Set up TLS encryption

#Create a self-signed certificate

[root@k8s-master app]# openssl req -newkey rsa:2048 -nodes -keyout tls.key -x509 -days 365 -subj "/CN=nginxsvc/O=nginxsvc" -out tls.crt

#Create a Secret of type TLS

[root@k8s-master app]# kubectl create secret tls web-tls-secret --key tls.key --cert tls.crt

secret/web-tls-secret created

[root@k8s-master app]# kubectl get secrets

NAME TYPE DATA AGE

web-tls-secret kubernetes.io/tls 2 6s
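The Secret created by `kubectl create secret tls` is an ordinary object of type `kubernetes.io/tls` holding two base64-encoded data keys, `tls.crt` and `tls.key`, which is why DATA shows 2. The sketch below prints an equivalent manifest. It assumes GNU coreutils `base64` (for `-w0`) and skips the certificate/key validation kubectl performs; `tls_secret_yaml` is a hypothetical helper.

```bash
# Print a kubernetes.io/tls Secret manifest built from a cert and key file.
tls_secret_yaml() {
  local name=$1 crt=$2 key=$3
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $name
type: kubernetes.io/tls
data:
  tls.crt: $(base64 -w0 < "$crt")
  tls.key: $(base64 -w0 < "$key")
EOF
}
```

`tls_secret_yaml web-tls-secret tls.crt tls.key | kubectl apply -f -` would create the same kind of Secret.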

#Create the ingress3 YAML file using TLS

[root@k8s-master app]# vim ingress3.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
  name: ingress3
spec:
  tls:
  - hosts:
    - myapp-tls.timinglee.org
    secretName: web-tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp-tls.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /
        pathType: Prefix

#Test (-k skips certificate verification because the certificate is self-signed)

[root@reg ~]# curl -k https://myapp-tls.timinglee.org

Hello MyApp | Version: v1 | Pod Name

5.3.4 Set up basic auth

#Create the auth file

[root@k8s-master app]# dnf install httpd-tools -y

[root@k8s-master app]# htpasswd -cm auth lee

New password:

Re-type new password:

Adding password for user lee

[root@k8s-master app]# cat auth

lee:$apr1$BohBRkkI$hZzRDfpdtNzue98bFgcU10

#Create the auth Secret (ingress-nginx reads the key named auth, which is why the file is called auth)

[root@k8s-master app]# kubectl create secret generic auth-web --from-file auth

[root@k8s-master app]# kubectl describe secrets auth-web

Name: auth-web

Namespace: default

Labels:

Annotations:

Type: Opaque

Data

====

auth: 42 bytes

#Create the ingress4 YAML file with user authentication

[root@k8s-master app]# vim ingress4.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: auth-web
    nginx.ingress.kubernetes.io/auth-realm: "Please input username and password"
  name: ingress4
spec:
  tls:
  - hosts:
    - myapp-tls.timinglee.org
    secretName: web-tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp-tls.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /
        pathType: Prefix

#Create ingress4

[root@k8s-master app]# kubectl apply -f ingress4.yml

ingress.networking.k8s.io/ingress4 created

[root@k8s-master app]# kubectl describe ingress ingress4

Name: ingress4

Labels:

Namespace: default

Address:

Ingress Class: nginx

Default backend:

TLS:

web-tls-secret terminates myapp-tls.timinglee.org

Rules:

Host Path Backends

---- ---- --------

myapp-tls.timinglee.org

/ myapp-v1:80 (10.244.2.31:80)

Annotations: nginx.ingress.kubernetes.io/auth-realm: Please input username and password

nginx.ingress.kubernetes.io/auth-secret: auth-web

nginx.ingress.kubernetes.io/auth-type: basic

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 14s nginx-ingress-controller Scheduled for sync

#Test:

[root@reg ~]# curl -k https://myapp-tls.timinglee.org

Hello MyApp | Version: v1 | Pod Name


[root@reg ~]# curl -k https://myapp-tls.timinglee.org

401 Authorization Required

401 Authorization Required

nginx

[root@reg ~]# curl -k https://myapp-tls.timinglee.org -ulee:lee

Hello MyApp | Version: v1 | Pod Name
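What `curl -u lee:lee` adds to the request is an HTTP Basic `Authorization` header: the string `user:password`, base64-encoded. The ingress compares it with the htpasswd entry stored in the `auth-web` Secret. Base64 is an encoding, not encryption, which is why this example keeps TLS in front of it. A sketch; `basic_auth_header` is a hypothetical helper.

```bash
# Build the Authorization header that curl -u user:password sends.
basic_auth_header() {
  printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64)"
}
```

`curl -k -H "$(basic_auth_header lee lee)" https://myapp-tls.timinglee.org` should behave like the `-ulee:lee` request above.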

5.3.5 Rewrite and redirects

#Redirect the default page to hostname.html (app-root)

[root@k8s-master app]# vim ingress5.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/app-root: /hostname.html
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: auth-web
    nginx.ingress.kubernetes.io/auth-realm: "Please input username and password"
  name: ingress5
spec:
  tls:
  - hosts:
    - myapp-tls.timinglee.org
    secretName: web-tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp-tls.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f ingress5.yml

ingress.networking.k8s.io/ingress5 created

[root@k8s-master app]# kubectl describe ingress ingress5

Name: ingress5

Labels:

Namespace: default

Address: 172.25.254.10

Ingress Class: nginx

Default backend:

TLS:

web-tls-secret terminates myapp-tls.timinglee.org

Rules:

Host Path Backends

---- ---- --------

myapp-tls.timinglee.org

/ myapp-v1:80 (10.244.2.31:80)

Annotations: nginx.ingress.kubernetes.io/app-root: /hostname.html

nginx.ingress.kubernetes.io/auth-realm: Please input username and password

nginx.ingress.kubernetes.io/auth-secret: auth-web

nginx.ingress.kubernetes.io/auth-type: basic

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 2m16s (x2 over 2m54s) nginx-ingress-controller Scheduled for sync

#Test:

[root@reg ~]# curl -Lk https://myapp-tls.timinglee.org -ulee:lee

myapp-v1-7479d6c54d-j9xc6

[root@reg ~]# curl -Lk https://myapp-tls.timinglee.org/lee/hostname.html -ulee:lee

404 Not Found

404 Not Found

nginx/1.12.2

#Fix the redirect path problem: match /lee with a regular expression and rewrite to the second capture group

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: auth-web
    nginx.ingress.kubernetes.io/auth-realm: "Please input username and password"
  name: ingress6
spec:
  tls:
  - hosts:
    - myapp-tls.timinglee.org
    secretName: web-tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp-tls.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /
        pathType: Prefix
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /lee(/|$)(.*)          #regex match: /lee/, /lee/abc
        pathType: ImplementationSpecific

#Test

[root@reg ~]# curl -Lk https://myapp-tls.timinglee.org/lee/hostname.html -ulee:lee

myapp-v1-7479d6c54d-j9xc6

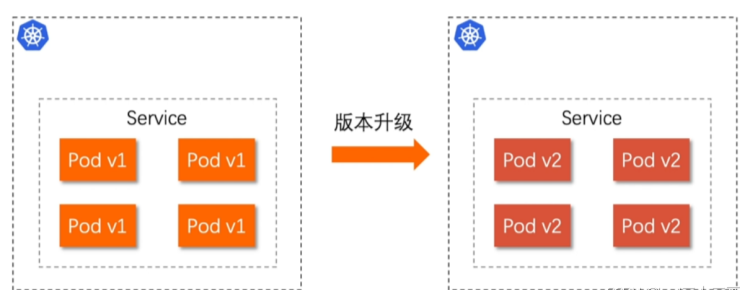
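The fix works because `use-regex` lets the path `/lee(/|$)(.*)` capture everything after `/lee/` in group 2, and `rewrite-target: /$2` sends only that part to the backend. A sketch reproducing the rewrite with bash's regex engine; it models only the `/lee` rule, and `rewrite_lee` is a hypothetical helper (the real rewrite happens inside nginx).

```bash
# Apply ingress6's /lee rule: rewrite-target /$2 keeps only capture group 2.
rewrite_lee() {
  local path=$1
  local re='^/lee(/|$)(.*)'     # same pattern as the Ingress path, anchored
  if [[ $path =~ $re ]]; then
    printf '/%s\n' "${BASH_REMATCH[2]}"
  else
    printf '%s\n' "$path"       # paths outside this rule are left unchanged
  fi
}
```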

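## 6 Canary Release

6.1 What is a canary release

A canary release, also called a gray (grayscale) release, is a software release strategy.

Its main purpose is to test and validate a new version on a small subset of users or servers before rolling it out to the whole production environment, which limits the impact on the overall system if the new version introduces serious problems.

It is a way of releasing Pods. A canary release adds new Pods before deleting old ones, so the total number of Pods never drops below the desired count. After part of the Pods have been updated, the update is paused; only once the new Pod version is confirmed to run correctly are the remaining Pods updated.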

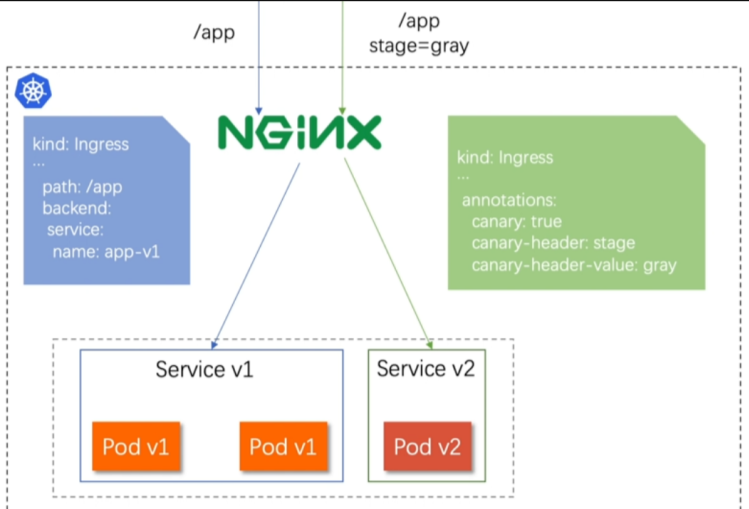

6.2 Canary release methods

Priority: header > cookie > weight

Of these, header and weight are the most commonly used.
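The precedence above can be written as a decision function. This sketch follows the order described in the ingress-nginx canary documentation (header, then cookie, then weight). The header and cookie names come from the `canary-by-header` and `canary-by-cookie` annotations, so only their values are passed in; `pick_backend` and its argument order are hypothetical.

```bash
# Canary precedence sketch (hypothetical helper):
#   $1 value of the canary header sent by the client ("" if absent)
#   $2 canary-by-header-value ("" if the annotation is not set)
#   $3 value of the canary cookie sent by the client ("" if absent)
#   $4 roll: a number in 0..canary-weight-total-1 drawn for this request
#   $5 canary-weight
pick_backend() {
  local hdr=$1 want=$2 cookie=$3 roll=$4 weight=${5:-0}
  # 1) Header: exact match on the configured value, or always/never when
  #    no value is configured; any other value falls through.
  if [[ -n $want ]]; then
    if [[ $hdr == "$want" ]]; then echo canary; return; fi
  else
    if [[ $hdr == always ]]; then echo canary; return; fi
    if [[ $hdr == never ]]; then echo stable; return; fi
  fi
  # 2) Cookie: only "always" and "never" are meaningful.
  if [[ $cookie == always ]]; then echo canary; return; fi
  if [[ $cookie == never ]]; then echo stable; return; fi
  # 3) Weight: rolls below canary-weight go to the canary.
  if (( roll < weight )); then echo canary; else echo stable; fi
}
```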

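6.2.1 Header-based (HTTP header) canary

Extended through annotations

Create a canary ingress and configure the canary header key and value

After the canary traffic has been verified, switch the main ingress to the new version

Previously, upgrades were done as rolling updates through the controller, whose default step is 25% of the Pods. Routing by header makes the upgrade smoother: only requests carrying the chosen key and value reach the new version, so it can be checked for problems first.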
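The 25% mentioned above refers to a Deployment's default RollingUpdate settings, `maxSurge` and `maxUnavailable`. Kubernetes rounds the surge up and the unavailable count down when converting a percentage to Pods, which the sketch below reproduces; `rolling_limits` is a hypothetical helper.

```bash
# Convert a RollingUpdate percentage (default 25%) into Pod counts.
rolling_limits() {
  local replicas=$1 pct=${2:-25}
  local surge=$(( (replicas * pct + 99) / 100 ))   # maxSurge rounds up
  local unavailable=$(( replicas * pct / 100 ))    # maxUnavailable rounds down
  echo "maxSurge=$surge maxUnavailable=$unavailable"
}
```

With 2 replicas this gives `maxSurge=1 maxUnavailable=0`: one extra Pod is added before an old one is removed, matching the add-then-delete behaviour described in 6.1.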

Example:

#Create the ingress for version 1

[root@k8s-master app]# vim ingress7.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
  name: myapp-v1-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v1
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl describe ingress myapp-v1-ingress

Name: myapp-v1-ingress

Labels:

Namespace: default

Address: 172.25.254.10

Ingress Class: nginx

Default backend:

Rules:

Host Path Backends

---- ---- --------

myapp.timinglee.org

/ myapp-v1:80 (10.244.2.31:80)

Annotations:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 44s (x2 over 73s) nginx-ingress-controller Scheduled for sync

#Create the header-based canary ingress

[root@k8s-master app]# vim ingress8.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: version
    nginx.ingress.kubernetes.io/canary-by-header-value: "2"   #annotation values must be strings, so the number is quoted
  name: myapp-v2-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v2
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f ingress8.yml

ingress.networking.k8s.io/myapp-v2-ingress created

[root@k8s-master app]# kubectl describe ingress myapp-v2-ingress

Name: myapp-v2-ingress

Labels:

Namespace: default

Address:

Ingress Class: nginx

Default backend:

Rules:

Host Path Backends

---- ---- --------

myapp.timinglee.org

/ myapp-v2:80 (10.244.2.32:80)

Annotations: nginx.ingress.kubernetes.io/canary: true

nginx.ingress.kubernetes.io/canary-by-header: version

nginx.ingress.kubernetes.io/canary-by-header-value: 2

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 21s nginx-ingress-controller Scheduled for sync

#Test:

[root@reg ~]# curl myapp.timinglee.org

Hello MyApp | Version: v1 | Pod Name

[root@reg ~]# curl -H "version: 2" myapp.timinglee.org

Hello MyApp | Version: v2 | Pod Name

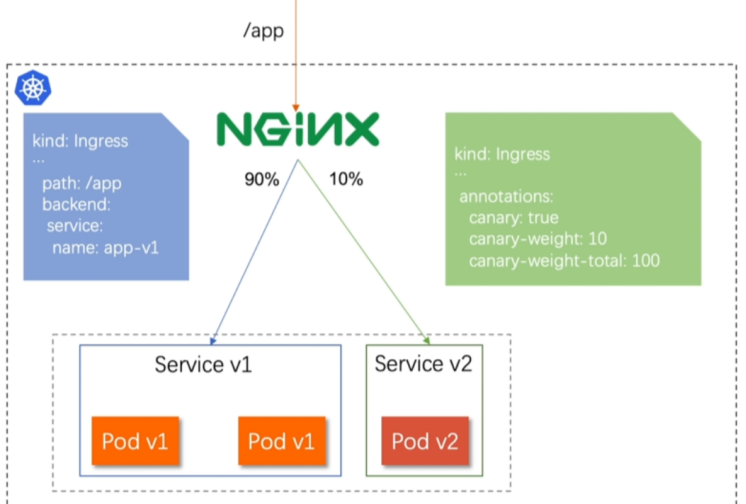

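##### 6.2.2 Weight-based canary release

* Extended through annotations

* Create a canary ingress and configure the canary weight and the total weight

* After the canary traffic has been verified, switch the main ingress to the new version

Example:

#Weight-based canary release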

[root@k8s-master app]# vim ingress8.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "10"   #change the weight value here
    nginx.ingress.kubernetes.io/canary-weight-total: "100"
  name: myapp-v2-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.timinglee.org
    http:
      paths:
      - backend:
          service:
            name: myapp-v2
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f ingress8.yml

ingress.networking.k8s.io/myapp-v2-ingress created

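#Test:

[root@reg ~]# vim check_ingress.sh

#!/bin/bash
# Send 100 requests and count how many were answered by v1 and by v2
v1=0
v2=0
for (( i=0; i<100; i++ ))
do
    # grep -c prints 1 when the reply comes from v1, otherwise 0
    response=$(curl -s myapp.timinglee.org | grep -c v1)
    v1=$(( v1 + response ))
    v2=$(( v2 + 1 - response ))
done
echo "v1:$v1, v2:$v2"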

[root@reg ~]# sh check_ingress.sh

v1:90, v2:10

#After changing the weight, run the test again to observe the change
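The 90/10 split above is statistical: each request is sent to the canary with probability `canary-weight / canary-weight-total`. A simulation of that behaviour using bash's `$RANDOM`; `simulate_canary` is a hypothetical helper, and its counts vary between runs just as the real `check_ingress.sh` results do.

```bash
# Simulate n requests split by canary weight; prints "v1:<n1>, v2:<n2>".
simulate_canary() {
  local n=${1:-100} weight=${2:-10} total=${3:-100} v1=0 v2=0 i
  for (( i = 0; i < n; i++ )); do
    if (( $RANDOM % total < weight )); then
      v2=$(( v2 + 1 ))        # request routed to the canary (v2)
    else
      v1=$(( v1 + 1 ))        # request routed to the stable version (v1)
    fi
  done
  echo "v1:$v1, v2:$v2"
}
```

Raising the weight, for example `simulate_canary 100 50 100`, shifts the split toward an even division, which is what rerunning the test after editing `canary-weight` should show.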