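On Linux, capturing and processing video streams underpins many multimedia and computer-vision applications. In real-time surveillance, video analysis, robot vision, autonomous driving and similar fields, capturing and processing video efficiently is essential. Linux offers several tools and libraries for this, and V4L2 (Video4Linux2) and OpenCV are the two most commonly used.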

1 Pros and cons of the two approaches

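V4L2 is the standard video-capture interface on Linux. It gives low-level control over cameras and other video devices and lets you drive the hardware directly to capture a stream, which makes it efficient and flexible. OpenCV, by contrast, is a widely used computer-vision library that wraps low-level interfaces such as V4L2 behind a higher-level, easy-to-use API, which suits rapid development and prototyping. In short, V4L2 fits work that needs fine-grained, low-level control, while OpenCV fits projects where development speed matters most.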

V4L2 is more complex to use than OpenCV, because you have to control and configure the device by hand, but it uses noticeably less CPU (see Section 4).

2 Opening the camera with V4L2

2.1 Listing the available cameras

cmd

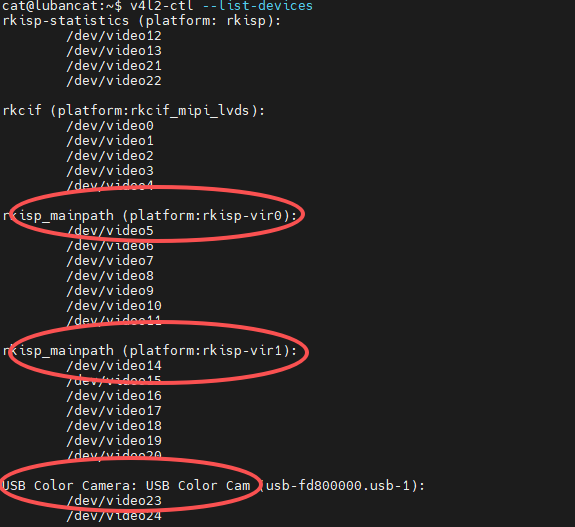

v4l2-ctl --list-devices

As the figure shows, for this demonstration I connected two ov8858 cameras and one ordinary USB camera.

For how to enable the ov8858 in the device tree, see: ov8858
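`v4l2-ctl` obtains the card and driver names through the `VIDIOC_QUERYCAP` ioctl. The sketch below does roughly the same from C++, assuming only the standard V4L2 headers: it scans `/dev` for `video*` nodes and queries each one. `VideoNode` and `ListVideoNodes` are hypothetical names used for illustration. Unlike `v4l2-ctl --list-devices`, it does not group nodes by physical device, and it silently skips nodes it cannot open (for example because of permissions).

```cpp
#include <dirent.h>
#include <fcntl.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/videodev2.h>

#include <algorithm>
#include <cassert>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical record for one /dev/video* node.
struct VideoNode {
    std::string path;    // e.g. /dev/video0
    std::string card;    // v4l2_capability::card, e.g. the camera name
    std::string driver;  // v4l2_capability::driver, e.g. uvcvideo
};

// Scan /dev for video* nodes and query each with VIDIOC_QUERYCAP.
// Nodes that cannot be opened or do not answer QUERYCAP are skipped.
std::vector<VideoNode> ListVideoNodes() {
    std::vector<VideoNode> nodes;
    DIR* dir = opendir("/dev");
    if (dir == nullptr) return nodes;
    while (dirent* ent = readdir(dir)) {
        if (std::strncmp(ent->d_name, "video", 5) != 0) continue;
        std::string path = std::string("/dev/") + ent->d_name;
        int fd = open(path.c_str(), O_RDWR | O_NONBLOCK);
        if (fd < 0) continue;
        v4l2_capability cap;
        std::memset(&cap, 0, sizeof(cap));
        if (ioctl(fd, VIDIOC_QUERYCAP, &cap) == 0) {
            nodes.push_back({path,
                             reinterpret_cast<const char*>(cap.card),
                             reinterpret_cast<const char*>(cap.driver)});
        }
        close(fd);
    }
    closedir(dir);
    // Plain string order, so /dev/video10 sorts before /dev/video2.
    std::sort(nodes.begin(), nodes.end(),
              [](const VideoNode& a, const VideoNode& b) { return a.path < b.path; });
    return nodes;
}
```

A loop such as `for (const auto& n : ListVideoNodes()) printf("%s  %s (%s)\n", n.path.c_str(), n.card.c_str(), n.driver.c_str());` prints one line per node; on a machine without cameras the list is simply empty.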

2.2 Checking the camera type

cmd

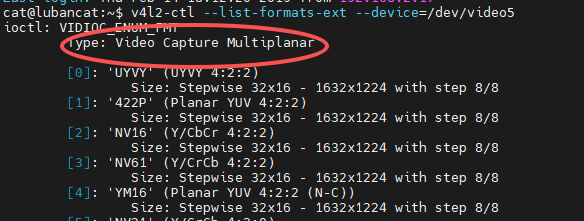

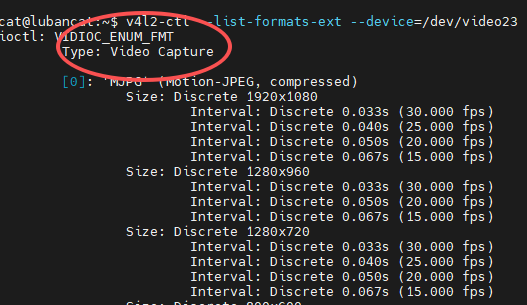

v4l2-ctl --list-formats-ext --device=/dev/video5

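As the figures show, the two cameras are of different types: the USB camera is "Video Capture", while the ov8858 is "Video Capture Multiplanar". This matters because the code below has to use a different buffer type for each (`V4L2_BUF_TYPE_VIDEO_CAPTURE` versus `V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE`).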
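The same distinction can be read in code from the capability bits returned by `VIDIOC_QUERYCAP`. A minimal sketch, with `PickCaptureBufType` as a hypothetical helper name: it maps the capability bits to the buffer type that later goes into `VIDIOC_S_FMT`, `VIDIOC_REQBUFS` and `VIDIOC_STREAMON`. It prefers `device_caps`, which describes this particular node, when the driver sets `V4L2_CAP_DEVICE_CAPS`, and it prefers multiplanar when both bits are present (an assumption; such devices are uncommon).

```cpp
#include <linux/videodev2.h>
#include <cassert>
#include <cstdint>

// Hypothetical helper: choose the capture buffer type from QUERYCAP results.
// Returns 0 if the node supports neither capture variant.
uint32_t PickCaptureBufType(const v4l2_capability& cap) {
    // device_caps is only valid when V4L2_CAP_DEVICE_CAPS is set.
    uint32_t caps = (cap.capabilities & V4L2_CAP_DEVICE_CAPS) ? cap.device_caps
                                                             : cap.capabilities;
    if (caps & V4L2_CAP_VIDEO_CAPTURE_MPLANE)
        return V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;  // "Video Capture Multiplanar"
    if (caps & V4L2_CAP_VIDEO_CAPTURE)
        return V4L2_BUF_TYPE_VIDEO_CAPTURE;         // "Video Capture"
    return 0;
}
```

With this, the `camType` value used later could be derived from the device instead of being set by hand.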

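The entries listed under each camera, such as UYVY, 422P and MJPG, are the pixel formats it supports. Note that sizes such as 1632x1224 and 1920x1080 are the maximum supported resolution, not the only one.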
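The format names are FourCC codes that the `v4l2_fourcc` macro packs into a 32-bit integer, and the sizes come from `VIDIOC_ENUM_FRAMESIZES`, which reports either a list of discrete sizes or a single stepwise range. The sketch below (all helper names are hypothetical) decodes a FourCC back into its four characters, prints the sizes for one format on an already opened file descriptor, and checks whether a requested size lies on a stepwise range.

```cpp
#include <linux/videodev2.h>
#include <sys/ioctl.h>
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <cstring>
#include <string>

// Decode a V4L2 pixel-format code into the four characters v4l2-ctl shows
// (e.g. "MJPG", "UYVY", "422P"). v4l2_fourcc(a, b, c, d) puts a in the lowest byte.
std::string FourccToString(uint32_t fourcc) {
    std::string s;
    for (int i = 0; i < 4; ++i)
        s.push_back(static_cast<char>((fourcc >> (8 * i)) & 0xFF));
    return s;
}

// Print the frame sizes an open device reports for one pixel format.
void PrintFrameSizes(int fd, uint32_t pixelFormat) {
    v4l2_frmsizeenum fs;
    std::memset(&fs, 0, sizeof(fs));
    fs.pixel_format = pixelFormat;
    for (fs.index = 0; ioctl(fd, VIDIOC_ENUM_FRAMESIZES, &fs) == 0; ++fs.index) {
        if (fs.type == V4L2_FRMSIZE_TYPE_DISCRETE) {
            std::printf("%s %ux%u\n", FourccToString(pixelFormat).c_str(),
                        fs.discrete.width, fs.discrete.height);
        } else {
            // Stepwise or continuous: a single entry (index 0) describes the range.
            const v4l2_frmsize_stepwise& r = fs.stepwise;
            std::printf("%s %ux%u to %ux%u, step %ux%u\n",
                        FourccToString(pixelFormat).c_str(), r.min_width, r.min_height,
                        r.max_width, r.max_height, r.step_width, r.step_height);
            break;
        }
    }
}

// True if w x h lies inside a stepwise range and on its step grid.
bool FitsStepwise(const v4l2_frmsize_stepwise& r, uint32_t w, uint32_t h) {
    if (w < r.min_width || w > r.max_width) return false;
    if (h < r.min_height || h > r.max_height) return false;
    // A step of 0 is treated as "no constraint"; continuous ranges report step 1.
    if (r.step_width != 0 && (w - r.min_width) % r.step_width != 0) return false;
    if (r.step_height != 0 && (h - r.min_height) % r.step_height != 0) return false;
    return true;
}
```

For a stepwise range from 32x32 to 1632x1224, the check accepts 640x480 and rejects 1920x1080, which is what "maximum, not the only resolution" means in practice.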

2.3 V4L2 test code

```cpp
// V4l2Util.hpp
#include <sys/types.h>
#include <sys/stat.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <sys/select.h>
#include <fcntl.h>
#include <unistd.h>
#include <poll.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <linux/types.h>
#include <linux/videodev2.h>

#include <opencv2/opencv.hpp>
#include <iostream>
#include <vector>

using namespace std;

typedef struct CameraParam__ {
    // camUrl, e.g. /dev/video0
    const char* camUrl;
    // camType: 1 = Video Capture, 2 = Video Capture Multiplanar
    int camType;
    // V4L2 pixel format, e.g. V4L2_PIX_FMT_MJPEG or V4L2_PIX_FMT_NV21
    unsigned int outDataFormat;
    unsigned int camWidth;
    unsigned int camHight;
} CameraParam;

class V4l2Util
{
public:
    V4l2Util() {}
    ~V4l2Util()
    {
        CloseCamera();
    }
    // The object owns an fd and mmap'ed buffers, so it must not be copied
    V4l2Util(const V4l2Util&) = delete;
    V4l2Util& operator=(const V4l2Util&) = delete;

public:
    bool InitCaptureParams(CameraParam camParam);
    // camType == 1: single-plane capture, frame decoded from MJPEG
    void GetCurFrame1(cv::Mat& curFrame);
    // camType == 2: multiplanar capture, frame converted from NV21
    void GetCurFrame2(cv::Mat& curFrame);

private:
    bool CheckCameraSupport(CameraParam camParam);
    bool MultiPlaneRequestBuffer();
    bool SinglePlaneRequestBuffer();
    void CloseCamera();

private:
    int m_nCaptureFd = -1;
    int m_nBufType = 0;
    int m_nCamType = 0;
    int m_nNumPlanes = 0;
    // camType 2: mapped address and length of every plane of every buffer
    void* m_pType2Buffer[VIDEO_MAX_FRAME][VIDEO_MAX_PLANES] = {};
    size_t m_nType2BufferLen[VIDEO_MAX_FRAME][VIDEO_MAX_PLANES] = {};
    // camType 1: the single mapped buffer
    void* m_pType1Buffer = nullptr;
    size_t m_nType1BufferLen = 0;
    v4l2_buffer m_pV4l2Buffer1 = {};
    bool m_bType1Queued = false;
    bool m_bStreaming = false;
    int m_nGotBuffCount = 0;
    unsigned int m_nOutDataType = 0;
    int m_nFrameWidth = 0;
    int m_nFrameHeight = 0;
};

void V4l2Util::GetCurFrame1(cv::Mat& curFrame)
{
    curFrame = cv::Mat();
    if (m_nCamType != 1)
    {
        cerr << "Please Select The Correct Function!!" << endl;
        return;
    }

    // Hand the buffer to the driver (unless it is still queued after a timeout)
    if (!m_bType1Queued)
    {
        if (ioctl(m_nCaptureFd, VIDIOC_QBUF, &m_pV4l2Buffer1) == -1)
        {
            cerr << "Failed to enqueue buffer!" << endl;
            return;
        }
        m_bType1Queued = true;
    }

    // Wait up to 2 s for the frame to be ready
    fd_set fds;
    FD_ZERO(&fds);
    FD_SET(m_nCaptureFd, &fds);
    timeval tv;
    tv.tv_sec = 2;
    tv.tv_usec = 0;
    int r = select(m_nCaptureFd + 1, &fds, NULL, NULL, &tv);
    if (r == -1)
    {
        cerr << "Failed to wait for data!" << endl;
        return;
    }
    else if (r == 0)
    {
        cerr << "Timeout waiting for data!" << endl;
        return;
    }

    // Take the filled buffer back from the driver
    if (ioctl(m_nCaptureFd, VIDIOC_DQBUF, &m_pV4l2Buffer1) == -1)
    {
        cerr << "Failed to dequeue buffer!" << endl;
        return;
    }
    m_bType1Queued = false;

    // MJPEG: the buffer holds one compressed JPEG image; decode it to BGR
    std::vector<uchar> bufVec((uchar*)m_pType1Buffer,
                              (uchar*)m_pType1Buffer + m_pV4l2Buffer1.bytesused);
    curFrame = cv::imdecode(bufVec, cv::IMREAD_COLOR);
}

void V4l2Util::GetCurFrame2(cv::Mat& curFrame)
{
    curFrame = cv::Mat();
    if (m_nCamType != 2)
    {
        cerr << "Please Select The Correct Function!!" << endl;
        return;
    }

    // Wait up to 2 s for a filled buffer
    struct pollfd fds[1];
    memset(fds, 0, sizeof(fds));
    fds[0].fd = m_nCaptureFd;
    fds[0].events = POLLIN;
    if (poll(fds, 1, 2000) != 1)
    {
        cerr << "Timeout or error waiting for data!" << endl;
        return;
    }

    struct v4l2_buffer buf;
    struct v4l2_plane planes[VIDEO_MAX_PLANES];
    memset(&buf, 0, sizeof(buf));
    memset(planes, 0, sizeof(planes));
    buf.type = m_nBufType;
    buf.memory = V4L2_MEMORY_MMAP;
    buf.m.planes = planes;
    buf.length = m_nNumPlanes;
    if (ioctl(m_nCaptureFd, VIDIOC_DQBUF, &buf) != 0)
    {
        printf("Unable to dequeue buffer\n");
        return;
    }

    if (m_nOutDataType == V4L2_PIX_FMT_NV21)
    {
        // NV21 = Y plane (height rows) + interleaved VU (height/2 rows); convert to BGR
        cv::Mat yuvImage(m_nFrameHeight + m_nFrameHeight / 2, m_nFrameWidth, CV_8UC1,
                         m_pType2Buffer[buf.index][0]);
        cv::cvtColor(yuvImage, curFrame, cv::COLOR_YUV2BGR_NV21);
    }

    // Re-queue the buffer only after its data has been copied out
    if (ioctl(m_nCaptureFd, VIDIOC_QBUF, &buf) != 0)
    {
        printf("Unable to queue buffer\n");
    }
}

bool V4l2Util::InitCaptureParams(CameraParam camParam)
{
    m_nCaptureFd = open(camParam.camUrl, O_RDWR);
    if (m_nCaptureFd < 0)
    {
        printf("can not open %s\n", camParam.camUrl);
        return false;
    }

    m_nCamType = camParam.camType;
    if (camParam.camType == 1)
    {
        // v4l2-ctl --list-formats-ext --device=/dev/video0  ->  Type: Video Capture
        m_nBufType = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    }
    else if (camParam.camType == 2)
    {
        // Type: Video Capture Multiplanar
        m_nBufType = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    }
    else
    {
        printf("Only camType 1 or 2 is supported, got: %d\n", camParam.camType);
        return false;
    }

    m_nFrameWidth = camParam.camWidth;
    m_nFrameHeight = camParam.camHight;
    m_nOutDataType = camParam.outDataFormat;

    // Check that the device supports the requested buffer type and format
    if (!CheckCameraSupport(camParam))
    {
        printf("CheckCameraSupport Failed!!!\n");
        return false;
    }

    // Set the capture format: single-plane uses fmt.pix, multiplanar uses fmt.pix_mp
    struct v4l2_format fmt;
    memset(&fmt, 0, sizeof(fmt));
    fmt.type = m_nBufType;
    if (m_nBufType == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE)
    {
        fmt.fmt.pix_mp.width = camParam.camWidth;
        fmt.fmt.pix_mp.height = camParam.camHight;
        fmt.fmt.pix_mp.pixelformat = m_nOutDataType;
        fmt.fmt.pix_mp.field = V4L2_FIELD_ANY;
    }
    else
    {
        fmt.fmt.pix.width = camParam.camWidth;
        fmt.fmt.pix.height = camParam.camHight;
        fmt.fmt.pix.pixelformat = m_nOutDataType;
        fmt.fmt.pix.field = V4L2_FIELD_ANY;
    }
    if (ioctl(m_nCaptureFd, VIDIOC_S_FMT, &fmt) != 0)
    {
        printf("can not set format\n");
        return false;
    }

    // The driver may adjust size and format; keep what it actually chose
    unsigned int gotFmt;
    if (m_nBufType == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE)
    {
        m_nNumPlanes = fmt.fmt.pix_mp.num_planes;
        m_nFrameWidth = fmt.fmt.pix_mp.width;
        m_nFrameHeight = fmt.fmt.pix_mp.height;
        gotFmt = fmt.fmt.pix_mp.pixelformat;
        printf("get m_nNumPlanes: %d\n", m_nNumPlanes);
    }
    else
    {
        m_nNumPlanes = 1;
        m_nFrameWidth = fmt.fmt.pix.width;
        m_nFrameHeight = fmt.fmt.pix.height;
        gotFmt = fmt.fmt.pix.pixelformat;
    }
    if (gotFmt != m_nOutDataType)
        printf("driver selected format 0x%08x instead of 0x%08x\n", gotFmt, m_nOutDataType);
    m_nOutDataType = gotFmt;
    printf("the final frame-size has been set: %d x %d\n", m_nFrameWidth, m_nFrameHeight);

    if (m_nCamType == 1)
    {
        // Single-plane: one buffer, queued per frame in GetCurFrame1
        if (!SinglePlaneRequestBuffer())
        {
            printf("SinglePlaneRequestBuffer Error\n");
            return false;
        }
    }
    else
    {
        if (!MultiPlaneRequestBuffer())
        {
            printf("MultiPlaneRequestBuffer Error\n");
            return false;
        }
        // Multiplanar: queue all buffers before streaming starts
        for (int i = 0; i < m_nGotBuffCount; ++i)
        {
            struct v4l2_buffer buf;
            struct v4l2_plane planes[VIDEO_MAX_PLANES];
            memset(&buf, 0, sizeof(buf));
            memset(planes, 0, sizeof(planes));
            buf.index = i;
            buf.type = m_nBufType;
            buf.memory = V4L2_MEMORY_MMAP;
            buf.m.planes = planes;
            buf.length = m_nNumPlanes;
            if (ioctl(m_nCaptureFd, VIDIOC_QBUF, &buf) != 0)
            {
                perror("Unable to queue buffer");
                return false;
            }
        }
    }

    // Start streaming
    if (ioctl(m_nCaptureFd, VIDIOC_STREAMON, &m_nBufType) != 0)
    {
        printf("Unable to start capture\n");
        return false;
    }
    m_bStreaming = true;
    return true;
}

bool V4l2Util::SinglePlaneRequestBuffer()
{
    // Request one MMAP buffer
    v4l2_requestbuffers req;
    memset(&req, 0, sizeof(req));
    req.count = 1;
    req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    req.memory = V4L2_MEMORY_MMAP;
    if (ioctl(m_nCaptureFd, VIDIOC_REQBUFS, &req) == -1 || req.count < 1)
    {
        cerr << "Failed to request buffers!" << endl;
        return false;  // the fd is closed by CloseCamera()
    }

    // Query buffer 0 and map it into user space
    memset(&m_pV4l2Buffer1, 0, sizeof(m_pV4l2Buffer1));
    m_pV4l2Buffer1.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    m_pV4l2Buffer1.memory = V4L2_MEMORY_MMAP;
    m_pV4l2Buffer1.index = 0;
    if (ioctl(m_nCaptureFd, VIDIOC_QUERYBUF, &m_pV4l2Buffer1) == -1)
    {
        cerr << "Failed to query buffer!" << endl;
        return false;
    }
    void* p = mmap(NULL, m_pV4l2Buffer1.length, PROT_READ | PROT_WRITE, MAP_SHARED,
                   m_nCaptureFd, m_pV4l2Buffer1.m.offset);
    if (p == MAP_FAILED)
    {
        cerr << "Failed to map buffer!" << endl;
        return false;
    }
    m_pType1Buffer = p;
    m_nType1BufferLen = m_pV4l2Buffer1.length;
    return true;
}

bool V4l2Util::MultiPlaneRequestBuffer()
{
    // Request MMAP buffers (the count can be tuned, e.g. up to 32)
    struct v4l2_requestbuffers rb;
    memset(&rb, 0, sizeof(rb));
    rb.count = 4;
    rb.type = m_nBufType;
    rb.memory = V4L2_MEMORY_MMAP;
    if (ioctl(m_nCaptureFd, VIDIOC_REQBUFS, &rb) != 0)
    {
        printf("can not request buffers\n");
        return false;
    }
    // The driver may grant a different number of buffers
    m_nGotBuffCount = rb.count > VIDEO_MAX_FRAME ? VIDEO_MAX_FRAME : (int)rb.count;

    for (int i = 0; i < m_nGotBuffCount; i++)
    {
        struct v4l2_buffer buf;
        struct v4l2_plane planes[VIDEO_MAX_PLANES];
        memset(&buf, 0, sizeof(buf));
        memset(planes, 0, sizeof(planes));
        buf.index = i;
        buf.type = m_nBufType;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.m.planes = planes;
        buf.length = m_nNumPlanes;
        if (ioctl(m_nCaptureFd, VIDIOC_QUERYBUF, &buf) != 0)
        {
            printf("can not query buffer\n");
            return false;
        }
        // Map every plane of buffer i
        for (int j = 0; j < m_nNumPlanes; j++)
        {
            void* p = mmap(NULL, buf.m.planes[j].length, PROT_READ | PROT_WRITE, MAP_SHARED,
                           m_nCaptureFd, buf.m.planes[j].m.mem_offset);
            if (p == MAP_FAILED)
            {
                printf("Unable to map buffer\n");
                return false;
            }
            m_pType2Buffer[i][j] = p;
            m_nType2BufferLen[i][j] = buf.m.planes[j].length;
        }
    }
    return true;
}

bool V4l2Util::CheckCameraSupport(CameraParam camParam)
{
    // Check that the device supports m_nBufType and streaming I/O
    struct v4l2_capability cap;
    memset(&cap, 0, sizeof(cap));
    if (ioctl(m_nCaptureFd, VIDIOC_QUERYCAP, &cap) != 0)
    {
        printf("can not get capability\n");
        return false;
    }
    if (m_nBufType == V4L2_BUF_TYPE_VIDEO_CAPTURE &&
        (cap.capabilities & V4L2_CAP_VIDEO_CAPTURE) == 0)
    {
        fprintf(stderr, "Error opening device: video capture not supported.\n");
        return false;
    }
    if (m_nBufType == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE &&
        (cap.capabilities & V4L2_CAP_VIDEO_CAPTURE_MPLANE) == 0)
    {
        fprintf(stderr, "Error opening device: video capture mplane not supported.\n");
        return false;
    }
    if (!(cap.capabilities & V4L2_CAP_STREAMING))
    {
        fprintf(stderr, "does not support streaming i/o\n");
        return false;
    }

    // Enumerate all formats and look for the requested one
    bool found = false;
    struct v4l2_fmtdesc fmtdesc;
    for (int fmt_index = 0; ; ++fmt_index)
    {
        memset(&fmtdesc, 0, sizeof(fmtdesc));
        fmtdesc.index = fmt_index;
        fmtdesc.type = m_nBufType;
        if (ioctl(m_nCaptureFd, VIDIOC_ENUM_FMT, &fmtdesc) != 0)
            break;  // no more formats
        printf("Format %d: %s (0x%08x)\n", fmt_index, fmtdesc.description, fmtdesc.pixelformat);
        if (fmtdesc.pixelformat == camParam.outDataFormat)
            found = true;
    }
    if (found)
        return true;
    if (m_nBufType == V4L2_BUF_TYPE_VIDEO_CAPTURE)
    {
        // VIDIOC_S_FMT adjusts unsupported values instead of failing,
        // so only warn; InitCaptureParams reports the format actually chosen
        printf("requested format not listed, the driver will pick one\n");
        return true;
    }
    printf("not found the suitable type!!\n");
    return false;
}

void V4l2Util::CloseCamera()
{
    if (m_nCaptureFd < 0)
        return;  // never opened, or already closed
    // Stop streaming; continue even on failure so resources are released
    if (m_bStreaming && ioctl(m_nCaptureFd, VIDIOC_STREAMOFF, &m_nBufType) != 0)
        printf("Unable to stop capture\n");
    m_bStreaming = false;
    // Unmap all buffers before closing the device
    if (m_pType1Buffer != nullptr)
    {
        munmap(m_pType1Buffer, m_nType1BufferLen);
        m_pType1Buffer = nullptr;
    }
    for (int i = 0; i < VIDEO_MAX_FRAME; ++i)
        for (int j = 0; j < VIDEO_MAX_PLANES; ++j)
            if (m_pType2Buffer[i][j] != nullptr)
            {
                munmap(m_pType2Buffer[i][j], m_nType2BufferLen[i][j]);
                m_pType2Buffer[i][j] = nullptr;
            }
    close(m_nCaptureFd);
    m_nCaptureFd = -1;
}
```

This code wraps both camera types, Video Capture and Video Capture Multiplanar: camType = 1 selects Video Capture (frames are read with GetCurFrame1 and decoded as JPEG), and camType = 2 selects Video Capture Multiplanar (frames are read with GetCurFrame2 and converted from NV21).

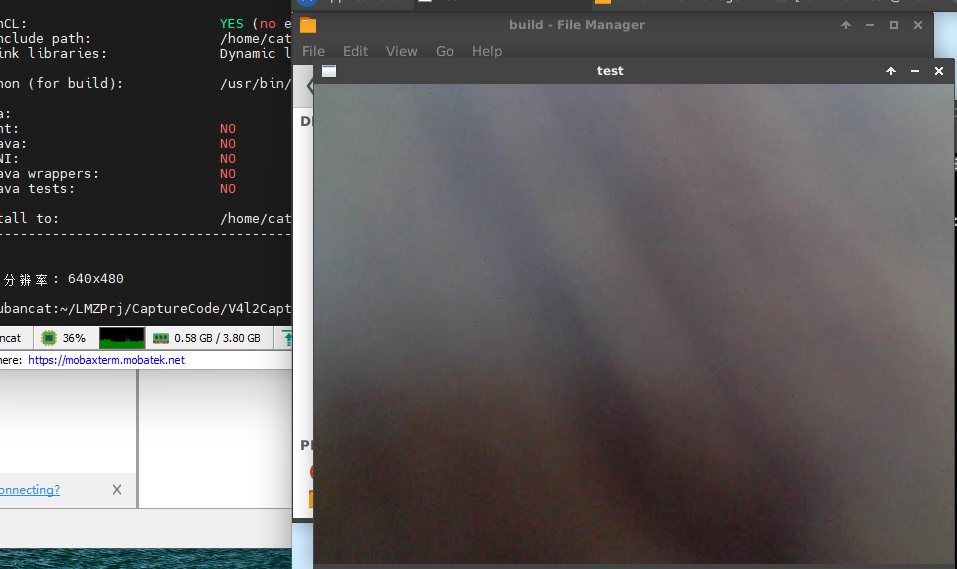
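GetCurFrame2 wraps the mapped buffer in a single-channel `cv::Mat` with `height + height / 2` rows because of how NV21 is laid out in memory. The sketch below spells out that layout; `Nv21Layout` is a hypothetical helper, and it assumes the driver adds no padding at the end of each row (on real hardware, compare the width with `plane_fmt[0].bytesperline` returned by `VIDIOC_S_FMT`).

```cpp
#include <cassert>
#include <cstddef>

// Byte layout of one NV21 frame without row padding:
//   [ Y plane: width * height bytes ][ interleaved V,U: width * height / 2 bytes ]
// Each V/U pair is shared by a 2x2 block of pixels.
struct Nv21Layout {
    size_t width;
    size_t height;

    size_t YSize() const { return width * height; }
    size_t FrameSize() const { return width * height * 3 / 2; }
    // Rows of the CV_8UC1 Mat handed to cv::COLOR_YUV2BGR_NV21.
    size_t MatRows() const { return height + height / 2; }
    size_t YOffset(size_t x, size_t y) const { return y * width + x; }
    // NV21 stores V first, then U (NV12 uses the opposite order).
    size_t VOffset(size_t x, size_t y) const {
        return YSize() + (y / 2) * width + (x / 2) * 2;
    }
    size_t UOffset(size_t x, size_t y) const { return VOffset(x, y) + 1; }
};
```

For 640x480 this gives 460800 bytes per frame, exactly 720 rows of 640 bytes, which is why the Mat in GetCurFrame2 has `m_nFrameHeight + m_nFrameHeight / 2` rows.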

The test code is as follows:

```cpp
#include "V4l2Util.hpp"

int main()
{
    //// Video Capture type test (USB camera, MJPEG)
    CameraParam params;
    params.camUrl = "/dev/video23";
    // 1 = V4L2_BUF_TYPE_VIDEO_CAPTURE, 2 = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE
    params.camType = 1;
    params.outDataFormat = V4L2_PIX_FMT_MJPEG;  // listed as "MJPG" by v4l2-ctl
    params.camWidth = 640;
    params.camHight = 480;

    //// Video Capture Multiplanar type test (ov8858, NV21)
    //// For this type, call util.GetCurFrame2(frame) in the loop below
    // CameraParam params;
    // params.camUrl = "/dev/video5";
    // params.camType = 2;
    // params.outDataFormat = V4L2_PIX_FMT_NV21;
    // params.camWidth = 640;   // up to 1632x1224 on the ov8858
    // params.camHight = 480;

    V4l2Util util;
    if (!util.InitCaptureParams(params))
    {
        std::cout << "V4l2Util Init Failed!!" << std::endl;
        return -1;
    }

    while (true)
    {
        cv::Mat frame;
        util.GetCurFrame1(frame);  // GetCurFrame2(frame) when camType == 2
        if (frame.empty())
        {
            std::cerr << "Error Get Frame" << std::endl;
            continue;
        }
        cv::imshow("test", frame);
        if (cv::waitKey(1) == 27)  // ESC key to exit
        {
            break;
        }
    }
    return 0;
}
```

3 Opening the camera with OpenCV

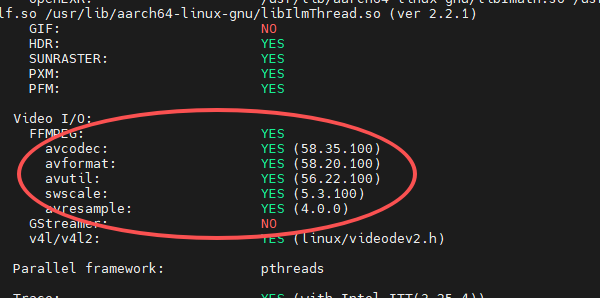

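Compared with V4L2, opening a camera with OpenCV is much simpler and more direct: the VideoCapture class is all you need to capture a video stream. Before using OpenCV for video processing, however, make sure it was built with FFmpeg support, because VideoCapture relies on FFmpeg to decode many video formats. Without a correctly configured FFmpeg, some media files cannot be opened or read.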

3.1 Checking the OpenCV build

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>

int main()
{
    // Print how this OpenCV build was configured (see the "Video I/O" section)
    std::cout << "OpenCV Build Information: \n" << cv::getBuildInformation() << std::endl;
    return 0;
}
```

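As the figure above shows, check the "Video I/O" section of this output: FFmpeg must be enabled for OpenCV to open video files and network streams. A local camera opened by index is normally handled by OpenCV's V4L/V4L2 backend on Linux, so that entry should also read YES.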
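Reading the whole build dump by eye is error-prone, so one option is to check the relevant lines in code. `BuildFlagEnabled` is a hypothetical helper; it assumes entries of the form `FFMPEG:   YES`, which matches recent OpenCV 4.x output, but the exact labels and layout vary between versions, so treat it as a sketch. In a real program, pass it the string returned by `cv::getBuildInformation()`.

```cpp
#include <cassert>
#include <cctype>
#include <sstream>
#include <string>

// Hypothetical helper: find a line such as "    FFMPEG:   YES" in the text of
// cv::getBuildInformation() and report whether its value starts with YES.
// Keys are compared case-insensitively ("V4L/V4L2" vs "v4l/v4l2").
bool BuildFlagEnabled(const std::string& info, const std::string& key) {
    auto lower = [](std::string s) {
        for (char& c : s) c = static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
        return s;
    };
    const std::string wanted = lower(key) + ":";
    std::istringstream lines(info);
    std::string line;
    while (std::getline(lines, line)) {
        size_t start = line.find_first_not_of(" \t");
        if (start == std::string::npos) continue;
        std::string rest = lower(line.substr(start));
        if (rest.compare(0, wanted.size(), wanted) != 0) continue;
        size_t val = rest.find_first_not_of(" \t", wanted.size());
        return val != std::string::npos && rest.compare(val, 3, "yes") == 0;
    }
    return false;  // key not present in the build information
}
```

For example, `BuildFlagEnabled(cv::getBuildInformation(), "FFMPEG")` could be checked at startup to print a clear message instead of failing later in `VideoCapture::open`.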

3.2 Code for opening a video stream with OpenCV

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>

int main()
{
    cv::VideoCapture cap(23);  // index 23 -> /dev/video23
    std::cout << "OpenCV Build Information: \n" << cv::getBuildInformation() << std::endl;

    // Check that the camera was opened
    if (!cap.isOpened())
    {
        std::cerr << "Failed to open the camera!" << std::endl;
        return -1;
    }

    // Query the camera resolution (the ov8858 supports up to 1632x1224)
    double width = cap.get(cv::CAP_PROP_FRAME_WIDTH);
    double height = cap.get(cv::CAP_PROP_FRAME_HEIGHT);
    std::cout << "Camera resolution: " << width << "x" << height << std::endl;

    // Create a window
    cv::namedWindow("Camera Feed", cv::WINDOW_NORMAL);

    // Capture and display the stream
    cv::Mat frame;
    while (true)
    {
        // Read one frame
        cap >> frame;  // or cap.read(frame)

        // Check that the frame was read
        if (frame.empty())
        {
            std::cerr << "Failed to read a video frame!" << std::endl;
            break;
        }

        // Show the frame
        cv::imshow("Camera Feed", frame);

        // Press 'q' to quit
        if (cv::waitKey(1) == 'q')
        {
            break;
        }
    }

    // Release the camera and close the window
    cap.release();
    cv::destroyAllWindows();
    return 0;
}
```

Note: cv::VideoCapture cap(23) opens the device /dev/video23; change the index to match your own device.
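If you would rather keep the device path from Section 2 than an index, a small helper can convert it. `VideoIndexFromPath` is hypothetical and only handles the plain `/dev/videoN` form. In OpenCV 4.x the result can be combined with an explicit backend, e.g. `cv::VideoCapture cap(VideoIndexFromPath("/dev/video23"), cv::CAP_V4L2);`, which asks OpenCV to use its V4L2 backend instead of choosing one itself (check for `-1` first).

```cpp
#include <cassert>
#include <cctype>
#include <string>

// Hypothetical helper: "/dev/video23" -> 23. Returns -1 for anything that is
// not "/dev/video" followed only by decimal digits.
int VideoIndexFromPath(const std::string& path) {
    const std::string prefix = "/dev/video";
    if (path.size() <= prefix.size() || path.compare(0, prefix.size(), prefix) != 0)
        return -1;
    int index = 0;
    for (size_t i = prefix.size(); i < path.size(); ++i) {
        unsigned char c = static_cast<unsigned char>(path[i]);
        if (!std::isdigit(c)) return -1;
        index = index * 10 + (c - '0');
        if (index > 9999) return -1;  // reject absurd values before they overflow
    }
    return index;
}
```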

4 CPU usage of the two approaches

OpenCV, one camera stream:

V4L2, one camera stream:

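V4L2 clearly uses less CPU than OpenCV. I don't know whether this depends on the OpenCV version; my test environment was a LubanCat 2 board running Debian, so if you are interested, measure it on your own system.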
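To turn this comparison into a number rather than a screenshot, one option is to measure the process's own CPU time around the capture loop with `getrusage`. `MeasureCpuPercent` is a hypothetical helper; it reports usage relative to one core (100% means one core fully busy), the same convention as `top`'s default per-process %CPU. To compare fairly, wrap the same amount of work in both cases, e.g. `MeasureCpuPercent([&] { for (int i = 0; i < 300; ++i) util.GetCurFrame1(frame); })` for V4L2 and the same loop with `cap >> frame` for OpenCV, with or without `imshow` in both.

```cpp
#include <sys/resource.h>
#include <sys/time.h>
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>  // only for the sleep used when sanity-checking the helper

// CPU seconds (user + system) consumed so far by this process.
static double ProcessCpuSeconds() {
    rusage ru{};
    getrusage(RUSAGE_SELF, &ru);
    auto toSec = [](const timeval& tv) { return tv.tv_sec + tv.tv_usec / 1e6; };
    return toSec(ru.ru_utime) + toSec(ru.ru_stime);
}

// Run `work` and return the process CPU usage during it, in percent of one core.
double MeasureCpuPercent(const std::function<void()>& work) {
    double cpu0 = ProcessCpuSeconds();
    auto wall0 = std::chrono::steady_clock::now();
    work();
    double wallSec = std::chrono::duration<double>(
                         std::chrono::steady_clock::now() - wall0).count();
    double cpuSec = ProcessCpuSeconds() - cpu0;
    return wallSec > 0 ? 100.0 * cpuSec / wallSec : 0.0;
}
```

Because it counts the whole process, run only one capture method per measurement and keep the frame count the same.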

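Summary

On Linux, capturing and processing video streams is central to multimedia and computer-vision applications such as real-time surveillance, video analysis, robot vision and autonomous driving, and V4L2 (Video4Linux2) and OpenCV are the two most common ways to do it. V4L2 is the standardized low-level interface to the hardware and supports many kinds of video devices; it gives developers fine-grained control and, in the test above, lower CPU usage, but it takes more configuration and code. OpenCV offers a much simpler interface, where the VideoCapture class alone is enough to capture and process a stream, but it should be built with FFmpeg support so that it can decode the various video formats. By choosing the tool that fits the project, developers can build efficient, stable video capture and processing on Linux.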