I. Preface

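I've been looking into ways to stretch free credits again, and once more the work happens on the Modal platform. This time I deployed ComfyUI on it directly, so I no longer have to worry about running out of credits for image generation.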

In this post I'll walk you through getting the most out of the free tier. We're professionals at this.

II. Platform Overview

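We introduced this platform in the previous post. There are occasional promotions, but in general an account gets $30 of credit per month, which by my estimate should comfortably cover more than 100 generated images. Registering a few extra accounts is another option.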
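To sanity-check the "$30 covers 100+ images" estimate, the budget arithmetic can be written out. The GPU price per hour and the billable seconds per image below are placeholder assumptions, not Modal's actual rates (check Modal's pricing page for the current L40S price), and the sketch ignores cold starts, idle time before scale-down, and CPU/memory charges, all of which also consume credit.

```python
import math


def max_images(budget_usd: float, gpu_usd_per_hour: float, seconds_per_image: float) -> int:
    """Rough upper bound on how many images a credit budget can pay for.

    Assumption: billing is purely per GPU-second while an image is generating;
    cold starts, idle time and CPU/memory charges are ignored.
    """
    # GPU-seconds the budget buys, divided by GPU-seconds spent per image
    affordable_gpu_seconds = budget_usd * 3600 / gpu_usd_per_hour
    return math.floor(affordable_gpu_seconds / seconds_per_image)


def budget_per_image(budget_usd: float, images: int) -> float:
    """Credit each image may cost if `images` must fit into the budget."""
    return budget_usd / images


if __name__ == "__main__":
    # Hypothetical inputs: $2.00/h GPU price, 60 s of GPU time per image
    print(max_images(30, 2.0, 60))
    # Per-image budget implied by "100 images from $30"
    print(budget_per_image(30, 100))
```

Plug in your own measured generation time (including cold starts) and the current GPU price to see how far the monthly credit actually goes.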

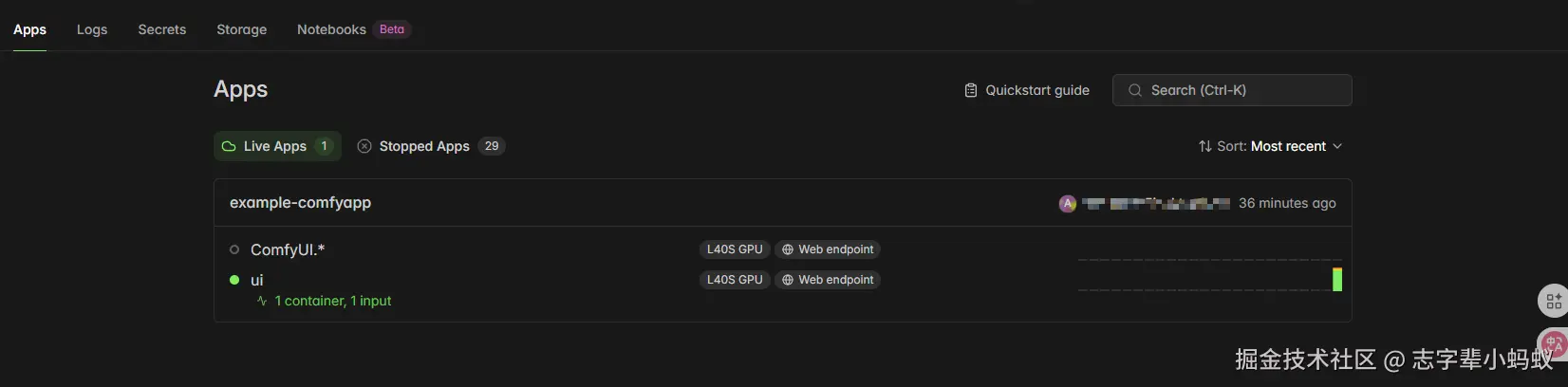

Overall Interface

- After deployment there are two components, and for each one you can browse the file tree that was deployed.

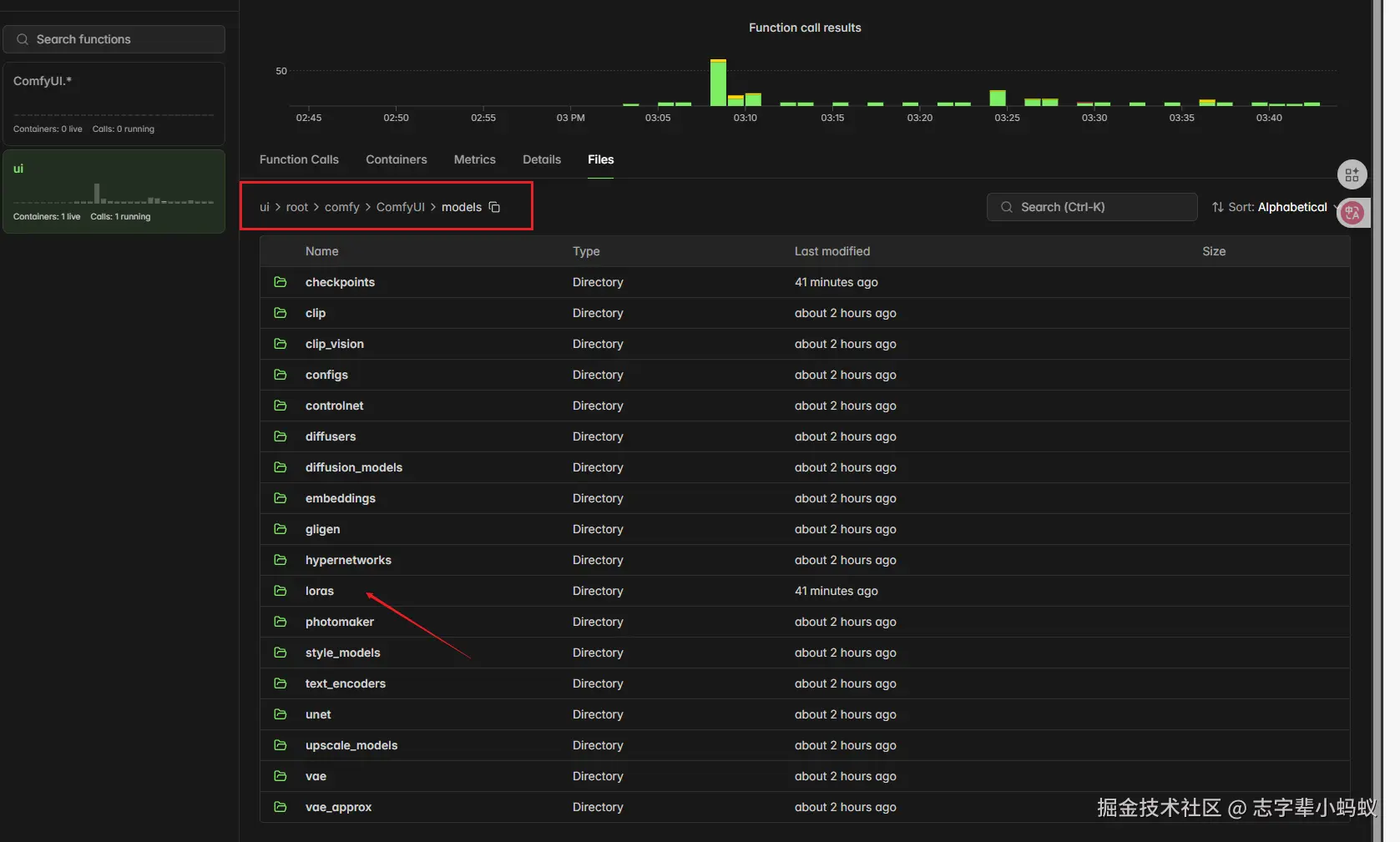

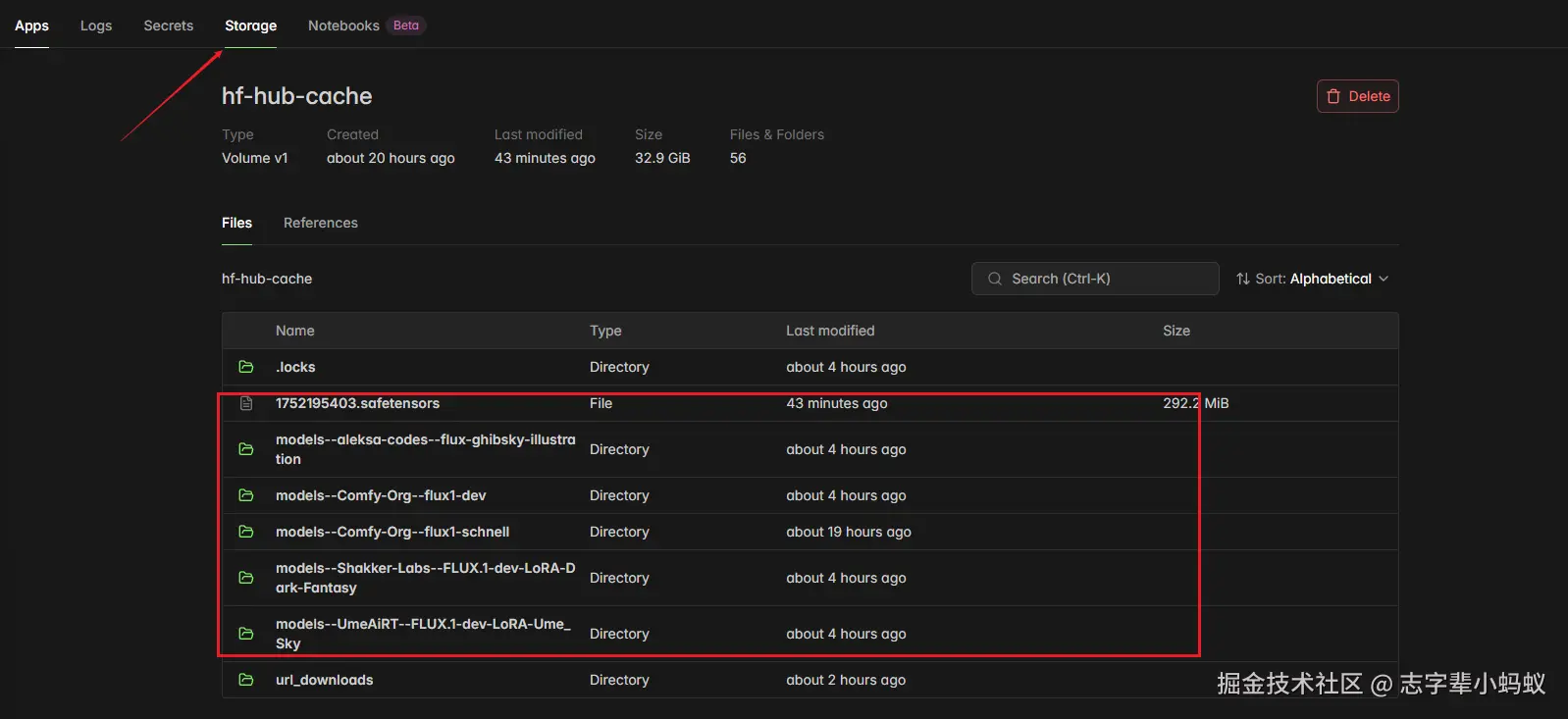

Mounted Volumes

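- Because model files are large, they usually go into a Volume so they don't have to be downloaded again on every run.

- The official documentation supports downloading directly from Hugging Face; I've extended this with other sources (direct download URLs such as Civitai links, plus local files).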
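The "extra download source" idea boils down to fetching a file by URL into the cache directory (the mounted Volume in the real deployment) and symlinking it into ComfyUI's model folder. The stdlib-only sketch below shows that pattern in isolation; the helper name `cache_and_link` and its parameters are hypothetical, and it uses `urllib` instead of `requests` so it runs without extra packages. Downloading to a `.part` file and renaming it afterwards keeps an interrupted download from being mistaken for a cached model.

```python
import os
import shutil
import tempfile
import urllib.request
from pathlib import Path


def cache_and_link(url: str, cache_dir: str, filename: str, link_path: str) -> Path:
    """Download `url` into cache_dir/filename once, then symlink it to link_path.

    Hypothetical helper: in the Modal app, cache_dir would be the Volume mount
    (/cache) and link_path a file under /root/comfy/ComfyUI/models/<type>/.
    """
    cache = Path(cache_dir)
    cache.mkdir(parents=True, exist_ok=True)
    cached_file = cache / filename

    # Only touch the network when the file is not cached yet
    if not cached_file.exists():
        tmp_file = cached_file.with_name(cached_file.name + ".part")
        with urllib.request.urlopen(url, timeout=60) as resp, open(tmp_file, "wb") as out:
            shutil.copyfileobj(resp, out, 1024 * 1024)  # stream in 1 MiB chunks
        # Rename only after the download finished, so a partial file never looks cached
        os.replace(tmp_file, cached_file)

    link = Path(link_path)
    link.parent.mkdir(parents=True, exist_ok=True)
    # Create the symlink unless something (even a dangling link) is already there
    if not (link.exists() or link.is_symlink()):
        link.symlink_to(cached_file)
    return cached_file


if __name__ == "__main__":
    # Offline demo: a local file:// URL stands in for a real model URL
    with tempfile.TemporaryDirectory() as root:
        src = Path(root, "remote.safetensors")
        src.write_bytes(b"fake weights")
        link_path = os.path.join(root, "models", "loras", "demo.safetensors")
        cache_and_link(src.as_uri(), os.path.join(root, "cache"), "demo.safetensors", link_path)
        print(Path(link_path).read_bytes())
```

Checking the cache file rather than the symlink means a rebuilt image can reuse what is already in the Volume, which matters when the source links are short-lived.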

III. The Code

python

# =============================================================================

# ComfyUI Flux API 服务

#

# S1: 环境准备 - 导入依赖包和构建基础镜像

# S2: 模型下载 - 从HuggingFace和远程URL下载模型文件

# S3: 服务配置 - 创建Modal应用和存储卷

# S4: UI服务 - 提供交互式Web界面

# S5: API服务 - 提供图像生成API接口

# =============================================================================

# --- S1: 环境准备阶段 ---

import json

import os

import subprocess

import uuid

from pathlib import Path

from typing import Dict

import modal

import modal.experimental

import requests

# S1.1: 构建基础Docker镜像,安装Python环境和基础依赖

image = (

modal.Image.debian_slim(python_version="3.11")

.apt_install("git")

.pip_install("fastapi[standard]==0.115.4")

.pip_install("comfy-cli==1.4.1")

.pip_install("requests==2.32.3")

.run_commands("comfy --skip-prompt install --fast-deps --nvidia --version 0.3.41")

)

# S1.2: 安装ComfyUI自定义节点扩展

image = image.run_commands(

"comfy node install --fast-deps was-node-suite-comfyui@1.0.2")

# --- S2: 模型下载阶段 ---

def hf_download():

"""

S2: 下载所需的AI模型文件

S2.1: 从HuggingFace下载基础模型和LoRA模型

S2.2: 从远程URL下载额外的模型文件

S2.3: 创建软链接到ComfyUI模型目录

"""

from huggingface_hub import hf_hub_download

# S2.1: 下载HuggingFace基础模型

print("📥 S2.1: 开始下载Flux基础模型...")

flux_model = hf_hub_download(

repo_id="Comfy-Org/flux1-dev",

filename="flux1-dev-fp8.safetensors",

cache_dir="/cache",

)

subprocess.run(

f"ln -s {flux_model} /root/comfy/ComfyUI/models/checkpoints/flux1-dev-fp8.safetensors",

shell=True,

check=True,

)

# S2.2: 下载HuggingFace LoRA模型列表

print("📥 S2.2: 开始下载LoRA模型...")

lora_dir = "/root/comfy/ComfyUI/models/loras"

os.makedirs(lora_dir, exist_ok=True)

lora_models = [

{

"repo_id": "UmeAiRT/FLUX.1-dev-LoRA-Ume_Sky",

"filename": "ume_sky_v2.safetensors",

"local_name": "ume_sky_v2.safetensors"

},

{

"repo_id": "Shakker-Labs/FLUX.1-dev-LoRA-Dark-Fantasy",

"filename": "FLUX.1-dev-lora-Dark-Fantasy.safetensors",

"local_name": "FLUX.1-dev-lora-Dark-Fantasy.safetensors"

},

{

"repo_id": "aleksa-codes/flux-ghibsky-illustration",

"filename": "lora_v2.safetensors",

"local_name": "lora_v2.safetensors"

}

]

for lora in lora_models:

try:

print(f" 📦 下载LoRA: {lora['repo_id']}")

lora_path = hf_hub_download(

repo_id=lora["repo_id"],

filename=lora["filename"],

cache_dir="/cache",

)

subprocess.run(

f"ln -s {lora_path} /root/comfy/ComfyUI/models/loras/{lora['local_name']}",

shell=True,

check=True

)

except Exception as e:

print(f"❌ LoRA下载失败 {lora['repo_id']}: {e}")

# S2.3: 从远程URL下载额外模型

print("📥 S2.3: 开始从远程URL下载模型...")

url_models = [

{

"url": "https://civitai-delivery-worker-prod.5ac0637cfd0766c97916cefa3764fbdf.r2.cloudflarestorage.com/model/9230585/1752195403.4GHo.safetensors?X-Amz-Expires=86400&response-content-disposition=attachment%3B%20filename%3D%221752195403.safetensors%22&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=e01358d793ad6966166af8b3064953ad/20250907/us-east-1/s3/aws4_request&X-Amz-Date=20250907T062143Z&X-Amz-SignedHeaders=host&X-Amz-Signature=3814daa1e9d0c91d1992b32341f64a5d27dcd3135babb7975c1b36914d893617",

"filename": "1752195403.safetensors",

"type": "loras"

},

{

"url": "https://civitai-delivery-worker-prod.5ac0637cfd0766c97916cefa3764fbdf.r2.cloudflarestorage.com/model/17651/flux1LoraFlywayEpic.NKkZ.safetensors?X-Amz-Expires=86400&response-content-disposition=attachment%3B%20filename%3D%22flux.1_lora_flyway_Epic-Characters_v1.safetensors%22&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=e01358d793ad6966166af8b3064953ad/20250907/us-east-1/s3/aws4_request&X-Amz-Date=20250907T083947Z&X-Amz-SignedHeaders=host&X-Amz-Signature=4ae9dcbd8c0205fb258b7839bb5895a94db6831ab9bf87c10936f4eafd6c028a",

"filename": "中世纪风格.safetensors",

"type": "loras"

},

{

"url": "https://civitai-delivery-worker-prod.5ac0637cfd0766c97916cefa3764fbdf.r2.cloudflarestorage.com/model/933225/newFantasyCorev4FLUX.pt13.safetensors?X-Amz-Expires=86400&response-content-disposition=attachment%3B%20filename%3D%22New_Fantasy_CoreV4_FLUX.safetensors%22&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=e01358d793ad6966166af8b3064953ad/20250907/us-east-1/s3/aws4_request&X-Amz-Date=20250907T084003Z&X-Amz-SignedHeaders=host&X-Amz-Signature=45b8f8e990b9105872964a0d6a440b131bcdfcde0e0d8d0d5de29756b24b55d9",

"filename": "幻想风.safetensors",

"type": "loras"

},

{

"url": "https://civitai-delivery-worker-prod.5ac0637cfd0766c97916cefa3764fbdf.r2.cloudflarestorage.com/model/4768839/fluxthous40k.YPhQ.safetensors?X-Amz-Expires=86400&response-content-disposition=attachment%3B%20filename%3D%22FluxThouS40k.safetensors%22&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=e01358d793ad6966166af8b3064953ad/20250907/us-east-1/s3/aws4_request&X-Amz-Date=20250907T084019Z&X-Amz-SignedHeaders=host&X-Amz-Signature=2c3aa9cd675bd52d2a190ffc033b6f98bf434d990b1053be3da5885e57571aa5",

"filename": "铠甲风.safetensors",

"type": "loras"

},

{

"url": "https://civitai-delivery-worker-prod.5ac0637cfd0766c97916cefa3764fbdf.r2.cloudflarestorage.com/model/65967/neonfantasyprimeflux.uMmv.safetensors?X-Amz-Expires=86400&response-content-disposition=attachment%3B%20filename%3D%22NeonFantasyPrimeFLUX-000049.safetensors%22&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=e01358d793ad6966166af8b3064953ad/20250907/us-east-1/s3/aws4_request&X-Amz-Date=20250907T084033Z&X-Amz-SignedHeaders=host&X-Amz-Signature=1a8b596f5218a1f6a56b808907f7bbf72213383b5b35a3a580d731f232810537",

"filename": "异世界.safetensors",

"type": "loras"

}

]

url_download_dir = "/cache"

os.makedirs(url_download_dir, exist_ok=True)

for model in url_models:

final_model_path = os.path.join(

"/root/comfy/ComfyUI/models", model["type"], model["filename"])

# 检查文件是否已存在

if os.path.exists(final_model_path):

print(f"✅ 模型 '{model['filename']}' 已存在,跳过下载")

continue

print(f"⬇️ 正在下载 '{model['filename']}' ...")

cached_file_path = os.path.join(url_download_dir, model["filename"])

try:

with requests.get(model["url"], stream=True, allow_redirects=True) as r:

r.raise_for_status()

with open(cached_file_path, 'wb') as f:

for chunk in r.iter_content(chunk_size=8192):

f.write(chunk)

# 创建软链接到ComfyUI目标目录

os.makedirs(os.path.dirname(final_model_path), exist_ok=True)

subprocess.run(

f"ln -s {cached_file_path} {final_model_path}", shell=True, check=True)

print(f"✅ 模型 '{model['filename']}' 下载并链接完成")

except Exception as e:

print(f"❌ URL下载失败 {model['url']}: {e}")

# --- S3: 服务配置阶段 ---

print("🔧 S3: 开始配置Modal服务...")

# S3.1: 创建持久化存储卷

vol = modal.Volume.from_name("hf-hub-cache", create_if_missing=True)

# S3.2: 完成镜像构建,添加HuggingFace支持和模型文件

image = (

image.pip_install("huggingface_hub[hf_transfer]==0.34.4")

.env({"HF_HUB_ENABLE_HF_TRANSFER": "1"})

.run_function(hf_download, volumes={"/cache": vol})

.add_local_file(

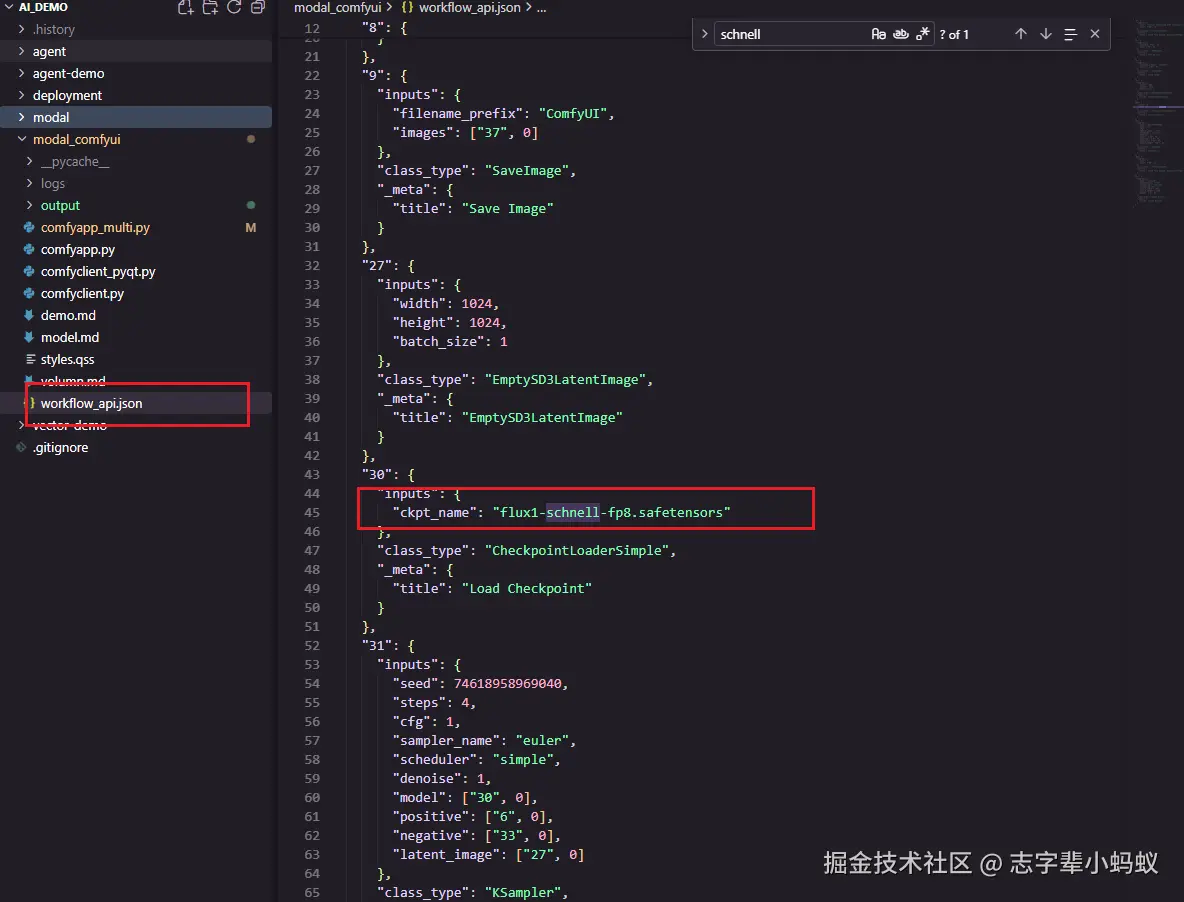

Path(__file__).parent / "workflow_api.json",

"/root/workflow_api.json"

)

.add_local_file(

local_path="C:\\Users\\zzg\Downloads\\F.1风格模型 _ Miboso光影纹理大师_V1.safetensors",

remote_path="/root/comfy/ComfyUI/models/loras/F.1风格模型 _ Miboso光影纹理大师_V1.safetensors"

)

)

# S3.3: 创建Modal应用实例

app = modal.App(name="example-comfyapp", image=image)

# --- S4: UI服务阶段 ---

@app.function(max_containers=1, gpu="L40S", volumes={"/cache": vol})

@modal.concurrent(max_inputs=10)

@modal.web_server(8000, startup_timeout=60)

def ui():

"""

S4: 提供ComfyUI交互式Web界面服务

- 启动ComfyUI Web服务器

- 监听0.0.0.0:8000端口

- 支持最多10个并发用户

"""

print("🌐 S4: 启动ComfyUI交互式Web界面...")

subprocess.Popen(

"comfy launch -- --listen 0.0.0.0 --port 8000",

shell=True

)

# --- S5: API服务阶段 ---

@app.cls(scaledown_window=300, gpu="L40S", volumes={"/cache": vol})

@modal.concurrent(max_inputs=5)

class ComfyUI:

"""

S5: ComfyUI API服务类

提供图像生成的RESTful API接口

支持最多5个并发请求处理

"""

port: int = 8000

@modal.enter()

def launch_comfy_background(self):

"""

S5.1: 容器启动时初始化ComfyUI后台服务

"""

print(f"🚀 S5.1: 启动ComfyUI后台服务,端口: {self.port}")

cmd = f"comfy launch --background -- --port {self.port}"

subprocess.run(cmd, shell=True, check=True)

@modal.method()

def infer(self, workflow_path: str = "/root/workflow_api.json"):

"""

S5.2: 执行图像生成推理

- 检查服务健康状态

- 运行ComfyUI工作流

- 返回生成的图像字节数据

"""

print("🎨 S5.2: 开始执行图像生成推理...")

# S5.2.1: 检查服务健康状态

self.poll_server_health()

# S5.2.2: 执行工作流

cmd = f"comfy run --workflow {workflow_path} --wait --timeout 1200 --verbose"

subprocess.run(cmd, shell=True, check=True)

# S5.2.3: 获取生成的图像文件

output_dir = "/root/comfy/ComfyUI/output"

workflow = json.loads(Path(workflow_path).read_text())

file_prefix = [

node.get("inputs")

for node in workflow.values()

if node.get("class_type") == "SaveImage"

][0]["filename_prefix"]

# S5.2.4: 返回图像字节数据

for f in Path(output_dir).iterdir():

if f.name.startswith(file_prefix):

return f.read_bytes()

@modal.fastapi_endpoint(method="POST")

def api(self, item: Dict):

"""

S5.3: FastAPI端点 - 处理HTTP POST请求

- 接收用户提示词

- 生成唯一的工作流文件

- 调用推理方法生成图像

- 返回图像响应

"""

from fastapi import Response

print("📡 S5.3: 处理API请求...")

# S5.3.1: 加载工作流模板

workflow_data = json.loads(

(Path(__file__).parent / "workflow_api.json").read_text()

)

# S5.3.2: 设置用户提示词

workflow_data["6"]["inputs"]["text"] = item["prompt"]

# S5.3.3: 生成唯一的客户端ID和文件名

client_id = uuid.uuid4().hex

workflow_data["9"]["inputs"]["filename_prefix"] = client_id

# S5.3.4: 保存自定义工作流文件

new_workflow_file = f"{client_id}.json"

json.dump(workflow_data, Path(new_workflow_file).open("w"))

# S5.3.5: 执行推理并返回图像

img_bytes = self.infer.local(new_workflow_file)

return Response(img_bytes, media_type="image/jpeg")

def poll_server_health(self) -> Dict:

"""

S5.4: 健康检查 - 确保ComfyUI服务正常运行

"""

import socket

import urllib

try:

req = urllib.request.Request(

f"http://127.0.0.1:{self.port}/system_stats"

)

urllib.request.urlopen(req, timeout=5)

print("✅ ComfyUI服务健康检查通过")

except (socket.timeout, urllib.error.URLError) as e:

print("❌ ComfyUI服务健康检查失败,停止容器")

modal.experimental.stop_fetching_inputs()

raise Exception(

"ComfyUI server is not healthy, stopping container")四. 代码执行
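Because `api()` patches the workflow by fixed node IDs (`"6"` for the prompt text and `"9"` for the `SaveImage` filename prefix), a workflow exported from a different graph only fails at request time, with a `KeyError`. A quick local check before `modal deploy` can catch this. The sketch assumes `workflow_api.json` is ComfyUI's API-format export (a dict keyed by node ID, each node carrying `class_type` and `inputs`); the function names are hypothetical.

```python
import copy
import json
import uuid
from pathlib import Path


def check_workflow(workflow: dict, prompt_node: str = "6", save_node: str = "9") -> list:
    """Return the problems that would break api(); an empty list means OK."""
    problems = []
    if "text" not in workflow.get(prompt_node, {}).get("inputs", {}):
        problems.append(f'node "{prompt_node}" has no inputs.text (the prompt goes there)')
    save = workflow.get(save_node, {})
    if save.get("class_type") != "SaveImage":
        problems.append(f'node "{save_node}" is not a SaveImage node')
    elif "filename_prefix" not in save.get("inputs", {}):
        problems.append(f'node "{save_node}" has no inputs.filename_prefix')
    return problems


def patch_workflow(workflow: dict, prompt: str) -> tuple:
    """Mirror what api() does: set the prompt and a unique output prefix."""
    patched = copy.deepcopy(workflow)  # leave the template untouched
    client_id = uuid.uuid4().hex
    patched["6"]["inputs"]["text"] = prompt
    patched["9"]["inputs"]["filename_prefix"] = client_id
    return patched, client_id


if __name__ == "__main__":
    path = Path("workflow_api.json")
    if path.exists():
        issues = check_workflow(json.loads(path.read_text(encoding="utf-8")))
        print("OK" if not issues else "\n".join(issues))
```

Run it next to `workflow_api.json`; if it reports problems, either re-export the workflow in API format or adjust the node IDs used in `api()`.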

- Deploy with `modal deploy comfyapp_multi.py`:

```shell
D:\code\python\demo\AI_DEMO\modal_comfyui\comfyapp_multi.py:125: SyntaxWarning: invalid escape sequence '\D'
local_path="C:\\Users\\xxx\Downloads\\F.1风格模型 _ Miboso光影纹理大师_V1.safetensors",
Building image im-CPvMHYnP5JcYjFTX5iDN0d
=> Step 0: running function 'hf_download'
⬇️ Downloading '1752195403.safetensors' from URL...
✅ Download and link for '1752195403.safetensors' complete.
Saving image...
Image saved, took 1.10s
Finished image build for im-CPvMHYnP5JcYjFTX5iDN0d
✓ Created objects.
├── 🔨 Created mount D:\code\python\demo\AI_DEMO\modal_comfyui\comfyapp_multi.py
├── 🔨 Created mount C:\Users\xx\Downloads\F.1风格模型 _ Miboso光影纹理大师_V1.safetensors
├── 🔨 Created mount D:\code\python\demo\AI_DEMO\modal_comfyui\workflow_api.json
├── 🔨 Created function hf_download.
├── 🔨 Created web function ui => https://xxx--example-comfyapp-ui.modal.run
├── 🔨 Created function ComfyUI.*.
└── 🔨 Created web endpoint for ComfyUI.api => https://xxx--example-comfyapp-comfyui-api.modal.run
✓ App deployed in 30.436s! 🎉
View Deployment: https://modal.com/apps/xxx/main/deployed/example-comfyapp
```

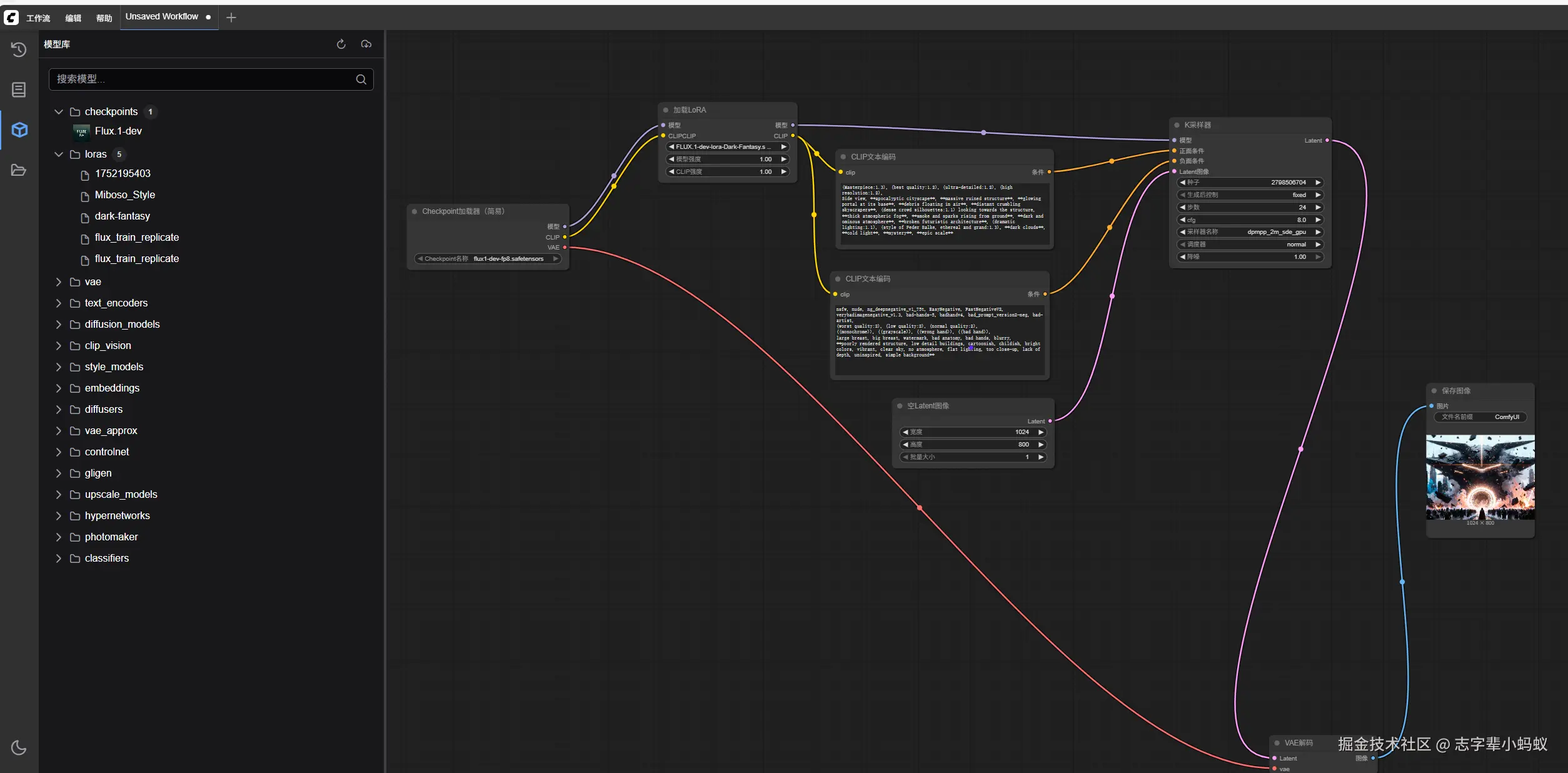
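The `SyntaxWarning: invalid escape sequence '\D'` at the top of the deploy log comes from the Windows path literal passed to `add_local_file`: in a normal string `\\` is an escaped backslash, but `\D` is not a recognized escape, so Python keeps it as a literal backslash plus `D` and warns (the Python docs say such sequences are slated to become errors in a future version). The path happened to work, but a raw string, fully doubled backslashes, or forward slashes avoid the warning. A minimal demonstration with a placeholder path:

```python
from pathlib import PureWindowsPath

# Placeholder path; the article's real file lives under C:\Users\...\Downloads
escaped = "C:\\Users\\xxx\\Downloads\\model.safetensors"   # every backslash doubled
raw = r"C:\Users\xxx\Downloads\model.safetensors"          # raw string: no escape processing
forward = "C:/Users/xxx/Downloads/model.safetensors"       # forward slashes are accepted on Windows

# All three spell the same Windows path
assert escaped == raw
assert PureWindowsPath(forward) == PureWindowsPath(raw)

p = PureWindowsPath(raw)
print(p.name)    # model.safetensors
print(p.parent)  # C:\Users\xxx\Downloads
```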

V. Extended Features

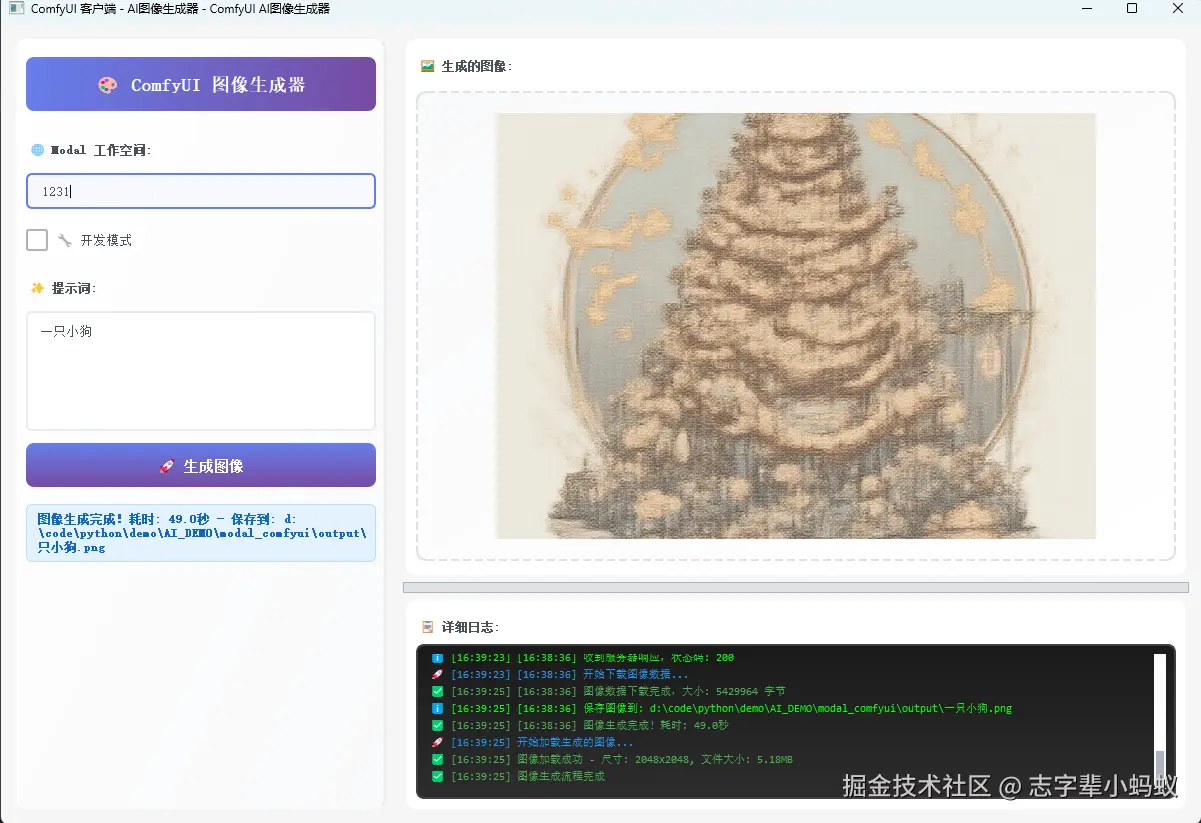

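Alongside the service I also put together a client that calls the API. It doesn't expose any generation parameters yet, but feel free to use it if you need one.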
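The client itself isn't reproduced here. As an illustration only, a minimal client for the `ComfyUI.api` endpoint could look like the sketch below: it POSTs `{"prompt": ...}` as JSON (the body `api()` reads through `item["prompt"]`) and writes the returned image bytes to disk. The endpoint is the redacted URL from the deploy log, so replace `xxx` with your own workspace name; the function name `generate_image` and the long timeout (to cover a cold start plus a workflow run of up to 1200 s) are my assumptions.

```python
import json
import urllib.request
from pathlib import Path

# Redacted URL from the deploy log; substitute your own workspace for "xxx"
ENDPOINT = "https://xxx--example-comfyapp-comfyui-api.modal.run"


def generate_image(prompt: str, out_path: str, endpoint: str = ENDPOINT,
                   timeout: float = 1500) -> Path:
    """POST a prompt to the ComfyUI.api endpoint and save the returned image bytes."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # A cold start (container boot plus model load) can take minutes, hence the long timeout
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        image_bytes = resp.read()
    out = Path(out_path)
    out.write_bytes(image_bytes)
    return out
```

Usage: `generate_image("a castle on a cliff at sunset", "output.png")`. Non-2xx responses raise `urllib.error.HTTPError`, which is usually what you want in a script.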

Summary

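Credit consumption is reasonable and basically enough, and the features aren't much different from mainstream image-generation platforms. Worth a try.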
