I material-admin

1 Environment setup (Elasticsearch, Logstash, Kibana)

1.1 Prepare the configuration files

1.1.1 elasticsearch.yml

- Start a temporary test container

shell

# Assumes the es-net network already exists (create it with: docker network create es-net)
docker run -d \
  --name test_elasticsearch_8.7.1 \
  -e "ES_JAVA_OPTS=-Xms512m -Xmx512m" \
  -e "discovery.type=single-node" \
  -v /root/elasticsearch/elasticsearch_8.7.1/home/data:/usr/share/elasticsearch/data \
  -v /root/elasticsearch/elasticsearch_8.7.1/home/plugins:/usr/share/elasticsearch/plugins \
  -v /root/elasticsearch/elasticsearch_8.7.1/home/temp:/usr/temp \
  -v /root/elasticsearch/elasticsearch_8.7.1/home/logs:/usr/share/elasticsearch/logs \
  --privileged \
  --network es-net \
  -p 9200:9200 \
  -p 9300:9300 \
  elasticsearch:8.7.1

- Copy the configuration file to the host

shell

docker cp test_elasticsearch_8.7.1:/usr/share/elasticsearch/config/elasticsearch.yml /root

yaml

cluster.name: "docker-cluster"
network.host: 0.0.0.0

#----------------------- BEGIN SECURITY AUTO CONFIGURATION -----------------------
#
# The following settings, TLS certificates, and keys have been automatically
# generated to configure Elasticsearch security features on 27-08-2025 02:07:11
#
# --------------------------------------------------------------------------------

# Enable security features
xpack.security.enabled: true

xpack.security.enrollment.enabled: true

# Enable encryption for HTTP API client connections, such as Kibana, Logstash, and Agents
xpack.security.http.ssl:
  enabled: true
  keystore.path: certs/http.p12

# Enable encryption and mutual authentication between cluster nodes
xpack.security.transport.ssl:
  enabled: true
  verification_mode: certificate
  keystore.path: certs/transport.p12
  truststore.path: certs/transport.p12
#----------------------- END SECURITY AUTO CONFIGURATION -------------------------

- Remove the test container

shell

docker rm -f test_elasticsearch_8.7.1- 在宿主机修改配置文件

shell

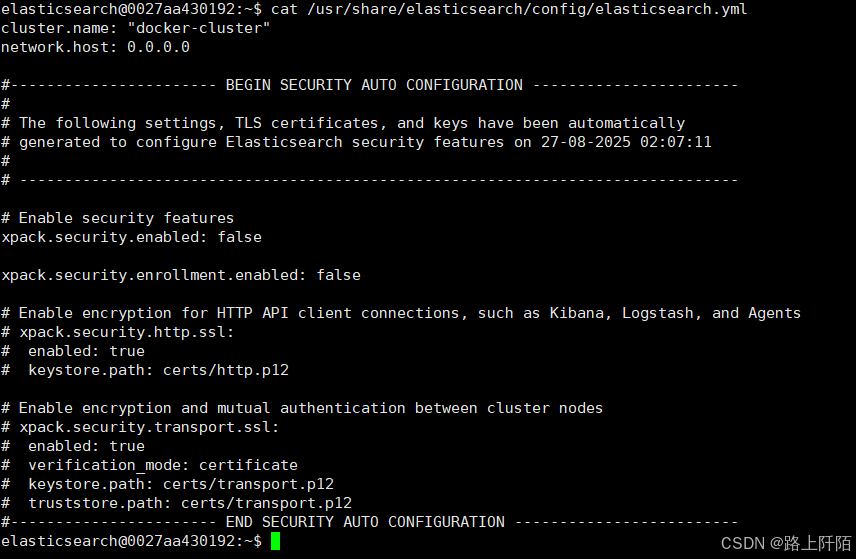

vim /root/elasticsearch.yml将 xpack.security.enabled: true 改为 xpack.security.enabled: false ,

xpack.security.enrollment.enabled: true 改为 xpack.security.enrollment.enabled: false,

同时注释 ssl 相关配置,如下图所示,

这样就可以直接 使用http访问,并且不需要账号密码鉴权,

这个设置看个人情况,如果是生产环境建议开始开启 https和账号密码鉴
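The manual edit described above can also be scripted. The following is a minimal sketch rather than part of the original procedure: `disable_es_security` is a hypothetical helper name, and the sketch assumes the file has the auto-generated layout shown earlier, where the only indented lines are the two-space-indented children of the two `ssl` blocks.

```shell
#!/bin/sh
# Sketch: apply the edits described above without opening an editor.
# disable_es_security is a hypothetical helper name, not an Elasticsearch tool.
disable_es_security() {
  conf="$1"
  tmp="$(mktemp)" || return 1
  # Expressions 1-2: flip the two security flags to false.
  # Expression 3:    comment out the http.ssl / transport.ssl keys.
  # Expression 4:    comment out their children (assumes two-space indentation).
  sed -E \
    -e 's/^(xpack\.security\.enabled:) true/\1 false/' \
    -e 's/^(xpack\.security\.enrollment\.enabled:) true/\1 false/' \
    -e 's/^(xpack\.security\.(http|transport)\.ssl:)/# \1/' \
    -e 's/^(  [^ #].*)$/#\1/' \
    "$conf" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Overwrite in place so the original file keeps its permissions.
  cat "$tmp" > "$conf"
  rm -f "$tmp"
}
```

It would be called as `disable_es_security /root/elasticsearch.yml`. Reviewing the result with `cat` before using it is still advisable, since the sketch relies on the layout assumption stated above.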

- The modified configuration file is as follows

yaml

cluster.name: "docker-cluster"
network.host: 0.0.0.0

#----------------------- BEGIN SECURITY AUTO CONFIGURATION -----------------------
#
# The following settings, TLS certificates, and keys have been automatically
# generated to configure Elasticsearch security features on 27-08-2025 02:07:11
#
# --------------------------------------------------------------------------------

# Enable security features
xpack.security.enabled: false

xpack.security.enrollment.enabled: false

# Enable encryption for HTTP API client connections, such as Kibana, Logstash, and Agents
# xpack.security.http.ssl:
#   enabled: true
#   keystore.path: certs/http.p12

# Enable encryption and mutual authentication between cluster nodes
# xpack.security.transport.ssl:
#   enabled: true
#   verification_mode: certificate
#   keystore.path: certs/transport.p12
#   truststore.path: certs/transport.p12
#----------------------- END SECURITY AUTO CONFIGURATION -------------------------

1.1.2 kibana.yml

- Start a temporary test container

shell

docker run -d \
  --restart=always \
  --name test_kibana_8.7.1 \
  --network es-net \
  -p 5601:5601 \
  -e ELASTICSEARCH_HOSTS=http://127.0.0.1:9200 \
  kibana:8.7.1

- Copy the configuration file to the host

shell

docker cp test_kibana_8.7.1:/usr/share/kibana/config/kibana.yml /root

yaml

#
# ** THIS IS AN AUTO-GENERATED FILE **
#

# Default Kibana configuration for docker target
server.host: "0.0.0.0"
server.shutdownTimeout: "5s"
elasticsearch.hosts: [ "http://elasticsearch:9200" ]

- Remove the test container

shell

docker rm -f test_kibana_8.7.1

- Edit the configuration file on the host

shell

vim /root/kibana.yml

Set the interface language to Chinese and update elasticsearch.hosts.

- The modified configuration file is as follows

yaml

#
# ** THIS IS AN AUTO-GENERATED FILE **
#

# Use the Chinese UI
i18n.locale: "zh-CN"

# Turn off strict CSP mode
csp.strict: false

# Default Kibana configuration for docker target
server.host: "0.0.0.0"
server.shutdownTimeout: "5s"
# Replace ip with the address of the Elasticsearch host
elasticsearch.hosts: [ "http://ip:9200" ]
monitoring.ui.container.elasticsearch.enabled: true

1.1.3 logstash.conf

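- Start a temporary test container

shell

# 5044 is the Beats port used by the default pipeline; 5601 belongs to Kibana and is not needed here
docker run -d \
  --restart=always \
  --name test_logstash_8.7.1 \
  -p 5044:5044 \
  -p 4560:4560 \
  -p 50000:50000/tcp \
  -p 50000:50000/udp \
  -p 9600:9600 \
  logstash:8.7.1

- Copy the configuration file to the host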

shell

docker cp test_logstash_8.7.1:/usr/share/logstash/pipeline/logstash.conf /root

yaml

input {
  beats {
    port => 5044
  }
}

output {
  stdout {
    codec => rubydebug
  }
}

- Remove the test container

shell

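docker rm -f test_logstash_8.7.1

- Edit the configuration file on the host

shell

vim /root/logstash.conf

- The modified configuration file is as follows

properties

input {
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 4560
    codec => json_lines
    type => "info"
  }
}

filter {}

output {
  elasticsearch {
    action => "index"
    # Replace ip with the address of the Elasticsearch host
    hosts => "ip:9200"
    index => "group-buy-test-log-%{+YYYY.MM.dd}"
  }
}

1.2 Create a Linux script (create and authorize the mount directories, place the configuration files)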

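1.2.1 Docker networking basics

| Operation | Common command |
|---|---|
| List networks | docker network ls |
| Inspect a network | docker network inspect <network> |
| Create a network | docker network create <network> |
| Run a container attached to a network | docker run --network <network> ... |
| Connect an existing container to a network | docker network connect <network> <container> |
| Disconnect a container from a network | docker network disconnect <network> <container> |
| Remove an empty network | docker network rm <network> |
| Remove unused networks | docker network prune |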
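The commands in the table combine naturally into small helpers. The sketch below is illustrative only: both function names are hypothetical, and the membership check relies on `docker network inspect` listing only containers that are currently running.

```shell
#!/bin/sh
# Sketch: combine the commands from the table above.
# Both function names are hypothetical helpers.

# Create the network only if "docker network inspect" cannot find it.
ensure_network() {
  docker network inspect "$1" >/dev/null 2>&1 || docker network create "$1" >/dev/null
}

# Succeed if container $2 is currently attached to network $1.
# The Go template prints one attached container name per line.
container_on_network() {
  docker network inspect -f '{{range .Containers}}{{println .Name}}{{end}}' "$1" \
    | grep -Fqx -- "$2"
}
```

For example, `ensure_network es-elk` followed by `container_on_network es-elk elk_kibana_8.7.1` would confirm that the Kibana container joined the network, assuming those are the names in use.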

1.2.2 Script contents

shell

#!/bin/bash
# Save this script as init-elk.

# Create all required directories
mkdir -p /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/config
mkdir -p /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/data
mkdir -p /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/plugins
mkdir -p /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/temp
mkdir -p /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/logs
mkdir -p /user/szs/tool/docker/container/elk/logstash/logstash_8.7.1/home/config
mkdir -p /user/szs/tool/docker/container/elk/kibana/kibana_8.7.1/home/config

# Set directory permissions
chmod 777 /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/config
chmod 777 /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/data
chmod 777 /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/plugins
chmod 777 /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/temp
chmod 777 /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/logs

# Copy the configuration files (assumes the files prepared in section 1.1 are in /root)
cp /root/elasticsearch.yml /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/config
cp /root/logstash.conf /user/szs/tool/docker/container/elk/logstash/logstash_8.7.1/home/config
cp /root/kibana.yml /user/szs/tool/docker/container/elk/kibana/kibana_8.7.1/home/config

chmod 777 /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/config/elasticsearch.yml
chmod 777 /user/szs/tool/docker/container/elk/logstash/logstash_8.7.1/home/config/logstash.conf
chmod 777 /user/szs/tool/docker/container/elk/kibana/kibana_8.7.1/home/config/kibana.yml

# Create the Docker network es-elk if it does not exist
if ! docker network inspect es-elk >/dev/null 2>&1; then
  echo "Docker network es-elk does not exist, creating it..."
  docker network create es-elk
  echo "Docker network es-elk created."
else
  echo "Docker network es-elk already exists, skipping creation."
fi

echo "Directories created and configuration files copied."

1.2.3 Make the script executable

shell

chmod +x init-elk

1.2.4 Run the script

shell

./init-elk

1.3 Run
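Before starting the stack, it can help to confirm that the init script left every mounted file in place, because Docker creates a directory at a bind-mount source path that does not exist. The following is a minimal sketch under that motivation; `check_elk_layout` is a hypothetical helper, and the base directory is passed as an argument rather than hard-coded.

```shell
#!/bin/sh
# Sketch: verify the layout produced by the init script.
# check_elk_layout is a hypothetical helper. Argument: the elk base directory.
check_elk_layout() {
  base="$1"
  missing=0
  # Configuration files that are bind-mounted as single files.
  for f in \
    "elasticsearch/elasticsearch_8.7.1/home/config/elasticsearch.yml" \
    "logstash/logstash_8.7.1/home/config/logstash.conf" \
    "kibana/kibana_8.7.1/home/config/kibana.yml"
  do
    if [ ! -f "$base/$f" ]; then
      echo "missing file: $base/$f" >&2
      missing=1
    fi
  done
  # Directories that Elasticsearch mounts for data, plugins, temp files and logs.
  for d in data plugins temp logs; do
    if [ ! -d "$base/elasticsearch/elasticsearch_8.7.1/home/$d" ]; then
      echo "missing directory: $base/elasticsearch/elasticsearch_8.7.1/home/$d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A possible use is `check_elk_layout /user/szs/tool/docker/container/elk && docker compose up -d`, so that the stack only starts when the layout is complete.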

1.3.1 Compose file contents (docker-compose.yml)

yaml

services:
  elasticsearch:
    image: elasticsearch:8.7.1
    container_name: elk_elasticsearch_8.7.1
    environment:
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m" # JVM heap size
      - "discovery.type=single-node" # start as a single-node cluster
    ports:
      - "9200:9200"
      - "9300:9300"
    volumes:
      - /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/data:/usr/share/elasticsearch/data
      - /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/plugins:/usr/share/elasticsearch/plugins
      - /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/temp:/usr/temp
      - /user/szs/tool/docker/container/elk/elasticsearch/elasticsearch_8.7.1/home/logs:/usr/share/elasticsearch/logs
    privileged: true
    networks:
      - es-elk
  logstash:
    image: logstash:8.7.1
    container_name: elk_logstash_8.7.1
    restart: always
    volumes:
      - /etc/localtime:/etc/localtime
      - /user/szs/tool/docker/container/elk/logstash/logstash_8.7.1/home/config/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
    ports:
      - '4560:4560'
      - '50000:50000/tcp'
      - '50000:50000/udp'
      - '9600:9600'
    environment:
      LS_JAVA_OPTS: -Xms512m -Xmx512m
      TZ: Asia/Shanghai
      MONITORING_ENABLED: "false" # quoted so that YAML keeps it a string
    networks:
      - es-elk
    depends_on:
      - elasticsearch # start Logstash after the elasticsearch service has been started (this does not wait for it to be ready)
  kibana:
    image: kibana:8.7.1
    container_name: elk_kibana_8.7.1
    ports:
      - "5601:5601"
    volumes:
      - /user/szs/tool/docker/container/elk/kibana/kibana_8.7.1/home/config/kibana.yml:/usr/share/kibana/config/kibana.yml
    privileged: true
    environment:
      ELASTICSEARCH_HOSTS: "http://ip:9200" # replace ip with the address of the Elasticsearch host
    networks:
      - es-elk
    depends_on:
      - elasticsearch # start Kibana after the elasticsearch service has been started
networks:
  es-elk:
    driver: bridge

1.3.2 Start the services

shell

docker compose up -d
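Elasticsearch needs some time after `docker compose up -d` before it answers requests. A minimal polling sketch follows; `wait_for_es` is a hypothetical helper, and it assumes security is disabled as configured in 1.1.1, so plain HTTP without credentials is enough.

```shell
#!/bin/sh
# Sketch: poll Elasticsearch until it answers or the attempts run out.
# wait_for_es is a hypothetical helper. Arguments: base URL, number of
# attempts (default 30), seconds to pause between attempts (default 2).
wait_for_es() {
  url="$1"
  tries="${2:-30}"
  pause="${3:-2}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -f makes curl fail on HTTP errors; -sS hides progress but keeps errors.
    if curl -fsS "$url/_cluster/health" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep "$pause"
  done
  return 1
}
```

For example, `wait_for_es http://ip:9200 && echo "Elasticsearch is up"`, with ip replaced by the host address.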

To stop and remove the containers:

shell

docker compose down

1.3.3 Additional notes

1.3.3.1 Elasticsearch

- 进入容器检查配置文件是否被修改

shell

docker exec -it com_elasticsearch_8.7.1 /bin/bash- 检查配置文件

shell

cat /usr/share/elasticsearch/config/elasticsearch.yml

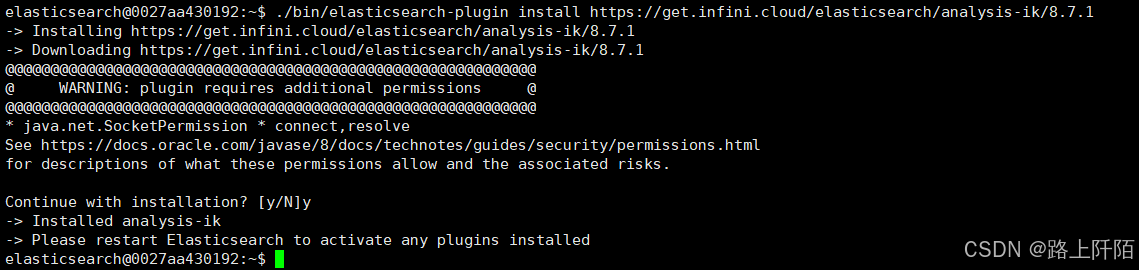

- 安装 ik 分词器

在容器内执行安装命令

shell

./bin/elasticsearch-plugin install https://get.infini.cloud/elasticsearch/analysis-ik/8.7.1

- 重启容器使新安装的分词器生效

shell

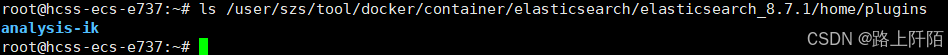

docker restart com_elasticsearch_8.7.1- 在宿主机挂载插件目录检查是否成功安装

shell

ls /user/szs/tool/docker/container/elasticsearch/elasticsearch_8.7.1/home/plugins
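Besides listing the mounted directory, the running node can be asked which plugins it has loaded. This is a minimal sketch, assuming security is disabled as configured in 1.1.1; `es_has_plugin` is a hypothetical helper, and `analysis-ik` is assumed to be the name under which the plugin registers.

```shell
#!/bin/sh
# Sketch: ask the running node which plugins it has loaded.
# es_has_plugin is a hypothetical helper. Arguments: base URL, plugin name.
es_has_plugin() {
  # h=component limits the _cat/plugins output to the plugin name column;
  # awk compares the first field, so padding around the name is ignored.
  curl -fsS "$1/_cat/plugins?h=component" \
    | awk -v p="$2" '$1 == p { found = 1 } END { exit !found }'
}
```

For example, `es_has_plugin http://ip:9200 analysis-ik && echo "IK analyzer loaded"`.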

1.3.3.2 Kibana

- Enter the container

shell

docker exec -it elk_kibana_8.7.1 /bin/bash

- Check whether the Kibana configuration file was applied

shell

cat /usr/share/kibana/config/kibana.yml

- Open the Kibana web interface

shell

http://ip:5601

2 Application test

2.1 Add the dependencies

xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.scheme</groupId>
    <artifactId>CodeScheme</artifactId>
    <version>1.0-SNAPSHOT</version>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.2.4</version>
        <relativePath/>
    </parent>

    <properties>
        <maven.compiler.source>17</maven.compiler.source>
        <maven.compiler.target>17</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    </properties>

    <dependencies>
        <!-- Spring Boot Web -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.projectlombok/lombok -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.18.36</version>
        </dependency>
        <dependency>
            <groupId>cn.hutool</groupId>
            <artifactId>hutool-all</artifactId>
            <version>5.8.16</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/net.logstash.logback/logstash-logback-encoder -->
        <!-- ELK test -->
        <!-- Logstash log encoder -->
        <dependency>
            <groupId>net.logstash.logback</groupId>
            <artifactId>logstash-logback-encoder</artifactId>
            <version>7.4</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-logging</artifactId>
        </dependency>
        <!-- ELK test -->
    </dependencies>
</project>

2.2 logback-spring.xml

xml

<?xml version="1.0" encoding="UTF-8"?>
<!-- Log levels from lowest to highest: TRACE < DEBUG < INFO < WARN < ERROR. If the level is set to WARN, messages below WARN are not output. -->
<configuration scan="true" scanPeriod="10 seconds">
    <contextName>logback</contextName>

    <!-- name is the variable name; its value is read from the Spring property named by source and inserted into the logger context. Once defined, the variable can be referenced with "${}". -->
    <springProperty scope="context" name="log.path" source="logging.path"/>
    <!-- Logstash host -->
    <springProperty name="LOG_STASH_HOST" scope="context" source="logstash.host" defaultValue="127.0.0.1"/>

    <!-- Log format -->
    <!-- These converters come from Spring Boot (its Logback extensions) and provide colored output and friendlier exception printing. They are available only with spring-boot-starter-logging or when the corresponding classes are on the classpath. -->
    <conversionRule conversionWord="clr" converterClass="org.springframework.boot.logging.logback.ColorConverter"/>
    <conversionRule conversionWord="wex"
                    converterClass="org.springframework.boot.logging.logback.WhitespaceThrowableProxyConverter"/>
    <conversionRule conversionWord="wEx"
                    converterClass="org.springframework.boot.logging.logback.ExtendedWhitespaceThrowableProxyConverter"/>

    <!-- Output to the console -->
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <!-- This appender is for development. Only the minimum level is configured; the console shows events at or above this level. -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>info</level>
        </filter>
        <encoder>
            <pattern>%d{yy-MM-dd.HH:mm:ss.SSS} [%-16t] %-5p %-22c{0}%X{ServiceId} -%X{trace-id} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- Output to file -->
    <!-- Time-based rolling file for INFO logs -->
    <appender name="INFO_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!-- Path and name of the log file currently being written -->
        <file>./data/log/log_info.log</file>
        <!-- Log file output format -->
        <encoder>
            <pattern>%d{yy-MM-dd.HH:mm:ss.SSS} [%-16t] %-5p %-22c{0}%X{ServiceId} -%X{trace-id} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
        <!-- Rolling policy: roll by date and by size -->
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <!-- Daily archive path and file name pattern -->
            <fileNamePattern>./data/log/log-info-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>100MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <!-- Number of days to keep log files -->
            <maxHistory>15</maxHistory>
            <totalSizeCap>10GB</totalSizeCap>
        </rollingPolicy>
    </appender>

    <!-- Time-based rolling file for ERROR logs -->
    <appender name="ERROR_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!-- Path and name of the log file currently being written -->
        <file>./data/log/log_error.log</file>
        <!-- Log file output format -->
        <encoder>
            <pattern>%d{yy-MM-dd.HH:mm:ss.SSS} [%-16t] %-5p %-22c{0}%X{ServiceId} -%X{trace-id} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
        <!-- Rolling policy: roll by date and by size -->
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>./data/log/log-error-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>100MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <!-- Number of days to keep log files (adjust to the disk space available on the server) -->
            <maxHistory>7</maxHistory>
            <totalSizeCap>5GB</totalSizeCap>
        </rollingPolicy>
        <!-- WARN level and above -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>WARN</level>
        </filter>
    </appender>

    <!-- Asynchronous output -->
    <appender name="ASYNC_FILE_INFO" class="ch.qos.logback.classic.AsyncAppender">
        <!-- When the remaining queue capacity falls below discardingThreshold, TRACE, DEBUG and INFO events are dropped. The default (-1) means 20% of queueSize; 0 drops nothing. -->
        <discardingThreshold>0</discardingThreshold>
        <!-- Queue depth; this value affects performance. The default is 256. -->
        <queueSize>8192</queueSize>
        <!-- neverBlock=true may lose log events, but application performance is not affected -->
        <neverBlock>true</neverBlock>
        <!-- Whether to extract caller data -->
        <includeCallerData>false</includeCallerData>
        <appender-ref ref="INFO_FILE"/>
    </appender>

    <appender name="ASYNC_FILE_ERROR" class="ch.qos.logback.classic.AsyncAppender">
        <!-- When the remaining queue capacity falls below discardingThreshold, TRACE, DEBUG and INFO events are dropped. The default (-1) means 20% of queueSize; 0 drops nothing. -->
        <discardingThreshold>0</discardingThreshold>
        <!-- Queue depth; this value affects performance. The default is 256. -->
        <queueSize>1024</queueSize>
        <!-- neverBlock=true may lose log events, but application performance is not affected -->
        <neverBlock>true</neverBlock>
        <!-- Whether to extract caller data -->
        <includeCallerData>false</includeCallerData>
        <appender-ref ref="ERROR_FILE"/>
    </appender>

    <!-- Development profile: debug-level logging for the package below -->
    <springProfile name="dev">
        <logger name="com.nmys.view" level="debug"/>
    </springProfile>

    <!-- Appender that sends events to Logstash -->
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Logstash collection port that the application can reach -->
        <destination>${LOG_STASH_HOST}:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>

    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="ASYNC_FILE_INFO"/>
        <appender-ref ref="ASYNC_FILE_ERROR"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>

2.3 Configuration files

yaml

server:
  port: 8090
spring:
  application:
    name: code-scheme-elk
  profiles:
    active: dev

yaml

logstash:
  host: ip # replace ip with the address of the Logstash host

2.4 Test code

java

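import java.util.UUID;

import lombok.extern.slf4j.Slf4j;
import org.slf4j.MDC;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@Slf4j
@RestController
@RequestMapping("elk")
public class LogTestController {

    @GetMapping("/generate-log")
    public String generateLog() {
        // The key must match %X{trace-id} in logback-spring.xml
        MDC.put("trace-id", UUID.randomUUID().toString());
        log.trace("This is TRACE message");
        log.debug("This is DEBUG message");
        log.info("This is INFO message");
        log.warn("This is WARN message");
        // Log an error together with an exception
        try {
            throw new RuntimeException("Test exception");
        } catch (Exception e) {
            log.error("This is ERROR message with exception", e);
        }
        MDC.clear();
        return "Logs generated!";
    }
}

2.5 Check whether the logs were shipped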
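To have something to check, the test endpoint can be called a few times first. The sketch below is illustrative: both function names are hypothetical, the application is assumed to listen on port 8090 as configured in 2.3, and Elasticsearch is assumed to accept plain HTTP without credentials as configured in 1.1.1.

```shell
#!/bin/sh
# Sketch: call the test endpoint a few times, then ask Elasticsearch how many
# documents an index holds. Both function names are hypothetical helpers.

# Arguments: application base URL, number of requests (default 3).
generate_logs() {
  n="${2:-3}"
  i=0
  while [ "$i" -lt "$n" ]; do
    curl -fsS "$1/elk/generate-log" >/dev/null || return 1
    i=$((i + 1))
  done
}

# Arguments: Elasticsearch base URL, index name. Prints the document count.
index_doc_count() {
  # h=count limits the _cat/count output to the count column.
  curl -fsS "$1/_cat/count/$2?h=count" | tr -d '[:space:]'
}
```

For example, `generate_logs http://localhost:8090 5` followed by `index_doc_count http://ip:9200 group-buy-test-log-2025.09.08`. With the root level set to info, each request should add at least three events (INFO, WARN and ERROR), in addition to whatever the application itself logs.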

2.5.1 检查索引创建情况

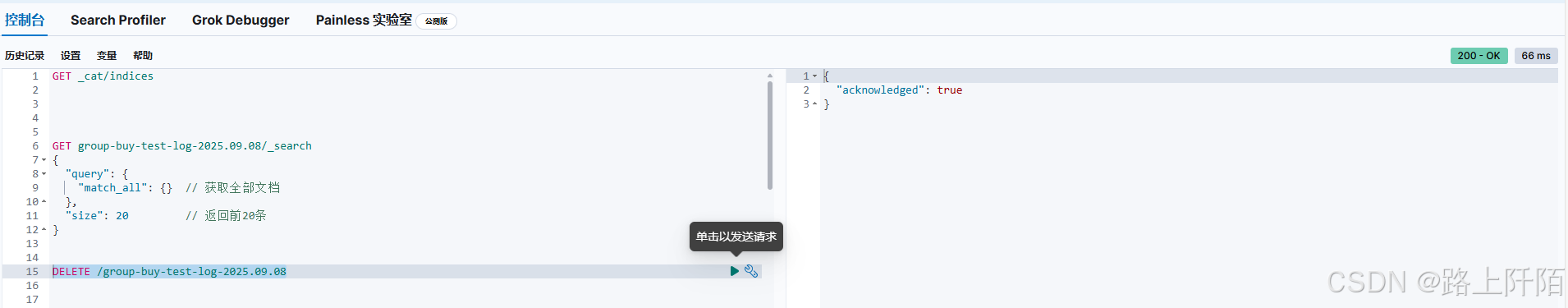

在 kibana 开发工具输入命令:

shell

GET _cat/indices

输出如下:

shell

yellow open group-buy-test-log-2025.09.08 4Zz5W21XR4SQv6ZE0tonog 1 1 151 0 124.5kb 124.5kb分析如下:

shell

yellow # yellow 状态,主分片正常,但副本分片未激活(单节点集群的典型状态)

open group-buy-test-log-2025.09.08

4Zz5W21XR4SQv6ZE0tonog # 索引唯一ID

1 1 # 分片配置:1主分片 + 1副本分片

11 0 # 文档数量:11个有效文档 + 0个已删除文档

37kb 37kb # 存储大小:总大小37KB,主分片大小37KB2.5.2 查看索引数据:

shell

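GET group-buy-test-log-2025.09.08/_search
{
  "query": {
    "match_all": {} // match every document
  },
  "size": 20 // return the first 20 hits
}

2.5.3 Delete indices

shell

// Delete a single index
DELETE /group-buy-test-log-2025.09.08

// Delete multiple indices with a wildcard
// (Elasticsearch 8 rejects wildcard deletes unless action.destructive_requires_name is set to false)
DELETE /group-buy-test-log-*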
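Because the index name carries a date suffix, old daily indices can also be selected by name and deleted one at a time. The following is a minimal sketch of the selection step only; `indices_before` is a hypothetical helper, and the date suffix is assumed to follow the YYYY.MM.dd form produced by the Logstash index setting in 1.1.3.

```shell
#!/bin/sh
# Sketch: pick the daily indices that are older than a cutoff date.
# indices_before is a hypothetical helper. It reads index names on stdin and
# prints those whose YYYY.MM.dd suffix sorts before the cutoff.
# Arguments: index prefix (including the trailing dash), cutoff date.
indices_before() {
  prefix="$1"
  cutoff="$2"
  while IFS= read -r name; do
    case "$name" in
      "$prefix"[0-9][0-9][0-9][0-9].[0-9][0-9].[0-9][0-9])
        day="${name#"$prefix"}"
        # Zero-padded dates compare correctly as plain strings.
        if expr "$day" \< "$cutoff" >/dev/null; then
          printf '%s\n' "$name"
        fi
        ;;
    esac
  done
}
```

A possible pipeline is `curl -fsS "http://ip:9200/_cat/indices/group-buy-test-log-*?h=index" | indices_before group-buy-test-log- 2025.09.01`, with each printed name then passed to `curl -X DELETE "http://ip:9200/<name>"`. Names that do not end in a well-formed date are skipped rather than guessed at.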