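1.1 k8s high-availability cluster planning

1.1.1 Server inventory

| Type | Server IP addresses | Notes |
|---|---|---|
| Ansible (2) | 192.168.121.101/102 | K8s cluster deployment servers; can share hosts with other roles |
| K8s Master (3) | 192.168.121.101/102/103 | K8s control plane, made highly available through one VIP |
| Harbor (2) | 192.168.121.104/105 | Highly available image registry servers |
| Etcd (at least 3) | 192.168.121.106/107/108 | Servers that store the k8s cluster data |
| HAProxy (2) | 192.168.121.109/110 | Highly available proxy servers in front of the kube-apiserver |
| Node (2 to N) | 192.168.121.111/112/113 | Servers that actually run the containers; an HA environment needs at least two |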

1.2 Server preparation

| Type | Server IP | Hostname | VIP |
|---|---|---|---|
| master1 | 192.168.121.101 | master1 | 192.168.121.188 |
| master2 | 192.168.121.102 | master2 | 192.168.121.188 |
| master3 | 192.168.121.103 | master3 | 192.168.121.188 |
| Harbor1 | 192.168.121.104 | harbor1 | |
| Harbor2 | 192.168.121.105 | harbor2 | |
| etcd1 | 192.168.121.106 | etcd1 | |
| etcd2 | 192.168.121.107 | etcd2 | |
| etcd3 | 192.168.121.108 | etcd3 | |
| haproxy1 | 192.168.121.109 | haproxy1 | |
| haproxy2 | 192.168.121.110 | haproxy2 | |
| node1 | 192.168.121.111 | node1 | |
| node2 | 192.168.121.112 | node2 | |
| node3 | 192.168.121.113 | node3 | |

1.3 k8s cluster software list

bash

API endpoint (VIP:port): 192.168.121.188:6443

OS: Ubuntu Server 22.04.5

k8s version: 1.20.x

calico: 3.4.4

1.4 Base environment preparation

1.4.1 System configuration

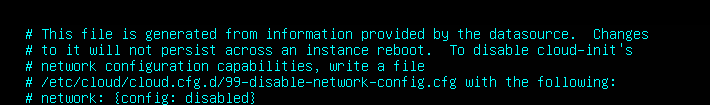

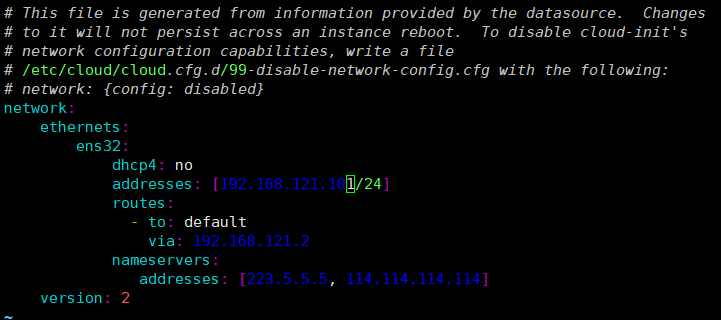

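1.4.1.1 Configure a static IP

Give each virtual machine a static IP address so that the address does not change after a reboot.

bash

# Run on every server, or finish one server and clone the remaining servers from that template

root@master1:~# vim /etc/netplan/50-cloud-init.yaml

network:

  ethernets: # Ethernet interface configuration

    eht0: # NIC name (eht0 on these servers; use the name that `ip a` shows on yours)

      dhcp4: no # disable DHCP for IPv4

      addresses: [192.168.121.101/24] # static IPv4 address and prefix (/24 = 255.255.255.0)

      routes: # routing configuration

        - to: default # default route (all non-local traffic)

          via: 192.168.121.2 # gateway used to reach external networks

      nameservers: # DNS server configuration

        addresses: [223.5.5.5, 114.114.114.114] # Alibaba Cloud DNS and a public DNS in China

  version: 2 # netplan configuration format version 2

Disable cloud-init's automatic network configuration

bash

vim /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg

network: {config: disabled}

reboot # reboot for the change to take effect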
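When the servers are not cloned from a template, the same netplan file has to be written once per host with a different address. The following is a minimal sketch of a generator, assuming the NIC name, gateway, and DNS servers from the example above; the function name `render_netplan` is hypothetical, and the result is written to a scratch file rather than directly into /etc/netplan/.

```bash
#!/usr/bin/env bash
# Sketch: render a netplan file for one host. Values mirror the example above.
set -eu

# render_netplan NIC ADDRESS_CIDR GATEWAY
# Prints a netplan v2 document with a static address and a default route.
render_netplan() {
  local nic="$1" addr="$2" gw="$3"
  cat <<EOF
network:
  version: 2
  ethernets:
    ${nic}:
      dhcp4: no
      addresses: [${addr}]
      routes:
        - to: default
          via: ${gw}
      nameservers:
        addresses: [223.5.5.5, 114.114.114.114]
EOF
}

# Write to a scratch file first; copy it into /etc/netplan/ only after review.
out="$(mktemp)"
render_netplan eht0 192.168.121.102/24 192.168.121.2 > "$out"
cat "$out"
```

Only the address changes from host to host, so the call can be repeated with 192.168.121.103/24 and so on.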

1.4.1.2 配置主机名

bash

# 每台服务器都需要修改

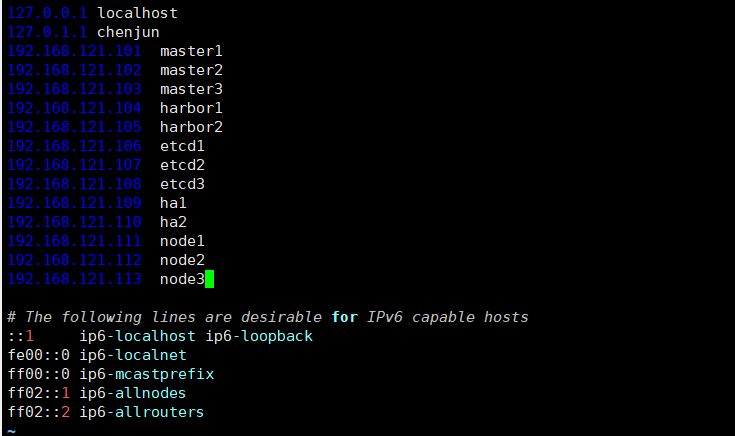

root@master1:~# hostnamectl set-hostname 主机名1.4.1.3 配置 hosts文件

bash

# 每台服务器都需要执行,也可以在一台服务器完成,剩余的服务器克隆模板服务器

root@master1:~# vim /etc/hosts

192.168.121.101 master1

192.168.121.102 master2

192.168.121.103 master3

192.168.121.104 harbor1

192.168.121.105 harbor2

192.168.121.106 etcd1

192.168.121.107 etcd2

192.168.121.108 etcd3

192.168.121.109 haproxy1

192.168.121.110 haproxy2

192.168.121.111 node1

192.168.121.112 node2

192.168.121.113 node3

192.168.121.188 VIP

1.4.1.4 Disable SELinux

bash

# Run getenforce to check the SELinux mode

# Ubuntu does not install or enable SELinux by default

root@master1:~# getenforce

Command 'getenforce' not found, but can be installed with:

apt install selinux-utils

# This output means SELinux is not installed, so nothing needs to be done

# Enforcing: SELinux is active and blocks policy violations.

# Permissive: SELinux does not block violations but logs them.

# Disabled: SELinux is completely off.

1.4.1.5 Disable the firewall

bash

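# Check the firewall status

root@master1:~# ufw status

Status: inactive

# This output means the firewall is not enabled

# ufw enable: turn the firewall on and start it at boot

# ufw disable: turn the firewall off

1.4.1.6 Permanently disable swap

bash

root@master1:~# vim /etc/fstab

# Comment out the swap entry: #/swap.img none swap sw 0 0

# Takes effect after a reboot; run swapoff -a to turn swap off immediately

1.4.1.7 Adjust kernel parameters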

bash

# Load the br_netfilter module

modprobe br_netfilter

# Verify that the module is loaded

lsmod |grep br_netfilter

# Set the kernel parameters

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the new kernel parameters

sysctl -p /etc/sysctl.d/k8s.conf
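Because every server is configured by hand or cloned, it can help to confirm that the three settings really ended up in the file. The sketch below checks a sysctl configuration file for them; `check_sysctl_conf` is a hypothetical helper name, and the demo runs against a temporary copy instead of the real /etc/sysctl.d/k8s.conf.

```bash
#!/usr/bin/env bash
set -eu

# check_sysctl_conf FILE
# Succeeds only if each required key is present in FILE with the value 1.
check_sysctl_conf() {
  local file="$1" key missing=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Split each line on "=" (with optional spaces) and compare key and value.
    if ! awk -F'[[:space:]]*=[[:space:]]*' -v k="$key" \
         '$1 == k && $2 == "1" { found = 1 } END { exit !found }' "$file"; then
      echo "missing or not 1: ${key}"
      missing=1
    fi
  done
  return "$missing"
}

# Demo on a scratch file with the same content as the heredoc above.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
check_sysctl_conf "$conf" && echo "all required keys present"
```

On a real host the call would be `check_sysctl_conf /etc/sysctl.d/k8s.conf`. This inspects the file only; `sysctl -n net.ipv4.ip_forward` shows the value the running kernel uses.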

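1.4.1.8 Configure the Alibaba Cloud apt mirror

bash

# Back up the original sources file

cp /etc/apt/sources.list /etc/apt/sources.list.bak

# Show the Ubuntu release codename

lsb_release -c

# Edit the sources file

vim /etc/apt/sources.list

# Replace the contents with the Alibaba Cloud mirror entries below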

deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

# Refresh the package index

apt update

1.4.1.9 Set the time zone

bash

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime # switch to the Asia/Shanghai time zone

1.4.1.10 Install common tools

bash

apt-get update

apt-get purge ufw lxd lxd-client lxcfs lxc-common # remove packages that are not needed

apt-get install iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc openssh-server iotop unzip zip

1.4.1.11 Install Docker

bash

apt-get update

apt-get -y install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

apt-get -y update && apt-get -y install docker-ce

docker info

1.4.1.12 Take a snapshot

1.4.2 High-availability load balancing

1.4.2.1 keepalived high availability

Install keepalived on both haproxy1 and haproxy2

bash

root@haproxy1:/# apt install keepalived -y

root@haproxy2:/# apt install keepalived -y

Copy the sample configuration to /etc/keepalived/

bash

root@haproxy1:/etc/keepalived# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

root@haproxy2:/etc/keepalived# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

Edit the configuration file on each server

keepalived configuration on haproxy1 (primary)

bash

# Primary configuration file

root@haproxy1:/etc/keepalived# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER # primary

interface eht0 # NIC to bind (use your own interface name)

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.121.188 dev eht0 label eht0:0

192.168.121.189 dev eht0 label eht0:1

192.168.121.190 dev eht0 label eht0:2

}

}

# Restart keepalived

root@haproxy1:/etc/keepalived# systemctl restart keepalived

# Enable it at boot

root@haproxy1:/etc/keepalived# systemctl enable keepalived

# Check whether the VIPs are bound

root@haproxy1:/etc/keepalived# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eht0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:4b:b4:b6 brd ff:ff:ff:ff:ff:ff

altname enp2s0

inet 192.168.121.109/24 brd 192.168.121.255 scope global eht0

valid_lft forever preferred_lft forever

inet 192.168.121.188/32 scope global eht0:0

valid_lft forever preferred_lft forever

inet 192.168.121.189/32 scope global eht0:1

valid_lft forever preferred_lft forever

inet 192.168.121.190/32 scope global eht0:2

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe4b:b4b6/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:29:eb:58:5c brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

keepalived configuration on haproxy2 (backup)

bash

# Backup configuration file

root@haproxy2:/etc/keepalived# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP # 备BACKUP

interface eth0 # 绑定网卡名称

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 80 # 降低优先级

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.121.188 dev eth0 label eht0:0

192.168.121.189 dev eth0 label eht0:1

192.168.121.190 dev eth0 label eht0:2

}

}

# 重启keepalived

root@haproxy2:/etc/keepalived# systemctl restart keepalived

# 设置开机自启动

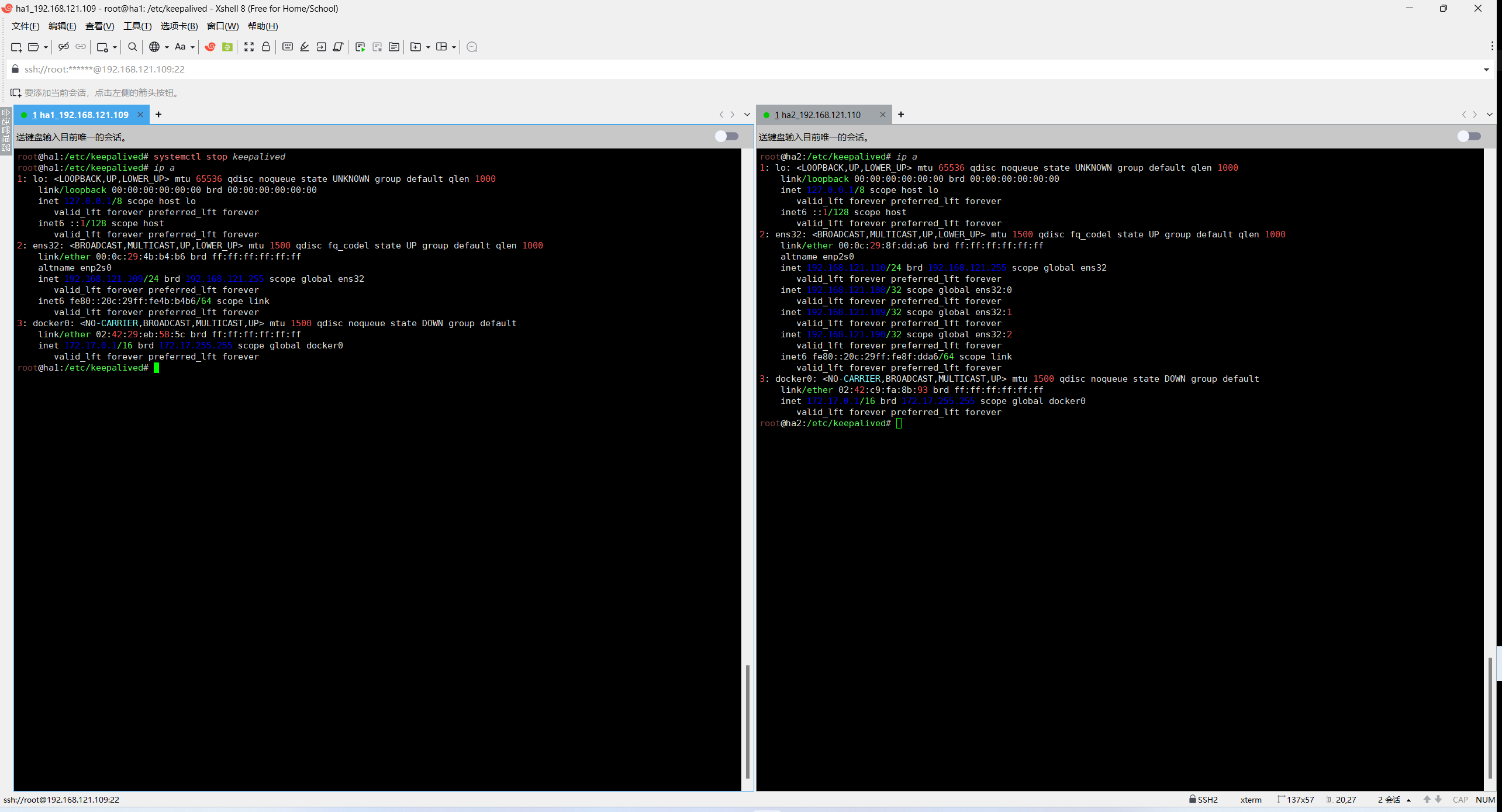

root@haproxy2:/etc/keepalived# systemctl enable keepalived测试vip漂移是否正常

bash

# 主服务器关闭keepalived

root@haproxy1:/etc/keepalived# systemctl stop keepalived

root@haproxy1:/etc/keepalived# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eht0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:4b:b4:b6 brd ff:ff:ff:ff:ff:ff

altname enp2s0

inet 192.168.121.109/24 brd 192.168.121.255 scope global eht0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe4b:b4b6/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:29:eb:58:5c brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

# Check the state on the backup

root@haproxy2:/etc/keepalived# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eht0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:8f:dd:a6 brd ff:ff:ff:ff:ff:ff

altname enp2s0

inet 192.168.121.110/24 brd 192.168.121.255 scope global eht0

valid_lft forever preferred_lft forever

inet 192.168.121.188/32 scope global eht0:0

valid_lft forever preferred_lft forever

inet 192.168.121.189/32 scope global eht0:1

valid_lft forever preferred_lft forever

inet 192.168.121.190/32 scope global eht0:2

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe8f:dda6/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:c9:fa:8b:93 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

The VIPs fail over as expected. After keepalived is started again on the primary, the VIPs move back automatically.
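Reading the full `ip a` output on both hosts is error-prone when the test is repeated. A small filter can answer the question "does this host currently hold the VIP?" directly. This is a sketch under the assumption that the input has the shape of `ip -o -4 addr show` output; `has_vip` is a hypothetical helper, and the sample text is illustrative rather than captured from the servers.

```bash
#!/usr/bin/env bash
set -eu

# has_vip VIP
# Reads address listings on stdin and succeeds if VIP appears as an
# address, that is, preceded by a space and followed by its "/prefix".
has_vip() {
  local vip="$1"
  grep -Fq " ${vip}/"
}

# Sample text in the shape of `ip -o -4 addr show` output (illustrative only).
sample='2: eht0    inet 192.168.121.110/24 brd 192.168.121.255 scope global eht0
2: eht0    inet 192.168.121.188/32 scope global eht0:0'

if printf '%s\n' "$sample" | has_vip 192.168.121.188; then
  echo "VIP present"
fi

# On a real host: ip -o -4 addr show | has_vip 192.168.121.188
```

Matching on the surrounding space and slash keeps 192.168.121.18 from being reported as present when only 192.168.121.188 is bound.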

1.4.2.2 HAProxy load balancer

Install HAProxy

bash

root@haproxy1:/etc/keepalived# apt install haproxy -y

root@haproxy2:/etc/keepalived# apt install haproxy -y

Configure the listener

bash

root@haproxy1:/# vim /etc/haproxy/haproxy.cfg

root@haproxy2:/# vim /etc/haproxy/haproxy.cfg

# Append the following to the end of the file

# A listen block named k8s-api-6443 that load balances the Kubernetes API

listen k8s-api-6443

  # Bind to port 6443 on the VIP 192.168.121.188 and accept client requests there

  bind 192.168.121.188:6443

  # Work in TCP mode (the TLS traffic to the Kubernetes API is passed through unchanged)

  mode tcp

  # Backend server master1: port 6443 on 192.168.121.101

  # inter 3s: health check every 3 seconds

  # fall 3: mark the server down after 3 consecutive failed checks

  # rise 1: mark the server up after 1 successful check

  server master1 192.168.121.101:6443 check inter 3s fall 3 rise 1

  # Backend server master2, same settings as master1

  server master2 192.168.121.102:6443 check inter 3s fall 3 rise 1

  # Backend server master3, same settings as master1

  server master3 192.168.121.103:6443 check inter 3s fall 3 rise 1

# Restart HAProxy and enable it at boot

root@haproxy1:/etc/keepalived# systemctl restart haproxy && systemctl enable haproxy

root@haproxy2:/etc/keepalived# systemctl restart haproxy && systemctl enable haproxy

# It is expected that HAProxy fails to start on the backup: it cannot bind to an address the host does not hold, and VIP 188 lives on the primary. Allow non-local binds in /etc/sysctl.conf to fix this.

root@haproxy1:# vim /etc/sysctl.conf # configure the primary as well, otherwise HAProxy there cannot start while the VIP sits on haproxy2

root@haproxy2:# vim /etc/sysctl.conf

# Set to 1 if the line exists, add it if it does not

net.ipv4.ip_nonlocal_bind = 1

root@haproxy2:/etc/keepalived# sysctl -p

net.ipv4.ip_forward = 1

vm.max_map_count = 262144

kernel.pid_max = 4194303

fs.file-max = 1000000

net.ipv4.tcp_max_tw_buckets = 6000

net.netfilter.nf_conntrack_max = 2097152

net.ipv4.ip_nonlocal_bind = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness = 0

root@haproxy2:/etc/keepalived# systemctl restart haproxy

root@haproxy2:/etc/keepalived# systemctl status haproxy

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/lib/systemd/system/haproxy.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2025-09-20 16:04:56 CST; 4s ago

Docs: man:haproxy(1)

file:/usr/share/doc/haproxy/configuration.txt.gz

Process: 3030 ExecStartPre=/usr/sbin/haproxy -Ws -f $CONFIG -c -q $EXTRAOPTS (code=exited, status=0/SUCCESS)

Main PID: 3032 (haproxy)

Tasks: 2 (limit: 2177)

Memory: 71.2M

CPU: 99ms

CGroup: /system.slice/haproxy.service

├─3032 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

└─3034 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

Sep 20 16:04:56 ha2 systemd[1]: Starting HAProxy Load Balancer...

Sep 20 16:04:56 ha2 haproxy[3032]: [WARNING] (3032) : parsing [/etc/haproxy/haproxy.cfg:23] : 'option httplog' not usable with proxy 'k>

Sep 20 16:04:56 ha2 haproxy[3032]: [NOTICE] (3032) : New worker #1 (3034) forked

Sep 20 16:04:56 ha2 systemd[1]: Started HAProxy Load Balancer.

Sep 20 16:04:56 ha2 haproxy[3034]: [WARNING] (3034) : Server k8s-api-6443/master1 is DOWN, reason: Layer4 connection problem, info: "Co>

Sep 20 16:04:57 ha2 haproxy[3034]: [WARNING] (3034) : Server k8s-api-6443/master2 is DOWN, reason: Layer4 connection problem, info: "Co>

Sep 20 16:04:58 ha2 haproxy[3034]: [WARNING] (3034) : Server k8s-api-6443/master3 is DOWN, reason: Layer4 connection problem, info: "Co>

Sep 20 16:04:58 ha2 haproxy[3034]: [NOTICE] (3034) : haproxy version is 2.4.24-0ubuntu0.22.04.2

Sep 20 16:04:58 ha2 haproxy[3034]: [NOTICE] (3034) : path to executable is /usr/sbin/haproxy

Sep 20 16:04:58 ha2 haproxy[3034]: [ALERT] (3034) : proxy 'k8s-api-6443' has no server available!

1.4.3 Harbor installation

Upload the installer package to both harbor1 and harbor2

bash

root@harbor1:/apps# tar -xvf harbor-offline-installer-v2.4.2.tgz

root@harbor1:/apps# cd harbor

root@harbor1:/apps/harbor# cp harbor.yml.tmpl harbor.yml

root@harbor1:/apps/harbor# vim harbor.yml

# Set hostname to the host's domain name (harbor1.chenjun.com on harbor1)

hostname: harbor1.chenjun.com

# Generate the SSL certificate

# Generate the private key

root@harbor1:/apps/harbor# mkdir certs

root@harbor1:/apps/harbor# cd certs/

root@harbor1:/apps/harbor/certs# openssl genrsa -out ./harbor-ca.key

root@harbor1:/apps/harbor/certs# openssl req -x509 -new -nodes -key ./harbor-ca.key -subj "/CN=harbor1.chenjun.com" -days 7120 -out ./harbor-ca.crt

root@harbor1:/apps/harbor/certs# ls

harbor-ca.crt harbor-ca.key

root@harbor1:/apps/harbor/certs# cd ..

root@harbor1:/apps/harbor# vim harbor.yml

# Set the certificate and private key paths

https:

  port: 443

  certificate: /apps/harbor/certs/harbor-ca.crt

  private_key: /apps/harbor/certs/harbor-ca.key

# Change the Harbor admin password

harbor_admin_password: 123456

Install Harbor

bash

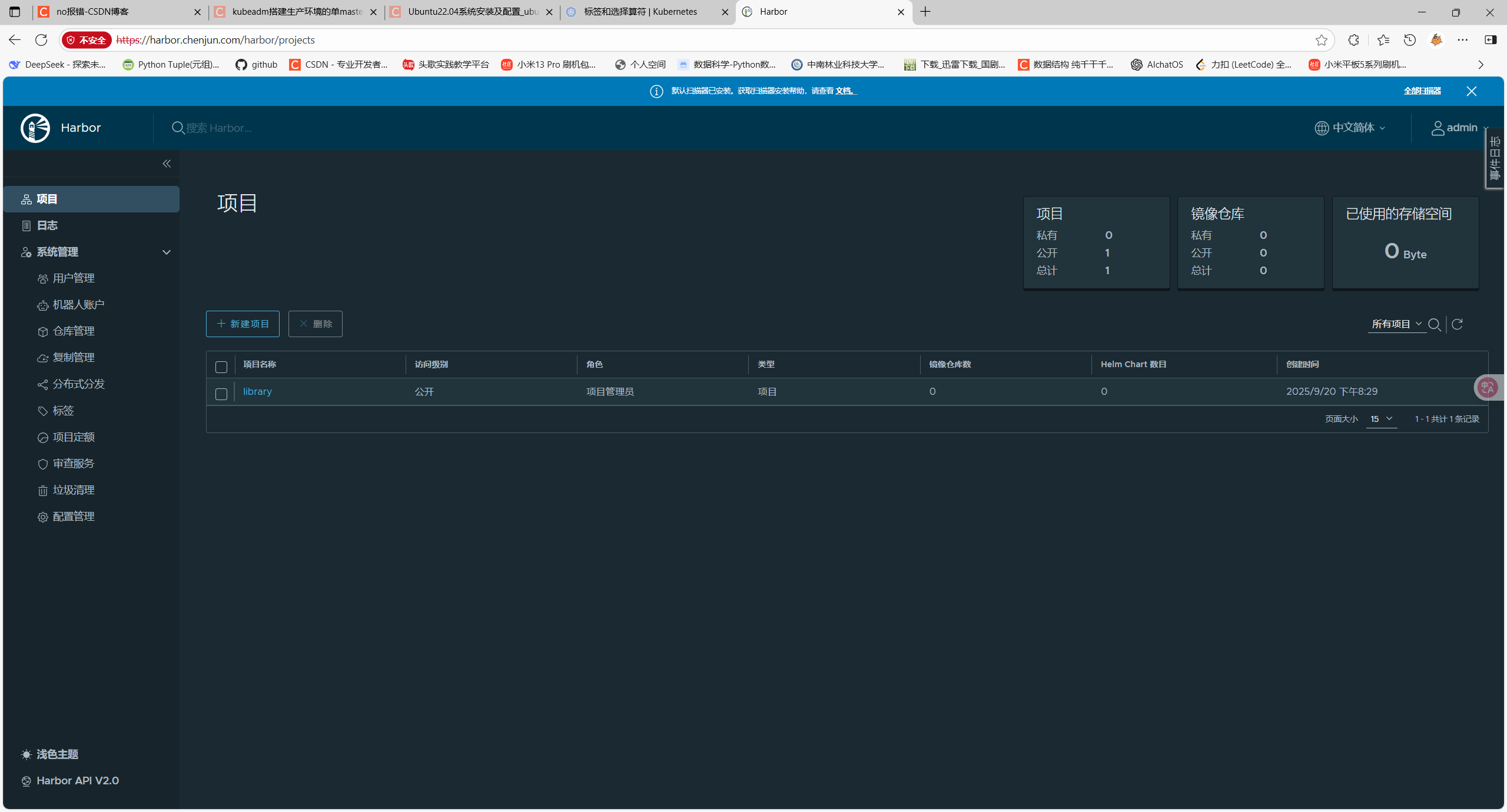

root@harbor1:/apps/harbor# ./install.sh --with-trivy --with-chartmuseum

Once the installation finishes, the web UI is reachable in a browser at the domain name configured earlier, harbor1.chenjun.com.
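The four harbor.yml edits described above have to be repeated on harbor2 with its own hostname, so scripting them may save some typing. The sketch below is one way to do it with GNU sed; `configure_harbor_yml` is a hypothetical helper, and the stand-in file only assumes that the four keys appear in harbor.yml in the layout shown (it is not a copy of the real template).

```bash
#!/usr/bin/env bash
set -eu

# configure_harbor_yml FILE HOSTNAME CERT KEY PASSWORD
# Rewrites the four settings in place, matching on the key name only.
# Values must not contain "|" or "&", which are special to this sed call.
configure_harbor_yml() {
  local file="$1" host="$2" cert="$3" key="$4" pass="$5"
  sed -i \
    -e "s|^hostname:.*|hostname: ${host}|" \
    -e "s|^\([[:space:]]*certificate:\).*|\1 ${cert}|" \
    -e "s|^\([[:space:]]*private_key:\).*|\1 ${key}|" \
    -e "s|^harbor_admin_password:.*|harbor_admin_password: ${pass}|" \
    "$file"
}

# Stand-in for harbor.yml (assumed layout, trimmed to the relevant keys).
yml="$(mktemp)"
cat > "$yml" <<'EOF'
hostname: reg.mydomain.com
https:
  port: 443
  certificate: /your/certificate/path
  private_key: /your/private/key/path
harbor_admin_password: Harbor12345
EOF

configure_harbor_yml "$yml" harbor1.chenjun.com \
  /apps/harbor/certs/harbor-ca.crt /apps/harbor/certs/harbor-ca.key 123456
cat "$yml"
```

Matching on the key name rather than on the template's placeholder value means the function can be re-run on a file that has already been edited.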

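1.5 k8s cluster deployment

1.5.1 Deploy ansible on master1

bash

root@master1:/# apt install ansible -y

1.5.2 Configure passwordless SSH login

From master1, distribute the SSH key to the three master servers, the three etcd servers, and the three node servers. If master1 has no key pair yet, generate one first with ssh-keygen.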

bash

root@master1:/# ssh-copy-id 192.168.121.101

root@master1:/# ssh-copy-id 192.168.121.102

root@master1:/# ssh-copy-id 192.168.121.103

root@master1:/# ssh-copy-id 192.168.121.106

root@master1:/# ssh-copy-id 192.168.121.107

root@master1:/# ssh-copy-id 192.168.121.108

root@master1:/# ssh-copy-id 192.168.121.111

root@master1:/# ssh-copy-id 192.168.121.112

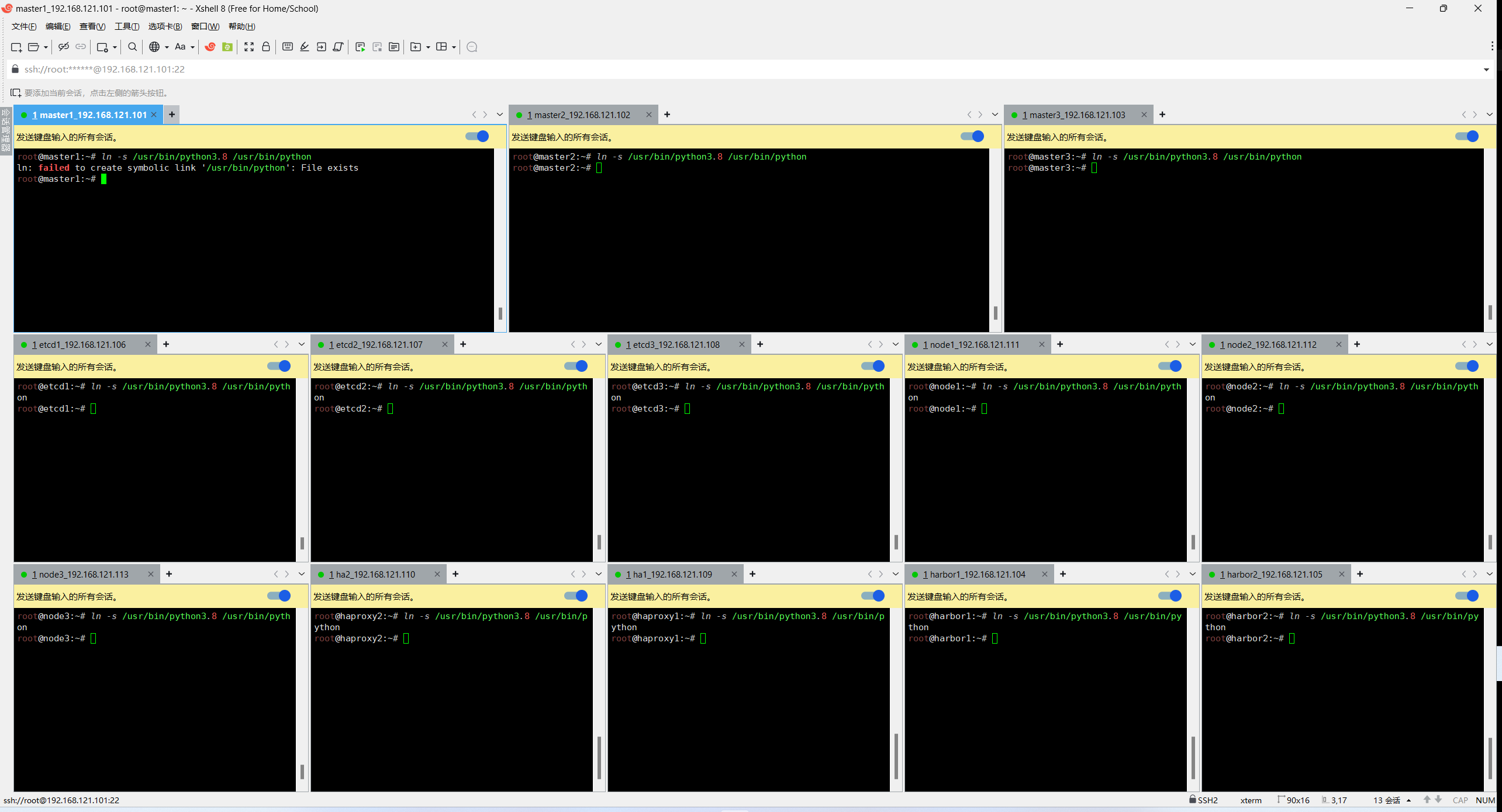

root@master1:/# ssh-copy-id 192.168.121.1131.5.3 配置python3的软连接

使用xshell发送命令到所有会话

bash

ln -s /usr/bin/python3.8 /usr/bin/python
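Without Xshell, the key distribution and the symlink can be driven from master1 in one loop. The following is a sketch only: the `run` and `prepare_hosts` helpers are hypothetical, it assumes root logins over SSH as in the transcript above, and it prints the commands by default instead of executing them.

```bash
#!/usr/bin/env bash
set -eu

# Master, etcd and node addresses from the server table.
HOSTS="192.168.121.101 192.168.121.102 192.168.121.103
192.168.121.106 192.168.121.107 192.168.121.108
192.168.121.111 192.168.121.112 192.168.121.113"

# run CMD...: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# prepare_hosts: push the SSH key and create the python symlink on every host.
prepare_hosts() {
  for h in $HOSTS; do
    run ssh-copy-id "root@${h}"
    run ssh "root@${h}" "ln -sf /usr/bin/python3 /usr/bin/python"
  done
}

prepare_hosts
```

Running the script with `DRY_RUN=0` executes the commands for real; `ssh-copy-id` will still prompt for each host's password once.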

1.5.4 Orchestrate the k8s installation from the deployment node

Download the project source, binaries, and offline images

bash

root@master1:/# export release=3.2.0

root@master1:/# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

root@master1:/# chmod +x ./ezdown

root@master1:/# vim ezdown

# Inside ezdown, set the Docker version to 19.03.15

DOCKER_VER=19.03.15

# Run it (requires working internet access to pull images from the Docker registry)

root@master1:/# ./ezdown -D

Linux: set the proxy environment variables

bash

vim /etc/profile

export http_proxy="http://<proxy-ip>:<port>"

export https_proxy="http://<proxy-ip>:<port>"

source /etc/profile

Docker: internet access through a proxy

bash

Newer Docker versions (in /etc/docker/daemon.json)

"proxies": {

  "http-proxy": "http://127.0.0.1:7890",

  "https-proxy": "http://127.0.0.1:7890"

}

Older Docker versions (systemd drop-in)

# Create the drop-in directory if it does not exist

sudo mkdir -p /etc/systemd/system/docker.service.d

# Create the drop-in file

sudo vim /etc/systemd/system/docker.service.d/http-proxy.conf

[Service]

Environment="HTTP_PROXY=http://127.0.0.1:7890"

Environment="HTTPS_PROXY=http://127.0.0.1:7890"

# Addresses that bypass the proxy (optional)

Environment="NO_PROXY=192.168.121.104,192.168.121.105,harbor1.chenjun.com,localhost,harbor2.chenjun.com,127.0.0.1"

# Reload the systemd configuration

sudo systemctl daemon-reload

# Restart the Docker service

sudo systemctl restart docker

# Check that it started

sudo systemctl status docker

1.5.5 Create the cluster configuration instance

bash

root@master1:~# cd /etc/kubeasz/

# Create a cluster named k8s-01; this copies two files, example/config.yml and example/hosts.multi-node

root@master1:/etc/kubeasz# ./ezctl new k8s-01

2025-09-21 15:27:49 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01

2025-09-21 15:27:49 DEBUG set versions

2025-09-21 15:27:49 DEBUG disable registry mirrors

2025-09-21 15:27:49 DEBUG cluster k8s-01: files successfully created.

2025-09-21 15:27:49 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'

2025-09-21 15:27:49 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'

root@master1:/etc/kubeasz# cd clusters/k8s-01

root@master1:/etc/kubeasz/clusters/k8s-01# ls

config.yml hosts

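# Edit the hosts file to match the cluster's real IPs

[etcd]

192.168.121.106

192.168.121.107

192.168.121.108

# Start with two masters and scale out later

# master node(s)

[kube_master]

192.168.121.101

192.168.121.102

# Start with two nodes and scale out later

# work node(s)

[kube_node]

192.168.121.111

192.168.121.112

# Enable the external load balancer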

[ex_lb]

192.168.121.110 LB_ROLE=backup EX_APISERVER_VIP=192.168.121.188 EX_APISERVER_PORT=6443

192.168.121.109 LB_ROLE=master EX_APISERVER_VIP=192.168.121.188 EX_APISERVER_PORT=6443

# Set the container runtime to docker

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Service CIDR, change if needed

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16"

# Pod CIDR, change if needed

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16"

# [docker] trusted insecure registries: add the IPs of the two Harbor servers

INSECURE_REG: '["127.0.0.1/8","192.168.121.104","192.168.121.105"]'

# Change the NodePort range to 30000-65000

# NodePort Range

NODE_PORT_RANGE="30000-65000"

# Cluster DNS domain, changing it is optional

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="chenjun.local"

# Binary path for api-server and the other components

bin_dir="/usr/local/bin"

# Edit the k8s configuration

root@master1:~# vim config.yml

# Set the access entry point

# Certificate hosts for the k8s master nodes; more IPs and domain names can be added (for example a public IP and domain)

MASTER_CERT_HOSTS:

  - "192.168.121.188" # vip

# [.] enable the registry mirror

ENABLE_MIRROR_REGISTRY: true

# Change the maximum number of pods

# Maximum pods per node

MAX_PODS: 300

# Automatic coredns installation: set to no, it is installed manually later

dns_install: "no"

# Automatic metrics server installation: set to no, it is installed manually later

metricsserver_install: "no"

# Automatic dashboard installation: set to no, it is installed manually later

dashboard_install: "no"

# Skip load balancer initialization

root@master1:/etc/kubeasz# vim /etc/kubeasz/playbooks/01.prepare.yml

- hosts:

  - kube_master

  - kube_node

  - etcd

  #- ex_lb   (ex_lb commented out)

  - chrony

  roles:

  - { role: os-harden, when: "OS_HARDEN|bool" }

  - { role: chrony, when: "groups['chrony']|length > 0" }

# to create CA, kubeconfig, kube-proxy.kubeconfig etc.

- hosts: localhost

  roles:

  - deploy

# prepare tasks for all nodes

- hosts:

  - kube_master

  - kube_node

  - etcd

  roles:

  - prepare

Step 1: initialization

bash

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 01

Step 2: install the etcd cluster

bash

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 02

Step 3: install the Docker runtime

bash

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 03

Step 4: deploy the k8s master nodes

bash

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 04

Step 5: install the worker nodes

bash

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 05

Step 6: install the network plugin

bash

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 06

Test pushing an image to Harbor

bash

# The directory name must match the hostname configured in Harbor

root@master1:/etc/kubeasz# mkdir /etc/docker/certs.d/harbor1.chenjun.com -p

# Copy the CA certificate (not the private key) to the master server

root@harbor1:/# scp /apps/harbor/certs/harbor-ca.crt master1:/etc/docker/certs.d/harbor1.chenjun.com/

# On master1, add a hosts entry for the Harbor server's domain name

root@master1:/etc/kubeasz# vim /etc/hosts

192.168.121.104 harbor1.chenjun.com

root@master1:/etc/kubeasz# docker login harbor1.chenjun.com

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

One problem came up while logging in to the Harbor registry

bash

root@master1:/etc/kubeasz# curl -k https://harbor1.chenjun.com/v2/

The deployment could not be found on Vercel.

DEPLOYMENT_NOT_FOUND

hkg1::f4qpb-1758451462099-e76ed2a7000b域名harbor1.chenjun.com指向 Vercel 的服务器始终访问失败404等报错信息,是开了的问题

在docker配置文件里面 添加禁止的地址解决了

bash

vim /etc/systemd/system/docker.service.d/http-proxy.conf

Environment="NO_PROXY=192.168.121.104,192.168.121.105,harbor1.chenjun.com,loalhosts,harbor2.chenjun.com,127.0.0.1" # 无需禁止的地址上传镜像

bash

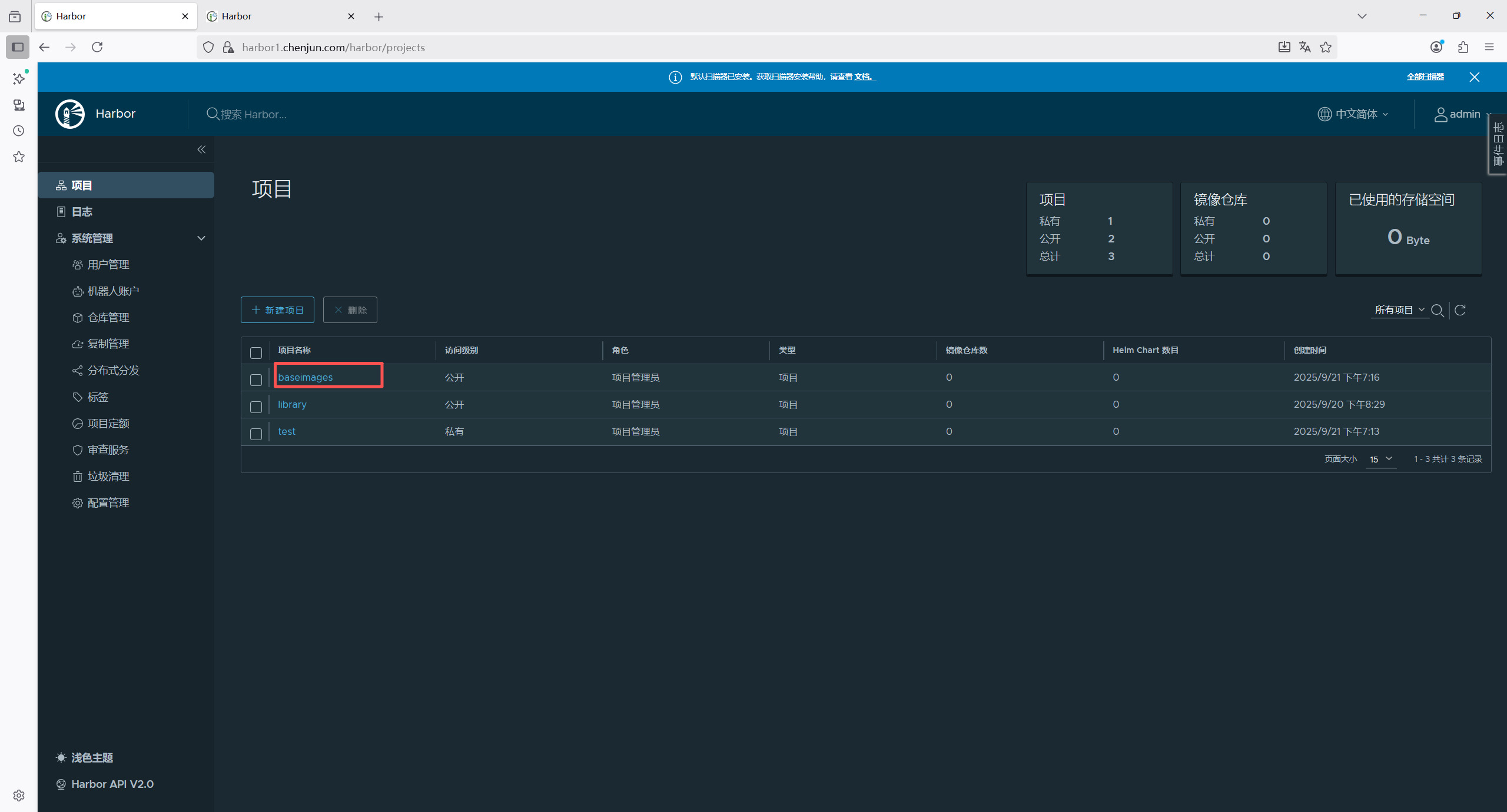

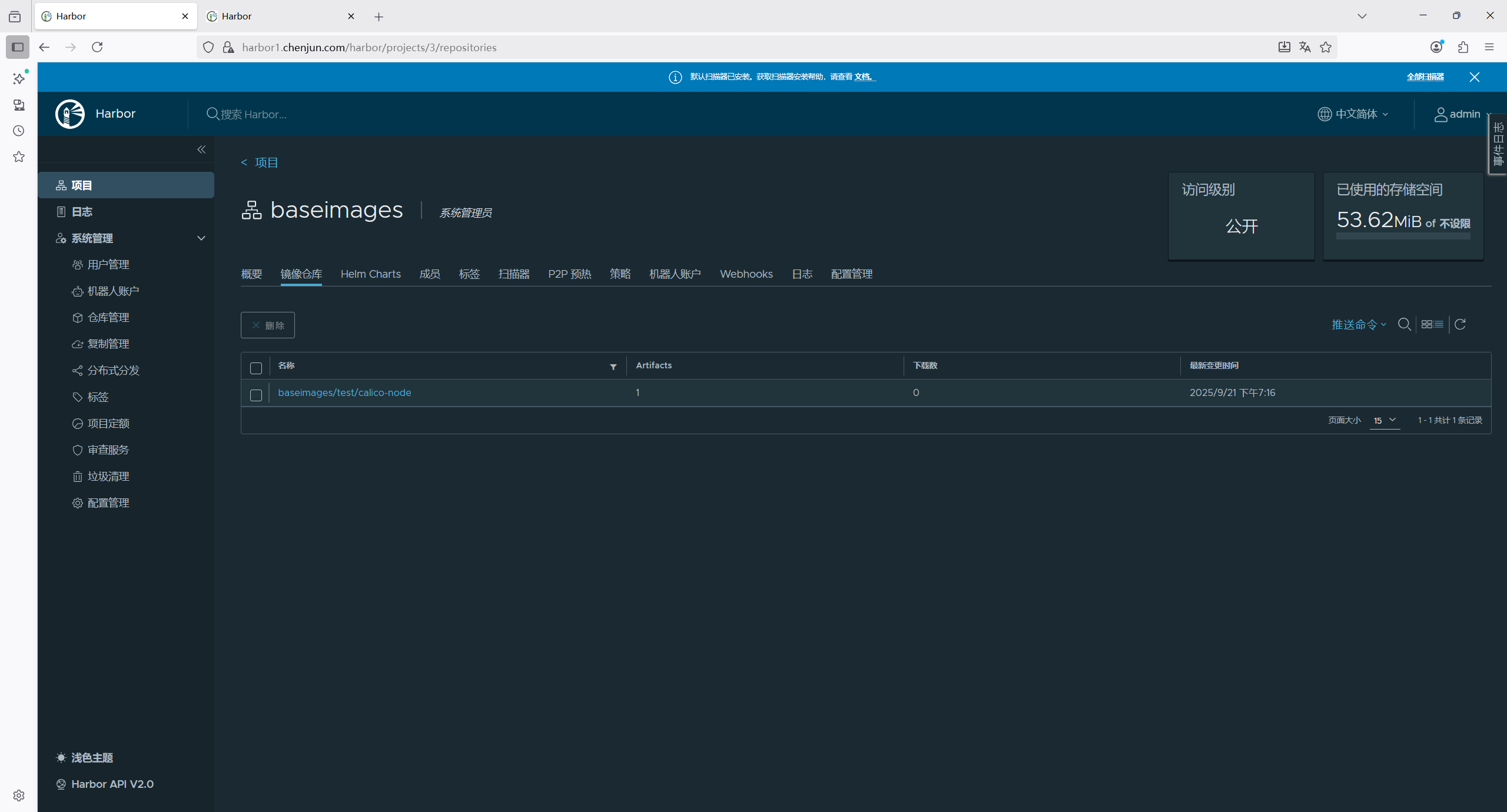

# 需要在harbor web页面新建一个项目

root@master1:/etc/kubeasz# docker tag calico/node:v3.19.3 harbor1.chenjun.com/baseimages/test/calico-node:v3.19.3

root@master1:/etc/kubeasz# docker push harbor1.chenjun.com/baseimages/test/calico-node:v3.19.3

The push refers to repository [harbor1.chenjun.com/baseimages/test/calico-node]

5c8b8d7a47a1: Pushed

803fb24398e2: Pushed

v3.19.3: digest: sha256:d84a6c139c86fabc6414267ee4c87879d42d15c2e0eaf96030c91a59e27e7a6f size: 737

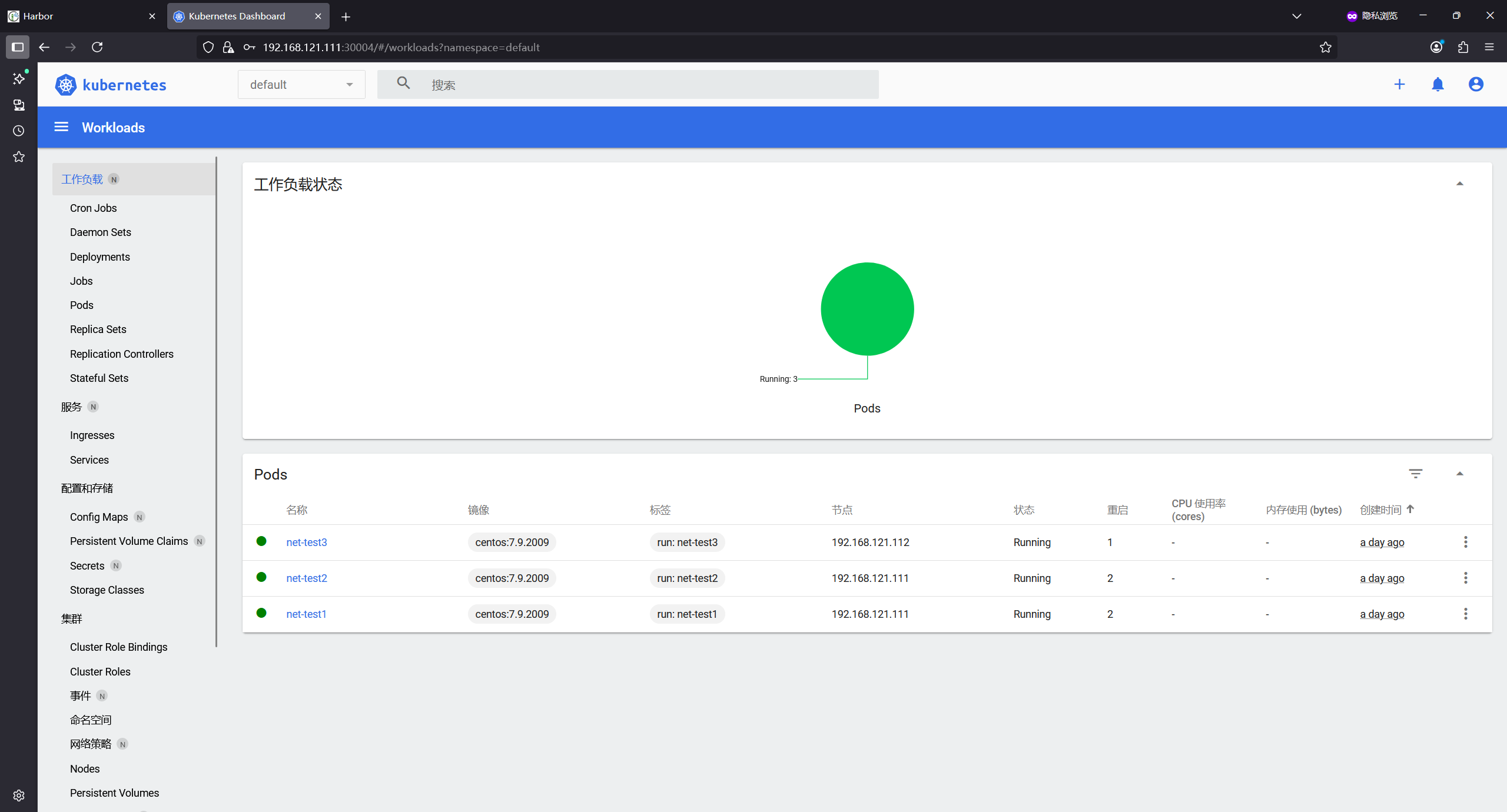

1.5.6 Test network connectivity

bash

root@master1:/etc/kubeasz# calicoctl node status

Calico process is running.

IPv4 BGP status

+-----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+-----------------+-------------------+-------+----------+-------------+

| 192.168.121.102 | node-to-node mesh | up | 11:01:48 | Established |

| 192.168.121.111 | node-to-node mesh | up | 11:00:12 | Established |

| 192.168.121.112 | node-to-node mesh | up | 11:00:14 | Established |

+-----------------+-------------------+-------+----------+-------------+

root@master1:/etc/kubeasz# kubectl run net-test1 --image=centos:7.9.2009 sleep 3600000

pod/net-test1 created

root@master1:/etc/kubeasz# kubectl run net-test2 --image=centos:7.9.2009 sleep 3600000

pod/net-test2 created

root@master1:/etc/kubeasz# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 3m2s 10.200.166.129 192.168.121.111 <none> <none>

net-test2 1/1 Running 0 54s 10.200.166.130 192.168.121.111 <none> <none>

# Enter the container and ping the other pod and an external address

root@master1:/etc/kubeasz# kubectl exec -it net-test1 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@net-test1 /]# ping 10.200.166.129

PING 10.200.166.129 (10.200.166.129) 56(84) bytes of data.

64 bytes from 10.200.166.129: icmp_seq=1 ttl=64 time=0.044 ms

64 bytes from 10.200.166.129: icmp_seq=2 ttl=64 time=0.251 ms

^C

--- 10.200.166.129 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1019ms

rtt min/avg/max/mdev = 0.044/0.147/0.251/0.104 ms

[root@net-test1 /]# ping 10.200.166.130

PING 10.200.166.130 (10.200.166.130) 56(84) bytes of data.

64 bytes from 10.200.166.130: icmp_seq=1 ttl=63 time=6.77 ms

64 bytes from 10.200.166.130: icmp_seq=2 ttl=63 time=0.056 ms

64 bytes from 10.200.166.130: icmp_seq=3 ttl=63 time=0.056 ms

^C

--- 10.200.166.130 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 0.056/2.296/6.778/3.169 ms

[root@net-test1 /]# ping 223.5.5.5

PING 223.5.5.5 (223.5.5.5) 56(84) bytes of data.

64 bytes from 223.5.5.5: icmp_seq=1 ttl=127 time=32.7 ms

64 bytes from 223.5.5.5: icmp_seq=2 ttl=127 time=26.2 ms

^C

--- 223.5.5.5 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

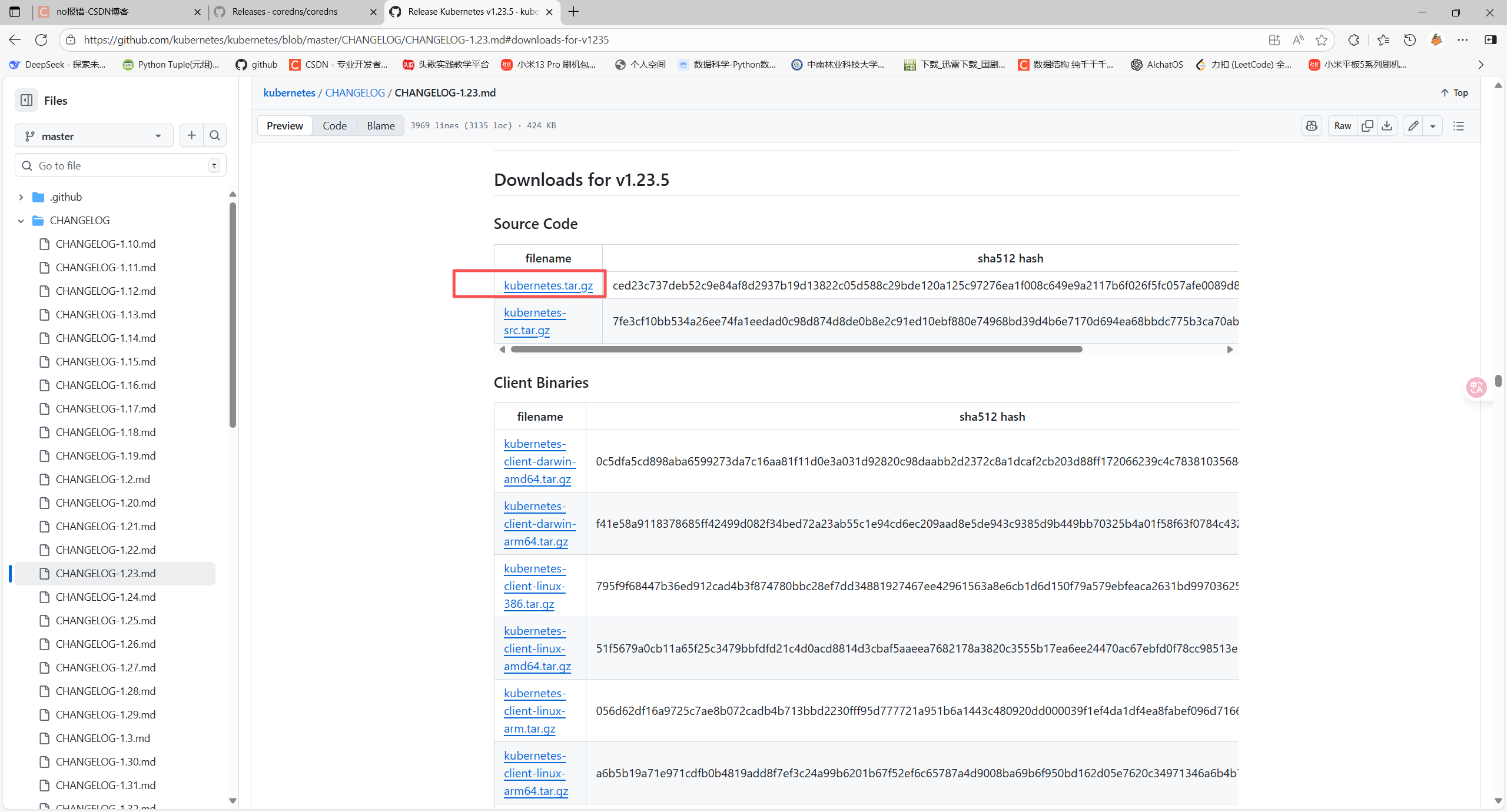

rtt min/avg/max/mdev = 26.299/29.512/32.725/3.213 ms1.6 coredns部署

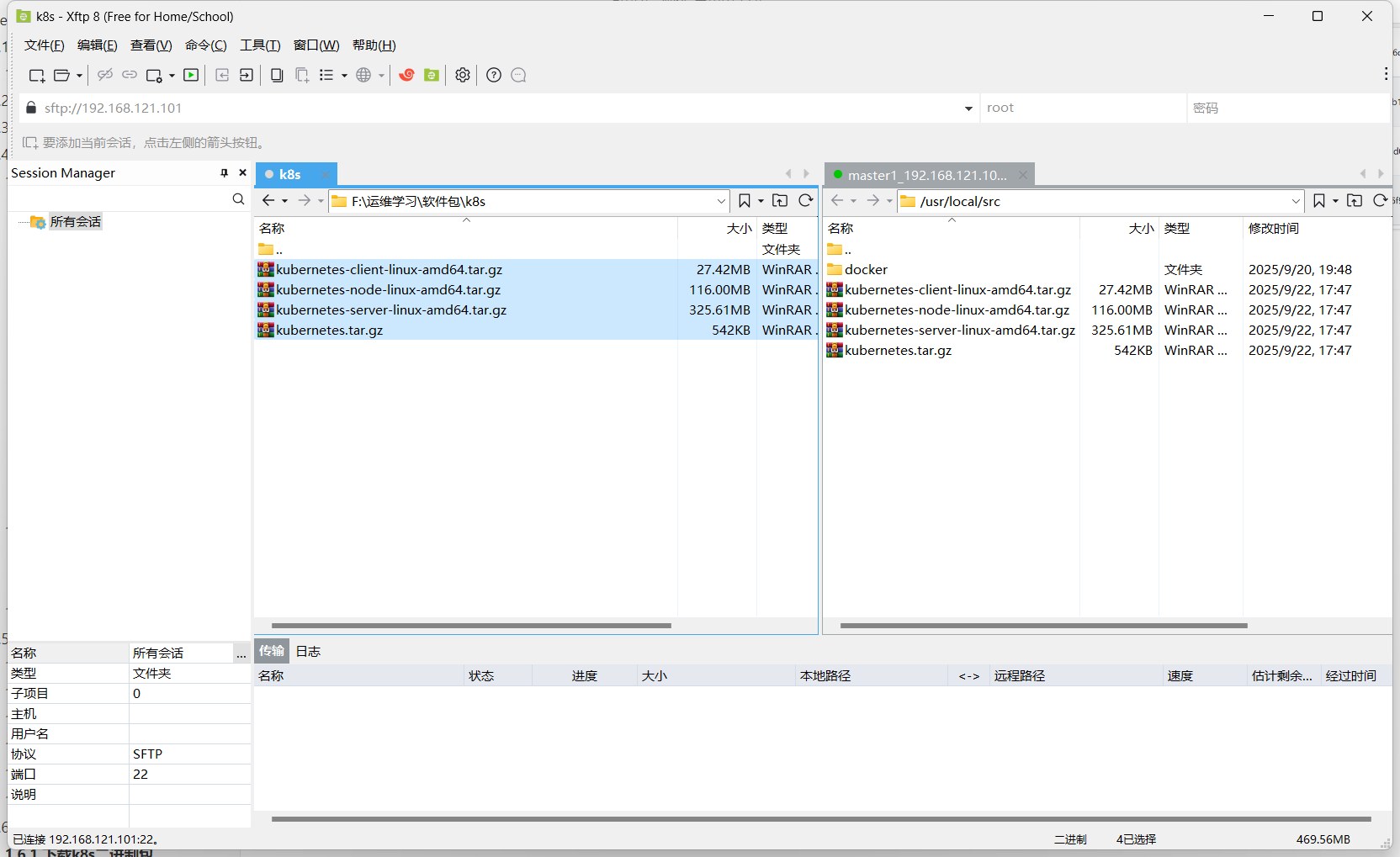

1.6.1 下载k8s二进制包

下载四个二进制文件

客户机二进制文件kubernetes-client-linux-amd64.tar.gz

服务器二进制文件kubernetes-server-linux-amd64.tar.gz

节点二进制文件kubernetes-node-linux-amd64.tar.gz

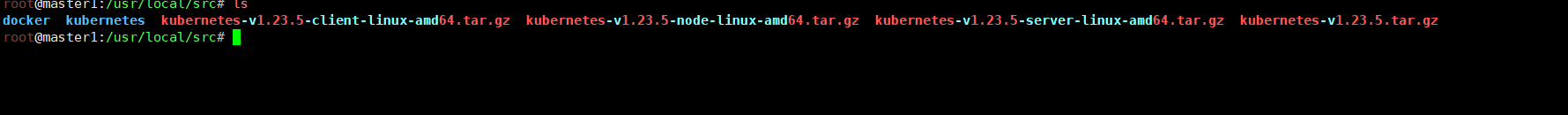

下载完成后上传至master1服务器/usr/local/src/目录下面

逐个进行解压

bash

root@master1:/usr/local/src# tar xf kubernetes-node-linux-amd64.tar.gz

root@master1:/usr/local/src# tar xf kubernetes-server-linux-amd64.tar.gz

root@master1:/usr/local/src# tar xf kubernetes.tar.gz

root@master1:/usr/local/src# tar xf kubernetes-client-linux-amd64.tar.gz

Extraction produces a kubernetes directory

Addons directory

bash

root@master1:/usr/local/src/kubernetes/cluster/addons# ls

OWNERS addon-manager cluster-loadbalancing device-plugins dns-horizontal-autoscaler fluentd-gcp kube-proxy metadata-proxy node-problem-detector storage-class

README.md calico-policy-controller dashboard dns fluentd-elasticsearch ip-masq-agent metadata-agent metrics-server rbac volumesnapshots

1.6.2 Deploy CoreDNS

1.6.2.1 Enter the coredns directory

bash

root@master1:/usr/local/src/kubernetes/cluster/addons/dns/coredns# ls

Makefile coredns.yaml.base coredns.yaml.in coredns.yaml.sed transforms2salt.sed transforms2sed.sed

1.6.2.2 Copy the template file to the root home directory

bash

root@master1:/usr/local/src/kubernetes/cluster/addons/dns/coredns# cp coredns.yaml.base /root/coredns.yaml

1.6.2.3 Edit coredns.yaml

bash

root@master1:/usr/local/src/kubernetes/cluster/addons/dns/coredns# cd

root@master1:~# vim coredns.yaml

1.6.2.4 Replace the cluster DNS domain

bash

# In the line "kubernetes __DNS__DOMAIN__ in-addr.arpa ip6.arpa { ... }", replace __DNS__DOMAIN__ with the cluster DNS domain set earlier, chenjun.local

# This is the CLUSTER_DNS_DOMAIN="chenjun.local" value set in /etc/kubeasz/clusters/k8s-01/hosts

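data:

  Corefile: |

    .:53 {

        errors

        health {

            lameduck 5s

        }

        ready

        kubernetes chenjun.local in-addr.arpa ip6.arpa {

            pods insecure

            fallthrough in-addr.arpa ip6.arpa

            ttl 30

        }

        prometheus :9153

        forward . /etc/resolv.conf {

            max_concurrent 1000

        }

        cache 30

        loop

        reload

        loadbalance

    }

Annotated version

bash

# Serve all domains (. is the root zone) on port 53, the default DNS port

.:53 {

    # Log runtime errors

    errors

    # Health check endpoint settings

    health {

        # On shutdown, report unhealthy and keep serving for 5 seconds before exiting,

        # so the instance can be taken out of rotation gracefully

        lameduck 5s

    }

    # Readiness endpoint, used by K8s to detect whether the instance is ready

    ready

    # Kubernetes integration: resolution rules for cluster names

    # Handles chenjun.local (the custom cluster domain) and the reverse zones (in-addr.arpa/ip6.arpa)

    kubernetes chenjun.local in-addr.arpa ip6.arpa {

        # Answer pod DNS names derived from the pod IP without verifying that the pod exists (insecure mode)

        pods insecure

        # For the reverse zones, pass queries this plugin cannot answer on to the next plugin

        fallthrough in-addr.arpa ip6.arpa

        # TTL of the returned records: 30 seconds

        ttl 30

    }

    # Expose Prometheus metrics (query counts, response times, and so on) on port 9153

    prometheus :9153

    # Forwarding rule

    # Queries that cannot be answered locally go to the upstream DNS servers listed in /etc/resolv.conf

    forward . /etc/resolv.conf {

        # Cap concurrent forwarded queries at 1000 to avoid overload

        max_concurrent 1000

    }

    # Cache answers for at most 30 seconds to cut repeated queries

    cache 30

    # Detect forwarding loops (a query bouncing endlessly between servers) and stop

    loop

    # Reload the Corefile automatically when it changes, without a restart

    reload

    # Randomize the order of the records in each answer (round-robin DNS load balancing)

    loadbalance

}

1.6.2.5 Resource limits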

bash

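resources:

  limits: # upper bound on what the container may use

    memory: 200Mi # memory limit, set to 200Mi

  requests: # minimum resources the container needs (used by the K8s scheduler)

    cpu: 100m # CPU request: 100 millicores (100m = 0.1 core)

    memory: 70Mi # memory request: 70 mebibytes (1 Mi ≈ 1.048 MB)

1.6.2.6 Set the cluster-internal service address

bash

# In "clusterIP: __DNS__SERVER__", replace __DNS__SERVER__ with the DNS server IP that pods are configured with, here 10.100.0.2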

root@master1:/etc/kubeasz/clusters/k8s-01# kubectl exec -it net-test1 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@net-test1 /]# cat /etc/resolv.conf

nameserver 10.100.0.2

search default.svc.chenjun.local svc.chenjun.local chenjun.local

options ndots:5

clusterIP: 10.100.0.2

# This IP is the address every pod in the cluster uses for DNS resolution

# Each pod's /etc/resolv.conf lists it as the DNS server, for both in-cluster service names (such as service-name.namespace.svc.chenjun.local in this cluster) and external names.

1.6.2.7 Change the image address

bash

image: k8s.gcr.io/coredns/coredns:v1.8.6

# Change to

image: coredns/coredns:1.8.7

1.6.2.8 Start CoreDNS and test it

bash

root@master1:~# kubectl apply -f coredns.yaml

# Check that the pod started

root@master1:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 2 (20h ago) 23h

default net-test2 1/1 Running 2 (20h ago) 23h

default net-test3 1/1 Running 1 (20h ago) 23h

kube-system calico-kube-controllers-754966f84c-nb8mt 1/1 Running 2 (20h ago) 26h

kube-system calico-node-29mld 1/1 Running 2 (20h ago) 26h

kube-system calico-node-4rnzt 1/1 Running 3 (20h ago) 26h

kube-system calico-node-p4ddl 1/1 Running 2 (20h ago) 26h

kube-system calico-node-rn7fk 1/1 Running 7 (20h ago) 26h

kube-system coredns-7db6b45f67-ht47r 1/1 Running 0 50s

# Enter any pod and check that a domain name resolves

root@master1:/etc/kubeasz/clusters/k8s-01# kubectl exec -it net-test1 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@net-test1 /]# ping baidu.com

PING baidu.com (39.156.70.37) 56(84) bytes of data.

64 bytes from 39.156.70.37 (39.156.70.37): icmp_seq=1 ttl=127 time=68.8 ms

64 bytes from 39.156.70.37 (39.156.70.37): icmp_seq=2 ttl=127 time=156 ms

# A successful ping by name means DNS resolution works

1.6.2.9 Make CoreDNS highly available

Multiple-replica approach

bash

# kubectl get deployment -n kube-system -o yaml prints the full configuration (as YAML) of every Deployment in the kube-system namespace.

root@master1:~# kubectl get deployment -n kube-system -o yaml

# Edit the deployment

root@master1:~# kubectl edit deployment coredns -n kube-system -o yaml

# Change replicas to 2

spec:

  progressDeadlineSeconds: 600

  replicas: 2

  revisionHistoryLimit: 10

  selector:

    matchLabels:

      k8s-app: kube-dns

# Run get again to confirm

root@master1:~# kubectl get deployment -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

calico-kube-controllers 1/1 1 1 26h

coredns 2/2 2 2 10m

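# READY now shows 2/2

Resource-limit approach

Edit coredns.yaml:

bash

resources:

  limits: # upper bound on what the container may use

    cpu: 2 # add a CPU limit

    memory: 2Gi # raise the memory limit

  requests: # minimum resources the container needs (used by the K8s scheduler)

    cpu: 2 # raise the CPU request

    memory: 2Gi # raise the memory request

DNS cache

There are three levels of caching: pod-level cache, host (node)-level cache, and CoreDNS-level cache.

1.7 Dashboard deployment

Kubernetes Dashboard is a web UI for k8s clusters. It lets users manage and troubleshoot the applications running in the cluster, and manage the cluster itself.

Download page: the Releases page of the kubernetes/dashboard repository

1.7.1 Download the yaml file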

bash

root@master1:~# mkdir yaml

root@master1:~# cd yaml/

root@master1:~/yaml# mkdir 20250922

root@master1:~/yaml# cd 20250922/

root@master1:~/yaml/20250922# ls

root@master1:~/yaml/20250922# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml

--2025-09-22 20:24:55-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml

Connecting to 192.168.121.1:7890... connected.

Proxy request sent, awaiting response... 200 OK

Length: 7621 (7.4K) [text/plain]

Saving to: 'recommended.yaml'

recommended.yaml 100%[=============================================================>] 7.44K --.-KB/s in 0s

2025-09-22 20:24:55 (83.5 MB/s) - 'recommended.yaml' saved [7621/7621]

root@master1:~/yaml/20250922# mv recommended.yaml dashboard-v2.5.1.yaml

1.7.2 Deploy using images pulled from the local private Harbor registry

bash

# Restart Harbor on harbor1

cd /apps/harbor

docker-compose stop

docker-compose start

# Create the certificate directory on every node

mkdir -p /etc/docker/certs.d/harbor1.chenjun.com/

# Distribute the CA certificate to every node

scp /apps/harbor/certs/harbor-ca.crt node1:/etc/docker/certs.d/harbor1.chenjun.com/

scp /apps/harbor/certs/harbor-ca.crt node2:/etc/docker/certs.d/harbor1.chenjun.com/

scp /apps/harbor/certs/harbor-ca.crt node3:/etc/docker/certs.d/harbor1.chenjun.com/

# Image references inside the dashboard yaml:

# containers:

#   - name: kubernetes-dashboard

#     image: kubernetesui/dashboard:v2.5.1

#     imagePullPolicy: Always

#     ports:

# containers:

#   - name: dashboard-metrics-scraper

#     image: kubernetesui/metrics-scraper:v1.0.7

#     ports:

#       - containerPort: 8000

#         protocol: TCP

#     livenessProbe:

# On master1, pull the dashboard images with docker pull, tag and push them to the private Harbor registry, then deploy from the yaml file

docker pull kubernetesui/dashboard:v2.5.1

docker pull kubernetesui/metrics-scraper:v1.0.7

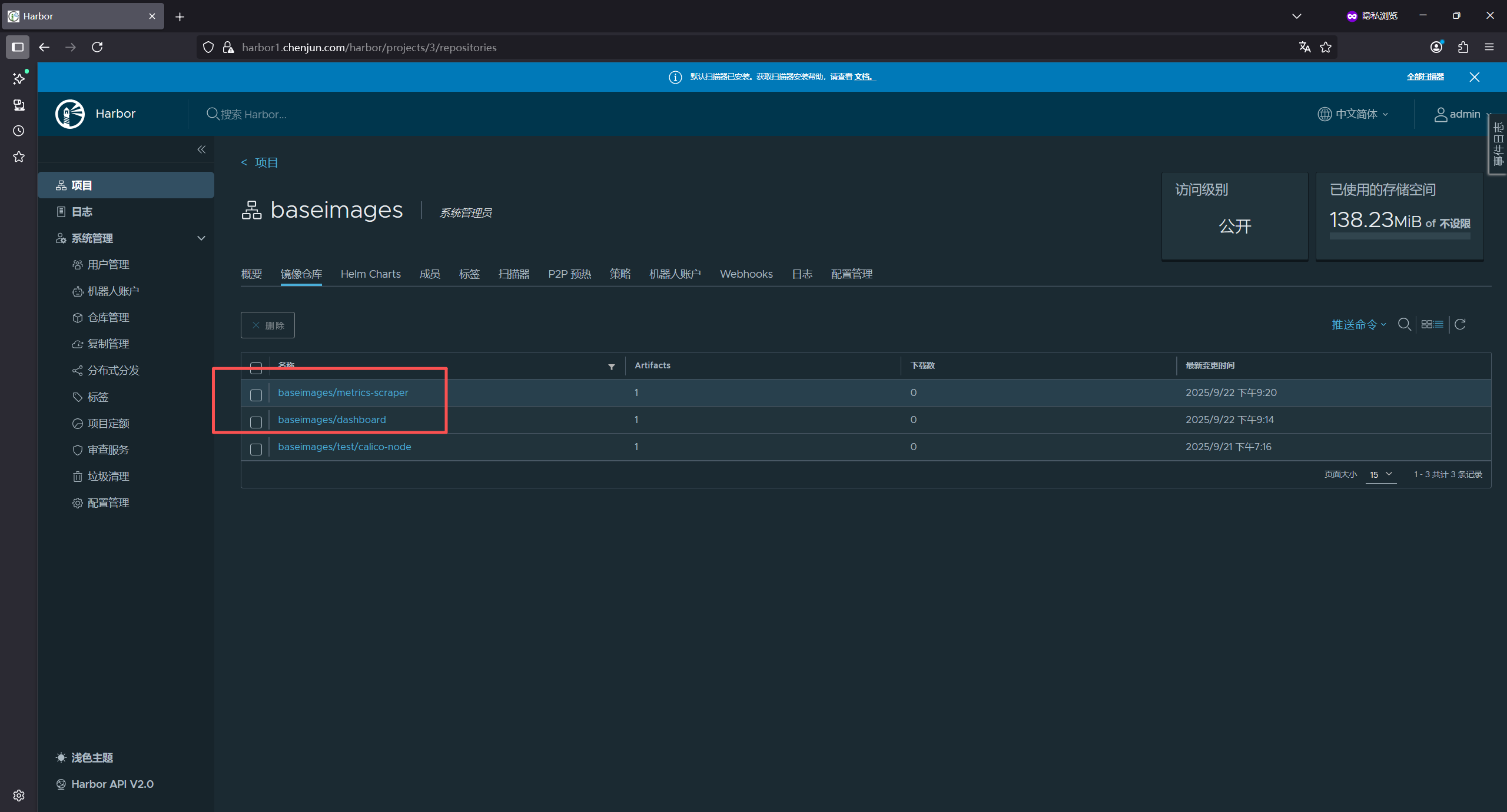
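The tag and push commands that follow map each upstream image to the same name under the Harbor project. A minimal sketch of that mapping is shown below; `harbor_ref` is a hypothetical helper name, and the commands are printed rather than executed so the result can be checked first.

```shell
#!/bin/sh
HARBOR_PROJECT="harbor1.chenjun.com/baseimages"

# Map an upstream image reference to its Harbor equivalent by replacing
# everything up to the last "/" with the Harbor project path.
harbor_ref() {
  echo "${HARBOR_PROJECT}/${1##*/}"
}

for image in kubernetesui/dashboard:v2.5.1 kubernetesui/metrics-scraper:v1.0.7; do
  target=$(harbor_ref "$image")
  # Printed, not executed; pipe the output to sh to actually run it.
  echo "docker tag $image $target"
  echo "docker push $target"
done
```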

root@master1:~/yaml/20250922# docker tag kubernetesui/dashboard:v2.5.1 harbor1.chenjun.com/baseimages/dashboard:v2.5.1

root@master1:~/yaml/20250922# docker push harbor1.chenjun.com/baseimages/dashboard:v2.5.1

root@master1:~/yaml/20250922# docker tag kubernetesui/metrics-scraper:v1.0.7 harbor1.chenjun.com/baseimages/metrics-scraper:v1.0.7

root@master1:~/yaml/20250922# docker push harbor1.chenjun.com/baseimages/metrics-scraper:v1.0.7

The push refers to repository [harbor1.chenjun.com/baseimages/metrics-scraper]

7813555162f0: Pushed

10d0ebb29e3a: Pushed

v1.0.7: digest: sha256:76eb73afa0198ac457c760887ed7ebfa2f58adc09205bd9667b3f76652077a71 size: 736

1.7.2.1 Update the image addresses in dashboard.yaml
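The image addresses in this step can also be rewritten non-interactively instead of being edited by hand. The sketch below uses `sed` on a throwaway file that contains only the two image lines; `rewrite_images` is a hypothetical helper, and the same call could be pointed at dashboard-v2.5.1.yaml.

```shell
#!/bin/sh
# Rewrite "image: kubernetesui/<name>:<tag>" to the Harbor project path.
# A backup of the original file is kept as <manifest>.bak.
# Usage: rewrite_images <manifest>
rewrite_images() {
  sed -i.bak 's#image: kubernetesui/#image: harbor1.chenjun.com/baseimages/#' "$1"
}

# Demonstration on a temporary file holding only the two image lines.
demo=$(mktemp)
cat > "$demo" <<'EOF'
          image: kubernetesui/dashboard:v2.5.1
          image: kubernetesui/metrics-scraper:v1.0.7
EOF

rewrite_images "$demo"
cat "$demo"
```

Comparing the file with its `.bak` copy (for example with `diff`) shows exactly which lines changed before the manifest is applied.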

bash

# kubernetes-dashboard Deployment

containers:

  - name: kubernetes-dashboard

    image: harbor1.chenjun.com/baseimages/dashboard:v2.5.1

    imagePullPolicy: Always

    ports:

      - containerPort: 8443

        protocol: TCP

# dashboard-metrics-scraper Deployment

containers:

  - name: dashboard-metrics-scraper

    image: harbor1.chenjun.com/baseimages/metrics-scraper:v1.0.7

    ports:

      - containerPort: 8000

        protocol: TCP

root@master1:~/yaml/20250922# vim dashboard-v2.5.1.yaml

1.7.2.2 Start the dashboard

bash

root@master1:~/yaml/20250922# kubectl apply -f dashboard-v2.5.1.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

# Check the pod status

root@master1:~/yaml/20250922# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 2 (22h ago) 25h

default net-test2 1/1 Running 2 (22h ago) 25h

default net-test3 1/1 Running 1 (22h ago) 25h

kube-system calico-kube-controllers-754966f84c-nb8mt 1/1 Running 2 (22h ago) 28h

kube-system calico-node-29mld 1/1 Running 2 (22h ago) 28h

kube-system calico-node-4rnzt 1/1 Running 3 (22h ago) 28h

kube-system calico-node-p4ddl 1/1 Running 2 (22h ago) 28h

kube-system calico-node-rn7fk 1/1 Running 8 (32m ago) 28h

kube-system coredns-7db6b45f67-ht47r 1/1 Running 0 127m

kube-system coredns-7db6b45f67-xpzmr 1/1 Running 0 117m

kubernetes-dashboard dashboard-metrics-scraper-69d947947b-94c4p 1/1 Running 0 11s

kubernetes-dashboard kubernetes-dashboard-744bdb9f9b-f2zns 1/1 Running 0 11s

# Expose the port; otherwise the web UI cannot be reached from outside the cluster

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

  type: NodePort # added: expose the Service as a NodePort

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30004 # added: the dashboard is reached at <domain or IP>:<this port>; it must fall within the NodePort range configured earlier in the hosts file

  selector:

    k8s-app: kubernetes-dashboard

root@master1:~/yaml/20250922# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 28h

kube-system kube-dns ClusterIP 10.100.0.2 <none> 53/UDP,53/TCP,9153/TCP 134m

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.100.34.202 <none> 8000/TCP 7m30s

kubernetes-dashboard kubernetes-dashboard NodePort 10.100.18.123 <none> 443:30004/TCP 7m30s

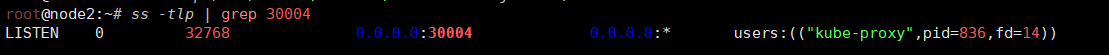

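# The dashboard's port 443 is now exposed on port 30004 of the hosts

Every node now listens on port 30004. kube-proxy on each node receives the Service definition from the apiserver and programs the corresponding rules, including the iptables rules.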

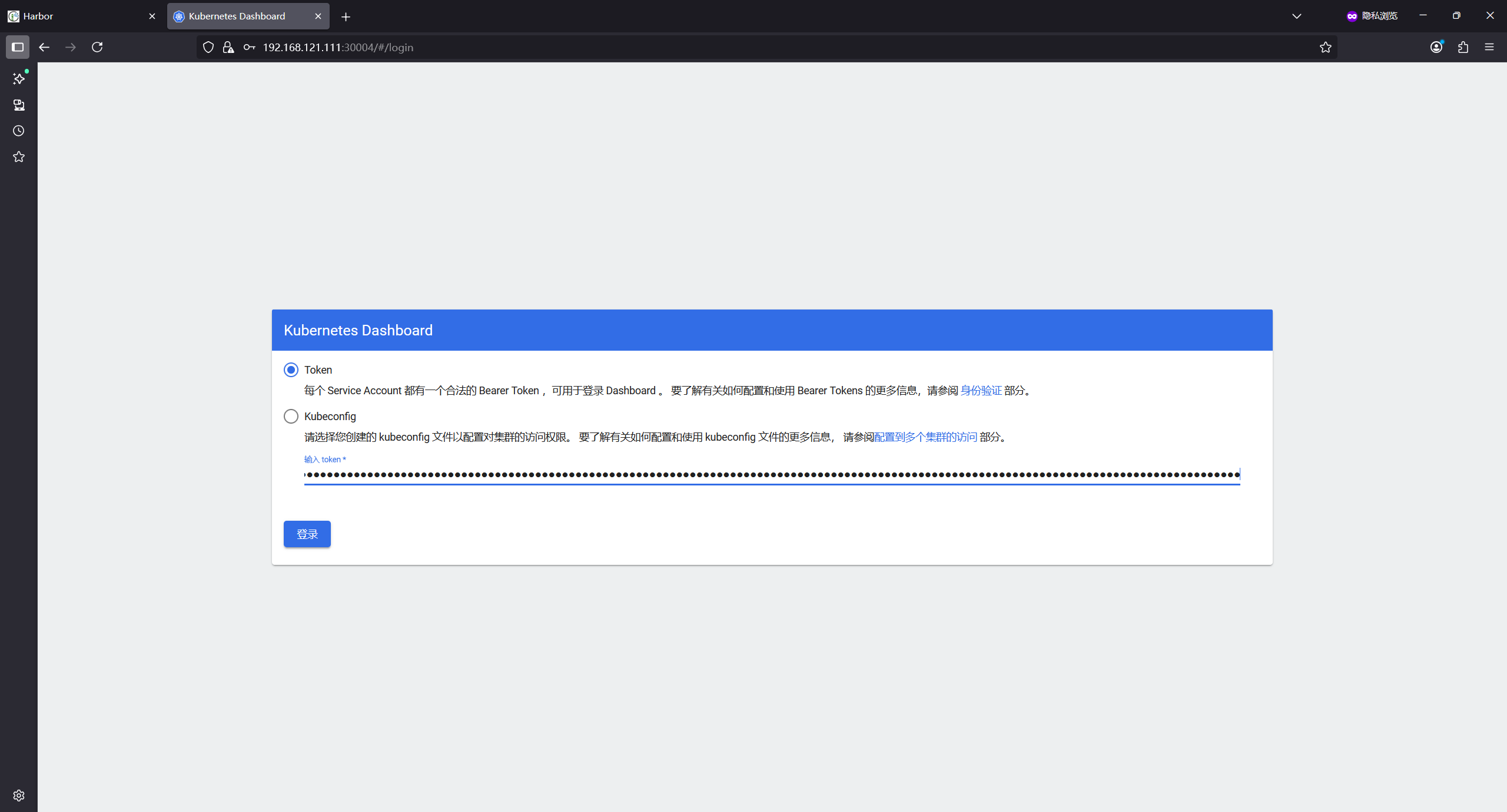

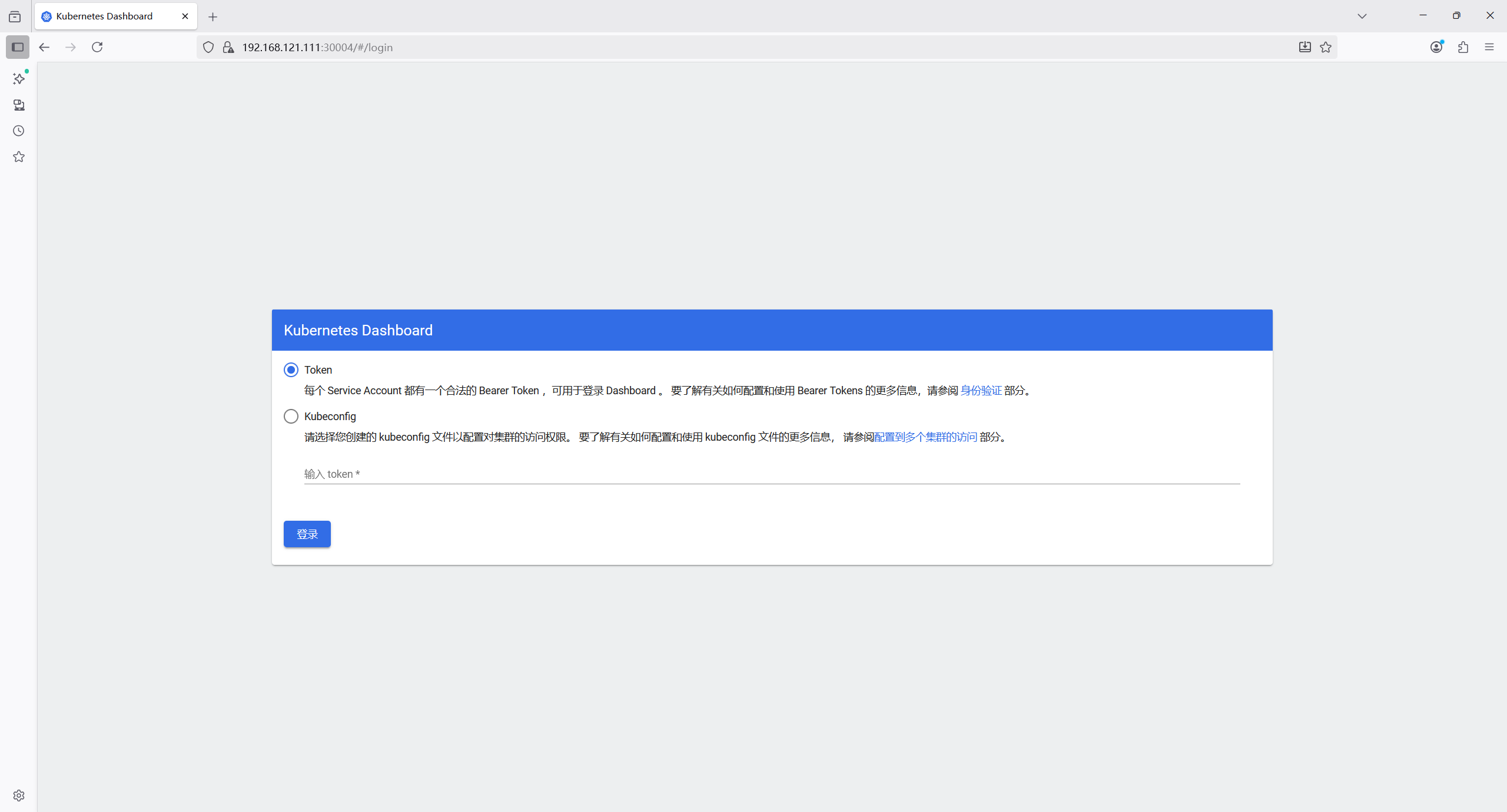

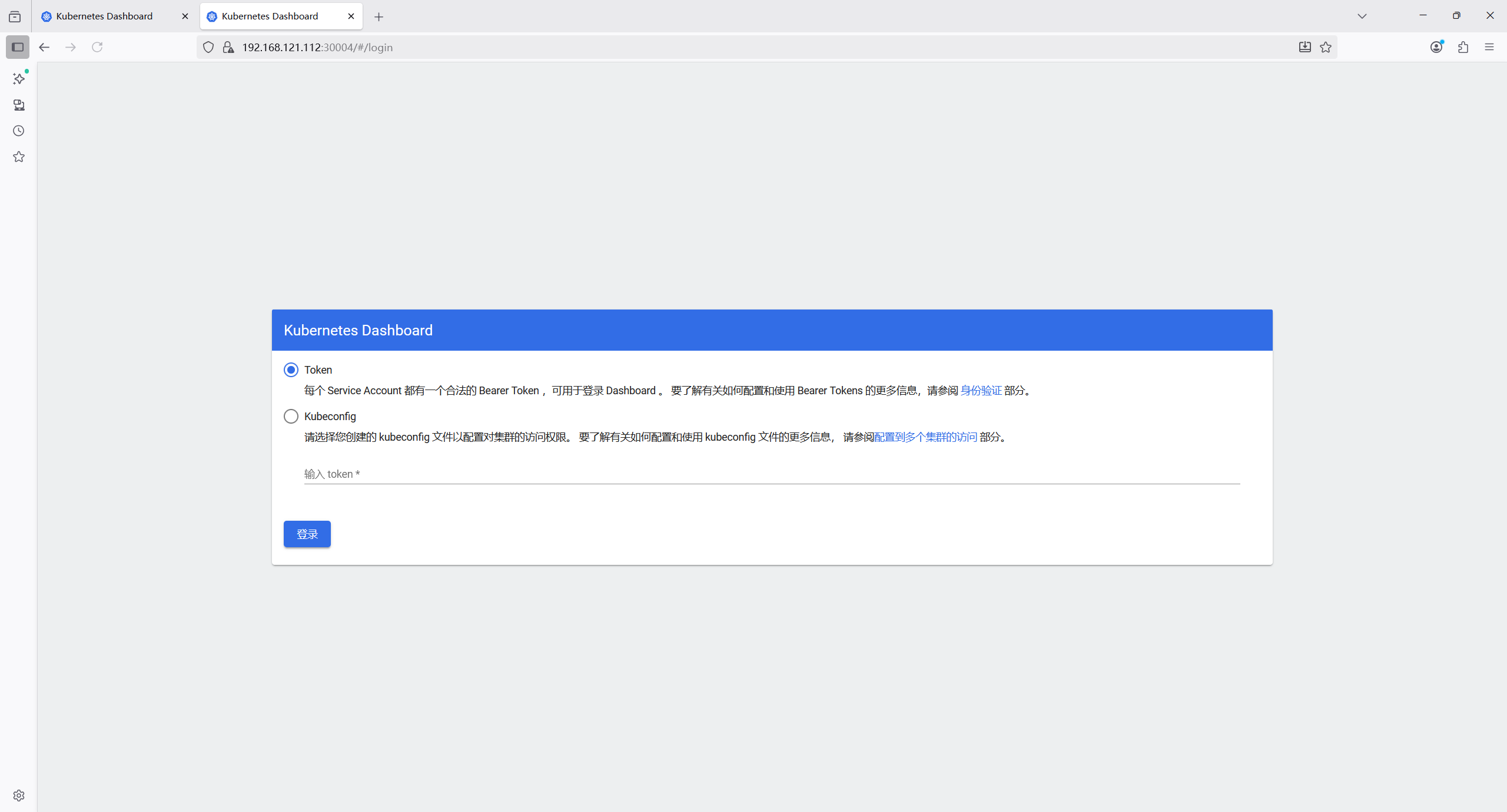
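The nodePort chosen for the Service (30004) has to lie inside the cluster's NodePort range, otherwise the apiserver rejects the Service. A small check is sketched below; `in_node_port_range` is a hypothetical helper, and 30000-32767 is the Kubernetes default range, used here as an assumption in place of whatever range this cluster was configured with.

```shell
#!/bin/sh
# Succeed if PORT lies within [MIN, MAX] (inclusive).
# Usage: in_node_port_range PORT MIN MAX
in_node_port_range() {
  [ "$1" -ge "$2" ] && [ "$1" -le "$3" ]
}

# 30000-32767 is the Kubernetes default; substitute the configured range if it differs.
if in_node_port_range 30004 30000 32767; then
  echo "30004 is inside the range"
else
  echo "30004 is outside the range"
fi
```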

1.7.3 Access the web page

The web page is reachable over HTTPS at node1's IP address on port 30004.

It is reachable through node2 in the same way.
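Because a NodePort is opened on every node, the same URL pattern applies to each of them. The sketch below builds the URL for the three node IPs from the planning table; `dashboard_url` is a hypothetical helper, and the commented `curl` line is an optional reachability probe.

```shell
#!/bin/sh
NODE_PORT=30004

# Build the dashboard URL for a node IP. The Service forwards to the
# dashboard's HTTPS port (8443), so the scheme has to be https.
dashboard_url() {
  echo "https://$1:${NODE_PORT}/"
}

for ip in 192.168.121.111 192.168.121.112 192.168.121.113; do
  url=$(dashboard_url "$ip")
  echo "$url"
  # Optional probe; -k skips verification of the dashboard's self-signed certificate.
  # curl -k -s -o /dev/null -w '%{http_code}\n' "$url"
done
```

Browsers will show a certificate warning for these URLs, since the certificate generated by the dashboard is self-signed.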

1.7.3.1 Create an identity with super-administrator privileges

bash

root@master1:~/yaml/20250922# vim admin-user.yaml

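# Part 1: create the ServiceAccount

# A ServiceAccount gives Pods an identity for authenticating to the API server

apiVersion: v1 # API version for ServiceAccount (always v1)

kind: ServiceAccount # resource type: service account

metadata:

  name: admin-user # name of the service account, referenced later when binding permissions

  namespace: kubernetes-dashboard # namespace of the service account

  # Created in the kubernetes-dashboard namespace; the binding below must reference the same namespace or it will not apply to this account

--- # YAML document separator between resource objects

# Part 2: create the ClusterRoleBinding

# Binds a cluster-level role (a set of permissions) to a subject such as a service account, which grants the permissions

apiVersion: rbac.authorization.k8s.io/v1 # API version for RBAC resources

kind: ClusterRoleBinding # resource type: cluster role binding (scope is the whole cluster)

metadata:

  name: admin-user # name of the binding, used only to identify it

# The role (set of permissions) being bound

roleRef:

  apiGroup: rbac.authorization.k8s.io # API group the role belongs to

  kind: ClusterRole # role type: ClusterRole (cluster-level, applies to all namespaces)

  name: cluster-admin # role name: the built-in Kubernetes super-administrator role

  # Grants every operation on every resource in the cluster (highest privilege; use with caution in production)

# The subjects (objects being granted the permissions)

subjects:

  - kind: ServiceAccount # subject type: service account (the account created in part 1)

    name: admin-user # service account name (must match the name in part 1)

    namespace: kubernetes-dashboard # service account namespace (must match part 1)

1.7.3.2 Create the resources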

bash

root@master1:~/yaml/20250922# kubectl apply -f admin-user.yaml

1.7.3.3 Get the token

bash

# Method 1

root@master1:~/yaml/20250922# kubectl get secrets -n kubernetes-dashboard

NAME TYPE DATA AGE

admin-user-token-xclwc kubernetes.io/service-account-token 3 63s

default-token-mx22j kubernetes.io/service-account-token 3 17m

kubernetes-dashboard-certs Opaque 0 17m

kubernetes-dashboard-csrf Opaque 1 17m

kubernetes-dashboard-key-holder Opaque 2 17m

kubernetes-dashboard-token-mrt55 kubernetes.io/service-account-token 3 17m

root@master1:~/yaml/20250922# kubectl describe secrets admin-user-token-xclwc -n kubernetes-dashboard

Name: admin-user-token-xclwc

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: d2162104-f367-4e70-8af9-585fc7bef52d

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1302 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImtmSmExSE9BNERJUkNmcUxFNVl1c1hmejFCV2ZMUDRUSTFkbUlUQWdHS2sifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXhjbHdjIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkMjE2MjEwNC1mMzY3LTRlNzAtOGFmOS01ODVmYzdiZWY1MmQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.o6WWGohpc4QNwvf-mhwKgNLs88hYgscryZ9KuMXamFjXGvJU8QdURxIsVgNbbH1c2U7XZY7f-JdEsU6gfoiv6jp0ZiSDlKUL1mKyHyAKh78lOOWrYCz7btz_RJrlfx4-FGzmJBkY3UAOP8-hW_3bnMnd4jY9KEDdEICF5yCuFT00LVffwKHaFBAEgBStQ6XVFUF3SKe0XR7uBeyJL7YRjjDFegJHO3F8Nkny7nGGXWsiq2YxNXbgYZ52OhkcxL1qqr8Dl2_VftxTtqnLkp8uA2sC6NxRgvh6Mp6f8h1J0fRKAfnAv9wruROKpNbC1HNF3ia4JO9bGhNq4DfUges6kA

# Method 2

root@master1:~/yaml/20250922# kubectl get secrets -A | grep admin

kubernetes-dashboard admin-user-token-xclwc kubernetes.io/service-account-token 3 96s

root@master1:~/yaml/20250922# kubectl describe secrets admin-user-token-xclwc -n kubernetes-dashboard

Name: admin-user-token-xclwc

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: d2162104-f367-4e70-8af9-585fc7bef52d

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1302 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImtmSmExSE9BNERJUkNmcUxFNVl1c1hmejFCV2ZMUDRUSTFkbUlUQWdHS2sifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXhjbHdjIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkMjE2MjEwNC1mMzY3LTRlNzAtOGFmOS01ODVmYzdiZWY1MmQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.o6WWGohpc4QNwvf-mhwKgNLs88hYgscryZ9KuMXamFjXGvJU8QdURxIsVgNbbH1c2U7XZY7f-JdEsU6gfoiv6jp0ZiSDlKUL1mKyHyAKh78lOOWrYCz7btz_RJrlfx4-FGzmJBkY3UAOP8-hW_3bnMnd4jY9KEDdEICF5yCuFT00LVffwKHaFBAEgBStQ6XVFUF3SKe0XR7uBeyJL7YRjjDFegJHO3F8Nkny7nGGXWsiq2YxNXbgYZ52OhkcxL1qqr8Dl2_VftxTtqnLkp8uA2sC6NxRgvh6Mp6f8h1J0fRKAfnAv9wruROKpNbC1HNF3ia4JO9bGhNq4DfUges6kA

# Copy the full token value printed above (the string starting with eyJhbGci)

Log in to the dashboard with the token
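Before pasting a token into the login page, it can help to confirm which service account it belongs to. A service account token is a JWT, so its payload can be decoded locally. The sketch below is a minimal decoder under that assumption; `jwt_payload` is a hypothetical helper, and the demonstration token is built on the spot with dummy header and signature values.

```shell
#!/bin/sh
# Decode the payload (second dot-separated segment) of a JWT.
# base64url uses "-" and "_" and drops the "=" padding, so both are restored first.
jwt_payload() {
  _seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  while [ $(( ${#_seg} % 4 )) -ne 0 ]; do
    _seg="${_seg}="
  done
  printf '%s' "$_seg" | base64 -d
}

# Build a small demonstration token; header and signature are dummy values.
demo_payload='{"sub":"system:serviceaccount:kubernetes-dashboard:admin-user"}'
demo_seg=$(printf '%s' "$demo_payload" | base64 | tr -d '=\n' | tr '/+' '_-')
jwt_payload "header.${demo_seg}.signature"
echo

# Against the cluster (needs kubectl access; secret name as listed above):
# jwt_payload "$(kubectl -n kubernetes-dashboard get secret admin-user-token-xclwc -o jsonpath='{.data.token}' | base64 -d)"
```

For the admin-user account, the decoded `sub` claim should read `system:serviceaccount:kubernetes-dashboard:admin-user`.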