目录

[4、重新加载 systemd 的配置](#4、重新加载 systemd 的配置)

[1. 连接到 ZooKeeper](#1. 连接到 ZooKeeper)

[2. 查看 Kafka 的核心节点](#2. 查看 Kafka 的核心节点)

[5、 创建topic名称为second,1个副本,分区2](#5、 创建topic名称为second,1个副本,分区2)

[10、 回到zookeeper容器查看second主题是否删除 成功删除topic里名称为second主题](#10、 回到zookeeper容器查看second主题是否删除 成功删除topic里名称为second主题)

一、环境准备

关闭防火墙和SElinux

bash

systemctl disable --now firewalld

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config kafka服务器:IP 192.168.158.37

二、安装docker

1、配置阿里源

bash

cat <<EOF >> /etc/yum.repos.d/docker-ce.repo

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com//docker-ce/linux/centos/9/x86_64/stable/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

EOF2、安装docker

bash

yum install -y docker-ce3、编辑配置文件

bash

vim /etc/docker/daemon.json

{

"registry-mirrors": [

"https://0vmzj3q6.mirror.aliyuncs.com",

"https://docker.m.daocloud.io",

"https://mirror.baidubce.com",

"https://dockerproxy.com",

"https://mirror.iscas.ac.cn",

"https://huecker.io",

"https://dockerhub.timeweb.cloud",

"https://noohub.ru",

"https://vlgh0kqj.mirror.aliyuncs.com"

]

}4、重新加载 systemd 的配置

bash

systemctl daemon-reload5、启动docker

bash

#设置docker开机自启

systemctl enable --now docker三、Kafka的安装和使用

默认监听端口号

zookeeper 默认监听端口号 2181

Kafkam 默认监听端口号 9092

1、下载kafka和zookeeper镜像

bash

# docker直接拉取kafka和zookeeper的镜像

docker pull wurstmeister/kafka

docker pull wurstmeister/zookeeper 2、启动zookeeper容器

首先需要启动zookeeper,如果不先启动,启动kafka没有地方注册消息

cpp

[root@localhost ~]# docker run -it --name zookeeper -p 12181:2181 -d wurstmeister/zookeeper:latest

f13306aaeacdfa721bbaddf5627cf18412fb5b62a90383744a974ec2a5b6abe2

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f13306aaeacd wurstmeister/zookeeper:latest "/bin/sh -c '/usr/sb..." 11 seconds ago Up 11 seconds 22/tcp, 2888/tcp, 3888/tcp, 0.0.0.0:12181->2181/tcp, [::]:12181->2181/tcp zookeeper2.1、容器启动失败的原因

容器启动失败的核心原因是 系统内存资源不足。

问题诊断: 您已经正确使用了 Docker 命令为容器分配了 1GB 内存 (-m 1024m) 并为 Java 进程设置了堆内存参数 (-Xmx512m -Xms256m),但日志仍然显示: library initialization failed - unable to allocate file descriptor table - out of memory 这表明,除了 JVM 堆内存外,Zookeeper 进程在初始化系统资源(特别是文件描述符表)时,宿主机操作系统无法提供足够的内存 ,导致进程崩溃 (Aborted (core dumped))。

解决方案:

此问题需要从宿主机操作系统层面和容器配置两方面解决:

-

检查并释放宿主机内存 首先确认宿主机(您的 CentOS 系统)是否有足够的可用内存。

free -h查看

available列,如果可用内存很小,您需要关闭一些不必要的进程或程序来释放内存。 -

调整系统的内存过载使用策略(关键步骤) Linux 内核有一个称为

overcommit_memory的参数,它控制着内核分配内存的策略。默认设置可能过于保守,导致即使有可用内存,分配也会失败。-

查看当前设置:

cat /proc/sys/vm/overcommit_memory -

临时调整策略(立即生效,重启后失效):

echo 1 > /proc/sys/vm/overcommit_memory将此参数设置为 1,表示内核允许分配所有的物理内存,从而避免此类分配失败。

-

永久调整策略(重启后仍生效):

编辑 /etc/sysctl.conf 文件,添加一行:

vm.overcommit_memory = 1然后执行 sysctl -p 使配置生效。

-

-

增加容器内存限制并重新启动 在调整系统策略后,移除旧容器并以更高内存限制重新运行。

docker rm zookeeper docker run -d --name zookeeper -p 12181:2181 -m wurstmeister/zookeeper:latest

总结: 您遇到的问题根源是 宿主机操作系统内核的内存分配策略 ,阻止了 Docker 容器获得它已请求的内存。通过调整 vm.overcommit_memory 参数,可以解决此问题。请先执行步骤2,这通常是解决此类"内存不足"错误的最有效方法。

3、启动kafka容器

注意需要启动三台,注意端口的映射,都是映射到9092

第一台

cpp

docker run -it --name kafka01 -p 19092:9092 -d -e KAFKA_BROKER_ID=0 -e KAFKA_ZOOKEEPER_CONNECT=192.168.158.37:12181 -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.158.37:19092 -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 wurstmeister/kafka:latest第二台

cpp

docker run -it --name kafka02 -p 19093:9092 -d -e KAFKA_BROKER_ID=1 -e KAFKA_ZOOKEEPER_CONNECT=192.168.158.37:12181 -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.158.37:19093 -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 wurstmeister/kafka:latest第三台

cpp

docker run -it --name kafka03 -p 19094:9092 -d -e KAFKA_BROKER_ID=2 -e KAFKA_ZOOKEEPER_CONNECT=192.168.158.37:12181 -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.158.37:19094 -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 wurstmeister/kafka:latest上面端口的映射注意都是映射到Kafka的9092端口上!否则将不能够连接!

cpp

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

50c1c4800c0a wurstmeister/kafka:latest "start-kafka.sh" 41 seconds ago Up 41 seconds 0.0.0.0:19094->9092/tcp, [::]:19094->9092/tcp kafka03

f73e354ae495 wurstmeister/kafka:latest "start-kafka.sh" 51 seconds ago Up 51 seconds 0.0.0.0:19093->9092/tcp, [::]:19093->9092/tcp kafka02

192055d53819 wurstmeister/kafka:latest "start-kafka.sh" About a minute ago Up About a minute 0.0.0.0:19092->9092/tcp, [::]:19092->9092/tcp kafka01

f13306aaeacd wurstmeister/zookeeper:latest "/bin/sh -c '/usr/sb..." 4 hours ago Up 4 hours 22/tcp, 2888/tcp, 3888/tcp, 0.0.0.0:12181->2181/tcp, [::]:12181->2181/tcp zookeeper四、操作步骤详解

1. 连接到 ZooKeeper

cpp

#进入zookeeper容器

[root@localhost ~]# docker exec -it zookeeper bash

root@f13306aaeacd:/opt/zookeeper-3.4.13# cd bin/

#./zkCli.sh --server <ZooKeeper服务器IP:端口>

root@f13306aaeacd:/opt/zookeeper-3.4.13/bin# ./zkCli.sh -server 127.0.0.1:2181

。。。。省略。。

或者

root@f13306aaeacd:/opt/zookeeper-3.4.13/bin# ./zkCli.sh -server 192.168.158.37:121812. 查看 Kafka 的核心节点

cpp

# 查看所有活跃的Broker

[zk: 192.168.158.37:12181(CONNECTED) 0] ls /brokers/ids

[2, 1, 0]3、进入kafka容器里

cpp

[root@localhost ~]# docker exec -it kafka0

kafka01 kafka02 kafka03

[root@localhost ~]# docker exec -it kafka01 bash

root@192055d53819:/# ls

bin boot dev etc home kafka lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@192055d53819:/# cd opt/kafka_2.13-2.8.1/bin/

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ls

connect-distributed.sh kafka-dump-log.sh kafka-storage.sh

connect-mirror-maker.sh kafka-features.sh kafka-streams-application-reset.sh

connect-standalone.sh kafka-leader-election.sh kafka-topics.sh

kafka-acls.sh kafka-log-dirs.sh kafka-verifiable-consumer.sh

kafka-broker-api-versions.sh kafka-metadata-shell.sh kafka-verifiable-producer.sh

kafka-cluster.sh kafka-mirror-maker.sh trogdor.sh

kafka-configs.sh kafka-preferred-replica-election.sh windows

kafka-console-consumer.sh kafka-producer-perf-test.sh zookeeper-security-migration.sh

kafka-console-producer.sh kafka-reassign-partitions.sh zookeeper-server-start.sh

kafka-consumer-groups.sh kafka-replica-verification.sh zookeeper-server-stop.sh

kafka-consumer-perf-test.sh kafka-run-class.sh zookeeper-shell.sh

kafka-delegation-tokens.sh kafka-server-start.sh

kafka-delete-records.sh kafka-server-stop.sh创建topic名称为first(主题),1个副本,分区3

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-topics.sh --zookeeper 192.168.158.37:12181 --create --topic first --replication-factor 1 --partitions 3

Created topic first.4、回到zookeeper容器里查看所有topic(主题)

cpp

[zk: 192.168.158.37:12181(CONNECTED) 1] ls /brokers/topics

[first]5、 创建topic名称为second,1个副本,分区2

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-topics.sh --zookeeper 192.168.158.37:12181 --create --topic second --replication-factor 1 --partitions 2

Created topic second.6、回到zookeeper容器里查看

cpp

[zk: 192.168.158.37:12181(CONNECTED) 2] ls /brokers/topics

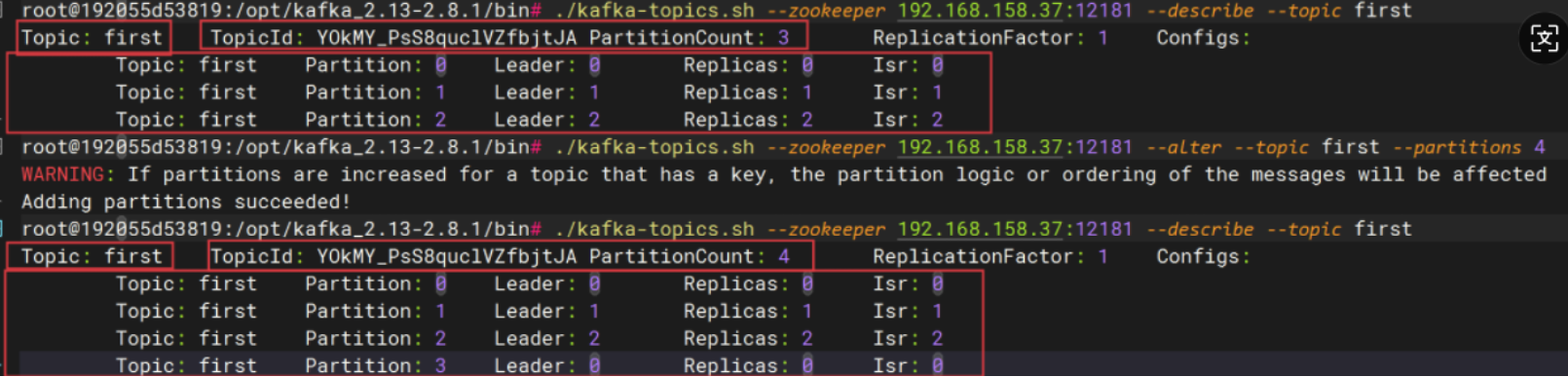

[second, first]7、kafkar容器里查看first此topic信息

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-topics.sh --zookeeper 192.168.158.37:12181 --describe --topic first

Topic: first TopicId: YOkMY_PsS8quclVZfbjtJA PartitionCount: 3 ReplicationFactor: 1 Configs:

Topic: first Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: first Partition: 1 Leader: 1 Replicas: 1 Isr: 1

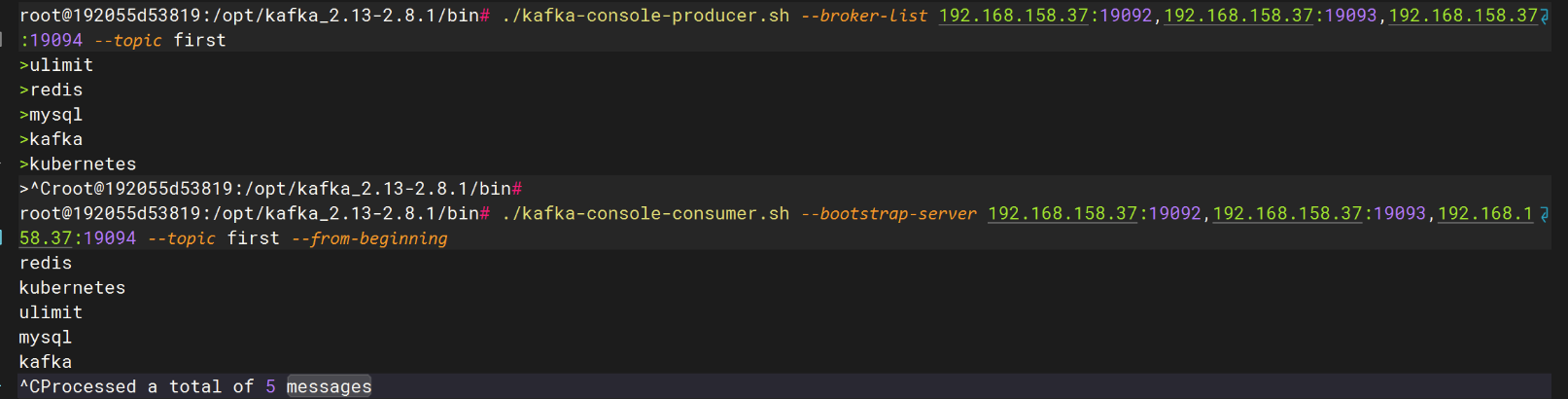

Topic: first Partition: 2 Leader: 2 Replicas: 2 Isr: 2kafka容器里调用生产者生产消息

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-console-producer.sh --broker-list 192.168.158.37:19092,192.168.158.37:19093,192.168.158.37:19094 --topic first

>ulimit

>redis

>mysql

>kafka

>kubernetes调用消费者消费消息,from-beginning表示读取全部的消息,注意它的顺序是乱的

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-console-consumer.sh --bootstrap-server 192.168.158.37:19092,192.168.158.37:19093,192.168.158.37:19094 --topic first --from-beginning

redis

kubernetes

ulimit

mysql

kafka

8、回到zookeeper容器里查看

就会看到consumer_offsets,消费者消费消息的变量就存到topic主题里边了

cpp

[zk: 192.168.158.37:12181(CONNECTED) 3] ls /brokers/topics

[__consumer_offsets, second, first]9、删除topic里名称为second主题

注意:这里的删除是没有真正的删除

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-topics.sh --zookeeper 192.168.158.37:12181 --delete --topic second

Topic second is marked for deletion. #第二个主题已标记为删除。

Note: This will have no impact if delete.topic.enable is not set to true. #注意:如果 delete.topic.enable 未设置为 true,此操作将不会产生任何影响

可以看到删除的时候只是被标记为删除marked for deletion并没有真正的删除,如果需要真正的删除,需要再config/server.properties中设置delete.topic.enable=true10、 回到zookeeper容器查看second主题是否删除

成功删除topic里名称为second主题

cpp

[zk: 192.168.158.37:12181(CONNECTED) 6] ls /brokers/topics

[__consumer_offsets, first]11、修改分区数

将分区扩展到3

先查看现在的分区数,PartitionCount(分区有三个)3

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-topics.sh --zookeeper 192.168.158.37:12181 --describe --topic first

Topic: first TopicId: YOkMY_PsS8quclVZfbjtJA PartitionCount: 3 ReplicationFactor: 1 Configs:

Topic: first Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: first Partition: 1 Leader: 1 Replicas: 1 Isr: 1

Topic: first Partition: 2 Leader: 2 Replicas: 2 Isr: 2将分区扩展到4

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-topics.sh --zookeeper 192.168.158.37:12181 --alter --topic first --partitions 4

WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected

Adding partitions succeeded!

警告:如果为有键的主题增加分区,消息的分区逻辑或顺序将会受到影响

分区添加成功查看分区已扩展为4

cpp

root@192055d53819:/opt/kafka_2.13-2.8.1/bin# ./kafka-topics.sh --zookeeper 192.168.158.37:12181 --describe --topic first

Topic: first TopicId: YOkMY_PsS8quclVZfbjtJA PartitionCount: 4 ReplicationFactor: 1 Configs:

Topic: first Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: first Partition: 1 Leader: 1 Replicas: 1 Isr: 1

Topic: first Partition: 2 Leader: 2 Replicas: 2 Isr: 2

Topic: first Partition: 3 Leader: 0 Replicas: 0 Isr: 0