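Kettle (Pentaho Data Integration) is an open-source ETL tool that supports tasks such as cross-database migration and data synchronization. The following covers its core concepts and the key points of designing a cross-database job: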

Core components

- **Transformation**: a set of steps (such as table input, field conversion, and table output) that process data and pass it from one step to the next.

- **Job**: a set of transformations or job entries that controls the execution flow of a task, with support for serial or parallel execution.

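- **Step**: the basic building block of a transformation (the counterpart in a job is a job entry). Typical steps handle work such as reading through a database connection, querying data, or mapping fields.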

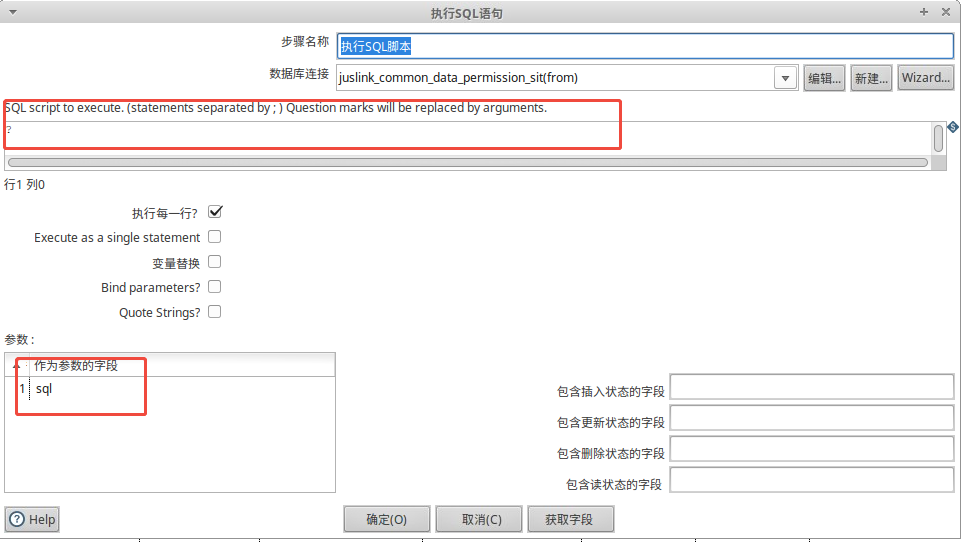

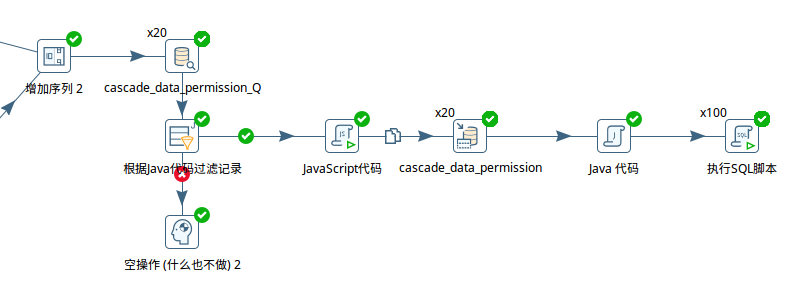

Partial transformation flow diagram:

Key nodes:


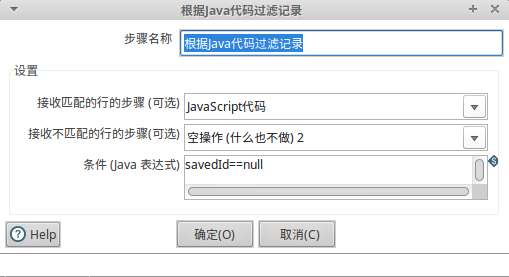

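1. Filter records with Java code: rows that meet the condition continue to the main step, and rows that do not are sent to a no-op (Dummy) step.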
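
As a rough illustration of this kind of filter, the plain-Java sketch below splits rows into a main branch and a no-op branch. It runs outside Kettle; the `RowRouter` class, the row layout, and the `"ACTIVE"` condition are all hypothetical, since the condition actually used in the transformation is not shown here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/** Plain-Java sketch of a filter that routes rows to a main branch or a no-op branch. */
final class RowRouter {

    /** Holds the rows that matched the condition and the rows that did not. */
    static final class Routed {
        final List<Object[]> main = new ArrayList<>();
        final List<Object[]> skipped = new ArrayList<>();
    }

    /** Sends each row to exactly one of the two branches. */
    static Routed route(List<Object[]> rows, Predicate<Object[]> condition) {
        Routed out = new Routed();
        for (Object[] row : rows) {
            if (condition.test(row)) {
                out.main.add(row);     // continues to the main step
            } else {
                out.skipped.add(row);  // ends in the no-op branch
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Object[]> rows = new ArrayList<>();
        rows.add(new Object[] {1L, "ACTIVE"});
        rows.add(new Object[] {2L, "DELETED"});

        // Hypothetical condition: keep rows whose second field is "ACTIVE".
        Routed routed = route(rows, r -> "ACTIVE".equals(r[1]));
        System.out.println(routed.main.size() + " row(s) to main, "
                + routed.skipped.size() + " row(s) to no-op");
    }
}
```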

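2. Cascade data authorization

Handles the main object of the cascade data authorization by inserting a new record or updating the existing one.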

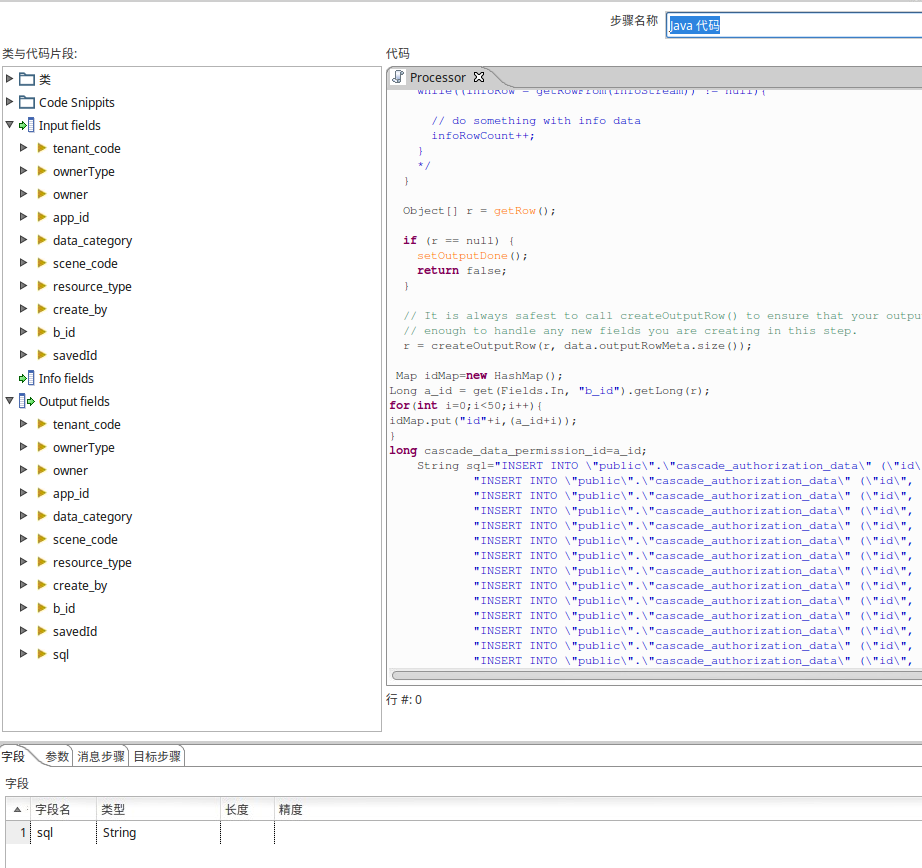

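3. Java code

Processes each row from the previous step and builds the batch SQL for the detail records of the cascade data authorization. Starting from b_id, ids are incremented and substituted for the primary key id and the parentId (the parent_id column), so that the detail records form a tree.

Adds an output field named sql and attaches it to every input row.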
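
The id substitution can be sketched in isolation as follows. This is a minimal plain-Java illustration, not the code used in the transformation: the `TreeIdRemap` class and the `parentIndex` array (the position of each node's parent inside a template, with -1 for the root) are assumptions introduced for the example.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: turn template positions into real ids by offsetting them from a base id. */
final class TreeIdRemap {

    /**
     * Returns one {id, parentId} pair per template node.
     * Node i receives id baseId + i; its parent receives baseId + parentIndex[i].
     * A negative parentIndex marks the root, whose parentId is null.
     */
    static List<Long[]> remap(long baseId, int[] parentIndex) {
        List<Long[]> result = new ArrayList<>();
        for (int i = 0; i < parentIndex.length; i++) {
            Long id = Long.valueOf(baseId + i);
            Long parentId = parentIndex[i] < 0
                    ? null
                    : Long.valueOf(baseId + parentIndex[i]);
            result.add(new Long[] {id, parentId});
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical template: node 0 -> node 1 -> node 2 (root).
        int[] parentIndex = {1, 2, -1};
        for (Long[] pair : remap(1000L, parentIndex)) {
            System.out.println("id=" + pair[0] + ", parent_id=" + pair[1]);
        }
    }
}
```

Because every id is derived from the same base value, the parent references stay consistent within one batch, provided the base ids of different rows are spaced far enough apart that their ranges do not overlap.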

```java
import java.util.HashMap;
import java.util.Map;

public boolean processRow(StepMetaInterface smi, StepDataInterface sdi) throws KettleException {
    if (first) {
        first = false;

        /* TODO: Your code here. (Using info fields)

        FieldHelper infoField = get(Fields.Info, "info_field_name");

        RowSet infoStream = findInfoRowSet("info_stream_tag");

        Object[] infoRow = null;

        int infoRowCount = 0;

        // Read all rows from info step before calling getRow() method, which returns first row from any
        // input rowset. As rowMeta for info and input steps varies getRow() can lead to errors.
        while((infoRow = getRowFrom(infoStream)) != null){

            // do something with info data
            infoRowCount++;
        }
        */
    }

    Object[] r = getRow();

    if (r == null) {
        setOutputDone();
        return false;
    }

    // It is always safest to call createOutputRow() to ensure that your output row's Object[] is large
    // enough to handle any new fields you are creating in this step.
    r = createOutputRow(r, data.outputRowMeta.size());

    // Raw Map kept on purpose: generics may not compile in the User Defined Java Class step.
    Map idMap = new HashMap();

    // Base id for this row, read from the input field b_id.
    Long a_id = get(Fields.In, "b_id").getLong(r);

    // Pre-compute 50 consecutive ids: id0 = b_id, id1 = b_id + 1, and so on.
    for (int i = 0; i < 50; i++) {
        idMap.put("id" + i, (a_id + i));
    }

    long cascade_data_permission_id = a_id;

    // One INSERT per detail record; id and parent_id are taken from idMap so the records link into a tree.
    // The parent ids id8, id11 and id16 refer to records that are not among the statements shown here.
    String sql = "INSERT INTO \"public\".\"cascade_authorization_data\" (\"id\", \"cascade_data_permission_id\", \"resource\", \"resource_type\", \"parent_id\", \"created_by\", \"created_time\", \"last_modified_by\", \"last_modified_time\", \"org_id\", \"proxy_operator\") VALUES ("+idMap.get("id0")+", "+cascade_data_permission_id+", 'BY_ROUTINE', 'SELF_OP_TRANSPORT_ORDER_PLACEMENT_BIZ_MD', "+idMap.get("id5")+", '20250910001', '2025-09-22 07:55:21.050965', '20250910001', '2025-09-22 07:55:21.050968', '4402542551589711872', NULL);\n" +
        "INSERT INTO \"public\".\"cascade_authorization_data\" (\"id\", \"cascade_data_permission_id\", \"resource\", \"resource_type\", \"parent_id\", \"created_by\", \"created_time\", \"last_modified_by\", \"last_modified_time\", \"org_id\", \"proxy_operator\") VALUES ("+idMap.get("id1")+", "+cascade_data_permission_id+", 'BY_ROUTINE', 'SELF_OP_TRANSPORT_ORDER_PLACEMENT_BIZ_MD', "+idMap.get("id3")+", '20250910001', '2025-09-22 07:55:21.050868', '20250910001', '2025-09-22 07:55:21.050884', '4402542551589711872', NULL);\n" +
        "INSERT INTO \"public\".\"cascade_authorization_data\" (\"id\", \"cascade_data_permission_id\", \"resource\", \"resource_type\", \"parent_id\", \"created_by\", \"created_time\", \"last_modified_by\", \"last_modified_time\", \"org_id\", \"proxy_operator\") VALUES ("+idMap.get("id2")+", "+cascade_data_permission_id+", 'BY_ROUTINE', 'SELF_OP_TRANSPORT_ORDER_PLACEMENT_BIZ_MD', "+idMap.get("id8")+", '20250910001', '2025-09-22 07:55:21.05107', '20250910001', '2025-09-22 07:55:21.051075', '4402542551589711872', NULL);\n" +
        "INSERT INTO \"public\".\"cascade_authorization_data\" (\"id\", \"cascade_data_permission_id\", \"resource\", \"resource_type\", \"parent_id\", \"created_by\", \"created_time\", \"last_modified_by\", \"last_modified_time\", \"org_id\", \"proxy_operator\") VALUES ("+idMap.get("id3")+", "+cascade_data_permission_id+", 'TPM_SEA', 'TRANSPORT_MODE', "+idMap.get("id6")+", '20250910001', '2025-09-22 07:55:21.050857', '20250910001', '2025-09-22 07:55:21.050861', '4402542551589711872', NULL);\n" +
        "INSERT INTO \"public\".\"cascade_authorization_data\" (\"id\", \"cascade_data_permission_id\", \"resource\", \"resource_type\", \"parent_id\", \"created_by\", \"created_time\", \"last_modified_by\", \"last_modified_time\", \"org_id\", \"proxy_operator\") VALUES ("+idMap.get("id4")+", "+cascade_data_permission_id+", 'BY_ROUTINE', 'SELF_OP_TRANSPORT_ORDER_PLACEMENT_BIZ_MD', "+idMap.get("id16")+", '20250910001', '2025-09-22 07:55:21.050846', '20250910001', '2025-09-22 07:55:21.050848', '4402542551589711872', NULL);\n" +
        "INSERT INTO \"public\".\"cascade_authorization_data\" (\"id\", \"cascade_data_permission_id\", \"resource\", \"resource_type\", \"parent_id\", \"created_by\", \"created_time\", \"last_modified_by\", \"last_modified_time\", \"org_id\", \"proxy_operator\") VALUES ("+idMap.get("id5")+", "+cascade_data_permission_id+", 'TPM_RAIL', 'TRANSPORT_MODE', "+idMap.get("id11")+", '20250910001', '2025-09-22 07:55:21.050931', '20250910001', '2025-09-22 07:55:21.050934', '4402542551589711872', NULL);\n" +
        "INSERT INTO \"public\".\"cascade_authorization_data\" (\"id\", \"cascade_data_permission_id\", \"resource\", \"resource_type\", \"parent_id\", \"created_by\", \"created_time\", \"last_modified_by\", \"last_modified_time\", \"org_id\", \"proxy_operator\") VALUES ("+idMap.get("id6")+", "+cascade_data_permission_id+", 'TRM_DMC', 'TRADE_MODE', NULL, '20250910001', '2025-09-22 07:55:21.050825', '20250910001', '2025-09-22 07:55:21.050829', '4402542551589711872', NULL);";

    // Store the generated script in the output field "sql".
    get(Fields.Out, "sql").setValue(r, sql);

    // Send the row on to the next step.
    putRow(data.outputRowMeta, r);

    return true;
}
```

4. Execute the batch SQL carried by each row
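
Outside Kettle, the same idea can be sketched with plain JDBC. The example below is an assumption-laden illustration, not the step's actual implementation: the `RowSqlBatch` class is hypothetical, and `splitStatements` relies on the script holding exactly one statement per line, as in the `sql` string built in step 3.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;

/** Sketch: run the multi-statement script stored in one row as a single JDBC batch. */
final class RowSqlBatch {

    /** Splits a script with one statement per line and drops the trailing semicolons. */
    static List<String> splitStatements(String script) {
        List<String> statements = new ArrayList<>();
        for (String line : script.split("\n")) {
            String s = line.trim();
            if (s.endsWith(";")) {
                s = s.substring(0, s.length() - 1).trim();
            }
            if (!s.isEmpty()) {
                statements.add(s);
            }
        }
        return statements;
    }

    /** Executes all statements in one transaction; rolls back if any of them fails. */
    static int[] executeAll(Connection conn, List<String> statements) throws SQLException {
        boolean previousAutoCommit = conn.getAutoCommit();
        conn.setAutoCommit(false);
        try (Statement st = conn.createStatement()) {
            for (String s : statements) {
                st.addBatch(s);
            }
            int[] counts = st.executeBatch();
            conn.commit();
            return counts;
        } catch (SQLException e) {
            conn.rollback();
            throw e;
        } finally {
            conn.setAutoCommit(previousAutoCommit);
        }
    }

    public static void main(String[] args) {
        // Only the splitting is demonstrated here; executeAll needs a live database connection.
        String script = "INSERT INTO t (id) VALUES (1);\nINSERT INTO t (id) VALUES (2);";
        System.out.println(splitStatements(script));
    }
}
```

Wrapping the statements of one row in a single transaction means the tree for that row is either written completely or not at all.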