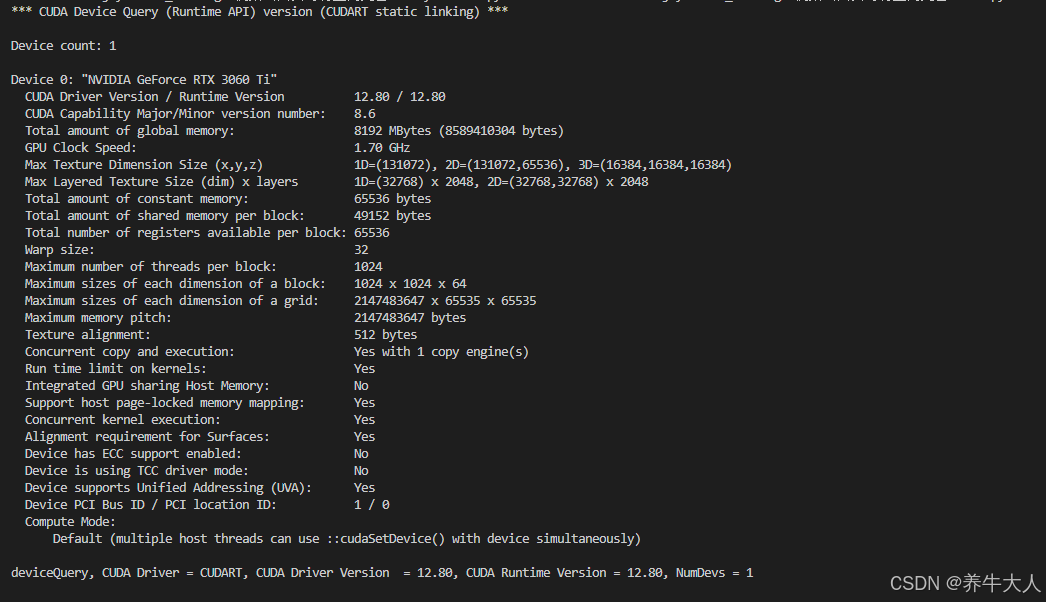

Environment: OpenCV 4.10, GPU: NVIDIA RTX 3060 Ti

After building OpenCV with CUDA, test it with:

```python
import cv2
from cv2 import cuda

# Print the properties of CUDA device 0 as seen by OpenCV
cuda.printCudaDeviceInfo(0)
```
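Before running the longer test, it can help to confirm from Python that the build can actually use CUDA. The following is a minimal sketch that relies only on the documented behavior of `cv2.cuda.getCudaEnabledDeviceCount()`: it returns 0 when OpenCV was compiled without CUDA and -1 when the driver is missing or incompatible. The helper name `cuda_device_count` is hypothetical.

```python
def cuda_device_count() -> int:
    """Hypothetical helper: number of CUDA devices usable by OpenCV, else 0."""
    try:
        import cv2
    except ImportError:
        return 0  # OpenCV is not installed in this environment
    try:
        count = cv2.cuda.getCudaEnabledDeviceCount()
    except (AttributeError, cv2.error):
        return 0  # no cuda module, or the query itself failed
    # 0 means "built without CUDA", -1 means "driver missing or incompatible"
    return max(int(count), 0)


if __name__ == "__main__":
    n = cuda_device_count()
    if n > 0:
        print(f"OpenCV sees {n} CUDA device(s)")
    else:
        print("CUDA is not available to OpenCV; check the build and the driver")
```

Clamping -1 to 0 lets the caller treat every "no GPU" case the same way.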

Python OpenCV CUDA test code (GPU background subtraction):

```python
import cv2


def main():
    # Equivalent of the C++ Method enum
    MOG = 0
    MOG2 = 1

    # Select the background-subtraction method
    method = MOG

    # Check how many CUDA devices OpenCV can use
    count = cv2.cuda.getCudaEnabledDeviceCount()
    print(f"GPU Device Count : {count}")
    if count <= 0:
        print("No CUDA device available (or OpenCV was built without CUDA)")
        return

    # Create a CUDA stream for the asynchronous calls
    stream = cv2.cuda_Stream()

    # Open the video file
    cap = cv2.VideoCapture("nfs.mp4")

    # Read the first frame
    ret, frame = cap.read()
    if not ret:
        print("Failed to read video")
        return

    # Allocate a GpuMat and upload the first frame
    d_frame = cv2.cuda_GpuMat()
    d_frame.upload(frame)

    # Create the CUDA background subtractor
    if method == MOG:
        bg_subtractor = cv2.cuda.createBackgroundSubtractorMOG()
    else:
        bg_subtractor = cv2.cuda.createBackgroundSubtractorMOG2()

    # GpuMats for the foreground mask, foreground image and background image
    d_fgmask = cv2.cuda_GpuMat()
    d_fgimg = cv2.cuda_GpuMat()
    d_bgimg = cv2.cuda_GpuMat()

    while True:
        ret, frame = cap.read()
        if not ret:
            break

        start = cv2.getTickCount()

        try:
            # Upload the frame to the GPU
            d_frame.upload(frame)

            # Update the model; the Python binding returns the mask
            if method == MOG:
                d_fgmask = bg_subtractor.apply(d_frame, 0.01, stream)
            else:
                d_fgmask = bg_subtractor.apply(d_frame, learningRate=-1, stream=stream)

            # Get the background image
            d_bgimg = bg_subtractor.getBackgroundImage(stream)

            # Wait for the queued GPU work, then download the mask
            stream.waitForCompletion()
            fgmask = d_fgmask.download()

            # Binarize the mask on the CPU (values <= 250, e.g. MOG2 shadows, become 0)
            _, fgmask = cv2.threshold(fgmask, 250, 255, cv2.THRESH_BINARY)

            # Convert to 3 channels to match the BGR frame
            fgmask_3ch = cv2.cvtColor(fgmask, cv2.COLOR_GRAY2BGR)

            # Upload the processed mask back to the GPU
            d_fgmask_3ch = cv2.cuda_GpuMat()
            d_fgmask_3ch.upload(fgmask_3ch)

            # Output GpuMat for the foreground image
            d_fgimg = cv2.cuda_GpuMat(d_frame.size(), d_frame.type())

            # Extract the foreground: frame * mask / 255 (mask is 0 or 255)
            cv2.cuda.multiply(d_frame, d_fgmask_3ch, d_fgimg, 1.0 / 255.0, -1, stream)

            # Synchronize the CUDA stream
            stream.waitForCompletion()

            # Download the results from the GPU
            fgimg = d_fgimg.download()
            bgimg = None if d_bgimg.empty() else d_bgimg.download()

            # Calculate FPS for this frame
            fps = cv2.getTickFrequency() / (cv2.getTickCount() - start)
            cv2.putText(frame, f"FPS : {fps:.2f}", (50, 50),
                        cv2.FONT_HERSHEY_SIMPLEX, 1.0, (0, 0, 255), 2, cv2.LINE_AA)

            # Show the results
            cv2.imshow("image", frame)
            cv2.imshow("foreground mask", fgmask)
            cv2.imshow("foreground image", fgimg)
            if bgimg is not None:
                cv2.imshow("mean background image", bgimg)

        except cv2.error as e:
            # Report the error and fall through so the ESC check still runs
            print(f"OpenCV Error: {e}")

        # Exit on ESC
        if cv2.waitKey(1) == 27:
            break

    # Clean up
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```

The Python code above was converted from the C++ code below. To test OpenCV's GPU acceleration in a C++ environment, use the C++ version directly.

C++ code (source: https://cloud.tencent.com/developer/article/1523416):

```cpp
#include <cstdio>
#include <iostream>
#include <string>

#include "opencv2/core.hpp"
#include "opencv2/core/utility.hpp"
#include "opencv2/cudabgsegm.hpp"
#include "opencv2/video.hpp"
#include "opencv2/videoio.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/highgui.hpp"

using namespace std;
using namespace cv;
using namespace cv::cuda;

enum Method
{
    MOG,
    MOG2,
};

int main(int argc, const char** argv)
{
    Method m = MOG;

    int count = cuda::getCudaEnabledDeviceCount();
    printf("GPU Device Count : %d \n", count);
    if (count <= 0)
        return -1;  // no usable CUDA device

    VideoCapture cap;
    cap.open("D:/images/video/example_dsh.mp4");

    Mat frame;
    cap >> frame;
    if (frame.empty())
    {
        printf("Failed to read video\n");
        return -1;
    }

    GpuMat d_frame(frame);

    Ptr<BackgroundSubtractor> mog = cuda::createBackgroundSubtractorMOG();
    Ptr<BackgroundSubtractor> mog2 = cuda::createBackgroundSubtractorMOG2();

    GpuMat d_fgmask;
    GpuMat d_fgimg;
    GpuMat d_bgimg;

    Mat fgmask;
    Mat fgimg;
    Mat bgimg;

    // Initialize the model with the first frame
    switch (m)
    {
    case MOG:
        mog->apply(d_frame, d_fgmask, 0.01);
        break;
    case MOG2:
        mog2->apply(d_frame, d_fgmask);
        break;
    }

    namedWindow("image", WINDOW_AUTOSIZE);
    namedWindow("foreground mask", WINDOW_AUTOSIZE);
    namedWindow("foreground image", WINDOW_AUTOSIZE);
    namedWindow("mean background image", WINDOW_AUTOSIZE);

    for (;;)
    {
        cap >> frame;
        if (frame.empty())
            break;

        int64 start = cv::getTickCount();
        d_frame.upload(frame);

        // Update the model
        switch (m)
        {
        case MOG:
            mog->apply(d_frame, d_fgmask, 0.01);
            mog->getBackgroundImage(d_bgimg);
            break;
        case MOG2:
            mog2->apply(d_frame, d_fgmask);
            mog2->getBackgroundImage(d_bgimg);
            break;
        }

        // Copy the pixels with a non-zero mask onto a black image
        d_fgimg.create(d_frame.size(), d_frame.type());
        d_fgimg.setTo(Scalar::all(0));
        d_frame.copyTo(d_fgimg, d_fgmask);

        d_fgmask.download(fgmask);
        d_fgimg.download(fgimg);
        if (!d_bgimg.empty())
            d_bgimg.download(bgimg);

        imshow("foreground mask", fgmask);
        imshow("foreground image", fgimg);
        if (!bgimg.empty())
            imshow("mean background image", bgimg);

        double fps = cv::getTickFrequency() / (cv::getTickCount() - start);
        // std::cout << "FPS : " << fps << std::endl;
        putText(frame, format("FPS : %.2f", fps), Point(50, 50), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, LINE_8);
        imshow("image", frame);

        char key = (char)waitKey(1);
        if (key == 27)
            break;
    }

    return 0;
}
```
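The two versions build the foreground image differently. The C++ code calls `d_frame.copyTo(d_fgimg, d_fgmask)`, which keeps every pixel whose mask value is non-zero. The Python code first thresholds the mask at 250 and then computes `frame * mask * (1/255)` with `cv2.cuda.multiply`. The pure-Python sketch below mimics both operations on a few 8-bit pixel values to show when they agree. It assumes OpenCV's round-and-saturate behavior for the scaled multiply, and all helper names are hypothetical. The value 127 stands in for a MOG2 shadow pixel, since MOG2 marks shadows with a gray value (127 by default) rather than 255.

```python
def saturate_u8(x: float) -> int:
    """Round and clamp to 0..255, like OpenCV's saturate_cast<uchar>."""
    return max(0, min(255, round(x)))


def binarize(mask, thresh=250):
    """Mimics cv2.threshold(mask, 250, 255, cv2.THRESH_BINARY)."""
    return [255 if m > thresh else 0 for m in mask]


def mask_by_multiply(pixels, mask):
    """Mimics cv2.cuda.multiply(frame, mask, dst, 1.0/255.0): p * m / 255."""
    return [saturate_u8(p * m * (1.0 / 255.0)) for p, m in zip(pixels, mask)]


def mask_by_copy(pixels, mask):
    """Mimics frame.copyTo(dst, mask) on a zero-filled dst: keep p where m != 0."""
    return [p if m != 0 else 0 for p, m in zip(pixels, mask)]


if __name__ == "__main__":
    pixels = [10, 200, 77, 255]
    raw_mask = [255, 0, 127, 255]  # 127 plays the role of a shadow pixel

    binary = binarize(raw_mask)
    print(mask_by_multiply(pixels, binary))    # [10, 0, 0, 255]
    print(mask_by_copy(pixels, binary))        # [10, 0, 0, 255]
    print(mask_by_copy(pixels, raw_mask))      # [10, 0, 77, 255]
    print(mask_by_multiply(pixels, raw_mask))  # [10, 0, 38, 255]
```

After thresholding, the multiply trick and `copyTo` with a mask give the same result. Without thresholding, `copyTo` keeps a shadow pixel at full intensity, while the multiply would only dim it. This is why the Python version binarizes the mask before the multiplication.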