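Karpenter is an open-source autoscaling project built for Kubernetes. It improves the availability of Kubernetes applications without requiring you to provision compute resources manually or to over-provision them. Karpenter watches the aggregate resource requests of unschedulable Pods and decides when to launch and terminate nodes. This minimizes scheduling latency and provides suitable compute for your application within seconds rather than minutes.

Karpenter can replace the traditional cluster-autoscaler. To install Karpenter on an existing EKS cluster, we can follow the Karpenter documentation and choose the "Migrating from Cluster Autoscaler" installation path.

Reference link for the Cluster Autoscaler installation used here

Environment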

| Software | Version | Install location |
|---|---|---|
| AWS EKS | 1.34 | AWS (Oregon region) |
| eksctl | 0.215.0 | Local machine |
| kubectl | 1.34 | Local machine |
| AWS CLI | 2.21.0 | Local machine |

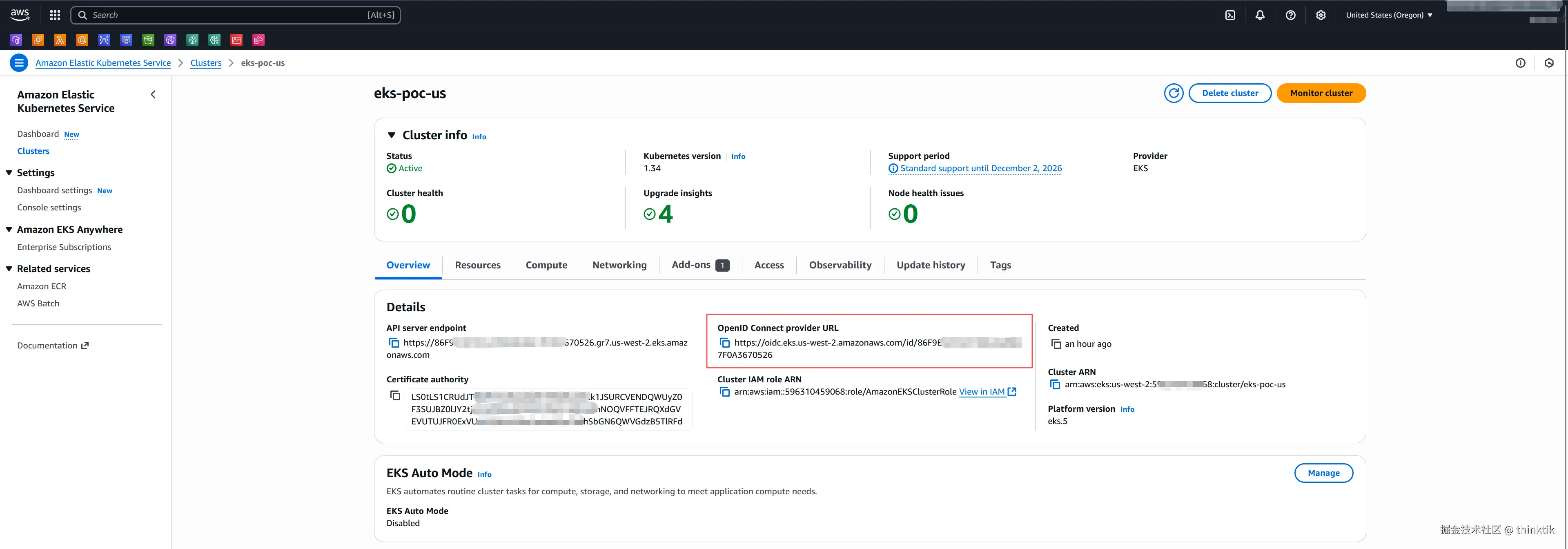

IAM OIDC provider setup

In the EKS console, find the cluster's OpenID Connect provider URL and copy it.

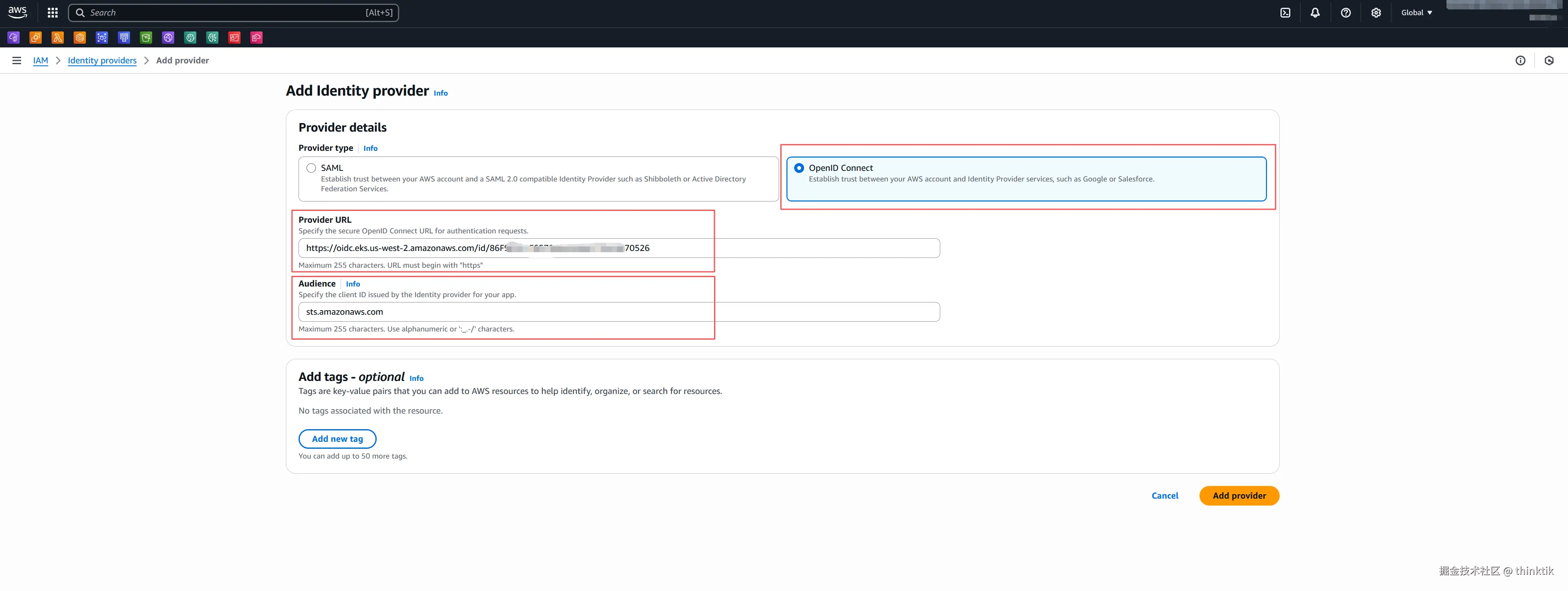

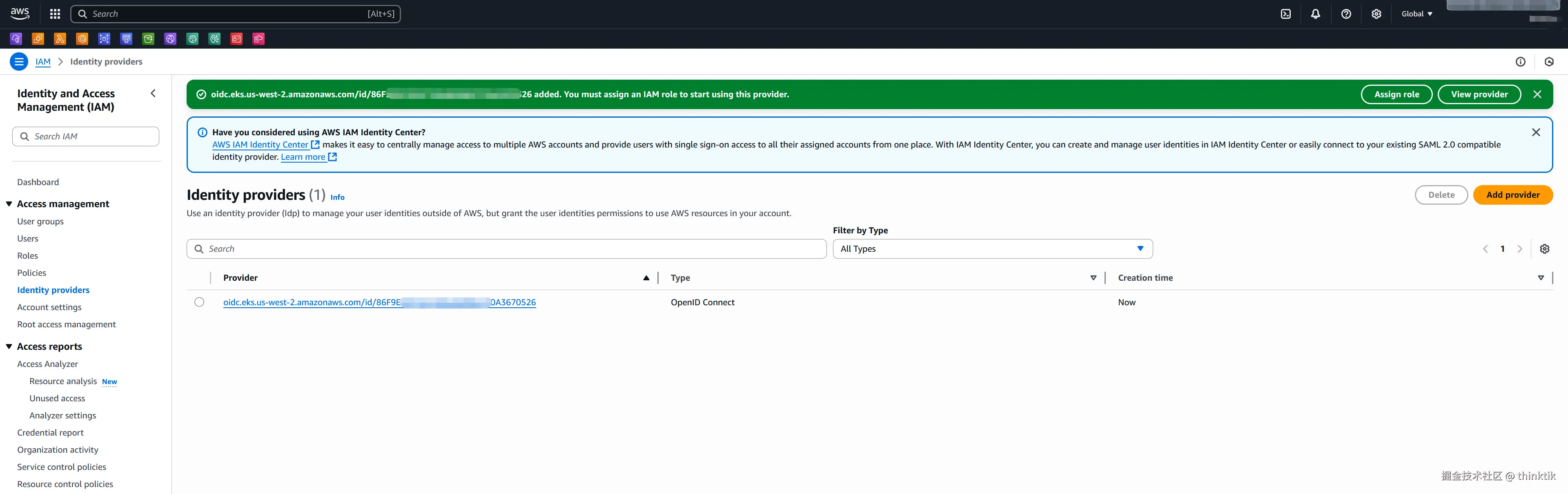

Then add it as an identity provider in the AWS IAM console.

Paste the copied OpenID Connect provider URL and enter sts.amazonaws.com in the Audience field.

Install Karpenter

Follow the "Migrating from Cluster Autoscaler" guide.

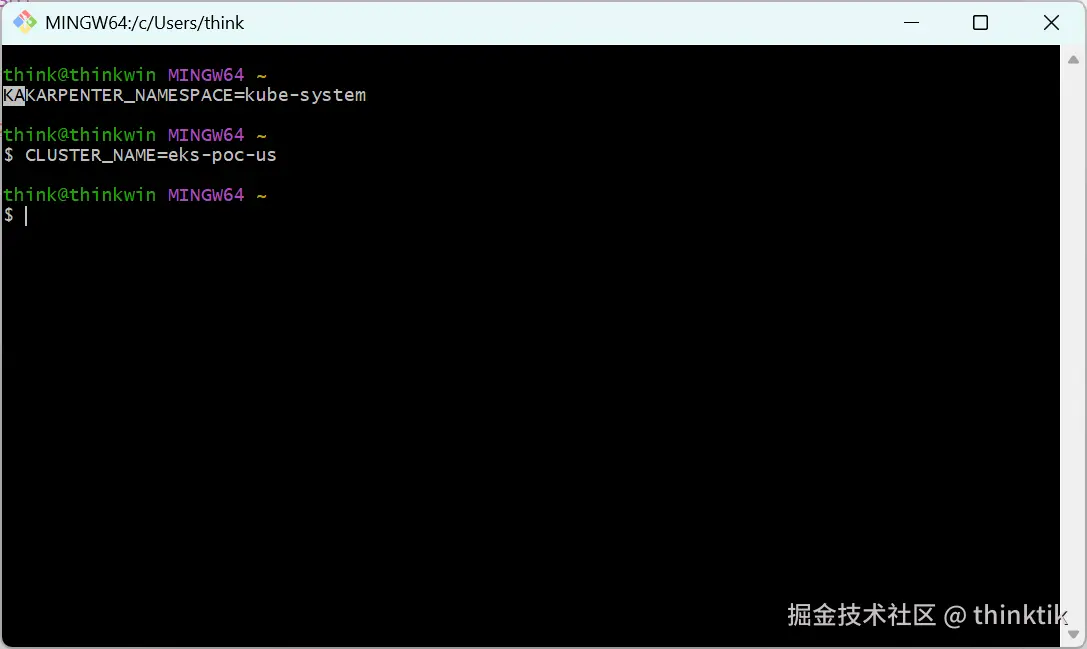

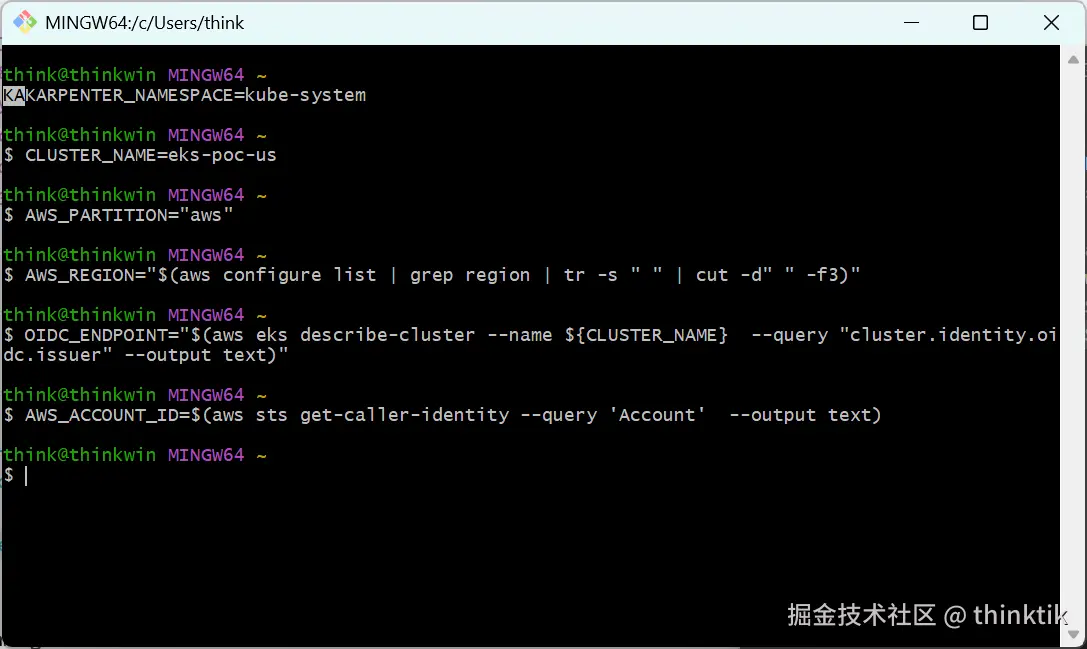

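Set environment variables

```bash
KARPENTER_NAMESPACE=kube-system
# Cluster name
CLUSTER_NAME=<your cluster name>
```

Running these commands in a bash environment is recommended (on Windows you can install MinGW).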

```bash
# Use "aws" for regions outside China and "aws-cn" for China regions
AWS_PARTITION="aws"
# Get the AWS region
AWS_REGION="$(aws configure list | grep region | tr -s " " | cut -d" " -f3)"
# Get the OIDC endpoint
OIDC_ENDPOINT="$(aws eks describe-cluster --name "${CLUSTER_NAME}" --query "cluster.identity.oidc.issuer" --output text)"
# Get the AWS account ID
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' --output text)
# Get the cluster's Kubernetes version
K8S_VERSION=$(aws eks describe-cluster --name "${CLUSTER_NAME}" --query "cluster.version" --output text)
# Derive the AMI alias version (v<digits>) from the recommended AL2023 AMI name
ALIAS_VERSION="$(aws ssm get-parameter --name "/aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2023/x86_64/standard/recommended/image_id" --query Parameter.Value | xargs aws ec2 describe-images --query 'Images[0].Name' --image-ids | sed -r 's/^.*(v[[:digit:]]+).*$/\1/')"
```
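The `sed` expression at the end of the `ALIAS_VERSION` pipeline is the least obvious step, so here is a minimal sketch of what it does in isolation. The AMI name is a made-up sample that is only assumed to resemble a real EKS AL2023 AMI name, and `-E` is used in place of `-r` (the two are equivalent in GNU sed).

```bash
# Made-up AMI name for illustration; a real one comes from `aws ec2 describe-images`.
SAMPLE_AMI_NAME="amazon-eks-node-al2023-x86_64-standard-1.34-v20250101"

# Keep only the last "v<digits>" token in the name.
SAMPLE_ALIAS="$(printf '%s\n' "${SAMPLE_AMI_NAME}" | sed -E 's/^.*(v[[:digit:]]+).*$/\1/')"

echo "${SAMPLE_ALIAS}"   # prints v20250101
```

The EC2NodeClass later in this post uses `al2023@latest`, so `ALIAS_VERSION` only matters if you decide to pin the alias, for example `al2023@${ALIAS_VERSION}`.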

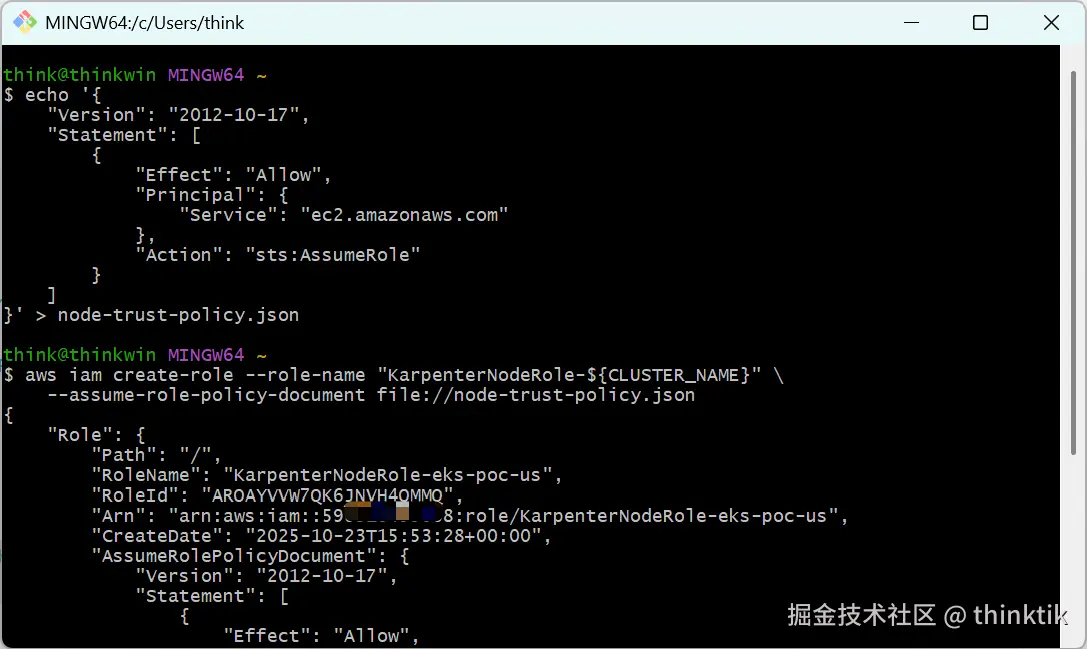
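Every later command depends on these variables, and an empty one tends to produce a malformed ARN rather than an obvious error. A small guard like the following can catch that early. `require_vars` is a hypothetical helper name, not part of the AWS CLI or Karpenter.

```bash
# Hypothetical helper: report every named variable that is empty or unset.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup: read the value of the variable whose name is in $name.
    eval "value=\${${name}:-}"
    if [ -z "${value}" ]; then
      echo "missing: ${name}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Usage: warn before touching IAM if anything is missing.
require_vars KARPENTER_NAMESPACE CLUSTER_NAME AWS_PARTITION AWS_REGION \
  OIDC_ENDPOINT AWS_ACCOUNT_ID K8S_VERSION \
  || echo "set the variables above before continuing" >&2
```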

Create the KarpenterNodeRole IAM role

Create the KarpenterNodeRole-xxx role for the nodes that Karpenter will manage.

```bash
echo '{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {
                "Service": "ec2.amazonaws.com"
            },
            "Action": "sts:AssumeRole"
        }
    ]
}' > node-trust-policy.json

aws iam create-role --role-name "KarpenterNodeRole-${CLUSTER_NAME}" \
    --assume-role-policy-document file://node-trust-policy.json
```

The result looks like this:

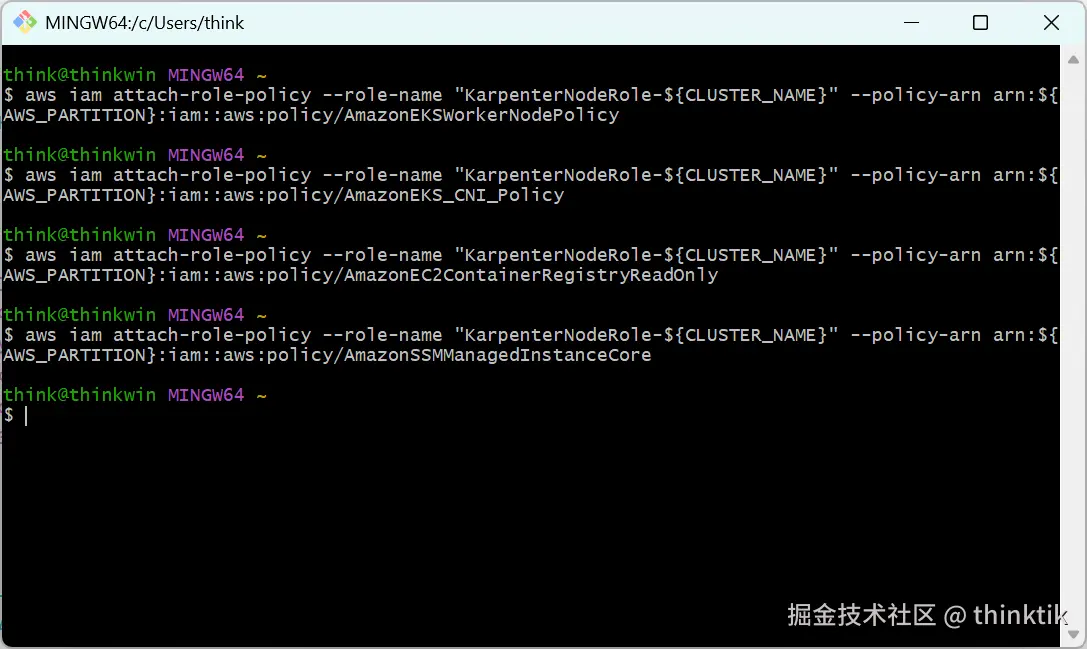

Attach policies to the role you just created:

```bash
aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonEKSWorkerNodePolicy
aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonEKS_CNI_Policy
aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly
aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonSSMManagedInstanceCore
```

The result looks like this:

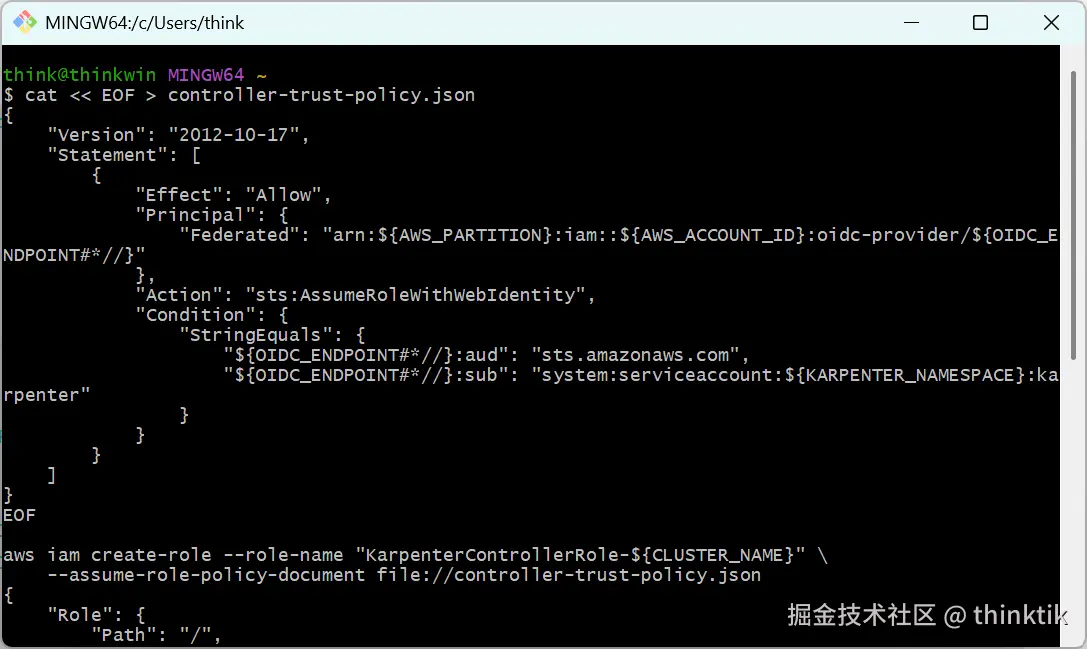
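The four `attach-role-policy` calls differ only in the policy name, so they can also be written as a loop. This is a sketch of that refactoring, not a required change. `node_policy_arns` is a hypothetical helper, and the `aws` call is left commented out so the block has no side effects when pasted.

```bash
# Hypothetical helper: print the managed-policy ARNs for a given partition.
node_policy_arns() {
  partition="$1"
  for policy in AmazonEKSWorkerNodePolicy AmazonEKS_CNI_Policy \
                AmazonEC2ContainerRegistryReadOnly AmazonSSMManagedInstanceCore; do
    echo "arn:${partition}:iam::aws:policy/${policy}"
  done
}

for arn in $(node_policy_arns "${AWS_PARTITION:-aws}"); do
  echo "would attach ${arn}"
  # aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" --policy-arn "${arn}"
done
```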

Create the KarpenterControllerRole IAM role

The KarpenterControllerRole IAM role is used by the Karpenter controller itself.

```bash
cat << EOF > controller-trust-policy.json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {
                "Federated": "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT#*//}"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    "${OIDC_ENDPOINT#*//}:aud": "sts.amazonaws.com",
                    "${OIDC_ENDPOINT#*//}:sub": "system:serviceaccount:${KARPENTER_NAMESPACE}:karpenter"
                }
            }
        }
    ]
}
EOF

aws iam create-role --role-name "KarpenterControllerRole-${CLUSTER_NAME}" \
    --assume-role-policy-document file://controller-trust-policy.json
```

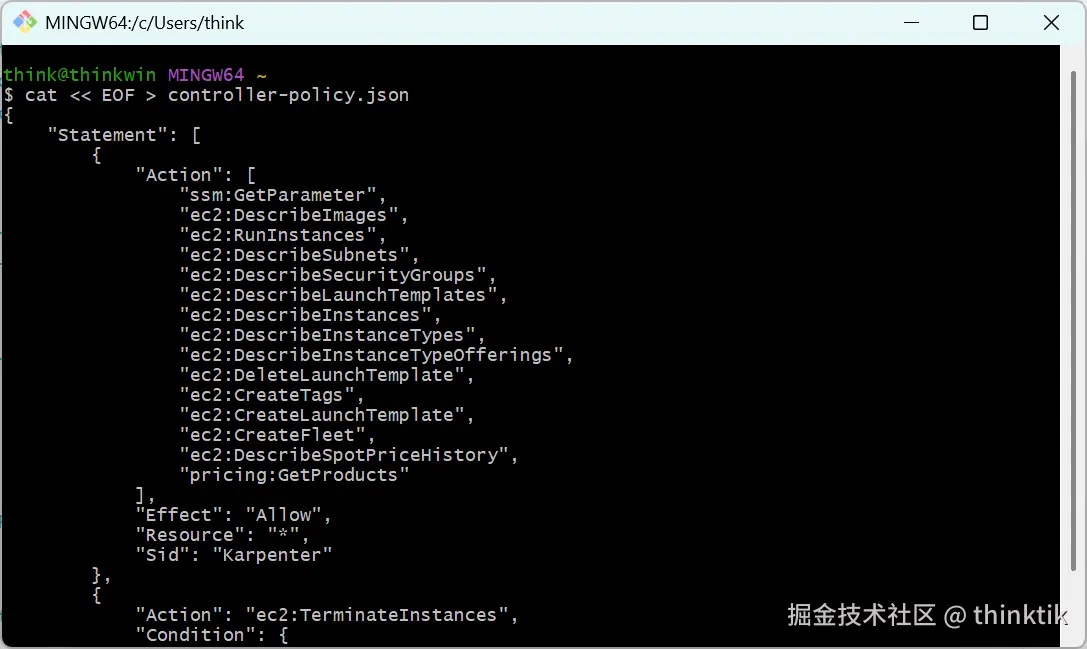
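The trust policy relies on the shell expansion `${OIDC_ENDPOINT#*//}`, which removes the shortest prefix matching `*//`, that is, the `https://` scheme. The sketch below shows the effect on a made-up issuer URL and account ID. Neither value is real, and the `SAMPLE_` names are used so that pasting the block does not overwrite your own variables.

```bash
# Made-up values for illustration only.
SAMPLE_OIDC_ENDPOINT="https://oidc.eks.us-west-2.amazonaws.com/id/EXAMPLE0123456789"
SAMPLE_ACCOUNT_ID="111122223333"

# "#*//" strips everything up to and including the first "//".
SAMPLE_OIDC_PROVIDER="${SAMPLE_OIDC_ENDPOINT#*//}"

echo "${SAMPLE_OIDC_PROVIDER}"
echo "arn:aws:iam::${SAMPLE_ACCOUNT_ID}:oidc-provider/${SAMPLE_OIDC_PROVIDER}"
```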

Attach a policy to KarpenterControllerRole

```bash
cat << EOF > controller-policy.json
{
    "Statement": [
        {
            "Action": [
                "ssm:GetParameter",
                "ec2:DescribeImages",
                "ec2:RunInstances",
                "ec2:DescribeSubnets",
                "ec2:DescribeSecurityGroups",
                "ec2:DescribeLaunchTemplates",
                "ec2:DescribeInstances",
                "ec2:DescribeInstanceTypes",
                "ec2:DescribeInstanceTypeOfferings",
                "ec2:DeleteLaunchTemplate",
                "ec2:CreateTags",
                "ec2:CreateLaunchTemplate",
                "ec2:CreateFleet",
                "ec2:DescribeSpotPriceHistory",
                "pricing:GetProducts"
            ],
            "Effect": "Allow",
            "Resource": "*",
            "Sid": "Karpenter"
        },
        {
            "Action": "ec2:TerminateInstances",
            "Condition": {
                "StringLike": {
                    "ec2:ResourceTag/karpenter.sh/nodepool": "*"
                }
            },
            "Effect": "Allow",
            "Resource": "*",
            "Sid": "ConditionalEC2Termination"
        },
        {
            "Effect": "Allow",
            "Action": "iam:PassRole",
            "Resource": "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}",
            "Sid": "PassNodeIAMRole"
        },
        {
            "Effect": "Allow",
            "Action": "eks:DescribeCluster",
            "Resource": "arn:${AWS_PARTITION}:eks:${AWS_REGION}:${AWS_ACCOUNT_ID}:cluster/${CLUSTER_NAME}",
            "Sid": "EKSClusterEndpointLookup"
        },
        {
            "Sid": "AllowScopedInstanceProfileCreationActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": [
                "iam:CreateInstanceProfile"
            ],
            "Condition": {
                "StringEquals": {
                    "aws:RequestTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:RequestTag/topology.kubernetes.io/region": "${AWS_REGION}"
                },
                "StringLike": {
                    "aws:RequestTag/karpenter.k8s.aws/ec2nodeclass": "*"
                }
            }
        },
        {
            "Sid": "AllowScopedInstanceProfileTagActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": [
                "iam:TagInstanceProfile"
            ],
            "Condition": {
                "StringEquals": {
                    "aws:ResourceTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:ResourceTag/topology.kubernetes.io/region": "${AWS_REGION}",
                    "aws:RequestTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:RequestTag/topology.kubernetes.io/region": "${AWS_REGION}"
                },
                "StringLike": {
                    "aws:ResourceTag/karpenter.k8s.aws/ec2nodeclass": "*",
                    "aws:RequestTag/karpenter.k8s.aws/ec2nodeclass": "*"
                }
            }
        },
        {
            "Sid": "AllowScopedInstanceProfileActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": [
                "iam:AddRoleToInstanceProfile",
                "iam:RemoveRoleFromInstanceProfile",
                "iam:DeleteInstanceProfile"
            ],
            "Condition": {
                "StringEquals": {
                    "aws:ResourceTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:ResourceTag/topology.kubernetes.io/region": "${AWS_REGION}"
                },
                "StringLike": {
                    "aws:ResourceTag/karpenter.k8s.aws/ec2nodeclass": "*"
                }
            }
        },
        {
            "Sid": "AllowInstanceProfileReadActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": "iam:GetInstanceProfile"
        },
        {
            "Sid": "AllowUnscopedInstanceProfileListAction",
            "Effect": "Allow",
            "Resource": "*",
            "Action": "iam:ListInstanceProfiles"
        }
    ],
    "Version": "2012-10-17"
}
EOF

aws iam put-role-policy --role-name "KarpenterControllerRole-${CLUSTER_NAME}" \
    --policy-name "KarpenterControllerPolicy-${CLUSTER_NAME}" \
    --policy-document file://controller-policy.json
```

The result looks like this:

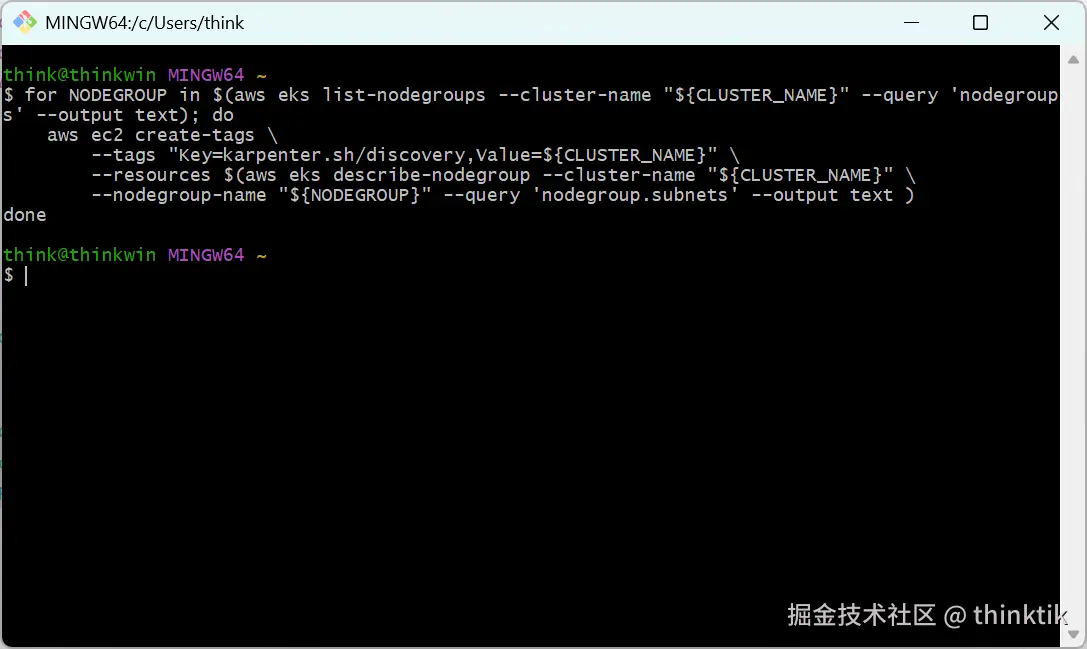
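The controller trust policy and controller policy files are written with an unquoted heredoc delimiter (`<< EOF`), which is why `${AWS_PARTITION}`, `${AWS_ACCOUNT_ID}`, and the other variables are replaced with their values before the JSON reaches the file. The sketch below contrasts this with a quoted delimiter, using a made-up `SAMPLE_NAME` value.

```bash
SAMPLE_NAME="demo-cluster"   # made-up value

# Unquoted delimiter: the shell expands variables inside the heredoc.
expanded="$(cat << EOF
role/KarpenterNodeRole-${SAMPLE_NAME}
EOF
)"

# Quoted delimiter: the text is kept literally, with no expansion.
literal="$(cat << 'EOF'
role/KarpenterNodeRole-${SAMPLE_NAME}
EOF
)"

echo "${expanded}"   # prints role/KarpenterNodeRole-demo-cluster
echo "${literal}"    # prints role/KarpenterNodeRole-${SAMPLE_NAME}
```

If a variable is empty when the heredoc runs, the file is still written, just with a gap in the ARN, so it is worth reading the generated JSON before calling `aws iam`.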

Tag the subnets and security groups used by Karpenter-managed nodes

Tag the subnets of the existing node groups:

```bash
# Tag the subnets of every existing node group
for NODEGROUP in $(aws eks list-nodegroups --cluster-name "${CLUSTER_NAME}" --query 'nodegroups' --output text); do
    aws ec2 create-tags \
        --tags "Key=karpenter.sh/discovery,Value=${CLUSTER_NAME}" \
        --resources $(aws eks describe-nodegroup --cluster-name "${CLUSTER_NAME}" \
        --nodegroup-name "${NODEGROUP}" --query 'nodegroup.subnets' --output text )
done
```

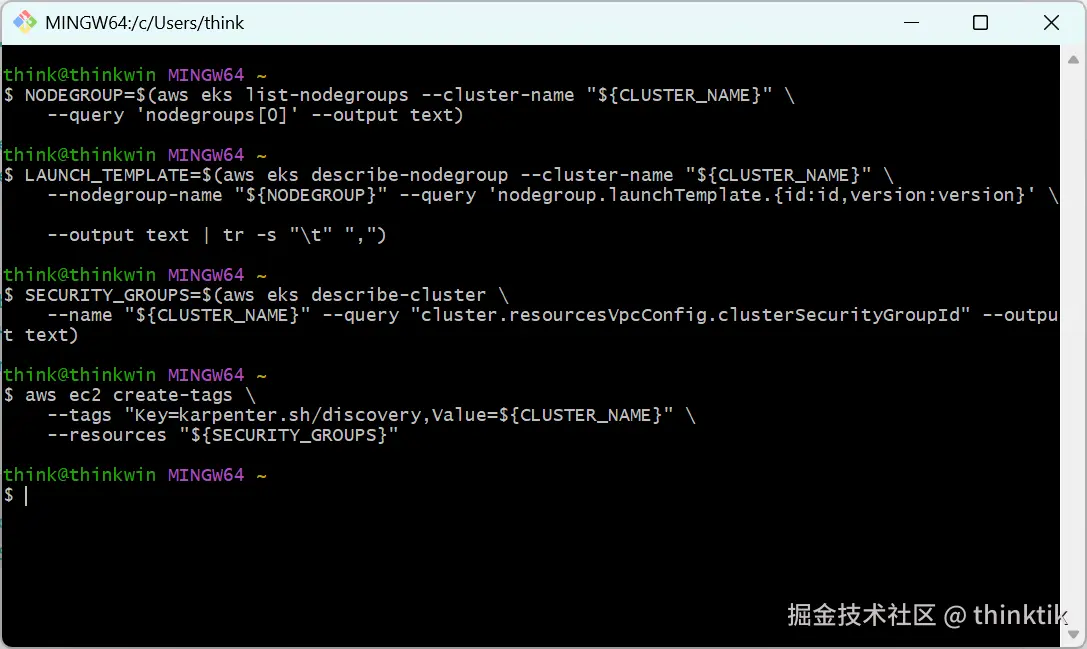
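Karpenter later finds subnets and security groups through the `karpenter.sh/discovery` tag, so it can be useful to confirm which resources carry it. The sketch below builds the EC2 tag filter for that lookup. `discovery_filter` is a hypothetical helper, `demo-cluster` is a made-up fallback name, and the `aws` commands are shown as comments because they need live credentials.

```bash
# Hypothetical helper: build an EC2 --filters value for the discovery tag.
discovery_filter() {
  echo "Name=tag:karpenter.sh/discovery,Values=$1"
}

discovery_filter "${CLUSTER_NAME:-demo-cluster}"

# With credentials configured, the filter can be passed to the AWS CLI:
# aws ec2 describe-subnets --filters "$(discovery_filter "${CLUSTER_NAME}")" \
#   --query 'Subnets[].SubnetId' --output text
# aws ec2 describe-security-groups --filters "$(discovery_filter "${CLUSTER_NAME}")" \
#   --query 'SecurityGroups[].GroupId' --output text
```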

Tag the security groups of the existing node group:

```bash
# Look up the first node group
NODEGROUP=$(aws eks list-nodegroups --cluster-name "${CLUSTER_NAME}" \
    --query 'nodegroups[0]' --output text)

# Look up the node group's EC2 launch template as "id,version"
LAUNCH_TEMPLATE=$(aws eks describe-nodegroup --cluster-name "${CLUSTER_NAME}" \
    --nodegroup-name "${NODEGROUP}" --query 'nodegroup.launchTemplate.{id:id,version:version}' \
    --output text | tr -s "\t" ",")

# Option 1: if your EKS setup uses only the cluster security group, run:
SECURITY_GROUPS=$(aws eks describe-cluster \
    --name "${CLUSTER_NAME}" --query "cluster.resourcesVpcConfig.clusterSecurityGroupId" --output text)

# Option 2: if your setup uses the security groups in the launch template
# of a managed node group, run this instead:
SECURITY_GROUPS="$(aws ec2 describe-launch-template-versions \
    --launch-template-id "${LAUNCH_TEMPLATE%,*}" --versions "${LAUNCH_TEMPLATE#*,}" \
    --query 'LaunchTemplateVersions[0].LaunchTemplateData.[NetworkInterfaces[0].Groups||SecurityGroupIds]' \
    --output text)"

# Tag the security groups
aws ec2 create-tags \
    --tags "Key=karpenter.sh/discovery,Value=${CLUSTER_NAME}" \
    --resources "${SECURITY_GROUPS}"
```

The result looks like this:

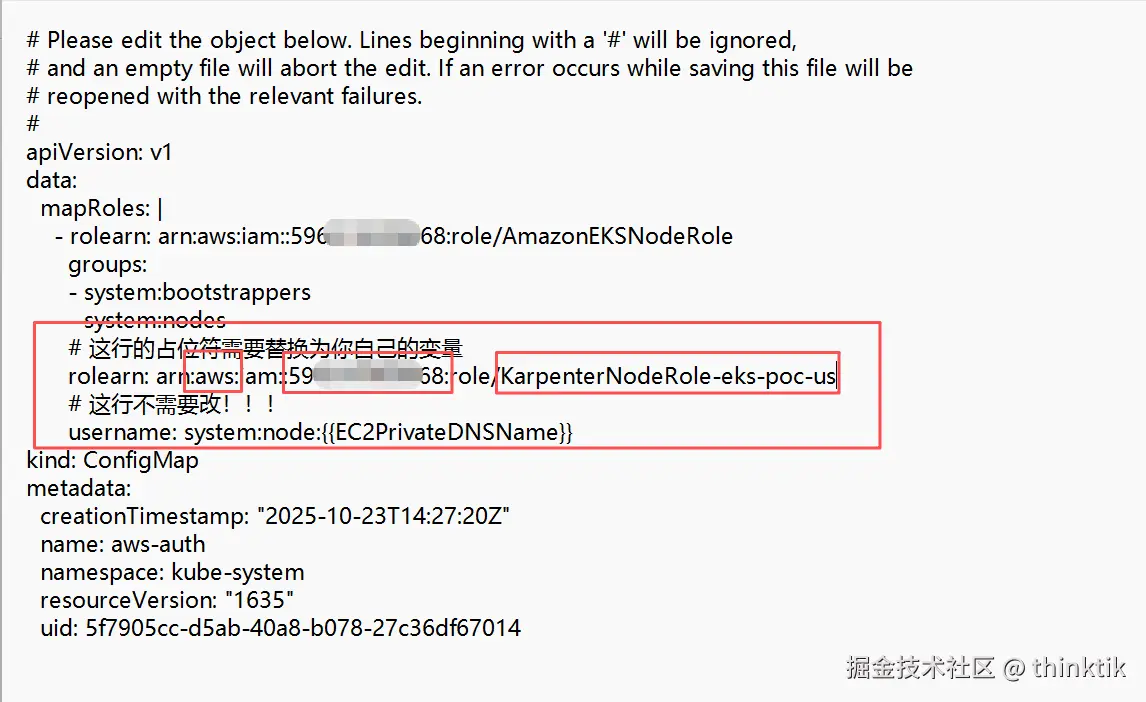
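`LAUNCH_TEMPLATE` is stored as a single `id,version` string and then split again with `${LAUNCH_TEMPLATE%,*}` and `${LAUNCH_TEMPLATE#*,}`. A minimal sketch with a made-up template ID shows the round trip. Here `printf` stands in for the tab-separated text output of `aws eks describe-nodegroup`.

```bash
# Stand-in for the tab-separated "id<TAB>version" text output (made-up ID).
SAMPLE_LT="$(printf 'lt-0123456789abcdef0\t3\n' | tr -s "\t" ",")"

# "%,*" drops the shortest suffix that starts at a comma, leaving the ID.
SAMPLE_LT_ID="${SAMPLE_LT%,*}"
# "#*," drops the shortest prefix that ends at a comma, leaving the version.
SAMPLE_LT_VERSION="${SAMPLE_LT#*,}"

echo "${SAMPLE_LT_ID} ${SAMPLE_LT_VERSION}"   # prints lt-0123456789abcdef0 3
```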

Edit aws-auth

First configure kubeconfig credentials so that the local machine can control the EKS cluster. Replace <region-code> and <your-eks-cluster-name> with your actual values.

```bash
aws eks update-kubeconfig --region <region-code> --name <your-eks-cluster-name>
```

Start editing:

```bash
kubectl edit configmap aws-auth -n kube-system
```

Add an entry based on the following template. AWS_PARTITION, AWS_ACCOUNT_ID, and CLUSTER_NAME are variables, so fill in your own actual values.

```yaml
- groups:
  - system:bootstrappers
  - system:nodes
  ## If you intend to run Windows workloads, the kube-proxy group should be specified.
  # For more information, see https://github.com/aws/karpenter/issues/5099.
  # - eks:kube-proxy-windows
  # Replace the placeholders on this line with your own values
  rolearn: arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}
  # Do not change this line
  username: system:node:{{EC2PrivateDNSName}}
```

Note that AWS_PARTITION, AWS_ACCOUNT_ID, and CLUSTER_NAME must be replaced with the actual values for your account. Otherwise, nodes launched later will not have permission to join the EKS cluster.
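Because `kubectl edit` opens the ConfigMap in an editor, the shell does not expand `${AWS_PARTITION}` and the other placeholders for you. One way to avoid typing mistakes is to print the entry with the values already filled in and paste the result. `render_maproles_entry` is a hypothetical helper, and the account ID and cluster name in the example call are made up.

```bash
# Hypothetical helper: print a mapRoles entry for the Karpenter node role.
# Arguments: partition, account ID, cluster name.
render_maproles_entry() {
  cat << EOF
- groups:
  - system:bootstrappers
  - system:nodes
  rolearn: arn:$1:iam::$2:role/KarpenterNodeRole-$3
  username: system:node:{{EC2PrivateDNSName}}
EOF
}

# Example call with made-up values; in practice pass
# "${AWS_PARTITION}" "${AWS_ACCOUNT_ID}" "${CLUSTER_NAME}".
render_maproles_entry "aws" "111122223333" "demo-cluster"
```

When pasting, indent the entry to match the existing entries under `mapRoles`.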

安装karpenter 1.8.1

设置karpenter版本为1.8.1,一般选择最新的就好.

bash

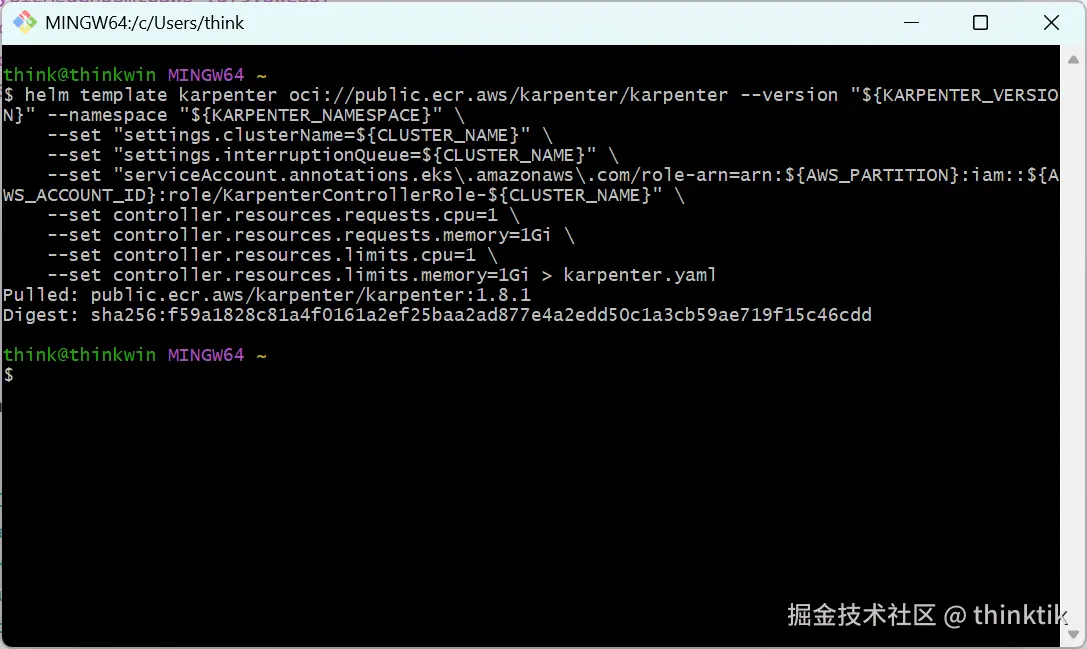

export KARPENTER_VERSION="1.8.1"用helm创建Karpenter deployment yaml模板

```bash
helm template karpenter oci://public.ecr.aws/karpenter/karpenter --version "${KARPENTER_VERSION}" --namespace "${KARPENTER_NAMESPACE}" \
    --set "settings.clusterName=${CLUSTER_NAME}" \
    --set "settings.interruptionQueue=${CLUSTER_NAME}" \
    --set "serviceAccount.annotations.eks\.amazonaws\.com/role-arn=arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterControllerRole-${CLUSTER_NAME}" \
    --set controller.resources.requests.cpu=1 \
    --set controller.resources.requests.memory=1Gi \
    --set controller.resources.limits.cpu=1 \
    --set controller.resources.limits.memory=1Gi > karpenter.yaml
```

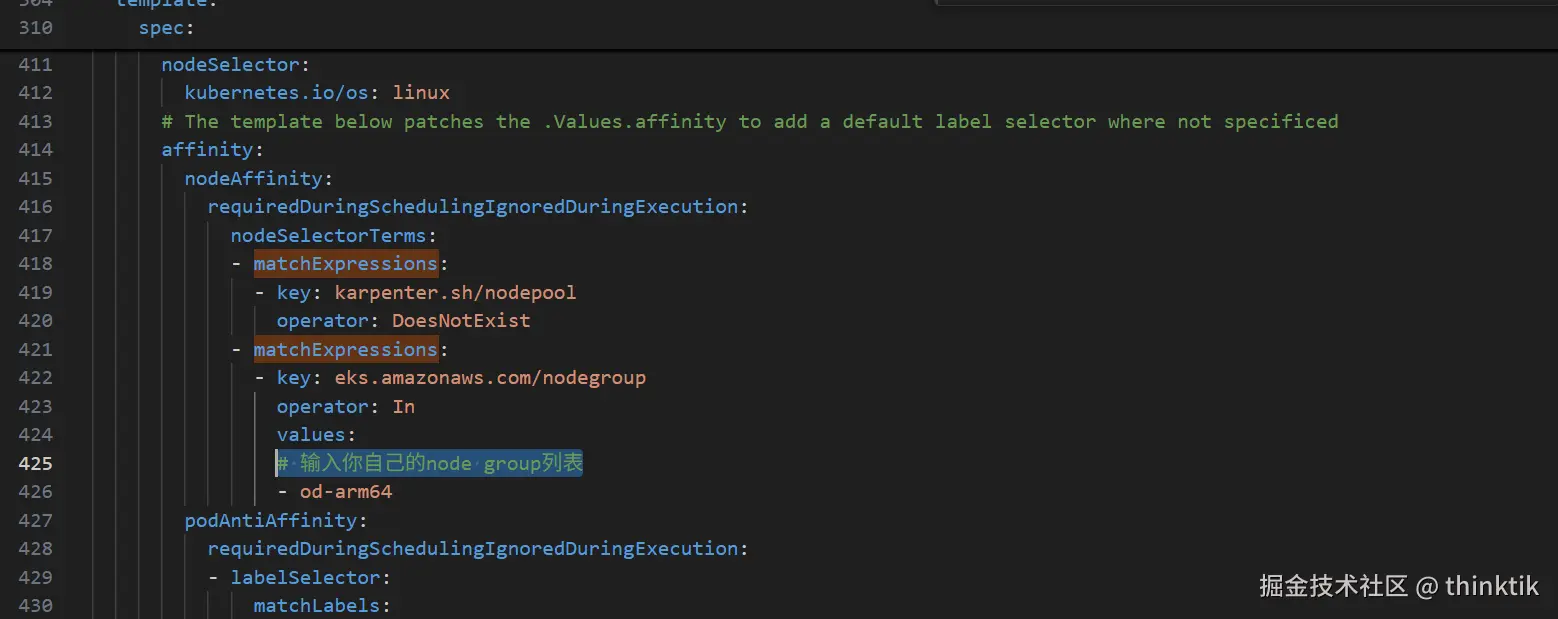

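Edit the generated karpenter.yaml and add the content highlighted by the red box in the screenshot to the Karpenter Deployment's affinity rules, so that the Karpenter controller runs on nodes of an existing node group. Be sure to change the value to the name of your EKS node group.

```yaml
- matchExpressions:
  - key: eks.amazonaws.com/nodegroup
    operator: In
    values:
    # Enter your own list of node groups
    - ${NODEGROUP}
```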

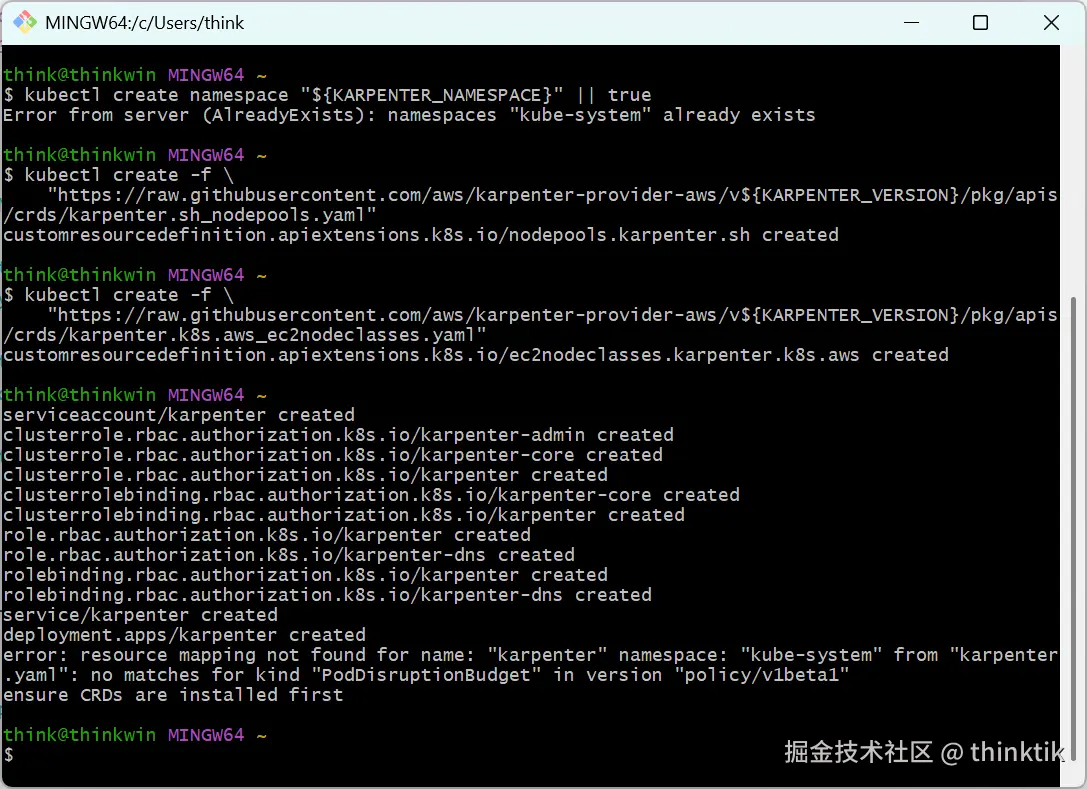

Deploy Karpenter

```bash
kubectl create namespace "${KARPENTER_NAMESPACE}" || true
kubectl create -f \
    "https://raw.githubusercontent.com/aws/karpenter-provider-aws/v${KARPENTER_VERSION}/pkg/apis/crds/karpenter.sh_nodepools.yaml"
kubectl create -f \
    "https://raw.githubusercontent.com/aws/karpenter-provider-aws/v${KARPENTER_VERSION}/pkg/apis/crds/karpenter.k8s.aws_ec2nodeclasses.yaml"
kubectl create -f \
    "https://raw.githubusercontent.com/aws/karpenter-provider-aws/v${KARPENTER_VERSION}/pkg/apis/crds/karpenter.sh_nodeclaims.yaml"
kubectl apply -f karpenter.yaml
```

创建一个默认的karpenter provisioner,注意要将${CLUSTER_NAME}替换为你自己的EKS集群名称

bash

cat <<EOF | envsubst | kubectl apply -f -

apiVersion: karpenter.sh/v1

kind: NodePool

metadata:

name: default

spec:

template:

spec:

requirements:

- key: kubernetes.io/arch

operator: In

values: ["amd64"]

- key: kubernetes.io/os

operator: In

values: ["linux"]

- key: karpenter.sh/capacity-type

operator: In

values: ["spot"]

- key: karpenter.k8s.aws/instance-category

operator: In

values: ["c", "m", "r"]

- key: karpenter.k8s.aws/instance-generation

operator: Gt

values: ["2"]

nodeClassRef:

group: karpenter.k8s.aws

kind: EC2NodeClass

name: default

expireAfter: 720h # 30 * 24h = 720h

limits:

cpu: 1000

disruption:

consolidationPolicy: WhenEmptyOrUnderutilized

consolidateAfter: 1m

---

apiVersion: karpenter.k8s.aws/v1

kind: EC2NodeClass

metadata:

name: default

spec:

role: "KarpenterNodeRole-${CLUSTER_NAME}" # replace with your cluster name

amiSelectorTerms:

- alias: "al2023@latest"

subnetSelectorTerms:

- tags:

karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name

securityGroupSelectorTerms:

- tags:

karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name

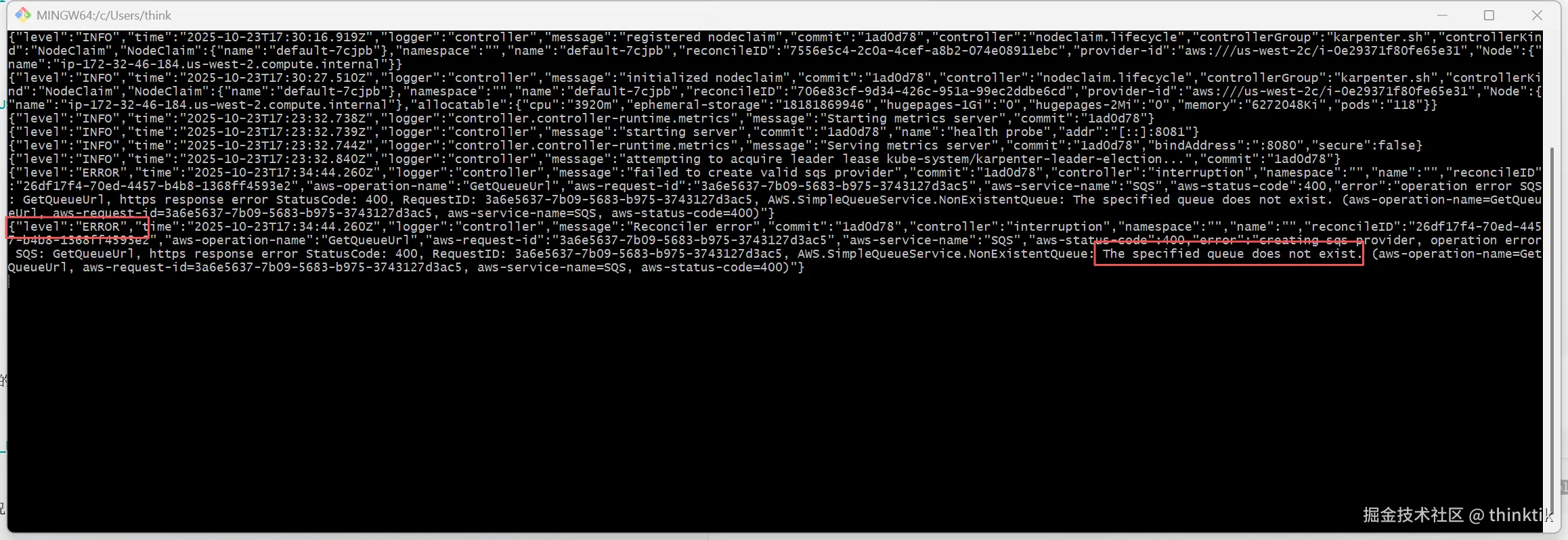

EOF到这里我们按照完成了,可以执行下面的命令查看日志,一般没有错误信息就行

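```bash
kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller
```

If you see an error like "The specified queue does not exist", it is not a serious problem. It appears because we have not yet set up the SQS queue that Karpenter uses to handle interruption and reclamation of EC2 Spot instances.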

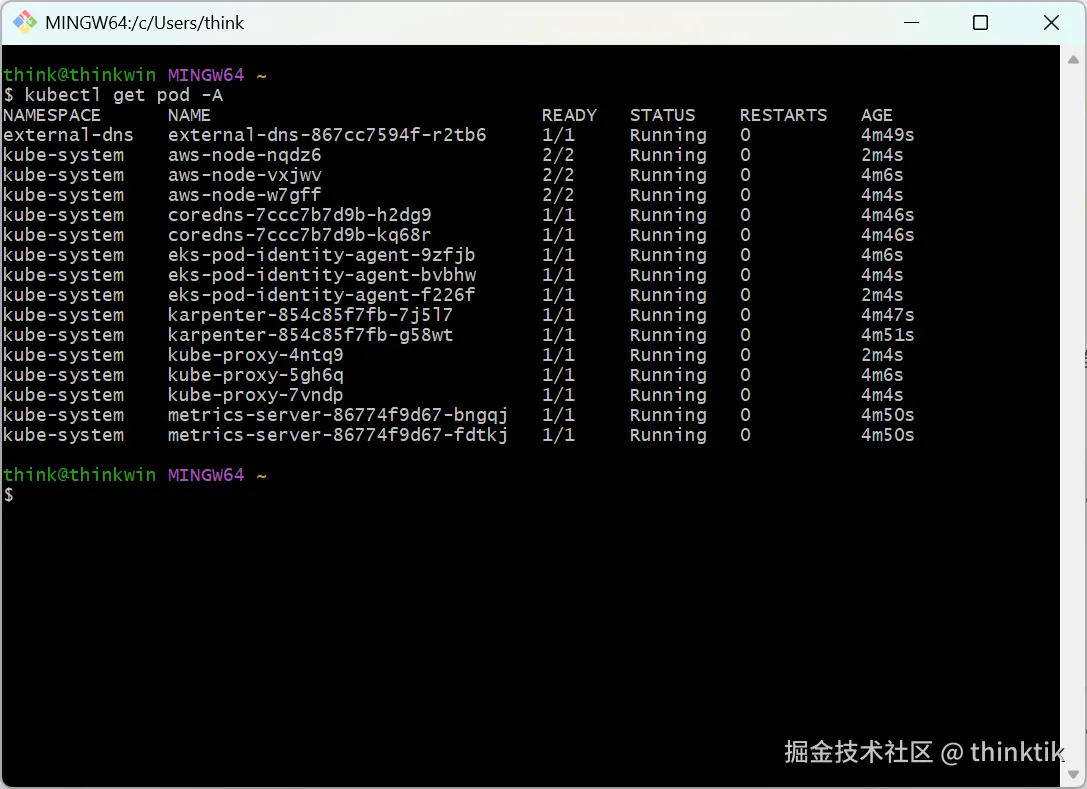

You can also check the status of the Karpenter pods:

```bash
kubectl get pods -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter
```

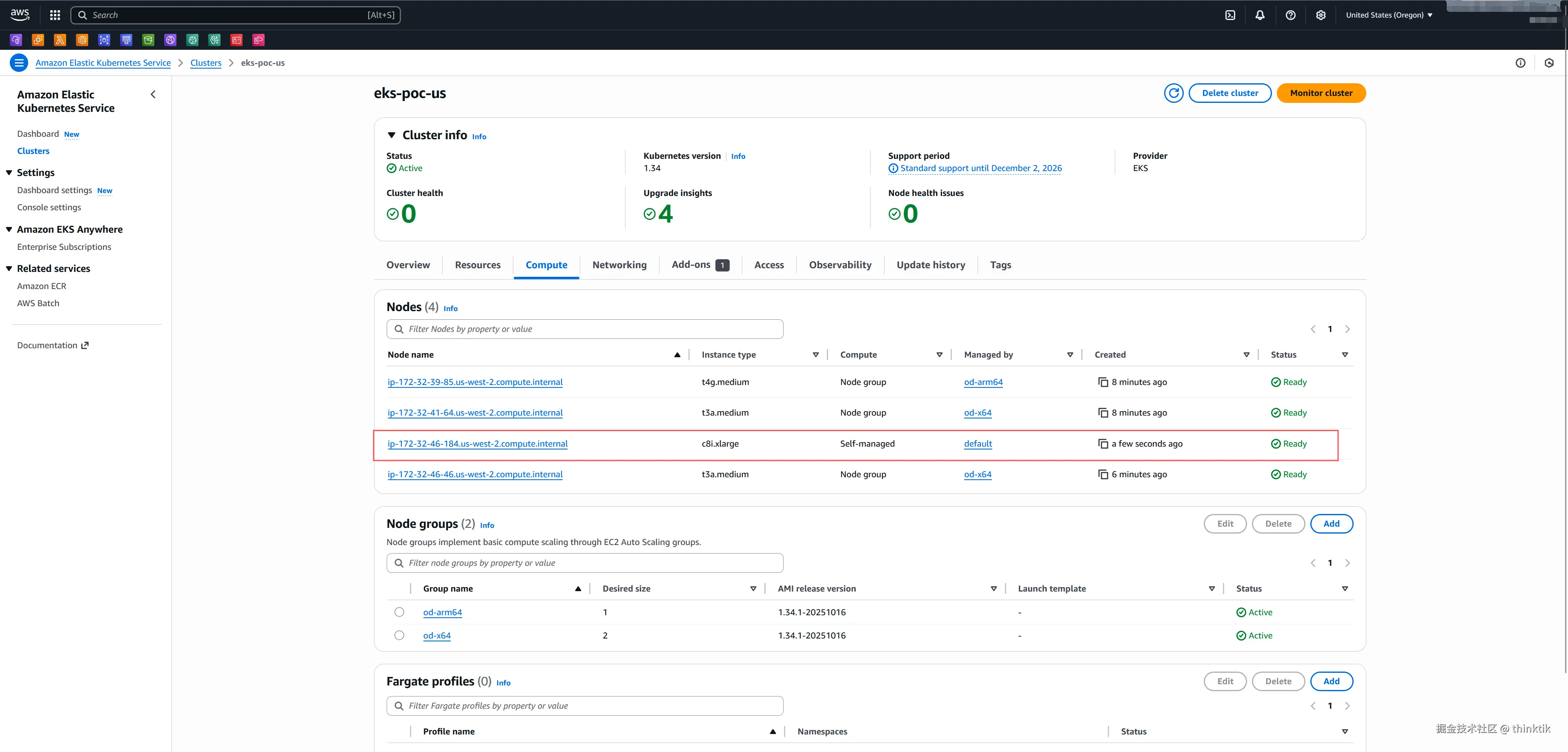

Test Karpenter

We can deploy the following nginx Deployment to trigger Karpenter's automatic scaling of EC2 nodes. The replica count is deliberately set to 100 to force Karpenter to add more EC2 capacity to the EKS cluster.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: eks-sample-linux-deployment
  namespace: default
  labels:
    app: eks-sample-linux-app
spec:
  replicas: 100
  selector:
    matchLabels:
      app: eks-sample-linux-app
  template:
    metadata:
      labels:
        app: eks-sample-linux-app
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/arch
                operator: In
                values:
                - amd64
                - arm64
      containers:
      - name: nginx
        image: public.ecr.aws/nginx/nginx:1.23
        ports:
        - name: http
          containerPort: 80
        imagePullPolicy: IfNotPresent
      nodeSelector:
        kubernetes.io/os: linux
```

The result: Karpenter detects that the existing EC2 capacity is insufficient and immediately launches an EC2 instance to satisfy the resource demand of the nginx pods.

After we remove this Deployment, Karpenter automatically terminates the EC2 instance after a period of time. This demonstrates that EKS can scale EC2 capacity out and in automatically according to load or resource demand. Combined with HPA, both pods and EC2 nodes become elastic. For an HPA test demo on EKS, see "Scale pod deployments with Horizontal Pod Autoscaler".
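To watch the scale-out and scale-in described above, the Deployment can be resized from the command line while listing the nodes Karpenter created. The sketch below wraps the commands in a dry-run helper so that they are printed by default instead of executed. `run`, `scale_sample`, and `list_karpenter_nodes` are hypothetical names, and the `karpenter.sh/nodepool=default` selector assumes the NodePool named `default` from this post.

```bash
DEPLOYMENT="eks-sample-linux-deployment"

# Print the command when DRY_RUN=1 (the default here); execute it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Resize the sample Deployment to the given replica count.
scale_sample() {
  run kubectl scale deployment "${DEPLOYMENT}" --namespace default --replicas="$1"
}

# List nodes that Karpenter launched for the default NodePool.
list_karpenter_nodes() {
  run kubectl get nodes -l karpenter.sh/nodepool=default
}

scale_sample 100      # scale out: new nodes should appear after a short delay
list_karpenter_nodes
scale_sample 0        # scale in: nodes are removed once consolidation runs
```

Set `DRY_RUN=0` to run the commands against the cluster instead of printing them.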