I. Introduction to Kubernetes

Reference: https://developer.aliyun.com/article/1635071

1.1 What Is Kubernetes

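Kubernetes (K8s for short) is a container orchestration and management platform open-sourced by Google. Its core job is to automate the deployment, scaling, and operation of containerized applications. It grew out of Google's 15 years of experience running containers at scale (for example, the Borg system), combined with best practices from the community, and it groups scattered containers into logical units for efficient management and better resource utilization.

Put simply, K8s acts like an "operating system for containers": whether you run 10 containers or 1,000, it schedules them, monitors their health, and recovers from failures automatically, so developers do not have to manage container details by hand.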

1.2 Core Value

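- Automated operations: there is no need to start or stop containers by hand; K8s handles restarts, scaling, and updates automatically, which reduces manual work.

- Scalability: from a single test machine to a globally distributed cluster, K8s scales with the business without a matching increase in operational burden (the "Planet Scale" feature).

- Portability across environments: it runs in on-premises data centers, public clouds (AWS, Azure, Alibaba Cloud), and hybrid clouds, so containerized workloads can move between environments with little change (the "Run K8s Anywhere" feature).

- Stability and reliability: built-in self-healing restarts crashed containers and reschedules workloads away from failed nodes, keeping services highly available.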

1.3 Core Features

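At its core, Kubernetes is a cluster of servers. It runs specific programs on every node in the cluster to manage the containers on that node. The goal is to automate resource management, and it mainly provides the following features: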

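- Self-healing: when a container crashes, a replacement is started quickly, typically within seconds.

- Elastic scaling: the number of containers running in the cluster can be adjusted automatically as needed.

- Service discovery: a service can automatically find the services it depends on.

- Load balancing: if a service runs multiple containers, requests are automatically load-balanced across them.

- Version rollback: if a newly released version turns out to have problems, you can immediately roll back to the previous version.

- Storage orchestration: storage volumes can be created automatically according to each container's needs.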
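Self-healing and elastic scaling both come down to the same idea: compare the desired state with the actual state and act on the difference. The sketch below is a toy illustration of that comparison, not how Kubernetes is implemented; the function name `reconcile` and its two arguments are hypothetical.

```shell
#!/usr/bin/env bash
# Toy reconciliation step (illustrative only, not Kubernetes code):
# compare desired and actual replica counts and print the action
# a controller would take to close the gap.
reconcile() {
  local desired=$1 actual=$2
  if (( actual < desired )); then
    echo "start $(( desired - actual ))"
  elif (( actual > desired )); then
    echo "stop $(( actual - desired ))"
  else
    echo "noop"
  fi
}

# Example: three replicas are wanted, but one container has crashed.
reconcile 3 2   # prints: start 1
```

A real controller runs this kind of comparison continuously, which is why a deleted or crashed container reappears without manual action.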

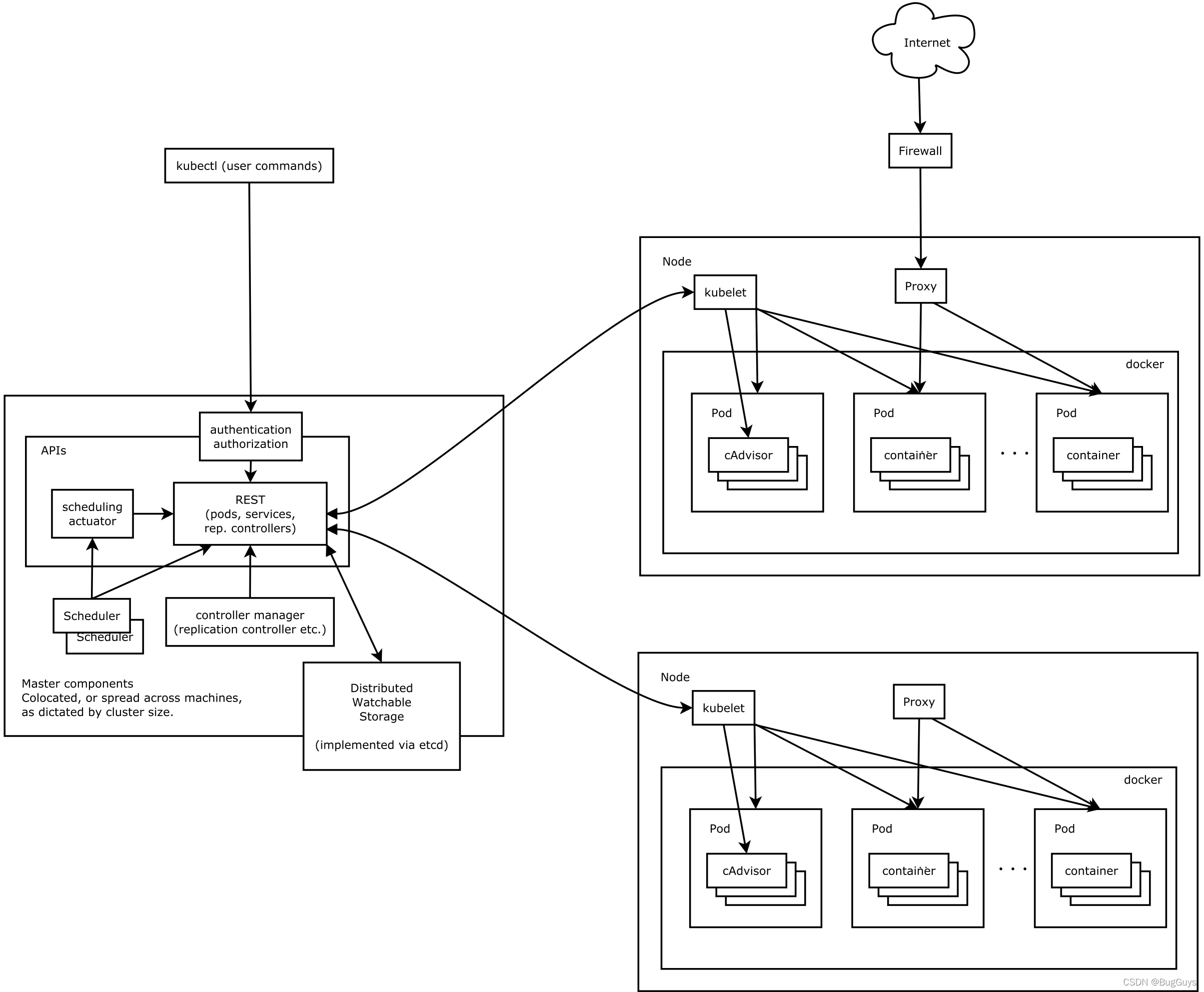

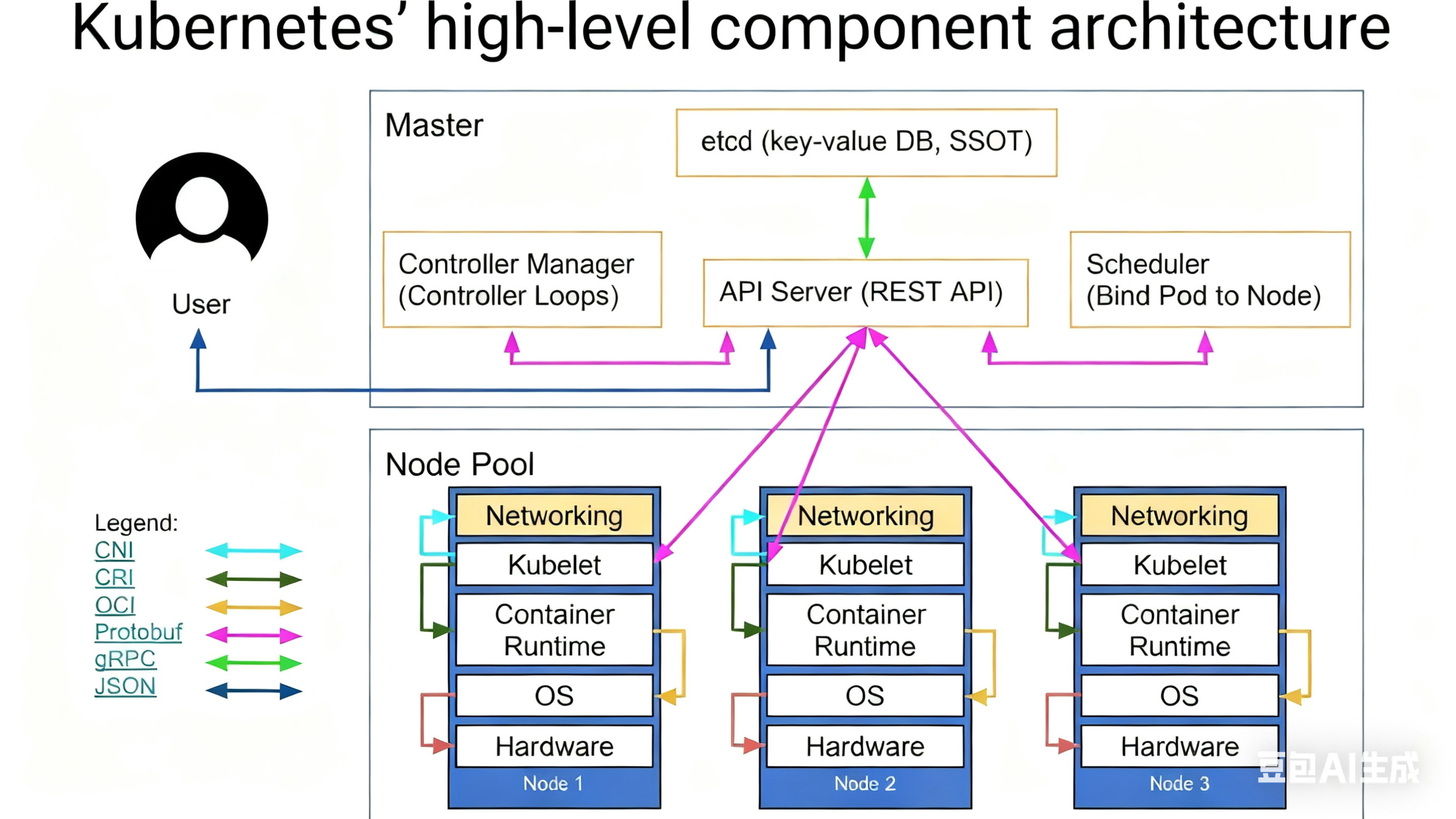

1.4 K8s Architecture

A Kubernetes cluster consists mainly of control nodes (master) and worker nodes (node), and each kind of node has a different set of components installed.

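1. master: the cluster's control plane, responsible for cluster-wide decisions

- ApiServer: the single entry point for operations on resources; it receives user commands and provides authentication, authorization, API registration, and discovery.

- Scheduler: handles cluster resource scheduling, placing Pods on suitable nodes according to the configured scheduling policy.

- ControllerManager: maintains the cluster's state, for example deployment orchestration, failure detection, automatic scaling, and rolling updates.

- Etcd: stores the information of all resource objects in the cluster.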

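2. node: the cluster's data plane, which provides the runtime environment for containers

- kubelet: maintains the container lifecycle and also manages volumes (CSI) and networking (CNI).

- Container runtime: handles image management and actually runs Pods and containers (CRI).

- kube-proxy: provides in-cluster service discovery and load balancing for Services.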

1.5 How K8s Components Interact

Consider what happens when we run a web service:

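1. After the Kubernetes environment starts, the master and the nodes record their own information in the etcd database.

2. The request to install the web service is first sent to the apiServer component on the master node.

3. The apiServer hands the placement decision to the scheduler, which reads the information about each node from etcd, selects a node according to its scheduling algorithm, and reports the result back to the apiServer.

4. The apiServer then has the controller-manager arrange for the chosen node to install the web service.

5. Once the kubelet on that node receives the instruction, it notifies docker, and docker starts a Pod for the web service.

6. To access the web service, requests go through kube-proxy, which proxies access to the Pod.

This is a simplified picture: in practice the scheduler, controller-manager, and kubelet each watch the apiServer for changes rather than being called by it, and only the apiServer reads and writes etcd directly.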
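The scheduling step in the flow above ("select a node according to an algorithm") can be pictured with a toy example. The sketch below is an assumption-laden illustration, not the real scheduler: it picks the node with the most free CPU that still fits the request. The function name `pick_node` and the "node free_millicores" input format are hypothetical.

```shell
#!/usr/bin/env bash
# Toy node selection (illustrative only). Reads "node free_cpu_millicores"
# lines on stdin and prints the node with the most free CPU that can still
# fit the requested amount. Ties go to the first node seen.
pick_node() {
  local request=$1
  awk -v req="$request" '
    BEGIN { best = -1 }
    ($2 + 0) >= (req + 0) && ($2 + 0) > best { best = $2 + 0; node = $1 }
    END { if (node != "") print node; else exit 1 }
  '
}
```

For example, `printf 'node1 500\nnode2 1500\n' | pick_node 1000` selects `node2`. The real scheduler first filters out nodes that cannot run the Pod and then scores the remainder on many criteria, so this only conveys the shape of the decision.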

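Three related interface standards are worth knowing:

- CRI: standardizes how K8s (the kubelet) talks to a container runtime such as containerd, so that K8s can manage the container lifecycle (creation, start, stop, and so on) in the same way across runtimes.

- CNI: standardizes the container network configuration interface, so that network plugins such as flannel and Calico can assign Pod IPs and configure routes in a uniform way, enabling Pod-to-Pod and internal-to-external communication.

- OCI: defines common standards for container images and runtimes (image format, container runtime behavior), so that images and runtimes from different tools such as Docker and runc remain compatible.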

1.6 Common K8s Terms

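Master: the cluster control node; every cluster needs at least one master node to manage the cluster.

Node: a workload node; the master assigns containers to these worker nodes, and the container runtime on each node then runs them.

Pod: the smallest deployable unit in Kubernetes; containers always run inside a Pod, and a Pod can hold one or more containers.

Controller: manages Pods, for example starting Pods, stopping Pods, and scaling the number of Pods.

Service: a unified entry point through which Pods are exposed; a Service fronts multiple Pods of the same kind.

Label: a tag used to classify Pods; Pods of the same kind carry the same labels.

NameSpace: a namespace, used to isolate the environments in which Pods run.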

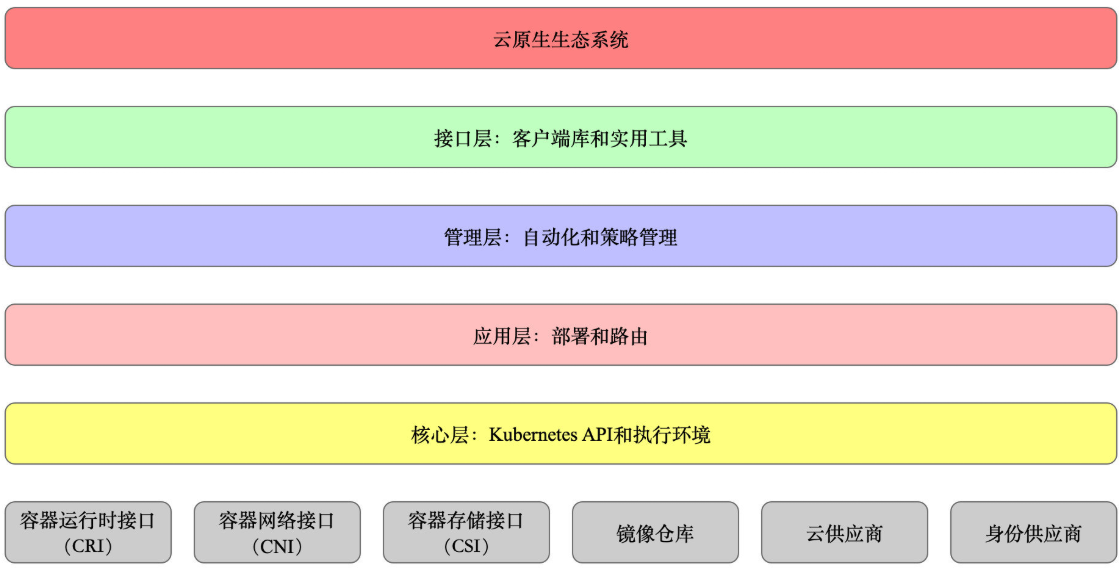

1.7 K8s Layered Architecture

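- Core layer: the most essential Kubernetes functionality; it exposes APIs on which higher-level applications are built and provides a pluggable application execution environment internally.

- Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, and so on) and routing (service discovery, DNS resolution, and so on).

- Management layer: system metrics (for infrastructure, containers, and the network), automation (automatic scaling, dynamic provisioning, and so on), and policy management (RBAC, Quota, PSP, NetworkPolicy, and so on; note that PSP was removed in Kubernetes 1.25).

- Interface layer: the kubectl command-line tool, client SDKs, and cluster federation.

- Ecosystem: the large ecosystem for container cluster management and scheduling that sits above the interface layer, which falls into two categories:

  - Outside Kubernetes: logging, monitoring, configuration management, CI, CD, workflow, FaaS, OTS applications, ChatOps, and so on.

  - Inside Kubernetes: CRI, CNI, CSI, image registries, cloud providers, and the configuration and management of the cluster itself.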

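II. Setting Up a K8s Cluster

1 Deployment Approach

Put simply, building a K8s cluster is like building with blocks: first lay the foundation (environment initialization), then prepare the box that holds the blocks (the Docker container engine), build a warehouse for the parts (the Harbor registry), clear away whatever makes the structure unstable (disable swap), install the tools that deliver the parts (the K8s components and the cri-dockerd plugin), and finally assemble the main structure (initialize the cluster) and wire it up (the network plugin) so that every block can reach every other.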

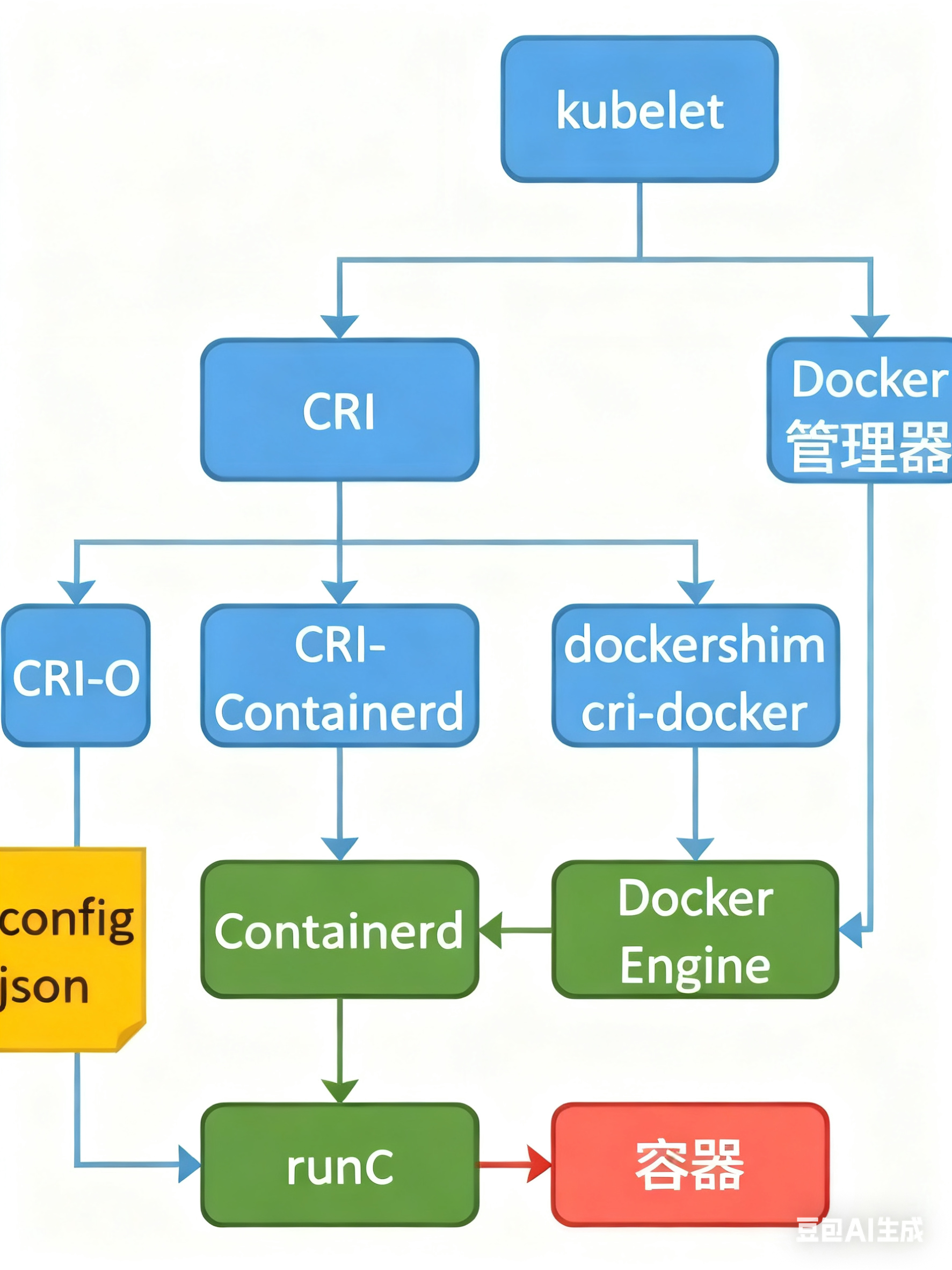

1.1 How K8s Manages Containers

There are three ways to create a K8s cluster, one per container runtime:

containerd

The runtime K8s uses by default when creating a cluster.

docker

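Docker has the highest adoption. Although K8s removed the kubelet's built-in Docker support (dockershim) in version 1.24, a cluster can still be created on Docker with the help of cri-dockerd.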

cri-o

CRI-O is the most direct way for Kubernetes to create containers; creating a cluster this way requires the cri-o plugin.

Note: the docker and cri-o approaches both require setting the kubelet's startup parameters.

2 Detailed Steps and Command Walkthrough

2.1 K8s Deployment Environment

Kubernetes website (Chinese): https://kubernetes.io/zh-cn/

| Hostname | IP | Role |
|---|---|---|
| master | 192.168.2.70 | master, K8s cluster control node |
| harbor | 192.168.2.71 | Harbor registry |
| node1 | 192.168.2.72 | worker, K8s cluster worker node |
| node2 | 192.168.2.73 | worker, K8s cluster worker node |

- Disable SELinux and the firewall on all nodes

- Synchronize time and set up name resolution on all nodes

- Install docker-ce on all nodes

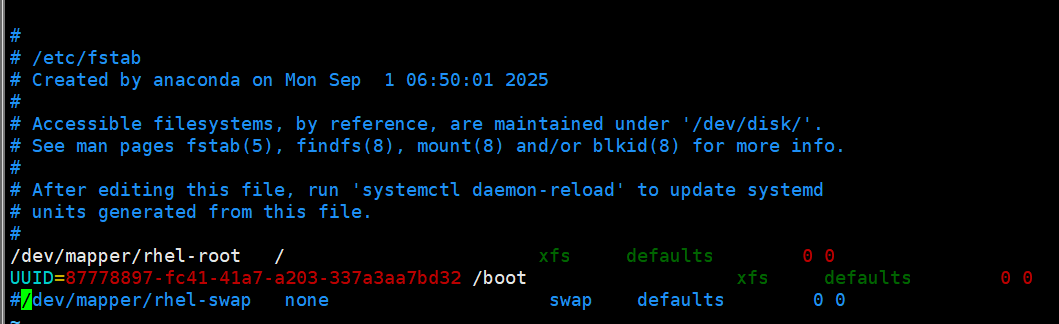

- Disable swap on all nodes, and remember to comment out its entry in /etc/fstab
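Name resolution is the prerequisite that is easiest to get wrong across four machines. As a minimal sketch (the helper name `missing_hosts` is hypothetical, and it only inspects a hosts file, not DNS), the function below prints every required hostname that has no entry in the given file.

```shell
#!/usr/bin/env bash
# Prints each required hostname that has no entry in the given hosts file.
# Usage: missing_hosts FILE NAME...
missing_hosts() {
  local hosts_file=$1; shift
  local name
  for name in "$@"; do
    # Skip comment lines, then look for the name in any column after the address.
    awk -v n="$name" '
      /^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$hosts_file" || echo "$name"
  done
}
```

Running `missing_hosts /etc/hosts master harbor rch.hjn.com node1 node2` on each node should print nothing once the hosts file from the next section is in place.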

2.2 Environment Initialization (required on all nodes)

2.2.1 Basic Configuration (all nodes)

bash

# Map hostnames to IP addresses

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.70 master

192.168.2.71 harbor rch.hjn.com

192.168.2.72 node1

192.168.2.73 node2

# Disable SELinux and the firewall

[root@master ~]# getenforce

Disabled # the output should be Disabled; otherwise run setenforce 0 to turn it off temporarily, then edit /etc/selinux/config to disable it permanently

[root@master ~]# systemctl status firewalld.service

○ firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; preset: enabled)

Active: inactive (dead) # the firewall should be stopped

Docs: man:firewalld(1)

# Synchronize time

[root@master ~]# cat /etc/chrony.conf

server ntp.aliyun.com iburst

stratumweight 0

driftfile /var/lib/chrony/drift

rtcsync

makestep 10 3

bindcmdaddress 127.0.0.1

bindcmdaddress ::1

keyfile /etc/chrony.keys

commandkey 1

generatecommandkey

logchange 0.5

logdir /var/log/chrony

[root@master ~]# chronyc sources -v

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current best, '+' = combined, '-' = not combined,

| / 'x' = may be in error, '~' = too variable, '?' = unusable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 203.107.6.88 2 9 377 14 +59us[ +139us] +/- 28ms

2.2.2 Install Docker (all nodes)

Docker is the container engine that K8s uses here to run containers. The Alibaba Cloud mirror is configured to speed up downloads.

bash

[root@master ~]# cd /etc/yum.repos.d/

[root@master yum.repos.d]# cat docker.repo # yum repository definition for Docker

# Alibaba Cloud mirror of docker-ce; gpgcheck = 0 skips signature verification (faster, but only advisable in a lab)

[docker]

name = docker

baseurl = https://mirrors.aliyun.com/docker-ce/linux/rhel/9.3/x86_64/stable/

gpgcheck = 0

[root@master yum.repos.d]# yum install docker-ce -y # install Docker

[root@master yum.repos.d]# systemctl enable --now docker # start Docker now and enable it at boot

[root@master yum.repos.d]# systemctl status docker # check the status

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; preset: disabled)

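Active: active (running) since Thu 2025-10-23 15:45:30 CST; 46min ago

2.3 Set Up the Harbor Private Registry (harbor node only)

2.3.1 Generate an SSL Certificate (to encrypt registry traffic)

Harbor should be served over HTTPS; the certificate encrypts traffic in transit and protects images from tampering.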

bash

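[root@harbor ~]# mkdir /data/certs/ -p # create a directory for the certificate files

# Generate a private key and a self-signed certificate valid for one year;
# subjectAltName binds the certificate to the registry's domain name.

[root@harbor ~]# openssl req -newkey rsa:4096 \
-nodes -sha256 -keyout /data/certs/rch.hjn.key \
-addext "subjectAltName = DNS:rch.hjn.com" \
-x509 -days 365 -out /data/certs/rch.hjn.crt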

[root@harbor ~]# tree /data/certs/

/data/certs/

├── rch.hjn.crt

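└── rch.hjn.key

2.3.2 Install and Configure Harbor

Harbor is a private image registry that stores the images K8s needs, so they do not have to be downloaded from overseas every time.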

bash

[root@harbor opt]# ll

total 721484

drwx--x--x 4 root root 28 Oct 20 21:27 containerd

-rw-r--r-- 1 root root 738797440 Oct 20 21:29 harbor-offline-installer-v2.5.4.tgz

[root@harbor opt]# tar xzf harbor-offline-installer-v2.5.4.tgz

[root@harbor opt]# cd harbor/

[root@harbor harbor]# cp harbor.yml.tmpl harbor.yml

[root@harbor harbor]# ll

total 48

-rw-r--r-- 1 root root 3639 Aug 28 2022 common.sh

-rw-r--r-- 1 root root 9906 Oct 20 21:32 harbor.yml

-rw-r--r-- 1 root root 9917 Aug 28 2022 harbor.yml.tmpl

-rwxr-xr-x 1 root root 2622 Aug 28 2022 install.sh

-rw-r--r-- 1 root root 11347 Aug 28 2022 LICENSE

-rwxr-xr-x 1 root root 1881 Aug 28 2022 prepare

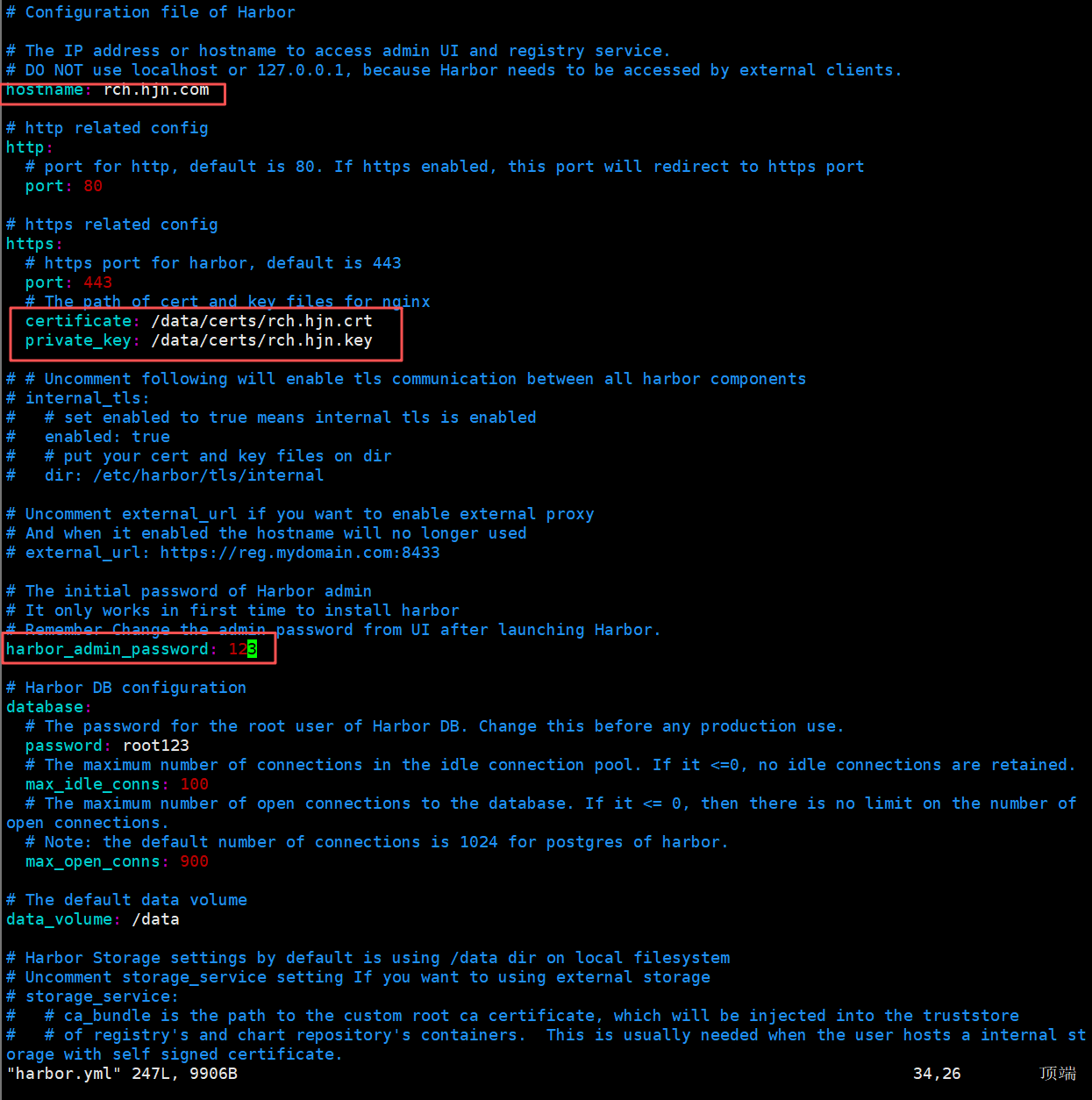

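[root@harbor harbor]# vim harbor.yml

hostname: rch.hjn.com # the registry's domain name (must match the certificate generated earlier)

certificate: /data/certs/rch.hjn.crt # path to the certificate generated earlier

private_key: /data/certs/rch.hjn.key # path to the private key generated earlier

harbor_admin_password: 123 # admin password for the web UI; choose your own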

bash

[root@harbor harbor]# ./install.sh --with-chartmuseum # install Harbor with the chart repository (ChartMuseum) enabled

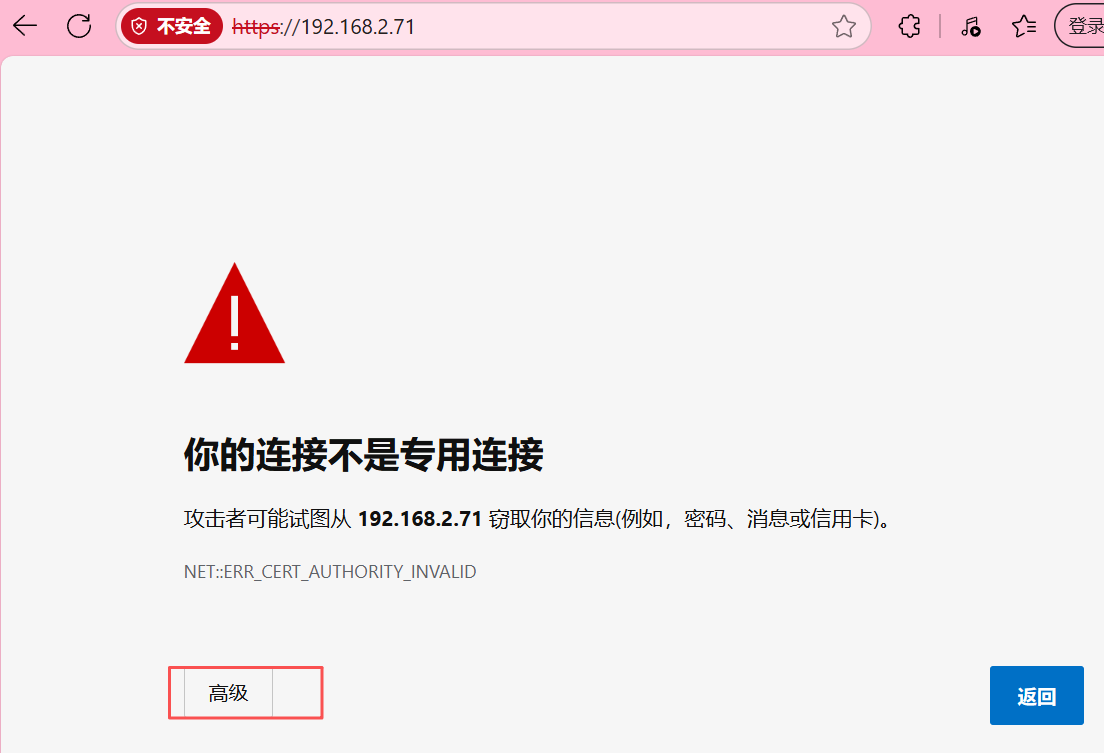

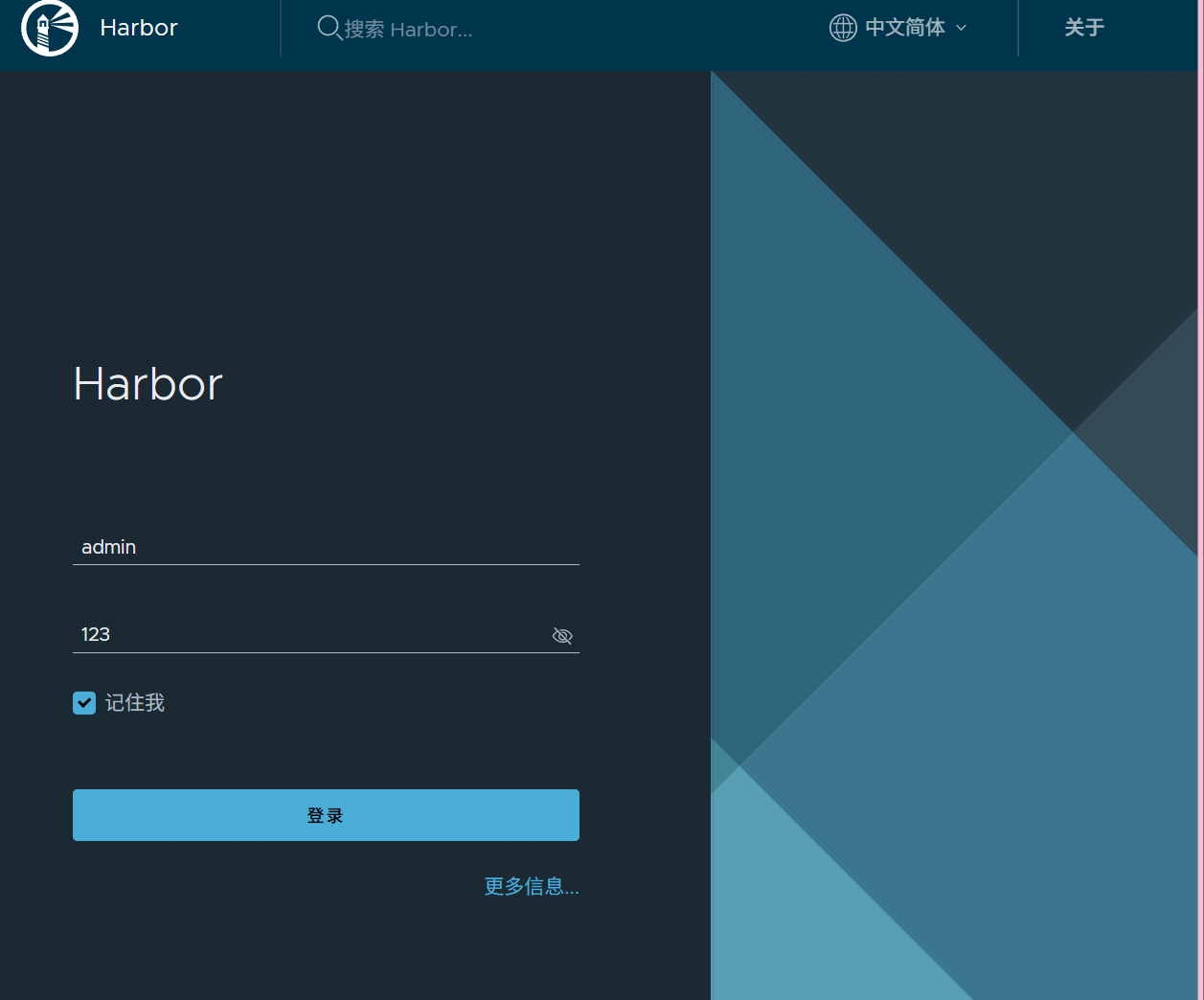

[root@harbor harbor]# docker compose up -d # start the Harbor services

The Harbor registry can now be opened in a browser.

Note: by default, images are pulled from Harbor's library project.

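2.4 Disable Swap (master and worker nodes)

K8s expects swap to be disabled; with swap on, container memory allocation becomes unpredictable (containers should use physical memory first).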
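The permanent part of this step is commenting out the swap entry in /etc/fstab. As a minimal sketch, assuming GNU sed and an fstab whose swap entries have `swap` as a whitespace-separated field, the hypothetical helper below does that edit non-interactively and keeps a backup.

```shell
#!/usr/bin/env bash
# Comments out every active swap entry in an fstab-style file, in place.
# A backup of the original is kept next to it with a .bak suffix.
# Lines that are already comments are left untouched, so reruns are safe.
comment_out_swap() {
  local fstab=$1
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/# \1/' "$fstab"
}
```

A cautious way to use it is to try it on a copy first, for example `cp /etc/fstab /tmp/fstab && comment_out_swap /tmp/fstab && diff /etc/fstab /tmp/fstab`, and only then apply it to the real file. Swap that is already active still has to be turned off separately, for example with `swapoff -a`.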

bash

[root@master ~]# vim /etc/fstab # comment out the swap line (permanent)

bash

[root@master ~]# systemctl disable --now swap.target # stop the swap target immediately

[root@master ~]# systemctl status swap.target

○ swap.target - Swaps

Loaded: loaded (/usr/lib/systemd/system/swap.target; static)

Active: inactive (dead) since Thu 2025-10-23 17:00:36 CST; 25s ago

Docs: man:systemd.special(7)

Oct 23 17:00:36 master systemd[1]: Stopped target Swaps.

[root@master ~]# systemctl mask swap.target

Created symlink /etc/systemd/system/swap.target → /dev/null.

# The swap partition is still active

[root@master ~]# swapon -s

Filename Type Size Used Priority

/dev/dm-1 partition 4194300 0 -2

[root@master ~]# swapoff /dev/dm-1 # turn the swap partition off for now (if it is still active)

[root@master ~]# swapon -s

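[root@master ~]# reboot # reboot to confirm the change persists

# No output from swapon -s means swap is fully disabled.

[root@master ~]# swapon -s # verify (no output means success)

2.5 Configure Docker to Use Harbor (master and worker nodes)

Make Docker trust the private Harbor registry and pull from Harbor first, which is faster and safer.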
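The mirror setting lives in Docker's daemon.json, which must be strict JSON and therefore cannot contain `#` comments. A minimal sketch of generating the file from a script follows; the helper name `write_daemon_json` is hypothetical, and it overwrites any existing daemon.json in the target directory.

```shell
#!/usr/bin/env bash
# Writes a minimal daemon.json that points Docker at a registry mirror.
# JSON has no comment syntax, so explanations stay out of the file itself.
# Usage: write_daemon_json DIR MIRROR_URL   (overwrites DIR/daemon.json)
write_daemon_json() {
  local dir=$1 mirror=$2
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

For the setup in this article that would be `write_daemon_json /etc/docker https://rch.hjn.com`, followed by a restart of the Docker service so the setting takes effect.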

bash

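[root@master ~]# vim /etc/docker/daemon.json # configure Docker's registry mirror

# registry-mirrors makes Docker try Harbor first; JSON has no comment syntax, so keep notes like this out of the file

[root@master ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://rch.hjn.com"]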

}

[root@master ~]# mkdir /etc/docker/certs.d/rch.hjn.com/ -p

# Copy the CA certificate from the harbor host to each node

[root@harbor ~]# for i in 70 72 73

> do

> scp /data/certs/rch.hjn.crt root@192.168.2.$i:/etc/docker/certs.d/rch.hjn.com/ca.crt

> done

[root@master ~]# ll /etc/docker/certs.d/rch.hjn.com/

total 4

-rw-r--r-- 1 root root 2098 Oct 23 17:20 ca.crt

[root@master ~]# systemctl enable --now docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@master ~]# docker info

Client: Docker Engine - Community

Version: 28.5.1

Context: default

Debug Mode: false

Plugins:

buildx: Docker Buildx (Docker Inc.)

Version: v0.29.1

Path: /usr/libexec/docker/cli-plugins/docker-buildx

compose: Docker Compose (Docker Inc.)

Version: v2.40.1

Path: /usr/libexec/docker/cli-plugins/docker-compose

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 28.5.1

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 2

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog

CDI spec directories:

/etc/cdi

/var/run/cdi

Swarm: inactive

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: b98a3aace656320842a23f4a392a33f46af97866

runc version: v1.3.0-0-g4ca628d1

init version: de40ad0

Security Options:

seccomp

Profile: builtin

cgroupns

Kernel Version: 5.14.0-362.8.1.el9_3.x86_64

Operating System: Red Hat Enterprise Linux 9.3 (Plow)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.782GiB

Name: master

ID: ad4717ab-6504-41db-af2c-2a5e4df35a87

Docker Root Dir: /var/lib/docker

Debug Mode: false

Experimental: false

Insecure Registries:

::1/128

127.0.0.0/8

Registry Mirrors: # success if https://rch.hjn.com is listed here

https://rch.hjn.com/

Live Restore Enabled: false

2.6 Install the K8s Components (all nodes)

Install the core K8s components: kubelet is the agent that must run on every node, kubeadm initializes the cluster, and kubectl operates it.

bash

[root@master ~]# vim /etc/yum.repos.d/k8s.repo # yum repository for K8s (Alibaba Cloud mirror)

[k8s]

name=k8s

baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm

gpgcheck=0

# On master, install all three tools (kubelet is the node agent, kubeadm the deployment tool, kubectl the command-line client)

[root@master ~]# yum install kubelet-1.30.0 kubeadm-1.30.0 kubectl-1.30.0 -y

# Worker nodes only need kubelet and kubeadm (kubectl is not required)

[root@node1 ~]# yum install kubelet-1.30.0 kubeadm-1.30.0 -y

2.7 Enable kubectl Command Completion

bash

[root@master ~]# source <(kubectl completion bash)

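[root@master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

2.8 Install cri-dockerd on All Nodes

K8s removed dockershim in version 1.24. cri-dockerd acts as the translator between Docker and K8s, letting K8s control Docker containers, so it must be installed before K8s can use Docker.

Download: https://github.com/Mirantis/cri-dockerd

bash

# Here I upload RPM packages that I already had locally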

[root@master ~]# ll

-rw-r--r-- 1 root root 11183248 Oct 23 17:27 cri-dockerd-0.3.14-3.el8.x86_64.rpm

-rw-r--r-- 1 root root 581815296 Oct 23 17:29 k8s-1.30.tar

-rw-r--r-- 1 root root 70188 Oct 23 17:27 libcgroup-0.41-19.el8.x86_64.rpm

[root@master ~]# yum install *.rpm -y

Installed:

cri-dockerd-3:0.3.14-3.el8.x86_64 libcgroup-0.41-19.el8.x86_64

bash

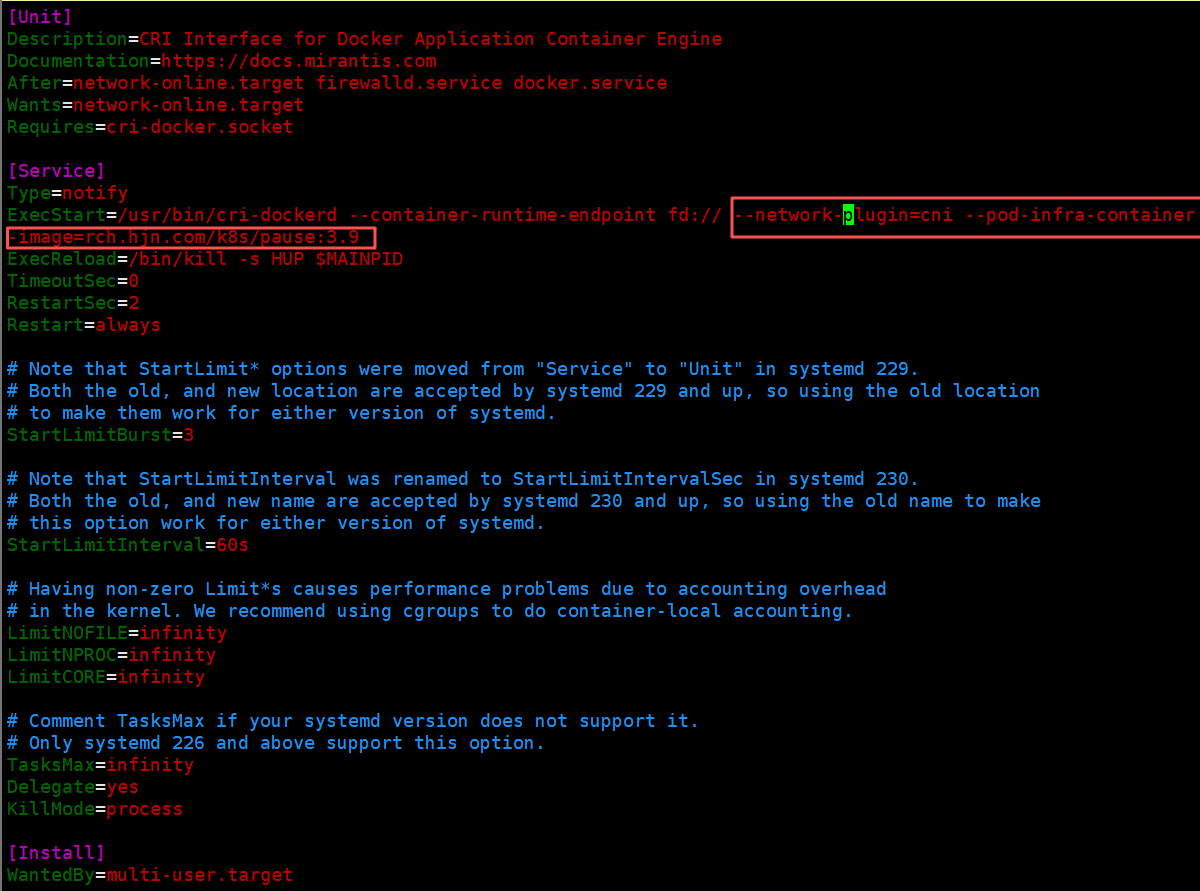

[root@master ~]# vim /lib/systemd/system/cri-docker.service # configure the plugin

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=rch.hjn.com/k8s/pause:3.9

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

[root@node2 ~]# systemctl daemon-reload

[root@node2 ~]# systemctl enable --now cri-docker.service

Created symlink /etc/systemd/system/multi-user.target.wants/cri-docker.service → /usr/lib/systemd/system/cri-docker.service.

# If ll /var/run/cri-dockerd.sock shows the socket file, the plugin started successfully

[root@node2 ~]# ll /var/run/cri-dockerd.sock

srw-rw---- 1 root docker 0 Oct 23 17:53 /var/run/cri-dockerd.sock

To see which CRI socket kubeadm uses by default during initialization, run:

bash

[root@master ~]# kubeadm config print init-defaults | less

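criSocket: unix:///var/run/containerd/containerd.sock

2.9 Initialize the K8s Cluster (master node)

2.9.1 Pull the K8s Images and Push Them to Harbor

Starting K8s requires a set of base images (apiserver, controller manager, and so on). Pull them locally first and then push them to the private registry so that worker nodes do not face slow downloads.

bash

# Pull the K8s images from Alibaba Cloud (fast within China)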

[root@master ~]# kubeadm config images pull \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.30.0 \

--cri-socket=unix:///var/run/cri-dockerd.sock

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.11.1

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.12-0

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.30.0 c42f13656d0b 18 months ago 117MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.30.0 259c8277fcbb 18 months ago 62MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.30.0 c7aad43836fa 18 months ago 111MB

registry.aliyuncs.com/google_containers/kube-proxy v1.30.0 a0bf559e280c 18 months ago 84.7MB

registry.aliyuncs.com/google_containers/etcd 3.5.12-0 3861cfcd7c04 20 months ago 149MB

registry.aliyuncs.com/google_containers/coredns v1.11.1 cbb01a7bd410 2 years ago 59.8MB

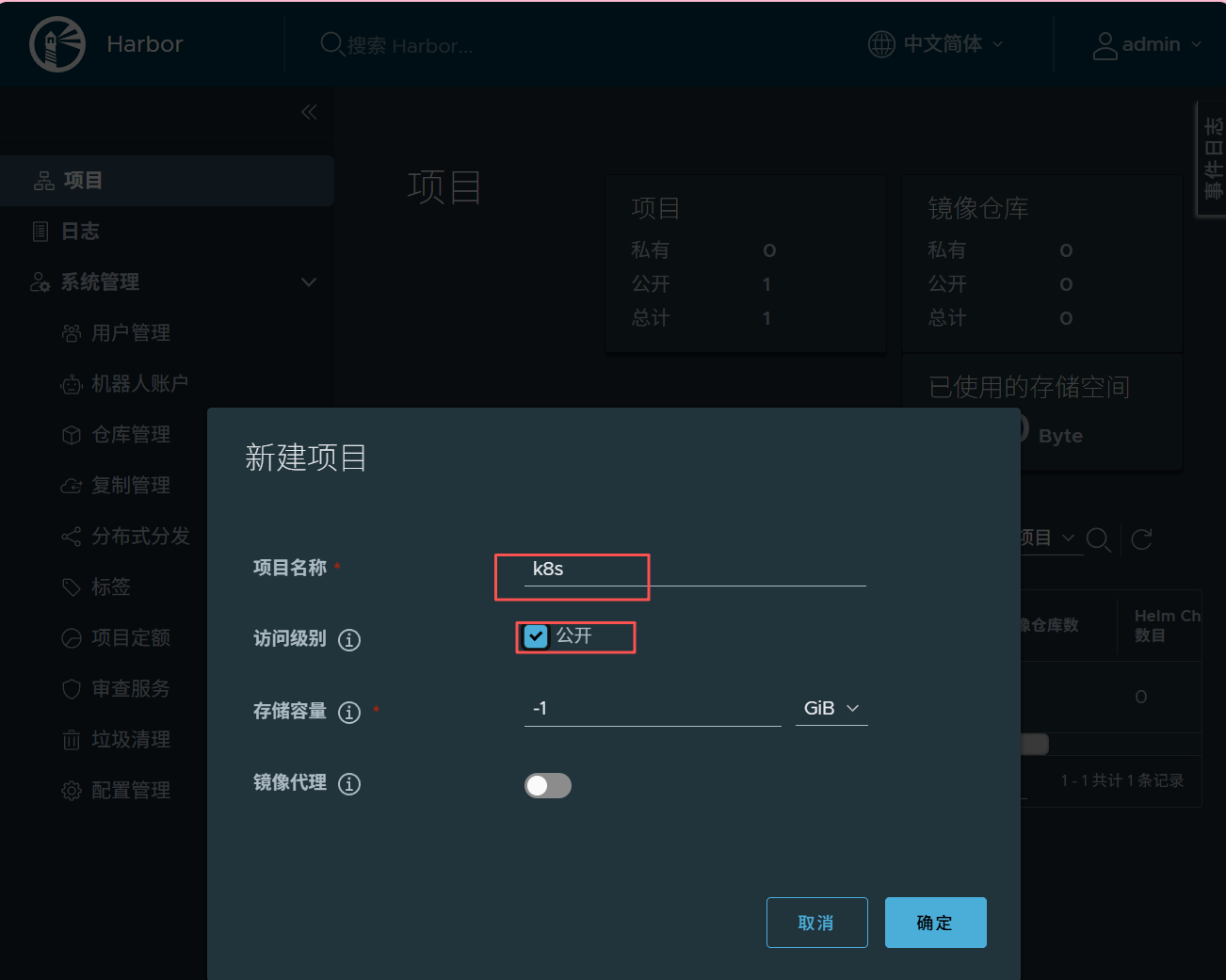

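registry.aliyuncs.com/google_containers/pause 3.9 e6f181688397 3 years ago 744kB

The other nodes need these images too, so push them to the Harbor registry. The project name is not arbitrary: the cri-docker configuration file references rch.hjn.com/k8s/pause:3.9, so a project named k8s must be created in Harbor.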
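Retagging amounts to keeping the final `name:tag` part of each image reference and putting the Harbor registry and project in front of it. A minimal sketch of that mapping, using plain shell parameter expansion, is shown below; the helper name `harbor_ref` and its default arguments are hypothetical.

```shell
#!/usr/bin/env bash
# Maps an upstream image reference to the matching Harbor reference by
# keeping only the final "name:tag" component of the source.
# Usage: harbor_ref SOURCE [REGISTRY] [PROJECT]
harbor_ref() {
  local src=$1 registry=${2:-rch.hjn.com} project=${3:-k8s}
  echo "$registry/$project/${src##*/}"
}

# Example with one of the images pulled above.
harbor_ref registry.aliyuncs.com/google_containers/pause:3.9
# prints: rch.hjn.com/k8s/pause:3.9
```

In a loop over the pulled images, each one could then be retagged with `docker tag "$img" "$(harbor_ref "$img")"`, which has the same effect as the awk pipeline in the transcript.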

bash

# Tag the images with the Harbor registry address

[root@master ~]# docker images | awk '/google/{print $1":"$2}' | awk -F / '{system("docker tag " $0 " rch.hjn.com/k8s/"$3)}'

[root@master ~]# docker images | awk '/rch/{print $0}'

rch.hjn.com/k8s/kube-apiserver v1.30.0 c42f13656d0b 18 months ago 117MB

rch.hjn.com/k8s/kube-controller-manager v1.30.0 c7aad43836fa 18 months ago 111MB

rch.hjn.com/k8s/kube-scheduler v1.30.0 259c8277fcbb 18 months ago 62MB

rch.hjn.com/k8s/kube-proxy v1.30.0 a0bf559e280c 18 months ago 84.7MB

rch.hjn.com/k8s/etcd 3.5.12-0 3861cfcd7c04 20 months ago 149MB

rch.hjn.com/k8s/coredns v1.11.1 cbb01a7bd410 2 years ago 59.8MB

rch.hjn.com/k8s/pause 3.9 e6f181688397 3 years ago 744kB

[root@master ~]# docker login rch.hjn.com

Username: admin

Password:

WARNING! Your credentials are stored unencrypted in '/root/.docker/config.json'.

Configure a credential helper to remove this warning. See

https://docs.docker.com/go/credential-store/

Login Succeeded

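# Push to Harbor (for all nodes to pull)

[root@master ~]# docker images | awk '/rch/{system("docker push " $1":"$2)}'

If the pushed images show up in Harbor's k8s project, the upload succeeded.

2.9.2 Initialize the master Node

Initialize the master node to generate the cluster configuration, specifying the Pod network range and the image source at the same time.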
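A long command with one flag per line is easier to read with a note next to each flag, but a comment placed after a trailing backslash breaks the line continuation. One way around this, sketched here under the assumption that bash is the shell, is to build the argument list as an array, where comments are allowed between elements. The function name `build_init_args` is hypothetical; the flags and values are the ones used in this article.

```shell
#!/usr/bin/env bash
# Builds the kubeadm init argument list as an array so that each flag can
# carry its own comment. Prints one argument per line; runs nothing itself.
build_init_args() {
  local args=(
    init
    --pod-network-cidr=10.244.0.0/16              # Pod network range
    --image-repository=rch.hjn.com/k8s            # pull control-plane images from Harbor
    --kubernetes-version=v1.30.0                  # Kubernetes version
    --cri-socket=unix:///var/run/cri-dockerd.sock # reach Docker through cri-dockerd
  )
  printf '%s\n' "${args[@]}"
}
```

The list can be reviewed by calling `build_init_args` on its own, and then executed with `mapfile -t args < <(build_init_args); kubeadm "${args[@]}"`.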

bash

# Run on every host

[root@master ~]# systemctl enable --now kubelet.service

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

# Flags, in order: Pod network range, pull images from Harbor, K8s version, cri-dockerd socket

[root@master ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 \
--image-repository rch.hjn.com/k8s \
--kubernetes-version v1.30.0 \
--cri-socket=unix:///var/run/cri-dockerd.sock

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.70:6443 --token d6b2qf.fxfal46sed2sr1dj \

--discovery-token-ca-cert-hash sha256:f5dc42f9e3eb90557233ec89496b43073417eeed5a9a0c757f26c4ec8504871f

[root@master ~]# kubectl get nodes

E1023 18:42:29.353546 5758 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E1023 18:42:29.354249 5758 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E1023 18:42:29.356263 5758 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E1023 18:42:29.356749 5758 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E1023 18:42:29.358689 5758 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# Configure kubectl (so that master can operate the cluster)

[root@master ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 85s v1.30.0 # NotReady because the network is not set up yet

[root@master ~]# vim .bash_profile

[root@master ~]# cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

export KUBECONFIG=/etc/kubernetes/admin.conf

# User specific environment and startup programs

# Run on both worker hosts; the command carries the join token

[root@node1 ~]# kubeadm join 192.168.2.70:6443 --token d6b2qf.fxfal46sed2sr1dj \

--discovery-token-ca-cert-hash sha256:f5dc42f9e3eb90557233ec89496b43073417eeed5a9a0c757f26c4ec8504871f --cri-socket=unix:///var/run/cri-dockerd.sock

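# If you lose the token, create a new join command on master (tokens expire after 24 hours by default) and append the --cri-socket option as above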

[root@master ~]# kubeadm token create --print-join-command

kubeadm join 192.168.2.70:6443 --token ar4hbv.qwqmt25s44mbboy0 --discovery-token-ca-cert-hash sha256:f5dc42f9e3eb90557233ec89496b43073417eeed5a9a0c757f26c4ec8504871f

# kubectl get nodes shows the nodes as NotReady (no network plugin yet), which means initialization succeeded.

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 7m23s v1.30.0 # NotReady: no network plugin yet

node1 NotReady <none> 2m33s v1.30.0

node2 NotReady <none> 2m24s v1.30.0

If something was misconfigured, the node can be reset:

bash

kubeadm reset

2.10 Install the flannel Network Plugin (master node)

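flannel is a container network plugin that lets containers on different nodes communicate with each other (nodes only become Ready once a network plugin is installed).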
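Whether the plugin has taken effect shows up in the STATUS column of `kubectl get nodes`. As a minimal sketch, the hypothetical helper below reads that output on stdin and prints the nodes that are not yet Ready, which is convenient in a script that waits for the cluster to settle.

```shell
#!/usr/bin/env bash
# Reads `kubectl get nodes` output on stdin and prints the names of nodes
# whose STATUS column is anything other than exactly "Ready".
# The first line is treated as the header and skipped.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Typical use would be `kubectl get nodes | not_ready_nodes`; empty output means every node reports Ready. Because the comparison is exact, a cordoned node shown as `Ready,SchedulingDisabled` is also listed.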

Project page: https://github.com/flannel-io/flannel

bash

# Download flannel's YAML manifest

[root@master ~]# wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# Pull the images:

[root@master ~]# docker pull docker.io/flannel/flannel:v0.25.5

[root@master ~]# docker pull docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1

# Here I use copies that I already had locally

[root@master ~]# mkdir network

[root@master ~]# cd network/

[root@master network]# mv ~/flannel-0.25.5.tag.gz .

[root@master network]# mv ~/kube-flannel.yml .

[root@master network]# ll

total 82140

-rw-r--r-- 1 root root 84103168 Oct 23 18:53 flannel-0.25.5.tag.gz

-rw-r--r-- 1 root root 4406 Oct 23 18:53 kube-flannel.yml

[root@master network]# docker load -i flannel-0.25.5.tag.gz

ef7a14b43c43: Loading layer [==================================================>] 8.079MB/8.079MB

1d9375ff0a15: Loading layer [==================================================>] 9.222MB/9.222MB

4af63c5dc42d: Loading layer [==================================================>] 16.61MB/16.61MB

2b1d26302574: Loading layer [==================================================>] 1.544MB/1.544MB

d3dd49a2e686: Loading layer [==================================================>] 42.11MB/42.11MB

7278dc615b95: Loading layer [==================================================>] 5.632kB/5.632kB

c09744fc6e92: Loading layer [==================================================>] 6.144kB/6.144kB

0a2b46a5555f: Loading layer [==================================================>] 1.923MB/1.923MB

5f70bf18a086: Loading layer [==================================================>] 1.024kB/1.024kB

601effcb7aab: Loading layer [==================================================>] 1.928MB/1.928MB

Loaded image: flannel/flannel:v0.25.5

21692b7dc30c: Loading layer [==================================================>] 2.634MB/2.634MB

Loaded image: flannel/flannel-cni-plugin:v1.5.1-flannel1

# A project named flannel must be created in Harbor first

bash

# Push to Harbor (for all nodes to use)

[root@master network]# docker tag flannel/flannel:v0.25.5 rch.hjn.com/flannel/flannel:v0.25.5

[root@master network]# docker tag flannel/flannel-cni-plugin:v1.5.1-flannel1 rch.hjn.com/flannel/flannel-cni-plugin:v1.5.1-flannel1

[root@master network]# docker push rch.hjn.com/flannel/flannel:v0.25.5

[root@master network]# docker push rch.hjn.com/flannel/flannel-cni-plugin:v1.5.1-flannel1

Next, edit the image fields in the manifest.
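The article makes this edit by hand in vim. As a minimal sketch of doing it non-interactively, the hypothetical helper below removes a leading `docker.io/` from every `image:` field so that the pull can go through the configured mirror. It assumes, without confirmation from the article, that the downloaded manifest uses `docker.io/`-prefixed image names, and it relies on GNU sed.

```shell
#!/usr/bin/env bash
# Removes a leading "docker.io/" from every image: field in a manifest,
# keeping a .bak copy of the original file. Other lines are left unchanged.
strip_docker_io() {
  local manifest=$1
  sed -i.bak -E 's#^([[:space:]]*(-[[:space:]]+)?image:[[:space:]]*)docker\.io/#\1#' "$manifest"
}
```

After `strip_docker_io kube-flannel.yml`, running `grep -w image kube-flannel.yml` should list image names without a registry prefix.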

bash

[root@master network]# vim kube-flannel.yml

[root@master network]# grep -w image kube-flannel.yml

image: flannel/flannel:v0.25.5

image: flannel/flannel-cni-plugin:v1.5.1-flannel1

image: flannel/flannel:v0.25.5

[root@master network]# kubectl apply -f kube-flannel.yml # deploy the network plugin

# When kubectl get nodes shows every node as Ready, the network plugin is working

[root@master network]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 20m v1.30.0

node1 Ready <none> 16m v1.30.0

node2 Ready <none> 15m v1.30.0

2.11 Test the Cluster

Start an nginx container to verify that the cluster can create and run containers.

bash

[root@master ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

38513bd72563: Pull complete

10d18f46ee87: Pull complete

a8d825a0683a: Pull complete

a131bc1d4bd5: Pull complete

3818929ac19f: Pull complete

1498b1cfda15: Pull complete

c50c84d0ed4d: Pull complete

Digest: sha256:029d4461bd98f124e531380505ceea2072418fdf28752aa73b7b273ba3048903

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

[root@master ~]# kubectl run webserver --image nginx:latest # start an nginx Pod

pod/webserver created

[root@master ~]# kubectl get pods -o wide # check the Pod status (Running means healthy)

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

webserver 0/1 ContainerCreating 0 12s <none> node1 <none> <none>

[root@master ~]# docker tag nginx:latest rch.hjn.com/library/nginx:latest

[root@master ~]# docker push rch.hjn.com/library/nginx:latest

The push refers to repository [rch.hjn.com/library/nginx]

19aab87073f2: Pushed

1e8adaf99b20: Pushed

1cf4237d06e9: Pushed

5a74922ed625: Pushed

fc74d1be15ae: Pushed

7d4434559286: Pushed

d7c97cb6f1fe: Pushed

latest: digest: sha256:681da4f8f67a716207fe86b5432e3b7b5fc1e2b0e830acbe24cc42bc5813c468 size: 1778

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

webserver 1/1 Running 0 83s 10.244.1.3 node1 <none> <none>

[root@master ~]# kubectl describe pods webserver

Name: webserver

Namespace: default

Priority: 0

Service Account: default

Node: node1/192.168.2.72

Start Time: Thu, 23 Oct 2025 19:13:41 +0800

Labels: run=webserver

Annotations: <none>

Status: Running

IP: 10.244.1.3

IPs:

IP: 10.244.1.3

Containers:

webserver:

Container ID: docker://4935fc4bef3dcf0750f541e32ead459fc0fc1ed4145735498da58c266e4d0ed7

[root@node1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4935fc4bef3d nginx "/docker-entrypoint...." 2 minutes ago Up 2 minutes k8s_webserver_webserver_default_b22f44f3-562c-46f0-8543-18446ad9a3a0_0

2b319cca5c78 rch.hjn.com/k8s/pause:3.9 "/pause" 3 minutes ago Up 3 minutes k8s_POD_webserver_default_b22f44f3-562c-46f0-8543-18446ad9a3a0_1

d85e90b06ba3 b9f4beb93d68 "/opt/bin/flanneld -..." 14 minutes ago Up 14 minutes k8s_kube-flannel_kube-flannel-ds-nfd8q_kube-flannel_8a173c1f-4dae-4cbb-96b7-7878b6c9ad4d_0

ea60cc79d806 rch.hjn.com/k8s/pause:3.9 "/pause" 14 minutes ago Up 14 minutes k8s_POD_kube-flannel-ds-nfd8q_kube-flannel_8a173c1f-4dae-4cbb-96b7-7878b6c9ad4d_0

0382aa56901e rch.hjn.com/k8s/kube-proxy "/usr/local/bin/kube..." 30 minutes ago Up 30 minutes k8s_kube-proxy_kube-proxy-x459n_kube-system_713d209d-0e67-492a-97fc-0b2aed77aadb_0

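8a8568c9d08a rch.hjn.com/k8s/pause:3.9 "/pause" 30 minutes ago Up 30 minutes k8s_POD_kube-proxy-x459n_kube-system_713d209d-0e67-492a-97fc-0b2aed77aadb_0

Try deleting the container to see self-healing at work (the kubelet recreates it to match the desired state stored in etcd):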

bash

[root@node1 ~]# docker rm -f 4935fc4bef3d

4935fc4bef3d

[root@node1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

40a16b8041d1 nginx "/docker-entrypoint...." Less than a second ago Up Less than a second k8s_webserver_webserver_default_b22f44f3-562c-46f0-8543-18446ad9a3a0_1

2b319cca5c78 rch.hjn.com/k8s/pause:3.9 "/pause" 4 minutes ago Up 4 minutes k8s_POD_webserver_default_b22f44f3-562c-46f0-8543-18446ad9a3a0_1

d85e90b06ba3 b9f4beb93d68 "/opt/bin/flanneld -..." 15 minutes ago Up 15 minutes k8s_kube-flannel_kube-flannel-ds-nfd8q_kube-flannel_8a173c1f-4dae-4cbb-96b7-7878b6c9ad4d_0

ea60cc79d806 rch.hjn.com/k8s/pause:3.9 "/pause" 15 minutes ago Up 15 minutes k8s_POD_kube-flannel-ds-nfd8q_kube-flannel_8a173c1f-4dae-4cbb-96b7-7878b6c9ad4d_0

0382aa56901e rch.hjn.com/k8s/kube-proxy "/usr/local/bin/kube..." 31 minutes ago Up 31 minutes k8s_kube-proxy_kube-proxy-x459n_kube-system_713d209d-0e67-492a-97fc-0b2aed77aadb_0

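8a8568c9d08a rch.hjn.com/k8s/pause:3.9 "/pause" 31 minutes ago Up 31 minutes k8s_POD_kube-proxy-x459n_kube-system_713d209d-0e67-492a-97fc-0b2aed77aadb_0

III. Summary

The whole deployment breaks down into six major steps:

- Prepare the environment (turn off the firewall, synchronize time, configure hosts);

- Install Docker and the private registry (Harbor);

- Disable swap (a K8s requirement);

- Install the K8s components and the cri-dockerd plugin (so that K8s can control Docker);

- Initialize the master and join the worker nodes;

- Install the network plugin (so that the nodes can reach each other).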