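Kafka 4.1.0 completely abandons ZooKeeper: building a three-node cluster, tested first-hand, with pitfalls to avoid

OS: Ubuntu 24.04.3 LTS

JDK: jdk-17.0.12

1. Download the software

Download the Kafka 4.1.0 binary package and extract it into the target directory: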

wget https://archive.apache.org/dist/kafka/4.1.0/kafka_2.13-4.1.0.tgz

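tar -xzf kafka_2.13-4.1.0.tgz -C /apps

2. KRaft cluster plan

For high availability, a controller quorum that must tolerate N failed controllers needs 2N+1 controllers, because Raft only commits metadata once a majority of the controllers has acknowledged it. Below we build a 3-node Kafka cluster with 3 controllers and 3 brokers (each node runs one controller process and one broker process), which tolerates the loss of one controller. Controller IDs start at 1000 and listen on port 9093; broker IDs start at 2000 and listen on port 9092.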
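The 2N+1 rule is plain majority arithmetic. A minimal shell sketch (the function names are illustrative, not Kafka tools) that prints the majority size and the number of tolerated failures for a few quorum sizes:

```shell
#!/usr/bin/env bash
# Illustrative helpers (hypothetical names, not part of Kafka):
# a Raft quorum of V voters needs a majority of floor(V/2)+1 to commit,
# so it survives V - majority failed voters.

# Voters needed to tolerate N failed controllers: 2N + 1
voters_for_failures() {
  echo $(( 2 * $1 + 1 ))
}

# Majority size for V voters: floor(V/2) + 1 (bash integer division floors)
majority_of() {
  echo $(( $1 / 2 + 1 ))
}

# Failures a V-voter quorum can survive
tolerated_failures() {
  echo $(( $1 - $(majority_of "$1") ))
}

for v in 1 3 4 5; do
  echo "voters=$v majority=$(majority_of "$v") tolerated=$(tolerated_failures "$v")"
done
```

With 4 voters the majority is 3, so the quorum still tolerates only one failure; this is why controller counts are kept odd. The plan here (3 controllers) corresponds to N = 1.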

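| Role       | ID   | IP           | Port |
|------------|------|--------------|------|
| Controller | 1000 | 192.168.2.24 | 9093 |
| Controller | 1001 | 192.168.2.25 | 9093 |
| Controller | 1002 | 192.168.2.26 | 9093 |
| Broker     | 2000 | 192.168.2.24 | 9092 |
| Broker     | 2001 | 192.168.2.25 | 9092 |
| Broker     | 2002 | 192.168.2.26 | 9092 |

3. Configure environment variables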

3.1 Download the JDK

(omitted)

3.2 Set the environment variables

Add the following lines to /etc/profile:

vim /etc/profile

export JAVA_HOME=/apps/jdk-17.0.12

export PATH=$JAVA_HOME/bin:$PATH

Apply the changes:

source /etc/profile

4. Cluster configuration

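The following shows the settings changed for the controller and the broker on node 192.168.2.24. On the other nodes, change node.id, listeners and advertised.listeners according to the plan, as in the configurations below.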
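Editing the same three keys by hand on every node is easy to get wrong. A minimal sketch, assuming the stock config/controller.properties and config/broker.properties files and the plan above; set_prop and configure_node are hypothetical helper names, not Kafka tools:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the per-node edits (node.id, listeners, advertised.listeners).

# Set key=value in a properties file: rewrite the uncommented "key=" line(s),
# or append the pair if the key is absent. Commented lines (#key=...) are left alone.
set_prop() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp)
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$file"   # write back in place, keeping the original file's permissions
  rm -f "$tmp"
}

# Apply the plan for one process; role is "controller" or "broker".
configure_node() {
  local file=$1 role=$2 id=$3 ip=$4
  set_prop "$file" node.id "$id"
  if [ "$role" = controller ]; then
    set_prop "$file" listeners "CONTROLLER://${ip}:9093"
    set_prop "$file" advertised.listeners "CONTROLLER://${ip}:9093"
  else
    set_prop "$file" listeners "PLAINTEXT://${ip}:9092"
    set_prop "$file" advertised.listeners "PLAINTEXT://${ip}:9092"
  fi
}
```

For example, on 192.168.2.25 (from /apps/kafka_2.13-4.1.0): `configure_node config/controller.properties controller 1001 192.168.2.25` and `configure_node config/broker.properties broker 2001 192.168.2.25`. The keys that are identical on every node (controller.quorum.bootstrap.servers, controller.listener.names, inter.broker.listener.name, log.dirs) can be set with set_prop the same way. Note that awk -v interprets backslash escapes, which is harmless for these values.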

4.1 Edit the controller configuration

Path: config/controller.properties

process.roles=controller

node.id=1000

listeners=CONTROLLER://192.168.2.24:9093

advertised.listeners=CONTROLLER://192.168.2.24:9093

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

controller.listener.names=CONTROLLER

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-controller-logs

View the resulting configuration (comment lines filtered out):

root@ops-test-024:/apps/kafka_2.13-4.1.0/config# grep -v '^#' controller.properties

process.roles=controller

node.id=1000

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

listeners=CONTROLLER://192.168.2.24:9093

advertised.listeners=CONTROLLER://192.168.2.24:9093

controller.listener.names=CONTROLLER

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-controller-logs

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

share.coordinator.state.topic.replication.factor=1

share.coordinator.state.topic.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

4.2 Edit the broker configuration

Path: config/broker.properties

process.roles=broker

node.id=2000

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

listeners=PLAINTEXT://192.168.2.24:9092

inter.broker.listener.name=PLAINTEXT

advertised.listeners=PLAINTEXT://192.168.2.24:9092

controller.listener.names=CONTROLLER

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-broker-logs

View the resulting configuration (comment lines filtered out):

root@ops-test-024:/apps/kafka_2.13-4.1.0/config# grep -v '^#' broker.properties

process.roles=broker

node.id=2000

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

listeners=PLAINTEXT://192.168.2.24:9092

inter.broker.listener.name=PLAINTEXT

advertised.listeners=PLAINTEXT://192.168.2.24:9092

controller.listener.names=CONTROLLER

listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-broker-logs

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

share.coordinator.state.topic.replication.factor=1

share.coordinator.state.topic.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000
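The stock broker.properties above keeps every internal topic at replication factor 1, so __consumer_offsets and the transaction state log would each live on a single broker, which undercuts the high-availability goal. A sketch, assuming a 3-broker cluster where 3 replicas and a min.isr of 2 are wanted (these values are an assumption, not part of the original setup). Run it on each broker's config before the brokers first start: the replication factor is applied when these internal topics are created, and their creation fails until that many brokers are available.

```shell
#!/usr/bin/env bash
# Sketch: raise internal-topic replication in a broker.properties file.
# Values 3 / 2 assume three brokers; they are not from the original walkthrough.
# Uses GNU sed -i (as on Ubuntu); only rewrites keys already present in the file.
raise_internal_replication() {
  local file=$1
  sed -i \
    -e 's/^offsets\.topic\.replication\.factor=.*/offsets.topic.replication.factor=3/' \
    -e 's/^transaction\.state\.log\.replication\.factor=.*/transaction.state.log.replication.factor=3/' \
    -e 's/^transaction\.state\.log\.min\.isr=.*/transaction.state.log.min.isr=2/' \
    -e 's/^share\.coordinator\.state\.topic\.replication\.factor=.*/share.coordinator.state.topic.replication.factor=3/' \
    -e 's/^share\.coordinator\.state\.topic\.min\.isr=.*/share.coordinator.state.topic.min.isr=2/' \
    "$file"
}
```

Usage: `raise_internal_replication config/broker.properties` on each of the three nodes.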

4.3 Second node (192.168.2.25)

Controller configuration (config/controller.properties)

process.roles=controller

node.id=1001

listeners=CONTROLLER://192.168.2.25:9093

advertised.listeners=CONTROLLER://192.168.2.25:9093

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

controller.listener.names=CONTROLLER

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-controller-logs

Broker configuration (config/broker.properties)

process.roles=broker

node.id=2001

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

listeners=PLAINTEXT://192.168.2.25:9092

inter.broker.listener.name=PLAINTEXT

advertised.listeners=PLAINTEXT://192.168.2.25:9092

controller.listener.names=CONTROLLER

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-broker-logs

4.4 Third node (192.168.2.26)

Controller configuration (config/controller.properties)

process.roles=controller

node.id=1002

listeners=CONTROLLER://192.168.2.26:9093

advertised.listeners=CONTROLLER://192.168.2.26:9093

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

controller.listener.names=CONTROLLER

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-controller-logs

Broker configuration (config/broker.properties)

process.roles=broker

node.id=2002

controller.quorum.bootstrap.servers=192.168.2.24:9093,192.168.2.25:9093,192.168.2.26:9093

listeners=PLAINTEXT://192.168.2.26:9092

inter.broker.listener.name=PLAINTEXT

advertised.listeners=PLAINTEXT://192.168.2.26:9092

controller.listener.names=CONTROLLER

log.dirs=/apps/kafka_2.13-4.1.0/data/kraft-broker-logs

5. Deployment

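It is recommended to start a KRaft cluster with a single controller and, once it is running, expand the controller quorum to 3 nodes with add-controller. The steps below follow that order.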
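The procedure uses three variants of kafka-storage.sh format, depending on a node's role. A small sketch that only prints the command for each case, with the flags taken from the steps below; format_cmd is a hypothetical helper and runs nothing itself:

```shell
#!/usr/bin/env bash
# Prints (does not run) the kafka-storage.sh format command for each role used here.
CLUSTER_ID=MCg3e_6pSYumFqhbyt6mKA   # replace with the ID from random-uuid

format_cmd() {
  local base="bin/kafka-storage.sh format --cluster-id ${CLUSTER_ID}"
  case $1 in
    bootstrap-controller)  # first controller (1000): single-voter quorum
      echo "$base --standalone --config config/controller.properties" ;;
    controller)            # controllers added later (1001, 1002): start as observers
      echo "$base --config config/controller.properties --no-initial-controllers" ;;
    broker)                # brokers 2000-2002
      echo "$base --config config/broker.properties" ;;
    *)
      echo "unknown role: $1" >&2
      return 1 ;;
  esac
}

for role in bootstrap-controller controller broker; do
  format_cmd "$role"
done
```

The only difference between the two controller variants is whether the node creates the initial voter set (--standalone) or joins with none and is promoted later by add-controller.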

5.1 Generate the cluster ID

bin/kafka-storage.sh random-uuid

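0VMe9kUwROS-0fcQn-0yzg

The ID is random on every run. The commands below use MCg3e_6pSYumFqhbyt6mKA, the ID printed by the successful run shown in section 8 (Troubleshooting); substitute your own ID and use the same one on every node.

5.2 Deploy a standalone controller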

On node 192.168.2.24, format the controller's metadata directory with the cluster ID.
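Every format command in this guide must use the same cluster ID. A minimal guard, assuming (from Kafka's Uuid encoding) that random-uuid prints 16 random bytes as URL-safe Base64 without padding, i.e. exactly 22 characters from [A-Za-z0-9_-]; is_valid_cluster_id is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical guard: a KRaft cluster ID is assumed to be 22 URL-safe Base64 chars.
is_valid_cluster_id() {
  [[ $1 =~ ^[A-Za-z0-9_-]{22}$ ]]
}

# Example: capture the ID once, check it, then reuse "$CLUSTER_ID" on every node.
# CLUSTER_ID=$(bin/kafka-storage.sh random-uuid)
CLUSTER_ID=MCg3e_6pSYumFqhbyt6mKA
if is_valid_cluster_id "$CLUSTER_ID"; then
  echo "cluster id looks valid: $CLUSTER_ID"
else
  echo "unexpected cluster id: $CLUSTER_ID" >&2
fi
```

This catches copy-paste slips and, for example, an error message captured in place of an ID when random-uuid fails.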

bin/kafka-storage.sh format --cluster-id MCg3e_6pSYumFqhbyt6mKA --standalone --config config/controller.properties

Output:

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-storage.sh format --cluster-id MCg3e_6pSYumFqhbyt6mKA --standalone --config config/controller.properties

Formatting dynamic metadata voter directory /apps/kafka_2.13-4.1.0/data/kraft-controller-logs with metadata.version 4.1-IV1.

On success, the cluster metadata is written to the controller's log.dirs directory:

root@ops-test-024:/apps/kafka_2.13-4.1.0# ls -l data/kraft-controller-logs

total 12

drwxr-xr-x 2 root root 4096 Nov 3 15:43 __cluster_metadata-0

-rw-r--r-- 1 root root 355 Nov 3 15:43 bootstrap.checkpoint

-rw-r--r-- 1 root root 125 Nov 3 15:43 meta.properties

Start the controller:

bin/kafka-server-start.sh -daemon config/controller.properties

Check the controller log to confirm that the node is running normally:

root@ops-test-024:/apps/kafka_2.13-4.1.0# tail -f logs/controller.log

[2025-11-03 16:16:49,135] INFO [QuorumController id=1000] Replayed a FeatureLevelRecord setting metadata.version to 4.1-IV1 (org.apache.kafka.controller.FeatureControlManager)

[2025-11-03 16:16:49,136] INFO [QuorumController id=1000] Replayed a FeatureLevelRecord setting feature eligible.leader.replicas.version to 1 (org.apache.kafka.controller.FeatureControlManager)

[2025-11-03 16:16:49,136] INFO [QuorumController id=1000] Replayed a FeatureLevelRecord setting feature group.version to 1 (org.apache.kafka.controller.FeatureControlManager)

[2025-11-03 16:16:49,137] INFO [QuorumController id=1000] Replayed a FeatureLevelRecord setting feature transaction.version to 2 (org.apache.kafka.controller.FeatureControlManager)

[2025-11-03 16:16:49,137] INFO [QuorumController id=1000] Replayed ConfigRecord for ConfigResource(type=BROKER, name='') which set configuration min.insync.replicas to 1 (org.apache.kafka.controller.ConfigurationControlManager)

[2025-11-03 16:16:49,137] INFO [QuorumController id=1000] Replayed EndTransactionRecord() at offset 9. (org.apache.kafka.controller.OffsetControlManager)

[2025-11-03 16:16:49,212] INFO [QuorumController id=1000] Becoming the active controller at epoch 2, next write offset 3825. (org.apache.kafka.controller.QuorumController)

[2025-11-03 16:16:49,219] WARN [QuorumController id=1000] Performing controller activation. (org.apache.kafka.controller.QuorumController)

[2025-11-03 16:16:49,224] INFO [QuorumController id=1000] Activated periodic tasks: electPreferred, electUnclean, expireDelegationTokens, generatePeriodicPerformanceMessage, maybeFenceStaleBroker, writeNoOpRecord (org.apache.kafka.controller.PeriodicTaskControlManager)

[2025-11-03 16:16:49,413] INFO [QuorumController id=1000] Replayed RegisterControllerRecord containing ControllerRegistration(id=1000, incarnationId=Nbj6HR7ASSysBoXkTX7y3w, zkMigrationReady=false, listeners=[Endpoint(listenerName='CONTROLLER', securityProtocol=PLAINTEXT, host='192.168.2.24', port=9093)], supportedFeatures={eligible.leader.replicas.version: 0-1, group.version: 0-1, kraft.version: 0-1, metadata.version: 7-27, share.version: 0-1, transaction.version: 0-2}). (org.apache.kafka.controller.ClusterControlManager)

[2025-11-03 16:17:13,889] INFO [QuorumController id=1000] No previous registration found for broker 2000. New incarnation ID is 5usoWUoIQDq8w68c0Bp3GA. Generated 0 record(s) to clean up previous incarnations. New broker epoch is 3875. (org.apache.kafka.controller.ClusterControlManager)

[2025-11-03 16:17:13,906] INFO [QuorumController id=1000] Replayed initial RegisterBrokerRecord for broker 2000: RegisterBrokerRecord(brokerId=2000, isMigratingZkBroker=false, incarnationId=5usoWUoIQDq8w68c0Bp3GA, brokerEpoch=3875, endPoints=[BrokerEndpoint(name='PLAINTEXT', host='192.168.2.24', port=9092, securityProtocol=0)], features=[BrokerFeature(name='group.version', minSupportedVersion=0, maxSupportedVersion=1), BrokerFeature(name='kraft.version', minSupportedVersion=0, maxSupportedVersion=1), BrokerFeature(name='metadata.version', minSupportedVersion=7, maxSupportedVersion=27), BrokerFeature(name='share.version', minSupportedVersion=0, maxSupportedVersion=1), BrokerFeature(name='transaction.version', minSupportedVersion=0, maxSupportedVersion=2), BrokerFeature(name='eligible.leader.replicas.version', minSupportedVersion=0, maxSupportedVersion=1)], rack=null, fenced=true, inControlledShutdown=false, logDirs=[wLuTIWTiCkk9DBgKqj1wGQ]) (org.apache.kafka.controller.ClusterControlManager)

5.3 Deploy the Kafka brokers

On node 192.168.2.24, format the broker's metadata directory with the same cluster ID:

bin/kafka-storage.sh format --cluster-id MCg3e_6pSYumFqhbyt6mKA --config config/broker.properties

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-storage.sh format --cluster-id MCg3e_6pSYumFqhbyt6mKA --config config/broker.properties

Formatting metadata directory /apps/kafka_2.13-4.1.0/data/kraft-broker-logs with metadata.version 4.1-IV1.

On success, the metadata files are created in the broker's log directory:

root@ops-test-024:/apps/kafka_2.13-4.1.0# ls -l data/kraft-broker-logs

total 8

-rw-r--r-- 1 root root 355 Nov 3 15:48 bootstrap.checkpoint

-rw-r--r-- 1 root root 125 Nov 3 15:48 meta.properties
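Each formatted directory now has a meta.properties file. Assuming it contains a `cluster.id=<id>` line (as written by kafka-storage.sh format), a quick sketch to confirm that the controller and broker directories on a node were formatted with the same ID; cluster_id_of and same_cluster_id are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare cluster.id across two formatted log directories.

# Print the cluster.id value from <dir>/meta.properties (fails if the file is missing).
cluster_id_of() {
  sed -n 's/^cluster\.id=//p' "$1/meta.properties"
}

# Succeed only if both directories report the same, non-empty cluster.id.
same_cluster_id() {
  local a b
  a=$(cluster_id_of "$1") || return 1
  b=$(cluster_id_of "$2") || return 1
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Usage, from /apps/kafka_2.13-4.1.0: `same_cluster_id data/kraft-controller-logs data/kraft-broker-logs && echo "cluster.id matches"`.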

Start broker 2000:

bin/kafka-server-start.sh -daemon config/broker.properties

root@ops-test-024:/apps/kafka_2.13-4.1.0# tail logs/server.log

[2025-11-03 16:17:14,385] INFO Awaiting socket connections on 192.168.2.24:9092. (kafka.network.DataPlaneAcceptor)

[2025-11-03 16:17:14,405] INFO [BrokerServer id=2000] Waiting for all of the authorizer futures to be completed (kafka.server.BrokerServer)

[2025-11-03 16:17:14,405] INFO [BrokerServer id=2000] Finished waiting for all of the authorizer futures to be completed (kafka.server.BrokerServer)

[2025-11-03 16:17:14,405] INFO [BrokerServer id=2000] Waiting for all of the SocketServer Acceptors to be started (kafka.server.BrokerServer)

[2025-11-03 16:17:14,405] INFO [BrokerServer id=2000] Finished waiting for all of the SocketServer Acceptors to be started (kafka.server.BrokerServer)

[2025-11-03 16:17:14,406] INFO [BrokerServer id=2000] Transition from STARTING to STARTED (kafka.server.BrokerServer)

[2025-11-03 16:17:14,407] INFO Kafka version: 4.1.0 (org.apache.kafka.common.utils.AppInfoParser)

[2025-11-03 16:17:14,407] INFO Kafka commitId: 13f70256db3c994c (org.apache.kafka.common.utils.AppInfoParser)

[2025-11-03 16:17:14,407] INFO Kafka startTimeMs: 1762157834406 (org.apache.kafka.common.utils.AppInfoParser)

[2025-11-03 16:17:14,408] INFO [KafkaRaftServer nodeId=2000] Kafka Server started (kafka.server.KafkaRaftServer)
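Rather than reading the tail by eye, a script can wait for the "Kafka Server started" line shown above. A sketch; wait_for_started is a hypothetical helper, and it matches the exact message text from this 4.1.0 log, which may differ in other versions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait up to <timeout> seconds for a broker's startup line.
# Usage: wait_for_started <server.log> <node.id> [timeout-seconds]
wait_for_started() {
  local log=$1 id=$2 timeout=${3:-60} waited=0
  # -F: match the bracketed text literally rather than as a regex
  until grep -qF "[KafkaRaftServer nodeId=${id}] Kafka Server started" "$log" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

Usage: `bin/kafka-server-start.sh -daemon config/broker.properties && wait_for_started logs/server.log 2000 60 && echo "broker 2000 is up"`. A "started" line left over from an earlier run also matches, so the check is only meaningful on a fresh log.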

Once broker 2000 is running, repeat the same steps on the other two nodes to start brokers 2001 and 2002.

Second node (192.168.2.25):

Format the broker metadata directory:

bin/kafka-storage.sh format --cluster-id MCg3e_6pSYumFqhbyt6mKA --config config/broker.properties

Start broker 2001:

bin/kafka-server-start.sh -daemon config/broker.properties

Third node (192.168.2.26):

Format the broker metadata directory:

bin/kafka-storage.sh format --cluster-id MCg3e_6pSYumFqhbyt6mKA --config config/broker.properties

Start broker 2002:

bin/kafka-server-start.sh -daemon config/broker.properties

5.4 Check the cluster status

bin/kafka-cluster.sh list-endpoints --bootstrap-server 192.168.2.24:9092

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-cluster.sh list-endpoints --bootstrap-server 192.168.2.24:9092

ID HOST PORT RACK STATE ENDPOINT_TYPE

2000 192.168.2.24 9092 null unfenced broker

2001 192.168.2.25 9092 null unfenced broker

2002 192.168.2.26 9092 null unfenced broker

5.5 Expand the controller quorum

So far the cluster has 1 controller and 3 brokers. Next, expand the controller quorum to 3 controllers.
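Before expanding the quorum, it is worth confirming, as in the list-endpoints output above, that all three brokers are unfenced. A sketch that reads that table on stdin; the column order is assumed from the output shown, and all_brokers_unfenced is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical check over `kafka-cluster.sh list-endpoints` output (stdin).
# Assumed columns: ID HOST PORT RACK STATE ENDPOINT_TYPE
# Succeeds only if at least one broker row exists and every broker row is "unfenced".
all_brokers_unfenced() {
  awk '$6 == "broker" { n++; if ($5 != "unfenced") bad++ }
       END { exit !(n > 0 && bad == 0) }'
}
```

Usage: `bin/kafka-cluster.sh list-endpoints --bootstrap-server 192.168.2.24:9092 | all_brokers_unfenced && echo "all brokers unfenced"`.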

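5.5.1 Prepare the new controllers (run on each new node)

On each node being added (192.168.2.25 and 192.168.2.26), format the controller directory with the following command. Note the extra --no-initial-controllers flag: the node joins the existing cluster without being a voter yet.

bin/kafka-storage.sh format --cluster-id MCg3e_6pSYumFqhbyt6mKA --config config/controller.properties --no-initial-controllers

After formatting succeeds, start the controller:

bin/kafka-server-start.sh -daemon config/controller.properties

5.5.2 Check the synchronization status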

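Once started, the new controller begins replicating metadata from the active controller. Check the progress with the command below: a Lag of 0 means the controller has caught up, and at this stage its Status is Observer. In the first output, controller 1002 has only just started and still shows LogEndOffset 0 and Lag 8439.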
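Instead of re-running the command by hand until Lag reaches 0, the check can be scripted. A sketch, assuming the column order of the describe --replication output shown here; node_caught_up and wait_until_caught_up are hypothetical helpers, and the second one needs a running cluster:

```shell
#!/usr/bin/env bash
# Hypothetical helpers over `kafka-metadata-quorum.sh describe --replication` output.
# Assumed columns: NodeId DirectoryId LogEndOffset Lag LastFetchTimestamp LastCaughtUpTimestamp Status

# Succeed if node <id> appears in the output on stdin with Lag 0.
node_caught_up() {
  awk -v id="$1" '$1 == id { found = 1; lag = $4 }
       END { exit !(found && lag == 0) }'
}

# Poll every 5 seconds until node <id> has caught up (run from the Kafka directory).
wait_until_caught_up() {
  local id=$1 bootstrap=${2:-192.168.2.24:9093}
  until bin/kafka-metadata-quorum.sh --bootstrap-controller "$bootstrap" \
          describe --replication | node_caught_up "$id"; do
    sleep 5
  done
}
```

Usage: `wait_until_caught_up 1002 && echo "controller 1002 has caught up"`, then proceed to add-controller on that node.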

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-metadata-quorum.sh --bootstrap-controller 192.168.2.24:9093 describe --replication

NodeId DirectoryId LogEndOffset Lag LastFetchTimestamp LastCaughtUpTimestamp Status

1000 WQdEjHlJSMiyrdItFp5zLQ 8439 0 1762160113112 1762160113112 Leader

2001 LSWHR4qpGQdD0qaDRmPGjQ 8439 0 1762160112814 1762160112814 Observer

1002 rjimKsPznfm-I-O9sWschw 0 8439 1762159816017 -1 Observer

2000 wLuTIWTiCkk9DBgKqj1wGQ 8439 0 1762160112814 1762160112814 Observer

2002 qu2706Ym7QbnH-PUGAyYTg 8439 0 1762160112819 1762160112819 Observer

1001 QwOwk7fyIninpdmLCQouPA 8439 0 1762160112815 1762160112815 Observer

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-metadata-quorum.sh --bootstrap-controller 192.168.2.24:9093 describe --replication

NodeId DirectoryId LogEndOffset Lag LastFetchTimestamp LastCaughtUpTimestamp Status

1000 WQdEjHlJSMiyrdItFp5zLQ 8510 0 1762160148617 1762160148617 Leader

2001 LSWHR4qpGQdD0qaDRmPGjQ 8510 0 1762160148324 1762160148324 Observer

2000 wLuTIWTiCkk9DBgKqj1wGQ 8510 0 1762160148324 1762160148324 Observer

2002 qu2706Ym7QbnH-PUGAyYTg 8510 0 1762160148326 1762160148326 Observer

1001 QwOwk7fyIninpdmLCQouPA 8510 0 1762160148325 1762160148325 Observer

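5.5.3 Add the new controllers to the quorum

Once a new controller has caught up, formally add it to the controller quorum.

Run the following on each new controller node (192.168.2.25 and 192.168.2.26). The output below is from 192.168.2.25: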

bin/kafka-metadata-quorum.sh --command-config config/controller.properties --bootstrap-controller 192.168.2.24:9093 add-controller

root@ops-test-025:/apps/kafka_2.13-4.1.0# bin/kafka-metadata-quorum.sh --command-config config/controller.properties --bootstrap-controller 192.168.2.24:9093 add-controller

Added controller 1001 with directory id QwOwk7fyIninpdmLCQouPA and endpoints: CONTROLLER://192.168.2.25:9093

5.5.4 Check the controller status

bin/kafka-metadata-quorum.sh --bootstrap-controller 192.168.2.24:9093 describe --replication

After being added, each new controller's Status changes from Observer to Follower:

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-metadata-quorum.sh --bootstrap-controller 192.168.2.24:9093 describe --replication

NodeId DirectoryId LogEndOffset Lag LastFetchTimestamp LastCaughtUpTimestamp Status

1000 WQdEjHlJSMiyrdItFp5zLQ 12572 0 1762162177937 1762162177937 Leader

1001 QwOwk7fyIninpdmLCQouPA 12572 0 1762162177787 1762162177787 Follower

1002 rjimKsPznfm-I-O9sWschw 12572 0 1762162177789 1762162177789 Follower

2001 LSWHR4qpGQdD0qaDRmPGjQ 12572 0 1762162177787 1762162177787 Observer

2000 wLuTIWTiCkk9DBgKqj1wGQ 12572 0 1762162177787 1762162177787 Observer

2002 qu2706Ym7QbnH-PUGAyYTg 12572 0 1762162177788 1762162177788 Observer

The Kafka KRaft cluster is now complete: 1000 is the Leader, 1001 and 1002 are Followers, and the three brokers are Observers.
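With the same describe --replication output, the final voter set can be checked mechanically: voters are the rows whose Status is Leader or Follower, so this cluster should report 3. A sketch; count_voters is a hypothetical helper that assumes Status is the last column:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count voters (Leader + Follower rows) in
# `kafka-metadata-quorum.sh describe --replication` output read from stdin.
count_voters() {
  awk '$NF == "Leader" || $NF == "Follower" { n++ } END { print n + 0 }'
}
```

Usage: `bin/kafka-metadata-quorum.sh --bootstrap-controller 192.168.2.24:9093 describe --replication | count_voters`. For the final output above it prints 3; before add-controller, when 1001 and 1002 were still Observers, it would print 1.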

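6. Verify the cluster

6.1 Create a topic

bin/kafka-topics.sh --bootstrap-server 192.168.2.24:9092 --create --topic nierdayede --partitions 3 --replication-factor 1

Note that --replication-factor 1 keeps only one copy of each partition, so this test topic does not survive the loss of the broker hosting a partition; for a replicated topic on this 3-broker cluster, use --replication-factor 3.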

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-topics.sh --bootstrap-server 192.168.2.24:9092 --create --topic nierdayede --partitions 3 --replication-factor 1

Created topic nierdayede.

6.2 Produce messages

bin/kafka-console-producer.sh --bootstrap-server 192.168.2.24:9092 --topic nierdayede

6.3 Consume messages

bin/kafka-console-consumer.sh --bootstrap-server 192.168.2.24:9092 --topic nierdayede --from-beginning

Result:


root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-console-producer.sh --bootstrap-server 192.168.2.24:9092 --topic nierdayede

>haha

>^C

root@ops-test-024:/apps/kafka_2.13-4.1.0# bin/kafka-console-consumer.sh --bootstrap-server 192.168.2.24:9092 --topic nierdayede --from-beginning

haha

7. Web UI

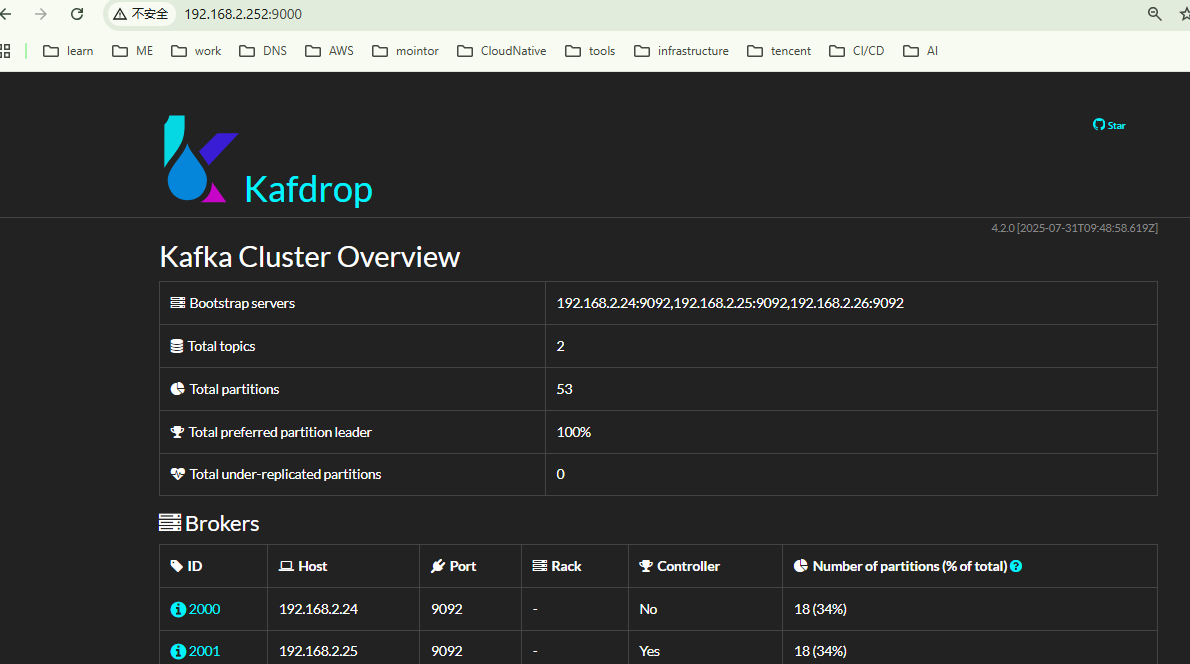

Open-source tool: Kafdrop

docker run -d -p 9000:9000 \
  -e KAFKA_BROKERCONNECT=192.168.2.24:9092,192.168.2.25:9092,192.168.2.26:9092 \
  obsidiandynamics/kafdrop

Then open http://<docker-host>:9000 in a browser.

8. Troubleshooting

Generating the cluster ID

bin/kafka-storage.sh random-uuid

0VMe9kUwROS-0fcQn-0yzg

This step is where I ran into a problem. The JDK in use at first was 17.0.2, and the command failed:

root@ops-test-024:/apps/kafka_2.13-4.1.0/bin# ./kafka-storage.sh random-uuid

java.lang.NullPointerException: Cannot invoke "jdk.internal.platform.CgroupInfo.getMountPoint()" because "anyController" is null

at java.base/jdk.internal.platform.cgroupv2.CgroupV2Subsystem.getInstance(CgroupV2Subsystem.java:81)

at java.base/jdk.internal.platform.CgroupSubsystemFactory.create(CgroupSubsystemFactory.java:113)

at java.base/jdk.internal.platform.CgroupMetrics.getInstance(CgroupMetrics.java:167)

at java.base/jdk.internal.platform.SystemMetrics.instance(SystemMetrics.java:29)

at java.base/jdk.internal.platform.Metrics.systemMetrics(Metrics.java:58)

at java.base/jdk.internal.platform.Container.metrics(Container.java:43)

at jdk.management/com.sun.management.internal.OperatingSystemImpl.<init>(OperatingSystemImpl.java:182)

at jdk.management/com.sun.management.internal.PlatformMBeanProviderImpl.getOperatingSystemMXBean(PlatformMBeanProviderImpl.java:280)

at jdk.management/com.sun.management.internal.PlatformMBeanProviderImpl$3.nameToMBeanMap(PlatformMBeanProviderImpl.java:199)

at java.management/java.lang.management.ManagementFactory.lambda$getPlatformMBeanServer$0(ManagementFactory.java:488)

at java.base/java.util.stream.ReferencePipeline$7$1.accept(ReferencePipeline.java:273)

at java.base/java.util.stream.ReferencePipeline$2$1.accept(ReferencePipeline.java:179)

at java.base/java.util.HashMap$ValueSpliterator.forEachRemaining(HashMap.java:1779)

at java.base/java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:509)

at java.base/java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:499)

at java.base/java.util.stream.ForEachOps$ForEachOp.evaluateSequential(ForEachOps.java:150)

at java.base/java.util.stream.ForEachOps$ForEachOp$OfRef.evaluateSequential(ForEachOps.java:173)

at java.base/java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

at java.base/java.util.stream.ReferencePipeline.forEach(ReferencePipeline.java:596)

at java.management/java.lang.management.ManagementFactory.getPlatformMBeanServer(ManagementFactory.java:489)

at jdk.management.agent/sun.management.jmxremote.ConnectorBootstrap.startLocalConnectorServer(ConnectorBootstrap.java:543)

at jdk.management.agent/jdk.internal.agent.Agent.startLocalManagementAgent(Agent.java:318)

at jdk.management.agent/jdk.internal.agent.Agent.startAgent(Agent.java:450)

at jdk.management.agent/jdk.internal.agent.Agent.startAgent(Agent.java:599)

Exception thrown by the agent : java.lang.NullPointerException: Cannot invoke "jdk.internal.platform.CgroupInfo.getMountPoint()" because "anyController" is null

root@ops-test-024:/apps/kafka_2.13-4.1.0/bin# java -version

openjdk version "17.0.2" 2022-01-18

OpenJDK Runtime Environment (build 17.0.2+8-86)

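OpenJDK 64-Bit Server VM (build 17.0.2+8-86, mixed mode, sharing)

Analysis:

This matches a known OpenJDK bug:

Bug ID: JDK-8287073, "NPE from CgroupV2Subsystem.getInstance()".

Description: on Linux, when the cgroup v2 "anyController" is null, the JDK throws an NPE in CgroupV2Subsystem.getInstance().

Affected versions: JDK 11.0.16, 17.0.3, 18.0.2, 19, among others (the list is not exhaustive; here the error occurred on 17.0.2).

Fix version: listed as fixed in build b25, among others.

Takeaway: the exception is a defect in how the JDK itself handles containers/cgroups, not a Kafka-specific problem.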
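To catch an affected JDK before running any Kafka script, a version guard can be added. A sketch; MIN_JDK=17.0.12 is simply the version verified in this walkthrough, not an official lower bound for the JDK-8287073 fix, and the helpers are hypothetical. It relies on GNU sort -V (coreutils, as on Ubuntu):

```shell
#!/usr/bin/env bash
# Hypothetical JDK version guard. MIN_JDK is the version tested here,
# not an official minimum for the JDK-8287073 fix.
MIN_JDK=17.0.12

# Extract the quoted version (e.g. 17.0.2) from the first line of `java -version` output on stdin.
java_version_from() {
  sed -n '1s/.*version "\([^"]*\)".*/\1/p'
}

# Succeed if version $1 >= version $2, using GNU sort's version ordering.
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

Usage (java -version writes to stderr, hence 2>&1): `v=$(java -version 2>&1 | java_version_from); version_at_least "$v" "$MIN_JDK" || echo "JDK $v is older than $MIN_JDK" >&2`.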

After switching to JDK 17.0.12, the command succeeded:

root@ops-test-024:/apps/kafka_2.13-4.1.0/bin# java -version

java version "17.0.12" 2024-07-16 LTS

Java(TM) SE Runtime Environment (build 17.0.12+8-LTS-286)

Java HotSpot(TM) 64-Bit Server VM (build 17.0.12+8-LTS-286, mixed mode, sharing)

root@ops-test-024:/apps/kafka_2.13-4.1.0/bin# ./kafka-storage.sh random-uuid

MCg3e_6pSYumFqhbyt6mKA

root@ops-test-024:/apps/kafka_2.13-4.1.0/bin#