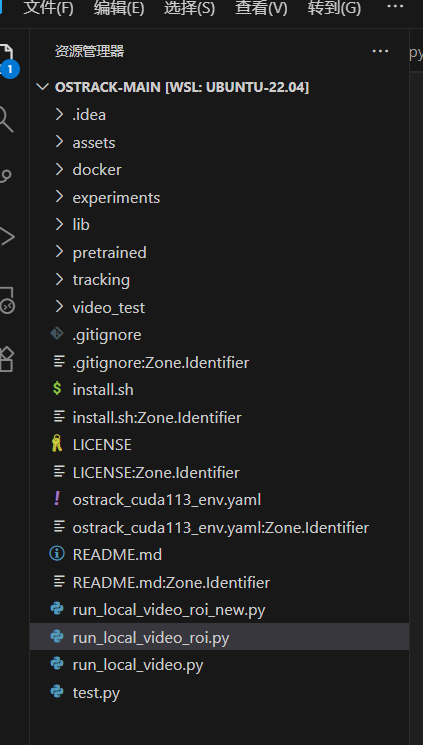

Project framework

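Limitation: once the target moves out of the frame, the tracker cannot automatically re-locate it at its original position.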
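One possible workaround for this limitation is sketched below. It is only an illustrative heuristic, not part of the script: if the predicted box stays pinned near a frame edge for several consecutive frames, treat the target as having left the view and hand back the originally selected ROI so the caller can re-initialize there. The names `near_border` and `ReinitGuard`, and the `margin` and `patience` defaults, are hypothetical, and whether the box really drifts to the border when the target leaves depends on the tracker's behavior.

```python
def near_border(bbox, frame_w, frame_h, margin=12):
    """True if the [x, y, w, h] box touches or nearly touches a frame edge."""
    x, y, w, h = bbox
    return (x <= margin or y <= margin
            or x + w >= frame_w - margin or y + h >= frame_h - margin)


class ReinitGuard:
    """Signals a reset after `patience` consecutive near-border frames.

    Hypothetical helper: the thresholds are guesses and need tuning per video.
    """

    def __init__(self, init_bbox, patience=10, margin=12):
        self.init_bbox = list(init_bbox)  # ROI chosen on the first frame
        self.patience = patience
        self.margin = margin
        self.streak = 0                   # consecutive near-border frames

    def update(self, bbox, frame_w, frame_h):
        """Return the original ROI when a reset is due, otherwise None."""
        if near_border(bbox, frame_w, frame_h, self.margin):
            self.streak += 1
        else:
            self.streak = 0
        if self.streak >= self.patience:
            self.streak = 0
            return self.init_bbox
        return None
```

In the tracking loop this could be used as `reset = guard.update(bbox, frame.shape[1], frame.shape[0])`, followed by `demo.initialize(frame, reset)` when `reset` is not `None`. Note that this re-initializes on whatever is currently at the original position, so it only helps if the target actually returns there.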

Script for running locally:

```python
# run_local_video_roi.py
import os
import sys
import time

# Make the repository root importable so that `lib` resolves.
sys.path.insert(0, os.path.dirname(os.path.abspath(__file__)))

import cv2
import torch

from lib.test.parameter.ostrack import parameters
from lib.test.tracker.ostrack import OSTrack


class OSTrackDemo:
    """Thin wrapper around the OSTrack tracker for a single-target video demo."""

    def __init__(self, yaml_name="vitb_256_mae_ce_32x4_ep300",
                 model_path="pretrained/OSTrack-256.pth.tar",
                 use_gpu=True):
        self.device = "cuda" if (use_gpu and torch.cuda.is_available()) else "cpu"
        print(f"[INFO] Device: {self.device}")

        params = parameters(yaml_name)
        # Fall back to False when the config does not define these flags.
        params.debug = getattr(params, 'debug', False)
        params.save_all_boxes = getattr(params, 'save_all_boxes', False)
        params.checkpoint = model_path
        print(f"[INFO] Using model: {params.checkpoint}")

        self.tracker = OSTrack(params, dataset_name="demo")
        # NOTE: the tracker code may move tensors to CUDA internally,
        # so a CPU-only run may need changes there as well.
        self.tracker.network.to(self.device)
        self.initialized = False

    def initialize(self, image, bbox):
        """
        bbox: [x, y, w, h] in original image coordinates
        """
        self.tracker.initialize(image, {'init_bbox': list(bbox)})
        self.initialized = True

    def track(self, image):
        """Return (bbox, elapsed_ms); bbox is None before initialization."""
        if not self.initialized:
            return None, 0
        start_time = time.perf_counter()
        out = self.tracker.track(image)
        elapsed_ms = (time.perf_counter() - start_time) * 1000
        return out['target_bbox'], elapsed_ms


def main():
    # ====== Default configuration ======
    VIDEO_PATH = "video_test/test11.mp4"
    MODEL_PATH = "pretrained/OSTrack-256.pth.tar"
    YAML_NAME = "vitb_256_mae_ce_32x4_ep300"
    USE_GPU = True

    # Check that the input files exist
    assert os.path.exists(VIDEO_PATH), f"Video not found: {VIDEO_PATH}"
    assert os.path.exists(MODEL_PATH), f"Model not found: {MODEL_PATH}"

    demo = OSTrackDemo(yaml_name=YAML_NAME, model_path=MODEL_PATH, use_gpu=USE_GPU)

    cap = cv2.VideoCapture(VIDEO_PATH)
    ret, frame = cap.read()
    if not ret:
        print("[ERROR] Cannot read video")
        cap.release()
        return

    # === Select the target with cv2.selectROI ===
    print("👉 Please draw a bounding box around the target object.")
    print(" - Press ENTER or SPACE to confirm")
    print(" - Press C to cancel")
    print(" - Press ESC to exit")
    cv2.namedWindow("Select ROI", cv2.WINDOW_NORMAL)
    bbox = cv2.selectROI("Select ROI", frame, fromCenter=False)
    cv2.destroyAllWindows()

    # A zero width or height means no box was drawn.
    if bbox[2] == 0 or bbox[3] == 0:
        print("[INFO] No valid ROI selected. Exiting.")
        cap.release()
        return

    print(f"[INFO] Selected ROI: x={bbox[0]}, y={bbox[1]}, w={bbox[2]}, h={bbox[3]}")
    demo.initialize(frame, bbox)

    # Main tracking loop
    frame_count = 0
    while True:
        ret, frame = cap.read()
        if not ret:
            break

        bbox, elapsed_ms = demo.track(frame)

        if bbox is not None:
            x, y, w, h = map(int, bbox)
            cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
            cx, cy = x + w // 2, y + h // 2
            cv2.circle(frame, (cx, cy), 4, (0, 255, 0), -1)
            cv2.putText(frame, f"Tracking ({cx}, {cy})", (10, 30),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 255, 0), 2)
        else:
            # Only reached if the tracker was never initialized;
            # after initialization track() always returns a box.
            cv2.putText(frame, "LOST", (10, 30),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)

        cv2.putText(frame, f"Frame: {frame_count}", (10, 60),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 2)
        cv2.putText(frame, f"Time: {elapsed_ms:.1f} ms", (10, 90),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 0), 2)

        cv2.imshow("OSTrack Demo", frame)
        frame_count += 1

        # Press q to quit
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()
    print("[INFO] Done.")


if __name__ == "__main__":
    main()
```
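The script overlays the raw per-frame `elapsed_ms`, which tends to jump around from frame to frame. A minimal sketch of a smoothed FPS readout follows, assuming a simple moving average over the last few frames is acceptable. `FpsMeter` and its `window` parameter are hypothetical names introduced here, not part of the script or of OSTrack.

```python
from collections import deque


class FpsMeter:
    """Moving-average FPS computed from per-frame latencies in milliseconds."""

    def __init__(self, window=30):
        # Keep only the most recent `window` samples.
        self.samples = deque(maxlen=window)

    def update(self, elapsed_ms):
        """Record one frame time and return the smoothed frames per second."""
        self.samples.append(elapsed_ms)
        mean_ms = sum(self.samples) / len(self.samples)
        return 1000.0 / mean_ms if mean_ms > 0 else 0.0
```

Inside the tracking loop, `fps = meter.update(elapsed_ms)` could feed an extra `cv2.putText` line next to the existing `Time` overlay. The figure covers only the time spent in `demo.track`, not video decoding or drawing.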