1. Overview

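When building applications on large language models (LLMs), calling the model is not enough. You also have to test whether the generated output meets expectations, for example relevancy, accuracy, absence of hallucinations, and fit with business requirements.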

Spring AI provides an "Evaluation Testing" mechanism. It lets developers add automated checks of LLM output quality during integration and testing.

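Spring AI provides the Evaluator interface together with two core implementations: RelevancyEvaluator (relevancy evaluation) and FactCheckingEvaluator (fact-checking evaluation). Both can be used to verify conversational retrieval-augmented generation (RAG) pipelines automatically, as well as plain model-generation scenarios.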


2. Interfaces and Class Diagram

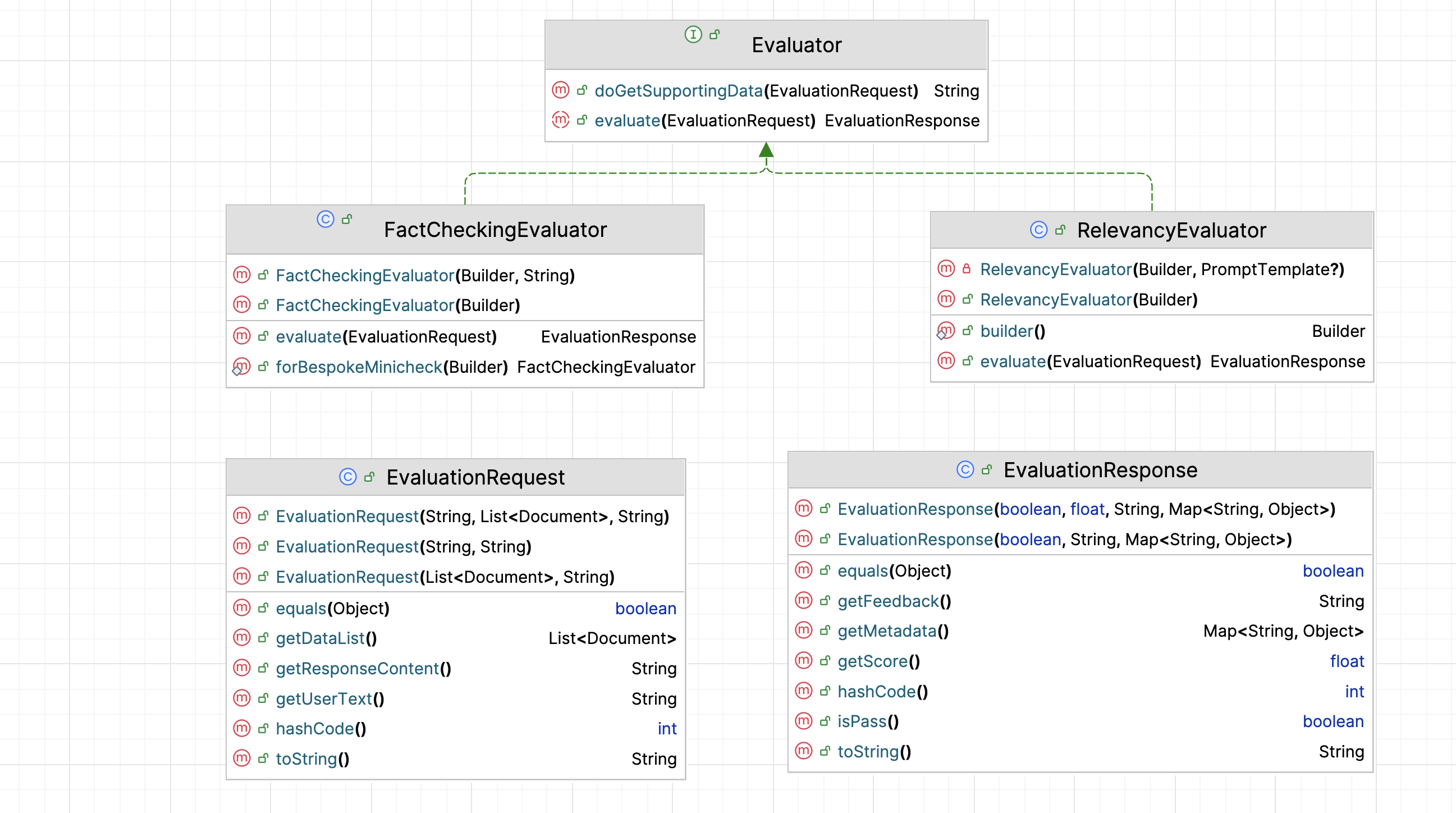

The key interfaces and classes are as follows:

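- EvaluationRequest: encapsulates the user input (userText), the retrieved context (dataList), and the model-generated response (responseContent).
- EvaluationResponse: represents the evaluation result (pass/fail, a score, and optional feedback).
- Evaluator: a functional interface (@FunctionalInterface). Spring AI provides two commonly used implementations:
  - RelevancyEvaluator: relevancy evaluation
  - FactCheckingEvaluator: fact-checking evaluation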
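The following plain-Java sketch makes the shape of these types concrete. The types in it (EvaluationModelSketch, Request, Response, Evaluator, NON_BLANK) are simplified, hypothetical stand-ins that mirror the fields listed above. They are not Spring AI's classes, and the real request carries Document objects rather than strings. The sketch shows one point: because Evaluator has a single abstract method, an evaluation rule can be supplied as a lambda.

```java
import java.util.List;
import java.util.Map;

// Hypothetical, simplified stand-ins mirroring Spring AI's evaluation types.
// They are NOT the org.springframework.ai classes.
class EvaluationModelSketch {

    // Mirrors EvaluationRequest: user input, retrieved context, model answer.
    record Request(String userText, List<String> dataList, String responseContent) {}

    // Mirrors EvaluationResponse: pass flag, score, feedback, metadata.
    record Response(boolean pass, float score, String feedback, Map<String, Object> metadata) {}

    // Mirrors Evaluator: one abstract method, so a lambda can implement it.
    @FunctionalInterface
    interface Evaluator {
        Response evaluate(Request request);
    }

    // A toy rule written as a lambda: passes whenever the answer is non-blank.
    static final Evaluator NON_BLANK = request -> {
        String answer = request.responseContent();
        boolean ok = answer != null && !answer.isBlank();
        return new Response(ok, ok ? 1f : 0f, "", Map.of());
    };

    public static void main(String[] args) {
        Response r = NON_BLANK.evaluate(
                new Request("Which is larger, 0.18 or 0.2?", List.of(), "0.2 is larger."));
        System.out.println(r.pass() + " " + r.score()); // true 1.0
    }
}
```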

3. Implementation and Usage

3.1 RelevancyEvaluator

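Relevancy: evaluates whether the generated answer actually addresses the question the user asked.

- An answer can be factually correct and still score lower on relevancy if it does not directly answer the user's question.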
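The source code that follows shows the decision rule RelevancyEvaluator applies. That rule can be sketched in plain Java under a few assumptions. The template is abbreviated. Simple string replacement stands in for PromptTemplate.render. The judge model's reply is passed in by hand instead of coming from a model. RelevancyRuleSketch, render and score are hypothetical names.

```java
import java.util.Map;

// Hypothetical sketch of RelevancyEvaluator's two steps: fill the prompt
// template, then map the judge's reply to a score. No model is called.
class RelevancyRuleSketch {

    // Abbreviated version of the default prompt's structure.
    static final String TEMPLATE = """
            Answer YES, if the response for the query is in line with context information otherwise NO.
            Query: {query}
            Response: {response}
            Context: {context}
            Answer:""";

    // Naive placeholder substitution standing in for PromptTemplate.render(...).
    static String render(String template, Map<String, String> vars) {
        String out = template;
        for (Map.Entry<String, String> e : vars.entrySet()) {
            out = out.replace("{" + e.getKey() + "}", e.getValue());
        }
        return out;
    }

    // Same rule as the source: only an exact, case-insensitive "yes" scores 1.
    static float score(String judgeReply) {
        return "yes".equalsIgnoreCase(judgeReply) ? 1f : 0f;
    }

    public static void main(String[] args) {
        String prompt = render(TEMPLATE, Map.of(
                "query", "Which is larger, 0.18 or 0.2?",
                "response", "0.2 is larger.",
                "context", ""));
        System.out.println(prompt);
        System.out.println(score("YES"));  // 1.0
        System.out.println(score("Yes.")); // 0.0 (trailing period breaks the exact match)
    }
}
```

The check is an exact match, so a judge reply such as "Yes." is scored as a failure. Whether a given judge model replies with a bare YES depends on the model.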

Source Code Analysis

```java
// Excerpt of Spring AI's RelevancyEvaluator (package statement and constructors omitted)
import java.util.Collections;
import java.util.Map;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.prompt.PromptTemplate;
import org.springframework.ai.evaluation.EvaluationRequest;
import org.springframework.ai.evaluation.EvaluationResponse;
import org.springframework.ai.evaluation.Evaluator;

public class RelevancyEvaluator implements Evaluator {

    // Default prompt: asks the judge model whether the response to the query
    // is consistent with the provided context information.
    private static final PromptTemplate DEFAULT_PROMPT_TEMPLATE = new PromptTemplate("""
            Your task is to evaluate if the response for the query
            is in line with the context information provided.

            You have two options to answer. Either YES or NO.

            Answer YES, if the response for the query
            is in line with context information otherwise NO.

            Query:
            {query}

            Response:
            {response}

            Context:
            {context}

            Answer:
            """);

    private final ChatClient.Builder chatClientBuilder; // builds the "judge" client

    private final PromptTemplate promptTemplate; // can be used to override DEFAULT_PROMPT_TEMPLATE

    @Override
    public EvaluationResponse evaluate(EvaluationRequest evaluationRequest) {
        var response = evaluationRequest.getResponseContent(); // 1. the answer to check
        var context = doGetSupportingData(evaluationRequest);  // 2. the context

        // 3. Build the evaluation prompt
        var userMessage = this.promptTemplate
            .render(Map.of("query", evaluationRequest.getUserText(), "response", response, "context", context));

        // 4. The "judge" client runs the prompt; the test passes only if it answers YES
        String evaluationResponse = this.chatClientBuilder.build().prompt().user(userMessage).call().content();

        boolean passing = false;
        float score = 0;
        if ("yes".equalsIgnoreCase(evaluationResponse)) {
            passing = true;
            score = 1;
        }

        return new EvaluationResponse(passing, score, "", Collections.emptyMap());
    }
}
```

Hands-on Example 1
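The test below asks about annual leave under two made-up rules that are supplied as context documents. The answers those rules imply can be worked out directly. The hypothetical helper below (LeaveRulesSketch, annualLeaveDays) encodes them, assuming service is counted in whole months.

```java
// Hypothetical helper encoding the two leave rules from the test's documents.
class LeaveRulesSketch {

    enum Badge { BLUE, RED }

    // BLUE: 1 day per month worked (a partial month would also earn 1 day;
    //       whole months are assumed here).
    // RED:  5 days per FULL year worked; a remainder under 12 months earns nothing.
    static int annualLeaveDays(Badge badge, int monthsWorked) {
        return switch (badge) {
            case BLUE -> monthsWorked;
            case RED -> (monthsWorked / 12) * 5;
        };
    }

    public static void main(String[] args) {
        System.out.println(annualLeaveDays(Badge.BLUE, 15)); // 15 (blue badge, 15 months)
        System.out.println(annualLeaveDays(Badge.RED, 15));  // 5  (red badge, 15 months)
    }
}
```

Under these rules, a grounded answer is 15 days for the blue-badge question. For the commented-out red-badge question, it is 5 days.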

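```java
import java.util.List;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.evaluation.RelevancyEvaluator; // Spring AI 1.0.x location
import org.springframework.ai.chat.model.ChatResponse;
import org.springframework.ai.document.Document;
import org.springframework.ai.evaluation.EvaluationRequest;
import org.springframework.ai.evaluation.EvaluationResponse;
import org.testng.annotations.DataProvider;
import org.testng.annotations.Test;

// Methods of a TestNG test class; ollamaChatModel (model under test) and
// openAiChatModel (judge) are ChatModel fields injected elsewhere.
@DataProvider
public Object[][] questionsDataProvider() {
    return new Object[][]{
        // {"'布鲁'是红牌员工,工作了15个月,享有多少天年假?"}, // red badge, 15 months
        {"'瑞德'是蓝牌员工,工作了15个月,享有多少天年假?"}      // blue badge, 15 months
    };
}

@Test(dataProvider = "questionsDataProvider")
void evaluateRelevancy(String question) {
    // Rules given to the judge as context (not to the model under test):
    // blue badge = 1 day per month worked; red badge = 5 days per full year
    List<Document> docs = List.of(
        new Document("蓝牌员工: 每工作一个月享有1天的年假. 不满一个月按照,仍然享有1天年假."),
        new Document("红牌员工: 工作每满一年享有5天的年假. 不足一年的部分,则不计算年假.")
    );

    // 1. Ask the model under test (the question only)
    ChatResponse chatResponse = ChatClient.builder(ollamaChatModel).build().prompt(question).call().chatResponse();
    String answer = chatResponse.getResult().getOutput().getText();
    System.out.printf("---------------start question:`%s`\n", question);
    System.out.printf("LLM Response: %s%n", answer);

    // 2. Let the judge check the answer against docs
    RelevancyEvaluator evaluator = new RelevancyEvaluator(ChatClient.builder(openAiChatModel));
    EvaluationResponse evaluationResponse = evaluator.evaluate(new EvaluationRequest(question, docs, answer));
    System.out.println("Evaluation Response: " + evaluationResponse);
    System.out.print("\n---------------");
}
```

Result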

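```
---------------start question:`'瑞德'是蓝牌员工,工作了15个月,享有多少天年假?`

LLM Response: 依据《中华人民共和国劳动法》规定,员工在任职满一年且工作满12个月的,可 enjoy 15天的年假。但是,如果员工在任职满2年以上,则可 enjoy 20天的年假。

Evaluation Response: EvaluationResponse{pass=false, score=0.0, feedback='', metadata={}}
```

The question asks how many days of annual leave 瑞德, a blue-badge employee with 15 months of service, is entitled to. The model instead cites the Labor Law of the People's Republic of China. It claims 15 days after a full year and 20 days after more than two years. The docs are passed only to the evaluator and never to the model under test, so the model answers from its general knowledge. The judge finds this answer inconsistent with the supplied rules and returns pass=false.

Hands-on Example 2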
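The next test asks which of 0.18 and 0.2 is larger. Unlike the leave question, this has one deterministic answer. It can therefore also be checked with an ordinary assertion, with no judge model at all. Below is a minimal sketch using BigDecimal; DecimalCompareSketch and larger are illustrative names.

```java
import java.math.BigDecimal;

// Deterministic ground truth for a "which decimal is larger" question.
class DecimalCompareSketch {

    // Returns whichever decimal string has the larger numeric value
    // (the first one if they are numerically equal).
    static String larger(String a, String b) {
        return new BigDecimal(a).compareTo(new BigDecimal(b)) >= 0 ? a : b;
    }

    public static void main(String[] args) {
        System.out.println(larger("0.18", "0.2")); // 0.2
    }
}
```

BigDecimal compares by numeric value, so the extra digit in "0.18" cannot mislead it. A digit-by-digit reading that treats "18" as larger than "2" would get this wrong.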

```java
@Test
void evaluateRelevancy2() {
    String question = "0.18 和 0.2 哪个更大?"; // "Which is larger, 0.18 or 0.2?"

    ChatResponse chatResponse = ChatClient.builder(ollamaChatModel).build().prompt(question).call().chatResponse();
    String answer = chatResponse.getResult().getOutput().getText();
    System.out.printf("---------------start question:`%s`\n", question);
    System.out.printf("LLM Response: %s%n", answer);

    RelevancyEvaluator evaluator = new RelevancyEvaluator(ChatClient.builder(openAiChatModel));
    // No context documents: a typed empty list instead of the raw Collections.EMPTY_LIST
    EvaluationResponse evaluationResponse = evaluator.evaluate(new EvaluationRequest(question, List.of(), answer));
    System.out.println("Evaluation Response: " + evaluationResponse);
    System.out.print("\n---------------");
}
```

Result

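```
---------------start question:`0.18 和 0.2 哪个更大?`

LLM Response: 0.18 小于 0.2。

Evaluation Response: EvaluationResponse{pass=true, score=1.0, feedback='', metadata={}}
```

The model answered "0.18 is less than 0.2", and the judge accepted it (pass=true, score=1.0). The dataList is empty, so the {context} slot of the prompt is empty. The verdict here therefore rests on the query and the response alone.

3.2 FactCheckingEvaluator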

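Fact checking (FactChecking): the evaluator does not judge facts about the real world. It checks whether the LLM's claim is supported by the provided documents (the context).

- In a RAG system this is one of the most important metrics for **preventing "hallucination"**.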
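How FactCheckingEvaluator assembles its prompt can be sketched in plain Java. The sketch makes several assumptions. A simplified template stands in for the real ones. The documents are joined with line separators, which is what Evaluator.doGetSupportingData appears to do. The judge reply is supplied by hand. FactCheckSketch and its methods are hypothetical. Notice what is absent: the user question plays no part, only the documents and the claim.

```java
import java.util.List;

// Hypothetical sketch of how the fact-checking prompt is filled in:
// supporting documents -> {document}, answer under test -> {claim}.
class FactCheckSketch {

    // Simplified template (assumption; the real prompts are in the source code that follows).
    static final String TEMPLATE = "Document:\n{document}\nClaim:\n{claim}";

    // Joins document texts with line separators (assumed to mirror doGetSupportingData).
    static String supportingData(List<String> docs) {
        return String.join(System.lineSeparator(), docs);
    }

    static String buildPrompt(List<String> docs, String claim) {
        return TEMPLATE.replace("{document}", supportingData(docs)).replace("{claim}", claim);
    }

    // Only pass/fail is derived from the reply; no score is computed.
    static boolean passes(String judgeReply) {
        return "yes".equalsIgnoreCase(judgeReply);
    }

    public static void main(String[] args) {
        System.out.println(buildPrompt(List.of("Pairs is the capital of Germany."),
                "Germany's capital is Pairs."));
        System.out.println(passes("yes")); // true
    }
}
```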

Source Code Analysis

```java
// Excerpt of Spring AI's FactCheckingEvaluator (package statement omitted)
import java.util.Collections;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.evaluation.EvaluationRequest;
import org.springframework.ai.evaluation.EvaluationResponse;
import org.springframework.ai.evaluation.Evaluator;

public class FactCheckingEvaluator implements Evaluator {

    // Default prompt: is the following claim supported by the provided document?
    private static final String DEFAULT_EVALUATION_PROMPT_TEXT = """
            Evaluate whether or not the following claim is supported by the provided document.
            Respond with "yes" if the claim is supported, or "no" if it is not.
            Document: \\n {document}\\n
            Claim: \\n {claim}
            """;

    // Default prompt for the Bespoke MiniCheck model (document and claim only)
    private static final String BESPOKE_EVALUATION_PROMPT_TEXT = """
            Document: \\n {document}\\n
            Claim: \\n {claim}
            """;

    private final ChatClient.Builder chatClientBuilder;

    private final String evaluationPrompt;

    public FactCheckingEvaluator(ChatClient.Builder chatClientBuilder, String evaluationPrompt) {
        this.chatClientBuilder = chatClientBuilder;
        this.evaluationPrompt = evaluationPrompt;
    }

    public static FactCheckingEvaluator forBespokeMinicheck(ChatClient.Builder chatClientBuilder) {
        return new FactCheckingEvaluator(chatClientBuilder, BESPOKE_EVALUATION_PROMPT_TEXT);
    }

    @Override
    public EvaluationResponse evaluate(EvaluationRequest evaluationRequest) {
        var response = evaluationRequest.getResponseContent(); // 1. the response to verify, used as the claim
        var context = doGetSupportingData(evaluationRequest);  // 2. the context, used as the document

        // 3. Ask the judge whether the response is supported by the context
        String evaluationResponse = this.chatClientBuilder.build()
            .prompt()
            .user(userSpec -> userSpec.text(this.evaluationPrompt).param("document", context).param("claim", response))
            .call()
            .content();

        boolean passing = "yes".equalsIgnoreCase(evaluationResponse);
        // This constructor takes no score, so the reported score stays 0.0
        return new EvaluationResponse(passing, "", Collections.emptyMap());
    }
}
```

Hands-on Example

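```java
import java.util.List;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.evaluation.FactCheckingEvaluator; // Spring AI 1.0.x location
import org.springframework.ai.document.Document;
import org.springframework.ai.evaluation.EvaluationRequest;
import org.springframework.ai.evaluation.EvaluationResponse;
import org.testng.annotations.Test;

// bespokeMinicheckChatModel: a ChatModel backed by bespoke-minicheck, injected elsewhere
@Test
void factCheckingEvaluator() {
    // The document deliberately states a false "fact"
    List<Document> docs = List.of(
        new Document("Pairs is the capital of Germany.")
    );
    String claim = "Germany's capital is Pairs.";

    var evaluator = FactCheckingEvaluator.forBespokeMinicheck(ChatClient.builder(bespokeMinicheckChatModel));
    // Documents plus the claim to check; no user question is needed
    EvaluationRequest evaluationRequest = new EvaluationRequest(docs, claim);
    EvaluationResponse evaluationResponse = evaluator.evaluate(evaluationRequest);
    System.out.println("Evaluation Response: " + evaluationResponse);
    System.out.print("\n---------------");
}
```

bespoke-minicheck is a fact-checking model developed by Bespoke Labs and presented as state of the art. It is built specifically to tackle the hallucination problem of large language models (LLMs).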

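Result: the evaluator does not check objective truth. It will not search Google for the capital of Germany; it only considers the inputs it is given. The document itself states the claim, so the claim counts as supported:

```
Evaluation Response: EvaluationResponse{pass=true, score=0.0, feedback='', metadata={}}
```

The score is 0.0 because FactCheckingEvaluator reports only pass/fail and does not set a score.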