Background

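After a proxy crashed and restarted, the business side kept seeing a long tail of failed commands. The symptom was strange: every so often a request failed with new RedisException("Currently not connected. Commands are rejected."). This article walks through the connection-close paths in the Netty and Lettuce source code and traces the problem to its root cause.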

Overall flow

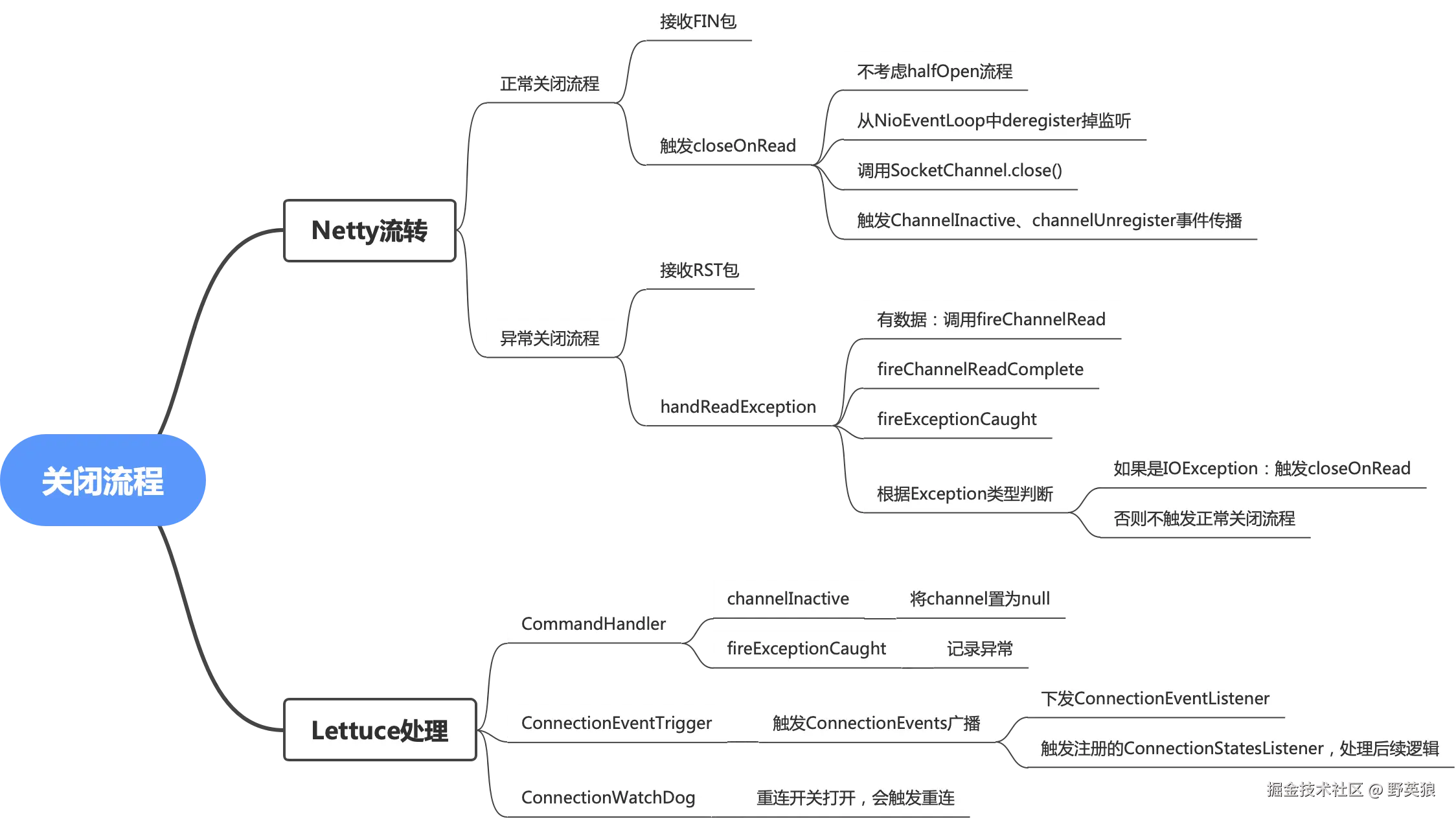

The overall mind map comes first, followed by an analysis of how Netty and Lettuce close a connection.

Mind map

Source code walkthrough

Note: this analysis is based on Netty 4.1.38 and Lettuce 5.2.0.

Note: the NIO transport is analyzed rather than Epoll because our in-house modified JDK 17 coroutine model only supports NIO.

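1. IO entry point: NioByteUnsafe#read

From the client's point of view:

- Obtain the working objects: ChannelConfig, ChannelPipeline, ByteBufAllocator, and RecvByteBufAllocator.Handle.

- Read data from the socket into a ByteBuf. (The default AdaptiveRecvByteBufAllocator, which extends DefaultMaxMessagesRecvByteBufAllocator, grows and shrinks the receive buffer adaptively; that is a topic for later.)

- Call pipeline.fireChannelRead() to propagate the data to the ChannelInboundHandlers.

- If there is a lot of data, the loop runs at most 16 times per read event (the default maxMessagesPerRead). Whatever remains is left for the next read event. This keeps one large payload from starving the other channels on the same event loop and causing a cascading slowdown.

- When the loop ends, call fireChannelReadComplete().

- If the socket read returns -1, the peer has closed its side of the connection (it sent a FIN), so closeOnRead is called.

- When is a Throwable thrown? 1. An exception from business code (a handler); 2. a failure on the server side, such as the peer sending an RST.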
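The bounded read loop described in the list above can be modeled with the standard library alone. This is a simplified sketch, not Netty code: `ReadLoopSketch`, `Result`, and `onReadReady` are hypothetical names, a plain `InputStream` stands in for the socket, and Netty's `continueReading()` applies more conditions than the single read-count cap shown here.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;

/** Simplified stand-in for the per-event read loop; all names are hypothetical. */
final class ReadLoopSketch {

    /** Outcome of handling one read-ready event. */
    static final class Result {
        final int reads;         // successful reads performed in this pass
        final int bytes;         // total bytes handed downstream
        final boolean closeSeen; // true if the source reported EOF (-1)

        Result(int reads, int bytes, boolean closeSeen) {
            this.reads = reads;
            this.bytes = bytes;
            this.closeSeen = closeSeen;
        }
    }

    /** Reads at most maxReadsPerEvent buffers, then yields until the next event. */
    static Result onReadReady(InputStream in, int bufSize, int maxReadsPerEvent) {
        byte[] buf = new byte[bufSize];
        int reads = 0;
        int bytes = 0;
        boolean close = false;
        try {
            do {
                int n = in.read(buf);
                if (n <= 0) {
                    close = n < 0; // -1 means the peer finished sending (EOF)
                    break;
                }
                reads++;
                bytes += n;        // here Netty would call fireChannelRead
            } while (reads < maxReadsPerEvent);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new Result(reads, bytes, close);
    }
}
```

With a 100-byte source, a 4-byte buffer, and a cap of 16, the first pass stops after 64 bytes without seeing EOF, and the second pass drains the remaining 36 bytes and then observes -1.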

With the overall flow clear, the focus is on the normal close path (FIN) and the abnormal close path (RST).

java

public final void read() {
    final ChannelConfig config = config();
    if (shouldBreakReadReady(config)) {
        clearReadPending();
        return;
    }
    final ChannelPipeline pipeline = pipeline();
    final ByteBufAllocator allocator = config.getAllocator();
    final RecvByteBufAllocator.Handle allocHandle = recvBufAllocHandle();
    allocHandle.reset(config);

    ByteBuf byteBuf = null;
    boolean close = false;
    try {
        do {
            byteBuf = allocHandle.allocate(allocator);
            allocHandle.lastBytesRead(doReadBytes(byteBuf));
            if (allocHandle.lastBytesRead() <= 0) {
                // nothing was read. release the buffer.
                byteBuf.release();
                byteBuf = null;
                close = allocHandle.lastBytesRead() < 0;
                if (close) {
                    // There is nothing left to read as we received an EOF.
                    readPending = false;
                }
                break;
            }

            allocHandle.incMessagesRead(1);
            readPending = false;
            pipeline.fireChannelRead(byteBuf);
            byteBuf = null;
        } while (allocHandle.continueReading());

        allocHandle.readComplete();
        pipeline.fireChannelReadComplete();

        // Key point: normal close (EOF seen)
        if (close) {
            closeOnRead(pipeline);
        }
    } catch (Throwable t) {
        // Key point: abnormal close
        handleReadException(pipeline, byteBuf, t, close, allocHandle);
    } finally {
        // Check if there is a readPending which was not processed yet.
        // This could be for two reasons:
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelRead(...) method
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelReadComplete(...) method
        //
        // See https://github.com/netty/netty/issues/2254
        if (!readPending && !config.isAutoRead()) {
            removeReadOp();
        }
    }
}

2. Abnormal close flow: NioByteUnsafe#handleReadException

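The abnormal close path comes first because it contains the normal close path.

- If the ByteBuf holds readable data, propagate what has already been received (channelRead).

- Then fire the channelReadComplete event.

- Then fire the exceptionCaught event.

- If the cause is an IOException (or EOF was already seen), the socket has to be closed, which goes through the normal close path.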

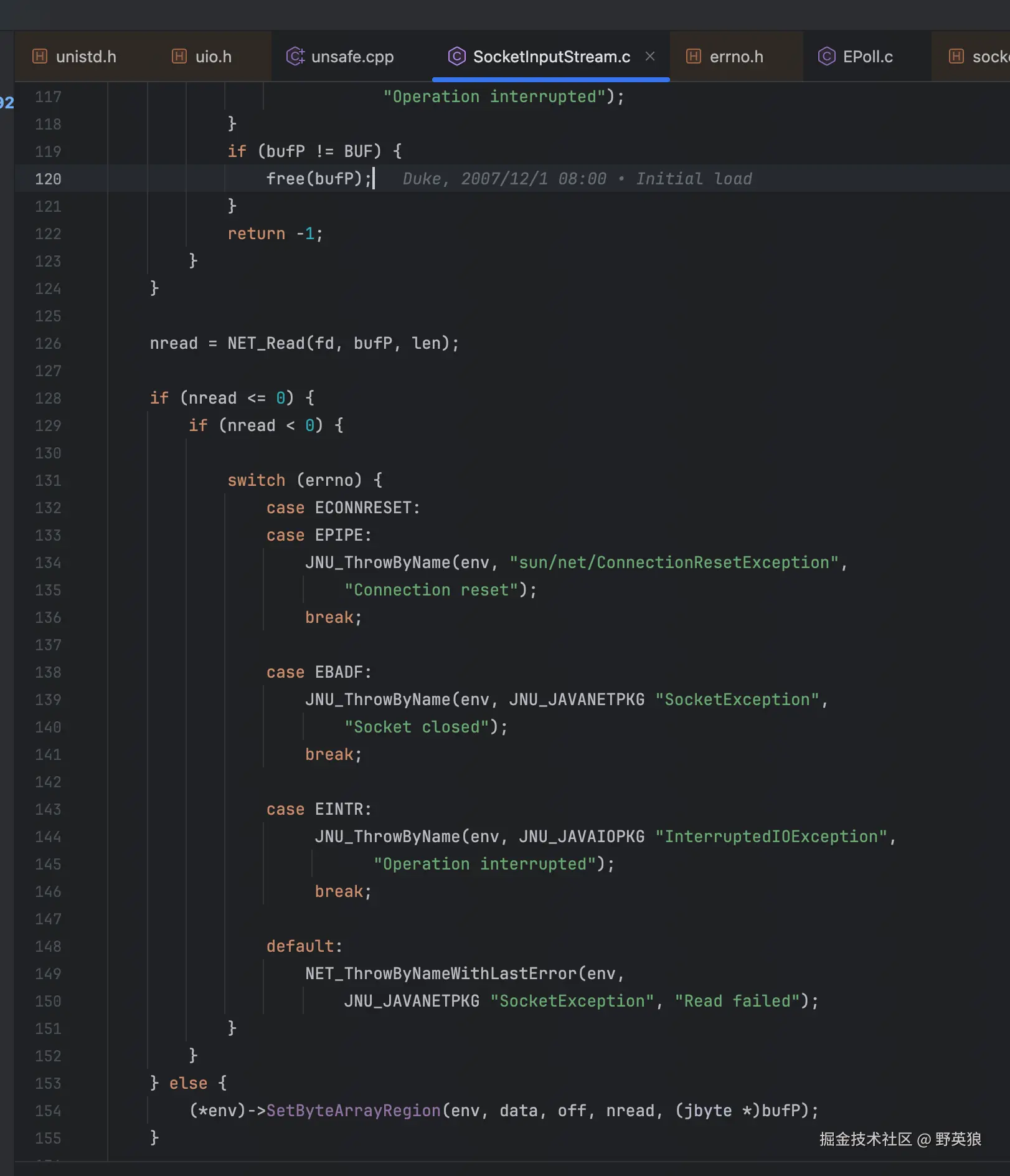

P.S. In the HotSpot source, a failed IO read throws ConnectionResetException, SocketException, or InterruptedException. ConnectionResetException and SocketException are IOExceptions, while InterruptedException is thrown because the thread's interrupt flag was set.
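The ordering of events and the close decision can be checked with a small model. This is only a sketch under assumptions: `ReadErrorSketch` and its method are hypothetical, and strings stand in for pipeline events. The one rule it is meant to illustrate is that the close path runs when EOF was seen or the cause is an `IOException`, and not for other exceptions.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

/** Models which events follow a failed read; names are hypothetical. */
final class ReadErrorSketch {

    /** Returns the events in the order they would be fired. */
    static List<String> handleReadException(byte[] partial, Throwable cause, boolean eofSeen) {
        List<String> events = new ArrayList<>();
        if (partial != null && partial.length > 0) {
            events.add("channelRead");      // deliver bytes received before the failure
        }
        events.add("channelReadComplete");
        events.add("exceptionCaught");
        if (eofSeen || cause instanceof IOException) {
            events.add("closeOnRead");      // socket-level failure: enter the normal close path
        }
        return events;
    }
}
```

A `SocketException` (a subclass of `IOException`) therefore ends with `closeOnRead`, while a `RuntimeException` thrown by a handler leaves the connection open.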

java

private void handleReadException(ChannelPipeline pipeline, ByteBuf byteBuf, Throwable cause, boolean close,
        RecvByteBufAllocator.Handle allocHandle) {
    if (byteBuf != null) {
        if (byteBuf.isReadable()) {
            readPending = false;
            pipeline.fireChannelRead(byteBuf);
        } else {
            byteBuf.release();
        }
    }
    allocHandle.readComplete();
    pipeline.fireChannelReadComplete();
    pipeline.fireExceptionCaught(cause);

    // Key point: EOF or any IOException leads into the normal close path
    if (close || cause instanceof IOException) {
        closeOnRead(pipeline);
    }
}

3. Normal close flow: NioByteUnsafe#closeOnRead

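- If input has not been shut down and half-closure is enabled, shut down the input side and fire a ChannelInputShutdownEvent.

- If input has not been shut down and half-closure is disabled, call close() directly to close the connection.

- If input has already been shut down, fire a ChannelInputShutdownReadComplete event.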
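The three branches above reduce to a function of two booleans. The sketch below only restates that decision table; `CloseOnReadSketch` and the `Action` names are hypothetical and not part of Netty.

```java
/** Decision table for what happens when EOF is read; names are hypothetical. */
final class CloseOnReadSketch {

    enum Action {
        SHUTDOWN_INPUT_AND_FIRE_EVENT, // half-closure on: keep the write side usable
        CLOSE_CHANNEL,                 // default: close both directions
        FIRE_READ_COMPLETE_EVENT       // input was already shut down earlier
    }

    static Action decide(boolean inputAlreadyShutdown, boolean allowHalfClosure) {
        if (!inputAlreadyShutdown) {
            return allowHalfClosure ? Action.SHUTDOWN_INPUT_AND_FIRE_EVENT : Action.CLOSE_CHANNEL;
        }
        return Action.FIRE_READ_COMPLETE_EVENT;
    }
}
```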

Note: what is half-closure?

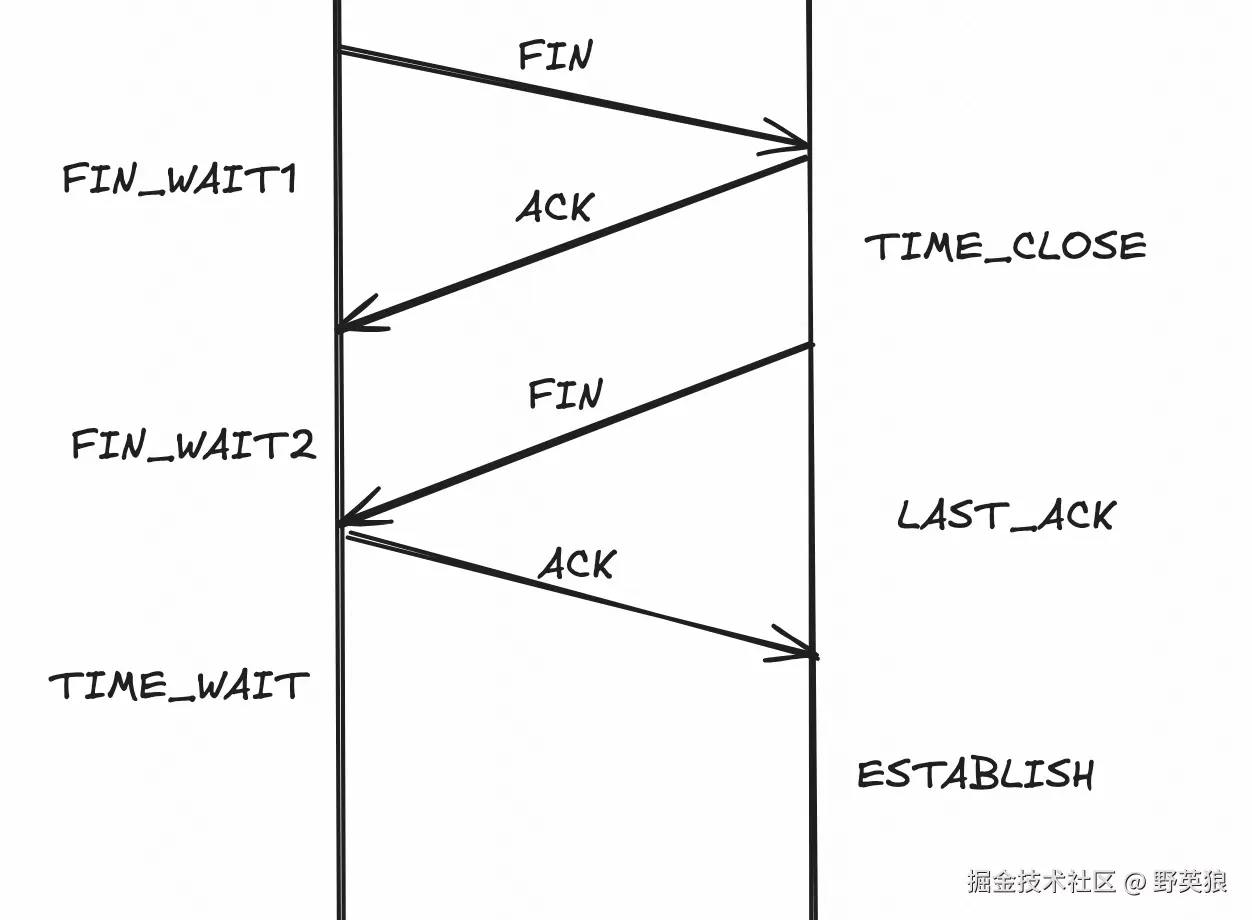

Start with a diagram of the TCP four-way close to get a feel for it.

Explained with reference to the diagram above:

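- TCP is full-duplex: each side can read and write at the same time and has separate send and receive buffers. Half-closure means either side may close its sending direction first while keeping its receiving direction open until the peer closes as well.

- By default, Netty closes the socket as soon as it receives a FIN. In other words, both the read and the write direction are shut. Yes, both.

- This deviates somewhat from the four-way close design. One end (C) may send a FIN first; the other end (S) receives it and replies with an ACK, and S is still allowed to keep sending data because S has not sent its own FIN yet.

- To enable half-closure in Netty, it has to be configured explicitly. The line below applies to the channels accepted by a ServerBootstrap; a client Bootstrap sets the same option through option(...).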

bootstrap.childOption(ChannelOption.ALLOW_HALF_CLOSURE, true);

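In most scenarios half-closure is not needed, so Netty keeps it simple and blunt: once one side sends a FIN, the other side refuses all further reads and writes and closes the socket.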
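To see half-closure at the TCP level without Netty, the JDK's `Socket.shutdownOutput()` sends a FIN while leaving the read direction open. The following is a minimal loopback sketch; `HalfCloseDemo` and `roundTrip` are hypothetical names, and it assumes Java 9+ for `InputStream.readAllBytes()`.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Loopback demo of a TCP half-close using only the JDK; names are hypothetical. */
final class HalfCloseDemo {

    static String roundTrip(String request) {
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress())) {
            Thread peer = new Thread(() -> {
                try (Socket s = server.accept()) {
                    // readAllBytes() returns only once the client's FIN (EOF) arrives
                    byte[] all = s.getInputStream().readAllBytes();
                    String reply = "echo:" + new String(all, StandardCharsets.UTF_8);
                    // The peer can still write: the client closed only its sending direction
                    s.getOutputStream().write(reply.getBytes(StandardCharsets.UTF_8));
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            peer.start();
            try (Socket client = new Socket(server.getInetAddress(), server.getLocalPort())) {
                client.getOutputStream().write(request.getBytes(StandardCharsets.UTF_8));
                client.shutdownOutput(); // send FIN, keep the read side open
                byte[] reply = client.getInputStream().readAllBytes();
                peer.join();
                return new String(reply, StandardCharsets.UTF_8);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

The client receives the reply after it has already sent its FIN, which is exactly the window that Netty's default close-on-EOF behavior gives up.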

java

private void closeOnRead(ChannelPipeline pipeline) {
    if (!isInputShutdown0()) {
        if (isAllowHalfClosure(config())) {
            shutdownInput();
            pipeline.fireUserEventTriggered(ChannelInputShutdownEvent.INSTANCE);
        } else {
            close(voidPromise());
        }
    } else {
        inputClosedSeenErrorOnRead = true;
        pipeline.fireUserEventTriggered(ChannelInputShutdownReadComplete.INSTANCE);
    }
}

3.1 How is the channel closed? NioByteUnsafe#close

io.netty.channel.AbstractChannel.AbstractUnsafe#close

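- If the promise cannot be marked uncancellable (it has already been cancelled), return immediately.

- If close has already been initiated, check closeFuture: if it is done, complete the promise right away; otherwise, and only for a promise that is not a VoidChannelPromise, add a listener that completes the promise later.

- On the first call closeInitiated is false, so the logic below runs.

- Record whether the channel was active.

- If SO_LINGER > 0, prepareToClose() cancels the SelectionKey on the selector. With SO_LINGER set, close() may block for up to the linger time, so the close is handed off to a separate executor instead of running on the event loop.

- The close itself calls socket.close() and completes the promise.

- If outboundBuffer is not null, fail and clear everything in both the flushed and unflushed queues.

- Call fireChannelInactiveAndDeregister, which fires the channelInactive and channelUnregistered events.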
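The "close once, attach later callers to the same result" pattern in the steps above can be sketched with `CompletableFuture`. This is a single-threaded model under assumptions, not Netty's implementation: `CloseSketch` and its fields are hypothetical, counters stand in for the socket and for pipeline events, and the SO_LINGER hand-off to another executor is left out.

```java
import java.nio.channels.ClosedChannelException;
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.CompletableFuture;

/** Single-threaded model of an idempotent close; names are hypothetical. */
final class CloseSketch {
    private boolean closeInitiated;
    private final CompletableFuture<Void> closeFuture = new CompletableFuture<>();
    private Queue<CompletableFuture<Void>> pendingWrites = new ArrayDeque<>();
    int socketCloses;   // how many times the "socket" was really closed
    int inactiveEvents; // how many times channelInactive was "fired"

    /** Queues a write; fails at once if close has already started. */
    CompletableFuture<Void> write() {
        CompletableFuture<Void> f = new CompletableFuture<>();
        if (pendingWrites == null) {
            f.completeExceptionally(new ClosedChannelException());
        } else {
            pendingWrites.add(f);
        }
        return f;
    }

    void close(CompletableFuture<Void> promise) {
        if (closeInitiated) {
            // Later callers only observe the outcome of the first close
            closeFuture.whenComplete((v, t) -> promise.complete(null));
            return;
        }
        closeInitiated = true;
        Queue<CompletableFuture<Void>> buffer = pendingWrites;
        pendingWrites = null;            // disallow new writes from here on
        try {
            socketCloses++;              // stands in for socket.close()
            closeFuture.complete(null);
            promise.complete(null);
        } finally {
            for (CompletableFuture<Void> w : buffer) {
                w.completeExceptionally(new ClosedChannelException()); // fail queued writes
            }
        }
        inactiveEvents++;                // stands in for fireChannelInactiveAndDeregister
    }
}
```

Calling `close` twice closes the socket once and fires the inactive event once; writes queued before the close, and any attempted after it, complete exceptionally.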

java

private void close(final ChannelPromise promise, final Throwable cause,
        final ClosedChannelException closeCause, final boolean notify) {
    if (!promise.setUncancellable()) {
        return;
    }

    if (closeInitiated) {
        if (closeFuture.isDone()) {
            // Closed already.
            safeSetSuccess(promise);
        } else if (!(promise instanceof VoidChannelPromise)) { // Only needed if no VoidChannelPromise.
            // This means close() was called before so we just register a listener and return
            closeFuture.addListener(new ChannelFutureListener() {
                @Override
                public void operationComplete(ChannelFuture future) throws Exception {
                    promise.setSuccess();
                }
            });
        }
        return;
    }

    closeInitiated = true;

    final boolean wasActive = isActive();
    final ChannelOutboundBuffer outboundBuffer = this.outboundBuffer;
    this.outboundBuffer = null; // Disallow adding any messages and flushes to outboundBuffer.
    Executor closeExecutor = prepareToClose();
    if (closeExecutor != null) {
        closeExecutor.execute(new Runnable() {
            @Override
            public void run() {
                try {
                    // Execute the close.
                    doClose0(promise);
                } finally {
                    // Call invokeLater so closeAndDeregister is executed in the EventLoop again!
                    invokeLater(new Runnable() {
                        @Override
                        public void run() {
                            if (outboundBuffer != null) {
                                // Fail all the queued messages
                                outboundBuffer.failFlushed(cause, notify);
                                outboundBuffer.close(closeCause);
                            }
                            fireChannelInactiveAndDeregister(wasActive);
                        }
                    });
                }
            }
        });
    } else {
        try {
            // Close the channel and fail the queued messages in all cases.
            doClose0(promise);
        } finally {
            if (outboundBuffer != null) {
                // Fail all the queued messages.
                outboundBuffer.failFlushed(cause, notify);
                outboundBuffer.close(closeCause);
            }
        }
        if (inFlush0) {
            invokeLater(new Runnable() {
                @Override
                public void run() {
                    fireChannelInactiveAndDeregister(wasActive);
                }
            });
        } else {
            fireChannelInactiveAndDeregister(wasActive);
        }
    }
}

3.2 Propagation of the channelInactive event

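Lettuce has four main ChannelHandlers: CommandHandler, CommandEncoder, ConnectionEventTrigger, and ConnectionWatchdog. Their roles are:

- CommandHandler: mainly decodes responses. (Key point)

- CommandEncoder: encodes Redis commands into the RESP wire format.

- ConnectionEventTrigger: fires connection events and drives the callback hooks for each connection state. (Key point)

- ConnectionWatchdog: handles reconnection.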

3.2.1 CommandHandler#channelInactive

- Move the CommandHandler state through DISCONNECTED and DEACTIVATING, and finally to DEACTIVATED.

- Call DefaultEndpoint's notifyChannelInactive and notifyDrainQueuedCommands. (Key point)

- Reset the RedisStateMachine.

java

public void channelInactive(ChannelHandlerContext ctx) throws Exception {
    if (debugEnabled) {
        logger.debug("{} channelInactive()", logPrefix());
    }

    if (channel != null && ctx.channel() != channel) {
        logger.debug("{} My channel and ctx.channel mismatch. Propagating event to other listeners.", logPrefix());
        super.channelInactive(ctx);
        return;
    }

    tracedEndpoint = null;
    setState(LifecycleState.DISCONNECTED);
    setState(LifecycleState.DEACTIVATING);

    endpoint.notifyChannelInactive(ctx.channel());
    endpoint.notifyDrainQueuedCommands(this);

    setState(LifecycleState.DEACTIVATED);

    PristineFallbackCommand command = this.fallbackCommand;
    if (isProtectedMode(command)) {
        onProtectedMode(command.getOutput().getError());
    }

    rsm.reset();

    if (debugEnabled) {
        logger.debug("{} channelInactive() done", logPrefix());
    }

    super.channelInactive(ctx);
}

3.2.1.1 DefaultEndpoint#notifyChannelInactive

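- Check whether the endpoint is already closed; if so, cancel the queued commands.

- Under the exclusive lock, signal deactivation, which marks the StatefulRedisConnectionImpl as deactivated so the connection is not used further.

- Set channel to null. (Key point)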
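One detail of the last step is the identity check `this.channel == channel`: the field is cleared only when the inactive event belongs to the channel the endpoint currently holds. The sketch below illustrates why that guard matters after a reconnect. It is a simplified model: `EndpointChannelSketch` is a hypothetical name, plain objects stand in for Netty channels, and the real `isConnected()` also checks that the channel is active.

```java
/** Models the channel-identity guard on deactivation; names are hypothetical. */
final class EndpointChannelSketch {
    private Object channel; // stands in for io.netty.channel.Channel
    int deactivations;      // counts calls that would reach connectionFacade.deactivated()

    void notifyChannelActive(Object ch) {
        this.channel = ch;
    }

    void notifyChannelInactive(Object ch) {
        deactivations++;
        // Only clear the field if the event is for the channel currently held;
        // a late event from an old channel must not wipe out a newer one.
        if (this.channel == ch) {
            this.channel = null;
        }
    }

    boolean hasChannel() {
        return channel != null;
    }
}
```

If channel B has already replaced channel A, a late inactive event for A leaves B in place; only B's own inactive event clears the field.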

java

public void notifyChannelInactive(Channel channel) {
    if (isClosed()) {
        RedisException closed = new RedisException("Connection closed");
        cancelCommands("Connection closed", drainCommands(), it -> it.completeExceptionally(closed));
    }

    sharedLock.doExclusive(() -> {
        if (debugEnabled) {
            logger.debug("{} deactivating endpoint handler", logPrefix());
        }
        connectionFacade.deactivated();
    });

    if (this.channel == channel) {
        this.channel = null;
    }
}

3.2.2 ConnectionEventTrigger#channelInactive

- Fire the disconnected event through connectionEvents. RedisConnectionStateListeners are registered with ConnectionEvents, so in effect this delivers onRedisDisconnected to every RedisConnectionStateListener.

- Publish a ConnectionDeactivatedEvent on the eventBus as well.
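The broadcast in the first bullet is a plain observer pattern. The sketch below models it with the standard library only; `ConnectionEventsSketch` and `StateListener` are hypothetical stand-ins for Lettuce's ConnectionEvents and RedisConnectionStateListener, and a string stands in for the connection object.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

/** Observer-style broadcast of a disconnect event; names are hypothetical. */
final class ConnectionEventsSketch {

    /** Stand-in for a connection state listener. */
    interface StateListener {
        void onDisconnected(String connectionId);
    }

    // Copy-on-write keeps iteration safe if listeners are added or removed concurrently
    private final List<StateListener> listeners = new CopyOnWriteArrayList<>();

    void addListener(StateListener listener) {
        listeners.add(listener);
    }

    /** Delivers the event to every registered listener, in registration order. */
    void fireDisconnected(String connectionId) {
        for (StateListener listener : listeners) {
            listener.onDisconnected(connectionId);
        }
    }
}
```

The broadcast only delivers the event. What each listener does with it is application code, so any bookkeeping that depends on the disconnect has to be done correctly inside the listener itself.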

java

public void channelInactive(ChannelHandlerContext ctx) throws Exception {
    connectionEvents.fireEventRedisDisconnected(connection);
    eventBus.publish(new ConnectionDeactivatedEvent(local(ctx), remote(ctx)));
    super.channelInactive(ctx);
}

Tracing the problem

With the source flow analyzed, the problem gradually comes into view. Start from the point where the error is raised:

java

private void validateWrite(int commands) {

    if (isClosed()) {
        throw new RedisException("Connection is closed");
    }

    ......

    if (!isConnected() && rejectCommandsWhileDisconnected) {
        throw new RedisException("Currently not connected. Commands are rejected.");
    }
}

- isClosed(): the closed flag becomes true only after closeAsync() has been called. Since execution got past this check, close has not been called on this DefaultEndpoint.

- isConnected(): if channel == null or the channel is inactive, the endpoint counts as disconnected and "Currently not connected. Commands are rejected." is thrown.

- From the source in 3.1 and 3.2.1.1, channel is set to null after CommandHandler receives the channelInactive event, and the channel becomes inactive once socket.close() has run.
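The two conditions that make isConnected() false correspond to two moments in the close sequence, and both reject writes while the endpoint itself is not closed. The following is a minimal model of that guard, not Lettuce code: `WriteGuardSketch` and `FakeChannel` are hypothetical, and `IllegalStateException` stands in for RedisException so the sketch needs no dependencies.

```java
/** Models the write guard across the close sequence; names are hypothetical. */
final class WriteGuardSketch {

    /** Stand-in for a Netty channel with an active flag. */
    static final class FakeChannel {
        volatile boolean active = true;
    }

    private volatile FakeChannel channel;
    private volatile boolean closed;
    private final boolean rejectCommandsWhileDisconnected;

    WriteGuardSketch(boolean rejectCommandsWhileDisconnected) {
        this.rejectCommandsWhileDisconnected = rejectCommandsWhileDisconnected;
    }

    void notifyChannelActive(FakeChannel ch) { this.channel = ch; }

    void notifyChannelInactive(FakeChannel ch) {
        if (this.channel == ch) {
            this.channel = null;
        }
    }

    void close() { closed = true; }

    boolean isConnected() {
        FakeChannel ch = this.channel;
        return ch != null && ch.active;
    }

    /** Throws if a command must not be written in the current state. */
    void validateWrite() {
        if (closed) {
            throw new IllegalStateException("Connection is closed");
        }
        if (!isConnected() && rejectCommandsWhileDisconnected) {
            throw new IllegalStateException("Currently not connected. Commands are rejected.");
        }
    }
}
```

A caller that keeps using such an endpoint after the socket has closed, or after the channel field was cleared, gets the "not connected" error on every write until something replaces or reconnects the connection.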

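That is where the error comes from. So why, after channel became null, was the disconnect callback driven by ConnectionEventTrigger#channelInactive not carried out? Going back to our own code: we had registered a RedisConnectionStateListener, but its onRedisDisconnected implementation was defective, and that defect caused this bug.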

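Summary

- Source code is the wellspring of progress; elegance never goes out of style.

- Netty fires a specific event after each stage of the network-handling lifecycle completes, forming a complete trace chain. This makes troubleshooting easier and is worth borrowing.

- In an event loop with asynchronous processing, avoid blocking operations; otherwise they can trigger a cascading failure.

- Event callbacks are everywhere in asynchronous processing; callback hooks are how state changes are observed (for example ChannelHandler in Netty and RedisConnectionStateListener in the Redis client).

- Solid fundamentals are a must: know not only what happens but why (TCP four-way close, JVM source, SO_LINGER, half-closure).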