1. OpenCV-based project deployment

First, convert the .pt file trained in the previous chapter to an ONNX file, then run it with the code below.

Conversion command: yolo export model=best.pt format=onnx opset=12 dynamic=False
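Because the export uses dynamic=False, the model's input shape is fixed, and the inference code later sizes its input buffer from that shape. The following is a minimal sketch of a guard for this, not part of the original project: `staticElementCount` is a hypothetical helper, and it relies on the assumption that ONNX Runtime reports a dynamic dimension as -1.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper: number of elements in a fully static tensor shape.
// Throws if any dimension is non-positive, which is how a dynamic (-1)
// dimension would show up in the shape reported for the model.
inline std::size_t staticElementCount(const std::vector<int64_t>& shape) {
    std::size_t count = 1;
    for (int64_t dim : shape) {
        if (dim <= 0) {
            throw std::invalid_argument("dynamic or invalid dimension in shape");
        }
        count *= static_cast<std::size_t>(dim);
    }
    return count;
}
```

For a 640x640 export the shape {1, 3, 640, 640} gives 1228800 elements, which should equal the number of floats in the preprocessed input blob.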

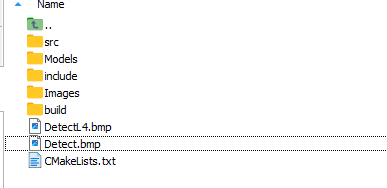

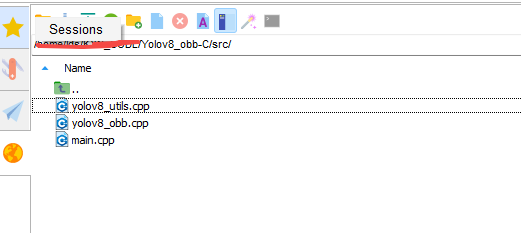

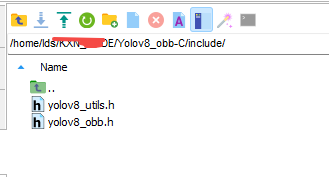

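OpenCV 4.9.0 or later is required; earlier versions will not work. The target environment is Linux and the implementation is in C++. The project structure is shown in the figure below: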

Reference GitHub repository: https://github.com/YHongQ/Yolov8_obb-C-
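The OpenCV 4.9.0 minimum noted above can be checked at program start. The sketch below is only an illustration: `versionAtLeast` is a hypothetical helper that compares a "major.minor.patch" string against a minimum, and it assumes the string comes from OpenCV's `CV_VERSION` macro.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper: true if a "major.minor.patch" version string is at
// least the given minimum. Fields that are missing are treated as 0, and
// any suffix such as "-dev" is ignored.
inline bool versionAtLeast(const std::string& version,
                           int minMajor, int minMinor, int minPatch) {
    int major = 0, minor = 0, patch = 0;
    std::sscanf(version.c_str(), "%d.%d.%d", &major, &minor, &patch);
    if (major != minMajor) return major > minMajor;
    if (minor != minMinor) return minor > minMinor;
    return patch >= minPatch;
}
```

In the deployment program this could be called as `versionAtLeast(CV_VERSION, 4, 9, 0)` after including `<opencv2/core/version.hpp>`, exiting with a clear message when it returns false.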

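Only the contents of CMakeLists.txt need to be changed, as listed below, and the files of the project above need to be arranged as shown in the figure. The project then compiles, which completes the OpenCV-based deployment.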

cmake

cmake_minimum_required(VERSION 3.10)

project(YOLO_OBB_TRT)

set(CMAKE_CXX_STANDARD 14)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

# ---CUDA ---

set(CUDA_INCLUDE_DIRS /home/lds/KXN_CODE/import/cuda/linux/include)

set(CUDA_LIB_DIR /home/lds/KXN_CODE/import/cuda/linux/lib)

link_directories(${CUDA_LIB_DIR})

#set(CUDA_TOOLKIT_ROOT_DIR /home/lds/KXN_CODE/import/cuda/linux)

#find_package(CUDA REQUIRED)

# OpenCV

set(OpenCV_DIR "/usr/local/opencv490") # adjust to your actual install path

#set(OpenCV_DIR "/home/ema/kxn/rknn_model_zoo/3rdparty/opencv/opencv-linux-aarch64/share/OpenCV")

find_package(OpenCV REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})

message(${OpenCV_INCLUDE_DIRS})

# --- 2. Find ONNX Runtime ---

# ONNX Runtime

set(ONNXRUNTIME_ROOT "/home/lds/KXN_CODE/import/onnxruntime/linux") # <--- modify this path

set(ONNXRUNTIME_INCLUDE ${ONNXRUNTIME_ROOT}/include)

set(ONNXRUNTIME_LIB ${ONNXRUNTIME_ROOT}/lib)

if(NOT EXISTS ${ONNXRUNTIME_ROOT})

message(FATAL_ERROR "ONNX Runtime not found at ${ONNXRUNTIME_ROOT}. Please modify ONNXRUNTIME_ROOT in CMakeLists.txt")

endif()

link_directories(${ONNXRUNTIME_LIB})

include_directories(include)

aux_source_directory(. SRCS )

file(GLOB_RECURSE SRC ./src/*.cpp)

add_executable(yolo_obb_infer ${SRC})

target_include_directories(yolo_obb_infer PRIVATE

${OpenCV_INCLUDE_DIRS}

${TRT_INCLUDE_DIRS}

${CUDA_INCLUDE_DIRS}

${ONNXRUNTIME_INCLUDE}

)

target_link_libraries(yolo_obb_infer

${OpenCV_LIBS}

${CUDA_LIB_DIR}/libcudnn.so

${CUDA_LIB_DIR}/libcublas.so

${CUDA_LIB_DIR}/libcublasLt.so

${CUDA_LIB_DIR}/libcudart.so

${CUDA_LIB_DIR}/libnvinfer.so

${CUDA_LIB_DIR}/libnvonnxparser.so

${CUDA_LIB_DIR}/libnvinfer_plugin.so

onnxruntime

)

2. Inference with ONNX Runtime

This approach has no special OpenCV version requirement; it works as long as the include and library paths are specified correctly.
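The post-processing code in this section reads the model output as a channel-major tensor. The sketch below shows that indexing in isolation. It assumes the layout commonly produced by an Ultralytics OBB export, [1, 4 + num_classes + 1, num_proposals], with rows cx, cy, w, h, then one row per class score, then the angle in radians; please verify this against the shapes your own model prints. `RawObb` and `decodeProposal` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One proposal as read from the raw output, before any coordinate mapping.
struct RawObb {
    float cx, cy, w, h;  // box in network-input pixels
    float angleRad;      // rotation in radians
    float score;         // best class score
    int classId;         // index of the best class, -1 if all scores are <= 0
};

// data points to (4 + numClasses + 1) rows of numProposals floats each.
inline RawObb decodeProposal(const float* data, int numClasses,
                             int numProposals, int i) {
    assert(numClasses > 0 && i >= 0 && i < numProposals);
    auto at = [&](int channel) {
        return data[static_cast<std::size_t>(channel) * numProposals + i];
    };
    RawObb r{at(0), at(1), at(2), at(3), at(4 + numClasses), 0.0f, -1};
    for (int c = 0; c < numClasses; ++c) {
        const float s = at(4 + c);
        if (s > r.score) {
            r.score = s;
            r.classId = c;
        }
    }
    return r;
}
```

With a single class this reduces to reading the score from row 4 and the angle from row 5.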

2.1 Single-image inference
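The program in this subsection letterboxes the image before inference (uniform scaling plus centered padding) and has to undo that mapping on the predicted boxes. The arithmetic can be sketched without OpenCV as follows. `Letterbox`, `computeLetterbox`, and `toSource` are hypothetical names; the rounding mirrors the `- 0.1f` convention used in the program's preprocessing function.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Parameters of a letterbox transform from a source image to a fixed target.
struct Letterbox {
    float ratio;   // uniform scale factor applied to the source image
    int padLeft;   // pixels of padding added on the left
    int padTop;    // pixels of padding added on the top
    int newW;      // scaled width before padding
    int newH;      // scaled height before padding
};

inline Letterbox computeLetterbox(int srcW, int srcH, int dstW, int dstH) {
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    const float ratio = std::min(static_cast<float>(dstW) / srcW,
                                 static_cast<float>(dstH) / srcH);
    const int newW = static_cast<int>(std::round(srcW * ratio));
    const int newH = static_cast<int>(std::round(srcH * ratio));
    const int padLeft = static_cast<int>(std::round((dstW - newW) / 2.0f - 0.1f));
    const int padTop = static_cast<int>(std::round((dstH - newH) / 2.0f - 0.1f));
    return {ratio, padLeft, padTop, newW, newH};
}

// Map a point from network-input coordinates back to the source image:
// subtract the padding, then divide by the scale factor.
inline void toSource(const Letterbox& lb, float x, float y,
                     float& srcX, float& srcY) {
    srcX = (x - lb.padLeft) / lb.ratio;
    srcY = (y - lb.padTop) / lb.ratio;
}
```

Box widths and heights only need to be divided by `ratio`. Because the scale is the same in x and y, the box angle is unchanged by the mapping.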

Contents of main.cpp

cpp

#include <iostream>

#include <fstream>

#include <string>

#include <vector>

#include <algorithm>

#include <numeric>

#include <memory>

#include <opencv2/opencv.hpp>

#include <onnxruntime_cxx_api.h>

// --- Struct that stores one detection result ---

struct Detection {

cv::RotatedRect box; // rotated rectangle

float confidence; // confidence score

int class_id; // class ID

};

// Calculate IoU between two rotated rectangles

float calculateRotatedIoU(const cv::RotatedRect& box1, const cv::RotatedRect& box2) {

cv::Point2f vertices1[4], vertices2[4];

box1.points(vertices1);

box2.points(vertices2);

std::vector<cv::Point2f> intersection_points;

cv::intersectConvexConvex(cv::Mat(4, 2, CV_32F, vertices1),

cv::Mat(4, 2, CV_32F, vertices2),

intersection_points);

float inter_area = 0.0f;

if (!intersection_points.empty()) {

inter_area = cv::contourArea(intersection_points);

}

float area1 = box1.size.area();

float area2 = box2.size.area();

return inter_area / (area1 + area2 - inter_area + 1e-5f);

}

// Manual implementation of NMS for rotated boxes

void NMSBoxesRotated(

const std::vector<cv::RotatedRect>& boxes,

const std::vector<float>& scores,

const float score_threshold,

const float iou_threshold,

std::vector<int>& indices) {

std::vector<int> sorted_indices(scores.size());

std::iota(sorted_indices.begin(), sorted_indices.end(), 0);

std::sort(sorted_indices.begin(), sorted_indices.end(),

[&scores](int i1, int i2) { return scores[i1] > scores[i2]; });

std::vector<bool> suppressed(scores.size(), false);

indices.clear();

for (size_t i = 0; i < sorted_indices.size(); ++i) {

int idx = sorted_indices[i];

if (suppressed[idx] || scores[idx] < score_threshold) {

continue;

}

indices.push_back(idx);

for (size_t j = i + 1; j < sorted_indices.size(); ++j) {

int idx2 = sorted_indices[j];

if (suppressed[idx2]) {

continue;

}

float iou = calculateRotatedIoU(boxes[idx], boxes[idx2]);

if (iou > iou_threshold) {

suppressed[idx2] = true;

}

}

}

}

void InputImageConveter(const cv::Mat& image, cv::Mat& OutImage,

const cv::Size& newShape,

cv::Vec4d& params,

bool scaleFill = false,

bool scaleUp = true,

const cv::Scalar& color = cv::Scalar(114, 114, 114))

{

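/*

params:image input image

params:OutImage output image

params:newShape target image size

params:params output parameters, stores [ratio_x, ratio_y, dw, dh]

params:scaleFill whether to resize directly, ignoring the aspect ratio

params:scaleUp whether the image may be enlarged

A direct resize would change the aspect ratio, so the image is instead scaled uniformly to fit the target shape,

then padded with a constant color, with the scaled image centered in the padded result.

*/

// cv::Vec4d is OpenCV's four-element vector of doubles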

cv::Size InputImageShape = image.size();

float R = std::min((float)newShape.height / (float)InputImageShape.height,

(float)newShape.width / (float)InputImageShape.width); // scale factor: the smaller of the two ratios, so the aspect ratio is preserved

if (!scaleUp) // when false, the image may only be shrunk, never enlarged

{

R = std::min(R, 1.0f);

}

float Ratio[2] = { R, R }; // scale factors for x and y

int New_Un_padding[2] = {(int)std::round((float)InputImageShape.width * R),

(int)std::round((float)InputImageShape.height * R) }; // scaled image size before padding

auto dw = (float)(newShape.width - New_Un_padding[0]); // total horizontal padding

auto dh = (float)(newShape.height - New_Un_padding[1]); // total vertical padding

if (scaleFill) // stretch the image to fill the target size exactly (aspect ratio not preserved)

{

dw = 0.0f;

dh = 0.0f;

New_Un_padding[0] = newShape.width;

New_Un_padding[1] = newShape.height;

Ratio[0] = (float)newShape.width / (float)InputImageShape.width;

Ratio[1] = (float)newShape.height / (float)InputImageShape.height;

}

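dw /= 2.0f; // horizontal padding per side

dh /= 2.0f; // vertical padding per side

// Build the padded image: resize if either dimension changes, then pad

if (InputImageShape.width != New_Un_padding[0] || InputImageShape.height != New_Un_padding[1])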

{

cv::resize(image, OutImage, cv::Size(New_Un_padding[0], New_Un_padding[1]));

}

else

{

OutImage = image.clone();

}

int top = int(std::round(dh - 0.1f)); // top padding

int bottom = int(std::round(dh + 0.1f)); // bottom padding

int left = int(std::round(dw - 0.1f)); // left padding

int right = int(std::round(dw + 0.1f)); // right padding

params[0] = Ratio[0]; // x scale factor

params[1] = Ratio[1]; // y scale factor

params[2] = left; // left padding (dw)

params[3] = top; // top padding (dh)

cv::copyMakeBorder(OutImage, OutImage, top, bottom, left, right, cv::BORDER_CONSTANT, color); // pad the image

}

// --- Main function ---

int main() {

// --- 1. Configuration ---

const std::string model_path = "/home/lds/KXN_CODE/test_obbonnx/models/OBB1119.onnx";

const std::string image_path = "/home/lds/KXN_CODE/test_obbonnx/images/L4_2_2600.jpg";

const float CONF_THRESHOLD = 0.25f;

const float NMS_THRESHOLD = 0.5f;

const float INPUT_WIDTH = 640.0f;

const float INPUT_HEIGHT = 640.0f;

// Class names of the dataset the model was trained on

const std::vector<std::string> class_names = {

"plane"

};

// --- 2. Initialize ONNX Runtime ---

Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "Yolov8_OBB_Test");

Ort::SessionOptions session_options; // create the session options

session_options.SetIntraOpNumThreads(1);

// To run on the GPU, uncomment the next line and make sure the GPU build of onnxruntime is used

//session_options.AppendExecutionProvider_CUDA(0);

Ort::Session session(env, model_path.c_str(), session_options);

// Print the model's input/output information

Ort::AllocatorWithDefaultOptions allocator;

std::vector<const char*> input_names_ptr;

std::vector<std::vector<int64_t>> input_shapes;

std::vector<const char*> output_names_ptr;

std::vector<std::vector<int64_t>> output_shapes;

// Input info

size_t num_input_nodes = session.GetInputCount();

input_names_ptr.reserve(num_input_nodes);

input_shapes.reserve(num_input_nodes);

for (size_t i = 0; i < num_input_nodes; i++) {

char* input_name = session.GetInputName(i, allocator);

input_names_ptr.push_back(input_name);

Ort::TypeInfo input_type_info = session.GetInputTypeInfo(i);

auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();

auto input_dims = input_tensor_info.GetShape();

input_shapes.push_back(input_dims);

std::cout << "Input " << i << " : " << input_name << " [";

for (size_t j = 0; j < input_dims.size(); ++j) std::cout << input_dims[j] << (j < input_dims.size() - 1 ? ", " : "");

std::cout << "]" << std::endl;

}

// Output info

size_t num_output_nodes = session.GetOutputCount();

output_names_ptr.reserve(num_output_nodes);

output_shapes.reserve(num_output_nodes);

for (size_t i = 0; i < num_output_nodes; i++) {

char* output_name = session.GetOutputName(i, allocator);

output_names_ptr.push_back(output_name);

Ort::TypeInfo output_type_info = session.GetOutputTypeInfo(i);

auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();

auto output_dims = output_tensor_info.GetShape();

output_shapes.push_back(output_dims);

std::cout << "Output " << i << " : " << output_name << " [";

for (size_t j = 0; j < output_dims.size(); ++j) std::cout << output_dims[j] << (j < output_dims.size() - 1 ? ", " : "");

std::cout << "]" << std::endl;

}

std::cout << " 000"<<std::endl;

// --- 3. Read and preprocess the image ---

cv::Mat original_image = cv::imread(image_path);

if (original_image.empty()) {

std::cerr << "Error: Could not read image from " << image_path << std::endl;

return -1;

}

int _inputWidth = 640;

int _inputHeight = 640;

cv::Mat blob; // OpenCV matrix that holds the preprocessed image data fed to the network

int img_height = original_image.rows;

int img_width = original_image.cols;

cv::Mat netInputImg; // network input image

cv::Vec4d params; // stores [ratio_x, ratio_y, dw, dh]

InputImageConveter(original_image, netInputImg, cv::Size(_inputWidth, _inputHeight), params);

std::cout << "params[0]: " << params[0] <<std::endl;

std::cout << "params[1]: " << params[1] <<std::endl;

std::cout << "params[2]: " << params[2] <<std::endl;

std::cout << "params[3]: " << params[3] <<std::endl;

cv::dnn::blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_inputWidth, _inputHeight), cv::Scalar(0, 0, 0), true, false);

//std::cout << "Blob values: " << blob << std::endl;

//bool success = cv::imwrite("/home/lds/KXN_CODE/test_obbonnx/blob1.jpg",blob);

//if (success) {

//std::cout << "Blob saved successfully!" << std::endl;

//} else {

// std::cout << "Failed to save blob!" << std::endl;

//}

// --- 4. Create the input tensor and run inference ---

std::vector<int64_t> input_shape = {1, 3, static_cast<int64_t>(INPUT_HEIGHT), static_cast<int64_t>(INPUT_WIDTH)};

Ort::MemoryInfo memory_info = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value input_tensor = Ort::Value::CreateTensor<float>(memory_info, blob.ptr<float>(), blob.total(), input_shape.data(), input_shape.size());

auto output_tensors = session.Run(Ort::RunOptions{nullptr}, input_names_ptr.data(), &input_tensor, 1, output_names_ptr.data(), output_names_ptr.size());

// --- 5. Post-processing ---

const float* output_data = output_tensors[0].GetTensorData<float>();

auto output_shape = output_shapes[0]; // [1, 4 + num_classes + 1, num_proposals], e.g. [1, 20, 8400]

int num_classes = output_shape[1] - 5; // 4 box values and 1 angle are not classes, e.g. 20 - 5 = 15

int num_proposals = output_shape[2]; // e.g. 8400

std::cout << "num_classes " << num_classes <<std::endl;

std::cout << "num_proposals " << num_proposals <<std::endl;

size_t total_elements = 1;

for (auto dim : output_shape) {

total_elements *= dim;

}

std::cout << "total_elements " << total_elements <<std::endl;

std::cout << " 222"<<std::endl;

std::vector<Detection> final_detections;

std::vector<cv::RotatedRect> boxes;

std::vector<float> scores;

std::vector<int> class_ids;

int num_bbox = 0;

// Iterate over all proposals. The output is read as channel-major, assuming the usual

// OBB export layout: rows 0..3 are cx, cy, w, h, the next num_classes rows are class

// scores, and the last row is the angle in radians.

for (int i = 0; i < num_proposals; ++i) {

// Undo the letterbox: subtract the padding, then divide by the scale factor.

// The result is already in original-image coordinates, so no further rescaling is applied.

float cx = (output_data[0 * num_proposals + i] - params[2]) / params[0];

float cy = (output_data[1 * num_proposals + i] - params[3]) / params[1];

float w = output_data[2 * num_proposals + i] / params[0];

float h = output_data[3 * num_proposals + i] / params[1];

float angle = output_data[(4 + num_classes) * num_proposals + i] * 180.0f / static_cast<float>(CV_PI); // radians to degrees

// Find the highest class score

float max_class_score = 0.0f;

int class_id = -1;

for (int j = 0; j < num_classes; ++j) {

float score = output_data[(4 + j) * num_proposals + i];

if (score > max_class_score) {

max_class_score = score;

class_id = j;

}

}

if (max_class_score > CONF_THRESHOLD) {

num_bbox += 1;

cv::RotatedRect box;

box.center.x = cx;

box.center.y = cy;

box.size.width = w;

box.size.height = h;

box.angle = angle;

std::cout << "idx: " << num_bbox <<" cx: " << box.center.x <<" cy: " << box.center.y <<" w: " << box.size.width <<" h: " << box.size.height <<" angle: " << box.angle <<" max_class_score: " << max_class_score << std::endl;

boxes.push_back(box);

scores.push_back(max_class_score);

class_ids.push_back(class_id);

}

}

// --- 6. Apply NMS ---

std::vector<int> indices;

NMSBoxesRotated(boxes, scores, CONF_THRESHOLD, NMS_THRESHOLD, indices);

// --- 7. Draw the final results ---

for (int idx : indices) {

std::cout << "fin idx: " << idx <<std::endl;

const auto& box = boxes[idx];

float score = scores[idx];

int class_id = class_ids[idx];

// Get the four vertices of the rotated rectangle

cv::Point2f vertices[4];

box.points(vertices);

// Draw the rotated box

for (int j = 0; j < 4; ++j) {

cv::line(original_image, vertices[j], vertices[(j + 1) % 4], cv::Scalar(0, 255, 0), 2);

}

// Draw the label

std::string label = class_names[class_id] + ": " + std::to_string(score).substr(0, 4);

int baseline;

cv::Size text_size = cv::getTextSize(label, cv::FONT_HERSHEY_SIMPLEX, 0.6, 1, &baseline);

cv::Point text_origin(vertices[0].x, vertices[0].y - 5); // draw just above the first vertex

cv::putText(original_image, label, text_origin, cv::FONT_HERSHEY_SIMPLEX, 0.6, cv::Scalar(0, 255, 0), 1);

}

// --- 8. Show and save the result ---

cv::imshow("YOLOv8-OBB C++ Inference", original_image);

cv::waitKey(0);

cv::destroyAllWindows();

cv::imwrite("result_cpp.jpg", original_image);

std::cout << "Result saved to result_cpp.jpg" << std::endl;

return 0;

}

Contents of CMakeLists.txt

cmake

cmake_minimum_required(VERSION 3.10)

project(YOLO_OBB_TRT)

set(CMAKE_CXX_STANDARD 14)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

# --- Custom CUDA paths ---

set(CUDA_INCLUDE_DIRS /home/lds/KXN_CODE/import/cuda/linux/include)

set(CUDA_LIB_DIR /home/lds/KXN_CODE/import/cuda/linux/lib)

link_directories(${CUDA_LIB_DIR})

#set(CUDA_TOOLKIT_ROOT_DIR /home/lds/KXN_CODE/import/cuda/linux)

#find_package(CUDA REQUIRED)

# OpenCV

set(OpenCV_INCLUDE_DIRS /home/lds/KXN_CODE/import/opencv_4_5_5/linux/include)

set(OpenCV_LIB_DIR /home/lds/KXN_CODE/import/opencv_4_5_5/linux/lib)

link_directories(${OpenCV_LIB_DIR})

# TensorRT

set(TRT_INCLUDE_DIRS /home/lds/KXN_CODE/import/tensorrt/include)

set(TRT_LIBRARY_DIR /home/lds/KXN_CODE/import/tensorrt/lib)

link_directories(${TRT_LIBRARY_DIR})

# --- 2. Find ONNX Runtime ---

# Manually specify the ONNX Runtime path

set(ONNXRUNTIME_ROOT "/home/lds/KXN_CODE/import/onnxruntime/linux") # <--- modify this path

set(ONNXRUNTIME_INCLUDE ${ONNXRUNTIME_ROOT}/include)

set(ONNXRUNTIME_LIB ${ONNXRUNTIME_ROOT}/lib)

# Check that the path exists

if(NOT EXISTS ${ONNXRUNTIME_ROOT})

message(FATAL_ERROR "ONNX Runtime not found at ${ONNXRUNTIME_ROOT}. Please modify ONNXRUNTIME_ROOT in CMakeLists.txt")

endif()

link_directories(${ONNXRUNTIME_LIB})

#set(TRT_ROOT "/home/lds/TensorRT-8.6.1.6/")

#set(TRT_INCLUDE_DIRS ${TRT_ROOT}/include)

#set(TRT_LIBRARY_DIRS ${TRT_ROOT}/lib)

#link_directories(${TRT_LIBRARY_DIRS})

# Add the executable

add_executable(yolo_obb_infer main.cpp)

# Include directories

target_include_directories(yolo_obb_infer PRIVATE

${OpenCV_INCLUDE_DIRS}

${TRT_INCLUDE_DIRS}

${CUDA_INCLUDE_DIRS}

${ONNXRUNTIME_INCLUDE}

)

# Link libraries

target_link_libraries(yolo_obb_infer

${OpenCV_LIB_DIR}/libopencv_core.so

${OpenCV_LIB_DIR}/libopencv_imgproc.so

${OpenCV_LIB_DIR}/libopencv_imgcodecs.so

${OpenCV_LIB_DIR}/libopencv_highgui.so

${OpenCV_LIB_DIR}/libopencv_dnn.so

${CUDA_LIB_DIR}/libcudnn.so

${CUDA_LIB_DIR}/libcublas.so

${CUDA_LIB_DIR}/libcublasLt.so

${CUDA_LIB_DIR}/libcudart.so

${CUDA_LIB_DIR}/libnvinfer.so

${CUDA_LIB_DIR}/libnvonnxparser.so

${CUDA_LIB_DIR}/libnvinfer_plugin.so

${CUDA_LIB_DIR}/libcurand.so.10

${CUDA_LIB_DIR}/libcufft.so.11

${ONNXRUNTIME_LIB}/libonnxruntime.so

${ONNXRUNTIME_LIB}/libonnxruntime_providers_cuda.so

${ONNXRUNTIME_LIB}/libonnxruntime_providers_shared.so

${ONNXRUNTIME_LIB}/libonnxruntime_providers_tensorrt.so

)

2.2 Video inference
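The video program in this subsection selects its encoder with a FourCC code and logs that code as a plain integer, which is hard to read. The sketch below packs and unpacks a FourCC using only the standard library. `makeFourcc` and `fourccToString` are hypothetical helpers; the byte order (first character in the lowest byte) is, to my understanding, the same packing that `cv::VideoWriter::fourcc` uses.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Pack four characters into a FourCC integer, first character in the lowest byte.
inline std::int32_t makeFourcc(char c1, char c2, char c3, char c4) {
    const std::uint32_t packed =
        static_cast<std::uint32_t>(static_cast<unsigned char>(c1)) |
        (static_cast<std::uint32_t>(static_cast<unsigned char>(c2)) << 8) |
        (static_cast<std::uint32_t>(static_cast<unsigned char>(c3)) << 16) |
        (static_cast<std::uint32_t>(static_cast<unsigned char>(c4)) << 24);
    return static_cast<std::int32_t>(packed);
}

// Unpack a FourCC integer into its four characters for readable logging.
inline std::string fourccToString(std::int32_t code) {
    std::string text(4, ' ');
    for (int k = 0; k < 4; ++k) {
        text[k] = static_cast<char>(
            (static_cast<std::uint32_t>(code) >> (8 * k)) & 0xFFu);
    }
    return text;
}
```

Logging `fourccToString(fourcc)` instead of the raw integer would show "MJPG" for the encoder requested in the program.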

Contents of CMakeLists.txt

cmake

cmake_minimum_required(VERSION 3.10)

project(YOLO_OBB_TRT)

set(CMAKE_CXX_STANDARD 14)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

# --- Custom CUDA paths ---

set(CUDA_INCLUDE_DIRS /home/lds/KXN_CODE/import/cuda/linux/include)

set(CUDA_LIB_DIR /home/lds/KXN_CODE/import/cuda/linux/lib)

link_directories(${CUDA_LIB_DIR})

#set(CUDA_TOOLKIT_ROOT_DIR /home/lds/KXN_CODE/import/cuda/linux)

#find_package(CUDA REQUIRED)

# OpenCV

set(OpenCV_INCLUDE_DIRS /home/lds/KXN_CODE/import/opencv_4_5_5/linux/include)

set(OpenCV_LIB_DIR /home/lds/KXN_CODE/import/opencv_4_5_5/linux/lib)

link_directories(${OpenCV_LIB_DIR})

# TensorRT

set(TRT_INCLUDE_DIRS /home/lds/KXN_CODE/import/tensorrt/include)

set(TRT_LIBRARY_DIR /home/lds/KXN_CODE/import/tensorrt/lib)

link_directories(${TRT_LIBRARY_DIR})

# --- 2. Find ONNX Runtime ---

# Manually specify the ONNX Runtime path

set(ONNXRUNTIME_ROOT "/home/lds/KXN_CODE/import/onnxruntime/linux") # <--- modify this path

set(ONNXRUNTIME_INCLUDE ${ONNXRUNTIME_ROOT}/include)

set(ONNXRUNTIME_LIB ${ONNXRUNTIME_ROOT}/lib)

# Check that the path exists

if(NOT EXISTS ${ONNXRUNTIME_ROOT})

message(FATAL_ERROR "ONNX Runtime not found at ${ONNXRUNTIME_ROOT}. Please modify ONNXRUNTIME_ROOT in CMakeLists.txt")

endif()

link_directories(${ONNXRUNTIME_LIB})

#set(TRT_ROOT "/home/lds/TensorRT-8.6.1.6/")

#set(TRT_INCLUDE_DIRS ${TRT_ROOT}/include)

#set(TRT_LIBRARY_DIRS ${TRT_ROOT}/lib)

#link_directories(${TRT_LIBRARY_DIRS})

# Add the executable

add_executable(yolo_obb_infer main.cpp)

# Include directories

target_include_directories(yolo_obb_infer PRIVATE

${OpenCV_INCLUDE_DIRS}

${TRT_INCLUDE_DIRS}

${CUDA_INCLUDE_DIRS}

${ONNXRUNTIME_INCLUDE}

)

# Link libraries

target_link_libraries(yolo_obb_infer

${OpenCV_LIB_DIR}/libopencv_core.so

${OpenCV_LIB_DIR}/libopencv_imgproc.so

${OpenCV_LIB_DIR}/libopencv_imgcodecs.so

${OpenCV_LIB_DIR}/libopencv_highgui.so

${OpenCV_LIB_DIR}/libopencv_dnn.so

${OpenCV_LIB_DIR}/libopencv_videoio.so

${CUDA_LIB_DIR}/libcudnn.so

${CUDA_LIB_DIR}/libcublas.so

${CUDA_LIB_DIR}/libcublasLt.so

${CUDA_LIB_DIR}/libcudart.so

${CUDA_LIB_DIR}/libnvinfer.so

${CUDA_LIB_DIR}/libnvonnxparser.so

${CUDA_LIB_DIR}/libnvinfer_plugin.so

${CUDA_LIB_DIR}/libcurand.so.10

${CUDA_LIB_DIR}/libcufft.so.11

${ONNXRUNTIME_LIB}/libonnxruntime.so

${ONNXRUNTIME_LIB}/libonnxruntime_providers_cuda.so

${ONNXRUNTIME_LIB}/libonnxruntime_providers_shared.so

${ONNXRUNTIME_LIB}/libonnxruntime_providers_tensorrt.so

)

Contents of main.cpp

cpp

#include <iostream>

#include <fstream>

#include <string>

#include <vector>

#include <algorithm>

#include <numeric>

#include <memory>

#include <opencv2/opencv.hpp>

#include <onnxruntime_cxx_api.h>

// --- Struct that stores one detection result ---

struct Detection {

cv::RotatedRect box; // rotated rectangle

float confidence; // confidence score

int class_id; // class ID

};

// Calculate IoU between two rotated rectangles

float calculateRotatedIoU(const cv::RotatedRect& box1, const cv::RotatedRect& box2) {

cv::Point2f vertices1[4], vertices2[4];

box1.points(vertices1);

box2.points(vertices2);

std::vector<cv::Point2f> intersection_points;

cv::intersectConvexConvex(cv::Mat(4, 2, CV_32F, vertices1),

cv::Mat(4, 2, CV_32F, vertices2),

intersection_points);

float inter_area = 0.0f;

if (!intersection_points.empty()) {

inter_area = cv::contourArea(intersection_points);

}

float area1 = box1.size.area();

float area2 = box2.size.area();

return inter_area / (area1 + area2 - inter_area + 1e-5f);

}

// Manual implementation of NMS for rotated boxes

void NMSBoxesRotated(

const std::vector<cv::RotatedRect>& boxes,

const std::vector<float>& scores,

const float score_threshold,

const float iou_threshold,

std::vector<int>& indices) {

std::vector<int> sorted_indices(scores.size());

std::iota(sorted_indices.begin(), sorted_indices.end(), 0);

std::sort(sorted_indices.begin(), sorted_indices.end(),

[&scores](int i1, int i2) { return scores[i1] > scores[i2]; });

std::vector<bool> suppressed(scores.size(), false);

indices.clear();

for (size_t i = 0; i < sorted_indices.size(); ++i) {

int idx = sorted_indices[i];

if (suppressed[idx] || scores[idx] < score_threshold) {

continue;

}

indices.push_back(idx);

for (size_t j = i + 1; j < sorted_indices.size(); ++j) {

int idx2 = sorted_indices[j];

if (suppressed[idx2]) {

continue;

}

float iou = calculateRotatedIoU(boxes[idx], boxes[idx2]);

if (iou > iou_threshold) {

suppressed[idx2] = true;

}

}

}

}

void InputImageConveter(const cv::Mat& image, cv::Mat& OutImage,

const cv::Size& newShape,

cv::Vec4d& params,

bool scaleFill = false,

bool scaleUp = true,

const cv::Scalar& color = cv::Scalar(114, 114, 114))

{

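/*

params:image input image

params:OutImage output image

params:newShape target image size

params:params output parameters, stores [ratio_x, ratio_y, dw, dh]

params:scaleFill whether to resize directly, ignoring the aspect ratio

params:scaleUp whether the image may be enlarged

A direct resize would change the aspect ratio, so the image is instead scaled uniformly to fit the target shape,

then padded with a constant color, with the scaled image centered in the padded result.

*/

// cv::Vec4d is OpenCV's four-element vector of doubles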

cv::Size InputImageShape = image.size();

float R = std::min((float)newShape.height / (float)InputImageShape.height,

(float)newShape.width / (float)InputImageShape.width); // scale factor: the smaller of the two ratios, so the aspect ratio is preserved

if (!scaleUp) // when false, the image may only be shrunk, never enlarged

{

R = std::min(R, 1.0f);

}

float Ratio[2] = { R, R }; // scale factors for x and y

int New_Un_padding[2] = {(int)std::round((float)InputImageShape.width * R),

(int)std::round((float)InputImageShape.height * R) }; // scaled image size before padding

auto dw = (float)(newShape.width - New_Un_padding[0]); // total horizontal padding

auto dh = (float)(newShape.height - New_Un_padding[1]); // total vertical padding

if (scaleFill) // stretch the image to fill the target size exactly (aspect ratio not preserved)

{

dw = 0.0f;

dh = 0.0f;

New_Un_padding[0] = newShape.width;

New_Un_padding[1] = newShape.height;

Ratio[0] = (float)newShape.width / (float)InputImageShape.width;

Ratio[1] = (float)newShape.height / (float)InputImageShape.height;

}

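dw /= 2.0f; // horizontal padding per side

dh /= 2.0f; // vertical padding per side

// Build the padded image: resize if either dimension changes, then pad

if (InputImageShape.width != New_Un_padding[0] || InputImageShape.height != New_Un_padding[1])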

{

cv::resize(image, OutImage, cv::Size(New_Un_padding[0], New_Un_padding[1]));

}

else

{

OutImage = image.clone();

}

int top = int(std::round(dh - 0.1f)); // top padding

int bottom = int(std::round(dh + 0.1f)); // bottom padding

int left = int(std::round(dw - 0.1f)); // left padding

int right = int(std::round(dw + 0.1f)); // right padding

params[0] = Ratio[0]; // x scale factor

params[1] = Ratio[1]; // y scale factor

params[2] = left; // left padding (dw)

params[3] = top; // top padding (dh)

cv::copyMakeBorder(OutImage, OutImage, top, bottom, left, right, cv::BORDER_CONSTANT, color); // pad the image

}

// --- Main function ---

int main() {

// --- 1. Configuration ---

const std::string model_path = "/home/lds/KXN_CODE/obb_onnx_vedio/models/OBB1202.onnx";

const std::string image_path = "/home/lds/KXN_CODE/test_obbonnx/images/1201test4.jpg";

std::string outputPath = "/home/lds/KXN_CODE/obb_onnx_vedio/images/output_video.mp4";

std::string videoPath = "/home/lds/KXN_CODE/obb_onnx_vedio/images/E1-3F-L8.mp4"; // replace with the path to your video file

const float CONF_THRESHOLD = 0.25f;

const float NMS_THRESHOLD = 0.5f;

const float INPUT_WIDTH = 640.0f;

const float INPUT_HEIGHT = 640.0f;

// Class names of the dataset the model was trained on

const std::vector<std::string> class_names = {

"plane"

};

// --- 2. Initialize ONNX Runtime ---

Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "Yolov8_OBB_Test");

Ort::SessionOptions session_options; // create the session options

session_options.SetIntraOpNumThreads(1);

// To run on the GPU, uncomment the next line and make sure the GPU build of onnxruntime is used

//session_options.AppendExecutionProvider_CUDA(0);

Ort::Session session(env, model_path.c_str(), session_options);

// Print the model's input/output information

Ort::AllocatorWithDefaultOptions allocator;

std::vector<const char*> input_names_ptr;

std::vector<std::vector<int64_t>> input_shapes;

std::vector<const char*> output_names_ptr;

std::vector<std::vector<int64_t>> output_shapes;

// Input info

size_t num_input_nodes = session.GetInputCount();

input_names_ptr.reserve(num_input_nodes);

input_shapes.reserve(num_input_nodes);

for (size_t i = 0; i < num_input_nodes; i++) {

char* input_name = session.GetInputName(i, allocator);

input_names_ptr.push_back(input_name);

Ort::TypeInfo input_type_info = session.GetInputTypeInfo(i);

auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();

auto input_dims = input_tensor_info.GetShape();

input_shapes.push_back(input_dims);

std::cout << "Input " << i << " : " << input_name << " [";

for (size_t j = 0; j < input_dims.size(); ++j) std::cout << input_dims[j] << (j < input_dims.size() - 1 ? ", " : "");

std::cout << "]" << std::endl;

}

// Output info

size_t num_output_nodes = session.GetOutputCount();

output_names_ptr.reserve(num_output_nodes);

output_shapes.reserve(num_output_nodes);

for (size_t i = 0; i < num_output_nodes; i++) {

char* output_name = session.GetOutputName(i, allocator);

output_names_ptr.push_back(output_name);

Ort::TypeInfo output_type_info = session.GetOutputTypeInfo(i);

auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();

auto output_dims = output_tensor_info.GetShape();

output_shapes.push_back(output_dims);

std::cout << "Output " << i << " : " << output_name << " [";

for (size_t j = 0; j < output_dims.size(); ++j) std::cout << output_dims[j] << (j < output_dims.size() - 1 ? ", " : "");

std::cout << "]" << std::endl;

}

std::cout << " 000"<<std::endl;

// // --- 3. Read and preprocess the image ---

// cv::Mat original_image = cv::imread(image_path);

// if (original_image.empty()) {

// std::cerr << "Error: Could not read image from " << image_path << std::endl;

// return -1;

// }

// Option 2: read from a video file

cv::VideoCapture cap(videoPath);

// Check that the video opened successfully

if (!cap.isOpened()) {

std::cout << "Cannot open the video file or camera!" << std::endl;

return -1;

}

// Query the video properties

double fps = cap.get(cv::CAP_PROP_FPS);

int frameWidth = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_WIDTH));

int frameHeight = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_HEIGHT));

int totalFrames = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_COUNT));

std::cout << "Video info:" << std::endl;

std::cout << "Resolution: " << frameWidth << "x" << frameHeight << std::endl;

std::cout << "Frame rate: " << fps << " FPS" << std::endl;

std::cout << "Total frames: " << totalFrames << std::endl;

cv::Mat original_image;

int frameCount = 0;

// Create the VideoWriter

cv::VideoWriter outputVideo;

bool isColor = true; // whether the output video is in color

// Try to open the video writer

int fourcc = cv::VideoWriter::fourcc('M', 'J', 'P', 'G'); // MJPG codec

outputVideo.open(outputPath, fourcc, fps, cv::Size(frameWidth, frameHeight), isColor);

if (!outputVideo.isOpened()) {

std::cerr << "Cannot create the output video file!" << std::endl;

// Fall back to the default codec

outputVideo.open(outputPath, 0, fps, cv::Size(frameWidth, frameHeight), isColor);

if (!outputVideo.isOpened()) {

std::cerr << "The default codec failed as well!" << std::endl;

return -1;

}

std::cout << "Using the default codec" << std::endl;

}

std::cout << "Output video: " << outputPath << std::endl;

std::cout << "Codec: " << fourcc << std::endl;

while (true) {

// Read one frame

cap >> original_image;

// An empty frame means the video has ended

if (original_image.empty()) {

std::cout << "End of video!" << std::endl;

break;

}

int _inputWidth = 640;

int _inputHeight = 640;

cv::Mat blob; // OpenCV matrix that holds the preprocessed image data fed to the network

int img_height = original_image.rows;

int img_width = original_image.cols;

cv::Mat netInputImg; // network input image

cv::Vec4d params; // stores [ratio_x, ratio_y, dw, dh]

InputImageConveter(original_image, netInputImg, cv::Size(_inputWidth, _inputHeight), params);

std::cout << "params[0]: " << params[0] <<std::endl;

std::cout << "params[1]: " << params[1] <<std::endl;

std::cout << "params[2]: " << params[2] <<std::endl;

std::cout << "params[3]: " << params[3] <<std::endl;

cv::dnn::blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_inputWidth, _inputHeight), cv::Scalar(0, 0, 0), true, false);

//std::cout << "Blob values: " << blob << std::endl;

//bool success = cv::imwrite("/home/lds/KXN_CODE/test_obbonnx/blob1.jpg",blob);

//if (success) {

//std::cout << "Blob saved successfully!" << std::endl;

//} else {

// std::cout << "Failed to save blob!" << std::endl;

//}

// --- 4. Create the input tensor and run inference ---

std::vector<int64_t> input_shape = {1, 3, static_cast<int64_t>(INPUT_HEIGHT), static_cast<int64_t>(INPUT_WIDTH)};

Ort::MemoryInfo memory_info = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);

Ort::Value input_tensor = Ort::Value::CreateTensor<float>(memory_info, blob.ptr<float>(), blob.total(), input_shape.data(), input_shape.size());

auto output_tensors = session.Run(Ort::RunOptions{nullptr}, input_names_ptr.data(), &input_tensor, 1, output_names_ptr.data(), output_names_ptr.size());

// --- 5. Post-processing ---

const float* output_data = output_tensors[0].GetTensorData<float>();

auto output_shape = output_shapes[0]; // [1, 4 + num_classes + 1, num_proposals], e.g. [1, 20, 8400]

int num_classes = output_shape[1] - 5; // 4 box values and 1 angle are not classes, e.g. 20 - 5 = 15

int num_proposals = output_shape[2]; // e.g. 8400

std::cout << "num_classes " << num_classes <<std::endl;

std::cout << "num_proposals " << num_proposals <<std::endl;

size_t total_elements = 1;

for (auto dim : output_shape) {

total_elements *= dim;

}

std::cout << "total_elements " << total_elements <<std::endl;

std::cout << " 222"<<std::endl;

std::vector<Detection> final_detections;

std::vector<cv::RotatedRect> boxes;

std::vector<float> scores;

std::vector<int> class_ids;

int num_bbox = 0;

// Iterate over all proposals. The output is read as channel-major, assuming the usual

// OBB export layout: rows 0..3 are cx, cy, w, h, the next num_classes rows are class

// scores, and the last row is the angle in radians.

for (int i = 0; i < num_proposals; ++i) {

// Undo the letterbox: subtract the padding, then divide by the scale factor.

// The result is already in original-frame coordinates, so no further rescaling is applied.

float cx = (output_data[0 * num_proposals + i] - params[2]) / params[0];

float cy = (output_data[1 * num_proposals + i] - params[3]) / params[1];

float w = output_data[2 * num_proposals + i] / params[0];

float h = output_data[3 * num_proposals + i] / params[1];

float angle = output_data[(4 + num_classes) * num_proposals + i] * 180.0f / static_cast<float>(CV_PI); // radians to degrees

// Find the highest class score

float max_class_score = 0.0f;

int class_id = -1;

for (int j = 0; j < num_classes; ++j) {

float score = output_data[(4 + j) * num_proposals + i];

if (score > max_class_score) {

max_class_score = score;

class_id = j;

}

}

if (max_class_score > CONF_THRESHOLD) {

num_bbox += 1;

cv::RotatedRect box;

box.center.x = cx;

box.center.y = cy;

box.size.width = w;

box.size.height = h;

box.angle = angle;

std::cout << "idx: " << num_bbox <<" cx: " << box.center.x <<" cy: " << box.center.y <<" w: " << box.size.width <<" h: " << box.size.height <<" angle: " << box.angle <<" max_class_score: " << max_class_score << std::endl;

boxes.push_back(box);

scores.push_back(max_class_score);

class_ids.push_back(class_id);

}

}

// --- 6. Apply NMS ---

std::vector<int> indices;

NMSBoxesRotated(boxes, scores, CONF_THRESHOLD, NMS_THRESHOLD, indices);

// --- 7. Draw the final results ---

for (int idx : indices) {

std::cout << "fin idx: " << idx <<std::endl;

const auto& box = boxes[idx];

float score = scores[idx];

int class_id = class_ids[idx];

// Get the four vertices of the rotated rectangle

cv::Point2f vertices[4];

box.points(vertices);

// Draw the rotated box

for (int j = 0; j < 4; ++j) {

cv::line(original_image, vertices[j], vertices[(j + 1) % 4], cv::Scalar(0, 255, 0), 2);

}

// Draw the label

std::string label = class_names[class_id] + ": " + std::to_string(score).substr(0, 4);

int baseline;

cv::Size text_size = cv::getTextSize(label, cv::FONT_HERSHEY_SIMPLEX, 0.6, 1, &baseline);

cv::Point text_origin(vertices[0].x, vertices[0].y - 5); // draw just above the first vertex

cv::putText(original_image, label, text_origin, cv::FONT_HERSHEY_SIMPLEX, 0.6, cv::Scalar(0, 255, 0), 1);

}

outputVideo.write(original_image);

// // --- 8. Show and save the result ---

// cv::imshow("YOLOv8-OBB C++ Inference", original_image);

// cv::waitKey(0);

// cv::destroyAllWindows();

// cv::imwrite("result_cpp.jpg", original_image);

// std::cout << "Result saved to result_cpp.jpg" << std::endl;

}

// --- 9. Release resources ---

cap.release();

outputVideo.release();

return 0;

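}

If the program fails at run time because an OpenCV library cannot be found, add the library directory to the environment with the following command: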

export LD_LIBRARY_PATH=/usr/local/lib:$LD_LIBRARY_PATH
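To confirm from inside the program that the export above took effect, the variable can be inspected at start-up. This is only a diagnostic sketch: `pathListContains` and `libraryPathContains` are hypothetical helpers, and the check reports what the process sees without changing how the dynamic loader resolves libraries.

```cpp
#include <cassert>
#include <cstdlib>
#include <sstream>
#include <string>

// True if a colon-separated path list contains dir as an exact entry.
inline bool pathListContains(const std::string& pathList, const std::string& dir) {
    std::stringstream stream(pathList);
    std::string entry;
    while (std::getline(stream, entry, ':')) {
        if (entry == dir) {
            return true;
        }
    }
    return false;
}

// True if LD_LIBRARY_PATH is set in this process and lists dir.
inline bool libraryPathContains(const std::string& dir) {
    const char* value = std::getenv("LD_LIBRARY_PATH");
    return value != nullptr && pathListContains(value, dir);
}
```

Calling `libraryPathContains("/usr/local/lib")` early in `main` and printing a hint when it returns false can make a missing-library failure easier to diagnose.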

Blog posts for reference: