k8s environment plan:

podSubnet (pod CIDR): 10.20.0.0/16

serviceSubnet (Service CIDR): 10.10.0.0/16

Lab environment:

Operating system: Ubuntu 20.04.3

Resources per machine: 4 GB RAM / 2 CPU cores / 120 GB disk

Network: NAT

| K8s cluster role | IP | Hostname | Components |
|---|---|---|---|
| Control plane (master) | 192.168.121.100 | master | apiserver, controller-manager, scheduler, etcd, docker, node-exporter:v1.8.1 |
| Worker (node1) | 192.168.121.101 | node1 | kubelet, kube-proxy, docker, calico, coredns, node-exporter:v1.8.1, nginx:latest |
| Worker (node2) | 192.168.121.102 | node2 | kubelet, kube-proxy, docker, calico, coredns, node-exporter:v1.8.1, nginx:latest |
| Worker (node3) | 192.168.121.103 | node3 | kubelet, kube-proxy, docker, calico, coredns, node-exporter:v1.8.1, nginx:latest |
| Load balancer (lb), primary | 192.168.121.104 | lb1 | keepalived:v2.0.19, nginx:1.28.0, node-exporter:v1.8.1 |
| Load balancer (lb), backup | 192.168.121.105 | lb2 | keepalived:v2.0.19, nginx:1.28.0, node-exporter:v1.8.1 |
| Image registry (Harbor + NFS) | 192.168.121.106 | harbor | harbor:2.4.2, node-exporter:v1.8.1, nfs-kernel-server:1:1.3.4-2.5ubuntu3.7 |
| Unified entry point (VIP) | 192.168.121.188 | - | https://www.test.com |
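The pod subnet, the Service subnet and the host network in this plan must not overlap. If they do, traffic meant for one range gets routed as if it belonged to another.

The sketch below is a minimal bash check. It converts each CIDR to an integer range and tests every pair. The function names are illustrative and not part of any tool. The list also includes 10.96.0.0/12, the default Service range kubeadm uses when `--service-cidr` is not given.

```bash
#!/usr/bin/env bash
# Sketch: check that the planned address ranges do not overlap (assumes bash).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  local ip=${1%/*} len=${1#*/} base mask start
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) if the two CIDR blocks share any address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Pod CIDR, planned Service CIDR, host network, kubeadm's default Service CIDR.
nets=(10.20.0.0/16 10.10.0.0/16 192.168.121.0/24 10.96.0.0/12)
overlaps=0
for ((i = 0; i < ${#nets[@]}; i++)); do
  for ((j = i + 1; j < ${#nets[@]}; j++)); do
    if cidrs_overlap "${nets[i]}" "${nets[j]}"; then
      echo "overlap: ${nets[i]} and ${nets[j]}"
      overlaps=$((overlaps + 1))
    fi
  done
done
(( overlaps == 0 )) && echo "OK: no overlapping ranges"
```

The same functions can vet any new range before it is added, for example `cidrs_overlap 10.20.0.0/16 "$NEW_RANGE"`.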

1 Deploy the k8s cluster

1.1 Install kubelet, kubeadm and kubectl 1.20.6 (run on every Kubernetes node: master, node1, node2, node3)

bash

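# Add the Aliyun mirror's signing key and the Kubernetes apt repository (the mirror publishes the packages under the kubernetes-xenial suite)
curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

echo "deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

apt update

apt install -y --allow-downgrades --allow-change-held-packages kubelet=1.20.6-00 kubeadm=1.20.6-00 kubectl=1.20.6-00

1.2 Initialize the k8s cluster with kubeadm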

Upload the offline image archive needed to initialize the cluster to every cluster machine, then load it manually:

bash

docker load -i k8simage-1-20-6.tar.gz

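# Initialize the cluster with kubeadm (on master only).
# Without --service-cidr, kubeadm uses its default Service range 10.96.0.0/12 (the ClusterIPs shown in section 2.2 come from it);
# add --service-cidr=10.10.0.0/16 to use the planned serviceSubnet.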

kubeadm init --kubernetes-version=1.20.6 --apiserver-advertise-address=192.168.121.100 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.20.0.0/16 --ignore-preflight-errors=SystemVerification

# On success, kubeadm prints the command for joining worker nodes:

kubeadm join 192.168.121.100:6443 --token e6p5bq.bqju9z9dqwj2ydvy \
    --discovery-token-ca-cert-hash sha256:9b3750aedaed5c1c3f95f689ce41d7da1951f2bebba6e7974a53e0b20754a09d

1.3 Configure kubectl access

bash

# Create kubectl's config file. This effectively authorizes kubectl: it uses the admin certificate in this file to manage the cluster.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

1.4 Join the worker nodes

bash

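# Run on each worker node. The token is valid for 24 hours; once it expires, print a new join command on master with: kubeadm token create --print-join-command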

kubeadm join 192.168.121.100:6443 --token e6p5bq.bqju9z9dqwj2ydvy \
    --discovery-token-ca-cert-hash sha256:9b3750aedaed5c1c3f95f689ce41d7da1951f2bebba6e7974a53e0b20754a09d

1.5 Set the worker nodes' ROLES to work

bash

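kubectl label node node1 node-role.kubernetes.io/work=work
kubectl label node node2 node-role.kubernetes.io/work=work
kubectl label node node3 node-role.kubernetes.io/work=work

1.6 Install the Kubernetes network plugin: Calico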
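calico.yaml sets the default IP pool through the `CALICO_IPV4POOL_CIDR` environment variable of the calico-node container. In the upstream manifest this entry is usually shipped commented out. For pods to get addresses from the planned 10.20.0.0/16 (the pod IPs shown later in this document do), the pool should match `--pod-network-cidr`.

Below is a bash sketch that reads the active value from the manifest before it is applied. `calico_pool_cidr` is an illustrative helper, not part of Calico.

```bash
#!/usr/bin/env bash
# Sketch: print the value of an *uncommented* CALICO_IPV4POOL_CIDR entry in a manifest.
# Prints nothing if the variable is absent or still commented out.
calico_pool_cidr() {
  awk '
    /^[ \t]*- name: CALICO_IPV4POOL_CIDR[ \t]*$/ { found = 1; next }
    found && /^[ \t]*value:/ { gsub(/"/, "", $2); print $2; exit }
  ' "$1"
}

# Usage (on master, before kubectl apply):
#   [ "$(calico_pool_cidr calico.yaml)" = "10.20.0.0/16" ] || echo "check CALICO_IPV4POOL_CIDR in calico.yaml"
```

If the helper prints nothing, uncommenting the two lines and setting the value to 10.20.0.0/16 is the explicit way to make the pool agree with kubeadm.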

bash

root@master:~# kubectl apply -f calico.yaml

2 Install the k8s web UI: Dashboard

Upload the images needed by kubernetes-dashboard to the worker nodes node1, node2 and node3, then load them manually:

2.1 Install the Dashboard

bash

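# On node1, node2 and node3: load the images
docker load -i dashboard_2_0_0.tar.gz

# On master: create the Dashboard resources
kubectl apply -f kubernetes-dashboard.yaml

2.2 Change the Service to NodePort to expose it outside the cluster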

bash

# Change the Service type to NodePort (in the editor, set spec.type: NodePort)

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

root@master:~# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.109.203.174 <none> 8000/TCP 6m14s

kubernetes-dashboard NodePort 10.107.8.70 <none> 443:31559/TCP 6m14s

Open https://192.168.121.102:31559 in a browser. A NodePort is opened on every node, so any node's IP works with port 31559.
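`kubectl edit` opens an interactive editor. In a scripted setup, `kubectl patch` can make the same change, and a JSONPath query can read back the port that was assigned.

The sketch below assumes the Dashboard Service has a single port, as in the output above. The function names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: switch the Dashboard Service to NodePort without an editor,
# then print the URL it is reachable at on a given node IP.

NS=kubernetes-dashboard
SVC=kubernetes-dashboard

expose_dashboard() {
  # Strategic-merge patch (kubectl's default) that only changes spec.type
  kubectl -n "$NS" patch svc "$SVC" -p '{"spec":{"type":"NodePort"}}'
}

dashboard_url() {
  local node_ip=$1 port
  # Read the nodePort of the Service's first (and only) port
  port=$(kubectl -n "$NS" get svc "$SVC" -o jsonpath='{.spec.ports[0].nodePort}')
  echo "https://${node_ip}:${port}"
}

# Usage (on master):
#   expose_dashboard
#   dashboard_url 192.168.121.102
```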

2.3 Log in to the Dashboard with a token

bash

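# Log in to the Dashboard with a token

# Bind the cluster-admin ClusterRole to the dashboard's ServiceAccount, so its token can view every namespace and manage all resources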

kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard

clusterrolebinding.rbac.authorization.k8s.io/dashboard-cluster-admin created

# List the Secrets in the kubernetes-dashboard namespace

kubectl get secret -n kubernetes-dashboard

NAME TYPE DATA AGE

default-token-59g55 kubernetes.io/service-account-token 3 7m52s

kubernetes-dashboard-certs Opaque 0 7m52s

kubernetes-dashboard-csrf Opaque 1 7m52s

kubernetes-dashboard-key-holder Opaque 2 7m52s

kubernetes-dashboard-token-7865l kubernetes.io/service-account-token 3 7m52s

# Describe the Secret that holds the token, kubernetes-dashboard-token-7865l (the random suffix differs per cluster)

kubectl describe secret kubernetes-dashboard-token-7865l -n kubernetes-dashboard

Name: kubernetes-dashboard-token-7865l

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: c5675c08-6606-41c2-859d-e8e54d80710a

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlkxdExiWm5Xdm0xX0F6emVwTEVTMkpQQy1pX2dTU25EUl91d0hZalpjWDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi03ODY1bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImM1Njc1YzA4LTY2MDYtNDFjMi04NTlkLWU4ZTU0ZDgwNzEwYSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.ZPuldOtmERsqTRBPVADMXWJpC8CwDPdsbOYSfKT6SmXo2hAXU9EqBngGEQecXd2OlBo7UT-748AssQJtBYcIh0KwCz9dwruszuS-KHRqG89D-2wz1Sg_uRTBA445yJctlfclGJFQKbogFvAPCF31lYCi6yLYhNhbzoiIRV3ZtPowKox0yoBXcopLT6x8W-YReQRDuHHu51La33Lu2Xsd8tkYu_4JXlrmTkPxfznUuZ3SArZguww5rirY06V7pLKE54kOMgwQ_Z7bCb2PYbuvXAg2G_h7TgGQIaNuQm2mMEVb_0lspIVj9cRYm38Yn-yOwb_bFu_2YCQfzfiWmvUyww

# Paste the value after "token:" into the Token field of the Dashboard login page to sign in
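A ServiceAccount token is a JWT: three base64url segments separated by dots. Decoding the middle segment shows which ServiceAccount the token belongs to (the `sub` claim). That is a quick way to confirm the right Secret was copied.

This bash sketch uses an illustrative helper name, `jwt_payload`. It only decodes the payload; it does not verify the signature.

```bash
#!/usr/bin/env bash
# Sketch: print the (unverified) JSON payload of a JWT such as a ServiceAccount token.
jwt_payload() {
  local payload
  payload=$(cut -d. -f2 <<< "$1")         # second dot-separated segment
  payload=$(tr '_-' '/+' <<< "$payload")  # base64url -> standard base64 alphabet
  # base64url drops the '=' padding; restore it before decoding
  case $(( ${#payload} % 4 )) in
    2) payload+='==' ;;
    3) payload+='=' ;;
  esac
  base64 -d <<< "$payload"
}

# Usage (on master):
#   jwt_payload "$(kubectl -n kubernetes-dashboard get secret kubernetes-dashboard-token-7865l -o jsonpath='{.data.token}' | base64 -d)"
```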

2.4 Log in to the Dashboard with a kubeconfig file

bash

cd /etc/kubernetes/pki/

# Add the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=./ca.crt --server="https://192.168.121.100:6443" --embed-certs=true --kubeconfig=/root/dashboard-admin.conf

Cluster "kubernetes" set.

cat /root/dashboard-admin.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJMU1Ea3hNakE1TXpJeU0xb1hEVE0xTURreE1EQTVNekl5TTFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTVkvCjlSV2lQUHE4dlpLNkw2QjkzZGsrTm1GTDhIbnZYbk5ZSnBXVUVCNmMxZExrRlJIVVVndlJIT0FkZlE3S3lBLzgKazJBWmRpdFZicVhEOTRQMG94N0xaOWdEZjlibnNKMFVPbzduT211anQ5MUZOcHZ2bXUrb2UyR28yTjc0aXUyZgoxRjhZV1ZaSUJKOVpBVnlqWk5xaEt6RTBrMzh5K1RORGxPcHN6YkJxeExKQ0xpanliNmpCd3NqRElQUUpVVDQ3CnBUVWR2alFnQll1M3FhVWk4ZWFCNzFrN0YxT1BzTU1KWW1nemtoU2Z6TXhReFF1aFo5VnJJWnJiKytEY2NZVWUKaG5iNmNIVDJiWU01NWsvWWhnNkpySEluM2xLTkNyemVwM1lhZ0xNMjNMdGQ3eUxoQXNyb1NsRXdkc3puTlBJRAp3Slc4L3MzdmZXUUJYeVhycmxNQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZPcEFyRWVnZE1WS2xlckZpVFdzbkFzY1ZHanlNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFDb3FUMjlTRXBORFM0cjMvT3J2Z1ZKY1ozRmxVemJvNXJma05SeVhDelFqZVpiZFhqMgo2dVBoZ2thdi8yYWNkU1IvZHpCQWJOTlNRMTJkaEY4SmVKYitEb1ptYlpDcUdKU1lMN3REVHJZWmpYV0dTV2ZMCmhudXdJalhVMFMwVmI1dS9vYktFNkxyRDRLRys4NW9PaVpTb0NySzM3ck5KT281TVZyRjBQNmN4QUE5eS9tWEUKOTRObXVKWW1KeTIxc1RwcisxTUQ0UG9od09CM0dKMTF1UkltdkRHaEJqTHF3TThITGp4SHBFWWdpZmU3N2FjcgpobjdvSjdKTHUwRGdGdGdobnBDeG5jWDNnU1BZd08xREpQV2o0Rml0ckJiOFgyNnU0dEtORmVkMUxiblE2YzZnCnA0amlnVHh4eEpENnR1dE1SbUoycTRjd05CSWxVdUZ2UldjUQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.121.100:6443

name: kubernetes

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

# Add the user credentials, using the token of kubernetes-dashboard-token-7865l from above

DEF_NS_ADMIN_TOKEN=$(kubectl get secret kubernetes-dashboard-token-7865l -n kubernetes-dashboard -o jsonpath={.data.token}|base64 -d)

kubectl config set-credentials dashboard-admin --token=$DEF_NS_ADMIN_TOKEN --kubeconfig=/root/dashboard-admin.conf

User "dashboard-admin" set.

cat /root/dashboard-admin.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJMU1Ea3hNakE1TXpJeU0xb1hEVE0xTURreE1EQTVNekl5TTFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTVkvCjlSV2lQUHE4dlpLNkw2QjkzZGsrTm1GTDhIbnZYbk5ZSnBXVUVCNmMxZExrRlJIVVVndlJIT0FkZlE3S3lBLzgKazJBWmRpdFZicVhEOTRQMG94N0xaOWdEZjlibnNKMFVPbzduT211anQ5MUZOcHZ2bXUrb2UyR28yTjc0aXUyZgoxRjhZV1ZaSUJKOVpBVnlqWk5xaEt6RTBrMzh5K1RORGxPcHN6YkJxeExKQ0xpanliNmpCd3NqRElQUUpVVDQ3CnBUVWR2alFnQll1M3FhVWk4ZWFCNzFrN0YxT1BzTU1KWW1nemtoU2Z6TXhReFF1aFo5VnJJWnJiKytEY2NZVWUKaG5iNmNIVDJiWU01NWsvWWhnNkpySEluM2xLTkNyemVwM1lhZ0xNMjNMdGQ3eUxoQXNyb1NsRXdkc3puTlBJRAp3Slc4L3MzdmZXUUJYeVhycmxNQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZPcEFyRWVnZE1WS2xlckZpVFdzbkFzY1ZHanlNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFDb3FUMjlTRXBORFM0cjMvT3J2Z1ZKY1ozRmxVemJvNXJma05SeVhDelFqZVpiZFhqMgo2dVBoZ2thdi8yYWNkU1IvZHpCQWJOTlNRMTJkaEY4SmVKYitEb1ptYlpDcUdKU1lMN3REVHJZWmpYV0dTV2ZMCmhudXdJalhVMFMwVmI1dS9vYktFNkxyRDRLRys4NW9PaVpTb0NySzM3ck5KT281TVZyRjBQNmN4QUE5eS9tWEUKOTRObXVKWW1KeTIxc1RwcisxTUQ0UG9od09CM0dKMTF1UkltdkRHaEJqTHF3TThITGp4SHBFWWdpZmU3N2FjcgpobjdvSjdKTHUwRGdGdGdobnBDeG5jWDNnU1BZd08xREpQV2o0Rml0ckJiOFgyNnU0dEtORmVkMUxiblE2YzZnCnA0amlnVHh4eEpENnR1dE1SbUoycTRjd05CSWxVdUZ2UldjUQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.121.100:6443

name: kubernetes

contexts: null

current-context: ""

kind: Config

preferences: {}

users:

- name: dashboard-admin

user:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlkxdExiWm5Xdm0xX0F6emVwTEVTMkpQQy1pX2dTU25EUl91d0hZalpjWDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi03ODY1bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImM1Njc1YzA4LTY2MDYtNDFjMi04NTlkLWU4ZTU0ZDgwNzEwYSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.ZPuldOtmERsqTRBPVADMXWJpC8CwDPdsbOYSfKT6SmXo2hAXU9EqBngGEQecXd2OlBo7UT-748AssQJtBYcIh0KwCz9dwruszuS-KHRqG89D-2wz1Sg_uRTBA445yJctlfclGJFQKbogFvAPCF31lYCi6yLYhNhbzoiIRV3ZtPowKox0yoBXcopLT6x8W-YReQRDuHHu51La33Lu2Xsd8tkYu_4JXlrmTkPxfznUuZ3SArZguww5rirY06V7pLKE54kOMgwQ_Z7bCb2PYbuvXAg2G_h7TgGQIaNuQm2mMEVb_0lspIVj9cRYm38Yn-yOwb_bFu_2YCQfzfiWmvUyww

# Add the context

kubectl config set-context dashboard-admin@kubernetes --cluster=kubernetes --user=dashboard-admin --kubeconfig=/root/dashboard-admin.conf

Context "dashboard-admin@kubernetes" created.

cat /root/dashboard-admin.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJMU1Ea3hNakE1TXpJeU0xb1hEVE0xTURreE1EQTVNekl5TTFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTVkvCjlSV2lQUHE4dlpLNkw2QjkzZGsrTm1GTDhIbnZYbk5ZSnBXVUVCNmMxZExrRlJIVVVndlJIT0FkZlE3S3lBLzgKazJBWmRpdFZicVhEOTRQMG94N0xaOWdEZjlibnNKMFVPbzduT211anQ5MUZOcHZ2bXUrb2UyR28yTjc0aXUyZgoxRjhZV1ZaSUJKOVpBVnlqWk5xaEt6RTBrMzh5K1RORGxPcHN6YkJxeExKQ0xpanliNmpCd3NqRElQUUpVVDQ3CnBUVWR2alFnQll1M3FhVWk4ZWFCNzFrN0YxT1BzTU1KWW1nemtoU2Z6TXhReFF1aFo5VnJJWnJiKytEY2NZVWUKaG5iNmNIVDJiWU01NWsvWWhnNkpySEluM2xLTkNyemVwM1lhZ0xNMjNMdGQ3eUxoQXNyb1NsRXdkc3puTlBJRAp3Slc4L3MzdmZXUUJYeVhycmxNQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZPcEFyRWVnZE1WS2xlckZpVFdzbkFzY1ZHanlNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFDb3FUMjlTRXBORFM0cjMvT3J2Z1ZKY1ozRmxVemJvNXJma05SeVhDelFqZVpiZFhqMgo2dVBoZ2thdi8yYWNkU1IvZHpCQWJOTlNRMTJkaEY4SmVKYitEb1ptYlpDcUdKU1lMN3REVHJZWmpYV0dTV2ZMCmhudXdJalhVMFMwVmI1dS9vYktFNkxyRDRLRys4NW9PaVpTb0NySzM3ck5KT281TVZyRjBQNmN4QUE5eS9tWEUKOTRObXVKWW1KeTIxc1RwcisxTUQ0UG9od09CM0dKMTF1UkltdkRHaEJqTHF3TThITGp4SHBFWWdpZmU3N2FjcgpobjdvSjdKTHUwRGdGdGdobnBDeG5jWDNnU1BZd08xREpQV2o0Rml0ckJiOFgyNnU0dEtORmVkMUxiblE2YzZnCnA0amlnVHh4eEpENnR1dE1SbUoycTRjd05CSWxVdUZ2UldjUQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.121.100:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: dashboard-admin

name: dashboard-admin@kubernetes

current-context: ""

kind: Config

preferences: {}

users:

- name: dashboard-admin

user:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlkxdExiWm5Xdm0xX0F6emVwTEVTMkpQQy1pX2dTU25EUl91d0hZalpjWDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi03ODY1bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImM1Njc1YzA4LTY2MDYtNDFjMi04NTlkLWU4ZTU0ZDgwNzEwYSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.ZPuldOtmERsqTRBPVADMXWJpC8CwDPdsbOYSfKT6SmXo2hAXU9EqBngGEQecXd2OlBo7UT-748AssQJtBYcIh0KwCz9dwruszuS-KHRqG89D-2wz1Sg_uRTBA445yJctlfclGJFQKbogFvAPCF31lYCi6yLYhNhbzoiIRV3ZtPowKox0yoBXcopLT6x8W-YReQRDuHHu51La33Lu2Xsd8tkYu_4JXlrmTkPxfznUuZ3SArZguww5rirY06V7pLKE54kOMgwQ_Z7bCb2PYbuvXAg2G_h7TgGQIaNuQm2mMEVb_0lspIVj9cRYm38Yn-yOwb_bFu_2YCQfzfiWmvUyww

# Make dashboard-admin@kubernetes the current-context

kubectl config use-context dashboard-admin@kubernetes --kubeconfig=/root/dashboard-admin.conf

Switched to context "dashboard-admin@kubernetes".

cat /root/dashboard-admin.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJMU1Ea3hNakE1TXpJeU0xb1hEVE0xTURreE1EQTVNekl5TTFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTVkvCjlSV2lQUHE4dlpLNkw2QjkzZGsrTm1GTDhIbnZYbk5ZSnBXVUVCNmMxZExrRlJIVVVndlJIT0FkZlE3S3lBLzgKazJBWmRpdFZicVhEOTRQMG94N0xaOWdEZjlibnNKMFVPbzduT211anQ5MUZOcHZ2bXUrb2UyR28yTjc0aXUyZgoxRjhZV1ZaSUJKOVpBVnlqWk5xaEt6RTBrMzh5K1RORGxPcHN6YkJxeExKQ0xpanliNmpCd3NqRElQUUpVVDQ3CnBUVWR2alFnQll1M3FhVWk4ZWFCNzFrN0YxT1BzTU1KWW1nemtoU2Z6TXhReFF1aFo5VnJJWnJiKytEY2NZVWUKaG5iNmNIVDJiWU01NWsvWWhnNkpySEluM2xLTkNyemVwM1lhZ0xNMjNMdGQ3eUxoQXNyb1NsRXdkc3puTlBJRAp3Slc4L3MzdmZXUUJYeVhycmxNQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZPcEFyRWVnZE1WS2xlckZpVFdzbkFzY1ZHanlNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFDb3FUMjlTRXBORFM0cjMvT3J2Z1ZKY1ozRmxVemJvNXJma05SeVhDelFqZVpiZFhqMgo2dVBoZ2thdi8yYWNkU1IvZHpCQWJOTlNRMTJkaEY4SmVKYitEb1ptYlpDcUdKU1lMN3REVHJZWmpYV0dTV2ZMCmhudXdJalhVMFMwVmI1dS9vYktFNkxyRDRLRys4NW9PaVpTb0NySzM3ck5KT281TVZyRjBQNmN4QUE5eS9tWEUKOTRObXVKWW1KeTIxc1RwcisxTUQ0UG9od09CM0dKMTF1UkltdkRHaEJqTHF3TThITGp4SHBFWWdpZmU3N2FjcgpobjdvSjdKTHUwRGdGdGdobnBDeG5jWDNnU1BZd08xREpQV2o0Rml0ckJiOFgyNnU0dEtORmVkMUxiblE2YzZnCnA0amlnVHh4eEpENnR1dE1SbUoycTRjd05CSWxVdUZ2UldjUQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.121.100:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: dashboard-admin

name: dashboard-admin@kubernetes

current-context: dashboard-admin@kubernetes

kind: Config

preferences: {}

users:

- name: dashboard-admin

user:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlkxdExiWm5Xdm0xX0F6emVwTEVTMkpQQy1pX2dTU25EUl91d0hZalpjWDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi03ODY1bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImM1Njc1YzA4LTY2MDYtNDFjMi04NTlkLWU4ZTU0ZDgwNzEwYSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.ZPuldOtmERsqTRBPVADMXWJpC8CwDPdsbOYSfKT6SmXo2hAXU9EqBngGEQecXd2OlBo7UT-748AssQJtBYcIh0KwCz9dwruszuS-KHRqG89D-2wz1Sg_uRTBA445yJctlfclGJFQKbogFvAPCF31lYCi6yLYhNhbzoiIRV3ZtPowKox0yoBXcopLT6x8W-YReQRDuHHu51La33Lu2Xsd8tkYu_4JXlrmTkPxfznUuZ3SArZguww5rirY06V7pLKE54kOMgwQ_Z7bCb2PYbuvXAg2G_h7TgGQIaNuQm2mMEVb_0lspIVj9cRYm38Yn-yOwb_bFu_2YCQfzfiWmvUyww
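Next, /root/dashboard-admin.conf is copied to the machine running the browser and chosen under the Kubeconfig option on the login page. Before that, it is worth confirming that the token inside it really carries the cluster-admin rights bound in 2.3. `kubectl auth can-i` answers exactly that question.

A sketch wrapping it; the function name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if the kubeconfig's credentials may do everything in every namespace.
kubeconfig_is_admin() {
  local cfg=$1 answer
  # '*' '*' = any verb on any resource; --all-namespaces checks cluster-wide
  answer=$(kubectl --kubeconfig="$cfg" auth can-i '*' '*' --all-namespaces 2>/dev/null)
  [ "$answer" = "yes" ]
}

# Usage (on master):
#   kubeconfig_is_admin /root/dashboard-admin.conf && echo "dashboard-admin.conf has cluster-admin rights"
```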

3 Deploy keepalived + nginx

3.1 Install keepalived and nginx (on lb1 and lb2)

bash

root@lb1:~# apt install -y keepalived nginx

3.2 Configure nginx (on lb1 and lb2)

bash

root@lb1:~# vim /etc/nginx/nginx.conf

-----------------------------------------------

# The nginx.org packages create the nginx user; Ubuntu's own nginx package runs as www-data
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;
# With Ubuntu's own nginx package, the stream module is a dynamic module; keep this include so it is loaded:
# include /etc/nginx/modules-enabled/*.conf;

events {
    worker_connections 1024;
}

stream {
    log_format main '$remote_addr [$time_local] $protocol $status $bytes_sent $bytes_received $session_time';
    access_log /var/log/nginx/k8s-access.log main;

    # Layer-4 proxy for the Kubernetes API server
    upstream k8s-apiserver {
        server 192.168.121.100:6443;   # API server on master
    }
    server {
        listen 6443;
        proxy_pass k8s-apiserver;
        proxy_timeout 300s;
        proxy_connect_timeout 10s;
    }

    # Layer-4 proxy for the Ingress HTTPS port (443) on the k8s nodes
    upstream k8s_ingress_https {
        least_conn;                    # send new connections to the node with the fewest active ones
        server 192.168.121.101:443;    # node1
        server 192.168.121.102:443;    # node2
        server 192.168.121.103:443;    # node3
    }
    server {
        listen 443;
        proxy_pass k8s_ingress_https;
        proxy_timeout 300s;
        proxy_connect_timeout 10s;
    }
}

# Plain HTTP on port 80: redirect www.test.com to HTTPS
http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;

    # Redirect www.test.com from port 80 to HTTPS
    server {
        listen 80;
        server_name www.test.com;               # matches only www.test.com
        return 308 https://$host$request_uri;   # keep the full request path
    }

    # Any other host name on port 80 gets a 404
    server {
        listen 80 default_server;
        server_name _;
        return 404;
    }
}

-----------------------------------------------------------------

# Check the nginx configuration

root@lb1:~# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

# Restart nginx, enable it at boot and check its status

root@lb1:~# systemctl restart nginx && systemctl enable nginx && systemctl status nginx

3.3 Configure keepalived (on lb1 and lb2)

lb1:

bash

root@lb1:~# vim /etc/keepalived/keepalived.conf

------------------------------------------------

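global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_nginx {
    script "/usr/bin/pgrep nginx"
    interval 2
    # Negative weight: while nginx is down, the priority drops by 20 (100 - 20 = 80 < 90),
    # so the VIP moves to lb2. With a positive weight of 2, an nginx failure alone would not move the VIP.
    weight -20
}

vrrp_instance VI_1 {
    state MASTER
    # adjust to the NIC name on this host
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # VIP
    virtual_ipaddress {
        192.168.121.188/24
    }
    track_script {
        check_nginx
    }
}

lb2: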

bash

root@lb2:~# vim /etc/keepalived/keepalived.conf

------------------------------------

global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_nginx {
    script "/usr/bin/pgrep nginx"
    interval 2
    # same weight as on lb1
    weight -20
}

vrrp_instance VI_1 {
    state BACKUP
    # adjust to the NIC name on this host
    interface eth0
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.121.188/24
    }
    track_script {
        check_nginx
    }
}

--------------------------------------------

# 启动服务设置开机自启动并检查启动状态

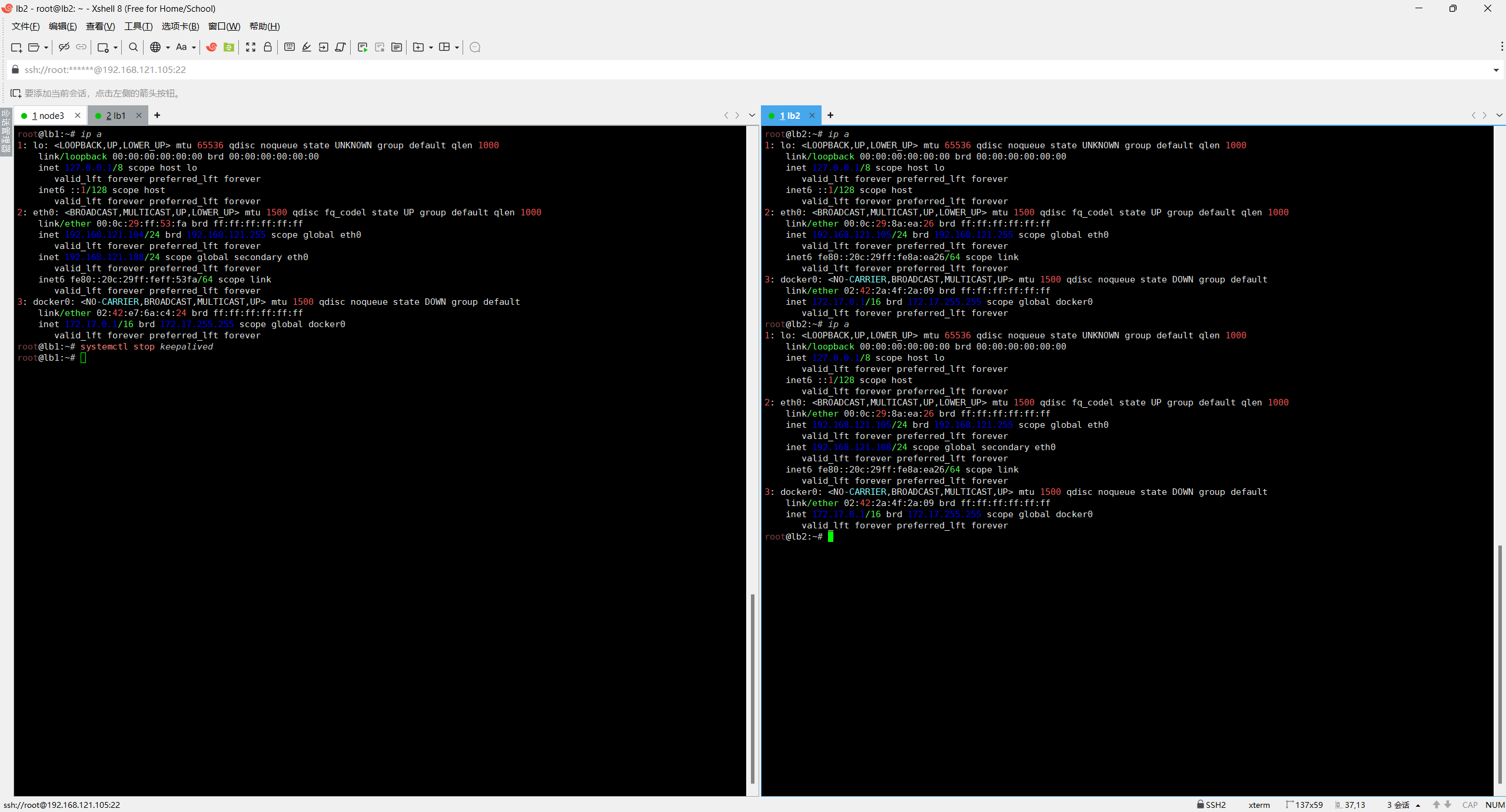

root@lb2:~# systemctl restart keepalived && systemctl enable keepalived && systemctl status keepalived3.4 测试VIP是否正常漂移
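A small bash sketch can check whether a node currently holds the VIP. It reads `ip -o -4 addr show`, which prints one address per line with the address/prefix in the fourth field. `holds_vip` is an illustrative helper name.

```bash
#!/usr/bin/env bash
# Sketch: succeed if the given IPv4 address appears in `ip -o -4 addr show` output read from stdin.
holds_vip() {
  local vip=$1
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}

# Usage on lb2 after `systemctl stop keepalived` on lb1:
#   ip -o -4 addr show | holds_vip 192.168.121.188 && echo "lb2 holds the VIP"
```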

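Stop keepalived on lb1, then check the network interfaces on lb2.

After lb1's simulated failure, the VIP moved to lb2 as expected, and lb2 took over serving external traffic.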

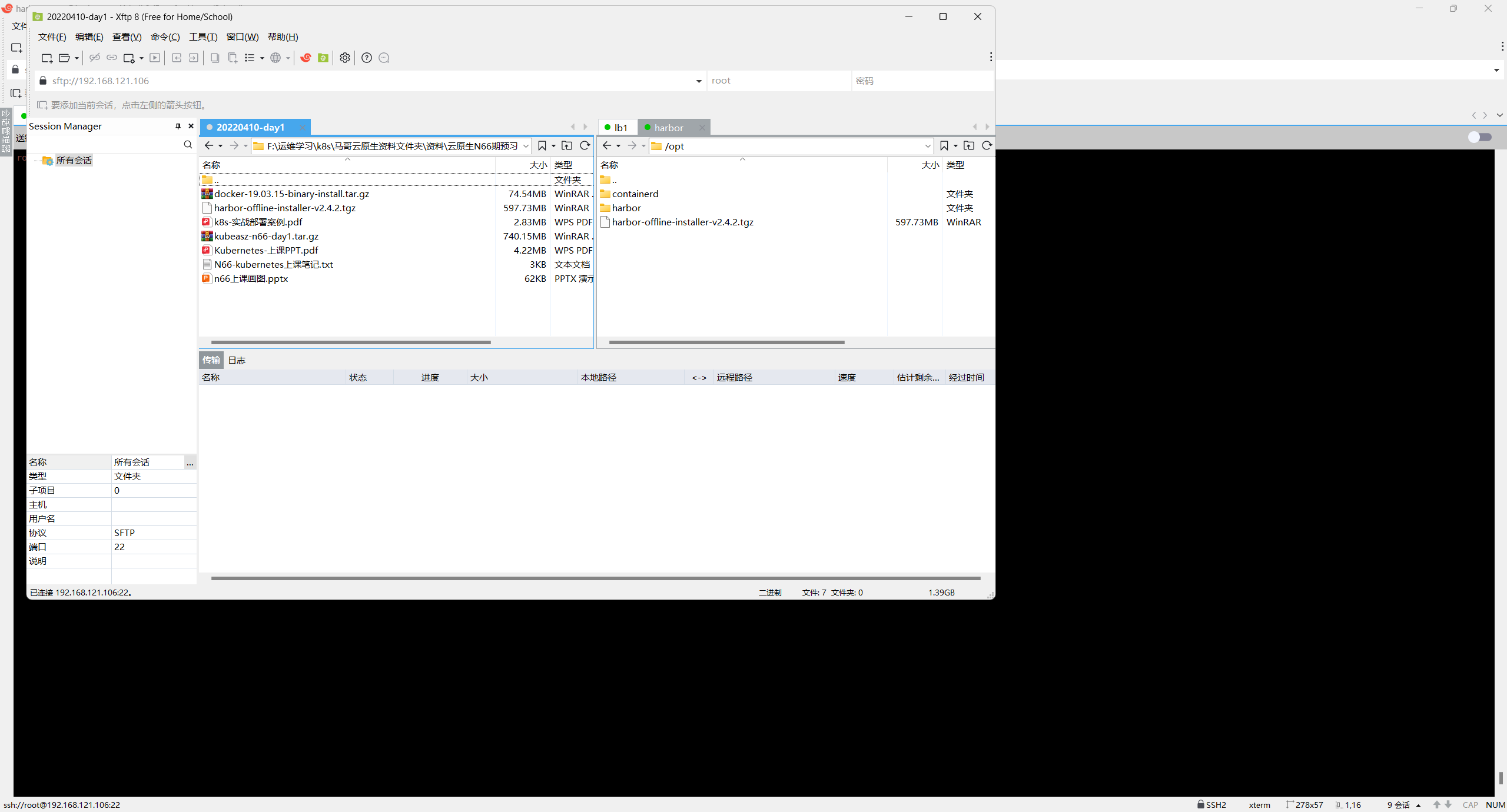
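The failover above was triggered by stopping keepalived itself. Whether the VIP also moves when only nginx dies depends on the `vrrp_script` weight:

- With a positive weight, keepalived adds the weight to the priority while the check succeeds.
- With a negative weight, keepalived subtracts it while the check fails.

The node with the higher effective priority holds the VIP. The bash sketch below illustrates that rule with the priorities used here. It is a model of the rule, not keepalived code.

```bash
#!/usr/bin/env bash
# Sketch of keepalived's priority rule for a tracked vrrp_script with a non-zero weight.
# effective_priority BASE WEIGHT CHECK_OK   (CHECK_OK: 1 = script succeeds, 0 = it fails)
effective_priority() {
  local base=$1 weight=$2 ok=$3
  # weight > 0: added while the check succeeds; weight < 0: added (i.e. subtracted) while it fails
  if (( (weight > 0 && ok == 1) || (weight < 0 && ok == 0) )); then
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}

# vip_holder LB1_OK LB2_OK WEIGHT
# Prints which node wins the election with base priorities 100 (lb1) and 90 (lb2).
vip_holder() {
  local p1 p2
  p1=$(effective_priority 100 "$3" "$1")
  p2=$(effective_priority 90 "$3" "$2")
  if (( p1 > p2 )); then echo lb1
  elif (( p2 > p1 )); then echo lb2
  else echo tie   # VRRP breaks ties by the higher primary IP address
  fi
}

# nginx stopped on lb1, still running on lb2:
echo "weight 2:   $(vip_holder 0 1 2)"     # 100 vs 92 -> lb1 keeps the VIP
echo "weight -20: $(vip_holder 0 1 -20)"   # 80 vs 90 -> the VIP moves to lb2
```

To test this case on the real nodes, stop nginx (not keepalived) on lb1 with `systemctl stop nginx`. Then check whether 192.168.121.188 shows up in `ip addr show` on lb2.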

4 Deploy Harbor

Upload the offline installer package to the harbor server.

Extract it:

bash

root@harbor:/opt# tar -xvf harbor-offline-installer-v2.4.2.tgz

root@harbor:/opt# cd harbor

root@harbor:/opt/harbor# cp harbor.yml.tmpl harbor.yml # copy the template

# Generate a self-signed SSL certificate

root@harbor:/opt/harbor# mkdir certs

root@harbor:/opt/harbor# cd certs/

root@harbor:/opt/harbor/certs# openssl genrsa -out ./harbor-ca.key

root@harbor:/opt/harbor/certs# openssl req -x509 -new -nodes -key ./harbor-ca.key -subj "/CN=harbor.test.com" -addext "subjectAltName=DNS:harbor.test.com" -days 7120 -out ./harbor-ca.crt

root@harbor:/opt/harbor/certs# ls

harbor-ca.crt harbor-ca.key

root@harbor:/opt/harbor/certs# cd ..

root@harbor:/opt/harbor# vim harbor.yml

----------------------------------------

# Set the host name
hostname: harbor.test.com

# Paths of the certificate and private key
https:
  port: 443
  certificate: /opt/harbor/certs/harbor-ca.crt
  private_key: /opt/harbor/certs/harbor-ca.key

# Set the Harbor admin password
harbor_admin_password: 123456

Install Harbor:

bash

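root@harbor:/opt/harbor# ./install.sh --with-trivy --with-chartmuseum

When the installation finishes, open the host name configured above, https://harbor.test.com, in a browser to reach the web UI. The name must resolve to 192.168.121.106 on every client and cluster node, for example through an /etc/hosts entry.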
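Recent Docker releases, built with Go 1.15 or later, reject server certificates that carry the host name only in the CN field. The error is `x509: certificate relies on legacy Common Name field`. So `harbor-ca.crt` should also list `harbor.test.com` as a subjectAltName.

This bash sketch checks a certificate for a DNS SAN using the `openssl` CLI. `has_dns_san` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: succeed if a PEM certificate lists HOST as a DNS subjectAltName.
has_dns_san() {
  local crt=$1 host=$2
  openssl x509 -in "$crt" -noout -text \
    | grep -A1 'X509v3 Subject Alternative Name' \
    | grep -qF "DNS:${host}"
}

# Usage (on the harbor server):
#   has_dns_san /opt/harbor/certs/harbor-ca.crt harbor.test.com \
#     || echo "no SAN: regenerate with -addext \"subjectAltName=DNS:harbor.test.com\""
```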

5 Deploy the ingress-nginx controller

bash

root@master:~# mkdir -p yaml/ingress

root@master:~# cd yaml/ingress/

# Download the official ingress-nginx v1.2.0 manifest (bare-metal variant)

root@master:~/yaml/ingress# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.2.0/deploy/static/provider/baremetal/deploy.yaml

root@master:~/yaml/ingress# ls

deploy.yaml

root@master:~/yaml/ingress# mv deploy.yaml ingress-nginx-controller-daemonset.yaml

# Edit the manifest

root@master:~/yaml/ingress# vim ingress-nginx-controller-daemonset.yaml

-------------------------------

# Comment out the controller Service (the DaemonSet below uses the host network instead)
#apiVersion: v1
#kind: Service
#metadata:
#  labels:
#    app.kubernetes.io/component: controller
#    app.kubernetes.io/instance: ingress-nginx
#    app.kubernetes.io/name: ingress-nginx
#    app.kubernetes.io/part-of: ingress-nginx
#    app.kubernetes.io/version: 1.2.0
#  name: ingress-nginx-controller
#  namespace: ingress-nginx
#spec:
#  ipFamilies:
#  - IPv4
#  ipFamilyPolicy: SingleStack
#  ports:
#  - appProtocol: http
#    name: http
#    port: 80
#    protocol: TCP
#    targetPort: http
#  - appProtocol: https
#    name: https
#    port: 443
#    protocol: TCP
#    targetPort: https
#  selector:
#    app.kubernetes.io/component: controller
#    app.kubernetes.io/instance: ingress-nginx
#    app.kubernetes.io/name: ingress-nginx
#  type: NodePort

# Change the kind to DaemonSet so a controller runs on every node, and use the host
# network so the controller can be reached at a node's host name or IP on ports 80/443
apiVersion: apps/v1
#kind: Deployment
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.0
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true   # use the host's network namespace
      hostPID: true       # use the host's PID namespace

------------------------------------

# Apply the manifest

root@master:~/yaml/ingress# kubectl apply -f ingress-nginx-controller-daemonset.yaml

# Check the pods

root@master:~/yaml/ingress# kubectl get pod -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-l7skj 0/1 Completed 0 1m 10.20.135.24 node3 <none> <none>

ingress-nginx-admission-patch-cvmjf 0/1 Completed 0 1m 10.20.104.20 node2 <none> <none>

ingress-nginx-controller-h2lht 1/1 Running 0 1m 192.168.121.102 node2 <none> <none>

ingress-nginx-controller-kdc98 1/1 Running 0 1m 192.168.121.103 node3 <none> <none>

ingress-nginx-controller-lm6g2 1/1 Running 0 1m 192.168.121.101 node1 <none> <none>

6 SSL certificate (self-signed)

bash

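# Create a working directory for the certificate
root@master:~# mkdir -p /tmp/ssl && cd /tmp/ssl

# Generate a self-signed certificate; current browsers match the host name against subjectAltName rather than CN
root@master:/tmp/ssl# openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
    -keyout tls.key \
    -out tls.crt \
    -subj "/CN=www.test.com/O=test" \
    -addext "subjectAltName=DNS:www.test.com"

# Store the pair in a TLS Secret (namespace default, the same namespace as the Ingress)
root@master:/tmp/ssl# kubectl create secret tls test-tls --key tls.key --cert tls.crt

# Inspect the Secret
root@master:/tmp/ssl# kubectl describe secret test-tls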

Name: test-tls

Namespace: default

Labels: <none>

Annotations: <none>

Type: kubernetes.io/tls

Data

====

tls.crt: 1164 bytes

tls.key: 1704 bytes

7 ConfigMaps (welcome page + Harbor credentials)
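The Harbor credentials in this section use Docker's config.json layout. In that layout, `auth` is the base64 encoding of `username:password` with no trailing newline.

The bash sketch below builds such a document. The helper name is illustrative. It assumes the credentials contain no characters that need JSON escaping.

```bash
#!/usr/bin/env bash
# Sketch: build a .dockerconfigjson document for one registry.
# Assumes user and password contain no '"' or '\' (no JSON escaping is done).
docker_config_json() {
  local registry=$1 user=$2 pass=$3 auth
  # printf '%s' (like echo -n) keeps a trailing newline out of the encoded credential
  auth=$(printf '%s:%s' "$user" "$pass" | base64 -w0)
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$registry" "$user" "$pass" "$auth"
}

# Usage:
#   docker_config_json harbor.test.com admin 123456
```

`kubectl create secret docker-registry harbor-registry-secret --docker-server=harbor.test.com --docker-username=admin --docker-password=123456` creates an equivalent image-pull Secret in one step, without the intermediate ConfigMap.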

1. Welcome-page ConfigMap

bash

# Create the directory
root@master:~/yaml# mkdir configmap
root@master:~/yaml# cd configmap
# Create the ConfigMap manifest
root@master:~/yaml/configmap# vim nginx-welcome-cm.yaml
-------------------------------------------
apiVersion: v1
kind: ConfigMap
metadata:
  name: welcome-nginx-cm
  namespace: default
data:
  index.html: |
    <!DOCTYPE html>
    <html>
    <head><title>Welcome</title></head>
    <body><h1>welcome to test.com</h1></body>
    </html>

2. Harbor credentials ConfigMap

bash

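# Base64-encode user:password (an encoding, not encryption). Use echo -n: a plain echo appends a newline that would be encoded into the credential
root@master:~/yaml/configmap# echo -n "admin:123456" | base64
YWRtaW46MTIzNDU2

# Create the ConfigMap manifest
root@master:~/yaml/configmap# vim harbor-auth-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: harbor-auth-cm
  namespace: default
data:
  .dockerconfigjson: |
    {
      "auths": {
        "harbor.test.com": {
          "username": "admin",
          "password": "123456",
          "auth": "YWRtaW46MTIzNDU2"
        }
      }
    }

3. Apply the ConfigMaps and convert the Harbor credentials into an image-pull Secret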

bash

root@master:~/yaml/configmap# kubectl apply -f .

# Turn the ConfigMap's .dockerconfigjson into a Secret of type kubernetes.io/dockerconfigjson

kubectl create secret generic harbor-registry-secret --from-literal=.dockerconfigjson="$(kubectl get cm harbor-auth-cm -o jsonpath='{.data.\.dockerconfigjson}')" --type=kubernetes.io/dockerconfigjson

8 Deploy dynamically provisioned NFS PVs (for nginx logs)
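In this section, the NFS export is added with `echo ... >> /etc/exports`, which appends a duplicate line every time the step is repeated. Below is a bash sketch of an idempotent append; the helper name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: append a line to a file only if that exact line is not already present.
append_once() {
  local line=$1 file=$2
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage on the harbor node (then run `exportfs -r` as before):
#   append_once "/data/nfs/nginx-logs *(rw,sync,no_root_squash,no_subtree_check)" /etc/exports
```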

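- Set up the NFS server on the harbor node

bash

# Install the NFS server
root@harbor:~# apt install -y nfs-kernel-server
# Create the shared directory
root@harbor:~# mkdir -p /data/nfs/nginx-logs
root@harbor:~# chmod -R 777 /data/nfs/nginx-logs
# Export it (appends a line to /etc/exports)
root@harbor:~# echo "/data/nfs/nginx-logs *(rw,sync,no_root_squash,no_subtree_check)" >> /etc/exports
# Reload the export table
root@harbor:~# exportfs -r
# Verify the export
root@harbor:~# showmount -e localhost

- Deploy the NFS provisioner (every Kubernetes node also needs the NFS client, installed with apt install -y nfs-common, so that the kubelet can mount NFS volumes)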

bash

root@master:~/yaml# mkdir StorageClass

root@master:~/yaml# cd StorageClass

root@master:~/yaml/StorageClass# vim nfs-provisioner.yaml

-------------------------------------

apiVersion: v1
kind: Namespace
metadata:
  name: nfs-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner-sa
  namespace: nfs-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-provisioner-role
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nfs-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nfs-provisioner-role
subjects:
- kind: ServiceAccount
  name: nfs-provisioner-sa
  namespace: nfs-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-provisioner
  namespace: nfs-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-provisioner
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccountName: nfs-provisioner-sa
      containers:
      - name: nfs-provisioner
        image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
        imagePullPolicy: IfNotPresent
        env:
        - name: PROVISIONER_NAME
          value: nfs-provisioner        # the StorageClass's provisioner field must match this value
        - name: NFS_SERVER
          value: 192.168.121.106        # NFS server IP (the harbor node)
        - name: NFS_PATH
          value: /data/nfs/nginx-logs   # exported directory
        volumeMounts:
        - name: nfs-volume
          mountPath: /persistentvolumes
      volumes:
      - name: nfs-volume
        nfs:
          server: 192.168.121.106       # NFS server IP
          path: /data/nfs/nginx-logs
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-sc
provisioner: nfs-provisioner            # must match PROVISIONER_NAME in the Deployment
reclaimPolicy: Delete
volumeBindingMode: Immediate
parameters:
  archiveOnDelete: "false"              # do not archive the data when the PVC is deleted

Apply the manifest:

bash

root@master:~/yaml/StorageClass# kubectl apply -f nfs-provisioner.yaml

- Create a PVC with the ReadWriteMany (RWX) access mode

bash

root@master:~/yaml/StorageClass# vim nginx-accesslog-nfs-pvc.yaml

---------------------------------

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-accesslog-pvc
  namespace: default
spec:
  accessModes: [ReadWriteMany]          # read-write from multiple nodes/pods
  resources: { requests: { storage: 1Gi } }
  storageClassName: nfs-sc              # the NFS StorageClass

# Apply the manifest
root@master:~/yaml/StorageClass# kubectl apply -f nginx-accesslog-nfs-pvc.yaml

9 Deploy the nginx application (Deployment + Service)

- Create the directory for Harbor's CA certificate on every cluster node

bash

root@master:~# mkdir -p /etc/docker/certs.d/harbor.test.com

root@node1:~# mkdir -p /etc/docker/certs.d/harbor.test.com

root@node2:~# mkdir -p /etc/docker/certs.d/harbor.test.com

root@node3:~# mkdir -p /etc/docker/certs.d/harbor.test.com

- Copy Harbor's CA certificate to every cluster node

bash

root@harbor:/opt/harbor/certs# scp harbor-ca.crt master:/etc/docker/certs.d/harbor.test.com

root@harbor:/opt/harbor/certs# scp harbor-ca.crt node1:/etc/docker/certs.d/harbor.test.com

root@harbor:/opt/harbor/certs# scp harbor-ca.crt node2:/etc/docker/certs.d/harbor.test.com

root@harbor:/opt/harbor/certs# scp harbor-ca.crt node3:/etc/docker/certs.d/harbor.test.com

- Pull the nginx image with Docker and push it to the Harbor registry

bash

root@master:~# docker pull nginx

root@master:~# docker login harbor.test.com

root@master:~# docker tag nginx:latest harbor.test.com/library/nginx:latest

root@master:~# docker push harbor.test.com/library/nginx:latest

- Create the Deployment manifest

bash

root@master:~/yaml# mkdir deploy

root@master:~/yaml# cd deploy

root@master:~/yaml/deploy# vim nginx-deployment.yaml

-------------------------------

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  # Rolling-update settings
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
  template:
    metadata:
      labels:
        app: nginx
    spec:
      imagePullSecrets:
      - name: harbor-registry-secret    # Secret for pulling private images from Harbor
      affinity:
        podAntiAffinity:
          # Soft (preferred) anti-affinity: spread the pods across nodes when possible, but still schedule them if not
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values: [nginx]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: nginx
        image: harbor.test.com/library/nginx:latest
        ports:
        - containerPort: 80
        volumeMounts:
        # Welcome page from the ConfigMap
        - name: welcome-page
          mountPath: /usr/share/nginx/html/index.html
          subPath: index.html
        # nginx logs on the NFS-backed PVC (shared by all replicas)
        - name: accesslog
          mountPath: /var/log/nginx
        # Resource requests and limits
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 200m
            memory: 256Mi
        # Health checks
        livenessProbe:
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 10
        readinessProbe:
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 3
          periodSeconds: 5
      volumes:
      - name: welcome-page
        configMap:
          name: welcome-nginx-cm
          items:
          - key: index.html
            path: index.html
      - name: accesslog
        persistentVolumeClaim:
          claimName: nginx-accesslog-pvc
------------------------------------------

- Create the Service manifest

bash

root@master:~/yaml/deploy# vim nginx-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: default
spec:
  selector: { app: nginx }
  ports: [{ port: 80, targetPort: 80 }]
  type: ClusterIP

- Apply the manifests

bash

root@master:~/yaml/deploy# kubectl apply -f .

# Check the pods

root@master:~/yaml/deploy# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-56fcf7c4c9-4tcpg 1/1 Running 0 1m 10.20.104.10 node2 <none> <none>

nginx-deployment-56fcf7c4c9-6sjf9 1/1 Running 0 1m 10.20.135.14 node3 <none> <none>

nginx-deployment-56fcf7c4c9-n6zjl 1/1 Running 0 1m 10.20.166.144 node1 <none> <none>

10 Ingress rule

Create the Ingress manifest:

bash

root@master:~/yaml# cd ingress

root@master:~/yaml/ingress# vim ingress-test.yaml

----------------------------------------

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"          # redirect HTTP to HTTPS
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"    # redirect to HTTPS even when no TLS certificate is configured
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "30"   # timeout for connecting to the backend (seconds)
    nginx.ingress.kubernetes.io/proxy-read-timeout: "60"      # timeout for reading from the backend (seconds)
    nginx.ingress.kubernetes.io/backend-protocol: "HTTP"      # protocol used to talk to the backend
spec:
  ingressClassName: nginx
  tls:                                  # attach the TLS Secret
  - hosts: [www.test.com]
    secretName: test-tls
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /                         # path belongs to the path entry, not to backend
        pathType: Prefix
        backend:
          service:
            name: nginx-service
            port:
              number: 80

-----------------------------

# Apply the resource file

root@master:~/yaml/ingress# kubectl apply -f ingress-test.yaml

# Verify the Ingress

root@master:~/yaml/ingress# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

test-ingress nginx www.test.com 192.168.121.101,192.168.121.102,192.168.121.103 80, 443 24m

11 Prometheus + Grafana monitoring

11.1 Deploy node-exporter

11.1.1 Prerequisites
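Every image in this step goes through the same pull, tag, push sequence, and the Harbor name is always derived from the upstream name. A minimal sketch that scripts this, assuming the `harbor.test.com/monitoring` project already exists and `docker login harbor.test.com` has been done; `mirror`, `harbor_ref`, `run` and `DRY_RUN` are hypothetical helper names, and the default dry-run mode only prints the docker commands:

```bash
#!/usr/bin/env bash
# Sketch: mirror upstream images into a Harbor project.
# Assumptions: the Harbor project exists and `docker login` was already done.
HARBOR="${HARBOR:-harbor.test.com}"
PROJECT="${PROJECT:-monitoring}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the docker commands

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# harbor_ref prom/node-exporter:v1.8.1 -> harbor.test.com/monitoring/node-exporter:v1.8.1
# (drops everything up to the last "/" and prepends HARBOR/PROJECT)
harbor_ref() {
  echo "${HARBOR}/${PROJECT}/${1##*/}"
}

# Pull, retag and push each upstream image given as an argument.
mirror() {
  local src dst
  for src in "$@"; do
    dst=$(harbor_ref "$src")
    run docker pull "$src" || return 1
    run docker tag "$src" "$dst" || return 1
    run docker push "$dst" || return 1
  done
}
```

Calling `DRY_RUN=0 mirror prom/node-exporter:v1.8.1 prom/prometheus:v2.53.1 grafana/grafana:11.2.0 prom/blackbox-exporter:v0.24.0` would then perform the real pulls and pushes; keeping the name derivation in one function avoids typos in hand-written tag commands.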

bash

# Create the monitoring namespace

root@master:~# kubectl create ns monitoring

# Pull the node-exporter image and push it to the Harbor registry to shorten later pull times

root@master:~# docker pull prom/node-exporter:v1.8.1

root@master:~# docker tag prom/node-exporter:v1.8.1 harbor.test.com/monitoring/node-exporter:v1.8.1

root@master:~# docker push harbor.test.com/monitoring/node-exporter:v1.8.1

# Pull the prom/prometheus image and push it to the Harbor registry to shorten later pull times

root@master:~# docker pull prom/prometheus:v2.53.1

root@master:~# docker tag prom/prometheus:v2.53.1 harbor.test.com/monitoring/prometheus:v2.53.1

root@master:~# docker push harbor.test.com/monitoring/prometheus:v2.53.1

# Pull the grafana/grafana image and push it to the Harbor registry to shorten later pull times (the tag matches the one used in grafana-deployment.yaml)

root@master:~# docker pull grafana/grafana:11.2.0

root@master:~# docker tag grafana/grafana:11.2.0 harbor.test.com/monitoring/grafana:11.2.0

root@master:~# docker push harbor.test.com/monitoring/grafana:11.2.0

# Pull the blackbox-exporter image and push it to the Harbor registry to shorten later pull times

root@master:~# docker pull prom/blackbox-exporter:v0.24.0

root@master:~# docker tag prom/blackbox-exporter:v0.24.0 harbor.test.com/monitoring/blackbox-exporter:v0.24.0

root@master:~# docker push harbor.test.com/monitoring/blackbox-exporter:v0.24.0

11.1.2 Create the YAML and deploy node-exporter
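The DaemonSet in this step filters pseudo-filesystems out of the filesystem metrics with the regex `^/(sys|proc|dev|host|etc)($|/)`. A quick way to see which mount points it drops, assuming GNU `grep -E` treats this simple pattern the same way as node-exporter's Go regexp engine; `is_excluded` and `classify` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Sketch: check which mount points the node-exporter filesystem exclusion regex drops.
# Assumption: grep -E behaves like node-exporter's Go regexp for this simple pattern.
EXCLUDE_RE='^/(sys|proc|dev|host|etc)($|/)'

# Return 0 if the mount point would be excluded from filesystem metrics.
is_excluded() {
  printf '%s\n' "$1" | grep -Eq "$EXCLUDE_RE"
}

# Print "excluded" or "kept" for each mount point argument.
classify() {
  local mp
  for mp in "$@"; do
    if is_excluded "$mp"; then
      echo "excluded $mp"
    else
      echo "kept     $mp"
    fi
  done
}
```

The trailing `($|/)` is what keeps a path such as `/hostdata` from being dropped just because it starts with `/host`.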

bash

root@master:~/yaml# mkdir monitoring

root@master:~/yaml# cd monitoring

root@master:~/yaml/monitoring# vim node-exporter.yaml

-----------------------------

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: node-exporter

namespace: monitoring

labels:

app: node-exporter

spec:

selector:

matchLabels:

app: node-exporter

template:

metadata:

labels:

app: node-exporter

spec:

tolerations:

- key: "node-role.kubernetes.io/master" # key of the master node taint

operator: "Exists" # matches as long as the key exists (no value needed)

effect: "NoSchedule" # effect of the master node taint

hostNetwork: true # use the host network namespace

hostPID: true

imagePullSecrets: [{ name: harbor-registry-secret }]

containers:

- name: node-exporter

image: harbor.test.com/monitoring/node-exporter:v1.8.1

args:

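- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.rootfs=/rootfs # the host / is mounted at /rootfs below; without this flag that mount is unused

- --collector.filesystem.mount-points-exclude=^/(sys|proc|dev|host|etc)($|/) # current name of the deprecated --collector.filesystem.ignored-mount-points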

securityContext:

privileged: true

volumeMounts:

- name: proc

mountPath: /host/proc

- name: sys

mountPath: /host/sys

- name: rootfs

mountPath: /rootfs

volumes:

- name: proc

hostPath:

path: /proc

- name: sys

hostPath:

path: /sys

- name: rootfs

hostPath:

path: /

---

# node-exporter Service (scraped by Prometheus)

apiVersion: v1

kind: Service

metadata:

name: node-exporter

namespace: monitoring

labels:

app: node-exporter

spec:

selector:

app: node-exporter

ports:

- name: metrics

port: 9100

targetPort: 9100

type: ClusterIP

# Apply the configuration file

root@master:~/yaml/monitoring# kubectl apply -f node-exporter.yaml

# Verify that every node, including master, runs a node-exporter Pod

root@master:~/yaml/monitoring# kubectl get pods -n monitoring -l app=node-exporter -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

node-exporter-2lm7j 1/1 Running 0 5m 192.168.121.103 node3 <none> <none>

node-exporter-cvc2k 1/1 Running 0 5m 192.168.121.101 node1 <none> <none>

node-exporter-lxf86 1/1 Running 0 5m 192.168.121.100 master <none> <none>

node-exporter-q2vtd 1/1 Running 0 5m 192.168.121.102 node2 <none> <none>

11.2 Deploy the Prometheus server

11.2.1 Configure RBAC permissions for Prometheus

bash

root@master:~/yaml/monitoring# vim prometheus-rbac.yaml

-----------------------------

# Allow Prometheus to read Kubernetes resources and metrics

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus

rules:

- apiGroups: [""]

resources:

- nodes

- nodes/metrics

- services

- endpoints

- pods

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources:

- configmaps

verbs: ["get"]

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs: ["get", "list", "watch"]

- nonResourceURLs: ["/metrics", "/metrics/cadvisor"]

verbs: ["get"]

---

# Bind the ClusterRole to the default ServiceAccount in the monitoring namespace

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: prometheus

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus

subjects:

- kind: ServiceAccount

name: default

namespace: monitoring

-------------------------------------

11.2.2 Configure the Prometheus scrape jobs

bash

root@master:~/yaml/monitoring# vim prometheus-config.yaml

------------------------------------------

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: monitoring

data:

prometheus.yml: |

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

# harbor node

- job_name: 'harbor-node-exporter'

static_configs:

- targets: ['192.168.121.106:9100']

# LB primary and backup nodes

- job_name: 'lb-node-exporter'

static_configs:

- targets: ['192.168.121.104:9100','192.168.121.105:9100']

# Scrape Prometheus's own metrics

- job_name: 'prometheus'

static_configs:

- targets: ['localhost:9090']

# Scrape node-exporter metrics from the k8s cluster nodes

- job_name: 'k8s-node-exporter'

kubernetes_sd_configs:

- role: endpoints

namespaces:

names: ['monitoring']

relabel_configs:

- source_labels: [__meta_kubernetes_service_label_app]

regex: node-exporter

action: keep

- source_labels: [__meta_kubernetes_endpoint_port_name]

regex: metrics

action: keep

# Probe https://www.test.com through Blackbox Exporter (page monitoring)

- job_name: 'blackbox-exporter'

metrics_path: /probe

params:

module: [http_2xx] # module defined in blackbox.yml (expects HTTP 200)

static_configs:

- targets: ['https://www.test.com'] # the URL to probe, not the exporter's own address

relabel_configs:

- source_labels: [__address__]

target_label: __param_target

- source_labels: [__param_target]

target_label: instance

- target_label: __address__

replacement: blackbox-exporter.monitoring.svc:9115 # Blackbox Service address

- source_labels: [instance]

regex: (.*)

target_label: target

replacement: ${1}

# Scrape Kubernetes control-plane component metrics (API server)

- job_name: 'kubernetes-apiservers'

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

regex: default;kubernetes;https

action: keep

11.2.3 Deploy the Prometheus Deployment + Service

bash

root@master:~/yaml/monitoring# vim prometheus-deployment.yaml

--------------------------------

apiVersion: apps/v1

kind: Deployment

metadata:

name: prometheus

namespace: monitoring

labels:

app: prometheus

spec:

replicas: 1

selector:

matchLabels:

app: prometheus

template:

metadata:

labels:

app: prometheus

spec:

imagePullSecrets: [{ name: harbor-registry-secret }]

containers:

- name: prometheus

image: harbor.test.com/monitoring/prometheus:v2.53.1

args:

- --config.file=/etc/prometheus/prometheus.yml

- --storage.tsdb.path=/prometheus

- --web.console.libraries=/usr/share/prometheus/console_libraries

- --web.console.templates=/usr/share/prometheus/consoles

ports:

- containerPort: 9090

volumeMounts:

- name: prometheus-config

mountPath: /etc/prometheus

- name: prometheus-storage

mountPath: /prometheus

resources:

limits:

cpu: 1000m

memory: 1Gi

requests:

cpu: 500m

memory: 512Mi

volumes:

- name: prometheus-config

configMap:

name: prometheus-config

- name: prometheus-storage

emptyDir: {}

---

# Prometheus Service

apiVersion: v1

kind: Service

metadata:

name: prometheus

namespace: monitoring

spec:

selector:

app: prometheus

ports:

- port: 9090

targetPort: 9090

nodePort: 30090 # fixed NodePort

type: NodePort

11.2.4 Apply all Prometheus manifests

bash

root@master:~/yaml/monitoring# kubectl apply -f .

# Verify that the Prometheus Pod is running

root@master:~/yaml/monitoring# kubectl get pods -n monitoring -l app=prometheus

NAME READY STATUS RESTARTS AGE

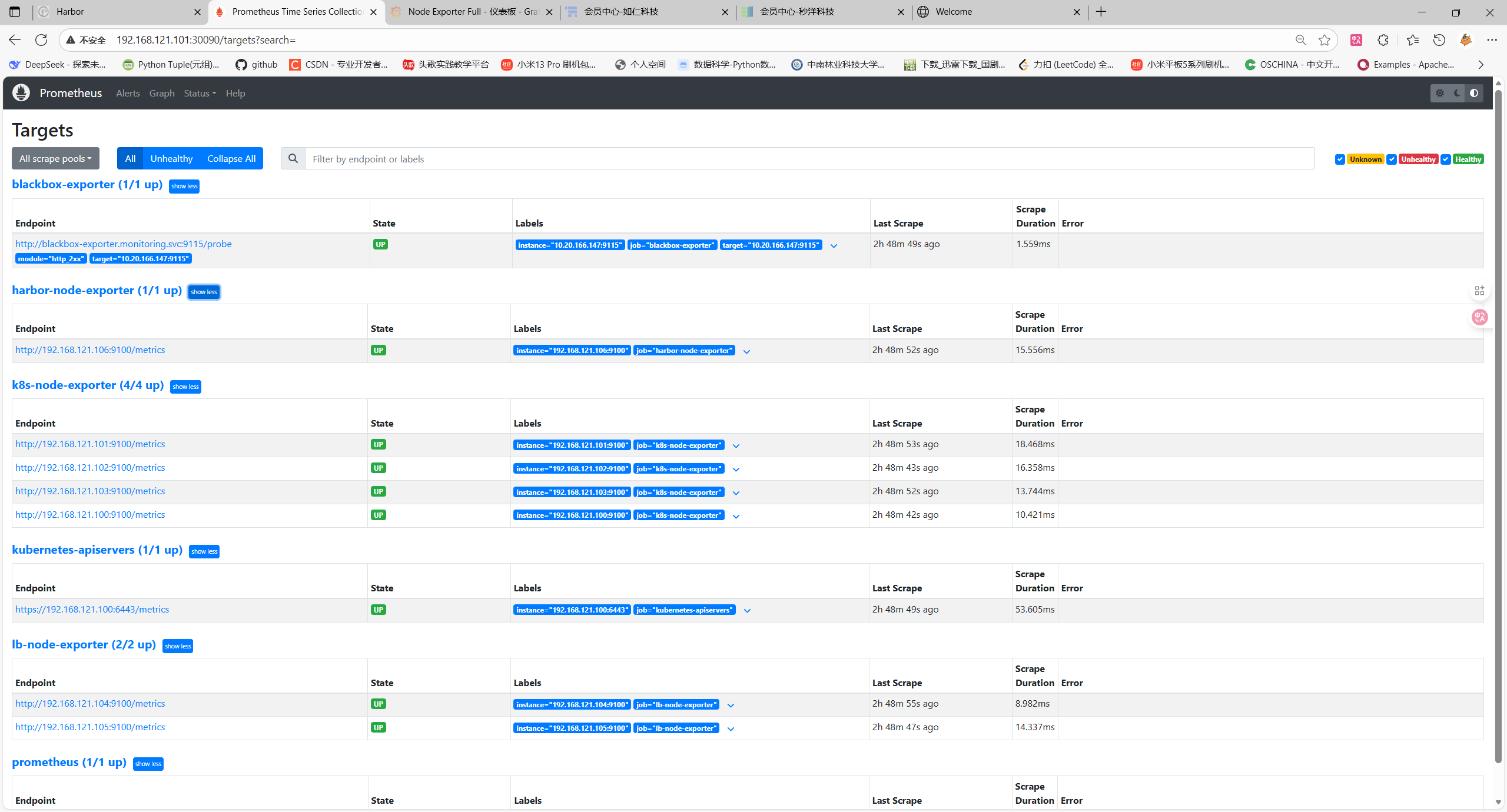

prometheus-8469769d7c-vrq9n 1/1 Running 0 3h6m访问验证192.168.121.101:30090
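Besides the web UI, the Prometheus HTTP API at `/api/v1/targets` reports each scrape target's health. A minimal sketch, assuming the NodePort address above and that `curl` is available; `count_health` and `check_targets` are hypothetical helpers that simply count `"health":"..."` fields in the compact JSON Prometheus returns, so no JSON parser is needed:

```bash
#!/usr/bin/env bash
# Sketch: summarize scrape-target health from the Prometheus targets API.
# Assumption: Prometheus is reachable on the NodePort used above.
PROM_URL="${PROM_URL:-http://192.168.121.101:30090}"

# count_health STATE: read targets JSON on stdin, print how many targets have that health.
count_health() {
  grep -o "\"health\":\"$1\"" | grep -c .
}

# Fetch active targets once and print up/down counts; return non-zero if any target is down.
check_targets() {
  local json up down
  json=$(curl -s "${PROM_URL}/api/v1/targets?state=active") || return 1
  up=$(printf '%s' "$json" | count_health up)
  down=$(printf '%s' "$json" | count_health down)
  echo "up=${up} down=${down}"
  [ "$down" -eq 0 ]
}
```

Running `check_targets` from the master should report every job configured in prometheus.yml; a non-zero `down` count points to the target to inspect on the Status -> Targets page.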

11.3 Deploy Grafana

11.3.1 Deploy the Grafana Deployment + Service

bash

root@master:~/yaml/monitoring# vim grafana-deployment.yaml

------------------------------

apiVersion: apps/v1

kind: Deployment

metadata:

name: grafana

namespace: monitoring

labels:

app: grafana

spec:

replicas: 1

selector:

matchLabels:

app: grafana

template:

metadata:

labels:

app: grafana

spec:

imagePullSecrets: [{ name: harbor-registry-secret }]

containers:

- name: grafana

image: harbor.test.com/monitoring/grafana:11.2.0

ports:

- containerPort: 3000

env:

- name: GF_SECURITY_ADMIN_PASSWORD

value: "admin123" # Grafana admin password

- name: GF_USERS_ALLOW_SIGN_UP

value: "false"

volumeMounts:

- name: grafana-storage

mountPath: /var/lib/grafana

resources:

limits:

cpu: 500m

memory: 512Mi

requests:

cpu: 200m

memory: 256Mi

volumes:

- name: grafana-storage

emptyDir: {}

---

# Grafana Service (exposed via NodePort)

apiVersion: v1

kind: Service

metadata:

name: grafana

namespace: monitoring

spec:

selector:

app: grafana

ports:

- port: 3000

targetPort: 3000

nodePort: 30030 # fixed NodePort

type: NodePort

-----------------------------------

11.3.2 Apply the Grafana configuration

bash

root@master:~/yaml/monitoring# kubectl apply -f grafana-deployment.yaml

# Verify that the Grafana Pod is running

root@master:~/yaml/monitoring# kubectl get pods -n monitoring -l app=grafana

NAME READY STATUS RESTARTS AGE

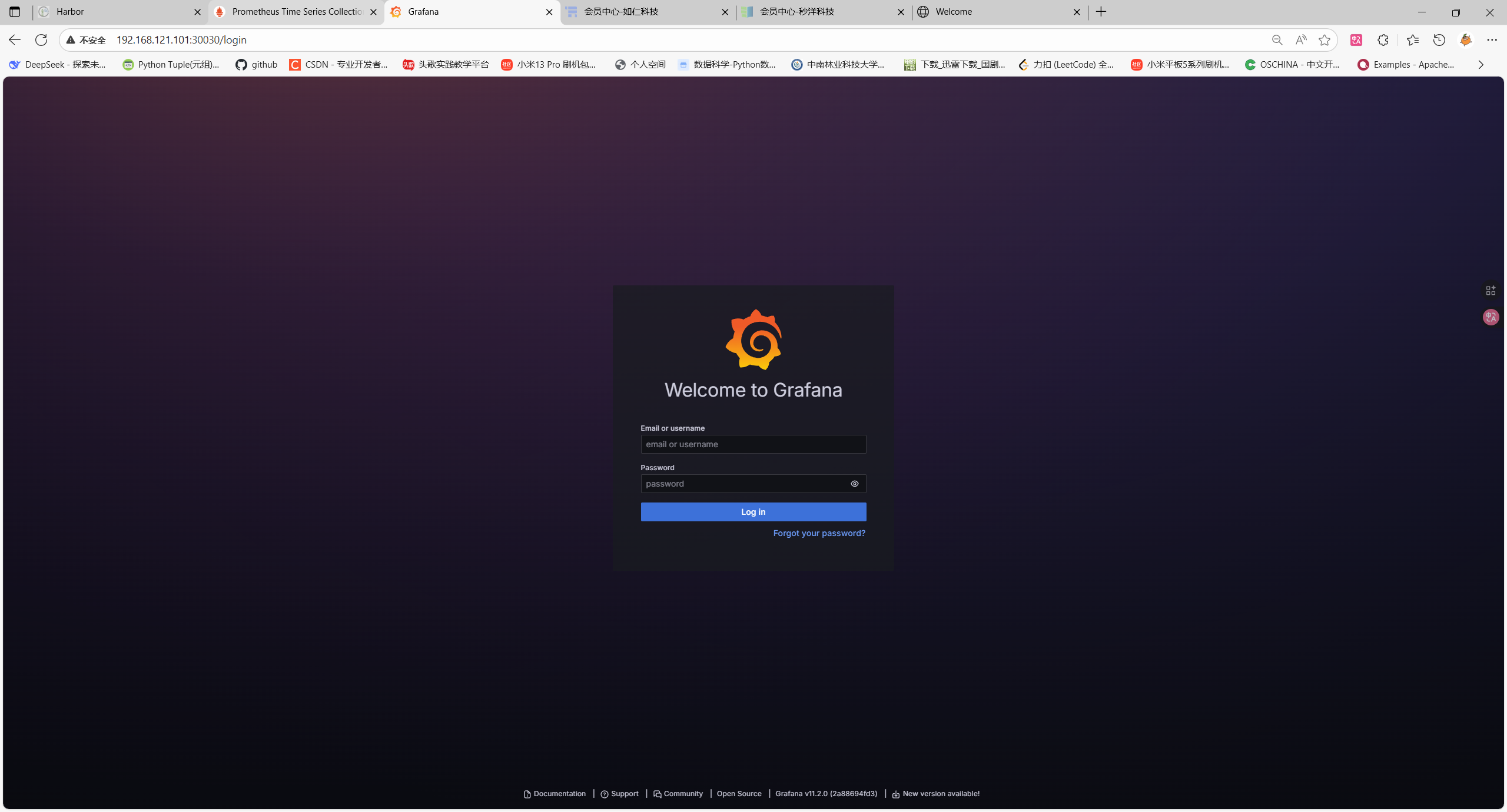

grafana-844d4f8bdb-58wsc 1/1 Running 0 3h18m访问验证192.168.121.101:30030

11.3.3 配置 Grafana 数据源

-

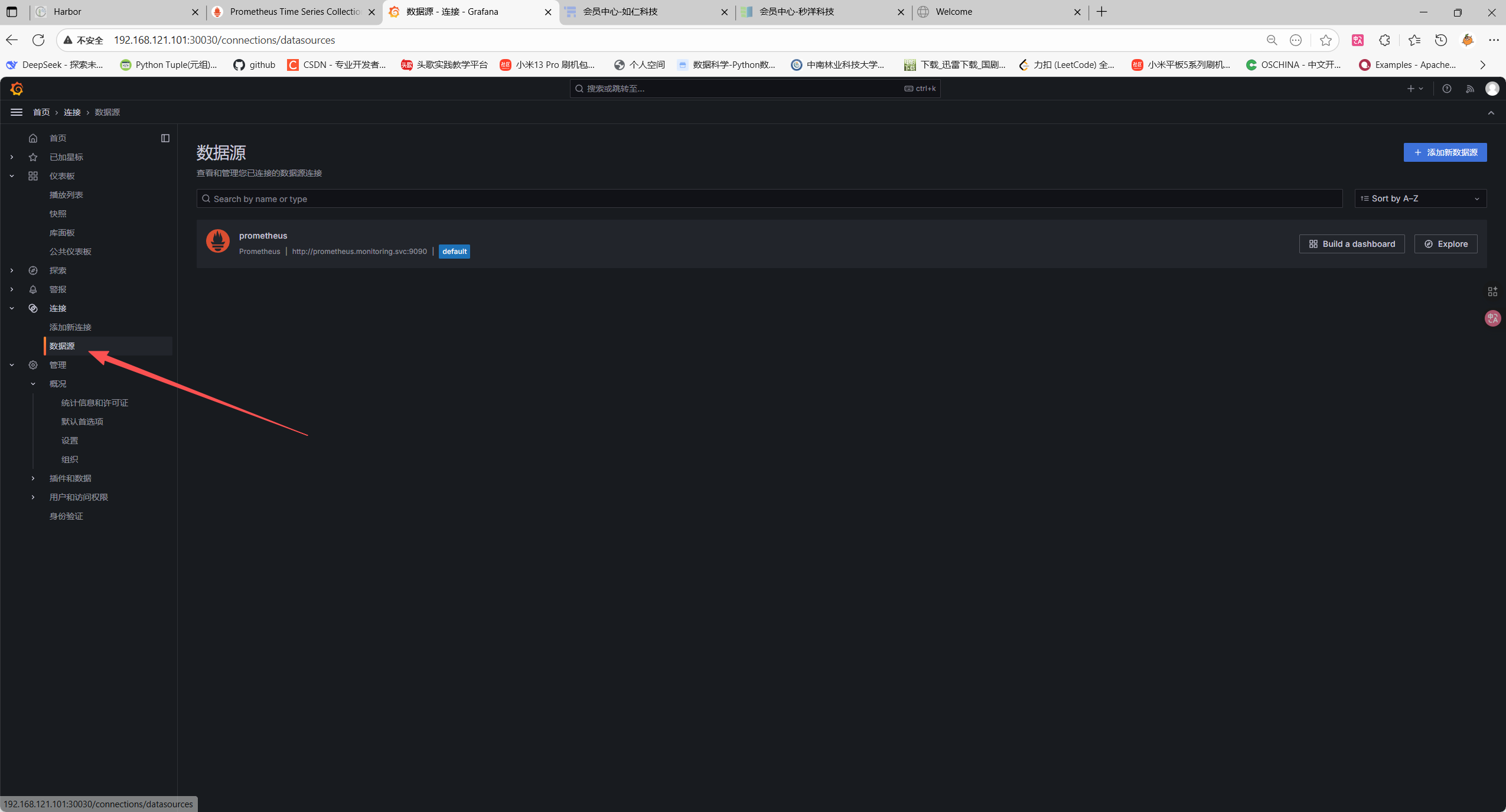

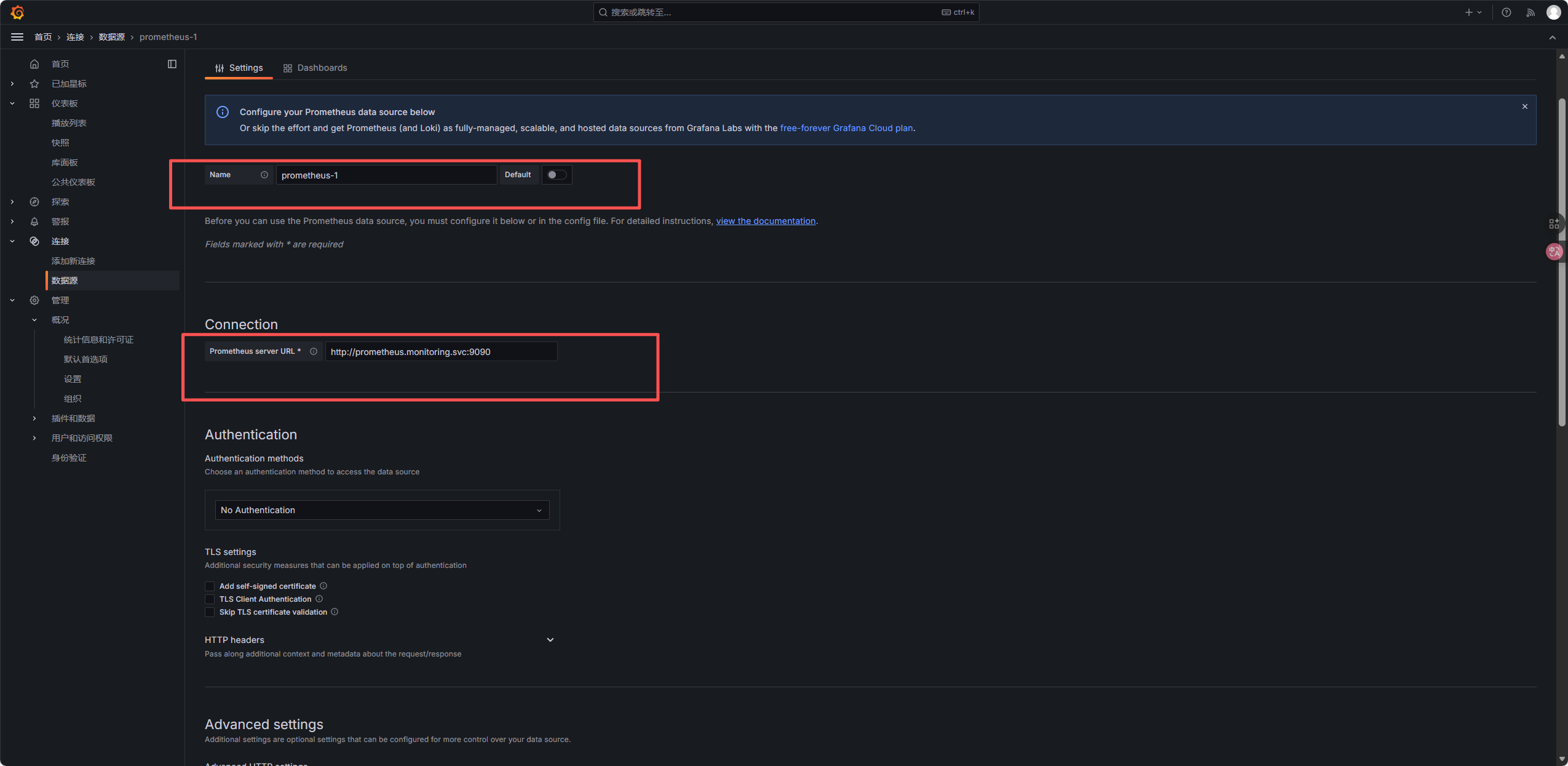

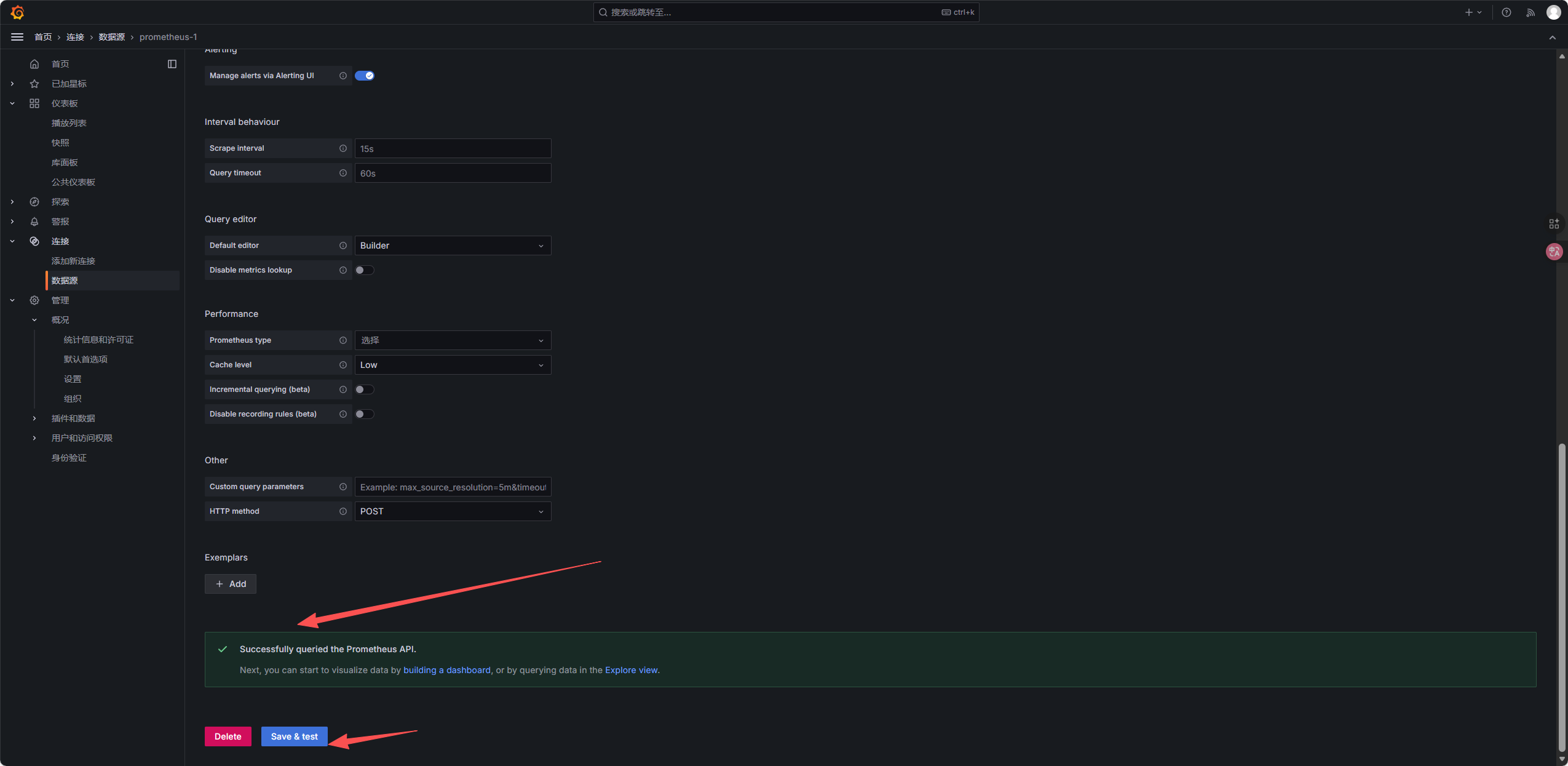

登录 Grafana 后,点击左侧 连接->数据源->添加新数据源;

-

选择Prometheus,配置 URL 为:

http://prometheus.monitoring.svc:9090(K8s 内部 Service 地址);

-

点击Save & test,提示Successfully queried the Prometheus API即配置成功。

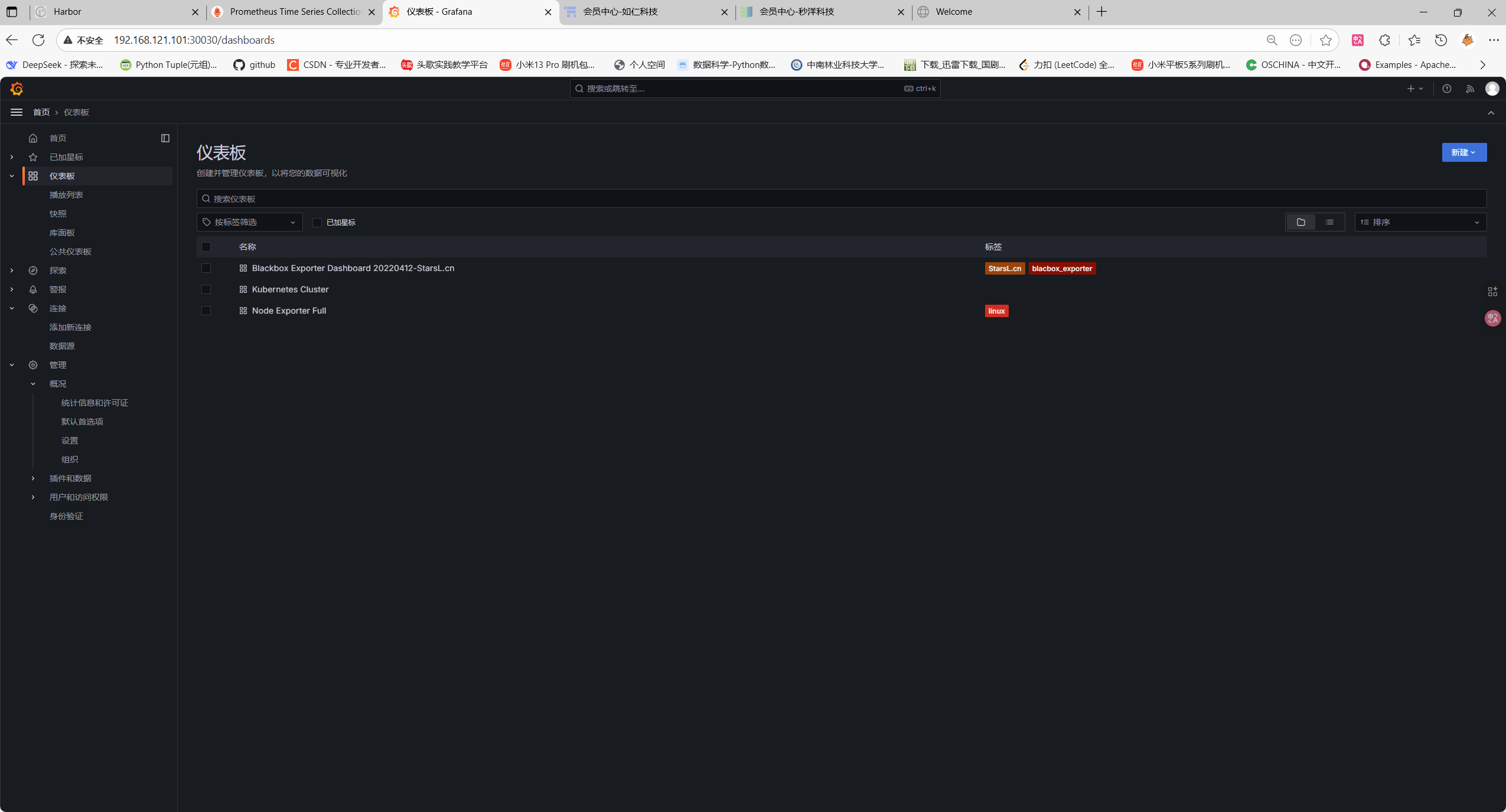

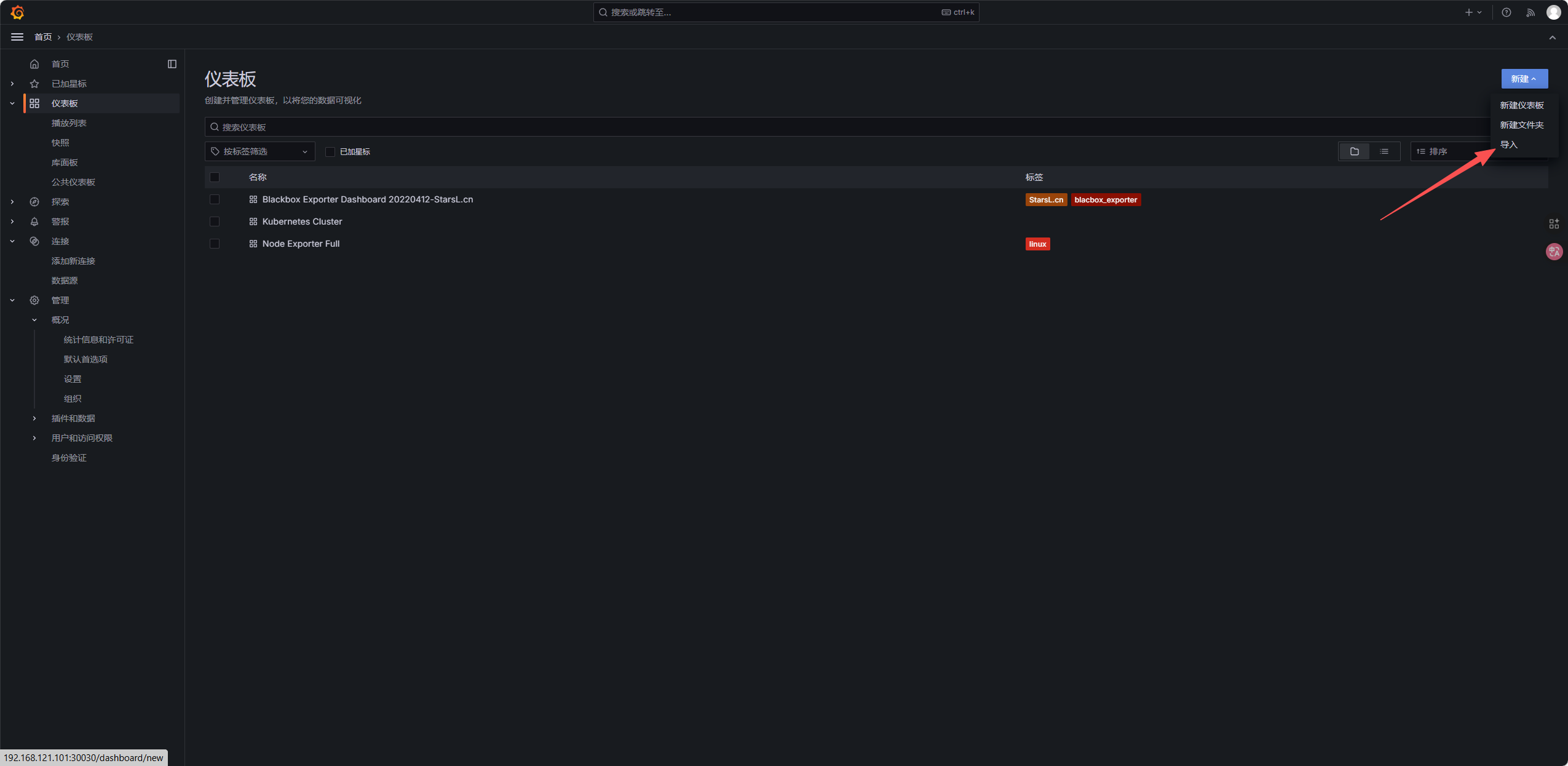

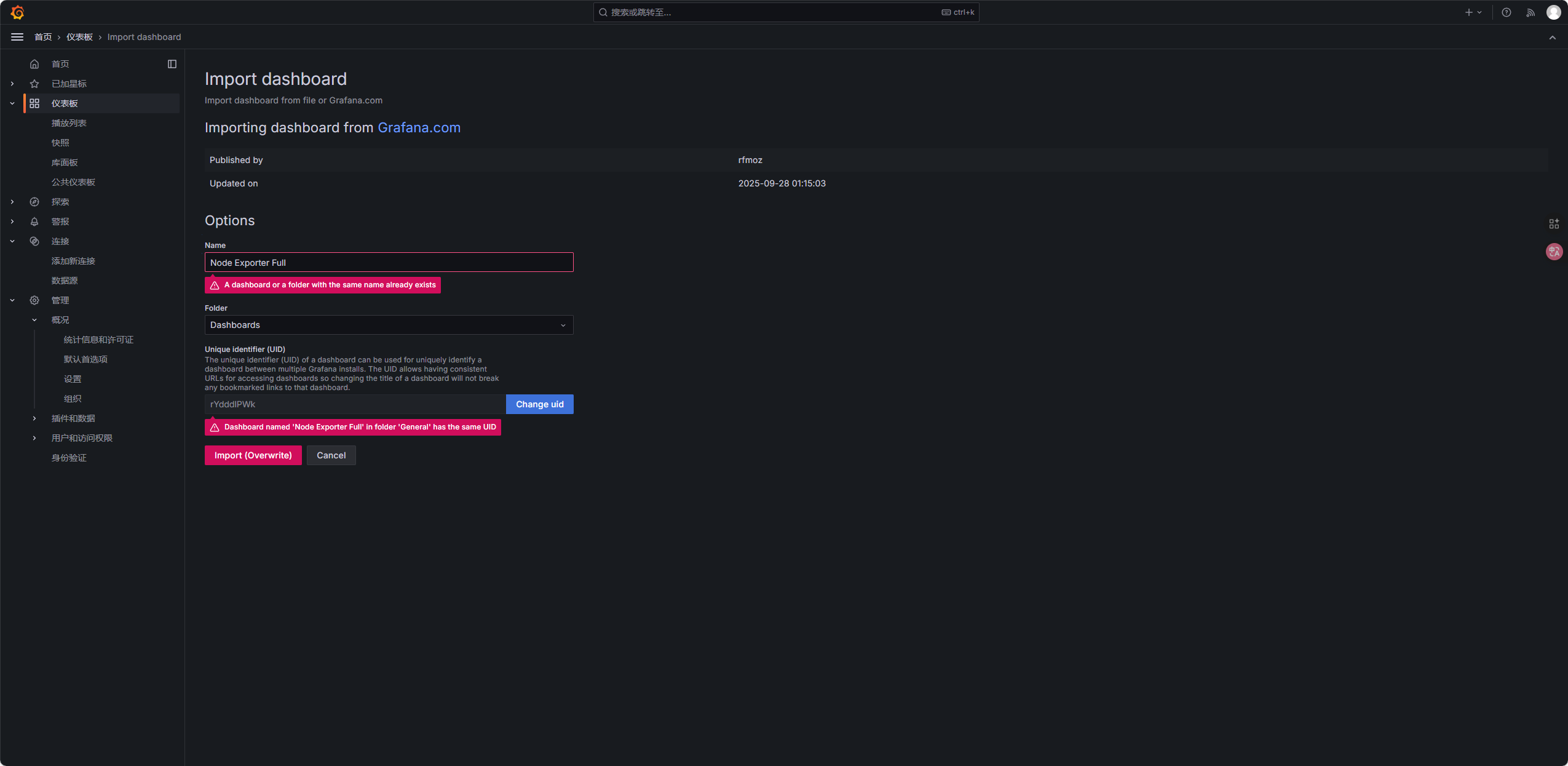

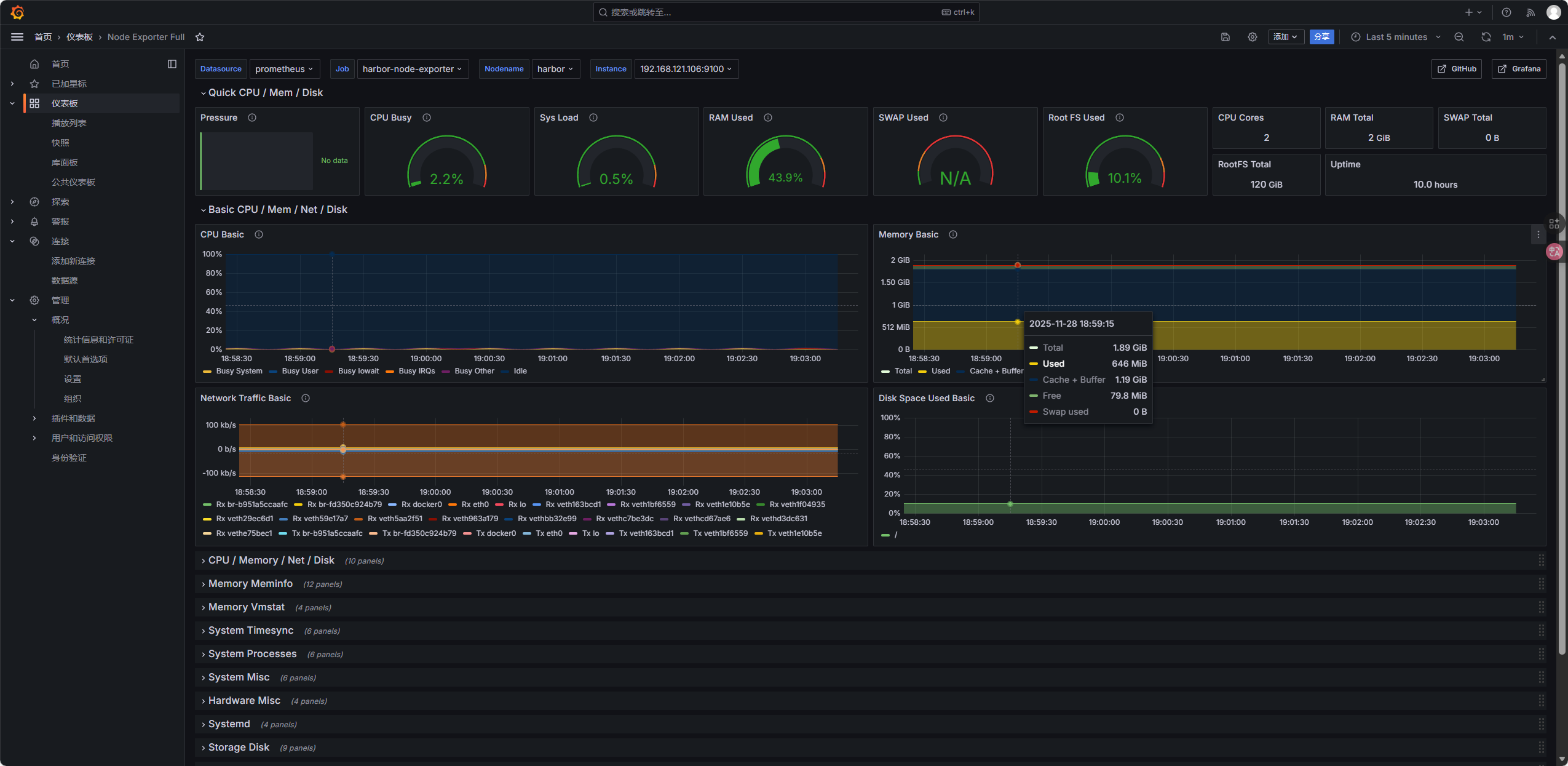

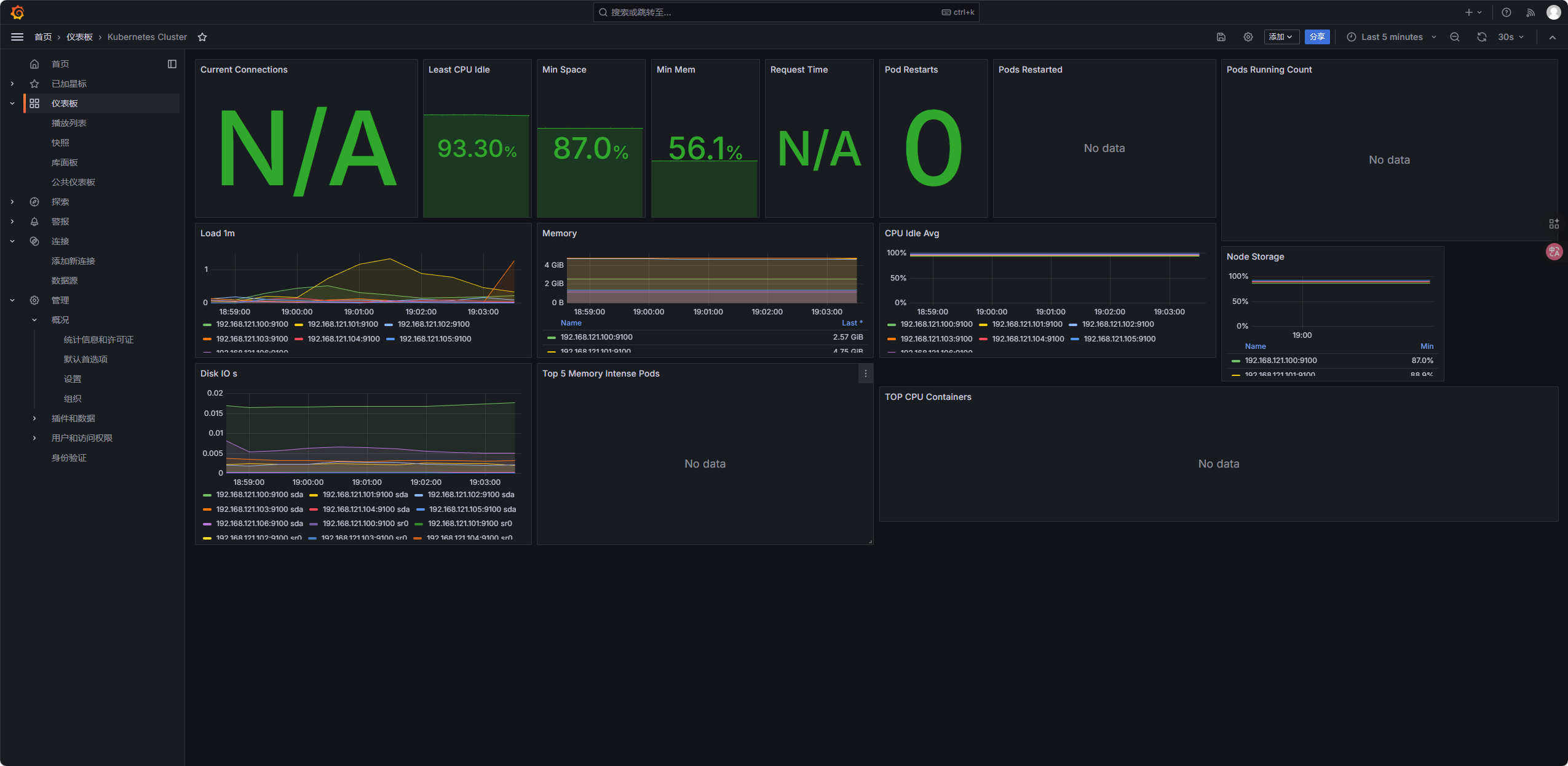

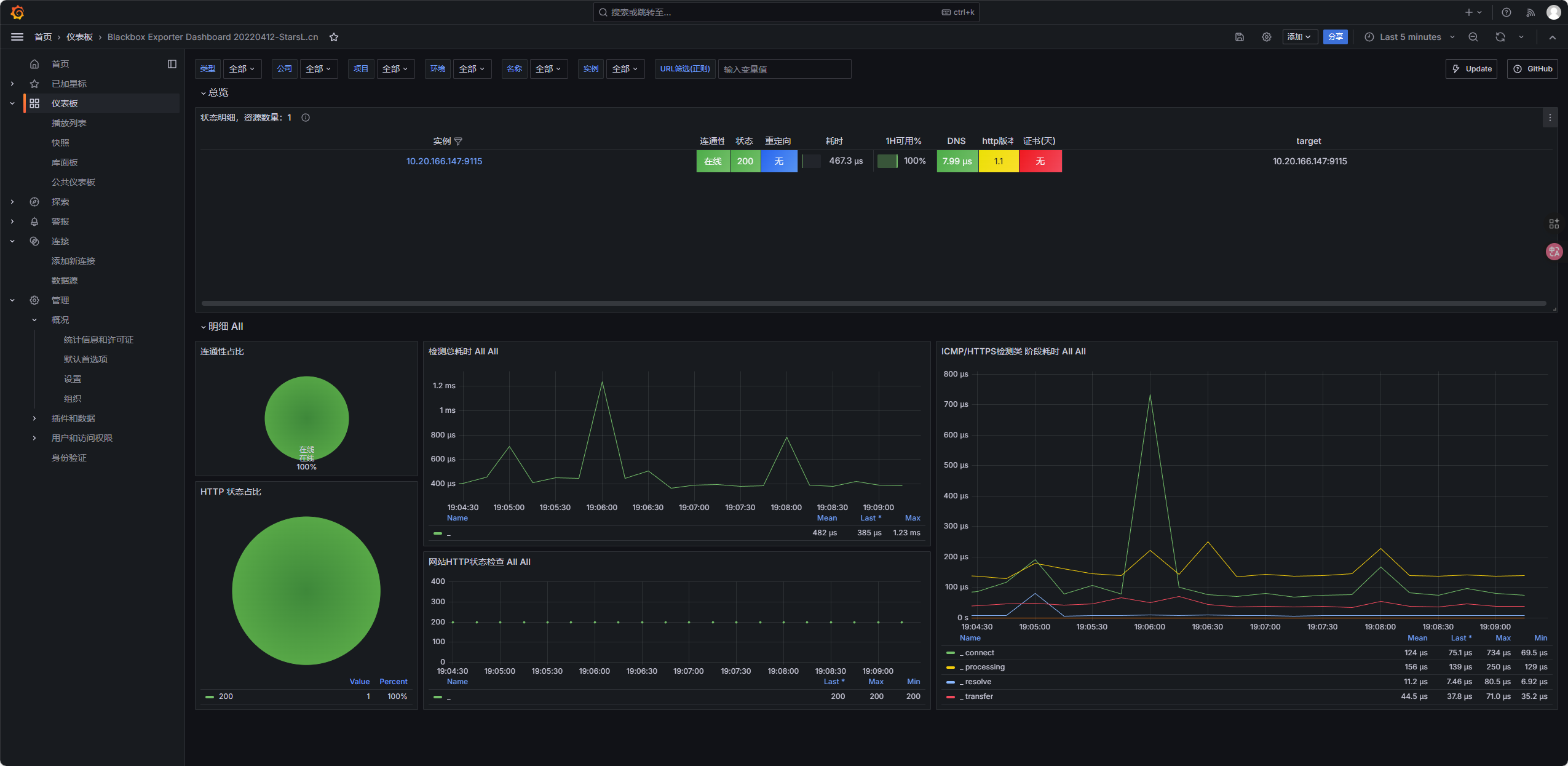
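The same data source can also be created through Grafana's HTTP API (`POST /api/datasources`), which is handy because the Grafana Deployment stores its data in an `emptyDir` and loses these settings whenever the Pod is recreated. A sketch assuming the NodePort and the `admin`/`admin123` credentials from grafana-deployment.yaml; `datasource_json` and `create_datasource` are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Sketch: create the Prometheus data source via the Grafana HTTP API.
# Assumptions: NodePort 30030 and the admin password set in grafana-deployment.yaml.
GRAFANA_URL="${GRAFANA_URL:-http://192.168.121.101:30030}"
GRAFANA_AUTH="${GRAFANA_AUTH:-admin:admin123}"

# Build the JSON body; "access":"proxy" means Grafana's backend (inside the
# cluster) calls Prometheus, so the in-cluster Service DNS name works.
datasource_json() {
  local name=$1 url=$2
  printf '{"name":"%s","type":"prometheus","url":"%s","access":"proxy","isDefault":true}' \
    "$name" "$url"
}

# POST the data source definition with basic auth.
create_datasource() {
  curl -s -u "$GRAFANA_AUTH" -H 'Content-Type: application/json' \
    -X POST "${GRAFANA_URL}/api/datasources" \
    -d "$(datasource_json Prometheus http://prometheus.monitoring.svc:9090)"
}
```

`create_datasource` could be rerun after each Grafana restart; a longer-term alternative is a persistent volume for `/var/lib/grafana`.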

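11.3.4 Import Grafana dashboards

- In the left menu click Dashboards, then New -> Import

- Enter the dashboard ID and click "Load":

  - Node status: 1860 (Node Exporter Full: node CPU / memory / disk);

  - K8s cluster: 7249 (Kubernetes Cluster Monitoring: cluster components);

  - Page availability: 9965 (Blackbox Exporter Dashboard; takes effect once Blackbox is deployed in the next step);

11.4 Deploy Blackbox Exporter (page availability monitoring)

Blackbox Exporter checks whether https://www.test.com is alive (returns status code 200); Prometheus then scrapes (pulls) the probe results from it: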

bash

root@master:~/yaml/monitoring# vim blackbox-exporter.yaml

--------------------------------

# Blackbox config: expect HTTP 200 and skip verification of the self-signed TLS certificate

apiVersion: v1

kind: ConfigMap

metadata:

name: blackbox-config

namespace: monitoring

data:

blackbox.yml: |

modules:

http_2xx:

prober: http

timeout: 5s

http:

valid_status_codes: [200]

tls_config:

insecure_skip_verify: true # accept the self-signed certificate

follow_redirects: true

---

# Blackbox Deployment (key point: pass the config file path as a startup argument)

apiVersion: apps/v1

kind: Deployment

metadata:

name: blackbox-exporter

namespace: monitoring

labels:

app: blackbox-exporter

spec:

replicas: 1

selector:

matchLabels:

app: blackbox-exporter

template:

metadata:

labels:

app: blackbox-exporter

spec:

containers:

- name: blackbox-exporter

image: harbor.test.com/monitoring/blackbox-exporter:v0.24.0

args: # key point: set the config file path explicitly

- --config.file=/etc/blackbox_exporter/blackbox.yml

ports:

- containerPort: 9115

volumeMounts:

- name: blackbox-config

mountPath: /etc/blackbox_exporter # mount the ConfigMap into this directory

readOnly: true

securityContext:

runAsUser: 0

runAsGroup: 0

volumes:

- name: blackbox-config

configMap:

name: blackbox-config

# list the mounted file explicitly (keeps the file name and path in line with --config.file)

items:

- key: blackbox.yml

path: blackbox.yml

---

# Blackbox Service

apiVersion: v1

kind: Service

metadata:

name: blackbox-exporter

namespace: monitoring

labels:

app: blackbox-exporter

spec:

selector:

app: blackbox-exporter

ports:

- port: 9115

targetPort: 9115

type: ClusterIP

---

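# Page-down alert rule (a plain ConfigMap, not a PrometheusRule CRD; Prometheus only evaluates it once the file is mounted into the Prometheus Pod and listed under rule_files)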

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-alert-rules

namespace: monitoring

data:

alert-rules.yml: |

groups:

- name: test-page-alerts

rules:

- alert: TestPageDown

expr: probe_success{target="https://www.test.com"} == 0

for: 1m

labels:

severity: critical

annotations:

summary: "www.test.com is unavailable"

description: "https://www.test.com has returned a non-200 status (or been unreachable) for 1 minute; please check the service!"

Apply the configuration

bash

root@master:~/yaml/monitoring# kubectl apply -f blackbox-exporter.yaml

# Check that it started normally

root@master:~/yaml/monitoring# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

blackbox-exporter-bfbd654b9-qdnfz 1/1 Running 0 1m

Verify monitoring
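One way to verify is to call the exporter's `/probe` endpoint directly, which is the same kind of request Prometheus sends for the `blackbox-exporter` job (`module` comes from `params`, `target` from the `__param_target` label). A sketch, assuming it runs somewhere that can resolve `blackbox-exporter.monitoring.svc` (for example inside a Pod, or with `BLACKBOX` pointed at a `kubectl port-forward` address); `probe_url`, `probe_ok` and `check_site` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Sketch: reproduce the Prometheus -> Blackbox Exporter probe request by hand.
# Assumption: the Service DNS name resolves where this runs (or BLACKBOX is overridden).
BLACKBOX="${BLACKBOX:-http://blackbox-exporter.monitoring.svc:9115}"

# probe_url TARGET [MODULE]: the URL that probes one target with one module.
probe_url() {
  local target=$1 module=${2:-http_2xx}
  echo "${BLACKBOX}/probe?module=${module}&target=${target}"
}

# probe_ok: read /probe output on stdin; succeed only if probe_success is 1.
probe_ok() {
  grep -Eq '^probe_success 1$'
}

# check_site TARGET: probe the site and report the result.
check_site() {
  if curl -s "$(probe_url "$1")" | probe_ok; then
    echo "UP: $1"
  else
    echo "DOWN: $1"
  fi
}
```

`check_site https://www.test.com` should print `UP` while the page serves 200; in the Prometheus UI the equivalent query is `probe_success{target="https://www.test.com"}`, the same expression the alert rule uses.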

12 Deploy Jenkins for CI/CD

12.1 Prerequisites

- Create the jenkins namespace and a directory for the YAML files

bash

root@master:~/yaml# kubectl create namespace jenkins

root@master:~/yaml# mkdir jenkins

- Create the shared directory on the NFS server

bash

root@harbor:~# mkdir -p /data/nfs/jenkins-data

root@harbor:~# chmod -R 777 /data/nfs/jenkins-data

root@harbor:~# echo "/data/nfs/jenkins-data *(rw,sync,no_root_squash,no_subtree_check)" >> /etc/exports

root@harbor:~# exportfs -r

root@harbor:~# showmount -e localhost
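The `echo ... >> /etc/exports` line appends a duplicate entry every time it is re-run. A sketch of an idempotent variant; `add_export` is a hypothetical helper, and the export options are the ones used above:

```bash
#!/usr/bin/env bash
# Sketch: append an NFS export line only if it is not already present.
# add_export FILE DIR OPTIONS  ->  ensures "DIR *(OPTIONS)" appears once in FILE
add_export() {
  local file=$1 dir=$2 opts=$3
  local line="${dir} *(${opts})"
  touch "$file" || return 1
  # -x: whole-line match, -F: fixed string (the parentheses and * are literal)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

On the harbor host this would be used as `add_export /etc/exports /data/nfs/jenkins-data rw,sync,no_root_squash,no_subtree_check && exportfs -r`.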

- Pull the jenkins:lts image and push it to the Harbor registry

bash

root@master:~/yaml# docker pull jenkins/jenkins:lts

root@master:~/yaml# docker tag jenkins/jenkins:lts harbor.test.com/jenkins/jenkins:lts

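root@master:~/yaml# docker push harbor.test.com/jenkins/jenkins:lts

Problems encountered later when running builds:

- The Jenkins container had no docker client, so building the image failed;

- After the image was built, pushing it to Harbor failed for two reasons: there was no IP-to-domain mapping, so docker login could not resolve harbor.test.com (fixed by adding hostAliases to the Jenkins YAML), and the Harbor CA certificate was not configured;

- The Jenkins container had no kubectl, so applying the resource files failed.

Solution: build an extended image on top of harbor.test.com/jenkins/jenkins:lts so that these additions persist.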

bash

root@master:~/yaml/jenkins# mkdir dockerfile

root@master:~/yaml/jenkins# cd dockerfile

# Download the static docker binary package

root@master:~/yaml/jenkins/dockerfile# curl -L --retry 3 --connect-timeout 10 https://mirrors.aliyun.com/docker-ce/linux/static/stable/x86_64/docker-20.10.24.tgz -o docker.tgz

root@master:~/yaml/jenkins/dockerfile# tar xzf docker.tgz

# Copy the kubectl binary

root@master:~/yaml/jenkins/dockerfile# cp /usr/bin/kubectl /root/yaml/jenkins/dockerfile/

# Copy the Harbor CA certificate

root@master:~/yaml/jenkins/dockerfile# cp /etc/docker/certs.d/harbor.test.com/harbor-ca.crt /root/yaml/jenkins/dockerfile/

root@master:~/yaml/jenkins/dockerfile# ls

kubectl docker harbor-ca.crt

# Write the Dockerfile

root@master:~/yaml/jenkins/dockerfile# vim Dockerfile

----------------------------

FROM harbor.test.com/jenkins/jenkins:lts

USER root

WORKDIR /usr/local/src

# Note: resolv.conf written here only affects the build; the running Pod gets its DNS settings from Kubernetes

RUN echo "nameserver 223.5.5.5" > /etc/resolv.conf \
&& echo "nameserver 8.8.8.8" >> /etc/resolv.conf

# Create the Harbor certificate directory and copy the certificate

RUN mkdir -p /etc/docker/certs.d/harbor.test.com

COPY harbor-ca.crt /etc/docker/certs.d/harbor.test.com/

COPY docker /usr/local/src/

COPY docker/docker /usr/bin/

RUN chmod +x /usr/bin/docker

# ENV instead of "RUN export": a variable exported in a RUN step does not persist into the image

ENV DOCKER_API_VERSION=1.40

COPY kubectl /usr/local/bin/

RUN chmod +x /usr/local/bin/kubectl

USER jenkins

---------------------------------

# Build the image

root@master:~/yaml/jenkins/dockerfile# docker build -t jenkins:v1 .

# Push it to the Harbor registry

root@master:~/yaml/jenkins/dockerfile# docker tag jenkins:v1 harbor.test.com/jenkins/jenkins:v1

root@master:~/yaml/jenkins/dockerfile# docker push harbor.test.com/jenkins/jenkins:v1
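Each `COPY` in this Dockerfile fails the build if its source is missing from the build context. A small pre-flight check to run before `docker build`, assuming the layout produced by the commands above (`docker/docker` from the tarball, `kubectl`, `harbor-ca.crt`); `check_context` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Sketch: verify the Docker build context before `docker build`.
# check_context DIR -> prints each missing file and returns 1 if any are missing.
check_context() {
  local dir=${1:-.} missing=0 f
  # Files the Dockerfile needs; docker/docker is the client binary from docker.tgz.
  for f in Dockerfile docker/docker kubectl harbor-ca.crt; do
    if [ ! -e "${dir}/${f}" ]; then
      echo "missing: ${f}"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `check_context /root/yaml/jenkins/dockerfile && docker build -t jenkins:v1 /root/yaml/jenkins/dockerfile`.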

12.2 Configure RBAC permissions for Jenkins

bash

root@master:~/yaml/jenkins# vim jenkins-rbac.yaml

--------------------------

apiVersion: v1

kind: ServiceAccount

metadata:

name: nfs-client-provisioner

namespace: jenkins

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

- apiGroups: ["apps"]

resources: ["deployments"]

verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["get", "list", "create", "update", "patch", "delete"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

namespace: jenkins

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

-------------------------------------

12.3 Create the NFS provisioner Deployment

bash

root@master:~/yaml/jenkins# vim nfs-provisioner-deploy.yaml

------------------------------------

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-client-provisioner

namespace: jenkins

spec:

replicas: 1

selector:

matchLabels:

app: nfs-client-provisioner

strategy:

type: Recreate

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME # provisioner name (referenced later by the StorageClass)

value: "k8s-sigs.io/nfs-subdir-external-provisioner"

- name: NFS_SERVER # NFS server IP

value: "192.168.121.106"

- name: NFS_PATH # NFS shared directory

value: "/data/nfs/jenkins-data"

volumes:

- name: nfs-client-root

nfs:

server: 192.168.121.106 # same NFS server IP as above

path: /data/nfs/jenkins-data # same NFS shared directory as above

---------------------------------------------------

12.4 Apply the RBAC and Deployment resource files

bash

root@master:~/yaml/jenkins# kubectl apply -f jenkins-rbac.yaml

root@master:~/yaml/jenkins# kubectl apply -f nfs-provisioner-deploy.yaml

# Check the status

root@master:~/yaml/jenkins# kubectl get pods -n jenkins | grep nfs-client-provisioner

nfs-client-provisioner-8465bdd9f5-j5kqx 1/1 Running 0 12m

12.5 Create a dynamic StorageClass

bash

root@master:~/yaml/jenkins# vim nfs-storageclass.yaml

--------------------------

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: nfs-storageclass

provisioner: k8s-sigs.io/nfs-subdir-external-provisioner

parameters:

archiveOnDelete: "false"

reclaimPolicy: Delete

volumeBindingMode: Immediate

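# Apply the resource file

root@master:~/yaml/jenkins# kubectl apply -f nfs-storageclass.yaml

# Verify that it was created (StorageClass is cluster-scoped, so no namespace is needed)

root@master:~/yaml/jenkins# kubectl get sc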

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-storageclass k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 14m

12.6 Create the PVC

bash

root@master:~/yaml/jenkins# vim jenkins-nfs-pvc.yaml

----------------------------

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: jenkins-pvc

namespace: jenkins

spec:

accessModes:

- ReadWriteOnce

storageClassName: nfs-storageclass

resources:

requests:

storage: 10Gi

----------------------------

# Apply the resource file

root@master:~/yaml/jenkins# kubectl apply -f jenkins-nfs-pvc.yaml

# Verify the binding status

root@master:~/yaml/jenkins# kubectl get pvc -n jenkins

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

jenkins-pvc Bound pvc-6ae87406-cac2-4c72-805f-2ec254dbd545 10Gi RWO nfs-storageclass 16m

12.7 Deploy the Jenkins Deployment + Service

bash

root@master:~/yaml/jenkins# vim jenkins-deployment.yaml

----------------------------

apiVersion: apps/v1

kind: Deployment

metadata:

name: jenkins

namespace: jenkins

labels:

app: jenkins

spec:

replicas: 1

selector:

matchLabels:

app: jenkins

template:

metadata:

labels:

app: jenkins

spec:

hostAliases:

- ip: "192.168.121.106"

hostnames:

- "harbor.test.com"

- ip: "192.168.121.188"

hostnames:

- "www.test.com"

imagePullSecrets:

- name: harbor-registry-secret

serviceAccountName: nfs-client-provisioner

containers:

- name: jenkins

image: harbor.test.com/jenkins/jenkins:v1

ports:

- containerPort: 8080

- containerPort: 50000

env:

- name: HTTP_PROXY

value: "http://192.168.121.1:7890"

- name: HTTPS_PROXY

value: "http://192.168.121.1:7890"

- name: NO_PROXY

value: "localhost,127.0.0.1,10.0.0.0/8,192.168.0.0/16,.svc,.cluster.local,192.168.121.188,www.test.com"

- name: JAVA_OPTS

value: "-Duser.timezone=Asia/Shanghai -Dhudson.model.DirectoryBrowserSupport.CSP="

- name: JENKINS_OPTS

value: "--prefix=/jenkins"

volumeMounts:

- name: jenkins-data

mountPath: /var/jenkins_home # Jenkins data directory

- name: docker-sock

mountPath: /var/run/docker.sock

resources:

limits:

cpu: 2000m

memory: 2Gi

requests:

cpu: 1000m

memory: 1Gi

securityContext:

runAsUser: 0

privileged: true

volumes:

- name: jenkins-data

persistentVolumeClaim:

claimName: jenkins-pvc

- name: docker-sock

hostPath:

path: /var/run/docker.sock

type: Socket

---

# Jenkins Service

apiVersion: v1

kind: Service

metadata:

name: jenkins

namespace: jenkins

spec:

selector:

app: jenkins

ports:

- name: web

port: 8080

targetPort: 8080

nodePort: 30080

- name: agent

port: 50000

targetPort: 50000

nodePort: 30081

type: NodePort

Apply the resource file

bash

root@master:~/yaml/jenkins# kubectl apply -f jenkins-deployment.yaml

# Verify that the Pods were created

root@master:~/yaml/jenkins# kubectl get pod -n jenkins

NAME READY STATUS RESTARTS AGE

jenkins-58b5dfd8b-b6cd9 1/1 Running 0 20m

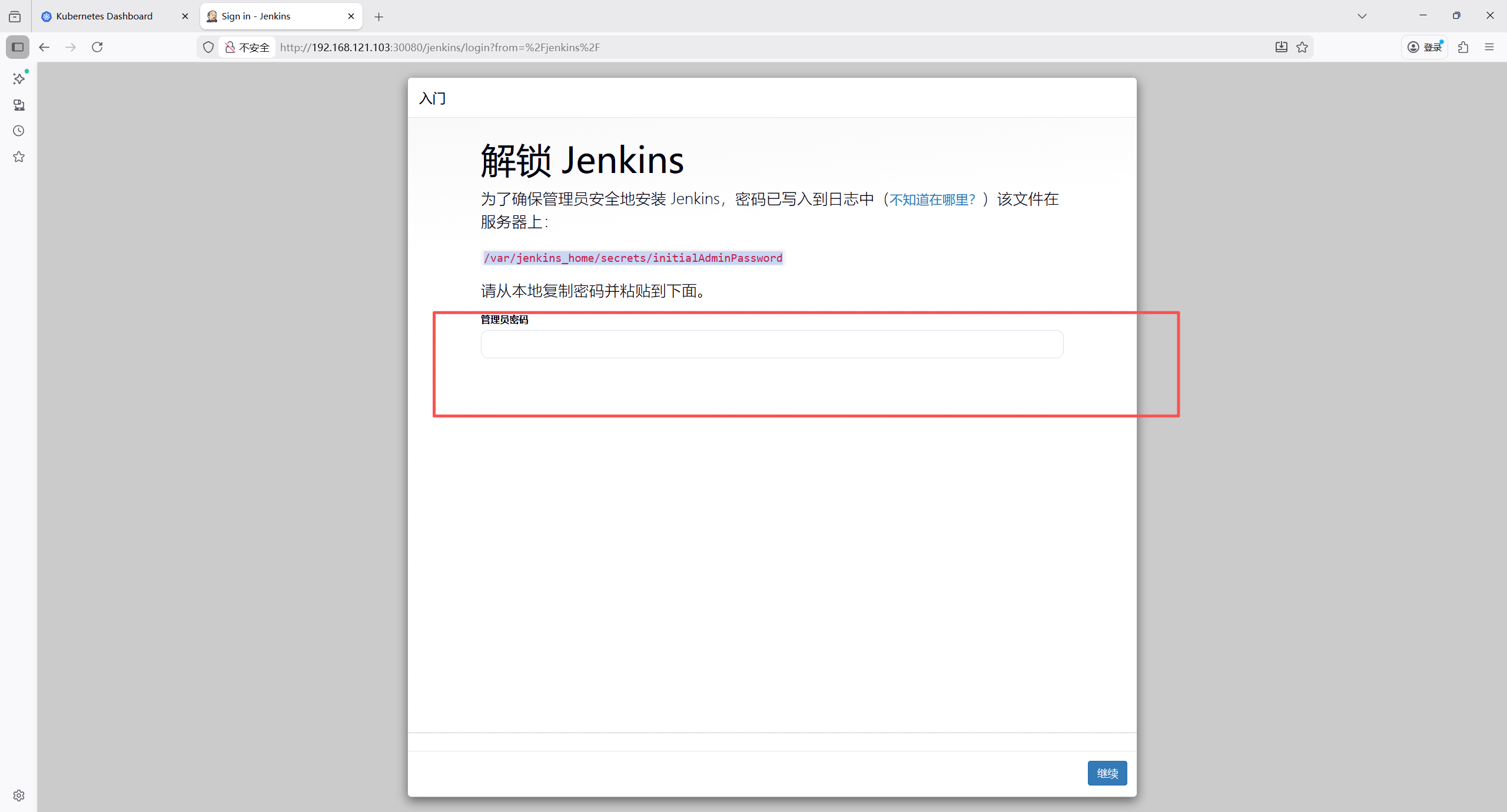

nfs-client-provisioner-8465bdd9f5-j5kqx 1/1 Running 0 20m12.8 初始化jenkins

12.8.1 获取jenkins初始密码

Read the initialAdminPassword file from the shared directory on the NFS server
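The long directory name comes from nfs-subdir-external-provisioner, which by default names each volume's directory `${namespace}-${pvcName}-${pvName}` under `NFS_PATH`. A sketch that rebuilds the path from those three values, assuming no custom `pathPattern` is set; `pvc_dir` and `jenkins_initial_password` are hypothetical helpers, and the PV name can be read with `kubectl get pvc jenkins-pvc -n jenkins -o jsonpath='{.spec.volumeName}'`:

```bash
#!/usr/bin/env bash
# Sketch: locate a PVC's directory on the NFS server.
# Assumption: default nfs-subdir-external-provisioner naming (no pathPattern set).
NFS_PATH="${NFS_PATH:-/data/nfs/jenkins-data}"

# pvc_dir NAMESPACE PVC_NAME PV_NAME -> NFS_PATH/NAMESPACE-PVC_NAME-PV_NAME
pvc_dir() {
  echo "${NFS_PATH}/$1-$2-$3"
}

# jenkins_initial_password PV_NAME: print the initial admin password (run on the NFS server).
jenkins_initial_password() {
  cat "$(pvc_dir jenkins jenkins-pvc "$1")/secrets/initialAdminPassword"
}
```

With the PV from 12.6, `jenkins_initial_password pvc-6ae87406-cac2-4c72-805f-2ec254dbd545` reads the same file as the command below.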

bash

root@harbor:~# cat /data/nfs/jenkins-data/jenkins-jenkins-pvc-pvc-6ae87406-cac2-4c72-805f-2ec254dbd545/secrets/initialAdminPassword

6a8f32add9b8424face397b782d9b282

12.8.2 Open the Jenkins web UI and initialize it

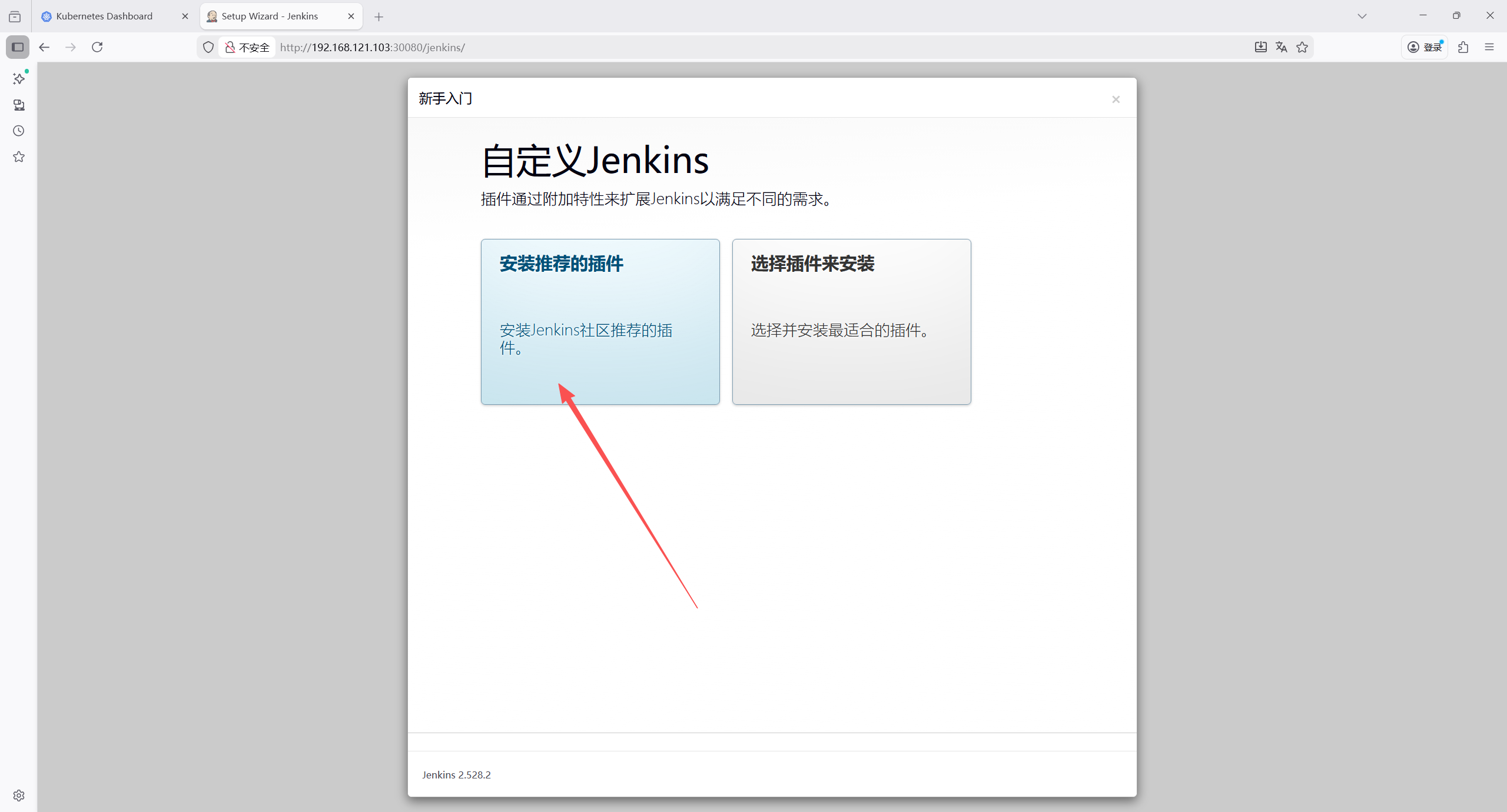

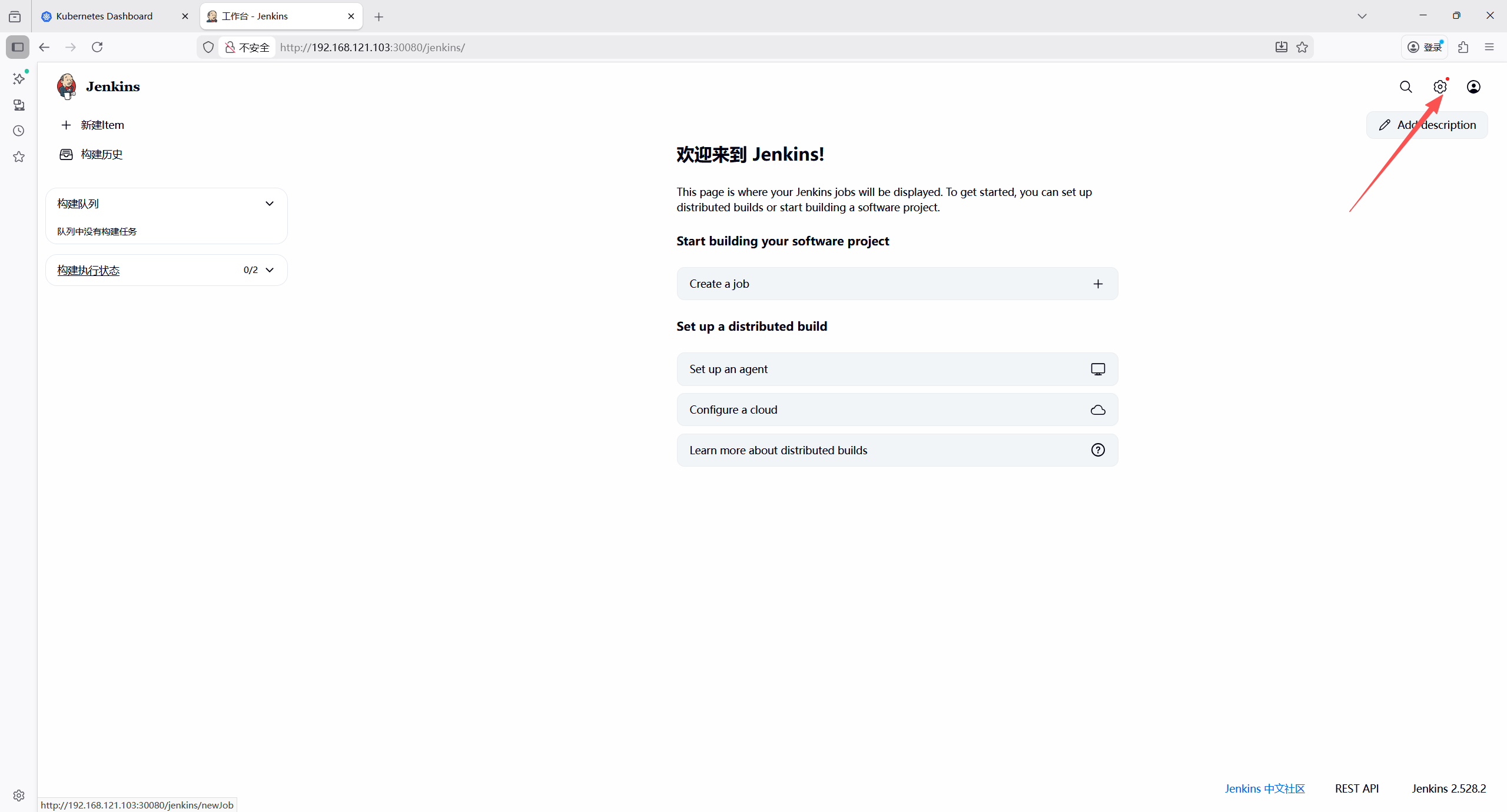

访问地址http://192.168.121.101:30080/jenkins

输入初始密码

安装推荐插件

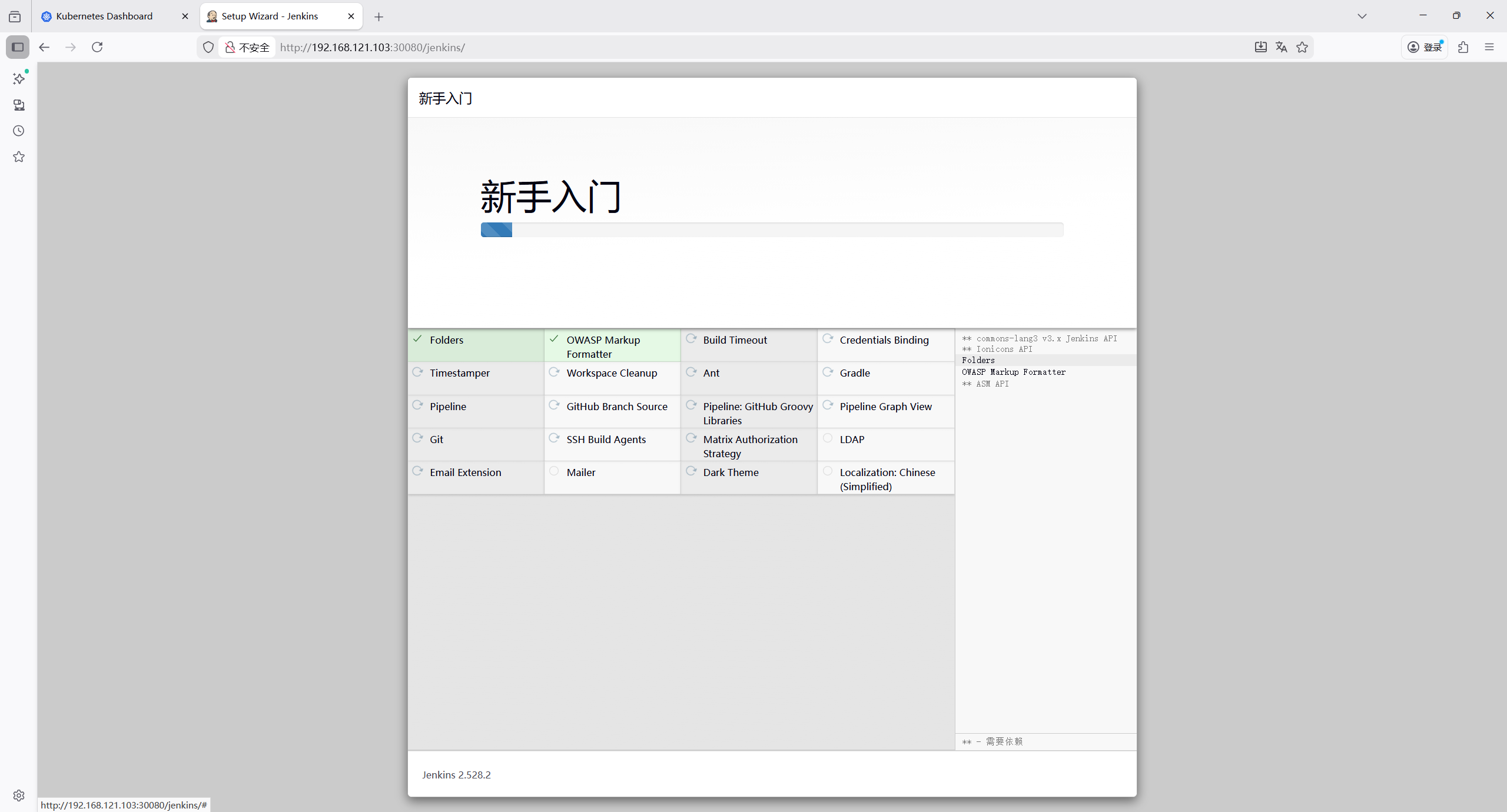

等在安装完成

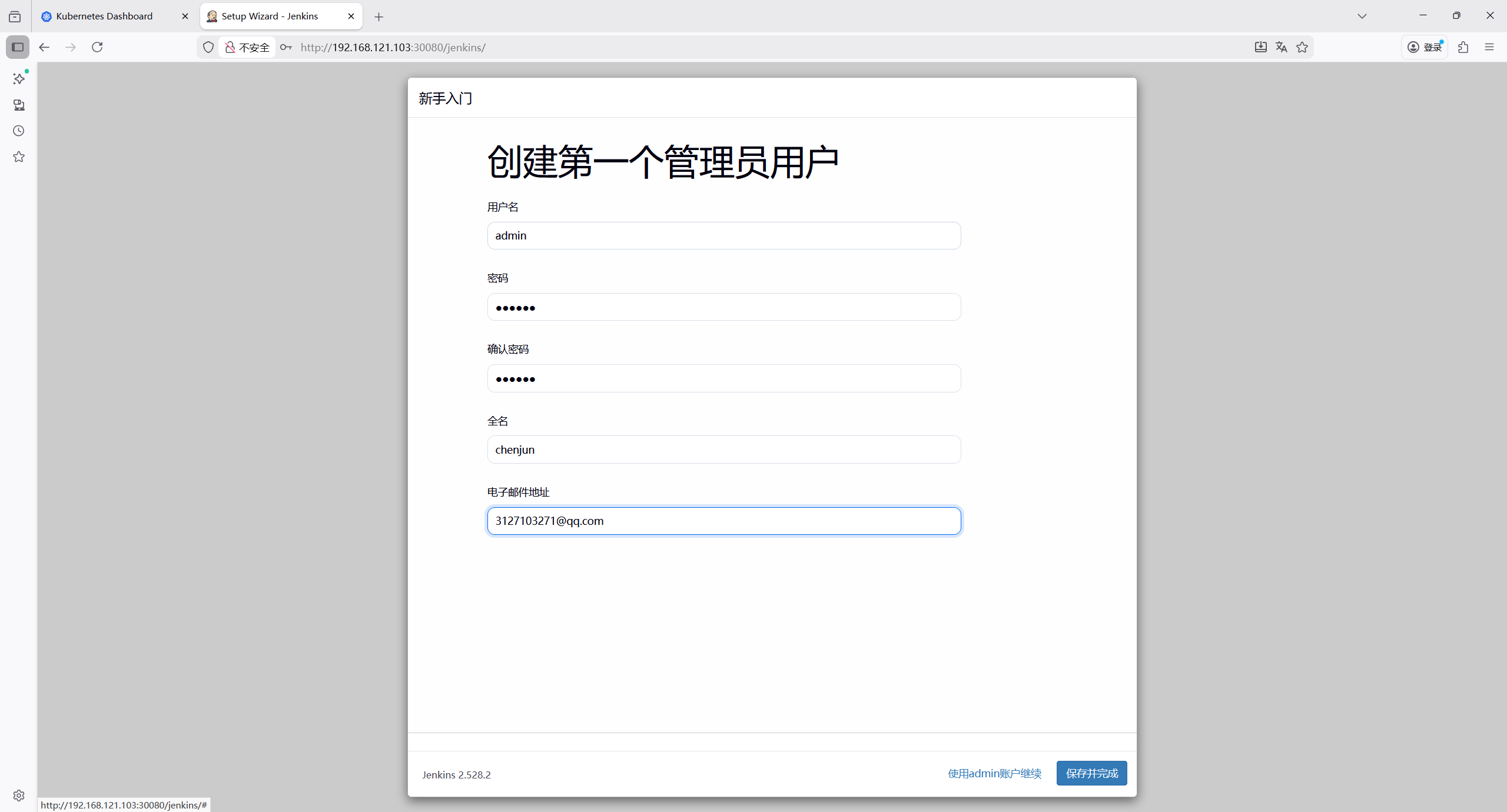

创建管理员用户

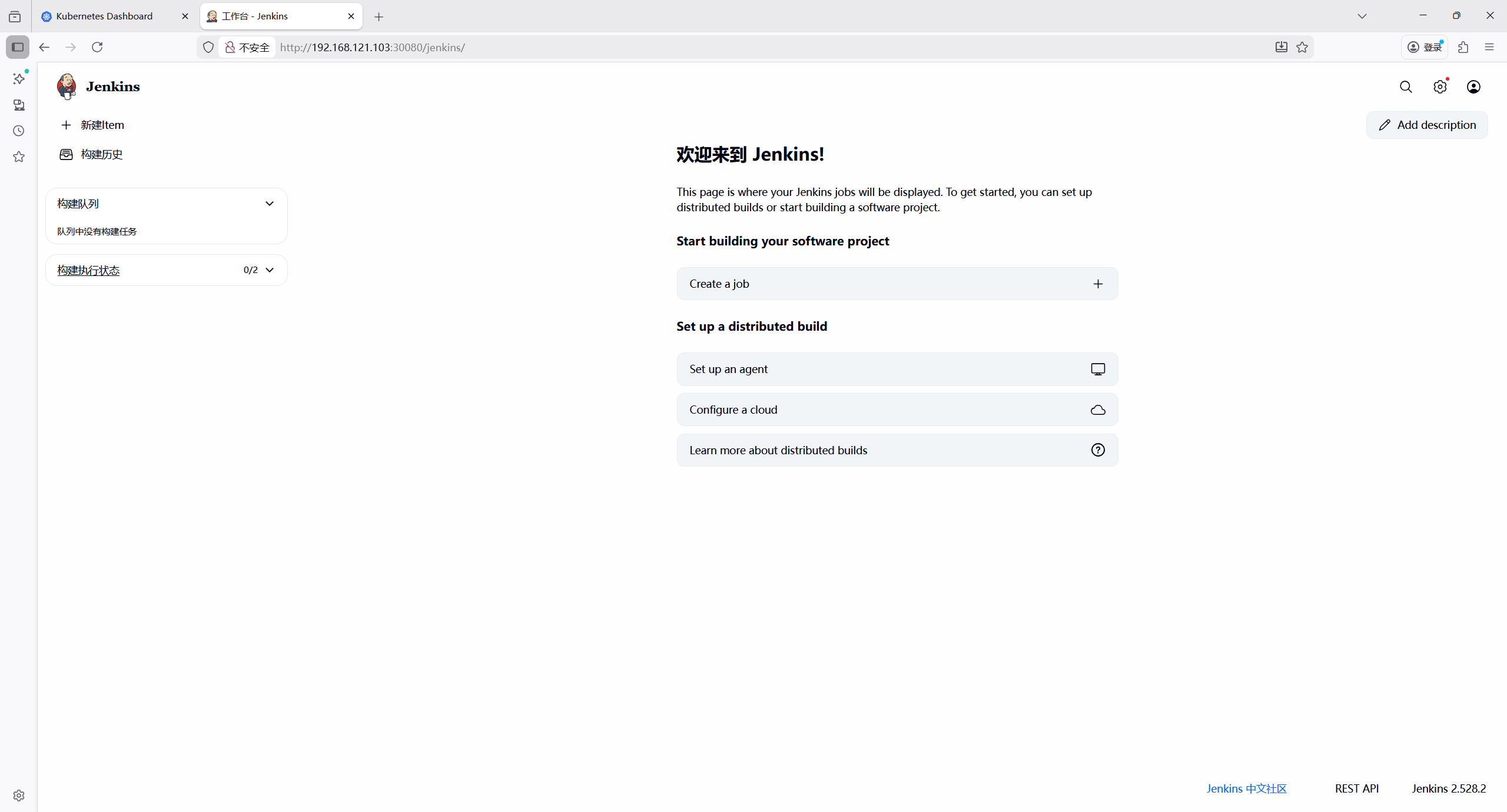

进入首页

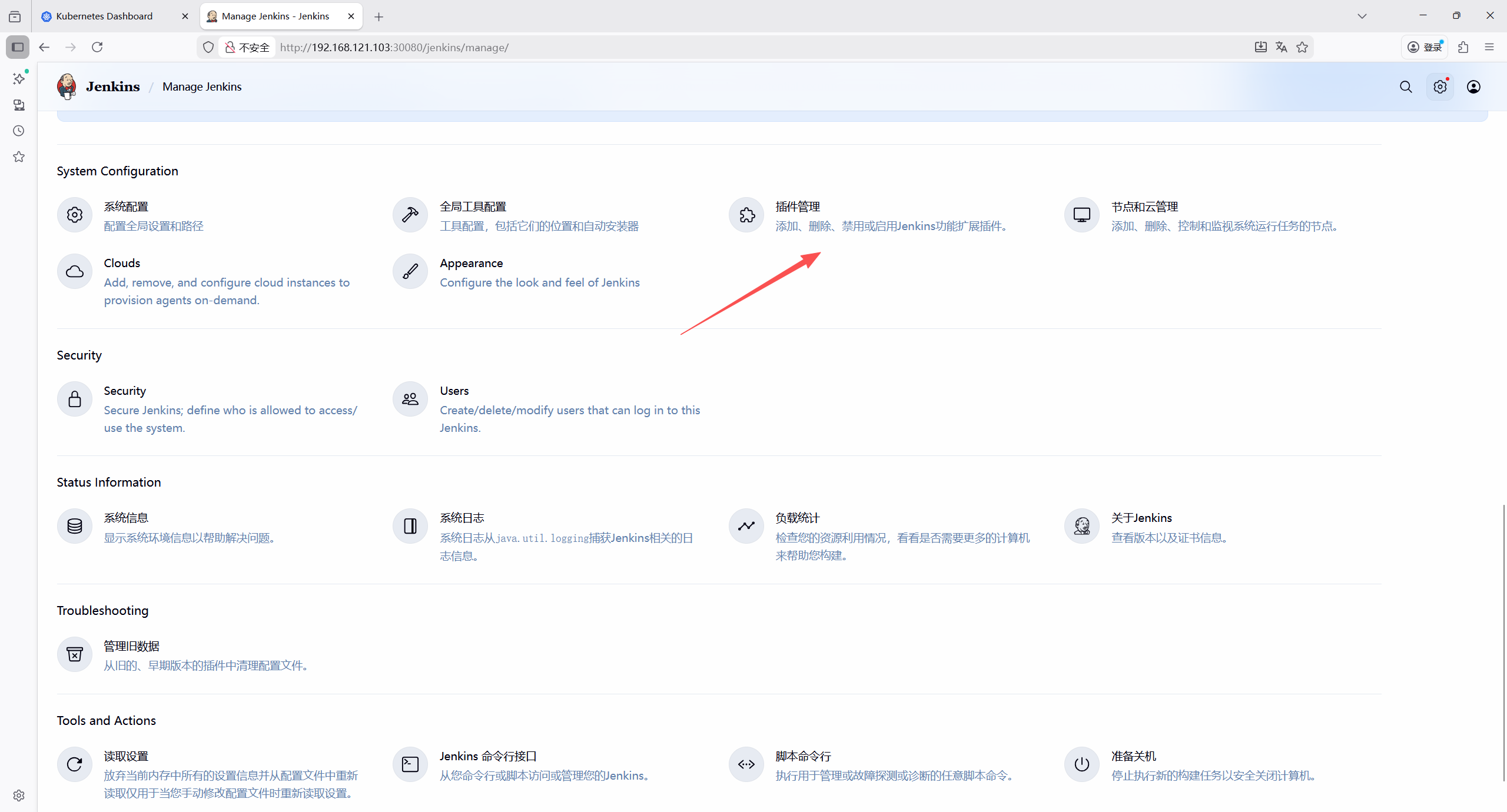

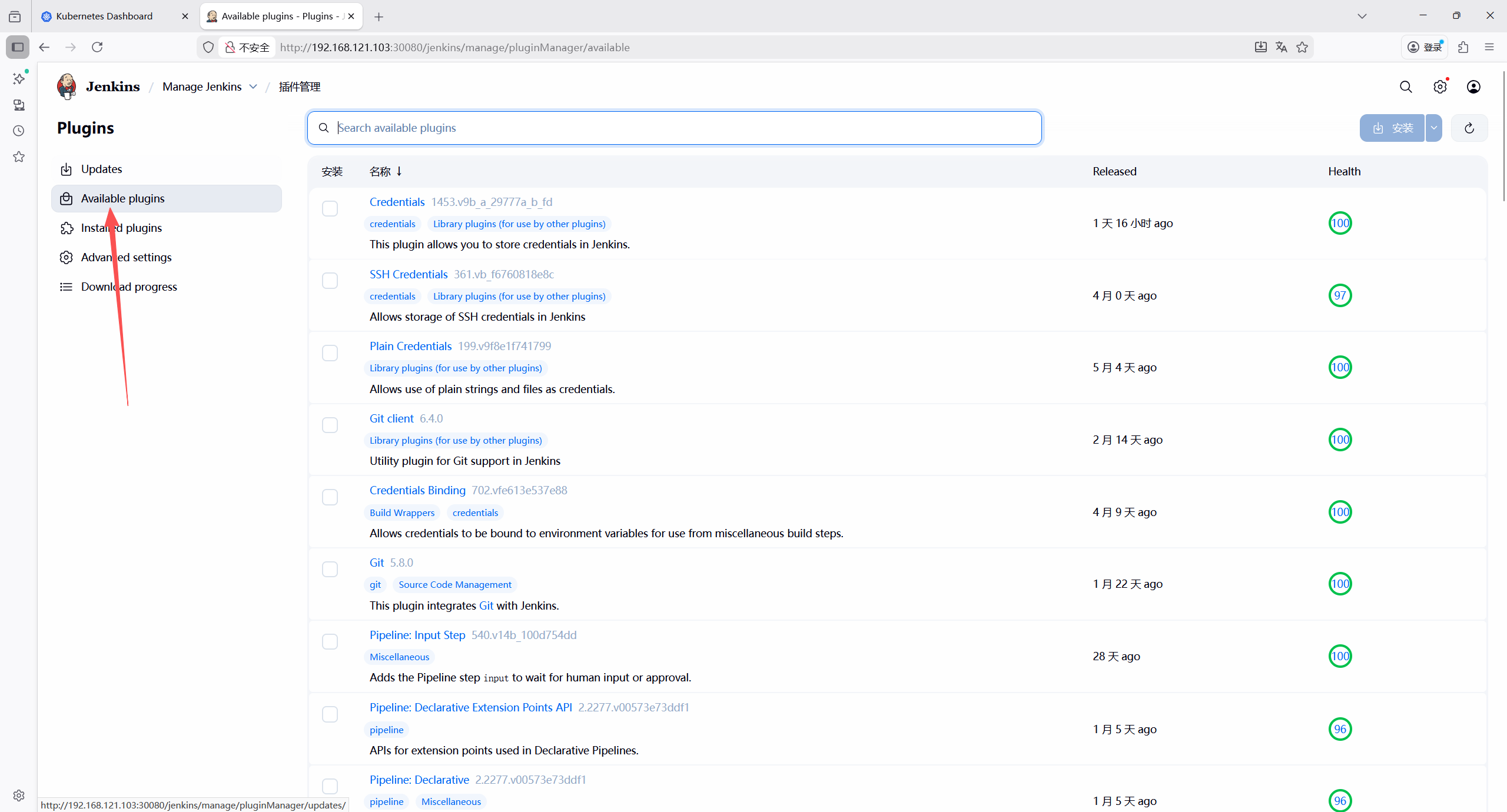

12.8.3 Install the required plugins

- Kubernetes Plugin (Jenkins integration with K8s);

- Docker Plugin (build Docker images);

- Docker Pipeline Plugin (use Docker from a Pipeline);

- Git Plugin (pull Git code);

- Credentials Binding Plugin (manage credentials);

- Pipeline Utility Steps (helper steps for Pipelines);

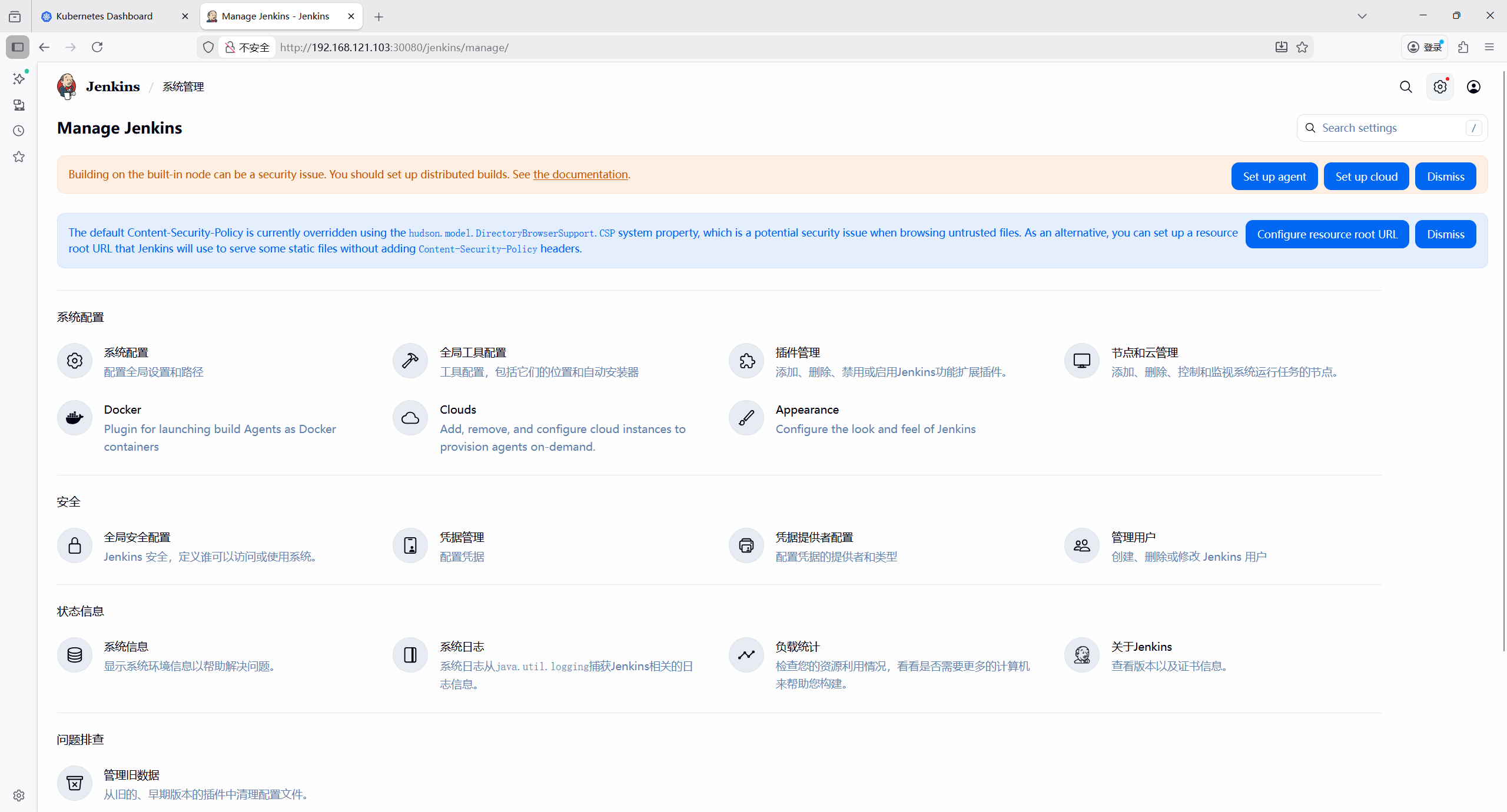

After the installation finishes, restart Jenkins (Manage Jenkins → Restart).

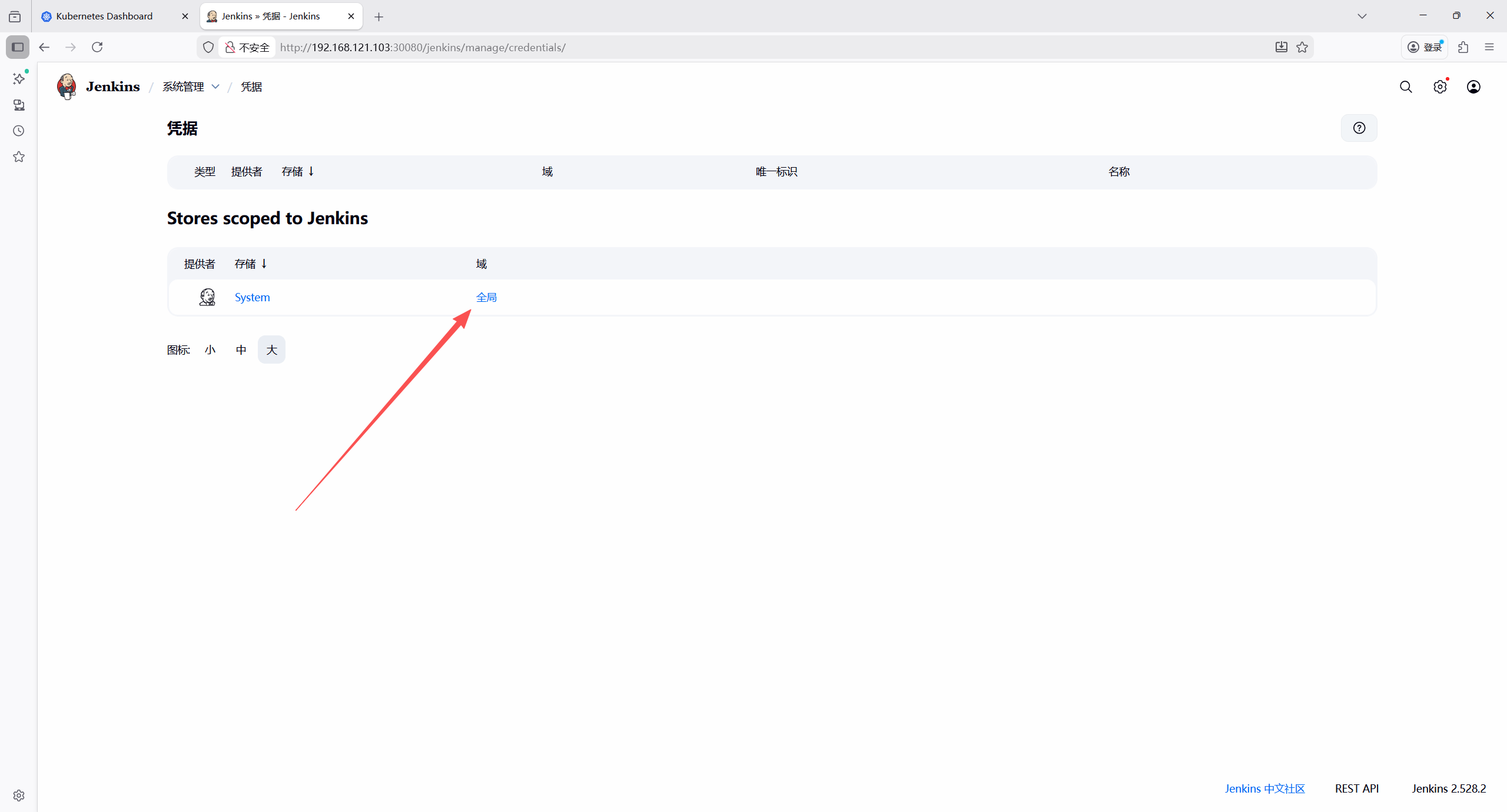

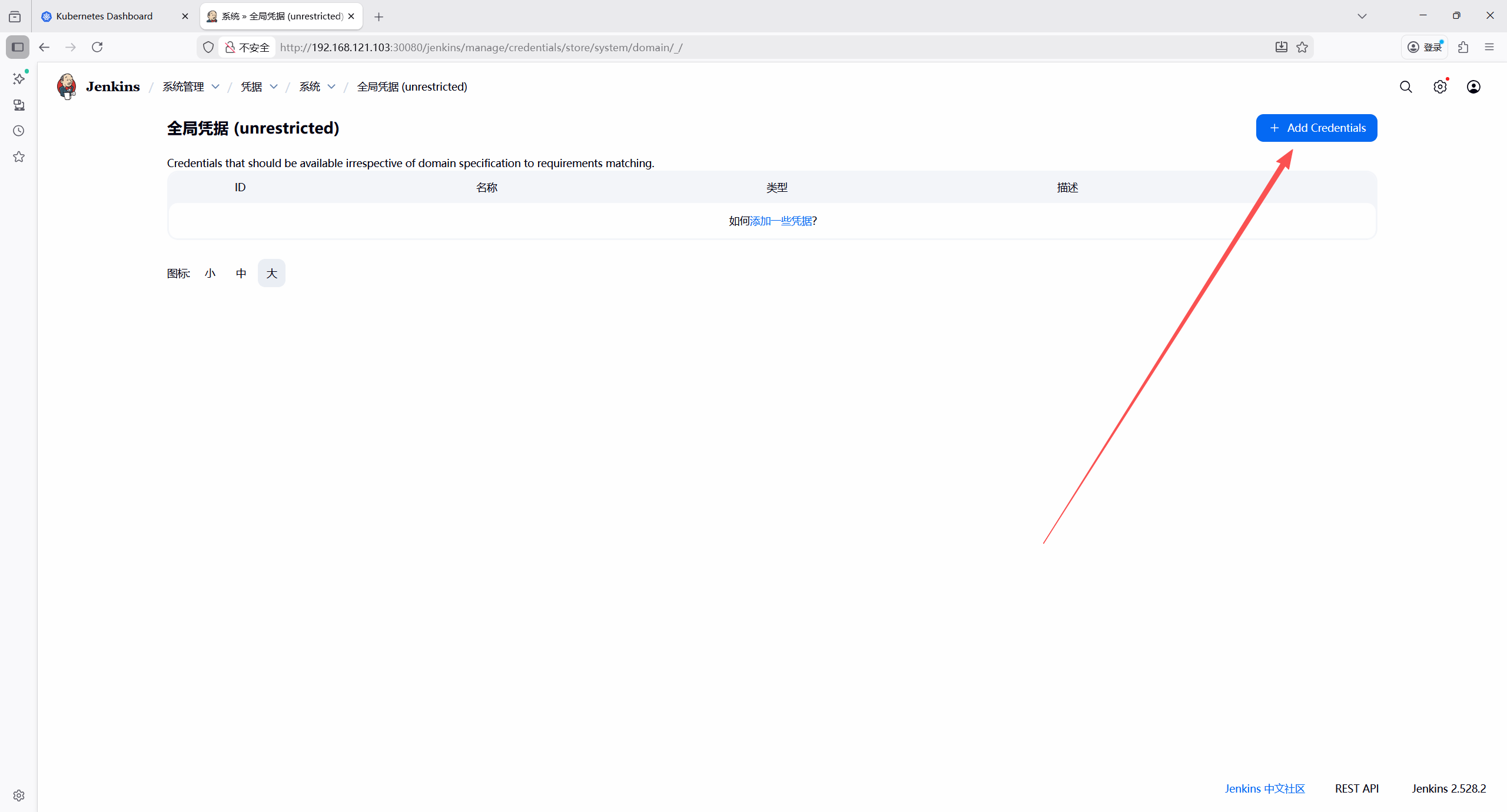

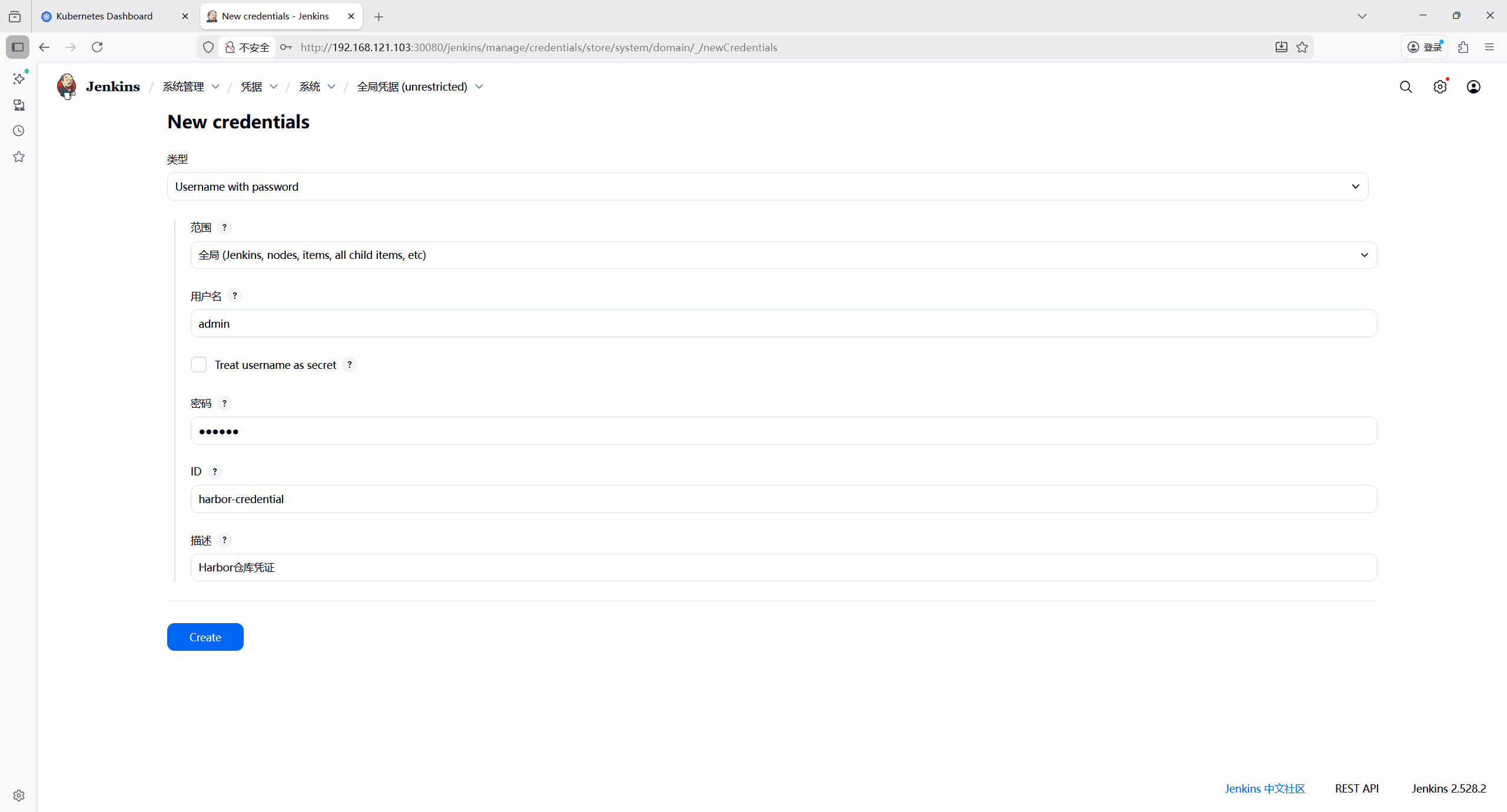
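Plugins installed through the UI live only in the PVC. An alternative is to bake them into the custom Jenkins image with `jenkins-plugin-cli`, which ships in the official jenkins/jenkins images. A sketch that writes a `plugins.txt`; the plugin IDs (`kubernetes`, `docker-plugin`, `docker-workflow`, `git`, `credentials-binding`, `pipeline-utility-steps`) are assumed to correspond to the display names above and are worth double-checking on plugins.jenkins.io, and `write_plugins_txt` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Sketch: generate plugins.txt for `jenkins-plugin-cli --plugin-file plugins.txt`.
# Assumption: these plugin IDs correspond to the plugins listed above.
PLUGINS=(
  kubernetes              # Kubernetes Plugin
  docker-plugin           # Docker Plugin
  docker-workflow         # Docker Pipeline Plugin
  git                     # Git Plugin
  credentials-binding     # Credentials Binding Plugin
  pipeline-utility-steps  # Pipeline Utility Steps
)

# write_plugins_txt FILE: one plugin ID per line (no version pin = latest).
write_plugins_txt() {
  printf '%s\n' "${PLUGINS[@]}" > "$1"
}
```

After copying the file into the image, a Dockerfile step such as `RUN jenkins-plugin-cli --plugin-file /usr/share/jenkins/ref/plugins.txt` would install the plugins at build time instead of through the UI.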

12.8.4 Configure the global Jenkins credentials

Add the Harbor credential:

Kind: Username with password

Username: admin

Password: 123456

ID: harbor-credential

Description: Harbor registry credential

Save

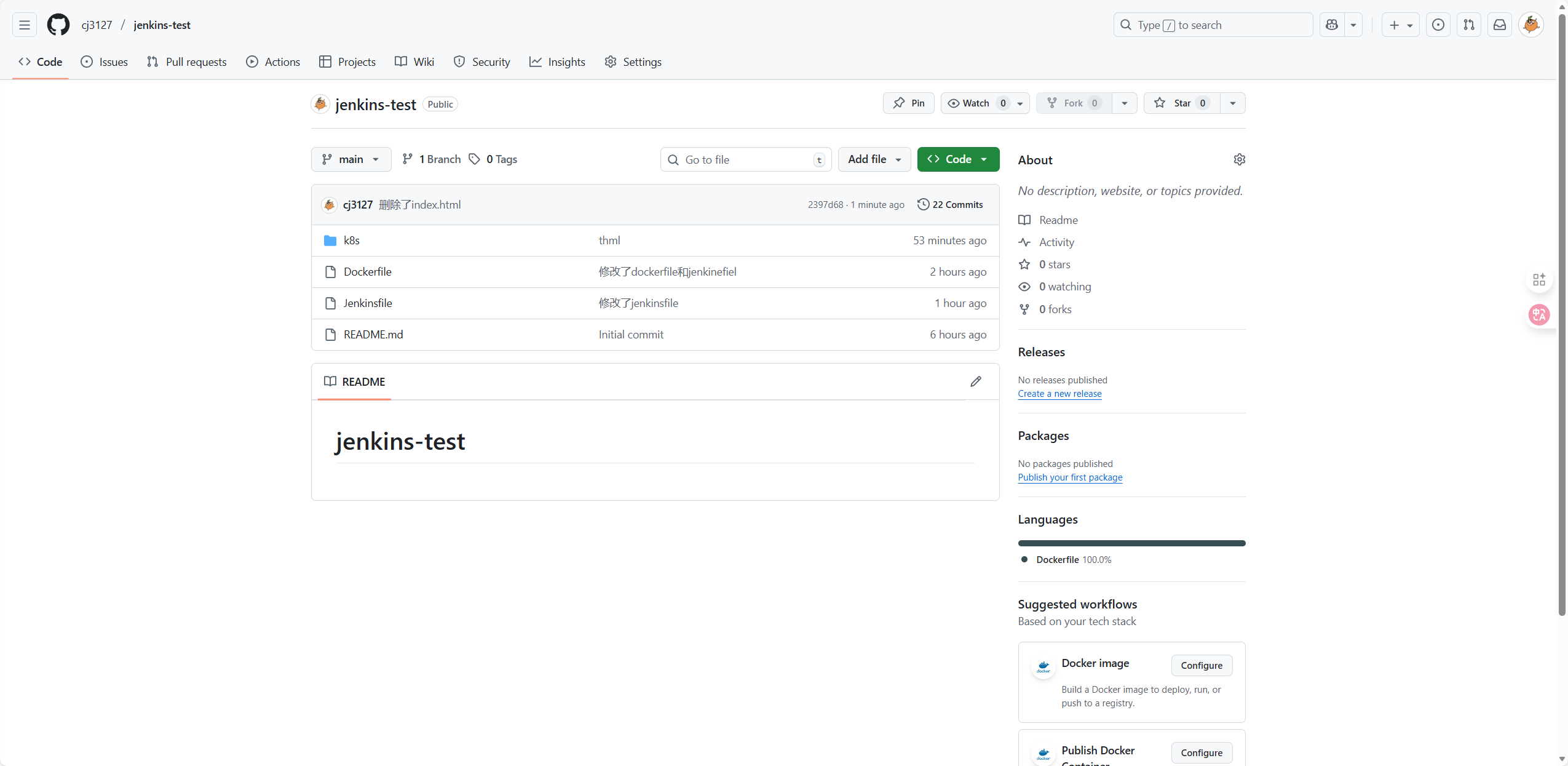

12.8.5 Write the CI/CD pipeline

- Prepare a GitHub repository and configure passwordless SSH access

- Create a local directory

bash

root@master:~/yaml# mkdir github

root@master:~/yaml# cd github

- Set the Git identity

bash

root@master:~/yaml/github# git config --global user.name "chenjun"

root@master:~/yaml/github# git config --global user.email "3127103271@qq.com"

root@master:~/yaml/github# git config --global color.ui true

root@master:~/yaml/github# git config --list

user.name=chenjun

user.email=3127103271@qq.com

color.ui=true

# Initialize (optional: the git clone below creates its own repository)

root@master:~/yaml# git init

- Clone the remote GitHub repository locally

bash

root@master:~/yaml/github# git clone git@github.com:cj3127/jenkins-test.git

Cloning into 'jenkins-test'...

remote: Enumerating objects: 3, done.

remote: Counting objects: 100% (3/3), done.

remote: Total 3 (delta 0), reused 0 (delta 0), pack-reused 0 (from 0)

Receiving objects: 100% (3/3), done.

root@master:~/yaml/github# ls

jenkins-test

root@master:~/yaml/github# cd jenkins-test

- Write the Dockerfile

bash

root@master:~/yaml/github/jenkins-test# vim Dockerfile

# Based on the nginx:latest image stored in Harbor

FROM harbor.test.com/library/nginx:latest

- Create the nginx-related manifests

bash

root@master:~/yaml/github/jenkins-test# mkdir k8s

root@master:~/yaml/github/jenkins-test# cd k8s

# Copy the original Deployment manifest

root@master:~/yaml/github/jenkins-test/k8s# cp /root/yaml/deploy/nginx-deployment.yaml /root/yaml/github/jenkins-test/k8s/

# Copy the original Service manifest

root@master:~/yaml/github/jenkins-test/k8s# cp /root/yaml/deploy/nginx-service.yaml /root/yaml/github/jenkins-test/k8s/

# Copy the nginx ConfigMap manifest

root@master:~/yaml/github/jenkins-test/k8s# cp /root/yaml/configmap/nginx-welcome-cm.yaml /root/yaml/github/jenkins-test/k8s/

- Write the Jenkinsfile

bash

root@master:~/yaml/github/jenkins-test# vim Jenkinsfile

pipeline {

agent any

environment {

HARBOR_ADDR = "harbor.test.com"

HARBOR_PROJECT = "library"

IMAGE_NAME = "nginx"

IMAGE_TAG = "${BUILD_NUMBER}"

FULL_IMAGE_NAME = "${HARBOR_ADDR}/${HARBOR_PROJECT}/${IMAGE_NAME}:${IMAGE_TAG}"

K8S_NAMESPACE = "default"

}

stages {

stage("Build Docker image") {

steps {

echo "Building the Docker image"

sh "docker build -t ${FULL_IMAGE_NAME} ."

}

}

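stage("Push image to Harbor") {

steps {

echo "Pushing the image to Harbor"

withCredentials([usernamePassword(credentialsId: 'harbor-credential', passwordVariable: 'HARBOR_PWD', usernameVariable: 'HARBOR_USER')]) {

// single quotes: the shell (not Groovy) expands the secrets, and --password-stdin keeps the password off the command line

sh 'echo "$HARBOR_PWD" | docker login "$HARBOR_ADDR" -u "$HARBOR_USER" --password-stdin'

sh "docker push ${FULL_IMAGE_NAME}"

sh "docker logout ${HARBOR_ADDR}"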

}

}

}

stage("Deploy to K8s cluster") {

steps {

echo "Deploying to the K8s cluster"

sh "kubectl apply -f k8s/nginx-welcome-cm.yaml -n ${K8S_NAMESPACE}"

sh "sed -i 's|harbor.test.com/library/nginx:latest|${FULL_IMAGE_NAME}|g' k8s/nginx-deployment.yaml"

sh "kubectl apply -f k8s/nginx-deployment.yaml -n ${K8S_NAMESPACE}"

sh "kubectl rollout status deployment/nginx-deployment -n ${K8S_NAMESPACE}"

}

}

stage("Verify service") {

steps {

echo "Verifying service availability"

sh "curl -sk https://www.test.com -o /dev/null -w '%{http_code}' | grep -x 200"

}

}

}

post {

success {

echo "Pipeline succeeded"

}

failure {

echo "Pipeline failed"

}

always {

sh "docker rmi ${FULL_IMAGE_NAME} || true"

}

}

}

12.8.6 Push the local files to the GitHub repository

bash

root@master:~/yaml/github/jenkins-test# git add Dockerfile Jenkinsfile k8s

root@master:~/yaml/github/jenkins-test# git commit -m "Add Dockerfile, Jenkinsfile and k8s manifests"

root@master:~/yaml/github/jenkins-test# git push origin main

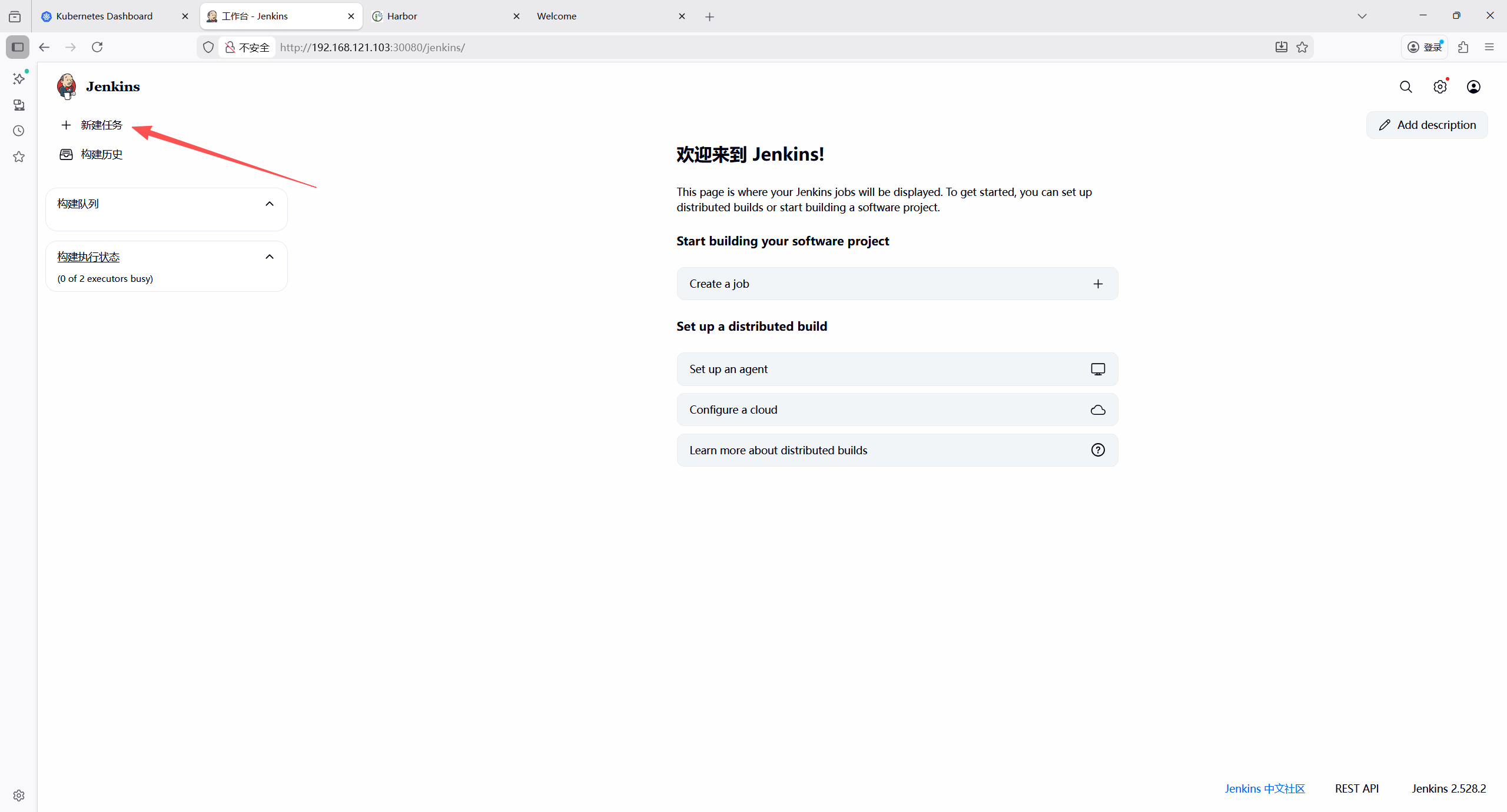

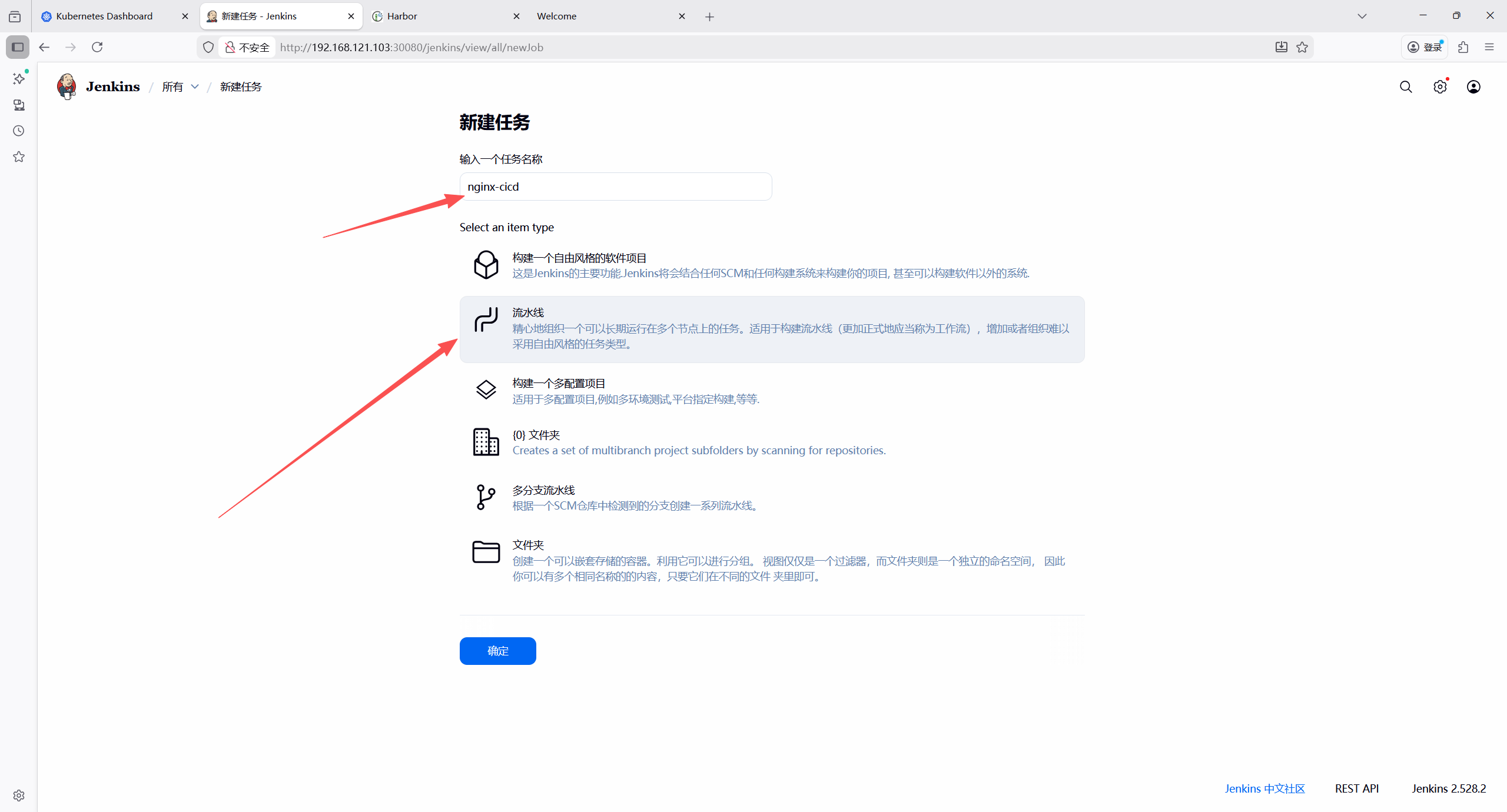
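Before the first pipeline run, the deploy stage's `sed` substitution can be tried locally on a copy of the manifest. If the pattern does not match the Deployment's image line, `sed` silently changes nothing and the rollout keeps the old image. A sketch assuming the Deployment references `harbor.test.com/library/nginx:latest`, as the Jenkinsfile expects; `render_image` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Sketch: dry-run the Jenkinsfile's image substitution on a copy of a manifest.
# Assumption: the manifest references harbor.test.com/library/nginx:latest.
OLD_IMAGE='harbor.test.com/library/nginx:latest'

# render_image FILE NEW_IMAGE: print FILE with OLD_IMAGE replaced; fail if nothing matched.
render_image() {
  local file=$1 new=$2
  grep -qF "$OLD_IMAGE" "$file" || { echo "pattern not found in $file" >&2; return 1; }
  # Same substitution the pipeline runs with sed -i, printed instead of edited in place.
  sed "s|${OLD_IMAGE}|${new}|g" "$file"
}
```

For example, `render_image k8s/nginx-deployment.yaml harbor.test.com/library/nginx:1 | grep image:` shows the image line Jenkins would apply for build number 1.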

12.9 创建jenkins流水线任务

- 首页新建任务

- 输入任务名称,选择流水线,确定

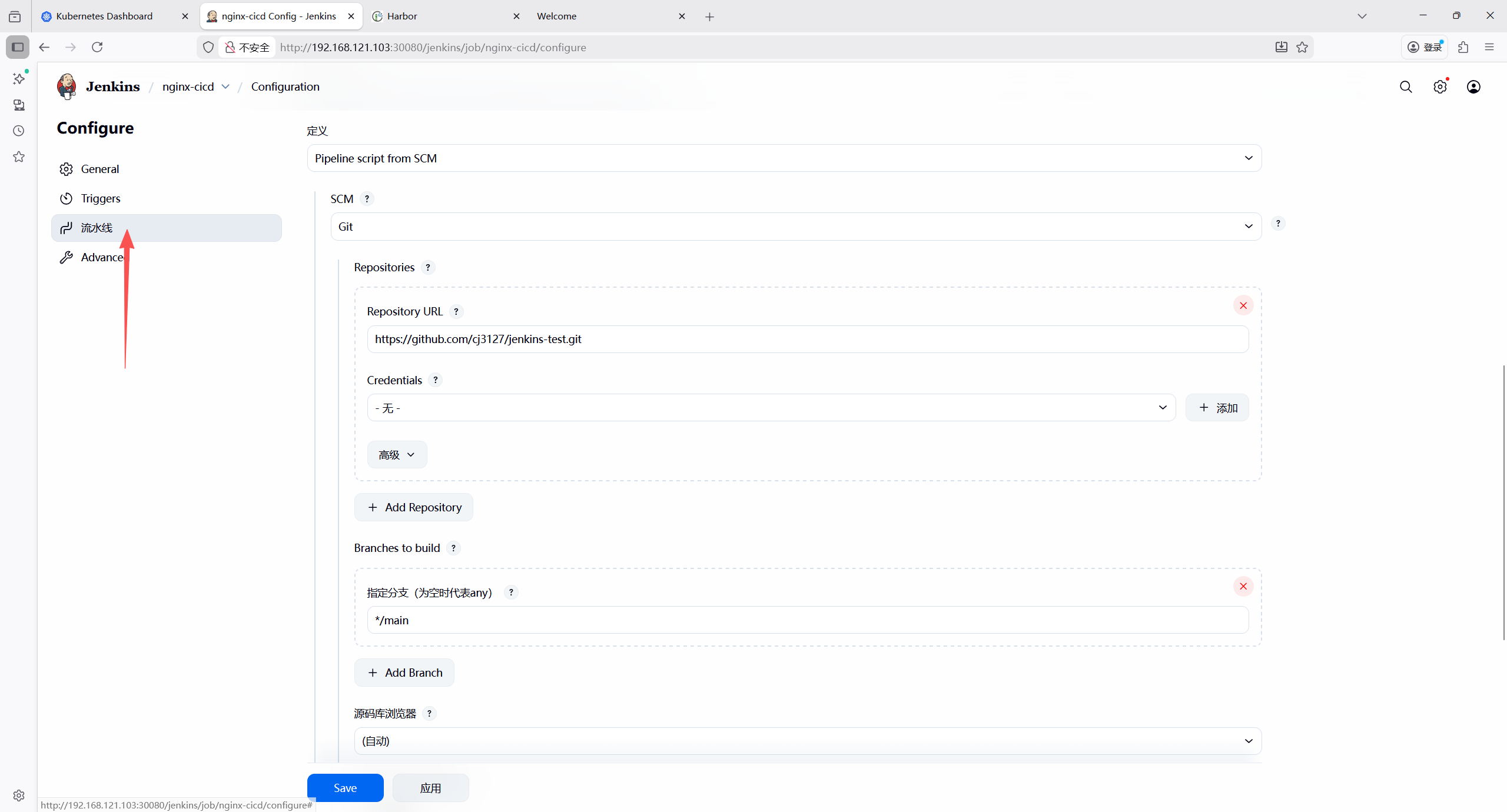

- 流水线配置

- 定义:Pipeline script from SCM;

- SCM:Git;

- 仓库 URL: Git 仓库地址;

- 分支:

*/main; - 脚本路径:

Jenkinsfile;

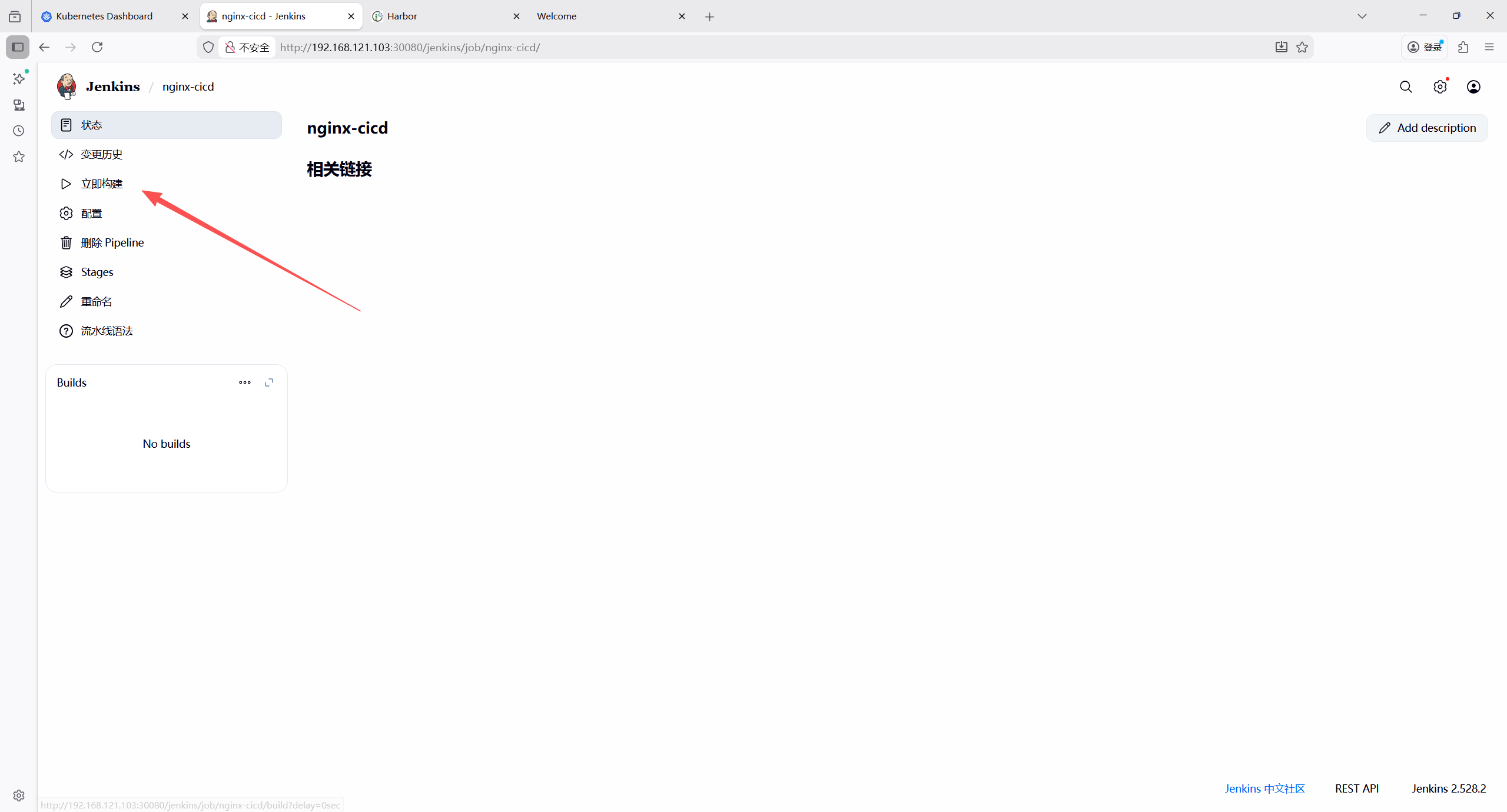

应用保存配置

修改cm配置的主页内容验证是否更新成功

bash

root@master:~/yaml/github/jenkins-test/k8s# vim nginx-welcome-cm.yaml

root@master:~/yaml/github/jenkins-test/k8s# git add nginx-welcome-cm.yaml

root@master:~/yaml/github/jenkins-test/k8s# git commit -m "Update the home page content"

[main 35cd872] Update the home page content

1 file changed, 1 insertion(+), 1 deletion(-)

root@master:~/yaml/github/jenkins-test/k8s# git push origin main

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 2 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 399 bytes | 399.00 KiB/s, done.

Total 4 (delta 3), reused 0 (delta 0)

remote: Resolving deltas: 100% (3/3), completed with 3 local objects.

To github.com:cj3127/jenkins-test.git

73e5b7c..35cd872 main -> main

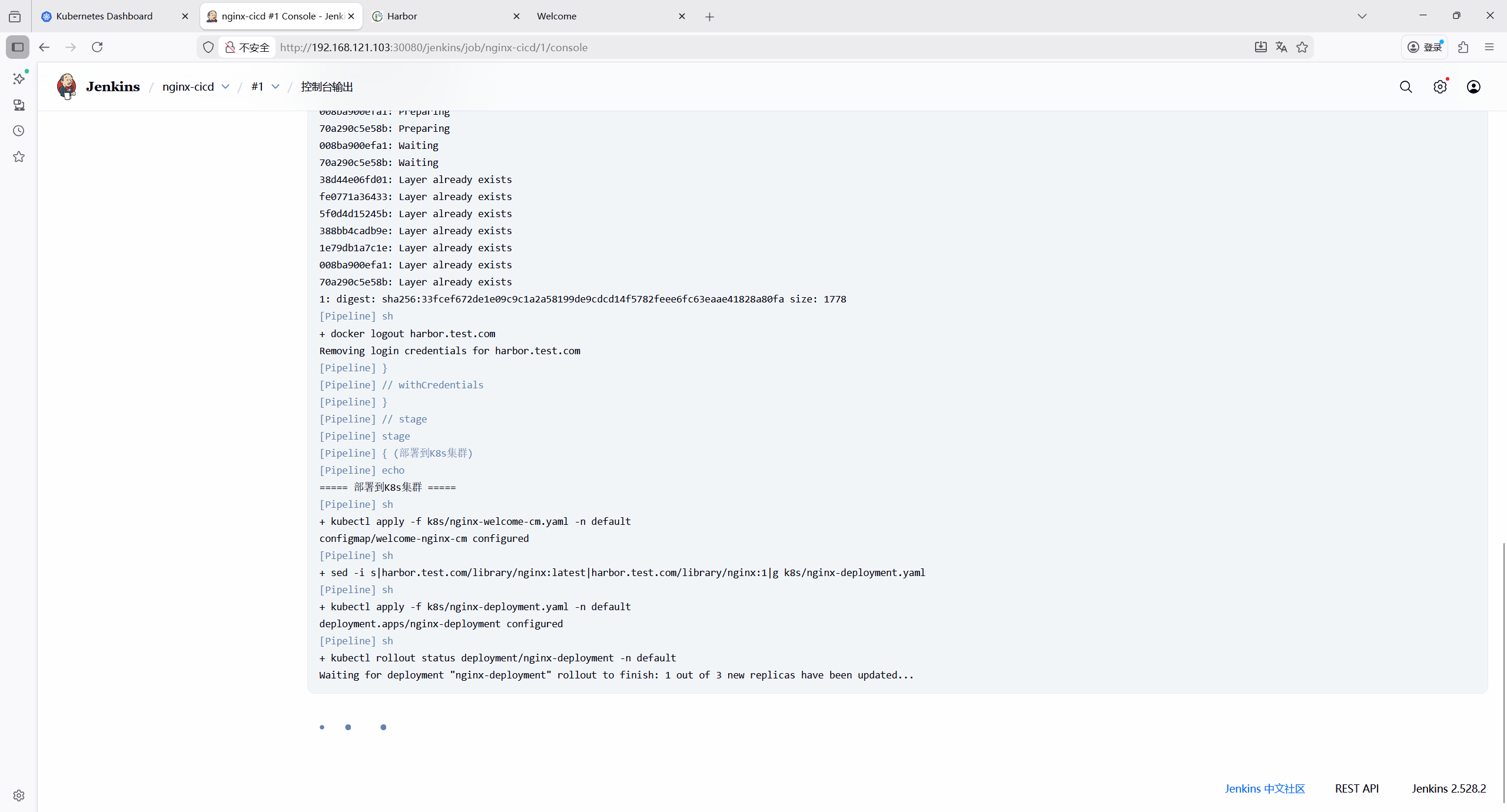

root@master:~/yaml/github/jenkins-test/k8s# - 执行构建任务

查看构建控制台输出内容

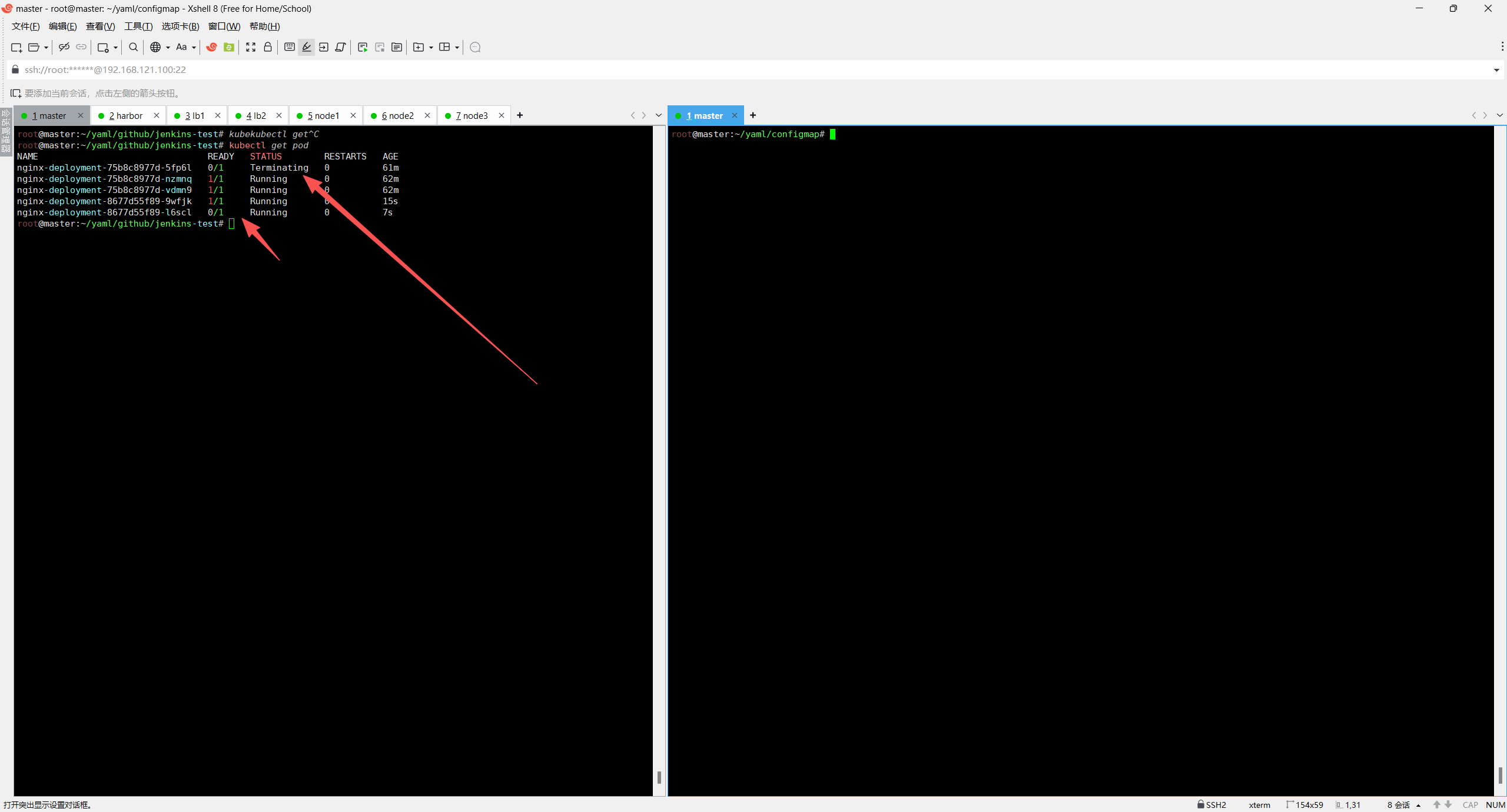

查看pod状态,滚动更新

更新完成后通过域名www.test.com访问测试查看页面内容
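The page can also be checked from the command line without editing /etc/hosts: `curl --resolve` pins www.test.com to the VIP, so both the TLS SNI and the Host header match the Ingress `tls` and `rules`. A sketch assuming the VIP 192.168.121.188 forwards port 443 to the ingress controller; `page_curl_args` and `page_contains` are hypothetical helpers, and the text to look for is whatever was just put into the ConfigMap:

```bash
#!/usr/bin/env bash
# Sketch: fetch https://www.test.com through the VIP and look for expected text.
# Assumption: the VIP forwards :443 to the ingress controller.
VIP="${VIP:-192.168.121.188}"
HOST="${HOST:-www.test.com}"

# curl arguments, one per line; --resolve maps HOST:443 to the VIP,
# -k accepts the self-signed certificate, -s hides the progress bar.
page_curl_args() {
  printf '%s\n' -sk --resolve "${HOST}:443:${VIP}" "https://${HOST}/"
}

# page_contains TEXT: succeed if the live page contains TEXT (fixed-string match).
page_contains() {
  local args
  mapfile -t args < <(page_curl_args)
  curl "${args[@]}" | grep -qF -- "$1"
}
```

After the rollout, `page_contains '<new welcome text>' && echo updated` (with the real text substituted) confirms the new ConfigMap content is being served.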