Running YOLOv5 on Ubuntu 24.04 (CPU only), revision 1

- I. Overall approach
- 1. Windows 10 steps
  - 1.1 Export the PyTorch model to TorchScript on Windows 10
  - 1.2 Check on Ubuntu: the file is a valid TorchScript archive
  - 1.3 Question: why is the output named `yolov5s.torchscript` rather than `yolov5s.torchscript.pt`?
- 2. Ubuntu steps
  - 2.1 Ubuntu dependencies
  - 2.2 Download the source code on Ubuntu
  - 2.3 Set up a virtual environment and convert TorchScript to NCNN with PNNX
  - 2.3 Create the test project
  - 2.4 Explaining the output (raw YOLOv5 head output: already decoded, sigmoid applied and scaled, but before NMS)
    - 2.4.1 Output shape: `out shape (c, h, w): 1, 25200, 85`
    - 2.4.2 Why 25200?
    - 2.4.3 Why is each point 85-dimensional?
    - 2.4.4 What type are these values?

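Environment

- Development machine: Ubuntu 24.04 (installed natively on a physical host rather than in a VM, so it runs fast)
- Target board: Ubuntu 24
- Already available: yolov5n.pt
- I wrote this document myself and it is a bit long. Once everything runs on Ubuntu, moving to another board should only require switching to a cross-compilation toolchain. (If this helps, a like is appreciated.)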

I. Overall approach

- Windows (GPU): only does pt -> torchscript (export is faster there, and the PyTorch environment is more mature)
- Ubuntu (CPU): does torchscript -> ncnn (param/bin), plus build and run

1、window10操作

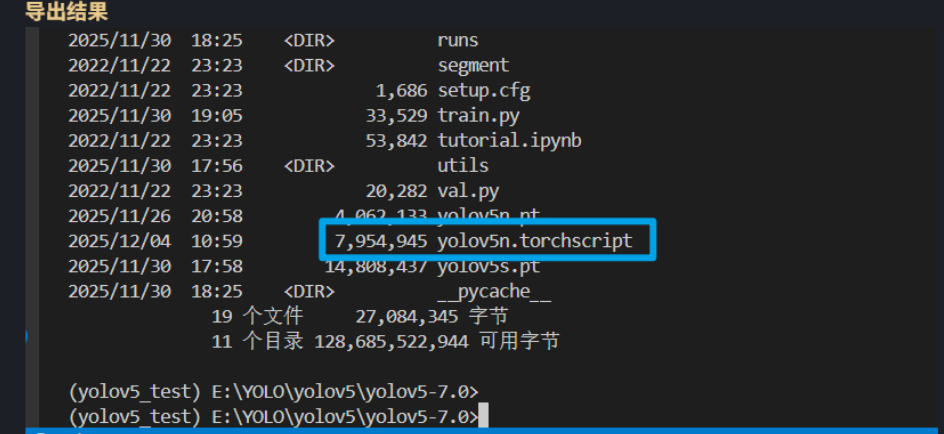

1.1、使用window10将PyTorch导出TorchScript

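1.1.1 Create the script export_ts.py

Prerequisite: a working YOLOv5 environment must already be set up on Windows 10. Without it, the script below will most likely fail.

    import torch

    print("loading yolov5n...")
    # Fetch the pretrained yolov5n model through torch.hub
    model = torch.hub.load('ultralytics/yolov5', 'yolov5n', pretrained=True)
    model.eval()  # switch to inference mode before tracing

    # Dummy input: batch 1, 3 channels, 640x640 (the same shape passed to pnnx later)
    dummy = torch.randn(1, 3, 640, 640)

    print("tracing...")
    ts = torch.jit.trace(model, dummy)
    ts.save("yolov5n.torchscript.pt")
    print("saved --> yolov5n.torchscript.pt")

1.1.2 Run the script

    python export_ts.py

1.1.3 Script output

yolov5n.torchscript.pt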
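As a quick sanity check on the exported file, the sketch below tests whether it is a zip container and lists its entries, using only the Python standard library. It relies on TorchScript files normally being zip archives. The helper name `inspect_archive` is hypothetical, and the check does not prove the model loads in PyTorch.

```python
import zipfile


def inspect_archive(path):
    """Return the entry names if `path` is a zip archive, otherwise None."""
    # is_zipfile() returns False for missing files and for non-zip content.
    if not zipfile.is_zipfile(path):
        return None
    with zipfile.ZipFile(path) as archive:
        return archive.namelist()


# Example use (file name taken from the export script):
#   entries = inspect_archive("yolov5n.torchscript.pt")
#   print("not a zip archive" if entries is None else entries[:5])
```

A `None` result suggests the export was interrupted or the wrong file was copied. A non-empty list only confirms the container format.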

1.2 Check on Ubuntu: the file is a valid TorchScript archive (see below)

    user@user-EliteMini-Series:~$ file yolov5n.torchscript
    yolov5n.torchscript: Zip archive data, at least v0.0 to extract, compression method=store

1.3 Question: why is the output named yolov5s.torchscript rather than yolov5s.torchscript.pt?

1. Depending on the version and the combination of arguments, YOLOv5's export.py may name the TorchScript output yolov5s.torchscript, yolov5s.torchscript.pt, or yolov5s.torchscript.zip.
2. A TorchScript file is normally a zip-packed model (a TorchScript archive), so the suffix does not affect its content.
3. pnnx takes the path of a TorchScript model file, so yolov5s.torchscript can be passed in directly.

2. Ubuntu steps

2.1 Ubuntu dependencies

    sudo apt install build-essential git cmake libprotobuf-dev protobuf-compiler libomp-dev libvulkan-dev vulkan-tools libopencv-dev python3 python3-pip

2.2 Download the source code on Ubuntu

    cd /home/user/RK/RK3568/4_rk3568_ncnn
    mkdir -p rk3568_work
    cd rk3568_work
    git clone https://github.com/Tencent/ncnn.git
    cd ncnn
    git submodule update --init

2.3 Set up a virtual environment and convert TorchScript to NCNN with PNNX

    # Create a new virtual environment
    python3 -m venv /home/user/RK/RK3568/4_rk3568_ncnn/rk3568_work/my_pytorch_env

    # Activate the virtual environment
    source /home/user/RK/RK3568/4_rk3568_ncnn/rk3568_work/my_pytorch_env/bin/activate

    pip install torch torchvision torchaudio
    pip install pnnx

    pnnx yolov5n.torchscript inputshape=[1,3,640,640] fp16=0 optlevel=2 device=cpu

Files after conversion:

    (my_pytorch_env) user@user-EliteMini-Series:~/RK/RK3568/4_rk3568_ncnn/rk3568_work$ ls
    app-rk3568      ncnn-build-host    yolov5n.ncnn.param  yolov5n.pnnx.onnx   yolov5n.torchscript
    my_pytorch_env  ncnn-build-rk3568  yolov5n_ncnn.py     yolov5n.pnnx.param
    ncnn            yolov5n.ncnn.bin   yolov5n.pnnx.bin    yolov5n_pnnx.py

What each converted file is for:

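    yolov5n.torchscript   # the original TorchScript model
    yolov5n.ncnn.param    # ✅ final ncnn model (param), the most important output
    yolov5n.ncnn.bin      # ✅ final ncnn model (bin), the most important output
    yolov5n.pnnx.onnx     # intermediate ONNX
    yolov5n.pnnx.param    # intermediate PNNX graph (generally closer to the original graph)
    yolov5n.pnnx.bin      # weights for the intermediate PNNX graph
    yolov5n_pnnx.py       # Python demo generated by pnnx (uses pnnx.param/bin)
    yolov5n_ncnn.py       # Python demo generated by pnnx (uses ncnn.param/bin)

The C++ program loads the model like this: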

    net.load_param("yolov5n.ncnn.param");
    net.load_model("yolov5n.ncnn.bin");

Testing the PNNX-to-NCNN conversion (optional)
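Before running the comparison, it can help to confirm that the files the two generated demos depend on are actually present and non-empty. The following is a minimal sketch using only the standard library. The file names are taken from the directory listing above, and the helper name `missing_files` is hypothetical.

```python
from pathlib import Path

# File names as they appear in the directory listing after conversion.
REQUIRED_FILES = (
    "yolov5n.ncnn.param",
    "yolov5n.ncnn.bin",
    "yolov5n.pnnx.bin",
    "yolov5n_ncnn.py",
    "yolov5n_pnnx.py",
)


def missing_files(workdir=".", required=REQUIRED_FILES):
    """Return the required file names that are absent or empty in `workdir`."""
    base = Path(workdir)
    missing = []
    for name in required:
        candidate = base / name
        # Treat zero-byte files as missing: they usually mean a failed conversion.
        if not candidate.is_file() or candidate.stat().st_size == 0:
            missing.append(name)
    return missing
```

Calling `missing_files()` from the working directory returns an empty list when everything is in place. Any names it returns point to a conversion step that should be re-run.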

compare.py

    import torch

    from yolov5n_ncnn import test_inference as ncnn_infer  # ncnn inference function
    from yolov5n_pnnx import Model as PNNXModel  # PNNX model class
    from yolov5n_pnnx import test_inference  # PNNX inference function


    def compare_outputs():
        # Use one shared input so both backends see identical data
        torch.manual_seed(0)
        inp = torch.rand(1, 3, 640, 640, dtype=torch.float)

        # 1. PNNX inference
        pnnx_model = PNNXModel()
        pnnx_output = test_inference(pnnx_model, inp)  # same input
        # If the result is a tuple, keep only its first element
        if isinstance(pnnx_output, tuple):
            pnnx_output = pnnx_output[0]

        # 2. ncnn inference
        ncnn_output = ncnn_infer(inp)  # same input

        # 3. Check that the shapes match
        print("pnnx shape:", pnnx_output.shape)
        print("ncnn shape:", ncnn_output.shape)
        print("Shapes equal:", pnnx_output.shape == ncnn_output.shape)

        # 4. Maximum absolute error
        diff = (pnnx_output - ncnn_output).abs()
        print("Max absolute diff:", diff.max().item())

        # 5. Tolerance check with allclose
        allclose = torch.allclose(pnnx_output, ncnn_output, rtol=1e-3, atol=1e-6)
        print("Allclose (rtol=1e-3, atol=1e-6):", allclose)


    if __name__ == "__main__":
        compare_outputs()
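Step 5 passes `rtol=1e-3` and `atol=1e-6` to `torch.allclose`, which tests `|a - b| <= atol + rtol * |b|` for every element. The pure-Python sketch below mirrors that rule on scalars to show how the two tolerances interact. The function names are hypothetical and the sample values are illustrative, not measured outputs.

```python
def is_close(a, b, rtol=1e-3, atol=1e-6):
    """Scalar form of the elementwise rule used by torch.allclose."""
    return abs(a - b) <= atol + rtol * abs(b)


def all_close(xs, ys, rtol=1e-3, atol=1e-6):
    """True only if the sequences have equal length and every pair passes."""
    return len(xs) == len(ys) and all(
        is_close(a, b, rtol, atol) for a, b in zip(xs, ys)
    )


# Large values: the relative term dominates, so a 0.5 gap still passes.
print(is_close(640.0, 640.5))   # allowed gap is about 0.64
# Small values: the allowed gap shrinks to a few millionths, so this fails.
print(is_close(0.001, 0.002))   # allowed gap is about 3e-6
```

With these settings, values in the hundreds (such as pixel-scale box coordinates) may differ by a few tenths and still pass, while near-zero values must agree almost exactly. The maximum absolute difference printed in step 4 is the better guide to the actual error.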

yolov5n_ncnn.py

    # yolov5n_ncnn.py
    import torch
    import ncnn
    import numpy as np


    def test_inference(in0=None):
        # Fall back to a fixed random input when none is supplied
        if in0 is None:
            torch.manual_seed(0)
            in0 = torch.rand(1, 3, 640, 640, dtype=torch.float)

        out = []

        with ncnn.Net() as net:
            net.load_param("yolov5n.ncnn.param")
            net.load_model("yolov5n.ncnn.bin")

            with net.create_extractor() as ex:
                # Convert the tensor to an ncnn.Mat (batch dimension removed) before feeding it
                ex.input("in0", ncnn.Mat(in0.squeeze(0).numpy()).clone())

                _, out0 = ex.extract("out0")
                # Convert back to a torch tensor and restore the batch dimension
                out.append(torch.from_numpy(np.array(out0)).unsqueeze(0))

        if len(out) == 1:
            return out[0]
        else:
            return tuple(out)


    if __name__ == "__main__":
        print(test_inference())

yolov5n_pnnx.py

import os

import numpy as np

import tempfile, zipfile

import torch

import torch.nn as nn

import torch.nn.functional as F

try:

import torchvision

import torchaudio

except:

pass

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.model_0_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=3, kernel_size=(6,6), out_channels=16, padding=(2,2), padding_mode='zeros', stride=(2,2))

self.model_0_act = nn.SiLU()

self.model_1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=16, kernel_size=(3,3), out_channels=32, padding=(1,1), padding_mode='zeros', stride=(2,2))

self.model_1_act = nn.SiLU()

self.model_2_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(1,1), out_channels=16, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_2_cv1_act = nn.SiLU()

self.model_2_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=16, kernel_size=(1,1), out_channels=16, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_2_m_0_cv1_act = nn.SiLU()

self.model_2_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=16, kernel_size=(3,3), out_channels=16, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_2_m_0_cv2_act = nn.SiLU()

self.model_2_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(1,1), out_channels=16, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_2_cv2_act = nn.SiLU()

self.model_2_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_2_cv3_act = nn.SiLU()

self.model_3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(3,3), out_channels=64, padding=(1,1), padding_mode='zeros', stride=(2,2))

self.model_3_act = nn.SiLU()

self.model_4_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_4_cv1_act = nn.SiLU()

self.model_4_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_4_m_0_cv1_act = nn.SiLU()

self.model_4_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(3,3), out_channels=32, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_4_m_0_cv2_act = nn.SiLU()

self.model_4_m_1_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_4_m_1_cv1_act = nn.SiLU()

self.model_4_m_1_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(3,3), out_channels=32, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_4_m_1_cv2_act = nn.SiLU()

self.model_4_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_4_cv2_act = nn.SiLU()

self.model_4_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_4_cv3_act = nn.SiLU()

self.model_5_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(3,3), out_channels=128, padding=(1,1), padding_mode='zeros', stride=(2,2))

self.model_5_act = nn.SiLU()

self.model_6_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_6_cv1_act = nn.SiLU()

self.model_6_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_6_m_0_cv1_act = nn.SiLU()

self.model_6_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(3,3), out_channels=64, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_6_m_0_cv2_act = nn.SiLU()

self.model_6_m_1_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_6_m_1_cv1_act = nn.SiLU()

self.model_6_m_1_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(3,3), out_channels=64, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_6_m_1_cv2_act = nn.SiLU()

self.model_6_m_2_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_6_m_2_cv1_act = nn.SiLU()

self.model_6_m_2_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(3,3), out_channels=64, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_6_m_2_cv2_act = nn.SiLU()

self.model_6_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_6_cv2_act = nn.SiLU()

self.model_6_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_6_cv3_act = nn.SiLU()

self.model_7_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(3,3), out_channels=256, padding=(1,1), padding_mode='zeros', stride=(2,2))

self.model_7_act = nn.SiLU()

self.model_8_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_8_cv1_act = nn.SiLU()

self.model_8_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_8_m_0_cv1_act = nn.SiLU()

self.model_8_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(3,3), out_channels=128, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_8_m_0_cv2_act = nn.SiLU()

self.model_8_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_8_cv2_act = nn.SiLU()

self.model_8_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=256, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_8_cv3_act = nn.SiLU()

self.model_9_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_9_cv1_act = nn.SiLU()

self.model_9_m = nn.MaxPool2d(ceil_mode=False, dilation=(1,1), kernel_size=(5,5), padding=(2,2), return_indices=False, stride=(1,1))

self.pnnx_unique_0 = nn.MaxPool2d(ceil_mode=False, dilation=(1,1), kernel_size=(5,5), padding=(2,2), return_indices=False, stride=(1,1))

self.pnnx_unique_1 = nn.MaxPool2d(ceil_mode=False, dilation=(1,1), kernel_size=(5,5), padding=(2,2), return_indices=False, stride=(1,1))

self.model_9_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=512, kernel_size=(1,1), out_channels=256, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_9_cv2_act = nn.SiLU()

self.model_10_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_10_act = nn.SiLU()

self.model_11 = nn.Upsample(mode='nearest', scale_factor=(2.0,2.0), size=None)

self.model_13_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_13_cv1_act = nn.SiLU()

self.model_13_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_13_m_0_cv1_act = nn.SiLU()

self.model_13_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(3,3), out_channels=64, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_13_m_0_cv2_act = nn.SiLU()

self.model_13_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_13_cv2_act = nn.SiLU()

self.model_13_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_13_cv3_act = nn.SiLU()

self.model_14_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_14_act = nn.SiLU()

self.model_15 = nn.Upsample(mode='nearest', scale_factor=(2.0,2.0), size=None)

self.model_17_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_17_cv1_act = nn.SiLU()

self.model_17_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_17_m_0_cv1_act = nn.SiLU()

self.model_17_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=32, kernel_size=(3,3), out_channels=32, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_17_m_0_cv2_act = nn.SiLU()

self.model_17_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=32, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_17_cv2_act = nn.SiLU()

self.model_17_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_17_cv3_act = nn.SiLU()

self.model_18_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(3,3), out_channels=64, padding=(1,1), padding_mode='zeros', stride=(2,2))

self.model_18_act = nn.SiLU()

self.model_20_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_20_cv1_act = nn.SiLU()

self.model_20_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_20_m_0_cv1_act = nn.SiLU()

self.model_20_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(3,3), out_channels=64, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_20_m_0_cv2_act = nn.SiLU()

self.model_20_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=64, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_20_cv2_act = nn.SiLU()

self.model_20_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_20_cv3_act = nn.SiLU()

self.model_21_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(3,3), out_channels=128, padding=(1,1), padding_mode='zeros', stride=(2,2))

self.model_21_act = nn.SiLU()

self.model_23_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_23_cv1_act = nn.SiLU()

self.model_23_m_0_cv1_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_23_m_0_cv1_act = nn.SiLU()

self.model_23_m_0_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(3,3), out_channels=128, padding=(1,1), padding_mode='zeros', stride=(1,1))

self.model_23_m_0_cv2_act = nn.SiLU()

self.model_23_cv2_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=128, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_23_cv2_act = nn.SiLU()

self.model_23_cv3_conv = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=256, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_23_cv3_act = nn.SiLU()

self.model_24_m_0 = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=64, kernel_size=(1,1), out_channels=255, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_24_m_1 = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=128, kernel_size=(1,1), out_channels=255, padding=(0,0), padding_mode='zeros', stride=(1,1))

self.model_24_m_2 = nn.Conv2d(bias=True, dilation=(1,1), groups=1, in_channels=256, kernel_size=(1,1), out_channels=255, padding=(0,0), padding_mode='zeros', stride=(1,1))

archive = zipfile.ZipFile('yolov5n.pnnx.bin', 'r')

self.model_0_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.0.conv.bias', (16), 'float32')

self.model_0_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.0.conv.weight', (16,3,6,6), 'float32')

self.model_1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.1.conv.bias', (32), 'float32')

self.model_1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.1.conv.weight', (32,16,3,3), 'float32')

self.model_2_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.2.cv1.conv.bias', (16), 'float32')

self.model_2_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.2.cv1.conv.weight', (16,32,1,1), 'float32')

self.model_2_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.2.m.0.cv1.conv.bias', (16), 'float32')

self.model_2_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.2.m.0.cv1.conv.weight', (16,16,1,1), 'float32')

self.model_2_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.2.m.0.cv2.conv.bias', (16), 'float32')

self.model_2_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.2.m.0.cv2.conv.weight', (16,16,3,3), 'float32')

self.model_2_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.2.cv2.conv.bias', (16), 'float32')

self.model_2_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.2.cv2.conv.weight', (16,32,1,1), 'float32')

self.model_2_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.2.cv3.conv.bias', (32), 'float32')

self.model_2_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.2.cv3.conv.weight', (32,32,1,1), 'float32')

self.model_3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.3.conv.bias', (64), 'float32')

self.model_3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.3.conv.weight', (64,32,3,3), 'float32')

self.model_4_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.4.cv1.conv.bias', (32), 'float32')

self.model_4_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.4.cv1.conv.weight', (32,64,1,1), 'float32')

self.model_4_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.0.cv1.conv.bias', (32), 'float32')

self.model_4_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.0.cv1.conv.weight', (32,32,1,1), 'float32')

self.model_4_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.0.cv2.conv.bias', (32), 'float32')

self.model_4_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.0.cv2.conv.weight', (32,32,3,3), 'float32')

self.model_4_m_1_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.1.cv1.conv.bias', (32), 'float32')

self.model_4_m_1_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.1.cv1.conv.weight', (32,32,1,1), 'float32')

self.model_4_m_1_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.1.cv2.conv.bias', (32), 'float32')

self.model_4_m_1_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.4.m.1.cv2.conv.weight', (32,32,3,3), 'float32')

self.model_4_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.4.cv2.conv.bias', (32), 'float32')

self.model_4_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.4.cv2.conv.weight', (32,64,1,1), 'float32')

self.model_4_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.4.cv3.conv.bias', (64), 'float32')

self.model_4_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.4.cv3.conv.weight', (64,64,1,1), 'float32')

self.model_5_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.5.conv.bias', (128), 'float32')

self.model_5_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.5.conv.weight', (128,64,3,3), 'float32')

self.model_6_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.cv1.conv.bias', (64), 'float32')

self.model_6_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.cv1.conv.weight', (64,128,1,1), 'float32')

self.model_6_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.0.cv1.conv.bias', (64), 'float32')

self.model_6_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.0.cv1.conv.weight', (64,64,1,1), 'float32')

self.model_6_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.0.cv2.conv.bias', (64), 'float32')

self.model_6_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.0.cv2.conv.weight', (64,64,3,3), 'float32')

self.model_6_m_1_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.1.cv1.conv.bias', (64), 'float32')

self.model_6_m_1_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.1.cv1.conv.weight', (64,64,1,1), 'float32')

self.model_6_m_1_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.1.cv2.conv.bias', (64), 'float32')

self.model_6_m_1_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.1.cv2.conv.weight', (64,64,3,3), 'float32')

self.model_6_m_2_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.2.cv1.conv.bias', (64), 'float32')

self.model_6_m_2_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.2.cv1.conv.weight', (64,64,1,1), 'float32')

self.model_6_m_2_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.2.cv2.conv.bias', (64), 'float32')

self.model_6_m_2_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.m.2.cv2.conv.weight', (64,64,3,3), 'float32')

self.model_6_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.cv2.conv.bias', (64), 'float32')

self.model_6_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.cv2.conv.weight', (64,128,1,1), 'float32')

self.model_6_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.6.cv3.conv.bias', (128), 'float32')

self.model_6_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.6.cv3.conv.weight', (128,128,1,1), 'float32')

self.model_7_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.7.conv.bias', (256), 'float32')

self.model_7_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.7.conv.weight', (256,128,3,3), 'float32')

self.model_8_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.8.cv1.conv.bias', (128), 'float32')

self.model_8_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.8.cv1.conv.weight', (128,256,1,1), 'float32')

self.model_8_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.8.m.0.cv1.conv.bias', (128), 'float32')

self.model_8_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.8.m.0.cv1.conv.weight', (128,128,1,1), 'float32')

self.model_8_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.8.m.0.cv2.conv.bias', (128), 'float32')

self.model_8_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.8.m.0.cv2.conv.weight', (128,128,3,3), 'float32')

self.model_8_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.8.cv2.conv.bias', (128), 'float32')

self.model_8_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.8.cv2.conv.weight', (128,256,1,1), 'float32')

self.model_8_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.8.cv3.conv.bias', (256), 'float32')

self.model_8_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.8.cv3.conv.weight', (256,256,1,1), 'float32')

self.model_9_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.9.cv1.conv.bias', (128), 'float32')

self.model_9_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.9.cv1.conv.weight', (128,256,1,1), 'float32')

self.model_9_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.9.cv2.conv.bias', (256), 'float32')

self.model_9_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.9.cv2.conv.weight', (256,512,1,1), 'float32')

self.model_10_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.10.conv.bias', (128), 'float32')

self.model_10_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.10.conv.weight', (128,256,1,1), 'float32')

self.model_13_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.13.cv1.conv.bias', (64), 'float32')

self.model_13_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.13.cv1.conv.weight', (64,256,1,1), 'float32')

self.model_13_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.13.m.0.cv1.conv.bias', (64), 'float32')

self.model_13_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.13.m.0.cv1.conv.weight', (64,64,1,1), 'float32')

self.model_13_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.13.m.0.cv2.conv.bias', (64), 'float32')

self.model_13_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.13.m.0.cv2.conv.weight', (64,64,3,3), 'float32')

self.model_13_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.13.cv2.conv.bias', (64), 'float32')

self.model_13_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.13.cv2.conv.weight', (64,256,1,1), 'float32')

self.model_13_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.13.cv3.conv.bias', (128), 'float32')

self.model_13_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.13.cv3.conv.weight', (128,128,1,1), 'float32')

self.model_14_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.14.conv.bias', (64), 'float32')

self.model_14_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.14.conv.weight', (64,128,1,1), 'float32')

self.model_17_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.17.cv1.conv.bias', (32), 'float32')

self.model_17_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.17.cv1.conv.weight', (32,128,1,1), 'float32')

self.model_17_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.17.m.0.cv1.conv.bias', (32), 'float32')

self.model_17_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.17.m.0.cv1.conv.weight', (32,32,1,1), 'float32')

self.model_17_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.17.m.0.cv2.conv.bias', (32), 'float32')

self.model_17_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.17.m.0.cv2.conv.weight', (32,32,3,3), 'float32')

self.model_17_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.17.cv2.conv.bias', (32), 'float32')

self.model_17_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.17.cv2.conv.weight', (32,128,1,1), 'float32')

self.model_17_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.17.cv3.conv.bias', (64), 'float32')

self.model_17_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.17.cv3.conv.weight', (64,64,1,1), 'float32')

self.model_18_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.18.conv.bias', (64), 'float32')

self.model_18_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.18.conv.weight', (64,64,3,3), 'float32')

self.model_20_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.20.cv1.conv.bias', (64), 'float32')

self.model_20_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.20.cv1.conv.weight', (64,128,1,1), 'float32')

self.model_20_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.20.m.0.cv1.conv.bias', (64), 'float32')

self.model_20_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.20.m.0.cv1.conv.weight', (64,64,1,1), 'float32')

self.model_20_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.20.m.0.cv2.conv.bias', (64), 'float32')

self.model_20_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.20.m.0.cv2.conv.weight', (64,64,3,3), 'float32')

self.model_20_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.20.cv2.conv.bias', (64), 'float32')

self.model_20_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.20.cv2.conv.weight', (64,128,1,1), 'float32')

self.model_20_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.20.cv3.conv.bias', (128), 'float32')

self.model_20_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.20.cv3.conv.weight', (128,128,1,1), 'float32')

self.model_21_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.21.conv.bias', (128), 'float32')

self.model_21_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.21.conv.weight', (128,128,3,3), 'float32')

self.model_23_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.23.cv1.conv.bias', (128), 'float32')

self.model_23_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.23.cv1.conv.weight', (128,256,1,1), 'float32')

self.model_23_m_0_cv1_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.23.m.0.cv1.conv.bias', (128), 'float32')

self.model_23_m_0_cv1_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.23.m.0.cv1.conv.weight', (128,128,1,1), 'float32')

self.model_23_m_0_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.23.m.0.cv2.conv.bias', (128), 'float32')

self.model_23_m_0_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.23.m.0.cv2.conv.weight', (128,128,3,3), 'float32')

self.model_23_cv2_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.23.cv2.conv.bias', (128), 'float32')

self.model_23_cv2_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.23.cv2.conv.weight', (128,256,1,1), 'float32')

self.model_23_cv3_conv.bias = self.load_pnnx_bin_as_parameter(archive, 'model.23.cv3.conv.bias', (256), 'float32')

self.model_23_cv3_conv.weight = self.load_pnnx_bin_as_parameter(archive, 'model.23.cv3.conv.weight', (256,256,1,1), 'float32')

self.model_24_m_0.bias = self.load_pnnx_bin_as_parameter(archive, 'model.24.m.0.bias', (255), 'float32')

self.model_24_m_0.weight = self.load_pnnx_bin_as_parameter(archive, 'model.24.m.0.weight', (255,64,1,1), 'float32')

self.model_24_m_1.bias = self.load_pnnx_bin_as_parameter(archive, 'model.24.m.1.bias', (255), 'float32')

self.model_24_m_1.weight = self.load_pnnx_bin_as_parameter(archive, 'model.24.m.1.weight', (255,128,1,1), 'float32')

self.model_24_m_2.bias = self.load_pnnx_bin_as_parameter(archive, 'model.24.m.2.bias', (255), 'float32')

self.model_24_m_2.weight = self.load_pnnx_bin_as_parameter(archive, 'model.24.m.2.weight', (255,256,1,1), 'float32')

self.pnnx_53_data = self.load_pnnx_bin_as_parameter(archive, 'pnnx_53.data', (1,3,20,20,2,), 'float32')

self.pnnx_54_data = self.load_pnnx_bin_as_parameter(archive, 'pnnx_54.data', (1,3,20,20,2,), 'float32')

self.pnnx_55_data = self.load_pnnx_bin_as_parameter(archive, 'pnnx_55.data', (1,3,40,40,2,), 'float32')

self.pnnx_56_data = self.load_pnnx_bin_as_parameter(archive, 'pnnx_56.data', (1,3,40,40,2,), 'float32')

self.pnnx_58_data = self.load_pnnx_bin_as_parameter(archive, 'pnnx_58.data', (1,3,80,80,2,), 'float32')

self.pnnx_60_data = self.load_pnnx_bin_as_parameter(archive, 'pnnx_60.data', (1,3,80,80,2,), 'float32')

archive.close()

def load_pnnx_bin_as_parameter(self, archive, key, shape, dtype, requires_grad=True):

return nn.Parameter(self.load_pnnx_bin_as_tensor(archive, key, shape, dtype), requires_grad)

def load_pnnx_bin_as_tensor(self, archive, key, shape, dtype):

fd, tmppath = tempfile.mkstemp()

with os.fdopen(fd, 'wb') as tmpf, archive.open(key) as keyfile:

tmpf.write(keyfile.read())

m = np.memmap(tmppath, dtype=dtype, mode='r', shape=shape).copy()

os.remove(tmppath)

return torch.from_numpy(m)

def forward(self, v_0):

v_1 = self.model_0_conv(v_0)

v_2 = self.model_0_act(v_1)

v_3 = self.model_1_conv(v_2)

v_4 = self.model_1_act(v_3)

v_5 = self.model_2_cv1_conv(v_4)

v_6 = self.model_2_cv1_act(v_5)

v_7 = self.model_2_m_0_cv1_conv(v_6)

v_8 = self.model_2_m_0_cv1_act(v_7)

v_9 = self.model_2_m_0_cv2_conv(v_8)

v_10 = self.model_2_m_0_cv2_act(v_9)

v_11 = (v_6 + v_10)

v_12 = self.model_2_cv2_conv(v_4)

v_13 = self.model_2_cv2_act(v_12)

v_14 = torch.cat((v_11, v_13), dim=1)

v_15 = self.model_2_cv3_conv(v_14)

v_16 = self.model_2_cv3_act(v_15)

v_17 = self.model_3_conv(v_16)

v_18 = self.model_3_act(v_17)

v_19 = self.model_4_cv1_conv(v_18)

v_20 = self.model_4_cv1_act(v_19)

v_21 = self.model_4_m_0_cv1_conv(v_20)

v_22 = self.model_4_m_0_cv1_act(v_21)

v_23 = self.model_4_m_0_cv2_conv(v_22)

v_24 = self.model_4_m_0_cv2_act(v_23)

v_25 = (v_20 + v_24)

v_26 = self.model_4_m_1_cv1_conv(v_25)

v_27 = self.model_4_m_1_cv1_act(v_26)

v_28 = self.model_4_m_1_cv2_conv(v_27)

v_29 = self.model_4_m_1_cv2_act(v_28)

v_30 = (v_25 + v_29)

v_31 = self.model_4_cv2_conv(v_18)

v_32 = self.model_4_cv2_act(v_31)

v_33 = torch.cat((v_30, v_32), dim=1)

v_34 = self.model_4_cv3_conv(v_33)

v_35 = self.model_4_cv3_act(v_34)

v_36 = self.model_5_conv(v_35)

v_37 = self.model_5_act(v_36)

v_38 = self.model_6_cv1_conv(v_37)

v_39 = self.model_6_cv1_act(v_38)

v_40 = self.model_6_m_0_cv1_conv(v_39)

v_41 = self.model_6_m_0_cv1_act(v_40)

v_42 = self.model_6_m_0_cv2_conv(v_41)

v_43 = self.model_6_m_0_cv2_act(v_42)

v_44 = (v_39 + v_43)

v_45 = self.model_6_m_1_cv1_conv(v_44)

v_46 = self.model_6_m_1_cv1_act(v_45)

v_47 = self.model_6_m_1_cv2_conv(v_46)

v_48 = self.model_6_m_1_cv2_act(v_47)

v_49 = (v_44 + v_48)

v_50 = self.model_6_m_2_cv1_conv(v_49)

v_51 = self.model_6_m_2_cv1_act(v_50)

v_52 = self.model_6_m_2_cv2_conv(v_51)

v_53 = self.model_6_m_2_cv2_act(v_52)

v_54 = (v_49 + v_53)

v_55 = self.model_6_cv2_conv(v_37)

v_56 = self.model_6_cv2_act(v_55)

v_57 = torch.cat((v_54, v_56), dim=1)

v_58 = self.model_6_cv3_conv(v_57)

v_59 = self.model_6_cv3_act(v_58)

v_60 = self.model_7_conv(v_59)

v_61 = self.model_7_act(v_60)

v_62 = self.model_8_cv1_conv(v_61)

v_63 = self.model_8_cv1_act(v_62)

v_64 = self.model_8_m_0_cv1_conv(v_63)

v_65 = self.model_8_m_0_cv1_act(v_64)

v_66 = self.model_8_m_0_cv2_conv(v_65)

v_67 = self.model_8_m_0_cv2_act(v_66)

v_68 = (v_63 + v_67)

v_69 = self.model_8_cv2_conv(v_61)

v_70 = self.model_8_cv2_act(v_69)

v_71 = torch.cat((v_68, v_70), dim=1)

v_72 = self.model_8_cv3_conv(v_71)

v_73 = self.model_8_cv3_act(v_72)

v_74 = self.model_9_cv1_conv(v_73)

v_75 = self.model_9_cv1_act(v_74)

v_76 = self.model_9_m(v_75)

v_77 = self.pnnx_unique_0(v_76)

v_78 = self.pnnx_unique_1(v_77)

v_79 = torch.cat((v_75, v_76, v_77, v_78), dim=1)

v_80 = self.model_9_cv2_conv(v_79)

v_81 = self.model_9_cv2_act(v_80)

v_82 = self.model_10_conv(v_81)

v_83 = self.model_10_act(v_82)

v_84 = self.model_11(v_83)

v_85 = torch.cat((v_84, v_59), dim=1)

v_86 = self.model_13_cv1_conv(v_85)

v_87 = self.model_13_cv1_act(v_86)

v_88 = self.model_13_m_0_cv1_conv(v_87)

v_89 = self.model_13_m_0_cv1_act(v_88)

v_90 = self.model_13_m_0_cv2_conv(v_89)

v_91 = self.model_13_m_0_cv2_act(v_90)

v_92 = self.model_13_cv2_conv(v_85)

v_93 = self.model_13_cv2_act(v_92)

v_94 = torch.cat((v_91, v_93), dim=1)

v_95 = self.model_13_cv3_conv(v_94)

v_96 = self.model_13_cv3_act(v_95)

v_97 = self.model_14_conv(v_96)

v_98 = self.model_14_act(v_97)

v_99 = self.model_15(v_98)

v_100 = torch.cat((v_99, v_35), dim=1)

v_101 = self.model_17_cv1_conv(v_100)

v_102 = self.model_17_cv1_act(v_101)

v_103 = self.model_17_m_0_cv1_conv(v_102)

v_104 = self.model_17_m_0_cv1_act(v_103)

v_105 = self.model_17_m_0_cv2_conv(v_104)

v_106 = self.model_17_m_0_cv2_act(v_105)

v_107 = self.model_17_cv2_conv(v_100)

v_108 = self.model_17_cv2_act(v_107)

v_109 = torch.cat((v_106, v_108), dim=1)

v_110 = self.model_17_cv3_conv(v_109)

v_111 = self.model_17_cv3_act(v_110)

v_112 = self.model_18_conv(v_111)

v_113 = self.model_18_act(v_112)

v_114 = torch.cat((v_113, v_98), dim=1)

v_115 = self.model_20_cv1_conv(v_114)

v_116 = self.model_20_cv1_act(v_115)

v_117 = self.model_20_m_0_cv1_conv(v_116)

v_118 = self.model_20_m_0_cv1_act(v_117)

v_119 = self.model_20_m_0_cv2_conv(v_118)

v_120 = self.model_20_m_0_cv2_act(v_119)

v_121 = self.model_20_cv2_conv(v_114)

v_122 = self.model_20_cv2_act(v_121)

v_123 = torch.cat((v_120, v_122), dim=1)

v_124 = self.model_20_cv3_conv(v_123)

v_125 = self.model_20_cv3_act(v_124)

v_126 = self.model_21_conv(v_125)

v_127 = self.model_21_act(v_126)

v_128 = torch.cat((v_127, v_83), dim=1)

v_129 = self.model_23_cv1_conv(v_128)

v_130 = self.model_23_cv1_act(v_129)

v_131 = self.model_23_m_0_cv1_conv(v_130)

v_132 = self.model_23_m_0_cv1_act(v_131)

v_133 = self.model_23_m_0_cv2_conv(v_132)

v_134 = self.model_23_m_0_cv2_act(v_133)

v_135 = self.model_23_cv2_conv(v_128)

v_136 = self.model_23_cv2_act(v_135)

v_137 = torch.cat((v_134, v_136), dim=1)

v_138 = self.model_23_cv3_conv(v_137)

v_139 = self.model_23_cv3_act(v_138)

v_140 = self.pnnx_53_data

v_141 = self.pnnx_54_data

v_142 = self.pnnx_55_data

v_143 = self.pnnx_56_data

v_144 = self.pnnx_58_data

v_145 = self.pnnx_60_data

v_146 = self.model_24_m_0(v_111)

v_147 = v_146.reshape(1, 3, 85, 80, 80)

v_148 = v_147.permute(dims=(0,1,3,4,2))

v_149 = F.sigmoid(v_148)

v_150, v_151, v_152 = torch.split(tensor=v_149, dim=4, split_size_or_sections=(2,2,81))

v_153 = (((v_150 * 2) + v_145) * 8.0)

v_154 = (torch.pow((v_151 * 2), 2) * v_144)

v_155 = torch.cat((v_153, v_154, v_152), dim=4)

v_156 = v_155.reshape(1, 19200, 85)

v_157 = self.model_24_m_1(v_125)

v_158 = v_157.reshape(1, 3, 85, 40, 40)

v_159 = v_158.permute(dims=(0,1,3,4,2))

v_160 = F.sigmoid(v_159)

v_161, v_162, v_163 = torch.split(tensor=v_160, dim=4, split_size_or_sections=(2,2,81))

v_164 = (((v_161 * 2) + v_143) * 16.0)

v_165 = (torch.pow((v_162 * 2), 2) * v_142)

v_166 = torch.cat((v_164, v_165, v_163), dim=4)

v_167 = v_166.reshape(1, 4800, 85)

v_168 = self.model_24_m_2(v_139)

v_169 = v_168.reshape(1, 3, 85, 20, 20)

v_170 = v_169.permute(dims=(0,1,3,4,2))

v_171 = F.sigmoid(v_170)

v_172, v_173, v_174 = torch.split(tensor=v_171, dim=4, split_size_or_sections=(2,2,81))

v_175 = (((v_172 * 2) + v_141) * 32.0)

v_176 = (torch.pow((v_173 * 2), 2) * v_140)

v_177 = torch.cat((v_175, v_176, v_174), dim=4)

v_178 = v_177.reshape(1, 1200, 85)

v_179 = torch.cat((v_156, v_167, v_178), dim=1)

v_180 = (v_179, )

return v_180

def export_torchscript():

net = Model()

net.float()

net.eval()

torch.manual_seed(0)

v_0 = torch.rand(1, 3, 640, 640, dtype=torch.float)

mod = torch.jit.trace(net, v_0)

mod.save("yolov5n_pnnx.py.pt")

def export_onnx():

net = Model()

net.float()

net.eval()

torch.manual_seed(0)

v_0 = torch.rand(1, 3, 640, 640, dtype=torch.float)

torch.onnx.export(net, v_0, "yolov5n_pnnx.py.onnx", export_params=True, operator_export_type=torch.onnx.OperatorExportTypes.ONNX_ATEN_FALLBACK, opset_version=13, input_names=['in0'], output_names=['out0'])

def export_pnnx():

net = Model()

net.float()

net.eval()

torch.manual_seed(0)

v_0 = torch.rand(1, 3, 640, 640, dtype=torch.float)

import pnnx

pnnx.export(net, "yolov5n_pnnx.py.pt", v_0)

def export_ncnn():

export_pnnx()

@torch.no_grad()

def test_inference(net, v_0=None):

    # If no input is passed in, create random data with a fixed seed

    if v_0 is None:

        torch.manual_seed(0)

        v_0 = torch.rand(1, 3, 640, 640, dtype=torch.float)

    return net(v_0)

# Runs when the script is executed directly

if __name__ == "__main__":

    net = Model()

    net.eval()

    out = test_inference(net) # uses a random input by default

    print(out)

Result: the verification passed


(my_pytorch_env) user@user-EliteMini-Series:~/RK/RK3568/4_rk3568_ncnn/rk3568_work$ python compare.py

pnnx shape: torch.Size([1, 25200, 85])

ncnn shape: torch.Size([1, 25200, 85])

Shapes equal: True

Max absolute diff: 0.0010223388671875

Allclose (within 1e-6): True

2.3、Creating the test project

2.3.1、Directory layout

rk3568_work

├─ ncnn

├─ yolov5n.ncnn.param

├─ yolov5n.ncnn.bin

├─ my_pytorch_env (virtual environment, mainly used for pnnx)

├─ deploy_ubuntu

│ ├─ CMakeLists.txt (created later)

│ ├─ src

│ │ └─ yolov5_ncnn.cpp (created later)

│ ├─ model

│ │ ├─ yolov5n.ncnn.param

│ │ └─ yolov5n.ncnn.bin

│ └─ test.jpg

└─ build_ubuntu

2.3.2、Creating the source and build directories

cd /home/user/RK/RK3568/4_rk3568_ncnn/rk3568_work

# Create the source and build directories

mkdir -p deploy_ubuntu/src

mkdir -p deploy_ubuntu/model

mkdir -p build_ubuntu

cd /home/user/RK/RK3568/4_rk3568_ncnn/rk3568_work/build_ubuntu

cmake ../deploy_ubuntu

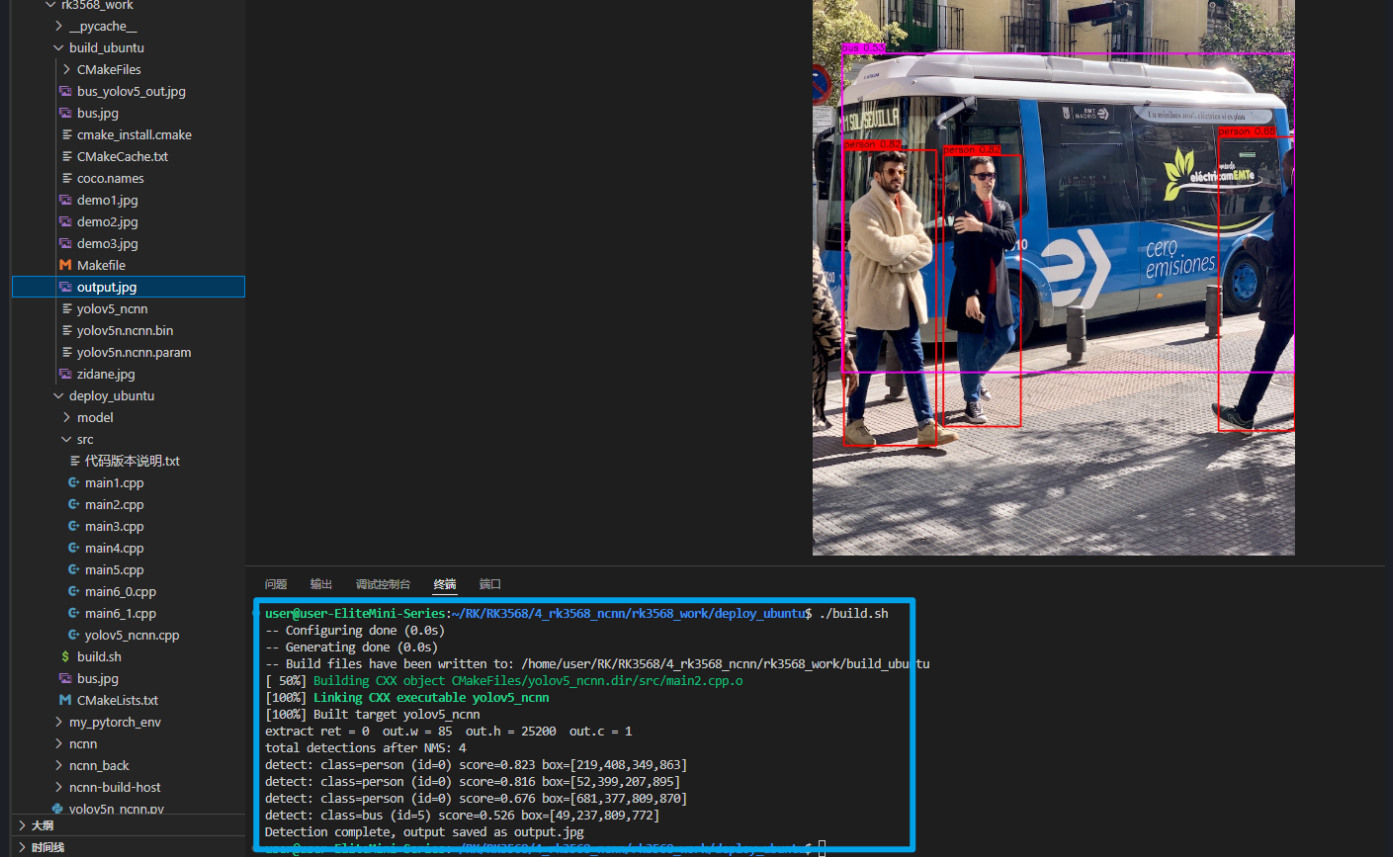

cmake --build . -j$(nproc)

Result: the output below assumes every file is already in place, including the test image.

user@user-EliteMini-Series:~/RK/RK3568/4_rk3568_ncnn/rk3568_work/build_ubuntu$ ./yolov5_ncnn yolov5n.ncnn.param yolov5n.ncnn.bin bus.jpg

out shape (c, h, w): 1, 25200, 85

first 10 values: 8.45431 2.88228 4.83573 6.39983 0.9488 2.09542e-09 4.4782e-09 3.80263e-05 2.06879e-06 4.36303e-06

2.3.3、Writing CMakeLists.txt

cmake_minimum_required(VERSION 3.16)

project(yolov5n_ncnn_ubuntu CXX)

set(CMAKE_CXX_STANDARD 17)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

# ncnn source root directory

set(NCNN_ROOT "/home/user/RK/RK3568/4_rk3568_ncnn/rk3568_work/ncnn")

# ncnn build directory for the x86 host

set(NCNN_BUILD "/home/user/RK/RK3568/4_rk3568_ncnn/rk3568_work/ncnn-build-host")

set(CMAKE_BUILD_TYPE Release)

add_compile_options(-O3 -DNDEBUG)

# yolov5_ncnn.cpp plays the role of main.cpp

add_executable(yolov5_ncnn src/yolov5_ncnn.cpp)

# Both source/src and build/src must be included (generated headers such as platform.h live in the build tree)

target_include_directories(yolov5_ncnn PRIVATE

${NCNN_ROOT}/src

${NCNN_BUILD}/src

)

target_link_libraries(yolov5_ncnn PRIVATE

${NCNN_BUILD}/src/libncnn.a

pthread

)

find_package(OpenMP)

if(OpenMP_CXX_FOUND)

target_link_libraries(yolov5_ncnn PRIVATE OpenMP::OpenMP_CXX)

endif()

find_package(OpenCV REQUIRED)

target_include_directories(yolov5_ncnn PRIVATE ${OpenCV_INCLUDE_DIRS})

target_link_libraries(yolov5_ncnn PRIVATE ${OpenCV_LIBS})

2.3.4、Writing build.sh

#!/bin/bash

cd /home/user/RK/RK3568/4_rk3568_ncnn/rk3568_work/build_ubuntu

cmake ../deploy_ubuntu

cmake --build . -j$(nproc)

# Run detection on bus.jpg (the program requires the image path as its third argument; sudo is not needed)

./yolov5_ncnn yolov5n.ncnn.param yolov5n.ncnn.bin bus.jpg

2.3.5、Writing yolov5_ncnn.cpp

#include <iostream>

#include <vector>

#include <string>

#include <algorithm>

#include <cmath>

#include <cstdio>

#include <opencv2/opencv.hpp>

#include "net.h" // ncnn header

// ------------------------ COCO 80 class names ------------------------

static const std::vector<std::string> coco_class_names = {

"person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train",

"truck", "boat", "traffic light", "fire hydrant", "stop sign",

"parking meter", "bench", "bird", "cat", "dog", "horse", "sheep",

"cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",

"handbag", "tie", "suitcase", "frisbee", "skis", "snowboard",

"sports ball", "kite", "baseball bat", "baseball glove", "skateboard",

"surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork",

"knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange",

"broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair",

"sofa", "pottedplant", "bed", "diningtable", "toilet", "tvmonitor",

"laptop", "mouse", "remote", "keyboard", "cell phone", "microwave",

"oven", "toaster", "sink", "refrigerator", "book", "clock", "vase",

"scissors", "teddy bear", "hair drier", "toothbrush"

};

// ------------------------ Basic structs and functions ------------------------

// Detection box: top-left (x1, y1) and bottom-right (x2, y2), in original-image coordinates

struct Detection {

float x1, y1, x2, y2;

int class_id;

float score; // obj_conf * class_conf

};

// Compute IoU (intersection over union)

float intersection_over_union(const Detection& a, const Detection& b)

{

float x1 = std::max(a.x1, b.x1);

float y1 = std::max(a.y1, b.y1);

float x2 = std::min(a.x2, b.x2);

float y2 = std::min(a.y2, b.y2);

float w = std::max(0.f, x2 - x1);

float h = std::max(0.f, y2 - y1);

float inter = w * h;

float area_a = (a.x2 - a.x1) * (a.y2 - a.y1);

float area_b = (b.x2 - b.x1) * (b.y2 - b.y1);

float uni = area_a + area_b - inter;

if (uni <= 0.f) return 0.f;

return inter / uni;

}

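/**

* Decode the YOLOv5 output and apply NMS

* out: ncnn::Mat with shape (h = num_preds = 25200, w = num_attr = 85, c = 1)

* detections: receives the final detections

* conf_thresh: confidence threshold (0.25, matching Python detect.py)

* nms_thresh: NMS IoU threshold (default 0.45)

* orig_w, orig_h: width and height of the original image

* scale: letterbox scaling ratio r

* pad_x, pad_y: letterbox padding in pixels, horizontal / vertical (dx, dy)

* input_size: network input size (usually 640)

*/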

void decode_and_nms(const ncnn::Mat& out,

std::vector<Detection>& detections,

float conf_thresh,

float nms_thresh,

int orig_w,

int orig_h,

float scale,

int pad_x,

int pad_y,

int input_size = 640)

{

// Based on the printed result: out.w = 85, out.h = 25200, out.c = 1

int num_preds = out.h; // 25200

int num_attr = out.w; // 85

const int num_classes = num_attr - 5; // 80

for (int i = 0; i < num_preds; i++)

{

const float* ptr = out.row(i);

float obj_conf = ptr[4];

// Closer to the Python behavior: filter with conf_thresh instead of a hard-coded 0.01 threshold

if (obj_conf < conf_thresh)

continue;

// Find the class with the highest probability

int class_id = -1;

float max_cls_conf = 0.f;

for (int c = 0; c < num_classes; c++)

{

float cls_conf = ptr[5 + c];

if (cls_conf > max_cls_conf)

{

max_cls_conf = cls_conf;

class_id = c;

}

}

// final score = obj_conf * class_conf

float score = obj_conf * max_cls_conf;

if (score < conf_thresh)

continue;

// In a YOLOv5 model exported to ncnn, the first 4 values are usually cx, cy, w, h (pixel coordinates in the 640x640 input)

float cx = ptr[0];

float cy = ptr[1];

float w = ptr[2];

float h = ptr[3];

// Top-left / bottom-right corners in the 640x640 input

float x1 = cx - w * 0.5f;

float y1 = cy - h * 0.5f;

float x2 = cx + w * 0.5f;

float y2 = cy + h * 0.5f;

// Remove the letterbox padding, then divide by scale to map back to original-image coordinates

x1 = (x1 - pad_x) / scale;

y1 = (y1 - pad_y) / scale;

x2 = (x2 - pad_x) / scale;

y2 = (y2 - pad_y) / scale;

// Clip to the original image bounds

x1 = std::max(0.f, std::min(x1, (float)orig_w - 1));

y1 = std::max(0.f, std::min(y1, (float)orig_h - 1));

x2 = std::max(0.f, std::min(x2, (float)orig_w - 1));

y2 = std::max(0.f, std::min(y2, (float)orig_h - 1));

// Skip degenerate boxes

if (x2 <= x1 || y2 <= y1)

continue;

Detection det;

det.x1 = x1;

det.y1 = y1;

det.x2 = x2;

det.y2 = y2;

det.class_id = class_id;

det.score = score;

detections.push_back(det);

}

// Sort by score in descending order

std::sort(detections.begin(), detections.end(),

[](const Detection& a, const Detection& b) {

return a.score > b.score;

});

// NMS

std::vector<Detection> nms_result;

for (const auto& det : detections)

{

bool keep = true;

for (const auto& kept : nms_result)

{

float iou = intersection_over_union(det, kept);

if (iou > nms_thresh)

{

keep = false;

break;

}

}

if (keep)

nms_result.push_back(det);

}

detections.swap(nms_result);

}

// ------------------------ draw_detections_and_print ------------------------

// Pick a color for each class (the pool has 6 colors, so colors repeat across classes)

cv::Scalar get_class_color(int class_id)

{

// Color pool (BGR), indexed by class_id

std::vector<cv::Scalar> colors = {

cv::Scalar(0, 0, 255), // Red -> person

cv::Scalar(0, 255, 0), // Green -> bicycle

cv::Scalar(255, 0, 0), // Blue -> car

cv::Scalar(0, 255, 255), // Yellow -> motorbike

cv::Scalar(255, 255, 0), // Cyan -> aeroplane

cv::Scalar(255, 0, 255), // Magenta -> bus

// More colors can be added here

};

// Wrap around so the class ID never indexes past the pool

return colors[class_id % colors.size()];

}

// Draw the detections on the image and print them to the console

void draw_detections_and_print(cv::Mat& img,

const std::vector<Detection>& dets)

{

for (const auto& det : dets)

{

int x1 = std::max(0, (int)std::round(det.x1));

int y1 = std::max(0, (int)std::round(det.y1));

int x2 = std::min(img.cols - 1, (int)std::round(det.x2));

int y2 = std::min(img.rows - 1, (int)std::round(det.y2));

// Look up the color for this class

cv::Scalar color = get_class_color(det.class_id);

// Draw the bounding box

cv::rectangle(img,

cv::Point(x1, y1),

cv::Point(x2, y2),

color, 2); // box drawn in the class color

// Class name

std::string cls_name = "cls_" + std::to_string(det.class_id);

if (det.class_id >= 0 &&

det.class_id < (int)coco_class_names.size())

{

cls_name = coco_class_names[det.class_id];

}

// Label text: class name + score

char text[256];

std::snprintf(text, sizeof(text), "%s %.2f",

cls_name.c_str(), det.score);

int baseline = 0;

cv::Size tsize = cv::getTextSize(text,

cv::FONT_HERSHEY_SIMPLEX,

0.5,

1,

&baseline);

int ty = std::max(0, y1 - tsize.height - baseline);

// Text background

cv::rectangle(img,

cv::Point(x1, ty),

cv::Point(x1 + tsize.width,

ty + tsize.height + baseline),

color, -1); // filled with the class color as the text background

// Text

cv::putText(img, text,

cv::Point(x1, ty + tsize.height),

cv::FONT_HERSHEY_SIMPLEX,

0.5,

cv::Scalar(0, 0, 0),

1);

// Console output

std::printf("detect: class=%s (id=%d) score=%.3f box=[%d,%d,%d,%d]\n",

cls_name.c_str(), det.class_id, det.score,

x1, y1, x2, y2);

}

}

// ------------------------ main ------------------------

int main(int argc, char** argv)

{

if (argc < 4)

{

std::cerr << "Usage: " << argv[0]

<< " yolov5n.ncnn.param yolov5n.ncnn.bin image.jpg"

<< std::endl;

return -1;

}

const char* param_path = argv[1];

const char* bin_path = argv[2];

const char* image_path = argv[3];

// 1. Read the original image (BGR)

cv::Mat img_bgr = cv::imread(image_path);

if (img_bgr.empty())

{

std::cerr << "Error: unable to read image: "

<< image_path << std::endl;

return -1;

}

int orig_w = img_bgr.cols;

int orig_h = img_bgr.rows;

// 2. Initialize the ncnn network

ncnn::Net net;

if (net.load_param(param_path) != 0)

{

std::cerr << "Error: failed to load ncnn param." << std::endl;

return -1;

}

if (net.load_model(bin_path) != 0)

{

std::cerr << "Error: failed to load ncnn bin." << std::endl;

return -1;

}

// 3. Preprocessing: letterbox to 640×640 (matching the Python pipeline)

const int input_size = 640;

// Compute the aspect-preserving scale ratio

float r = std::min((float)input_size / (float)orig_w,

(float)input_size / (float)orig_h);

int new_w = (int)std::round(orig_w * r);

int new_h = (int)std::round(orig_h * r);

cv::Mat resized_bgr;

cv::resize(img_bgr, resized_bgr, cv::Size(new_w, new_h));

// Create a 640x640 canvas filled with 114 (same as YOLOv5)

cv::Mat letterbox_bgr(input_size, input_size,

CV_8UC3,

cv::Scalar(114, 114, 114));

int dx = (input_size - new_w) / 2;

int dy = (input_size - new_h) / 2;

resized_bgr.copyTo(letterbox_bgr(cv::Rect(dx, dy, new_w, new_h)));

// ⭐ Key change to match Python: BGR -> RGB

cv::Mat letterbox_rgb;

cv::cvtColor(letterbox_bgr, letterbox_rgb, cv::COLOR_BGR2RGB);

// Convert to ncnn::Mat and normalize by 1/255

ncnn::Mat in = ncnn::Mat::from_pixels(

letterbox_rgb.data,

ncnn::Mat::PIXEL_RGB, // ⭐ changed to RGB

input_size,

input_size);

const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};

in.substract_mean_normalize(nullptr, norm_vals);

// 4. Inference

ncnn::Extractor ex = net.create_extractor();

int ret = ex.input("in0", in); // Note: must match the input blob name in the .param file

if (ret != 0)

{

std::cerr << "ex.input failed, ret = " << ret << std::endl;

return -1;

}

ncnn::Mat out;

ret = ex.extract("out0", out); // Note: must match the output blob name in the .param file

std::cout << "extract ret = " << ret

<< " out.w = " << out.w

<< " out.h = " << out.h

<< " out.c = " << out.c << std::endl;

if (ret != 0 || out.w == 0 || out.h == 0)

{

std::cerr << "Error: extract output failed." << std::endl;

return -1;

}

// 5. Decode + NMS

std::vector<Detection> detections;

float conf_thresh = 0.25f; // same as Python detect.py

float nms_thresh = 0.45f;

decode_and_nms(out, detections,

conf_thresh, nms_thresh,

orig_w, orig_h,

r, dx, dy,

input_size);

std::cout << "total detections after NMS: "

<< detections.size() << std::endl;

// 6. Draw boxes + print (drawn on the original BGR image)

cv::Mat img_show = img_bgr.clone();

draw_detections_and_print(img_show, detections);

// 7. Save the result image

std::string out_path = "output.jpg";

cv::imwrite(out_path, img_show);

std::cout << "Detection complete, output saved as "

<< out_path << std::endl;

return 0;

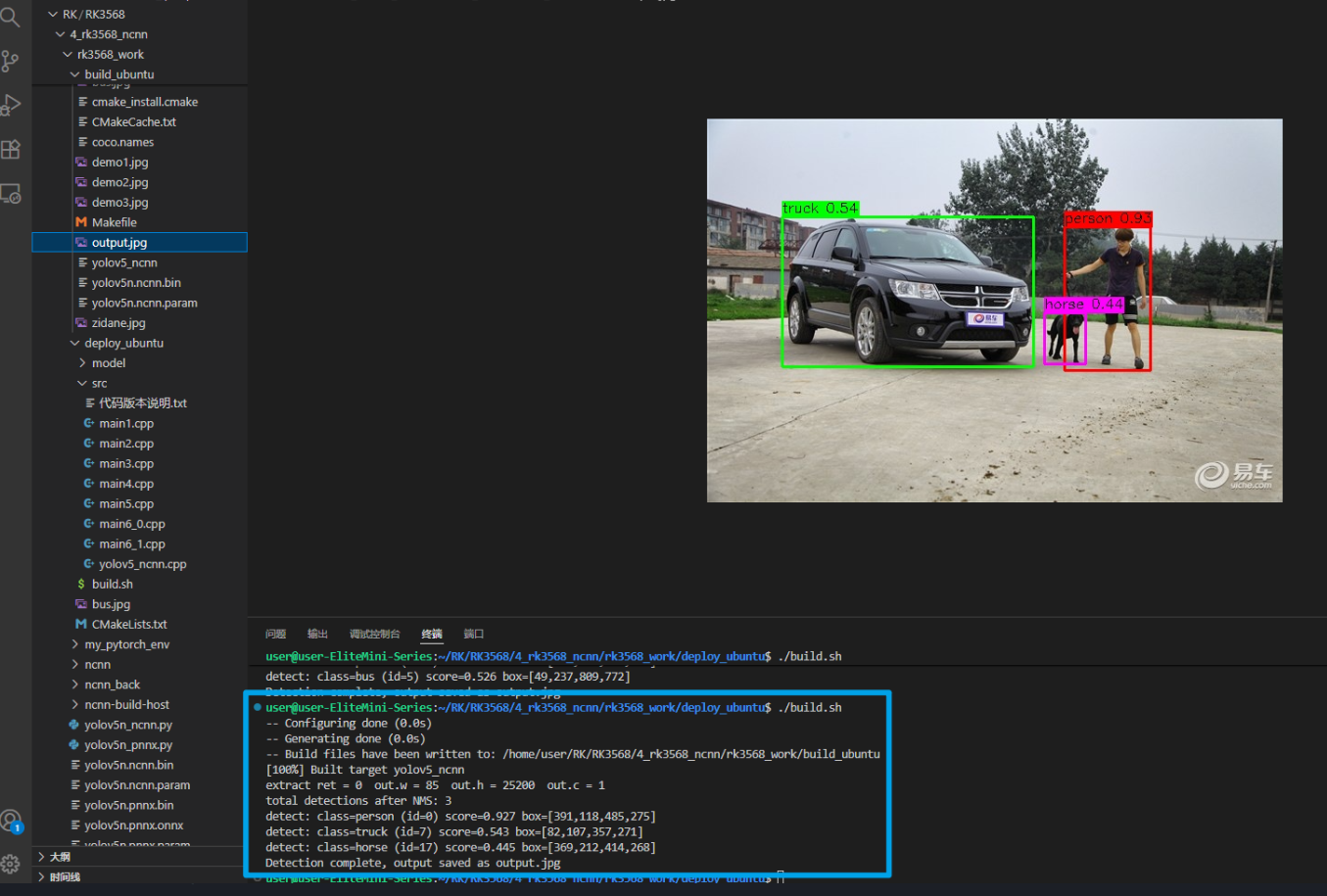

}2.3.6、使用上述步骤,在ubuntu上的效果

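Detection result: objects are detected, which shows that inference works correctly.

A different image is also detected, although not very accurately. The yolov5n.pt used here was downloaded from GitHub and has not been trained further, so some inaccuracy is expected.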
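The letterbox arithmetic in yolov5_ncnn.cpp is the part that most often goes wrong when boxes look shifted, so here is a minimal Python sketch of the same forward and inverse mapping. The function names and the 810×1080 example size are assumptions made for illustration; the formulas mirror `r`, `dx`, `dy` in `main()` and the `(x - pad) / scale` step in `decode_and_nms()`.

```python
import math

def letterbox_params(orig_w, orig_h, input_size=640):
    """Return (r, dx, dy): scale ratio and left/top padding, as computed in main()."""
    r = min(input_size / orig_w, input_size / orig_h)
    # floor(v + 0.5) mimics std::round for positive values
    # (Python's built-in round() rounds halves to even instead).
    new_w = math.floor(orig_w * r + 0.5)
    new_h = math.floor(orig_h * r + 0.5)
    dx = (input_size - new_w) // 2
    dy = (input_size - new_h) // 2
    return r, dx, dy

def to_original(x, y, r, dx, dy):
    """Map a point from the letterboxed network input back to the original image."""
    return (x - dx) / r, (y - dy) / r

# Hypothetical 810x1080 portrait image (size assumed for illustration)
r, dx, dy = letterbox_params(810, 1080)
print(r, dx, dy)
print(to_original(320, 320, r, dx, dy))
```

If the padding were dropped from the inverse mapping, every box on a portrait image would be shifted horizontally by `dx / r` pixels, which is a quick way to recognize this class of bug in the output image.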

2.4、Explaining the output (the YOLOv5 head output, already decoded with sigmoid and stride scaling applied, but before NMS)

user@user-EliteMini-Series:~/RK/RK3568/4_rk3568_ncnn/rk3568_work/build_ubuntu$ ./yolov5_ncnn yolov5n.ncnn.param yolov5n.ncnn.bin bus.jpg

out shape (c, h, w): 1, 25200, 85

first 10 values: 8.45431 2.88228 4.83573 6.39983 0.9488 2.09542e-09 4.4782e-09 3.80263e-05 2.06879e-06 4.36303e-06

2.4.1、Output shape: out shape (c, h, w): 1, 25200, 85

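| Dimension | Value | Meaning |
|---|---|---|
| c | 1 | Channel count of the ncnn::Mat (ncnn::Mat has no batch dimension; the output is a single 2D plane) |
| h | 25200 | Number of predictions: the three output layers (80x80, 40x40 and 20x20 grids), each with 3 anchors per cell, flattened and concatenated |
| w | 85 | Number of attributes per prediction (xywh + obj + 80 classes = 85) |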
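To make the (h, w) layout concrete, here is a small Python sketch that treats the output as one flat row-major buffer, which is roughly what `out.row(i)` gives you in the C++ code. The helper names (`get_row`, `best_class`) and the two sample rows are made up for illustration, and the sketch assumes the rows are stored contiguously.

```python
NUM_ATTR = 85  # 4 box values + 1 objectness + 80 class scores

def get_row(flat, i, num_attr=NUM_ATTR):
    """Return prediction i from a flat row-major buffer of shape (h, num_attr)."""
    start = i * num_attr
    return flat[start:start + num_attr]

def best_class(row):
    """Return (class_id, score) with score = objectness * highest class probability."""
    obj = row[4]
    cls_scores = row[5:]
    class_id = max(range(len(cls_scores)), key=lambda c: cls_scores[c])
    return class_id, obj * cls_scores[class_id]

# Two hypothetical predictions (values invented for this sketch)
row0 = [8.0, 3.0, 5.0, 6.0, 0.9] + [0.0] * 80
row1 = [100.0, 120.0, 40.0, 60.0, 0.8] + [0.0] * 80
row1[5 + 5] = 0.5  # class 5, which is "bus" in coco_class_names

flat = row0 + row1
class_id, score = best_class(get_row(flat, 1))
print(class_id, score)
```

The score computed here is the same `obj_conf * class_conf` product that the `Detection` struct stores.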

2.4.2、Why 25200?

YOLOv5n has three detection output layers:

| Feature map size | Detection layer (stride) | Grid cells |
|---|---|---|
| 80×80 | P3 (stride 8) | 6400 |
| 40×40 | P4 (stride 16) | 1600 |
| 20×20 | P5 (stride 32) | 400 |
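The row count can be reproduced from the grid sizes in the table. The sketch below assumes a 640×640 input, the strides 8/16/32 that appear in the `forward()` code, and 3 anchors per grid cell; the function name is hypothetical.

```python
def num_predictions(input_size=640, strides=(8, 16, 32), anchors_per_cell=3):
    """Count the prediction rows produced by all detection layers together."""
    total = 0
    for stride in strides:
        grid = input_size // stride          # 80, 40, 20 for a 640 input
        total += grid * grid * anchors_per_cell
    return total

print(num_predictions())  # 25200
```

Changing the input size changes the row count, so a C++ decoder should read it from `out.h` (as `decode_and_nms()` does) rather than hard-code 25200.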

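Each layer has 3 anchors per grid cell. Every (cell, anchor) pair becomes its own prediction row after flattening:

80×80×3 = 19200

40×40×3 = 4800

20×20×3 = 1200

Total: 19200 + 4800 + 1200 = 25200

2.4.3、Why does each prediction have 85 values?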

Each YOLOv5 prediction is laid out as follows:

[ x, y, w, h, obj_conf, class_prob_0, class_prob_1, ..., class_prob_79 ]

[ 0, 1, 2, 3, 4, 5, 6, ..., 84 ]

= 4 + 1 + 80 = 85

The first 10 printed values correspond to:

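| Index | Value | Meaning |
|---|---|---|
| 0 | 8.45431 | Box center x (decoded, in pixels of the 640×640 network input) |
| 1 | 2.88228 | Box center y (decoded, in pixels of the 640×640 network input) |
| 2 | 4.83573 | Box width (decoded, in pixels) |
| 3 | 6.39983 | Box height (decoded, in pixels) |
| 4 | 0.9488 | Objectness confidence |
| 5 | 2.09542e-09 | Probability of class 0 |
| 6 | 4.4782e-09 | Probability of class 1 |
| 7 | 3.80263e-05 | Probability of class 2 |
| 8 | 2.06879e-06 | Probability of class 3 |
| 9 | 4.36303e-06 | Probability of class 4 |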
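The box values are already decoded because the exported graph contains the decode step: in the generated `forward()`, the xy part is `((v * 2) + grid) * stride` and the wh part is `pow(v * 2, 2) * anchor`, both applied after the sigmoid. A minimal Python sketch of that formula for a single prediction follows. The function names are hypothetical, and the grid offset of -0.5 and the anchor size (10, 13) are assumptions chosen for illustration rather than values read from the model file.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def decode_box(raw_xywh, grid_xy, anchor_wh, stride):
    """Decode one raw head output into (cx, cy, w, h) in input-image pixels."""
    tx, ty, tw, th = (sigmoid(v) for v in raw_xywh)
    cx = (tx * 2.0 + grid_xy[0]) * stride   # matches ((v * 2) + grid) * stride
    cy = (ty * 2.0 + grid_xy[1]) * stride
    w = (tw * 2.0) ** 2 * anchor_wh[0]      # matches pow(v * 2, 2) * anchor
    h = (th * 2.0) ** 2 * anchor_wh[1]
    return cx, cy, w, h

# Raw zeros give sigmoid = 0.5; grid offset and anchor are assumed values
print(decode_box((0.0, 0.0, 0.0, 0.0), (-0.5, -0.5), (10.0, 13.0), 8.0))
# (4.0, 4.0, 10.0, 13.0)
```

Since the sigmoid output lies in (0, 1), the decoded width and height can range from 0 to 4 times the anchor size, which is why the C++ side only needs to convert center/size to corners and undo the letterbox.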

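2.4.4、What type are these values?

1. All values come from **`ncnn::Mat`**, whose underlying element type is float (32-bit floating point).

2. ncnn::Mat stores data in CHW order. With c=1, h=25200, w=85, channel 0 holds all (h, w) values, i.e. a 2D array of shape [25200][85].

3. The value at index 4 (0.9488) is the objectness score, and the values at indices 5 to 84 are the confidences of the 80 classes (not yet multiplied by objectness).

4. Meaning of each index:

| Index | Meaning |
|---|---|
| 0 | Box center x |
| 1 | Box center y |
| 2 | Box width |
| 3 | Box height |
| 4 | Objectness confidence |
| 5~84 | Probabilities of the 80 classes |

All values are float32.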
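Because these rows are pre-NMS, many of them describe the same object. The following Python sketch shows the greedy NMS idea used in `decode_and_nms()`, on plain tuples. The tuple layout, function names and sample boxes are assumptions for illustration. Note that the C++ loop compares boxes across all classes; the `class_aware` flag is an optional variation, added here because YOLOv5's Python `non_max_suppression` is, as far as I know, class-aware unless run in agnostic mode.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2, ...)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(dets, iou_thresh=0.45, class_aware=False):
    """Greedy NMS over (x1, y1, x2, y2, score, class_id) tuples."""
    kept = []
    for d in sorted(dets, key=lambda t: t[4], reverse=True):
        suppressed = any(
            iou(d, k) > iou_thresh and (not class_aware or d[5] == k[5])
            for k in kept
        )
        if not suppressed:
            kept.append(d)
    return kept

# Hypothetical detections: b overlaps a heavily, d is far away
a = (0, 0, 10, 10, 0.9, 0)
b = (1, 1, 11, 11, 0.8, 0)
d = (50, 50, 60, 60, 0.6, 0)
print(nms([b, a, d]))
```

If two different classes often overlap in your images (for example a person in front of a bus), the class-aware variant keeps both boxes, whereas the class-agnostic loop keeps only the higher-scoring one.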