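I only knew that LeNet is a very old neural network and had never checked how well it actually performs, so I ran this small experiment to get to know it.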

Baseline LeNet

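The original LeNet-5 has a 2+3 structure: two convolution + pooling stages in series form the feature extractor, and three fully connected layers form the classifier. The implementation in this post keeps that layout but is not a literal copy: it uses ReLU and max pooling, its fully connected layers are 400→400→120→10 rather than the original 120→84→10, and it is trained on Fashion-MNIST.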
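To see where the 400 input features of the first fully connected layer come from, the feature-map sizes can be traced by hand. The sketch below uses the kernel, stride, and padding values from the `CMyLeNet` definition in this post; the helper `conv_out` is a hypothetical name introduced only for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


side = 28                                    # Fashion-MNIST images are 28x28
side = conv_out(side, kernel=5, padding=2)   # conv1 keeps 28x28
side = conv_out(side, kernel=2, stride=2)    # max pool halves it to 14x14
side = conv_out(side, kernel=5)              # conv2 without padding gives 10x10
side = conv_out(side, kernel=2, stride=2)    # max pool halves it to 5x5
flat_features = 16 * side * side             # 16 channels after conv2

print(side, flat_features)  # prints: 5 400
```

The result matches `in_features=400` of the first `nn.Linear` layer.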

python

import torch

from torchvision import datasets

from torchvision.transforms import ToTensor

from torchvision.transforms import RandomRotation

from torchvision import transforms

from torch.utils.data import DataLoader

import matplotlib.pyplot as plt

import torch.nn as nn

from torch.optim.lr_scheduler import StepLR

from torch.utils.tensorboard import SummaryWriter

# get the img calss name

def label2str(classes,label):

return classes[label]

# my LeNet:origin LeNet is 2+3,two CNN layers for feature extraction and three FC layers for classification

class CMyLeNet(nn.Module):

def __init__(self, *args, **kwargs):

super().__init__(*args, **kwargs)

# feature layer conv1

self.conv1 = nn.Sequential(

nn.Conv2d(in_channels=1,out_channels=6,kernel_size=(5,5),stride=1,padding=2),

nn.ReLU(),

nn.MaxPool2d(2,2)

)

#layer conv2

self.conv2 = nn.Sequential(

nn.Conv2d(in_channels=6,out_channels=16,kernel_size=(5,5),stride=1,padding=0),

nn.ReLU(),

nn.MaxPool2d(2,2)

)

#

self.classifier = nn.Sequential(

nn.Flatten(),

nn.Linear(in_features=400,out_features=400),

nn.ReLU(),

nn.Linear(in_features=400,out_features=120),

nn.ReLU(),

nn.Linear(in_features=120,out_features=10)

)

def forward(self,x):

x = self.conv1(x)

x = self.conv2(x)

x = self.classifier(x)

return x

# check the data

def checkImgData(self,train_data,classes):

fig = plt.figure(figsize=(8,8))

plt.axis("off")

plt.title("check img and label",y=1.1)

for i in range(1,10):

img,label = train_data[i]

fig.add_subplot(3,3,i)

class_name = label2str(classes,label)

plt.title(class_name,y=-0.13)

plt.axis("off")

plt.imshow(img.squeeze(),"gray")

plt.show()

def main():

# test data set

test_data = datasets.FashionMNIST(

root="D:/deepBlue/LearnBasic/data",

train=False,

transform= ToTensor(),

download= False

)

#test data loader

test_loader = DataLoader(dataset=test_data,batch_size=100,shuffle=True,num_workers=5)

print("test data set size:",len(test_data))

#train data set

train_data = datasets.FashionMNIST(

root="D:/deepBlue/LearnBasic/data",

train=True,

transform=ToTensor(),

download= False

)

#train data loader

train_loader = DataLoader(dataset=train_data,batch_size=100,shuffle=True,num_workers=5)

print("train data set size:",len(train_data))

# data set classes names

classes = train_data.classes

print("classes:{}".format(classes))

#set the device

device = 'cuda' if torch.cuda.is_available() else 'cpu'

print("device:{}".format(device))

#the model

lenet = CMyLeNet()

lenet.checkImgData(train_data=train_data, classes=classes)

lenet.to(device=device)

loss_fuc = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(params= lenet.parameters(),lr= 0.001)

scheduler = StepLR(optimizer=optimizer,step_size=15,gamma=0.5)

writer = SummaryWriter(log_dir="runs1/log")

#train process

for epoch in range(150):

total_correct = 0

total_sample = 0

for batch_num,(imgs,labels) in enumerate(train_loader):

imgs = imgs.to(device)

labels = labels.to(device)

output = lenet(imgs)

loss = loss_fuc(output,labels)

loss.backward()

optimizer.step()

optimizer.zero_grad()

correct = (output.argmax(1)==labels).sum().item()

cur_accu = correct/imgs.shape[0]

# if batch_num%50==0:

# print("{} epc {} batch correct rate:{}".format(epoch,batch_num,cur_accu))

total_correct += correct

total_sample += imgs.shape[0]

scheduler.step()

apoch_accu = total_correct/total_sample

print("{} epoch train accu:{}".format(epoch,apoch_accu))

#evaluate the model performance

# if epoch> 100 and epoch%5 == 0:

if True:

lenet.eval()

total_correct = 0

total_sample =0

for batch_num,(imgs,labels) in enumerate(test_loader):

imgs = imgs.to(device)

labels = labels.to(device)

output = lenet(imgs)

correct = (output.argmax(1)==labels).sum().item()

total_correct += correct

total_sample += imgs.shape[0]

test_accu = total_correct/total_sample

print("test accu:{}".format(test_accu))

writer.add_scalars("accuracy",{"train_accu":apoch_accu,"test_accu":test_accu},epoch)

writer.close()

if __name__ == "__main__":

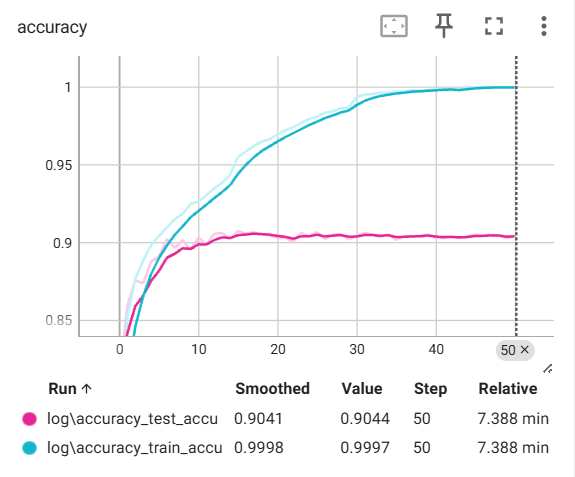

main()训练结果准确率为90%,整体而言中规中矩,以下是训练过程可视化,可见差不多在10个epoch 之后就发生了过拟合现象,即准确率在训练数据上持续上升,但是在测试集上就逐渐稳定下来,甚至降低的现象。
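The overfitting pattern described here can also be read off the logged per-epoch accuracies rather than only from the plot. The sketch below is a hypothetical helper (`summarize_overfitting` is not part of the original script), and the accuracy lists are made-up placeholders for illustration, not the curves measured in this experiment.

```python
def summarize_overfitting(train_accs, test_accs):
    """Return (best_epoch, best_test_acc, final_gap) for per-epoch accuracy lists."""
    if len(train_accs) != len(test_accs) or not train_accs:
        raise ValueError("need two non-empty lists of equal length")
    # epoch at which test accuracy peaks (first one in case of ties)
    best_epoch = max(range(len(test_accs)), key=lambda e: test_accs[e])
    # train/test gap at the end of training; a growing gap signals overfitting
    final_gap = train_accs[-1] - test_accs[-1]
    return best_epoch, test_accs[best_epoch], final_gap


# Placeholder values for illustration only (NOT the measured results).
train_accs = [0.80, 0.88, 0.92, 0.95, 0.97]
test_accs = [0.79, 0.86, 0.89, 0.90, 0.89]

best_epoch, best_acc, gap = summarize_overfitting(train_accs, test_accs)
print(f"test accuracy peaks at epoch {best_epoch} ({best_acc:.2f}); "
      f"final train-test gap {gap:.2f}")
```

In the training script, the two lists could be filled by appending the per-epoch train and test accuracies inside the epoch loop.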

Upgraded version

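Since the original network has only two convolutional layers, the first step is to make it a bit deeper, so I add two more convolutional layers. Second, to speed up convergence, I add batch normalization layers. Finally, overfitting has to be addressed: on the network side I add dropout and L2 regularization, and on the data side I add some augmentation. After looking at the dataset, rotation and horizontal flipping seem reasonable.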

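I add BN to both the convolutional and the fully connected layers. Dropout and L2 regularization are applied only to the fully connected layers of the classifier, because that is where most of the parameters sit and therefore where the network is most prone to overfitting.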
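The claim that the parameters are concentrated in the fully connected layers can be checked with a quick count. This is a minimal sketch using the layer sizes of the upgraded `CMyLeNet` in this post; the helper names `conv_params` and `linear_params` are hypothetical, and the small BatchNorm scale and shift parameters are ignored.

```python
def conv_params(c_in, c_out, k):
    """Weights plus biases of a k x k convolution."""
    return c_in * c_out * k * k + c_out


def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


# four 5x5 convolutions: 1->6, 6->6, 6->16, 16->16
conv_total = (conv_params(1, 6, 5) + conv_params(6, 6, 5)
              + conv_params(6, 16, 5) + conv_params(16, 16, 5))
# three fully connected layers: 400->400, 400->120, 120->10
fc_total = linear_params(400, 400) + linear_params(400, 120) + linear_params(120, 10)
fc_share = fc_total / (conv_total + fc_total)

print(conv_total, fc_total, f"{fc_share:.1%}")
```

With these sizes the convolutions hold 9,894 parameters and the fully connected layers 209,730, so about 95% of the weights sit in the classifier.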

The code is as follows:

```python
import torch
import torch.nn as nn
import matplotlib.pyplot as plt
from torch.optim.lr_scheduler import StepLR
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torchvision import datasets
from torchvision import transforms
from torchvision.transforms import RandomRotation
from torchvision.transforms import ToTensor


# map a numeric label to its class name
def label2str(classes, label):
    return classes[label]


# upgraded LeNet: two conv layers per feature stage, BN everywhere,
# dropout in the classifier
class CMyLeNet(nn.Module):
    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        # feature layer conv1: 1x28x28 -> 6x28x28 -> 6x28x28 -> 6x14x14
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=6, kernel_size=(5, 5), stride=1, padding=2),
            nn.BatchNorm2d(num_features=6),
            nn.ReLU(),
            nn.Conv2d(in_channels=6, out_channels=6, kernel_size=(5, 5), stride=1, padding=2),
            nn.BatchNorm2d(num_features=6),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        # feature layer conv2: 6x14x14 -> 16x10x10 -> 16x10x10 -> 16x5x5
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=6, out_channels=16, kernel_size=(5, 5), stride=1, padding=0),
            nn.BatchNorm2d(num_features=16),
            nn.ReLU(),
            nn.Conv2d(in_channels=16, out_channels=16, kernel_size=(5, 5), stride=1, padding=2),
            nn.BatchNorm2d(num_features=16),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        # FC layers are kept as attributes so the optimizer can give them
        # their own weight decay
        self.fc1 = nn.Linear(in_features=400, out_features=400)
        self.fc2 = nn.Linear(in_features=400, out_features=120)
        self.fc3 = nn.Linear(in_features=120, out_features=10)
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Dropout(0.25),
            self.fc1,
            nn.BatchNorm1d(num_features=400),
            nn.ReLU(),
            nn.Dropout(0.25),
            self.fc2,
            nn.BatchNorm1d(num_features=120),
            nn.ReLU(),
            self.fc3,
        )

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.classifier(x)
        return x

    # show a 3x3 grid of samples with their labels to sanity-check the data
    def checkImgData(self, train_data, classes):
        fig = plt.figure(figsize=(8, 8))
        plt.axis("off")
        plt.title("check img and label", y=1.1)
        for i in range(1, 10):
            img, label = train_data[i]
            fig.add_subplot(3, 3, i)
            class_name = label2str(classes, label)
            plt.title(class_name, y=-0.13)
            plt.axis("off")
            plt.imshow(img.squeeze(), cmap="gray")
        plt.show()


def main():
    # test data set (no augmentation)
    test_data = datasets.FashionMNIST(
        root="D:/deepBlue/LearnBasic/data",
        train=False,
        transform=ToTensor(),
        download=False,
    )
    # test data loader
    test_loader = DataLoader(dataset=test_data, batch_size=100, shuffle=True, num_workers=5)
    print("test data set size:", len(test_data))

    # augmentation for training: random rotation up to 15 degrees and horizontal flip
    trans = transforms.Compose([RandomRotation(15), ToTensor(), transforms.RandomHorizontalFlip()])
    # train data set
    train_data = datasets.FashionMNIST(
        root="D:/deepBlue/LearnBasic/data",
        train=True,
        transform=trans,
        download=False,
    )
    # train data loader
    train_loader = DataLoader(dataset=train_data, batch_size=100, shuffle=True, num_workers=5)
    print("train data set size:", len(train_data))

    # data set class names
    classes = train_data.classes
    print("classes:{}".format(classes))

    # set the device
    device = 'cuda' if torch.cuda.is_available() else 'cpu'
    print("device:{}".format(device))

    # the model
    lenet = CMyLeNet()
    lenet.checkImgData(train_data=train_data, classes=classes)
    lenet.to(device=device)

    loss_fn = nn.CrossEntropyLoss()

    # split the parameters so that weight decay (L2) applies only to the FC layers
    fc_params = []
    fc_params.extend(lenet.fc1.parameters())
    fc_params.extend(lenet.fc2.parameters())
    fc_params.extend(lenet.fc3.parameters())
    print("fc_params size:", len(fc_params))
    # compare parameters by identity rather than by tensor value
    fc_param_ids = {id(p) for p in fc_params}
    other_params = [p for p in lenet.parameters() if id(p) not in fc_param_ids]
    print("other params size:", len(other_params))
    total_params = list(lenet.parameters())
    print("total params size:", len(total_params), " A+B:", len(fc_params) + len(other_params))

    optimizer = torch.optim.Adam(
        params=[
            {"params": fc_params, "weight_decay": 2e-5},
            {"params": other_params, "weight_decay": 0},
        ],
        lr=0.001,
    )
    # halve the learning rate every 10 epochs
    scheduler = StepLR(optimizer=optimizer, step_size=10, gamma=0.5)
    writer = SummaryWriter(log_dir="runs1/log")

    # train process
    for epoch in range(150):
        # re-enable dropout and BN batch statistics; without this call the
        # eval() below would keep the model in eval mode for all later epochs
        lenet.train()
        total_correct = 0
        total_sample = 0
        for batch_num, (imgs, labels) in enumerate(train_loader):
            imgs = imgs.to(device)
            labels = labels.to(device)
            output = lenet(imgs)
            loss = loss_fn(output, labels)
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
            correct = (output.argmax(1) == labels).sum().item()
            cur_accu = correct / imgs.shape[0]
            # if batch_num % 50 == 0:
            #     print("{} epc {} batch correct rate:{}".format(epoch, batch_num, cur_accu))
            total_correct += correct
            total_sample += imgs.shape[0]
        scheduler.step()
        epoch_accu = total_correct / total_sample
        print("{} epoch train accu:{}".format(epoch, epoch_accu))

        # evaluate the model on the test set after every epoch
        lenet.eval()
        total_correct = 0
        total_sample = 0
        with torch.no_grad():  # no gradients needed for evaluation
            for batch_num, (imgs, labels) in enumerate(test_loader):
                imgs = imgs.to(device)
                labels = labels.to(device)
                output = lenet(imgs)
                correct = (output.argmax(1) == labels).sum().item()
                total_correct += correct
                total_sample += imgs.shape[0]
        test_accu = total_correct / total_sample
        print("test accu:{}".format(test_accu))
        writer.add_scalars("accuracy", {"train_accu": epoch_accu, "test_accu": test_accu}, epoch)
    writer.close()


if __name__ == "__main__":
    main()
```

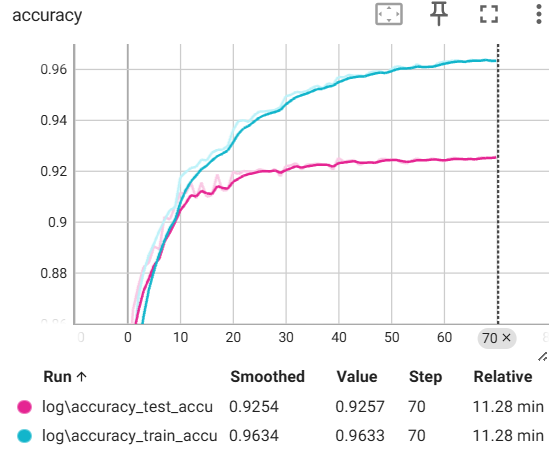

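Accuracy plateaus at around 92.5% and is hard to push any higher. Compared with the baseline, overfitting is somewhat milder here, but it is still present.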

Summary:

On a simple task such as Fashion MNIST, even a five-layer LeNet achieves reasonable results, but the accuracy is not especially high: even 93% is hard to reach.