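Controller core management

Controllers

controller

Purpose

- State monitoring: watches the actual state of cluster resources (Pods, Deployments, Nodes, etc.) in real time through the K8s API Server;

- State comparison: compares the actual state of each resource with the desired state the user declared in YAML/JSON;

- Automatic reconciliation: when the actual state drifts from the desired state, automatically takes corrective action (for example, recreating failed Pods, adjusting the replica count, or scheduling new Pods onto available nodes);

- Cluster automation: automates core cluster functions such as Pod lifecycle, node management, service discovery, and storage attachment, without manual intervention.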

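Categories

K8s controllers fall into two groups: built-in controllers (run together by kube-controller-manager) and custom controllers (built on CRDs). The most common types are:

Built-in controllers (core components, available out of the box)

All built-in controllers are packaged into the kube-controller-manager component of the K8s control plane, so they do not have to be deployed one by one. The core built-in controllers and their roles are:

| Controller | Main role |
|---|---|
| DeploymentController | Manages Deployments; creates and updates ReplicaSets to perform rolling updates and rollbacks of Pods |
| ReplicaSetController | Keeps the specified number of Pod replicas running; recreates Pods that fail |
| StatefulSetController | Manages stateful applications (such as databases), giving each Pod a stable name, network identity, and persistent storage |
| DaemonSetController | Ensures that one Pod replica runs on every (or every selected) node, e.g. log collectors and monitoring agents |
| JobController/CronJobController | Manages one-off tasks (Job) and scheduled tasks (CronJob), making sure tasks run on schedule and complete |
| NodeController (node lifecycle controller) | Monitors node health; marks a failed node as not ready/unreachable and evicts its Pods so that their owning controllers recreate them on other nodes |
| EndpointsController / EndpointSliceController | Associates Services with Pods by maintaining the Endpoints/EndpointSlice objects that list the IPs of matching Pods, enabling service discovery |
| NamespaceController | Manages the Namespace lifecycle; when a Namespace is deleted, removes all resources inside it |
| PersistentVolumeController | Binds PVs (PersistentVolumes) to PVCs (PersistentVolumeClaims) and supports dynamic provisioning of storage |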
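To see how the built-in controllers are switched on, it helps to look at how kube-controller-manager itself is started. The excerpt below assumes a kubeadm-built cluster, where the component runs as a static Pod defined on each control-plane node; other installers may use a systemd unit or different flags. Only the lines relevant to controller selection are shown, so this is a trimmed sketch rather than a complete manifest:

```yaml
# Trimmed excerpt, assuming a kubeadm control-plane node:
# /etc/kubernetes/manifests/kube-controller-manager.yaml
apiVersion: v1
kind: Pod
metadata:
  name: kube-controller-manager
  namespace: kube-system
spec:
  containers:
  - name: kube-controller-manager
    command:
    - kube-controller-manager
    # "*" enables every controller that is on by default;
    # "foo" additionally enables a controller, "-foo" disables one
    - --controllers=*,bootstrapsigner,tokencleaner
    # with several control-plane nodes, only the elected leader runs the control loops
    - --leader-elect=true
```

All controllers in the table therefore live in one process and share one leader-election lock, rather than running as separate deployments.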

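Custom controllers (CRD-based extensions)

When the built-in controllers cannot manage a business-specific resource (for example, the lifecycle of middleware such as MySQL, Redis, or Elasticsearch), you can combine a CRD (CustomResourceDefinition) with a custom controller. Key characteristics:

- Extends the K8s declarative API by defining custom resources (such as MySQLCluster or RedisInstance);

- Uses tools such as Operator SDK or kubebuilder to develop the controller that manages the lifecycle of those resources (deployment, scaling, backup, upgrade);

- Typical examples: Prometheus Operator, MySQL Operator, and Elasticsearch Operator, which automate middleware operations.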
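As an illustration of the CRD half of this pattern, the sketch below registers a MySQLCluster kind like the one named above. The group `example.com`, the version `v1alpha1`, and the `replicas`/`version` fields are hypothetical choices for this example, not taken from any real operator. Applying it only teaches the API server the new type; nothing reconciles MySQLCluster objects until a custom controller (for example one generated with kubebuilder) is running:

```yaml
# Hypothetical CRD: the name must be <plural>.<group>
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: mysqlclusters.example.com
spec:
  group: example.com
  scope: Namespaced
  names:
    plural: mysqlclusters
    singular: mysqlcluster
    kind: MySQLCluster
    shortNames: ["mc"]
  versions:
  - name: v1alpha1
    served: true      # exposed through the API
    storage: true     # the version persisted in etcd
    schema:
      openAPIV3Schema:
        type: object
        properties:
          spec:
            type: object
            properties:
              replicas:
                type: integer
                minimum: 1
              version:
                type: string
---
# A custom resource instance; apply it only after the CRD above is established
apiVersion: example.com/v1alpha1
kind: MySQLCluster
metadata:
  name: demo
spec:
  replicas: 3
  version: "5.7"
```

The custom controller then follows the same loop as the built-in ones: it watches MySQLCluster objects, compares `spec` with what actually exists, and creates or updates StatefulSets, Services, and so on to close the gap.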

Deployment

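Purpose

- Pod replica management: keeps the specified number of Pod replicas running (self-healing). Failed Pods are recreated automatically, and when a node goes down its Pods are recreated on other nodes.

- Rolling updates: updates the Pod image or configuration gradually, creating new Pods while removing old ones, so the service stays available throughout the update.

- Rollback: if an update causes problems, you can quickly roll back to a previous Deployment revision (based on the ReplicaSet revision history).

- Elastic scaling: adjusts the replica count manually or automatically through the HPA (Horizontal Pod Autoscaler) to follow traffic changes.

- Pause and resume: a rollout can be paused while you verify the new configuration and resumed afterwards, reducing update risk.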
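The rolling-update, rollback, and pause behaviour listed above is controlled by a few fields in the Deployment spec. The manifest below is a sketch based on the `deployment-nginx` example used later in this document; the specific values (`maxSurge: 1`, `maxUnavailable: 0`, `minReadySeconds: 5`, `replicas: 4`) are illustrative choices, not recommendations. The defaults (25% / 25%) appear in the `kubectl get deployment -o yaml` output further down:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-nginx
spec:
  replicas: 4
  revisionHistoryLimit: 10     # how many old ReplicaSets are kept for rollback
  minReadySeconds: 5           # a new Pod must stay Ready this long before it counts as available
  strategy:
    type: RollingUpdate        # the alternative is Recreate (delete all old Pods first)
    rollingUpdate:
      maxSurge: 1              # at most 1 Pod above replicas during the update
      maxUnavailable: 0        # never fall below replicas available Pods
  # paused: true               # same effect as kubectl rollout pause
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        ports:
        - containerPort: 80
```

With `maxUnavailable: 0` the controller must bring up a new Pod before it removes an old one. This trades a slower rollout for never dropping below full capacity.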

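When to use a Deployment

Deployments are designed for stateless applications and suit:

- Web services (such as Nginx or Tomcat) and API services (such as Spring Boot microservices);

- Front-end static-content services and stateless tiers of middleware (for example, proxy or gateway layers); components that keep persistent data, such as Kafka brokers or Elasticsearch data nodes, are normally run as StatefulSets instead;

- Stateless workloads that are updated frequently and need elastic scaling.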
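For the elastic-scaling case, the manual `kubectl scale` used in the sessions below can be replaced by a HorizontalPodAutoscaler. This is a sketch under assumptions: it needs metrics-server (not installed anywhere in this document), and the target Deployment's container must declare a CPU request. The `deployment-nginx` example below sets none, so `resources.requests.cpu` would have to be added first. The replica bounds and the 70% target are arbitrary illustrative values:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: deployment-nginx
spec:
  scaleTargetRef:              # the object whose replicas the HPA adjusts
    apiVersion: apps/v1
    kind: Deployment
    name: deployment-nginx
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70 # percent of the container's CPU request
```

Once an HPA owns the replica count, a manual `kubectl scale` is overwritten at the HPA's next evaluation. In that case, tune `minReplicas`/`maxReplicas` instead.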

Creating a Deployment application

```bash
[root@master ~]# mkdir controller_dir
[root@master ~]# cd controller_dir/
[root@master controller_dir]# cat deployment-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
[root@master controller_dir]# kubectl apply -f deployment-nginx.yaml
deployment.apps/deployment-nginx created
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b6d5cd699-m55s7 1/1 Running 0 8s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d20h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
[root@master controller_dir]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
deployment-nginx-5b6d5cd699-m55s7 1/1 Running 0 2m6s 10.244.104.11 node2 <none> <none>
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d 10.244.104.10 node2 <none> <none>
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d20h 10.244.166.132 node1 <none> <none>
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d 10.244.104.13 node2 <none> <none>
[root@master controller_dir]# ping -c 4 10.244.104.11
PING 10.244.104.11 (10.244.104.11) 56(84) bytes of data.
64 bytes from 10.244.104.11: icmp_seq=1 ttl=63 time=0.479 ms
64 bytes from 10.244.104.11: icmp_seq=2 ttl=63 time=0.324 ms
64 bytes from 10.244.104.11: icmp_seq=3 ttl=63 time=0.330 ms
64 bytes from 10.244.104.11: icmp_seq=4 ttl=63 time=0.344 ms
--- 10.244.104.11 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3001ms
rtt min/avg/max/mdev = 0.324/0.369/0.479/0.065 ms
[root@master controller_dir]# curl http://10.244.104.11
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
#Only deleting the Deployment removes its Pods; a Pod deleted on its own is recreated by the ReplicaSet
```

Upgrading the Pod version

```bash
#Check the nginx version before the upgrade
[root@master controller_dir]# kubectl describe pods deployment-nginx-5b6d5cd699-m55s7 | grep Image
Image: nginx:1.26-alpine
Image ID: docker-pullable://nginx@sha256:1eadbb07820339e8bbfed18c771691970baee292ec4ab2558f1453d26153e22d
#Upgrade
[root@master controller_dir]# kubectl set image deployment deployment-nginx c1=nginx:1.29-alpine --record
Flag --record has been deprecated, --record will be removed in the future
deployment.apps/deployment-nginx image updated
#Watch the process
#The new Pod is created first, then the old one is deleted
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b4d7776b6-wnfl8 0/1 ContainerCreating 0 29s
deployment-nginx-5b6d5cd699-m55s7 1/1 Running 0 12m
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d20h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b4d7776b6-wnfl8 1/1 Running 0 57s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d20h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
#Two ways to check the result of the upgrade
#Method 1
[root@master controller_dir]# kubectl describe pod deployment-nginx-5b4d7776b6-wnfl8 | grep Image:
Image: nginx:1.29-alpine
#Method 2
[root@master controller_dir]# kubectl exec deployment-nginx-5b4d7776b6-wnfl8 -- nginx -v
nginx version: nginx/1.29.3
#Check whether the rollout finished
#Useful when many Pods are being updated and you cannot tell whether the rollout is done
[root@master controller_dir]# kubectl rollout status deployment deployment-nginx
deployment "deployment-nginx" successfully rolled out
#Extra
#Find the container name
#and check the events
[root@master controller_dir]# kubectl describe pod deployment-nginx-5b4d7776b6-wnfl8
Name: deployment-nginx-5b4d7776b6-wnfl8
Namespace: default
Priority: 0
Service Account: default
Node: node1/192.168.100.70
Start Time: Mon, 24 Nov 2025 10:46:07 +0800
Labels: app=nginx
pod-template-hash=5b4d7776b6
Annotations: cni.projectcalico.org/containerID: 56d55d5a469071e6fc14ac6d8f1a023feb110bfa57c381dd9411ecd9839b845f
cni.projectcalico.org/podIP: 10.244.166.135/32
cni.projectcalico.org/podIPs: 10.244.166.135/32
Status: Running
IP: 10.244.166.135
IPs:
IP: 10.244.166.135
Controlled By: ReplicaSet/deployment-nginx-5b4d7776b6
Containers:
c1:
Container ID: docker://0a14c4c9d00be83b15875d1422fff62ac93feb5b423b30173d4a87d0a81c4f95
Image: nginx:1.29-alpine
Image ID: docker-pullable://nginx@sha256:b3c656d55d7ad751196f21b7fd2e8d4da9cb430e32f646adcf92441b72f82b14
Port: 80/TCP
Host Port: 0/TCP
State: Running
Started: Mon, 24 Nov 2025 10:46:55 +0800
Ready: True
Restart Count: 0
Environment: <none>
Mounts:
/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-nlfdk (ro)
Conditions:
Type Status
Initialized True
Ready True
ContainersReady True
PodScheduled True
Volumes:
kube-api-access-nlfdk:
Type: Projected (a volume that contains injected data from multiple sources)
TokenExpirationSeconds: 3607
ConfigMapName: kube-root-ca.crt
ConfigMapOptional: <nil>
DownwardAPI: true
QoS Class: BestEffort
Node-Selectors: <none>
Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Normal Scheduled 4m15s default-scheduler Successfully assigned default/deployment-nginx-5b4d7776b6-wnfl8 to node1
Normal Pulling 4m14s kubelet Pulling image "nginx:1.29-alpine"
Normal Pulled 3m28s kubelet Successfully pulled image "nginx:1.29-alpine" in 46.186s (46.186s including waiting)
Normal Created 3m27s kubelet Created container c1
Normal Started 3m27s kubelet Started container c1
#The container name is also visible here
#(opens the live object in an editor; exit without saving)
[root@master controller_dir]# kubectl edit deployment deployment-nginx
Edit cancelled, no changes made.
#Or here
[root@master controller_dir]# kubectl get deployment deployment-nginx -o yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"name":"deployment-nginx","namespace":"default"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"nginx"}},"template":{"metadata":{"labels":{"app":"nginx"}},"spec":{"containers":[{"image":"nginx:1.26-alpine","imagePullPolicy":"IfNotPresent","name":"c1","ports":[{"containerPort":80}]}]}}}}
    kubernetes.io/change-cause: kubectl set image deployment deployment-nginx c1=nginx:1.29-alpine
      --record=true
  creationTimestamp: "2025-11-24T02:34:07Z"
  generation: 2
  name: deployment-nginx
  namespace: default
  resourceVersion: "259751"
  uid: c1ac08d6-1148-4754-bd12-1f7783a0a030
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:1.29-alpine
        imagePullPolicy: IfNotPresent
        name: c1
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: "2025-11-24T02:34:09Z"
    lastUpdateTime: "2025-11-24T02:34:09Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2025-11-24T02:34:07Z"
    lastUpdateTime: "2025-11-24T02:46:55Z"
    message: ReplicaSet "deployment-nginx-5b4d7776b6" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 2
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1
```

Pod version rollback
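The CHANGE-CAUSE column printed by `kubectl rollout history` comes from the `kubernetes.io/change-cause` annotation. Because `--record` is deprecated (note the warning in the sessions), the Kubernetes documentation suggests setting that annotation yourself instead. You can do this right after an update with `kubectl annotate deployment deployment-nginx kubernetes.io/change-cause="..."`, or you can set it in the manifest. The metadata-only sketch below shows the manifest form; the message text is an arbitrary example, and the `spec` section is assumed to stay as in `deployment-nginx.yaml`:

```yaml
# Metadata excerpt of deployment-nginx.yaml; spec stays as shown earlier
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-nginx
  annotations:
    # free-form text, copied to the new ReplicaSet and shown as CHANGE-CAUSE
    # when applied together with a Pod template change (e.g. a new image)
    kubernetes.io/change-cause: "upgrade c1 to nginx:1.29-alpine"
```

Changing only the annotation does not create a new revision; revisions are created by changes to `spec.template`.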

```bash
#View the revision history
[root@master controller_dir]# kubectl rollout history deployment deployment-nginx
deployment.apps/deployment-nginx
REVISION CHANGE-CAUSE
1 <none>
2 kubectl set image deployment deployment-nginx c1=nginx:1.29-alpine --record=true
#Roll back
#First upgrade to 1.28
[root@master controller_dir]# kubectl set image deployment deployment-nginx c1=nginx:1.28-alpine --record
Flag --record has been deprecated, --record will be removed in the future
deployment.apps/deployment-nginx image updated
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-65d975f4fc-9q44s 1/1 Running 0 21s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
#History after the upgrade
[root@master controller_dir]# kubectl rollout history deployment deployment-nginx
deployment.apps/deployment-nginx
REVISION CHANGE-CAUSE
1 <none>
2 kubectl set image deployment deployment-nginx c1=nginx:1.29-alpine --record=true
3 kubectl set image deployment deployment-nginx c1=nginx:1.28-alpine --record=true
#Start the rollback: inspect revision 2 first
[root@master controller_dir]# kubectl rollout history deployment deployment-nginx --revision=2
deployment.apps/deployment-nginx with revision #2
Pod Template:
Labels: app=nginx
pod-template-hash=5b4d7776b6
Annotations: kubernetes.io/change-cause: kubectl set image deployment deployment-nginx c1=nginx:1.29-alpine --record=true
Containers:
c1:
Image: nginx:1.29-alpine
Port: 80/TCP
Host Port: 0/TCP
Environment: <none>
Mounts: <none>
Volumes: <none>
#Roll back to revision 2, i.e. nginx 1.29
[root@master controller_dir]# kubectl rollout undo deployment deployment-nginx --to-revision=2
deployment.apps/deployment-nginx rolled back
[root@master controller_dir]# kubectl describe pod deployment-nginx-5b4d7776b6-4whjz | grep Image:
Image: nginx:1.29-alpine
#View the history again: the rolled-back template (formerly revision 2) is now revision 4
[root@master controller_dir]# kubectl rollout history deployment deployment-nginx
deployment.apps/deployment-nginx
REVISION CHANGE-CAUSE
1 <none>
3 kubectl set image deployment deployment-nginx c1=nginx:1.28-alpine --record=true
4 kubectl set image deployment deployment-nginx c1=nginx:1.29-alpine --record=true
```

Scaling replicas

```bash
[root@master controller_dir]# kubectl scale deployment deployment-nginx --replicas=4
deployment.apps/deployment-nginx scaled
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b4d7776b6-4whjz 1/1 Running 0 17m
deployment-nginx-5b4d7776b6-dm2l9 1/1 Running 0 5s
deployment-nginx-5b4d7776b6-jrrz2 0/1 ContainerCreating 0 5s
deployment-nginx-5b4d7776b6-jzt4v 0/1 ContainerCreating 0 5s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b4d7776b6-4whjz 1/1 Running 0 19m
deployment-nginx-5b4d7776b6-dm2l9 1/1 Running 0 101s
deployment-nginx-5b4d7776b6-jrrz2 1/1 Running 0 101s
deployment-nginx-5b4d7776b6-jzt4v 1/1 Running 0 101s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
[root@master controller_dir]# kubectl scale deployment deployment-nginx --replicas=1
deployment.apps/deployment-nginx scaled
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b4d7776b6-4whjz 1/1 Running 0 20m
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
#Scale to 0
#Only the Pods are removed
[root@master controller_dir]# kubectl scale deployment deployment-nginx --replicas=0
deployment.apps/deployment-nginx scaled
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
#The ReplicaSets and the Deployment still exist
[root@master controller_dir]# kubectl get rs
NAME DESIRED CURRENT READY AGE
deployment-nginx-5b4d7776b6 0 0 0 39m
deployment-nginx-5b6d5cd699 0 0 0 51m
deployment-nginx-65d975f4fc 0 0 0 26m
nginx-7854ff8877 3 3 3 10d
[root@master controller_dir]# kubectl get deployment
NAME READY UP-TO-DATE AVAILABLE AGE
deployment-nginx 0/0 0 0 51m
nginx 3/3 3 3 10d
#Scaling up again still works
[root@master controller_dir]# kubectl scale deployment deployment-nginx --replicas=3
deployment.apps/deployment-nginx scaled
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b4d7776b6-8djfc 1/1 Running 0 3s
deployment-nginx-5b4d7776b6-pct8p 1/1 Running 0 3s
deployment-nginx-5b4d7776b6-twspv 1/1 Running 0 3s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
```

Rolling update with multiple replicas

```bash
#Confirm the version before the update
[root@master controller_dir]# kubectl describe pod deployment-nginx-5b4d7776b6-8djfc | grep Image:
Image: nginx:1.29-alpine
#Update all replicas to 1.26
[root@master controller_dir]# kubectl set image deployment deployment-nginx c1=nginx:1.26-alpine --record
Flag --record has been deprecated, --record will be removed in the future
deployment.apps/deployment-nginx image updated
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-5b6d5cd699-8k7qv 1/1 Running 0 38s
deployment-nginx-5b6d5cd699-bwz9t 1/1 Running 0 14s
deployment-nginx-5b6d5cd699-xrnt5 1/1 Running 0 15s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d17h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d17h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d17h ago) 10d
[root@master controller_dir]# kubectl describe pod deployment-nginx-5b6d5cd699-8k7qv | grep Image:
Image: nginx:1.26-alpine
[root@master controller_dir]# kubectl rollout status deployment deployment-nginx
deployment "deployment-nginx" successfully rolled out
#Roll back the update
#Revision 1 is gone: its template (nginx 1.26) was reused and became revision 5
[root@master controller_dir]# kubectl rollout history deployment deployment-nginx
deployment.apps/deployment-nginx
REVISION CHANGE-CAUSE
3 kubectl set image deployment deployment-nginx c1=nginx:1.28-alpine --record=true
4 kubectl set image deployment deployment-nginx c1=nginx:1.29-alpine --record=true
5 kubectl set image deployment deployment-nginx c1=nginx:1.26-alpine --record=true
#Roll back to revision 3 (nginx 1.28)
[root@master controller_dir]# kubectl rollout undo deployment deployment-nginx --to-revision=3
deployment.apps/deployment-nginx rolled back
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-nginx-65d975f4fc-c2p95 1/1 Running 0 9s
deployment-nginx-65d975f4fc-czjzz 1/1 Running 0 8s
deployment-nginx-65d975f4fc-mkp7c 1/1 Running 0 71s
nginx-7854ff8877-4lss5 1/1 Running 11 (2d18h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d18h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d18h ago) 10d
#Check the version after the rollback
[root@master controller_dir]# kubectl describe pod deployment-nginx-65d975f4fc-c2p95 | grep Image:
Image: nginx:1.28-alpine
#Deleting the Deployment also deletes its Pods
[root@master controller_dir]# kubectl delete deployment deployment-nginx
deployment.apps "deployment-nginx" deleted
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
nginx-7854ff8877-4lss5 1/1 Running 11 (2d18h ago) 10d
nginx-7854ff8877-8qcvp 1/1 Running 6 (2d18h ago) 5d21h
nginx-7854ff8877-fzbfm 1/1 Running 11 (2d18h ago) 10d
```

ReplicaSet

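Purpose

- Replica count guarantee: strictly maintains the user-defined number of Pod replicas (replicas); it creates Pods when there are too few and deletes Pods when there are too many.

- Pod self-healing: when a Pod terminates because of a container crash, a node failure, or similar, the RS automatically creates a replacement Pod on a healthy node.

- Label-based management: matches Pods through a label selector and manages only the Pods whose labels satisfy it, which allows Pods to be controlled as groups.

- Foundation for Deployments: Deployment rolling updates and rollbacks are implemented by creating new ReplicaSets and scaling old ones up or down (old ReplicaSets are kept at 0 replicas for rollback); each RS manages the replicas of a single Pod version only.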
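To make the label-selector point concrete: in addition to `matchLabels`, a selector can use set-based `matchExpressions`, and all rules are ANDed. The sketch below uses a hypothetical name `rs-nginx-expr` and a hypothetical `tier` label. The template labels must satisfy the whole selector, or the API server rejects the object. An RS also adopts existing Pods that have no owner and match its selector, so keep selectors specific:

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: rs-nginx-expr          # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
    matchExpressions:          # ANDed with matchLabels
    - key: tier                # hypothetical label
      operator: In
      values: ["frontend", "web"]
  template:
    metadata:
      labels:
        app: nginx
        tier: frontend         # satisfies both selector rules
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        ports:
        - containerPort: 80
```

Other valid operators are `NotIn`, `Exists`, and `DoesNotExist`. The last two take no `values` list.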

Example:

```bash
[root@master controller_dir]# cat rs-nginx.yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: rs-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
[root@master controller_dir]# kubectl apply -f rs-nginx.yaml
replicaset.apps/rs-nginx created
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
nginx-7854ff8877-8qcvp 0/1 Terminating 6 5d23h
rs-nginx-25szd 1/1 Running 0 4s
rs-nginx-p58ml 1/1 Running 0 4s
[root@master controller_dir]# kubectl describe rs rs-nginx
Name: rs-nginx
Namespace: default
Selector: app=nginx
Labels: <none>
Annotations: <none>
Replicas: 2 current / 2 desired
Pods Status: 2 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
Labels: app=nginx
Containers:
c1:
Image: nginx:1.26-alpine
Port: 80/TCP
Host Port: 0/TCP
Environment: <none>
Mounts: <none>
Volumes: <none>
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Normal SuccessfulCreate 110s replicaset-controller Created pod: rs-nginx-p58ml
Normal SuccessfulCreate 110s replicaset-controller Created pod: rs-nginx-25szd
[root@master controller_dir]# kubectl describe pod rs-nginx-25szd
Name: rs-nginx-25szd
Namespace: default
Priority: 0
Service Account: default
Node: node1/192.168.100.70
Start Time: Mon, 24 Nov 2025 13:36:25 +0800
Labels: app=nginx
Annotations: cni.projectcalico.org/containerID: 09e4316b114139720c4e1c3cb287ff36257f2e3299b423342b2b72f1a313b3c6
cni.projectcalico.org/podIP: 10.244.166.152/32
cni.projectcalico.org/podIPs: 10.244.166.152/32
Status: Running
IP: 10.244.166.152
IPs:
IP: 10.244.166.152
Controlled By: ReplicaSet/rs-nginx
Containers:
c1:
Container ID: docker://c0f055157eae512c769b5c6209a7caf2573473c56e52242f40efa54753346f57
Image: nginx:1.26-alpine
Image ID: docker-pullable://nginx@sha256:1eadbb07820339e8bbfed18c771691970baee292ec4ab2558f1453d26153e22d
Port: 80/TCP
Host Port: 0/TCP
State: Running
Started: Mon, 24 Nov 2025 13:36:26 +0800
Ready: True
Restart Count: 0
Environment: <none>
Mounts:
/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-wkh75 (ro)
Conditions:
Type Status
Initialized True
Ready True
ContainersReady True
PodScheduled True
Volumes:
kube-api-access-wkh75:
Type: Projected (a volume that contains injected data from multiple sources)
TokenExpirationSeconds: 3607
ConfigMapName: kube-root-ca.crt
ConfigMapOptional: <nil>
DownwardAPI: true
QoS Class: BestEffort
Node-Selectors: <none>
Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Normal Scheduled 2m51s default-scheduler Successfully assigned default/rs-nginx-25szd to node1
Normal Pulled 2m49s kubelet Container image "nginx:1.26-alpine" already present on machine
Normal Created 2m49s kubelet Created container c1
Normal Started 2m49s kubelet Started container c1
#Scale up
[root@master controller_dir]# kubectl scale rs rs-nginx --replicas=4
replicaset.apps/rs-nginx scaled
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
nginx-7854ff8877-8qcvp 0/1 Terminating 6 5d23h
rs-nginx-25szd 1/1 Running 0 4m11s
rs-nginx-7pdsq 1/1 Running 0 5s
rs-nginx-mx8b7 1/1 Running 0 5s
rs-nginx-p58ml 1/1 Running 0 4m11s
[root@master controller_dir]#
#Scale down
[root@master controller_dir]# kubectl scale rs rs-nginx --replicas=0
replicaset.apps/rs-nginx scaled
[root@master controller_dir]# kubectl get pods
No resources found in default namespace.
#Upgrade
#(the RS was scaled back to 2 replicas before this step; that command is not shown)
#Result: the running Pods are not upgraded
[root@master controller_dir]# kubectl exec rs-nginx-59drn -- nginx -v
nginx version: nginx/1.26.3
[root@master controller_dir]# kubectl set image rs rs-nginx c1=nginx:1.29-alpine --record
Flag --record has been deprecated, --record will be removed in the future
replicaset.apps/rs-nginx image updated
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
rs-nginx-59drn 1/1 Running 0 85s
rs-nginx-fz8dl 1/1 Running 0 85s
[root@master controller_dir]# kubectl exec rs-nginx-59drn -- nginx -v
nginx version: nginx/1.26.3
#set image only changed the RS Pod template; existing Pods keep nginx 1.26, and only Pods created later use nginx:1.29-alpine
#Summary
#What a ReplicaSet does: as a core K8s controller, an RS has a single goal, keeping the specified number of Pod replicas running. It manages Pods through a label selector (Pods whose labels do not match are not managed).
#Basic workflow: define the RS in YAML → create it with kubectl apply → inspect it with kubectl get/describe → scale it manually with kubectl scale.
#Limitations of an RS: no automatic rolling update or rollback. After changing the template you must delete the old Pods by hand to roll out a new image. In production a Deployment (which wraps ReplicaSets) is normally used instead: it inherits the RS replica management and adds rolling updates, rollback, and pause/resume.
```

Advanced controllers

DaemonSet

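Introduction

A DaemonSet is the Kubernetes resource for node-level Pod deployment, managed by the DaemonSetController. Its goal is to make sure that exactly one copy of its Pod runs on every node (or on every selected node). A ReplicaSet or Deployment deploys a fixed number of replicas; a DaemonSet instead deploys per node. When a node joins the cluster, a Pod is created on it automatically, and when a node is removed, its Pod is deleted. This makes the DaemonSet the preferred way to deploy node-level infrastructure components.

Purpose

A DaemonSet is designed for background services that must run on every node. Its main value is uniform deployment and lifecycle management of node-level services. Typical uses:

- Network plugins: agent Pods of Calico, Flannel, or Cilium, which must run on every node to provide pod networking;

- Monitoring: for example Prometheus Node Exporter, which must run on every node to collect hardware and system metrics;

- Log collection: for example Filebeat or Fluentd, which must run on every node to collect container and host logs;

- Node security: agent Pods for vulnerability scanning or antivirus software, deployed on every node to protect it.

A DaemonSet has no replicas field; the number of Pods is determined by how many nodes match its rules.

A DaemonSet supports two update strategies: RollingUpdate (the default) and OnDelete, where a Pod is replaced only after you delete it manually.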
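The two strategies map to `spec.updateStrategy.type`. The fragment below is not a complete manifest; it shows only the part you would add to a DaemonSet spec such as `ds-nginx`. `maxUnavailable: 1` is written out for clarity but is also the default:

```yaml
# Fragment only: the updateStrategy part of a DaemonSet spec
spec:
  updateStrategy:
    type: RollingUpdate        # default; set to OnDelete to replace Pods only after manual deletion
    rollingUpdate:
      maxUnavailable: 1        # update the Pod on at most one node at a time
```

With `OnDelete`, `kubectl set image` changes only the template, which is similar to the bare-ReplicaSet behaviour shown earlier. Each node then keeps its old Pod until you delete that Pod.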

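How it works

The DaemonSetController follows the K8s control-loop pattern:

- Watch nodes: listens in real time for nodes being added, removed, or relabeled;

- Match node rules: uses rules such as nodeSelector and tolerations to pick the nodes that should run the Pod;

- Maintain the Pods:

  - a new node joins and matches the rules: one Pod is created on it;

  - a node is removed or no longer matches: the DaemonSet Pod on it is deleted;

  - a healthy node matches: exactly one Pod is kept on it (extra Pods are deleted, a missing one is recreated).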
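The node-matching step above can be sketched with a DaemonSet that targets only labelled nodes and also tolerates the control-plane taint. The name `ds-node-agent`, the label `monitoring=enabled`, and the nginx placeholder image are hypothetical. The taint key `node-role.kubernetes.io/control-plane` is the one applied by recent kubeadm versions; older clusters used `node-role.kubernetes.io/master`:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ds-node-agent              # hypothetical name
spec:
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      nodeSelector:
        monitoring: "enabled"      # only nodes with this (hypothetical) label get a Pod
      tolerations:
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule         # allows scheduling onto tainted control-plane nodes
      containers:
      - name: agent
        image: nginx:1.26-alpine   # placeholder image for the sketch
```

Running `kubectl label node node1 monitoring=enabled` would make the controller create a Pod on node1. Removing the label (`kubectl label node node1 monitoring-`) would make it delete that Pod again.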

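Key differences between DaemonSet and ReplicaSet/Deployment

| Feature | DaemonSet | ReplicaSet/Deployment |
|---|---|---|
| Replica dimension | Per node (one Pod per node) | Fixed replica count (independent of the number of nodes) |
| Key field | No replicas field | replicas field (optional for a Deployment; defaults to 1) |
| Typical use | Node-level infrastructure (monitoring, logging, networking) | Stateless business applications (web services, APIs) |
| How it scales | Adding/removing nodes or changing node-selection rules | Manual kubectl scale or HPA autoscaling |
| Update strategy | RollingUpdate (default), OnDelete | Deployment: RollingUpdate (default) or Recreate; a bare ReplicaSet has no update strategy |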

Example

```bash
#Check the API version of DaemonSet
[root@master controller_dir]# kubectl api-resources | grep daemon
daemonsets ds apps/v1 true DaemonSet
#Write the YAML file
[root@master controller_dir]# cat ds-nginx.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ds-nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        resources:
          limits:
            memory: 100Mi
          requests:
            memory: 100Mi
[root@master controller_dir]# kubectl apply -f ds-nginx.yaml
daemonset.apps/ds-nginx created
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
ds-nginx-5nqpn 1/1 Running 0 5s
ds-nginx-spk5r 1/1 Running 0 5s
[root@master controller_dir]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
ds-nginx-5nqpn 1/1 Running 0 2m28s 10.244.104.37 node2 <none> <none>
ds-nginx-spk5r 1/1 Running 0 2m28s 10.244.166.148 node1 <none> <none>
#No Pod landed on master: recent kubeadm versions taint control-plane nodes with
#node-role.kubernetes.io/control-plane, which the "master" toleration above likely does not match
#Scaling is not possible (a DaemonSet has no scale subresource)
#The number of Pods is decided by the number of nodes
[root@master controller_dir]# kubectl scale daemonset ds-nginx --replicas=4
Error from server (NotFound): the server could not find the requested resource
#Upgrade the version
[root@master controller_dir]# kubectl exec ds-nginx-5nqpn -- nginx -v
nginx version: nginx/1.26.3
[root@master controller_dir]# kubectl set image daemonset ds-nginx c1=nginx:1.29-alpine --record
Flag --record has been deprecated, --record will be removed in the future
daemonset.apps/ds-nginx image updated
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
ds-nginx-gnp4g 1/1 Running 0 5s
ds-nginx-hnszn 1/1 Running 0 3s
#Check the version
[root@master controller_dir]# kubectl exec ds-nginx-gnp4g -- nginx -v
nginx version: nginx/1.29.3
```

Extra experiment

```bash
#Check whether a Deployment without a replicas field can be scaled
#Result: yes. replicas is optional and defaults to 1
[root@master controller_dir]# cat dp-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dp-nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c2
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
[root@master controller_dir]# kubectl apply -f dp-nginx.yaml
deployment.apps/dp-nginx created
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
dp-nginx-5dc988f864-5plzs 1/1 Running 0 4s
[root@master controller_dir]# kubectl scale deployment dp-nginx --replicas=2
deployment.apps/dp-nginx scaled
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
dp-nginx-5dc988f864-5plzs 1/1 Running 0 34s
dp-nginx-5dc988f864-876sh 1/1 Running 0 1s
```

Job

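Introduction

A Job is the Kubernetes controller for one-off tasks, managed by the JobController. Its goal is to make sure that the specified task runs to completion and then stops, rather than keeping Pods running the way a Deployment or DaemonSet does. When a Job's Pod completes its task successfully (exit code 0), the Job is marked complete. If a Pod fails, the Job can retry automatically according to its configuration until the task succeeds or the retry limit is reached. Jobs are the core resource for batch processing, data backups, one-off computations, data migrations, and other temporary tasks.

Purpose

- One-off task execution: once triggered, the Pod runs its business logic and then terminates instead of being restarted (the key difference from the long-running Pods of a Deployment);

- Completion guarantee: ensures the task completes the configured number of times (completions); if a Pod is interrupted, for example by a node failure, the Job creates a new Pod to continue;

- Failure retries: the number of retries can be configured (backoffLimit), and a failed task is retried automatically;

- Parallel execution: the degree of parallelism can be configured (parallelism) so that several Pods run the same kind of task at once, speeding up batch processing.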
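The four behaviours above correspond directly to fields of the Job spec. The manifest below is a sketch with a hypothetical name (`job-parallel`) and illustrative values: 6 completions, 2 Pods at a time, at most 4 retries, a 5-minute overall deadline, and automatic cleanup 10 minutes after the Job finishes. The busybox command merely stands in for real work:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: job-parallel             # hypothetical name
spec:
  completions: 6                 # the Job is Complete after 6 successful Pods
  parallelism: 2                 # at most 2 Pods run at the same time
  backoffLimit: 4                # mark the Job Failed after 4 retries (default 6)
  activeDeadlineSeconds: 300     # mark the Job Failed if it runs longer than 5 minutes
  ttlSecondsAfterFinished: 600   # delete the finished Job and its Pods 10 minutes later
  template:
    spec:
      restartPolicy: OnFailure   # Job Pods must use Never or OnFailure
      containers:
      - name: worker
        image: busybox
        command: ["sh", "-c", "echo processing; sleep 5"]
```

With `restartPolicy: OnFailure`, a failing container is restarted in place. With `Never`, as in the examples below, each failure produces a new Pod, which leaves the failed Pods available for log inspection.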

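How it works

The JobController follows the K8s control-loop pattern:

- Watch task status: watches the Job's completions and the execution status of its Pods through the API Server;

- Create Pods to run the task: creates the number of Pods allowed by parallelism;

- Handle results:

  - a Pod succeeds (exit code 0): the completion count is increased; once it reaches completions, the Job is marked Complete;

  - a Pod fails (non-zero exit code): backoffLimit decides whether to retry; below the limit a new Pod is created (restartPolicy: Never) or the container is restarted (restartPolicy: OnFailure); at the limit the Job is marked Failed;

  - the task times out (activeDeadlineSeconds): all running Pods are terminated and the Job is marked Failed.

Job compared with other controllers

| Controller | Main goal | Pod state | Typical use |
|---|---|---|---|
| Job | Run a one-off task | Terminates when the task completes | Batch processing, data backup, one-off computation |
| CronJob | Run tasks on a cron schedule | Each run's Pods terminate on completion | Scheduled backups, syncs, reports |
| Deployment | Keep a fixed number of long-running Pods | Runs continuously (until stopped manually) | Stateless applications (web services, APIs) |
| DaemonSet | Run one long-running Pod per node | Runs continuously | Node-level infrastructure (monitoring, logging, networking) |
| ReplicaSet | Keep a fixed number of long-running Pods | Runs continuously | Used by Deployments; rarely used directly |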

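Job notes

- Restart policy: Job Pods support only Never and OnFailure; Always is not allowed (the Pod would restart forever, contradicting the one-off design);

- Resource limits: set sensible CPU/memory limits on batch Pods so that they do not take over node resources;

- Concurrency policy: choose the CronJob concurrencyPolicy to fit the workload, so that overlapping scheduled runs do not cause data conflicts;

- History cleanup: set the CronJob fields successfulJobsHistoryLimit and failedJobsHistoryLimit (for standalone Jobs, ttlSecondsAfterFinished) so that finished Job/Pod objects do not pile up in the cluster.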
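The CronJob-related notes above come together in a manifest like the following. This is a sketch: the name `cj-hello`, the five-minute schedule, and the busybox command are illustrative assumptions. `concurrencyPolicy: Forbid` skips a scheduled run while the previous run is still active; the other values are `Allow` (the default) and `Replace`:

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cj-hello                 # hypothetical name
spec:
  schedule: "*/5 * * * *"        # standard cron syntax: every 5 minutes
  concurrencyPolicy: Forbid      # do not start a run while the previous one is active
  successfulJobsHistoryLimit: 3  # keep the last 3 successful Jobs
  failedJobsHistoryLimit: 1      # keep the last failed Job
  jobTemplate:                   # an ordinary Job spec, created on every run
    spec:
      backoffLimit: 2
      template:
        spec:
          restartPolicy: Never
          containers:
          - name: c1
            image: busybox
            command: ["sh", "-c", "date; echo hello"]
```

`kubectl get cronjob` and `kubectl get jobs` then show the schedule and the Jobs it has created, with older ones pruned according to the two history limits.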

Examples

Computing π to 2000 digits

```bash
#Check the API versions
[root@master controller_dir]# kubectl api-resources | grep job
cronjobs cj batch/v1 true CronJob
jobs batch/v1 true Job
[root@master controller_dir]# cat job-pi.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    metadata:
      name: job-pi
    spec:
      nodeName: node1
      containers:
      - name: c1
        image: perl
        imagePullPolicy: IfNotPresent
        command: ["perl","-Mbignum=bpi","-wle","print bpi(2000)"]
      restartPolicy: Never
[root@master controller_dir]# kubectl apply -f job-pi.yaml
job.batch/pi created
[root@master controller_dir]# kubectl get pods
NAME READY STATUS RESTARTS AGE
pi-gqc7p 0/1 Completed 0 11s
[root@master controller_dir]# kubectl logs pi-gqc7p
```

The Pod log contains the result:

3.141592653589793238462643383279502884197169399375105820974944592307816406286208998628034825342117067982148086513282306647093844609550582231725359408128481117450284102701938521105559644622948954930381964428810975665933446128475648233786783165271201909145648566923460348610454326648213393607260249141273724587006606315588174881520920962829254091715364367892590360011330530548820466521384146951941511609433057270365759591953092186117381932611793105118548074462379962749567351885752724891227938183011949129833673362440656643086021394946395224737190702179860943702770539217176293176752384674818467669405132000568127145263560827785771342757789609173637178721468440901224953430146549585371050792279689258923542019956112129021960864034418159813629774771309960518707211349999998372978049951059731732816096318595024459455346908302642522308253344685035261931188171010003137838752886587533208381420617177669147303598253490428755468731159562863882353787593751957781857780532171226806613001927876611195909216420198938095257201065485863278865936153381827968230301952035301852968995773622599413891249721775283479131515574857242454150695950829533116861727855889075098381754637464939319255060400927701671139009848824012858361603563707660104710181942955596198946767837449448255379774726847104047534646208046684259069491293313677028989152104752162056966024058038150193511253382430035587640247496473263914199272604269922796782354781636009341721641219924586315030286182974555706749838505494588586926995690927210797509302955321165344987202755960236480665499119881834797753566369807426542527862551818417574672890977772793800081647060016145249192173217214772350141441973568548161361157352552133475741849468438523323907394143334547762416862518983569485562099219222184272550254256887671790494601653466804988627232791786085784383827967976681454100953883786360950680064225125205117392984896084128488626945604241965285022210661186306744278622039194945047123713786960956364371917287467764657573962413890865832645995813390478027590
1

```bash
[root@master controller_dir]# kubectl get job
NAME COMPLETIONS DURATION AGE
pi 1/1 5s 53s
```

Creating a Job with a fixed number of completions

bash

[root@master controller_dir]# cat job-count.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: job-hello

spec:

completions: 10

parallelism: 1

template:

metadata:

name: hello

spec:

containers:

- name: c1

image: busybox

imagePullPolicy: IfNotPresent

command: ["echo","鸡你太美"]

restartPolicy: Never

[root@master controller_dir]# kubectl apply -f job-count.yaml

job.batch/job-hello created

[root@master controller_dir]# kubectl get pods

NAME READY STATUS RESTARTS AGE

job-hello-68f6l 0/1 Completed 0 38s

job-hello-9s96x 0/1 Completed 0 47s

job-hello-dk7kx 0/1 Completed 0 41s

job-hello-hfxpq 0/1 Completed 0 54s

job-hello-kh6vj 0/1 Completed 0 29s

job-hello-nlrc5 0/1 Completed 0 44s

job-hello-nwfhl 0/1 Completed 0 50s

job-hello-q84bk 0/1 Completed 0 32s

job-hello-r7hmx 0/1 Completed 0 35s

job-hello-zmj6v 0/1 Completed 0 26s

pi-gqc7p 0/1 Completed 0 7m18s

# View the output

[root@master controller_dir]# kubectl logs job-hello-68f6l

鸡你太美

One-off backup of a MySQL database

bash

[root@master controller_dir]# cat mysql-dump.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql-service
spec:
  ports:
  - port: 3306
    name: mysql
  clusterIP: None
  selector:
    app: mysql
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: db
spec:
  selector:
    matchLabels:
      app: mysql # must match the Pod template labels
  serviceName: "mysql-service" # the headless Service defined above
  template:
    metadata:
      labels:
        app: mysql # Pod template labels
    spec:
      nodeName: node2
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123"
        volumeMounts:
        - mountPath: "/var/lib/mysql"
          name: mysql-data
      volumes:
      - name: mysql-data
        hostPath:
          path: /opt/mysqldata

[root@master controller_dir]# kubectl apply -f mysql-dump.yaml

service/mysql-service unchanged

statefulset.apps/db unchanged

[root@master controller_dir]# kubectl get pods

NAME READY STATUS RESTARTS AGE

db-0 1/1 Running 0 5s

[root@master controller_dir]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11d

mysql-service ClusterIP None <none> 3306/TCP 54s

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 11d

# Check on node2, the node the Pod is pinned to

[root@node2 ~]# ls /opt/

cni containerd mysqldata rh

[root@node2 ~]# ls /opt/mysqldata/

auto.cnf client-cert.pem ibdata1 ibtmp1 performance_schema server-cert.pem

ca-key.pem client-key.pem ib_logfile0 mysql private_key.pem server-key.pem

ca.pem ib_buffer_pool ib_logfile1 mysql.sock public_key.pem sys

# Check from the master

[root@master controller_dir]# kubectl exec -it pods/db-0 -- /bin/sh

sh-4.2# ls

bin dev entrypoint.sh home lib64 mnt proc run srv tmp var

boot docker-entrypoint-initdb.d etc lib media opt root sbin sys usr

sh-4.2# cd /var/lib/

sh-4.2# ls

alternatives games misc mysql mysql-files mysql-keyring rpm rpm-state supportinfo yum

sh-4.2# cd mysql

sh-4.2# ls

auto.cnf ca.pem client-key.pem ib_logfile0 ibdata1 mysql performance_schema public_key.pem server-key.pem

ca-key.pem client-cert.pem ib_buffer_pool ib_logfile1 ibtmp1 mysql.sock private_key.pem server-cert.pem sys

sh-4.2#

# A file created on the host is also visible inside the container

[root@node2 ~]# cd /opt/mysqldata/

[root@node2 mysqldata]# touch yuxb.txt

sh-4.2# ls

auto.cnf ca.pem client-key.pem ib_logfile0 ibdata1 mysql performance_schema public_key.pem server-key.pem yuxb.txt

ca-key.pem client-cert.pem ib_buffer_pool ib_logfile1 ibtmp1 mysql.sock private_key.pem server-cert.pem sys

[root@master controller_dir]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

db-0 1/1 Running 0 6m54s 10.244.104.45 node2 <none> <none>

[root@master controller_dir]# kubectl get statefulset

NAME READY AGE

db 1/1 7m32s

[root@master controller_dir]# ping -c 3 10.244.104.45

PING 10.244.104.45 (10.244.104.45) 56(84) bytes of data.

64 bytes from 10.244.104.45: icmp_seq=1 ttl=63 time=0.409 ms

64 bytes from 10.244.104.45: icmp_seq=2 ttl=63 time=0.385 ms

64 bytes from 10.244.104.45: icmp_seq=3 ttl=63 time=0.339 ms

--- 10.244.104.45 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.339/0.377/0.409/0.036 ms

# Install a MySQL client (MariaDB)

[root@master controller_dir]# yum install -y mariadb

[root@master controller_dir]# mysql -uroot -p123 -h 10.244.104.45

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.44 MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

MySQL [(none)]>

[root@master controller_dir]# kubectl exec -it pods/db-0 -- /bin/sh

sh-4.2# my

my_print_defaults mysql_install_db mysqladmin mysqlsh

mysql mysql_ssl_rsa_setup mysqld

mysql-secret-store-login-path mysql_tzinfo_to_sql mysqldump

mysql_config mysql_upgrade mysqlpump

sh-4.2# mysql -uroot -p123

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.7.44 MySQL Community Server (GPL)

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

mysql>

# With the database running, create the manifest for the backup Job

bash

[root@master controller_dir]# cat mysql-job.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: mysql-job
spec:
  template:
    metadata:
      name: mysql-dump
    spec:
      nodeName: node1
      restartPolicy: Never
      containers:
      - name: mysql
        image: mysql:5.7
        imagePullPolicy: IfNotPresent
        command: ["/bin/sh","-c","mysqldump --host=mysql-service -uroot -p123 --databases mysql > /root/mysql_back.sql"]
        volumeMounts:
        - mountPath: "/root"
          name: mysql-bk
      # volumes
      volumes:
      - name: mysql-bk
        hostPath:
          path: /opt/mysqldump

[root@master controller_dir]# kubectl apply -f mysql-job.yaml

job.batch/mysql-job created

[root@master controller_dir]# kubectl get pods

NAME READY STATUS RESTARTS AGE

db-0 1/1 Running 0 47m

mysql-job-82p7j 0/1 Completed 0 3s

# Verify the backup directory and dump file on node1

[root@node1 ~]# cd /opt/

[root@node1 opt]# ls

cni containerd mysqldump rh

[root@node1 opt]# cd mysqldump/

[root@node1 mysqldump]# ls

mysql_back.sql

CronJob

Print a message periodically
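The schedule field uses standard five-field cron syntax: minute, hour, day of month, month, day of week. The two CronJobs in this section use * * * * * and */1 * * * *, and both fire every minute. The hypothetical helpers below make that equivalence concrete. They handle only the minute field, and only the forms *, */N and a plain number. They are an illustration, not how the CronJob controller actually parses schedules.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for illustration only: they handle just the minute field
# and only the forms "*", "*/N" and a plain number.
cron_minute_matches() {
  local field=$1 minute=$2
  case $field in
    '*')   return 0 ;;                                          # every minute
    '*/'*) local step=${field#*/}; (( minute % step == 0 )) ;;  # every N minutes
    *)     (( minute == field )) ;;                             # one specific minute
  esac
}

# How many times per hour would this minute field fire?
fires_per_hour() {
  local field=$1 m n=0
  for (( m = 0; m < 60; m++ )); do
    if cron_minute_matches "$field" "$m"; then n=$((n + 1)); fi
  done
  echo "$n"
}

fires_per_hour '*'     # 60
fires_per_hour '*/1'   # 60, same as "*"
fires_per_hour '*/15'  # 4 (minutes 0, 15, 30, 45)
```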

bash

[root@master controller_dir]# cat hello-cronjob.yaml

apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-cronjob
spec:
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: hello
            image: busybox
            imagePullPolicy: IfNotPresent
            command: # same as ["/bin/sh","-c","date;echo hello yuxb"]
            - /bin/sh
            - -c
            - date;echo hello yuxb
  schedule: "* * * * *"

# The resource is now a CronJob rather than a Job

# Jobs are created later, on schedule

[root@master controller_dir]# kubectl apply -f hello-cronjob.yaml

cronjob.batch/hello-cronjob created

[root@master controller_dir]# kubectl get pods

No resources found in default namespace.

[root@master controller_dir]# kubectl get cronjobs

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello-cronjob * * * * * False 0 <none> 14s

# It has started running

# It runs once every minute

[root@master controller_dir]# kubectl get cronjobs.batch

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello-cronjob * * * * * False 0 23s 26s

[root@master controller_dir]# watch kubectl get pods

Every 2.0s: kubectl get pods Tue Nov 25 13:30:57 2025

NAME READY STATUS RESTARTS AGE

hello-cronjob-29400808-vr8kf 0/1 Completed 0 2m57s

hello-cronjob-29400809-6jxn7 0/1 Completed 0 117s

hello-cronjob-29400810-cjstw 0/1 Completed 0 57s

[root@master controller_dir]# kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-cronjob-29400809-6jxn7 0/1 Completed 0 2m21s

hello-cronjob-29400810-cjstw 0/1 Completed 0 81s

hello-cronjob-29400811-2smwn 0/1 Completed 0 21s

# The log shows the date and the echoed text

[root@master controller_dir]# kubectl logs hello-cronjob-29400809-6jxn7

Tue Nov 25 05:29:01 UTC 2025

hello yuxb

Periodic MySQL database backup

bash

[root@master controller_dir]# kubectl apply -f mysql-dump.yaml

service/mysql-service created

statefulset.apps/db created

[root@master controller_dir]# kubectl get pods

NAME READY STATUS RESTARTS AGE

db-0 1/1 Running 0 19s

hello-cronjob-29400936-5rzjm 0/1 Completed 0 3m

hello-cronjob-29400937-r4p4c 0/1 Completed 0 2m

hello-cronjob-29400938-9j8tt 0/1 Completed 0 60s

[root@master controller_dir]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

db-0 1/1 Running 0 28s 10.244.104.19 node2 <none> <none>

hello-cronjob-29400937-r4p4c 0/1 Completed 0 2m9s 10.244.104.17 node2 <none> <none>

hello-cronjob-29400938-9j8tt 0/1 Completed 0 69s 10.244.104.15 node2 <none> <none>

hello-cronjob-29400939-g4tdg 0/1 Completed 0 9s 10.244.104.16 node2 <none> <none>

[root@master controller_dir]# mysql -uroot -p123 -h 10.244.104.19

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.44 MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> exit

Bye

CronJob manifest for the backup
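Each run of a CronJob creates a Job named `<cronjob-name>-<number>`. Judging from the output in this document, the number appears to be the scheduled time in minutes since the Unix epoch. For example, hello-cronjob-29400809 logged Tue Nov 25 05:29:01 UTC 2025. Likewise, mysql-cronjob-29400952 lines up with the first backup file below, mysql-202511250752.sql (the container clock is UTC). The hypothetical helper below decodes that suffix. It assumes bash and GNU date, which supports `-d @SECONDS`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: decode the numeric suffix of a CronJob-created Job
# (or of one of its Pods) into a UTC timestamp.
# Assumption: the suffix is the scheduled time in minutes since the Unix epoch.
# Requires GNU date for "-d @SECONDS".
cronjob_suffix_to_utc() {
  local name=$1 minutes
  # Job: <cronjob>-<minutes>   Pod: <cronjob>-<minutes>-<random suffix>
  if [[ $name =~ -([0-9]+)(-[a-z0-9]+)?$ ]]; then
    minutes=${BASH_REMATCH[1]}
    date -u -d "@$(( 10#$minutes * 60 ))" '+%Y-%m-%d %H:%M UTC'
  else
    echo "no numeric suffix in: $name" >&2
    return 1
  fi
}

cronjob_suffix_to_utc hello-cronjob-29400809-6jxn7   # 2025-11-25 05:29 UTC
cronjob_suffix_to_utc mysql-cronjob-29400952         # 2025-11-25 07:52 UTC
```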

bash

[root@master controller_dir]# cat mysql-cronjob.yaml

apiVersion: batch/v1
kind: CronJob
metadata:
  name: mysql-cronjob
spec:
  jobTemplate:
    spec:
      template:
        spec:
          nodeName: node1
          restartPolicy: OnFailure
          containers:
          - name: mysql-dump
            image: mysql:5.7
            imagePullPolicy: IfNotPresent
            command:
            - /bin/sh
            - -c
            - mysqldump --host=mysql-service -uroot -p123 --databases mysql > /root/mysql-`date +%Y%m%d%H%M`.sql
            volumeMounts:
            - mountPath: /root
              name: mysql-data
          volumes:
          - name: mysql-data
            hostPath:
              path: /opt/mysql_backup
  schedule: "*/1 * * * *"

[root@master controller_dir]# kubectl apply -f mysql-cronjob.yaml

cronjob.batch/mysql-cronjob created

[root@master controller_dir]# kubectl get cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello-cronjob * * * * * False 0 46s 4h35m

[root@master controller_dir]# kubectl get pods

NAME READY STATUS RESTARTS AGE

db-0 1/1 Running 0 14m

mysql-cronjob-29400952-f7rdn 0/1 Completed 0 97s

mysql-cronjob-29400953-gh6z8 0/1 Completed 0 37s

# Backup succeeded

# One backup per minute

[root@node1 ~]# cd /opt/mysql_backup/

[root@node1 mysql_backup]# ls

mysql-202511250752.sql

[root@node1 mysql_backup]# ls

mysql-202511250752.sql mysql-202511250753.sql

[root@node1 mysql_backup]# ls

mysql-202511250752.sql mysql-202511250753.sql mysql-202511250754.sql

[root@node1 mysql_backup]# ls

mysql-202511250752.sql mysql-202511250753.sql mysql-202511250754.sql mysql-202511250755.sql mysql-202511250756.sql

# The backup files remain after the CronJob is deleted

[root@master controller_dir]# kubectl delete -f mysql-cronjob.yaml

cronjob.batch "mysql-cronjob" deleted

[root@master controller_dir]# kubectl get cronjobs

No resources found in default namespace.

[root@node1 mysql_backup]# ls

mysql-202511250752.sql mysql-202511250754.sql mysql-202511250756.sql

mysql-202511250753.sql mysql-202511250755.sql mysql-202511250757.sql

Controllers: StatefulSet

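What the StatefulSet controller does

The core goal of a StatefulSet is to orchestrate stateful applications on Kubernetes. Specifically, it:

- Gives each Pod a stable, unique identity: every Pod has a fixed name, hostname, and DNS name. These stay the same even when the Pod is recreated, for example after a node failure or restart.

- Binds stable persistent storage: each Pod gets its own PersistentVolumeClaim (PVC), so its data survives Pod re-creation.

- Supports ordered operations: Pods follow their ordinal strictly during deployment (0→1→2...), scaling, updates, and termination. Scale-down starts from the highest ordinal, scale-up continues with the next ordinal, and rolling updates proceed in order.

- Fits distributed stateful clusters: it suits distributed stateful applications such as ZooKeeper, Elasticsearch, Kafka, and MySQL primary/replica setups, whose members need fixed identities and must communicate with each other.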

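Stateless vs. stateful applications

Applications on Kubernetes fall into two categories, **stateless** and **stateful**. They differ mainly in **identity, storage, and dependencies**:

| Property | Stateless application | Stateful application |
|---|---|---|
| Pod identity | Random Pod names (e.g. nginx-7f96789d7f-2xq5z), no fixed identity | Fixed Pod names with an ordinal index (e.g. zk-0, zk-1), unique identity |
| Storage | Shared storage (or none); all Pods hold the same data | Dedicated storage; each Pod has its own persistent data (e.g. database files, cluster metadata) |
| Deployment / scaling | Parallel, no ordering | Ordered by ordinal |
| Network identity | Load-balanced through a Service; no fixed IP or DNS name needed | Needs a stable DNS name per Pod, resolved through a headless Service |
| Dependencies | Pods are fully independent | Pods depend on each other (primary/replica, active/standby, leader election) |
| Typical examples | Nginx, Apache, static front ends, stateless APIs | MySQL primary/replica, ZooKeeper, Kafka, Elasticsearch, etcd |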

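StatefulSet features

StatefulSet is designed around **stability** and **ordering**. Its key features are:

- Stable network identity

  - It relies on a **headless Service**. A headless Service has no ClusterIP, and DNS queries for it return the Pod IPs directly.

  - Each Pod's DNS name has the form `<pod-name>.<headless-service-name>.<namespace>.svc.cluster.local` (e.g. `zk-0.zk-svc.default.svc.cluster.local`). The name stays bound to the Pod even when the Pod is recreated.

- Stable persistent storage

  - volumeClaimTemplates (PVC templates) create a dedicated PVC for each Pod, named `<template-name>-<statefulset-name>-<ordinal>` (e.g. `data-zk-0`).

  - Once a PVC is bound to a PV, both survive deletion and re-creation of the Pod, so no data is lost.

- Ordered deployment and termination

  - Deployment: Pods are created in order from 0 to N-1. The next Pod is created only after the previous one is Running and Ready.

  - Termination: Pods are deleted in order from N-1 to 0, starting with the highest ordinal.

  - Scaling: scale-down follows the termination order, starting from the highest ordinal. Scale-up creates Pods starting from the ordinal after the current highest one.

- Ordered updates

  - The default RollingUpdate strategy updates Pods from the highest ordinal to the lowest. A partition limits the update to ordinals at or above it; for example, with partition=2 only Pods with ordinal ≥ 2 are updated.

  - With the OnDelete strategy, the controller creates a new Pod only after you manually delete the old one.

- Unique identity

  - Each Pod has a unique **ordinal index**, an integer starting at 0. The ordinal is the core of the Pod's name, storage, and network identity, and is the basis on which StatefulSet manages stateful applications.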
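The naming rules and update ordering above can be made concrete with two small shell helpers. The names sts_identity and sts_update_order are hypothetical and for illustration only. They assume the default cluster domain cluster.local and only compute names and orderings from the rules described above; they never contact a cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: compute what a StatefulSet's naming and update rules imply.
# Assumes the default cluster domain "cluster.local".

# Print "<pod> <pod DNS name> <PVC name>" for each ordinal 0..replicas-1.
sts_identity() {
  local sts=$1 svc=$2 ns=$3 tpl=$4 replicas=$5 i pod
  for (( i = 0; i < replicas; i++ )); do
    pod="${sts}-${i}"
    echo "${pod} ${pod}.${svc}.${ns}.svc.cluster.local ${tpl}-${pod}"
  done
}

# Ordinals a RollingUpdate touches: highest first, stopping at the partition.
sts_update_order() {
  local replicas=$1 partition=${2:-0} i order=()
  for (( i = replicas - 1; i >= partition; i-- )); do
    order+=("$i")
  done
  echo "${order[*]}"
}

# The StatefulSet used later in this document (nginx-class, headless Service nginx-svc).
sts_identity nginx-class nginx-svc default www 2
sts_update_order 5 2   # 4 3 2
sts_update_order 3     # 2 1 0
```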

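StatefulSet YAML structure

A StatefulSet manifest follows the Kubernetes API conventions and has two main parts, **metadata** and **spec**. It must be paired with a **headless Service**; otherwise the Pods get no stable network identity.

Key fields

| Field | Purpose |
|---|---|
| apiVersion | API version; the stable version of StatefulSet is apps/v1 (Kubernetes 1.9+) |
| kind | Resource type, always StatefulSet |
| metadata | Metadata such as name (StatefulSet name) and namespace |
| spec.serviceName | Name of the associated headless Service (required); gives the Pods their stable network identity |
| spec.replicas | Desired number of Pods (default 1) |
| spec.selector | Label selector; must match the Pod template labels (template.metadata.labels) |
| spec.template | Pod template: containers, ports, resource limits, labels, etc. (same as a Deployment's Pod template) |
| spec.volumeClaimTemplates | PVC templates; create a dedicated PVC for each Pod, specifying storage class, capacity, access modes, etc. |
| spec.updateStrategy | Update strategy: RollingUpdate (default) or OnDelete; a partition can be configured |
| spec.podManagementPolicy | Pod management policy: OrderedReady (default, ordered) or Parallel |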
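A minimal manifest combining the fields in the table might look like the sketch below. The names web, web-svc and data are placeholders. nfs-client is the StorageClass created later in this document. The snippet only writes the file; running `kubectl apply --dry-run=client -f web-sts.yaml` afterwards lets you try it without creating anything. The matching headless Service (clusterIP: None) must still be created separately.

```bash
#!/usr/bin/env bash
# Writes a minimal StatefulSet manifest covering the fields in the table above.
# web / web-svc / data are placeholder names.
cat > web-sts.yaml <<'EOF'
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
  namespace: default
spec:
  serviceName: web-svc              # headless Service, created separately
  replicas: 3
  podManagementPolicy: OrderedReady # or Parallel
  updateStrategy:
    type: RollingUpdate             # or OnDelete
    rollingUpdate:
      partition: 0                  # only ordinals >= partition are updated
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web                    # must match spec.selector.matchLabels
    spec:
      containers:
      - name: nginx
        image: nginx:1.26-alpine
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: nfs-client
      resources:
        requests:
          storage: 1Gi
EOF
echo "wrote web-sts.yaml"
```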

StatefulSet in practice

Deploying NFS network storage

Create a VM to run the NFS server

bash

#192.168.100.138

[root@nfsserver ~]# systemctl disable firewalld.service --now

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@nfsserver ~]# getenforce

Disabled

[root@nfsserver ~]# mkdir -p /data/nfs

[root@nfsserver ~]# vim /etc/exports

[root@nfsserver ~]# cat /etc/exports

/data/nfs *(rw,no_root_squash,sync)

[root@nfsserver ~]# systemctl restart nfs-server.service

[root@nfsserver ~]# systemctl enable nfs-server.service

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

[root@nfsserver ~]# showmount -e

Export list for nfsserver:

/data/nfs *

Install the NFS client package on the nodes

bash

[root@node1/2 ~]# yum install nfs-utils -y

Verify that the NFS export is reachable

bash

[root@node1 ~]# showmount -e 192.168.100.138

Export list for 192.168.100.138:

/data/nfs *

[root@node2 ~]# showmount -e 192.168.100.138

Export list for 192.168.100.138:

/data/nfs *

Create the YAML file on the master node

bash

# Create a Deployment from YAML and mount the NFS share at the Nginx web root (/usr/share/nginx/html) so the page content persists independently of the Pods.

[root@master ~]# mkdir statefulset

[root@master ~]#

[root@master ~]# cd statefulset/

[root@master statefulset]# rz -E

rz waiting to receive.

[root@master statefulset]# cat nginx-nfs.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-nfs
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: documentroot
      volumes:
      - name: documentroot
        nfs:
          path: /data/nfs
          server: 192.168.100.138

[root@master statefulset]# kubectl apply -f nginx-nfs.yml

deployment.apps/nginx-nfs created

[root@master statefulset]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-nfs-8f849866d-kf5wf 1/1 Running 0 3s

nginx-nfs-8f849866d-rlv7g 1/1 Running 0 3s

[root@master statefulset]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-nfs-8f849866d-kf5wf 1/1 Running 0 23s 10.244.104.36 node2 <none> <none>

nginx-nfs-8f849866d-rlv7g 1/1 Running 0 23s 10.244.166.163 node1 <none> <none>

Create an index file on the NFS server

Verify that the shared NFS storage stays in sync

bash

[root@nfsserver ~]# cd /data/nfs/

[root@nfsserver nfs]# vim index.html

[root@nfsserver nfs]# cat index.html

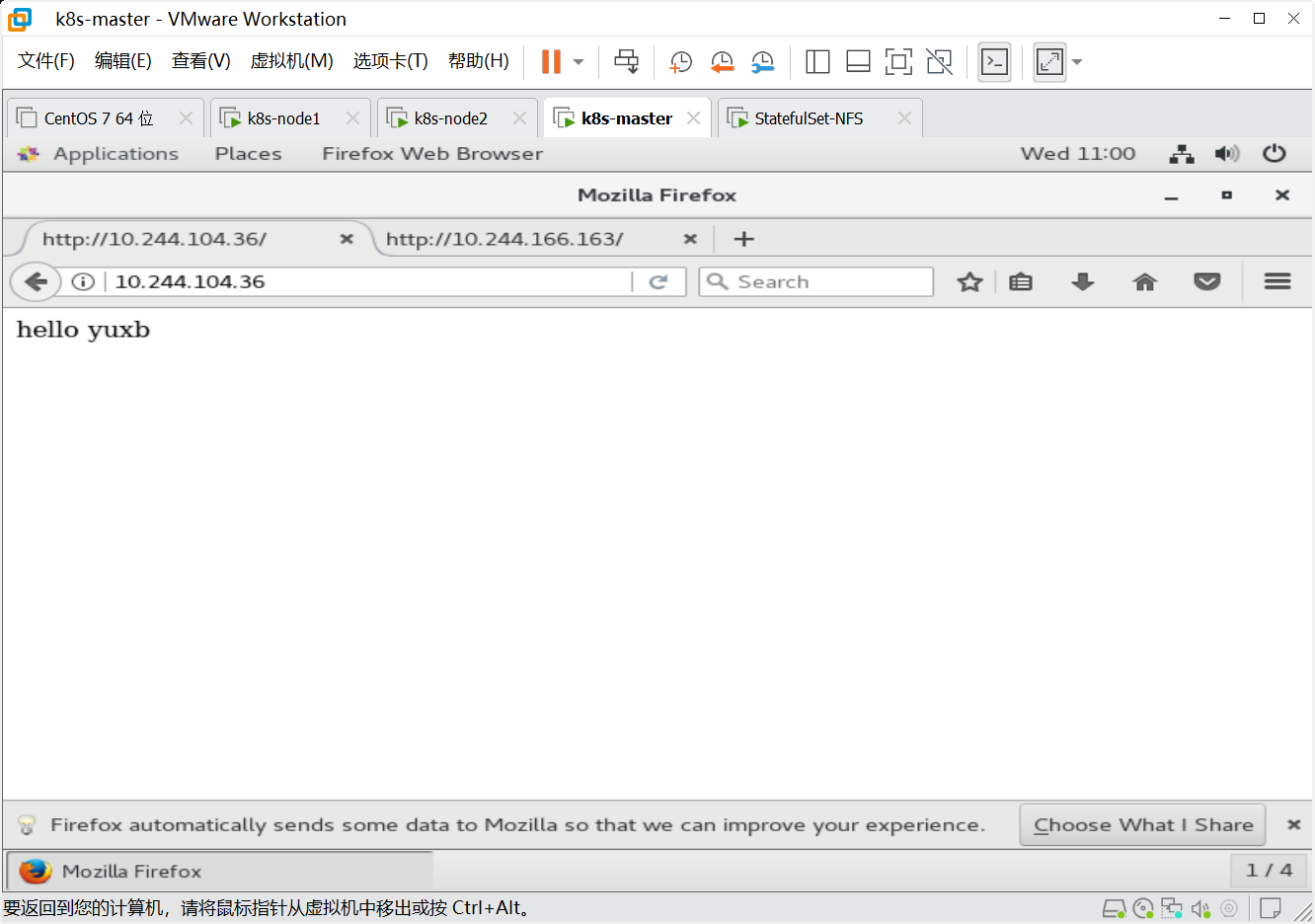

hello yuxb

Back on the master node, verify that the file is served

bash

[root@master statefulset]# curl http://10.244.104.36

hello yuxb

# From inside the VM, open the page in a browser and check that it loads and shows the content

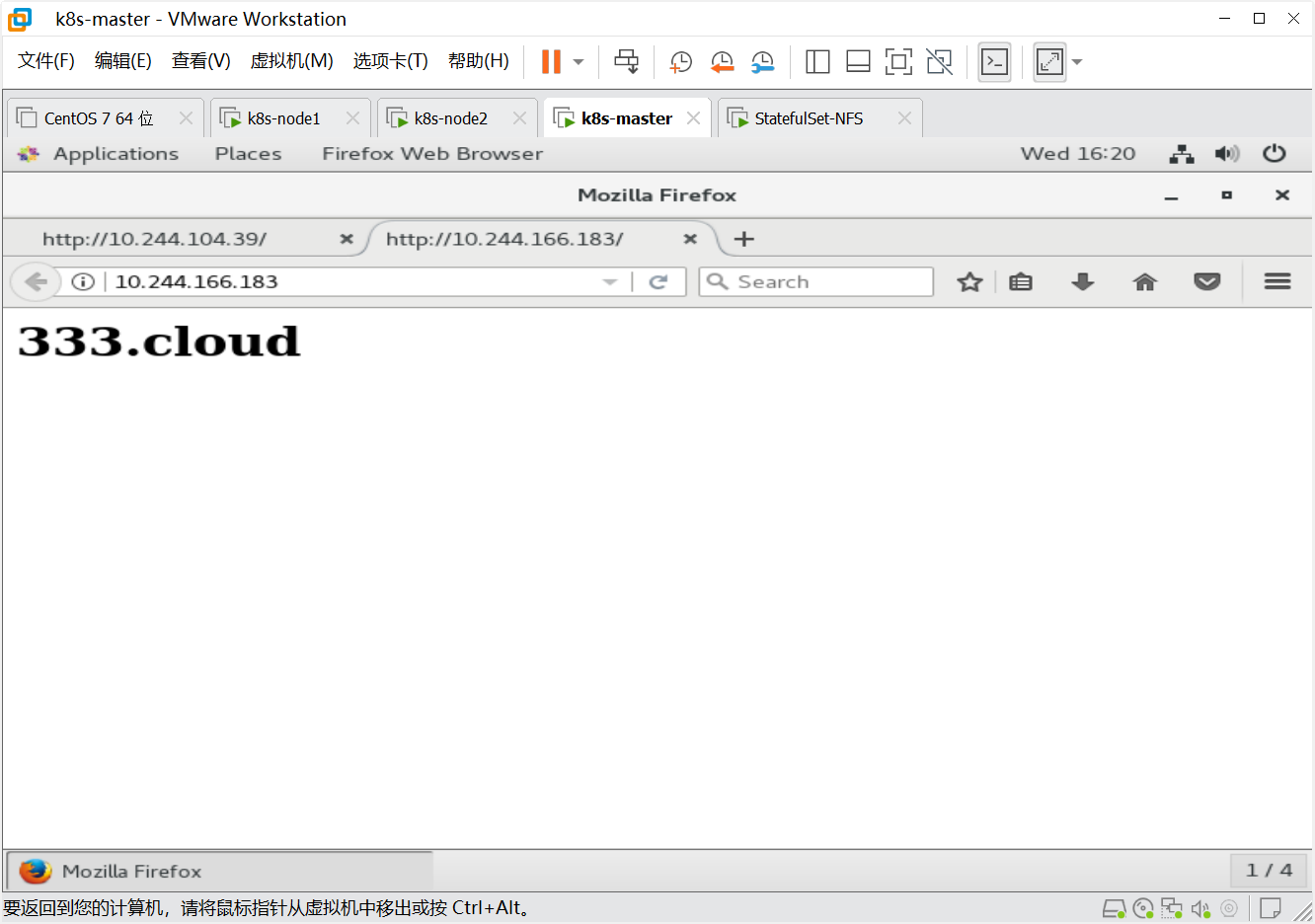

# As shown below

bash

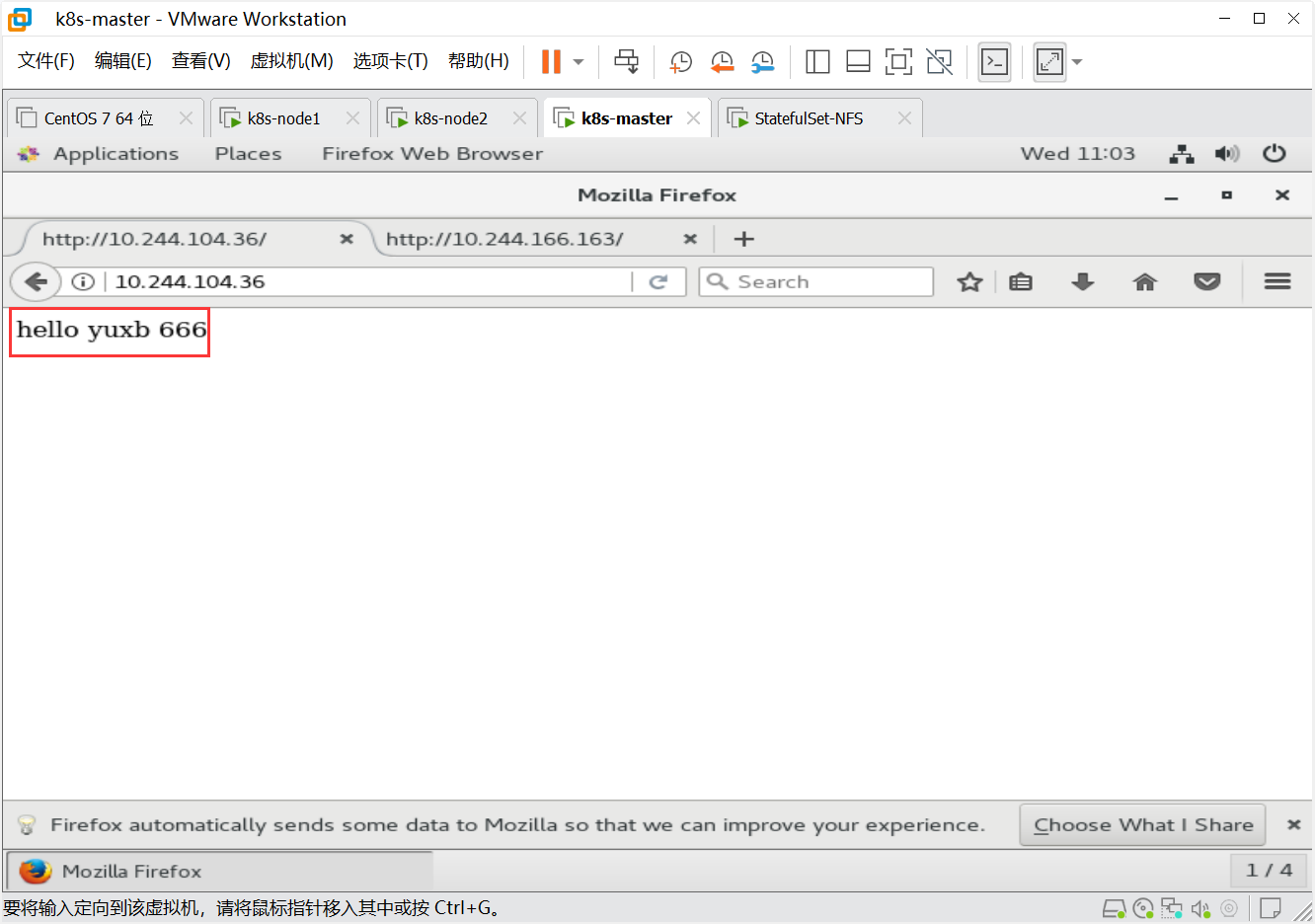

# exec into the Pod, edit index.html, and check the result

[root@master statefulset]# kubectl exec -it pods/nginx-nfs-8f849866d-kf5wf -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # ls

index.html

/usr/share/nginx/html # vim index.html

/bin/sh: vim: not found

/usr/share/nginx/html # vi index.html

/usr/share/nginx/html # cat index.html

hello yuxb 666

/usr/share/nginx/html # exit

[root@master statefulset]# curl http://10.244.104.36

hello yuxb 666

[root@master statefulset]# curl http://10.244.166.163

hello yuxb 666

# index.html on the NFS server has changed as well

[root@nfsserver nfs]# cat index.html

hello yuxb 666

# Check the page again in the VM

# As shown below

Deleting the Pods leaves the NFS data in place

Verify that NFS storage persists

bash

[root@master statefulset]# kubectl delete -f nginx-nfs.yml

deployment.apps "nginx-nfs" deleted

[root@master statefulset]# kubectl get pods

No resources found in default namespace.

[root@node2 ~]# showmount -e 192.168.100.138

Export list for 192.168.100.138:

/data/nfs *

[root@node1 ~]# showmount -e 192.168.100.138

Export list for 192.168.100.138:

/data/nfs *

# After the Deployment is deleted, all of its Pods are gone, but /data/nfs and its files remain on the NFS server, and the nodes can still access the share

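# Pods are ephemeral and easily destroyed and recreated, whereas NFS is external persistent storage. The Pod lifecycle does not affect the data on NFS, and this is the foundation for running stateful services.

PV (PersistentVolume) and PVC (PersistentVolumeClaim)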

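PV (PersistentVolume): the cluster's "storage pool"

A PV is a cluster-level persistent storage resource. The cluster administrator creates it in advance, and it is independent of any Pod's lifecycle. It abstracts the underlying storage, such as a local disk, NFS, Ceph, or a cloud provider's disk.

Key characteristics of a PV

- Cluster-wide: a PV is a cluster resource, not part of any namespace, and can be claimed by PVCs from any namespace.

- Independent lifecycle: creating and deleting a PV is unrelated to Pods. The PV remains even after the Pods using it are deleted, unless its reclaim policy removes it.

- Core attributes:

| Attribute | Description |
|---|---|
| Capacity | Storage size of the PV (e.g. storage: 1Gi) |
| Access modes (AccessModes) | Read/write permissions, one of three modes: ① ReadWriteOnce (RWO): read-write by a single node ② ReadOnlyMany (ROX): read-only by many nodes ③ ReadWriteMany (RWX): read-write by many nodes |
| Reclaim policy (ReclaimPolicy) | What happens once the PV is released by its PVC: ① Retain: manual reclamation (default for manually created PVs) ② Delete: the underlying storage (e.g. a cloud disk) is deleted automatically ③ Recycle: data is scrubbed and the PV becomes available again (deprecated) |
| Storage class (StorageClassName) | Links the PV to a StorageClass, which is used for dynamic provisioning (a PV without a storage class is a static PV) |
| Volume mode (VolumeMode) | Type of volume: Filesystem (default) or Block |

PV types

Depending on how they are created, PVs are either static or dynamic:

- Static PV: created manually by the administrator, with the storage prepared in advance, waiting for a PVC to bind to it.

- Dynamic PV: the administrator defines a storage template with a StorageClass. When a PVC requests storage that no static PV matches, Kubernetes creates a PV automatically from that StorageClass, with no manual intervention.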

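PVC (PersistentVolumeClaim): the user's "storage request"

A PVC is a user's request for storage. Application developers create it, and it is a namespaced resource. A PVC asks Kubernetes for storage without caring about the implementation (NFS, Ceph, or a cloud disk), which decouples storage consumption from storage implementation.

What a PVC does

- Abstracts storage requests: the user declares only the capacity, access modes, and storage class. Kubernetes finds and binds a matching PV, so the user does not need to know the underlying storage.

- Connects storage to Pods: a Pod references the PVC in its volumes field and thereby uses the storage of the PV bound to that PVC.

- Works with StatefulSet: a StatefulSet's volumeClaimTemplates automatically create a dedicated PVC for each Pod, named `<template-name>-<statefulset-name>-<ordinal>`, giving every Pod its own storage.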

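How PVs and PVCs relate

PVs and PVCs follow a **supply-and-demand matching** pattern and are bound **one-to-one**. The relationship shows up in the following ways:

1. Binding: two-way matching, one-to-one and exclusive

The Kubernetes **PV controller** filters the cluster's available PVs against the PVC's request (capacity, access modes, storage class, volume mode, etc.) and binds the PVC to the best match.

- Matching rules: the PVC's requested capacity must be ≤ the PV's capacity, the PV must offer the access modes the PVC requests, and the storage class names must be equal (a PVC without a storage class matches only PVs without one).

- Exclusivity: a PV can be bound to only one PVC, and a PVC to only one PV. Once they are bound, no other PVC can use that PV.

- Binding status:

  - Bound: the PVC's status.phase is Bound, the PV's status.phase is also Bound, and the PV records the bound PVC in spec.claimRef.

  - Not bound: the PVC's status.phase is Pending, meaning no matching PV was found (dynamic provisioning can solve this).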
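The matching rules above can be sketched as a small shell predicate. This is a simplified illustration built on hypothetical helper names (to_bytes, pv_matches):

- It understands only the binary suffixes Ki, Mi, Gi and Ti.
- It treats access modes as comma-separated lists.
- It ignores volume mode, label selectors, and how the real controller chooses among several candidate PVs.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified illustration of PV/PVC matching.

# Convert a quantity with a binary suffix (Ki, Mi, Gi, Ti) to bytes.
to_bytes() {
  local q=$1
  case $q in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 ** 2 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 ** 3 )) ;;
    *Ti) echo $(( ${q%Ti} * 1024 ** 4 )) ;;
    *)   echo "$q" ;;                       # assume plain bytes
  esac
}

# pv_matches PV_CAPACITY PV_MODES PV_CLASS PVC_REQUEST PVC_MODES PVC_CLASS
# Modes are comma-separated (e.g. "ReadWriteMany,ReadOnlyMany"); "" = no class.
pv_matches() {
  local pv_cap=$1 pv_modes=$2 pv_class=$3 req=$4 want_modes=$5 want_class=$6 m
  (( $(to_bytes "$req") <= $(to_bytes "$pv_cap") )) || return 1  # enough capacity
  [[ $pv_class == "$want_class" ]] || return 1                   # same storage class
  for m in ${want_modes//,/ }; do                                # PV offers every requested mode
    [[ ",$pv_modes," == *",$m,"* ]] || return 1
  done
}

# nfs-pv01 / pvc-nfs from the example below: 10Gi, RWX, no storage class
pv_matches 10Gi ReadWriteMany "" 10Gi ReadWriteMany "" && echo "pvc-nfs can bind nfs-pv01"
```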

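2. Lifecycle: the PVC leads and the PV follows (not strictly)

PV and PVC lifecycles are not fully synchronized, but the PVC's state directly affects how the PV is used:

- While the PVC exists: the PV is bound to the PVC and held exclusively by it. Pods use the PV's storage through the PVC, and data is persisted on the PV's underlying storage.

- When the PVC is deleted: the PV becomes Released. It is not deleted immediately; what happens next depends on the reclaim policy:

  - Retain: the PV and its data are kept. The administrator must clean up the data manually and make the PV Available again.

  - Delete: the PV and its underlying storage (e.g. a cloud provider's disk) are deleted automatically, along with the data.

  - Recycle: the data in the PV is scrubbed (rm -rf /thevolume/*) and the PV is marked Available again. This policy is deprecated; dynamic provisioning with a StorageClass is recommended instead.

- When the PV is deleted manually: the PVC's status becomes Lost. Pods can no longer use that storage, and a PV must be recreated and bound.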

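3. Decoupling: separating the storage layer from the application layer

The core value of PVs and PVCs is that they decouple storage resources from the applications that use them:

- Administrator's view: create PVs and StorageClasses and manage the underlying storage (expanding it, maintaining NFS/Ceph), without caring how applications use it.

- Developer's view: create a PVC to request storage and reference it from the Pod, without knowing whether the storage is NFS, a cloud disk, or a local disk.

- Portability: an application's PVC definitions need no changes across clusters. The cluster administrator only has to provide matching PVs or StorageClasses for the application's storage to work in another cluster.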

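4. StatefulSet integration: PVCs created automatically, each bound to its own PV

In a StatefulSet, volumeClaimTemplates create a PVC for each Pod. Combined with PV binding, this gives every Pod dedicated storage:

- When the StatefulSet creates a Pod, it generates a PVC from volumeClaimTemplates (named, for example, nginx-data-nginx-stateful-0).

- Kubernetes finds and binds a PV for that PVC, either a static PV or one provisioned dynamically.

- When the Pod is deleted and recreated, the PVC with the same name still exists and is still bound to the original PV. The new Pod reuses it, so no data is lost. This is the core of how StatefulSet persists data for stateful applications.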

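PV/PVC key concepts

- PV (PersistentVolume): a cluster-level persistent volume created by the administrator. It is a cluster resource that belongs to no namespace, and it defines the concrete storage implementation (NFS, Ceph, local storage, etc.).

- PVC (PersistentVolumeClaim): a user's storage request, created by developers or operators in a specific namespace. It declares only the storage needs (capacity, access modes), and Kubernetes automatically binds it to a matching PV.

- Binding rules: the PVC and PV must have matching access modes, the PVC's requested capacity must not exceed the PV's capacity, and the storage classes must match (this example uses no storage class).

Example:

NFS-backed PV and PVC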

bash

# Check the API versions

[root@master statefulset]# kubectl api-resources | grep PersistentVolume

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

[root@master statefulset]# kubectl api-resources | grep PersistentVolumeClaim

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

# Write the PV manifest

[root@master statefulset]# cat pv-nfs.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv01
spec:
  capacity: # size offered by this PV
    storage: 10Gi
  accessModes: # read-write, mountable by many nodes
  - ReadWriteMany
  nfs:
    path: /data/nfs
    server: 192.168.100.138

[root@master statefulset]# kubectl apply -f pv-nfs.yaml

persistentvolume/nfs-pv01 created

# Check

# Not bound yet

[root@master statefulset]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv01 10Gi RWX Retain Available 24s

# RECLAIM POLICY is Retain: once the PV is no longer used, it must be reclaimed manually

# Write the PVC manifest

[root@master statefulset]# cat pvc-nfs.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs
spec:
  accessModes: # must match the PV's access mode
  - ReadWriteMany
  # requested capacity
  resources:
    requests:
      storage: 10Gi

[root@master statefulset]# kubectl apply -f pvc-nfs.yaml

persistentvolumeclaim/pvc-nfs created

# Check

# STATUS is Bound

[root@master statefulset]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-nfs Bound nfs-pv01 10Gi RWX 14s

# Check the PV again

# It is now Bound

[root@master statefulset]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

nfs-pv01 10Gi RWX Retain Bound default/pvc-nfs 9m19s

Create the nginx application YAML

bash

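# Create a Deployment that mounts the PVC

# The Deployment mounts the PVC as a volume at the Nginx web root, decoupling the application from storage: the Pod only names the PVC and does not need to know whether NFS or some other storage is behind it.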

[root@master statefulset]# cat nginx-pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-pvc
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      restartPolicy: Always
      containers:
      - name: nginx
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        # mounts
        volumeMounts:
        - mountPath: /usr/share/nginx/html # mount at the Nginx web root
          name: www # volume name; must match volumes[].name below
      # volumes
      volumes:
      - name: www
        persistentVolumeClaim: # volume type: PVC
          claimName: pvc-nfs # name of the PVC to use

[root@master statefulset]# kubectl apply -f nginx-pvc.yaml

deployment.apps/nginx-pvc created

[root@master statefulset]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-pvc-7974ccf99-fq6wc 1/1 Running 0 11s

nginx-pvc-7974ccf99-lqxv8 1/1 Running 0 11s

nginx-pvc-7974ccf99-v4fcz 1/1 Running 0 11s

nginx-pvc-7974ccf99-x7vq7 1/1 Running 0 11s

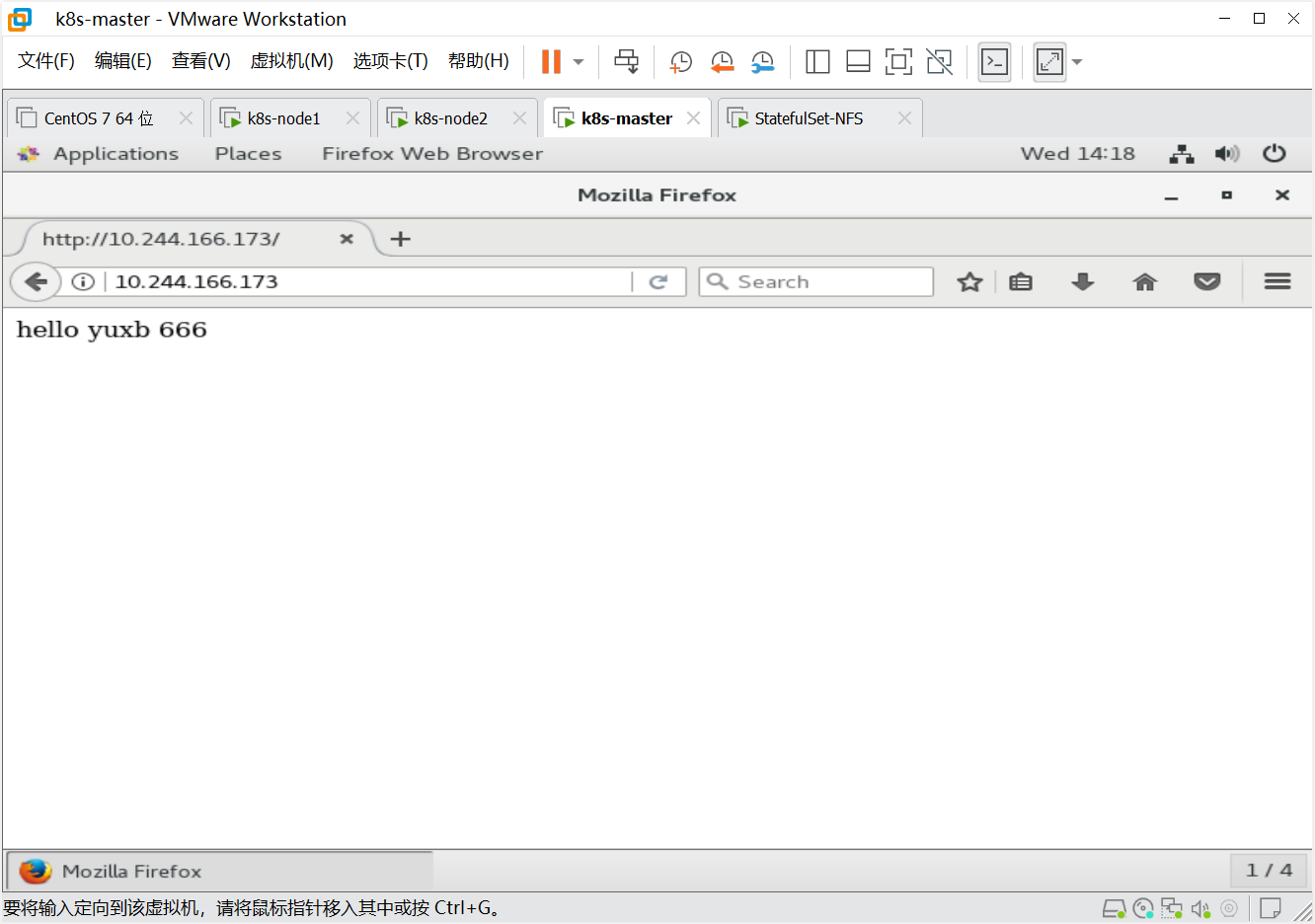

[root@master statefulset]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pvc-7974ccf99-fq6wc 1/1 Running 0 41s 10.244.166.173 node1 <none> <none>

nginx-pvc-7974ccf99-lqxv8 1/1 Running 0 41s 10.244.104.27 node2 <none> <none>

nginx-pvc-7974ccf99-v4fcz 1/1 Running 0 41s 10.244.104.29 node2 <none> <none>

nginx-pvc-7974ccf99-x7vq7 1/1 Running 0 41s 10.244.166.176 node1 <none> <none>

[root@master statefulset]# kubectl exec -it pods/nginx-pvc-7974ccf99-fq6wc -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # ls

index.html

/usr/share/nginx/html # cat index.html

hello yuxb 666

/usr/share/nginx/html # exit

# From inside the VM, browsing to a Pod IP shows the page

# As shown below

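Compared with mounting NFS directly

| Approach | Pros | Cons |
|---|---|---|
| Mount NFS directly | Simple; no PV/PVC needed | Application tightly coupled to storage; harder to maintain |
| Mount via PV/PVC (NFS) | Storage decoupled from the application; standardized management | Extra step to create the PV/PVC |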

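Dynamic provisioning

Dynamic provisioning removes the need to create static PVs by hand. When a PVC requests storage, a matching PV and the underlying storage are created automatically. It is the mainstream storage approach for stateful applications deployed with StatefulSet.

- Definition

  Dynamic provisioning replaces manually created static PVs. When a PVC is submitted and no PV in the cluster matches, Kubernetes uses the StorageClass named in the PVC and its **provisioner** to create a suitable PV and the underlying storage automatically (an NFS subdirectory, a cloud disk, etc.).

- Core components

  - StorageClass: a storage template that defines how PVs are created (storage type, reclaim policy, expansion settings). A PVC refers to it by name to trigger dynamic provisioning.

  - Provisioner: the component that actually creates PVs and the underlying storage. Provisioners are either built in (e.g. cloud providers' EBS) or third-party (e.g. nfs-subdir-external-provisioner).

- Workflow in brief

  - The administrator deploys a provisioner and creates a StorageClass.

  - The user creates a PVC (or a StatefulSet's volumeClaimTemplates generates one) that names the StorageClass.

  - Kubernetes finds no matching static PV and calls the provisioner, which creates the PV and the underlying storage.

  - The PV and PVC are bound automatically, and the Pod uses the storage through the PVC.

- Key advantages

  - Automation: no manual PV creation, so less operational work.

  - On-demand allocation: storage is created to match each PVC, avoiding idle resources.

  - Flexibility: different StorageClasses cover NFS, Ceph, cloud disks, and more, and fit well with StatefulSet's per-Pod storage.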
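A StorageClass for this setup can be as small as the sketch below. The field values mirror what `kubectl get storageclass` reports later in this document for nfs-client: provisioner k8s-sigs.io/nfs-subdir-external-provisioner, reclaim policy Delete, binding mode Immediate, and volume expansion disabled. The upstream class.yaml may differ in detail (for example, it may also set provisioner-specific parameters), so treat this as an illustration rather than a copy of that file.

```bash
#!/usr/bin/env bash
# Illustrative StorageClass; the values mirror the "kubectl get storageclass"
# output shown later for nfs-client. Not a verbatim copy of the upstream class.yaml.
cat > nfs-client-sc.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client                   # PVCs select this class by name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner  # must equal the provisioner's PROVISIONER_NAME
reclaimPolicy: Delete                # applied to every PV this class creates
volumeBindingMode: Immediate         # provision as soon as the PVC is created
allowVolumeExpansion: false
EOF
echo "wrote nfs-client-sc.yaml"
```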

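Dynamic provisioning with NFS

This walkthrough covers dynamic NFS provisioning on Kubernetes end to end. The nfs-subdir-external-provisioner creates a PV automatically for each PVC, so no PV has to be defined by hand. A StatefulSet with a headless Service then deploys a stateful application with stable network access. Compared with static PV/PVC, dynamic provisioning is the more efficient way to manage storage in production.

Core concepts

- Dynamic storage provisioning: with static provisioning, the administrator creates PVs by hand and users create PVCs that bind to them. Dynamic provisioning instead creates PVs automatically through a StorageClass and a storage provisioner. When a user creates a PVC, the provisioner follows the StorageClass configuration to create a storage directory on the backend (e.g. NFS) and a PV for it, which greatly simplifies storage management.

- nfs-subdir-external-provisioner: an NFS dynamic provisioner from the Kubernetes community. It watches for new PVCs, creates a dedicated subdirectory on the NFS server for each one, and generates a matching NFS PV, so the PVC and PV are bound automatically.

- Headless Service: a Service with clusterIP: None, which gets no cluster IP. Its main job is to give a StatefulSet's Pods stable network identities (fixed DNS names). CoreDNS resolves the headless Service's name to the IPs of all its Pods, and each Pod also gets a fixed name of the form podname.servicename.namespace.svc.cluster.local.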
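Combining the StatefulSet PVC naming rule with the provisioner's directory layout gives the name of each Pod's directory on the NFS server. The hypothetical helper below reproduces the layout observed later in this walkthrough: `<namespace>-<PVC name>-<PV name>`, where the PV name is `pvc-<PVC UID>`. Kubernetes assigns the UID, so it cannot be predicted and is passed in as an argument.

```bash
#!/usr/bin/env bash
# Hypothetical helper: name of the NFS subdirectory created for one StatefulSet Pod,
# based on the layout observed on the NFS server in this document.
#   PVC name : <template>-<statefulset>-<ordinal>
#   PV name  : pvc-<PVC UID>   (UID assigned by Kubernetes)
#   dir name : <namespace>-<PVC name>-<PV name>
nfs_subdir_for_pod() {
  local ns=$1 tpl=$2 sts=$3 ordinal=$4 uid=$5
  local pvc="${tpl}-${sts}-${ordinal}"
  echo "${ns}-${pvc}-pvc-${uid}"
}

# Values from the nginx-class example below
nfs_subdir_for_pod default www nginx-class 0 c9bb4b12-1e60-4c6c-82d4-a61296803375
```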

Example:

Download the StorageClass manifest

bash

[root@master statefulset]# mkdir nfs-cli

[root@master statefulset]# cd nfs-cli/

[root@master nfs-cli]# wget https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/class.yaml

--2025-11-26 15:20:55-- https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/class.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.108.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 246 [text/plain]

Saving to: 'class.yaml'

100%[====================================================================================================>] 246 452B/s in 0.5s

2025-11-26 15:21:12 (452 B/s) - 'class.yaml' saved [246/246]

[root@master nfs-cli]# ls

class.yaml

[root@master nfs-cli]# cp class.yaml storageclass.yaml

[root@master nfs-cli]#

[root@master nfs-cli]# kubectl apply -f storageclass.yaml

storageclass.storage.k8s.io/nfs-client created

[root@master nfs-cli]# kubectl get pods

No resources found in default namespace.

[root@master nfs-cli]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 19s

Download and create the RBAC objects

nfs-client-provisioner needs to call the Kubernetes API (to watch PVC events and create PVs), so RBAC must grant it the corresponding permissions.

bash

[root@master nfs-cli]# wget https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/rbac.yaml

--2025-11-26 15:26:00-- https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/rbac.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.108.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1900 (1.9K) [text/plain]

Saving to: 'rbac.yaml'

100%[====================================================================================================>] 1,900 818B/s in 2.3s

2025-11-26 15:26:05 (818 B/s) - 'rbac.yaml' saved [1900/1900]

[root@master nfs-cli]# ls

class.yaml rbac.yaml storageclass.yaml

[root@master nfs-cli]# cp rbac.yaml storageclass-rbac.yaml

[root@master nfs-cli]# kubectl apply -f storageclass-rbac.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

[root@master nfs-cli]# kubectl get rolebinding

NAME ROLE AGE

leader-locking-nfs-client-provisioner Role/leader-locking-nfs-client-provisioner 61s

Create the dynamic provisioning Deployment

Deploy the nfs-client-provisioner

This is the core component of dynamic provisioning. A Deployment runs the provisioner Pod, which watches PVC events and creates NFS PVs automatically.

bash

[root@master nfs-cli]# wget https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/deployment.yaml

--2025-11-26 15:30:25-- https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/deployment.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.108.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1069 (1.0K) [text/plain]

Saving to: 'deployment.yaml'

100%[====================================================================================================>] 1,069 --.-K/s in 0s

2025-11-26 15:30:26 (101 MB/s) - 'deployment.yaml' saved [1069/1069]

[root@master nfs-cli]#

[root@master nfs-cli]# ls

class.yaml deployment.yaml rbac.yaml storageclass-rbac.yaml storageclass.yaml

[root@master nfs-cli]# vim deployment.yaml

[root@master nfs-cli]# cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: registry.cn-beijing.aliyuncs.com/pylixm/nfs-subdir-external-provisioner:v4.0.0
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: k8s-sigs.io/nfs-subdir-external-provisioner
        - name: NFS_SERVER
          value: 192.168.100.138 # NFS server address; must match volumes[].nfs.server below
        - name: NFS_PATH
          value: /data/nfs
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.100.138
          path: /data/nfs

# Deploy and verify

[root@master nfs-cli]# kubectl apply -f deployment.yaml

deployment.apps/nfs-client-provisioner created

[root@master nfs-cli]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-54b9cb8bf9-4fn9k 0/1 ContainerCreating 0 4s

[root@master nfs-cli]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-54b9cb8bf9-4fn9k 1/1 Running 0 2m2s

Using dynamic provisioning with an nginx application

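Deploy a StatefulSet and a headless Service backed by dynamic provisioning

The headless Service gives the StatefulSet stable network identities. volumeClaimTemplates trigger dynamic provisioning, which creates a dedicated PVC, PV, and NFS subdirectory for each Pod.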

bash

[root@master nfs-cli]# cat nginx-storageclass-nfs.yaml

apiVersion: v1 # headless Service definition
kind: Service
metadata:
  name: nginx-svc
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1 # StatefulSet definition
kind: StatefulSet
metadata:
  name: nginx-class
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx-svc"
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: www
  # dynamic provisioning
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: "nfs-client"
      resources:
        requests:
          storage: 1Gi

# Deploy and verify that storage is created dynamically

[root@master nfs-cli]# kubectl apply -f nginx-storageclass-nfs.yaml

service/nginx-svc created

statefulset.apps/nginx-class created

[root@master nfs-cli]#

[root@master nfs-cli]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-54b9cb8bf9-4fn9k 1/1 Running 0 37m

nginx-class-0 1/1 Running 0 5s

nginx-class-1 0/1 Pending 0 1s

[root@master nfs-cli]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-client-provisioner-54b9cb8bf9-4fn9k 1/1 Running 0 37m 10.244.104.42 node2 <none> <none>

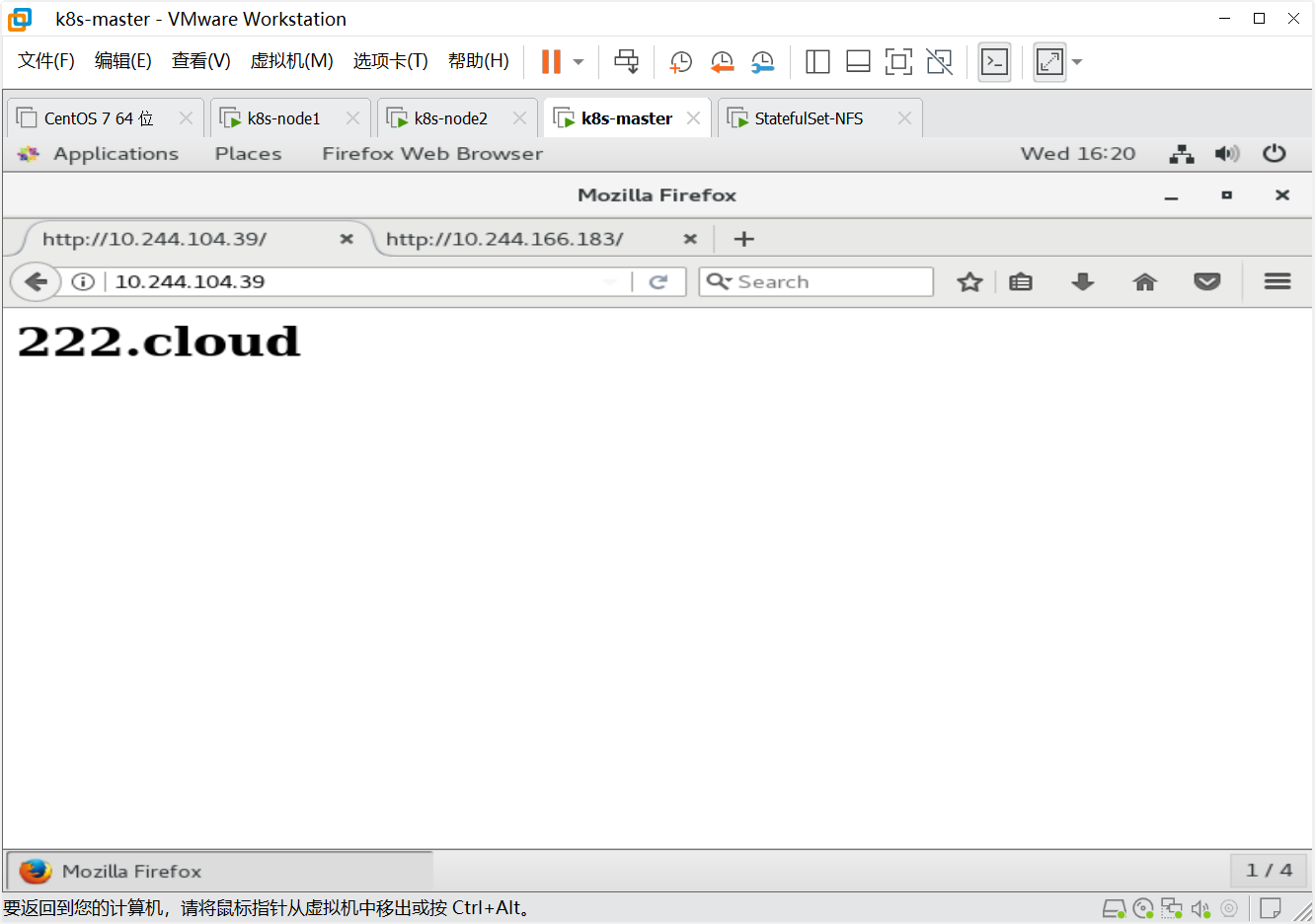

nginx-class-0 1/1 Running 0 19s 10.244.104.39 node2 <none> <none>

nginx-class-1 1/1 Running 0 15s 10.244.166.183 node1 <none> <none>

[root@nfsserver nfs]# ls

default-www-nginx-class-0-pvc-c9bb4b12-1e60-4c6c-82d4-a61296803375 index.html

default-www-nginx-class-1-pvc-0913328f-d054-413c-b271-5891bdfb61fe

#供给器会在 NFS 的/data/nfs目录下,为每个 Pod 创建专属子目录(命名规则:命名空间-PVC名称-Pod名称-PVCUID);

#每个子目录对应一个自动创建的 PV/PVC,实现 StatefulSet Pod 的数据隔离(有状态服务的核心需求)。

[root@nfsserver nfs]# echo "<h1>222.cloud<h1>" > default-www-nginx-class-0-pvc-c9bb4b12-1e60-4c6c-82d4-a61296803375/index.html

[root@nfsserver nfs]# echo "<h1>333.cloud<h1>" > default-www-nginx-class-1-pvc-0913328f-d054-413c-b271-5891bdfb61fe/index.html
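The directory naming rule in the comments above can be reproduced with a small helper. This is useful for finding a given Pod's directory on the NFS server. It is a minimal sketch that assumes the provisioner's default directory pattern, {namespace}-{PVC name}-{PV name}, with no custom pathPattern set on the StorageClass. The function names are hypothetical. The PV name has to be looked up separately, for example with kubectl get pvc www-nginx-class-0 -o jsonpath='{.spec.volumeName}'.

```bash
# Hypothetical helpers: rebuild the names generated by the StatefulSet and the NFS provisioner.

# PVCs created from volumeClaimTemplates are named <template>-<statefulset>-<ordinal>
sts_pvc_name() {
  local template=$1 sts=$2 ordinal=$3
  printf '%s-%s-%d\n' "$template" "$sts" "$ordinal"
}

# Assumed default directory pattern: <namespace>-<PVC name>-<PV name>
nfs_subdir_name() {
  local ns=$1 template=$2 sts=$3 ordinal=$4 pv_name=$5
  printf '%s-%s-%s\n' "$ns" "$(sts_pvc_name "$template" "$sts" "$ordinal")" "$pv_name"
}
```

For example, nfs_subdir_name default www nginx-class 0 pvc-c9bb4b12-1e60-4c6c-82d4-a61296803375 prints default-www-nginx-class-0-pvc-c9bb4b12-1e60-4c6c-82d4-a61296803375, which matches the ls output above.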

```bash
# Resolve the headless Service name through kube-dns: because a headless Service has no ClusterIP,
# the answer lists the backend Pod IPs directly
[root@master nfs-cli]# kubectl get svc -n kube-system
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 13d
metrics-server ClusterIP 10.104.124.222 <none> 443/TCP 12d
[root@master nfs-cli]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d
nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d
nginx-svc ClusterIP None <none> 80/TCP 6m1s
# Resolve the headless Service name against the kube-dns address 10.96.0.10
[root@master nfs-cli]# dig -t a nginx-svc.default.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.9.4-RedHat-9.9.4-50.el7 <<>> -t a nginx-svc.default.svc.cluster.local. @10.96.0.10
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 15964
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx-svc.default.svc.cluster.local. IN A

;; ANSWER SECTION:
nginx-svc.default.svc.cluster.local. 30 IN A 10.244.166.183
nginx-svc.default.svc.cluster.local. 30 IN A 10.244.104.39

;; Query time: 10 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Wed Nov 26 16:22:02 CST 2025
;; MSG SIZE rcvd: 166
```

Create a Pod in the cluster, access the domain name, and observe load balancing

```bash
# Verify access from a Pod inside the cluster
[root@master nfs-cli]# kubectl run -it centos --image=centos:7 --image-pull-policy=IfNotPresent
If you don't see a command prompt, try pressing enter.
[root@centos /]# curl http://nginx-svc.default.svc.cluster.local.
<h1>222.cloud<h1>
[root@centos /]# curl http://nginx-svc.default.svc.cluster.local.
<h1>222.cloud<h1>
[root@centos /]# curl http://nginx-svc.default.svc.cluster.local.
<h1>333.cloud<h1>
[root@centos /]# curl http://nginx-svc.default.svc.cluster.local.
<h1>222.cloud<h1>
[root@centos /]# curl http://nginx-svc.default.svc.cluster.local.
<h1>333.cloud<h1>
[root@centos /]#
# Above: requests to the Service name are spread across both Pods
# Below: access one specific Pod through its own DNS name
[root@centos /]#
[root@centos /]# curl http://nginx-class-0.nginx-svc.default.svc.cluster.local.
<h1>222.cloud<h1>
[root@centos /]# curl http://nginx-class-0.nginx-svc.default.svc.cluster.local.
<h1>222.cloud<h1>
[root@centos /]# curl http://nginx-class-0.nginx-svc.default.svc.cluster.local.
<h1>222.cloud<h1>
[root@centos /]# curl http://nginx-class-0.nginx-svc.default.svc.cluster.local.
<h1>222.cloud<h1>
[root@centos /]# exit
[root@master nfs-cli]# kubectl get pods
NAME READY STATUS RESTARTS AGE
centos 1/1 Running 1 (30s ago) 15m
nfs-client-provisioner-54b9cb8bf9-4fn9k 1/1 Running 2 (28m ago) 18h
nginx-class-0 1/1 Running 1 (17h ago) 17h
nginx-class-1 1/1 Running 1 (17h ago) 17h
# DNS-based distribution: the headless Service name resolves to every Pod IP (the two A records above).
# CoreDNS may rotate the order of those records (for example through its loadbalance plugin),
# so repeated requests reach different Pods. As the output shows, this is not strict round-robin.
# Stable Pod DNS names: each StatefulSet Pod keeps a fixed DNS name even when the Pod is re-created.
# The IP may change, but CoreDNS updates the record automatically. This is a key property for stateful services.
```

Process summary

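Goal: allocate NFS storage automatically in the K8s cluster, and deploy a stateful Nginx application with persistent data, stable network identities and load-balanced access.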

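Core components

- NFS server: the cluster's shared storage backend, which keeps data persistent;

- Dynamic provisioning components (StorageClass + RBAC + nfs-client-provisioner): allocate storage automatically, with no manually created PVs;

- StatefulSet: manages the stateful Nginx, with ordered Pod deployment and per-Pod data isolation;

- Headless Service: provides stable DNS names and spreads access across the Pods.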

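Key steps and configuration

1. NFS storage deployment (foundation)

- Disable the firewall / SELinux and create the shared directory /data/nfs;

- Configure the export rule: /data/nfs *(rw,no_root_squash,sync);

- Install nfs-utils on the Node machines so that the cluster can reach the NFS server.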
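If the export step is re-run by hand, duplicate lines can accumulate in /etc/exports. Below is a minimal sketch of an idempotent version. It assumes it runs as root on the NFS server, and the function name ensure_export is hypothetical. The exportfs -rv and showmount -e commands in the comments are the standard nfs-utils tools for re-exporting and listing shares.

```bash
# Hypothetical helper: append an export rule only if the exact line is not already present.
ensure_export() {
  local exports_file=$1 rule=$2
  touch "$exports_file"
  # -x matches whole lines, -F treats the rule literally (it contains '*' and parentheses)
  if ! grep -qxF -- "$rule" "$exports_file"; then
    printf '%s\n' "$rule" >> "$exports_file"
  fi
}

# On the NFS server (as root):
#   mkdir -p /data/nfs
#   ensure_export /etc/exports '/data/nfs *(rw,no_root_squash,sync)'
#   exportfs -rv              # re-export everything listed in /etc/exports
#   showmount -e localhost    # list the active exports
```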

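2. Dynamic storage provisioning (core automation)

- StorageClass: defines the storage policy (provisioner name, reclaim policy Delete);

- RBAC: grants the provisioner access to the API (create the ServiceAccount and the role bindings);

- nfs-client-provisioner: watches PVC requests, then automatically creates a subdirectory on NFS, generates a PV and binds it to the PVC.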
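The provisioner binds claims asynchronously, so a PVC (and the Pod that uses it) can stay Pending for a moment; nginx-class-1 was briefly Pending in the earlier output. Below is a minimal sketch of a polling helper that waits until a command prints an expected value such as Bound. The function name and the retry values are hypothetical. The kubectl query in the comment uses standard jsonpath output.

```bash
# Hypothetical helper: run a command up to <tries> times, <delay> seconds apart,
# and succeed as soon as its output equals <want>.
wait_for_phase() {
  local want=$1 tries=$2 delay=$3
  shift 3
  local i=1 out
  while [ "$i" -le "$tries" ]; do
    out=$("$@" 2>/dev/null)
    if [ "$out" = "$want" ]; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Example against this cluster (30 tries, 2 s apart):
#   wait_for_phase Bound 30 2 kubectl get pvc www-nginx-class-1 -o jsonpath='{.status.phase}'
```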

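3. Stateful Nginx deployment

- Headless Service: clusterIP: None, which gives each Pod a fixed DNS name (pod-name.service-name.namespace.svc.cluster.local);

- StatefulSet:

  - linked to the headless Service, with volumeClaimTemplates that trigger dynamic provisioning (1Gi of dedicated storage per Pod);

  - the container mounts /usr/share/nginx/html on its dedicated storage, which isolates each Pod's data.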

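4. Access verification

- Resolve the headless Service name: the answer contains the IPs of all Pods;

- In-cluster access: requests to the Service name are spread across the Pods, and each Pod can also be reached directly through its own fixed DNS name.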
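A handful of manual curl calls gives only a rough picture of the distribution; tallying the responses shows it more clearly. This is a minimal sketch. The function name count_responses is hypothetical, and it simply runs any command N times and counts the distinct outputs. The split it reports depends on how the DNS answers are ordered for each lookup, so an uneven result is expected.

```bash
# Hypothetical helper: run a command N times and count how often each distinct output appears.
count_responses() {
  local n=$1
  shift
  local i=0
  while [ "$i" -lt "$n" ]; do
    "$@"
    i=$((i + 1))
  done | sort | uniq -c
}

# Inside the centos test Pod:
#   count_responses 20 curl -s http://nginx-svc.default.svc.cluster.local.
```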

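Core value

- Storage automation: no manually created PVs, which lowers operational cost;

- Data safety: NFS provides persistent storage, so data survives Pod re-creation;

- Stable networking: fixed DNS names remove the problem of changing Pod IPs;

- Load distribution: DNS spreads requests across the Pods inside the cluster.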

Canary release update

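When a StatefulSet's Pod template changes, a RollingUpdate proceeds in the same order as Pod termination (from the highest ordinal to the lowest) and updates one Pod at a time.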

A StatefulSet can implement a canary update with the partition parameter, which controls which Pods the StatefulSet controller updates.
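The partition rule can be modeled in a few lines. With N replicas and partition p, Pods with ordinal ≥ p are updated, highest ordinal first, and Pods below p keep the old revision. The sketch below only prints those ordinals, and partition_targets is a hypothetical name. The commented kubectl patch line shows the usual way to set the partition on a real StatefulSet.

```bash
# Hypothetical helper: list the ordinals a RollingUpdate with this partition will update,
# in update order (highest ordinal first). It models the rule only; it does not touch the cluster.
partition_targets() {
  local replicas=$1 partition=$2
  local i=$((replicas - 1))
  while [ "$i" -ge "$partition" ]; do
    echo "$i"
    i=$((i - 1))
  done
}

# Canary on this section's 2-replica StatefulSet: only nginx-class-1 gets the new version.
#   kubectl patch statefulset nginx-class -p '{"spec":{"updateStrategy":{"type":"RollingUpdate","rollingUpdate":{"partition":1}}}}'
```

With partition 1 and 2 replicas, only ordinal 1 is listed. Lowering the partition to 0 afterwards rolls the update out to nginx-class-0 as well.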

Example:

bash

# Check the StatefulSet status
[root@master nfs-cli]# kubectl get sts
NAME READY AGE
nginx-class 2/2 17h
# View the original configuration
[root@master nfs-cli]# kubectl get sts nginx-class -o wide
NAME READY AGE CONTAINERS IMAGES
nginx-class 2/2 18h c1 nginx:1.26-alpine
[root@master nfs-cli]# kubectl get sts nginx-class -o yaml
apiVersion: apps/v1
kind: StatefulSet
......
  updateStrategy:
    rollingUpdate:
      partition: 0      # before the update
    type: RollingUpdate
  volumeClaimTemplates:
  - apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      creationTimestamp: null
      name: www
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
      storageClassName: nfs-client
      volumeMode: Filesystem
    status:
      phase: Pending
status:
  availableReplicas: 2
  collisionCount: 0
  currentReplicas: 2
  currentRevision: nginx-class-746fb98798
  observedGeneration: 1
  readyReplicas: 2
  replicas: 2
  updateRevision: nginx-class-746fb98798
  updatedReplicas: 2
# partition is the "partition threshold" of a StatefulSet rolling update. The rule is:
# Pods with ordinal ≥ partition: updated (they run the new image version);