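Series:

Decoding audio with FFmpeg and playing it with AudioTrack/OpenSL

FFmpeg decoding with OpenSL and ANativeWindow playback for audio-video synchronization

The previous three articles covered two topics. The first was decoding with FFmpeg and playing audio on its own with OpenSL and AudioTrack. The second was decoding with FFmpeg and playing video on its own with ANativeWindow and an OpenGL texture. This article combines that audio and video playback code to implement "FFmpeg decoding with OpenSL and ANativeWindow playback for audio-video synchronization".

FFmpeg decoding with OpenSL and ANativeWindow playback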

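I. How audio-video synchronization works:

Audio-video synchronization (also called "lip sync") is a core problem in multimedia playback, streaming, and real-time communication. At its heart, it reconciles the audio and video playback clocks, which do not agree with each other.

1. The core problem: clock drift:

Audio and video data are processed separately during capture, encoding, transmission, and decoding. At playback, however, they must be presented with the correct timing relationship.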

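- The audio clock and the video clock are driven by different hardware (sound card, GPU/display) and software clocks, and their frequencies differ slightly (clock drift).

- Even if playback starts in sync, these small differences accumulate over seconds or minutes into a noticeable offset (audio ahead of video, or video ahead of audio).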
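A quick calculation shows how fast drift adds up. This is a sketch: the function name `accumulatedDriftSeconds` is hypothetical, and the 50 ppm rate mismatch in the usage note is an illustrative assumption, not a measured figure for any device.

```cpp
// Offset (in seconds) accumulated between two clocks whose rates differ by `ppm`
// parts per million, after `elapsedSeconds` of playback.
double accumulatedDriftSeconds(double elapsedSeconds, double ppm) {
    return elapsedSeconds * ppm * 1e-6;
}
```

For example, `accumulatedDriftSeconds(600.0, 50.0)` gives about 0.03 s. A 50 ppm mismatch alone would leave audio and video roughly 30 ms apart after ten minutes, and the gap keeps growing for as long as playback continues.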

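2. Basic synchronization strategies:

The key is to pick a single "master clock" and make the other stream follow it. There are three main strategies:

Audio master clock:

The audio playback time serves as the system's master clock, and the video playback speed is adjusted dynamically to match it. Audio is the usual choice for three reasons:

The human ear is more sensitive to audio discontinuities. Slight stutters, jitter, or speed changes (such as a pitch shift) are very unpleasant.

The human eye is comparatively tolerant of small irregularities in video and of occasional skipped frames.

The audio sample rate (e.g. 44.1 kHz) is itself a very precise clock source.

How the audio master clock is implemented: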

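- Audio plays at its own natural pace;

- The video player continually compares the presentation timestamp (PTS) of the current video frame with the current audio playback position (the master clock time);

- It acts on the result of the comparison:

  - Video behind (PTS < audio clock): drop (skip) frames to catch up with the audio.

  - Video ahead (PTS > audio clock): keep showing the current frame, or delay the next one, until the audio catches up.

  - In sync: play normally.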
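The three-way decision above can be sketched as a pure function. This is a minimal illustration that assumes timestamps have already been converted to seconds. The names `SyncAction` and `decideVideoAction`, and the 40 ms default tolerance, are hypothetical choices; they are not taken from the article's player, whose `syncVideo()` uses different thresholds.

```cpp
// Possible actions for the video renderer when audio is the master clock.
enum class SyncAction { Drop, Wait, Show };

// Decide what to do with a video frame whose PTS is `videoPts` (seconds), given the
// current audio clock `audioClock` (seconds). `threshold` is the tolerated offset;
// the 40 ms default is an illustrative assumption.
SyncAction decideVideoAction(double videoPts, double audioClock, double threshold = 0.04) {
    double diff = videoPts - audioClock;              // > 0: video ahead, < 0: video behind
    if (diff < -threshold) return SyncAction::Drop;   // too late: skip it to catch up
    if (diff > threshold) return SyncAction::Wait;    // too early: hold until audio catches up
    return SyncAction::Show;                          // close enough: present it now
}
```

In a player, "Wait" is usually implemented by sleeping for roughly `diff` and then showing the frame, while "Drop" releases the frame without rendering it.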

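Video master clock:

The video playback time serves as the master clock, and the audio playback speed is adjusted dynamically (by resampling) to match it.

**Use cases:** scenarios that demand strict video continuity (e.g. professional video editing, slideshows); streams where audio quality matters little, or that have no audio at all.

Drawback: changing the audio speed requires resampling. This can alter the pitch, hurts the listening experience if done poorly, and costs more computation.

External clock:

An independent, more precise system clock or external time source (such as the Network Time Protocol, NTP) serves as the master clock. Both audio and video synchronize to it.

Use cases: synchronizing multiple streams (e.g. several cameras and microphones); distributed systems (e.g. multi-site video conferencing); broadcast TV systems.

3. Key technical components:

Whichever strategy is used, it relies on the following key techniques:

Timestamp system:

This is the foundation of synchronization. During encoding, every audio and video frame is assigned timestamps.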

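- PTS: presentation timestamp. It tells the player "when this frame should be presented";

- DTS: decoding timestamp (mainly relevant for video with B-frames). It tells the decoder "when this frame should be decoded". For synchronization we mainly use the PTS.

- Timestamps are counted in units of a time base on a continuous, monotonically increasing clock. The time in seconds is the timestamp multiplied by the time base.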

Synchronization logic and feedback control:

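- Measure: continuously compute the difference between the audio and video PTS (the sync offset).

- Decide: based on the size and sign of the offset, choose an adjustment (drop frames, wait, or speed up).

- Act: apply the adjustment by controlling the video display queue or by resampling the audio.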
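The "measure" step is often smoothed before the "decide" step, so that one noisy reading does not trigger a drop or a wait by itself. This is a minimal sketch that assumes an exponential moving average. The class `OffsetFilter` and the coefficient in the usage note are illustrative assumptions, not part of the article's code.

```cpp
// Smooths the measured audio/video offset with an exponential moving average.
// `alpha` close to 1 means heavier smoothing (slower reaction).
class OffsetFilter {
public:
    explicit OffsetFilter(double alpha) : alpha_(alpha) {}

    // Feed one raw measurement (seconds) and get the smoothed offset back.
    double update(double rawOffset) {
        if (!initialized_) {
            average_ = rawOffset;  // the first sample seeds the average
            initialized_ = true;
        } else {
            average_ = alpha_ * average_ + (1.0 - alpha_) * rawOffset;
        }
        return average_;
    }

private:
    double alpha_;
    double average_ = 0.0;
    bool initialized_ = false;
};
```

With `OffsetFilter f(0.9)`, feeding 0.1 and then 0.0 returns 0.1 and then 0.09. Each new reading moves the average only 10% of the way, so the decision logic reacts to a sustained offset rather than to a single spike.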

二、音视频同步代码的实现:

在原有的基础上,粗略的实现音视频同步。

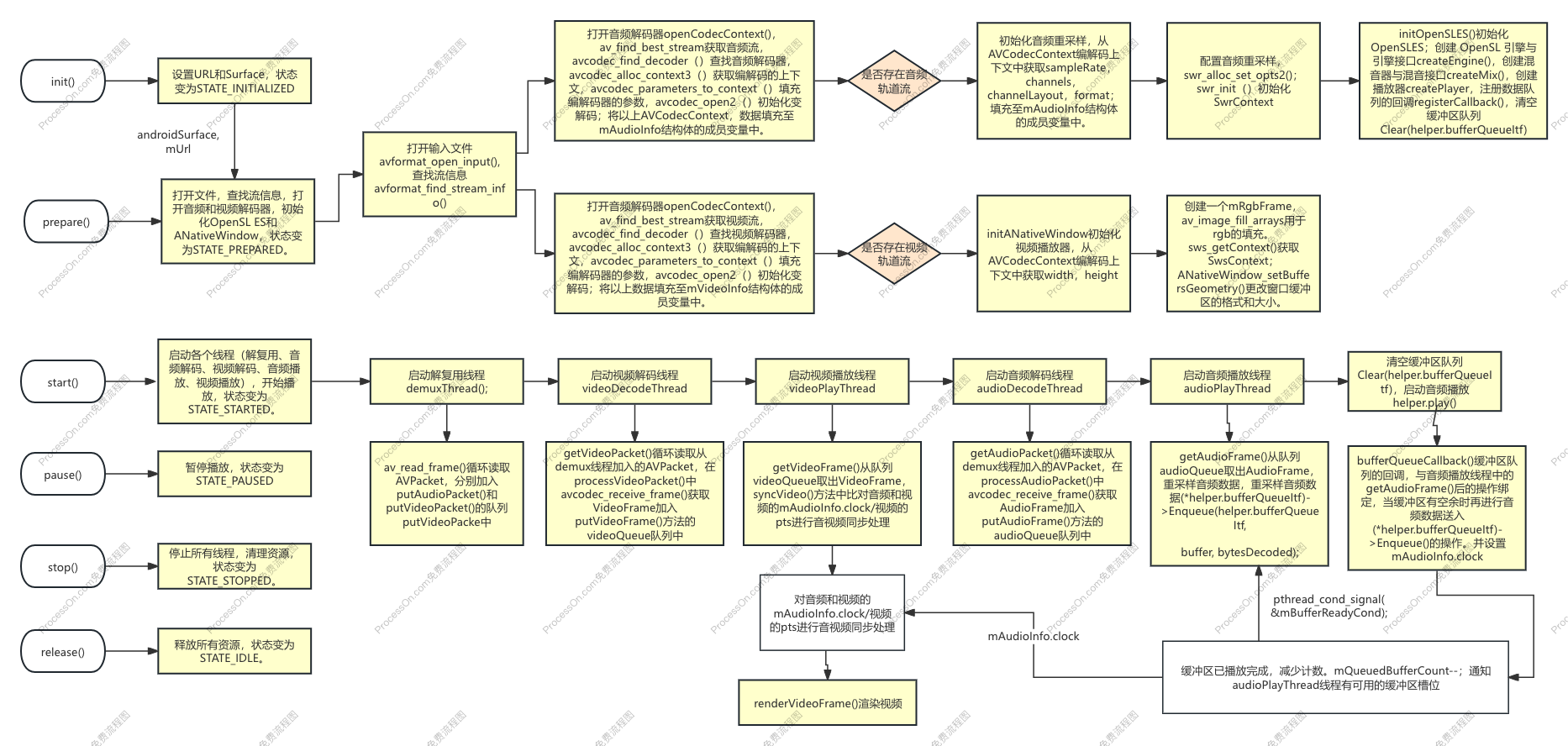

1.代码流程草图:

2.struct结构体设计:

我们把音频和视频需要的参数包裹在结构体中,便于参数的管理。

- 音频帧结构体AudioFrame:AVFrame和pts;

- 视频帧结构体VideoFrame:AVFrame和pts;

- 音频信息AudioInfo:streamIndex用于记录音频轨道的index;codecContext编解码器的上下文;swrContext音频重采样的上下文;timeBase音频的时间基,来计算pts;音频参数sampleRate/channels/channelLayout/format;音频队列audioQueue用于解码线程和播放线程的AudioFrame的传递;互斥锁audioMutex保证线程间数据同步安全;信号量audioCond控制线程间的队列大小;maxAudioFrames最大帧量保证队列不过大影响内存的使用;时钟clock在音视频同步时启到基准的作用;时钟互斥锁clockMutex保证时钟数据线程间的数据安全。

- 视频信息VideoInfo:streamIndex用于记录视频轨道的index;codecContext编解码器的上下文;timeBase音频的时间基,来计算pts;视频参数widith和height;视频队列videoQueue

用于解码线程和播放线程的VideoFrame的传递;互斥锁videoMutex保证线程间数据同步安全;信号量videoCond控制线程间的队列大小;maxVideoFrames最大帧量保证队列不过大影响内存的使用;时钟clock在音视频同步时启到基准的作用;时钟互斥锁clockMutex保证时钟数据线程间的数据安全。

The code is as follows:

```cpp
// Audio frame
struct AudioFrame {
    AVFrame *frame;
    double pts;     // presentation time in seconds
    int64_t pos;

    AudioFrame() : frame(nullptr), pts(0), pos(0) {}

    ~AudioFrame() {
        if (frame) {
            av_frame_free(&frame);
        }
    }
};

// Video frame
struct VideoFrame {
    AVFrame *frame;
    double pts;     // presentation time in seconds
    int64_t pos;

    VideoFrame() : frame(nullptr), pts(0), pos(0) {}

    ~VideoFrame() {
        if (frame) {
            av_frame_free(&frame);
        }
    }
};

// Audio info
struct AudioInfo {
    int streamIndex;
    AVCodecContext *codecContext;
    SwrContext *swrContext;
    AVRational timeBase;

    // Audio parameters
    int sampleRate;
    int channels;
    int bytesPerSample;
    AVChannelLayout *channelLayout;
    enum AVSampleFormat format;

    // Audio frame queue
    std::queue<AudioFrame *> audioQueue;
    pthread_mutex_t audioMutex;
    pthread_cond_t audioCond;
    int maxAudioFrames;

    // Clock
    double clock;
    pthread_mutex_t clockMutex;

    AudioInfo() : streamIndex(-1), codecContext(nullptr), swrContext(nullptr),
                  sampleRate(0), channels(0), bytesPerSample(0),
                  channelLayout(nullptr), format(AV_SAMPLE_FMT_NONE),
                  maxAudioFrames(100), clock(0) {
        pthread_mutex_init(&audioMutex, nullptr);
        pthread_cond_init(&audioCond, nullptr);
        pthread_mutex_init(&clockMutex, nullptr);
    }

    ~AudioInfo() {
        pthread_mutex_destroy(&audioMutex);
        pthread_cond_destroy(&audioCond);
        pthread_mutex_destroy(&clockMutex);
    }
};

// Video info
struct VideoInfo {
    int streamIndex;
    AVCodecContext *codecContext;
    AVRational timeBase;
    int width;
    int height;

    // Video frame queue
    std::queue<VideoFrame *> videoQueue;
    pthread_mutex_t videoMutex;
    pthread_cond_t videoCond;
    int maxVideoFrames;

    // Clock
    double clock;
    pthread_mutex_t clockMutex;

    VideoInfo() : streamIndex(-1), codecContext(nullptr),
                  width(0), height(0), maxVideoFrames(30), clock(0) {
        pthread_mutex_init(&videoMutex, nullptr);
        pthread_cond_init(&videoCond, nullptr);
        pthread_mutex_init(&clockMutex, nullptr);
    }

    ~VideoInfo() {
        pthread_mutex_destroy(&videoMutex);
        pthread_cond_destroy(&videoCond);
        pthread_mutex_destroy(&clockMutex);
    }
};
```

3. init() method:

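Takes the path of the file to decode and the surface used for video playback.

The code is as follows:

```cpp
bool FFMediaPlayer::init(const char *url, jobject surface) {
    // Keep a global reference so the surface stays valid after this JNI call returns
    androidSurface = mEnv->NewGlobalRef(surface);
    mUrl = strdup(url);
    mState = STATE_INITIALIZED;
    return true;
}
```

4. prepare() method: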

Opens the file, reads the stream information, opens the audio and video decoders, initializes OpenSL ES and ANativeWindow, and moves the state to STATE_PREPARED.

It opens the input file with avformat_open_input() and reads the stream information with avformat_find_stream_info(). Then it sets up each decoder:

- Open the audio decoder with openCodecContext(). av_find_best_stream() selects the audio stream, avcodec_find_decoder() finds the audio decoder, and avcodec_alloc_context3() allocates the codec context. avcodec_parameters_to_context() then fills in the codec parameters, and avcodec_open2() initializes the decoder. The resulting AVCodecContext and related data are stored in the mAudioInfo struct;

- Open the video decoder with openCodecContext() the same way: av_find_best_stream() selects the video stream, avcodec_find_decoder() finds the video decoder, avcodec_alloc_context3() allocates the codec context, avcodec_parameters_to_context() fills in the codec parameters, and avcodec_open2() initializes the decoder. The results are stored in the mVideoInfo struct.

The code is as follows:

```cpp
bool FFMediaPlayer::prepare() {
    LOGI("prepare()");
    playAudioInfo = "prepare() \n";
    PostStatusMessage(playAudioInfo.c_str());

    if (mState != STATE_INITIALIZED) {
        LOGE("prepare called in invalid state: %d", mState);
        return false;
    }
    mState = STATE_PREPARING;

    // Open the input file
    if (avformat_open_input(&mFormatContext, mUrl, nullptr, nullptr) < 0) {
        LOGE("Could not open input file: %s", mUrl);
        mState = STATE_ERROR;
        return false;
    }

    // Read the stream information
    if (avformat_find_stream_info(mFormatContext, nullptr) < 0) {
        LOGE("Could not find stream information");
        mState = STATE_ERROR;
        return false;
    }

    // Open the audio decoder
    if (!openCodecContext(&mAudioInfo.streamIndex, &mAudioInfo.codecContext,
                          mFormatContext, AVMEDIA_TYPE_AUDIO)) {
        // Not fatal: the file may simply have no audio stream
        LOGE("Could not open audio codec");
    } else {
        // Source audio parameters
        mAudioInfo.sampleRate = mAudioInfo.codecContext->sample_rate;
        mAudioInfo.channelLayout = &mAudioInfo.codecContext->ch_layout;
        mAudioInfo.format = mAudioInfo.codecContext->sample_fmt;

        // Resample to interleaved S16 stereo at the source sample rate
        AVChannelLayout out_ch_layout = AV_CHANNEL_LAYOUT_STEREO;
        // OpenSL, the PCM buffers and the audio clock all work on the resampled output,
        // so store the output channel count (codecContext->channels is deprecated
        // in favor of ch_layout.nb_channels)
        mAudioInfo.channels = out_ch_layout.nb_channels;
        if (swr_alloc_set_opts2(&mAudioInfo.swrContext,
                                &out_ch_layout, AV_SAMPLE_FMT_S16, mAudioInfo.sampleRate,
                                mAudioInfo.channelLayout, mAudioInfo.format,
                                mAudioInfo.sampleRate, 0, nullptr) < 0 ||
            swr_init(mAudioInfo.swrContext) < 0) {
            LOGE("Could not initialize swresample");
            mState = STATE_ERROR;
            return false;
        }

        // Initialize OpenSL ES
        if (!initOpenSLES()) {
            LOGE("Could not initialize OpenSL ES");
            mState = STATE_ERROR;
            return false;
        }
    }

    // Open the video decoder
    if (!openCodecContext(&mVideoInfo.streamIndex, &mVideoInfo.codecContext,
                          mFormatContext, AVMEDIA_TYPE_VIDEO)) {
        LOGE("Could not open video codec");
        mState = STATE_ERROR;
        return false;
    }

    // Initialize video rendering
    if (!initANativeWindow()) {
        LOGE("Could not initialize ANativeWindow");
        mState = STATE_ERROR;
        return false;
    }

    mDuration = mFormatContext->duration / AV_TIME_BASE;
    mState = STATE_PREPARED;
    LOGI("Media player prepared successfully");
    return true;
}
```

openCodecContext() method:

The code is as follows:

```cpp
// OpenSL ES initialization and other helper methods

// Find the best stream of the given type and open a decoder for it
bool FFMediaPlayer::openCodecContext(int *streamIndex, AVCodecContext **codecContext,
                                     AVFormatContext *formatContext, enum AVMediaType type) {
    int streamIdx = av_find_best_stream(formatContext, type, -1, -1, nullptr, 0);
    if (streamIdx < 0) {
        LOGE("Could not find %s stream", av_get_media_type_string(type));
        return false;
    }

    AVStream *stream = formatContext->streams[streamIdx];
    const AVCodec *codec = avcodec_find_decoder(stream->codecpar->codec_id);
    if (!codec) {
        LOGE("Could not find %s codec", av_get_media_type_string(type));
        return false;
    }

    *codecContext = avcodec_alloc_context3(codec);
    if (!*codecContext) {
        LOGE("Could not allocate %s codec context", av_get_media_type_string(type));
        return false;
    }

    if (avcodec_parameters_to_context(*codecContext, stream->codecpar) < 0) {
        LOGE("Could not copy %s codec parameters", av_get_media_type_string(type));
        avcodec_free_context(codecContext);  // frees it and sets *codecContext to nullptr
        return false;
    }

    if (avcodec_open2(*codecContext, codec, nullptr) < 0) {
        LOGE("Could not open %s codec", av_get_media_type_string(type));
        avcodec_free_context(codecContext);  // start() treats a null context as "no stream"
        return false;
    }

    *streamIndex = streamIdx;

    // Record the stream's time base; it converts frame timestamps to seconds
    if (type == AVMEDIA_TYPE_AUDIO) {
        mAudioInfo.timeBase = stream->time_base;
    } else if (type == AVMEDIA_TYPE_VIDEO) {
        mVideoInfo.timeBase = stream->time_base;
    }
    return true;
}
```

initOpenSLES() method:

initOpenSLES() initializes OpenSL ES in five steps:

- createEngine() creates the OpenSL engine and its interface;
- createMix() creates the output mix and its interface;
- createPlayer() creates the player;
- registerCallback() registers the buffer-queue callback;
- Clear(helper.bufferQueueItf) empties the buffer queue.

The code is as follows:

```cpp
bool FFMediaPlayer::initOpenSLES() {
    // Create the OpenSL engine and engine interface
    SLresult result = helper.createEngine();
    if (!helper.isSuccess(result)) {
        LOGE("create engine error: %d", result);
        PostStatusMessage("Create engine error\n");
        return false;
    }
    PostStatusMessage("OpenSL createEngine Success \n");

    // Create the output mix and its interface
    result = helper.createMix();
    if (!helper.isSuccess(result)) {
        LOGE("create mix error: %d", result);
        PostStatusMessage("Create mix error \n");
        return false;
    }
    PostStatusMessage("OpenSL createMix Success \n");

    // The player is fed the resampled output (S16, mAudioInfo.channels channels at
    // mAudioInfo.sampleRate); OpenSL ES takes the sample rate in milliHertz
    result = helper.createPlayer(mAudioInfo.channels, mAudioInfo.sampleRate * 1000,
                                 SL_PCMSAMPLEFORMAT_FIXED_16,
                                 mAudioInfo.channels == 2
                                 ? (SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT)
                                 : SL_SPEAKER_FRONT_CENTER);
    if (!helper.isSuccess(result)) {
        LOGE("create player error: %d", result);
        PostStatusMessage("Create player error\n");
        return false;
    }
    PostStatusMessage("OpenSL createPlayer Success \n");

    // Register the buffer-queue callback (playback itself is started in start())
    result = helper.registerCallback(bufferQueueCallback, this);
    if (!helper.isSuccess(result)) {
        LOGE("register callback error: %d", result);
        PostStatusMessage("Register callback error \n");
        return false;
    }
    PostStatusMessage("OpenSL registerCallback Success \n");

    // Clear the buffer queue
    result = (*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);
    if (result != SL_RESULT_SUCCESS) {
        LOGE("Failed to clear buffer queue: %d, error: %s", result, getSLErrorString(result));
        playAudioInfo = "Failed to clear buffer queue:" + string(getSLErrorString(result));
        PostStatusMessage(playAudioInfo.c_str());
        return false;
    }

    LOGI("OpenSL ES initialized successfully: %d Hz, %d channels",
         mAudioInfo.sampleRate, mAudioInfo.channels);
    playAudioInfo = "OpenSL ES initialized ,Hz:" + to_string(mAudioInfo.sampleRate) +
                    ",channels:" + to_string(mAudioInfo.channels) + "\n";
    PostStatusMessage(playAudioInfo.c_str());
    return true;
}
```

initANativeWindow() method:

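initANativeWindow() initializes the video output:

- it reads width and height from the AVCodecContext;
- it creates mRgbFrame and attaches an RGBA buffer to it with av_image_fill_arrays();
- it obtains an SwsContext with sws_getContext();
- it calls ANativeWindow_setBuffersGeometry() to set the format and size of the window buffers.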
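ANativeWindow_setBuffersGeometry() fixes the buffer size in pixels. A locked ANativeWindow_Buffer can still have a stride (in pixels) larger than its width, so every RGBA frame may have to be copied into it row by row. Below is a self-contained sketch of that copy, assuming RGBA_8888 (4 bytes per pixel). `copyRgbaRows` is a hypothetical helper. Its stride parameters mirror `AVFrame::linesize[0]` (in bytes) and `ANativeWindow_Buffer::stride` (in pixels).

```cpp
#include <algorithm>
#include <cstdint>
#include <cstring>

// Copy an RGBA image (4 bytes per pixel) row by row between buffers whose strides differ.
// srcStrideBytes:  bytes per source row (e.g. AVFrame::linesize[0]).
// dstStridePixels: pixels per destination row (ANativeWindow_Buffer::stride is in pixels).
void copyRgbaRows(uint8_t *dst, int dstStridePixels,
                  const uint8_t *src, int srcStrideBytes,
                  int width, int height) {
    const int dstStrideBytes = dstStridePixels * 4;
    // Copy only the visible pixels of each row; row padding is skipped, so neither
    // buffer is overrun when the two strides differ.
    const int rowBytes = std::min(width * 4, std::min(srcStrideBytes, dstStrideBytes));
    for (int y = 0; y < height; ++y) {
        std::memcpy(dst + y * dstStrideBytes, src + y * srcStrideBytes, rowBytes);
    }
}
```

When the two strides happen to be equal, a single `memcpy` of `stride * height` bytes does the same job, which is the fast path the renderer takes.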

The code is as follows:

```cpp
bool FFMediaPlayer::initANativeWindow() {
    // Video dimensions
    mVideoInfo.width = mVideoInfo.codecContext->width;
    mVideoInfo.height = mVideoInfo.codecContext->height;

    mNativeWindow = ANativeWindow_fromSurface(mEnv, androidSurface);
    if (!mNativeWindow) {
        LOGE("Couldn't get native window from surface");
        return false;
    }

    mRgbFrame = av_frame_alloc();
    if (!mRgbFrame) {
        LOGE("Could not allocate RGB frame");
        ANativeWindow_release(mNativeWindow);
        mNativeWindow = nullptr;
        return false;
    }

    // Buffer for one RGBA frame (alignment 1, so each row is exactly width * 4 bytes)
    int bufferSize = av_image_get_buffer_size(AV_PIX_FMT_RGBA, mVideoInfo.width,
                                              mVideoInfo.height, 1);
    mOutbuffer = (uint8_t *) av_malloc(bufferSize * sizeof(uint8_t));
    if (!mOutbuffer) {
        LOGE("Could not allocate output buffer");
        av_frame_free(&mRgbFrame);
        ANativeWindow_release(mNativeWindow);
        mNativeWindow = nullptr;
        return false;
    }

    // Converter from the decoder's pixel format to RGBA
    mSwsContext = sws_getContext(mVideoInfo.width, mVideoInfo.height,
                                 mVideoInfo.codecContext->pix_fmt,
                                 mVideoInfo.width, mVideoInfo.height, AV_PIX_FMT_RGBA,
                                 SWS_BICUBIC, nullptr, nullptr, nullptr);
    if (!mSwsContext) {
        LOGE("Could not create sws context");
        av_free(mOutbuffer);
        mOutbuffer = nullptr;
        av_frame_free(&mRgbFrame);
        ANativeWindow_release(mNativeWindow);
        mNativeWindow = nullptr;
        return false;
    }

    // Window buffers: same size as the video, RGBA_8888
    if (ANativeWindow_setBuffersGeometry(mNativeWindow, mVideoInfo.width, mVideoInfo.height,
                                         WINDOW_FORMAT_RGBA_8888) < 0) {
        LOGE("Couldn't set buffers geometry");
        cleanupANativeWindow();
        return false;
    }

    // Point mRgbFrame->data / linesize at mOutbuffer
    if (av_image_fill_arrays(mRgbFrame->data, mRgbFrame->linesize,
                             mOutbuffer, AV_PIX_FMT_RGBA,
                             mVideoInfo.width, mVideoInfo.height, 1) < 0) {
        LOGE("Could not fill image arrays");
        cleanupANativeWindow();
        return false;
    }

    LOGI("ANativeWindow initialization successful");
    playAudioInfo = "ANativeWindow initialization successful \n";
    PostStatusMessage(playAudioInfo.c_str());
    return true;
}
```

5. start() method:

Clears the OpenSL buffer queue with (*helper.bufferQueueItf)->Clear(helper.bufferQueueItf). It then starts the threads (demuxing, audio decoding, video decoding, audio playback, video playback), begins playback, and sets the state to STATE_STARTED.

The code is as follows:

```cpp
bool FFMediaPlayer::start() {
    if (mState == STATE_PAUSED) {
        // The worker threads keep running while paused (they idle on mPause), so resuming
        // only clears the flag; creating them again would start a second set of threads
        mPause = false;
        if (mAudioInfo.codecContext) {
            helper.play();
        }
        mState = STATE_STARTED;
        return true;
    }

    if (mState != STATE_PREPARED) {
        LOGE("start called in invalid state: %d", mState);
        return false;
    }

    mExit = false;
    mPause = false;

    // Reset the buffer-queue bookkeeping
    mQueuedBufferCount = 0;
    mCurrentBuffer = 0;

    // Clear the OpenSL buffer queue
    if (helper.bufferQueueItf) {
        (*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);
    }

    // Start the demuxing thread
    if (pthread_create(&mDemuxThread, nullptr, demuxThread, this) != 0) {
        LOGE("Could not create demux thread");
        return false;
    }

    // Start the video decoding thread
    if (pthread_create(&mVideoDecodeThread, nullptr, videoDecodeThread, this) != 0) {
        LOGE("Could not create video decode thread");
        return false;
    }

    // Start the video playback thread
    if (pthread_create(&mVideoPlayThread, nullptr, videoPlayThread, this) != 0) {
        LOGE("Could not create video play thread");
        return false;
    }

    if (mAudioInfo.codecContext) {
        // Start the audio decoding thread
        if (pthread_create(&mAudioDecodeThread, nullptr, audioDecodeThread, this) != 0) {
            LOGE("Could not create audio decode thread");
            return false;
        }

        // Start the audio playback thread
        if (pthread_create(&mAudioPlayThread, nullptr, audioPlayThread, this) != 0) {
            LOGE("Could not create audio play thread");
            return false;
        }

        // Start OpenSL playback
        SLresult result = helper.play();
        if (result != SL_RESULT_SUCCESS) {
            LOGE("Failed to set play state: %d, error: %s", result, getSLErrorString(result));
            playAudioInfo = "Failed to set play state: " + string(getSLErrorString(result));
            PostStatusMessage(playAudioInfo.c_str());
        } else {
            LOGI("helper.play() successfully");
        }
    }

    mState = STATE_STARTED;
    return true;
}
```

demuxThread (demuxing thread):

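av_read_frame() reads AVPackets in a loop. Each packet goes to putAudioPacket() or putVideoPacket(), which push it onto the mAudioPackets or mVideoPackets queue. To keep memory use bounded, demuxing pauses once the combined size of the two queues exceeds mMaxPackets. demux() then blocks in pthread_cond_wait(&mPacketCond, &mPacketMutex). It stays blocked until getAudioPacket() or getVideoPacket() removes a packet and signals mPacketCond, and then the loop continues demuxing.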
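The demux thread and the two decoding threads form a classic bounded producer/consumer pipeline. Below is one way to express such a queue with the C++ standard library. This is a sketch under stated assumptions: `BoundedQueue` is a hypothetical helper, not the article's ThreadSafeQueue.h, and it bounds each queue separately instead of bounding the combined size of the two queues as demux() does.

```cpp
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <utility>

// Bounded producer/consumer queue with an abort flag.
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity) {}

    // Blocks while the queue is full. Returns false if the queue was aborted.
    bool push(T value) {
        std::unique_lock<std::mutex> lock(mutex_);
        // The predicate form of wait() re-checks the condition after every wakeup,
        // so spurious wakeups and wakeups meant for other waiters are harmless.
        notFull_.wait(lock, [this] { return queue_.size() < capacity_ || aborted_; });
        if (aborted_) return false;
        queue_.push(std::move(value));
        notEmpty_.notify_one();
        return true;
    }

    // Blocks while the queue is empty. Returns false once aborted and fully drained.
    bool pop(T &out) {
        std::unique_lock<std::mutex> lock(mutex_);
        notEmpty_.wait(lock, [this] { return !queue_.empty() || aborted_; });
        if (queue_.empty()) return false;
        out = std::move(queue_.front());
        queue_.pop();
        notFull_.notify_one();
        return true;
    }

    // Wakes every blocked producer and consumer so their threads can exit.
    void abort() {
        std::lock_guard<std::mutex> lock(mutex_);
        aborted_ = true;
        notFull_.notify_all();
        notEmpty_.notify_all();
    }

private:
    std::size_t capacity_;
    std::queue<T> queue_;
    std::mutex mutex_;
    std::condition_variable notFull_;   // producers wait here
    std::condition_variable notEmpty_;  // consumers wait here
    bool aborted_ = false;
};
```

Using two condition variables means a "not full" wakeup can only reach producers and a "not empty" wakeup can only reach consumers. With one shared condition variable such as mPacketCond, a single signal may wake a thread that is waiting for something else. The abort flag gives shutdown a way to release every blocked thread.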

```cpp
void *FFMediaPlayer::demuxThread(void *arg) {
    FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);
    player->demux();
    return nullptr;
}

void FFMediaPlayer::demux() {
    AVPacket *packet = av_packet_alloc();
    while (!mExit) {
        if (mPause) {
            usleep(10000); // sleep 10 ms while paused
            continue;
        }

        // Bound the packet queues so memory use does not grow without limit.
        // A while loop (not if) re-checks the condition after spurious or unrelated wakeups.
        pthread_mutex_lock(&mPacketMutex);
        while (mAudioPackets.size() + mVideoPackets.size() > mMaxPackets && !mExit) {
            pthread_cond_wait(&mPacketCond, &mPacketMutex);
        }
        pthread_mutex_unlock(&mPacketMutex);
        if (mExit) {
            break;
        }

        if (av_read_frame(mFormatContext, packet) < 0) {
            break; // end of file or read error
        }

        if (packet->stream_index == mAudioInfo.streamIndex) {
            putAudioPacket(packet);
            packet = av_packet_alloc(); // allocate a new packet; the old one is now queued
        } else if (packet->stream_index == mVideoInfo.streamIndex) {
            putVideoPacket(packet);
            packet = av_packet_alloc(); // allocate a new packet; the old one is now queued
        } else {
            av_packet_unref(packet);
        }
    }
    av_packet_free(&packet);

    // Signal end of stream with an empty packet (data == nullptr, size == 0)
    if (mAudioInfo.codecContext) {
        AVPacket *endPacket = av_packet_alloc();
        endPacket->data = nullptr;
        endPacket->size = 0;
        putAudioPacket(endPacket);
    }
    AVPacket *endPacket = av_packet_alloc();
    endPacket->data = nullptr;
    endPacket->size = 0;
    putVideoPacket(endPacket);
}

// demux() and both decoding threads wait on the same mPacketCond, so every change to the
// packet queues wakes all waiters; pthread_cond_signal() could wake the wrong thread
void FFMediaPlayer::putAudioPacket(AVPacket *packet) {
    pthread_mutex_lock(&mPacketMutex);
    mAudioPackets.push(packet);
    pthread_cond_broadcast(&mPacketCond);
    pthread_mutex_unlock(&mPacketMutex);
}

void FFMediaPlayer::putVideoPacket(AVPacket *packet) {
    pthread_mutex_lock(&mPacketMutex);
    mVideoPackets.push(packet);
    pthread_cond_broadcast(&mPacketCond);
    pthread_mutex_unlock(&mPacketMutex);
}

AVPacket *FFMediaPlayer::getAudioPacket() {
    pthread_mutex_lock(&mPacketMutex);
    while (mAudioPackets.empty() && !mExit) {
        pthread_cond_wait(&mPacketCond, &mPacketMutex);
    }
    if (mExit) {
        pthread_mutex_unlock(&mPacketMutex);
        return nullptr;
    }
    AVPacket *packet = mAudioPackets.front();
    mAudioPackets.pop();
    pthread_cond_broadcast(&mPacketCond); // a slot is free: wake demux()
    pthread_mutex_unlock(&mPacketMutex);
    return packet;
}

AVPacket *FFMediaPlayer::getVideoPacket() {
    pthread_mutex_lock(&mPacketMutex);
    while (mVideoPackets.empty() && !mExit) {
        pthread_cond_wait(&mPacketCond, &mPacketMutex);
    }
    if (mExit) {
        pthread_mutex_unlock(&mPacketMutex);
        return nullptr;
    }
    AVPacket *packet = mVideoPackets.front();
    mVideoPackets.pop();
    pthread_cond_broadcast(&mPacketCond); // a slot is free: wake demux()
    pthread_mutex_unlock(&mPacketMutex);
    return packet;
}
```

videoDecodeThread (video decoding thread):

getVideoPacket() takes the AVPackets queued by the demux thread in a loop. processVideoPacket() obtains decoded frames with avcodec_receive_frame(), wraps each one as a VideoFrame, and pushes it onto videoQueue via putVideoFrame().

putVideoFrame() is bounded by mVideoInfo.maxVideoFrames. When mVideoInfo.videoQueue.size() reaches mVideoInfo.maxVideoFrames, it blocks in pthread_cond_wait(). It waits there until the video rendering thread takes a frame with getVideoFrame() and signals the condition variable mVideoInfo.videoCond.

The code is as follows:

```cpp
void *FFMediaPlayer::videoDecodeThread(void *arg) {
    FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);
    player->videoDecode();
    return nullptr;
}

void FFMediaPlayer::videoDecode() {
    while (!mExit) {
        if (mPause) {
            usleep(10000);
            continue;
        }

        AVPacket *packet = getVideoPacket();
        if (!packet) {
            continue; // nullptr is only returned when exiting
        }

        // End-of-stream packet from demux()
        if (packet->data == nullptr && packet->size == 0) {
            av_packet_free(&packet);
            break;
        }

        processVideoPacket(packet);
        av_packet_free(&packet);
    }

    // Flush the decoder: an empty packet switches it to draining mode so buffered frames come out
    AVPacket *flushPacket = av_packet_alloc();
    flushPacket->data = nullptr;
    flushPacket->size = 0;
    processVideoPacket(flushPacket);
    av_packet_free(&flushPacket);
}

void FFMediaPlayer::processVideoPacket(AVPacket *packet) {
    AVFrame *frame = av_frame_alloc();
    int ret = avcodec_send_packet(mVideoInfo.codecContext, packet);
    if (ret < 0) {
        av_frame_free(&frame);
        return;
    }

    // One packet can produce zero or more frames
    while (ret >= 0) {
        ret = avcodec_receive_frame(mVideoInfo.codecContext, frame);
        if (ret < 0) {
            // AVERROR(EAGAIN): needs more input; AVERROR_EOF: fully drained; otherwise an error
            break;
        }
        VideoFrame *vframe = decodeVideoFrame(frame);
        if (vframe) {
            putVideoFrame(vframe);
        }
        av_frame_unref(frame);
    }
    av_frame_free(&frame);
}

VideoFrame *FFMediaPlayer::decodeVideoFrame(AVFrame *frame) {
    if (!frame) {
        return nullptr;
    }

    // Compute the PTS in seconds; check AV_NOPTS_VALUE on the integer before converting
    double pts = 0;
    int64_t timestamp = frame->best_effort_timestamp;
    if (timestamp != AV_NOPTS_VALUE) {
        pts = timestamp * av_q2d(mVideoInfo.timeBase);
        setVideoClock(pts);
    }

    // Take a new reference to the frame: the caller unrefs `frame` after this returns
    AVFrame *frameCopy = av_frame_alloc();
    if (!frameCopy || av_frame_ref(frameCopy, frame) < 0) {
        av_frame_free(&frameCopy);
        return nullptr;
    }

    VideoFrame *vframe = new VideoFrame();
    vframe->frame = frameCopy;
    vframe->pts = pts;
    return vframe;
}

void FFMediaPlayer::putVideoFrame(VideoFrame *frame) {
    pthread_mutex_lock(&mVideoInfo.videoMutex);
    while (mVideoInfo.videoQueue.size() >= mVideoInfo.maxVideoFrames && !mExit) {
        pthread_cond_wait(&mVideoInfo.videoCond, &mVideoInfo.videoMutex);
    }
    if (!mExit) {
        mVideoInfo.videoQueue.push(frame);
    } else {
        delete frame; // shutting down: nobody will consume this frame
    }
    pthread_cond_signal(&mVideoInfo.videoCond);
    pthread_mutex_unlock(&mVideoInfo.videoMutex);
}

VideoFrame *FFMediaPlayer::getVideoFrame() {
    pthread_mutex_lock(&mVideoInfo.videoMutex);
    if (mVideoInfo.videoQueue.empty()) {
        pthread_mutex_unlock(&mVideoInfo.videoMutex);
        return nullptr;
    }
    VideoFrame *frame = mVideoInfo.videoQueue.front();
    mVideoInfo.videoQueue.pop();
    pthread_cond_signal(&mVideoInfo.videoCond); // a slot is free: wake the decoder
    pthread_mutex_unlock(&mVideoInfo.videoMutex);
    return frame;
}
```

videoPlayThread (video rendering thread):

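getVideoFrame() takes a VideoFrame from videoQueue. syncVideo() then compares the audio clock (mAudioInfo.clock) with the frame's pts to keep audio and video in sync. This rough version only delays frames that arrive early. Late frames are shown immediately rather than dropped. If the offset exceeds maxFrameDelay (100 ms) in either direction, the frame is also shown without waiting.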
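syncVideo() is only as good as the audio clock it reads. One common way to estimate what is actually being heard works from the buffer queue. Take the end time of the newest PCM handed to OpenSL, then subtract the duration of the data still waiting in the queue.

The sketch below makes three assumptions. The output is interleaved S16. The caller reports every successful Enqueue() and every buffer-queue callback. In the player these calls come from different threads, so they would need a lock such as clockMutex. `AudioClock` and its methods are hypothetical and are not the article's getAudioClock()/mAudioInfo.clock.

```cpp
// Audio master clock estimate for interleaved S16 PCM fed to a buffer queue.
class AudioClock {
public:
    AudioClock(int sampleRate, int channels)
        : bytesPerSecond_(static_cast<double>(sampleRate) * channels * 2) {}

    // Call after a successful Enqueue(): `ptsSeconds` is the PTS of the first sample
    // in the buffer and `bytes` is its size.
    void onEnqueue(double ptsSeconds, int bytes) {
        writtenEnd_ = ptsSeconds + bytes / bytesPerSecond_;  // end time of the newest queued audio
        queuedBytes_ += bytes;
    }

    // Call from the buffer-queue callback with the size of the buffer that finished playing.
    void onBufferPlayed(int bytes) { queuedBytes_ -= bytes; }

    // Approximate time of the audio currently being heard.
    double now() const { return writtenEnd_ - queuedBytes_ / bytesPerSecond_; }

private:
    double bytesPerSecond_;
    double writtenEnd_ = 0.0;
    long queuedBytes_ = 0;
};
```

The estimate's resolution is one buffer, because the partly played head buffer still counts as queued. With 4096-byte buffers at 44.1 kHz stereo S16, that is roughly 23 ms.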

The code is as follows:

```cpp
void *FFMediaPlayer::videoPlayThread(void *arg) {
    FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);
    player->videoPlay();
    return nullptr;
}

void FFMediaPlayer::videoPlay() {
    while (!mExit) {
        if (mPause) {
            usleep(10000);
            continue;
        }

        // Periodically log performance information
        logPerformanceStats();

        VideoFrame *vframe = getVideoFrame();
        if (!vframe) {
            usleep(10000);
            continue;
        }

        // Audio-video synchronization
        syncVideo(vframe->pts);

        // Render the frame
        renderVideoFrame(vframe);
        delete vframe;
    }
}

void FFMediaPlayer::syncVideo(double pts) {
    double audioTime = getAudioClock();
    double videoTime = pts;
    double diff = videoTime - audioTime; // > 0: video ahead of audio, < 0: video behind

    // Synchronization thresholds
    const double syncThreshold = 0.01; // 10 ms sync threshold
    const double maxFrameDelay = 0.1;  // maximum delay of 100 ms

    if (fabs(diff) < maxFrameDelay) {
        if (diff <= -syncThreshold) {
            // Video is behind: show immediately
            return;
        } else if (diff >= syncThreshold) {
            // Video is ahead: wait before showing
            int delay = (int) (diff * 1000000); // seconds to microseconds
            usleep(delay);
        }
    }
    // Offset too large (or within the threshold): show directly
}

void FFMediaPlayer::renderVideoFrame(VideoFrame *vframe) {
    if (!mNativeWindow || !vframe) {
        return;
    }

    ANativeWindow_Buffer windowBuffer;

    // Color space conversion to RGBA (writes into mOutbuffer via mRgbFrame)
    sws_scale(mSwsContext, vframe->frame->data, vframe->frame->linesize, 0,
              mVideoInfo.height, mRgbFrame->data, mRgbFrame->linesize);

    // Lock the window buffer
    if (ANativeWindow_lock(mNativeWindow, &windowBuffer, nullptr) < 0) {
        LOGE("Cannot lock window");
        return;
    }

    // Copy into the window, taking the strides into account
    uint8_t *dst = static_cast<uint8_t *>(windowBuffer.bits);
    int dstStride = windowBuffer.stride * 4; // destination stride in bytes (stride is in pixels)
    int srcStride = mRgbFrame->linesize[0];  // source stride in bytes

    if (dstStride == srcStride) {
        // Strides match: copy the whole image at once
        memcpy(dst, mOutbuffer, srcStride * mVideoInfo.height);
    } else {
        // Strides differ: copy row by row, only the visible width, so no buffer is overrun
        int rowBytes = mVideoInfo.width * 4;
        for (int h = 0; h < mVideoInfo.height; h++) {
            memcpy(dst + h * dstStride, mOutbuffer + h * srcStride, rowBytes);
        }
    }

    ANativeWindow_unlockAndPost(mNativeWindow);
}
```

audioDecodeThread (audio decoding thread):

getAudioPacket() takes the AVPackets queued by the demux thread in a loop. processAudioPacket() obtains decoded frames with avcodec_receive_frame() and pushes each resulting AudioFrame onto audioQueue via putAudioFrame().

putAudioFrame() is bounded by mAudioInfo.maxAudioFrames. When mAudioInfo.audioQueue.size() reaches mAudioInfo.maxAudioFrames, it blocks in pthread_cond_wait(). It waits there until the audio playback thread takes a frame with getAudioFrame() and signals mAudioInfo.audioCond.

The code is as follows:

```cpp
void *FFMediaPlayer::audioDecodeThread(void *arg) {
    FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);
    player->audioDecode();
    return nullptr;
}

void FFMediaPlayer::audioDecode() {
    while (!mExit) {
        if (mPause) {
            usleep(10000);
            continue;
        }

        AVPacket *packet = getAudioPacket();
        if (!packet) {
            continue; // nullptr is only returned when exiting
        }

        // End-of-stream packet from demux()
        if (packet->data == nullptr && packet->size == 0) {
            av_packet_free(&packet);
            break;
        }

        processAudioPacket(packet);
        av_packet_free(&packet);
    }

    // Flush the decoder so buffered frames come out
    AVPacket *flushPacket = av_packet_alloc();
    flushPacket->data = nullptr;
    flushPacket->size = 0;
    processAudioPacket(flushPacket);
    av_packet_free(&flushPacket);
}

void FFMediaPlayer::processAudioPacket(AVPacket *packet) {
    AVFrame *frame = av_frame_alloc();
    int ret = avcodec_send_packet(mAudioInfo.codecContext, packet);
    if (ret < 0) {
        av_frame_free(&frame);
        return;
    }

    while (ret >= 0) {
        ret = avcodec_receive_frame(mAudioInfo.codecContext, frame);
        if (ret < 0) {
            // AVERROR(EAGAIN): needs more input; AVERROR_EOF: fully drained; otherwise an error
            break;
        }
        AudioFrame *aframe = decodeAudioFrame(frame);
        if (aframe) {
            putAudioFrame(aframe);
        }
        av_frame_unref(frame);
    }
    av_frame_free(&frame);
}

void FFMediaPlayer::putAudioFrame(AudioFrame *frame) {
    pthread_mutex_lock(&mAudioInfo.audioMutex);
    while (mAudioInfo.audioQueue.size() >= mAudioInfo.maxAudioFrames && !mExit) {
        pthread_cond_wait(&mAudioInfo.audioCond, &mAudioInfo.audioMutex);
    }
    if (!mExit) {
        mAudioInfo.audioQueue.push(frame);
    } else {
        delete frame; // shutting down: nobody will consume this frame
    }
    pthread_cond_signal(&mAudioInfo.audioCond);
    pthread_mutex_unlock(&mAudioInfo.audioMutex);
}

AudioFrame *FFMediaPlayer::getAudioFrame() {
    pthread_mutex_lock(&mAudioInfo.audioMutex);
    if (mAudioInfo.audioQueue.empty()) {
        pthread_mutex_unlock(&mAudioInfo.audioMutex);
        return nullptr;
    }
    AudioFrame *frame = mAudioInfo.audioQueue.front();
    mAudioInfo.audioQueue.pop();
    pthread_cond_signal(&mAudioInfo.audioCond); // a slot is free: wake the decoder
    pthread_mutex_unlock(&mAudioInfo.audioMutex);
    return frame;
}
```

audioPlayThread (audio playback thread):

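getAudioFrame() takes an AudioFrame from audioQueue, and the audio data is resampled. The result is queued for playback with (*helper.bufferQueueItf)->Enqueue(helper.bufferQueueItf, buffer, bytesDecoded).

Note ⚠️: the OpenSL buffer queue has a fixed number of slots, and bufferQueueCallback() is invoked each time a queued buffer finishes playing. Suppose the playback thread keeps calling (*helper.bufferQueueItf)->Enqueue(helper.bufferQueueItf, buffer, bytesDecoded) without checking whether a slot is free. Enqueue then fails with an error (SL_RESULT_BUFFER_INSUFFICIENT), and the player can crash. For this reason audioPlay() counts the queued buffers in mQueuedBufferCount and waits on mBufferReadyCond while all NUM_BUFFERS slots are in use.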
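Because the queue has a fixed number of slots, its size also fixes how much audio is buffered ahead of the speaker. The small arithmetic sketch below uses the header's BUFFER_SIZE = 4096 and NUM_BUFFERS = 4, with stereo S16 output at 44.1 kHz. The 44.1 kHz rate is an assumed example, since the real value comes from the decoder, and the helper names are hypothetical.

```cpp
// Bytes per second of interleaved signed 16-bit PCM.
constexpr double pcmBytesPerSecond(int sampleRate, int channels) {
    return static_cast<double>(sampleRate) * channels * 2;
}

// Playback duration of one buffer of `bufferBytes` bytes.
constexpr double bufferDurationSeconds(int bufferBytes, int sampleRate, int channels) {
    return bufferBytes / pcmBytesPerSecond(sampleRate, channels);
}

// Output samples per channel that fit in one buffer (the out_count given to swr_convert()).
constexpr int maxSamplesPerBuffer(int bufferBytes, int channels) {
    return bufferBytes / (channels * 2);
}
```

With those values, `maxSamplesPerBuffer(4096, 2)` is 1024 samples per channel. One buffer lasts about 23.2 ms, so four queued buffers cover about 92.9 ms. The same per-buffer duration is what processBufferQueue() subtracts from the audio clock each time a buffer finishes.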

The code is as follows:

```cpp
void *FFMediaPlayer::audioPlayThread(void *arg) {
    FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);
    player->audioPlay();
    return nullptr;
}

void FFMediaPlayer::audioPlay() {
    // Playback itself is driven by the OpenSL ES buffer-queue callback; this thread keeps
    // the buffer queue fed without ever queuing more than NUM_BUFFERS buffers at once
    LOGW("audioPlay===============");
    while (!mExit) {
        if (mPause) {
            usleep(10000);
            continue;
        }

        // Wait until a buffer slot is free; processBufferQueue() signals mBufferReadyCond
        pthread_mutex_lock(&mBufferMutex);
        while (mQueuedBufferCount >= NUM_BUFFERS && !mExit) {
            pthread_cond_wait(&mBufferReadyCond, &mBufferMutex);
        }
        if (mExit) {
            pthread_mutex_unlock(&mBufferMutex);
            break;
        }

        uint8_t *buffer = mBuffers[mCurrentBuffer];
        int bytesToEnqueue = 0;

        // Take one frame from the audio queue
        AudioFrame *aframe = getAudioFrame();
        if (aframe) {
            // Resample into the current buffer; output is interleaved S16 (2 bytes per sample),
            // and maxSamples guarantees the result never exceeds BUFFER_SIZE
            uint8_t *outBuffer = buffer;
            int maxSamples = BUFFER_SIZE / (mAudioInfo.channels * 2);
            int outSamples = swr_convert(mAudioInfo.swrContext, &outBuffer, maxSamples,
                                         (const uint8_t **) aframe->frame->data,
                                         aframe->frame->nb_samples);
            if (outSamples > 0) {
                bytesToEnqueue = outSamples * mAudioInfo.channels * 2;
            } else if (outSamples < 0) {
                LOGE("swr_convert failed: %d", outSamples);
            }
            delete aframe;
        } else {
            // Audio queue empty: play a buffer of silence so the buffer queue keeps running
            memset(buffer, 0, BUFFER_SIZE);
            bytesToEnqueue = BUFFER_SIZE;
        }

        bool enqueueFailed = false;
        if (bytesToEnqueue > 0) {
            // Add the buffer to the playback queue
            SLresult result = (*helper.bufferQueueItf)->Enqueue(helper.bufferQueueItf,
                                                                buffer, bytesToEnqueue);
            if (result == SL_RESULT_SUCCESS) {
                // Enqueued: one more slot in use, move on to the next buffer
                mQueuedBufferCount++;
                mCurrentBuffer = (mCurrentBuffer + 1) % NUM_BUFFERS;
            } else {
                // Drop this buffer but keep the loop running (a break here would end
                // audio playback for good)
                LOGE("Failed to enqueue buffer: %d, error: %s",
                     result, getSLErrorString(result));
                enqueueFailed = true;
            }
        }
        pthread_mutex_unlock(&mBufferMutex);

        // Back off after a failure (e.g. SL_RESULT_BUFFER_INSUFFICIENT); otherwise just
        // sleep briefly to reduce CPU usage
        usleep(enqueueFailed ? 10000 : 1000);
    }
}

void FFMediaPlayer::bufferQueueCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {
    // Called by OpenSL ES each time a queued buffer has finished playing
    FFMediaPlayer *player = static_cast<FFMediaPlayer *>(context);
    player->processBufferQueue();
}

void FFMediaPlayer::processBufferQueue() {
    pthread_mutex_lock(&mBufferMutex);
    // A buffer has finished playing: one slot is free again
    if (mQueuedBufferCount > 0) {
        mQueuedBufferCount--;

        // Update the audio clock (subtract the duration of the buffer that was played)
        if (mAudioInfo.codecContext && mAudioInfo.sampleRate > 0) {
            double buffer_duration = (double) BUFFER_SIZE /
                                     (mAudioInfo.channels * 2 * mAudioInfo.sampleRate);
            pthread_mutex_lock(&mAudioInfo.clockMutex);
            mAudioInfo.clock -= buffer_duration;
            pthread_mutex_unlock(&mAudioInfo.clockMutex);
        }
    }
    // Tell the playback thread that a buffer slot is available
    pthread_cond_signal(&mBufferReadyCond);
    pthread_mutex_unlock(&mBufferMutex);
}
```

III. Complete code:

FFMediaPlayer.h:

The code is as follows:

cpp

//

// Created by wangyao on 2025/12/13.

//

#ifndef FFMPEGPRACTICE_FFMEDIAPLAYER_H

#define FFMPEGPRACTICE_FFMEDIAPLAYER_H

#include <pthread.h>

#include <atomic>

#include <string>

#include <queue>

#include "ThreadSafeQueue.h"

#include "BasicCommon.h"

#include "jni.h"

#include "android/native_window.h"

#include "android/native_window_jni.h"

#include "OpenslHelper.h"

#include <SLES/OpenSLES.h>

#include <SLES/OpenSLES_Android.h>

// Audio frame

struct AudioFrame {

AVFrame *frame;

double pts;

int64_t pos;

AudioFrame() : frame(nullptr), pts(0), pos(0) {}

~AudioFrame() {

if (frame) {

av_frame_free(&frame);

}

}

};

// Video frame

struct VideoFrame {

AVFrame *frame;

double pts;

int64_t pos;

VideoFrame() : frame(nullptr), pts(0), pos(0) {}

~VideoFrame() {

if (frame) {

av_frame_free(&frame);

}

}

};

// Audio info

struct AudioInfo {

int streamIndex;

AVCodecContext *codecContext;

SwrContext *swrContext;

AVRational timeBase;

// Audio parameters

int sampleRate;

int channels;

int bytesPerSample;

AVChannelLayout *channelLayout;

enum AVSampleFormat format;

// Audio frame queue

std::queue<AudioFrame *> audioQueue;

pthread_mutex_t audioMutex;

pthread_cond_t audioCond;

int maxAudioFrames;

// Clock

double clock;

pthread_mutex_t clockMutex;

AudioInfo() : streamIndex(-1), codecContext(nullptr), swrContext(nullptr),

sampleRate(0), channels(0), bytesPerSample(0),

channelLayout(nullptr), format(AV_SAMPLE_FMT_NONE),

maxAudioFrames(100), clock(0) {

pthread_mutex_init(&audioMutex, nullptr);

pthread_cond_init(&audioCond, nullptr);

pthread_mutex_init(&clockMutex, nullptr);

}

~AudioInfo() {

pthread_mutex_destroy(&audioMutex);

pthread_cond_destroy(&audioCond);

pthread_mutex_destroy(&clockMutex);

}

};

// Video info

struct VideoInfo {

int streamIndex;

AVCodecContext *codecContext;

AVRational timeBase;

int width;

int height;

// Video frame queue

std::queue<VideoFrame *> videoQueue;

pthread_mutex_t videoMutex;

pthread_cond_t videoCond;

int maxVideoFrames;

// Clock

double clock;

pthread_mutex_t clockMutex;

VideoInfo() : streamIndex(-1), codecContext(nullptr),

width(0), height(0), maxVideoFrames(30), clock(0) {

pthread_mutex_init(&videoMutex, nullptr);

pthread_cond_init(&videoCond, nullptr);

pthread_mutex_init(&clockMutex, nullptr);

}

~VideoInfo() {

pthread_mutex_destroy(&videoMutex);

pthread_cond_destroy(&videoCond);

pthread_mutex_destroy(&clockMutex);

}

};

// Performance monitoring statistics

struct PerformanceStats {

int64_t demuxPackets;

int64_t audioFrames;

int64_t videoFrames;

int64_t audioQueueSize;

int64_t videoQueueSize;

double audioClock;

double videoClock;

double syncDiff;

};

class FFMediaPlayer {

public:

FFMediaPlayer(JNIEnv *env, jobject thiz);

~FFMediaPlayer();

bool init(const char *url, jobject surface);

bool prepare();

bool start();

bool pause();

bool stop();

void release();

int getState() const { return mState; }

int64_t getDuration() const { return mDuration; }

int64_t getCurrentPosition() const;

private:

enum State {

STATE_IDLE,

STATE_INITIALIZED,

STATE_PREPARING,

STATE_PREPARED,

STATE_STARTED,

STATE_PAUSED,

STATE_STOPPED,

STATE_ERROR

};

string playAudioInfo;

JavaVM *mJavaVm = nullptr;

jobject mJavaObj = nullptr;

JNIEnv *mEnv = nullptr;

OpenslHelper helper;

// OpenSL ES buffer-queue callback

void processBufferQueue();

static void bufferQueueCallback(SLAndroidSimpleBufferQueueItf bq, void *context);

// OpenSL ES callback (alternative wrapper)

void audioCallback(SLAndroidSimpleBufferQueueItf bufferQueue);

static void audioCallbackWrapper(SLAndroidSimpleBufferQueueItf bufferQueue, void *context);

// PCM buffers handed to the OpenSL ES buffer queue

static const int NUM_BUFFERS = 4;

static const int BUFFER_SIZE = 4096;

uint8_t *mBuffers[NUM_BUFFERS];

int mCurrentBuffer;

std::atomic<int> mQueuedBufferCount; // number of buffers currently enqueued to OpenSL ES

pthread_mutex_t mBufferMutex;

pthread_cond_t mBufferReadyCond;

int count;

jobject androidSurface = NULL;

// ANativeWindow rendering

ANativeWindow *mNativeWindow = nullptr;

SwsContext *mSwsContext = nullptr;

AVFrame *mRgbFrame = nullptr;

uint8_t *mOutbuffer = nullptr;

// Member variables

State mState;

char *mUrl;

AVFormatContext *mFormatContext;

AudioInfo mAudioInfo;

VideoInfo mVideoInfo;

// Threads

pthread_t mDemuxThread;

pthread_t mAudioDecodeThread;

pthread_t mVideoDecodeThread;

pthread_t mAudioPlayThread;

pthread_t mVideoPlayThread;

// Control flags

std::atomic<bool> mExit;

std::atomic<bool> mPause;

int64_t mDuration;

// Synchronization primitives for the player state

pthread_mutex_t mStateMutex;

pthread_cond_t mStateCond;

// Compressed packet queues (demux -> decode)

std::queue<AVPacket *> mAudioPackets;

std::queue<AVPacket *> mVideoPackets;

pthread_mutex_t mPacketMutex;

pthread_cond_t mPacketCond;

int mMaxPackets;

// Private helpers

bool openCodecContext(int *streamIndex, AVCodecContext **codecContext,

AVFormatContext *formatContext, enum AVMediaType type);

bool initOpenSLES();

bool initANativeWindow();

void cleanupANativeWindow();

bool initVideoRenderer();

// Thread entry points

static void *demuxThread(void *arg);

static void *audioDecodeThread(void *arg);

static void *videoDecodeThread(void *arg);

static void *audioPlayThread(void *arg);

static void *videoPlayThread(void *arg);

void demux();

void audioDecode();

void videoDecode();

void audioPlay();

void videoPlay();

// Packet and frame processing

void processAudioPacket(AVPacket *packet);

void processVideoPacket(AVPacket *packet);

AudioFrame *decodeAudioFrame(AVFrame *frame);

VideoFrame *decodeVideoFrame(AVFrame *frame);

void renderVideoFrame(VideoFrame *vframe);

// Queue operations

void putAudioPacket(AVPacket *packet);

void putVideoPacket(AVPacket *packet);

AVPacket *getAudioPacket();

AVPacket *getVideoPacket();

void clearAudioPackets();

void clearVideoPackets();

void putAudioFrame(AudioFrame *frame);

void putVideoFrame(VideoFrame *frame);

AudioFrame *getAudioFrame();

VideoFrame *getVideoFrame();

void clearAudioFrames();

void clearVideoFrames();

// Clocks and A/V sync

double getMasterClock();

double getAudioClock();

double getVideoClock();

void setAudioClock(double pts);

void setVideoClock(double pts);

void syncVideo(double pts);

int64_t getCurrentPosition();

const char *getSLErrorString(SLresult result);

JNIEnv *GetJNIEnv(bool *isAttach);

void PostStatusMessage(const char *msg);

void logPerformanceStats();

};

#endif //FFMPEGPRACTICE_FFMEDIAPLAYER_H

FFMediaPlayer.cpp:

The code is as follows:


//

// Created by wangyao on 2025/12/13.

//

#include "includes/FFMediaPlayer.h"

#include <unistd.h>

#include <cmath>   // fabs

#include <cstring> // memset, memcpy, strdup

FFMediaPlayer::FFMediaPlayer(JNIEnv *env, jobject thiz)

: mState(STATE_IDLE),

mUrl(nullptr),

mFormatContext(nullptr),

mExit(false),

mPause(false),

mDuration(0),

mMaxPackets(50) {

mEnv = env;

env->GetJavaVM(&mJavaVm);

mJavaObj = env->NewGlobalRef(thiz);

pthread_mutex_init(&mStateMutex, nullptr);

pthread_cond_init(&mStateCond, nullptr);

pthread_mutex_init(&mPacketMutex, nullptr);

pthread_cond_init(&mPacketCond, nullptr);

pthread_mutex_init(&mBufferMutex, nullptr);

pthread_cond_init(&mBufferReadyCond, nullptr);

// Allocate the OpenSL ES PCM buffers

for (int i = 0; i < NUM_BUFFERS; i++) {

mBuffers[i] = new uint8_t[BUFFER_SIZE];

memset(mBuffers[i], 0, BUFFER_SIZE); // start out as silence

}

}

FFMediaPlayer::~FFMediaPlayer() {

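// Stop the worker threads first: they may still call PostStatusMessage(), which uses mJavaObj

release();

if (mNativeWindow) {

ANativeWindow_release(mNativeWindow);

mNativeWindow = nullptr;

}

// androidSurface was created with NewGlobalRef() in init(), so it must be freed with DeleteGlobalRef()

if (androidSurface) {

mEnv->DeleteGlobalRef(androidSurface);

androidSurface = nullptr;

}

mEnv->DeleteGlobalRef(mJavaObj);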

pthread_mutex_destroy(&mStateMutex);

pthread_cond_destroy(&mStateCond);

pthread_mutex_destroy(&mPacketMutex);

pthread_cond_destroy(&mPacketCond);

pthread_mutex_destroy(&mBufferMutex);

pthread_cond_destroy(&mBufferReadyCond);

}

bool FFMediaPlayer::init(const char *url, jobject surface) {

androidSurface = mEnv->NewGlobalRef(surface);

mUrl = strdup(url);

mState = STATE_INITIALIZED;

return true;

}

bool FFMediaPlayer::prepare() {

LOGI("prepare()");

playAudioInfo =

"prepare() \n";

PostStatusMessage(playAudioInfo.c_str());

if (mState != STATE_INITIALIZED) {

LOGE("prepare called in invalid state: %d", mState);

return false;

}

mState = STATE_PREPARING;

// Open the input file

if (avformat_open_input(&mFormatContext, mUrl, nullptr, nullptr) < 0) {

LOGE("Could not open input file: %s", mUrl);

mState = STATE_ERROR;

return false;

}

// Read the stream information

if (avformat_find_stream_info(mFormatContext, nullptr) < 0) {

LOGE("Could not find stream information");

mState = STATE_ERROR;

return false;

}

// Open the audio decoder

if (!openCodecContext(&mAudioInfo.streamIndex,

&mAudioInfo.codecContext,

mFormatContext, AVMEDIA_TYPE_AUDIO)) {

LOGE("Could not open audio codec");

// Not fatal: the file may simply have no audio stream

} else {

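// Set up audio resampling

mAudioInfo.sampleRate = mAudioInfo.codecContext->sample_rate;

mAudioInfo.channelLayout = &mAudioInfo.codecContext->ch_layout;

mAudioInfo.format = mAudioInfo.codecContext->sample_fmt;

// Resample to interleaved S16 stereo at the source sample rate

AVChannelLayout out_ch_layout = AV_CHANNEL_LAYOUT_STEREO;

// channels describes the resampler OUTPUT that OpenSL ES plays and that audioPlay() uses for its byte math,
// so it must match out_ch_layout, not the source layout (AVCodecContext::channels is deprecated and removed in FFmpeg 7)

mAudioInfo.channels = out_ch_layout.nb_channels;

if (swr_alloc_set_opts2(&mAudioInfo.swrContext,

&out_ch_layout, AV_SAMPLE_FMT_S16, mAudioInfo.sampleRate,

mAudioInfo.channelLayout, mAudioInfo.format, mAudioInfo.sampleRate,

0, NULL) < 0) {

LOGE("Could not allocate swresample context");

mState = STATE_ERROR;

return false;

}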

if (!mAudioInfo.swrContext || swr_init(mAudioInfo.swrContext) < 0) {

LOGE("Could not initialize swresample");

mState = STATE_ERROR;

return false;

}

// Initialize OpenSL ES

if (!initOpenSLES()) {

LOGE("Could not initialize OpenSL ES");

mState = STATE_ERROR;

return false;

}

}

// Open the video decoder

if (!openCodecContext(&mVideoInfo.streamIndex,

&mVideoInfo.codecContext,

mFormatContext, AVMEDIA_TYPE_VIDEO)) {

LOGE("Could not open video codec");

mState = STATE_ERROR;

return false;

} else {

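// Initialize video rendering (ANativeWindow + swscale)

if (!initANativeWindow()) {

LOGE("Could not initialize ANativeWindow, video frames will not be rendered");

}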

}

mDuration = mFormatContext->duration / AV_TIME_BASE;

mState = STATE_PREPARED;

LOGI("Media player prepared successfully");

return true;

}

bool FFMediaPlayer::start() {

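if (mState == STATE_PAUSED) {

// Resume: the worker threads created by the first start() are still alive,
// so only clear the pause flag and restart OpenSL ES playback instead of creating new threads

mPause = false;

if (mAudioInfo.codecContext) {

helper.play();

}

mState = STATE_STARTED;

return true;

}

if (mState != STATE_PREPARED) {

LOGE("start called in invalid state: %d", mState);

return false;

}

mExit = false;

mPause = false;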

// Reset the buffer bookkeeping

mQueuedBufferCount = 0;

mCurrentBuffer = 0;

// Clear the OpenSL ES buffer queue

if (helper.bufferQueueItf) {

(*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);

}

// Start the demux thread

if (pthread_create(&mDemuxThread, nullptr, demuxThread, this) != 0) {

LOGE("Could not create demux thread");

return false;

}

// Start the video decode thread

if (pthread_create(&mVideoDecodeThread, nullptr, videoDecodeThread, this) != 0) {

LOGE("Could not create video decode thread");

return false;

}

// Start the video play (render) thread

if (pthread_create(&mVideoPlayThread, nullptr, videoPlayThread, this) != 0) {

LOGE("Could not create video play thread");

return false;

}

// Start the audio decode thread (only when there is an audio stream)

if (mAudioInfo.codecContext) {

if (pthread_create(&mAudioDecodeThread, nullptr, audioDecodeThread, this) != 0) {

LOGE("Could not create audio decode thread");

return false;

}

// Start the audio play thread

if (pthread_create(&mAudioPlayThread, nullptr, audioPlayThread, this) != 0) {

LOGE("Could not create audio play thread");

return false;

}

// Start OpenSL ES playback

SLresult result = helper.play();

if (result != SL_RESULT_SUCCESS) {

LOGE("Failed to set play state: %d, error: %s", result, getSLErrorString(result));

playAudioInfo = "Failed to set play state: " + string(getSLErrorString(result));

PostStatusMessage(playAudioInfo.c_str());

} else {

LOGI("helper.play() successfully");

}

}

mState = STATE_STARTED;

return true;

}

bool FFMediaPlayer::pause() {

if (mState != STATE_STARTED) {

LOGE("pause called in invalid state: %d", mState);

return false;

}

mPause = true;

SLresult result = helper.pause();

if (result != SL_RESULT_SUCCESS) {

LOGE("Failed to set play state: %d, error: %s", result, getSLErrorString(result));

playAudioInfo = "Failed to set play state: " + string(getSLErrorString(result));

PostStatusMessage(playAudioInfo.c_str());

}

mState = STATE_PAUSED;

return true;

}

bool FFMediaPlayer::stop() {

if (mState != STATE_STARTED && mState != STATE_PAUSED) {

LOGE("stop called in invalid state: %d", mState);

return false;

}

mExit = true;

mPause = false;

// After setting the exit flag, wake every thread that may be blocked on a condition variable

pthread_cond_broadcast(&mPacketCond);

pthread_cond_broadcast(&mAudioInfo.audioCond);

pthread_cond_broadcast(&mVideoInfo.videoCond);

pthread_cond_broadcast(&mBufferReadyCond);

// Give the threads a moment to notice the exit flag

usleep(100000);

// Broadcast again in case a thread started waiting in between

pthread_cond_broadcast(&mPacketCond);

pthread_cond_broadcast(&mAudioInfo.audioCond);

pthread_cond_broadcast(&mVideoInfo.videoCond);

pthread_cond_broadcast(&mBufferReadyCond);

if (mAudioInfo.codecContext) {

pthread_join(mAudioDecodeThread, nullptr);

pthread_join(mAudioPlayThread, nullptr);

}

pthread_join(mVideoDecodeThread, nullptr);

pthread_join(mVideoPlayThread, nullptr);

pthread_join(mDemuxThread, nullptr);

// Release the window only after the video play thread has exited; otherwise renderVideoFrame() could still be using it

cleanupANativeWindow();

// Drain the queues

clearAudioPackets();

clearVideoPackets();

clearAudioFrames();

clearVideoFrames();

if (helper.player) {

helper.stop();

}

// Clear the OpenSL ES buffer queue

if (helper.bufferQueueItf) {

(*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);

}

// Free the PCM buffers; OpenSL ES no longer references them

for (int i = 0; i < NUM_BUFFERS; i++) {

delete[] mBuffers[i];

mBuffers[i] = nullptr;

}

mState = STATE_STOPPED;

return true;

}

void FFMediaPlayer::release() {

if (mState == STATE_IDLE || mState == STATE_ERROR) {

return;

}

stop();

if (mAudioInfo.swrContext) {

swr_free(&mAudioInfo.swrContext);

}

// Free the FFmpeg resources

if (mAudioInfo.codecContext) {

avcodec_free_context(&mAudioInfo.codecContext);

}

if (mVideoInfo.codecContext) {

avcodec_free_context(&mVideoInfo.codecContext);

}

if (mFormatContext) {

avformat_close_input(&mFormatContext);

mFormatContext = nullptr;

}

if (mUrl) {

free(mUrl);

mUrl = nullptr;

}

mState = STATE_IDLE;

}

int64_t FFMediaPlayer::getCurrentPosition() {

return (int64_t) (getMasterClock() * 1000); // seconds -> milliseconds

}

// Thread entry points: forward to the member functions

void *FFMediaPlayer::demuxThread(void *arg) {

FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);

player->demux();

return nullptr;

}

void *FFMediaPlayer::audioDecodeThread(void *arg) {

FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);

player->audioDecode();

return nullptr;

}

void *FFMediaPlayer::videoDecodeThread(void *arg) {

FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);

player->videoDecode();

return nullptr;

}

void *FFMediaPlayer::audioPlayThread(void *arg) {

FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);

player->audioPlay();

return nullptr;

}

void *FFMediaPlayer::videoPlayThread(void *arg) {

FFMediaPlayer *player = static_cast<FFMediaPlayer *>(arg);

player->videoPlay();

return nullptr;

}

void FFMediaPlayer::demux() {

AVPacket *packet = av_packet_alloc();

while (!mExit) {

if (mPause) {

usleep(10000); // sleep 10 ms while paused

continue;

}

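// Bound the number of queued packets to limit memory usage

pthread_mutex_lock(&mPacketMutex);

// Wait in a loop: wake-ups can be spurious, and stop() must be able to end the wait through mExit

while (mAudioPackets.size() + mVideoPackets.size() > (size_t) mMaxPackets && !mExit) {

// LOGI("demux() Waiting for Packets slot, Packets: %d", mAudioPackets.size() + mVideoPackets.size());

pthread_cond_wait(&mPacketCond, &mPacketMutex);

}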

pthread_mutex_unlock(&mPacketMutex);

if (av_read_frame(mFormatContext, packet) < 0) {

break;

}

if (packet->stream_index == mAudioInfo.streamIndex) {

putAudioPacket(packet);

packet = av_packet_alloc(); // the queue now owns the old packet, so allocate a new one

} else if (packet->stream_index == mVideoInfo.streamIndex) {

putVideoPacket(packet);

packet = av_packet_alloc(); // the queue now owns the old packet, so allocate a new one

} else {

av_packet_unref(packet);

}

}

av_packet_free(&packet);

// Send an end-of-stream marker (an empty packet) to each decoder

if (mAudioInfo.codecContext) {

AVPacket *endPacket = av_packet_alloc();

endPacket->data = nullptr;

endPacket->size = 0;

putAudioPacket(endPacket);

}

AVPacket *endPacket = av_packet_alloc();

endPacket->data = nullptr;

endPacket->size = 0;

putVideoPacket(endPacket);

}

void FFMediaPlayer::audioDecode() {

while (!mExit) {

if (mPause) {

usleep(10000);

continue;

}

AVPacket *packet = getAudioPacket();

if (!packet) {

continue;

}

// End-of-stream marker from demux()

if (packet->data == nullptr && packet->size == 0) {

av_packet_free(&packet);

break;

}

processAudioPacket(packet);

av_packet_free(&packet);

}

// Drain the decoder: an empty packet makes it return the frames it still buffers

AVPacket *flushPacket = av_packet_alloc();

flushPacket->data = nullptr;

flushPacket->size = 0;

processAudioPacket(flushPacket);

av_packet_free(&flushPacket);

}

void FFMediaPlayer::videoDecode() {

while (!mExit) {

if (mPause) {

usleep(10000);

continue;

}

AVPacket *packet = getVideoPacket();

if (!packet) {

continue;

}

// End-of-stream marker from demux()

if (packet->data == nullptr && packet->size == 0) {

av_packet_free(&packet);

break;

}

processVideoPacket(packet);

av_packet_free(&packet);

}

// Drain the decoder: an empty packet makes it return the frames it still buffers

AVPacket *flushPacket = av_packet_alloc();

flushPacket->data = nullptr;

flushPacket->size = 0;

processVideoPacket(flushPacket);

av_packet_free(&flushPacket);

}

void FFMediaPlayer::audioPlay() {

// Playback itself is driven by the OpenSL ES buffer-queue callback (processBufferQueue());
// this thread resamples decoded frames and keeps up to NUM_BUFFERS buffers enqueued

LOGW("audioPlay===============");

while (!mExit) {

if (mPause) {

usleep(10000);

continue;

}

// Wait for a free buffer slot; also stop waiting once stop() sets mExit

pthread_mutex_lock(&mBufferMutex);

while (mQueuedBufferCount >= NUM_BUFFERS && !mExit) {

pthread_cond_wait(&mBufferReadyCond, &mBufferMutex);

}

if (mExit) {

pthread_mutex_unlock(&mBufferMutex);

break;

}

// Fewer than NUM_BUFFERS are enqueued, so the buffer at mCurrentBuffer is free

uint8_t *buffer = mBuffers[mCurrentBuffer];

int bytesToEnqueue = 0;

// Take one decoded frame from the audio queue (non-blocking)

AudioFrame *aframe = getAudioFrame();

if (aframe) {

// Resample to interleaved S16: at most maxSamples samples per channel fit into one buffer.
// Samples beyond maxSamples stay buffered inside SwrContext, so BUFFER_SIZE must fit the codec's frame size

uint8_t *outBuffer = buffer;

int maxSamples = BUFFER_SIZE / (mAudioInfo.channels * 2);

int outSamples = swr_convert(mAudioInfo.swrContext, &outBuffer, maxSamples,

(const uint8_t **) aframe->frame->data,

aframe->frame->nb_samples);

if (outSamples > 0) {

// Cannot exceed BUFFER_SIZE because of maxSamples

bytesToEnqueue = outSamples * mAudioInfo.channels * 2;

} else if (outSamples < 0) {

LOGE("swr_convert failed: %d", outSamples);

}

delete aframe;

} else {

// Queue is empty: enqueue silence so that the OpenSL ES queue keeps running

memset(buffer, 0, BUFFER_SIZE);

bytesToEnqueue = BUFFER_SIZE;

}

if (bytesToEnqueue > 0) {

SLresult result = (*helper.bufferQueueItf)->Enqueue(helper.bufferQueueItf,

buffer, bytesToEnqueue);

if (result == SL_RESULT_SUCCESS) {

// Enqueued: advance the ring of buffers

mQueuedBufferCount++;

mCurrentBuffer = (mCurrentBuffer + 1) % NUM_BUFFERS;

} else {

// Drop this buffer and try again on the next iteration instead of ending the thread

LOGE("Failed to enqueue buffer: %d, error: %s",

result, getSLErrorString(result));

}

}

pthread_mutex_unlock(&mBufferMutex);

usleep(1000); // reduce CPU usage

}

}

void FFMediaPlayer::videoPlay() {

while (!mExit) {

if (mPause) {

usleep(10000);

continue;

}

// Periodically log performance statistics

logPerformanceStats();

VideoFrame *vframe = getVideoFrame();

if (!vframe) {

usleep(10000);

continue;

}

// A/V sync against the audio (master) clock

syncVideo(vframe->pts);

// Render the frame

renderVideoFrame(vframe);

delete vframe;

}

}

// 数据处理方法

void FFMediaPlayer::processAudioPacket(AVPacket *packet) {

AVFrame *frame = av_frame_alloc();

int ret = avcodec_send_packet(mAudioInfo.codecContext, packet);

if (ret < 0) {

av_frame_free(&frame);

return;

}

while (ret >= 0) {

ret = avcodec_receive_frame(mAudioInfo.codecContext, frame);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {

break;

} else if (ret < 0) {

break;

}

AudioFrame *aframe = decodeAudioFrame(frame);

if (aframe) {

putAudioFrame(aframe);

}

av_frame_unref(frame);

}

av_frame_free(&frame);

}

void FFMediaPlayer::processVideoPacket(AVPacket *packet) {

AVFrame *frame = av_frame_alloc();

int ret = avcodec_send_packet(mVideoInfo.codecContext, packet);

if (ret < 0) {

av_frame_free(&frame);

return;

}

while (ret >= 0) {

ret = avcodec_receive_frame(mVideoInfo.codecContext, frame);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {

break;

} else if (ret < 0) {

break;

}

VideoFrame *vframe = decodeVideoFrame(frame);

if (vframe) {

putVideoFrame(vframe);

}

av_frame_unref(frame);

}

av_frame_free(&frame);

}

AudioFrame *FFMediaPlayer::decodeAudioFrame(AVFrame *frame) {

if (!frame || !mAudioInfo.swrContext) {

return nullptr;

}

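// Convert the timestamp to seconds. AV_NOPTS_VALUE means "unknown":
// keep the last audio clock instead of converting the sentinel value

int64_t ts = frame->best_effort_timestamp;

double pts = getAudioClock();

if (ts != AV_NOPTS_VALUE) {

pts = ts * av_q2d(mAudioInfo.timeBase);

setAudioClock(pts);

}

// Take a new reference to the decoded audio frame (the caller unrefs the original)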

AVFrame *frameCopy = av_frame_alloc();

if (av_frame_ref(frameCopy, frame) < 0) {

av_frame_free(&frameCopy);

return nullptr;

}

AudioFrame *aframe = new AudioFrame();

aframe->frame = frameCopy;

aframe->pts = pts;

return aframe;

}

VideoFrame *FFMediaPlayer::decodeVideoFrame(AVFrame *frame) {

if (!frame) {

return nullptr;

}

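// Convert the timestamp to seconds. AV_NOPTS_VALUE means "unknown":
// keep the last video clock instead of converting the sentinel value

int64_t ts = frame->best_effort_timestamp;

double pts = getVideoClock();

if (ts != AV_NOPTS_VALUE) {

pts = ts * av_q2d(mVideoInfo.timeBase);

setVideoClock(pts);

}

// Take a new reference to the decoded video frame (the caller unrefs the original)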

AVFrame *frameCopy = av_frame_alloc();

if (av_frame_ref(frameCopy, frame) < 0) {

av_frame_free(&frameCopy);

return nullptr;

}

VideoFrame *vframe = new VideoFrame();

vframe->frame = frameCopy;

vframe->pts = pts;

return vframe;

}
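decodeAudioFrame() and decodeVideoFrame() convert `best_effort_timestamp` to seconds by multiplying it by the stream `time_base` through `av_q2d()`. The standalone sketch below is not part of FFMediaPlayer.cpp. It performs the same arithmetic without FFmpeg. `Rational`, `kNoPts` and `ptsToSeconds` are hypothetical stand-ins for `AVRational`, `AV_NOPTS_VALUE` (which FFmpeg defines as the minimum `int64_t` value) and the conversion code above. Falling back to the previous value for a missing timestamp is an assumption of this sketch.

```cpp
#include <cstdint>
#include <limits>

// Hypothetical stand-in for AVRational
struct Rational {
    int num;
    int den;
};

// Hypothetical stand-in for AV_NOPTS_VALUE (INT64_MIN in FFmpeg)
constexpr int64_t kNoPts = std::numeric_limits<int64_t>::min();

// Same idea as av_q2d(): rational -> double
inline double toDouble(Rational r) {
    return static_cast<double>(r.num) / r.den;
}

// Convert a timestamp in time_base units to seconds.
// A missing timestamp returns lastSeconds instead of converting the sentinel.
inline double ptsToSeconds(int64_t ts, Rational timeBase, double lastSeconds) {
    if (ts == kNoPts) {
        return lastSeconds;
    }
    return static_cast<double>(ts) * toDouble(timeBase);
}
```

For example, with a 90 kHz video time base (`{1, 90000}`), a timestamp of 90000 corresponds to 1 s. With a 44.1 kHz audio time base, 44100 corresponds to 1 s.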

void FFMediaPlayer::renderVideoFrame(VideoFrame *vframe) {

if (!mNativeWindow || !vframe) {

return;

}

ANativeWindow_Buffer windowBuffer;

// Convert from the decoder's pixel format to RGBA

sws_scale(mSwsContext, vframe->frame->data, vframe->frame->linesize, 0,

mVideoInfo.height, mRgbFrame->data, mRgbFrame->linesize);

// Lock the window buffer

if (ANativeWindow_lock(mNativeWindow, &windowBuffer, nullptr) < 0) {

LOGE("Cannot lock window");

return;

}

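// Copy into the window buffer, taking both strides into account

uint8_t *dst = static_cast<uint8_t *>(windowBuffer.bits);

int dstStride = windowBuffer.stride * 4; // destination stride in bytes (stride is in pixels, RGBA_8888 = 4 bytes)

int srcStride = mRgbFrame->linesize[0]; // source stride in bytes

if (dstStride == srcStride) {

// Same stride: one contiguous copy

memcpy(dst, mOutbuffer, srcStride * mVideoInfo.height);

} else {

// Different strides: copy row by row, never writing more than one destination row

int rowBytes = srcStride < dstStride ? srcStride : dstStride;

for (int h = 0; h < mVideoInfo.height; h++) {

memcpy(dst + h * dstStride,

mOutbuffer + h * srcStride,

rowBytes);

}

}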

ANativeWindow_unlockAndPost(mNativeWindow);

}
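renderVideoFrame() copies the RGBA image into the window buffer. It uses one memcpy when the strides match and copies row by row when they differ. The standalone sketch below is not part of FFMediaPlayer.cpp. It shows that copy on plain byte buffers. `copyRows` and `copyRowsToVector` are hypothetical helpers, and all strides are in bytes. `ANativeWindow_Buffer::stride` itself is in pixels, hence the `* 4` for RGBA_8888 above.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy `height` rows of `rowBytes` bytes from a source with `srcStride` bytes per row
// into a destination with `dstStride` bytes per row. rowBytes must not exceed either stride.
// Padding bytes at the end of each destination row are left untouched.
inline void copyRows(uint8_t *dst, int dstStride,
                     const uint8_t *src, int srcStride,
                     int rowBytes, int height) {
    if (dstStride == srcStride && rowBytes == srcStride) {
        // Identical layout: one contiguous copy
        std::memcpy(dst, src, static_cast<size_t>(srcStride) * height);
        return;
    }
    for (int y = 0; y < height; ++y) {
        std::memcpy(dst + static_cast<size_t>(y) * dstStride,
                    src + static_cast<size_t>(y) * srcStride,
                    static_cast<size_t>(rowBytes));
    }
}

// Convenience wrapper for the example: copies into a zero-initialised destination image
inline std::vector<uint8_t> copyRowsToVector(const std::vector<uint8_t> &src, int srcStride,
                                             int dstStride, int rowBytes, int height) {
    std::vector<uint8_t> dst(static_cast<size_t>(dstStride) * height, 0);
    copyRows(dst.data(), dstStride, src.data(), srcStride, rowBytes, height);
    return dst;
}
```

Copying two 4-byte rows into a destination with a 6-byte stride leaves two padding bytes after each row. This is the situation the row-by-row branch handles.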

// Queue operations

void FFMediaPlayer::putAudioPacket(AVPacket *packet) {

pthread_mutex_lock(&mPacketMutex);

mAudioPackets.push(packet);

pthread_cond_broadcast(&mPacketCond); // demux and both decode threads wait on mPacketCond, so wake them all

pthread_mutex_unlock(&mPacketMutex);

}

void FFMediaPlayer::putVideoPacket(AVPacket *packet) {

pthread_mutex_lock(&mPacketMutex);

mVideoPackets.push(packet);

pthread_cond_broadcast(&mPacketCond); // wake every waiter; each one re-checks its own condition

pthread_mutex_unlock(&mPacketMutex);

}

AVPacket *FFMediaPlayer::getAudioPacket() {

pthread_mutex_lock(&mPacketMutex);

while (mAudioPackets.empty() && !mExit) {

pthread_cond_wait(&mPacketCond, &mPacketMutex);

}

if (mExit) {

pthread_mutex_unlock(&mPacketMutex);

return nullptr;

}

AVPacket *packet = mAudioPackets.front();

mAudioPackets.pop();

pthread_cond_broadcast(&mPacketCond); // a slot is free: make sure the demux thread is among the woken threads

pthread_mutex_unlock(&mPacketMutex);

return packet;

}

AVPacket *FFMediaPlayer::getVideoPacket() {

pthread_mutex_lock(&mPacketMutex);

while (mVideoPackets.empty() && !mExit) {

pthread_cond_wait(&mPacketCond, &mPacketMutex);

}

if (mExit) {

pthread_mutex_unlock(&mPacketMutex);

return nullptr;

}

AVPacket *packet = mVideoPackets.front();

mVideoPackets.pop();

pthread_cond_broadcast(&mPacketCond); // a slot is free: make sure the demux thread is among the woken threads

pthread_mutex_unlock(&mPacketMutex);

return packet;

}

void FFMediaPlayer::clearAudioPackets() {

pthread_mutex_lock(&mPacketMutex);

while (!mAudioPackets.empty()) {

AVPacket *packet = mAudioPackets.front();

mAudioPackets.pop();

av_packet_free(&packet);

}

pthread_mutex_unlock(&mPacketMutex);

}

void FFMediaPlayer::clearVideoPackets() {

pthread_mutex_lock(&mPacketMutex);

while (!mVideoPackets.empty()) {

AVPacket *packet = mVideoPackets.front();

mVideoPackets.pop();

av_packet_free(&packet);

}

pthread_mutex_unlock(&mPacketMutex);

}
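The packet queues in this file share one condition variable, `mPacketCond`. The demux thread waits on it for free space, and both decode threads wait on it for data. When different kinds of waiters share one condition, waking a single thread can wake the wrong one. Broadcasting and re-checking the predicate in a loop avoids that. The minimal sketch below uses standard C++ threading primitives instead of pthreads. `BoundedQueue` is a hypothetical name, not part of the project, and `close()` plays the role of setting `mExit` and broadcasting.

```cpp
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <optional>
#include <queue>
#include <utility>

template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(size_t capacity) : capacity_(capacity) {}

    // Blocks while the queue is full; returns false if the queue has been closed.
    bool push(T value) {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return closed_ || items_.size() < capacity_; });
        if (closed_) return false;
        items_.push(std::move(value));
        cond_.notify_all();  // wake consumers; any other waiter re-checks its predicate
        return true;
    }

    // Blocks while the queue is empty; returns std::nullopt once closed and empty.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return closed_ || !items_.empty(); });
        if (items_.empty()) return std::nullopt;
        T value = std::move(items_.front());
        items_.pop();
        cond_.notify_all();  // a slot is free: wake the producer
        return value;
    }

    // Wake every waiter and make further waits return immediately.
    void close() {
        std::lock_guard<std::mutex> lock(mutex_);
        closed_ = true;
        cond_.notify_all();
    }

    size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return items_.size();
    }

private:
    size_t capacity_;
    bool closed_ = false;
    std::queue<T> items_;
    std::mutex mutex_;
    std::condition_variable cond_;
};
```

With pthreads, the same pattern is `pthread_cond_broadcast` plus a `while` loop around `pthread_cond_wait` that also checks the exit flag.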

void FFMediaPlayer::putAudioFrame(AudioFrame *frame) {

pthread_mutex_lock(&mAudioInfo.audioMutex);

while (mAudioInfo.audioQueue.size() >= mAudioInfo.maxAudioFrames && !mExit) {

// LOGI("putAudioFrame Waiting for buffer slot, audioQueue: %d", mAudioInfo.audioQueue.size());

pthread_cond_wait(&mAudioInfo.audioCond, &mAudioInfo.audioMutex);

}

if (!mExit) {

mAudioInfo.audioQueue.push(frame);

} else {

// Exiting: the frame is not queued, so free it here to avoid a leak

delete frame;

}

pthread_cond_signal(&mAudioInfo.audioCond);

pthread_mutex_unlock(&mAudioInfo.audioMutex);

}

void FFMediaPlayer::putVideoFrame(VideoFrame *frame) {

pthread_mutex_lock(&mVideoInfo.videoMutex);

while (mVideoInfo.videoQueue.size() >= mVideoInfo.maxVideoFrames && !mExit) {

// LOGI("putVideoFrame Waiting for buffer slot, videoQueue: %d", mVideoInfo.videoQueue.size());

pthread_cond_wait(&mVideoInfo.videoCond, &mVideoInfo.videoMutex);

}

if (!mExit) {

mVideoInfo.videoQueue.push(frame);

} else {

// Exiting: the frame is not queued, so free it here to avoid a leak

delete frame;

}

pthread_cond_signal(&mVideoInfo.videoCond);

pthread_mutex_unlock(&mVideoInfo.videoMutex);

}

AudioFrame *FFMediaPlayer::getAudioFrame() {

pthread_mutex_lock(&mAudioInfo.audioMutex);

if (mAudioInfo.audioQueue.empty()) {

pthread_mutex_unlock(&mAudioInfo.audioMutex);

return nullptr;

}

AudioFrame *frame = mAudioInfo.audioQueue.front();

mAudioInfo.audioQueue.pop();

pthread_cond_signal(&mAudioInfo.audioCond);

pthread_mutex_unlock(&mAudioInfo.audioMutex);

return frame;

}

VideoFrame *FFMediaPlayer::getVideoFrame() {

pthread_mutex_lock(&mVideoInfo.videoMutex);

if (mVideoInfo.videoQueue.empty()) {

pthread_mutex_unlock(&mVideoInfo.videoMutex);

return nullptr;

}

VideoFrame *frame = mVideoInfo.videoQueue.front();

mVideoInfo.videoQueue.pop();

pthread_cond_signal(&mVideoInfo.videoCond);

pthread_mutex_unlock(&mVideoInfo.videoMutex);

return frame;

}

void FFMediaPlayer::clearAudioFrames() {

pthread_mutex_lock(&mAudioInfo.audioMutex);

while (!mAudioInfo.audioQueue.empty()) {

AudioFrame *frame = mAudioInfo.audioQueue.front();

mAudioInfo.audioQueue.pop();

delete frame;

}

pthread_mutex_unlock(&mAudioInfo.audioMutex);

}

void FFMediaPlayer::clearVideoFrames() {

pthread_mutex_lock(&mVideoInfo.videoMutex);

while (!mVideoInfo.videoQueue.empty()) {

VideoFrame *frame = mVideoInfo.videoQueue.front();

mVideoInfo.videoQueue.pop();

delete frame;

}

pthread_mutex_unlock(&mVideoInfo.videoMutex);

}

// Clock and sync methods

double FFMediaPlayer::getMasterClock() {

return getAudioClock(); // the audio clock is the master clock

}

double FFMediaPlayer::getAudioClock() {

pthread_mutex_lock(&mAudioInfo.clockMutex);

double clock = mAudioInfo.clock;

pthread_mutex_unlock(&mAudioInfo.clockMutex);

return clock;

}

double FFMediaPlayer::getVideoClock() {

pthread_mutex_lock(&mVideoInfo.clockMutex);

double clock = mVideoInfo.clock;

pthread_mutex_unlock(&mVideoInfo.clockMutex);

return clock;

}

void FFMediaPlayer::setAudioClock(double pts) {

pthread_mutex_lock(&mAudioInfo.clockMutex);

mAudioInfo.clock = pts;

pthread_mutex_unlock(&mAudioInfo.clockMutex);

}

void FFMediaPlayer::setVideoClock(double pts) {

pthread_mutex_lock(&mVideoInfo.clockMutex);

mVideoInfo.clock = pts;

pthread_mutex_unlock(&mVideoInfo.clockMutex);

}

void FFMediaPlayer::syncVideo(double pts) {

double audioTime = getAudioClock();

double videoTime = pts;

double diff = videoTime - audioTime;

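// Sync thresholds

const double syncThreshold = 0.01; // ignore differences below 10 ms

const double maxFrameDelay = 0.1; // above 100 ms the clocks are treated as out of sync

if (fabs(diff) < maxFrameDelay) {

if (diff <= -syncThreshold) {

// Video is behind: show the frame immediately (no frame dropping here)

return;

} else if (diff >= syncThreshold) {

// Video is ahead: delay the frame until the audio clock reaches its PTS

int delay = (int) (diff * 1000000); // seconds -> microseconds

usleep(delay);

}

}

// Difference too large (or below the threshold): show the frame immediately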

}
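syncVideo() only ever waits. A late frame is rendered immediately and never dropped. The audio-master strategy described at the start of the article also drops frames that are too late. The standalone sketch below is not part of FFMediaPlayer.cpp. It writes that decision as a pure function. `SyncAction`, `SyncDecision` and `decideVideoSync` are hypothetical names. The 10 ms and 100 ms thresholds are reused from syncVideo(). Dropping every frame that is late by more than `syncThreshold` is an assumed policy, and how aggressively to drop is a tuning choice.

```cpp
#include <cmath>

enum class SyncAction { Show, Wait, Drop };

struct SyncDecision {
    SyncAction action;
    double waitSeconds;  // only meaningful for SyncAction::Wait
};

// diff = video PTS - audio (master) clock, in seconds.
// syncThreshold: differences smaller than this are ignored.
// maxFrameDelay: beyond this the clocks are considered out of sync,
//                so the frame is shown at once instead of waiting or dropping.
inline SyncDecision decideVideoSync(double videoPts, double audioClock,
                                    double syncThreshold = 0.01,
                                    double maxFrameDelay = 0.1) {
    double diff = videoPts - audioClock;
    if (std::fabs(diff) >= maxFrameDelay) {
        return {SyncAction::Show, 0.0};
    }
    if (diff <= -syncThreshold) {
        // Video is late: drop this frame to catch up with the audio
        return {SyncAction::Drop, 0.0};
    }
    if (diff >= syncThreshold) {
        // Video is early: wait until the audio clock reaches the frame's PTS
        return {SyncAction::Wait, diff};
    }
    return {SyncAction::Show, 0.0};
}
```

In videoPlay(), a `Drop` result would mean deleting the frame and continuing without rendering. `Wait` corresponds to the existing `usleep()`.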

// Codec setup, OpenSL ES initialization and other helpers

bool FFMediaPlayer::openCodecContext(int *streamIndex, AVCodecContext **codecContext,

AVFormatContext *formatContext, enum AVMediaType type) {

int streamIdx = av_find_best_stream(formatContext, type, -1, -1, nullptr, 0);

if (streamIdx < 0) {

LOGE("Could not find %s stream", av_get_media_type_string(type));

return false;

}

AVStream *stream = formatContext->streams[streamIdx];

const AVCodec *codec = avcodec_find_decoder(stream->codecpar->codec_id);

if (!codec) {

LOGE("Could not find %s codec", av_get_media_type_string(type));

return false;

}

*codecContext = avcodec_alloc_context3(codec);

if (!*codecContext) {

LOGE("Could not allocate %s codec context", av_get_media_type_string(type));

return false;

}

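if (avcodec_parameters_to_context(*codecContext, stream->codecpar) < 0) {

LOGE("Could not copy %s codec parameters", av_get_media_type_string(type));

// Free the context so a failed open leaves *codecContext == nullptr (start() uses it to decide which threads to run)

avcodec_free_context(codecContext);

return false;

}

if (avcodec_open2(*codecContext, codec, nullptr) < 0) {

LOGE("Could not open %s codec", av_get_media_type_string(type));

avcodec_free_context(codecContext);

return false;

}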

*streamIndex = streamIdx;

// Remember the stream time base for PTS conversion

if (type == AVMEDIA_TYPE_AUDIO) {

mAudioInfo.timeBase = stream->time_base;

} else if (type == AVMEDIA_TYPE_VIDEO) {

mVideoInfo.timeBase = stream->time_base;

}

return true;

}

bool FFMediaPlayer::initOpenSLES() {

LOGI("initOpenSLES()");

playAudioInfo =

"initOpenSLES() \n";

PostStatusMessage(playAudioInfo.c_str());

// Create the OpenSL ES engine object and its engine interface

SLresult result = helper.createEngine();

if (!helper.isSuccess(result)) {

LOGE("create engine error: %d", result);

PostStatusMessage("Create engine error\n");

return false;

}

PostStatusMessage("OpenSL createEngine Success \n");

// Create the output mix object and its interface

result = helper.createMix();

if (!helper.isSuccess(result)) {

LOGE("create mix error: %d", result);

PostStatusMessage("Create mix error \n");

return false;

}

PostStatusMessage("OpenSL createMix Success \n");

// OpenSL ES PCM formats give the sample rate in milliHertz, hence the * 1000

result = helper.createPlayer(mAudioInfo.channels, mAudioInfo.sampleRate * 1000,

SL_PCMSAMPLEFORMAT_FIXED_16,

mAudioInfo.channels == 2 ? (SL_SPEAKER_FRONT_LEFT |

SL_SPEAKER_FRONT_RIGHT)

: SL_SPEAKER_FRONT_CENTER);

if (!helper.isSuccess(result)) {

LOGE("create player error: %d", result);

PostStatusMessage("Create player error\n");

return false;

}

PostStatusMessage("OpenSL createPlayer Success \n");

// Register the buffer-queue callback

result = helper.registerCallback(bufferQueueCallback, this);

if (!helper.isSuccess(result)) {

LOGE("register callback error: %d", result);

PostStatusMessage("Register callback error \n");

return false;

}

PostStatusMessage("OpenSL registerCallback Success \n");

// Clear the buffer queue

result = (*helper.bufferQueueItf)->Clear(helper.bufferQueueItf);

if (result != SL_RESULT_SUCCESS) {

LOGE("Failed to clear buffer queue: %d, error: %s", result, getSLErrorString(result));

playAudioInfo = "Failed to clear buffer queue:" + string(getSLErrorString(result));

PostStatusMessage(playAudioInfo.c_str());

return false;

}

LOGI("OpenSL ES initialized successfully: %d Hz, %d channels", mAudioInfo.sampleRate,

mAudioInfo.channels);

playAudioInfo =

"OpenSL ES initialized ,Hz:" + to_string(mAudioInfo.sampleRate) + ",channels:" +

to_string(mAudioInfo.channels) + " ,duration:" + to_string(mDuration) + "\n";

PostStatusMessage(playAudioInfo.c_str());

return true;

}

void FFMediaPlayer::bufferQueueCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {

FFMediaPlayer *player = static_cast<FFMediaPlayer *>(context);

player->processBufferQueue();

}

void FFMediaPlayer::processBufferQueue() {

pthread_mutex_lock(&mBufferMutex);

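// One buffer has finished playing: decrement the enqueued count

if (mQueuedBufferCount > 0) {

mQueuedBufferCount--;

// Update the audio clock by subtracting the duration of the buffer that was played
// (approximate: it assumes a full BUFFER_SIZE buffer, although decoded buffers can be shorter)

if (mAudioInfo.codecContext && mAudioInfo.sampleRate > 0) {

double buffer_duration = (double) BUFFER_SIZE /

(mAudioInfo.channels * 2 * mAudioInfo.sampleRate);

pthread_mutex_lock(&mAudioInfo.clockMutex);

// Update the audio clock

mAudioInfo.clock -= buffer_duration;

pthread_mutex_unlock(&mAudioInfo.clockMutex);

}

}

// Wake audioPlay(): a buffer slot is free again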

pthread_cond_signal(&mBufferReadyCond);

pthread_mutex_unlock(&mBufferMutex);

}
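In this implementation, decodeAudioFrame() sets the audio clock to each frame's PTS when the frame is decoded. The frame may then still wait in `audioQueue` and in the OpenSL ES buffer queue before it is heard. A common refinement estimates the clock from the enqueue side: take the PTS at the end of the last enqueued PCM and subtract the duration of the bytes that are enqueued but not yet played. ffplay applies the same idea to its SDL output buffer. The sketch below is not part of FFMediaPlayer.cpp, and `AudioClockEstimator` is a hypothetical helper. The sketch assumes the caller serializes access, for example under `mBufferMutex`. Enqueued silence would need a start PTS chosen by the caller.

```cpp
#include <cstdint>

// Hypothetical helper: estimates the PTS currently being heard.
class AudioClockEstimator {
public:
    AudioClockEstimator(int sampleRate, int channels, int bytesPerSample)
        : bytesPerSecond_(static_cast<double>(sampleRate) * channels * bytesPerSample) {}

    // Call after a successful Enqueue() of `bytes` bytes whose first sample has PTS `startPts`.
    void onEnqueued(double startPts, int bytes) {
        lastEnqueuedEndPts_ = startPts + bytes / bytesPerSecond_;
        queuedBytes_ += bytes;
    }

    // Call from the buffer-queue callback when a buffer of `bytes` bytes has finished playing.
    void onBufferPlayed(int bytes) {
        queuedBytes_ -= bytes;
        if (queuedBytes_ < 0) queuedBytes_ = 0;
    }

    // End of the last enqueued PCM minus what is still waiting to be played.
    double clock() const {
        return lastEnqueuedEndPts_ - queuedBytes_ / bytesPerSecond_;
    }

private:
    double bytesPerSecond_;
    double lastEnqueuedEndPts_ = 0.0;
    int64_t queuedBytes_ = 0;
};
```

At 44.1 kHz stereo S16 (176400 bytes/s), one 4096-byte buffer lasts about 23 ms. Four queued buffers therefore put the heard audio roughly 93 ms behind the last enqueued sample.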

bool FFMediaPlayer::initANativeWindow() {

// Video dimensions

mVideoInfo.width = mVideoInfo.codecContext->width;

mVideoInfo.height = mVideoInfo.codecContext->height;

mNativeWindow = ANativeWindow_fromSurface(mEnv, androidSurface);

if (!mNativeWindow) {

LOGE("Couldn't get native window from surface");

return false;

}

mRgbFrame = av_frame_alloc();

if (!mRgbFrame) {

LOGE("Could not allocate RGB frame");

ANativeWindow_release(mNativeWindow);

mNativeWindow = nullptr;

return false;

}

int bufferSize = av_image_get_buffer_size(AV_PIX_FMT_RGBA, mVideoInfo.width, mVideoInfo.height,

1);

mOutbuffer = (uint8_t *) av_malloc(bufferSize * sizeof(uint8_t));

if (!mOutbuffer) {

LOGE("Could not allocate output buffer");

av_frame_free(&mRgbFrame);

ANativeWindow_release(mNativeWindow);

mNativeWindow = nullptr;

return false;

}

mSwsContext = sws_getContext(mVideoInfo.width, mVideoInfo.height,

mVideoInfo.codecContext->pix_fmt,

mVideoInfo.width, mVideoInfo.height, AV_PIX_FMT_RGBA,

SWS_BICUBIC, nullptr, nullptr, nullptr);

if (!mSwsContext) {

LOGE("Could not create sws context");

av_free(mOutbuffer);

mOutbuffer = nullptr;

av_frame_free(&mRgbFrame);

ANativeWindow_release(mNativeWindow);

mNativeWindow = nullptr;

return false;

}

if (ANativeWindow_setBuffersGeometry(mNativeWindow, mVideoInfo.width, mVideoInfo.height,

WINDOW_FORMAT_RGBA_8888) < 0) {

LOGE("Couldn't set buffers geometry");

cleanupANativeWindow();

return false;

}

if (av_image_fill_arrays(mRgbFrame->data, mRgbFrame->linesize,

mOutbuffer, AV_PIX_FMT_RGBA,

mVideoInfo.width, mVideoInfo.height, 1) < 0) {

LOGE("Could not fill image arrays");

cleanupANativeWindow();

return false;

}

LOGI("ANativeWindow initialization successful");

playAudioInfo =

"ANativeWindow initialization successful \n";

PostStatusMessage(playAudioInfo.c_str());

return true;

}

void FFMediaPlayer::cleanupANativeWindow() {

if (mSwsContext) {

sws_freeContext(mSwsContext);

mSwsContext = nullptr;

}

if (mRgbFrame) {

av_frame_free(&mRgbFrame);

mRgbFrame = nullptr;

}

if (mOutbuffer) {

av_free(mOutbuffer);

mOutbuffer = nullptr;

}

if (mNativeWindow) {

ANativeWindow_release(mNativeWindow);

mNativeWindow = nullptr;

}

}

JNIEnv *FFMediaPlayer::GetJNIEnv(bool *isAttach) {

if (!mJavaVm) {

LOGE("GetJNIEnv mJavaVm == nullptr");

return nullptr;

}

JNIEnv *env;

int status = mJavaVm->GetEnv((void **) &env, JNI_VERSION_1_6);

if (status == JNI_EDETACHED) {

status = mJavaVm->AttachCurrentThread(&env, nullptr);

if (status != JNI_OK) {

LOGE("Failed to attach current thread");

return nullptr;

}

*isAttach = true;

} else if (status != JNI_OK) {

LOGE("Failed to get JNIEnv");

return nullptr;

} else {

*isAttach = false;

}

return env;

}

void FFMediaPlayer::PostStatusMessage(const char *msg) {

bool isAttach = false;

JNIEnv *env = GetJNIEnv(&isAttach);

if (!env) {

return;

}

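jclass clazz = env->GetObjectClass(mJavaObj);

jmethodID mid = env->GetMethodID(clazz, "CppStatusCallback", "(Ljava/lang/String;)V");

// Free the local class reference; otherwise it piles up on threads that stay attached
env->DeleteLocalRef(clazz);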

if (mid) {

jstring jMsg = env->NewStringUTF(msg);

env->CallVoidMethod(mJavaObj, mid, jMsg);

env->DeleteLocalRef(jMsg);

}

if (isAttach) {

mJavaVm->DetachCurrentThread();

}

}

// Helper: map an SLresult code to its name for logging

const char *FFMediaPlayer::getSLErrorString(SLresult result) {

switch (result) {

case SL_RESULT_SUCCESS:

return "SL_RESULT_SUCCESS";

case SL_RESULT_PRECONDITIONS_VIOLATED:

return "SL_RESULT_PRECONDITIONS_VIOLATED";

case SL_RESULT_PARAMETER_INVALID:

return "SL_RESULT_PARAMETER_INVALID";

case SL_RESULT_MEMORY_FAILURE:

return "SL_RESULT_MEMORY_FAILURE";

case SL_RESULT_RESOURCE_ERROR:

return "SL_RESULT_RESOURCE_ERROR";

case SL_RESULT_RESOURCE_LOST:

return "SL_RESULT_RESOURCE_LOST";

case SL_RESULT_IO_ERROR:

return "SL_RESULT_IO_ERROR";

case SL_RESULT_BUFFER_INSUFFICIENT:

return "SL_RESULT_BUFFER_INSUFFICIENT";

case SL_RESULT_CONTENT_CORRUPTED:

return "SL_RESULT_CONTENT_CORRUPTED";

case SL_RESULT_CONTENT_UNSUPPORTED:

return "SL_RESULT_CONTENT_UNSUPPORTED";

case SL_RESULT_CONTENT_NOT_FOUND:

return "SL_RESULT_CONTENT_NOT_FOUND";

case SL_RESULT_PERMISSION_DENIED:

return "SL_RESULT_PERMISSION_DENIED";

case SL_RESULT_FEATURE_UNSUPPORTED:

return "SL_RESULT_FEATURE_UNSUPPORTED";

case SL_RESULT_INTERNAL_ERROR:

return "SL_RESULT_INTERNAL_ERROR";

case SL_RESULT_UNKNOWN_ERROR:

return "SL_RESULT_UNKNOWN_ERROR";

case SL_RESULT_OPERATION_ABORTED:

return "SL_RESULT_OPERATION_ABORTED";

case SL_RESULT_CONTROL_LOST:

return "SL_RESULT_CONTROL_LOST";

default:

return "Unknown error";

}

}

// Log performance statistics at most every 500 ms

void FFMediaPlayer::logPerformanceStats() {

static int64_t last_log_time = 0;

int64_t current_time = av_gettime();

if (current_time - last_log_time > 500000) {

last_log_time = current_time;

PerformanceStats stats;

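// The queues are modified by other threads, so read their sizes under the matching mutex

pthread_mutex_lock(&mPacketMutex);

stats.demuxPackets = mAudioPackets.size() + mVideoPackets.size();

pthread_mutex_unlock(&mPacketMutex);

pthread_mutex_lock(&mAudioInfo.audioMutex);

stats.audioQueueSize = mAudioInfo.audioQueue.size();

pthread_mutex_unlock(&mAudioInfo.audioMutex);

pthread_mutex_lock(&mVideoInfo.videoMutex);

stats.videoQueueSize = mVideoInfo.videoQueue.size();

pthread_mutex_unlock(&mVideoInfo.videoMutex);

stats.audioClock = getAudioClock();

stats.videoClock = getVideoClock();

stats.syncDiff = stats.videoClock - stats.audioClock;

// int64_t is not always long (e.g. on 32-bit ARM), so print through long long

LOGW("Performance: Demux=%lld, AudioQ=%lld, VideoQ=%lld, A-V=%.3fs",

(long long) stats.demuxPackets, (long long) stats.audioQueueSize,

(long long) stats.videoQueueSize, stats.syncDiff);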

playAudioInfo =

"Performance: Demux=" + to_string(stats.demuxPackets)

+ ",AudioQ=" + to_string(stats.audioQueueSize)

+ ",VideoQ=" + to_string(stats.videoQueueSize)

+ ",A-V=" + to_string(stats.syncDiff)

+ "s \n";

PostStatusMessage(playAudioInfo.c_str());

}

}

Source code:

The code above is available in my GitHub project:

https://github.com/wangyongyao1989/FFmpegPractices