A First Look at libuvc

Introduction to libuvc

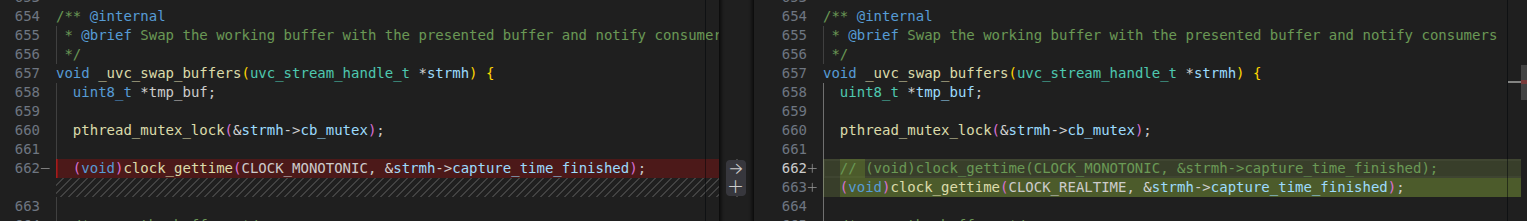

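While experimenting with v4l2 programming, I found that the timestamp obtained from `v4l2_buffer` is a relative time (CLOCK_MONOTONIC) rather than an absolute wall-clock time (CLOCK_REALTIME), and I have not yet found a way to get an absolute timestamp from v4l2. libuvc, however, can provide an absolute timestamp for each frame, so I switched to libuvc.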
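For readers who stay with v4l2, one common workaround (not something this article relies on) is to sample both clocks and add their offset to the monotonic timestamp. The sketch below assumes a Unix system; `monotonic_to_realtime` is a hypothetical helper name, and the result is only approximate because the two clock reads are not atomic and the wall clock can be adjusted at any time.

```python
import time

def monotonic_to_realtime(mono_ts: float) -> float:
    """Convert a CLOCK_MONOTONIC timestamp (seconds) to CLOCK_REALTIME (seconds)."""
    # Read both clocks back to back; their difference is the current offset.
    offset = (time.clock_gettime(time.CLOCK_REALTIME)
              - time.clock_gettime(time.CLOCK_MONOTONIC))
    return mono_ts + offset

# Stand-in for a v4l2_buffer timestamp: "now" on the monotonic clock.
frame_ts = time.clock_gettime(time.CLOCK_MONOTONIC)
wall_ts = monotonic_to_realtime(frame_ts)
print(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(wall_ts)))
```

Recomputing the offset for every frame keeps the drift small, at the cost of two extra clock reads per frame.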

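libuvc is a cross-platform library for USB video devices, built on top of libusb. It enables fine-grained control over USB video devices, so developers can write drivers for previously unsupported devices or simply access UVC (USB Video Class) devices in a generic way.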

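Building libuvc

Install the dependencies first

The official documentation says libuvc depends on libusb 1.0+. When building on Ubuntu 24.04, I found that it also needs libjpeg and libudev (on Ubuntu the development packages are typically libusb-1.0-0-dev, libjpeg-dev and libudev-dev).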
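As a quick sanity check, the sketch below asks the dynamic loader whether the three shared libraries are visible. It is only a rough indicator: `ctypes.util.find_library` looks for runtime libraries, not for the headers the build needs, and `find_shared_libs` is a hypothetical helper name.

```python
import ctypes.util

def find_shared_libs(names=('usb-1.0', 'jpeg', 'udev')):
    """Map each library name to the file the loader resolves it to, or None."""
    return {name: ctypes.util.find_library(name) for name in names}

for name, path in find_shared_libs().items():
    print(f'lib{name}: {path or "not found"}')
```

A "not found" entry suggests the corresponding package is missing; a hit does not guarantee that the matching development package is installed.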

Build and install libuvc

```bash
git clone https://github.com/libuvc/libuvc
cd libuvc
mkdir build
cd build
cmake ..
make && sudo make install
```

To get an absolute timestamp for each frame buffer, you can modify the libuvc source at line 662 of stream.c.

Programming in practice

Workflow

Programming a USB video device with libuvc roughly involves the following steps:

- Initialize the UVC context by calling uvc_init, which yields ctx

  ```c
  uvc_context_t *ctx;
  uvc_error_t res = uvc_init(&ctx, NULL);
  if (res < 0) {
    uvc_perror(res, "uvc_init");
    return res;
  }
  puts("UVC initialized");
  ```

- Find the target device (for example "TSTC Web Camera") by combining uvc_get_device_list and uvc_get_device_descriptor, which yields a uvc_device pointer

  ```c
  /* strcasecmp is declared in <strings.h> */
  uvc_device_t* find_device(uvc_context_t* ctx) {
    uvc_device_t **list;
    if (uvc_get_device_list(ctx, &list) != UVC_SUCCESS) return NULL;
    uvc_device_t *dev = NULL;
    uvc_device_descriptor_t *desc;
    for (int i = 0; !dev && list[i]; ++i) {
      if (uvc_get_device_descriptor(list[i], &desc) != UVC_SUCCESS) continue;
      /* product may be NULL when the device has no product string */
      if (desc->product && strcasecmp(desc->product, "TSTC Web Camera") == 0) {
        dev = list[i];
        uvc_ref_device(dev);  /* keep the device alive after the list is freed */
      }
      uvc_free_device_descriptor(desc);
    }
    uvc_free_device_list(list, 1);
    return dev;
  }
  ```

- Open the device by passing the target uvc_device pointer to uvc_open, which yields the device handle (a uvc_device_handle pointer)

  ```c
  uvc_device_handle_t *devh;
  uvc_error_t res = uvc_open(dev, &devh);
  if (res == UVC_SUCCESS) {
    puts("Device opened");
  } else uvc_perror(res, "uvc_open");
  ```

- Set the video format (YUV or Motion-JPEG, width, height, frame rate and so on) by calling uvc_get_stream_ctrl_format_size

  ```c
  uvc_stream_ctrl_t ctrl;
  res = uvc_get_stream_ctrl_format_size(devh, &ctrl, UVC_FRAME_FORMAT_MJPEG, 2560, 800, 60);
  if (res == UVC_SUCCESS) {
    uvc_print_stream_ctrl(&ctrl, stderr);
  } else uvc_perror(res, "get_mode");
  ```

- Select the video and audio input. uvc_get_input_select reads the current selection (uvc_set_input_select changes it), but most USB video devices have a single input, so this step is usually unnecessary

- Start capturing by calling uvc_start_streaming. The callback you pass in receives the frame data (uvc_frame)

  ```c
  res = uvc_start_streaming(devh, &ctrl, callback, (void *) 12345, 0);
  if (res == UVC_SUCCESS) {
    puts("Streaming...");
  } else uvc_perror(res, "start_streaming");
  ```

- Adjust image settings such as exposure, brightness, contrast, saturation, white balance and gain. Note: these settings must be changed only after capture has started

  ```c
  puts("Enabling auto exposure ...");
  const uint8_t UVC_AUTO_EXPOSURE_MODE_AUTO = 2;
  res = uvc_set_ae_mode(devh, UVC_AUTO_EXPOSURE_MODE_AUTO);
  if (res == UVC_ERROR_PIPE) {
    /* the device rejected full auto mode, fall back to aperture priority */
    puts(" ... full AE not supported, trying aperture priority mode");
    const uint8_t UVC_AUTO_EXPOSURE_MODE_APERTURE_PRIORITY = 8;
    res = uvc_set_ae_mode(devh, UVC_AUTO_EXPOSURE_MODE_APERTURE_PRIORITY);
    if (res < 0) uvc_perror(res, " ... uvc_set_ae_mode failed to enable aperture priority mode");
    else puts(" ... enabled aperture priority auto exposure mode");
  } else if (res == UVC_SUCCESS) puts(" ... enabled auto exposure");
  else uvc_perror(res, " ... uvc_set_ae_mode failed to enable auto exposure mode");
  ```

- Stop capturing by calling uvc_stop_streaming

  ```c
  uvc_stop_streaming(devh);
  puts("Done streaming.");
  ```

- Close the device by calling uvc_close

  ```c
  uvc_close(devh);
  puts("Device closed");
  ```

- Tear down the UVC context by calling uvc_exit

  ```c
  uvc_exit(ctx);
  puts("UVC exited");
  ```
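The teardown calls mirror the setup calls in reverse order. The sketch below illustrates that ordering with `contextlib.ExitStack`; the `step` function is a stand-in that only records names and makes no real libuvc calls, and uvc_unref_device is included because the complete examples release the device reference that find_device took.

```python
from contextlib import ExitStack

calls = []  # records the order in which the stand-in steps run

def step(name):
    """Stand-in for a libuvc call: it only records its name."""
    calls.append(name)

def run_session():
    with ExitStack() as stack:
        step('uvc_init')
        stack.callback(step, 'uvc_exit')
        step('find_device')
        stack.callback(step, 'uvc_unref_device')
        step('uvc_open')
        stack.callback(step, 'uvc_close')
        step('uvc_start_streaming')
        stack.callback(step, 'uvc_stop_streaming')
        step('adjust controls')  # e.g. exposure, changed while streaming
    # Leaving the with-block runs the registered callbacks in reverse order.

run_session()
print(calls)
```

Registering each cleanup right after its setup step succeeds means a failure halfway through still releases everything acquired so far, which is what the nested if/else blocks in the complete examples achieve by hand.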

Example code

C version

```c
#include <libuvc/libuvc.h>
#include <stdio.h>
#include <string.h>
#include <strings.h>  /* strcasecmp */
#include <signal.h>
#include <stdbool.h>
#include <unistd.h>   /* sleep */

/* Return a referenced pointer to the first device whose product name matches, or NULL. */
uvc_device_t* find_device(uvc_context_t* ctx) {
  uvc_device_t **list;
  if (uvc_get_device_list(ctx, &list) != UVC_SUCCESS) return NULL;
  uvc_device_t *dev = NULL;
  uvc_device_descriptor_t *desc;
  for (int i = 0; !dev && list[i]; ++i) {
    if (uvc_get_device_descriptor(list[i], &desc) != UVC_SUCCESS) continue;
    /* product may be NULL when the device has no product string */
    if (desc->product && strcasecmp(desc->product, "TSTC Web Camera") == 0) {
      dev = list[i];
      uvc_ref_device(dev);  /* keep the device alive after the list is freed */
    }
    uvc_free_device_descriptor(desc);
  }
  uvc_free_device_list(list, 1);
  return dev;
}

/* Check whether the device advertises the given format, size and default frame rate. */
bool find_format(uvc_device_handle_t *devh, enum uvc_vs_desc_subtype frame_format, int width, int height, int fps) {
  for (const uvc_format_desc_t *fmt_desc = uvc_get_format_descs(devh); fmt_desc; fmt_desc = fmt_desc->next) {
    if (fmt_desc->bDescriptorSubtype != frame_format) continue;
    for (const uvc_frame_desc_t *frm_desc = fmt_desc->frame_descs; frm_desc; frm_desc = frm_desc->next) {
      /* dwDefaultFrameInterval is in 100 ns units, so fps = 10^7 / interval */
      if (frm_desc->wWidth == width && frm_desc->wHeight == height &&
          frm_desc->dwDefaultFrameInterval != 0 &&
          (int)(10000000. / frm_desc->dwDefaultFrameInterval + .5) == fps) return true;
    }
  }
  return false;
}

/* Called by libuvc for every frame; prints the capture-finished timestamp. */
void callback(uvc_frame_t *frame, void *ptr) {
  (void)ptr;
  printf("%ld.%09ld\n", (long)frame->capture_time_finished.tv_sec, (long)frame->capture_time_finished.tv_nsec);
  if (frame->frame_format == UVC_FRAME_FORMAT_MJPEG) {
    // Uncomment to save each frame as a JPEG file named after its timestamp:
    // char filename[32];
    // snprintf(filename, sizeof filename, "%ld.%09ld.jpg", (long)frame->capture_time_finished.tv_sec, (long)frame->capture_time_finished.tv_nsec);
    // FILE *fp = fopen(filename, "wb");
    // if (fp) { fwrite(frame->data, 1, frame->data_bytes, fp); fclose(fp); }
  }
}

/* Written by the signal handler, polled by main. */
volatile sig_atomic_t streaming = 1;

void terminate(int sig) {
  (void)sig;
  streaming = 0;
}

int main(int argc, char **argv) {
  (void)argc; (void)argv;
  uvc_context_t *ctx;
  uvc_error_t res = uvc_init(&ctx, NULL);
  if (res < 0) {
    uvc_perror(res, "uvc_init");
    return res;
  }
  puts("UVC initialized");

  uvc_device_t *dev = find_device(ctx);
  if (dev != NULL) {
    uvc_device_handle_t *devh;
    res = uvc_open(dev, &devh);
    if (res == UVC_SUCCESS) {
      puts("Device opened");
      if (find_format(devh, UVC_VS_FORMAT_MJPEG, 2560, 800, 60)) {
        puts("format: MJPG 2560x800 60fps");
        uvc_stream_ctrl_t ctrl;
        res = uvc_get_stream_ctrl_format_size(devh, &ctrl, UVC_FRAME_FORMAT_MJPEG, 2560, 800, 60);
        if (res == UVC_SUCCESS) {
          uvc_print_stream_ctrl(&ctrl, stderr);
          res = uvc_start_streaming(devh, &ctrl, callback, (void *) 12345, 0);
          if (res == UVC_SUCCESS) {
            puts("Streaming...");
            puts("Enabling auto exposure ...");
            const uint8_t UVC_AUTO_EXPOSURE_MODE_AUTO = 2;
            res = uvc_set_ae_mode(devh, UVC_AUTO_EXPOSURE_MODE_AUTO);
            if (res == UVC_ERROR_PIPE) {
              puts(" ... full AE not supported, trying aperture priority mode");
              const uint8_t UVC_AUTO_EXPOSURE_MODE_APERTURE_PRIORITY = 8;
              res = uvc_set_ae_mode(devh, UVC_AUTO_EXPOSURE_MODE_APERTURE_PRIORITY);
              if (res < 0) uvc_perror(res, " ... uvc_set_ae_mode failed to enable aperture priority mode");
              else puts(" ... enabled aperture priority auto exposure mode");
            } else if (res == UVC_SUCCESS) puts(" ... enabled auto exposure");
            else uvc_perror(res, " ... uvc_set_ae_mode failed to enable auto exposure mode");

            signal(SIGINT, terminate);
            signal(SIGTSTP, terminate);
            signal(SIGQUIT, terminate);
            signal(SIGTERM, terminate);
            /* Sleep instead of spinning; a signal interrupts sleep() early. */
            while (streaming) sleep(1);

            uvc_stop_streaming(devh);
            puts("Done streaming.");
          } else uvc_perror(res, "start_streaming");
        } else uvc_perror(res, "get_mode");
      } else {
        printf("unsupported format: MJPG 2560x800 60fps, available formats:\n");
        uvc_print_diag(devh, stderr);
      }
      uvc_close(devh);
      puts("Device closed");
    } else uvc_perror(res, "uvc_open");
    uvc_unref_device(dev);
  } else uvc_perror(UVC_ERROR_NO_DEVICE, "uvc_find_device");

  uvc_exit(ctx);
  puts("UVC exited");
  return 0;
}
```

Python version

This part assumes you know how to call a C shared library from Python. Here are two additional lessons from practice:

- When a C struct contains pointers to itself or to other structs, you can declare those fields in the Python structure as the generic pointer type `ctypes.c_void_p`, and convert them to the real pointer type with `ctypes.cast` only when the pointer is actually needed. Take the uvc_format_desc struct as an example:

  ```c
  struct uvc_format_desc {
    struct uvc_streaming_interface *parent;
    struct uvc_format_desc *prev, *next;
    /** Type of image stream, such as JPEG or uncompressed. */
    enum uvc_vs_desc_subtype bDescriptorSubtype;
    /** Identifier of this format within the VS interface's format list */
    uint8_t bFormatIndex;
    uint8_t bNumFrameDescriptors;
    /** Format specifier */
    union {
      uint8_t guidFormat[16];
      uint8_t fourccFormat[4];
    };
    /** Format-specific data */
    union {
      /** BPP for uncompressed stream */
      uint8_t bBitsPerPixel;
      /** Flags for JPEG stream */
      uint8_t bmFlags;
    };
    /** Default {uvc_frame_desc} to choose given this format */
    uint8_t bDefaultFrameIndex;
    uint8_t bAspectRatioX;
    uint8_t bAspectRatioY;
    uint8_t bmInterlaceFlags;
    uint8_t bCopyProtect;
    uint8_t bVariableSize;
    /** Available frame specifications for this format */
    struct uvc_frame_desc *frame_descs;
    struct uvc_still_frame_desc *still_frame_desc;
  };
  ```

  In the Python uvc_format_desc structure, the parent, prev, next, frame_descs and still_frame_desc pointers can all be declared as `ctypes.c_void_p`:

  ```python
  class uvc_format_desc(ctypes.Structure):
      class U(ctypes.Union):
          _fields_ = [
              ('guidFormat', ctypes.c_uint8 * 16),
              ('fourccFormat', ctypes.c_uint8 * 4)
          ]
      class V(ctypes.Union):
          _fields_ = [
              ('bBitsPerPixel', ctypes.c_uint8),
              ('bmFlags', ctypes.c_uint8)
          ]
      _fields_ = [
          ('parent', ctypes.c_void_p),
          ('prev', ctypes.c_void_p),
          ('next', ctypes.c_void_p),
          ('bDescriptorSubtype', ctypes.c_int),
          ('bFormatIndex', ctypes.c_uint8),
          ('bNumFrameDescriptors', ctypes.c_uint8),
          ('u', U),
          ('v', V),
          ('bDefaultFrameIndex', ctypes.c_uint8),
          ('bAspectRatioX', ctypes.c_uint8),
          ('bAspectRatioY', ctypes.c_uint8),
          ('bmInterlaceFlags', ctypes.c_uint8),
          ('bCopyProtect', ctypes.c_uint8),
          ('bVariableSize', ctypes.c_uint8),
          ('frame_descs', ctypes.c_void_p),
          ('still_frame_desc', ctypes.c_void_p)
      ]
  ```

  Whenever Python needs one of these pointers, convert it to the real pointer type with `ctypes.cast`. The following shows the conversion for the frame_descs and next pointers:

  ```python
  def find_format(devh, frame_format: int, width: int, height: int, fps: int):
      libuvc.uvc_get_format_descs.restype = ctypes.POINTER(uvc_format_desc)
      fmt_desc = libuvc.uvc_get_format_descs(devh)
      while fmt_desc:  # a NULL ctypes pointer is falsy
          if fmt_desc.contents.bDescriptorSubtype == frame_format:
              frm_desc = ctypes.cast(fmt_desc.contents.frame_descs, ctypes.POINTER(uvc_frame_desc))
              while frm_desc:
                  interval = frm_desc.contents.dwDefaultFrameInterval  # in 100 ns units
                  if frm_desc.contents.wWidth == width and frm_desc.contents.wHeight == height and \
                          interval and int(10000000 / interval + .5) == fps:
                      return True
                  frm_desc = ctypes.cast(frm_desc.contents.next, ctypes.POINTER(uvc_frame_desc))
          fmt_desc = ctypes.cast(fmt_desc.contents.next, ctypes.POINTER(uvc_format_desc))
      return False
  ```

- To pass a Python function to a C library function as a callback, bridge it with `ctypes.CFUNCTYPE`. Its first argument is the callback's return type; the remaining arguments follow the order of the callback's parameters and must have compatible types:

  ```python
  def callback(frame_ptr, ptr):
      # frame_ptr arrives as a POINTER(uvc_frame), ptr as the user pointer
      frame = frame_ptr.contents
      print(f'{frame.capture_time_finished.tv_sec}.{frame.capture_time_finished.tv_nsec:09d}')

  # Bridge with ctypes.CFUNCTYPE; keep cb referenced for as long as streaming runs
  cb = ctypes.CFUNCTYPE(None, ctypes.POINTER(uvc_frame), ctypes.c_void_p)(callback)
  res = libuvc.uvc_start_streaming(devh, ctypes.byref(ctrl), cb, ctypes.c_void_p(12345), 0)
  ```
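Both tips can be tried without a camera. The sketch below is an illustration under its own assumptions, not libuvc code: `Node` is a made-up structure whose `next` field is declared as `ctypes.c_void_p` and cast back on demand, and the callback bridge is shown with the C library's `qsort` in place of uvc_start_streaming.

```python
import ctypes
import ctypes.util

class Node(ctypes.Structure):
    # 'next' points to another Node but is declared as a generic pointer
    _fields_ = [('value', ctypes.c_int), ('next', ctypes.c_void_p)]

def walk(head_addr):
    """Follow the 'next' pointers, casting each address back to POINTER(Node)."""
    values = []
    node = ctypes.cast(head_addr, ctypes.POINTER(Node))
    while node:  # a NULL ctypes pointer is falsy
        values.append(node.contents.value)
        node = ctypes.cast(node.contents.next, ctypes.POINTER(Node))
    return values

# Build the list 1 -> 2 -> 3 in Python-owned memory.
nodes = [Node(v, None) for v in (1, 2, 3)]
for a, b in zip(nodes, nodes[1:]):
    a.next = ctypes.addressof(b)
print(walk(ctypes.addressof(nodes[0])))

# Callback bridging: return type first, then the parameter types in order.
libc = ctypes.CDLL(ctypes.util.find_library('c'))
CMPFUNC = ctypes.CFUNCTYPE(ctypes.c_int,
                           ctypes.POINTER(ctypes.c_int),
                           ctypes.POINTER(ctypes.c_int))

def compare(a, b):
    return a[0] - b[0]

cmp_cb = CMPFUNC(compare)  # keep a reference while C may still call it
libc.qsort.restype = None
libc.qsort.argtypes = [ctypes.c_void_p, ctypes.c_size_t, ctypes.c_size_t, CMPFUNC]
numbers = (ctypes.c_int * 5)(5, 1, 7, 33, 99)
libc.qsort(numbers, len(numbers), ctypes.sizeof(ctypes.c_int), cmp_cb)
print(list(numbers))
```

With `qsort` the callback is only used during the call, but a streaming callback is invoked later from another thread, so the object returned by `CFUNCTYPE(...)` has to stay referenced until streaming stops.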

Complete example

```python
# coding=utf-8
import os
import ctypes
import signal
import time

import cv2
import numpy as np

libuvc = ctypes.CDLL('libuvc.so')

UVC_SUCCESS = 0
UVC_ERROR_PIPE = -9
UVC_ERROR_NO_DEVICE = -4

UVC_VS_FORMAT_UNCOMPRESSED = 4
UVC_VS_FORMAT_MJPEG = 6
UVC_VS_FORMAT_FRAME_BASED = 16

UVC_FRAME_FORMAT_YUYV = 3
UVC_FRAME_FORMAT_MJPEG = 7
UVC_FRAME_FORMAT_H264 = 8

UVC_AUTO_EXPOSURE_MODE_AUTO = 2
UVC_AUTO_EXPOSURE_MODE_APERTURE_PRIORITY = 8


class uvc_device(ctypes.Structure):
    _fields_ = [
        ('ctx', ctypes.c_void_p),
        ('ref', ctypes.c_int),
        ('usb_dev', ctypes.c_void_p)
    ]


class uvc_device_handle(ctypes.Structure):
    _fields_ = [
        ('dev', ctypes.POINTER(uvc_device)),
        ('prev', ctypes.c_void_p),
        ('next', ctypes.c_void_p),
        ('usb_devh', ctypes.c_void_p),
        ('info', ctypes.c_void_p),
        ('status_xfer', ctypes.c_void_p),
        ('status_buf', ctypes.c_uint8 * 32),
        ('status_cb', ctypes.c_void_p),
        ('status_user_ptr', ctypes.c_void_p),
        ('button_cb', ctypes.c_void_p),
        ('button_user_ptr', ctypes.c_void_p),
        ('streams', ctypes.c_void_p),
        ('is_isight', ctypes.c_uint8),
        ('claimed', ctypes.c_uint32)
    ]


class uvc_context(ctypes.Structure):
    _fields_ = [
        ('usb_ctx', ctypes.c_void_p),
        ('own_usb_ctx', ctypes.c_uint8),
        ('open_devices', ctypes.POINTER(uvc_device_handle)),
        ('handler_thread', ctypes.c_ulong),
        ('kill_handler_thread', ctypes.c_int)
    ]


class uvc_device_descriptor(ctypes.Structure):
    _fields_ = [
        ('idVendor', ctypes.c_uint16),
        ('idProduct', ctypes.c_uint16),
        ('bcdUVC', ctypes.c_uint16),
        ('serialNumber', ctypes.c_char_p),
        ('manufacturer', ctypes.c_char_p),
        ('product', ctypes.c_char_p)
    ]


class uvc_frame_desc(ctypes.Structure):
    _fields_ = [
        ('parent', ctypes.c_void_p),
        ('prev', ctypes.c_void_p),
        ('next', ctypes.c_void_p),
        ('bDescriptorSubtype', ctypes.c_int),
        ('bFrameIndex', ctypes.c_uint8),
        ('bmCapabilities', ctypes.c_uint8),
        ('wWidth', ctypes.c_uint16),
        ('wHeight', ctypes.c_uint16),
        ('dwMinBitRate', ctypes.c_uint32),
        ('dwMaxBitRate', ctypes.c_uint32),
        ('dwMaxVideoFrameBufferSize', ctypes.c_uint32),
        ('dwDefaultFrameInterval', ctypes.c_uint32),
        ('dwMinFrameInterval', ctypes.c_uint32),
        ('dwMaxFrameInterval', ctypes.c_uint32),
        ('dwFrameIntervalStep', ctypes.c_uint32),
        ('bFrameIntervalType', ctypes.c_uint8),               # uint8_t in the C header
        ('dwBytesPerLine', ctypes.c_uint32),
        ('intervals', ctypes.POINTER(ctypes.c_uint32))        # uint32_t * in the C header
    ]


class uvc_format_desc(ctypes.Structure):
    class U(ctypes.Union):
        _fields_ = [
            ('guidFormat', ctypes.c_uint8 * 16),
            ('fourccFormat', ctypes.c_uint8 * 4)
        ]

    class V(ctypes.Union):
        _fields_ = [
            ('bBitsPerPixel', ctypes.c_uint8),
            ('bmFlags', ctypes.c_uint8)
        ]

    # Expose the members of the anonymous C unions directly on the structure,
    # e.g. fmt.guidFormat or fmt.bBitsPerPixel
    _anonymous_ = ('u', 'v')
    _fields_ = [
        ('parent', ctypes.c_void_p),
        ('prev', ctypes.c_void_p),
        ('next', ctypes.c_void_p),
        ('bDescriptorSubtype', ctypes.c_int),
        ('bFormatIndex', ctypes.c_uint8),
        ('bNumFrameDescriptors', ctypes.c_uint8),
        ('u', U),
        ('v', V),
        ('bDefaultFrameIndex', ctypes.c_uint8),
        ('bAspectRatioX', ctypes.c_uint8),
        ('bAspectRatioY', ctypes.c_uint8),
        ('bmInterlaceFlags', ctypes.c_uint8),
        ('bCopyProtect', ctypes.c_uint8),
        ('bVariableSize', ctypes.c_uint8),
        ('frame_descs', ctypes.c_void_p),
        ('still_frame_desc', ctypes.c_void_p)
    ]


class uvc_stream_ctrl(ctypes.Structure):
    _fields_ = [
        ('bmHint', ctypes.c_uint16),  # uint16_t in the C header
        ('bFormatIndex', ctypes.c_uint8),
        ('bFrameIndex', ctypes.c_uint8),
        ('dwFrameInterval', ctypes.c_uint32),
        ('wKeyFrameRate', ctypes.c_uint16),
        ('wPFrameRate', ctypes.c_uint16),
        ('wCompQuality', ctypes.c_uint16),
        ('wCompWindowSize', ctypes.c_uint16),
        ('wDelay', ctypes.c_uint16),
        ('dwMaxVideoFrameSize', ctypes.c_uint32),
        ('dwMaxPayloadTransferSize', ctypes.c_uint32),
        ('dwClockFrequency', ctypes.c_uint32),
        ('bmFramingInfo', ctypes.c_uint8),
        ('bPreferredVersion', ctypes.c_uint8),
        ('bMinVersion', ctypes.c_uint8),
        ('bMaxVersion', ctypes.c_uint8),
        ('bInterfaceNumber', ctypes.c_uint8)
    ]


# tv_sec is time_t, assumed here to be a C long (true on 64-bit Linux)
class timeval(ctypes.Structure):
    _fields_ = [
        ('tv_sec', ctypes.c_long),
        ('tv_usec', ctypes.c_long)
    ]


class timespec(ctypes.Structure):
    _fields_ = [
        ('tv_sec', ctypes.c_long),
        ('tv_nsec', ctypes.c_long)
    ]


class uvc_frame(ctypes.Structure):
    _fields_ = [
        ('data', ctypes.c_void_p),
        ('data_bytes', ctypes.c_size_t),
        ('width', ctypes.c_uint32),
        ('height', ctypes.c_uint32),
        ('frame_format', ctypes.c_int),
        ('step', ctypes.c_size_t),
        ('sequence', ctypes.c_uint32),
        ('capture_time', timeval),
        ('capture_time_finished', timespec),
        ('source', ctypes.POINTER(uvc_device_handle)),
        ('library_owns_data', ctypes.c_uint8),
        ('metadata', ctypes.c_void_p),
        ('metadata_bytes', ctypes.c_size_t)
    ]


# Declare the prototypes that involve pointers or strings. Without them ctypes
# assumes int, which truncates 64-bit pointers such as FILE *.
libuvc.uvc_perror.argtypes = [ctypes.c_int, ctypes.c_char_p]
libuvc.uvc_get_format_descs.restype = ctypes.POINTER(uvc_format_desc)
libuvc.uvc_print_stream_ctrl.argtypes = [ctypes.c_void_p, ctypes.c_void_p]
libuvc.uvc_print_diag.argtypes = [ctypes.c_void_p, ctypes.c_void_p]
# fopen/fclose live in libc and are resolved through libuvc's dependencies
libuvc.fopen.restype = ctypes.c_void_p
libuvc.fopen.argtypes = [ctypes.c_char_p, ctypes.c_char_p]
libuvc.fclose.argtypes = [ctypes.c_void_p]


def find_device(ctx, device_name: str):
    """Return a referenced POINTER(uvc_device) whose product name matches, or None."""
    dev_list = ctypes.POINTER(ctypes.POINTER(uvc_device))()
    if libuvc.uvc_get_device_list(ctx, ctypes.byref(dev_list)) != UVC_SUCCESS:
        return None
    dev, i = None, 0
    desc = ctypes.POINTER(uvc_device_descriptor)()
    while dev is None and dev_list[i]:  # the list is NULL-terminated
        if libuvc.uvc_get_device_descriptor(dev_list[i], ctypes.byref(desc)) == UVC_SUCCESS:
            product = desc.contents.product  # None when there is no product string
            if product and product.decode(errors='replace').lower() == device_name.lower():
                # cast() copies the pointer value, so dev stays valid after the list is freed
                dev = ctypes.cast(dev_list[i], ctypes.POINTER(uvc_device))
                libuvc.uvc_ref_device(dev)
            libuvc.uvc_free_device_descriptor(desc)
        i += 1
    libuvc.uvc_free_device_list(dev_list, 1)
    return dev


def find_format(devh, vs_format: int, width: int, height: int, fps: int):
    fmt_desc = libuvc.uvc_get_format_descs(devh)
    while fmt_desc:  # a NULL ctypes pointer is falsy
        if fmt_desc.contents.bDescriptorSubtype == vs_format:
            frm_desc = ctypes.cast(fmt_desc.contents.frame_descs, ctypes.POINTER(uvc_frame_desc))
            while frm_desc:
                interval = frm_desc.contents.dwDefaultFrameInterval  # in 100 ns units
                if frm_desc.contents.wWidth == width and frm_desc.contents.wHeight == height and \
                        interval and int(10000000 / interval + .5) == fps:
                    return True
                frm_desc = ctypes.cast(frm_desc.contents.next, ctypes.POINTER(uvc_frame_desc))
        fmt_desc = ctypes.cast(fmt_desc.contents.next, ctypes.POINTER(uvc_format_desc))
    return False


def get_frame_format(vs_format: int):
    if vs_format == UVC_VS_FORMAT_MJPEG:
        return UVC_FRAME_FORMAT_MJPEG
    if vs_format == UVC_VS_FORMAT_FRAME_BASED:
        return UVC_FRAME_FORMAT_H264
    return UVC_FRAME_FORMAT_YUYV


def fourcc(frame_format: int):
    if frame_format == UVC_FRAME_FORMAT_MJPEG:
        return 'MJPG'
    if frame_format == UVC_FRAME_FORMAT_H264:
        return 'H264'
    return 'YUYV'


def file_to_print(file_ptr, file_path: str):
    """Close the C FILE *, print what libuvc wrote into it, then delete the file."""
    libuvc.fclose(file_ptr)  # fclose also flushes
    with open(file_path, 'r') as f:
        print(f.read())
    os.remove(file_path)


def default_callback(frame_ptr, ptr):
    frame = frame_ptr.contents
    if frame.frame_format == UVC_FRAME_FORMAT_MJPEG:
        # Copy the JPEG bytes out of the library-owned buffer before decoding
        jpeg = ctypes.string_at(frame.data, frame.data_bytes)
        cv2.namedWindow('tstc web cam preview', cv2.WINDOW_AUTOSIZE)
        cv2.imshow('tstc web cam preview', cv2.imdecode(np.frombuffer(jpeg, dtype=np.uint8), cv2.IMREAD_GRAYSCALE))
        cv2.waitKey(1)
    print(f'{frame.capture_time_finished.tv_sec}.{frame.capture_time_finished.tv_nsec:09d} {fourcc(frame.frame_format)}')


streaming = True


def stop(sig: int, frame):
    global streaming
    streaming = False


def start_stream(device_name: str, vs_format: int, width: int, height: int, fps: int, callback):
    ctx = ctypes.POINTER(uvc_context)()
    res = libuvc.uvc_init(ctypes.byref(ctx), None)
    if res < 0:
        return libuvc.uvc_perror(res, b'uvc_init')
    print('UVC initialized')

    dev = find_device(ctx, device_name)
    if dev:
        devh = ctypes.POINTER(uvc_device_handle)()
        res = libuvc.uvc_open(dev, ctypes.byref(devh))
        if res == UVC_SUCCESS:
            print('Device opened')
            fmt = get_frame_format(vs_format)
            if find_format(devh, vs_format, width, height, fps):
                print(f'format: {fourcc(fmt)} {width}x{height} {fps}fps')
                ctrl = uvc_stream_ctrl()
                res = libuvc.uvc_get_stream_ctrl_format_size(devh, ctypes.byref(ctrl), fmt, width, height, fps)
                if res == UVC_SUCCESS:
                    file_ptr = libuvc.fopen(b'tmp', b'w')
                    libuvc.uvc_print_stream_ctrl(ctypes.byref(ctrl), file_ptr)
                    file_to_print(file_ptr, 'tmp')
                    # Keep cb referenced until streaming has stopped
                    cb = ctypes.CFUNCTYPE(None, ctypes.POINTER(uvc_frame), ctypes.c_void_p)(callback)
                    res = libuvc.uvc_start_streaming(devh, ctypes.byref(ctrl), cb, ctypes.c_void_p(12345), 0)
                    if res == UVC_SUCCESS:
                        print('Streaming...')
                        print('Enabling auto exposure ...')
                        res = libuvc.uvc_set_ae_mode(devh, UVC_AUTO_EXPOSURE_MODE_AUTO)
                        if res == UVC_ERROR_PIPE:
                            print(' ... full AE not supported, trying aperture priority mode')
                            res = libuvc.uvc_set_ae_mode(devh, UVC_AUTO_EXPOSURE_MODE_APERTURE_PRIORITY)
                            if res < 0:
                                libuvc.uvc_perror(res, b' ... uvc_set_ae_mode failed to enable aperture priority mode')
                            else:
                                print(' ... enabled aperture priority auto exposure mode')
                        elif res == UVC_SUCCESS:
                            print(' ... enabled auto exposure')
                        else:
                            libuvc.uvc_perror(res, b' ... uvc_set_ae_mode failed to enable auto exposure mode')

                        signal.signal(signal.SIGINT, stop)
                        signal.signal(signal.SIGTSTP, stop)
                        signal.signal(signal.SIGQUIT, stop)
                        signal.signal(signal.SIGTERM, stop)
                        while streaming:
                            time.sleep(0.1)  # sleep instead of spinning at 100% CPU

                        libuvc.uvc_stop_streaming(devh)
                        print('Done streaming.')
                    else:
                        libuvc.uvc_perror(res, b'start_streaming')
                else:
                    libuvc.uvc_perror(res, b'get_mode')
            else:
                print(f'unsupported format: {fourcc(fmt)} {width}x{height} {fps}fps, available formats:')
                file_ptr = libuvc.fopen(b'tmp', b'w')
                libuvc.uvc_print_diag(devh, file_ptr)
                file_to_print(file_ptr, 'tmp')
            libuvc.uvc_close(devh)
            print('Device closed')
        else:
            libuvc.uvc_perror(res, b'uvc_open')
        libuvc.uvc_unref_device(dev)
    else:
        libuvc.uvc_perror(UVC_ERROR_NO_DEVICE, b'uvc_find_device')

    libuvc.uvc_exit(ctx)
    print('UVC exited')


if __name__ == '__main__':
    # start_stream('TSTC Web Camera', UVC_VS_FORMAT_UNCOMPRESSED, 2560, 800, 5, default_callback)
    start_stream('TSTC Web Camera', UVC_VS_FORMAT_MJPEG, 2560, 800, 60, default_callback)
```
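find_format in both versions compares the requested frame rate against dwDefaultFrameInterval, which UVC expresses in units of 100 ns. The sketch below isolates that conversion so it can be checked without a device; `interval_to_fps` and `fps_to_interval` are hypothetical helper names, and the sample intervals are illustrative values rather than readings from the camera used above.

```python
UNITS_PER_SECOND = 10_000_000  # frame intervals are counted in 100 ns units

def interval_to_fps(interval_100ns: int) -> int:
    """Round a frame interval (100 ns units) to the nearest whole frame rate."""
    if interval_100ns <= 0:
        raise ValueError('frame interval must be positive')
    return int(UNITS_PER_SECOND / interval_100ns + 0.5)

def fps_to_interval(fps: int) -> int:
    """Frame interval (100 ns units) for a whole frame rate, truncated."""
    if fps <= 0:
        raise ValueError('fps must be positive')
    return UNITS_PER_SECOND // fps

for interval in (166666, 333333, 2000000):
    print(interval, '->', interval_to_fps(interval), 'fps')
```

The rounding matters because 60 fps does not divide 10,000,000 evenly: the interval is stored as 166666, and a plain integer division back to a rate would not be a reliable comparison.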