Qwen-Image-Edit-2511作为一款性能出色的图像编辑模型,在ComfyUI中部署时却受限于显存资源。本文针对4090显卡(24G显存)场景,分享量化模型的部署流程、关键避坑点,以及不同采样步数下的效果对比,帮助大家快速落地实践。

一、前置准备:ComfyUI安装

ComfyUI基础安装流程此处不赘述,推荐参考官方中文指南,步骤清晰且适配Linux环境:ComfyUI Linux安装官方指南

二、核心问题:显存限制与解决方案

4090显卡的24G显存无法承载Qwen-Image-Edit-2511原始模型(显存溢出),因此必须使用量化模型。考虑到外网访问和下载限制,本文提供基于hugging-face镜像 和modelscope的国内可访问下载链接,同时明确各模型的存放路径(直接关系到模型能否正常加载)。

2.1 量化模型下载清单(含路径+命令)

所有模型需下载至ComfyUI对应目录,以下是完整的路径说明和wget下载命令(复制到终端直接执行即可):

1. LoRA模型(路径:ComfyUI/models/loras)

bash

wget https://hf-mirror.com/lightx2v/Qwen-Image-Edit-2511-Lightning/resolve/main/Qwen-Image-Edit-2511-Lightning-4steps-V1.0-bf16.safetensors2. VAE模型(路径:ComfyUI/models/vae)

bash

wget https://hf-mirror.com/Comfy-Org/Qwen-Image_ComfyUI/resolve/main/split_files/vae/qwen_image_vae.safetensors3. UNet模型(路径:ComfyUI/models/unet)

bash

wget "https://modelscope.cn/api/v1/models/unsloth/Qwen-Image-Edit-2511-GGUF/repo?Revision=master&FilePath=qwen-image-edit-2511-Q4_K_M.gguf" -O qwen-image-edit-2511-Q4_K_M.gguf4. CLIP模型(路径:ComfyUI/models/clip)

bash

# 主模型文件

wget -c "https://modelscope.cn/api/v1/models/unsloth/Qwen2.5-VL-7B-Instruct-GGUF/repo?Revision=master&FilePath=Qwen2.5-VL-7B-Instruct-Q4_K_M.gguf" -O Qwen2.5-VL-7B-Instruct-Q4_K_M.gguf

# 关键依赖文件(必下!)

wget -c "https://modelscope.cn/api/v1/models/unsloth/Qwen2.5-VL-7B-Instruct-GGUF/repo?Revision=master&FilePath=mmproj-F16.gguf" -O Qwen2.5-VL-7B-Instruct-mmproj-BF16.gguf2.2 致命坑点:缺失mmproj文件导致的报错解决方案

⚠️ 重点提醒:CLIP模型对应的mmproj文件是必下载项!缺失该文件会直接导致图像编辑时出现「矩阵维度不匹配」的致命错误,排查过程耗时耗力。

我首次部署时因遗漏该文件,出现如下报错(核心信息:mat1 and mat2 shapes cannot be multiplied):

Prompt executed in 10.11 seconds

got prompt

!!! Exception during processing !!! mat1 and mat2 shapes cannot be multiplied (748x1280 and 3840x1280)

Traceback (most recent call last):

File "/root/comfy/ComfyUI/execution.py", line 516, in execute

output_data, output_ui, has_subgraph, has_pending_tasks = await get_output_data(prompt_id, unique_id, obj, input_data_all, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb, v3_data=v3_data)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/execution.py", line 330, in get_output_data

return_values = await _async_map_node_over_list(prompt_id, unique_id, obj, input_data_all, obj.FUNCTION, allow_interrupt=True, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb, v3_data=v3_data)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/execution.py", line 304, in _async_map_node_over_list

await process_inputs(input_dict, i)

File "/root/comfy/ComfyUI/execution.py", line 292, in process_inputs

result = f(**inputs)

^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy_api/internal/__init__.py", line 149, in wrapped_func

return method(locked_class, **inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy_api/latest/_io.py", line 1520, in EXECUTE_NORMALIZED

to_return = cls.execute(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy_extras/nodes_qwen.py", line 103, in execute

conditioning = clip.encode_from_tokens_scheduled(tokens)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/sd.py", line 207, in encode_from_tokens_scheduled

pooled_dict = self.encode_from_tokens(tokens, return_pooled=return_pooled, return_dict=True)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/sd.py", line 271, in encode_from_tokens

o = self.cond_stage_model.encode_token_weights(tokens)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/text_encoders/qwen_image.py", line 62, in encode_token_weights

out, pooled, extra = super().encode_token_weights(token_weight_pairs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/sd1_clip.py", line 704, in encode_token_weights

out = getattr(self, self.clip).encode_token_weights(token_weight_pairs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/sd1_clip.py", line 45, in encode_token_weights

o = self.encode(to_encode)

^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/sd1_clip.py", line 297, in encode

return self(tokens)

^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1775, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1786, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/sd1_clip.py", line 257, in forward

embeds, attention_mask, num_tokens, embeds_info = self.process_tokens(tokens, device)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/sd1_clip.py", line 219, in process_tokens

emb, extra = self.transformer.preprocess_embed(emb, device=device)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/text_encoders/llama.py", line 593, in preprocess_embed

return self.visual(image.to(device, dtype=torch.float32), grid), grid

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1775, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1786, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/text_encoders/qwen_vl.py", line 425, in forward

hidden_states = block(hidden_states, position_embeddings, cu_seqlens_now, optimized_attention=optimized_attention)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1775, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1786, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/text_encoders/qwen_vl.py", line 252, in forward

hidden_states = self.attn(hidden_states, position_embeddings, cu_seqlens, optimized_attention)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1775, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1786, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/text_encoders/qwen_vl.py", line 195, in forward

qkv = self.qkv(hidden_states)

^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1775, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy-env/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1786, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/ops.py", line 164, in forward

return self.forward_comfy_cast_weights(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/custom_nodes/ComfyUI-GGUF/ops.py", line 217, in forward_comfy_cast_weights

out = super().forward_comfy_cast_weights(input, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/root/comfy/ComfyUI/comfy/ops.py", line 157, in forward_comfy_cast_weights

x = torch.nn.functional.linear(input, weight, bias)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

RuntimeError: mat1 and mat2 shapes cannot be multiplied (748x1280 and 3840x1280)最终通过GitHubissue找到解决方案(感谢开源社区):TextEncodeQwenImageEdit mat1 and mat2 shapes cannot be multiplied 问题解决方案,核心就是补全mmproj文件。建议大家直接按上文清单下载,避免重复踩坑。

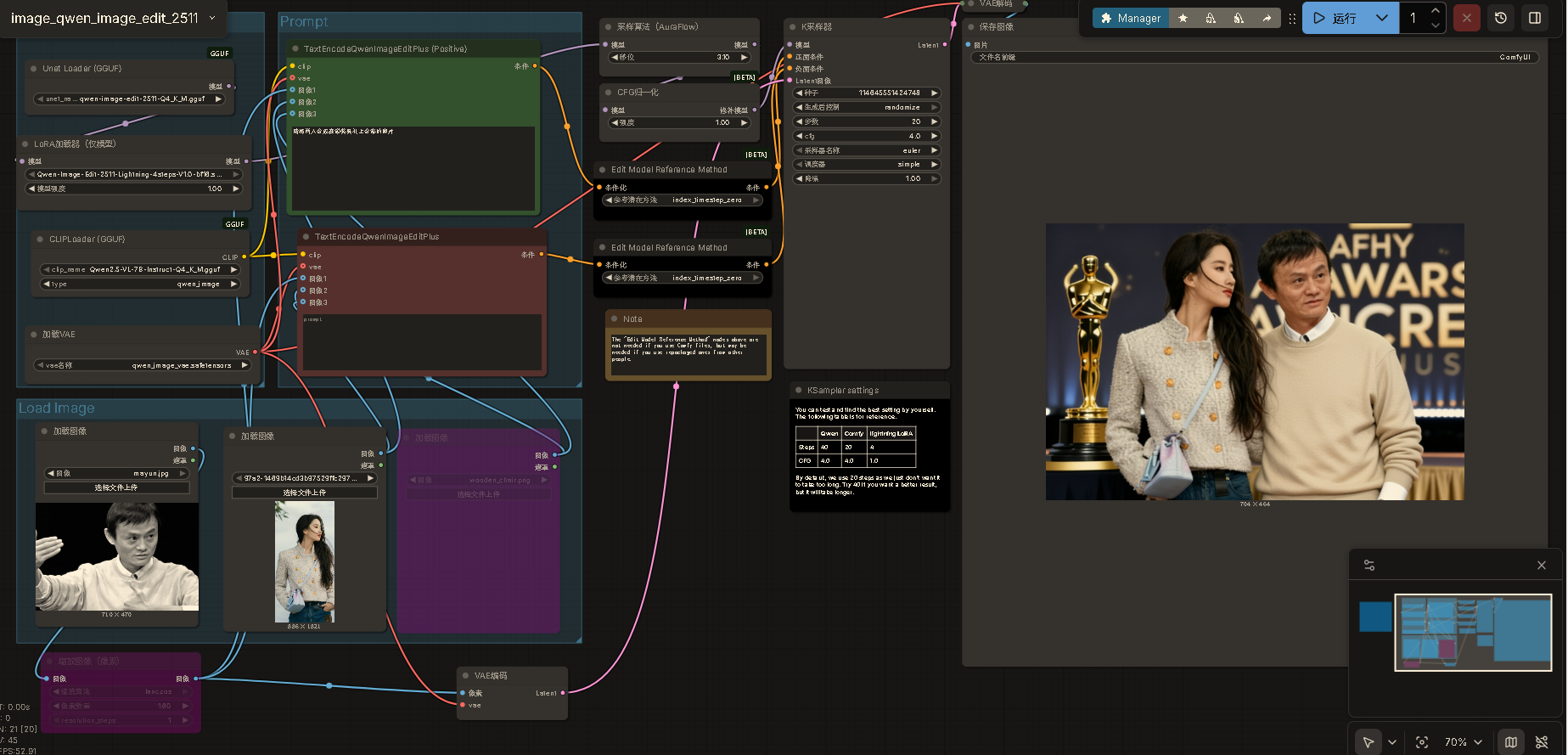

三、工作流配置与效果测试

模型部署完成后,需配置对应的工作流。以下是我测试用的工作流截图(可直接参考复刻):

本次测试以「三图编辑」为场景,重点验证不同K采样器步数对输出效果的影响,测试环境为4090显卡+Linux系统,具体结果如下:

3.1 20步采样:速度快但效果差

- 运行时长:1分40秒

- 效果问题:人物手臂存在明显割裂感;人物面部失真严重(如"马爸爸"面部完全识别不出)

- 效果截图:

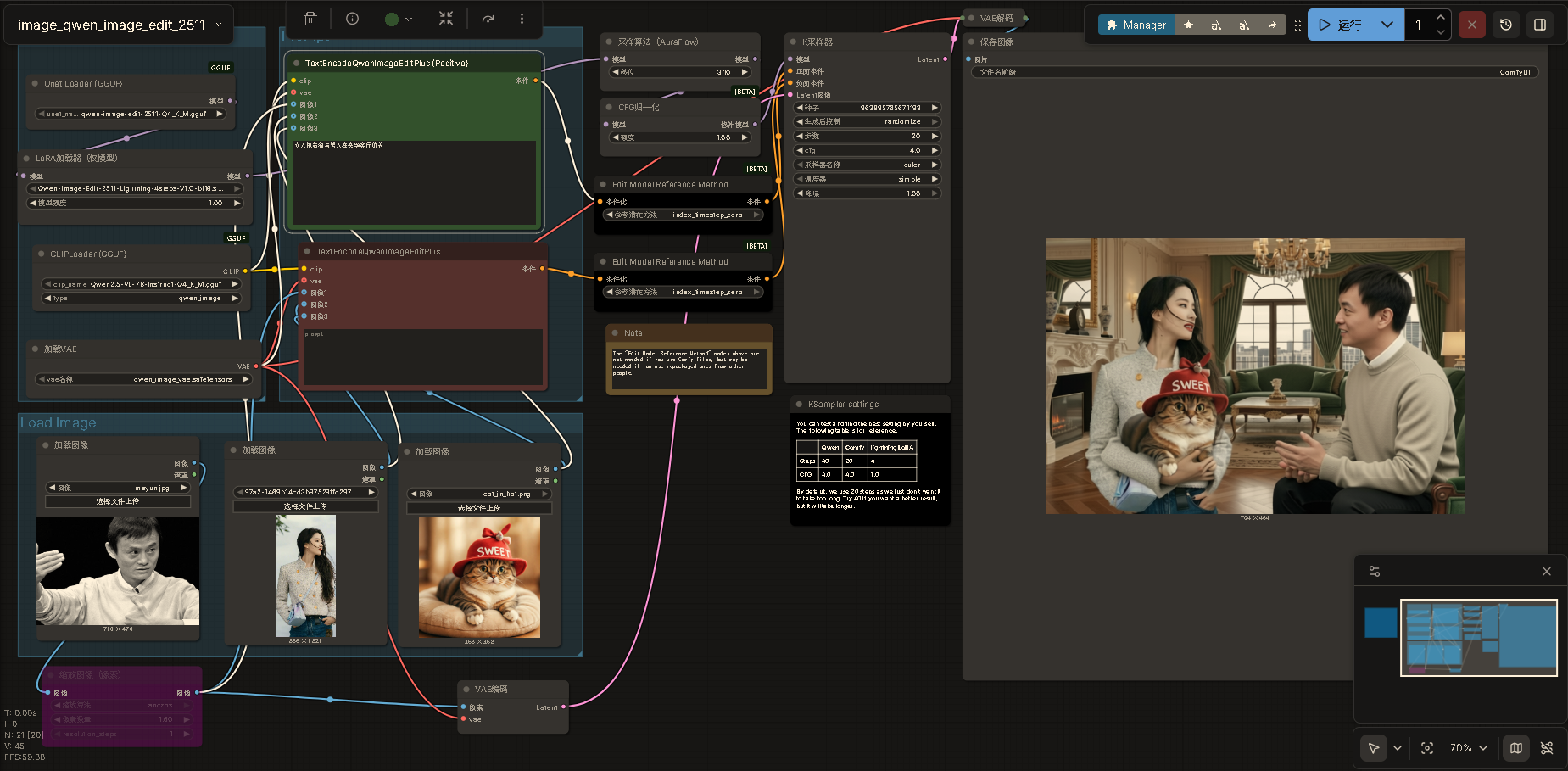

3.2 40步采样:效果略有提升但仍有缺陷

- 运行时长:4分37秒

- 效果问题:手部与手臂的割裂感未完全解决,仍存在明显衔接瑕疵

- 效果截图:

3.3 60步采样:效果达标但耗时增加

- 运行时长:6分57秒

- 效果表现:手臂衔接问题基本解决;但人物面部仍与原角色有较大差异,且出现非预期的衣物颜色变化(如浅灰色衣物变为黑色)

- 效果截图:

四、总结与后续优化方向

- 4090显卡运行Qwen-Image-Edit-2511需优先选择量化模型,按本文提供的国内镜像链接下载可规避网络问题,且mmproj文件不可遗漏;

- 采样步数与效果、速度呈正相关:20步适合快速预览,60步可解决核心瑕疵,但需接受更长耗时和人物脸部变更;

- 后续优化方向:可尝试调整工作流中的提示词精度、优化流程参数,或测试更高精度的量化模型(如Q2_K等),平衡效果与耗时。

如果大家在部署过程中遇到其他问题,欢迎在评论区交流~