Kubernetes Core Concepts: Service

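What a Service does

A Service is, at its core, Kubernetes' stable abstraction layer for exposing Pods. Its main job is to solve the access problems caused by Pods being dynamic, and it also provides load balancing and access policies. Its role can be summarized as:

- Solves the problem of access breaking when Pod IPs change, by providing a stable entry point;
- Acts as a proxy layer between clients and Pods, receiving and forwarding all requests;
- Tracks Pods dynamically through label selectors and keeps the endpoint list up to date automatically;
- Provides layer-4 (TCP/UDP) load balancing, distributing requests across backend Pods;
- Defines rules and policies for accessing Pods, standardizing how the service is reached;
- Prevents clients from losing track of Pods after they are recreated or rescheduled, keeping the service available.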

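The three kube-proxy proxy modes

userspace mode (deprecated)

- Forwarding: the kube-proxy process itself relays every connection in user space
- Characteristics: poor performance; kube-proxy sits in the data path, making it a single point of failure on each node; port contention. It was kept only for old clusters and was removed entirely in Kubernetes v1.26

iptables mode (default)

- Forwarding: iptables NAT rules in kernel space. kube-proxy only maintains the rules and never forwards traffic itself
- Characteristics: good performance and no single point of failure. Backends are picked at random with equal probability, and no other algorithms are available. The rule set bloats when there are many Services and Pods
- Use for: small and medium clusters

ipvs mode (recommended for high performance)

- Forwarding: the kernel IPVS module, which is designed specifically for load balancing
- Characteristics: best performance, several scheduling algorithms (round robin, least connection, etc.), scales to large numbers of Pods
- Use for: large / high-concurrency production clusters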

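| Mode | Where it runs | Performance | Algorithms | Status / use case |
|---|---|---|---|---|
| userspace | User space | Very poor | Round robin only | Deprecated / old clusters |
| iptables | Kernel space | Good | Random only | Default / small and medium clusters |
| ipvs | Kernel space | Best | Multiple | Recommended / large, high-concurrency clusters |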

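In short, kube-proxy evolved from inefficient user-space forwarding to high-performance kernel-space forwarding. Use iptables for small and medium clusters and ipvs for large ones.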
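You switch modes in kube-proxy's own configuration. The sketch below is a minimal example. It assumes a kubeadm-built cluster, where the `KubeProxyConfiguration` is stored under the `config.conf` key of the `kube-proxy` ConfigMap in `kube-system`; other installers may supply it differently. Only the fields relevant here are shown:

```yaml
# Excerpt of a KubeProxyConfiguration; all other fields are omitted.
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"        # "iptables" or "ipvs"; empty means the Linux default, iptables
ipvs:
  scheduler: "rr"   # IPVS algorithm, e.g. rr, lc, wrr, wlc, sh; empty means rr
```

kube-proxy reads this configuration only at startup, so you have to restart its Pods after the edit, for example with `kubectl -n kube-system rollout restart daemonset kube-proxy`. The ip_vs kernel modules must already be available on every node.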

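iptables vs. ipvs

Similarities

- Both forward in kernel space. kube-proxy only maintains the rules and does not forward traffic, so there is no single point of failure
- Both update their rules dynamically from Services/Endpoints to route Service traffic to Pods
- Both support TCP/UDP layer-4 forwarding, which covers the core needs of Kubernetes Services

Key differences

Fundamental difference

iptables: a general-purpose firewall tool in which forwarding is an add-on. Rules are matched one by one along chains ("filter + forward").

ipvs: a kernel-level component designed specifically for load balancing, and a natural fit for spreading traffic across many backends.

Performance

iptables: good, but rules are matched sequentially, so matching takes longer as the number of Services and Pods grows.

ipvs: better. It looks up services in hash tables, which is far more efficient than walking iptables chains, and the gap becomes obvious under high concurrency.

Load-balancing algorithms

iptables: random selection only, with each backend given equal probability. It cannot adapt to Pod load.

ipvs: multiple algorithms, which is its core advantage. These include round robin (rr), least connection (lc), weighted round robin (wrr) and weighted least connection (wlc), to suit different workloads.

Scalability

iptables: moderate, and rules bloat easily. A Service with N Pods needs rules for each of its N endpoints. With hundreds of Pods the rule count explodes, and both updates and matching slow down.

ipvs: excellent at large scale. It uses a "virtual server + real servers" model with hash-table lookups, so lookup cost does not grow linearly with the number of Pods. It handles thousands of Pods.

Health checks

iptables: no active health checks. A failed Pod is removed only when Endpoints are updated, for example after its readiness probe fails. Until then, requests sent to it fail and the client has to retry.

ipvs: as used by kube-proxy, also no active health checks. It likewise relies on Endpoints updates to remove failed Pods.

Session affinity

iptables: supports the Service setting `sessionAffinity: ClientIP`, implemented with the iptables `recent` match.

ipvs: supports `sessionAffinity: ClientIP` natively through IPVS persistence, so requests from the same client IP keep going to the same Pod.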
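Session affinity is requested on the Service object itself, not in kube-proxy's configuration. The sketch below is a minimal example and assumes a hypothetical `web` application whose Pods listen on port 8080:

```yaml
# Hypothetical Service; the name, label and ports are illustrative only.
apiVersion: v1
kind: Service
metadata:
  name: web-sticky
spec:
  selector:
    app: web
  ports:
  - port: 80
    targetPort: 8080
  sessionAffinity: ClientIP      # default is None
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 3600       # stickiness window in seconds; default is 10800 (3 hours)
```

Affinity is keyed only on the client IP. Many clients behind one NAT gateway will therefore all land on the same Pod.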

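Configuration & dependencies

iptables: no extra configuration. It is the default and is natively available on every Linux node, so compatibility is excellent.

ipvs: the kernel modules (ip_vs, ip_vs_rr, etc.) must be loaded manually. Some older systems do not support them, and setup is slightly more involved.

Compatibility

iptables: the most widely used mode, broadly compatible with Kubernetes NetworkPolicy and CNI plugins.

ipvs: somewhat less compatible. Some less common CNI plugins or network policy implementations may need tuning.

When to use which

Use iptables for small and medium clusters when you want simplicity and stability and have no special load-balancing needs. This is the default choice.

Use ipvs for large clusters, high-concurrency services, or when you need flexible load-balancing algorithms. This is the high-performance choice.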

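Comparison table

| Aspect | iptables (default) | ipvs (high performance) |
|---|---|---|
| Design | General-purpose firewall (forwarding is an add-on) | Dedicated in-kernel load balancer |
| Forwarding performance | Good | Better (especially under high concurrency) |
| Load-balancing algorithms | Random only | Round robin / least connection / weighted variants and more |
| Large-scale fit | Fair (rules bloat) | Excellent (thousands of Pods) |
| Health checks | None (relies on Endpoints updates) | None (relies on Endpoints updates) |
| Session affinity | ClientIP affinity supported | ClientIP affinity supported (IPVS persistence) |
| Setup effort | None (enabled by default) | Kernel modules must be loaded; slightly more work |
| Compatibility | Broadly compatible with NetworkPolicy / CNI | Works with most; some need tuning |
| Use cases | Small/medium clusters, simple and stable | Large clusters, high concurrency, complex load balancing |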

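Service types

Four core types

- ClusterIP (default)
  - Core: a virtual IP that is unique within the cluster
  - Reachable from: inside the cluster only
  - Key point: used for calls between microservices; with CoreDNS it can also be reached by DNS name
  - Use for: service-to-service communication inside the cluster
- NodePort
  - Core: the same fixed port is opened on every node
  - Reachable from: inside and outside the cluster
  - Key point: port range 30000-32767 by default; external clients connect to NodeIP:port
  - Use for: development / test environments
- LoadBalancer
  - Core: hooks into the cloud provider's load balancer, which is given a public IP
  - Reachable from: inside and outside the cluster
  - Key point: depends on the cloud provider (AWS, Alibaba Cloud, etc.); built on top of NodePort
  - Use for: highly available external access in production
- ExternalName
  - Core: maps the Service to an external domain name; no ClusterIP
  - Reachable from: Pods in the cluster, to reach the external service
  - Key point: DNS mapping (CNAME) only; no traffic is proxied
  - Use for: reaching a fixed external domain from inside the cluster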

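Two extended variants

- Headless Service (`clusterIP: None`)
  - Core: no virtual IP is allocated; DNS returns the real IPs of all Pods
  - Use for: stateful services (MySQL primary/replica, etcd)
- NodePort with a node-local traffic policy
  - Core: set `externalTrafficPolicy: Local` so traffic is forwarded only to Pods on the node that received it
  - Benefits: saves the extra hop to another node, which improves forwarding performance, and preserves the client's source IP; nodes without a local Pod do not serve the traffic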
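Here is a minimal sketch of the node-local variant. It assumes a hypothetical `web` application listening on port 8080 and an arbitrary free node port:

```yaml
# Hypothetical NodePort Service; the name, label and ports are illustrative only.
apiVersion: v1
kind: Service
metadata:
  name: web-local
spec:
  type: NodePort
  externalTrafficPolicy: Local   # default is Cluster
  selector:
    app: web
  ports:
  - port: 80
    targetPort: 8080
    nodePort: 30080
```

With `Local`, traffic that reaches a node running no ready `web` Pod is dropped instead of being forwarded to another node. Clients, or an external load balancer, should therefore only target nodes that actually host a Pod.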

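Type comparison table

| Type | How to declare | Reachable from | Key facts | Use cases |
|---|---|---|---|---|
| ClusterIP | Default, no `type` needed | Inside the cluster only | Virtual IP inside the cluster | Calls between microservices in the cluster |
| NodePort | `type: NodePort` | Inside + outside | Fixed port, 30000-32767 by default | Dev/test, light external access |
| LoadBalancer | `type: LoadBalancer` | Inside + outside | Depends on the cloud provider; public IP allocated | Production, highly available external access |
| ExternalName | `type: ExternalName` | Inside → outside | Maps an external domain; no ClusterIP | Accessing external services from inside the cluster |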

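Summary

Internal access: ClusterIP, the safe default that exposes nothing.

External testing: NodePort; remember the 30000-32767 port range.

External production traffic: LoadBalancer, carried by the cloud provider. Reaching outside services: ExternalName, a plain DNS mapping.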

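Service port fields

port
- Definition: the port of the Service itself (the port used when accessing the Service inside the cluster)
- Notes: no fixed range; any value can be chosen (e.g. 80, 8080)
- Purpose: clients in the cluster access the service at ServiceIP:port

targetPort
- Definition: the port the container in the backend Pod actually listens on (where the service really runs)
- Notes: must match the application's port inside the container; can be a number or the name of a container port, and defaults to the value of `port` when omitted
- Purpose: the Service forwards requests to this port on the Pod

nodePort
- Definition: the port exposed on every node for external access; only NodePort and LoadBalancer Services have one
- Notes: must lie within the node port range, 30000-32767 by default (changeable with the kube-apiserver flag `--service-node-port-range`); assigned automatically if not set
- Purpose: external clients reach the Service at NodeIP:nodePort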

Port forwarding path

External request (NodePort/LoadBalancer types only): NodeIP:nodePort → Service ClusterIP:port → PodIP:targetPort

In-cluster request: ServiceIP:port → PodIP:targetPort

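Comparison table

| Field | Role | Applies to | Port constraint |
|---|---|---|---|
| port | Port used to access the Service | All Service types | No fixed range; your choice |
| targetPort | Points to the container's actual port in the Pod | All Service types | Must match the application's port |
| nodePort | Port exposed on each node for external access | NodePort / LoadBalancer only | 30000-32767 by default |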
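To see how the three fields line up, here is a sketch that uses deliberately different values. The names `demo-web` / `demo-web-svc` and the port numbers 8080 / 30080 are hypothetical; the image is the one used elsewhere in these notes:

```yaml
# Hypothetical Deployment + Service illustrating port / targetPort / nodePort.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demo-web
  template:
    metadata:
      labels:
        app: demo-web
    spec:
      containers:
      - name: web
        image: nginx:1.26-alpine
        ports:
        - name: http            # named port, referenced by targetPort below
          containerPort: 80     # where nginx actually listens
---
apiVersion: v1
kind: Service
metadata:
  name: demo-web-svc
spec:
  type: NodePort
  selector:
    app: demo-web
  ports:
  - port: 8080          # in-cluster clients use ClusterIP:8080
    targetPort: http    # resolves to containerPort 80 in each Pod
    nodePort: 30080     # external clients use NodeIP:30080 (30000-32767 by default)
    protocol: TCP
```

With this applied, a Pod in the same namespace could run `curl http://demo-web-svc:8080`, and a machine outside the cluster could run `curl http://<node-ip>:30080`. Both requests end up on port 80 inside an nginx Pod.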

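Creating Services

ClusterIP type

- Regular ClusterIP (default)
  - A fixed virtual IP is allocated inside the cluster
  - Reached in-cluster by IP or DNS name; kube-proxy handles forwarding and layer-4 load balancing
  - Core idea: a stable in-cluster entry point, suited to stateless services
- Headless Service
  - No ClusterIP is allocated: `spec.clusterIP: None`
  - No kube-proxy forwarding or load balancing; everything goes through DNS
  - DNS returns the list of real IPs of all backend Pods
  - Core idea: expose the real Pod IPs, suited to stateful services (MySQL primary/replica, etcd, etc.)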

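Comparison

| Type | ClusterIP | Forwarding / load balancing | Access method | Use cases |
|---|---|---|---|---|
| Regular ClusterIP | Allocated | Provided by kube-proxy | ClusterIP:port / DNS name | Stateless services, internal calls |
| Headless Service | Not allocated (None) | None | DNS resolves to the list of Pod IPs | Stateful services |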
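Headless Services are usually paired with a StatefulSet, whose `serviceName` field gives every Pod its own stable DNS name. The sketch below is minimal: nginx stands in for a real database so it runs as-is, and the names `db` and `db-headless` are hypothetical:

```yaml
# Hypothetical headless Service + StatefulSet; names and image are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: db-headless
spec:
  clusterIP: None            # headless: DNS returns Pod IPs, no virtual IP
  selector:
    app: db
  ports:
  - port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: db
spec:
  serviceName: db-headless   # the governing headless Service for per-Pod DNS
  replicas: 2
  selector:
    matchLabels:
      app: db
  template:
    metadata:
      labels:
        app: db
    spec:
      containers:
      - name: web
        image: nginx:1.26-alpine
        ports:
        - containerPort: 80
```

In the default namespace, `db-headless.default.svc.cluster.local` resolves to both Pod IPs. In addition, each Pod gets its own record, such as `db-0.db-headless.default.svc.cluster.local`. Primary/replica setups use these records to address a specific member.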

ClusterIP Service

bash

[root@master ~]# mkdir -p service/clusterIP

[root@master ~]#

[root@master ~]#

[root@master ~]# cd service/clusterIP/

[root@master clusterIP]#

[root@master clusterIP]#

[root@master clusterIP]#

[root@master clusterIP]# rz -E

rz waiting to receive.

[root@master clusterIP]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master clusterIP]# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 2/2 2 2 3m7s

# Create a ClusterIP Service associated with the Deployment

[root@master clusterIP]# kubectl expose deployment nginx-deploy --type=ClusterIP --target-port=80 --port=80

service/nginx-deploy exposed

[root@master clusterIP]# kubectl get endpoints

NAME ENDPOINTS AGE

k8s-sigs.io-nfs-subdir-external-provisioner <none> 22h

kubernetes 192.168.100.72:6443 14d

nginx 10.244.104.53:80,10.244.166.137:80 13d

nginx-deploy 10.244.104.53:80,10.244.166.137:80 9s

[root@master clusterIP]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-deploy-5b6d5cd699-krzk9 1/1 Running 0 94s

pod/nginx-deploy-5b6d5cd699-phbnk 1/1 Running 0 94s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

service/nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

service/nginx-deploy ClusterIP 10.108.233.164 <none> 80/TCP 23s

[root@master clusterIP]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-5b6d5cd699-krzk9 1/1 Running 0 105s 10.244.166.137 node1 <none> <none>

nginx-deploy-5b6d5cd699-phbnk 1/1 Running 0 105s 10.244.104.53 node2 <none> <none>

[root@master clusterIP]# kubectl describe svc nginx-deploy

Name: nginx-deploy

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.108.233.164

IPs: 10.108.233.164

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.53:80,10.244.166.137:80

Session Affinity: None

Events: <none>

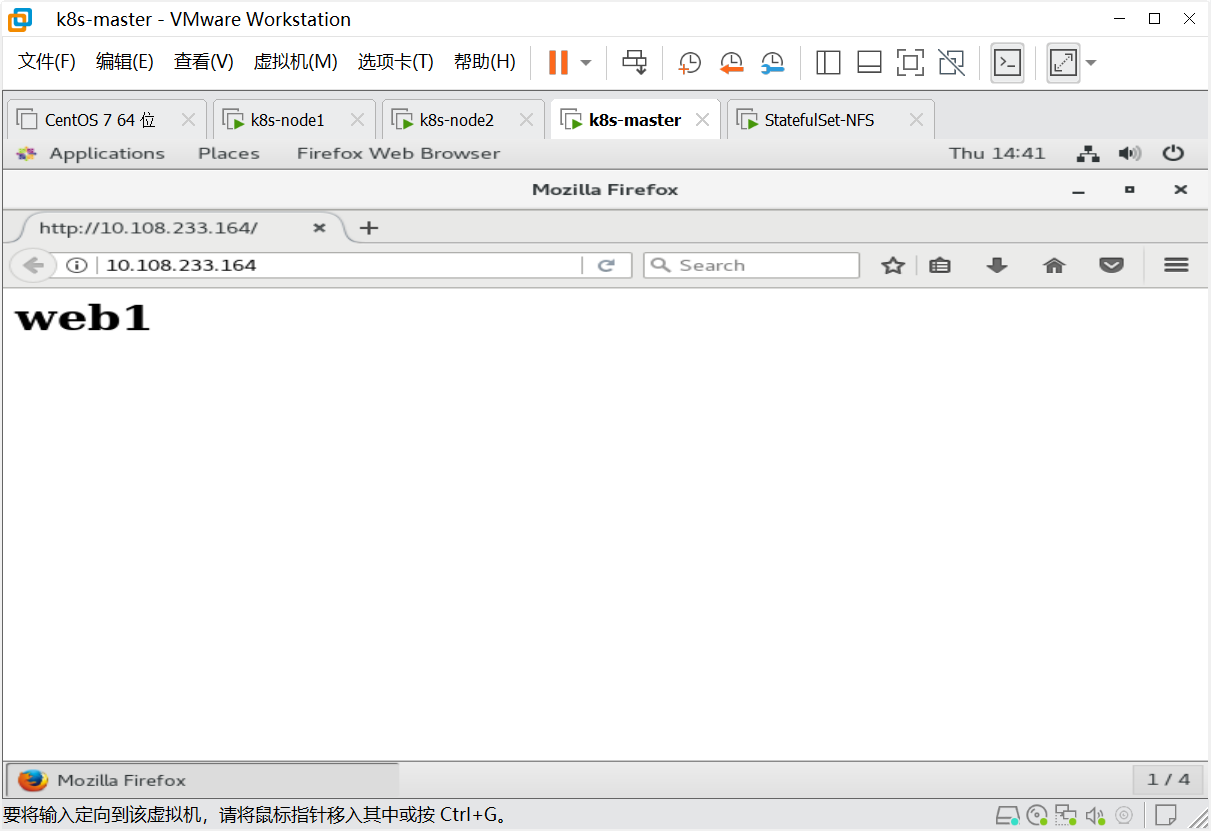

[root@master clusterIP]# kubectl exec -it pods/nginx-deploy-5b6d5cd699-krzk9 -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # echo "<h1>web1<h1>" > index.html

/usr/share/nginx/html # cat index.html

<h1>web1<h1>

/usr/share/nginx/html # exit

[root@master clusterIP]# kubectl exec -it pods/nginx-deploy-5b6d5cd699-phbnk -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # echo "<h1>web2<h1>" > index.html

/usr/share/nginx/html # exit

[root@master clusterIP]#

[root@master clusterIP]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

nginx-deploy ClusterIP 10.108.233.164 <none> 80/TCP 16m

[root@master clusterIP]# curl http://10.108.233.164

<h1>web1<h1>

[root@master clusterIP]# curl http://10.108.233.164

<h1>web2<h1>

[root@master clusterIP]# curl http://10.108.233.164

<h1>web1<h1>

[root@master clusterIP]# curl http://10.108.233.164

<h1>web2<h1>

[root@master clusterIP]#

bash

[root@master clusterIP]# kubectl delete svc nginx-deploy

service "nginx-deploy" deleted

[root@master clusterIP]#

[root@master clusterIP]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

[root@master clusterIP]# kubectl delete -f nginx-deploy.yaml

deployment.apps "nginx-deploy" deleted

[root@master clusterIP]# kubectl get pods

No resources found in default namespace.

[root@master clusterIP]# vim nginx-deploy.yaml

[root@master clusterIP]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: ClusterIP
  selector:
    app: nginx
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80

[root@master clusterIP]# kubectl apply -f nginx-deploy.yaml

deployment.apps/nginx-deploy created

service/nginx-svc created

[root@master clusterIP]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-deploy-5b6d5cd699-fj2nr 1/1 Running 0 15s

pod/nginx-deploy-5b6d5cd699-l7wpn 1/1 Running 0 15s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

service/nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

service/nginx-svc ClusterIP 10.110.85.79 <none> 80/TCP 15s

[root@master clusterIP]# curl 10.110.85.79

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Headless Service

bash

[root@master clusterIP]# cat nginx-headless.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: headless-svc
spec:
  type: ClusterIP
  clusterIP: None   # headless Service
  selector:
    app: nginx
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80

[root@master clusterIP]# kubectl get pods,svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

service/nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

[root@master clusterIP]# kubectl apply -f nginx-headless.yaml

deployment.apps/nginx-deploy created

service/headless-svc created

[root@master clusterIP]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deploy-5b6d5cd699-4qc6m 1/1 Running 0 5s

nginx-deploy-5b6d5cd699-kkjc8 1/1 Running 0 5s

[root@master clusterIP]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

headless-svc ClusterIP None <none> 80/TCP 10s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

[root@master clusterIP]# kubectl get endpoints

NAME ENDPOINTS AGE

headless-svc 10.244.104.51:80,10.244.166.139:80 32s

k8s-sigs.io-nfs-subdir-external-provisioner <none> 23h

kubernetes 192.168.100.72:6443 14d

nginx 10.244.104.51:80,10.244.166.139:80 13d

[root@master clusterIP]# kubectl get pods -n kube-system | grep dns

coredns-66f779496c-26pcb 1/1 Running 17 (3h6m ago) 14d

coredns-66f779496c-wpc57 1/1 Running 17 (3h6m ago) 14d

[root@master clusterIP]# kubectl get pods -n kube-system -o wide | grep coredns

coredns-66f779496c-26pcb 1/1 Running 17 (3h7m ago) 14d 10.244.166.141 node1 <none> <none>

coredns-66f779496c-wpc57 1/1 Running 17 (3h7m ago) 14d 10.244.166.135 node1 <none> <none>

[root@master clusterIP]# kubectl get svc -n kube-system | grep dns

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 14d

[root@master clusterIP]# kubectl get endpoints -n kube-system | grep dns

kube-dns 10.244.166.135:53,10.244.166.141:53,10.244.166.135:53 + 3 more... 14d

# DNS lookup

[root@master clusterIP]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

headless-svc ClusterIP None <none> 80/TCP 3m53s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

[root@master clusterIP]# dig -t a headless-svc.default.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.9.4-RedHat-9.9.4-50.el7 <<>> -t a headless-svc.default.svc.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 21032

;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;headless-svc.default.svc.cluster.local. IN A

;; ANSWER SECTION:

headless-svc.default.svc.cluster.local. 30 IN A 10.244.104.51

headless-svc.default.svc.cluster.local. 30 IN A 10.244.166.139

;; Query time: 6 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Thu Nov 27 15:16:57 CST 2025

;; MSG SIZE rcvd: 175

# Verify the resolved addresses

[root@master clusterIP]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-5b6d5cd699-4qc6m 1/1 Running 0 4m52s 10.244.166.139 node1 <none> <none>

nginx-deploy-5b6d5cd699-kkjc8 1/1 Running 0 4m52s 10.244.104.51 node2 <none> <none>

Resolve from a Pod created inside the cluster

bash

[root@master clusterIP]# kubectl run -it centos --image=centos:7 --image-pull-policy=IfNotPresent

If you don't see a command prompt, try pressing enter.

[root@centos /]# curl http://headless-service.default.svc.cluster.local.

curl: (6) Could not resolve host: headless-service.default.svc.cluster.local.; Unknown error

[root@centos /]#

[root@centos /]# curl http://headless-svc.default.svc.cluster.local.

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@centos /]#

# Clean up resources

[root@master clusterIP]# kubectl get pods

NAME READY STATUS RESTARTS AGE

centos 1/1 Running 1 (4s ago) 117s

nginx-deploy-5b6d5cd699-4qc6m 1/1 Running 0 8m2s

nginx-deploy-5b6d5cd699-kkjc8 1/1 Running 0 8m2s

[root@master clusterIP]# kubectl delete -f nginx-headless.yaml

deployment.apps "nginx-deploy" deleted

service "headless-svc" deleted

[root@master clusterIP]# kubectl delete pod centos

pod "centos" deleted

[root@master clusterIP]# kubectl get pods

No resources found in default namespace.

NodePort type

bash

[root@master clusterIP]# cat nginx-nodeport.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-nodeport
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        resources:
          requests:
            memory: 150Mi
          limits:
            memory: 150Mi
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport-svc
spec:
  type: NodePort
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 31111
  selector:
    app: nginx

[root@master service]# mkdir nodeport

[root@master service]# mv clusterIP/nginx-nodeport.yaml ./nodeport/

[root@master service]#

[root@master service]# cd nodeport/

[root@master nodeport]# ls

nginx-nodeport.yaml

[root@master nodeport]# kubectl apply -f nginx-nodeport.yaml

deployment.apps/nginx-nodeport created

service/nginx-nodeport-svc created

[root@master nodeport]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-nodeport-7f87fb64cc-gmk2k 1/1 Running 0 4s

nginx-nodeport-7f87fb64cc-ps2q6 1/1 Running 0 4s

[root@master nodeport]# kubectl get svc,endpoints

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

service/nginx NodePort 10.109.182.190 <none> 80:30578/TCP 13d

service/nginx-nodeport-svc NodePort 10.97.212.112 <none> 80:31111/TCP 12s

NAME ENDPOINTS AGE

endpoints/k8s-sigs.io-nfs-subdir-external-provisioner <none> 23h

endpoints/kubernetes 192.168.100.72:6443 14d

endpoints/nginx 10.244.104.62:80,10.244.166.140:80 13d

endpoints/nginx-nodeport-svc 10.244.104.62:80,10.244.166.140:80 12s

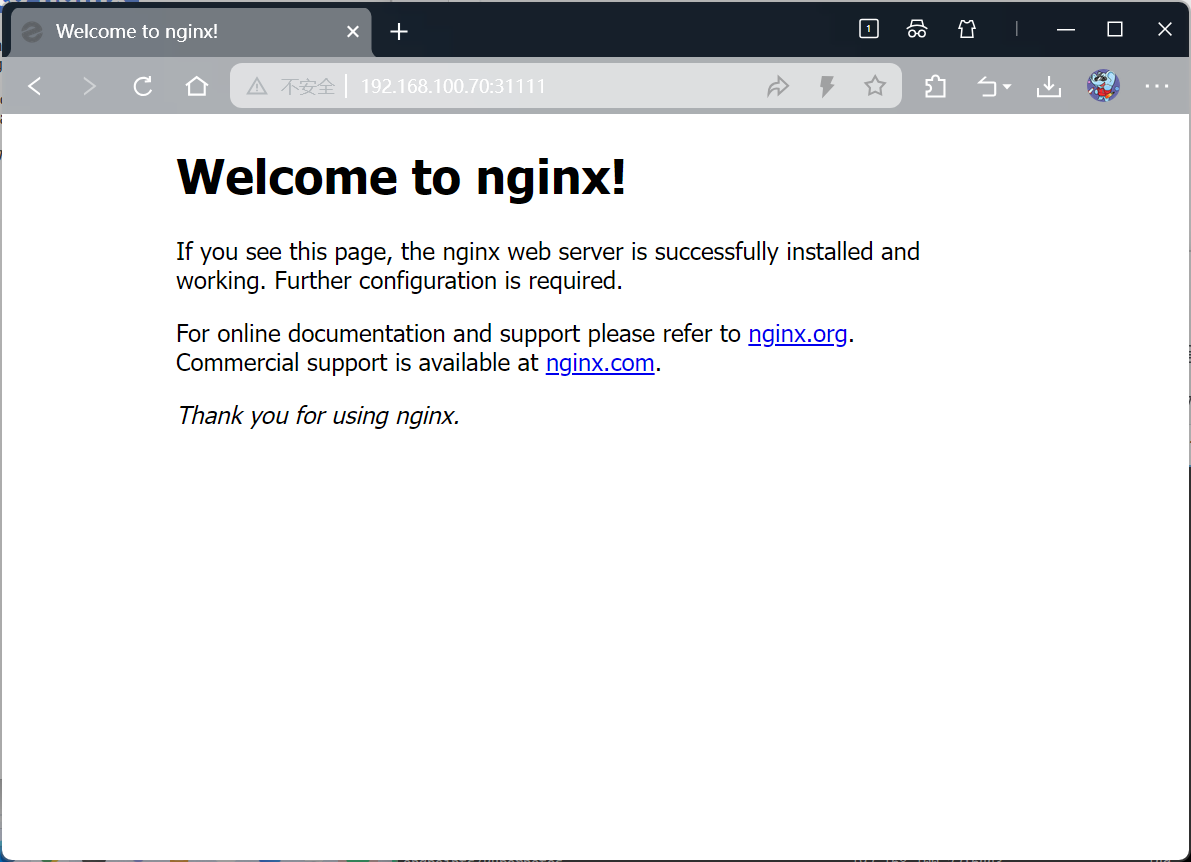

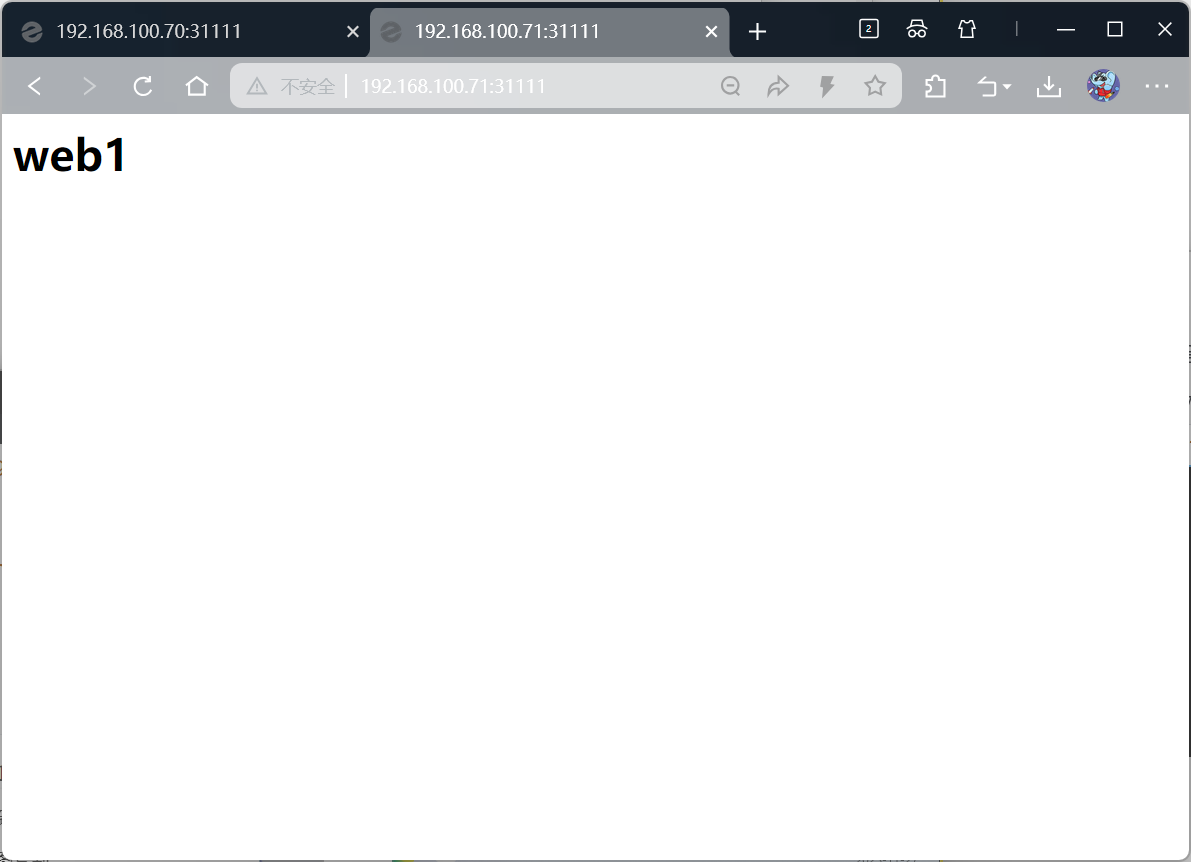

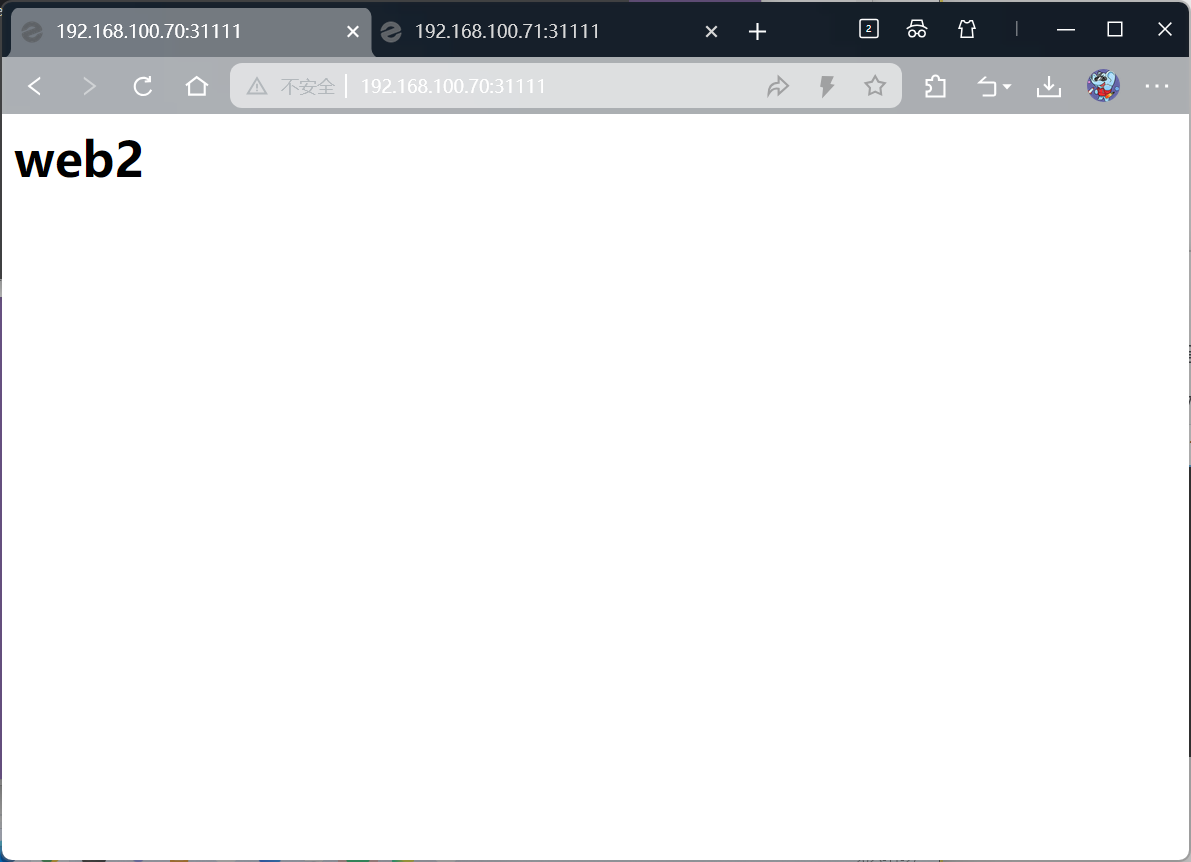

# Open any node's IP on port 31111 in a browser to reach the nginx site

Verify that load balancing works

bash

[root@master nodeport]# kubectl exec -it pods/nginx-nodeport-7f87fb64cc-gmk2k -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # echo "<h1>web1<h1>" > index.html

/usr/share/nginx/html #

/usr/share/nginx/html # exit

[root@master nodeport]#

[root@master nodeport]# kubectl exec -it pods/nginx-nodeport-7f87fb64cc-ps2q6 -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # echo "<h1>web2<h1>" > index.html

/usr/share/nginx/html #

/usr/share/nginx/html # exit

[root@master nodeport]#

# First check in the browser

bash

# curl shows the round-robin load-balancing effect

[root@master nodeport]#

[root@master nodeport]# curl http://192.168.100.70:31111

<h1>web1<h1>

[root@master nodeport]# curl http://192.168.100.70:31111

<h1>web2<h1>

[root@master nodeport]# curl http://192.168.100.70:31111

<h1>web1<h1>

[root@master nodeport]# curl http://192.168.100.70:31111

<h1>web2<h1>

[root@master nodeport]# curl http://192.168.100.70:31111

<h1>web1<h1>

[root@master nodeport]# curl http://192.168.100.70:31111

<h1>web2<h1>

[root@master nodeport]# curl http://192.168.100.71:31111

<h1>web1<h1>

[root@master nodeport]# curl http://192.168.100.71:31111

<h1>web2<h1>

[root@master nodeport]# curl http://192.168.100.71:31111

<h1>web1<h1>

[root@master nodeport]# curl http://192.168.100.71:31111

<h1>web2<h1>

[root@master nodeport]# curl http://192.168.100.71:31111

<h1>web1<h1>

[root@master nodeport]# curl http://192.168.100.71:31111

<h1>web2<h1>

[root@master nodeport]#

LoadBalancer

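LoadBalancer: the full path for access from outside the cluster

Full access path

- User → domain name: the user sends a request to a configured domain name, which resolves in advance to the public IP of the cloud provider's load balancer (LB).
- Domain name → cloud LB: the cloud LB receives the request and performs the first level of load balancing, spreading requests across available cluster nodes.
- Cloud LB → NodeIP:NodePort: the cloud LB forwards the request to NodeIP:NodePort on any healthy node in the cluster (the NodePort is the node port bound to this Service).
- NodeIP:NodePort → Service (ClusterIP:port): the node passes the request through its kube-proxy rules to the Service's ClusterIP:port.
- Service → PodIP:targetPort: the Service performs the second level of load balancing, forwarding the request to a backend Pod's real IP and the container's listening port (targetPort).

Short version: user → domain → cloud LB public IP → NodeIP:NodePort → Service → PodIP:targetPort

Mapping

- External entry point: domain name → cloud LB public IP
- Cluster entry point: NodeIP:NodePort
- In-cluster forwarding: Service (ClusterIP:port)
- Final destination: PodIP:targetPort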

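MetalLB

MetalLB is a LoadBalancer implementation for self-hosted / bare-metal Kubernetes clusters. The built-in LoadBalancer type depends on a cloud provider (AWS, Alibaba Cloud, etc.) and so cannot be used in a self-hosted cluster. MetalLB fills that gap by giving Services external network load balancing.

Two core functions

- External IP address allocation
  - Works somewhat like DHCP: it assigns LoadBalancer Services an IP from a pre-planned external range (configured as an IP pool), replacing the cloud provider's automatic public IP allocation.
- External announcement
  - Makes the network outside the cluster aware of the allocated external IPs, i.e. tells the outside network "this IP belongs to the Kubernetes cluster".
  - Done with one of two standard protocols:
    - ARP/NDP (layer 2 mode): suits small LAN clusters; simple to configure.
    - BGP (layer 3 mode): suits large clusters spanning networks; more efficient routing.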
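In MetalLB v0.13 and later, both modes are configured with custom resources instead of a ConfigMap. The sketch below shows the BGP (layer 3) mode. It assumes an upstream router at the hypothetical address 10.0.0.1. The ASNs, the pool name and the address range are placeholders that must match your own network:

```yaml
# Hypothetical values throughout: adjust addresses and ASNs to your network.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: bgp-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.200.0/24        # range routed to the cluster (placeholder)
---
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: upstream-router
  namespace: metallb-system
spec:
  myASN: 64500              # private ASN used by the MetalLB speakers
  peerASN: 64501            # ASN of the router
  peerAddress: 10.0.0.1     # router address reachable from the nodes
---
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: bgp-adv
  namespace: metallb-system
spec:
  ipAddressPools:
  - bgp-pool                # announce only addresses from this pool
```

Layer 2 mode uses an `L2Advertisement` in place of the `BGPPeer` + `BGPAdvertisement` pair.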

bash

[root@master ~]# mkdir lb

[root@master ~]#

[root@master ~]# cd lb

[root@master lb]#

[root@master lb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml

--2025-11-28 09:46:24-- https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.111.133, 185.199.110.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.111.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 91 [text/plain]

Saving to: 'namespace.yaml'

100%[======================================================================================================>] 91 --.-K/s in 0.007s

2025-11-28 09:46:36 (12.6 KB/s) - 'namespace.yaml' saved [91/91]

[root@master lb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

--2025-11-28 09:46:40-- https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 9383 (9.2K) [text/plain]

Saving to: 'metallb.yaml'

100%[======================================================================================================>] 9,383 20.1KB/s in 0.5s

2025-11-28 09:46:41 (20.1 KB/s) - 'metallb.yaml' saved [9383/9383]

[root@master lb]# ls

metallb.yaml namespace.yaml

[root@master lb]# kubectl apply -f namespace.yaml

namespace/metallb-system created

[root@master lb]# kubectl get all -n metallb-system

No resources found in metallb-system namespace.

[root@master lb]# kubectl apply -f metallb.yaml

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/config-watcher created

role.rbac.authorization.k8s.io/pod-lister created

role.rbac.authorization.k8s.io/controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/config-watcher created

rolebinding.rbac.authorization.k8s.io/pod-lister created

rolebinding.rbac.authorization.k8s.io/controller created

daemonset.apps/speaker created

deployment.apps/controller created

resource mapping not found for name: "controller" namespace: "" from "metallb.yaml": no matches for kind "PodSecurityPolicy" in version "policy/v1beta1"

ensure CRDs are installed first

resource mapping not found for name: "speaker" namespace: "" from "metallb.yaml": no matches for kind "PodSecurityPolicy" in version "policy/v1beta1"

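ensure CRDs are installed first

# Note: PodSecurityPolicy (policy/v1beta1) was removed in Kubernetes v1.25, so these two errors are expected on newer clusters; the other objects in the manifest were created normally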

[root@master lb]# kubectl get ns

NAME STATUS AGE

default Active 14d

kube-node-lease Active 14d

kube-public Active 14d

kube-system Active 14d

kubernetes-dashboard Active 13d

metallb-system Active 41m

[root@master lb]# kubectl get pod -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-8d6664589-nr6pl 1/1 Running 0 36s

speaker-47x95 1/1 Running 0 36s

speaker-5npql 1/1 Running 0 36s

[root@master lb]# kubectl get pod -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-8d6664589-l9vq4 1/1 Running 0 80s

speaker-gzqb9 1/1 Running 0 80s

speaker-kxpk9 1/1 Running 0 80s

[root@master lb]# kubectl get pod -o wide -n metallb-system

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

controller-8d6664589-l9vq4 1/1 Running 0 4m26s 10.244.104.4 node2 <none> <none>

speaker-gzqb9 1/1 Running 0 4m26s 192.168.100.70 node1 <none> <none>

speaker-kxpk9 1/1 Running 0 4m26s 192.168.100.71 node2 <none> <none>

[root@master lb]# kubectl get configmap -n metallb-system

NAME DATA AGE

kube-root-ca.crt 1 5m33s

[root@master lb]# cat metallb-conf.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config
  namespace: metallb-system
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.100.200-192.168.100.210

[root@master lb]# kubectl apply -f metallb-conf.yaml

configmap/config created

[root@master lb]# kubectl get configmap -n metallb-system

NAME DATA AGE

config 1 4s

kube-root-ca.crt 1 49m

# Create the nginx application

[root@master lb]# cat nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: metallb-config
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master lb]# kubectl apply -f nginx-deploy.yaml

deployment.apps/metallb-config created

[root@master lb]# kubectl get pods -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-8d6664589-vtvr8 1/1 Running 0 45s

metallb-config-5b6d5cd699-5k7dj 1/1 Running 0 4s

metallb-config-5b6d5cd699-sjsbl 1/1 Running 0 4s

speaker-jhqwb 1/1 Running 0 45s

speaker-w7xj5 1/1 Running 0 45s

# Create the Service

[root@master lb]# cat nginx-svc-lb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-lb
spec:
  type: LoadBalancer
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx

[root@master lb]# kubectl apply -f nginx-svc-lb.yaml

service/nginx-svc-lb created

[root@master lb]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 14d

nginx-nodeport-svc NodePort 10.97.212.112 <none> 80:31111/TCP 19h

[root@master lb]# kubectl get svc -n metallb-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-svc-lb LoadBalancer 10.103.20.209 192.168.100.200 80:31823/TCP 7s

# Open http://192.168.100.200 in a browser: the nginx page is shown

Newer MetalLB versions

bash

# First remove the old-version resources

[root@master lb]# kubectl delete ns metallb-system

namespace "metallb-system" deleted

[root@master lb]# kubectl get ns

NAME STATUS AGE

default Active 14d

kube-node-lease Active 14d

kube-public Active 14d

kube-system Active 14d

kubernetes-dashboard Active 13d

[root@master lb]# kubectl edit configmap -n kube-system kube-proxy

configmap/kube-proxy edited

apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"        # check the proxy mode
ipvs:
  strictARP: true   # set to true

[root@master new]# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.15.2/config/manifests/metallb-native.yaml

namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/servicebgpstatuses.metallb.io created

customresourcedefinition.apiextensions.k8s.io/servicel2statuses.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller configured

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker configured

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller unchanged

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker unchanged

configmap/metallb-excludel2 created

secret/metallb-webhook-cert created

service/metallb-webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

[root@master new]# kubectl get ns

NAME STATUS AGE

default Active 14d

kube-node-lease Active 14d

kube-public Active 14d

kube-system Active 14d

kubernetes-dashboard Active 13d

metallb-system Active 19s

[root@master new]# kubectl get all -n metallb-system

NAME READY STATUS RESTARTS AGE

pod/controller-8666ddd68b-d6rqq 0/1 ContainerCreating 0 31s

pod/speaker-6j55v 0/1 ContainerCreating 0 31s

pod/speaker-tqdl5 0/1 ContainerCreating 0 31s

pod/speaker-vm8v9 0/1 ContainerCreating 0 31s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/metallb-webhook-service ClusterIP 10.97.128.59 <none> 443/TCP 31s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/speaker 3 3 0 3 0 kubernetes.io/os=linux 31s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/controller 0/1 1 0 31s

NAME DESIRED CURRENT READY AGE

replicaset.apps/controller-8666ddd68b 1 1 0 31s

[root@master new]# kubectl get pods -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-8666ddd68b-d6rqq 1/1 Running 0 3m14s

speaker-6j55v 1/1 Running 0 3m14s

speaker-tqdl5 1/1 Running 0 3m14s

speaker-vm8v9 1/1 Running 0 3m14s

The new version needs no ConfigMap; an IPAddressPool resource is used directly instead.

bash

[root@master new]# cat ipaddresspool.yaml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.100.210-192.168.100.220

[root@master new]# kubectl apply -f ipaddresspool.yaml

ipaddresspool.metallb.io/first-pool created

[root@master new]# cd ..

[root@master lb]#

[root@master lb]# kubectl apply -f nginx-deploy.yaml

deployment.apps/metallb-config created

[root@master lb]# kubectl get pods -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-8666ddd68b-d6rqq 1/1 Running 0 150m

metallb-config-5b6d5cd699-9gwc7 1/1 Running 0 27s

metallb-config-5b6d5cd699-m2vq9 1/1 Running 0 27s

speaker-6j55v 1/1 Running 0 150m

speaker-tqdl5 1/1 Running 0 150m

speaker-vm8v9 1/1 Running 0 150m

[root@master lb]#

[root@master lb]# kubectl apply -f nginx-svc-lb.yaml

service/nginx-svc-lb created

[root@master lb]# kubectl get pods,svc -n metallb-system

NAME READY STATUS RESTARTS AGE

pod/controller-8666ddd68b-d6rqq 1/1 Running 0 151m

pod/metallb-config-5b6d5cd699-9gwc7 1/1 Running 0 55s

pod/metallb-config-5b6d5cd699-m2vq9 1/1 Running 0 55s

pod/speaker-6j55v 1/1 Running 0 151m

pod/speaker-tqdl5 1/1 Running 0 151m

pod/speaker-vm8v9 1/1 Running 0 151m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/metallb-webhook-service ClusterIP 10.97.128.59 <none> 443/TCP 151m

service/nginx-svc-lb LoadBalancer 10.103.177.60 192.168.100.210 80:32220/TCP 10s

# Got the address 192.168.100.210

# Verify in a browser

# as shown in the figure below
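According to the MetalLB documentation, from v0.13 on an `IPAddressPool` by itself only allocates addresses. To answer ARP for those addresses in layer 2 mode, an `L2Advertisement` must also be associated with the pool. The transcript above only shows the pool being created. If an allocated address such as 192.168.100.210 does not respond, this is worth checking. A minimal sketch (the object name is hypothetical):

```yaml
# Announce addresses from first-pool via ARP/NDP (layer 2 mode).
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2-first-pool        # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
  - first-pool               # the IPAddressPool created above
```

Leaving `spec` empty would associate the advertisement with every pool instead.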

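ExternalName

ExternalName gives Pods in the cluster a convenient, stable way to reach an external service. Under the hood it is just a DNS mapping (a CNAME record) from an in-cluster name to an external domain, so no proxy configuration is needed.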

Mapping a public domain name

bash

# Clean up the previous state

[root@master ~]# cd service/

[root@master service]# mkdir exname

[root@master service]# cd exname/

[root@master exname]# kubectl get ns

NAME STATUS AGE

default Active 14d

kube-node-lease Active 14d

kube-public Active 14d

kube-system Active 14d

kubernetes-dashboard Active 13d

metallb-system Active 162m

[root@master exname]# kubectl delete ns metallb-system

namespace "metallb-system" deleted

[root@master exname]#

[root@master exname]# kubectl get svc -n kube-system | grep dns

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 14d

[root@master exname]# dig -t a www.baidu.com @10.96.0.10

; <<>> DiG 9.9.4-RedHat-9.9.4-50.el7 <<>> -t a www.baidu.com @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 51448

;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.baidu.com. IN A

;; ANSWER SECTION:

www.baidu.com. 5 IN CNAME www.a.shifen.com.

www.a.shifen.com. 5 IN CNAME www.wshifen.com.

www.wshifen.com. 5 IN A 103.235.46.102

www.wshifen.com. 5 IN A 103.235.46.115

;; Query time: 178 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Fri Nov 28 14:01:10 CST 2025

;; MSG SIZE rcvd: 192

bash

[root@master exname]# cat external.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-external
  namespace: default
spec:
  type: ExternalName
  externalName: www.baidu.com

[root@master exname]# kubectl apply -f external.yaml

service/my-external created

[root@master exname]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

my-external ExternalName <none> www.baidu.com <none> 5s

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 14d

nginx-nodeport-svc NodePort 10.97.212.112 <none> 80:31111/TCP 22h

# Resolve the Service name through the cluster DNS

[root@master exname]# dig -t A my-external.default.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.9.4-RedHat-9.9.4-50.el7 <<>> -t A my-external.default.svc.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 22438

;; flags: qr aa rd; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;my-external.default.svc.cluster.local. IN A

;; ANSWER SECTION:

my-external.default.svc.cluster.local. 5 IN CNAME www.baidu.com.

www.baidu.com. 5 IN CNAME www.a.shifen.com.

www.a.shifen.com. 5 IN A 180.101.51.73

www.a.shifen.com. 5 IN A 180.101.49.44

;; Query time: 186 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Fri Nov 28 14:07:31 CST 2025

;; MSG SIZE rcvd: 237

# Start a test Pod and resolve the names from inside the cluster

[root@master exname]# kubectl run -it expod --image=busybox:1.28

If you don't see a command prompt, try pressing enter.

/ # nslookup www.baidu.com

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: www.baidu.com

Address 1: 103.235.46.115

Address 2: 103.235.46.102

/ # nslookup my-external.default.svc.cluster.local.

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: my-external.default.svc.cluster.local.

Address 1: 240e:e9:6002:1fd:0:ff:b0e1:fe69

Address 2: 240e:e9:6002:1ac:0:ff:b07e:36c5

Address 3: 180.101.51.73

Address 4: 180.101.49.44

/ #

Access across namespaces

bash

[root@master exname]# cat pod-ns1.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ns1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ns1
  namespace: ns1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc1
  namespace: ns1
spec:
  clusterIP: None
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
---
# Cross-namespace access: alias for svc2 in namespace ns2
apiVersion: v1
kind: Service
metadata:
  name: externalname1
  namespace: ns1
spec:
  type: ExternalName
  externalName: svc2.ns2.svc.cluster.local

[root@master exname]# kubectl apply -f pod-ns1.yaml

namespace/ns1 created

deployment.apps/nginx-ns1 created

service/svc1 created

service/externalname1 created

[root@master exname]#

[root@master exname]#

[root@master exname]# kubectl get pod,svc -n ns1

NAME READY STATUS RESTARTS AGE

pod/nginx-ns1-5b6d5cd699-c2thd 1/1 Running 0 23s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/externalname1 ExternalName <none> svc2.ns2.svc.cluster.local <none> 23s

service/svc1 ClusterIP None <none> 80/TCP 23s

[root@master exname]# kubectl get pod -o wide -n ns1

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ns1-5b6d5cd699-c2thd 1/1 Running 0 33s 10.244.104.17 node2 <none> <none>

[root@master exname]# dig -t a svc1.ns1.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.9.4-RedHat-9.9.4-50.el7 <<>> -t a svc1.ns1.svc.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 58197

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;svc1.ns1.svc.cluster.local. IN A

;; ANSWER SECTION:

svc1.ns1.svc.cluster.local. 30 IN A 10.244.104.17

;; Query time: 1 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Fri Nov 28 15:14:37 CST 2025

;; MSG SIZE rcvd: 97
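svc1 is a headless Service (`clusterIP: None`), so the A record above is the Pod IP 10.244.104.17 itself rather than a virtual IP, whereas externalname1 has no IP at all and only stores a CNAME target. When a namespace mixes these Service kinds, a small awk filter over `kubectl get svc` output can label them. A sketch, assuming the default column layout for a single namespace (with `-A` a NAMESPACE column is prepended and the field numbers shift); `svc_access` is a hypothetical name:

```shell
# Classify Services from `kubectl get svc --no-headers` output.
# Assumed columns: NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE.
# A header line, if present, is skipped.
svc_access() {
    awk '
        $1 == "NAME"         { next }
        $2 == "ExternalName" { print $1 ": CNAME -> " $4; next }
        $3 == "None"         { print $1 ": headless, DNS returns Pod IPs"; next }
        $2 == "LoadBalancer" { print $1 ": virtual IP " $3 ", external " $4; next }
        NF >= 6              { print $1 ": virtual IP " $3 }
    '
}

# Service lines from the `kubectl get pod,svc -n ns1` output above
svc_access <<'EOF'
service/externalname1 ExternalName <none> svc2.ns2.svc.cluster.local <none> 23s
service/svc1 ClusterIP None <none> 80/TCP 23s
EOF
```

Against the live cluster: `kubectl get svc -n ns1 --no-headers | svc_access`.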

bash

[root@master exname]# cat pod-ns2.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ns2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ns2
  namespace: ns2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc2
  namespace: ns2
spec:
  clusterIP: None
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: externalname2
  namespace: ns2
spec:
  type: ExternalName
  externalName: svc1.ns1.svc.cluster.local

[root@master exname]# kubectl apply -f pod-ns2.yaml

namespace/ns2 created

deployment.apps/nginx-ns2 created

service/svc2 created

service/externalname2 created

[root@master exname]# kubectl get pods,svc -n ns2

NAME READY STATUS RESTARTS AGE

pod/nginx-ns2-5b6d5cd699-zxs2j 1/1 Running 0 41s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/externalname2 ExternalName <none> svc1.ns1.svc.cluster.local <none> 41s

service/svc2 ClusterIP None <none> 80/TCP 41s

[root@master exname]# kubectl get pods -o wide -n ns2

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ns2-5b6d5cd699-zxs2j 1/1 Running 0 63s 10.244.104.15 node2 <none> <none>

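# Resolve across namespaces: from the Pod in ns1, look up svc2 in ns2; the answer is the ns2 Pod IP (10.244.104.15)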

[root@master exname]# kubectl get pods -n ns1

NAME READY STATUS RESTARTS AGE

nginx-ns1-5b6d5cd699-c2thd 1/1 Running 0 9m17s

[root@master exname]# kubectl exec -it pods/nginx-ns1-5b6d5cd699-c2thd -n ns1 -- /bin/sh

/ # nslookup svc2.ns2.svc.cluster.local.

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: svc2.ns2.svc.cluster.local

Address: 10.244.104.15

/ #

[root@master exname]# kubectl get pods -o wide -n ns2

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ns2-5b6d5cd699-zxs2j 1/1 Running 0 3m54s 10.244.104.15 node2 <none> <none>

sessionAffinity

Session affinity (sticky sessions)

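Setting sessionAffinity to ClientIP sends all requests from the same client IP to the same Pod, similar to nginx's ip_hash and the LVS sh (source hashing) scheduler.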
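The ip_hash / sh analogy can be made concrete with a toy source-IP hash: while the backend list stays the same, a given client IP always maps to the same backend. This is a sketch of the analogy only; `pick_backend` is a hypothetical name, `cksum` merely stands in for a hash function, and the client IPs are made up:

```shell
# Toy source-IP hashing (the idea behind nginx ip_hash and LVS "sh").
# Usage: pick_backend <client-ip> <backend>...
pick_backend() {
    client_ip=$1
    shift
    if [ $# -eq 0 ]; then
        echo "pick_backend: no backends given" >&2
        return 1
    fi
    # cksum prints "<crc> <byte-count>"; use the CRC as the hash value
    hash=$(printf '%s' "$client_ip" | cksum | cut -d' ' -f1)
    target=$(( hash % $# + 1 ))   # 1-based index into the backend list
    i=1
    for backend in "$@"; do
        if [ "$i" -eq "$target" ]; then
            printf '%s\n' "$backend"
            return 0
        fi
        i=$((i + 1))
    done
}

# The same (made-up) client IP gets the same backend on every call
pick_backend 10.0.0.7 web1 web2
pick_backend 10.0.0.7 web1 web2
```

kube-proxy does not hash, however. It records which endpoint first served a client IP and keeps sending that client there until the affinity timeout (`sessionAffinityConfig.clientIP.timeoutSeconds`, 10800 seconds by default) expires, so the observable behaviour matches ip_hash while the mechanism differs.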

bash

# Create an nginx Deployment exposed through a ClusterIP Service

[root@master ~]# cd service/

[root@master service]# mkdir session

[root@master service]#

[root@master service]# cd session/

[root@master session]# rz -E

rz waiting to receive.

[root@master session]# cat nginx-svc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    app: nginx
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80

# Apply and inspect the resources

[root@master session]# kubectl apply -f nginx-svc.yaml

deployment.apps/nginx-deploy created

service/nginx-svc created

[root@master session]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deploy-5b6d5cd699-8rwf5 1/1 Running 0 2m6s

nginx-deploy-5b6d5cd699-9mb72 1/1 Running 0 4s

nginx-nodeport-7f87fb64cc-gmk2k 1/1 Running 2 (2d17h ago) 3d18h

nginx-nodeport-7f87fb64cc-ps2q6 1/1 Running 2 (2d17h ago) 3d18h

[root@master session]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 17d

nginx NodePort 10.109.182.190 <none> 80:30578/TCP 17d

nginx-nodeport-svc NodePort 10.97.212.112 <none> 80:31111/TCP 3d18h

nginx-svc ClusterIP 10.97.188.204 <none> 80/TCP 2m26s

[root@master session]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.97.188.204

IPs: 10.97.188.204

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.14:80,10.244.104.8:80,10.244.166.144:80 + 1 more...

Session Affinity: None

Events: <none>

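# Note: Endpoints lists four addresses because the nginx-nodeport Pods also carry the label app=nginx

# Change each Pod's index page so the responses can be told apart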

[root@master session]# kubectl exec -it pods/nginx-deploy-5b6d5cd699-8rwf5 -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # ls

50x.html index.html

/usr/share/nginx/html # echo "web1" > index.html

/usr/share/nginx/html # cat index.html

web1

/usr/share/nginx/html # exit

[root@master session]#

[root@master session]# kubectl exec -it pods/nginx-deploy-5b6d5cd699-9mb72 -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # echo "web2" > index.html

/usr/share/nginx/html # cat index.html

web2

/usr/share/nginx/html # exit

[root@master session]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-5b6d5cd699-8rwf5 1/1 Running 0 6m44s 10.244.166.144 node1 <none> <none>

nginx-deploy-5b6d5cd699-9mb72 1/1 Running 0 4m42s 10.244.104.8 node2 <none> <none>

nginx-nodeport-7f87fb64cc-gmk2k 1/1 Running 2 (2d17h ago) 3d18h 10.244.104.14 node2 <none> <none>

nginx-nodeport-7f87fb64cc-ps2q6 1/1 Running 2 (2d17h ago) 3d18h 10.244.166.148 node1 <none> <none>

[root@master session]# curl http://10.244.166.144

web1

[root@master session]# curl http://10.244.104.8

web2

# Change the Session Affinity setting

[root@master session]# kubectl patch svc nginx-svc -p '{"spec":{"sessionAffinity":"ClientIP"}}'

service/nginx-svc patched

# Check the result

[root@master session]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.97.188.204

IPs: 10.97.188.204

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.14:80,10.244.104.8:80,10.244.166.144:80 + 1 more...

Session Affinity: ClientIP

Events: <none>

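# Requests to the ClusterIP are no longer load-balanced across the Pods

# Sticky access: the Pod that served the first request keeps serving this client until the affinity timeout expires (sessionAffinityConfig.clientIP.timeoutSeconds, 10800 seconds by default)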

[root@master session]# curl http://10.97.188.204

web2

[root@master session]# curl http://10.97.188.204

web2

[root@master session]# curl http://10.97.188.204

web2

[root@master session]# curl http://10.97.188.204

web2
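Four requests are a small sample. To compare the behaviour before and after the patch over more requests, a small helper can tally the responses. A minimal POSIX shell sketch; `probe` is a hypothetical name, and the ClusterIP in the commented usage line is the one from this walkthrough:

```shell
# Run a command <count> times and tally the distinct output lines,
# most frequent first.
# Usage: probe <count> <command> [args...]
probe() {
    count=$1
    shift
    i=0
    while [ "$i" -lt "$count" ]; do
        "$@"
        i=$((i + 1))
    done | sort | uniq -c | sort -rn
}

probe 3 echo web2   # prints "3 web2" (count is right-aligned by uniq -c)

# Against the ClusterIP; keep only the first line of each response so a
# multi-line default nginx page counts once:
#   probe 10 sh -c 'curl -s http://10.97.188.204 | head -n 1'
```

Without affinity, the tally is typically spread over `web1`, `web2` and the default nginx page (served by the nginx-nodeport Pods, which also match `app=nginx`). With `ClientIP` affinity, all requests from the same node should collapse onto a single line.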
