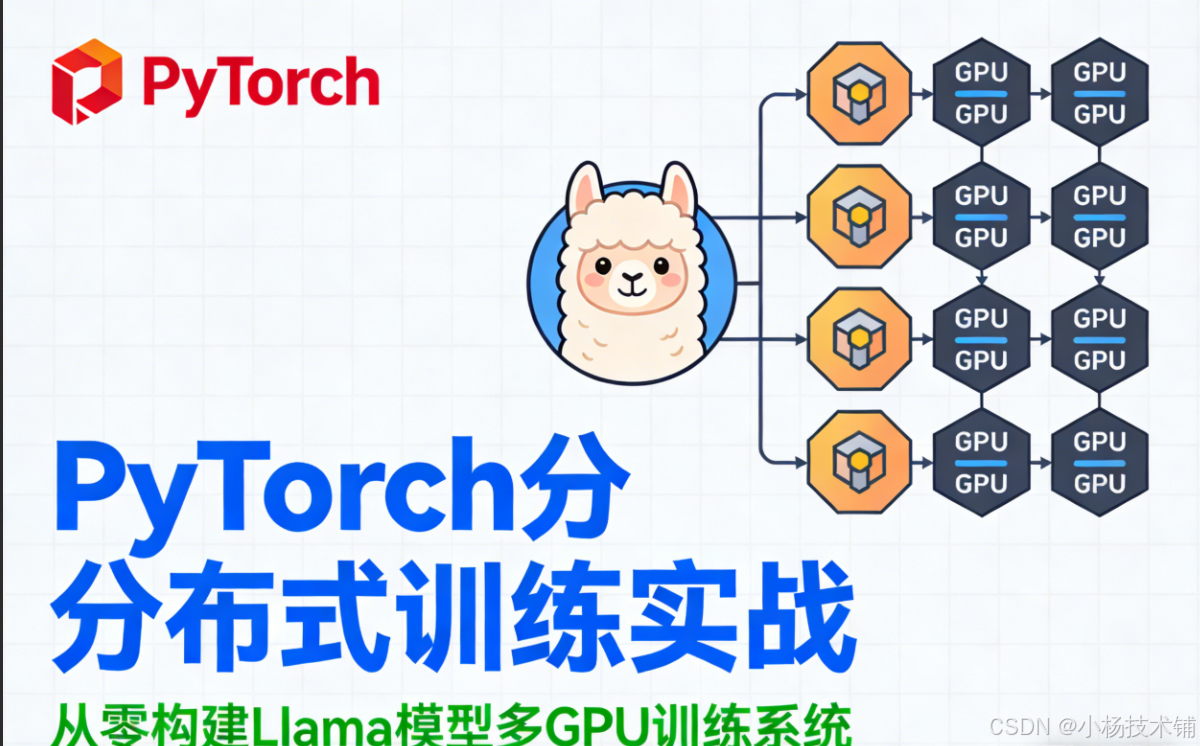

本文将手把手教你如何实现完整的Llama模型分布式训练系统,涵盖模型架构、数据预处理、多GPU并行训练等核心技术。

1. 引言:大模型训练的分布式挑战

随着大语言模型参数规模突破千亿级别,单卡训练已无法满足需求。本文将以Llama架构为例,详细讲解如何使用PyTorch的DistributedDataParallel(DDP)技术实现高效的多GPU分布式训练。

2. 模型架构设计

2.1 Llama配置类

python

import dataclasses

import torch

import torch.nn as nn

@dataclasses.dataclass

class LlamaConfig:

"""Llama模型超参数配置"""

vocab_size: int = 50000 # 词表大小

max_position_embeddings: int = 2048 # 最大序列长度

hidden_size: int = 768 # 隐藏层维度

intermediate_size: int = 4 * 768 # MLP中间层维度

num_hidden_layers: int = 12 # Transformer层数

num_attention_heads: int = 12 # 注意力头数

num_key_value_heads: int = 3 # GQA的KV头数2.2 旋转位置编码(RoPE)

python

class RotaryPositionEncoding(nn.Module):

"""旋转位置编码(RoPE)模块"""

def __init__(self, dim: int, max_position_embeddings: int):

super().__init__()

self.dim = dim

self.max_position_embeddings = max_position_embeddings

# 计算频率矩阵

inv_freq = 1.0 / (10000.0 ** (torch.arange(0, dim, 2) / dim))

inv_freq = torch.cat([inv_freq, inv_freq], dim=-1)

# 生成位置序列

position = torch.arange(max_position_embeddings)

sinusoid_inp = torch.outer(position, inv_freq)

# 注册为缓冲区(不参与梯度更新)

self.register_buffer("cos", sinusoid_inp.cos())

self.register_buffer("sin", sinusoid_inp.sin())

def forward(self, x: torch.Tensor) -> torch.Tensor:

batch_size, seq_len, num_heads, head_dim = x.shape

dtype = x.dtype

# 获取当前序列长度的cos/sin值

cos = self.cos[:seq_len].view(1, seq_len, 1, -1).to(dtype)

sin = self.sin[:seq_len].view(1, seq_len, 1, -1).to(dtype)

# 应用旋转位置编码

x1, x2 = x.chunk(2, dim=-1)

rotated = torch.cat([-x2, x1], dim=-1)

return x * cos + rotated * sin2.3 分组查询注意力(GQA)

python

class LlamaAttention(nn.Module):

"""分组查询注意力机制"""

def __init__(self, config: LlamaConfig):

super().__init__()

self.hidden_size = config.hidden_size

self.num_heads = config.num_attention_heads

self.head_dim = self.hidden_size // self.num_heads

self.num_kv_heads = config.num_key_value_heads

# 验证维度可整除

assert self.head_dim * self.num_heads == self.hidden_size

# 投影层

self.q_proj = nn.Linear(self.hidden_size, self.num_heads * self.head_dim, bias=False)

self.k_proj = nn.Linear(self.hidden_size, self.num_kv_heads * self.head_dim, bias=False)

self.v_proj = nn.Linear(self.hidden_size, self.num_kv_heads * self.head_dim, bias=False)

self.o_proj = nn.Linear(self.num_heads * self.head_dim, self.hidden_size, bias=False)

def forward(self, hidden_states, rope, attn_mask):

batch_size, seq_len, _ = hidden_states.shape

# 计算Q、K、V

query_states = self.q_proj(hidden_states).view(

batch_size, seq_len, self.num_heads, self.head_dim

)

key_states = self.k_proj(hidden_states).view(

batch_size, seq_len, self.num_kv_heads, self.head_dim

)

value_states = self.v_proj(hidden_states).view(

batch_size, seq_len, self.num_kv_heads, self.head_dim

)

# 应用RoPE

query_states = rope(query_states)

key_states = rope(key_states)

# 调整维度用于高效注意力计算

query_states = query_states.transpose(1, 2)

key_states = key_states.transpose(1, 2)

value_states = value_states.transpose(1, 2)

# 使用PyTorch优化版注意力

attn_output = torch.nn.functional.scaled_dot_product_attention(

query_states,

key_states,

value_states,

attn_mask=attn_mask,

dropout_p=0.0,

enable_gqa=True, # 启用GQA优化

)

# 输出投影

attn_output = attn_output.transpose(1, 2).reshape(

batch_size, seq_len, self.hidden_size

)

return self.o_proj(attn_output)2.4 完整的Llama模型

python

class LlamaForPretraining(nn.Module):

"""用于预训练的完整Llama模型"""

def __init__(self, config: LlamaConfig):

super().__init__()

self.base_model = LlamaModel(config)

self.lm_head = nn.Linear(config.hidden_size, config.vocab_size, bias=False)

def forward(self, input_ids, attn_mask):

hidden_states = self.base_model(input_ids, attn_mask)

return self.lm_head(hidden_states)3. 数据预处理与加载

3.1 自定义数据集类

python

class PretrainingDataset(torch.utils.data.Dataset):

"""预训练数据集处理"""

def __init__(self, dataset, tokenizer, seq_length):

self.dataset = dataset

self.tokenizer = tokenizer

self.seq_length = seq_length

# 特殊token ID

self.bot = tokenizer.token_to_id("[BOT]")

self.eot = tokenizer.token_to_id("[EOT]")

self.pad = tokenizer.token_to_id("[PAD]")

def __len__(self):

return len(self.dataset)

def __getitem__(self, index):

"""获取单个样本并处理为固定长度序列"""

text = self.dataset[index]["text"]

# 编码并添加特殊token

tokens = [self.bot] + self.tokenizer.encode(text).ids + [self.eot]

# 填充或截断到固定长度

token_len = len(tokens)

if token_len < self.seq_length + 1:

pad_len = self.seq_length + 1 - token_len

tokens += [self.pad] * pad_len

# 创建输入和目标序列

input_ids = torch.tensor(tokens[:self.seq_length], dtype=torch.int64)

target_ids = torch.tensor(tokens[1:self.seq_length + 1], dtype=torch.int64)

return input_ids, target_ids3.2 注意力掩码生成

python

def create_causal_mask(batch: torch.Tensor, dtype=torch.float32):

"""创建因果注意力掩码"""

_, seq_len = batch.shape

mask = torch.full(

(seq_len, seq_len),

float('-inf'),

device=batch.device,

dtype=dtype

).triu(diagonal=1)

return mask

def create_padding_mask(batch: torch.Tensor, padding_token_id, dtype=torch.float32):

"""创建填充注意力掩码"""

padded = torch.zeros_like(batch, device=batch.device, dtype=dtype)

padded = padded.masked_fill(batch == padding_token_id, float('-inf'))

mask = padded[:, :, None] + padded[:, None, :]

return mask[:, None, :, :]4. 分布式训练配置

4.1 初始化分布式环境

python

import torch.distributed as dist

from torch.nn.parallel import DistributedDataParallel as DDP

from torch.utils.data.distributed import DistributedSampler

def setup_distributed():

"""初始化分布式训练环境"""

# 初始化进程组(使用NCCL后端)

dist.init_process_group(backend="nccl")

# 获取进程信息

rank = dist.get_rank() # 全局进程ID

local_rank = int(os.environ["LOCAL_RANK"]) # 当前节点内的GPU编号

world_size = dist.get_world_size() # 进程总数

# 设置设备

device = torch.device(f"cuda:{local_rank}")

torch.cuda.set_device(device)

print(f"进程总数: {world_size}, 全局排名: {rank}, 本地排名: {local_rank}")

return rank, local_rank, world_size, device4.2 创建分布式数据加载器

python

def create_dataloader(dataset, tokenizer, seq_length, batch_size, world_size):

"""创建分布式数据加载器"""

# 创建数据集

pretrain_dataset = PretrainingDataset(dataset, tokenizer, seq_length)

# 分布式采样器(确保数据不重复)

sampler = DistributedSampler(

pretrain_dataset,

shuffle=False, # 如需shuffle,需在每个epoch调用sampler.set_epoch()

num_replicas=world_size

)

# 调整批次大小(每个GPU的微批次大小)

micro_batch_size = batch_size // world_size

# 创建数据加载器

dataloader = torch.utils.data.DataLoader(

pretrain_dataset,

batch_size=micro_batch_size,

sampler=sampler,

pin_memory=True, # 启用内存锁定,加速数据传输

num_workers=world_size, # 数据加载进程数

persistent_workers=True, # 保持worker进程活跃

)

return dataloader, sampler5. 完整训练流程

5.1 主训练函数

python

def train_model():

"""主训练函数"""

# 1. 初始化分布式环境

rank, local_rank, world_size, device = setup_distributed()

# 2. 加载数据和分词器

tokenizer = tokenizers.Tokenizer.from_file("bpe_50K.json")

dataset = datasets.load_dataset("HuggingFaceFW/fineweb", "sample-10BT", split="train")

# 3. 创建数据加载器

batch_size = 64 # 总批次大小

seq_length = 512

dataloader, sampler = create_dataloader(

dataset, tokenizer, seq_length, batch_size, world_size

)

# 4. 创建模型

config = LlamaConfig()

model = LlamaForPretraining(config).to(device)

# 5. 使用DDP包装模型

model = DDP(model, device_ids=[local_rank])

model.train()

# 6. 配置优化器和学习率调度器

optimizer = torch.optim.AdamW(

model.parameters(),

lr=1e-3,

betas=(0.9, 0.99),

eps=1e-8,

weight_decay=0.1

)

# 7. 训练循环

epochs = 3

for epoch in range(epochs):

# 设置采样器epoch(如需shuffle)

sampler.set_epoch(epoch)

# 进度条(只在主进程显示)

if rank == 0:

pbar = tqdm.tqdm(dataloader, desc=f"Epoch {epoch+1}/{epochs}")

else:

pbar = dataloader

for batch_id, (input_ids, target_ids) in enumerate(pbar):

# 8. 数据移动到设备

input_ids = input_ids.to(device)

target_ids = target_ids.to(device)

# 9. 创建注意力掩码

attn_mask = create_causal_mask(input_ids) + \

create_padding_mask(input_ids, tokenizer.token_to_id("[PAD]"))

# 10. 前向传播

logits = model(input_ids, attn_mask)

# 11. 计算损失

loss_fn = torch.nn.CrossEntropyLoss(

ignore_index=tokenizer.token_to_id("[PAD]")

)

loss = loss_fn(

logits.view(-1, logits.size(-1)),

target_ids.view(-1)

)

# 12. 反向传播和优化

optimizer.zero_grad()

loss.backward()

# 梯度裁剪

torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

optimizer.step()

# 13. 定期保存检查点(只在主进程)

if rank == 0 and batch_id % 1000 == 0:

save_checkpoint(

model.module, # 获取原始模型

optimizer,

epoch,

batch_id,

f"checkpoint_epoch{epoch}_batch{batch_id}.pth"

)

# 14. 更新进度条

if rank == 0:

pbar.set_postfix({"loss": loss.item()})

# 15. 保存最终模型(只在主进程)

if rank == 0:

save_final_model(model.module, "final_model.pth")

# 16. 清理分布式环境

dist.destroy_process_group()5.2 模型保存与加载

python

def save_checkpoint(model, optimizer, epoch, batch, filename):

"""保存训练检查点"""

checkpoint = {

"model_state_dict": model.state_dict(),

"optimizer_state_dict": optimizer.state_dict(),

"epoch": epoch,

"batch": batch,

}

torch.save(checkpoint, filename)

print(f"检查点已保存: {filename}")

def save_final_model(model, filename):

"""保存最终模型"""

torch.save(model.state_dict(), filename)

print(f"模型已保存: {filename}")6. 启动与监控

6.1 启动脚本

python

#!/bin/bash

# train.sh - 分布式训练启动脚本

# 单机多卡训练(4张GPU)

torchrun --standalone --nproc_per_node=4 train_ddp.py

# 多机多卡训练

# 主节点(IP: 192.168.1.100)

# torchrun --nnodes=2 --nproc_per_node=4 --node_rank=0 \

# --master_addr=192.168.1.100 --master_port=29500 \

# train_ddp.py6.2 训练监控

python

def monitor_training():

"""训练过程监控"""

import wandb

# 初始化监控工具

if dist.get_rank() == 0:

wandb.init(project="llama-pretraining", config={

"model_size": "768M",

"batch_size": 64,

"learning_rate": 1e-3,

"num_gpus": dist.get_world_size(),

})

# 在训练循环中添加日志

if dist.get_rank() == 0 and batch_id % 100 == 0:

wandb.log({

"loss": loss.item(),

"learning_rate": optimizer.param_groups[0]["lr"],

"epoch": epoch,

"batch": batch_id,

})7. 性能优化技巧

7.1 混合精度训练

python

from torch.cuda.amp import GradScaler, autocast

def train_with_amp():

"""使用混合精度训练"""

scaler = GradScaler()

for batch in dataloader:

with autocast():

logits = model(input_ids, attn_mask)

loss = loss_fn(logits, target_ids)

scaler.scale(loss).backward()

scaler.step(optimizer)

scaler.update()7.2 梯度累积

python

def train_with_gradient_accumulation(accumulation_steps=4):

"""梯度累积训练"""

optimizer.zero_grad()

for i, batch in enumerate(dataloader):

loss = compute_loss(batch)

# 缩放损失(重要!)

loss = loss / accumulation_steps

loss.backward()

# 每accumulation_steps步更新一次

if (i + 1) % accumulation_steps == 0:

optimizer.step()

optimizer.zero_grad()8. 常见问题排查

8.1 内存溢出问题

python

# 解决方案1:减少批次大小

batch_size = 32 # 调整为更小的值

# 解决方案2:使用梯度检查点

from torch.utils.checkpoint import checkpoint

class LlamaDecoderLayerWithCheckpoint(nn.Module):

def forward(self, x):

return checkpoint(self._forward, x, use_reentrant=False)8.2 数据加载瓶颈

python

# 优化数据加载

dataloader = DataLoader(

dataset,

batch_size=batch_size,

num_workers=4, # 增加worker数量

pin_memory=True,

prefetch_factor=2, # 预取数据

persistent_workers=True,

)9. 完整代码总结

本文提供的完整实现包含以下核心组件:

-

Llama模型架构:包含RoPE、GQA等现代Transformer技术

-

分布式训练框架:基于PyTorch DDP的多GPU并行训练

-

数据处理管道:支持大规模数据集的高效加载

-

训练监控系统:实时监控训练指标

10. 结语

通过本文的实践指南,你可以掌握:

-

大语言模型的核心架构实现

-

PyTorch分布式训练的最佳实践

-

多GPU训练的性能优化技巧

-

生产级训练系统的构建方法

分布式训练是AI工程师必备的核心技能。希望本文能帮助你在实际项目中快速部署高效的训练系统!

资源推荐:

实战建议:建议从单卡调试开始,逐步扩展到多卡,最后实现多机训练。同时建立完善的日志和监控系统,便于问题排查和性能优化。