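In the previous chapters we deployed Kafka and ClickHouse, with Kafka producing and ClickHouse consuming, so that data emitted by other stream-processing systems can be dumped into a ClickHouse database for further online analysis. In some cases, though, the data those systems emit is not in a usable shape, so it becomes necessary to deploy Flink for real-time cleansing.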

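I. Installing Flink Locally

Installing Flink itself is not complicated. As with other Java-based open-source projects, the real pain is version conflicts with Java and with the various dependencies. Flink was also refactored after 1.11, which causes a fair amount of trouble in deployment and even more in programming. So for both deployment and programming, follow the official documentation as closely as possible. Treat deployment guides and sample programs found online with caution unless they state their version explicitly; otherwise you can easily spend twice the effort for half the result. I fell into this pit myself and took three days to climb out.

As usual, first stop firewalld.service and set SELinux to disabled in /etc/selinux/config.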
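The firewall and SELinux step can be sketched as a small shell helper. This is only a sketch under assumptions: the function name set_selinux_disabled is made up for illustration, and it assumes GNU sed on a CentOS/RHEL-style host.

```shell
#!/usr/bin/env bash
# Sketch only: the helper name is hypothetical, not part of any tool.

# Rewrite the SELINUX= line of an SELinux config file in place.
# The pattern requires "=" right after SELINUX, so SELINUXTYPE= is left alone.
set_selinux_disabled() {
    local config_file="$1"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config_file"
}

# Typical use on the real host (as root; the config change applies after a reboot):
#   systemctl stop firewalld.service
#   systemctl disable firewalld.service
#   set_selinux_disabled /etc/selinux/config
#   setenforce 0    # permissive mode until the reboot
```

Taking the file path as an argument makes it possible to try the edit on a copy of the config before touching /etc/selinux/config.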

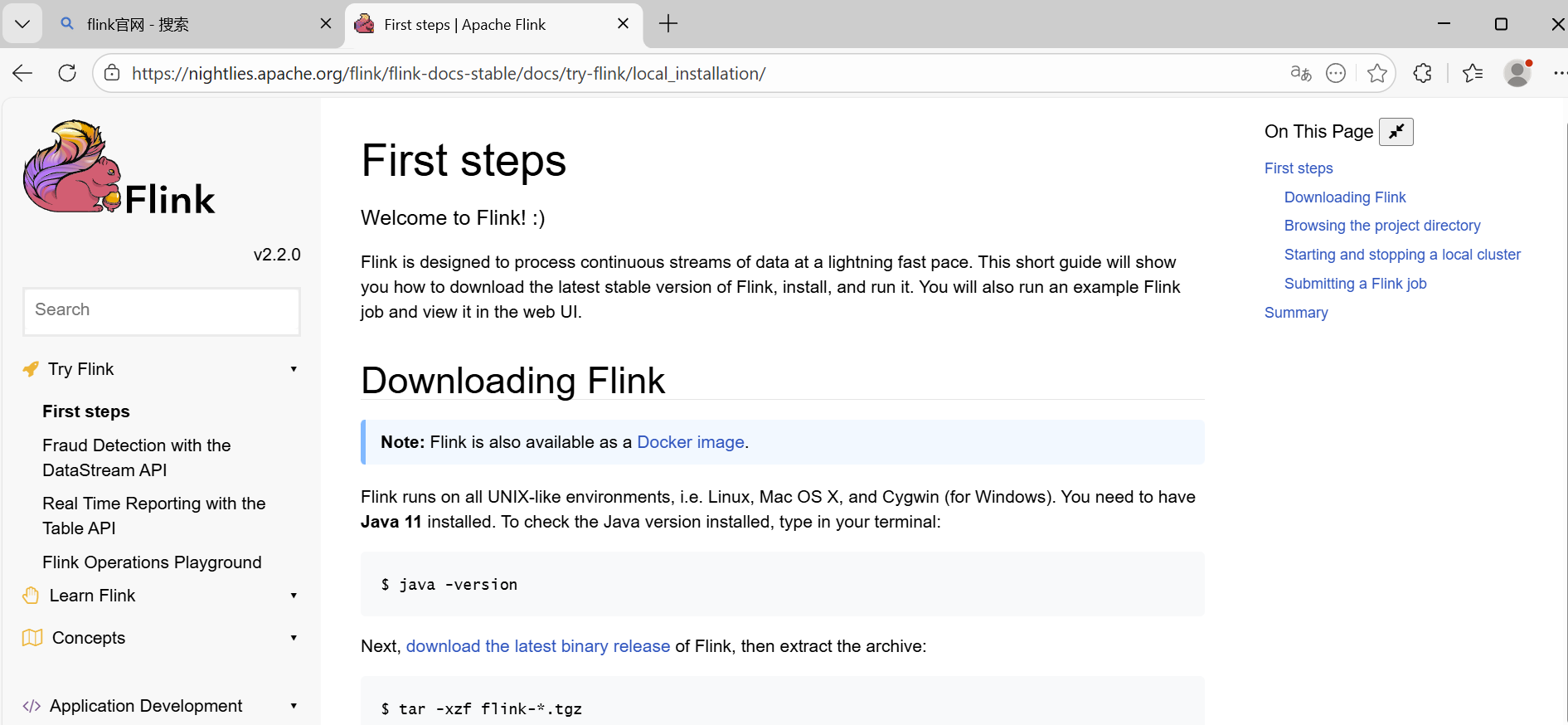

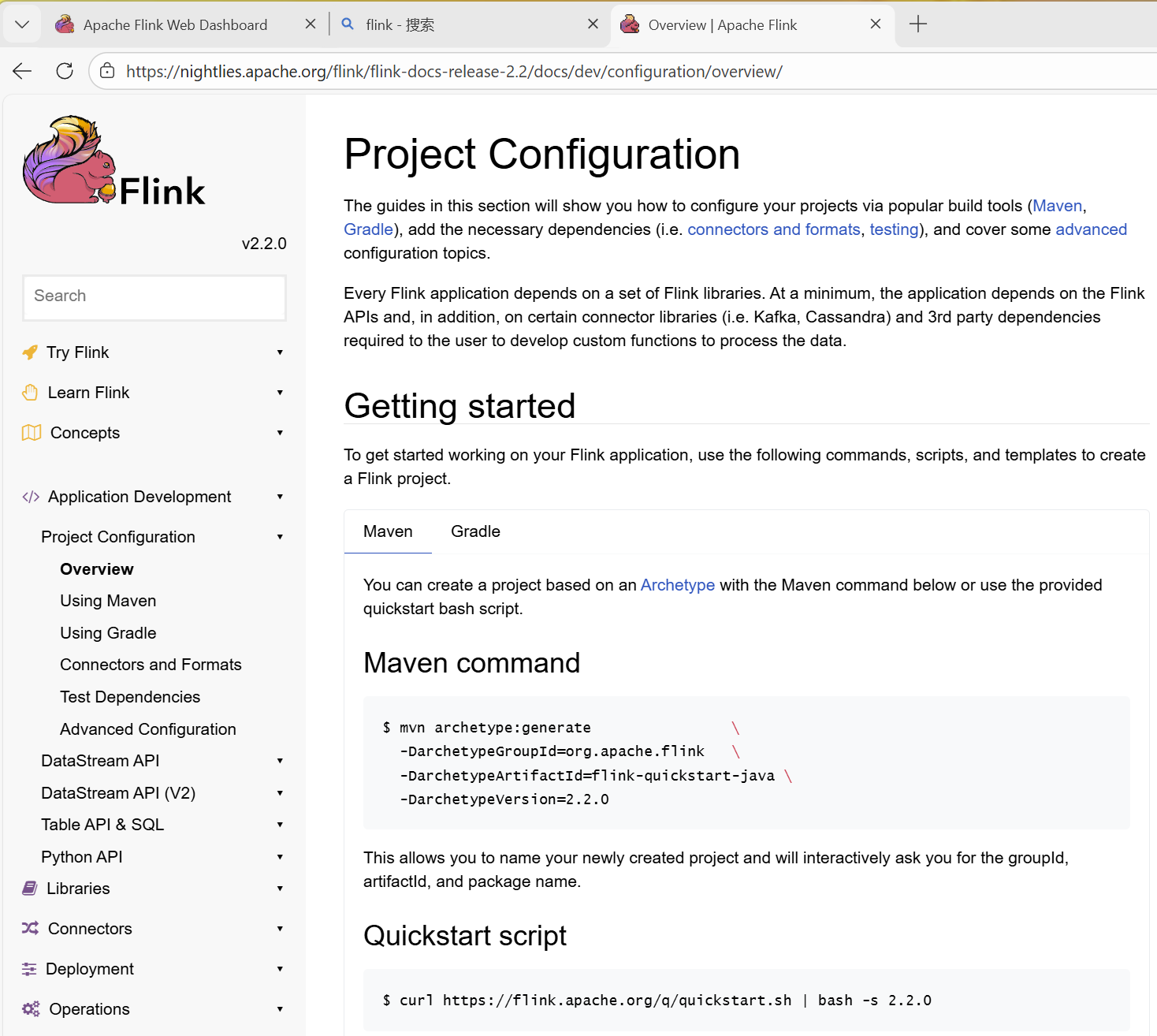

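So even for what follows, first, I label version numbers as clearly as I can; second, treat it only as a reference, because there is no telling when Flink will get its next major upgrade. The official deployment steps currently live in the official deployment guide:

1. Confirm the Java version

The official deployment guide linked here is for version 2.2.0, as shown at the lower right of the squirrel logo, and the Java version it recommends is 11, as the screenshot shows. **Note that this means Java 11, not Java 11+.** Some articles online report successful deployments on 1.8.0, 17, 21 and other versions, but that did not work for me. The deployment itself succeeded; the trouble came when submitting programs, which hit a series of reflective-access permission errors (the module system introduced in Java 9 restricts reflective access, and recent JDKs such as 17 enforce that strong encapsulation by default). Adding JVM options and custom serializers, as suggested in various posts, did not fix it, so Java 11 seems to be the better match for Flink.

(1) Check whether other Java versions are installed

Since it has to be Java 11, first check whether any other Java version is installed, using rpm -qa|grep java: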

[root@localhost ~]# rpm -qa|grep java

java-11-openjdk-demo-11.0.20.1.1-2.el8.x86_64

java-11-openjdk-devel-11.0.20.1.1-2.el8.x86_64

tzdata-java-2024a-1.el8.noarch

java-11-openjdk-11.0.20.1.1-2.el8.x86_64

java-11-openjdk-javadoc-11.0.20.1.1-2.el8.x86_64

java-11-openjdk-jmods-11.0.20.1.1-2.el8.x86_64

java-11-openjdk-headless-11.0.20.1.1-2.el8.x86_64

java-11-openjdk-src-11.0.20.1.1-2.el8.x86_64

javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch

java-11-openjdk-javadoc-zip-11.0.20.1.1-2.el8.x86_64

java-11-openjdk-static-libs-11.0.20.1.1-2.el8.x86_64

[root@localhost ~]#

If another Java version is installed, for example Java 17, remove it with yum remove java-17-*.
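Since the requirement is exactly Java 11, the check can be scripted rather than eyeballed. The sketch below parses the first line of java -version output; the function name java_major_version is hypothetical, and it assumes the usual version "x.y.z" format on that line.

```shell
#!/usr/bin/env bash
# Sketch only: java_major_version is an illustrative helper name.

# Print the major version found in the first line of `java -version` output.
java_major_version() {
    local first_line="$1"
    local version
    # Pull out the quoted version string, e.g. 11.0.20.1 or 1.8.0_362
    version=$(printf '%s\n' "$first_line" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
    case "$version" in
        1.*) printf '%s\n' "$version" | cut -d. -f2 ;;  # legacy scheme: 1.8.0_362 -> 8
        *)   printf '%s\n' "$version" | cut -d. -f1 ;;  # modern scheme: 11.0.20.1 -> 11
    esac
}

# Typical use (java -version writes to stderr, hence 2>&1):
#   major=$(java_major_version "$(java -version 2>&1 | head -n 1)")
#   [ "$major" = "11" ] || echo "expected Java 11, found: $major"
```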

(2) Install java-11-openjdk

[root@localhost ~]# yum search java*

上次元数据过期检查:0:30:58 前,执行于 2026年01月02日 星期五 06时18分05秒。

======================================================================= 名称 和 概况 匹配:java* =======================================================================

java-atk-wrapper.x86_64 : Java ATK Wrapper

javapackages-filesystem.noarch : Java packages filesystem layout

=========================================================================== 名称 匹配:java* ===========================================================================

java-1.8.0-openjdk.x86_64 : OpenJDK 8 Runtime Environment

java-1.8.0-openjdk-accessibility.x86_64 : OpenJDK 8 accessibility connector

java-1.8.0-openjdk-demo.x86_64 : OpenJDK 8 Demos

java-1.8.0-openjdk-devel.x86_64 : OpenJDK 8 Development Environment

java-1.8.0-openjdk-headless.x86_64 : OpenJDK 8 Headless Runtime Environment

java-1.8.0-openjdk-headless-slowdebug.x86_64 : OpenJDK 8 Runtime Environment unoptimised with full debugging on

java-1.8.0-openjdk-javadoc.noarch : OpenJDK 8 API documentation

java-1.8.0-openjdk-javadoc-zip.noarch : OpenJDK 8 API documentation compressed in a single archive

java-1.8.0-openjdk-slowdebug.x86_64 : OpenJDK 8 Runtime Environment unoptimised with full debugging on

java-1.8.0-openjdk-src.x86_64 : OpenJDK 8 Source Bundle

java-11-openjdk.x86_64 : OpenJDK 11 Runtime Environment

java-11-openjdk-demo.x86_64 : OpenJDK 11 Demos

java-11-openjdk-devel.x86_64 : OpenJDK 11 Development Environment

java-11-openjdk-headless.x86_64 : OpenJDK 11 Headless Runtime Environment

java-11-openjdk-javadoc.x86_64 : OpenJDK 11 API documentation

java-11-openjdk-javadoc-zip.x86_64 : OpenJDK 11 API documentation compressed in a single archive

java-11-openjdk-jmods.x86_64 : JMods for OpenJDK 11

java-11-openjdk-src.x86_64 : OpenJDK 11 Source Bundle

java-11-openjdk-static-libs.x86_64 : OpenJDK 11 libraries for static linking

java-17-openjdk.x86_64 : OpenJDK 17 Runtime Environment

java-17-openjdk-demo.x86_64 : OpenJDK 17 Demos

java-17-openjdk-devel.x86_64 : OpenJDK 17 Development Environment

java-17-openjdk-headless.x86_64 : OpenJDK 17 Headless Runtime Environment

java-17-openjdk-javadoc.x86_64 : OpenJDK 17 API documentation

java-17-openjdk-javadoc-zip.x86_64 : OpenJDK 17 API documentation compressed in a single archive

java-17-openjdk-jmods.x86_64 : JMods for OpenJDK 17

java-17-openjdk-src.x86_64 : OpenJDK 17 Source Bundle

java-17-openjdk-static-libs.x86_64 : OpenJDK 17 libraries for static linking

java-21-openjdk.x86_64 : OpenJDK 18 Runtime Environment

java-21-openjdk-demo.x86_64 : OpenJDK 18 Demos

java-21-openjdk-devel.x86_64 : OpenJDK 18 Development Environment

java-21-openjdk-headless.x86_64 : OpenJDK 18 Headless Runtime Environment

java-21-openjdk-javadoc.x86_64 : OpenJDK 18 API documentation

java-21-openjdk-javadoc-zip.x86_64 : OpenJDK 18 API documentation compressed in a single archive

java-21-openjdk-jmods.x86_64 : JMods for OpenJDK 18

java-21-openjdk-src.x86_64 : OpenJDK 18 Source Bundle

java-21-openjdk-static-libs.x86_64 : OpenJDK 18 libraries for static linking

javapackages-tools.noarch : Macros and scripts for Java packaging support

The CentOS Stream 8 repos carry Java 1.8.0, 11, 17 and 21; install Java 11 with yum install java-11-* -y:

[root@localhost ~]# yum install java-11-* -y

上次元数据过期检查:0:35:57 前,执行于 2026年01月02日 星期五 06时18分05秒。

依赖关系解决。

========================================================================================================================================================================

软件包 架构 版本 仓库 大小

========================================================================================================================================================================

安装:

java-11-openjdk x86_64 1:11.0.20.1.1-2.el8 appstream 473 k

java-11-openjdk-demo x86_64 1:11.0.20.1.1-2.el8 appstream 4.5 M

java-11-openjdk-devel x86_64 1:11.0.20.1.1-2.el8 appstream 3.4 M

java-11-openjdk-headless x86_64 1:11.0.20.1.1-2.el8 appstream 42 M

java-11-openjdk-javadoc x86_64 1:11.0.20.1.1-2.el8 appstream 18 M

java-11-openjdk-javadoc-zip x86_64 1:11.0.20.1.1-2.el8 appstream 42 M

java-11-openjdk-jmods x86_64 1:11.0.20.1.1-2.el8 appstream 342 M

java-11-openjdk-src x86_64 1:11.0.20.1.1-2.el8 appstream 51 M

java-11-openjdk-static-libs x86_64 1:11.0.20.1.1-2.el8 appstream 35 M

安装依赖关系:

copy-jdk-configs noarch 4.0-2.el8 appstream 31 k

javapackages-filesystem noarch 5.3.0-1.module_el8.0.0+11+5b8c10bd appstream 30 k

lksctp-tools x86_64 1.0.18-3.el8 baseos 100 k

ttmkfdir x86_64 3.0.9-54.el8 appstream 62 k

tzdata-java noarch 2024a-1.el8 appstream 268 k

xorg-x11-fonts-Type1 noarch 7.5-19.el8 appstream 522 k

启用模块流:

javapackages-runtime 201801

事务概要

========================================================================================================================================================================

安装 15 软件包

总下载:539 M

(3) Check the Java version to confirm the installation

[root@localhost ~]# java -version

openjdk version "11.0.20.1" 2023-08-24 LTS

OpenJDK Runtime Environment (Red_Hat-11.0.20.1.1-1) (build 11.0.20.1+1-LTS)

OpenJDK 64-Bit Server VM (Red_Hat-11.0.20.1.1-1) (build 11.0.20.1+1-LTS, mixed mode, sharing)

2. Download Flink 2.2.0 and unpack it

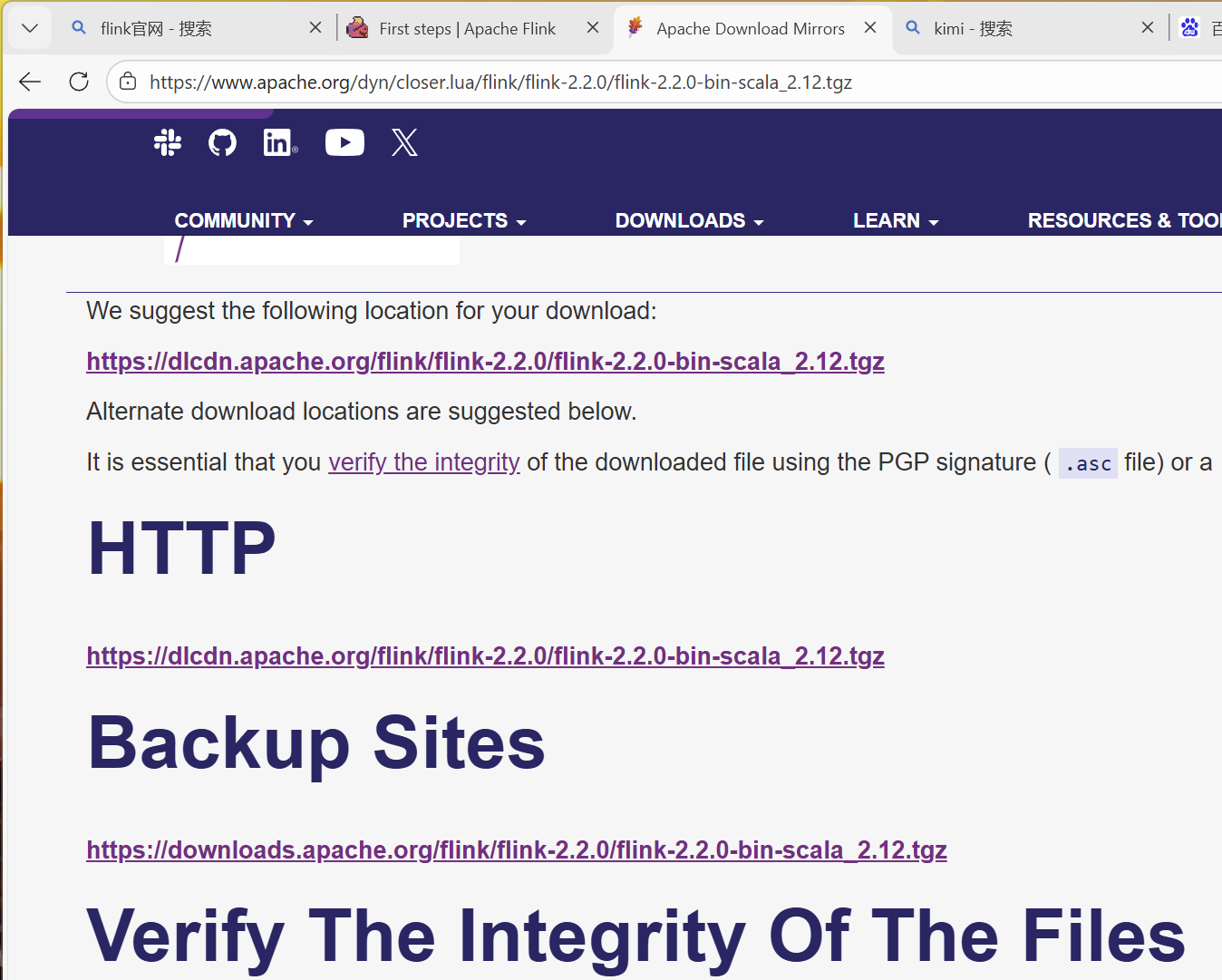

Following the link given in the official steps, download the 2.2.0 archive with wget:

(1) Download

[root@localhost ~]# wget https://dlcdn.apache.org/flink/flink-2.2.0/flink-2.2.0-bin-scala_2.12.tgz

--2026-01-02 07:08:24-- https://dlcdn.apache.org/flink/flink-2.2.0/flink-2.2.0-bin-scala_2.12.tgz

正在解析主机 dlcdn.apache.org (dlcdn.apache.org)... 151.101.2.132

正在连接 dlcdn.apache.org (dlcdn.apache.org)|151.101.2.132|:443... 已连接。

已发出 HTTP 请求,正在等待回应...

(2) Unpack

Unpack the archive into /opt (anywhere works, but this is the customary location):

[root@localhost ~]# tar -xzf flink-2.2.0-bin-scala_2.12.tgz -C /opt

After unpacking:

[root@localhost flink-2.2.0]# ls

bin conf examples lib LICENSE licenses log NOTICE opt plugins README.txt

[root@localhost flink-2.2.0]#

The official guide does not mention it, but you may need to add execute permission on the bin directory:

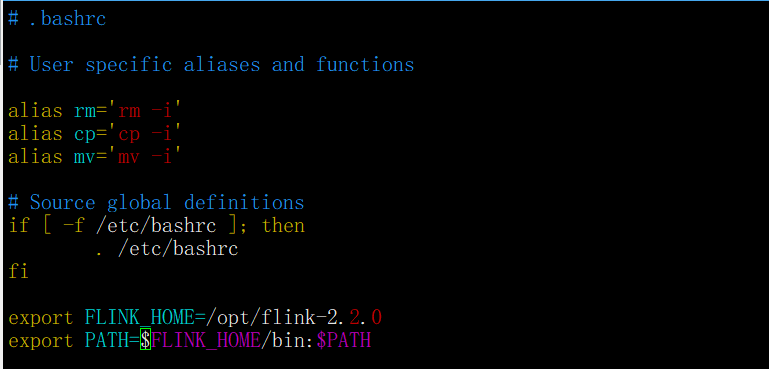

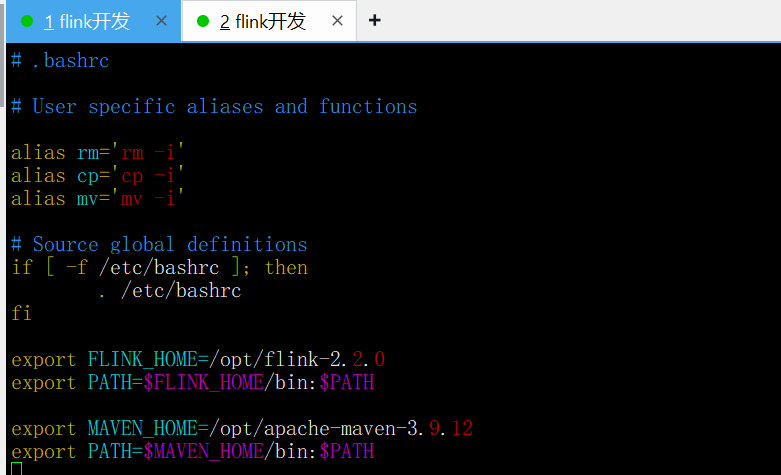

[root@localhost flink-2.2.0]# chmod -R +x bin

The official guide does not mention this either, but consider adding the bin directory to PATH to make later commands easier:

[root@localhost ~]# vim .bashrc

[root@localhost ~]# source .bashrc
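The article does not show what was typed into .bashrc. One possible way to do it, sketched here with a hypothetical helper named add_path_once, is to append an export line only if that exact line is not already in the file:

```shell
#!/usr/bin/env bash
# Sketch only: add_path_once is an illustrative helper, and the exact line the
# author added to .bashrc is not shown in the article.

# Append an "export PATH=..." line to a shell startup file, but only once.
add_path_once() {
    local rc_file="$1" dir="$2"
    local line="export PATH=\"$dir:\$PATH\""
    # -F fixed string, -x whole-line match, -q quiet
    grep -Fxq "$line" "$rc_file" 2>/dev/null || printf '%s\n' "$line" >> "$rc_file"
}

# Typical use:
#   add_path_once ~/.bashrc /opt/flink-2.2.0/bin
#   source ~/.bashrc
```

This only keeps the file free of repeated lines. Sourcing .bashrc several times in the same shell will still prepend the directory once per source.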

3. Start Flink

With the bin directory on PATH and marked executable, you can call its start-cluster.sh script directly to start a local Flink; correspondingly, stop-cluster.sh stops it.

[root@localhost ~]# start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host localhost.localdomain.

Starting taskexecutor daemon on host localhost.localdomain.

[root@localhost ~]# stop-cluster.sh

Stopping taskexecutor daemon (pid: 4855) on host localhost.localdomain.

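Stopping standalonesession daemon (pid: 4280) on host localhost.localdomain.

(1) Check that the processes started

If Flink really started, ps aux shows very long Flink-related process lines. They also show that the --add-opens=java.base/java.lang.reflect=ALL-UNNAMED option is already present, which confirms that changing options alone cannot fix the reflection permission problem: the option is already enabled by default.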

[root@localhost ~]# ps aux|grep flink

root 4280 56.6 3.8 7057580 302784 pts/1 Sl 07:42 0:05 java -Xmx1073741824 -Xms1073741824 -XX:MaxMetaspaceSize=268435456 -XX:+IgnoreUnrecognizedVMOptions --add-exports=java.rmi/sun.rmi.registry=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.api=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.file=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.parser=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.tree=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.util=ALL-UNNAMED --add-exports=java.security.jgss/sun.security.krb5=ALL-UNNAMED --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/java.net=ALL-UNNAMED --add-opens=java.base/java.io=ALL-UNNAMED --add-opens=java.base/java.nio=ALL-UNNAMED --add-opens=java.base/sun.nio.ch=ALL-UNNAMED --add-opens=java.base/java.lang.reflect=ALL-UNNAMED --add-opens=java.base/java.text=ALL-UNNAMED --add-opens=java.base/java.time=ALL-UNNAMED --add-opens=java.base/java.util=ALL-UNNAMED --add-opens=java.base/java.util.concurrent=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.locks=ALL-UNNAMED -Dlog.file=/opt/flink-2.2.0/log/flink-root-standalonesession-0-localhost.localdomain.log -Dlog4j.configuration=file:/opt/flink-2.2.0/conf/log4j.properties -Dlog4j.configurationFile=file:/opt/flink-2.2.0/conf/log4j.properties -Dlogback.configurationFile=file:/opt/flink-2.2.0/conf/logback.xml -classpath 
/opt/flink-2.2.0/lib/flink-cep-2.2.0.jar:/opt/flink-2.2.0/lib/flink-connector-files-2.2.0.jar:/opt/flink-2.2.0/lib/flink-csv-2.2.0.jar:/opt/flink-2.2.0/lib/flink-json-2.2.0.jar:/opt/flink-2.2.0/lib/flink-scala_2.12-2.2.0.jar:/opt/flink-2.2.0/lib/flink-table-api-java-uber-2.2.0.jar:/opt/flink-2.2.0/lib/flink-table-planner-loader-2.2.0.jar:/opt/flink-2.2.0/lib/flink-table-runtime-2.2.0.jar:/opt/flink-2.2.0/lib/log4j-1.2-api-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-api-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-core-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-layout-template-json-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-slf4j-impl-2.24.3.jar:/opt/flink-2.2.0/lib/flink-dist-2.2.0.jar:::: org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint -D jobmanager.memory.off-heap.size=134217728b -D jobmanager.memory.jvm-overhead.min=201326592b -D jobmanager.memory.jvm-metaspace.size=268435456b -D jobmanager.memory.heap.size=1073741824b -D jobmanager.memory.jvm-overhead.max=201326592b --configDir /opt/flink-2.2.0/conf

root 4855 65.8 5.0 6775872 394840 pts/1 Sl 07:42 0:05 java -Xmx536870902 -Xms536870902 -XX:MaxDirectMemorySize=268435458 -XX:MaxMetaspaceSize=268435456 -XX:+IgnoreUnrecognizedVMOptions --add-exports=java.rmi/sun.rmi.registry=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.api=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.file=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.parser=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.tree=ALL-UNNAMED --add-exports=jdk.compiler/com.sun.tools.javac.util=ALL-UNNAMED --add-exports=java.security.jgss/sun.security.krb5=ALL-UNNAMED --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/java.net=ALL-UNNAMED --add-opens=java.base/java.io=ALL-UNNAMED --add-opens=java.base/java.nio=ALL-UNNAMED --add-opens=java.base/sun.nio.ch=ALL-UNNAMED --add-opens=java.base/java.lang.reflect=ALL-UNNAMED --add-opens=java.base/java.text=ALL-UNNAMED --add-opens=java.base/java.time=ALL-UNNAMED --add-opens=java.base/java.util=ALL-UNNAMED --add-opens=java.base/java.util.concurrent=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.locks=ALL-UNNAMED -Dlog.file=/opt/flink-2.2.0/log/flink-root-taskexecutor-0-localhost.localdomain.log -Dlog4j.configuration=file:/opt/flink-2.2.0/conf/log4j.properties -Dlog4j.configurationFile=file:/opt/flink-2.2.0/conf/log4j.properties -Dlogback.configurationFile=file:/opt/flink-2.2.0/conf/logback.xml -classpath 
/opt/flink-2.2.0/lib/flink-cep-2.2.0.jar:/opt/flink-2.2.0/lib/flink-connector-files-2.2.0.jar:/opt/flink-2.2.0/lib/flink-csv-2.2.0.jar:/opt/flink-2.2.0/lib/flink-json-2.2.0.jar:/opt/flink-2.2.0/lib/flink-scala_2.12-2.2.0.jar:/opt/flink-2.2.0/lib/flink-table-api-java-uber-2.2.0.jar:/opt/flink-2.2.0/lib/flink-table-planner-loader-2.2.0.jar:/opt/flink-2.2.0/lib/flink-table-runtime-2.2.0.jar:/opt/flink-2.2.0/lib/log4j-1.2-api-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-api-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-core-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-layout-template-json-2.24.3.jar:/opt/flink-2.2.0/lib/log4j-slf4j-impl-2.24.3.jar:/opt/flink-2.2.0/lib/flink-dist-2.2.0.jar:::: org.apache.flink.runtime.taskexecutor.TaskManagerRunner --configDir /opt/flink-2.2.0/conf -D taskmanager.memory.network.min=134217730b -D taskmanager.cpu.cores=1.0 -D taskmanager.memory.task.off-heap.size=0b -D taskmanager.memory.jvm-metaspace.size=268435456b -D external-resources=none -D taskmanager.memory.jvm-overhead.min=201326592b -D taskmanager.memory.framework.off-heap.size=134217728b -D taskmanager.memory.network.max=134217730b -D taskmanager.memory.framework.heap.size=134217728b -D taskmanager.memory.managed.size=536870920b -D taskmanager.memory.task.heap.size=402653174b -D taskmanager.numberOfTaskSlots=1 -D taskmanager.memory.jvm-overhead.max=201326592b

root 4971 0.0 0.0 222016 1088 pts/1 S+ 07:42 0:00 grep --color=auto flink

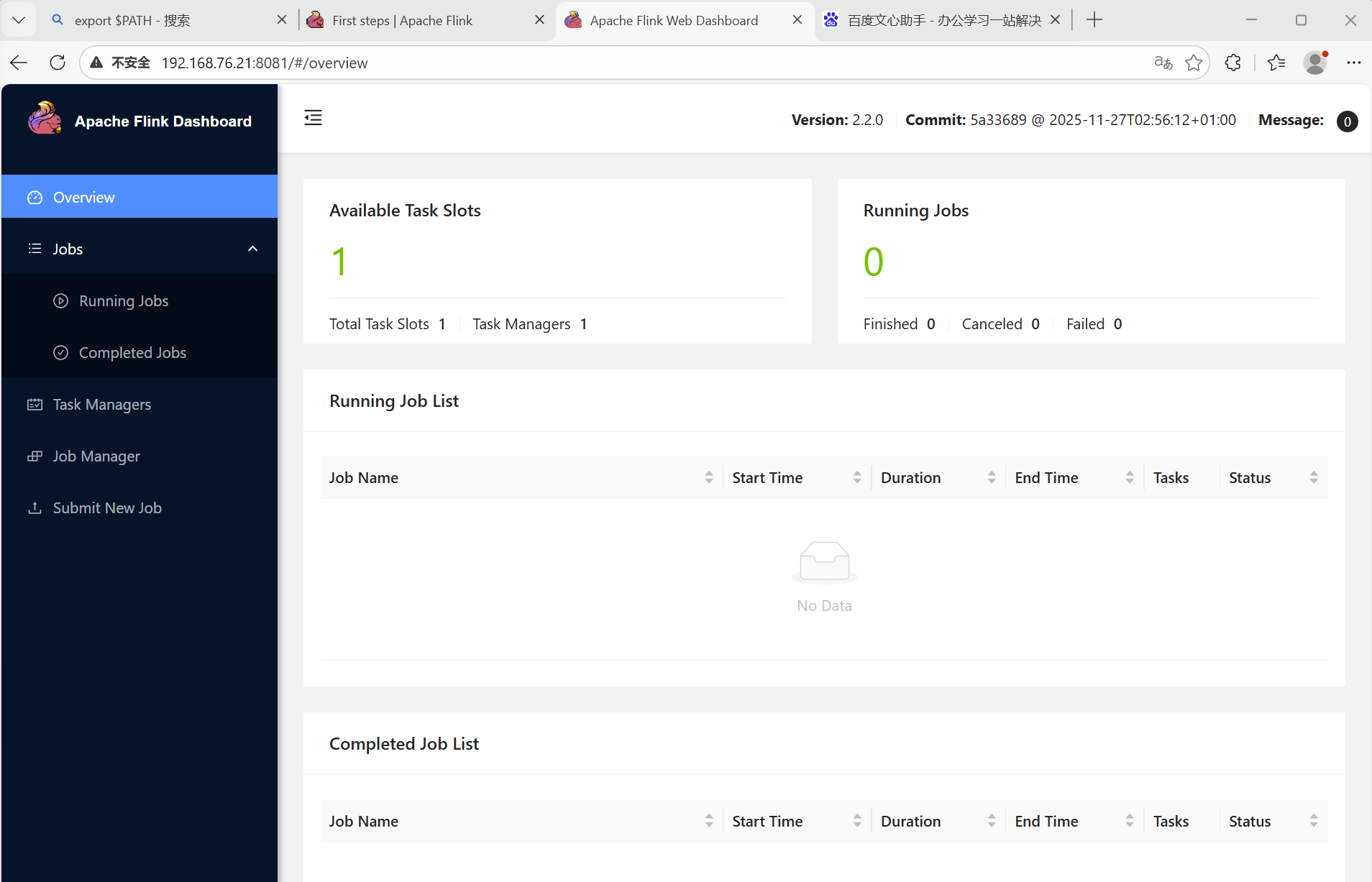

[root@localhost ~]#

(2) Check the network service

[root@localhost ~]# netstat -nult |grep 8081

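tcp6 0 0 :::8081 :::* LISTEN

Beyond that, you can open the web page on port 8081 of the host's IP address to confirm that the Flink service started normally:

If you can see this page, Flink has started successfully on the single machine.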
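The port check can also be turned into a yes/no test for use in scripts. The sketch below reads netstat-style text on standard input; the function name port_is_listening is hypothetical, and it assumes the column layout shown in the netstat output above (local address in the fourth column, state in the last).

```shell
#!/usr/bin/env bash
# Sketch only: port_is_listening is an illustrative helper name.

# Succeed if netstat-style text on stdin shows a LISTEN socket on the given port.
port_is_listening() {
    local port="$1"
    awk -v p="$port" '
        # field 4 is the local address (e.g. :::8081), the last field is the state
        $NF == "LISTEN" && $4 ~ (":" p "$") { found = 1 }
        END { exit !found }
    '
}

# Typical use on the Flink host:
#   netstat -nult | port_is_listening 8081 && echo "web UI port is open"
# The REST endpoint behind the web UI can be probed as well, for example:
#   curl -s http://localhost:8081/overview
```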

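II. Testing Local Job Submission

1. Check the output directory

Before submitting a job, check the log directory where results are written: by default, the print output of a job submitted to Flink goes to the .out log files. It may look like this: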

[root@localhost ~]# cd /opt/flink-2.2.0/

[root@localhost flink-2.2.0]# ls

bin conf examples lib LICENSE licenses log NOTICE opt plugins README.txt

[root@localhost flink-2.2.0]# cd log

[root@localhost log]# ls

flink-root-standalonesession-0-localhost.localdomain.log flink-root-taskexecutor-0-localhost.localdomain.log

flink-root-standalonesession-0-localhost.localdomain.log.1 flink-root-taskexecutor-0-localhost.localdomain.log.1

flink-root-standalonesession-0-localhost.localdomain.out flink-root-taskexecutor-0-localhost.localdomain.out

[root@localhost log]# cd ..

[root@localhost flink-2.2.0]# tail log/flink-*.out

==> log/flink-root-standalonesession-0-localhost.localdomain.out <==

==> log/flink-root-taskexecutor-0-localhost.localdomain.out <==

[root@localhost flink-2.2.0]#

2. Submit a job

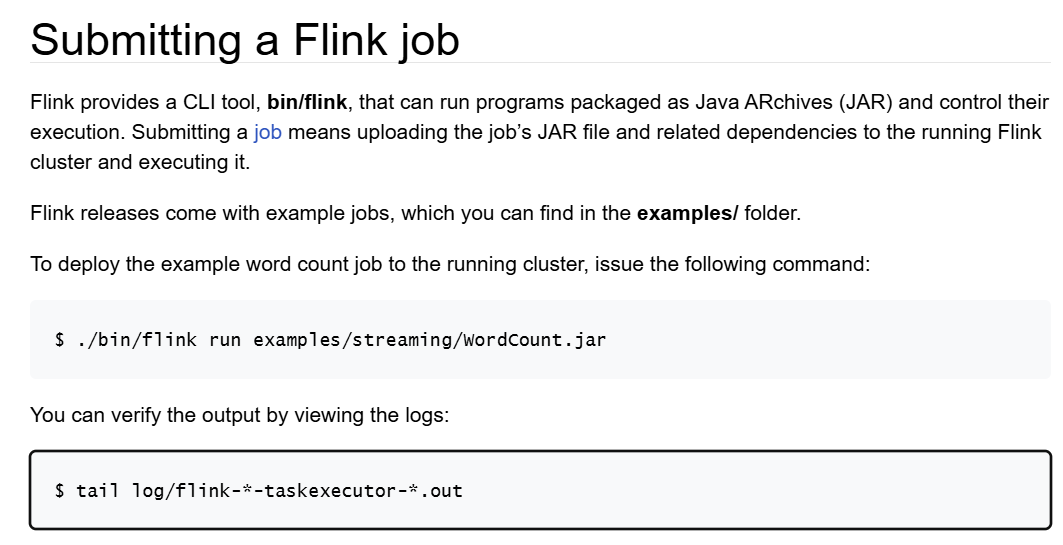

Following the steps on the official site, submit the WordCount example from the command line:

The run looks like this:

[root@localhost ~]# flink run /opt/flink-2.2.0/examples/streaming/WordCount.jar

Executing example with default input data.

Use --input to specify file input.

Printing result to stdout. Use --output to specify output path.

Job has been submitted with JobID 77021b4b29f2500ff1aaa2bed4cc12b2

Program execution finished

Job with JobID 77021b4b29f2500ff1aaa2bed4cc12b2 has finished.

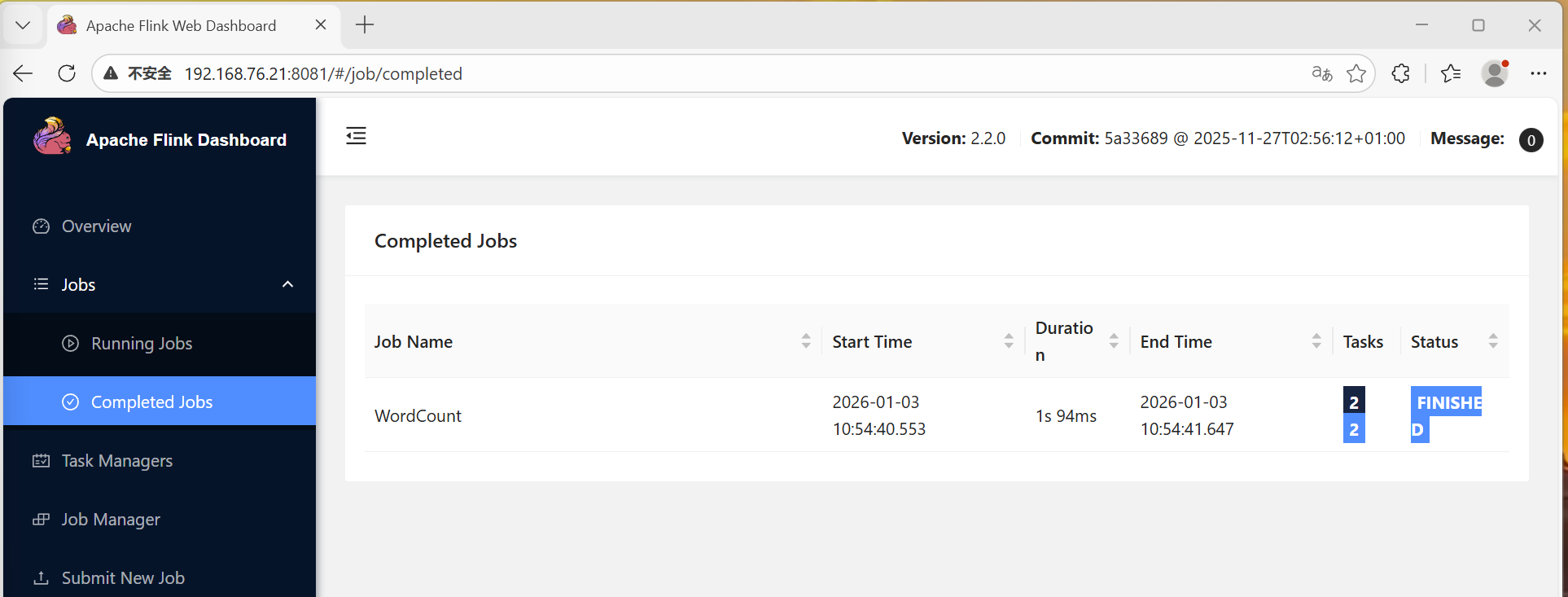

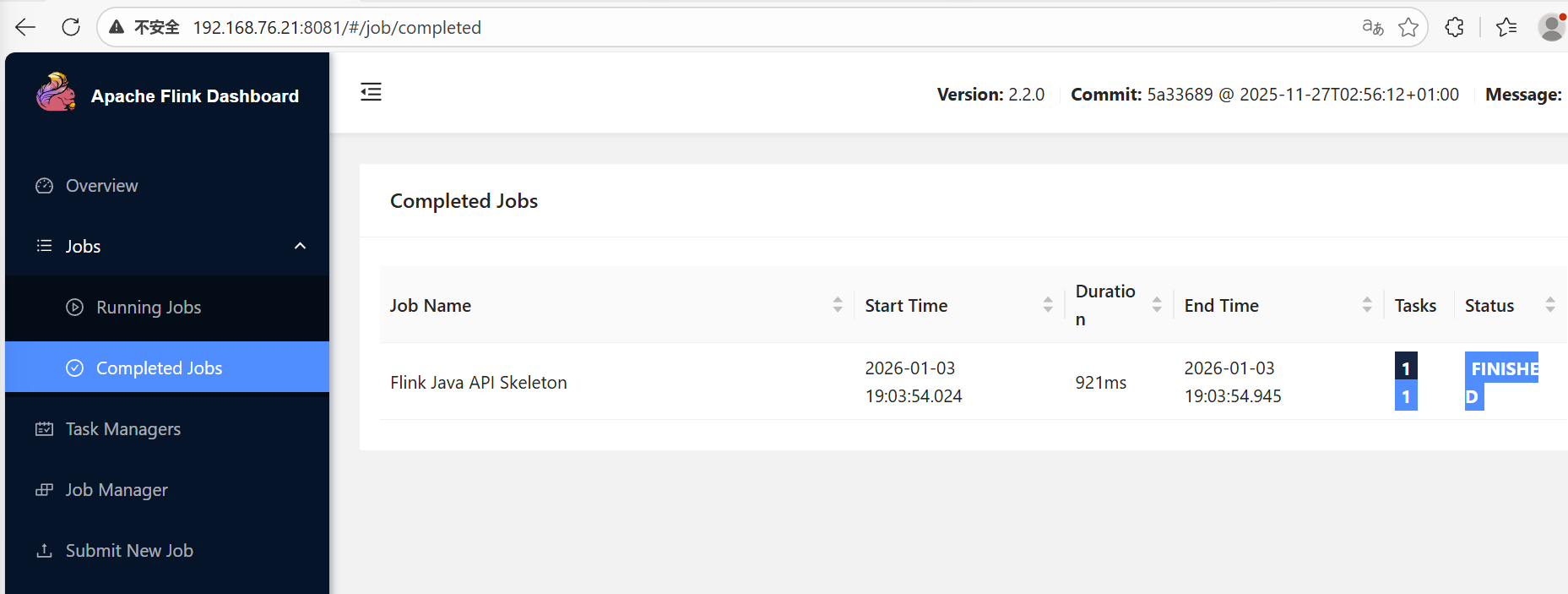

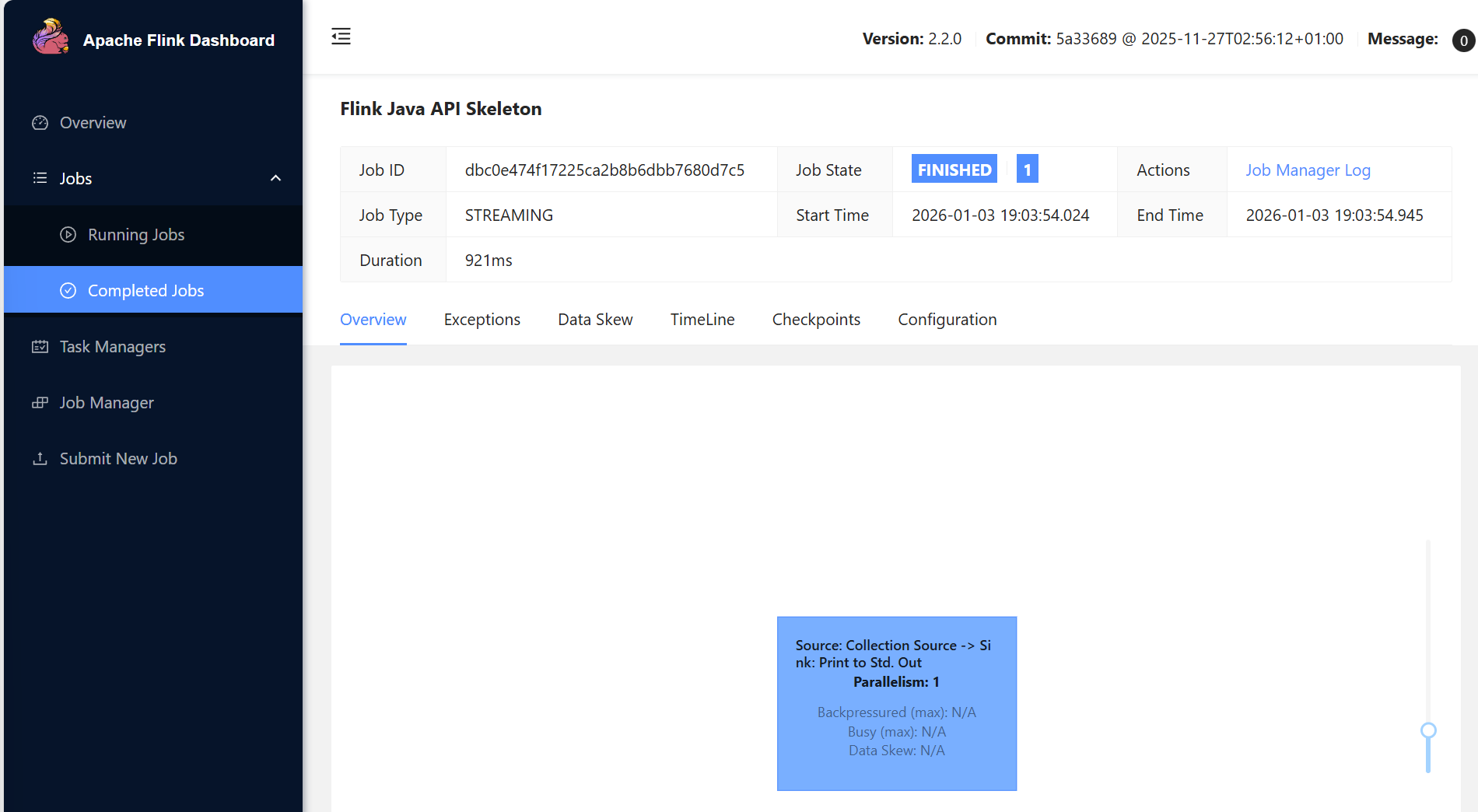

Job Runtime: 1094 ms

You can also see it in the web management UI; if you are quick, you may even catch it under Running Jobs:

3. Check how the job ran

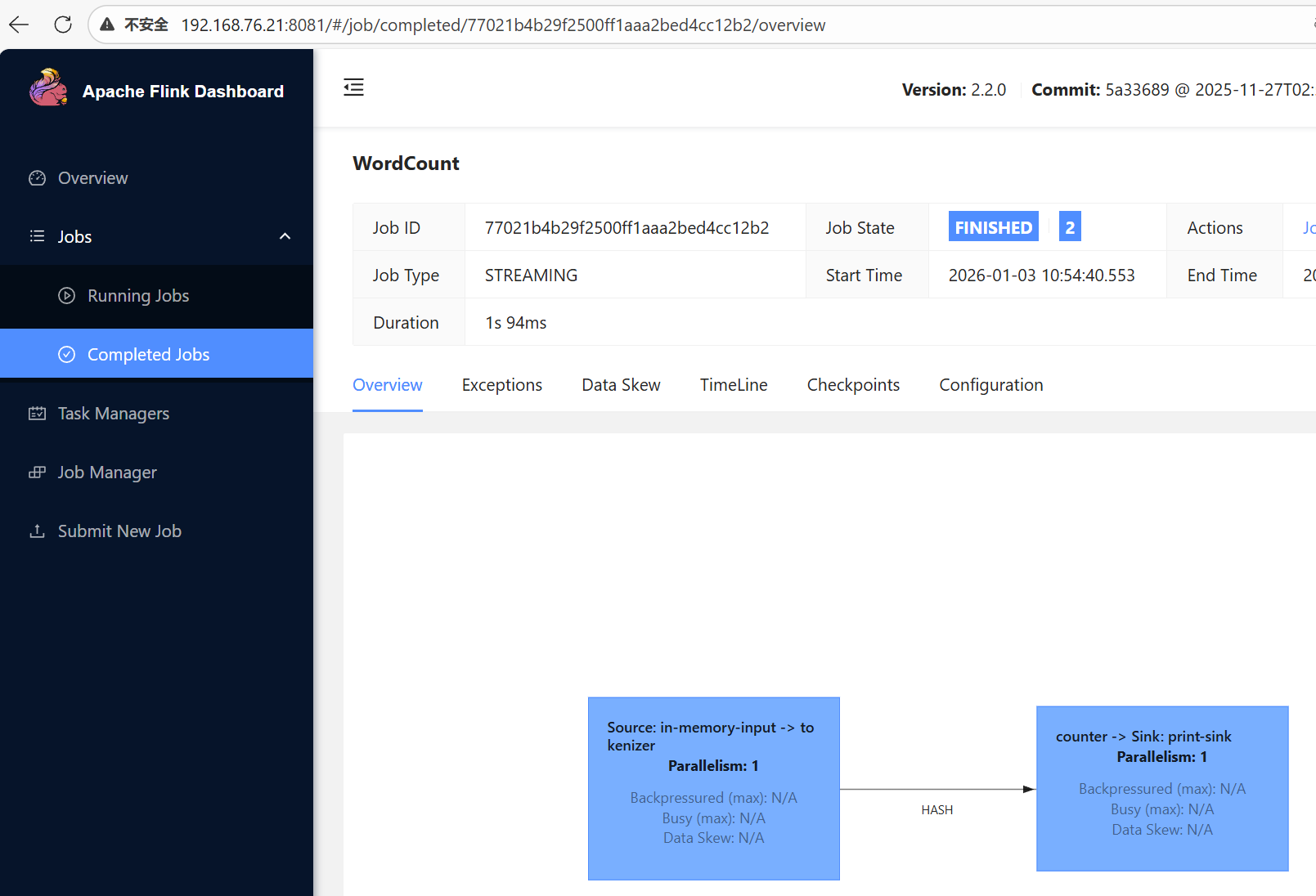

Click the job name to see the job's execution plan:

Run tail on flink-*.out again to see what the example program printed:

[root@localhost flink-2.2.0]# tail log/flink-*.out

==> log/flink-root-standalonesession-0-localhost.localdomain.out <==

==> log/flink-root-taskexecutor-0-localhost.localdomain.out <==

(nymph,1)

(in,3)

(thy,1)

(orisons,1)

(be,4)

(all,2)

(my,1)

(sins,1)

(remember,1)

(d,4)

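[root@localhost flink-2.2.0]#

III. Building a Local Development Environment

Completing the steps above shows that we have a reasonably stable local Flink environment. On top of it, adding Maven and Scala (optional) enables further development.

1. Install the development packages

Install the usual development packages first; later we may need gdb to debug Java programs.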

[root@localhost ~]# yum group install "Development tools" -y

上次元数据过期检查:16:35:37 前,执行于 2026年01月02日 星期五 06时18分05秒。

依赖关系解决。

=======================================================================================================================================================================

软件包 架构 版本 仓库 大小

=======================================================================================================================================================================

安装组/模块包:

asciidoc noarch 8.6.10-0.5.20180627gitf7c2274.el8 appstream 216 k

autoconf noarch 2.69-29.el8 appstream 710 k

automake noarch 1.16.1-8.el8 appstream 740 k

bison x86_64 3.0.4-10.el8 appstream 688 k

byacc x86_64 1.9.20170709-4.el8 appstream 91 k

ctags x86_64 5.8-23.el8 baseos 170 k

diffstat x86_64 1.61-7.el8 appstream 44 k

elfutils-libelf-devel x86_64 0.190-2.el8 baseos 62 k

...... ......

My versions are as follows:

[root@localhost ~]# gcc --version

gcc (GCC) 8.5.0 20210514 (Red Hat 8.5.0-22)

Copyright © 2018 Free Software Foundation, Inc.

本程序是自由软件;请参看源代码的版权声明。本软件没有任何担保;

包括没有适销性和某一专用目的下的适用性担保。

[root@localhost ~]# gdb --version

GNU gdb (GDB) Red Hat Enterprise Linux 8.2-20.el8

Copyright (C) 2018 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

2. Install Maven

On CentOS 8 Stream, Maven can be installed directly from the repos, but hold off for now:

[root@localhost ~]# yum install maven -y

上次元数据过期检查:2:32:58 前,执行于 2026年01月02日 星期五 22时54分55秒。

依赖关系解决。

=======================================================================================================================================================================

软件包 架构 版本 仓库 大小

=======================================================================================================================================================================

安装:

maven noarch 1:3.5.4-5.module_el8.6.0+1030+8d97e896 appstream 27 k

安装依赖关系:

aopalliance noarch 1.0-17.module_el8.6.0+1030+8d97e896 appstream 17 k

apache-commons-cli noarch 1.4-4.module_el8.0.0+39+6a9b6e22 appstream 74 k

apache-commons-codec noarch 1.11-3.module_el8.0.0+39+6a9b6e22 appstream 288 k

apache-commons-io noarch 1:2.6-3.module_el8.6.0+1030+8d97e896 appstream 224 k

apache-commons-lang3 noarch 3.7-3.module_el8.0.0+39+6a9b6e22 appstream 483 k

apache-commons-logging noarch 1.2-13.module_el8.6.0+1030+8d97e896 appstream 85 k

atinject noarch 1-28.20100611svn86.module_el8.6.0+1030+8d97e896 appstream 20 k

cdi-api noarch 1.2-8.module_el8.6.0+1030+8d97e896 appstream 70 k

...... ......

But this Maven is bundled with java-1.8.0:

[root@localhost ~]# mvn --version

Apache Maven 3.5.4 (Red Hat 3.5.4-5)

Maven home: /usr/share/maven

Java version: 1.8.0_362, vendor: Red Hat, Inc., runtime: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.362.b08-3.el8.x86_64/jre

Default locale: zh_CN, platform encoding: UTF-8

OS name: "linux", version: "4.18.0-553.6.1.el8.x86_64", arch: "amd64", family: "unix"

(1) Download

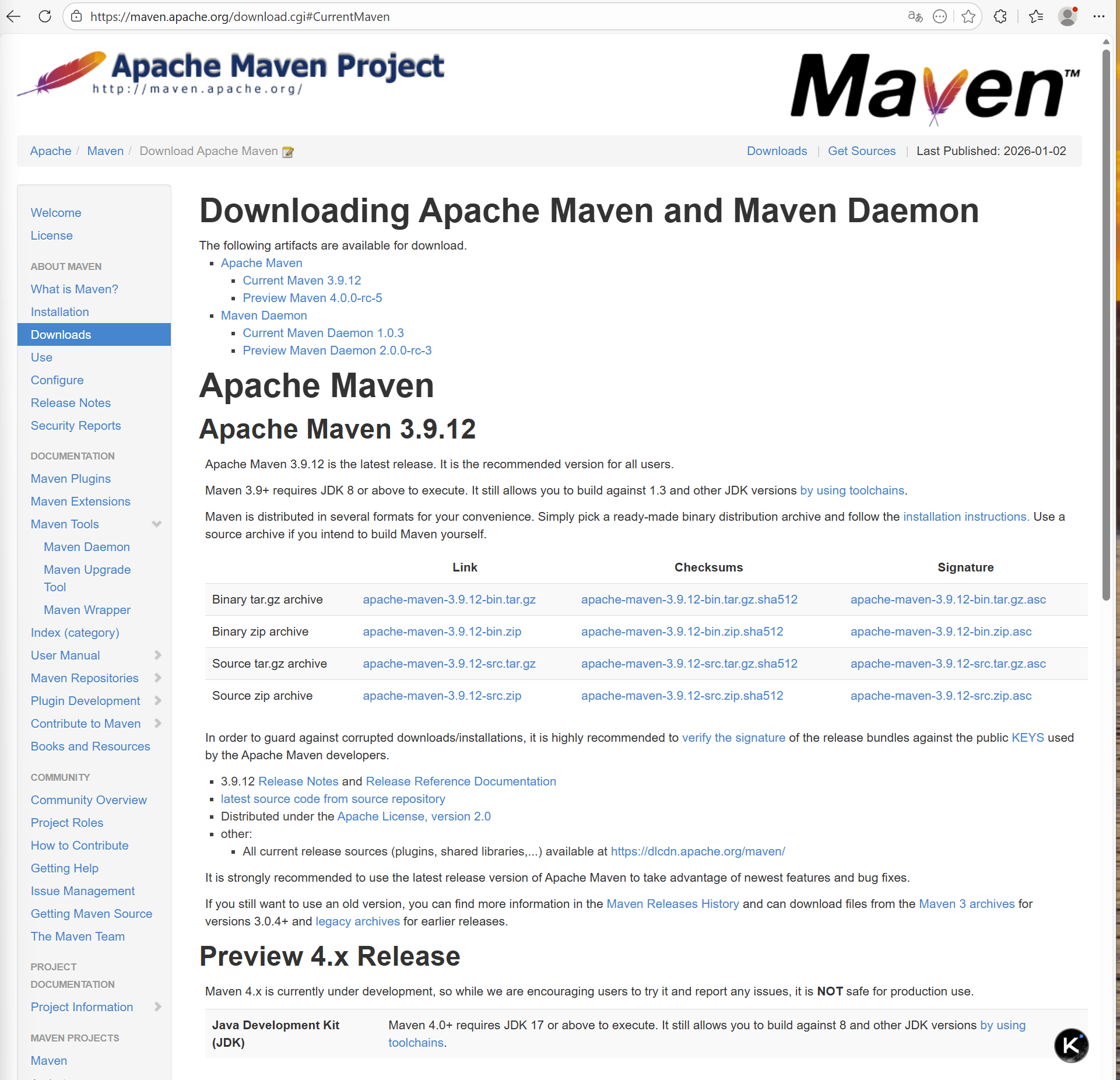

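This is the first pitfall. If you use this Maven, it switches the system's default Java version back to 1.8.0, and 1.8.0 seems to have problems with Flink's new framework (source and sink), so several dependencies in the pom file always conflict to some degree. It is therefore better to use Maven's official binary package directly:

Copy the link and download it directly: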

[root@localhost ~]# wget https://dlcdn.apache.org/maven/maven-3/3.9.12/binaries/apache-maven-3.9.12-bin.tar.gz

--2026-01-03 01:36:26-- https://dlcdn.apache.org/maven/maven-3/3.9.12/binaries/apache-maven-3.9.12-bin.tar.gz

正在解析主机 dlcdn.apache.org (dlcdn.apache.org)... 151.101.2.132, 2a04:4e42::644

正在连接 dlcdn.apache.org (dlcdn.apache.org)|151.101.2.132|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 200 OK

长度:9233336 (8.8M) [application/x-gzip]

正在保存至: "apache-maven-3.9.12-bin.tar.gz"

(2) Unpack

Unpack it under /opt as well:

[root@localhost ~]# tar -zxf apache-maven-3.9.12-bin.tar.gz -C /opt

[root@localhost ~]#

[root@localhost opt]# ls

apache-maven-3.9.12 flink-2.2.0

Then add its bin directory to PATH in .bashrc:

After sourcing it, the Java version now matches expectations:

[root@localhost ~]# source .bashrc

[root@localhost ~]# echo $PATH

/opt/apache-maven-3.9.12/bin:/opt/flink-2.2.0/bin:/opt/flink-2.2.0/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

[root@localhost ~]# mvn -version

Apache Maven 3.9.12 (848fbb4bf2d427b72bdb2471c22fced7ebd9a7a1)

Maven home: /opt/apache-maven-3.9.12

Java version: 11.0.20.1, vendor: Red Hat, Inc., runtime: /usr/lib/jvm/java-11-openjdk-11.0.20.1.1-2.el8.x86_64

Default locale: zh_CN, platform encoding: UTF-8

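OS name: "linux", version: "4.18.0-553.6.1.el8.x86_64", arch: "amd64", family: "unix"

(3) Configuration file

For Maven, the localRepository setting in settings.xml under its conf subdirectory determines where all downloaded dependencies are stored (the local repository). If dependency problems come up later, this directory (by default ~/.m2/repository) is the place to look.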

<!-- localRepository

| The path to the local repository maven will use to store artifacts.

|

| Default: ${user.home}/.m2/repository

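<localRepository>/path/to/local/repo</localRepository>

-->

As dependencies accumulate, this directory grows fairly large (around 1 GB); if needed, move it elsewhere through this configuration file.

3. Install Scala (optional)

Scala is optional; skip it if you do not plan to program in Scala later.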

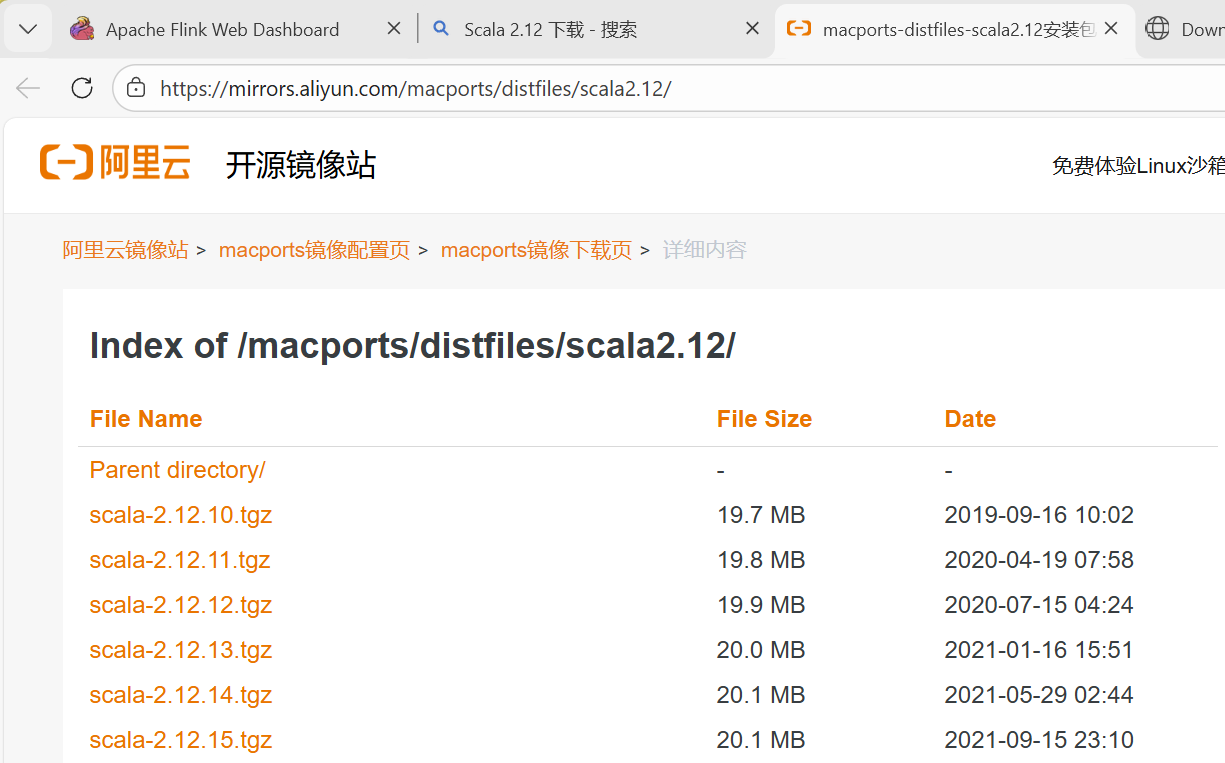

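(1) Download

If you do install it, version 2.12 is recommended (as of January 2026). Downloading from the official site requires a proxy from inside mainland China, but finding a domestic mirror is not hard: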

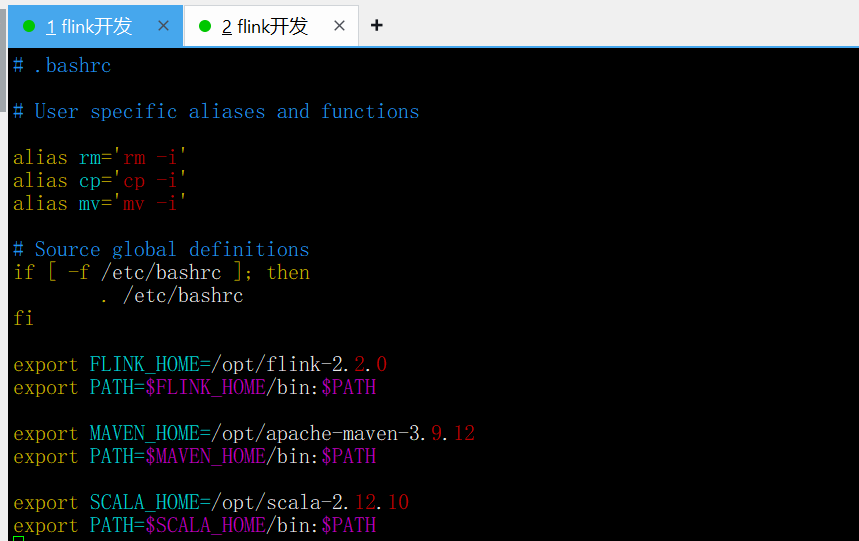

(2) Unpack

[root@localhost ~]# tar -zxf scala-2.12.10.tgz -C /opt

[root@localhost ~]# ls /opt

apache-maven-3.9.12 flink-2.2.0 scala-2.12.10

Likewise, set PATH in .bashrc.

After sourcing it, the scala command can be run directly:

[root@localhost ~]# scala -version

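Scala code runner version 2.12.10 -- Copyright 2002-2019, LAMP/EPFL and Lightbend, Inc.

4. Create a new project with Flink's official tooling

Both the mvn command and the quickstart script can create a Flink project, but downloading the quickstart script sometimes needs a proxy, so mvn is the easier choice:

(1) Create a Flink skeleton with mvn

Use the Maven command from the official site to create a project interactively, where:

- **groupId:** required. The organization name, usually derived from the organization's URL; for pighome.com, enter com.pighome.

- **artifactId:** required. The name of the project being created, for example helloFlink.

- **version:** optional. The default is shown before the colon in the interactive prompt; if left blank it is 1.0-SNAPSHOT.

- **package:** optional. Defaults to the groupId. Since a company rarely develops only one project, it is better to join groupId and artifactId, for example com.pighome.helloFlink.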

[root@localhost ~]# mvn archetype:generate -DarchetypeGroupId=org.apache.flink -DarchetypeArtifactId=flink-quickstart-java -DarchetypeVersion=2.2.0

[INFO] Scanning for projects...

Downloading from central: https://repo.maven.apache.org/maven2/org/codehaus/mojo/maven-metadata.xml

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-metadata.xml

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-metadata.xml (14 kB at 15 kB/s)

Downloaded from central: https://repo.maven.apache.org/maven2/org/codehaus/mojo/maven-metadata.xml (20 kB at 21 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-archetype-plugin/maven-metadata.xml

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-archetype-plugin/maven-metadata.xml (1.1 kB at 5.5 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-archetype-plugin/3.4.1/maven-archetype-plugin-3.4.1.pom

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-archetype-plugin/3.4.1/maven-archetype-plugin-3.4.1.pom (11 kB at 53 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/maven/archetype/maven-archetype/3.4.1/maven-archetype-3.4.1.pom

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/maven/archetype/maven-archetype/3.4.1/maven-archetype-3.4.1.pom (9.8 kB at 50 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/maven/maven-parent/45/maven-parent-45.pom

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/maven/maven-parent/45/maven-parent-45.pom (53 kB at 270 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/apache/35/apache-35.pom

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/apache/35/apache-35.pom (24 kB at 120 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/groovy/groovy-bom/4.0.28/groovy-bom-4.0.28.pom

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/groovy/groovy-bom/4.0.28/groovy-bom-4.0.28.pom (27 kB at 136 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/junit/junit-bom/5.13.1/junit-bom-5.13.1.pom

Downloaded from central: https://repo.maven.apache.org/maven2/org/junit/junit-bom/5.13.1/junit-bom-5.13.1.pom (5.6 kB at 28 kB/s)

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-archetype-plugin/3.4.1/maven-archetype-plugin-3.4.1.jar

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/maven/plugins/maven-archetype-plugin/3.4.1/maven-archetype-plugin-3.4.1.jar (100 kB at 263 kB/s)

[INFO]

[INFO] ------------------< org.apache.maven:standalone-pom >-------------------

[INFO] Building Maven Stub Project (No POM) 1

[INFO] --------------------------------[ pom ]---------------------------------

[INFO]

[INFO] >>> archetype:3.4.1:generate (default-cli) > generate-sources @ standalone-pom >>>

[INFO]

[INFO] <<< archetype:3.4.1:generate (default-cli) < generate-sources @ standalone-pom <<<

[INFO]

[INFO]

[INFO] --- archetype:3.4.1:generate (default-cli) @ standalone-pom ---

............

............

............

Downloaded from central: https://repo.maven.apache.org/maven2/com/github/luben/zstd-jni/1.5.7-4/zstd-jni-1.5.7-4.jar (7.4 MB at 1.6 MB/s)

Downloaded from central: https://repo.maven.apache.org/maven2/com/ibm/icu/icu4j/77.1/icu4j-77.1.jar (15 MB at 2.8 MB/s)

[INFO] Generating project in Interactive mode

Downloading from central: https://repo.maven.apache.org/maven2/archetype-catalog.xml

Downloaded from central: https://repo.maven.apache.org/maven2/archetype-catalog.xml (17 MB at 59 MB/s)

[INFO] Archetype repository not defined. Using the one from [org.apache.flink:flink-quickstart-java:2.2.0] found in catalog remote

Downloading from central: https://repo.maven.apache.org/maven2/org/apache/flink/flink-quickstart-java/2.2.0/flink-quickstart-java-2.2.0.jar

Downloaded from central: https://repo.maven.apache.org/maven2/org/apache/flink/flink-quickstart-java/2.2.0/flink-quickstart-java-2.2.0.jar (11 kB at 55 kB/s)

Define value for property 'groupId': com.pighome

Define value for property 'artifactId': helloFlink

Define value for property 'version' 1.0-SNAPSHOT: 1.0

Define value for property 'package' com.pighome: com.pighome.helloFlink

Confirm properties configuration:

groupId: com.pighome

artifactId: helloFlink

version: 1.0

package: com.pighome.helloFlink

Y: y

[INFO] ----------------------------------------------------------------------------

[INFO] Using following parameters for creating project from Archetype: flink-quickstart-java:2.2.0

[INFO] ----------------------------------------------------------------------------

[INFO] Parameter: groupId, Value: com.pighome

[INFO] Parameter: artifactId, Value: helloFlink

[INFO] Parameter: version, Value: 1.0

[INFO] Parameter: package, Value: com.pighome

[INFO] Parameter: packageInPathFormat, Value: com/pighome

[INFO] Parameter: package, Value: com.pighome

[INFO] Parameter: groupId, Value: com.pighome

[INFO] Parameter: artifactId, Value: helloFlink

[INFO] Parameter: version, Value: 1.0

[WARNING] CP Don't override file /root/helloFlink/src/main/resources

[INFO] Project created from Archetype in dir: /root/helloFlink

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 48.275 s

[INFO] Finished at: 2026-01-03T03:54:18-05:00

[INFO] ------------------------------------------------------------------------

[root@localhost ~]#
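The four answers typed at the prompts can also be passed as -D properties so the archetype runs without interaction. The sketch below only prints such a command rather than running it; the function name flink_archetype_cmd is hypothetical, and the default package (groupId joined with artifactId) follows the recommendation given earlier rather than Maven's own default.

```shell
#!/usr/bin/env bash
# Sketch only: flink_archetype_cmd is an illustrative helper that prints a command.

# Print a non-interactive archetype command for the given project coordinates.
flink_archetype_cmd() {
    local group_id="$1" artifact_id="$2"
    local version="${3:-1.0-SNAPSHOT}"
    # Assumption: default the package to groupId.artifactId, as suggested in the text
    local package="${4:-$group_id.$artifact_id}"
    printf '%s' 'mvn archetype:generate'
    printf ' %s' \
        '-DarchetypeGroupId=org.apache.flink' \
        '-DarchetypeArtifactId=flink-quickstart-java' \
        '-DarchetypeVersion=2.2.0' \
        "-DgroupId=$group_id" \
        "-DartifactId=$artifact_id" \
        "-Dversion=$version" \
        "-Dpackage=$package" \
        '-DinteractiveMode=false'
    printf '\n'
}

# Typical use: print the command, review it, then paste it into the shell.
#   flink_archetype_cmd com.pighome helloFlink 1.0
```

Printing first keeps the step reviewable, since running the archetype creates a directory named after the artifactId in the current directory.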

At this point, a folder named helloFlink exists in the directory where the command was run, with the following structure:

[root@localhost ~]# ls -lR helloFlink/

helloFlink/:

总用量 8

-rw-r--r-- 1 root root 6873 1月 3 04:13 pom.xml

drwxr-xr-x 3 root root 18 1月 3 04:13 src

helloFlink/src:

总用量 0

drwxr-xr-x 4 root root 35 1月 3 04:13 main

helloFlink/src/main:

总用量 0

drwxr-xr-x 3 root root 17 1月 3 04:13 java

drwxr-xr-x 2 root root 31 1月 3 04:13 resources

helloFlink/src/main/java:

总用量 0

drwxr-xr-x 3 root root 21 1月 3 04:13 com

helloFlink/src/main/java/com:

总用量 0

drwxr-xr-x 3 root root 24 1月 3 04:13 pighome

helloFlink/src/main/java/com/pighome:

总用量 0

drwxr-xr-x 2 root root 32 1月 3 04:13 helloFlink

helloFlink/src/main/java/com/pighome/helloFlink:

总用量 4

-rw-r--r-- 1 root root 2275 1月 3 04:13 DataStreamJob.java

helloFlink/src/main/resources:

总用量 4

-rw-r--r-- 1 root root 1226 1月 3 04:13 log4j2.properties

The source file is in helloFlink/src/main/java/com/pighome/helloFlink and is named DataStreamJob.java. Its source is:

package com.pighome.helloFlink;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* Skeleton for a Flink DataStream Job.

*

* <p>For a tutorial how to write a Flink application, check the

* tutorials and examples on the <a href="https://flink.apache.org">Flink Website</a>.

*

* <p>To package your application into a JAR file for execution, run

* 'mvn clean package' on the command line.

*

* <p>If you change the name of the main class (with the public static void main(String[] args))

* method, change the respective entry in the POM.xml file (simply search for 'mainClass').

*/

public class DataStreamJob {

public static void main(String[] args) throws Exception {

// Sets up the execution environment, which is the main entry point

// to building Flink applications.

final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

/*

* Here, you can start creating your execution plan for Flink.

*

* Start with getting some data from the environment, like

* env.fromSequence(1, 10);

*

* then, transform the resulting DataStream<Long> using operations

* like

* .filter()

* .flatMap()

* .window()

* .process()

*

* and many more.

* Have a look at the programming guide:

*

* https://nightlies.apache.org/flink/flink-docs-stable/

*

*/

// Execute program, beginning computation.

env.execute("Flink Java API Skeleton");

}

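}

Add a few lines to it for a hello-world effect. The new lines use DataStream, so the file also needs import org.apache.flink.streaming.api.datastream.DataStream; next to the existing import: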

public static void main(String[] args) throws Exception {

// Sets up the execution environment, which is the main entry point

// to building Flink applications.

final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

/*

* Here, you can start creating your execution plan for Flink.

*

* Start with getting some data from the environment, like

* env.fromSequence(1, 10);

*

* then, transform the resulting DataStream<Long> using operations

* like

* .filter()

* .flatMap()

* .window()

* .process()

*

* and many more.

* Have a look at the programming guide:

*

* https://nightlies.apache.org/flink/flink-docs-stable/

*

*/

String[] data = {"hello flink"};

DataStream<String> lines = env.fromElements(data);

lines.print();

// Execute program, beginning computation.

env.execute("Flink Java API Skeleton");

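}

(2) Build the project

Use mvn directly to verify and build the project. The main mvn commands and what they do:

**mvn clean**

Purpose: deletes the target directory and its contents (compiled files, logs, and so on)

When: clearing old files so they do not interfere with later builds

**mvn compile**

Purpose: compiles the sources under src/main/java into .class files in target/classes

When: quickly checking that the code compiles

**mvn test**

Purpose: runs the unit test classes under src/test/java

When: verifying that the code logic is correct

**mvn package**

Purpose: compile + test + package (produces a JAR/WAR file in the target directory)

When: producing a deployable package without installing it into the local repository

**mvn verify**

Purpose: runs every phase through package, then the checks bound to the verify phase, confirming the build is fit to install

When: comes after package in the lifecycle. mvn dependency:tree can be used separately to analyze the dependency tree

**mvn install**

Purpose: compile + test + package, then installs the resulting package into the local repository (.m2/repository)

When: providing local dependencies in a multi-module project

The ones used most are clean, package and install. For Flink programming, package is actually enough: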

[root@localhost helloFlink]# mvn package

[INFO] Scanning for projects...

[INFO]

[INFO] -----------------------< com.pighome:helloFlink >-----------------------

[INFO] Building Flink Quickstart Job 1.0

[INFO] from pom.xml

[INFO] --------------------------------[ jar ]---------------------------------

[INFO]

[INFO] --- resources:3.3.1:resources (default-resources) @ helloFlink ---

[INFO] Copying 1 resource from src/main/resources to target/classes

[INFO]

[INFO] --- compiler:3.1:compile (default-compile) @ helloFlink ---

[INFO] Nothing to compile - all classes are up to date

[INFO]

[INFO] --- resources:3.3.1:testResources (default-testResources) @ helloFlink ---

[INFO] skip non existing resourceDirectory /root/helloFlink/src/test/resources

[INFO]

[INFO] --- compiler:3.1:testCompile (default-testCompile) @ helloFlink ---

[INFO] No sources to compile

[INFO]

[INFO] --- surefire:3.2.5:test (default-test) @ helloFlink ---

[INFO] No tests to run.

[INFO]

[INFO] --- jar:3.4.1:jar (default-jar) @ helloFlink ---

[INFO]

[INFO] --- shade:3.1.1:shade (default) @ helloFlink ---

[INFO] Excluding org.slf4j:slf4j-api:jar:1.7.36 from the shaded jar.

[INFO] Excluding org.apache.logging.log4j:log4j-slf4j-impl:jar:2.24.3 from the shaded jar.

[INFO] Excluding org.apache.logging.log4j:log4j-api:jar:2.24.3 from the shaded jar.

[INFO] Excluding org.apache.logging.log4j:log4j-core:jar:2.24.3 from the shaded jar.

[INFO] Replacing original artifact with shaded artifact.

[INFO] Replacing /root/helloFlink/target/helloFlink-1.0.jar with /root/helloFlink/target/helloFlink-1.0-shaded.jar

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 1.359 s

[INFO] Finished at: 2026-01-03T04:55:08-05:00

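[INFO] ------------------------------------------------------------------------

During packaging, mvn downloads any missing dependencies from the remote repository on its own, so the network has to stay reachable. Once packaging succeeds, the built jar can be found in the target directory: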

[root@localhost helloFlink]# ls target

classes generated-sources helloFlink-1.0.jar maven-archiver maven-status original-helloFlink-1.0.jar

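[root@localhost helloFlink]#

5. Submit helloFlink

(1) Submit from the command line

The jar can also be submitted through the web UI, but that requires copying it off the machine first, so the command line is the simpler route: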

[root@localhost helloFlink]# flink run target/helloFlink-1.0.jar

Job has been submitted with JobID dbc0e474f17225ca2b8b6dbb7680d7c5

Program execution finished

Job with JobID dbc0e474f17225ca2b8b6dbb7680d7c5 has finished.

Job Runtime: 921 ms

(2) Check the result

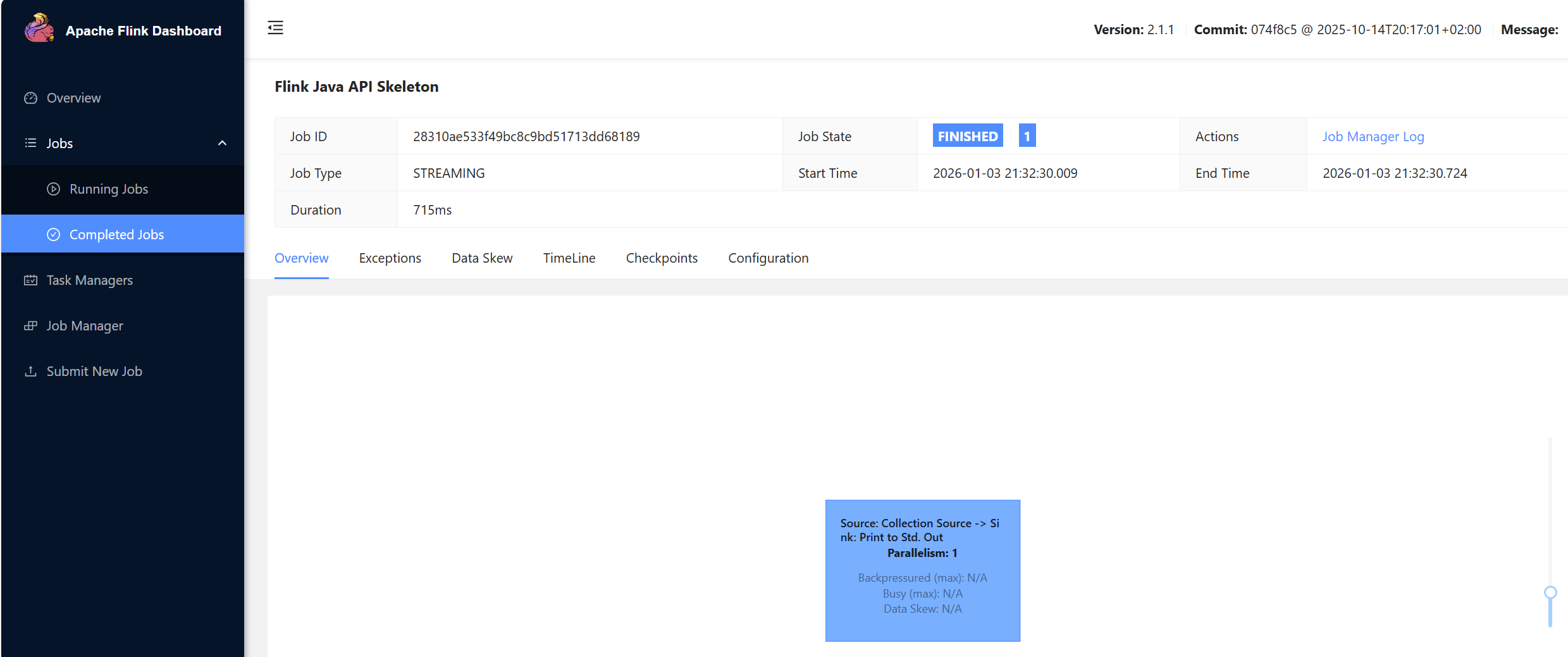

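The web management UI shows that the job has finished:

Clicking into the job shows that the execution plan of this example is extremely simple: it goes straight from source to sink, because nothing is processed in between. Still, this source -> sink pair happens to show the basic skeleton of a Flink program, which is covered later.

Check the .out output: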

[root@localhost helloFlink]# tail /opt/flink-2.2.0/log/flink-*.out

==> /opt/flink-2.2.0/log/flink-root-standalonesession-0-localhost.localdomain.out <==

==> /opt/flink-2.2.0/log/flink-root-taskexecutor-0-localhost.localdomain.out <==

hello flink

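[root@localhost helloFlink]#

IV. A brief look at the Flink program structure

The related articles on Zhihu are a useful reference. In short, Flink provides the StreamExecutionEnvironment class, which encapsulates the processing flow (both batch and stream processing): a source reads data into a DataStream, operations such as map and filter are applied on the DataStream, and a sink outputs the processed result.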
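To make the source -> transformation -> sink shape concrete without pulling in any Flink dependency, here is a JDK-only analogy built on java.util.stream. It is not Flink API: Stream.of stands in for a source such as env.fromElements, map and filter stand in for the DataStream operations of the same names, and collecting into a list stands in for a sink such as print(). The class name PipelineShape and the sample records are made up for this sketch.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** JDK-only analogy of the source -> transformation -> sink shape; this is not Flink API. */
final class PipelineShape {
    static List<String> run(String... lines) {
        return Stream.of(lines)                      // "source": where the records come from
                .map(String::toUpperCase)            // "transformation": rewrite each record
                .filter(s -> s.contains("FLINK"))    // "transformation": drop unwanted records
                .collect(Collectors.toList());       // "sink": where the results end up
    }

    public static void main(String[] args) {
        System.out.println(run("hello flink", "hello kafka")); // prints [HELLO FLINK]
    }
}
```

The important difference is that a java.util.stream pipeline runs once inside a single JVM, whereas a Flink job describes the same shape as a plan that the cluster then executes across TaskManagers, possibly on an unbounded stream.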

1. source

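Flink ships with a set of commonly used sources that can be called directly on the env object, including but not limited to:

- env.readTextFile(): reads from a text file

- env.socketTextStream(): reads lines of text as a stream from a socket (host and port)

- env.fromCollection(): reads from a Java collection such as a List or Set; unlike fromElements, it takes an existing collection object, which suits more complex structures such as nested lists or custom objects

- env.fromElements(): reads from elements passed directly as arguments; the few lines of code we added earlier use exactly this source

- env.generateSequence(): only generates a stream of sequential numbers (the template comment shown earlier uses env.fromSequence(1, 10) for the same purpose)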

2. sink

Commonly used sinks include but are not limited to:

- writeAsText

- writeAsCsv

- writeToSocket

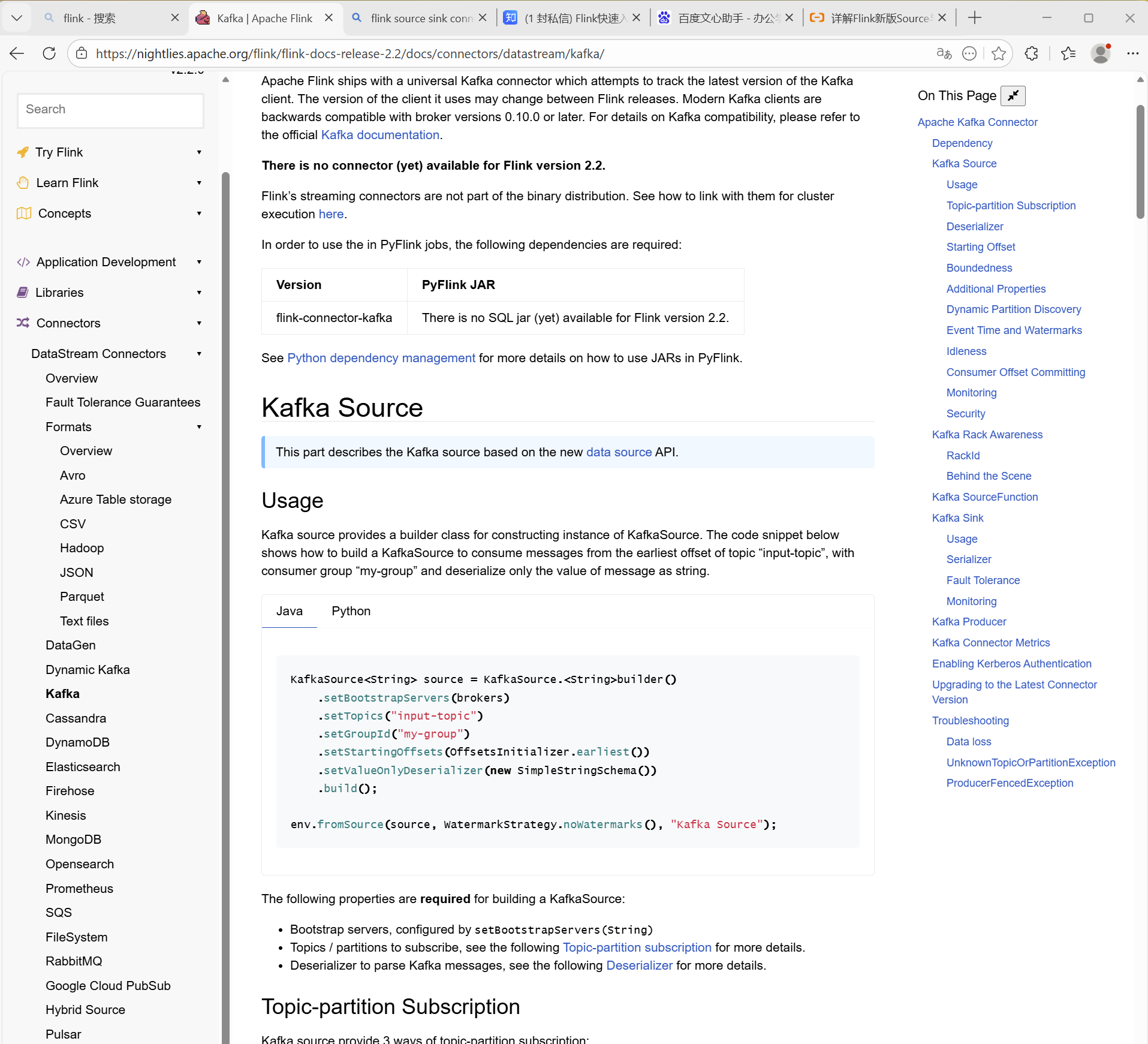

3. connector

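Beyond the built-in sources and sinks, Flink also supports custom sources and sinks by implementing the corresponding interfaces. In addition, Flink provides connectors that integrate widely used file formats, transport protocols, and data processing/storage products into the source/sink interfaces; the official site covers them with brief examples.

V. Flink cluster deployment

Flink can be deployed locally, standalone (as a cluster), or on YARN, K8s, Mesos, and so on. Here local mode is used only for debugging code, while the production environment uses Flink's standalone mode for cluster deployment. In standalone mode, Flink is deployed on its own across multiple machines, and Flink itself coordinates the cluster resources through the JobManager and the TaskManagers, with support for resource isolation and load balancing. So the deployment only needs to make clear which nodes take the JobManager role and which take the TaskManager role.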

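1. Pull the image

Pulling directly from Docker Hub requires a proxy, so pulling from a mirror inside China is simpler:

(1) Pull

The simplest, zero-configuration way: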

[root@node2 ~]# docker pull docker.1ms.run/flink:latest

latest: Pulling from flink

7e49dc6156b0: Pull complete

7e27b670a0f5: Pull complete

070c1638c21b: Pull complete

4e292c31f904: Pull complete

b5e329fb7a0e: Pull complete

986387ee1379: Pull complete

027bb93edc85: Pull complete

838c5bac3671: Pull complete

f43a41ca5395: Pull complete

a682e6da4f2a: Pull complete

fc310bac846b: Pull complete

Digest: sha256:4ac9d0123bb77d1d9338545760883692fff3735b20d13abcda70e98904020034

Status: Downloaded newer image for docker.1ms.run/flink:latest

docker.1ms.run/flink:latest

[root@node2 ~]#

(2) Tag

Add a tag to shorten the image name:

[root@node2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.1ms.run/apache/flink 2.2 8bda0ea75a45 4 weeks ago 883MB

docker.1ms.run/flink latest 729e7f014aea 7 weeks ago 883MB

clickhouse/clickhouse-server head-alpine b6ed8f160e84 7 weeks ago 718MB

clickhouse/clickhouse-keeper head-alpine 125d5acd6e3b 7 weeks ago 488MB

bitnami/kafka latest 43ac8cf6ca80 5 months ago 454MB

docker.m.daocloud.io/bitnami/kafka latest 43ac8cf6ca80 5 months ago 454MB

[root@node2 ~]# docker image tag docker.1ms.run/flink:latest flink:latest

[root@node2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.1ms.run/apache/flink 2.2 8bda0ea75a45 4 weeks ago 883MB

docker.1ms.run/flink latest 729e7f014aea 7 weeks ago 883MB

flink latest 729e7f014aea 7 weeks ago 883MB

clickhouse/clickhouse-server head-alpine b6ed8f160e84 7 weeks ago 718MB

clickhouse/clickhouse-keeper head-alpine 125d5acd6e3b 7 weeks ago 488MB

bitnami/kafka latest 43ac8cf6ca80 5 months ago 454MB

docker.m.daocloud.io/bitnami/kafka latest 43ac8cf6ca80 5 months ago 454MB

[root@node2 ~]#

(3) Export the image and distribute it to all nodes

[root@node1 ~]# docker image save -o flink.tar flink:latest

[root@node1 ~]# for i in {2..6};do scp flink.tar node$i:~/.;done

flink.tar 100% 848MB 187.6MB/s 00:04

flink.tar 100% 848MB 177.9MB/s 00:04

flink.tar 100% 848MB 64.2MB/s 00:13

flink.tar

(4) Load the image on each node

[root@node1 ~]# docker image load -i flink.tar

73974f74b436: Loading layer [==================================================>] 80.42MB/80.42MB

fa85cf75d7a0: Loading layer [==================================================>] 45.49MB/45.49MB

7da15da61ae6: Loading layer [==================================================>] 140.8MB/140.8MB

61cd51038a8c: Loading layer [==================================================>] 2.56kB/2.56kB

5c41ef53b2b3: Loading layer [==================================================>] 7.168kB/7.168kB

94ca437cbc08: Loading layer [==================================================>] 3.998MB/3.998MB

e0233dcfd669: Loading layer [==================================================>] 2.3MB/2.3MB

2484442f7571: Loading layer [==================================================>] 3.254MB/3.254MB

ee6467243015: Loading layer [==================================================>] 2.048kB/2.048kB

e8585c14f08e: Loading layer [==================================================>] 612.8MB/612.8MB

e8feaabfa78f: Loading layer [==================================================>] 7.68kB/7.68kB

Loaded image: flink:latest

[root@node1 ~]#

2. Start with Portainer

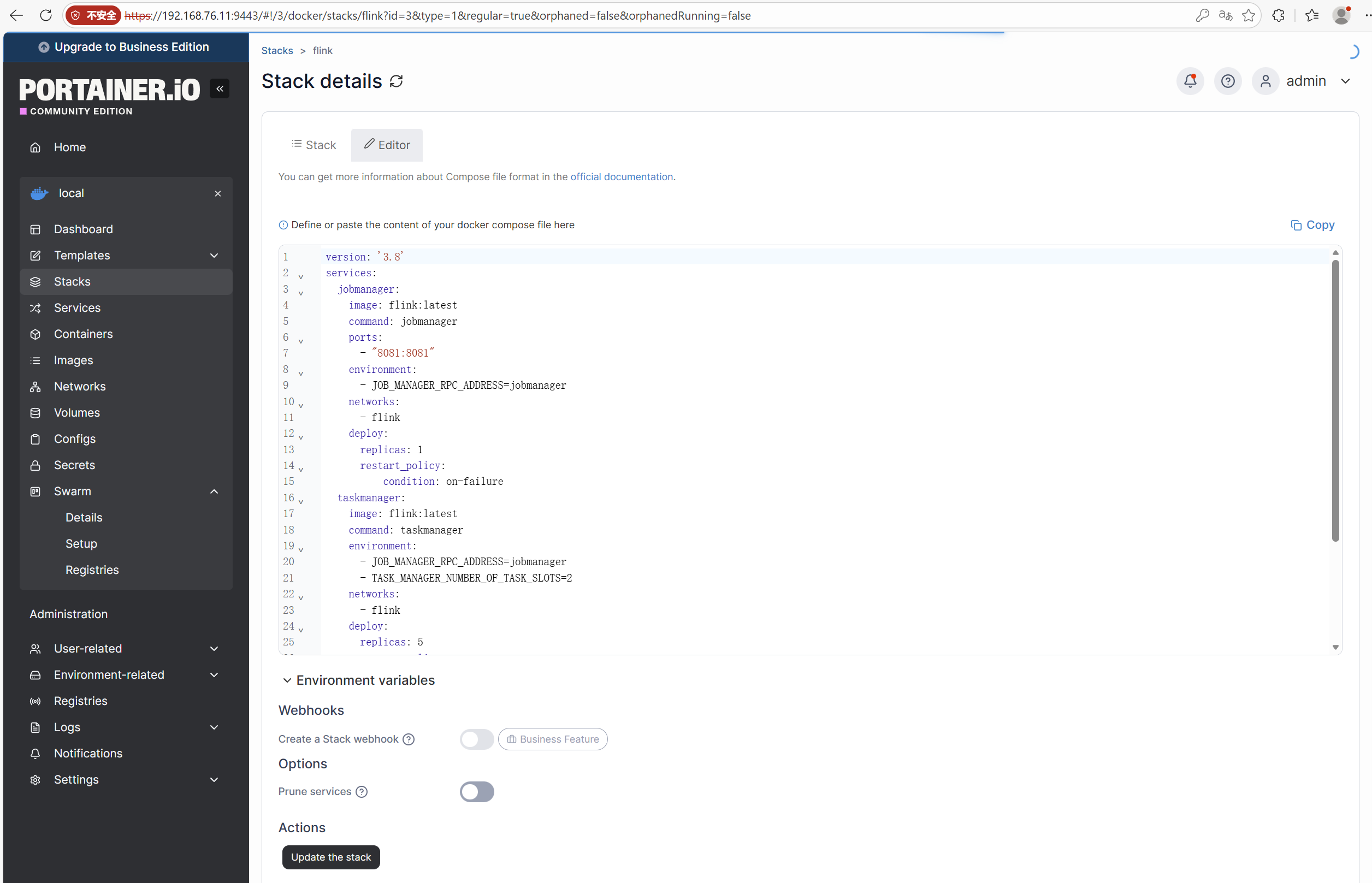

(1) The yml file:

version: '3.8'
services:
  jobmanager:
    image: flink:latest
    command: jobmanager
    ports:
      - "8081:8081"
    environment:
      - JOB_MANAGER_RPC_ADDRESS=jobmanager
    networks:
      - flink
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
  taskmanager:
    image: flink:latest
    command: taskmanager
    environment:
      - JOB_MANAGER_RPC_ADDRESS=jobmanager
      - TASK_MANAGER_NUMBER_OF_TASK_SLOTS=2
    networks:
      - flink
    deploy:
      replicas: 5
      restart_policy:
        condition: on-failure
    depends_on:
      - jobmanager
networks:
  flink:
    driver: overlay
    attachable: true

(2) Start

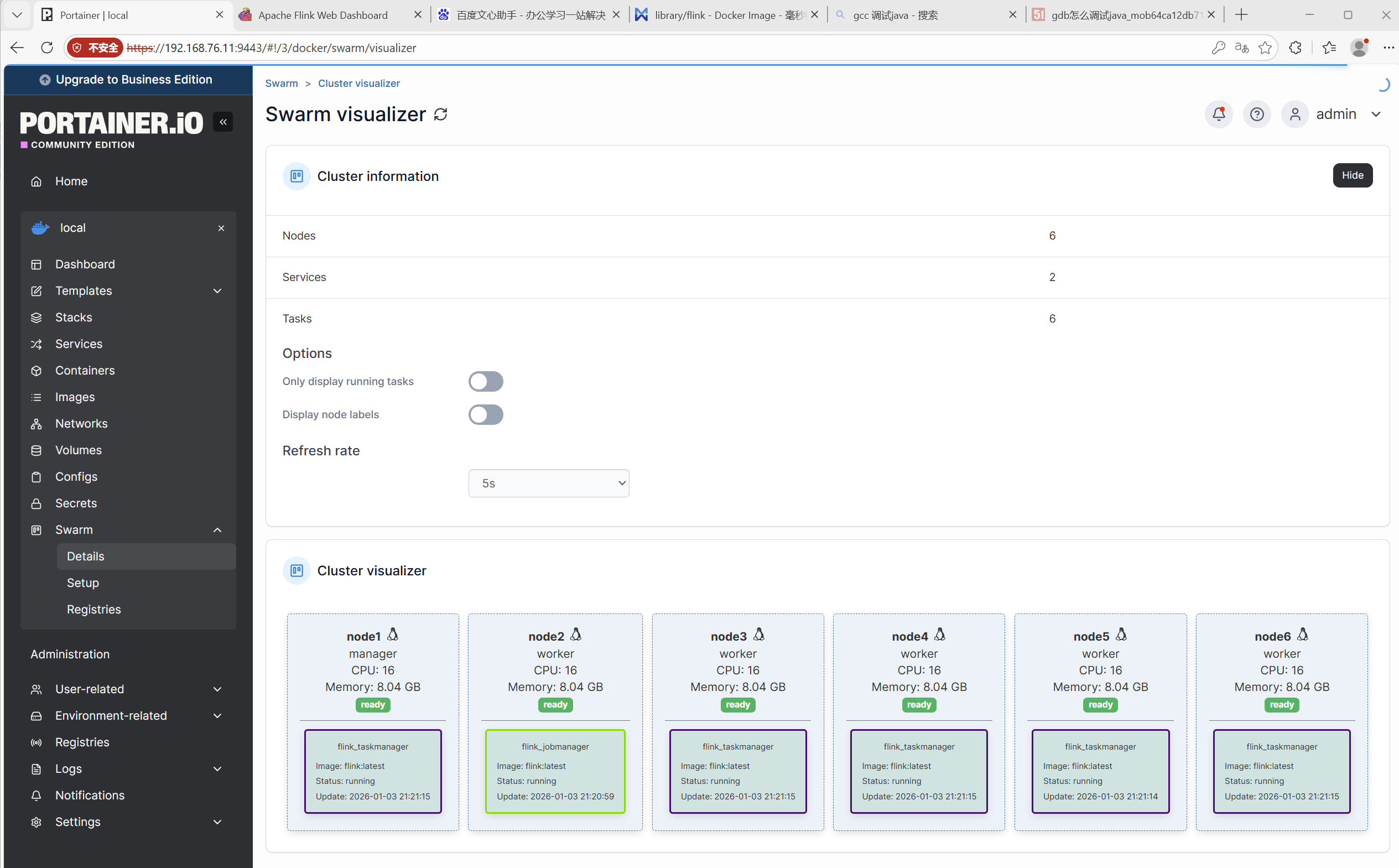

After startup, Portainer shows how the containers are distributed across the nodes

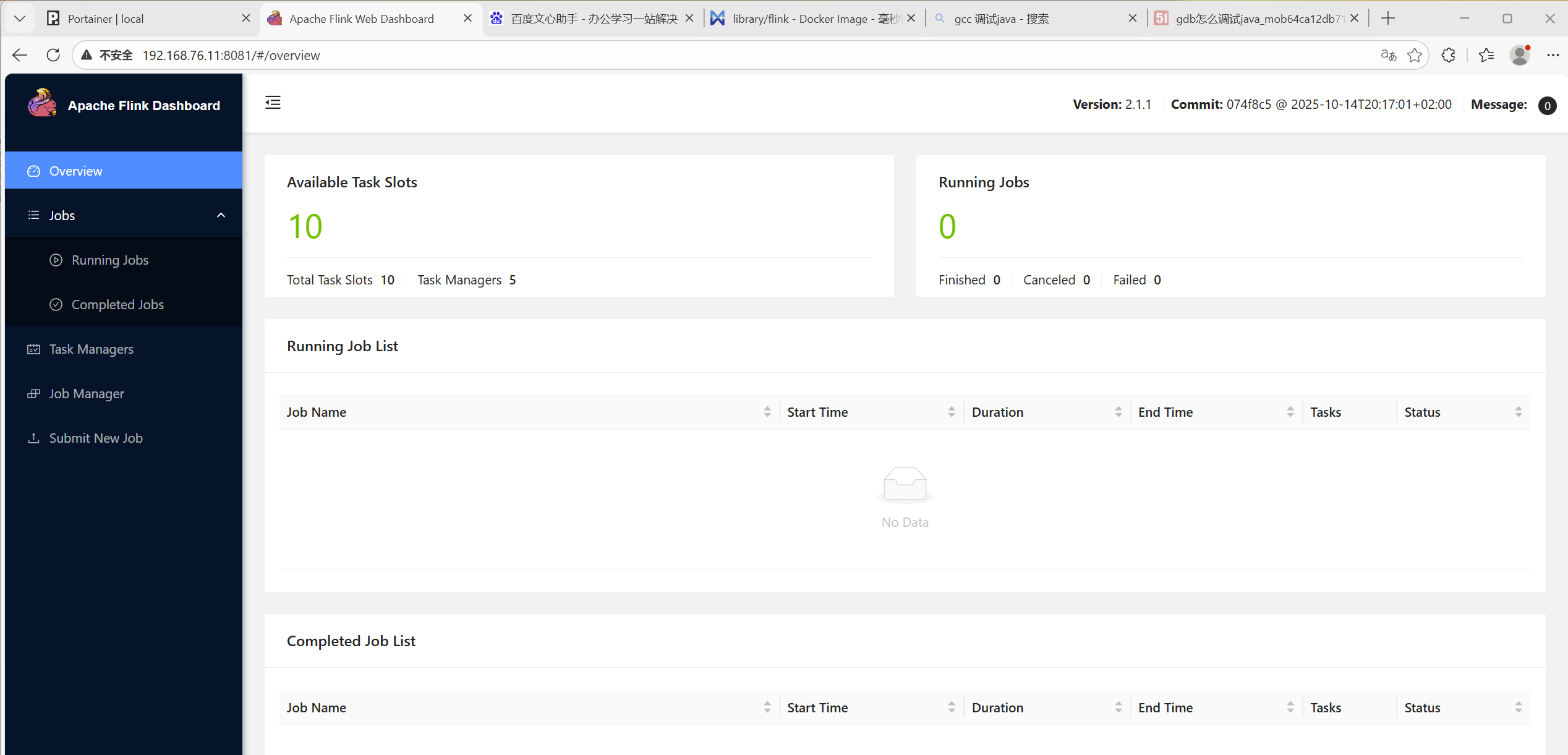

Open port 8081 exposed by the jobmanager node:

As the figure shows, we started 5 worker nodes (taskmanager replicas = 5), and each node offers 2 task slots (- TASK_MANAGER_NUMBER_OF_TASK_SLOTS=2), so there are 10 slots in total.

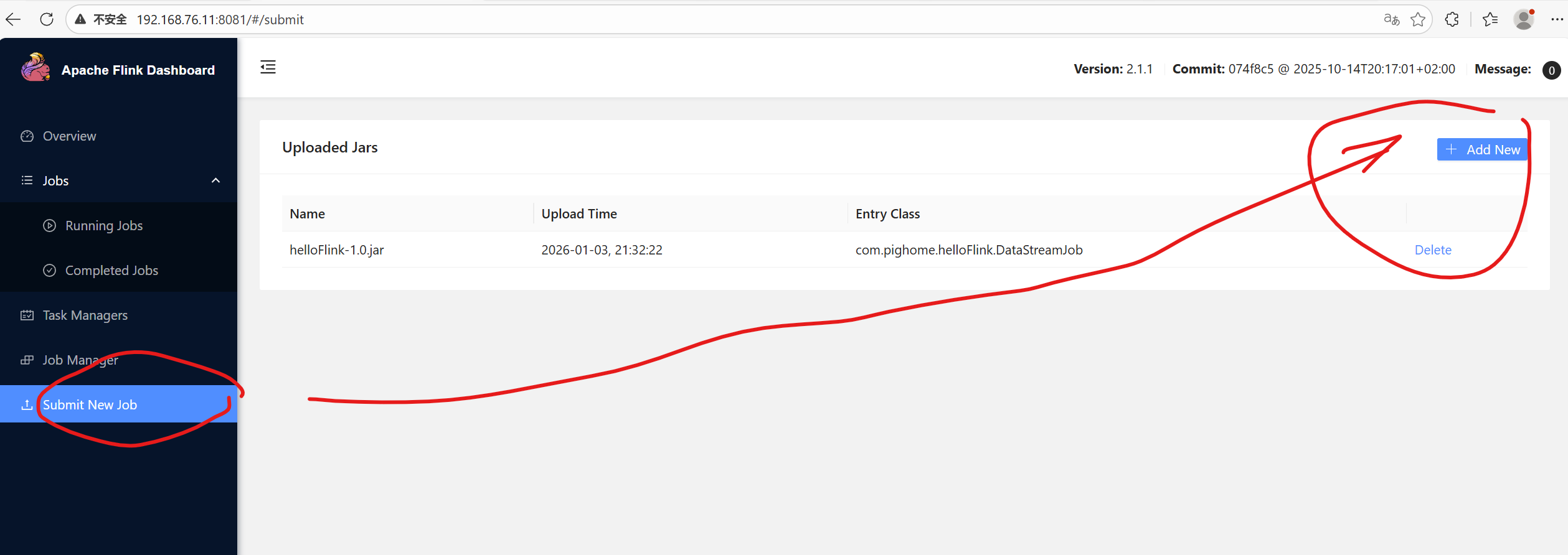
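The slot count follows directly from the two numbers in the stack file, and it bounds how wide a job can run. The sketch below writes that arithmetic down. The class and method names are made up for illustration, and canRun assumes Flink's default slot sharing, under which a job needs as many slots as its highest operator parallelism; treat that as an assumption to check against your own setup rather than a guarantee.

```java
/** Slot arithmetic for a standalone cluster in which every TaskManager offers the same slot count. */
final class SlotMath {
    /** Total slots = number of TaskManagers x slots per TaskManager. */
    static int totalSlots(int taskManagers, int slotsPerTaskManager) {
        return taskManagers * slotsPerTaskManager;
    }

    /** Assumes default slot sharing: the job needs one slot per unit of its highest parallelism. */
    static boolean canRun(int maxOperatorParallelism, int totalSlots) {
        return maxOperatorParallelism <= totalSlots;
    }

    public static void main(String[] args) {
        // Values from the stack file: replicas: 5 and TASK_MANAGER_NUMBER_OF_TASK_SLOTS=2.
        int slots = totalSlots(5, 2);
        System.out.println(slots);             // prints 10
        System.out.println(canRun(12, slots)); // prints false
    }
}
```

To get more room, either raise replicas or raise the slots per TaskManager; both enter the product in the same way.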

3. Submit a job

(1) Copy out the jar

PS D:\> scp root@192.168.76.21:/root/helloFlink/target/helloFlink-1.0.jar .

root@192.168.76.21's password:

helloFlink-1.0.jar 100% 5262 1.7MB/s 00:00

PS D:\>

(2) Submit

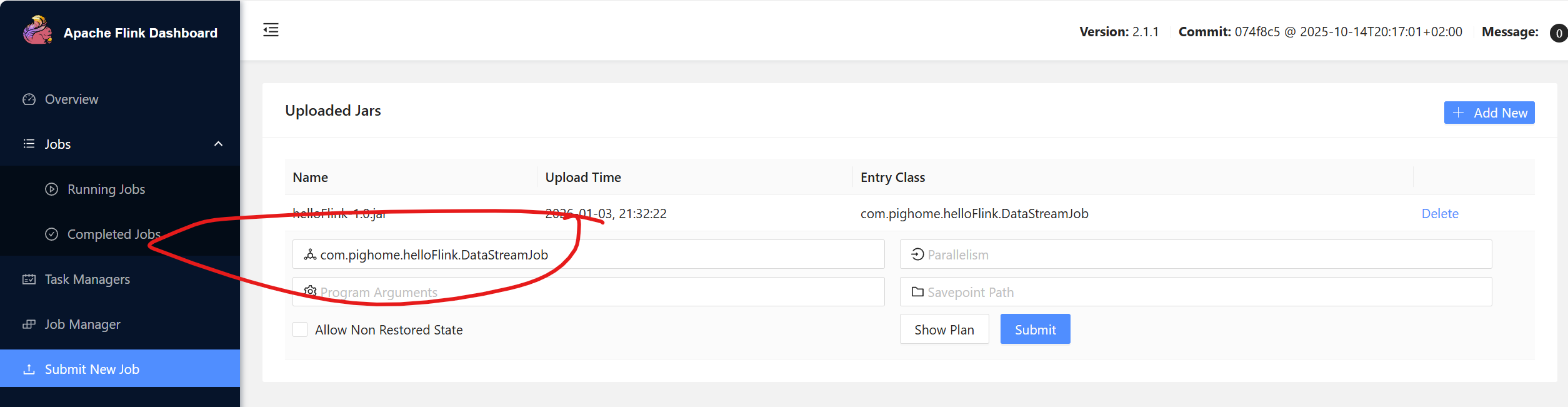

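In general, with a correctly built jar the entry point containing the main function is detected automatically

After submission, the finished job shows up just as before, and clicking into it shows its execution plan:

(3) Check the result

In cluster mode, however, the output of print on the DataStream object (the .out file seen in local mode) no longer appears the way it did locally. The reason is probably the one given in answer 1 of the referenced post, quoted below: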

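When Flink runs on Docker, the docker-entrypoint.sh script starts the Flink processes (TaskExecutor and JobMaster) in the foreground. The effect is that Flink neither redirects its STDOUT to a file nor writes its logs to a file; instead, it also logs to STDOUT. This way, you can view the container's logs and standard output through docker logs.

If you want to change this behavior, it should be enough to edit docker-entrypoint.sh and pass start instead of start-foreground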

if [ "${CMD}" == "${TASK_MANAGER}" ]; then

$FLINK_HOME/bin/taskmanager.sh start "$@"

else

$FLINK_HOME/bin/standalone-job.sh start "$@"

fi

sleep 1

exec /bin/bash -c "tail -f $FLINK_HOME/log/*.log"

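Update

When using Flink's DataSet API, calling DataSet::print actually retrieves the corresponding DataSet from the cluster back to the client and then prints it to STDOUT. Because of that retrieval, this method only works when the job is submitted through Flink's CLI client with bin/flink run <job.jar>. This differs from DataStream::print, which prints the DataStream on the TaskManagers that execute the program.

If you want to print DataSet results on the TaskManagers, you need to call DataSet::printOnTaskManager instead of print. That said, whichever DataSet print variant is used, these are usages from fairly old versions; newer versions should presumably print through a sink, which is best left until the framework is more familiar.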