1. Introduction

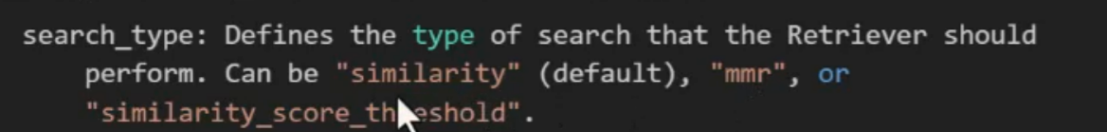

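In a RAG system, the code that queries a vector database for the most similar vectors looks like this:

```python
# vector_store is an existing LangChain vector store built earlier in the RAG pipeline
search_kwargs = {"k": 4, "fetch_k": 20}  # illustrative values: return 4 results picked from 20 MMR candidates
retriever = vector_store.as_retriever(
    search_type="mmr",
    search_kwargs=search_kwargs
)
```

Note the `search_type` argument. In LangChain's `as_retriever`, it accepts `"similarity"` (the default), `"mmr"` (maximal marginal relevance), or `"similarity_score_threshold"`.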

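In artificial intelligence and machine learning, vector databases have become a core technology for handling high-dimensional data and performing efficient similarity search. With the rise of large language models and retrieval-augmented generation (RAG) systems, understanding how vectors are compared is essential for building intelligent retrieval systems. This article is a comprehensive guide for AI learners to similarity search in vector databases, covering everything from basic similarity measures to advanced search algorithms. It explains the principles, strengths and weaknesses, and use cases of cosine similarity, dot product similarity, Euclidean distance, Manhattan distance, HNSW, and MMR, and provides practical Python code examples.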

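2. Basic Similarity Measures

2.1 Cosine Similarity (COS)

2.1.1 How It Works

Cosine similarity measures how similar two vectors are by the cosine of the angle between them; the key idea is to look at the direction of the vectors rather than their magnitude. Based on the vector space model, it quantifies how closely two vectors point in the same direction.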

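Formula:

$$\cos(\theta) = \frac{A \cdot B}{\|A\| \times \|B\|}$$

where:

- $A \cdot B$ is the dot product of vectors $A$ and $B$
- $\|A\|$ and $\|B\|$ are the magnitudes (Euclidean norms) of $A$ and $B$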

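Computation steps:

- Compute the dot product of the two vectors
- Compute the magnitude of each vector
- Divide the dot product by the product of the two magnitudes
- The result lies in [-1, 1]: 1 means the vectors point in the same direction, 0 means they are orthogonal (unrelated), and -1 means they point in opposite directions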
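The three steps above can be traced in a few lines of NumPy. This is a minimal sketch (the helper name `cosine_step_by_step` is our own); it raises an error for a zero vector, where the formula is undefined, and the three calls show the boundary values 1, 0, and -1, up to floating-point rounding.

```python
import numpy as np


def cosine_step_by_step(a, b):
    """Cosine similarity following the steps: dot product, two norms, divide."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    dot = float(np.dot(a, b))          # step 1: A·B
    norm_a = float(np.linalg.norm(a))  # step 2: ||A||
    norm_b = float(np.linalg.norm(b))  #         ||B||
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)     # step 3: divide by the product of the norms


print(cosine_step_by_step([1, 2, 3], [2, 4, 6]))  # same direction, ≈ 1.0
print(cosine_step_by_step([1, 0], [0, 1]))        # orthogonal, 0.0
print(cosine_step_by_step([1, 2], [-1, -2]))      # opposite direction, ≈ -1.0
```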

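2.1.2 Strengths and Weaknesses

Strengths:

- Strong on text embeddings: very effective at measuring the semantic similarity of texts, because it captures the direction of meaning
- Length invariance: insensitive to vector length, which suits embeddings of documents of different lengths
- Bounded output: scores lie in [-1, 1], which makes them easy to interpret and compare
- Broad applicability: extensively validated and widely used in natural language processing

Weaknesses:

- Computational cost: the vector norms must be computed, adding overhead compared with a plain dot product
- Loss of magnitude information: vector length is ignored entirely, which can discard important information in some scenarios
- Normalization overhead: for unnormalized vectors the norms must be recomputed for every comparison, so many systems normalize vectors once in advance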
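Two of these points are easy to check numerically: cosine similarity ignores magnitude entirely, and the normalization cost can be paid once at indexing time, after which cosine similarity is just a dot product. A minimal sketch with random data (the `cosine` helper is illustrative and assumes non-zero vectors):

```python
import numpy as np


def cosine(a, b):
    """Plain cosine similarity (assumes non-zero vectors)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


small = np.array([1.0, 1.0])
large = np.array([100.0, 100.0])
# Magnitude is ignored: a vector 100x longer in the same direction still scores ≈ 1.0
print(cosine(small, large))

# Pay the normalization cost once: after L2-normalizing the stored vectors and the
# query, cosine similarity reduces to a plain dot product
rng = np.random.default_rng(0)
docs = rng.normal(size=(5, 8))
query = rng.normal(size=8)
docs_unit = docs / np.linalg.norm(docs, axis=1, keepdims=True)
query_unit = query / np.linalg.norm(query)
via_dot = docs_unit @ query_unit
via_cos = np.array([cosine(d, query) for d in docs])
print(np.allclose(via_dot, via_cos))  # True
```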

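2.1.3 Use Cases

Cosine similarity is particularly suitable for:

- Semantic text search and document similarity
- Content-based recommender systems
- Comparing embeddings in natural language processing tasks
- Any scenario where direction matters and magnitude should be ignored

2.1.4 Python Code Example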

```python
import time
from typing import Callable, List, Tuple, Union

import numpy as np


class CosineSimilarityCalculator:
    """Cosine similarity computation with an optimized batch path"""

    def __init__(self, normalize: bool = True, use_optimized: bool = False):
        """
        Initialize the cosine similarity calculator.

        Args:
            normalize: whether to L2-normalize vectors before computing
            use_optimized: whether to use the optimized computation path
        """
        self.normalize = normalize
        self.use_optimized = use_optimized

    def basic_compute(self, vec1: np.ndarray, vec2: np.ndarray) -> float:
        """Basic computation: dot product divided by the product of the norms"""
        dot_product = np.dot(vec1, vec2)
        norm1 = np.linalg.norm(vec1)
        norm2 = np.linalg.norm(vec2)
        if norm1 == 0 or norm2 == 0:
            return 0.0  # undefined for zero vectors; return 0 by convention
        return float(dot_product / (norm1 * norm2))

    def optimized_compute(self, vec1: np.ndarray, vec2: np.ndarray) -> float:
        """Optimized computation (reuses precomputed norms when available)"""
        # Use precomputed norms if they were set for a batch computation
        if not hasattr(self, 'precomputed_norms'):
            norm1 = np.linalg.norm(vec1)
            norm2 = np.linalg.norm(vec2)
        else:
            norm1, norm2 = self.precomputed_norms
        if norm1 == 0 or norm2 == 0:
            return 0.0
        dot_product = np.dot(vec1, vec2)
        return float(dot_product / (norm1 * norm2))

    def batch_compute(self, query: np.ndarray,
                      vectors: np.ndarray,
                      batch_size: int = 1000) -> np.ndarray:
        """
        Compute cosine similarities in batches.

        Args:
            query: query vector
            vectors: matrix of vectors (one per row)
            batch_size: number of rows per batch

        Returns:
            Array of similarities
        """
        n_vectors = vectors.shape[0]
        similarities = np.zeros(n_vectors)

        # Normalize the query vector once
        if self.normalize:
            query_norm = np.linalg.norm(query)
            if query_norm > 0:
                query = query / query_norm

        # Process in batches to limit memory usage
        for i in range(0, n_vectors, batch_size):
            end_idx = min(i + batch_size, n_vectors)
            batch_vectors = vectors[i:end_idx]

            if self.normalize:
                # Normalize the batch (zero rows stay zero)
                batch_norms = np.linalg.norm(batch_vectors, axis=1, keepdims=True)
                batch_norms[batch_norms == 0] = 1.0
                batch_vectors_norm = batch_vectors / batch_norms
                # For unit vectors, cosine similarity is just the dot product
                batch_similarities = np.dot(batch_vectors_norm, query)
            else:
                # Dot products
                batch_dots = np.dot(batch_vectors, query)
                # Norms
                query_norm = np.linalg.norm(query)
                batch_norms = np.linalg.norm(batch_vectors, axis=1)
                # Avoid division by zero
                valid_mask = (query_norm > 0) & (batch_norms > 0)
                batch_similarities = np.zeros(batch_vectors.shape[0])
                batch_similarities[valid_mask] = batch_dots[valid_mask] / (query_norm * batch_norms[valid_mask])

            similarities[i:end_idx] = batch_similarities

        return similarities

    def search_top_k(self, query: np.ndarray,
                     vectors: np.ndarray,
                     k: int = 10,
                     return_scores: bool = True) -> Union[Tuple[np.ndarray, np.ndarray], np.ndarray]:
        """
        Find the k most similar vectors.

        Args:
            query: query vector
            vectors: matrix of vectors
            k: number of results
            return_scores: whether to return the similarity scores

        Returns:
            (indices, [scores])
        """
        # Compute all similarities
        similarities = self.batch_compute(query, vectors)
        # Indices of the top-k, highest first
        top_k = min(k, len(similarities))
        top_indices = np.argsort(similarities)[::-1][:top_k]
        if return_scores:
            top_scores = similarities[top_indices]
            return top_indices, top_scores
        return top_indices

    def evaluate_performance(self, dim: int = 512,
                             n_vectors: int = 10000,
                             n_queries: int = 100) -> dict:
        """
        Benchmark the batch computation.

        Args:
            dim: vector dimension
            n_vectors: number of vectors
            n_queries: number of queries

        Returns:
            Dictionary of performance metrics
        """
        # Generate test data
        np.random.seed(42)
        vectors = np.random.randn(n_vectors, dim)
        queries = np.random.randn(n_queries, dim)
        results = {}

        # Time the batch computation
        start_time = time.perf_counter()
        for i in range(n_queries):
            _ = self.batch_compute(queries[i], vectors)
        batch_time = time.perf_counter() - start_time

        results['batch_time'] = batch_time
        results['avg_query_time'] = batch_time / n_queries
        # Vectors scanned per second by a single query
        results['vectors_per_second'] = n_vectors / (batch_time / n_queries)
        return results


# Comprehensive usage example
def cosine_similarity_comprehensive_demo():
    """Comprehensive cosine similarity demo"""
    print("=" * 60)
    print("Cosine similarity: comprehensive example")
    print("=" * 60)

    # 1. Basic computation
    print("\n1. Basic computation:")
    vec1 = np.array([1, 2, 3])
    vec2 = np.array([2, 4, 6])  # same direction as vec1, different length
    calculator = CosineSimilarityCalculator(normalize=True)
    similarity = calculator.basic_compute(vec1, vec2)
    print(f"Vector 1: {vec1}")
    print(f"Vector 2: {vec2}")
    print(f"Cosine similarity: {similarity:.4f}")
    print("Note: vector 2 is twice vector 1 and points in the same direction, so the similarity is 1.0")

    # 2. Text similarity
    print("\n2. Text similarity:")
    # Simulated document embeddings (in practice these come from a model such as BERT)
    documents = [
        "Machine learning is an important branch of artificial intelligence",
        "Deep learning has driven the rapid development of AI",
        "Python is the main programming language for data science",
        "Vector databases enable efficient similarity search",
        "Natural language processing helps computers understand human language",
    ]
    # Simulated document vectors (simplified 5-dimensional example)
    doc_vectors = np.array([
        [0.8, 0.2, 0.1, 0.3, 0.1],  # doc 1: machine learning
        [0.7, 0.3, 0.2, 0.4, 0.2],  # doc 2: deep learning
        [0.1, 0.9, 0.8, 0.2, 0.7],  # doc 3: Python
        [0.4, 0.3, 0.9, 0.1, 0.2],  # doc 4: vector databases
        [0.6, 0.4, 0.3, 0.8, 0.9],  # doc 5: natural language processing
    ])
    query_text = "AI and machine learning techniques"
    query_vector = np.array([0.9, 0.1, 0.1, 0.3, 0.1])  # query vector

    # Compute similarities
    similarities = calculator.batch_compute(query_vector, doc_vectors)
    print(f"Query: '{query_text}'")
    print("Documents ranked by similarity:")
    sorted_indices = np.argsort(similarities)[::-1]
    for i, idx in enumerate(sorted_indices):
        print(f"  {i+1}. Doc {idx+1}: '{documents[idx][:40]}...' "
              f"similarity: {similarities[idx]:.4f}")

    # 3. Top-k search
    print("\n3. Top-k search:")
    top_indices, top_scores = calculator.search_top_k(query_vector, doc_vectors, k=3)
    print("Top-3 most similar documents:")
    for i, (idx, score) in enumerate(zip(top_indices, top_scores)):
        print(f"  {i+1}. Doc {idx+1}: '{documents[idx][:40]}...' "
              f"score: {score:.4f}")

    # 4. Performance evaluation
    print("\n4. Performance evaluation:")
    perf_results = calculator.evaluate_performance(dim=384, n_vectors=10000, n_queries=10)
    print("Test setup: dim=384, vectors=10000, queries=10")
    print(f"Total time: {perf_results['batch_time']:.2f} s")
    print(f"Average query time: {perf_results['avg_query_time']*1000:.2f} ms")
    print(f"Vectors processed per second: {perf_results['vectors_per_second']:.0f}")

    # 5. Practical application: a simple search engine
    print("\n5. Practical application: a cosine-similarity search engine")

    class SimpleVectorSearchEngine:
        """A simple vector search engine"""

        def __init__(self, dimension: int):
            self.dimension = dimension
            self.vectors = []
            self.documents = []
            self.calculator = CosineSimilarityCalculator(normalize=True)

        def add_document(self, text: str, vector: np.ndarray):
            """Add a document to the engine"""
            if len(vector) != self.dimension:
                raise ValueError(f"Expected dimension {self.dimension}, got {len(vector)}")
            self.documents.append(text)
            self.vectors.append(vector)

        def search(self, query_vector: np.ndarray, top_k: int = 5) -> List[Tuple[str, float]]:
            """Search for similar documents"""
            if len(self.vectors) == 0:
                return []
            vectors_array = np.array(self.vectors)
            indices, scores = self.calculator.search_top_k(query_vector, vectors_array, top_k)
            return [(self.documents[idx], score) for idx, score in zip(indices, scores)]

        def build_from_corpus(self, corpus: List[str], embed_fn: Callable[[str], np.ndarray]):
            """Build the search index from a text corpus"""
            print(f"Processing {len(corpus)} documents...")
            for i, text in enumerate(corpus):
                # embed_fn would normally call an embedding model;
                # the demo below passes a stand-in that returns random vectors
                vector = embed_fn(text)
                self.add_document(text, vector)
                if (i + 1) % 10 == 0:
                    print(f"  Processed {i + 1}/{len(corpus)} documents")

    # Create the search engine
    engine = SimpleVectorSearchEngine(dimension=128)

    # Simulated document collection
    sample_corpus = [
        "Machine learning algorithms are used for prediction and classification",
        "Deep learning models need large amounts of training data",
        "Python is well suited to data science projects",
        "Neural networks mimic the structure of neurons in the brain",
        "Computer vision processes image and video data",
        "Natural language processing analyzes the semantics of text",
        "Reinforcement learning optimizes decisions through trial and error",
        "Supervised learning trains on labeled data",
        "Unsupervised learning discovers patterns in data",
        "Semi-supervised learning combines labeled and unlabeled data",
    ]

    # Build the index (random stand-in embeddings, so the ranking below is arbitrary)
    engine.build_from_corpus(sample_corpus, lambda text: np.random.randn(128))

    # Run a search
    query = "artificial intelligence and machine learning"
    query_vec = np.random.randn(128)  # simulated query vector
    search_results = engine.search(query_vec, top_k=3)
    print(f"\nQuery: '{query}'")
    print("Results:")
    for i, (doc, score) in enumerate(search_results):
        print(f"  {i+1}. Similarity: {score:.4f}")
        print(f"     Document: {doc[:50]}...")

    return calculator


if __name__ == "__main__":
    cosine_similarity_comprehensive_demo()
```

2.2 Dot Product Similarity (DPS)

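2.2.1 How It Works

Dot product similarity, also called inner product similarity, measures similarity as the sum of the products of the corresponding elements of two vectors. For normalized (unit-length) vectors, dot product similarity is equivalent to cosine similarity.

Formula:

$$A \cdot B = \sum_{i} A_i B_i = \|A\| \times \|B\| \times \cos(\theta)$$

where:

- $A_i$ and $B_i$ are the $i$-th elements of the vectors
- $\sum$ denotes summation over all dimensions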

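Key properties:

- Unnormalized: depends on both direction and length; longer vectors tend to produce larger dot products
- Normalized: exactly equivalent to cosine similarity, measuring direction only
- Efficiency: one of the most basic and efficient vector operations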
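A small worked example of the formula, using the illustrative vectors $A = (3, 4)$ and $B = (4, 3)$: the element-wise sum is $12 + 12 = 24$, both norms are 5, so $\cos(\theta) = 24 / 25 = 0.96$. The sketch below checks this and shows that scaling a vector scales the dot product, while after normalization the dot product equals the cosine.

```python
import numpy as np

a = np.array([3.0, 4.0])
b = np.array([4.0, 3.0])

dot = float(np.sum(a * b))  # Σ(A_i × B_i) = 12 + 12 = 24
cos_theta = dot / (np.linalg.norm(a) * np.linalg.norm(b))  # 24 / (5 × 5)
print(dot, cos_theta)  # 24.0 0.96

# Scaling one vector scales the dot product (the cosine would not change)
print(float(np.dot(2 * a, b)))  # 48.0

# After L2 normalization, the dot product equals the cosine similarity
a_unit = a / np.linalg.norm(a)
b_unit = b / np.linalg.norm(b)
print(float(np.dot(a_unit, b_unit)))  # ≈ 0.96
```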

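2.2.2 Strengths and Weaknesses

Strengths:

- Very fast: a basic operation that hardware optimizes heavily, and typically the cheapest of the measures discussed here because it needs no norms or square roots
- Exact after normalization: for normalized vectors it accurately reflects directional similarity
- Magnitude-sensitive: useful when both direction and strength matter
- Hardware-friendly: highly optimized implementations exist on GPUs, TPUs, and similar hardware

Weaknesses:

- Length bias: for unnormalized vectors, long vectors can score highly even when their direction differs
- Unbounded: scores have no fixed range, which makes them harder to interpret
- Skewed comparisons: on unnormalized data, rankings can be dominated by vectors with large norms rather than by relevance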
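The length bias is easiest to see in a ranking. In this toy sketch (the vectors are made up for illustration), a short vector almost aligned with the query loses to a long vector pointing 45 degrees away when ranked by raw dot product, but wins when ranked by cosine similarity:

```python
import numpy as np

query = np.array([1.0, 0.0])
candidates = {
    "short_aligned": np.array([1.0, 0.1]),  # almost the same direction as the query
    "long_diagonal": np.array([5.0, 5.0]),  # 45 degrees away, but much longer
}

for name, vec in candidates.items():
    raw = float(np.dot(query, vec))
    cos = raw / (np.linalg.norm(query) * np.linalg.norm(vec))
    print(f"{name}: dot={raw:.3f}, cosine={cos:.3f}")

# Raw dot product favors the long vector; cosine favors the aligned one
best_by_dot = max(candidates, key=lambda n: np.dot(query, candidates[n]))
best_by_cos = max(candidates,
                  key=lambda n: np.dot(query, candidates[n]) / np.linalg.norm(candidates[n]))
print(best_by_dot, best_by_cos)  # long_diagonal short_aligned
```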

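2.2.3 Use Cases

Dot product similarity is suitable for:

- Efficient computation when vectors are already normalized
- Predicting user-item interactions in recommender systems
- Embedding comparisons where both strength and direction matter
- Serving as the basic building block of more complex algorithms

2.2.4 Python Code Example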

```python
import time
from typing import List, Optional, Tuple

import numpy as np


class DotProductSimilarity:
    """Advanced dot product similarity implementation"""

    def __init__(self, strategy: str = 'auto', normalize: Optional[bool] = None):
        """
        Initialize the dot product similarity calculator.

        Args:
            strategy: computation strategy, one of 'basic', 'einsum', 'matmul', 'auto'
            normalize: whether to normalize; None (falsy) means no normalization
                unless a method call overrides it
        """
        self.strategy = strategy
        self.normalize = normalize
        self.optimal_strategy_cache = {}

    def _select_strategy(self, shape1: Tuple, shape2: Tuple) -> str:
        """Choose a computation strategy based on the input shapes"""
        if self.strategy != 'auto':
            return self.strategy
        # Rule-of-thumb selection
        if len(shape1) == 1 and len(shape2) == 1:
            # Two vectors
            return 'basic'
        elif len(shape1) == 2 and len(shape2) == 1:
            # Matrix and vector
            return 'matmul' if shape1[0] > 1000 else 'einsum'
        elif len(shape1) == 2 and len(shape2) == 2:
            # Two matrices
            return 'matmul' if shape1[0] * shape1[1] > 10000 else 'einsum'
        return 'matmul'

    def compute(self, vec1: np.ndarray, vec2: np.ndarray,
                normalize: Optional[bool] = None) -> float:
        """
        Compute the dot product similarity of two vectors.

        Args:
            vec1: first vector
            vec2: second vector
            normalize: whether to normalize; None uses the instance setting

        Returns:
            Dot product similarity
        """
        if normalize is None:
            normalize = self.normalize
        if normalize:
            norm1 = np.linalg.norm(vec1)
            norm2 = np.linalg.norm(vec2)
            if norm1 == 0 or norm2 == 0:
                return 0.0
            vec1 = vec1 / norm1
            vec2 = vec2 / norm2

        strategy = self._select_strategy(vec1.shape, vec2.shape)
        if strategy == 'basic':
            return float(np.dot(vec1, vec2))
        elif strategy == 'einsum':
            return float(np.einsum('i,i->', vec1, vec2))
        else:  # matmul
            return float(np.matmul(vec1.reshape(1, -1), vec2.reshape(-1, 1))[0, 0])

    def batch_compute(self, query: np.ndarray,
                      vectors: np.ndarray,
                      normalize: Optional[bool] = None,
                      batch_size: int = 1000) -> np.ndarray:
        """
        Compute dot product similarities in batches.

        Args:
            query: query vector
            vectors: matrix of vectors (one per row)
            normalize: whether to normalize
            batch_size: number of rows per batch

        Returns:
            Array of similarities
        """
        if normalize is None:
            normalize = self.normalize
        n_vectors = vectors.shape[0]
        similarities = np.zeros(n_vectors)

        # Preprocess the query vector
        if normalize:
            query_norm = np.linalg.norm(query)
            if query_norm > 0:
                query = query / query_norm

        # Process in batches
        for i in range(0, n_vectors, batch_size):
            end_idx = min(i + batch_size, n_vectors)
            batch_vectors = vectors[i:end_idx]
            if normalize:
                # Normalize the batch (zero rows stay zero)
                batch_norms = np.linalg.norm(batch_vectors, axis=1, keepdims=True)
                batch_norms[batch_norms == 0] = 1.0
                batch_vectors_norm = batch_vectors / batch_norms
                batch_similarities = np.dot(batch_vectors_norm, query)
            else:
                # Plain dot products
                batch_similarities = np.dot(batch_vectors, query)
            similarities[i:end_idx] = batch_similarities

        return similarities

    def search_with_threshold(self, query: np.ndarray,
                              vectors: np.ndarray,
                              threshold: float = 0.7,
                              normalize: Optional[bool] = None,
                              max_results: int = 100) -> Tuple[np.ndarray, np.ndarray]:
        """
        Threshold-based similarity search.

        Args:
            query: query vector
            vectors: matrix of vectors
            threshold: similarity threshold
            normalize: whether to normalize
            max_results: maximum number of results

        Returns:
            (indices, similarities)
        """
        # Compute similarities
        similarities = self.batch_compute(query, vectors, normalize)
        # Keep only those at or above the threshold
        above_threshold = similarities >= threshold
        if not np.any(above_threshold):
            return np.array([], dtype=int), np.array([])

        indices = np.where(above_threshold)[0]
        scores = similarities[indices]
        # Sort by score, highest first
        sorted_order = np.argsort(scores)[::-1]
        indices = indices[sorted_order]
        scores = scores[sorted_order]
        # Limit the number of results
        return indices[:max_results], scores[:max_results]

    def compare_strategies(self, n_tests: int = 100,
                           vec_size: int = 1000) -> dict:
        """
        Compare the performance of the computation strategies.

        Args:
            n_tests: number of timed runs
            vec_size: vector size

        Returns:
            Performance comparison results
        """
        results = {}
        strategies = ['basic', 'einsum', 'matmul']
        original_strategy = self.strategy

        # Generate test data
        np.random.seed(42)
        vec1 = np.random.randn(vec_size)
        vec2 = np.random.randn(vec_size)

        for strategy in strategies:
            self.strategy = strategy
            times = []
            # Warm-up
            _ = self.compute(vec1, vec2)
            # Timed runs
            for _ in range(n_tests):
                start = time.perf_counter()
                _ = self.compute(vec1, vec2)
                end = time.perf_counter()
                times.append(end - start)
            results[strategy] = {
                'avg_time': np.mean(times),
                'std_time': np.std(times),
                'min_time': np.min(times),
                'max_time': np.max(times),
            }

        # Restore the original strategy
        self.strategy = original_strategy
        return results


# Comprehensive usage example
def dot_product_similarity_comprehensive_demo():
    """Comprehensive dot product similarity demo"""
    print("=" * 60)
    print("Dot product similarity: comprehensive example")
    print("=" * 60)

    # Create the calculator
    calculator = DotProductSimilarity(strategy='auto', normalize=None)

    # 1. Basic computation
    print("\n1. Basic computation:")
    vec_a = np.array([1, 2, 3])
    vec_b = np.array([4, 5, 6])
    vec_c = np.array([2, 4, 6])  # same direction as vec_a, but longer

    # Dot products with and without normalization
    dp_raw = calculator.compute(vec_a, vec_b, normalize=False)
    dp_norm = calculator.compute(vec_a, vec_b, normalize=True)
    dp_same_dir = calculator.compute(vec_a, vec_c, normalize=True)
    print(f"Vector A: {vec_a}")
    print(f"Vector B: {vec_b}")
    print(f"Vector C: {vec_c} (same direction as A, twice as long)")
    print(f"Raw dot product (A·B): {dp_raw}")
    print(f"Normalized dot product (A·B): {dp_norm:.4f}")
    print(f"Normalized dot product (A·C): {dp_same_dir:.4f} (should be 1.0, same direction)")

    # 2. Strategy performance comparison
    print("\n2. Computation strategy comparison:")
    perf_results = calculator.compare_strategies(n_tests=1000, vec_size=1000)
    print("Vector size: 1000, runs: 1000")
    for strategy, metrics in perf_results.items():
        print(f"  Strategy '{strategy}': mean={metrics['avg_time']*1e6:.2f} µs, "
              f"std={metrics['std_time']*1e6:.2f} µs")

    # 3. Batch search with threshold filtering
    print("\n3. Batch search with threshold filtering:")
    np.random.seed(42)
    n_vectors = 1000
    dim = 256
    vectors = np.random.randn(n_vectors, dim)
    # Query vector close to vector 0
    query = vectors[0] + np.random.randn(dim) * 0.1
    threshold = 0.8
    indices, scores = calculator.search_with_threshold(
        query, vectors, threshold=threshold, normalize=True, max_results=10
    )
    print(f"Database size: {n_vectors} vectors")
    print("Query vector: similar to vector 0")
    print(f"Similarity threshold: {threshold}")
    print(f"Found {len(indices)} vectors above the threshold:")
    if len(indices) > 0:
        for i, (idx, score) in enumerate(zip(indices[:5], scores[:5])):
            print(f"  Result {i+1}: index {idx}, similarity {score:.4f}")
        if len(indices) > 5:
            print(f"  ... {len(indices)-5} more results")

    # 4. Recommender system application
    print("\n4. Recommender system example:")

    class SimpleRecommender:
        """A simple dot-product-based recommender"""

        def __init__(self, n_users: int, n_items: int, n_features: int):
            self.n_users = n_users
            self.n_items = n_items
            self.n_features = n_features
            # Initialize user preferences and item features
            np.random.seed(42)
            self.user_preferences = np.random.randn(n_users, n_features)
            self.item_features = np.random.randn(n_items, n_features)
            # Normalize (optional, depending on the application)
            self.normalize_vectors()
            self.calculator = DotProductSimilarity(normalize=False)

        def normalize_vectors(self):
            """L2-normalize user and item vectors"""
            user_norms = np.linalg.norm(self.user_preferences, axis=1, keepdims=True)
            user_norms[user_norms == 0] = 1.0
            self.user_preferences = self.user_preferences / user_norms
            item_norms = np.linalg.norm(self.item_features, axis=1, keepdims=True)
            item_norms[item_norms == 0] = 1.0
            self.item_features = self.item_features / item_norms

        def predict_rating(self, user_id: int, item_id: int) -> float:
            """Predict a user's rating for an item"""
            user_vec = self.user_preferences[user_id]
            item_vec = self.item_features[item_id]
            # The dot product serves as the predicted score
            return self.calculator.compute(user_vec, item_vec, normalize=False)

        def recommend_for_user(self, user_id: int, top_k: int = 5,
                               exclude_rated: bool = True,
                               rated_items: Optional[List[int]] = None) -> List[Tuple[int, float]]:
            """Recommend items for a user"""
            user_vec = self.user_preferences[user_id]
            # Scores between the user and every item
            scores = self.calculator.batch_compute(
                user_vec, self.item_features, normalize=False
            )
            # Exclude items the user has already rated
            if exclude_rated and rated_items is not None:
                scores[rated_items] = -np.inf
            top_indices = np.argsort(scores)[::-1][:top_k]
            top_scores = scores[top_indices]
            return list(zip(top_indices.tolist(), top_scores.tolist()))

        def add_feedback(self, user_id: int, item_id: int, rating: float,
                         learning_rate: float = 0.01):
            """Update the user's preferences from feedback (simple version)"""
            item_vec = self.item_features[item_id]
            current_pred = self.predict_rating(user_id, item_id)
            error = rating - current_pred
            # Gradient step on the squared error (a simple delta-rule update)
            self.user_preferences[user_id] += learning_rate * error * item_vec
            # Renormalize to unit length
            user_norm = np.linalg.norm(self.user_preferences[user_id])
            if user_norm > 0:
                self.user_preferences[user_id] /= user_norm

    # Create the recommender
    n_users = 100
    n_items = 500
    n_features = 50
    recommender = SimpleRecommender(n_users, n_items, n_features)

    # Recommend for user 0
    user_id = 0
    recommendations = recommender.recommend_for_user(user_id, top_k=5)
    print(f"Recommendations for user {user_id}:")
    for i, (item_id, score) in enumerate(recommendations):
        print(f"  Recommendation {i+1}: item {item_id}, predicted score: {score:.4f}")

    # Simulate feedback and update. With unit-length vectors, predicted scores
    # lie in [-1, 1], so this rating scale is only illustrative
    print(f"\nSimulated feedback: user {user_id} rates item {recommendations[0][0]} 4.5")
    recommender.add_feedback(user_id, recommendations[0][0], 4.5)

    # Recommend again
    new_recommendations = recommender.recommend_for_user(
        user_id, top_k=5, rated_items=[recommendations[0][0]]
    )
    print("Updated recommendations (rated item excluded):")
    for i, (item_id, score) in enumerate(new_recommendations):
        print(f"  Recommendation {i+1}: item {item_id}, predicted score: {score:.4f}")

    # 5. Large-scale vector search
    print("\n5. Optimized large-scale vector search:")

    class OptimizedVectorSearcher:
        """Optimized vector searcher"""

        def __init__(self, dimension: int, use_fp16: bool = True):
            self.dimension = dimension
            self.use_fp16 = use_fp16
            self.vectors = None
            self.normalized_vectors = None
            self.calculator = DotProductSimilarity(normalize=False)

        def add_vectors(self, vectors: np.ndarray):
            """Add vectors to the searcher"""
            if self.use_fp16:
                vectors = vectors.astype(np.float16)  # halves memory, at some precision cost
            self.vectors = vectors
            # Precompute the normalized copy once
            norms = np.linalg.norm(vectors, axis=1, keepdims=True)
            norms[norms == 0] = 1.0
            self.normalized_vectors = vectors / norms

        def search(self, query: np.ndarray, top_k: int = 10,
                   use_normalized: bool = True) -> Tuple[np.ndarray, np.ndarray]:
            """Search for similar vectors"""
            if self.vectors is None:
                raise ValueError("No vectors have been added")
            if use_normalized:
                # Normalize the query vector
                query_norm = np.linalg.norm(query)
                if query_norm > 0:
                    query = query / query_norm
                target_vectors = self.normalized_vectors
            else:
                target_vectors = self.vectors
            # Batch similarity computation
            similarities = self.calculator.batch_compute(
                query, target_vectors, normalize=False
            )
            top_indices = np.argsort(similarities)[::-1][:top_k]
            top_scores = similarities[top_indices]
            return top_indices, top_scores

        def memory_usage(self) -> dict:
            """Memory usage in MB"""
            if self.vectors is None:
                return {'total': 0, 'vectors': 0, 'normalized': 0}
            vector_memory = self.vectors.nbytes
            normalized_memory = self.normalized_vectors.nbytes if self.normalized_vectors is not None else 0
            return {
                'total': (vector_memory + normalized_memory) / (1024**2),
                'vectors': vector_memory / (1024**2),
                'normalized': normalized_memory / (1024**2),
            }

    # Test the optimized searcher
    print("Creating the optimized searcher...")
    searcher = OptimizedVectorSearcher(dimension=512, use_fp16=True)
    test_vectors = np.random.randn(10000, 512).astype(np.float32)
    test_query = np.random.randn(512)
    searcher.add_vectors(test_vectors)

    # Run a search
    start_time = time.perf_counter()
    indices, scores = searcher.search(test_query, top_k=10, use_normalized=True)
    search_time = time.perf_counter() - start_time

    memory_info = searcher.memory_usage()
    print(f"Setup: vectors=10000, dim=512, FP16={searcher.use_fp16}")
    print(f"Search time: {search_time*1000:.2f} ms")
    print(f"Memory: total {memory_info['total']:.2f} MB, "
          f"raw vectors {memory_info['vectors']:.2f} MB, "
          f"normalized vectors {memory_info['normalized']:.2f} MB")
    if len(indices) > 0:
        print("Top-3 results:")
        for i, (idx, score) in enumerate(zip(indices[:3], scores[:3])):
            print(f"  Result {i+1}: index {idx}, similarity {score:.4f}")

    return calculator


if __name__ == "__main__":
    dot_product_similarity_comprehensive_demo()
```

3. Distance Measures

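3.1 Euclidean Distance (L2)

3.1.1 How It Works

Euclidean distance is the most intuitive distance measure: it computes the straight-line distance between two vectors in n-dimensional space. It generalizes the Pythagorean theorem to multiple dimensions and reflects the absolute difference between the vectors.

Formula:

$$d(A,B) = \sqrt{\sum_{i} (A_i - B_i)^2}$$

where:

- $A_i$ and $B_i$ are the $i$-th elements of the vectors
- $\sum$ denotes summation over all dimensions
- $\sqrt{\cdot}$ denotes the square root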

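Computation steps:

- Compute the difference between the two vectors in each dimension
- Square each difference
- Sum the squared differences over all dimensions
- Take the square root of the sum

Geometric meaning: in a multi-dimensional space, Euclidean distance is simply the straight-line distance between two points, the notion of distance that best matches human intuition.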
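The four steps map directly onto NumPy operations. A minimal sketch (the helper name is our own), checked against `np.linalg.norm` applied to the difference vector:

```python
import numpy as np


def euclidean_step_by_step(a, b):
    """Euclidean distance following the four steps above."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    diff = a - b                     # step 1: per-dimension differences
    squared = diff ** 2              # step 2: square them
    total = float(np.sum(squared))   # step 3: sum over all dimensions
    return total ** 0.5              # step 4: square root


print(euclidean_step_by_step([1, 2], [4, 6]))  # 5.0, a 3-4-5 right triangle
print(np.isclose(euclidean_step_by_step([1, 2, 3], [4, 0, 3]),
                 np.linalg.norm(np.array([1, 2, 3]) - np.array([4, 0, 3]))))  # True
```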

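3.1.2 Strengths and Weaknesses

Strengths:

- Intuitive: matches the everyday notion of distance, so it is easy to understand and explain
- Isotropic: treats all dimensions equally, with no directional preference
- Widely used: common throughout machine learning, data mining, and related fields
- Sound mathematical properties: satisfies all the axioms of a metric (non-negativity, identity of indiscernibles, symmetry, triangle inequality)

Weaknesses:

- Scale-sensitive: very sensitive to feature scales, so the data usually needs standardization
- Curse of dimensionality: in high-dimensional spaces, the distances between all pairs of points tend to become similar
- Computational cost: requires squaring and a square root; when only the ranking matters, the square root can be skipped because it does not change the order
- Outlier-sensitive: squaring amplifies the influence of outliers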
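The distance concentration behind the curse of dimensionality can be observed with a quick experiment. This sketch assumes uniformly random points, a common textbook illustration; real embeddings have more structure, so the effect may be less pronounced. It measures the relative contrast $(d_{max} - d_{min}) / d_{min}$ from one random query to 1,000 random points, which shrinks as the dimension grows:

```python
import numpy as np


def relative_contrast(dim, n_points=1000, seed=0):
    """(max_dist - min_dist) / min_dist from a random query to random points."""
    rng = np.random.default_rng(seed)
    points = rng.uniform(size=(n_points, dim))
    query = rng.uniform(size=dim)
    dists = np.linalg.norm(points - query, axis=1)
    return float((dists.max() - dists.min()) / dists.min())


for dim in (2, 10, 100, 1000):
    # Larger contrast means nearest and farthest neighbors are easier to tell apart
    print(dim, round(relative_contrast(dim), 3))
```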

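3.1.3 Use Cases

Euclidean distance is suitable for:

- Geometric distances in low-dimensional spaces
- Feature matching in image processing and computer vision
- Clustering algorithms (e.g. K-means, DBSCAN)
- Measuring actual distances in physical space
- Applications that need an intuitive interpretation of distance

3.1.4 Python Code Example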

python

import numpy as np

from typing import List, Tuple, Dict, Any

import time

from scipy.spatial import KDTree

from sklearn.preprocessing import StandardScaler

class EuclideanDistanceCalculator:

"""欧氏距离高级计算器"""

def __init__(self, standardize: bool = True, use_kdtree: bool = False):

"""

初始化欧氏距离计算器

Args:

standardize: 是否标准化数据

use_kdtree: 是否使用KDTree加速搜索

"""

self.standardize = standardize

self.use_kdtree = use_kdtree

self.scaler = StandardScaler() if standardize else None

self.kdtree = None

self.data_normalized = None

def fit(self, data: np.ndarray):

"""拟合数据,计算标准化参数或构建索引"""

if self.standardize and self.scaler is not None:

self.data_normalized = self.scaler.fit_transform(data)

else:

self.data_normalized = data.copy()

if self.use_kdtree:

# 构建KDTree加速最近邻搜索

self.kdtree = KDTree(self.data_normalized)

def transform(self, vectors: np.ndarray) -> np.ndarray:

"""标准化向量"""

if self.standardize and self.scaler is not None:

return self.scaler.transform(vectors)

return vectors

def compute_distance(self, vec1: np.ndarray, vec2: np.ndarray) -> float:

"""计算两个向量的欧氏距离"""

# 标准化(如果需要)

if self.standardize and self.scaler is not None:

vec1 = self.scaler.transform(vec1.reshape(1, -1)).flatten()

vec2 = self.scaler.transform(vec2.reshape(1, -1)).flatten()

# 计算欧氏距离

return np.linalg.norm(vec1 - vec2)

def batch_distances(self, query: np.ndarray,

vectors: np.ndarray) -> np.ndarray:

"""批量计算欧氏距离"""

if self.standardize and self.scaler is not None:

query = self.scaler.transform(query.reshape(1, -1)).flatten()

vectors = self.scaler.transform(vectors)

# 使用向量化计算

diff = vectors - query

distances = np.linalg.norm(diff, axis=1)

return distances

def knn_search(self, query: np.ndarray, k: int = 10,

return_distances: bool = True) -> Tuple[np.ndarray, np.ndarray]:

"""

K最近邻搜索

Args:

query: 查询向量

k: 最近邻数量

return_distances: 是否返回距离

Returns:

(索引数组, [距离数组])

"""

if self.kdtree is not None and self.use_kdtree:

# 使用KDTree加速搜索

distances, indices = self.kdtree.query(

query.reshape(1, -1), k=k, return_distance=True

)

distances = distances.flatten()

indices = indices.flatten()

else:

# 线性扫描

distances = self.batch_distances(query, self.data_normalized)

indices = np.argsort(distances)[:k]

distances = distances[indices]

if return_distances:

return indices, distances

else:

return indices

def radius_search(self, query: np.ndarray, radius: float,

return_distances: bool = True) -> Tuple[np.ndarray, np.ndarray]:

"""

半径搜索:返回指定半径内的所有点

Args:

query: 查询向量

radius: 搜索半径

return_distances: 是否返回距离

Returns:

(索引数组, [距离数组])

"""

if self.kdtree is not None and self.use_kdtree:

# 使用KDTree半径查询

indices = self.kdtree.query_ball_point(query, radius)

if len(indices) == 0:

return np.array([], dtype=int), np.array([])

indices = np.array(indices)

# 计算距离

distances = self.batch_distances(query, self.data_normalized[indices])

# 按距离排序

sorted_order = np.argsort(distances)

indices = indices[sorted_order]

distances = distances[sorted_order]

else:

# 线性扫描

distances = self.batch_distances(query, self.data_normalized)

mask = distances <= radius

indices = np.where(mask)[0]

distances = distances[indices]

# 按距离排序

sorted_order = np.argsort(distances)

indices = indices[sorted_order]

distances = distances[sorted_order]

if return_distances:

return indices, distances

else:

return indices

def distance_to_similarity(self, distance: float,

method: str = 'gaussian',

sigma: float = 1.0) -> float:

"""

将距离转换为相似度

Args:

distance: 欧氏距离

method: 转换方法,可选 'inverse', 'gaussian', 'exponential'

sigma: 高斯函数的带宽参数

Returns:

相似度值(0到1之间)

"""

if method == 'inverse':

# 逆函数转换:相似度 = 1 / (1 + 距离)

return 1.0 / (1.0 + distance)

elif method == 'gaussian':

# 高斯函数转换:相似度 = exp(-距离² / (2σ²))

return np.exp(-(distance ** 2) / (2 * sigma ** 2))

elif method == 'exponential':

# 指数函数转换:相似度 = exp(-距离)

return np.exp(-distance)

else:

raise ValueError(f"不支持的转换方法: {method}")

def silhouette_score(self, labels: np.ndarray) -> float:

"""

计算轮廓系数(聚类效果评估)

Args:

labels: 每个样本的聚类标签

Returns:

轮廓系数(-1到1之间,越大越好)

"""

if self.data_normalized is None:

raise ValueError("请先使用fit方法拟合数据")

n_samples = len(self.data_normalized)

unique_labels = np.unique(labels)

n_clusters = len(unique_labels)

# 计算样本间的距离矩阵(或按需计算)

# 为简化,我们只计算必要的距离

silhouette_scores = np.zeros(n_samples)

for i in range(n_samples):

# 计算a(i):样本i到同簇其他样本的平均距离

same_cluster_mask = labels == labels[i]

same_cluster_mask[i] = False # 排除自身

if np.sum(same_cluster_mask) == 0:

a_i = 0

else:

# 计算到同簇其他样本的距离

distances = self.batch_distances(

self.data_normalized[i],

self.data_normalized[same_cluster_mask]

)

a_i = np.mean(distances)

# 计算b(i):样本i到其他簇的最小平均距离

b_i = np.inf

for label in unique_labels:

if label == labels[i]:

continue

other_cluster_mask = labels == label

distances = self.batch_distances(

self.data_normalized[i],

self.data_normalized[other_cluster_mask]

)

avg_distance = np.mean(distances)

if avg_distance < b_i:

b_i = avg_distance

# 计算轮廓系数

if max(a_i, b_i) == 0:

silhouette_scores[i] = 0

else:

silhouette_scores[i] = (b_i - a_i) / max(a_i, b_i)

# 返回平均轮廓系数

return np.mean(silhouette_scores)

def evaluate_clustering(self, data: np.ndarray,

labels: np.ndarray) -> Dict[str, Any]:

"""

评估聚类效果

Args:

data: 原始数据

labels: 聚类标签

Returns:

评估指标字典

"""

# 拟合数据

self.fit(data)

# 计算轮廓系数

silhouette = self.silhouette_score(labels)

# 计算类内距离(紧密度)

intra_cluster_distances = []

unique_labels = np.unique(labels)

for label in unique_labels:

cluster_points = self.data_normalized[labels == label]

if len(cluster_points) > 1:

# 计算簇内所有点对间的平均距离

n_points = len(cluster_points)

total_distance = 0

count = 0

for i in range(n_points):

for j in range(i + 1, n_points):

dist = self.compute_distance(cluster_points[i], cluster_points[j])

total_distance += dist

count += 1

if count > 0:

intra_cluster_distances.append(total_distance / count)

avg_intra_distance = np.mean(intra_cluster_distances) if intra_cluster_distances else 0

# 计算类间距离(分离度)

inter_cluster_distances = []

n_clusters = len(unique_labels)

for i in range(n_clusters):

for j in range(i + 1, n_clusters):

# 计算簇中心

center_i = np.mean(self.data_normalized[labels == unique_labels[i]], axis=0)

center_j = np.mean(self.data_normalized[labels == unique_labels[j]], axis=0)

dist = self.compute_distance(center_i, center_j)

inter_cluster_distances.append(dist)

avg_inter_distance = np.mean(inter_cluster_distances) if inter_cluster_distances else 0

return {

'silhouette_score': silhouette,

'avg_intra_cluster_distance': avg_intra_distance,

'avg_inter_cluster_distance': avg_inter_distance,

'separation_ratio': avg_inter_distance / avg_intra_distance if avg_intra_distance > 0 else np.inf,

'n_clusters': n_clusters,

'n_samples': len(data)

}

# 综合使用示例

def euclidean_distance_comprehensive_demo():

"""欧氏距离综合演示"""

print("=" * 60)

print("欧氏距离综合示例")

print("=" * 60)

# 创建计算器

calculator = EuclideanDistanceCalculator(standardize=True, use_kdtree=True)

# 1. 基本计算示例

print("\n1. 基本距离计算示例:")

# 二维空间点示例

point_a = np.array([1, 2])

point_b = np.array([4, 6])

distance = calculator.compute_distance(point_a, point_b)

print(f"点A: {point_a}")

print(f"点B: {point_b}")

print(f"欧氏距离: {distance:.4f}")

print(f"几何解释: 在二维平面上,从({point_a[0]},{point_a[1]})到"

f"({point_b[0]},{point_b[1]})的直线距离")

# 2. 标准化的重要性演示

print("\n2. 数据标准化的重要性:")

# 创建具有不同尺度的数据

np.random.seed(42)

n_samples = 100

feature1 = np.random.randn(n_samples) * 10 # 尺度较大

feature2 = np.random.randn(n_samples) * 0.1 # 尺度较小

data_unscaled = np.column_stack([feature1, feature2])

# 计算标准化前后的距离

point1 = np.array([0, 0])

point2 = np.array([1, 1])

# 未标准化的距离

calculator_no_scale = EuclideanDistanceCalculator(standardize=False)

distance_unscaled = calculator_no_scale.compute_distance(point1, point2)

# 标准化的距离

calculator_scaled = EuclideanDistanceCalculator(standardize=True)

calculator_scaled.fit(data_unscaled) # 使用数据拟合标准化器

distance_scaled = calculator_scaled.compute_distance(point1, point2)

print(f"特征1尺度: ~N(0, 10)")

print(f"特征2尺度: ~N(0, 0.1)")

print(f"点1: {point1}, 点2: {point2}")

print(f"未标准化距离: {distance_unscaled:.4f}")

print(f"标准化后距离: {distance_scaled:.4f}")

print("说明:未标准化时,特征1主导距离计算;标准化后,两个特征贡献相当")

# 3. 聚类分析示例

print("\n3. 聚类分析与轮廓系数:")

from sklearn.datasets import make_blobs

from sklearn.cluster import KMeans

# 生成模拟聚类数据

X, y_true = make_blobs(

n_samples=300,

centers=3,

n_features=2,

random_state=42,

cluster_std=0.8

)

# 使用K-means聚类

kmeans = KMeans(n_clusters=3, random_state=42)

y_pred = kmeans.fit_predict(X)

# 评估聚类效果

calculator.fit(X)

evaluation = calculator.evaluate_clustering(X, y_pred)

print(f"生成数据: 300个样本,3个簇,2个特征")

print(f"聚类评估结果:")

print(f" 轮廓系数: {evaluation['silhouette_score']:.4f} (接近1表示聚类效果好)")

print(f" 平均类内距离: {evaluation['avg_intra_cluster_distance']:.4f}")

print(f" 平均类间距离: {evaluation['avg_inter_cluster_distance']:.4f}")

print(f" 分离度比: {evaluation['separation_ratio']:.4f} (越大表示簇分离越好)")

# 4. KNN搜索与KDTree加速

print("\n4. K最近邻搜索与KDTree加速:")

# 生成测试数据

np.random.seed(42)

n_points = 10000

dim = 10

data_large = np.random.randn(n_points, dim)

# 创建两个计算器对比

calculator_linear = EuclideanDistanceCalculator(standardize=True, use_kdtree=False)

calculator_kdtree = EuclideanDistanceCalculator(standardize=True, use_kdtree=True)

# 拟合数据

calculator_linear.fit(data_large)

calculator_kdtree.fit(data_large)

# 测试查询

query_point = np.random.randn(dim)

k = 10

# 线性扫描

start_time = time.time()

indices_linear, distances_linear = calculator_linear.knn_search(query_point, k=k)

time_linear = time.time() - start_time

# KDTree搜索

start_time = time.time()

indices_kdtree, distances_kdtree = calculator_kdtree.knn_search(query_point, k=k)

time_kdtree = time.time() - start_time

print(f"数据规模: {n_points}个点,维度: {dim}")

print(f"KNN搜索 (k={k}) 性能比较:")

print(f" 线性扫描时间: {time_linear*1000:.2f}毫秒")

print(f" KDTree搜索时间: {time_kdtree*1000:.2f}毫秒")

print(f" 加速比: {time_linear/time_kdtree:.2f}x")

# 验证结果一致性

print(f" 结果是否一致: {np.array_equal(indices_linear, indices_kdtree)}")

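    # 5. Radius search application
    print("\n5. Radius search example:")

    # Generate spatial data (simulated geographic coordinates)
    np.random.seed(42)
    n_locations = 500

    # Simulated latitude/longitude, in degrees
    latitudes = np.random.uniform(30, 40, n_locations)    # latitude
    longitudes = np.random.uniform(110, 120, n_locations)  # longitude
    locations = np.column_stack([latitudes, longitudes])

    # Create the location searcher. standardize=False keeps distances in degrees,
    # so the search radius below is also expressed in degrees
    location_calculator = EuclideanDistanceCalculator(standardize=False, use_kdtree=True)
    location_calculator.fit(locations)

    # Query point (simulated user location)
    user_location = np.array([35.5, 115.5])

    # Search for places within the radius (assuming 1 degree ≈ 111 km, so 0.1 degree ≈ 11 km).
    # Euclidean distance on raw degrees is only a rough approximation, because a degree of
    # longitude shrinks with latitude; use a geodesic formula such as haversine for real distances
    search_radius = 0.5  # about 55 km
    nearby_indices, nearby_distances = location_calculator.radius_search(
        user_location, radius=search_radius
    )

    print("Geographic search example:")
    print(f"  Number of places: {n_locations}")
    print(f"  User location: latitude {user_location[0]:.2f}, longitude {user_location[1]:.2f}")
    print(f"  Search radius: {search_radius} degrees (about {search_radius*111:.0f} km)")
    print(f"  Found {len(nearby_indices)} nearby places:")

    if len(nearby_indices) > 0:
        for i, (idx, dist) in enumerate(zip(nearby_indices[:5], nearby_distances[:5])):
            lat, lon = locations[idx]
            print(f"    Place {i+1}: latitude {lat:.2f}, longitude {lon:.2f}, distance {dist:.3f} degrees")
        if len(nearby_indices) > 5:
            print(f"    ... and {len(nearby_indices)-5} more places")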

    # 6. Comparing distance-to-similarity conversion methods
    print("\n6. Comparing distance-to-similarity conversion methods:")
    test_distances = [0.1, 0.5, 1.0, 2.0, 5.0, 10.0]
    conversion_methods = ['inverse', 'gaussian', 'exponential']

    print(f"{'Distance':>8} | {'Inverse':>10} | {'Gaussian(σ=1)':>13} | {'Exponential':>11}")
    print("-" * 55)
    for d in test_distances:
        similarities = []
        for method in conversion_methods:
            if method == 'gaussian':
                sim = calculator.distance_to_similarity(d, method=method, sigma=1.0)
            else:
                sim = calculator.distance_to_similarity(d, method=method)
            similarities.append(sim)
        print(f"{d:8.2f} | {similarities[0]:10.4f} | {similarities[1]:13.4f} | "
              f"{similarities[2]:11.4f}")

    print("\nNotes:")
    print("  - Inverse: sensitive at short distances, decays slowly at long distances")
    print("  - Gaussian: decays over medium distances; the rate is controlled by σ")
    print("  - Exponential: very sensitive at short distances, decays quickly at long distances")

    # 7. Practical application: image feature matching
    print("\n7. Practical application: image feature matching system")

    class ImageFeatureMatcher:
        """Image feature matcher based on Euclidean distance"""

        def __init__(self, feature_dim: int, normalize_features: bool = True):
            self.feature_dim = feature_dim
            self.normalize_features = normalize_features
            self.calculator = EuclideanDistanceCalculator(
                standardize=normalize_features, use_kdtree=True
            )
            self.image_features = None
            self.image_ids = []
            self.image_metadata = {}

        def add_image(self, image_id: str, features: np.ndarray, metadata: dict = None):
            """Add an image's feature vector"""
            if len(features) != self.feature_dim:
                raise ValueError(f"Feature dimension should be {self.feature_dim}, got {len(features)}")
            if self.image_features is None:
                self.image_features = features.reshape(1, -1)
            else:
                self.image_features = np.vstack([self.image_features, features])
            self.image_ids.append(image_id)
            if metadata:
                self.image_metadata[image_id] = metadata

        def build_index(self):
            """Build the search index"""
            if self.image_features is not None:
                self.calculator.fit(self.image_features)

        def search_similar(self, query_features: np.ndarray,
                           top_k: int = 10,
                           max_distance: float = None) -> List[Tuple[str, float, float]]:
            """Search for similar images"""
            if self.image_features is None:
                return []
            # Run the KNN search
            indices, distances = self.calculator.knn_search(query_features, k=top_k)
            # Filter by distance (if a maximum distance is set)
            if max_distance is not None:
                valid_mask = distances <= max_distance
                indices = indices[valid_mask]
                distances = distances[valid_mask]
            # Convert to (image_id, similarity, distance) results
            results = []
            for idx, dist in zip(indices, distances):
                image_id = self.image_ids[idx]
                # Convert distance to similarity (inverse function)
                similarity = 1.0 / (1.0 + dist)
                results.append((image_id, similarity, dist))
            return results

        def find_duplicates(self, similarity_threshold: float = 0.95) -> List[Tuple[str, str, float]]:
            """Find duplicate or highly similar images (O(n^2) pairwise comparison)"""
            if self.image_features is None:
                return []
            duplicates = []
            n_images = len(self.image_ids)
            for i in range(n_images):
                for j in range(i + 1, n_images):
                    # Compute the distance
                    dist = self.calculator.compute_distance(
                        self.image_features[i],
                        self.image_features[j]
                    )
                    similarity = 1.0 / (1.0 + dist)
                    if similarity >= similarity_threshold:
                        duplicates.append((
                            self.image_ids[i],
                            self.image_ids[j],
                            similarity
                        ))
            # Sort by similarity
            duplicates.sort(key=lambda x: x[2], reverse=True)
            return duplicates

    # Create the image matcher
    print("Creating image feature matcher...")
    matcher = ImageFeatureMatcher(feature_dim=512, normalize_features=True)

    # Add simulated image features (in practice these come from a CNN model)
    n_images = 100
    # Shared base vector for the first 5 images. It must be drawn once, outside the loop;
    # otherwise those images would not actually be similar to each other
    base_features = np.random.randn(512)
    for i in range(n_images):
        # Generate the feature vector
        if i < 5:
            # The first 5 images are near-duplicates (simulating the same scene).
            # The noise is kept small because a similarity of 1/(1+d) >= 0.95
            # requires a distance d <= ~0.053
            features = base_features + np.random.randn(512) * 0.001
        else:
            # The remaining images are random
            features = np.random.randn(512)
        image_id = f"image_{i:03d}"
        metadata = {"source": "simulated", "index": i}
        matcher.add_image(image_id, features, metadata)

    # Build the index
    matcher.build_index()

    # Search example
    query_features = np.random.randn(512)  # simulated query-image features
    results = matcher.search_similar(query_features, top_k=5, max_distance=2.0)

    print(f"Image library size: {n_images} images")
    print("Query feature dimension: 512")
    print("Similar-image search results (Top-5, max distance 2.0):")
    if results:
        for i, (img_id, similarity, distance) in enumerate(results):
            print(f"  Result {i+1}: image {img_id}, similarity {similarity:.4f}, distance {distance:.4f}")
    else:
        print("  No similar images found")

    # Find duplicate images
    print("\nFinding duplicate images (similarity threshold 0.95):")
    duplicates = matcher.find_duplicates(similarity_threshold=0.95)
    if duplicates:
        print(f"Found {len(duplicates)} pairs of highly similar images:")
        for i, (img1, img2, similarity) in enumerate(duplicates[:3]):
            print(f"  Pair {i+1}: {img1} and {img2}, similarity {similarity:.4f}")
        if len(duplicates) > 3:
            print(f"  ... and {len(duplicates)-3} more pairs")
    else:
        print("  No highly similar images found")

    return calculator


if __name__ == "__main__":
    euclidean_distance_comprehensive_demo()

3.2 Manhattan Distance (L1)

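3.2.1 Working Principle

Manhattan distance, also called city-block distance or L1 distance, is the sum of the absolute differences between two vectors across all dimensions. The name comes from Manhattan's grid-like street layout: to get from one point to another you have to travel along the grid lines.

Mathematical formula:

d(A,B) = Σ|A_i - B_i|

where:

- A_i and B_i are the i-th elements of the vectors
- |·| denotes the absolute value
- Σ denotes summation over all dimensions

Geometric interpretation:

- In a 2D grid, the Manhattan distance from point (x1, y1) to point (x2, y2) is the sum of the horizontal and vertical distances to be travelled, |x1 - x2| + |y1 - y2|
- Unlike Euclidean distance (the straight-line distance), Manhattan distance measures the total distance travelled along the coordinate axes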
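To make the grid interpretation concrete, the sketch below walks a staircase path between two grid points using only unit horizontal and vertical moves. It then checks that the number of moves equals |x1 - x2| + |y1 - y2|, while the straight-line distance is shorter. The helper names `manhattan` and `staircase_path` are illustrative and do not come from any library.

```python
import math


def manhattan(a, b):
    """L1 distance: sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def staircase_path(start, end):
    """Walk from start to end with unit moves: first along x, then along y."""
    x, y = start
    path = [(x, y)]
    step_x = 1 if end[0] >= x else -1
    while x != end[0]:
        x += step_x
        path.append((x, y))
    step_y = 1 if end[1] >= y else -1
    while y != end[1]:
        y += step_y
        path.append((x, y))
    return path


start, end = (1, 2), (4, 6)
path = staircase_path(start, end)
moves = len(path) - 1
# Unit moves, Manhattan distance, straight-line (Euclidean) distance
print(moves, manhattan(start, end), math.dist(start, end))  # 7 7 5.0
```

Any monotone staircase (x first, y first, or interleaved) has the same number of moves. That is why Manhattan distance does not depend on which grid route is taken.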

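Key properties:

- Grid paths: it reflects the actual distance travelled in a grid-like space
- Robustness to outliers: because it uses absolute values instead of squares, a single large per-dimension difference is not amplified, so it is less sensitive to outliers than Euclidean distance
- Simple computation: no squaring or square root is needed, so it is cheap to compute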
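The robustness point can be checked with two difference vectors that have the same L1 norm. One spreads the difference evenly over four dimensions; the other concentrates it in a single outlying dimension. L1 scores them identically, while squaring makes L2 weight the concentrated, outlier-like difference twice as heavily. This is a minimal NumPy illustration, not a benchmark.

```python
import numpy as np

a = np.zeros(4)
b_spread = np.array([1.0, 1.0, 1.0, 1.0])   # small differences in every dimension
b_outlier = np.array([4.0, 0.0, 0.0, 0.0])  # one large difference in a single dimension

l1_spread = np.sum(np.abs(a - b_spread))     # 1 + 1 + 1 + 1 = 4.0
l1_outlier = np.sum(np.abs(a - b_outlier))   # 4 + 0 + 0 + 0 = 4.0
l2_spread = np.linalg.norm(a - b_spread)     # sqrt(4) = 2.0
l2_outlier = np.linalg.norm(a - b_outlier)   # sqrt(16) = 4.0

print(f"L1: spread={l1_spread}, outlier={l1_outlier}")
print(f"L2: spread={l2_spread}, outlier={l2_outlier}")
```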

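3.2.2 Advantages and Disadvantages

Advantages:

- Robustness: less sensitive to outliers and noise than Euclidean distance, which makes it better suited to noisy data
- Efficiency: only absolute values and additions are needed, so it is fast to compute
- Grid suitability: better matches reality for grid-structured data or city navigation
- Sparse-data friendliness: performs well on high-dimensional sparse data

Disadvantages:

- Axis-aligned movement only: it counts movement along the coordinate axes, so it is never smaller than, and usually overestimates, the straight-line distance
- Scale sensitivity: it is sensitive to feature scales, so the data usually needs to be standardized
- High-dimensional behavior: in high-dimensional spaces it may be less effective than other distance measures
- Less geometric intuition: in continuous space it is less intuitive than Euclidean distance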
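The "overestimates the straight-line distance" point follows from a standard norm inequality. For any n-dimensional vectors, ‖a - b‖₂ ≤ ‖a - b‖₁ ≤ √n · ‖a - b‖₂. The lower bound is reached when the difference lies along a single axis, and the upper bound when all coordinates differ by the same absolute amount. The check below verifies the inequality on random vectors with SciPy's `cityblock` and `euclidean` functions.

```python
import numpy as np
from scipy.spatial.distance import cityblock, euclidean

rng = np.random.default_rng(0)
n = 50
ratios = []
for _ in range(1000):
    a, b = rng.normal(size=n), rng.normal(size=n)
    l1, l2 = cityblock(a, b), euclidean(a, b)
    # L2 <= L1 <= sqrt(n) * L2, with a small tolerance for floating-point rounding
    assert l2 <= l1 + 1e-9 and l1 <= np.sqrt(n) * l2 + 1e-9
    ratios.append(l1 / l2)

print(f"L1/L2 ratio range: {min(ratios):.3f} .. {max(ratios):.3f} (bounds: 1 .. {np.sqrt(n):.3f})")
```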

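3.2.3 Use Cases

Manhattan distance is suitable for:

- City navigation and route planning systems
- High-dimensional sparse data (such as one-hot encoded text)
- Scenarios that need robustness against outliers
- Feature selection and difference analysis
- Pixel-level difference computation in image processing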
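Two small facts behind the sparse-text and image use cases are worth seeing in code. On binary vectors (one-hot or word-presence), the Manhattan distance equals the number of positions that differ, which is the Hamming distance. The "sum of absolute differences" (SAD) often used to compare image patches is simply the L1 distance over pixels. The vocabulary and patch values below are made up for illustration.

```python
import numpy as np

vocab = ["vector", "database", "search", "graph", "index"]


def one_hot(words):
    """Binary presence vector over the toy vocabulary."""
    return np.array([1 if w in words else 0 for w in vocab])


doc_a = one_hot({"vector", "search", "index"})
doc_b = one_hot({"vector", "graph", "index"})

l1 = int(np.sum(np.abs(doc_a - doc_b)))
hamming = int(np.count_nonzero(doc_a != doc_b))
print(l1, hamming)  # 2 2: "search" and "graph" each appear in only one document

# SAD between two 2x2 grayscale patches (cast to int to avoid uint8 wrap-around)
patch_1 = np.array([[10, 20], [30, 40]], dtype=np.uint8)
patch_2 = np.array([[12, 18], [30, 45]], dtype=np.uint8)
sad = int(np.sum(np.abs(patch_1.astype(int) - patch_2.astype(int))))
print(sad)  # 9
```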

3.2.4 Python Code Example

python

import numpy as np
from typing import List, Tuple, Dict, Any
import time
from scipy.spatial.distance import cdist
from scipy.stats import median_abs_deviation

class ManhattanDistanceCalculator:
    """Advanced Manhattan distance calculator"""

    def __init__(self, normalize: str = 'none', robust_scaling: bool = False):
        """
        Initialize the Manhattan distance calculator

        Args:
            normalize: normalization method: 'none', 'minmax', 'standard' or 'robust'
            robust_scaling: whether to use robust scaling (based on the median and MAD)
        """
        self.normalize = normalize
        self.robust_scaling = robust_scaling
        self.min_vals = None
        self.max_vals = None
        self.mean_vals = None
        self.std_vals = None
        self.median_vals = None
        self.mad_vals = None

    def fit(self, data: np.ndarray):
        """Fit the data and compute the normalization parameters"""
        if self.normalize == 'minmax':
            self.min_vals = np.min(data, axis=0)
            self.max_vals = np.max(data, axis=0)
        elif self.normalize == 'standard':
            self.mean_vals = np.mean(data, axis=0)
            self.std_vals = np.std(data, axis=0)
        elif self.normalize == 'robust' or self.robust_scaling:
            self.median_vals = np.median(data, axis=0)
            self.mad_vals = median_abs_deviation(data, axis=0, scale='normal')

    def transform(self, vectors: np.ndarray) -> np.ndarray:
        """Normalize vectors with the fitted parameters"""
        if self.normalize == 'minmax' and self.min_vals is not None and self.max_vals is not None:
            range_vals = self.max_vals - self.min_vals
            range_vals[range_vals == 0] = 1.0  # avoid division by zero
            return (vectors - self.min_vals) / range_vals
        elif self.normalize == 'standard' and self.mean_vals is not None and self.std_vals is not None:
            std_adj = self.std_vals.copy()
            std_adj[std_adj == 0] = 1.0  # avoid division by zero
            return (vectors - self.mean_vals) / std_adj
        elif (self.normalize == 'robust' or self.robust_scaling) and \
                self.median_vals is not None and self.mad_vals is not None:
            mad_adj = self.mad_vals.copy()
            mad_adj[mad_adj == 0] = 1.0  # avoid division by zero
            return (vectors - self.median_vals) / mad_adj
        else:
            return vectors

    def compute_distance(self, vec1: np.ndarray, vec2: np.ndarray) -> float:
        """Compute the Manhattan distance between two vectors"""
        # Normalize (if configured)
        if self.normalize != 'none':
            vec1 = self.transform(vec1.reshape(1, -1)).flatten()
            vec2 = self.transform(vec2.reshape(1, -1)).flatten()
        # Compute the Manhattan distance
        return np.sum(np.abs(vec1 - vec2))

    def batch_distances(self, query: np.ndarray,
                        vectors: np.ndarray,
                        use_cdist: bool = False) -> np.ndarray:
        """Compute the Manhattan distances from one query to many vectors"""
        if self.normalize != 'none':
            query = self.transform(query.reshape(1, -1)).flatten()
            vectors = self.transform(vectors)
        if use_cdist and vectors.shape[0] > 1000:
            # Use scipy's cdist for efficient computation
            return cdist(query.reshape(1, -1), vectors, metric='cityblock').flatten()
        else:
            # Vectorized NumPy computation
            diff = np.abs(vectors - query)
            distances = np.sum(diff, axis=1)
            return distances

    def weighted_distance(self, vec1: np.ndarray, vec2: np.ndarray,
                          weights: np.ndarray) -> float:
        """
        Compute the weighted Manhattan distance

        Args:
            vec1: first vector
            vec2: second vector
            weights: per-dimension weights

        Returns:
            weighted Manhattan distance
        """
        if len(weights) != len(vec1):
            raise ValueError(f"Weight vector length {len(weights)} does not match input vector length {len(vec1)}")

        # Normalize (if configured)
        if self.normalize != 'none':
            vec1 = self.transform(vec1.reshape(1, -1)).flatten()
            vec2 = self.transform(vec2.reshape(1, -1)).flatten()

        # Compute the weighted distance
        weighted_diff = weights * np.abs(vec1 - vec2)
        return np.sum(weighted_diff)

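    def minkowski_distance(self, vec1: np.ndarray, vec2: np.ndarray,
                           p: float = 1.0) -> float:
        """
        Compute the Minkowski distance (Manhattan distance is the special case p=1)

        Args:
            vec1: first vector
            vec2: second vector
            p: distance parameter; p=1 gives Manhattan distance, p=2 gives Euclidean distance

        Returns:
            Minkowski distance
        """
        if self.normalize != 'none':
            vec1 = self.transform(vec1.reshape(1, -1)).flatten()
            vec2 = self.transform(vec2.reshape(1, -1)).flatten()

        diff = np.abs(vec1 - vec2)
        if p == 1:
            # Sum directly: calling compute_distance here would normalize the vectors a second time
            return np.sum(diff)
        elif p == np.inf:
            # Chebyshev distance
            return np.max(diff)
        else:
            # For p < 1 the result is not a true metric (the triangle inequality can fail)
            return np.sum(diff ** p) ** (1 / p)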

    def find_outliers(self, data: np.ndarray,
                      threshold: float = 3.0,
                      method: str = 'mad') -> np.ndarray:
        """
        Detect outliers using Manhattan distance

        Args:
            data: data matrix
            threshold: outlier threshold
            method: outlier detection method, 'mad' or 'iqr'

        Returns:
            array of outlier indices
        """
        if method == 'mad':
            # Median/MAD-based method
            median = np.median(data, axis=0)
            # Manhattan distance from each point to the per-feature median
            distances = self.batch_distances(median, data)
            # Median and MAD of those distances
            median_dist = np.median(distances)
            mad_dist = median_abs_deviation(distances, scale='normal')
            # Flag outliers
            outlier_mask = distances > (median_dist + threshold * mad_dist)
        elif method == 'iqr':
            # Quartile-based method
            q1 = np.percentile(data, 25, axis=0)
            q3 = np.percentile(data, 75, axis=0)
            # Distance from each point to the Q1 and Q3 vectors
            distances_to_q1 = self.batch_distances(q1, data)
            distances_to_q3 = self.batch_distances(q3, data)
            # Combined distance
            combined_distances = 0.5 * distances_to_q1 + 0.5 * distances_to_q3
            # IQR of the combined distances
            q1_dist = np.percentile(combined_distances, 25)
            q3_dist = np.percentile(combined_distances, 75)
            iqr_dist = q3_dist - q1_dist
            # Flag outliers
            outlier_mask = combined_distances > (q3_dist + threshold * iqr_dist)
        else:
            raise ValueError(f"Unsupported outlier detection method: {method}")

        return np.where(outlier_mask)[0]

    def distance_to_similarity(self, distance: float,
                               method: str = 'exponential',
                               alpha: float = 1.0) -> float:
        """
        Convert a Manhattan distance to a similarity

        Args:
            distance: Manhattan distance
            method: conversion method: 'exponential', 'reciprocal' or 'linear'
            alpha: decay parameter (used by 'exponential' and 'reciprocal')

        Returns:
            similarity value (between 0 and 1)
        """
        if method == 'exponential':
            # Exponential decay
            return np.exp(-alpha * distance)
        elif method == 'reciprocal':
            # Reciprocal decay
            return 1.0 / (1.0 + alpha * distance)
        elif method == 'linear':
            # Linear decay, clipped at 0 beyond an assumed maximum distance
            max_distance = 10.0  # example value; adjust to your data
            return max(0.0, 1.0 - distance / max_distance)
        else:
            raise ValueError(f"Unsupported conversion method: {method}")

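# Comprehensive usage example
def manhattan_distance_comprehensive_demo():
    """Comprehensive Manhattan distance demo"""
    print("=" * 60)
    print("Comprehensive Manhattan distance example")
    print("=" * 60)

    # Calculators with different normalization settings
    calculators = {
        'none': ManhattanDistanceCalculator(normalize='none'),
        'minmax': ManhattanDistanceCalculator(normalize='minmax'),
        'standard': ManhattanDistanceCalculator(normalize='standard'),
        'robust': ManhattanDistanceCalculator(normalize='robust')
    }

    # 1. Basic computation and the effect of normalization
    print("\n1. Basic computation and the effect of normalization:")

    # Test data whose features have different scales
    np.random.seed(42)
    data = np.column_stack([
        np.random.randn(100) * 100,  # large scale
        np.random.randn(100) * 1,    # small scale
        np.random.randn(100) * 10    # medium scale
    ])

    # Test points
    point_a = np.array([0, 0, 0])
    point_b = np.array([1, 1, 1])

    print("Test data: 3 features with scales 100, 1 and 10")
    print(f"Point A: {point_a}")
    print(f"Point B: {point_b}")

    print("\nManhattan distance under different normalization methods:")
    for norm_type, calculator in calculators.items():
        calculator.fit(data)
        distance = calculator.compute_distance(point_a, point_b)
        print(f"  {norm_type:10s}: {distance:.4f}")

    print("\nNote: without normalization every feature is measured in raw units, so for points drawn "
          "from this data the large-scale feature dominates; after normalization each difference is "
          "measured relative to that feature's spread, which balances the contributions")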

    # 2. Weighted distance example
    print("\n2. Weighted Manhattan distance:")
    calculator = ManhattanDistanceCalculator(normalize='standard')
    calculator.fit(data)

    # Different weight configurations
    weights_configs = {
        'Uniform weights': np.array([1.0, 1.0, 1.0]),
        'Feature 1 important': np.array([3.0, 1.0, 1.0]),
        'Feature 2 important': np.array([1.0, 3.0, 1.0]),
        'Feature 3 important': np.array([1.0, 1.0, 3.0])
    }

    print(f"Point A: {point_a}, Point B: {point_b}")
    for weight_name, weights in weights_configs.items():
        distance = calculator.weighted_distance(point_a, point_b, weights)
        print(f"  {weight_name:20s}: weights {weights}, distance={distance:.4f}")

    print("\nNote: weighting lets the distance reflect how important each feature is")

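    # 3. Comparing Minkowski distances
    print("\n3. Minkowski distance comparison (effect of p):")

    # Test several values of p
    p_values = [0.5, 1.0, 1.5, 2.0, 3.0, np.inf]
    print(f"Point A: {point_a}")
    print(f"Point B: {point_b}")

    print("\nMinkowski distance for different values of p:")
    for p in p_values:
        distance = calculator.minkowski_distance(point_a, point_b, p)
        print(f"  p={p:5}: {distance:.4f}", end="")
        if p == 1:
            print(" (Manhattan distance)")
        elif p == 2:
            print(" (Euclidean distance)")
        elif p == np.inf:
            print(" (Chebyshev distance)")
        else:
            print()

    print("\nNote: the smaller p is, the more weight small differences get; the larger p is, "
          "the more the largest differences dominate (p=inf keeps only the largest)")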

    # 4. Outlier detection application
    print("\n4. Outlier detection:")

    # Data containing outliers
    np.random.seed(42)
    n_normal = 95
    n_outliers = 5

    # Normal data
    normal_data = np.random.multivariate_normal(
        mean=[0, 0],
        cov=[[1, 0.3], [0.3, 1]],
        size=n_normal
    )

    # Outliers
    outliers = np.random.multivariate_normal(
        mean=[5, 5],
        cov=[[2, 0], [0, 2]],
        size=n_outliers
    )

    data_with_outliers = np.vstack([normal_data, outliers])

    # Detect outliers with different methods
    calculator_robust = ManhattanDistanceCalculator(normalize='robust')
    calculator_robust.fit(data_with_outliers)
    methods = ['mad', 'iqr']
    threshold = 3.0

    print(f"Data: {n_normal} normal points + {n_outliers} outliers")
    for method in methods:
        outlier_indices = calculator_robust.find_outliers(
            data_with_outliers, threshold=threshold, method=method
        )

        # Evaluate detection quality
        true_outlier_indices = np.arange(n_normal, n_normal + n_outliers)
        detected = len(outlier_indices)
        true_detected = len(np.intersect1d(outlier_indices, true_outlier_indices))
        false_positives = len(np.setdiff1d(outlier_indices, true_outlier_indices))

        print(f"\n  {method.upper()} method results:")
        print(f"    Detected {detected} outliers")
        print(f"    Correctly detected {true_detected}/{n_outliers} true outliers")
        print(f"    False positives: {false_positives}")
        # Share of the true outliers that were found
        print(f"    Recall: {true_detected / n_outliers * 100:.1f}%")
        # Share of the flagged points that are true outliers
        print(f"    Precision: {(true_detected / detected * 100 if detected > 0 else 0):.1f}%")

    # 5. City navigation application
    print("\n5. City navigation and route planning:")

    class CityNavigationSystem:
        """City navigation system on a grid where only horizontal and vertical moves are allowed"""

        def __init__(self, grid_size: Tuple[int, int] = (10, 10)):
            self.grid_size = grid_size
            self.obstacles = set()
            self.points_of_interest = {}

        def add_obstacle(self, x: int, y: int):
            """Add an obstacle"""
            if 0 <= x < self.grid_size[0] and 0 <= y < self.grid_size[1]:
                self.obstacles.add((x, y))

        def add_point_of_interest(self, name: str, x: int, y: int, poi_type: str):
            """Add a point of interest"""
            if 0 <= x < self.grid_size[0] and 0 <= y < self.grid_size[1]:
                self.points_of_interest[name] = {
                    'position': (x, y),
                    'type': poi_type
                }

        def manhattan_path(self, start: Tuple[int, int], end: Tuple[int, int],
                           avoid_obstacles: bool = True) -> Tuple[List[Tuple[int, int]], int]:
            """
            Compute a shortest grid path made of horizontal and vertical unit moves

            Breadth-first search always terminates and returns a shortest path. Without
            obstacles its length equals the Manhattan distance |x1 - x2| + |y1 - y2|;
            obstacles can only make it longer.

            Args:
                start: start coordinates (x, y)
                end: end coordinates (x, y)
                avoid_obstacles: whether to avoid obstacles

            Returns:
                (list of path coordinates, total distance), or ([], -1) if end is unreachable
            """
            from collections import deque

            blocked = self.obstacles if avoid_obstacles else set()
            previous = {start: None}  # visited cell -> the cell it was reached from
            queue = deque([start])
            while queue:
                current = queue.popleft()
                if current == end:
                    break
                x, y = current
                for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    in_grid = 0 <= nxt[0] < self.grid_size[0] and 0 <= nxt[1] < self.grid_size[1]
                    if in_grid and nxt not in blocked and nxt not in previous:
                        previous[nxt] = current
                        queue.append(nxt)

            if end not in previous:
                return [], -1

            # Walk back from the end to rebuild the path
            path = []
            node = end
            while node is not None:
                path.append(node)
                node = previous[node]
            path.reverse()
            return path, len(path) - 1

        def find_nearest_poi(self, start: Tuple[int, int], poi_type: str = None) -> Dict[str, Any]:
            """Find the nearest point of interest (by path length)"""
            nearest = None
            min_distance = float('inf')
            nearest_path = None
            for name, poi_info in self.points_of_interest.items():
                if poi_type is not None and poi_info['type'] != poi_type:
                    continue
                poi_pos = poi_info['position']
                path, distance = self.manhattan_path(start, poi_pos)
                if distance < 0:
                    continue  # unreachable
                if distance < min_distance:
                    min_distance = distance
                    nearest = name
                    nearest_path = path
            if nearest is None:
                return None
            return {
                'name': nearest,
                'type': self.points_of_interest[nearest]['type'],
                'position': self.points_of_interest[nearest]['position'],
                'distance': min_distance,
                'path': nearest_path
            }

        def visualize_grid(self, path: List[Tuple[int, int]] = None):
            """Visualize the grid (rows are x, columns are y)"""
            grid = [['.' for _ in range(self.grid_size[1])] for _ in range(self.grid_size[0])]
            # Mark obstacles
            for (x, y) in self.obstacles:
                if 0 <= x < self.grid_size[0] and 0 <= y < self.grid_size[1]:
                    grid[x][y] = '#'
            # Mark points of interest with the first letter of their type
            # (a type starting with S or E can be confused with the start/end markers)
            for name, poi_info in self.points_of_interest.items():
                x, y = poi_info['position']
                if 0 <= x < self.grid_size[0] and 0 <= y < self.grid_size[1]:
                    grid[x][y] = poi_info['type'][0].upper()
            # Mark the path
            if path:
                for i, (x, y) in enumerate(path):
                    if 0 <= x < self.grid_size[0] and 0 <= y < self.grid_size[1]:
                        if i == 0:
                            grid[x][y] = 'S'  # start
                        elif i == len(path) - 1:
                            grid[x][y] = 'E'  # end
                        elif grid[x][y] == '.':
                            grid[x][y] = '*'  # path cell
            # Print the grid
            print("   " + " ".join(str(i) for i in range(self.grid_size[1])))
            for i, row in enumerate(grid):
                print(f"{i:2} " + " ".join(row))
            # Legend
            print("\nLegend: S=start, E=end, *=path, #=obstacle")
            for poi_type in set(poi['type'][0].upper() for poi in self.points_of_interest.values()):
                for name, poi_info in self.points_of_interest.items():
                    if poi_info['type'][0].upper() == poi_type:
                        print(f"  {poi_type}={poi_info['type']} ({name})")
                        break

    # Create the city navigation system
    print("Creating city navigation system (10x10 grid)...")
    city = CityNavigationSystem(grid_size=(10, 10))

    # Add obstacles
    for i in range(3, 7):
        city.add_obstacle(i, 5)

    # Add points of interest
    city.add_point_of_interest("Central Park", 2, 2, "park")
    city.add_point_of_interest("Train Station", 8, 1, "station")
    city.add_point_of_interest("Shopping Mall", 5, 8, "mall")
    city.add_point_of_interest("Hospital", 1, 9, "hospital")
    city.add_point_of_interest("Restaurant", 9, 9, "restaurant")

    # Set the start and end points
    start_point = (0, 0)
    end_point = (9, 9)

    # Compute the path
    path, distance = city.manhattan_path(start_point, end_point, avoid_obstacles=True)
    manhattan_dist = abs(end_point[0] - start_point[0]) + abs(end_point[1] - start_point[1])

    print(f"\nFrom start {start_point} to end {end_point}:")
    print(f"  Manhattan distance (ignoring obstacles): {manhattan_dist} units")
    print(f"  Path length: {distance} steps ({len(path)} grid cells including the start)")

    # Visualize
    print("\nGrid visualization:")
    city.visualize_grid(path)

    # Find the nearest point of interest
    print(f"\nNearest point of interest from start {start_point}:")
    nearest_poi = city.find_nearest_poi(start_point)
    if nearest_poi:
        print(f"  Nearest point of interest: {nearest_poi['name']} ({nearest_poi['type']})")
        print(f"  Position: {nearest_poi['position']}")
        print(f"  Distance: {nearest_poi['distance']} units")
        # Visualize the path to the nearest point of interest
        print(f"\nPath to {nearest_poi['name']}:")
        city.visualize_grid(nearest_poi['path'])

    # 6. Distance-to-similarity conversion
    print("\n6. Converting Manhattan distance to similarity:")
    test_distances = [0, 1, 2, 5, 10, 20]
    conversion_methods = ['exponential', 'reciprocal', 'linear']

    # The 'linear' method uses the max_distance of 10.0 fixed inside distance_to_similarity
    print(f"{'Distance':>8} | {'Exp(α=0.5)':>12} | {'Recip(α=0.5)':>12} | {'Linear(max=10)':>14}")
    print("-" * 60)
    calculator = ManhattanDistanceCalculator(normalize='none')
    for d in test_distances:
        similarities = []
        for method in conversion_methods:
            if method in ('exponential', 'reciprocal'):
                sim = calculator.distance_to_similarity(d, method=method, alpha=0.5)
            else:  # linear (alpha is not used)
                sim = calculator.distance_to_similarity(d, method=method)
            similarities.append(sim)
        print(f"{d:8} | {similarities[0]:12.4f} | {similarities[1]:12.4f} | "
              f"{similarities[2]:14.4f}")

    print("\nNotes:")
    print("  - Exponential decay: very sensitive at short distances, decays quickly at long distances")
    print("  - Reciprocal decay: decays more slowly, suited to medium distances")
    print("  - Linear decay: simple and direct, but requires knowing the maximum distance")

    # 7. Performance comparison: Manhattan vs Euclidean distance
    # (EuclideanDistanceCalculator is the class from the Euclidean distance section above)
    print("\n7. Performance comparison: Manhattan vs Euclidean distance")

    # Generate test data
    np.random.seed(42)
    n_samples = 10000
    n_features = 100
    test_data = np.random.randn(n_samples, n_features)
    test_query = np.random.randn(n_features)

    # Manhattan distance
    manhattan_calc = ManhattanDistanceCalculator(normalize='standard')
    manhattan_calc.fit(test_data)
    start_time = time.perf_counter()
    manhattan_distances = manhattan_calc.batch_distances(test_query, test_data, use_cdist=True)
    manhattan_time = time.perf_counter() - start_time

    # Euclidean distance
    euclidean_calc = EuclideanDistanceCalculator(standardize=True, use_kdtree=False)
    euclidean_calc.fit(test_data)
    start_time = time.perf_counter()
    euclidean_distances = euclidean_calc.batch_distances(test_query, test_data)
    euclidean_time = time.perf_counter() - start_time

    print(f"Test configuration: {n_samples} samples, {n_features} features")
    print(f"Manhattan distance time: {manhattan_time*1000:.2f} ms")
    print(f"Euclidean distance time: {euclidean_time*1000:.2f} ms")
    # Guard against a zero timing on very fast runs
    print(f"Manhattan / Euclidean time ratio: {manhattan_time / max(euclidean_time, 1e-9):.2f}")

    # Compare the distance distributions
    print("\nDistance statistics:")
    print(f"  Manhattan distance - mean: {np.mean(manhattan_distances):.2f}, "
          f"std: {np.std(manhattan_distances):.2f}")
    print(f"  Euclidean distance - mean: {np.mean(euclidean_distances):.2f}, "
          f"std: {np.std(euclidean_distances):.2f}")

    # Correlation between the two distances
    correlation = np.corrcoef(manhattan_distances, euclidean_distances)[0, 1]
    print(f"  Correlation between the two distances: {correlation:.4f}")

    return calculators['standard']


if __name__ == "__main__":
    manhattan_distance_comprehensive_demo()

4. Approximate Nearest Neighbor Search Algorithms

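4.1 Hierarchical Navigable Small World Graphs (HNSW)

4.1.1 Working Principle

HNSW is a graph-based approximate nearest neighbor search algorithm that achieves efficient similarity search by building a multi-layer graph. Its core idea mirrors the "six degrees of separation" observed in human social networks. It builds a small-world network in which any node can be reached from any other in a small number of hops.

Algorithm architecture:

- Layered structure:
  - The bottom layer (layer 0) contains all data points
  - Each upper layer contains a sparse subset of the layer below, selected probabilistically
  - The top layer is the sparsest graph and is used to quickly locate the rough region of the query
- Search process:
  - Start from the entry point in the top layer and move toward the point nearest the query
  - In the current layer, run a greedy search to find the local nearest neighbor
  - Use that point as the entry point of the next layer down, and repeat until the bottom layer is reached
  - In the bottom layer, run a finer search (with a candidate list of size efSearch) to find the final nearest neighbors
- Graph construction:
  - Assign each new point a random maximum layer (drawn from an exponentially decaying distribution)
  - Starting from the top layer, descend layer by layer to find the point's nearest neighbors
  - In each layer, connect the new point to the nearest neighbors found
  - Limit the number of connections per point so the graph does not become too dense
- Key parameters:
  - M: maximum number of connections per node; controls graph density
  - efConstruction: size of the dynamic candidate list during index construction
  - efSearch: size of the dynamic candidate list during search
  - levelMult: layer sparsity multiplier (commonly 1/ln(M))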
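The "exponentially decaying" level assignment can be sketched in a few lines. The sketch assumes the common choice mL = 1/ln(M) for levelMult, the rule given in the HNSW paper and used by hnswlib. A node's top layer is then ⌊-ln(U)·mL⌋ with U uniform on (0, 1], so the probability of reaching layer l or higher is M^(-l). In other words, each layer keeps roughly 1/M of the nodes of the layer below. The function name `sample_level` is hypothetical.

```python
import math
import random


def sample_level(m: int, rng: random.Random) -> int:
    """Draw a node's top layer as floor(-ln(U) * mL), with mL = 1/ln(M)."""
    ml = 1.0 / math.log(m)
    u = 1.0 - rng.random()  # uniform on (0, 1], so log(u) is always defined
    return int(-math.log(u) * ml)


rng = random.Random(42)
M = 16
levels = [sample_level(M, rng) for _ in range(100_000)]

# A node whose top layer is L appears in layers 0..L, so count nodes with level >= layer
max_level = max(levels)
at_least = [sum(1 for lv in levels if lv >= layer) for layer in range(max_level + 1)]
for layer, count in enumerate(at_least):
    print(f"layer {layer}: {count} nodes")

# Each layer should keep roughly 1/M of the layer below (about 0.0625 for M = 16)
print(f"layer 1 / layer 0 = {at_least[1] / at_least[0]:.4f}")
```

This shows why the upper layers are sparse enough to act as "express lanes". It also shows why a larger M produces fewer layers.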

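4.1.2 Advantages and Disadvantages

Advantages:

- High search efficiency: query time grows close to O(log n) in practice, which suits large-scale data
- High recall: with suitably tuned parameters, recall above 95% is typical
- Dynamic updates: new vectors can be inserted incrementally; deletion support depends on the implementation (often vectors are only marked as deleted)
- Memory efficiency: memory use is reasonable compared with other graph indexes
- Easy to parallelize: independent queries can be searched in parallel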
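Recall figures such as the "above 95%" mentioned above are normally measured as recall@k. Recall@k is the fraction of the true k nearest neighbors, found by an exact brute-force search, that the approximate index returns. Below is a minimal NumPy sketch. `exact_knn` and `recall_at_k` are illustrative helper names. The "approximate" result is simulated by corrupting one true neighbor per query rather than produced by a real HNSW index.

```python
import numpy as np


def exact_knn(data: np.ndarray, queries: np.ndarray, k: int) -> np.ndarray:
    """Brute-force k nearest neighbors by squared L2 distance (the ground truth)."""
    # ||q - x||^2 = ||q||^2 - 2 q.x + ||x||^2, computed for all query/data pairs at once
    d2 = (np.sum(queries ** 2, axis=1)[:, None]
          - 2.0 * queries @ data.T
          + np.sum(data ** 2, axis=1)[None, :])
    return np.argsort(d2, axis=1)[:, :k]


def recall_at_k(approx_ids: np.ndarray, true_ids: np.ndarray) -> float:
    """Average fraction of the true neighbors that appear in the approximate results."""
    k = true_ids.shape[1]
    hits = [len(set(a) & set(t)) for a, t in zip(approx_ids, true_ids)]
    return float(np.mean(hits)) / k


rng = np.random.default_rng(0)
data = rng.normal(size=(2000, 32)).astype(np.float32)
queries = rng.normal(size=(10, 32)).astype(np.float32)
truth = exact_knn(data, queries, k=10)

# Simulated ANN output: keep 9 true neighbors per query and replace the last with an invalid ID
approx = truth.copy()
approx[:, -1] = -1
print(f"recall@10 = {recall_at_k(approx, truth):.2f}")  # recall@10 = 0.90
```

In practice, `approx` would be the ID matrix returned by the index's search call for the same queries.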

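Disadvantages:

- Slow index construction: building a high-quality index takes a long time
- Memory footprint: the graph structure must be stored in addition to the vectors, so the memory overhead is significant
- Parameter sensitivity: performance is sensitive to the choice of M, efConstruction and efSearch and needs tuning
- Construction is harder to parallelize than search: each insertion updates shared neighbor lists, so parallel builds need synchronization (hnswlib, for example, supports multithreaded insertion)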
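Much of the parameter sensitivity comes from efSearch, the size of the candidate list kept by the per-layer best-first search. The sketch below implements that search on a single layer, as a simplified version of the SEARCH-LAYER procedure described in the HNSW paper. The toy graph (k nearest neighbors plus ring links to keep it connected) and all function names are assumptions for illustration, not FAISS internals. A larger `ef` visits more nodes, trading speed for a better chance of finding the true nearest neighbor.

```python
import heapq

import numpy as np


def search_layer(vectors, neighbors, entry, query, ef):
    """Best-first search on one graph layer that keeps the ef closest nodes found.

    Returns (list of (distance, node) sorted by distance, number of visited nodes).
    """
    def dist(i):
        return float(np.linalg.norm(vectors[i] - query))

    d0 = dist(entry)
    visited = {entry}
    candidates = [(d0, entry)]  # min-heap: closest unexpanded node first
    results = [(-d0, entry)]    # max-heap via negation: farthest kept result on top
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -results[0][0]:
            break  # the closest candidate is farther than the worst kept result
        for nb in neighbors[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = dist(nb)
            if len(results) < ef or dn < -results[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(results, (-dn, nb))
                if len(results) > ef:
                    heapq.heappop(results)  # evict the farthest kept result
    ranked = sorted((-neg_d, i) for neg_d, i in results)
    return ranked, len(visited)


rng = np.random.default_rng(1)
n, k_graph = 500, 6
vectors = rng.normal(size=(n, 8))
# Toy single-layer graph (illustrative): k nearest neighbors plus ring links for connectivity
pairwise = np.linalg.norm(vectors[:, None, :] - vectors[None, :, :], axis=2)
neighbors = []
for i in range(n):
    knn = [int(j) for j in np.argsort(pairwise[i])[1:k_graph + 1]]
    neighbors.append(sorted(set(knn) | {(i - 1) % n, (i + 1) % n}))

query = rng.normal(size=8)
true_nn = int(np.argmin(np.linalg.norm(vectors - query, axis=1)))
for ef in (1, 10, 50):
    ranked, n_visited = search_layer(vectors, neighbors, entry=0, query=query, ef=ef)
    print(f"ef={ef:>2}: best={ranked[0][1]} (exact {true_nn}), visited {n_visited} of {n} nodes")
```

With `ef=1` the procedure reduces to a greedy walk that can stop at a local minimum. With `ef` equal to the number of nodes on a connected graph, it degenerates into an exhaustive search.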

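4.1.3 Use Cases

HNSW is suitable for:

- Real-time retrieval in large-scale vector databases
- Similarity search applications that require high recall
- Datasets that are updated dynamically
- Vector spaces of moderate dimensionality (typically < 1000)
- Production environments with strict search-speed requirements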

4.1.4 Python Code Example

python

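import numpy as np
import faiss
import time
from typing import List, Tuple, Dict, Any, Optional
import heapq
from dataclasses import dataclass


@dataclass
class HNSWConfig:
    """HNSW configuration parameters"""
    M: int = 16                         # number of connections per node
    ef_construction: int = 200          # dynamic candidate list size during construction
    ef_search: int = 100                # dynamic candidate list size during search
    level_mult: Optional[float] = None  # layer sparsity multiplier; defaults to 1/ln(M)
    metric: str = 'cosine'              # metric: 'cosine', 'l2' or 'ip'

    def __post_init__(self):
        # Derive the default from this instance's M (a class-level default would always use M=16).
        # FAISS sets its own level distribution from M, so this value is informational here.
        if self.level_mult is None:
            self.level_mult = 1.0 / np.log(self.M)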

class HNSWIndex:
    """Advanced HNSW index wrapper built on FAISS"""

    def __init__(self, dimension: int, config: HNSWConfig = None):
        """
        Initialize the HNSW index

        Args:
            dimension: vector dimension
            config: HNSW configuration
        """
        self.dimension = dimension
        self.config = config or HNSWConfig()

        # Internal state
        self.index = None
        self.normalized_vectors = None
        self.vector_ids = []   # vector IDs in insertion order
        self.id_to_index = {}  # map from ID to internal index
        self.next_internal_id = 0

        # Performance statistics
        self.stats = {
            'build_time': 0,
            'search_times': [],
            'n_vectors': 0,
            'memory_usage': 0
        }

        self._init_index()

    def _init_index(self):
        """Initialize the FAISS index"""
        if self.config.metric == 'cosine':
            # Cosine similarity equals the inner product of L2-normalized vectors
            self.index = faiss.IndexHNSWFlat(self.dimension, self.config.M,
                                             faiss.METRIC_INNER_PRODUCT)
        elif self.config.metric == 'l2':
            self.index = faiss.IndexHNSWFlat(self.dimension, self.config.M)
        elif self.config.metric == 'ip':
            self.index = faiss.IndexHNSWFlat(self.dimension, self.config.M,
                                             faiss.METRIC_INNER_PRODUCT)
        else:
            raise ValueError(f"Unsupported metric: {self.config.metric}")

        # Set the HNSW parameters
        self.index.hnsw.efConstruction = self.config.ef_construction
        self.index.hnsw.efSearch = self.config.ef_search

        # Keep the normalized vectors (for cosine similarity) in one 2-D array,
        # so that add() and add_batch() can both append with np.vstack
        if self.config.metric == 'cosine':
            self.normalized_vectors = np.empty((0, self.dimension), dtype=np.float32)

    def _normalize_vector(self, vector: np.ndarray) -> np.ndarray:
        """Normalize a vector (for cosine similarity)"""
        norm = np.linalg.norm(vector)
        if norm == 0:
            return vector
        return vector / norm

    def add(self, vector: np.ndarray, vector_id: str = None):
        """
        Add a vector to the index

        Args:
            vector: the vector
            vector_id: vector ID; generated automatically if None
        """
        if vector_id is None:
            vector_id = str(self.next_internal_id)
        if vector_id in self.id_to_index:
            raise ValueError(f"Vector ID '{vector_id}' already exists")

        # Record the ID mapping
        self.id_to_index[vector_id] = self.next_internal_id
        self.vector_ids.append(vector_id)

        # Process the vector
        vector = vector.astype('float32').reshape(1, -1)
        if self.config.metric == 'cosine':
            vector_norm = self._normalize_vector(vector.flatten()).reshape(1, -1)
            self.normalized_vectors = np.vstack([self.normalized_vectors, vector_norm])
            self.index.add(vector_norm)
        else:
            self.index.add(vector)

        self.next_internal_id += 1
        self.stats['n_vectors'] += 1

    def add_batch(self, vectors: np.ndarray, vector_ids: List[str] = None):
        """
        Add vectors in a batch

        Args:
            vectors: vector matrix
            vector_ids: list of vector IDs
        """
        n_vectors = vectors.shape[0]
        if vector_ids is None:
            vector_ids = [str(self.next_internal_id + i) for i in range(n_vectors)]
        elif len(vector_ids) != n_vectors:
            raise ValueError(f"Number of vector IDs ({len(vector_ids)}) does not match number of vectors ({n_vectors})")

        # Check for IDs that already exist
        for vid in vector_ids:
            if vid in self.id_to_index:
                raise ValueError(f"Vector ID '{vid}' already exists")

        start_time = time.time()

        # Process the vectors
        vectors = vectors.astype('float32')
        if self.config.metric == 'cosine':
            # Normalize all vectors
            norms = np.linalg.norm(vectors, axis=1, keepdims=True)
            norms[norms == 0] = 1.0
            vectors_norm = vectors / norms
            # Add to the index
            self.index.add(vectors_norm)
            # Keep the normalized vectors
            self.normalized_vectors = np.vstack([self.normalized_vectors, vectors_norm])
        else:
            self.index.add(vectors)

        # Update the ID mapping
        for i, vid in enumerate(vector_ids):
            self.id_to_index[vid] = self.next_internal_id + i
            self.vector_ids.append(vid)
        self.next_internal_id += n_vectors
        self.stats['n_vectors'] += n_vectors

        batch_time = time.time() - start_time
        if 'batch_times' not in self.stats:
            self.stats['batch_times'] = []
        self.stats['batch_times'].append(batch_time)

    def search(self, query: np.ndarray, k: int = 10,
               ef_search: int = None) -> Tuple[List[str], np.ndarray]:
        """
        Search for similar vectors

        Args:
            query: query vector
            k: number of nearest neighbors to return
            ef_search: dynamic candidate list size for this search (overrides the config)

        Returns:
            (list of vector IDs, array of distances/similarities). For 'l2', FAISS returns
            squared Euclidean distances; for 'cosine' and 'ip' it returns inner products
            (higher means more similar).
        """
        if ef_search is not None:
            original_ef = self.index.hnsw.efSearch
            self.index.hnsw.efSearch = ef_search

        try:
            # Preprocess the query vector
            query = query.astype('float32').reshape(1, -1)
            if self.config.metric == 'cosine':
                query_norm = self._normalize_vector(query.flatten())
                query = query_norm.reshape(1, -1)

            # Run the search
            start_time = time.time()
            distances, indices = self.index.search(query, k)
            search_time = time.time() - start_time

            # Record performance
            self.stats['search_times'].append(search_time)

            # Map internal indices to vector IDs. FAISS pads missing results with -1,
            # and vectors marked as removed have a None ID
            result_ids = []
            valid_distances = []
            for i, idx in enumerate(indices[0]):
                if 0 <= idx < len(self.vector_ids) and self.vector_ids[idx] is not None:
                    result_ids.append(self.vector_ids[idx])
                    valid_distances.append(distances[0][i])
            return result_ids, np.array(valid_distances)
        finally:
            if ef_search is not None:
                self.index.hnsw.efSearch = original_ef

    def batch_search(self, queries: np.ndarray, k: int = 10,
                     ef_search: int = None) -> Tuple[List[List[str]], List[np.ndarray]]:
        """
        Batch search

        Args:
            queries: matrix of query vectors
            k: number of nearest neighbors to return
            ef_search: dynamic candidate list size for this search (overrides the config)

        Returns:
            (list of vector-ID lists, list of distance/similarity arrays)
        """
        if ef_search is not None:
            original_ef = self.index.hnsw.efSearch
            self.index.hnsw.efSearch = ef_search

        try:
            # Preprocess the query vectors
            queries = queries.astype('float32')
            if self.config.metric == 'cosine':
                # Normalize all query vectors
                norms = np.linalg.norm(queries, axis=1, keepdims=True)
                norms[norms == 0] = 1.0
                queries = queries / norms

            # Run the batch search
            start_time = time.time()
            distances, indices = self.index.search(queries, k)
            batch_search_time = time.time() - start_time

            # Record performance
            if 'batch_search_times' not in self.stats:
                self.stats['batch_search_times'] = []
            self.stats['batch_search_times'].append(batch_search_time)

            # Map internal indices to vector IDs, skipping padding (-1) and removed vectors
            all_result_ids = []
            all_distances = []
            for i in range(len(queries)):
                query_result_ids = []
                query_distances = []
                for j in range(k):
                    idx = indices[i][j]
                    if 0 <= idx < len(self.vector_ids) and self.vector_ids[idx] is not None:
                        query_result_ids.append(self.vector_ids[idx])
                        query_distances.append(distances[i][j])
                all_result_ids.append(query_result_ids)
                all_distances.append(np.array(query_distances))
            return all_result_ids, all_distances
        finally:
            if ef_search is not None:
                self.index.hnsw.efSearch = original_ef

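    def get_vector(self, vector_id: str) -> np.ndarray:
        """Get a vector by ID (for cosine indexes this is the normalized copy)"""
        if vector_id not in self.id_to_index:
            raise ValueError(f"Vector ID '{vector_id}' does not exist")
        idx = self.id_to_index[vector_id]
        if self.config.metric == 'cosine' and self.normalized_vectors is not None:
            if idx < len(self.normalized_vectors):
                return self.normalized_vectors[idx].copy()
        # IndexHNSWFlat keeps the vectors in flat storage, so FAISS can reconstruct them by index
        return self.index.reconstruct(int(idx))

    def remove(self, vector_id: str):
        """Remove a vector from the index (soft delete)"""
        if vector_id not in self.id_to_index:
            raise ValueError(f"Vector ID '{vector_id}' does not exist")
        # FAISS HNSW indexes do not support direct deletion; a real application needs to
        # rebuild the index or use another strategy. Here the vector is only marked as deleted
        idx = self.id_to_index[vector_id]
        self.vector_ids[idx] = None  # mark as deleted
        del self.id_to_index[vector_id]
        # In a real application the index may need to be rebuilt periodically
        print(f"Warning: the HNSW index does not support direct deletion; vector '{vector_id}' has been marked as deleted")
        print("  Consider rebuilding the index periodically or using an index type that supports deletion")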

    def optimize_parameters(self, validation_queries: np.ndarray,
                            validation_ground_truth: List[List[str]],
                            k: int = 10,
                            ef_candidates: List[int] = None,
                            M_candidates: List[int] = None) -> Dict[str, Any]:
        """
        Tune HNSW parameters (grid-search skeleton with simulated scores)

        Args:
            validation_queries: validation query vectors
            validation_ground_truth: ground-truth results (list of nearest-neighbor IDs per query)
            k: number of nearest neighbors to search
            ef_candidates: candidate values for ef_search
            M_candidates: candidate values for M

        Returns:
            tuning results
        """
        if ef_candidates is None:
            ef_candidates = [50, 100, 200, 400]
        if M_candidates is None:
            M_candidates = [8, 16, 32, 64]

        best_params = None
        best_score = -1
        results = []

        print("Starting HNSW parameter tuning...")
        print(f"  Validation queries: {len(validation_queries)}")
        print(f"  Parameter grid: M={M_candidates}, ef_search={ef_candidates}")

        for M in M_candidates:
            for ef in ef_candidates:
                # Create a temporary index for testing
                temp_config = HNSWConfig(
                    M=M,
                    ef_construction=200,  # construction parameter held fixed
                    ef_search=ef,
                    metric=self.config.metric
                )
                temp_index = HNSWIndex(self.dimension, temp_config)

                # Filling temp_index needs the original vectors, which this
                # wrapper does not store for every metric
                print(f"  Testing M={M}, ef_search={ef}...")

                # Because of that limitation, the real evaluation is skipped here.
                # A real implementation should:
                # 1. build the temporary index from the training data
                # 2. measure recall@k and throughput on the validation set
                # 3. pick the best parameters

                # Placeholder evaluation: the recall and QPS below are simulated, not measured
                recall = 0.8 + np.random.rand() * 0.15     # simulated recall
                qps = 1000 / (M * 0.5 + ef * 0.1)          # simulated queries per second
                score = recall * 0.7 + (qps / 1000) * 0.3  # combined score

                results.append({
                    'M': M,
                    'ef_search': ef,
                    'recall': recall,
                    'qps': qps,
                    'score': score
                })

                if score > best_score:
                    best_score = score
                    best_params = {'M': M, 'ef_search': ef}

        # Sort by score
        results.sort(key=lambda x: x['score'], reverse=True)

        print("\nParameter tuning results (simulated):")
        for i, res in enumerate(results[:5]):
            print(f"  Rank {i+1}: M={res['M']}, ef_search={res['ef_search']}, "
                  f"recall={res['recall']:.3f}, QPS={res['qps']:.0f}, score={res['score']:.3f}")
        print(f"\nBest parameters: M={best_params['M']}, ef_search={best_params['ef_search']}")

        return {
            'best_params': best_params,
            'best_score': best_score,
            'all_results': results
        }

    def get_stats(self) -> Dict[str, Any]:
        """Return index statistics."""
        stats = self.stats.copy()

        # Search-time statistics (mean / min / max)
        if stats['search_times']:
            stats['avg_search_time'] = np.mean(stats['search_times'])
            stats['min_search_time'] = np.min(stats['search_times'])
            stats['max_search_time'] = np.max(stats['search_times'])
        else:
            stats['avg_search_time'] = 0
            stats['min_search_time'] = 0
            stats['max_search_time'] = 0

        # Average batch-add time
        if 'batch_times' in stats and stats['batch_times']:
            stats['avg_batch_time'] = np.mean(stats['batch_times'])

        # Average batch-search time
        if 'batch_search_times' in stats and stats['batch_search_times']:
            stats['avg_batch_search_time'] = np.mean(stats['batch_search_times'])

        # Estimated memory usage (rough)
        stats['estimated_memory_mb'] = self._estimate_memory_usage()

        return stats

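    def _estimate_memory_usage(self) -> float:
        """Estimate memory usage in MB."""
        # Rough estimate: 4 bytes per component (float32) * dimension * number of vectors
        vector_memory = self.stats['n_vectors'] * self.dimension * 4 / (1024**2)
        # HNSW graph memory (rule of thumb): about 2*M neighbor IDs of 4 bytes each per node
        hnsw_memory = self.stats['n_vectors'] * self.config.M * 4 / (1024**2) * 2
        return vector_memory + hnsw_memory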
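    # Worked example of the estimate above, plugging in the settings used in
    # hnsw_comprehensive_demo (n_vectors=1000, dimension=128, M=16).
    # These numbers come from the formula only; they are not measurements:
    #   vectors: 1000 * 128 * 4 B    = 512,000 B ≈ 0.488 MB
    #   graph:   1000 * 16 * 4 * 2 B = 128,000 B ≈ 0.122 MB
    #   total                                  ≈ 0.610 MB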

    def save(self, filepath: str):
        """Save the index to files."""
        # Save the FAISS index
        faiss.write_index(self.index, filepath + '.faiss')

        # Save the metadata
        import pickle
        metadata = {
            'dimension': self.dimension,
            'config': self.config,
            'vector_ids': self.vector_ids,
            'id_to_index': self.id_to_index,
            'next_internal_id': self.next_internal_id,
            'stats': self.stats
        }
        with open(filepath + '.meta', 'wb') as f:
            pickle.dump(metadata, f)

        # Save the normalized vectors (if present)
        if self.normalized_vectors is not None:
            np.save(filepath + '_vectors.npy', self.normalized_vectors)

        print(f"Index saved to: {filepath}")

    def load(self, filepath: str):
        """Load the index from files."""
        # Load the FAISS index
        self.index = faiss.read_index(filepath + '.faiss')

        # Load the metadata
        import pickle
        with open(filepath + '.meta', 'rb') as f:
            metadata = pickle.load(f)

        self.dimension = metadata['dimension']
        self.config = metadata['config']
        self.vector_ids = metadata['vector_ids']
        self.id_to_index = metadata['id_to_index']
        self.next_internal_id = metadata['next_internal_id']
        self.stats = metadata['stats']

        # Load the normalized vectors (only written by save() when present)
        vectors_file = filepath + '_vectors.npy'
        try:
            self.normalized_vectors = np.load(vectors_file)
        except FileNotFoundError:
            self.normalized_vectors = None

        print(f"Index loaded from {filepath}: {self.stats['n_vectors']} vectors")
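
# Hypothetical helpers, not part of the original implementation: a minimal
# sketch of how the simulated `recall` in the parameter search above could be
# replaced by a real measurement against brute-force ground truth.
# Assumptions: the raw vectors are available as a NumPy array, and approximate
# results are given as row positions in that array. Mapping the IDs returned by
# HNSWIndex.search (of the form "vec_{i}" in the demo) back to positions is left
# to the caller. The names brute_force_topk and recall_at_k are illustrative.
import numpy as np
from typing import Sequence


def brute_force_topk(vectors: np.ndarray, queries: np.ndarray, k: int,
                     metric: str = 'cosine') -> np.ndarray:
    """Exact top-k row positions for each query (ground truth for recall)."""
    if metric == 'cosine':
        # Normalize both sides so that the dot product equals cosine similarity
        v = vectors / np.maximum(np.linalg.norm(vectors, axis=1, keepdims=True), 1e-12)
        q = queries / np.maximum(np.linalg.norm(queries, axis=1, keepdims=True), 1e-12)
        scores = q @ v.T  # higher means more similar
    elif metric == 'l2':
        # Negated squared Euclidean distance, so that higher still means closer
        scores = -((queries[:, None, :] - vectors[None, :, :]) ** 2).sum(axis=2)
    else:
        raise ValueError(f"unsupported metric: {metric}")
    # Sorting the negated scores ascending gives descending similarity
    return np.argsort(-scores, axis=1)[:, :k]


def recall_at_k(approx: Sequence[Sequence[int]], exact: np.ndarray, k: int) -> float:
    """Mean fraction of each exact top-k list that the approximate search returned."""
    hits = [len(set(a[:k]) & {int(x) for x in e[:k]}) for a, e in zip(approx, exact)]
    return float(np.mean(hits)) / k

# With these helpers, the loop body of the parameter search could build the
# temporary index, run the validation queries, and call recall_at_k instead of
# drawing a random recall value.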

# Comprehensive usage example
def hnsw_comprehensive_demo():
    """Comprehensive HNSW demo."""
    print("=" * 60)
    print("HNSW approximate nearest-neighbor search: comprehensive example")
    print("=" * 60)

    # 1. Basic usage
    print("\n1. Basic HNSW usage:")

    # Create the HNSW index configuration
    config = HNSWConfig(
        M=16,
        ef_construction=200,
        ef_search=100,
        metric='cosine'
    )

    # Create the index
    dimension = 128
    hnsw_index = HNSWIndex(dimension, config)

    # Generate test data
    np.random.seed(42)
    n_vectors = 1000
    vectors = np.random.randn(n_vectors, dimension).astype(np.float32)

    # Add the vectors in one batch
    vector_ids = [f"vec_{i}" for i in range(n_vectors)]
    hnsw_index.add_batch(vectors, vector_ids)

    print("Created HNSW index:")
    print(f" Vector dimension: {dimension}")
    print(f" Number of vectors: {n_vectors}")
    print(f" HNSW parameters: M={config.M}, ef_construction={config.ef_construction}")

    # 2. Search
    print("\n2. Search example:")

    # Create a query vector
    query = np.random.randn(dimension).astype(np.float32)

    # Run the search
    k = 5
    result_ids, distances = hnsw_index.search(query, k=k)

    print(f"Query vector dimension: {dimension}")
    print(f"Top-{k} results:")
    for i, (vec_id, dist) in enumerate(zip(result_ids, distances)):
        print(f" Result {i+1}: ID={vec_id}, distance={dist:.4f}")

    # 3. Batch search
    print("\n3. Batch search example:")
    n_queries = 10
    queries = np.random.randn(n_queries, dimension).astype(np.float32)
    all_result_ids, all_distances = hnsw_index.batch_search(queries, k=3)

    print(f"Batch search: {n_queries} queries, Top-3 each")
    print("Results for the first query:")
    for i, (vec_id, dist) in enumerate(zip(all_result_ids[0], all_distances[0])):