Installing Kafka with Docker

I. Preparation

Open Windows PowerShell and run it as Administrator.

Create a folder and change into it:

```powershell
mkdir kafka-docker
cd kafka-docker
```

II. Write the configuration file

在这个目录下面创建一个docker-compose.yml文件,并配置下面内容

javascript

# 无version字段,消除警告

services:

zookeeper:

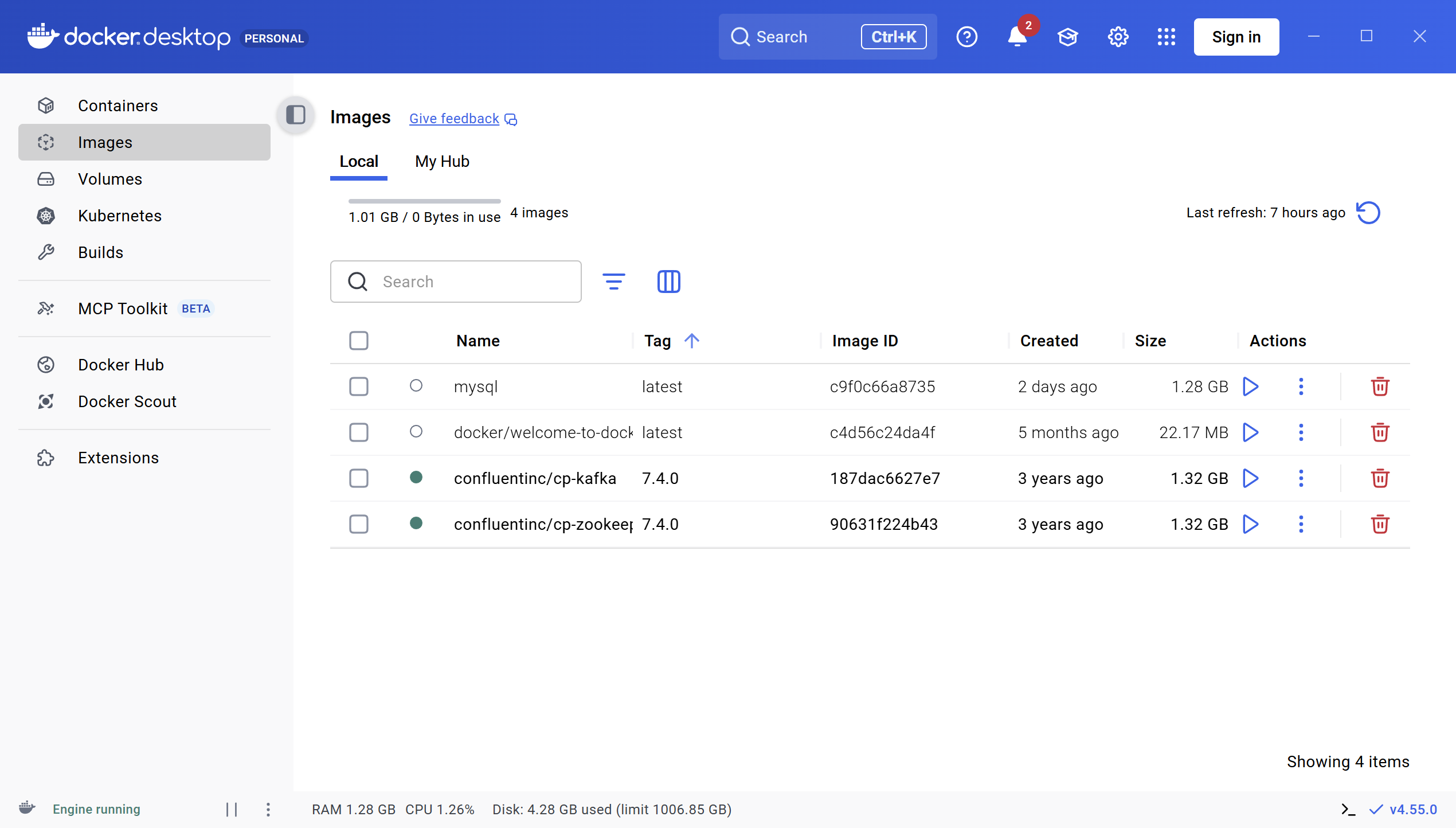

image: confluentinc/cp-zookeeper:7.4.0 # 兼容Manifest V2,适配新版Docker

ports:

- "2181:2181"

environment:

ZOOKEEPER_CLIENT_PORT: 2181

ZOOKEEPER_TICK_TIME: 2000

restart: always

networks:

- kafka-network

kafka:

image: confluentinc/cp-kafka:7.4.0 # 与zookeeper版本匹配,兼容Manifest V2

depends_on:

- zookeeper

ports:

- "9092:9092"

environment:

KAFKA_BROKER_ID: 1

KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181

KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092

KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092

KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1

KAFKA_HEAP_OPTS: "-Xms256M -Xmx256M" # 限制内存,避免Windows资源不足

restart: always

networks:

- kafka-network

networks:

kafka-network:

driver: bridge三、启动 Kafka 服务

-

打开终端 / 命令行,进入

docker-kafka文件夹(配置文件所在目录); -

执行启动命令(后台运行):

启动服务(第一次运行会自动下载镜像,耐心等待)

docker-compose up -d

-

验证服务是否启动成功:

-

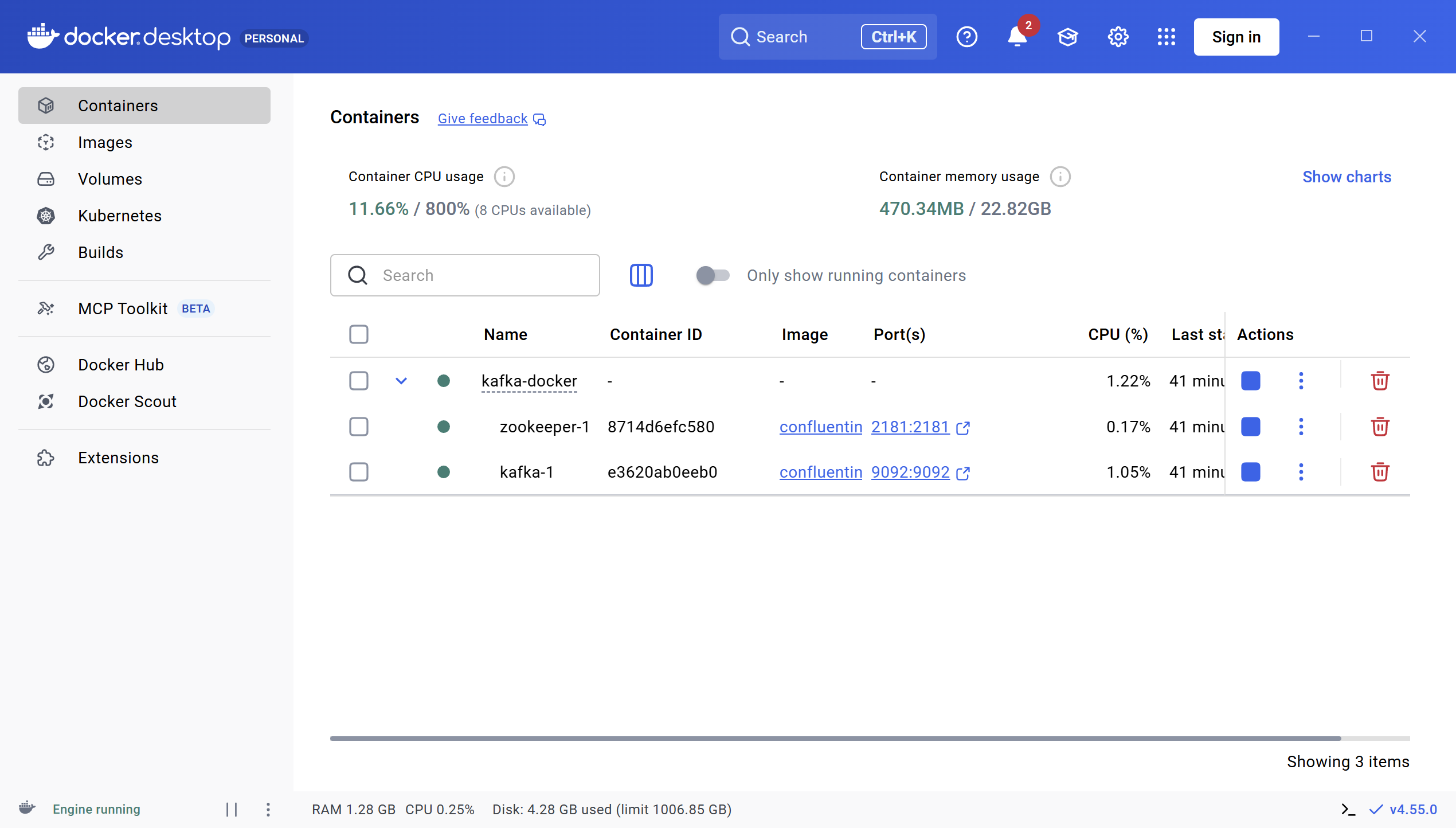

方式 1:查看 Docker Desktop 的

Containers页面,确认zookeeper和kafka容器状态为Running; -

方式 2:终端执行命令:

# 查看运行中的容器 docker ps # 若显示zookeeper、kafka容器,说明启动成功

-

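IV. Verify that Kafka is usable (key step)

Open a shell inside the Kafka container:

```powershell
docker exec -it kafka-docker-kafka-1 /bin/bash
```

The container name follows Compose's `<project>-<service>-<index>` pattern, where the project name defaults to the folder name. If yours differs (the older docker-compose V1 uses underscores, e.g. `kafka-docker_kafka_1`), copy the exact name from the `docker ps` output.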
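Entering the container only shows that it is running. As an extra check from the host side, a small Java program can confirm that the ports published in docker-compose.yml (2181 for ZooKeeper, 9092 for Kafka) accept TCP connections. This is a sketch: `PortCheck` and `isOpen` are hypothetical names, and a successful connect only proves that something is listening on the mapped port, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs milliseconds. */
    public static boolean isOpen(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unresolvable
            return false;
        }
    }

    public static void main(String[] args) {
        // Ports published in docker-compose.yml: 2181 (ZooKeeper) and 9092 (Kafka)
        int[] ports = {2181, 9092};
        for (int port : ports) {
            System.out.println("localhost:" + port + " open = " + isOpen("localhost", port, 1000));
        }
    }
}
```

If either port reports `false`, check the container status with `docker ps` and the container logs before moving on to the Spring integration.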

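V. Integrate Kafka into the project

1. Add the Kafka Maven dependency to the project

```xml
<!-- Kafka dependency; no <version> is needed when the project uses Spring Boot's dependency management -->
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
```

2. Configure application.yml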

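```yaml
spring:
  kafka:
    bootstrap-servers: 127.0.0.1:9092 # replace with your actual address
    # Custom key (not a built-in Spring Boot property), read by Producer via @Value("${spring.kafka.topic-js}");
    # the value is assumed to match the topic the consumer listens on
    topic-js: js_ship_position
    producer:
      retries: 3 # number of retries
      batch-size: 16384 # batch size in bytes
      buffer-memory: 33554432 # producer-side buffer size in bytes (32 MB)
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
    consumer:
      group-id: position-receive-group # custom consumer group name
      # earliest: if a partition has a committed offset, consume from it; otherwise start from the beginning
      # latest: if a partition has a committed offset, consume from it; otherwise consume only data produced from now on
      # none: if every partition has a committed offset, consume from those offsets; if any partition has none, throw an exception
      auto-offset-reset: earliest
      # whether offsets are committed automatically
      enable-auto-commit: true
      # auto-commit interval in ms (how often consumed offsets are committed)
      auto-commit-interval: 1000
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
```

3. Write the producer and consumer code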

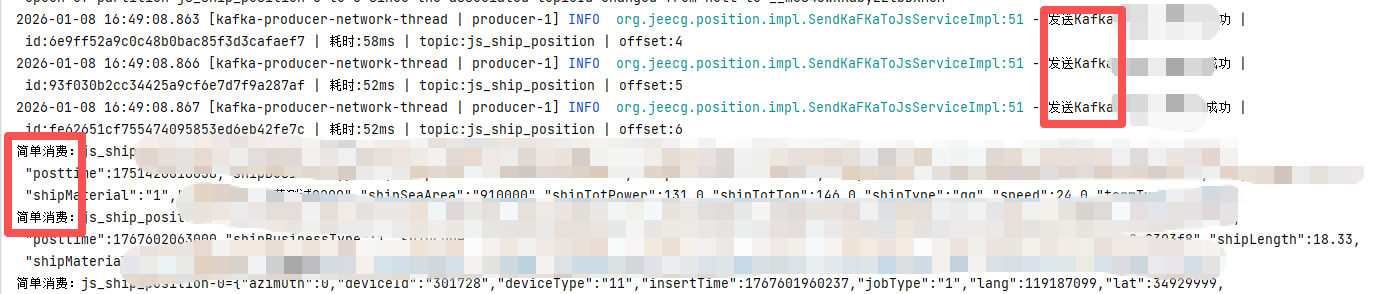

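Producer:

```java
import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.stereotype.Component;
import org.springframework.util.concurrent.ListenableFuture;
import org.springframework.util.concurrent.ListenableFutureCallback;

@Component
@Slf4j
public class Producer {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    // Custom property: it must be defined in application.yml, or startup fails
    @Value("${spring.kafka.topic-js}")
    private String KAFKA_TOPIC_JS_SHIP_POSITION; // ship-position topic of the Jiangsu information center

    public void sendMessage(long startTime, String id, String msg) {
        // 1. Build the Kafka record. The id is used as the message key: records with the
        //    same key go to the same partition (the key does not enforce uniqueness)
        ProducerRecord<String, String> kafkaRecord = new ProducerRecord<>(
                KAFKA_TOPIC_JS_SHIP_POSITION, // Kafka topic
                id,                           // message key (a UUID generated by the caller)
                msg                           // message body
        );

        // 2. Send asynchronously and log elapsed time and success/failure in a callback.
        //    ListenableFuture is the return type in spring-kafka 2.x; since spring-kafka 3.0,
        //    send() returns a CompletableFuture, so use future.whenComplete(...) there instead
        ListenableFuture<SendResult<String, String>> future = kafkaTemplate.send(kafkaRecord);
        future.addCallback(new ListenableFutureCallback<SendResult<String, String>>() {
            @Override
            public void onSuccess(SendResult<String, String> result) {
                long costTime = System.currentTimeMillis() - startTime;
                log.info("Kafka send succeeded | id:{} | elapsed:{}ms | topic:{} | offset:{}",
                        id, costTime, KAFKA_TOPIC_JS_SHIP_POSITION, result.getRecordMetadata().offset());
            }

            @Override
            public void onFailure(Throwable ex) {
                long costTime = System.currentTimeMillis() - startTime;
                // Passing ex as the last argument makes SLF4J log the stack trace as well
                log.error("Kafka send failed | id:{} | elapsed:{}ms | reason:{}",
                        id, costTime, ex.getMessage(), ex);
            }
        });
    }
}
```

Consumer: the consumer needs a consumer group id. The default comes from application.yml, shown below; the `groupId` attribute of `@KafkaListener` overrides it for that listener, so the listener in the code below actually joins `position-receive-group-01`.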

```yaml
spring:
  kafka:
    consumer:
      group-id: position-receive-group # custom consumer group name
```
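The group id matters because Kafka stores committed offsets per consumer group, and a group that has never committed an offset for a partition falls back to `auto-offset-reset`. The sketch below is a simplified model of the three policies described in the application.yml comments, not Kafka's actual code: `OffsetResetModel`, `ResetPolicy` and `startingOffset` are hypothetical names, the model looks at one partition at a time (with `none`, a single partition lacking an offset is enough for the consumer to fail), and it ignores the case where a committed offset no longer exists on the broker (for example after retention deleted the data), where Kafka also applies the reset policy.

```java
import java.util.OptionalLong;

public class OffsetResetModel {

    public enum ResetPolicy { EARLIEST, LATEST, NONE }

    /**
     * Simplified choice of the starting offset for one partition.
     *
     * @param committed offset committed by this consumer group, if any
     * @param logStart  first offset still available in the partition
     * @param logEnd    offset that the next produced record will receive
     */
    public static long startingOffset(OptionalLong committed, long logStart, long logEnd, ResetPolicy policy) {
        if (committed.isPresent()) {
            return committed.getAsLong(); // a committed offset is used under every policy
        }
        switch (policy) {
            case EARLIEST:
                return logStart; // replay everything the partition still retains
            case LATEST:
                return logEnd;   // only records produced from now on
            default:
                throw new IllegalStateException("No committed offset and auto-offset-reset=none");
        }
    }

    public static void main(String[] args) {
        // A brand-new group (nothing committed) on a partition holding offsets 0..99
        System.out.println(startingOffset(OptionalLong.empty(), 0, 100, ResetPolicy.EARLIEST)); // prints 0
        System.out.println(startingOffset(OptionalLong.empty(), 0, 100, ResetPolicy.LATEST));   // prints 100
        // A group that previously committed offset 42 resumes there, whatever the policy
        System.out.println(startingOffset(OptionalLong.of(42), 0, 100, ResetPolicy.NONE));      // prints 42
    }
}
```

In this setup, the first time the listener's `position-receive-group-01` group starts it has no committed offsets, so with `earliest` it reads the topic from the beginning; after that, the auto-committed offsets take over.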

```java
package org.jeecg.controller;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Component
public class KafkaConsumer {

    // Listen and consume. The topic must match the one the producer sends to (spring.kafka.topic-js);
    // groupId here overrides spring.kafka.consumer.group-id from application.yml
    @KafkaListener(topics = {"js_ship_position"}, groupId = "position-receive-group-01")
    public void onNormalMessage(ConsumerRecord<String, String> record) {
        // Key and value are Strings because the consumer is configured with StringDeserializer
        System.out.println("Simple consume: " + record.topic() + "-" + record.partition() + "=" +
                record.value());
    }
}
```

Controller:

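```java
import cn.hutool.core.util.IdUtil;
import io.swagger.annotations.Api;
import io.swagger.annotations.ApiOperation;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;
// AjaxResult is the project's own response wrapper; import it from your project's common module

@Api(tags = "Kafka test")
@RestController
@RequestMapping("/kafka")
public class KafkaController {

    @Autowired
    private Producer producer;

    @ApiOperation(value = "Send a Kafka message", httpMethod = "POST")
    @PostMapping("/sendKafka")
    public AjaxResult sendKafka(String msg) {
        long startTime = System.currentTimeMillis();
        // UUID without hyphens, used as the message key
        String id = IdUtil.simpleUUID();
        //cn.hutool.json.JSONObject jsonObject = new cn.hutool.json.JSONObject();
        //jsonObject.put("msg", msg);
        producer.sendMessage(startTime, id, msg);
        return AjaxResult.success("Success");
    }
}
```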
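The controller passes a fresh UUID as the record key. In Kafka's Java producer, a key does not make a message unique: when a key is present and no custom partitioner is configured, the producer hashes the serialized key bytes with murmur2 and takes the positive result modulo the partition count, so equal keys always land in the same partition. The sketch below re-implements that hash for illustration only; `KeyPartitionSketch` and `partitionFor` are hypothetical names, and the 3-partition topic in `main` is an assumption (the compose setup above does not configure partition counts). Use it to see the "same key, same partition" property rather than to predict exact partition numbers of a real cluster.

```java
import java.nio.charset.StandardCharsets;
import java.util.UUID;

public class KeyPartitionSketch {

    /** 32-bit murmur2 hash, modeled on the routine Kafka's producer uses for keyed records. */
    public static int murmur2(byte[] data) {
        final int length = data.length;
        final int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        final int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            final int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the remaining 1-3 bytes (the fall-through between cases is intentional)
        switch (length % 4) {
            case 3: h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2: h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1: h ^= data[length & ~3] & 0xff;
                    h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    /** Partition for a non-null String key: positive murmur2 hash modulo the partition count. */
    public static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8); // StringSerializer encodes as UTF-8 by default
        return (murmur2(keyBytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int partitions = 3; // hypothetical topic with 3 partitions
        // Same shape as IdUtil.simpleUUID(): a random UUID without hyphens
        String id = UUID.randomUUID().toString().replace("-", "");
        System.out.println(id + " -> partition " + partitionFor(id, partitions));
        System.out.println(id + " -> partition " + partitionFor(id, partitions)); // same key, same partition
    }
}
```

Because every request gets a new random key, messages spread across partitions and no ordering is implied between them. If one ship's positions needed to stay in order, a stable key such as a ship identifier would keep them in a single partition.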