I 简介

近年来,多算子多方法算法取得成功,这促进了它们在单一框架内的组合。尽管成果令人鼓舞,但仍有改进空间,因为只有部分进化算法(EAs)能在所有优化问题上持续表现优异。本文提出mLSHADE-RL算法,作为LSHADE-cnEpSin(CEC 2017实参数单目标优化竞赛优胜算法之一)的增强版本,通过集成多种进化算法和搜索算子来进一步提升性能。我们在原始LSHADE-cnEpSin中整合了三种变异策略:带存档的DE/current-to-pbest-weight/1、无存档的DE/current-to-pbest/1以及DE/current-to-ordpbest-weight/1。同时提出重启机制以克服局部最优倾向,并在进化过程后期采用局部搜索方法来增强mLSHADE-RL的开发能力。该算法在CEC 2024单目标边界约束优化竞赛的30维测试中表现卓越,在各种优化场景下生成高质量解的能力均优于其他前沿算法。

II 相关工作

本节介绍了原始差分进化算法及其变体,这些变体用于设计所提出的算法。

II-A 差分进化算法

本节简要介绍差分进化(Differential Evolution, DE)算法[1],涵盖其所有设计要素。DE中的种群,也称为DE/rand/1/bin,记作 P S PS PS,由 N p N_p Np个个体组成。每个个体,称为目标向量或父向量,表示为一个解向量 x i = { x i 1 , x i 2 , . . . , x i D } x_i = \{x_{i1}, x_{i2}, ..., x_{iD}\} xi={xi1,xi2,...,xiD},其中 D D D是搜索空间的维度。在整个进化过程中,个体在世代 G = 1 , 2 , . . . , G m a x G = 1, 2, ..., G_{max} G=1,2,...,Gmax中更新,其中 G m a x G_{max} Gmax是最大世代数。 P S PS PS中的第 i i i个个体在搜索边界 x l b = { x i 1 , x i 2 , . . . , x i D } x_{lb} = \{x_{i1}, x_{i2}, ..., x_{iD}\} xlb={xi1,xi2,...,xiD}和 x u b = { x i 1 , x i 2 , . . . , x i D } x_{ub} = \{x_{i1}, x_{i2}, ..., x_{iD}\} xub={xi1,xi2,...,xiD}内随机初始化。在第 G G G代,该初始化表示为 x i G = { x i 1 G , x i 2 G , . . . , x i D G } x_i^G = \{x_{i1}^G, x_{i2}^G, ..., x_{iD}^G\} xiG={xi1G,xi2G,...,xiDG}。

初始化之后,DE采用变异和交叉等算子来进化 P S PS PS中的个体,生成试验向量。这些试验向量与目标向量之间的比较(称为选择)决定了哪些个体能够存活到下一代。DE的详细步骤如下:

(i) 变异 :对于第 G G G代的每个目标向量 x i G x_i^G xiG,DE使用以下变异过程创建一个变异向量 v i G + 1 = { v i 1 G + 1 , v i 2 G + 1 , . . . , v i D G + 1 } v_i^{G+1} = \{v_{i1}^{G+1}, v_{i2}^{G+1}, ..., v_{iD}^{G+1}\} viG+1={vi1G+1,vi2G+1,...,viDG+1}:

D E / r a n d / 1 : v i G + 1 = x r 1 G + F ⋅ ( x r 2 G − x r 3 G ) , (1) DE/rand/1: \quad v_i^{G+1} = x_{r1}^G + F \cdot (x_{r2}^G - x_{r3}^G), \tag{1} DE/rand/1:viG+1=xr1G+F⋅(xr2G−xr3G),(1)

其中 r j r_j rj(对于 j = 1 , 2 , 3 j = 1, 2, 3 j=1,2,3)是从范围 [ 1 , N p ] [1, N_p] [1,Np]中随机选择的互不相同的索引,并且与基索引 i i i不同。参数 F F F是一个缩放因子,控制差向量 ( x r 2 G − x r 3 G ) (x_{r2}^G - x_{r3}^G) (xr2G−xr3G)的影响,范围在0到2之间。 F F F值越高促进探索,越低则促进开发。

(ii) 交叉 :通过变异生成变异个体 v i G + 1 v_i^{G+1} viG+1后,应用交叉操作。在交叉中,变异向量 v i G + 1 v_i^{G+1} viG+1以概率 C R ∈ [ 0 , 1 ] CR \in [0, 1] CR∈[0,1]与目标个体 x i G x_i^G xiG交换分量,形成试验个体 u i G + 1 = { u i 1 G + 1 , u i 2 G + 1 , . . . , u i D G + 1 } u_i^{G+1} = \{u_{i1}^{G+1}, u_{i2}^{G+1}, ..., u_{iD}^{G+1}\} uiG+1={ui1G+1,ui2G+1,...,uiDG+1}。DE中使用两种类型的交叉:二项式交叉和指数交叉。这里,我们详细说明首选的二项式交叉。在二项式交叉中,目标向量与变异向量根据以下条件组合:

u i j G + 1 = { v i j G + 1 , 如果 ( r a n d j ( 0 , 1 ) ≤ C R 或 j = j r a n d ) x i j G , 否则 , (2) u_{ij}^{G+1} = \begin{cases} v_{ij}^{G+1}, & \text{如果 } (rand_j(0,1) \leq CR \text{ 或 } j = j_{rand}) \\ x_{ij}^G, & \text{否则}, \end{cases} \tag{2} uijG+1={vijG+1,xijG,如果 (randj(0,1)≤CR 或 j=jrand)否则,(2)

其中 r a n d j ( 0 , 1 ) rand_j(0,1) randj(0,1)是均匀分布的随机数, j r a n d ∈ { 1 , 2 , . . . , D } j_{rand} \in \{1, 2, ..., D\} jrand∈{1,2,...,D}是随机选择的索引,确保试验个体至少从变异个体继承一个分量。在此等式中,交叉率 C R CR CR控制从变异向量继承的分量数量。

(iii) 选择 :在选择过程中,DE采用贪婪选择方案来确定哪个向量将存活。在每一代中,如果试验向量 u i G + 1 u_i^{G+1} uiG+1比目标向量 x i G x_i^G xiG更优,则试验向量将替换目标向量。否则,保留目标向量 x i G x_i^G xiG。对于最小化情况,选择策略定义如下:

x i G + 1 = { u i G + 1 , 如果 f ( u i G + 1 ) < f ( x i G ) , x i G , 否则 . (3) x_i^{G+1} = \begin{cases} u_i^{G+1}, & \text{如果 } f(u_i^{G+1}) < f(x_i^G), \\ x_i^G, & \text{否则}. \end{cases} \tag{3} xiG+1={uiG+1,xiG,如果 f(uiG+1)<f(xiG),否则.(3)

其中 f ( u i G + 1 ) f(u_i^{G+1}) f(uiG+1)是在 u i G + 1 u_i^{G+1} uiG+1处评估的适应度函数。

II-B 统一差分进化算法

统一差分进化算法(Unified DE, UDE)由Trivedi等人[2]提出,是CEC 2017约束优化竞赛的获胜者之一。受JADE、CoDE、SaDE和基于排序的变异算子的启发,UDE采用了三种类似于CoDE的试验向量生成策略:rank-DE/rand/1/bin、rank-DE/current-to-rand/1和rank-DE/current-to-pbest/1。UDE使用了双子种群框架:顶部子种群和底部子种群,每个子种群具有不同的学习机制。顶部子种群采用所有三种试验向量生成策略来更新每一代的目标向量 x i G x_i^G xiG。相比之下,底部子种群使用策略自适应,根据在顶部子种群中生成有希望解的经验和见解,定期自调整其试验向量 u i G u_i^G uiG的生成策略。此外,UDE还结合了基于局部搜索操作的变异以进一步提升其性能。

II-C SHADE

基于成功历史的自适应差分进化算法(Success History-based Adaptive DE, SHADE)[2]扩展了JADE[10]。在JADE中,每个个体使用DE/current-to-pbest/1变异策略进行更新:

u i G = x i G + F ⋅ ( x p b e s t G − x i G ) + F ⋅ ( x r 1 G − x r 2 G ) , (4) u_i^G = x_i^G + F \cdot (x_{pbest}^G - x_i^G) + F \cdot (x_{r1}^G - x_{r2}^G), \tag{4} uiG=xiG+F⋅(xpbestG−xiG)+F⋅(xr1G−xr2G),(4)

其中 p b e s t pbest pbest是从种群前 100 p % 100p\% 100p%中选出的。每个 x i G x_i^G xiG有其自己的控制参数 F i F_i Fi和 C R i CR_i CRi,这些参数是从自适应参数 μ F \mu_F μF和 μ C R \mu_{CR} μCR中概率生成的。使用柯西分布和正态分布,将那些改进试验向量的成功 C R CR CR和 F i F_i Fi值记录到 S C R S_{CR} SCR和 S F S_F SF中。在每代结束时,分别使用 S C R S_{CR} SCR的算术平均和 S F S_F SF的Lehmer均值更新 μ C R \mu_{CR} μCR和 μ F \mu_F μF,其中 j j j决定在内存中更新的位置。

II-D EBSHADE

在EBSHADE中,提出了一种更贪婪的变异策略DE/current-to-optimistic/1,以增强开发能力[11]。该策略对从当前代选择的三个向量进行排序,以扰动目标向量,使用定向差异来模拟梯度下降并将搜索导向更好的解。在optimistic策略中,一个向量从全局前 p p p个最佳向量中选择,另外两个从整个种群中随机选择。然后按适应度值对这三个向量进行排序:最佳的是 x o p t i m a l G x_{optimal}^G xoptimalG,中等的是 x o m e d G x_{omed}^G xomedG,最差的是 x o w s t G x_{owst}^G xowstG。试验向量 u i G u_i^G uiG由目标向量 x i G x_i^G xiG和上述三个排序后的向量生成,如下所示:

u i G = x i G + F ⋅ ( x o p t i m a l G − x i G ) + F ⋅ ( x o m e d G − x o w s t G ) . (5) u_i^G = x_i^G + F \cdot (x_{optimal}^G - x_i^G) + F \cdot (x_{omed}^G - x_{owst}^G). \tag{5} uiG=xiG+F⋅(xoptimalG−xiG)+F⋅(xomedG−xowstG).(5)

II-E L-SHADE

L-SHADE[12]是CEC 2014单目标优化问题的获胜者,它通过线性种群规模缩减(Linear Population Size Reduction, LPSR)扩展了SHADE,根据线性函数持续减少种群规模。初始时,种群规模为 N p i n i t N_p^{init} Npinit,在运行结束时减少到 N p m i n N_p^{min} Npmin。在每代 G G G之后,下一代的种群规模 N p G + 1 N_p^{G+1} NpG+1计算如下:

N p G + 1 = r o u n d ( N p i n i t − N p m i n n f e m a x ) ⋅ n f e , (6) N_p^{G+1} = round\left(\frac{N_p^{init} - N_p^{min}}{nfe_{max}}\right) \cdot nfe, \tag{6} NpG+1=round(nfemaxNpinit−Npmin)⋅nfe,(6)

其中 n f e nfe nfe和 n f e m a x nfe_{max} nfemax分别是当前评估次数和最大评估次数。如果 N p G + 1 N_p^{G+1} NpG+1小于 N p G N_p^{G} NpG,则从种群底部移除 ( N p G − N p G + 1 ) (N_p^{G} - N_p^{G+1}) (NpG−NpG+1)个个体。

II-F LSHADE-EpsSin

为了提升LSHADE的性能,LSHADE-EpsSin引入了一种集成方法,使用高效的正弦方案[7]来自适应缩放因子。该方法结合了两个正弦公式:一个非自适应的正弦递减调整和一个自适应的基于历史的正弦递增调整。此外,在后代中使用基于高斯行走的局部搜索方法来改进开发。在LSHADE-EpsSin中,定义了一种新的带外部档案的变异策略DE/current-to-pbest/1:

u i G = x i G + F ⋅ ( x p b e s t G − x i G ) + F ⋅ ( x r 1 G − x r 3 G ) , (7) u_i^G = x_i^G + F \cdot (x_{pbest}^G - x_i^G) + F \cdot (x_{r1}^G - x_{r3}^G), \tag{7} uiG=xiG+F⋅(xpbestG−xiG)+F⋅(xr1G−xr3G),(7)

其中 r 1 ≠ r 2 ≠ r 3 r_1 \ne r_2 \ne r_3 r1=r2=r3是从整个种群中随机选择的, x r 3 G x_{r3}^G xr3G是从整个种群 P S PS PS和外部档案 A A A( A A A存储最近被后代替换的劣质解)的并集中随机选择的(即 x r 3 G ∈ P S G ∪ A G x_{r3}^G \in PS^G \cup A^G xr3G∈PSG∪AG)。

参数自适应 :提出了一个参数自适应方法的集成来调整缩放因子 F F F。这两种策略是:

{ F 1 G = 0.5 ⋅ ( sin ( 2 π ⋅ f 1 ⋅ G + 1 ) ⋅ G m a x − G G m a x + 1 ) , F 2 G = 0.5 ⋅ ( sin ( 2 π ⋅ f 2 ⋅ G + 1 ) ⋅ G G m a x + 1 ) , (8) \begin{cases} F_1^G = 0.5 \cdot \left( \sin(2\pi \cdot f_1 \cdot G + 1) \cdot \frac{G_{max} - G}{G_{max}} + 1 \right), \\ F_2^G = 0.5 \cdot \left( \sin(2\pi \cdot f_2 \cdot G + 1) \cdot \frac{G}{G_{max}} + 1 \right), \end{cases} \tag{8} ⎩ ⎨ ⎧F1G=0.5⋅(sin(2π⋅f1⋅G+1)⋅GmaxGmax−G+1),F2G=0.5⋅(sin(2π⋅f2⋅G+1)⋅GmaxG+1),(8)

其中 f 1 f_1 f1和 f 2 f_2 f2是正弦函数的频率, f 1 f_1 f1固定,而 f 2 f_2 f2每代使用柯西分布自适应:

f 2 = r a n d c ( μ f 2 , 0.1 ) , (9) f_2 = randc(\mu_{f_2}, 0.1), \tag{9} f2=randc(μf2,0.1),(9)

其中 μ f 2 \mu_{f_2} μf2是Lehmer均值,从外部记忆 M f 2 M_{f_2} Mf2中随机选择,该记忆存储了先前各代在 S f 2 S_{f_2} Sf2中的成功平均频率。索引 r i r_i ri在每代结束时从 [ 1 , H ] [1, H] [1,H]中选择。在前半代中使用两种正弦策略。在后半代中,缩放因子 F i G F_i^G FiG使用柯西分布更新:

F i G = r a n d c ( μ F , 0.1 ) . (10) F_i^G = randc(\mu_F, 0.1). \tag{10} FiG=randc(μF,0.1).(10)

此外,交叉率 C R i G CR_i^G CRiG在整个进化过程中使用正态分布进行自适应:

C R i G = r a n d n ( μ C R i , 0.1 ) . (11) CR_i^G = randn(\mu_{CR_i}, 0.1). \tag{11} CRiG=randn(μCRi,0.1).(11)

II-G LSHADE-cnEpsSin

LSHADE-EpsSin被引入,通过有效使用缩放因子 F F F的正弦公式的自适应集成来增强LSHADE的性能。它在前半代中随机选择两个正弦公式之一:一个非自适应的正弦递减调整和一个自适应的正弦递增调整。此过程通过性能自适应方案得到增强,以有效地在两个公式之间进行选择。LSHADE-cnEpsSin是LSHADE-EpsSin的改进版本,它用于缩放参数 F F F的有效选择,以及使用欧几里得邻域的协方差矩阵学习来优化交叉算子。

有效选择 :根据先前各代的性能,选择两种正弦策略之一。在学习期 L p L_p Lp内,记录每种配置生成的试验向量的成功次数和丢弃次数,分别为 n S i G nS_i^G nSiG和 n F i G nF_i^G nFiG。对于前 L p L_p Lp代,两种策略具有相等的概率 P j P_j Pj并被随机选择。之后,概率更新如下:

P j = S j G S 1 G + S 2 G , (12) P_j = \frac{S_j^G}{S_1^G + S_2^G}, \tag{12} Pj=S1G+S2GSjG,(12)

其中 S j G S_j^G SjG表示每种正弦策略生成的试验向量的成功率。

新交叉算子 :在LSHADE-cnEpsSin中,交叉算子以概率 P c P_c Pc使用带欧几里得邻域的协方差矩阵学习(CMLwithEN)。个体按适应度排序,并计算与最佳个体 x b e s t x_{best} xbest的欧几里得距离。使用前 N p × P c N_p \times P_c Np×Pc个个体(此处 P c P_c Pc为0.5)围绕 x b e s t x_{best} xbest形成邻域区域。随着种群规模减小,该邻域规模也随之减小。协方差矩阵 C C C根据该区域计算:

C = O b D b O b T , (13) C = O_b D_b O_b^T, \tag{13} C=ObDbObT,(13)

其中 O b O_b Ob和 O b T O_b^T ObT是正交矩阵, D b D_b Db是具有特征值的对角矩阵。目标向量和变异向量使用正交矩阵 O b T O_b^T ObT进行更新:

x i G = O b T x i G , v i G = O b T v i G . (14) x_i^G = O_b^T x_i^G, \quad v_i^G = O_b^T v_i^G. \tag{14} xiG=ObTxiG,viG=ObTviG.(14)

将二项式交叉(式(2))应用于更新后的向量以创建试验向量 u i G u_i^G uiG。

III 提出的算法(MLSHADE-RL)

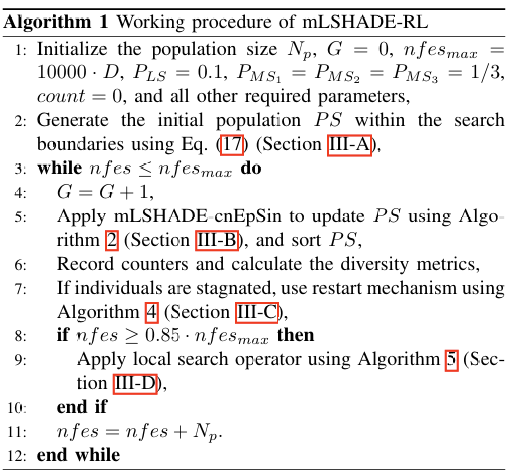

所提算法的工作流程在算法1中说明。首先生成规模为 N p N_p Np的随机种群,每个个体位于搜索边界内(第III-A节)。种群 P S P^S PS通过多算子(mLSHADE)框架下的LSHADE-cnEpSin进行演化(第III-B节的算法2),并在mLSHADE框架中融入带有水平与垂直交叉的重启机制(第III-C节)。为增强开发能力,后期以动态概率引入局部搜索方法------序列二次规划(SQP)(第III-D节)。该过程重复执行,直至满足停止准则。算法各组件的详细讨论见以下小节。

III-A 种群初始化

第 G G G代中, P S P^S PS的第 i i i个个体在搜索边界 x lb = [ x 1 , lb , x 2 , lb , ... , x D , lb ] x_{\text{lb}}=[x_{1,\text{lb}},x_{2,\text{lb}},\dots,x_{D,\text{lb}}] xlb=[x1,lb,x2,lb,...,xD,lb]与 x ub = [ x 1 , ub , x 2 , ub , ... , x D , ub ] x_{\text{ub}}=[x_{1,\text{ub}},x_{2,\text{ub}},\dots,x_{D,\text{ub}}] xub=[x1,ub,x2,ub,...,xD,ub]内随机初始化,记为 x i G = [ x i 1 G , x i 2 G , ... , x i D G ] x_i^G=[x_{i1}^G,x_{i2}^G,\dots,x_{iD}^G] xiG=[xi1G,xi2G,...,xiDG],定义如下:

x i j G = x j , lb + ( x j , ub − x j , lb ) ⋅ rand ( 1 , N p ) , (17) x_{ij}^G = x_{j,\text{lb}} + (x_{j,\text{ub}}-x_{j,\text{lb}})\cdot\text{rand}(1,N_p),\tag{17} xijG=xj,lb+(xj,ub−xj,lb)⋅rand(1,Np),(17)

其中 i = 1 , 2 , ... , N p i=1,2,\dots,N_p i=1,2,...,Np, j = 1 , 2 , ... , D j=1,2,\dots,D j=1,2,...,D,rand为 [ 0 , 1 ] [0,1] [0,1]内均匀分布的实数。外部存档 A A A由初始种群 x x x初始化。

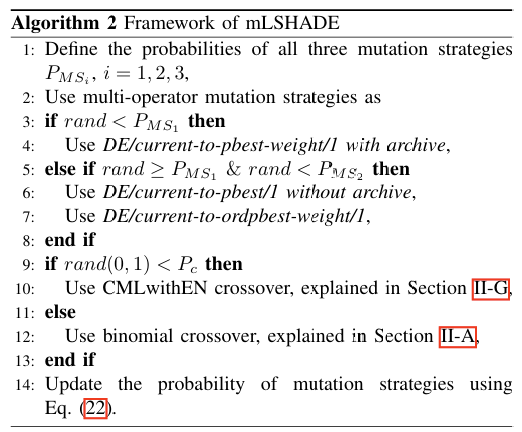

III-B 多算子LSHADE-cnEpSin(mLSHADE)

在mLSHADE中,进化过程采用三种变异策略:带存档的DE/current-to-pbest-weight/1、不带存档的DE/current-to-pbest/1,以及DE/current-to-ordpbest-weight/1。以下给出用于演化规模为 N p N_p Np的种群 P S , G P^{S,G} PS,G的DE变异策略(MS 1 _1 1至MS 3 _3 3):

(i) DE/current-to-pbest-weight/1 带存档(MS 1 _1 1):

u i G = x i G + F i , w ⋅ ( x pbest G − x i G ) + F i ⋅ ( x r 1 G − x r 2 G ) , (18) u_i^G = x_i^G + F_{i,w}\cdot(x_{\text{pbest}}^G-x_i^G) + F_i\cdot(x_{r1}^G-x_{r2}^G),\tag{18} uiG=xiG+Fi,w⋅(xpbestG−xiG)+Fi⋅(xr1G−xr2G),(18)

(ii) DE/current-to-pbest/1 不带存档(MS 2 _2 2):

u i G = x i G + F i ⋅ ( x pbest G − x i G + x r 1 G − x r 2 G ) , (19) u_i^G = x_i^G + F_i\cdot(x_{\text{pbest}}^G-x_i^G + x_{r1}^G-x_{r2}^G),\tag{19} uiG=xiG+Fi⋅(xpbestG−xiG+xr1G−xr2G),(19)

(iii) DE/current-to-ordpbest-weight/1(MS 3 _3 3):

u i G = x i G + F i , w ⋅ ( x ordpbest G − x i G + x ordm G − x orduo G ) , (20) u_i^G = x_i^G + F_{i,w}\cdot(x_{\text{ordpbest}}^G-x_i^G + x_{\text{ordm}}^G-x_{\text{orduo}}^G),\tag{20} uiG=xiG+Fi,w⋅(xordpbestG−xiG+xordmG−xorduoG),(20)

其中 r 1 , r 2 , r 3 , r 4 r_1,r_2,r_3,r_4 r1,r2,r3,r4为随机选取的互异索引, x r 1 G , x r 2 G x_{r1}^G,x_{r2}^G xr1G,xr2G选自 P S , G P^{S,G} PS,G, x r 2 G x_{r2}^G xr2G亦可选自 P S , G ∪ A G P^{S,G}\cup A^G PS,G∪AG。自适应最优向量 x pbest G x_{\text{pbest}}^G xpbestG从第 G G G代前 N p × p N_p\times p Np×p( p ∈ [ 0 , 1 ] p\in[0,1] p∈[0,1]固定)个体中选取。受jSO[22]启发,采用加权缩放因子 F w F_w Fw:

F w = { 0.7 ⋅ F i G , 若 n fes ≤ 0.2 ⋅ n fes,max 0.8 ⋅ F i G , 若 n fes ≤ 0.4 ⋅ n fes,max 1.2 ⋅ F i G , 否则 (21) F_w= \begin{cases} 0.7\cdot F_i^G,& \text{若 }n_{\text{fes}}\le 0.2\cdot n_{\text{fes,max}}\\[2mm] 0.8\cdot F_i^G,& \text{若 }n_{\text{fes}}\le 0.4\cdot n_{\text{fes,max}}\\[2mm] 1.2\cdot F_i^G,& \text{否则} \end{cases} \tag{21} Fw=⎩ ⎨ ⎧0.7⋅FiG,0.8⋅FiG,1.2⋅FiG,若 nfes≤0.2⋅nfes,max若 nfes≤0.4⋅nfes,max否则(21)

变异策略应用后,依第III-G节定义的CMLwithEN概率 P c P_c Pc选择交叉算子。生成随机数 rand ( 0 , 1 ) \text{rand}(0,1) rand(0,1)并与 P c P_c Pc比较:若 rand ( 0 , 1 ) < P c \text{rand}(0,1)<P_c rand(0,1)<Pc,使用CMLwithEN交叉;否则应用二项交叉。

算法2 mLSHADE框架

III-B1 各策略中解数量的更新

初始时,使用 M S 1 MS_1 MS1、 M S 2 MS_2 MS2和 M S 3 MS_3 MS3进化任何个体的概率设置为 P M S 1 = P M S 2 = P M S 3 = 1 / 3 P_{MS_1} = P_{MS_2} = P_{MS_3} = 1/3 PMS1=PMS2=PMS3=1/3。对于 P S t PS_t PSt中的每个个体 i i i,如果 r a n d i < 0.33 rand_i < 0.33 randi<0.33,则使用 M S 1 MS_1 MS1进化 x i t x_i^t xit。如果 0.33 ≤ r a n d i < 0.667 0.33 \leq rand_i < 0.667 0.33≤randi<0.667,则使用 M S 2 MS_2 MS2。否则,应用 M S 3 MS_3 MS3。为了确保鲁棒的性能,使用适应度值的改进率来更新每个变体的概率( P M S i P_{MS_i} PMSi)[20]。这些比率在每代结束时计算如下:

P M S i = max ( 0.1 , min ( 0.9 , I M S i ∑ j = 1 3 I M S j ) ) , (21) P_{MS_i} = \max \left( 0.1, \min \left( 0.9, \frac{I_{MS_i}}{\sum_{j=1}^{3} I_{MS_j}} \right) \right), \tag{21} PMSi=max(0.1,min(0.9,∑j=13IMSjIMSi)),(21)

I M S i = ∑ j = 1 N M S i max ( 0 , f j , o l d − f j , n e w ) f j , o l d , (22) I_{MS_i} = \frac{\sum_{j=1}^{N_{MS_i}} \max(0, f_{j, old} - f_{j, new})}{f_{j, old}}, \tag{22} IMSi=fj,old∑j=1NMSimax(0,fj,old−fj,new),(22)

其中 f j , o l d f_{j, old} fj,old和 f j , n e w f_{j, new} fj,new分别是旧的和新的适应度值。由于一个算子可能在进化过程的不同阶段表现良好,而在其他阶段表现不佳,因此保持 P M S i P_{MS_i} PMSi的最小值以确保每个变体都有改进的机会[20]。

III-B2 F和CR的自适应

参数自适应方法的集成[7]使用两个正弦策略来调整缩放因子 F F F,这在本论文的II-E节式(2)中定义。初始时,两种策略具有相等的概率 P j P_j Pj,并在前 L p L_p Lp代中随机选择。随后,使用式(13)更新概率,倾向于概率较高的策略。两种策略在前半代中使用。在后半代中,缩放因子 F i t F_i^t Fit使用柯西分布更新:

F i t = randc ( μ F r , 0.1 ) . (23) F_i^t = \text{randc}(\mu_F^r, 0.1). \tag{23} Fit=randc(μFr,0.1).(23)

在整个进化过程中,交叉率 C R i t CR_i^t CRit使用正态分布进行自适应:

C R i t = randn ( μ C R r , 0.1 ) . (24) CR_i^t = \text{randn}(\mu_{CR}^r, 0.1). \tag{24} CRit=randn(μCRr,0.1).(24)

如果一个试验向量 u i t u_i^t uit在与 x i t x_i^t xit竞争后存活,则相应的 F i t F_i^t Fit和 C R i t CR_i^t CRit值被记录为成功的( S F S_F SF和 S C R S_{CR} SCR)。在该代结束时, F F F和 C R CR CR的记忆( μ F r \mu_F^r μFr和 μ C R r \mu_{CR}^r μCRr)使用Lehmer均值( μ n e w \mu_{new} μnew)进行更新:

μ F r , t + 1 = { μ n e w ( S F ) , 如果 S F ≠ ∅ , μ F r , t , 否则 , \mu_F^{r,t+1} = \begin{cases} \mu_{new}(S_F), & \text{如果 } S_F \neq \emptyset, \\ \mu_F^{r,t}, & \text{否则}, \end{cases} μFr,t+1={μnew(SF),μFr,t,如果 SF=∅,否则,

μ C R r , t + 1 = { μ n e w ( S C R ) , 如果 S C R ≠ ∅ , μ C R r , t , 否则 , \mu_{CR}^{r,t+1} = \begin{cases} \mu_{new}(S_{CR}), & \text{如果 } S_{CR} \neq \emptyset, \\ \mu_{CR}^{r,t}, & \text{否则}, \end{cases} μCRr,t+1={μnew(SCR),μCRr,t,如果 SCR=∅,否则,

μ n e w ( S ) = ∑ i = 1 ∣ S ∣ Q i ⋅ S i ∑ i = 1 ∣ S ∣ Q i , Q i = ∣ f ( u i t ) − f ( x i t ) ∣ ∑ j = 1 ∣ S ∣ ∣ f ( u j t ) − f ( x j t ) ∣ . (25) \mu_{new}(S) = \frac{\sum_{i=1}^{|S|} Q_i \cdot S_i}{\sum_{i=1}^{|S|} Q_i}, \quad Q_i = \frac{|f(u_i^t) - f(x_i^t)|}{\sum_{j=1}^{|S|} |f(u_j^t) - f(x_j^t)|}. \tag{25} μnew(S)=∑i=1∣S∣Qi∑i=1∣S∣Qi⋅Si,Qi=∑j=1∣S∣∣f(ujt)−f(xjt)∣∣f(uit)−f(xit)∣.(25)

III-B3 种群规模缩减

为了在进化过程早期保持多样性,同时在后期增强开发能力[12],应用线性缩减方法,在每代结束时通过移除最差个体来更新种群 P S PS PS的规模。LPSR方法在II-E节的式(2)中详述。

III-C 重启机制

如[23]中所讨论的,DE在进化过程中会停滞,特别是在多模态优化问题上。这个问题通常源于进化后期阶段种群多样性的缺乏。为了解决这个问题,我们整合了一个增强种群多样性的机制,该机制由两部分组成:检测停滞个体和增强种群多样性。

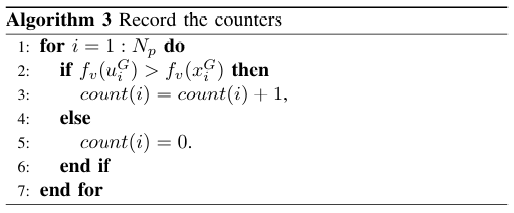

III-C1 停滞个体的检测

进化过程在一定数量的代数后趋于稳定,一些个体在若干代内无法改进,导致停滞[22]。为了记录停滞,本文使用计数器和多样性度量。当两个条件都满足时,我们称之为停滞。每个个体的计数器初始定义为零,并在子代不优于父代时更新(算法3)。

算法3 记录计数器

与搜索空间边界相关的体积 V o l b e s t Vol_{best} Volbest和每代种群体积 V o l p o p Vol_{pop} Volpop的比率的平方根用于计算多样性度量,如下所示:

V o l = V o l p o p / V o l b e s t , (26) Vol = \sqrt{Vol_{pop} / Vol_{best}}, \tag{26} Vol=Volpop/Volbest ,(26)

其中 V o l b e s t = ∏ j = 1 D ( x j , u b − x j , l b ) Vol_{best} = \sqrt{\prod_{j=1}^{D} (x_{j, ub} - x_{j, lb})} Volbest=∏j=1D(xj,ub−xj,lb) 和 V o l p o p = ∑ j = 1 D ( max ( x j ) − min ( x j ) ) / 2 Vol_{pop} = \sqrt{\sum_{j=1}^{D} (\max(x_j) - \min(x_j)) / 2} Volpop=∑j=1D(max(xj)−min(xj))/2 。

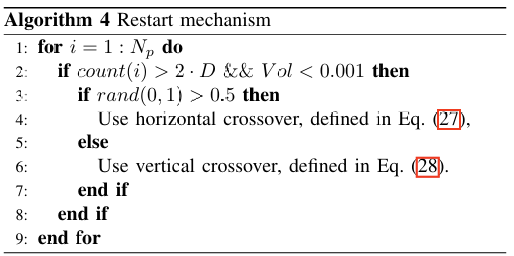

III-C2 停滞个体的替换

受遗传算法启发的水平和垂直交叉,形成了一个简单的竞争机制来增强立方体,执行边缘搜索以提高全局搜索能力。对相同维度的个体进行随机预筛选和配对以进行更新,此过程可以重复。垂直交叉允许在不同尺寸上进行交叉操作,有助于避免特定维度上的局部最优,而不影响其他维度。

水平交叉定义为:

H C i t = r 1 ⋅ x i 1 t + ( 1 − r 1 ) ⋅ x i 2 t + b ⋅ ( x i 1 t − x i 2 t ) , (27) HC_i^t = r_1 \cdot x_{i1}^t + (1 - r_1) \cdot x_{i2}^t + b \cdot (x_{i1}^t - x_{i2}^t), \tag{27} HCit=r1⋅xi1t+(1−r1)⋅xi2t+b⋅(xi1t−xi2t),(27)

其中 x i 1 t x_{i1}^t xi1t和 x i 2 t x_{i2}^t xi2t是从种群中随机选择的两个个体。 r 1 r_1 r1是 [ 0 , 1 ] [0, 1] [0,1]内均匀分布的随机数, b b b是 [ − 1 , 1 ] [-1, 1] [−1,1]内均匀分布的随机数。

垂直交叉定义为:

V C i t = r 2 ⋅ x i , d 1 t + ( 1 − r 2 ) ⋅ x i , d 2 t , (28) VC_i^t = r_2 \cdot x_{i,d1}^t + (1 - r_2) \cdot x_{i,d2}^t, \tag{28} VCit=r2⋅xi,d1t+(1−r2)⋅xi,d2t,(28)

其中 r 2 r_2 r2是 [ 0 , 1 ] [0, 1] [0,1]内均匀分布的随机数, d 1 d1 d1和 d 2 d2 d2( d 1 ≠ d 2 d1 \ne d2 d1=d2)是某个停滞个体的两个不同维度。重启机制的详细描述在算法4中给出。

算法4 重启机制

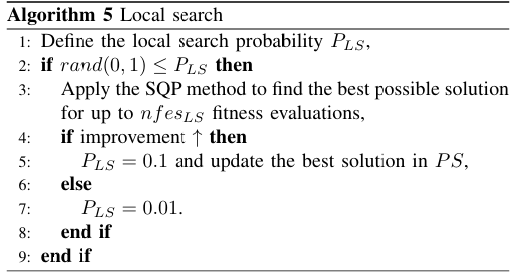

III-D 局部搜索

为增强 mLSHADE-RL 的开发能力,在进化过程的最后 25 % 阶段,对当前最优个体应用 SQP 方法。该操作以概率 P s = 0.1 P_s=0.1 Ps=0.1 执行,且最多消耗 n fesLs n_{\text{fesLs}} nfesLs 次适应度评估。值得注意的是, P s P_s Ps 是动态的:若局部搜索未找到更优解,则将 P s P_s Ps 降至较小值(如 0.01);否则, P s P_s Ps 保持为 0.1。

matlab

clc;

clear all;

format long;

format compact;

problem_size = 30;

%%% change freq

freq_inti = 0.5;

max_nfes = 10000 * problem_size;

rand('seed', sum(100 * clock));

val_2_reach = 10^(-8);

max_region = 100.0;

min_region = -100.0;

lu = [-100 * ones(1, problem_size); 100 * ones(1, problem_size)];

fhd=@cec17_func;

pb = 0.4;

ps = .5;

n_opr=3;

prob=1./n_opr .* ones(1,n_opr);

S.Ndim = problem_size;

S.Lband = ones(1, S.Ndim)*(-100);

S.Uband = ones(1, S.Ndim)*(100);

%%%% Count the number of maximum generations before as NP is dynamically

G_Max = 2745;

Printing=0;

num_prbs =1;

runs = 25;

run_funcvals = [];

result=zeros(num_prbs,5);

fprintf('Running mLSHADE_RL on D= %d\n', problem_size)

for func = 1:30

optimum = func * 100.0;

S.FuncNo = func;

%% Record the best results

outcome = [];

fprintf('\n-------------------------------------------------------\n')

fprintf('Function = %d, Dimension size = %d\n', func, problem_size)

% parfor run_id = 1 : runs

for run_id = 1 : runs

run_funcvals = [];

col=1; %% to print in the first column in all_results.mat

%% parameter settings for L-SHADE

p_best_rate = 0.11; %0.11

arc_rate = 1.4;

memory_size = 5;

pop_size = 18 * problem_size; %18*D

SEL = round(ps*pop_size);

prob_ls=0.01;

max_pop_size = pop_size;

min_pop_size = 4.0;

nfes = 0;

%% Initialize the main population

popold = repmat(lu(1, :), pop_size, 1) + rand(pop_size, problem_size) .* (repmat(lu(2, :) - lu(1, :), pop_size, 1));

pop = popold; % the old population becomes the current population

fitness = feval(fhd,pop',func);

fitness = fitness';

bsf_fit_var = 1e+30;

bsf_index = 0;

bsf_solution = zeros(1, problem_size);

%%%%%%%%%%%%%%%%%%%%%%%% for in

for i = 1 : pop_size

nfes = nfes + 1;

if fitness(i) < bsf_fit_var

bsf_fit_var = fitness(i);

bsf_solution = pop(i, :);

bsf_index = i;

end

if nfes > max_nfes; break; end

end

%%%%%%%%%%%%%%%%%%%%%%%% for out

best_fit_var=min(fitness);

Best_Func_Val=repmat(best_fit_var,1,pop_size);

memory_sf = 0.5 .* ones(memory_size, 1);

memory_cr = 0.5 .* ones(memory_size, 1);

memory_freq = freq_inti*ones(memory_size, 1);

memory_pos = 1;

archive.NP = arc_rate * pop_size; % the maximum size of the archive

archive.pop = zeros(0, problem_size); % the solutions stored in te archive

archive.funvalues = zeros(0, 1); % the function value of the archived solutions

gg=0; %%% generation counter used For Sin

igen =1; %%% generation counter used For LS

flag1 = false;

flag2 = false;

goodF1all = [];

goodF2all =[];

badF1all = [];

badF2all = [];

goodF1 = [];

goodF2 = [];

badF1 = [];

badF2 = [];

Par_restart.lu = lu;

Par_restart.V_lim = 1;

for i=1:problem_size

Par_restart.V_lim = (Par_restart.V_lim .*(abs(Par_restart.lu(1,i) - Par_restart.lu(2,i))));

end

Par_restart.V_lim = sqrt(Par_restart.V_lim);

Par_restart.counter = zeros(pop_size,1);

Par_restart.m = 1;

Par_restart.m_max = 4;

Par_restart.m_min = 1;

%% main loop

while nfes <= max_nfes

gg=gg+1;

pop = popold; % the old population becomes the current population

[temp_fit, sorted_index] = sort(fitness, 'ascend');

mem_rand_index = ceil(memory_size * rand(pop_size, 1));

mu_sf = memory_sf(mem_rand_index);

mu_cr = memory_cr(mem_rand_index);

mu_freq = memory_freq(mem_rand_index);

%% for generating crossover rate

cr = normrnd(mu_cr, 0.1);

term_pos = find(mu_cr == -1);

cr(term_pos) = 0;

cr = min(cr, 1);

cr = max(cr, 0);

%% for generating scaling factor

sf = mu_sf + 0.1 * tan(pi * (rand(pop_size, 1) - 0.5));

pos = find(sf <= 0);

while ~ isempty(pos)

sf(pos) = mu_sf(pos) + 0.1 * tan(pi * (rand(length(pos), 1) - 0.5));

pos = find(sf <= 0);

end

freq = mu_freq + 0.1 * tan(pi*(rand(pop_size, 1) - 0.5));

pos_f = find(freq <=0);

while ~ isempty(pos_f)

freq(pos_f) = mu_freq(pos_f) + 0.1 * tan(pi * (rand(length(pos_f), 1) - 0.5));

pos_f = find(freq <= 0);

end

sf = min(sf, 1);

freq = min(freq, 1);

LP = 20;

flag1 = false;

flag2 = false;

if(nfes <= max_nfes/2)

flag1 = false;

flag2 = false;

if (gg <= LP)

%% Both have the same probability

%% Those generations are the learning period

%% Choose one of them randomly

p1 = 0.5;

p2 = 0.5;

c=rand;

if(c < p1)

sf = 0.5.*( sin(2.*pi.*freq_inti.*gg+pi) .* ((G_Max-gg)/G_Max) + 1 ) .* ones(pop_size,problem_size);

flag1 = true;

else

sf = 0.5 *( sin(2*pi .* freq(:, ones(1, problem_size)) .* gg) .* (gg/G_Max) + 1 ) .* ones(pop_size,problem_size);

flag2 = true;

end

else

%% compute the probability as used in SaDE

ns1 = size(goodF1,1);

ns1_sum = 0;

nf1_sum = 0;

% for hh = 1 : size(goodF1all,2)

for hh = gg-LP : gg-1

ns1_sum = ns1_sum + goodF1all(1,hh);

nf1_sum = nf1_sum + badF1all(1,hh);

end

sumS1 = (ns1_sum/(ns1_sum + nf1_sum)) + 0.01;

ns2 = size(goodF2,1);

ns2_sum = 0;

nf2_sum = 0;

% for hh = gg-LP : gg-1

% for hh = 1 : size(goodF2all,2)

for hh = gg-LP : gg-1

ns2_sum = ns2_sum + goodF2all(1,hh);

nf2_sum = nf2_sum + badF2all(1,hh);

end

sumS2 = (ns2_sum/(ns2_sum + nf2_sum)) + 0.01;

p1 = sumS1/(sumS1 + sumS2);

p2 = sumS2/(sumS2 + sumS1);

if(p1 > p2)

sf = 0.5.*( sin(2.*pi.*freq_inti.*gg+pi) .* ((G_Max-gg)/G_Max) + 1 ) .* ones(pop_size,problem_size);

flag1 = true;

% size(goodF1,1)

else

sf = 0.5 *( sin(2*pi .* freq(:, ones(1, problem_size)) .* gg) .* (gg/G_Max) + 1 ) .* ones(pop_size,problem_size);

flag2 = true;

% size(goodF2,1)

end

end

end

%% mutation

bb= rand(pop_size, 1);

probiter = prob(1,:);

l2= sum(prob(1:2));

op_1 = bb <= probiter(1)*ones(pop_size, 1);

op_2 = bb > probiter(1)*ones(pop_size, 1) & bb <= (l2*ones(pop_size, 1)) ;

op_3 = bb > l2*ones(pop_size, 1) & bb <= (ones(pop_size, 1)) ;

r0 = [1 : pop_size];

popAll = [pop; archive.pop];

[r1, r2, r3] = gnR1R2R3(pop_size, size(popAll, 1), r0);

pNP = max(round(p_best_rate * pop_size), 2); %% choose at least two best solutions

randindex = ceil(rand(1, pop_size) .* pNP); %% select from [1, 2, 3, ..., pNP]

randindex = max(1, randindex); %% to avoid the problem that rand = 0 and thus ceil(rand) = 0

pbest = pop(sorted_index(randindex), :); %% randomly choose one of the top 100p% solutions

if(nfes <= 0.2*max_nfes)

FW=0.7*sf;

elseif(nfes > 0.2*max_nfes)&& (nfes <= 0.4*max_nfes)

FW=0.8*sf;

else

FW=1.2*sf;

end

vi=zeros(pop_size,problem_size);

%% Multi-operators

% DE/current-to-pbest/1 with archive

vi(op_1==1,:) = pop(op_1==1,:)+ FW(op_1==1, ones(1, problem_size)) .*(pbest(op_1==1,:) - pop(op_1==1,:) + pop(r1(op_1==1), :) - popAll(r2(op_1==1), :));

% DE/current-to-pbest/1 without archive

vi(op_2==1,:) = pop(op_2==1,:)+ sf(op_2==1, ones(1, problem_size)) .*(pbest(op_2==1,:) - pop(op_2==1,:) + pop(r1(op_2==1), :) - pop(r3(op_2==1), :));

% DE/current-to-ordpbest-weight/1

EDEpNP = max(round(p_best_rate * pop_size), 2); %% choose at least two best solutions

EDErandindex = ceil(rand(1, pop_size) .* EDEpNP); %% select from [1, 2, 3, ..., pNP]

EDErandindex = max(1, EDErandindex); %% to avoid the problem that rand = 0 and thus ceil(rand) = 0

EDEpestind=sorted_index(EDErandindex);

R1 = Gen_R(pop_size,2);

R1(:,1)=[];

R1=[R1 EDEpestind];

fr=fitness(R1);

[~,I1] = sort(fr,2);

R_S=[];

for i=1:pop_size

R_S(i,:)=R1(i,I1(i,:));

end

rb=R_S(:,1)';

rm=R_S(:,2)';

rw=R_S(:,3)';

vi(op_3==1,:) = pop(op_3==1,:) + FW(op_3==1, ones(1, problem_size)) .* (pop(rb(op_3==1), :) - pop(op_3==1,:) + pop(rm(op_3==1), :) - popAll(rw(op_3==1), :));

vi = boundConstraint(vi, pop, lu);

% %% Bin Crx

% mask = rand(pop_size, problem_size) > cr(:, ones(1, problem_size)); % mask is used to indicate which elements of ui comes from the parent

% rows = (1 : pop_size)'; cols = floor(rand(pop_size, 1) * problem_size)+1; % choose one position where the element of ui doesn't come from the parent

% jrand = sub2ind([pop_size problem_size], rows, cols); mask(jrand) = false;

% ui = vi; ui(mask) = pop(mask);

% %%

%% Bin crossover according to the Eigen coordinate system

J_= mod(floor(rand(pop_size, 1)*problem_size), problem_size) + 1;

J = (J_-1)*pop_size + (1:pop_size)';

crs = rand(pop_size, problem_size) < cr(:, ones(1, problem_size));

if rand<pb

%% coordinate ratation

%%%%% Choose neighbourhood region to the best individual

best = pop(sorted_index(1), :);

Dis = pdist2(pop,best,'euclidean'); % euclidean distance

%D2 = sqrt(sum((pop(1,:) - best).^2, 2));

%%%% Sort

[Dis_ordered idx_ordered] = sort(Dis, 'ascend');

SEL;

Neighbour_best_pool = pop(idx_ordered(1:SEL), :); %%% including best also so start from 1

Xsel = Neighbour_best_pool;

% sizz = size(Xsel)

%%%%%%%%%%%%%%%%%%%%%%%%%%%

%Xsel = pop(sorted_index(1:SEL), :);

xmean = mean(Xsel);

% covariance matrix calculation

C = 1/(SEL-1)*(Xsel - xmean(ones(SEL,1), :))'*(Xsel - xmean(ones(SEL,1), :));

C = triu(C) + transpose(triu(C,1)); % enforce symmetry

[R,D] = eig(C);

% limit condition of C to 1e20 + 1

if max(diag(D)) > 1e20*min(diag(D))

tmp = max(diag(D))/1e20 - min(diag(D));

C = C + tmp*eye(problem_size);

[R, D] = eig(C);

end

TM = R;

TM_=R';

Xr = pop*TM;

vi = vi*TM;

%% crossover according to the Eigen coordinate system

Ur = Xr;

Ur(J) = vi(J);

Ur(crs) = vi(crs);

%%

ui = Ur*TM_;

else

ui = pop;

ui(J) = vi(J);

ui(crs) = vi(crs);

end

%%%%%%%%

children_fitness = feval(fhd, ui', func);

children_fitness = children_fitness';

%%%% To check stagnation

flag = false;

bsf_fit_var_old = bsf_fit_var;

%%%%%%%%%%%%%%%%%%%%%%%% for out

for i = 1 : pop_size

% nfes = nfes + 1;

if children_fitness(i) < bsf_fit_var

bsf_fit_var = children_fitness(i);

bsf_solution = ui(i, :);

bsf_index = i;

end

end

%%%%%%%%%%%%%%%%%%%%%%%% for out

dif = abs(fitness - children_fitness);

%% I == 1: the parent is better; I == 2: the offspring is better

I = (fitness > children_fitness);

goodCR = cr(I == 1);

goodF = sf(I == 1);

goodFreq = freq(I == 1);

dif_val = dif(I == 1);

%% change here also

%% recored bad too

badF = sf(I == 0);

if flag1 == true

goodF1 = goodF;

goodF1all = [goodF1all size(goodF1,1)];

badF1 = badF;

badF1all = [badF1all size(badF1,1)];

%% Add zero for other one or add 1 to prevent the case of having NaN

goodF2all = [goodF2all 1];

badF2all = [badF2all 1];

end

if flag2 == true

goodF2 = goodF;

goodF2all = [goodF2all size(goodF2,1)];

badF2 = badF;

badF2all = [badF2all size(badF2,1)];

%% Add zero for other one

goodF1all = [goodF1all 1];

badF1all = [badF1all 1];

end

%% ==================== update Prob. of each DE operators ===========================

diff2 = max(0,(fitness - children_fitness))./abs(children_fitness);

count_S(1)=max(0,mean(diff2(op_1==1)));

count_S(2)=max(0,mean(diff2(op_2==1)));

count_S(3)=max(0,mean(diff2(op_3==1)));

%% update probs.

if count_S~=0

prob= max(0.1,min(0.9,count_S./(sum(count_S))));

else

prob=1/3 * ones(1,3);

end

% isempty(popold(I == 1, :))

archive = updateArchive(archive, popold(I == 1, :), fitness(I == 1));

[fitness, I] = min([fitness, children_fitness], [], 2);

run_funcvals = [run_funcvals; fitness];

popold = pop;

popold(I == 2, :) = ui(I == 2, :);

num_success_params = numel(goodCR);

if num_success_params > 0

sum_dif = sum(dif_val);

dif_val = dif_val / sum_dif;

%% for updating the memory of scaling factor

memory_sf(memory_pos) = (dif_val' * (goodF .^ 2)) / (dif_val' * goodF);

%% for updating the memory of crossover rate

if max(goodCR) == 0 || memory_cr(memory_pos) == -1

memory_cr(memory_pos) = -1;

else

memory_cr(memory_pos) = (dif_val' * (goodCR .^ 2)) / (dif_val' * goodCR);

end

%% for updating the memory of freq

if max(goodFreq) == 0 || memory_freq(memory_pos) == -1

memory_freq(memory_pos) = -1;

else

memory_freq(memory_pos) = (dif_val' * (goodFreq .^ 2)) / (dif_val' * goodFreq);

end

memory_pos = memory_pos + 1;

if memory_pos > memory_size; memory_pos = 1; end

end

%% Restart mechanism

[~, bes_l]=min(fitness);

[popold,fitness,Par_restart]=Restart_mechanism(popold,fitness,Par_restart,I,bes_l,func,nfes);

%% for resizing the population size

plan_pop_size = round((((min_pop_size - max_pop_size) / max_nfes) * nfes) + max_pop_size);

if pop_size > plan_pop_size

reduction_ind_num = pop_size - plan_pop_size;

if pop_size - reduction_ind_num < min_pop_size; reduction_ind_num = pop_size - min_pop_size;end

pop_size = pop_size - reduction_ind_num;

SEL = round(ps*pop_size);

for r = 1 : reduction_ind_num

[valBest indBest] = sort(fitness, 'ascend');

worst_ind = indBest(end);

popold(worst_ind,:) = [];

pop(worst_ind,:) = [];

fitness(worst_ind,:) = [];

end

archive.NP = round(arc_rate * pop_size);

if size(archive.pop, 1) > archive.NP

rndpos = randperm(size(archive.pop, 1));

rndpos = rndpos(1 : archive.NP);

archive.pop = archive.pop(rndpos, :);

end

end

[bsf_fit_var,indx]=min(fitness);

bestx=popold(indx,:);

%% ============================ LS2 ====================================

if nfes>0.85*max_nfes

if rand<prob_ls

[bestx,bestold,~,succ] = LS2 (bestx,bsf_fit_var,nfes,func,max_nfes,lu(1,:),lu(2,:));

if succ==1 %% if LS2 was successful

popold(pop_size,:)=bestx';

fitness(pop_size)=bestold;

[fitness, sort_indx]=sort(fitness);

popold= popold(sort_indx,:);

prob_ls=0.1;

else

prob_ls=0.01; %% set p_LS to a small value it LS was not successful

end

end

end

[bsf_fit_var,~]=min(fitness);

bsf_fit_var_old=bsf_fit_var;

Best_Func_Val=[Best_Func_Val repmat(bsf_fit_var,1,pop_size)];

nfes=nfes+pop_size;

end %% End nfes

bsf_error_val = bsf_fit_var - optimum;

if bsf_error_val < val_2_reach

bsf_error_val = 0;

end

fprintf('%d th run, best-so-far error value = %1.8e\n', run_id , bsf_error_val)

outcome = [outcome bsf_error_val];

res_val1=Best_Func_Val(1:max_nfes)- optimum;

end %% end 2 run

% fprintf('\n')

% fprintf('min error value = %1.8e, max = %1.8e, median = %1.8e, mean = %1.8e, std = %1.8e\n', min(outcome), max(outcome), median(outcome), mean(outcome), std(outcome))

result(func,1)= min(outcome);

result(func,2)= max(outcome);

result(func,3)= median(outcome);

result(func,4)= mean(outcome);

result(func,5)= std(outcome);

Final_results= [min(outcome),max(outcome),median(outcome), mean(outcome),std(outcome)];

if Printing==1

save('mLSAHDE_LR_results_30D.csv', 'Final_results', '-ascii','-append');

lim=10*problem_size:10*problem_size:max_nfes;

res_to_print=res_val1(:,lim);

name1 = 'Results_Record_mLSHADE_LR\mLSAHDE_LR_F#';

name2 = num2str(func);

name3 = '_D#';

name4 = num2str(problem_size);

name5 = '.mat';

f_name=strcat(name1,name2,name3,name4,name5);

res_to_print=res_to_print';

save(f_name, 'res_to_print1', '-ascii');

name5 = '.dat';

f_name=strcat(name1,name2,name3,name4,name5);

save(f_name, 'res_to_print', '-ascii');

end

end %% end 1 function run