CV / VLM / LLM-OCR: "DeepSeek-OCR 2: Visual Causal Flow", Translation and Interpretation

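Overview: DeepSeek-OCR 2, through its DeepEncoder V2, introduces "causal flow queries" and a custom attention mask. Together they combine the bidirectional, global view over visual tokens with query-level causal reordering, yielding a two-stage causal architecture: semantic reordering in the encoder, followed by autoregressive reasoning in the decoder. The design keeps the image compression and efficiency of its predecessor (a budget of 256 to 1120 visual tokens with a multi-crop strategy) while clearly improving semantic understanding of documents (about +3.73% on OmniDocBench v1.5). It offers a feasible path and an engineering reference toward 2D reasoning that more closely follows human visual scanning, and toward unified encoding for native multimodality.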

> > Background and pain points

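● Reading-order bias: Existing vision-language models (VLMs) usually flatten 2D image patches in a fixed raster-scan order (top-left to bottom-right) before feeding them to the downstream LLM. This fixed 1D order does not match human vision, whose scanning paths are variable and driven by semantics. The result is limited efficiency and accuracy when processing complex documents (tables, formulas, multi-column layouts, and so on).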

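● Structural mismatch (1D vs. 2D): LLMs natively process 1D causal sequences, whereas images have a 2D spatial structure. Naive flattening introduces an unnecessary positional bias that prevents genuine 2D reasoning.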

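● Document reading (complex layouts): Document images contain complex local-global logic, such as the row and column order of tables, the reading order of formulas, and cross-references between figures and body text. Existing compressors and encoders cannot flexibly reorder visual information to follow these semantic orders.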
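To make the reading-order problem concrete, here is a small sketch that compares raster-scan flattening of a patch grid with a hand-written, column-first order for a hypothetical two-column page. The grid size, the `two_column_order` rule and the "column switch" count are illustrative assumptions, not anything DeepSeek-OCR 2 computes. The model learns its order instead of following a fixed rule.

```python
from __future__ import annotations


def raster_order(rows: int, cols: int) -> list[tuple[int, int]]:
    """Row-major flattening (top-left to bottom-right), as conventional VLMs do."""
    return [(r, c) for r in range(rows) for c in range(cols)]


def two_column_order(rows: int, cols: int) -> list[tuple[int, int]]:
    """Hypothetical semantic order for a two-column page: finish the left
    column top to bottom, then read the right column."""
    half = cols // 2
    left = [(r, c) for r in range(rows) for c in range(half)]
    right = [(r, c) for r in range(rows) for c in range(half, cols)]
    return left + right


def column_switches(order: list[tuple[int, int]], cols: int) -> int:
    """Count how often consecutive patches jump between the two columns."""
    half = cols // 2
    sides = [c >= half for _, c in order]
    return sum(1 for a, b in zip(sides, sides[1:]) if a != b)


if __name__ == "__main__":
    rows, cols = 4, 4
    print("raster:", column_switches(raster_order(rows, cols), cols))          # raster: 7
    print("two-column:", column_switches(two_column_order(rows, cols), cols))  # two-column: 1
```

Both orders visit the same 16 patches and differ only in sequence. In raster order, the two text columns are interleaved row by row, which is the kind of fixed positional bias described above.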

> > Proposed solution

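● Overall design: DeepSeek-OCR 2 pairs DeepEncoder V2 with a DeepSeek-MoE decoder. The encoder combines bidirectional modeling of visual tokens with causal flow queries to reorder visual tokens according to their semantics. It then hands the result to the autoregressive LLM decoder for causal understanding and generation.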

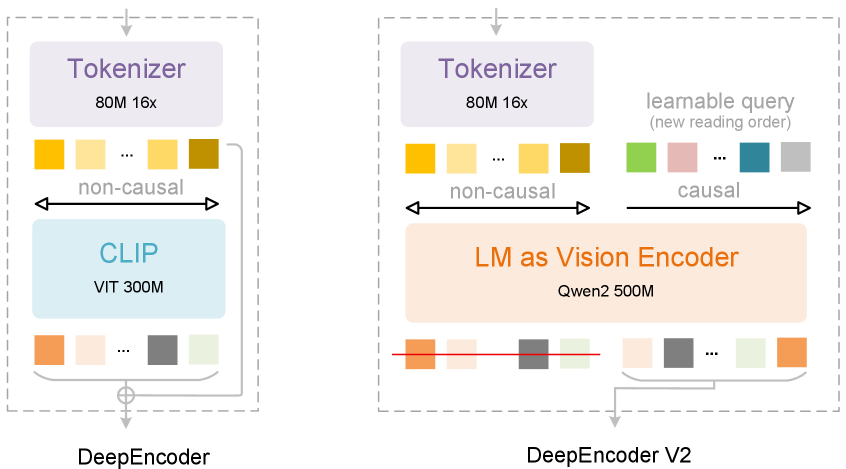

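● Architecture swap (CLIP replaced by an LLM-style encoder): DeepEncoder V2 replaces the original CLIP/ViT component with a small LLM-style network (instantiated as Qwen2-0.5B). Visual tokens keep bidirectional attention, while the newly added causal flow queries use causal attention. The encoder therefore retains a global visual receptive field and can also reorder visual information semantically.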

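● Attention (hybrid visual-query attention): Visual tokens are placed at the start of the input as a prefix, and causal flow tokens follow as a suffix. A custom attention mask makes attention bidirectional among visual tokens and causal (lower-triangular) among flow tokens. Layer by layer, the flow tokens progressively reorder and distill the visual tokens according to their semantics, and only the flow-token outputs are passed to the LLM decoder.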

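● Token budget (balancing efficiency and capacity): The number of visual tokens passed to the LLM stays between 256 and 1120, with a multi-crop strategy that supports 0 to 6 local views. This keeps compression efficient while leaving enough capacity for reordering and re-aggregation, so training and inference stay efficient without giving up accuracy.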
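The 256 to 1120 range can be reproduced with simple arithmetic. This sketch rests on assumptions that this article does not state: a 16×16 patch size, a 16× token compressor (as in DeepSeek-OCR), a 1024×1024 global view and 768×768 local crops. Under those assumptions, one global view yields 256 tokens and each local crop yields 144, so six crops reach exactly 1120.

```python
# All constants below are illustrative assumptions, not values stated in this article.
PATCH_SIZE = 16      # assumed patch size of the SAM-style tokenizer
COMPRESSION = 16     # assumed token reduction of the convolutional compressor
GLOBAL_SIDE = 1024   # assumed resolution of the single global view
LOCAL_SIDE = 768     # assumed resolution of each local crop
MAX_LOCAL_VIEWS = 6  # the article describes 0 to 6 local views


def tokens_per_view(side: int) -> int:
    """Visual tokens for one square view: (side / patch)^2 patches, then compressed."""
    patches_per_side = side // PATCH_SIZE
    return patches_per_side * patches_per_side // COMPRESSION


def llm_visual_budget(num_local_views: int) -> int:
    """Tokens handed to the LLM: one global view plus `num_local_views` local crops."""
    if not 0 <= num_local_views <= MAX_LOCAL_VIEWS:
        raise ValueError(f"expected 0..{MAX_LOCAL_VIEWS} local views, got {num_local_views}")
    return tokens_per_view(GLOBAL_SIDE) + num_local_views * tokens_per_view(LOCAL_SIDE)


if __name__ == "__main__":
    for k in range(MAX_LOCAL_VIEWS + 1):
        print(k, llm_visual_budget(k))
    # prints 0 256, 1 400, 2 544, 3 688, 4 832, 5 976, 6 1120 (one pair per line)
```

Under these assumptions, each additional local view adds 144 tokens, so the budget grows linearly from 256 to 1120. Because the encoder appends as many causal flow queries as there are visual tokens, each view's encoder sequence is twice as long as the number of tokens it hands to the LLM.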

> > Core steps (an actionable workflow)

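● Step 1, **visual tokenization:** A lightweight vision tokenizer (SAM-base plus convolutional layers, about 80M parameters) splits the image into patches and applies an initial compression. The result is a controllable number of visual tokens that feed the later modules.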

● Step 2, **causal flow queries:** The encoder appends an equal number of learnable queries (causal flow tokens). At every layer, these queries use causal attention to extract and reorder visual information in semantic order.

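● Step 3, **custom attention mask:** The mask combines a prefix (visual tokens, bidirectional) with a suffix (flow tokens, causal triangular). Visual tokens thus keep global visibility, while each flow token builds the semantic order from the outputs of the flow tokens before it.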

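● Step 4, **only flow tokens go to the LLM decoder:** The encoder passes only the outputs of the causal flow queries to the LLM decoder as its visual input. The decoder then uses its autoregressive ability to carry out downstream generation and reasoning in that semantic order.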

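● Step 5, **training and multi-view support:** A multi-crop (local view) strategy balances local detail with global structure. The training pipeline continues to train and fine-tune both the LLM-style encoder and the decoder, including steps such as query enhancement and continued training of the LLM.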
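Steps 2 to 4 can be sketched with a toy attention layer in pure Python. This is not the DeepEncoder V2 implementation. It has no learned projections, residuals, MLPs or positional encodings, and the "queries" are fixed vectors rather than learned ones. It only shows how the prefix/suffix mask shapes information flow and how the encoder keeps just the query half of its outputs. The function names are hypothetical, and visual tokens are assumed to attend only to other visual tokens (the usual prefix-LM convention).

```python
import math
from typing import List

Vector = List[float]


def build_mask(n_visual: int, n_query: int) -> List[List[bool]]:
    """allowed[i][j] is True when token i may attend to token j.

    Visual prefix (i < n_visual): bidirectional, over visual tokens only
    (assumed prefix-LM convention). Query suffix: every visual token plus
    the queries up to and including itself (causal triangle)."""
    total = n_visual + n_query
    return [
        [(j < n_visual) if i < n_visual else (j <= i) for j in range(total)]
        for i in range(total)
    ]


def masked_attention(x: List[Vector], allowed: List[List[bool]]) -> List[Vector]:
    """One parameter-free scaled dot-product self-attention layer (toy)."""
    d = len(x[0])
    out = []
    for i, row in enumerate(allowed):
        cols = [j for j, ok in enumerate(row) if ok]  # only unmasked keys
        scores = [sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) for j in cols]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        z = sum(weights)
        out.append([sum(w * x[j][k] for w, j in zip(weights, cols)) / z for k in range(d)])
    return out


def encode(visual: List[Vector], queries: List[Vector], num_layers: int = 2) -> List[Vector]:
    """Run [visual prefix | query suffix] through masked layers and return only
    the query outputs, i.e. the latter half when both counts are equal."""
    if len(queries) != len(visual):
        raise ValueError("queries and visual tokens are kept at equal counts")
    x = visual + queries
    allowed = build_mask(len(visual), len(queries))
    for _ in range(num_layers):
        x = masked_attention(x, allowed)
    return x[len(visual):]


if __name__ == "__main__":
    visual = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    queries = [[0.2, 0.1], [0.0, 0.3], [0.4, -0.2]]
    flow = encode(visual, queries)
    print(len(flow))  # 3: only the query outputs go to the decoder

    # Causality check: changing the last query leaves the first query's output unchanged.
    altered = encode(visual, queries[:-1] + [[9.0, -9.0]])
    print(altered[0] == flow[0])  # True
```

The causality check holds because the first query never attends to later queries in any layer, and the visual tokens never attend to queries at all. The first query's inputs are therefore identical in both runs.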

> > Advantages

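● Capability (semantics-driven ordering): Causal flow queries let the model reorder visual information by semantics during encoding. This maps 2D spatial relationships more naturally onto the 1D causal sequence an LLM can process, and it improves how the model captures the inherent reading logic of documents.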

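● Engineering (compression efficiency and scalability): Without significantly increasing the visual token budget (256 to 1120 tokens), DeepSeek-OCR 2 keeps the image compression and decoding efficiency of DeepSeek-OCR while adding stronger modeling of visual order.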

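● Transfer (compatibility with LLM infrastructure): The encoder is LLM-style (a decoder architecture with a bidirectional prefix), so it can reuse many optimizations from the LLM community, such as efficient attention and MoE. This eases further scaling and deployment into existing large-model ecosystems.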

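● Results (measured gains): The paper reports an overall improvement of about **3.73%** over the DeepSeek-OCR baseline on OmniDocBench v1.5, along with clear gains in document reading logic. These results show that the method is effective on a real benchmark.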

> > Follow-up conclusions and viewpoints

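● Research direction (toward genuine 2D reasoning): The authors argue that a two-level causal architecture, with reordering at the encoder level and autoregressive reasoning at the decoder level, is a promising path toward "genuine 2D reasoning". They suggest that the community explore more cross-modal "reorder, then autoregress" paradigms.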

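● Engineering direction (modality unification): Designing the vision encoder in LLM style supports native multimodality. By configuring modality-specific learnable queries and tokenizers, images, audio and text can go through the same pipeline, which simplifies unified pretraining.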

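● Practical lessons (data and view strategy): In document tasks, the choice of multi-crop/local views and the visual token budget have a significant effect on performance. In deployment, weigh the token budget (latency and cost) against view granularity to find the best operating point for the task.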

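● Future work: The authors point to several directions: further validating transfer to a broader range of multimodal datasets, exploring more compact and efficient causal query structures, and refining the "visual causal flow" from a cognitive-science perspective so that it better approximates human viewing strategies.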

"DeepSeek-OCR 2: Visual Causal Flow": Translation and Interpretation

| Item | Details |
|------|---------|
| Address | Paper: https://arxiv.org/abs/2601.20552 |
| Date | January 28, 2026 |
| Authors | DeepSeek-AI |

Abstract

We present DeepSeek-OCR 2 to investigate the feasibility of a novel encoder, DeepEncoder V2, capable of dynamically reordering visual tokens based on image semantics. Conventional vision-language models (VLMs) invariably process visual tokens in a rigid raster-scan order (top-left to bottom-right) with fixed positional encoding when feeding them into LLMs. However, this contradicts human visual perception, which follows flexible yet semantically coherent scanning patterns driven by inherent logical structures. Particularly for images with complex layouts, human vision exhibits causally informed sequential processing. Inspired by this cognitive mechanism, DeepEncoder V2 is designed to endow the encoder with causal reasoning capabilities, enabling it to intelligently reorder visual tokens before LLM-based content interpretation. This work explores a novel paradigm: whether 2D image understanding can be effectively achieved through two cascaded 1D causal reasoning structures, thereby offering a new architectural approach with the potential to achieve genuine 2D reasoning. Code and model weights are publicly available at http://github.com/deepseek-ai/DeepSeek-OCR-2.

Figure 1: We substitute the CLIP component in DeepEncoder with an LLM-style architecture. By customizing the attention mask, visual tokens use bidirectional attention while learnable queries adopt causal attention. Each query token can thus attend to all visual tokens and preceding queries, allowing progressive causal reordering of visual information.
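Written out, the Figure 1 mask can be expressed as an additive attention bias $M$. Number the $n$ visual tokens $1,\dots,n$ and the $n$ causal flow queries $n+1,\dots,2n$, which reflects the equal counts the paper describes. One detail is an assumption: visual-to-query entries are set to $-\infty$, so visual tokens see only each other, following the usual prefix-LM convention. The caption itself states only that visual tokens use bidirectional attention.

$$
M_{ij} =
\begin{cases}
0, & 1 \le i \le n \ \text{and}\ 1 \le j \le n \quad \text{(visual} \to \text{visual, bidirectional)} \\
0, & n < i \le 2n \ \text{and}\ 1 \le j \le i \quad \text{(query} \to \text{all visual tokens and queries up to itself)} \\
-\infty, & \text{otherwise}
\end{cases}
$$

$$
\operatorname{Attn}(Q, K, V) = \operatorname{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d}} + M\right) V
$$

Only rows $n+1,\dots,2n$ of the final layer's output, i.e. the causal flow tokens, are passed on to the LLM decoder.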

1. Introduction

The human visual system closely mirrors transformer-based vision encoders [dosovitskiy2020image, dehghani2023patch]: foveal fixations function as visual tokens, locally sharp yet globally aware. However, unlike existing encoders that rigidly scan tokens from top-left to bottom-right, human vision follows a causally driven flow guided by semantic understanding. Consider tracing a spiral: our eye movements follow an inherent logic in which each subsequent fixation causally depends on the previous ones. By analogy, visual tokens in models should be processed selectively, in an order that depends on visual semantics rather than on spatial coordinates.

This insight motivates us to fundamentally reconsider the architectural design of vision-language models (VLMs), particularly the encoder component. LLMs are inherently trained on 1D sequential data, while images are 2D structures. Directly flattening image patches in a predefined raster-scan order introduces an unwarranted inductive bias that ignores semantic relationships. To address this, we present DeepSeek-OCR 2 with a novel encoder design, DeepEncoder V2, to advance toward more human-like visual encoding. Following DeepSeek-OCR [wei2025deepseek], we adopt document reading as our primary experimental testbed. Documents present rich challenges, including complex layout orders, intricate formulas, and tables. These structured elements inherently carry causal visual logic and demand sophisticated reasoning, which makes document OCR an ideal platform for validating our approach.

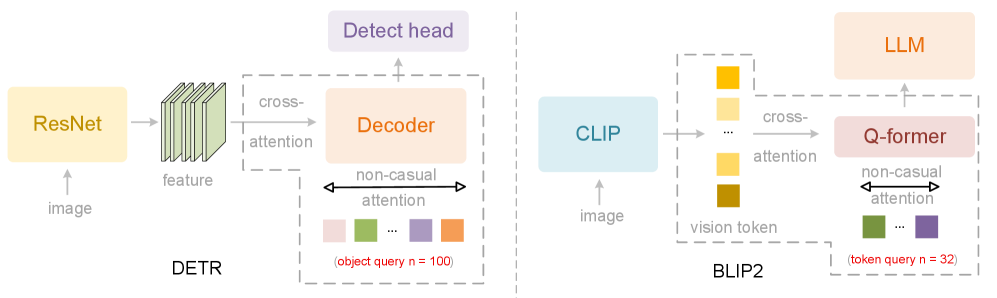

Our main contributions are threefold. First, we present DeepEncoder V2, which features several key innovations: (1) we replace the CLIP [radford2021learning] component in DeepEncoder [wei2025deepseek] with a compact LLM [wang2024qwen2] architecture, as illustrated in Figure 1, to achieve visual causal flow; (2) to enable parallelized processing, we introduce learnable queries [carion2020end], termed causal flow tokens, with the visual tokens prepended as a prefix. Through a customized attention mask, visual tokens maintain global receptive fields, while causal flow tokens acquire the ability to reorder visual tokens; (3) we keep the number of causal flow tokens equal to the number of visual tokens (including redundancy such as padding and borders) to provide sufficient capacity for re-fixation; (4) only the causal flow tokens, i.e. the latter half of the encoder outputs, are fed to the LLM [deepseekv2] decoder, enabling cascaded causal-aware visual understanding.

Second, leveraging DeepEncoder V2, we present DeepSeek-OCR 2, which preserves the image compression ratio and decoding efficiency of DeepSeek-OCR while achieving substantial performance improvements. We constrain the number of visual tokens fed to the LLM to between 256 and 1120. The lower bound (256) corresponds to DeepSeek-OCR's tokenization of 1024×1024 images, while the upper bound (1120) matches the maximum visual token budget of Gemini-3 Pro [team2023gemini]. This design positions DeepSeek-OCR 2 both as a novel VLM architecture for research exploration and as a practical tool for generating high-quality training data for LLM pretraining.

Finally, we provide preliminary validation for using language-model architectures as VLM encoders, a promising pathway toward unified omni-modal encoding. This framework enables feature extraction and token compression across diverse modalities (images, audio, text [liu2025context]) simply by configuring modality-specific learnable queries. Crucially, it naturally inherits advanced infrastructure optimizations from the LLM community, including Mixture-of-Experts (MoE) architectures, efficient attention mechanisms [deepseek32], and so on.

In summary, we propose DeepEncoder V2 for DeepSeek-OCR 2, using specialized attention mechanisms to effectively model the causal visual flow of document reading. Compared with the DeepSeek-OCR baseline, DeepSeek-OCR 2 achieves a 3.73% performance gain on OmniDocBench v1.5 [ouyang2025omnidocbench] and yields considerable advances in visual reading logic.

Figure 2: Two computer vision models with parallelized queries: the DETR decoder [carion2020end] for object detection and the BLIP-2 Q-Former [li2023blip] for visual token compression. Both employ bidirectional self-attention among queries.

6. Conclusion

In this technical report, we present DeepSeek-OCR 2, a significant upgrade to DeepSeek-OCR that maintains high visual token compression while achieving meaningful performance improvements. This advancement is powered by the newly proposed DeepEncoder V2, which implicitly distills a causal understanding of the visual world by integrating bidirectional and causal attention mechanisms. This gives the vision encoder causal reasoning capabilities and, consequently, markedly improves visual reading logic. While optical text reading, particularly document parsing, is one of the most practical vision tasks in the LLM era, it constitutes only a small part of the broader visual understanding landscape. Looking ahead, we will refine and adapt this architecture to more diverse scenarios, moving toward a more comprehensive vision of multimodal intelligence.